Abstract

In this paper, we present a pair of iterative methods for solving systems of nonlinear equations. Neither method requires second-order derivatives. We show that the two methods have third- and fifth-order convergence, respectively. Finally, numerical experiments are given to confirm the theoretical findings.


1 Introduction

Solving nonlinear equations and systems of nonlinear equations by iterative methods is one of the most active areas of numerical analysis. Although a substantial body of research already exists, there is still room for improvement. The quadratically convergent Newton method [1, 2, 14] is the classic work in this regard.

Let the system of nonlinear equations be

\[ F(x)=\left(f_1(x),f_2(x),\ldots ,f_n(x)\right)^T=0, \tag{1} \]

where each function \(f_i\) maps a vector \(x=(x_1,x_2,\ldots ,x_n)^T\) of the n-dimensional space \(\mathbb{R}^n\) into the real line \(\mathbb{R}\). System (1) consists of n nonlinear equations in n unknowns.

We seek a vector \({{\alpha }}=({{\alpha }}_1,{{\alpha }}_2,\ldots ,{{\alpha }}_n)^T\) such that \(F({{\alpha }})=0\). Newton’s method for solving (1) is the scheme

\[ x_{n+1}=x_n-[F'(x_n)]^{-1}F(x_n),\quad n=0,1,2,\ldots \]

where \(F'(x_n)\) is the Fréchet derivative (the Jacobian matrix) of F at \(x_n\).
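In practice, the Newton step is computed by solving the linear system \(F'(x_n)d_n=F(x_n)\) rather than forming the inverse Jacobian. Below is a minimal pure-Python sketch. The helper names `solve` and `newton`, the stopping rule on the step size, and the two-equation test system are illustrative assumptions and are not taken from the paper.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy; inputs untouched
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def newton(F: Callable[[Vector], Vector], J: Callable[[Vector], Matrix],
           x0: Vector, tol: float = 1e-12, max_iter: int = 50) -> Tuple[Vector, int]:
    """Iterate x <- x - [F'(x)]^{-1} F(x) until the step is below tol."""
    x = list(x0)
    for k in range(1, max_iter + 1):
        d = solve(J(x), F(x))  # Newton correction d with F'(x) d = F(x)
        x = [xi - di for xi, di in zip(x, d)]
        if max(abs(di) for di in d) < tol:
            return x, k
    return x, max_iter


# Hypothetical test system: x1^2 + x2^2 = 4 and x1 = x2.
def F(x: Vector) -> Vector:
    return [x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]]


def J(x: Vector) -> Matrix:
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


root, iterations = newton(F, J, [1.0, 0.5])
```

From the starting point (1, 0.5), the iterates approach the root \((\sqrt{2},\sqrt{2})^T\).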

Subsequently, many Newton-type iterative methods with third-order convergence have been developed for systems of nonlinear equations using different quadrature rules; see [3,4,5,6,7,8,9,10]. We recall a few of them.

Noor and Waseem [4] established two cubically convergent methods, NR-1 and NR-2, using an open quadrature formula:

and

where \(y_{n}=x_n-[F'(x_n)]^{-1}F(x_n)\), \(n=0,1,2,\ldots \)

Further, Liu et al. [3] developed a cubically convergent method, NGM, using the two-point Gauss quadrature formula:

where \(y_{n}=x_n-[F'(x_n)]^{-1}F(x_n)\), \(n=0,1,2,\ldots \)

They also constructed a fifth-order method, M, based on the same two-point Gauss quadrature formula:

where \(y_{n}=x_n-[F'(x_n)]^{-1}F(x_n)\), \(n=0,1,2,\ldots \).

Several multi-step iterative methods with fifth-order convergence have also been developed using quadrature rules; for details, see [11,12,13].

In this paper, we develop two new iterative schemes for solving systems of nonlinear equations, with third- and fifth-order convergence, respectively. We also compute the efficiency index \(E.I=p^{1/w}\), where p is the order of convergence and w is the total number of scalar function and derivative evaluations per iteration. In addition, we compute the computational order of convergence.

The paper is organized as follows. Section 2 develops the new methods, and Sect. 3 establishes their convergence. In Sect. 4, we test the methods on several numerical examples, including systems of nonlinear equations and boundary value problems for nonlinear ODEs. The numerical results are consistent with the theoretical findings, and we compare them with existing methods of the same class.

2 Development of the Methods

Modified Newton’s method—(I)

We propose the following new Newton-type iterative method:

where \(y_{n}=x_n-[F'(x_n)]^{-1}F(x_n)\), \(n=0,1,2,\ldots \)

In particular, choosing \(M=1\), i.e., \(N=2\), we obtain

where \(y_{n}=x_n-[F'(x_n)]^{-1}F(x_n)\), \(n=0,1,2,\ldots \)

We call scheme (9) the NH-1 method.
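Equations (8) and (9) are not reproduced in this version of the text. The node choice \(P_k=\frac{k-0.5}{N}\) in the proof of Theorem 3.1 suggests a composite midpoint rule. The sketch below therefore assumes the generalized scheme has the form \(x_{n+1}=x_n-N\left[\sum_{k=1}^{N}F'(x_n+P_k(y_n-x_n))\right]^{-1}F(x_n)\).

Under this assumption, a scalar expansion reproduces the leading coefficient \(c_2^2-\frac{c_3}{4N^2}\) of \(h_n\) in the proof of Theorem 3.2. With N = 2, one step also costs one evaluation of F and three of F', which matches the efficiency index \(3^{1/(n+3n^2)}\) quoted for NH-1 in Section 4. The function names and the test system are hypothetical.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def nh1_step(F: Callable[[Vector], Vector], J: Callable[[Vector], Matrix],
             x: Vector, N: int = 2) -> Vector:
    """One step of the assumed composite-midpoint scheme (hypothetical reading of (8))."""
    fx = F(x)
    y = [xi - di for xi, di in zip(x, solve(J(x), fx))]  # Newton predictor y_n
    n = len(x)
    avg = [[0.0] * n for _ in range(n)]  # (1/N) * sum_k F'(x_n + P_k (y_n - x_n))
    for k in range(1, N + 1):
        p = (k - 0.5) / N  # midpoint node P_k
        jk = J([xi + p * (yi - xi) for xi, yi in zip(x, y)])
        for i in range(n):
            for j in range(n):
                avg[i][j] += jk[i][j] / N
    step = solve(avg, fx)
    return [xi - si for xi, si in zip(x, step)]


def nh1(F, J, x0: Vector, N: int = 2, tol: float = 1e-12,
        max_iter: int = 50) -> Tuple[Vector, int]:
    """Repeat nh1_step until successive iterates differ by less than tol."""
    x = list(x0)
    for k in range(1, max_iter + 1):
        x_new = nh1_step(F, J, x, N)
        if max(abs(a - b) for a, b in zip(x_new, x)) < tol:
            return x_new, k
        x = x_new
    return x, max_iter


def F(x: Vector) -> Vector:  # hypothetical test system: x1^2 + x2^2 = 4, x1 = x2
    return [x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]]


def J(x: Vector) -> Matrix:
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


root, iterations = nh1(F, J, [1.0, 0.5], N=2)
```

Averaging the Jacobians and dividing by N is algebraically the same as the factor \(N[\sum_k F'(\cdot)]^{-1}\). Only one linear solve per Jacobian combination is needed.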

Modified Newton’s method—(II)

Let

be the Newton iterate computed from \(x_n\), and let \(s_n\) be the iterate produced from \(x_n\) by the generalized NH-1 method, i.e.,

The values from (10) and (11) can then be combined to construct a new method of higher order:

We call scheme (12) the generalized NH-2 method.

In particular, choosing \(M=1\), i.e., \(N=2\), we obtain

We call scheme (13) the NH-2 method.
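Equations (10) to (13) are also missing from this version. The factor U in the proof of Theorem 3.2 appears to be the expansion of \([F'({{\alpha }})]^{-1}F'(y_n)\), which suggests a corrector that applies \(F'(y_n)\) to \(F(s_n)\). The sketch below therefore assumes \(x_{n+1}=s_n-[F'(y_n)]^{-1}F(s_n)\).

Here \(s_n\) is computed by a composite midpoint rule with nodes \(P_k=\frac{k-0.5}{N}\), which is also an assumption. Treat this as a hypothetical reading of the scheme rather than the authors' exact formula. The names and the test system are illustrative.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        acc = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - acc) / m[r][r]
    return x


def nh2_step(F: Callable[[Vector], Vector], J: Callable[[Vector], Matrix],
             x: Vector, N: int = 2) -> Vector:
    """Assumed NH-2 step: composite-midpoint iterate s_n, then
    the corrector s_n - [F'(y_n)]^{-1} F(s_n) (hypothetical reading of (12))."""
    fx = F(x)
    y = [xi - di for xi, di in zip(x, solve(J(x), fx))]  # Newton iterate y_n
    n = len(x)
    avg = [[0.0] * n for _ in range(n)]
    for k in range(1, N + 1):
        p = (k - 0.5) / N  # midpoint node P_k
        jk = J([xi + p * (yi - xi) for xi, yi in zip(x, y)])
        for i in range(n):
            for j in range(n):
                avg[i][j] += jk[i][j] / N
    s = [xi - ti for xi, ti in zip(x, solve(avg, fx))]  # third-order iterate s_n
    c = solve(J(y), F(s))  # assumed corrector with the Jacobian at y_n
    return [si - ci for si, ci in zip(s, c)]


def nh2(F, J, x0: Vector, N: int = 2, tol: float = 1e-12,
        max_iter: int = 50) -> Tuple[Vector, int]:
    """Repeat nh2_step until successive iterates differ by less than tol."""
    x = list(x0)
    for k in range(1, max_iter + 1):
        x_new = nh2_step(F, J, x, N)
        if max(abs(a - b) for a, b in zip(x_new, x)) < tol:
            return x_new, k
        x = x_new
    return x, max_iter


def F(x: Vector) -> Vector:  # hypothetical test system: x1^2 + x2^2 = 4, x1 = x2
    return [x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]]


def J(x: Vector) -> Matrix:
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


root, iterations = nh2(F, J, [1.0, 0.5], N=2)
```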

3 Convergence Analysis

Lemma 3.1

Let \(F:A \subset \mathbb{R}^n \rightarrow \mathbb{R}^n\) be sufficiently many times Fréchet differentiable on a convex set A. For any \(x_0 \in A\) and any \(t\) with \(x_0+t \in A\), Taylor’s expansion reads

where \(\Vert R_{m}\Vert \le \frac{1}{m!}\sup_{0\le \beta \le 1}\Vert F^{(m)}(x_{0}+\beta t)\Vert \,\Vert t\Vert ^m\).

Theorem 3.1

Let \(F:A \subset \mathbb{R}^n \rightarrow \mathbb{R}^n\) be sufficiently many times Fréchet differentiable on a convex set A, and let \({{\alpha }} \in A\) satisfy \(F({{\alpha }})=0\) with \(F'({{\alpha }})\) nonsingular. If the initial guess \(x_0\) is sufficiently close to \({{\alpha }}\), then the iterative method NH-1 converges cubically to \({{\alpha }}\), and its error equation is

where \(e_n=x_n-{{\alpha }}\) and \(c_k=\frac{1}{k!}[F'({{\alpha }})]^{-1}F^{(k)}({{\alpha }})\), \(k\ge 2\).

Proof

Expanding \(F(x_n)\) in a Taylor series about \({{\alpha }}\) and using \(F({{\alpha }})=0\) and \(e_n=x_n-{{\alpha }}\), we get

Similarly, expanding \(F'(x_n)\) about \({{\alpha }}\), we get

From the above two equations

Next, taking the nodes \(P_k=\frac{k-0.5}{N}\), \(k=1,2,\ldots ,N\),

Then

Substituting (16) and (20) into (8), we get

Thus, (22) shows that the iterative scheme NH-1 converges cubically, which completes the proof. \(\Box \)
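As a sanity check on the leading term, the sketch below applies an assumed scalar (n = 1) form of NH-1 to a hypothetical polynomial. The assumed form is the composite midpoint rule with nodes \(P_k=\frac{k-0.5}{N}\), and the polynomial is \(f(x)=e+c_2e^2+c_3e^3\) with \(e=x-1\). Because \(f(1)=0\) and \(f'(1)=1\), its coefficients are exactly the constants \(c_2,c_3\) of the theorem.

Exact rational arithmetic via Python's `fractions.Fraction` removes round-off. The ratio \(e_{n+1}/e_n^3\) should therefore differ from \(c_2^2-\frac{c_3}{4N^2}\), the leading coefficient of \(h_n\) in the proof of Theorem 3.2, only by a term of order \(e_n\).

```python
from fractions import Fraction

# Hypothetical scalar test problem: f(x) = e + c2 e^2 + c3 e^3 with e = x - 1.
# Since f(1) = 0 and f'(1) = 1, the constants c_k of Theorem 3.1 equal C2 and C3.
C2 = Fraction(1)
C3 = Fraction(1)


def f(x: Fraction) -> Fraction:
    e = x - 1
    return e + C2 * e ** 2 + C3 * e ** 3


def df(x: Fraction) -> Fraction:
    e = x - 1
    return 1 + 2 * C2 * e + 3 * C3 * e ** 2


def nh1_scalar(x: Fraction, N: int) -> Fraction:
    """One step of the assumed composite-midpoint NH-1 scheme for n = 1."""
    y = x - f(x) / df(x)  # Newton predictor y_n
    # Average of f' at the midpoint nodes P_k = (2k - 1) / (2N) on the segment [x_n, y_n]
    avg = sum(df(x + Fraction(2 * k - 1, 2 * N) * (y - x)) for k in range(1, N + 1)) / N
    return x - f(x) / avg


def error_ratio(N: int, e: Fraction = Fraction(1, 10 ** 4)) -> Fraction:
    """e_{n+1} / e_n^3 for one exact step started at x_n = 1 + e."""
    return (nh1_scalar(1 + e, N) - 1) / e ** 3


for N in (1, 2, 4):
    predicted = C2 ** 2 - C3 / (4 * N ** 2)
    print(N, float(error_ratio(N)), float(predicted))
```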

Theorem 3.2

Let F satisfy all the conditions of Theorem 3.1. Then the iterative scheme NH-2 has fifth-order convergence, and its error equation is

where \(e_n=x_n-{{\alpha }}\) and \(c_k=\frac{1}{k!}[F'({{\alpha }})]^{-1}F^{(k)}({{\alpha }})\), \(k\ge 2\).

Proof

We start from the third-order error equation of Theorem 3.1,

that is

Replacing \(x_{n+1}\) by \(s_n\) in (24), we have

where \(h_n=(c_{2}^2-\frac{c_3}{4N^2})e_{n}^3+(c_{2}^3+3c_2c_3+\frac{3c_2c_3}{4N^2}-\frac{2c_4}{N^2}-\frac{3c_4}{2N^2})e_{n}^4+O(\Vert e_{n}\Vert ^5)\).

Now

Again, \(y_n=x_n-[F'(x_n)]^{-1}F(x_n)\).

So by using (18), we obtain

And

From (11), we get

and

Using (26) and (28) in (29) yields

where \(K=F'({{\alpha }})\), \(L=e_{n+1}\),

\(U=[1+2c_{2}^2e_{n}^2+4(c_2c_3-c_{2}^3)e_{n}^3+O(\Vert e_{n}\Vert ^4)]\), and

\(V=[(c_{2}^2-\frac{c_3}{4N^2})e_{n}^3+(c_{2}^3+3c_2c_3+\frac{3c_2c_3}{4N^2}-\frac{2c_4}{N^2}-\frac{3c_4}{2N^2})e_{n}^4+O(\Vert e_{n}\Vert ^5)]\).

Simplifying (30), we obtain

and

Further

Hence, (32) shows that the iterative scheme NH-2 has fifth-order convergence, which completes the proof. \(\Box \)
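The fifth-order claim can be checked in the same way. Since (12) and (13) are not shown, we assume the scalar form \(x_{n+1}=s_n-f(s_n)/f'(y_n)\). With \(e_y=y_n-\alpha\approx c_2e_n^2\) and \(e_s=s_n-\alpha\approx (c_2^2-\frac{c_3}{4N^2})e_n^3\), expanding gives \(e_{n+1}=2c_2e_ye_s+O(\Vert e_n\Vert ^6)\).

Hence \(e_{n+1}/e_n^5\) should approach \(2c_2^2(c_2^2-\frac{c_3}{4N^2})\). This constant follows from the assumed form only and is not taken from the error equation stated in Theorem 3.2. The test polynomial is hypothetical, as before.

```python
from fractions import Fraction

# Hypothetical scalar test problem with root 1, f'(1) = 1, so c_2 = C2 and c_3 = C3.
C2 = Fraction(1)
C3 = Fraction(1)


def f(x: Fraction) -> Fraction:
    e = x - 1
    return e + C2 * e ** 2 + C3 * e ** 3


def df(x: Fraction) -> Fraction:
    e = x - 1
    return 1 + 2 * C2 * e + 3 * C3 * e ** 2


def nh2_scalar(x: Fraction, N: int) -> Fraction:
    """Assumed scalar NH-2 step: composite-midpoint s_n, then s_n - f(s_n)/f'(y_n)."""
    y = x - f(x) / df(x)  # Newton iterate y_n
    avg = sum(df(x + Fraction(2 * k - 1, 2 * N) * (y - x)) for k in range(1, N + 1)) / N
    s = x - f(x) / avg  # third-order iterate s_n
    return s - f(s) / df(y)  # assumed corrector with f'(y_n)


def error_ratio5(N: int, e: Fraction = Fraction(1, 10 ** 4)) -> Fraction:
    """e_{n+1} / e_n^5 for one exact step started at x_n = 1 + e."""
    return (nh2_scalar(1 + e, N) - 1) / e ** 5


for N in (1, 2, 4):
    predicted = 2 * C2 ** 2 * (C2 ** 2 - C3 / (4 * N ** 2))
    print(N, float(error_ratio5(N)), float(predicted))
```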

4 Numerical Examples

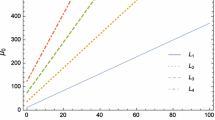

Efficiency index. In all methods of this class, both function values and derivatives are evaluated at each iteration. The efficiency index is defined as \(E.I=p^{1/w}\), where p is the order of convergence and w is the number of scalar function and derivative evaluations per iteration. For a system of n equations in n unknowns, evaluating F requires n function values \(f_i\), \(i=1,2,\ldots ,n\), and evaluating \(F'\) requires \(n^2\) partial derivatives \(\frac{\partial f_i}{\partial x_j}\), \(i,j=1,2,\ldots ,n\). The efficiency indices of the existing methods are then as follows:

NR-1: \(E.I.=3^{\frac{1}{n+2n^{2}}}\), NR-2: \(E.I.=3^{\frac{1}{n+3n^{2}}}\), NGM: \(E.I.=3^{\frac{1}{n+3n^{2}}}\), and M: \(E.I.=5^{\frac{1}{2n+4n^{2}}}\). The efficiency indices of the proposed methods are as follows:

NH-1: \(E.I.=3^{\frac{1}{n+3n^{2}}}\) and NH-2: \(E.I.=5^{\frac{1}{n+4n^{2}}}\).
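The indices above come from counting scalar evaluations per iteration, and the small helper below recomputes them. The evaluation counts in `METHODS` are read off the exponents given in the text. For example, \(5^{1/(2n+4n^2)}\) for M corresponds to two evaluations of F and four of F'. The helper name and dictionary layout are illustrative.

```python
def efficiency_index(p: int, f_evals: int, jac_evals: int, n: int) -> float:
    """E.I. = p**(1/w), where w counts scalar evaluations: n per F and n**2 per F'."""
    w = f_evals * n + jac_evals * n ** 2
    return p ** (1.0 / w)


# (order p, evaluations of F, evaluations of F') per iteration, read off the formulas above
METHODS = {
    "NR-1": (3, 1, 2),
    "NR-2": (3, 1, 3),
    "NGM": (3, 1, 3),
    "M": (5, 2, 4),
    "NH-1": (3, 1, 3),
    "NH-2": (5, 1, 4),
}

for n in (2, 5):
    for name, (p, nf, nj) in METHODS.items():
        print(f"n={n:2d}  {name:5s}  E.I.={efficiency_index(p, nf, nj, n):.8f}")
```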

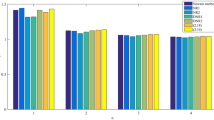

We apply the constructed methods to several examples and compare them with the methods of the same class discussed earlier, namely NR-1 [4], NR-2 [4], NGM [3] and M [3]. For NH-1, NH-2 and these methods, we report the following quantities:

- the number of iterations k;
- the error of the approximate solution \(x_{(k)}\);
- the computational order of convergence (COC1/COC2);
- the computational asymptotic convergence constant (CACC);
- the value of the function \(F(x_{(k)})\).

The comparisons are shown in the corresponding tables.

To compute the computational order of convergence (COC), we have used the following formulae:

For the nonlinear system with unknown exact solution (\({{\alpha }}\))

For the nonlinear system with known exact solution (\({{\alpha }}\))

To compute these quantities, we use the last three approximations of the corresponding iterations.
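The COC formulas referred to above are not reproduced here, so two definitions in common use are sketched below as an assumption. When the root is known, \(\rho\approx \ln(\Vert e_{k+1}\Vert /\Vert e_k\Vert )/\ln(\Vert e_k\Vert /\Vert e_{k-1}\Vert )\), computed from three consecutive iterates. When the root is unknown, the same ratio is formed from the successive differences \(x_{k+1}-x_k\), which requires four iterates. The infinity norm, the function names and the synthetic sequence are choices made for this sketch.

```python
import math
from typing import Sequence


def _dist(a: Sequence[float], b: Sequence[float]) -> float:
    """Infinity-norm distance between two vectors."""
    return max(abs(s - t) for s, t in zip(a, b))


def coc_known_root(x_prev, x_curr, x_next, alpha) -> float:
    """COC from three consecutive iterates when the exact solution alpha is known."""
    e0, e1, e2 = (_dist(x, alpha) for x in (x_prev, x_curr, x_next))
    return math.log(e2 / e1) / math.log(e1 / e0)


def coc_unknown_root(x0, x1, x2, x3) -> float:
    """Approximate COC from four consecutive iterates via successive differences."""
    d1, d2, d3 = _dist(x1, x0), _dist(x2, x1), _dist(x3, x2)
    return math.log(d3 / d2) / math.log(d2 / d1)


# Synthetic cubically convergent sequence e_{k+1} = e_k^3 with root 0 (illustration only)
iterates = [[1e-2], [1e-6], [1e-18], [1e-54]]
print(coc_known_root(*iterates[1:], alpha=[0.0]))
print(coc_unknown_root(*iterates))
```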

Additionally, we evaluate the computational asymptotic convergence constant (CACC) for p-th order convergence, defined as follows:

Example 4.1

Consider the following system with five equations and five unknowns:

where \(x_{(0)}=(1.5,1.5,1.5,1.5,1.5)^T\) is the initial trial solution and \({\alpha }\!=\!(1,1,1,1,1)^T\) is the exact solution. The numerical comparison of the results of the system (33) is shown in Table 1.

Example 4.2

Consider the following system with two equations and two unknowns:

where \(x_{(0)}=(0.5,-0.5)^T\) is the initial trial solution, and the approximate numerical solution of the above nonlinear system is \((1.271384307950132,-0.880819073102661)^T\). The numerical comparison of the results of the system (34) is shown in Table 2.

Example 4.3

Consider the following nonlinear equation:

where \(x_{(0)}=2\) is the initial trial solution and \({\alpha }=4\) is the exact solution. The numerical comparison of the results of the Eq. (35) is shown in Table 3.

Example 4.4

Consider the following nonlinear boundary value problem:

Partition the interval [0, 1] as \(t_0 = 0< t_1<t_2< \cdots<t_{n-1} < t_n=1\), with \(t_i=t_0+ih\) and \(h=1/n\).

Let \(y_{0}=y(t_0)=y(0)=0\), \(y_{1}=y(t_1), \ldots , y_{n-1}=y(t_{n-1})\) and \(y_n=y(t_{n})=y(1)=1\). We use the central-difference approximation \(y_{i}''\approx \frac{y_{i-1}-2y_i+y_{i+1}}{h^2}\), \(i=1,2, \ldots ,n-1\), and choose \(p=\sqrt{3}\) and \(n=10\). This yields the following system of nonlinear equations in nine unknowns.

where \(y_{(0)}=(1,1,1,1,1,1,1,1,1)^T\) is the initial trial solution and the approximate numerical solution of the above nonlinear system is \((0.10630974179490, 0.21259757834295, 0.31873991751981, 0.42444234737133, 0.52918276231997, 0.63216575267634, 0.73229207302272, 0.82814954506063, 0.91803296518217 )^T\). The numerical comparison of the results of the system (37) is shown in Table 4.
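The discretization described above can be sketched as a residual function. The ODE itself is not reproduced here, so the sketch assumes a hypothetical right-hand side g in \(y''=g(t,y)\); the names `fd_residual`, `g` and `g_linear` are illustrative. The boundary values \(y(0)=0\) and \(y(1)=1\), the step \(h=1/n\), and \(n=10\) (nine interior unknowns) follow the text.

```python
from typing import Callable, List, Sequence


def fd_residual(y_inner: Sequence[float], g: Callable[[float, float], float],
                n: int = 10, ya: float = 0.0, yb: float = 1.0) -> List[float]:
    """Residuals of y'' = g(t, y) at the interior nodes t_i = i h, i = 1..n-1,
    with y'' replaced by (y_{i-1} - 2 y_i + y_{i+1}) / h^2 and y_0 = ya, y_n = yb."""
    if len(y_inner) != n - 1:
        raise ValueError("expected n - 1 interior unknowns")
    h = 1.0 / n
    y = [ya, *y_inner, yb]
    return [(y[i - 1] - 2.0 * y[i] + y[i + 1]) / h ** 2 - g(i * h, y[i])
            for i in range(1, n)]


# Hypothetical right-hand side g = 0 (y'' = 0); its exact solution y = t satisfies the scheme.
def g_linear(t: float, y: float) -> float:
    return 0.0


exact = [i / 10 for i in range(1, 10)]
print(max(abs(r) for r in fd_residual(exact, g_linear)))
# Residual at the initial trial vector used in the text (nine ones):
print(fd_residual([1.0] * 9, g_linear))
```

Any of the Newton-type schemes above can then be applied to this residual, with a tridiagonal-plus-diagonal Jacobian.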

Example 4.5

Consider the following system of nonlinear equations:

where \(x_{(0)}=(3.5,3.5,3.5,3.5,3.5)^T\) is the initial trial solution and \({\alpha }\!=\!(1,1,1,1,1)^T\) is the exact solution. The numerical comparison of the results of the system (38) is shown in Table 5.

5 Conclusion

We have constructed two iterative methods for solving systems of nonlinear equations, with third- and fifth-order convergence, respectively. Their computational order of convergence (COC1/COC2), computational asymptotic convergence constant (CACC) and efficiency index (E.I) are comparable to those of the methods of the same class discussed above, and the numerical results agree with the theoretical analysis. The methods are also convenient for solving both systems of nonlinear equations and nonlinear boundary value problems for ordinary differential equations. The proposed methods can thus serve as alternatives to existing methods of the same class.

References

[1] S.D. Conte, C. de Boor, Elementary Numerical Analysis (McGraw-Hill Kogakusha Ltd., 1972)

[2] A.M. Ostrowski, Solution of Equations in Euclidean and Banach Spaces, 3rd edn. (Academic Press, New York, 1973)

[3] Z. Liu, Q. Zheng, C. Huang, Third- and fifth-order Newton-Gauss methods for solving nonlinear equations with n variables. Appl. Math. Comput. 290, 250–257 (2016)

[4] M.A. Noor, M. Waseem, Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 57, 101–106 (2009)

[5] M.A. Noor, Some applications of quadrature formulas for solving nonlinear equations. Nonlinear Anal. Forum 12(1), 91–96 (2007)

[6] M.T. Darvishi, A. Barati, A third-order Newton-type method to solve systems of nonlinear equations. Appl. Math. Comput. 187, 630–635 (2007)

[7] M. Frontini, E. Sormani, Third-order methods from quadrature formulae for solving systems of nonlinear equations. Appl. Math. Comput. 149, 771–782 (2004)

[8] H.H.H. Homeier, A modified Newton method with cubic convergence: the multivariate case. J. Comput. Appl. Math. 169, 161–169 (2004)

[9] M.Q. Khirallah, M.A. Hafiz, Novel three order methods for solving a system of nonlinear equations. Bull. Math. Sci. Appl. 2, 1–14 (2012)

[10] J. Kou, A third-order modification of Newton method for systems of nonlinear equations. Appl. Math. Comput. 191, 117–121 (2007)

[11] M.A. Noor, Fifth-order convergent iterative method for solving nonlinear equations using quadrature formula. J. Math. Control Sci. Appl. 1, 241–249 (2007)

[12] A. Golbabai, M. Javidi, A new family of iterative methods for solving system of nonlinear algebraic equations. Appl. Math. Comput. 190, 1717–1722 (2007)

[13] A. Cordero, J.R. Torregrosa, Variants of Newton’s method using fifth-order quadrature formula. Appl. Math. Comput. 190, 686–698 (2007)

[14] J.E. Dennis, R.B. Schnabel, Numerical Methods for Unconstrained Optimization and Nonlinear Equations (Prentice Hall, 1983)


Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Mishra, B., Pany, A.K., Dutta, S. (2021). New Iterative Methods for a Nonlinear System of Equations with Third and Fifth-Order Convergence. In: Mishra, S.R., Dhamala, T.N., Makinde, O.D. (eds) Recent Trends in Applied Mathematics. Lecture Notes in Mechanical Engineering. Springer, Singapore. https://doi.org/10.1007/978-981-15-9817-3_30


DOI: https://doi.org/10.1007/978-981-15-9817-3_30


Publisher Name: Springer, Singapore

Print ISBN: 978-981-15-9816-6

Online ISBN: 978-981-15-9817-3

eBook Packages: Engineering, Engineering (R0)