Abstract

In physical science, nonlinear singular Lane–Emden and pantograph delay differential equations (LE–PDDEs) have abundant applications and are therefore of great interest to researchers. The present investigation develops a new application of intelligent computing to solve the recently introduced LE–PDDE-based system, which merges a pantograph-type delay differential equation with the standard second-order Lane–Emden equation. Intelligent computing is exploited through Levenberg–Marquardt backpropagation networks (LMBNs) and Bayesian regularization backpropagation networks (BRBNs) to solve nonlinear second-order LE–PDDEs. The performance of the designed LMBNs and BRBNs is substantiated on three case studies through comparison with known exact solutions. The correctness of the designed solvers is further confirmed through error histograms, regression measures and the mean squared error index.


1 Introduction

Research on differential models during the sixteenth century saw the introduction of a specific type of differential model, namely the delay differential (DD) model. The literature on delay differential models offers a substantial contribution to solving real-life problems, as seen in the many applications of DDEs to physical phenomena, for instance transport and propagation, communication network models, economic systems, population dynamics and engineering systems [1,2,3,4,5]. Forde [7] studied delay differential equation models in mathematical biology, whereas Beretta and Kuang [6] derived geometric stability switch criteria for DDEs with delay-dependent parameters. Chapra [8] solved delay and non-delay differential models with the Runge–Kutta method, Rangkuti and Noorani [9] obtained exact solutions by coupling the variational iteration method with the Taylor series, and Frazier solved second-order DDEs with the wavelet Galerkin method [10].

This study considers pantograph delay differential equations (PD-DEs), a type of proportional delay differential equation with numerous applications in mathematical models of broad problems in applied science and technology [11, 12]. Owing to the significance of PD-DEs, several numerical and analytical techniques have been proposed. Methods used to solve PD-DEs include, but are not limited to, collocation [13], multi-wavelets Galerkin [14], Taylor operation [15], modified Chebyshev collocation [16], multistep block [17], fully geometric mesh one-leg [18], partially truncated Euler–Maruyama [19] and Laplace decomposition [20] methods, along with numerous other methodologies [21,22,23,24,25,26,27]. Non-traditional computing procedures have also been implemented for PD-DEs, including neuro-heuristic computational intelligence [28], Bernstein neural networks [29], neuro-swarm intelligent computing [30], computational intelligence for pantograph delay differential systems [31], backpropagated artificial neural networks for pantograph differential models [32] and machine learning for pantograph ODE systems [33].

Besides these pantograph models, researchers have shown keen interest in solving singular problems. The Lane–Emden singular system (LESS) is one such valuable and well-recognized model. LESS describes a wide range of physical phenomena, such as the cooling of radiators, clouds, galaxy clusters, polytropic stars and many other physical problems. LESSs have benefited numerous fields of study, including physical sciences [34], oscillating magnetic field problems [35], electromagnetic problems [36], mathematical physics [37], gaseous stars [38], catalytic diffusion in chemical reactions [39], stellar structure models [40], quantum mechanics [41] and anisotropic media [42].
Alongside these deterministic solvers, stochastic methods based on artificial intelligence have been used extensively in different applications [43,44,45,46,47,48,49]. Applications of such solvers to singular systems include the Thomas–Fermi system of atomic physics [50,51,52], Lane–Emden and Emden–Fowler systems in astrophysics [30, 53,54,55], a doubly singular system [56] and the thermal analysis of a human head model [57]. To the best of our knowledge, no researcher has yet applied these AI techniques to the recently introduced model that integrates singular and proportional delay systems, named Lane–Emden pantograph delay differential equations (LE–PDDEs). This motivated the authors to exploit these AI algorithms to solve the recently reported nonlinear singular LE–PDDEs.

The salient features of the proposed research are listed below:

- An innovative application of backpropagated intelligent networks (BINs) is introduced for the numerical treatment of nonlinear, singular, delay differential models.
- The BINs, comprising Levenberg–Marquardt backpropagation networks (LMBNs) and Bayesian regularization backpropagation networks (BRBNs), are designed for Lane–Emden pantograph delay differential equations (LE–PDDEs).
- The mean squared error (MSE) is used as the figure of merit for training, testing and validation of LMBNs and BRBNs for approximate modeling of the LE–PDDE-based system.
- The superior performance of the developed LMBNs and BRBNs is confirmed through error histograms, regression measures and the mean squared error index.

The rest of this paper is organized as follows: Sect. 2 gives an overview of the LE–PDDE-based system model. Sect. 3 presents the numerical experiments with interpretations of the outcomes, and Sect. 4 provides the conclusions along with potential future applications of the presented methodology.

2 Lane–Emden Pantograph Delay Differential Equations

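Given below is the standard form of LESS [30, 53,54,55]:

$$\frac{1}{x^{\mu}}\frac{d}{dx}\left(x^{\mu}\frac{df}{dx}\right)+h(f)=g(x),\qquad f(0)=\alpha,\quad f'(0)=0, \tag{1}$$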

where µ is the shape parameter, x = 0 is the location of the singularity and α denotes a constant.

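Inspired by Eq. (1) and introducing the proportional delay reported in [58], the LE–PDDE-based system is given as follows:

$$\frac{1}{x^{\mu}}\frac{d}{dx}\Big(x^{\mu}f'(\beta x)\Big)+h\big(f(x)\big)=\beta f''(\beta x)+\frac{\mu}{x}f'(\beta x)+h\big(f(x)\big)=g(x),\qquad f(0)=\alpha,\quad f'(0)=0, \tag{2}$$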

where β represents the pantograph factor in a singular system.

Three variants of LE–PDDEs are chosen for the analysis of the proposed methodology:

Problem 2.1

Consider the nonlinear LE–PDDE (2) for $\mu = 3$, $\beta = 0.5$, $h(f) = f^{2}$ and $g(x) = x^{8} + 2x^{4} + 3x^{2} + 1$ as follows [58]:

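$$\frac{1}{x^{3}}\frac{d}{dx}\Big(x^{3}f'(0.5x)\Big)+\big(f(x)\big)^{2}=x^{8}+2x^{4}+3x^{2}+1,\qquad f(0)=1,\quad f'(0)=0. \tag{3}$$

The reference exact solution of (3) is:

$$f(x)=1+x^{4}. \tag{4}$$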

Problem 2.2

In this case, we consider the LE–PDDE (2) for $\mu = 3$, $\beta = 0.5$, $h(f) = e^{f}$ and $g(x) = e^{1+x^{3}} + 3.75x$ as follows [58]:

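$$\frac{1}{x^{3}}\frac{d}{dx}\Big(x^{3}f'(0.5x)\Big)+e^{f(x)}=e^{1+x^{3}}+3.75x,\qquad f(0)=1,\quad f'(0)=0. \tag{5}$$

The reference exact solution of (5) is:

$$f(x)=1+x^{3}. \tag{6}$$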

Problem 2.3

Here we consider the LE–PDDE (2) for $\mu = 3$, $\beta = 0.5$, $h(f) = f^{-2}$ and $g(x) = -0.5\cos(0.5x) + \sec^{2}x - 3x^{-1}\sin(0.5x)$ as follows [58]:

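$$\frac{1}{x^{3}}\frac{d}{dx}\Big(x^{3}f'(0.5x)\Big)+\frac{1}{\big(f(x)\big)^{2}}=-0.5\cos(0.5x)+\sec^{2}x-\frac{3}{x}\sin(0.5x),\qquad f(0)=1,\quad f'(0)=0. \tag{7}$$

The reference solution of (7) is:

$$f(x)=\cos x. \tag{8}$$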
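A quick consistency check on Problems 2.1–2.3 substitutes candidate exact solutions into the problem data. The Python sketch below assumes that Eq. (2) expands to β f''(βx) + (µ/x) f'(βx) + h(f(x)) = g(x) with µ = 3 and β = 0.5, reads h(f) in Problem 2.3 as f⁻² with a cosine coefficient of −0.5 in g(x), and uses the candidate solutions f(x) = 1 + x⁴, 1 + x³ and cos x, which were obtained here by substitution rather than copied from [58]. The names `PROBLEMS`, `residual` and `max_residual` are illustrative.

```python
import math

# Assumed expanded form of the LE-PDDE (2):
#   beta * f''(beta*x) + (mu/x) * f'(beta*x) + h(f(x)) = g(x)
MU, BETA = 3.0, 0.5

# Candidate exact solution f with its first two derivatives, plus the data h and g.
PROBLEMS = {
    "2.1": {
        "f": lambda x: 1 + x**4,
        "df": lambda x: 4 * x**3,
        "d2f": lambda x: 12 * x**2,
        "h": lambda y: y**2,
        "g": lambda x: x**8 + 2 * x**4 + 3 * x**2 + 1,
    },
    "2.2": {
        "f": lambda x: 1 + x**3,
        "df": lambda x: 3 * x**2,
        "d2f": lambda x: 6 * x,
        "h": math.exp,
        "g": lambda x: math.exp(1 + x**3) + 3.75 * x,
    },
    "2.3": {
        "f": math.cos,
        "df": lambda x: -math.sin(x),
        "d2f": lambda x: -math.cos(x),
        "h": lambda y: y**-2,
        "g": lambda x: (-0.5 * math.cos(0.5 * x) + 1 / math.cos(x) ** 2
                        - 3 * math.sin(0.5 * x) / x),
    },
}


def residual(p, x, mu=MU, beta=BETA):
    """Left-hand side minus right-hand side of the assumed LE-PDDE at x > 0."""
    lhs = (beta * p["d2f"](beta * x)
           + (mu / x) * p["df"](beta * x)
           + p["h"](p["f"](x)))
    return lhs - p["g"](x)


def max_residual(name, n=100, a=0.01, b=1.0):
    """Largest |residual| on n equally spaced points of [a, b]; a > 0 avoids x = 0."""
    p = PROBLEMS[name]
    xs = [a + i * (b - a) / (n - 1) for i in range(n)]
    return max(abs(residual(p, x)) for x in xs)


for name in PROBLEMS:
    print(name, max_residual(name))
```

The check stays on [0.01, 1] because the term µ/x is singular at x = 0 and, for Problem 2.3, sec² x is unbounded at x = π/2. Round-off-level maxima indicate that the data and the candidate solutions are mutually consistent under the stated assumptions.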

3 Numerical Computing with Discussion

This section presents the numerical experiments, with the necessary discussion, on solving LE–PDDEs with the proposed LMBNs and BRBNs.

The methodology adopted in this study is illustrated by the flowchart in Fig. 1, and the outcomes of the numerical experiments conducted with LMBNs and BRBNs on the three selected LE–PDDE problems of Eqs. (3–8) are presented below with the necessary interpretations. The backpropagation networks, whose weights are trained with the Levenberg–Marquardt and Bayesian regularization schemes, are implemented with the 'nftool' routine of the MATLAB Neural Network Toolbox. Figure 2 shows the network design, with nine neurons using the log-sigmoid transfer function in the hidden layer.
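The two-layer structure of Fig. 2 can be written as y(x) = Σᵢ vᵢ σ(wᵢx + bᵢ) + c, with σ the log-sigmoid. The pure-Python sketch below shows only the forward pass of such a 1–9–1 network; the linear output neuron, the parameter layout and the function names are assumptions, and any input/output preprocessing performed by 'nftool' is not modeled.

```python
import math
import random


def logsig(z):
    """Log-sigmoid transfer function 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_hidden=9, seed=0):
    """Random weights for a 1-n_hidden-1 network (hypothetical layout)."""
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # input -> hidden weights
        "b1": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # hidden biases
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],  # hidden -> output weights
        "b2": rng.uniform(-1, 1),                             # output bias
    }


def forward(params, x):
    """Scalar output: logsig hidden layer followed by an assumed linear output neuron."""
    hidden = [logsig(w * x + b) for w, b in zip(params["w1"], params["b1"])]
    return sum(v * a for v, a in zip(params["w2"], hidden)) + params["b2"]


params = init_params()
print(forward(params, 0.5))
```

With nine hidden neurons the network has 9 + 9 + 9 + 1 = 28 adjustable weights and biases; this is the vector that the Levenberg–Marquardt and Bayesian regularization schemes adjust during training.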

For each of Problems 2.1–2.3, the dataset was generated from the exact solutions in Eqs. (4), (6) and (8) at 201 inputs in the interval [0, 2], and the same dataset was used for both LMBNs and BRBNs. The dataset was divided randomly into three parts: 70% for training, 15% for validation and 15% for testing. As shown in Fig. 2, the fitting tool of the 'nftool' routine, based on a two-layer feedforward network structure, is applied to solve all three LE–PDDE problems.
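The dataset construction and the random 70/15/15 division can be sketched as follows. The exact solution passed in (here f(x) = 1 + x⁴, assumed for Problem 2.1), the seed and the rounding used to size the three subsets are assumptions of this sketch; MATLAB's own random division routine may size the subsets slightly differently.

```python
import random


def make_dataset(exact, a=0.0, b=2.0, n=201):
    """n equally spaced inputs on [a, b] paired with exact-solution targets."""
    step = (b - a) / (n - 1)
    return [(a + i * step, exact(a + i * step)) for i in range(n)]


def random_split(data, train=0.70, val=0.15, seed=0):
    """Shuffle indices once and cut them into training, validation and test subsets."""
    idx = list(range(len(data)))
    random.Random(seed).shuffle(idx)
    n_train = round(train * len(data))
    n_val = round(val * len(data))

    def pick(ids):
        return [data[i] for i in ids]

    return (pick(idx[:n_train]),
            pick(idx[n_train:n_train + n_val]),
            pick(idx[n_train + n_val:]))


data = make_dataset(lambda x: 1 + x**4)  # assumed exact solution of Problem 2.1
train_set, val_set, test_set = random_split(data)
print(len(train_set), len(val_set), len(test_set))  # 141 30 30
```

Shuffling once and slicing guarantees that the three subsets are disjoint and together cover all 201 points.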

For the three LE–PDDEs, i.e., Problems 2.1 to 2.3, the results of LMBNs and BRBNs are listed in Tables 1 and 2, respectively, which report the MSE fitness, epochs, training/testing/validation performance, backpropagation measures and time duration. For LMBNs the performance values are around $10^{-10}$, whereas for BRBNs they range from $10^{-12}$ to $10^{-11}$. The corresponding MSE values for training, validation and testing are about $10^{-10}$ for LMBNs and about $10^{-12}$ to $10^{-11}$ for BRBNs. The computational complexity, in the form of the execution time used to train the weights of both backpropagated networks, is also listed in Tables 1 and 2 for all three problems; LMBNs and BRBNs require almost the same computational time. Overall, these outcomes show that both networks find the numerical solution of LE–PDDEs with similar and consistent accuracy.
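Bayesian regularization does not minimize the data error alone: it minimizes F = β_D E_D + α_W E_W, where E_D is the sum of squared errors and E_W the sum of squared weights, and it re-estimates α_W and β_D from the effective number of parameters γ. The numpy sketch below shows one such re-estimation in the Gauss–Newton form of MacKay's evidence framework (as popularized by Foresee and Hagan); it illustrates the technique and is not MATLAB's trainbr source. The function name is hypothetical, and α_W, β_D (`alpha`, `beta` in the code) are unrelated to α and β of Eq. (2).

```python
import numpy as np


def br_update(J, errors, weights, alpha, beta):
    """One Gauss-Newton re-estimation of the Bayesian-regularization hyperparameters.

    J       : (n_samples, n_params) Jacobian of network outputs w.r.t. the weights
    errors  : (n_samples,) target minus output
    weights : (n_params,) current weight vector
    alpha, beta : current multipliers of E_W and E_D in F = beta*E_D + alpha*E_W
    Returns (new_alpha, new_beta, gamma, F at the current alpha and beta).
    """
    n_samples, n_params = J.shape
    e_d = float(errors @ errors)    # sum of squared errors
    e_w = float(weights @ weights)  # sum of squared weights (assumed nonzero)
    # Gauss-Newton approximation of the Hessian of F
    hessian = 2.0 * beta * (J.T @ J) + 2.0 * alpha * np.eye(n_params)
    # Effective number of parameters that the data actually determine
    gamma = n_params - 2.0 * alpha * float(np.trace(np.linalg.inv(hessian)))
    new_alpha = gamma / (2.0 * e_w)
    new_beta = (n_samples - gamma) / (2.0 * e_d)
    return new_alpha, new_beta, gamma, beta * e_d + alpha * e_w


rng = np.random.default_rng(0)
J = rng.normal(size=(30, 5))
print(br_update(J, rng.normal(size=30), rng.normal(size=5), alpha=0.01, beta=1.0))
```

γ lies between 0 (data constrain no weights) and the number of weights (data constrain all of them). In a full training loop, each Levenberg–Marquardt step would minimize F instead of E_D alone, with α_W and β_D re-estimated after every step.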

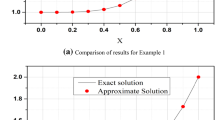

Figures 3 and 4 show, for Problem 2.1, the MSE-based objective function, the training, validation and testing performance, the state transition index and the regression outcomes of LMBNs and BRBNs, respectively. Figures 5 and 6 present the corresponding approximate solutions together with the error dynamics, i.e., the difference between the proposed results and the available exact solutions. Similarly, the outcomes of LMBNs and BRBNs for Problems 2.2 and 2.3 are given in Figs. 7, 8, 9, 10 and Figs. 11, 12, 13, 14, respectively.

Fig. 3 The results of LMBNs for LE–PDDE of Problem 2.1: a convergence curves, b transition states, c histograms, d regression index

Fig. 4 The results of BRBNs for LE–PDDE of Problem 2.1: a convergence curves, b transition states, c histograms, d regression index

Fig. 5 Comparison of results for LMBNs in case of LE–PDDE in Problem 2.1

Fig. 6 Comparison of results for BRBNs in case of LE–PDDE in Problem 2.1

Fig. 7 The results of LMBNs for LE–PDDE of Problem 2.2: a convergence curves, b transition states, c histograms, d regression index

Fig. 8 The results of BRBNs for LE–PDDE of Problem 2.2: a convergence curves, b transition states, c histograms, d regression index

Fig. 9 Comparison of results for LMBNs in case of LE–PDDE in Problem 2.2

Fig. 10 Comparison of results for BRBNs in case of LE–PDDE in Problem 2.2

Fig. 11 The results of LMBNs for LE–PDDE of Problem 2.3: a convergence curves, b transition states, c histograms, d regression index

Fig. 12 The results of BRBNs for LE–PDDE of Problem 2.3: a convergence curves, b transition states, c histograms, d regression index

Fig. 13 Comparison of results for LMBNs in case of LE–PDDE in Problem 2.3

Fig. 14 Comparison of results for BRBNs in case of LE–PDDE in Problem 2.3

The MSE performance of training, validation and testing against epochs is shown in Figs. 3a, 4a, 7a, 8a, 11a and 12a for Problems 2.1, 2.2 and 2.3 of LE–PDDE, respectively. The best curves are obtained at 1000, 1000 and 168 epochs for LMBNs, with MSE of approximately $10^{-10}$ to $10^{-9}$ in all three problems, whereas for BRBNs they are obtained at 1000, 662 and 201 epochs, with MSE of about $10^{-10}$ to $10^{-9}$, $10^{-11}$ to $10^{-10}$ and $10^{-12}$ to $10^{-11}$ for Problems 2.1 to 2.3, respectively.
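Epoch counts such as 1000 and 168 are what one sees when training ends either at an epoch limit or once a stopping test fires. The sketch below shows one such test, validation stopping, assuming trainlm-style defaults of 1000 epochs and six consecutive validation failures; the text does not state which criterion ended each reported run, and the function name is hypothetical.

```python
def validation_stop(val_errors, max_fail=6, max_epochs=1000):
    """Return (best_epoch, stop_epoch) for a validation-error curve.

    val_errors[k] is the validation error after epoch k (k = 0 is the initial
    network). Training stops when the error has not improved on its best value
    for max_fail consecutive epochs, or when max_epochs is reached; the weights
    from best_epoch are the ones kept.
    """
    best_epoch, best_err, fails = 0, val_errors[0], 0
    last_epoch = min(len(val_errors) - 1, max_epochs)
    for epoch in range(1, last_epoch + 1):
        if val_errors[epoch] < best_err:
            best_epoch, best_err, fails = epoch, val_errors[epoch], 0
        else:
            fails += 1
            if fails >= max_fail:
                return best_epoch, epoch
    return best_epoch, last_epoch


curve = [1.0, 0.9, 0.8, 0.7, 0.6, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2]
print(validation_stop(curve))  # (5, 11)
```

A curve that keeps improving runs to the epoch limit, which is one way a count of exactly 1000 epochs can arise.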

Sub-figures 3b, 4b, 7b, 8b, 11b and 12b show the gradient and the parameter Mu of the backpropagation procedure for Problems 2.1, 2.2 and 2.3, respectively. For Levenberg–Marquardt-based backpropagation, the gradient and Mu values are approximately $10^{-8}$ to $10^{-6}$ and $10^{-9}$ to $10^{-7}$, respectively, while the corresponding values for BRBNs are about $10^{-8}$ to $10^{-7}$ and $10^{-10}$ to $10^{-7}$. The smaller variation in the gradient and Mu indicates the relatively more stable performance of BRBNs compared with LMBNs.
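The gradient and Mu quantities in these panels come from the Levenberg–Marquardt update w ← w + (JᵀJ + Mu·I)⁻¹Jᵀe, where J is the Jacobian of the network output with respect to the weights and e = t − y is the error vector; Mu is decreased after a successful step and increased after a failed one. The numpy sketch below trains a 1–9–1 log-sigmoid network this way. Mu (`mu` in the code) is unrelated to the shape parameter µ of Eq. (2); the starting value 0.001, the factors 0.1 and 10 and the cap 1e10 follow the usual trainlm defaults, while the lower floor on Mu, the function names and the target f(x) = 1 + x⁴ are assumptions.

```python
import numpy as np


def logsig(z):
    """Log-sigmoid; overflow of exp for very negative z just yields 0."""
    with np.errstate(over="ignore"):
        return 1.0 / (1.0 + np.exp(-z))


def split(w, n_hidden):
    """Unpack the flat weight vector [w1, b1, w2, b2]."""
    h = n_hidden
    return w[:h], w[h:2 * h], w[2 * h:3 * h], w[-1]


def predict(w, x, n_hidden):
    """Outputs of a 1-n_hidden-1 network with logsig hidden and linear output."""
    w1, b1, w2, b2 = split(w, n_hidden)
    return logsig(np.outer(x, w1) + b1) @ w2 + b2


def jacobian(w, x, n_hidden):
    """d(output)/d(weights), shape (len(x), 3*n_hidden + 1)."""
    w1, b1, w2, _ = split(w, n_hidden)
    a = logsig(np.outer(x, w1) + b1)  # hidden activations, (N, H)
    da = a * (1.0 - a) * w2           # chain rule through logsig
    return np.hstack([da * x[:, None], da, a, np.ones((x.size, 1))])


def train_lm(x, t, n_hidden=9, epochs=200, mu=1e-3, mu_dec=0.1,
             mu_inc=10.0, mu_max=1e10, seed=0):
    """Levenberg-Marquardt fit of targets t; returns weights, MSE, |J^T e| and mu."""
    w = np.random.default_rng(seed).uniform(-1.0, 1.0, 3 * n_hidden + 1)
    e = t - predict(w, x, n_hidden)
    sse = float(e @ e)
    grad_norm = float("inf")
    for _ in range(epochs):
        J = jacobian(w, x, n_hidden)
        g = J.T @ e                   # negative gradient of 0.5 * SSE
        grad_norm = float(np.linalg.norm(g))
        A = J.T @ J                   # Gauss-Newton Hessian approximation
        accepted = False
        while mu <= mu_max:
            step = np.linalg.solve(A + mu * np.eye(A.shape[0]), g)
            e_new = t - predict(w + step, x, n_hidden)
            sse_new = float(e_new @ e_new)
            if sse_new < sse:         # success: lean towards Gauss-Newton
                w, e, sse = w + step, e_new, sse_new
                mu = max(mu * mu_dec, 1e-12)  # floor keeps the system well conditioned
                accepted = True
                break
            mu *= mu_inc              # failure: lean towards gradient descent
        if not accepted:              # mu exceeded mu_max
            break
    return w, sse / x.size, grad_norm, mu


x = np.linspace(0.0, 1.0, 51)
w, mse, grad_norm, mu = train_lm(x, 1.0 + x**4)  # assumed exact solution, Problem 2.1
print(f"MSE={mse:.2e}  |J^T e|={grad_norm:.2e}  mu={mu:.1e}")
```

Small Mu makes the step close to Gauss–Newton and large Mu makes it a short gradient-descent step, which is why Mu and the gradient shrink together as training settles.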

The deviations of the LMBN and BRBN solutions from the reference solutions are shown in Figs. 5, 6, 9, 10, 13 and 14 for Problems 2.1 to 2.3, respectively. Both sets of results agree with the reference solutions to 5–7 decimal places. Furthermore, the LMBNs are comparatively less effective than the BRBNs for Problem 2.2, whereas the BRBNs perform reliably for all three variants of LE–PDDEs.
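The "5–7 decimal places" statement can be read as −log₁₀ of the absolute error |f_ref − f_net|. A small helper, with hypothetical names, makes that conversion explicit; the sample numbers in the usage lines are illustrative and are not the paper's results.

```python
import math


def absolute_errors(reference, approx):
    """Pointwise absolute error |reference - approximation|."""
    return [abs(r - a) for r, a in zip(reference, approx)]


def decimal_places(error):
    """Matching decimal places implied by an absolute error, i.e. -log10(error)."""
    return math.inf if error == 0 else -math.log10(error)


# Illustrative numbers only, not results from the paper.
errs = absolute_errors([1.0, 1.5], [1.0 + 3e-6, 1.5 - 2e-7])
print([round(decimal_places(e), 1) for e in errs])
```

An absolute error of 10⁻⁶ corresponds to six matching decimal places, so errors between 10⁻⁷ and 10⁻⁵ span the quoted 5–7 range.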

Histogram-based error analysis was carried out for both LMBNs and BRBNs, and its outcome for Problems 2.1, 2.2 and 2.3 is shown in Figs. 3c, 4c, 7c, 8c, 11c and 12c, respectively. The error bin closest to the ideal value of zero lies at about $10^{-7}$ to $10^{-6}$ for LMBNs and at about $10^{-8}$ to $10^{-6}$ for BRBNs. The results show only a minuscule difference in performance between the two methodologies, with smaller (better) errors obtained by BRBNs than by LMBNs.
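An error histogram of this kind sorts the errors e = target − output into equal-width bins and counts them; the bin whose centre is nearest zero is the zero-error bin discussed above. The sketch below assumes 20 bins, the number typically shown by MATLAB's error-histogram plot, and its function names are hypothetical.

```python
def error_histogram(errors, n_bins=20):
    """Equal-width histogram of errors; returns bin centres and counts."""
    lo, hi = min(errors), max(errors)
    width = (hi - lo) / n_bins or 1.0  # guard against all errors being identical
    counts = [0] * n_bins
    for e in errors:
        k = min(int((e - lo) / width), n_bins - 1)  # the top edge goes in the last bin
        counts[k] += 1
    centres = [lo + (k + 0.5) * width for k in range(n_bins)]
    return centres, counts


def zero_error_bin(centres, counts):
    """Centre and count of the bin whose centre is closest to zero error."""
    k = min(range(len(centres)), key=lambda i: abs(centres[i]))
    return centres[k], counts[k]


sample = [-2e-7, -5e-8, 1e-8, 3e-8, 4e-8, 9e-8, 2.5e-7]  # illustrative errors only
centres, counts = error_histogram(sample, n_bins=5)
print(zero_error_bin(centres, counts))
```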

For a complete assessment, regression analysis was also carried out on the training, testing and validation data of both LMBNs and BRBNs. Figures 3d, 4d, 7d, 8d, 11d and 12d show the regression index for Problems 2.1, 2.2 and 2.3, respectively. For both LMBNs and BRBNs, the correlation is very close to unity, i.e., R ≈ 1, for almost every variant of LE–PDDE.
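The regression index R in these panels is the Pearson correlation between network outputs and targets, usually plotted together with the least-squares line output ≈ slope · target + intercept. A minimal pure-Python version, with a hypothetical function name, is:

```python
import math


def regression_stats(targets, outputs):
    """Pearson correlation R and least-squares line output ~ slope*target + intercept."""
    n = len(targets)
    mt = sum(targets) / n
    mo = sum(outputs) / n
    stt = sum((t - mt) ** 2 for t in targets)
    soo = sum((o - mo) ** 2 for o in outputs)
    sto = sum((t - mt) * (o - mo) for t, o in zip(targets, outputs))
    r = sto / math.sqrt(stt * soo)
    slope = sto / stt
    intercept = mo - slope * mt
    return r, slope, intercept


print(regression_stats([0.0, 1.0, 2.0, 3.0], [0.0, 1.1, 1.9, 3.0]))
```

R = 1 together with slope 1 and intercept 0 means the outputs reproduce the targets exactly; R close to 1 alone only says the relation is close to linear.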

Consider now LE–PDDEs other than Problems 2.1 to 2.3, for which neither exact solutions nor reference numerical solutions are available. In such scenarios no training targets can be obtained, as is the case for, e.g., the nonlinear singular Thomas–Fermi equation [51], the nonlinear fractional Riccati equation [59] and the nonlinear singular Flierl–Petviashvili equations [60]. The proposed LMBNs and BRBNs therefore cannot be applied directly; however, unsupervised versions of these neural networks, as reported in [61, 62], can be exploited to find solutions of LE–PDDEs in such scenarios.
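In an unsupervised formulation of the kind referred to in [61, 62], the network is trained to make the residual of the differential equation vanish instead of matching target data. The sketch below only builds such a fitness function, for the LE–PDDE written as β f''(βx) + (µ/x) f'(βx) + h(f(x)) = g(x) with f(0) = α and f'(0) = 0, using analytic x-derivatives of a log-sigmoid network. The collocation grid, the equal weighting of residual and initial-condition terms, and all names are assumptions; minimizing the fitness would still require an optimizer.

```python
import math


def logsig(z):
    return 1.0 / (1.0 + math.exp(-z))


def net_and_derivs(params, x):
    """Network value y(x) and its first two x-derivatives (1-n-1, logsig hidden layer)."""
    y = params["b2"]
    dy = d2y = 0.0
    for w, b, v in zip(params["w1"], params["b1"], params["w2"]):
        s = logsig(w * x + b)
        ds = s * (1.0 - s)            # sigma'
        d2s = ds * (1.0 - 2.0 * s)    # sigma''
        y += v * s
        dy += v * w * ds
        d2y += v * w * w * d2s
    return y, dy, d2y


def unsupervised_fitness(params, h, g, alpha=1.0, mu=3.0, beta=0.5, xs=None):
    """Mean squared equation residual plus initial-condition penalties."""
    xs = xs or [0.1 * k for k in range(1, 11)]  # collocation points in (0, 1]
    total = 0.0
    for x in xs:
        _, dy_b, d2y_b = net_and_derivs(params, beta * x)  # derivatives at beta*x
        y_x, _, _ = net_and_derivs(params, x)              # value at x
        r = beta * d2y_b + (mu / x) * dy_b + h(y_x) - g(x)
        total += r * r
    y0, dy0, _ = net_and_derivs(params, 0.0)
    return total / len(xs) + (y0 - alpha) ** 2 + dy0 ** 2


flat = {"w1": [0.0] * 9, "b1": [0.0] * 9, "w2": [0.0] * 9, "b2": 1.0}
print(unsupervised_fitness(flat, h=lambda y: y * y, g=lambda x: 1.0))
```

The constant network y = 1 makes the fitness vanish for the toy data h(f) = f², g(x) = 1, α = 1; for a real LE–PDDE one would pass its own h and g and search for weights that drive the fitness towards zero.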

4 Conclusions

This research pursues intelligent backpropagated networks that exploit the Levenberg–Marquardt and Bayesian regularization optimization mechanisms to solve a recently introduced nonlinear, singular, delay system known as the nonlinear second-order Lane–Emden pantograph delay differential equation. A dataset for training, validation and testing was formed from the available exact solutions of the LE–PDDE variants. Two types of intelligent backpropagated networks, LMBNs and BRBNs, were applied to this dataset for approximate modeling of the LE–PDDE-based systems, with the mean squared error as fitness. The performance of the developed LMBNs and BRBNs on LE–PDDEs is confirmed by close agreement with the available solutions and further validated by regression analyses and error histograms. Besides reasonably precise solutions of LE–PDDEs, the proposed LMBNs and BRBNs offer further key advantages: a simple concept, ease of implementation, stability, convergence, robustness, extendibility and applicability.

In future, Bernstein and Legendre ANNs, as well as deep versions of LMBNs and BRBNs together with proofs of their theoretical convergence, may be investigated so that these methodologies can be exploited effectively to solve a variety of nonlinear systems of paramount interest [29, 63,64,65,66,67,68]. Additionally, the proposed LMBNs and BRBNs, as well as the deep versions of both intelligent computing paradigms, can be extended to singularly perturbed variants of LE–PDDEs.

References

Rakhshan, S.A.; Effati, S.: A generalized Legendre–Gauss collocation method for solving nonlinear fractional differential equations with time varying delays. Appl. Numer. Math. 146, 342–360 (2019)

Kuang, Y. (ed.): Delay Differential Equations: with Applications in Population Dynamics. Academic Press, Cambridge (1993)

Li, W.; Chen, B.; Meng, C.; Fang, W.; Xiao, Y.; Li, X.; Hu, Z.; Xu, Y.; Tong, L.; Wang, H.; Liu, W.: Ultrafast all-optical graphene modulator. Nano Lett. 14(2), 955–959 (2014)

Saray, B.N.; Lakestani, M.: On the sparse multi-scale solution of the delay differential equations by an efficient algorithm. Appl. Math. Comput. 381, 125291 (2020)

Narasingam, A.; Kwon, J.S.I.: Application of Koopman operator for model-based control of fracture propagation and proppant transport in hydraulic fracturing operation. J. Process Control 91, 25–36 (2020)

Beretta, E.; Kuang, Y.: Geometric stability switch criteria in delay differential systems with delay dependent parameters. SIAM J. Math. Anal. 33(5), 1144–1165 (2002)

Forde, J.E.: Delay Differential Equation Models in Mathematical Biology. University of Michigan, Ann Arbor, pp. 5436–5436 (2005)

Chapra, S.C.: Applied Numerical Methods. McGraw-Hill, Columbus (2012)

Rangkuti, Y.M.; Noorani, M.S.M.: The exact solution of delay differential equations using coupling variational iteration with Taylor series and small term. Bull. Math. 4(01), 1–15 (2012)

Frazier, M.W.: Background: complex numbers and linear algebra. In: An Introduction to Wavelets Through Linear Algebra. Undergraduate Texts in Mathematics, pp. 7–100. Springer, New York (1999). https://doi.org/10.1007/0-387-22653-2_2

Getto, P.; Waurick, M.: A differential equation with state-dependent delay from cell population biology. J. Differ. Equ. 260(7), 6176–6200 (2016)

Wazwaz, A.M., et al.: Reliable treatment for solving boundary value problems of pantograph delay differential equation. Rom. Rep. Phys. 69, 102 (2017)

Isah, A.; Phang, C.; Phang, P.: Collocation method based on Genocchi operational matrix for solving generalized fractional pantograph equations. Int. J. Differ. Equ. 2017, 2097317 (2017). https://doi.org/10.1155/2017/2097317

Saray, B.N.; Manafian, J.: Sparse representation of delay differential equation of Pantograph type using multi-wavelets Galerkin method. Eng. Comput. 35(2), 887–903 (2018)

Yüzbaşı, Ş.; Ismailov, N.: A Taylor operation method for solutions of generalized pantograph type delay differential equations. Turk. J. Math. 42(2), 395–406 (2018)

Yang, C.: Modified Chebyshev collocation method for pantograph-type differential equations. Appl. Numer. Math. 134, 132–144 (2018)

Katani, R.: Multistep block method for linear and nonlinear pantograph type delay differential equations with neutral term. Int. J. Appl. Comput. Math. 3(1), 1347–1359 (2017)

Wang, W.: Fully-geometric mesh one-leg methods for the generalized pantograph equation: approximating Lyapunov functional and asymptotic contractivity. Appl. Numer. Math. 117, 50–68 (2017)

Zhan, W.; Gao, Y.; Guo, Q.; Yao, X.: The partially truncated Euler–Maruyama method for nonlinear pantograph stochastic differential equations. Appl. Math. Comput. 346, 109–126 (2019)

Koroma, M.A.; Zhan, C.; Kamara, A.F.; Sesay, A.B.: Laplace decomposition approximation solution for a system of multi-pantograph equations. Int. J. Math. Comput. Sci. Eng. 7(7), 39–44 (2013)

Eriqat, T.; El-Ajou, A.; Moa’ath, N.O.; Al-Zhour, Z.; Momani, S.: A new attractive analytic approach for solutions of linear and nonlinear neutral fractional pantograph equations. Chaos Solitons Fractals 138, 109957 (2020)

Ezz-Eldien, S.S.; Wang, Y.; Abdelkawy, M.A.; Zaky, M.A.; Aldraiweesh, A.A.; Machado, J.T.: Chebyshev spectral methods for multi-order fractional neutral pantograph equations. Nonlinear Dyn. 100, 1–13 (2020)

Alsuyuti, M.M.; Doha, E.H.; Ezz-Eldien, S.S.; Youssef, I.K.: Spectral Galerkin schemes for a class of multi-order fractional pantograph equations. J. Comput. Appl. Math. 384, 113157 (2020)

Wang, L.P.; Chen, Y.M.; Liu, D.Y.; Boutat, D.: Numerical algorithm to solve generalized fractional pantograph equations with variable coefficients based on shifted Chebyshev polynomials. Int. J. Comput. Math. 96(12), 2487–2510 (2019)

Dehestani, H.; Ordokhani, Y.; Razzaghi, M.: Numerical technique for solving fractional generalized pantograph-delay differential equations by using fractional-order hybrid bessel functions. Int. J. Appl. Comput. Math. 6(1), 1–27 (2020)

Hashemi, M.S.; Atangana, A.; Hajikhah, S.: Solving fractional pantograph delay equations by an effective computational method. Math. Comput. Simul. 177, 295–305 (2020)

Rabiei, K.; Ordokhani, Y.: Solving fractional pantograph delay differential equations via fractional-order Boubaker polynomials. Eng. Comput. 35(4), 1431–1441 (2019)

Raja, M.A.Z.; Ahmad, I.; Khan, I.; Syam, M.I.; Wazwaz, A.M.: Neuro-heuristic computational intelligence for solving nonlinear pantograph systems. Front. Inf. Technol. Electron. Eng. 18(4), 464–484 (2017)

Sun, H.; Hou, M.; Yang, Y.; Zhang, T.; Weng, F.; Han, F.: Solving partial differential equation based on Bernstein neural network and extreme learning machine algorithm. Neural Process. Lett. 50(2), 1153–1172 (2019)

Sabir, Z., et al.: Neuro-swarm intelligent computing to solve the second-order singular functional differential model. Eur. Phys. J. Plus 135(6), 474 (2020)

Raja, M.A.Z.: Numerical treatment for boundary value problems of pantograph functional differential equation using computational intelligence algorithms. Appl. Soft Comput. 24, 806–821 (2014)

Khan, I., et al.: Design of neural network with Levenberg–Marquardt and Bayesian regularization backpropagation for solving pantograph delay differential equations. IEEE Access 8, 137918–137933 (2020)

Mosavi, A.; Shokri, M.; Mansor, Z.; Qasem, S.N.; Band, S.S.; Mohammadzadeh, A.: Machine learning for modeling the singular multi-pantograph equations. Entropy 22(9), 1041 (2020)

Mandelzweig, V.B.; Tabakin, F.: Quasi linearization approach to nonlinear problems in physics with application to nonlinear ODEs. Comput. Phys. Commun. 141(2), 268–281 (2001)

Dehghan, M.; Shakeri, F.: Solution of an integro-differential equation arising in oscillating magnetic fields using He’s homotopy perturbation method. Progress Electromagn. Res. 78, 361–376 (2008)

Khan, J.A., et al.: Nature-inspired computing approach for solving non-linear singular Emden–Fowler problem arising in electromagnetic theory. Connect. Sci. 27(4), 377–396 (2015)

Bhrawy, A.H.; Alofi, A.S.; Van Gorder, R.A.: An efficient collocation method for a class of boundary value problems arising in mathematical physics and geometry. Abstr. Appl. Anal. 2014, 425648 (2014). https://doi.org/10.1155/2014/425648

Luo, T.; Xin, Z.; Zeng, H.: Nonlinear asymptotic stability of the Lane–Emden solutions for the viscous gaseous star problem with degenerate density dependent viscosities. Commun. Math. Phys. 347(3), 657–702 (2016)

Rach, R.; Duan, J.S.; Wazwaz, A.M.: Solving coupled Lane–Emden boundary value problems in catalytic diffusion reactions by the Adomian decomposition method. J. Math. Chem. 52(1), 255–267 (2014)

Taghavi, A.; Pearce, S.: A solution to the Lane–Emden equation in the theory of stellar structure utilizing the Tau method. Math. Methods Appl. Sci. 36(10), 1240–1247 (2013)

Ramos, J.I.: Linearization methods in classical and quantum mechanics. Comput. Phys. Commun. 153(2), 199–208 (2003)

Rădulescu, V.; Repovš, D.: Combined effects in nonlinear problems arising in the study of anisotropic continuous media. Nonlinear Anal. Theory Methods Appl. 75(3), 1524–1530 (2012)

Ahmad, I., et al.: Integrated neuro-evolution-based computing solver for dynamics of nonlinear corneal shape model numerically. Neural Comput. Appl. (2020). https://doi.org/10.1007/s00521-020-05355-y

Umar, M., et al.: A stochastic computational intelligent solver for numerical treatment of mosquito dispersal model in a heterogeneous environment. Eur. Phys. J. Plus 135(7), 1–23 (2020)

Mehmood, A., et al.: Integrated computational intelligent paradigm for nonlinear electric circuit models using neural networks, genetic algorithms and sequential quadratic programming. Neural Comput. Appl. 32(14), 10337–10357 (2020)

Bukhari, A.H., et al.: Design of a hybrid NAR-RBFs neural network for nonlinear dusty plasma system. Alex. Eng. J. 59, 3325–3345 (2020)

Umar, M., et al.: Stochastic numerical technique for solving HIV infection model of CD4+ T cells. Eur. Phys. J. Plus 135(6), 403 (2020)

Raja, M.A.Z.; Manzar, M.A.; Shah, S.M.; Chen, Y.: Integrated intelligence of fractional neural networks and sequential quadratic programming for Bagley–Torvik systems arising in fluid mechanics. J. Comput. Nonlinear Dyn. 15(5) (2020)

Ahmad, I., et al.: Novel applications of intelligent computing paradigms for the analysis of nonlinear reactive transport model of the fluid in soft tissues and microvessels. Neural Comput. Appl. 31(12), 9041–9059 (2019)

Siraj-ul-Islam, A., et al.: A new heuristic computational solver for nonlinear singular Thomas–Fermi system using evolutionary optimized cubic splines. Eur. Phys. J. Plus 135(1), 1–29 (2020)

Sabir, Z., et al.: Neuro-heuristics for nonlinear singular Thomas-Fermi systems. Appl. Soft Comput. 65, 152–169 (2018)

Raja, M.A.Z.; Zameer, A.; Khan, A.U.; Wazwaz, A.M.: A new numerical approach to solve Thomas–Fermi model of an atom using bio-inspired heuristics integrated with sequential quadratic programming. Springerplus 5(1), 1400 (2016)

Sabir, Z., et al.: Novel design of Morlet wavelet neural network for solving second order Lane–Emden equation. Math. Comput. Simul. 172, 1–14 (2020)

Sabir, Z., et al.: Design of neuro-swarming-based heuristics to solve the third-order nonlinear multi-singular Emden–Fowler equation. Eur. Phys. J. Plus 135(6), 1–17 (2020)

Sabir, Z., et al.: Heuristic computing technique for numerical solutions of nonlinear fourth order Emden–Fowler equation. Math. Comput. Simul. 178, 534–548 (2020)

Raja, M.A.Z.; Mehmood, J.; Sabir, Z.; Nasab, A.K.; Manzar, M.A.: Numerical solution of doubly singular nonlinear systems using neural networks-based integrated intelligent computing. Neural Comput. Appl. 31(3), 793–812 (2019)

Raja, M.A.Z.; Umar, M.; Sabir, Z.; Khan, J.A.; Baleanu, D.: A new stochastic computing paradigm for the dynamics of nonlinear singular heat conduction model of the human head. Eur. Phys. J. Plus 133(9), 364 (2018)

Adel, W.; Sabir, Z.: Solving a new design of nonlinear second-order Lane–Emden pantograph delay differential model via Bernoulli collocation method. Eur. Phys. J. Plus 135(5), 427 (2020)

Lodhi, S., et al.: Fractional neural network models for nonlinear Riccati systems. Neural Comput. Appl. 31(1), 359–378 (2019)

Raja, M.A.Z.; Khan, J.A.; Chaudhary, N.I.; Shivanian, E.: Reliable numerical treatment of nonlinear singular Flierl–Petviashvili equations for unbounded domain using ANN, GAs, and SQP. Appl. Soft Comput. 38, 617–636 (2016)

Raja, M.A.Z.; Samar, R.; Manzar, M.A.; Shah, S.M.: Design of unsupervised fractional neural network model optimized with interior point algorithm for solving Bagley–Torvik equation. Math. Comput. Simul. 132, 139–158 (2017)

Raja, M.A.Z.; Khan, J.A.; Haroon, T.: Stochastic numerical treatment for thin film flow of third grade fluid using unsupervised neural networks. J. Taiwan Inst. Chem. Eng. 48, 26–39 (2015)

Shamshirband, S.; Fathi, M.; Dehzangi, A.; Chronopoulos, A.T.; Alinejad-Rokny, H.: A review on deep learning approaches in healthcare systems: taxonomies, challenges, and open issues. J. Biomed. Inf. 113, 103627 (2020)

Shamshirband, S.; Rabczuk, T.; Chau, K.W.: A survey of deep learning techniques: application in wind and solar energy resources. IEEE Access 7, 164650–164666 (2019)

Rajaei, P.; Jahanian, K.H.; Beheshti, A.; Band, S.S.; Dehzangi, A.; Alinejad-Rokny, H.: VIRMOTIF: a user-friendly tool for viral sequence analysis. Genes 12(2), 186 (2021)

Ilyas, H., et al.: A novel design of Gaussian WaveNets for rotational hybrid nanofluidic flow over a stretching sheet involving thermal radiation. Int. Commun. Heat Mass Transf. 123, 105196 (2021)

Shoaib, M., et al.: A stochastic numerical analysis based on hybrid NAR-RBFs networks nonlinear SITR model for novel COVID-19 dynamics. Comput. Methods Programs Biomed. 202, 105973 (2021)

Chen, Y.; Yu, H.; Meng, X.; Xie, X.; Hou, M.; Chevallier, J.: Numerical solving of the generalized Black-Scholes differential equation using Laguerre neural network. Digit. Signal Process. 112, 103003 (2021)


Ethics declarations

Conflict of Interest

The authors declare that there is no conflict of interest.


About this article

Cite this article

Khan, I., Raja, M.A.Z., Khan, M.A.R. et al. Design of Backpropagated Intelligent Networks for Nonlinear Second-Order Lane–Emden Pantograph Delay Differential Systems. Arab J Sci Eng 47, 1197–1210 (2022). https://doi.org/10.1007/s13369-021-05814-1


DOI: https://doi.org/10.1007/s13369-021-05814-1