Abstract

This paper introduces a novel hybrid evolutionary algorithm that combines particle swarm optimization (PSO) algorithm with sine–cosine algorithm (SCA) and Nelder–Mead simplex (NMS) optimization technique. However, the algorithm of PSO has some drawbacks like locating local minima rather than global minima, low converge rate and low balance between exploration and exploitation. In this paper, the combination of PSO algorithm with update positions mathematical equation in SCA and NMS technique is presented in order to solve these problems. So a new hybrid strategy called PSOSCANMS is introduced. The SCA algorithm is based on the behavior of sine and cosine functions in the mathematical formula used for solutions. However, the NMS mathematical formulations attempt to replace the worst vertex with a new point, which depends on the worst point and the center of the best vertices. The combined effect of both mathematical formulations of PSO ensures a consistency of exploitation and exploration that makes the search in the search space more effective. Further, it escapes into the local minimum issue and resolves the low converge rate problem. In order to test PSOSCANMS’s performance, a set of 23 well-known unimodal and multimodal functions have been benchmarked. Experimental results showed that PSOSCANMS is more successful than PSO and outperforms the other state-of-the-art compared algorithms over the tested optimization problems. Moreover, an engineering design problem such as spring compression, welded beam is also considered. The result of the problems in engineering design and application problems shows that the algorithm proposed is relevant in difficult cases involving unknown search areas.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In recent decades, several population-based random optimization techniques have been widely used for solving global optimization problems, for example evolutionary algorithms and swarm intelligence optimizations. The particle swarm optimization (PSO) meta-heuristic is part of a wider class of the swarm intelligence algorithms that aim to solve global optimization problems [1].

Meta-heuristic algorithms are needed for the optimization process in various fields of science including monitoring, classification, engineering, etc. In order to find optimum solutions, they try to satisfy the convergence needs. Fast and successful convergence is needed especially for online (real-time) applications [2]. Convergence time has been shortened by developing new or hybrid optimization algorithms over time. In order to do this, two ways of achieving better results were used, new methods or a hybridizing of some optimization methods. An alternative (hybridization) is being followed in this study, and an effective method of benchmarking optimization is being examined. PSO is being used in several disciplines to many newly developed optimization algorithms [3]. An algorithm or inspirational formula can, however, increase the efficiency of the PSO standard. The hybrid or improved PSO architectures can therefore be developed. These modifications are necessary to improve convergence. The hybrid algorithm, however, is usually assessed by a set of benchmarking functions which examine the efficiency of the developed hybrid optimizer [2].

Many hybrid PSO algorithms were developed. The algorithm for original particle swarm has been modified by Mendes et al. [4], and the fully informed particle swarm is designed to fully inform individuals. Different topologies for numerical function optimization of fully informed PSO were also examined [5]. The results were better based on their study, and fully informed PSO performed canonical PSO on the optimization of the benchmarking function [6, 7].

A hybrid PSO, based on the sine–cosine algorithm (SCOSCALF), has been developed to address optimization problems [8]. The exploration capability of the original PSO algorithm is increased by combining SCA and Levy flight in the PSOSCALF algorithm and is also avoided in the local minimum.

This article proposes a combination of the Nelder–Mead simplex (NMS) position update equations and the cosine optimization of the scene algorithm (SCA). The proposed hybridization algorithm significantly speeds up the convergence rate for a number of famous benchmark issues. It adds a user component to the algorithm for the optimization of particle swarming that maintains a suitable balance among exploration and exploitation. One of the population-based algorithms recently proposed for optimization solutions is the SCA optimization algorithm [9].

In the proposed PSOSCANMS algorithm, each new solution shall be calculated using sine and cosine function characteristics and then using Nelder–Mead simplex function characteristics. This algorithm enables exploration and exploitation capabilities to oscillate and change the value of these trigonometric functions. The Nelder–Mead simplex technique hybridization SCA thus allows for a further research into the design space to find an optimal solution.

The position of the particle is updated at the proposed PSOSCANMS with the best value between the three methods PSO, SCA and NMS for each iteration; PSO is the first to acquire new location of the particle, SCA is updated to the position of the particle when SCA’s best value is better than the value found for PSO, and the new position of the particle is found by SCA equations. Otherwise, NMS will update the particle’s position.

The main advantages of the hybridization of NMS and SCA with PSO are that they are easy to implement deterministic local optimization techniques which use only function evaluations to direct their search, and hence, they do not require the computation of derivative. Further they increased the search capability of PSO. Another main advantage is that the hybrid that is to overcome the problems that PSO has such as locating local minima rather than global minima, low convergence rate and low balance between exploration and exploitation. However, these problems are solved by changing the direction of particle movement at each iteration.

The novelty of this work is that the hybrid algorithm of NMS and SCA with PSO will offer five solutions at each step of the optimization process. The particle can choose the best solution between all these points rather than one point and one movement direction in PSO. This will extend the exploration feature of particles to cover most of the search space. Moreover, NMS will cover three points around each particle in the search space and SCA will cover two more points using sine and cosine functions. This expansion will make each particle to choose the best position between five points rather than heading in one direction using one global point. However, if one of the five points has better solution than the one that PSO has, then the particle direction will be toward this area which contains a better value and possibly has the global minimum solution and not local minimum.

The next sections of this article are as follows: Sect. 2 describes the basics of the PSO algorithm. The SCA and the Nelder–Mead simplex algorithms are presented in the third and fourth sections. The details of the PSOSCANMS algorithm proposed are described in Sect. 3. Section 4 compares the ability of the suggested algorithm to optimize benchmarking functions with family PSO and other algorithms. Section 5 has been assessed for the ability of the PSOSCANMS method to resolve engineering problems. In Sect. 6, the paper is finalized.

2 Optimization Algorithms

2.1 Particle Swarm Optimization (PSO) Algorithm

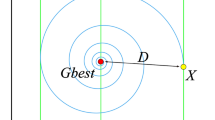

The overall PSO’s idea of how birds or fish schools move is based. The population is called a swarm in this algorithm and every spot is a particle. These swarms are solutions that are possible. The particles in the search area of the objective function are randomly initialized. Each particle makes a compromise between its own best position in history (i.e., pbest), its strongest position and its random look (i.e., gbest) in the course of consecutive iterations following initialization. In PSO, every particle has two characteristics (velocity vector V and vector X) and moves in the search space at a time of velocity adjustment based on the experience of the particle and the experience of the particle companion. The speed and position of the part according to Eqs. 1 and 2 shall be updated mathematically [10]:

where Vid(t + 1) and Vid are particle velocities at iterations t and t + 1. Pid is particle’s best position. Pgd is neighborhood’s best position at iteration t. c1 and c2 are acceleration coefficients that reflect the weight of stochastic acceleration terms pulling each particle to pbest and gbest positions, respectively. r1 and r2 denote two uniformly distributed random numbers (0, 1). ω is the weight of inertia used in global and local search balance. A large weight of inertia generally makes global exploration easier while a small weight of inertia facilitates local use. And xid is the position of the t-iterative particle.PSO starts by generating particles randomly in the search space [11].

The disadvantage of PSO is that the convergence of the swarm is slow. This problem is based on the fact that particles converge for the global best, because all particles move to a single point between the best global position and the best personal position. This is not ensured for to be the global optimum value [3]. The rapid flow of information between particles is another reason for this problem, resulting in the creation of similar particles with a loss of diversity which increases the risk of trapping them in local optima. However, PSO is likely to pre-converge over multimodal fitness areas and PSO is relatively weak in local search capacities. Moreover, numerous studies have demonstrated that PSO can easily be stored in an optimal solution region in the local market and that its complex multimodal functions are slowly converging. It is important to balance exploration and exploitation to overcome those disadvantages, and we thus use the PSO hybrid strategy with SCA and NMS to improve the performance of PSO [12].

2.2 Sine–Cosine Algorithm (SCA)

In 2016, Mirjalili proposed sine–cosine algorithm (SCA) to solve problems related to optimization. SCA produces a variety of random solutions and then approaches them with the optimum solution using equations such as sine and cosine functions [9]. Of course, apart from the characteristics of these functions, the distance between the best particle of the population and each of the alternatives affects their motion. The SCA algorithm offers fewer operators than other algorithms in terms of operation and exploration. This algorithm applies sine and cosine functions to update solutions for exploration and exploitation using equations (Eqs. 3 and 4) [9]:

where Xi(t) is the position of the current solution in ith dimension at tth iteration, r1, r2 and r3 are random numbers, Pi is position of the destination point in ith dimension and | | symbol indicates the absolute value.

Equations (3) and (4) indicate the space defined by the proposed equations by the two search solution solutions. The cyclical synthetics and cosine make it possible to rearrange a solution around another one. There can be guaranteed space use between two solutions. In order to explore the search area, solutions must also be able to look outside the space between their destinations. Yet changes in the range of sine and cosine functions require a solution to change the range of sine and cosine functions outside or inside the space between oneself and another alternative. If the random number is defined for r2 in [0, 2β] in Eq. 3, the changed position inside and outside can be achieved [13].

2.3 Nelder–Mead Simplex

A very popular multi-dimensional optimization search method, the Nelder–Mead simplex algorithm (31), was released in 1965. However, Spendley et al. [14] and Nelder and Mead [15] subsequently developed the simplex search method [15, 16]. This algorithm, which only uses the basic operations of reflection, expansion, contract and shrink to find the solution directly, does not need to obtain the first or second derivative for the property of derivative-free. The Nelder–Mead algorithm keeps a simple, optimum point approximation. The vertices are sorted by the value of the objective function. The algorithm tries to replace the worst corner with a new point, depending on the worst and middle of the best corners [17].

A construction called a simplex is used in the Nelder–Mead approach. N + 1 solutions, X (k), k = {1, 2, n + 1} [6], are a simplex in n dimensions. This is like a two-dimensional plane triangle. Each step assesses the solutions and identifies the worst solution. Simplex center is calculated as described in Eq. 5 [17].

A simplex S in Rn is defined as the convex hull of n + 1 vertices x0,…,xn ∈ Rn. For example, a simplex in R2 is a triangle as shown in Fig. 1. However, a simplex-based direct search method begins with a set of n + 1 points x0,…,xn ∈ Rn that are considered as the vertices of a working simplex S, and the corresponding set of function values at the vertices fj: = f(xj), for j = 0,…,n. The initial working simplex S has to be nondegenerate, i.e., the points x0,…,xn must not lie in the same hyperplane. The method then performs a sequence of transformations of the working simplex S, aimed at decreasing the function values at its vertices. At each step, the transformation is determined by computing one or more test points, together with their function values, and by comparison of these function values with those at the vertices. This process is terminated when the working simplex S becomes sufficiently small in some sense, or when the function values fj is close enough in some sense (provided f is continuous) [15, 17].

2.4 Swarm Intelligence (SI) and Meta-heuristics

Swarm intelligence (SI) inspired algorithms. SI describes the collective behavior of a decentralized self-organizing system. It is considered in the theory of artificial intelligence as an optimization method [18]. The term was introduced by Beni and Jin [18], in the context of a system of cellular robots. SI has very wide inspired meta-heuristics that increase every day taking into consideration the hybrid algorithms that result from combining any two meta-heuristics. The first SI meta-heuristic was particle swarm optimization (PSO), a meta-heuristic proposed by Eberhart and Kennedy [10], and they took inspiration from flying birds in nature to propose the PSO meta-heuristic. PSO is based on the collaboration of individuals with each other; each particle moves and at each iteration the closest to the optimum and then communicates with other particles to send its position to change their position. After PSO, many SI meta-heuristics have been proposed and recent ones are: ant colony optimizer (ACO) [19]; fast evolutionary programming (FEP) [20]; cuckoo search (CS) algorithm [21]; bees algorithm (BA) [13]; artificial bee colony (ABC) algorithm [22]; gravitational search algorithm [23]; firefly algorithm (FA) [24]; grey wolf optimizer (GWO) [25]; dolphin echolocation (DE) [26]; fruit fly optimization algorithm (FFOA) [27]; glowworm swarm optimization (GSO) [28]; tree-seed algorithm (TSA) [29]; cuckoo optimization algorithm (COA) [30]; hunting search (HS) [31]; atom search optimization (ASO) [32]; butterfly optimization algorithm: a novel approach for global optimization (BOA) [33]; augmented grey wolf optimizer (AGWO) [34]; enhanced grey wolf optimizer (EGWO) [33]; bat algorithm (BA) [35]; moth swarm algorithm (MSA) [36]; hybrid PSO–GWO [36]; and supernova optimizer [3].

3 The Proposed Hybrid Particle Swarm Optimization with Sine–Cosine Algorithm and Nelder–Mead Simple (PSOSCANMS) Algorithm

Although PSO algorithm has the excellence of high-speed computation with a small number of parameters and simplicity in implementation; it has two major deficiencies including slow convergence and falling into the local minima. However, in recent studies such as Singh et al. [37], the PSO velocity and position updating equations have been enhanced by the behavior of sine and cosine [37]. Further, Chin et al. (2018) used hybridization with SCA, where new solutions are generated and the ability to examine the search space is increased [2]. In addition, the Nelder–Mead simplex (NMS) technique was used to speed up the convergence rate [1]; the hybridization with Nelder–Mead simplex, as discussed in Sect. 3, has been a popular means of accelerating convergence, for example hybridization of NMS with evolutionary algorithms. This technique has been reported by several papers [2].

For hybridization of PSO and other optimization algorithms, one of the two (I) tandem and (II) cascading methods has been widely used. If the approach is tandem, the entire population is divided into two subsets in tandem hybridization strategy. One or more of the algorithms are used for a set of individuals. At the end, they combine the two subsets. However, when the approach is cascaded, the stochastic optimization process is used for everyone in the population and the solutions obtained are further improved by the combined algorithm. We used cascade hybridization in this paper.

In this paper, the equations of position updating on SCA (Eqs. 3 and 4) are combined in Eq. 6 [9] and added to PSO. After that, the update position of Nelder–Mead simplex (Eqs. 7–9) [17] is secondly cascaded after SCA (Eq. 6). These two enhancements will modify the PSO particles movement strategy to be able for better search in the search space for better detecting the global optima and to speed up the converge rate.

where parameter r1 determines the region (or direction of motion) in the next position that might be either in or outside the space between the solution and the destination. The r2 parameter defines the distance to and from the destination of the movement. Parameter r3 gives random weight to the destination, so that the effect of the destination in defining the distance is stochastically emphasized (r3 > 1) or decanted (r3 < 1). In conclusion, the r4 parameter switches equally between the sine and cosine components of Eq. 6 [9].

In Eq. 7, the summation is excluded and the worst point is reflected in the center; however, the worst point W shall be replaced with point r reflected in the simplexes, but the simplex will be extended further if the point reflected is better than any simplex solution, as described in Eq. 8.

where W is the worst solution. C is the simplex center calculated in Eq. 5, and the expansion coefficient is called η. R is the reflected solution.

If the reflected R solution was worse than W, however, the simplexes would be contracted and the reflected solution would be positioned on the same center. When solution R is not worse than solution W, but worse than any other solution, simplex still contracts but reflection is permitted to remain on the other side of the centroid. Reflection is done accordingly as described in Eq. 9 [10].

where κ is the contraction coefficient in the above equation. In the next step, the new [17] R, Re or Rc will replace solution W. It is possible to run the simplex algorithm several steps prior to completion. Every step of the simplex shall be referred to as a reverse.

In the new proposed hybrid algorithm, at each iteration the particles position is first updated according to PSO equations. The particles at this point will move toward the global optimum point (gbest). Then its motion will be affected by SCA and NMS equations, respectively. However, if the solution found by any of the two algorithms gives better optimum solution than PSO then the particles will move toward the better solution. However, if the particle position does not improve at the end of every iteration of the optimization process, then the combination of SCA and Nelder–Mead simplex will generate a new position for every particle in the search space.

3.1 PSOSCANMS Algorithm

The PSOSCNSM steps are described in Algorithm 1. The populations are represented in the same set as the actual parameter vectors xi = (x1,…, xD), I = 1,…, N, in which D is a problem dimension and NOP the population size. At the beginning of the search, the single vectors xi are randomly initialized. MaxIt is the maximum iteration, the lower and upper bounds in search space are Xmin and Xmax. At the start of the proposed algorithm are those parameters set. The position and velocity of particles are initially generated randomly.

However, by calculating the objective function value, the best local (XpBesti) for each particular and the global (Xgbest) of the whole population is determined. During each iteration, the value of the constants c1, c2 and w is determined. Before updating the vector for the velocity of each particle, the limit value of the particle is checked. Firstly, particle velocity and position are updated by the simple PSO Eqs. 1 and 2. Secondly, Eq. 6 which is the SCA algorithm shall determine the next position of the particle. The fitness value of applying the equations is better than the value obtained from Eqs. 1 and 2. However, in this case, this particle has a random value. When rand (0.5), in Eq. 6 the particle position is calculated.

Thirdly, the algorithm then applies to Eqs. 7–9, respectively, which constitutes to Nelder–Mead simplex. Then, the global value is chosen among the three equations if the founded solution is better than the previous founded solution. The algorithm then saves the best solutions, assigns them to the destination and updates further solutions. In the meantime, the upper and lower search space range is updated so that search space is used to achieve the global optimal point at each iteration.

New particles are produced in the design space by upgrading the speed and position of all particles. After that, all new particles will have an objective function determined. Then, each new particle’s objective value is compared with its best personal experience (Xpbesti). If the location is improved, Xpbesti will be updated and its index resets to zero and the index will increase by one piece, otherwise. Finally, for all particles Xgbest is determined. The process above is repeated up to the MaxIt iteration number.

PSOSCANMS has increased the search capacity to include more particle positions during the motion of particles, which increased the possibility of finding the global minimal and avoiding local minimal problems rather than stuck in a local lowest point.

When the termination condition is archived, the PSOSCANMS algorithm terminates the optimization process and the iteration number reaches its maximum limit by default. However, every other termination condition, such as the maximum number of function assessments or the accuracy of the global optimal achieved, can be considered. In order to avoid local minimum standards, and for the following reasons, the proposed algorithm can theoretically identify the global optimum for optimization problems first and then improves the PSO algorithm.

The following section examines the efficacy of PSOSCANMS with a variety of standard functions representing problems known for testing optimization algorithms. The efficiency of PSOSCANMS algorithm is investigated, analyzed and confirmed.

4 Results and Discussion

In order to evaluate the performance of the proposed PSOSCANMS algorithm, extensive simulations have been carried out. For comparison, several state-of-the-art optimization algorithms (PSO, BOA, GSA, FEP, GWO, ASO, CHPSO) have been applied.

4.1 Benchmark Functions and Parameter Setting

PSOSCANMS has been experimented over 23 benchmark functions utilized by many researchers to test performance for global optimization algorithms [3, 6, 9, 30, 33, 34, 36, 37].

These benchmark functions are listed in Tables 1 [5]. Further function descriptions are available in Arora and Singh [37]. However, the unimodal functions are functions {F1–F7}. Functions {F8–F13} represent multimodal basic functions, and fixed-dimension multimodal functions are from function {F14–F23}.

The unimodal {F1—F7} functions have only one optimum global point, no local optimum points, and they are therefore suited for benchmarking algorithms exploitation feature. However, the functions {F8–F13} are classified as multimodal to test the exploration feature and to avoid local optima optimization algorithms because they have many local optima points. The algorithms tested should avoid falling at those points to reach the global optimum point. The functions {F14–F23} are functions of a fixed multimodal dimension, where the fixed-dimension functions mathematical formulations do not allow us to adjust the number of design variables, but offer different search spaces in contrast to multimodal test functions. Graphical representation for certain benchmark functions that are considered in this research is shown in Fig. 2.

4.2 Experiment and Comparison

The experiment must be performed up to n number of times in order to produce stable statistic results for the performance of meta-heuristic algorithms. And for testing the stability of the algorithm every run must be carried out to m number of iterations [37]. The same experimental procedure was followed to produce and report and then verify the performance of PSOSCANMS algorithm, including statistical measurement (mean, standard deviation, Min and Max). A population size and a maximum iteration of 51 and 10,000, respectively, were used for all algorithms.

Results of the comparing PSOSCANMS with state-of-the-art meta-heuristic algorithms (BOA, ASO, GWO, CHPSO, SCA, PSOGWO and MSA) as well as PSO over tested benchmark are described in Table 2. The grouped statistical test results for the 23 functions are also demonstrated. As seen in the table, the results show that the hybrid strategy in PSOSCANMS has clearly enhanced PSO. The results over six of the tested unimodal test functions (F1–F7) show that the improved method has successfully contributed to PSOSCANMS performance over PSO. However, in traditional PSO all of the particles update their position to move toward one point that may be a local minima and not a global minimum, PSOSCANMS update the particle positions simultaneously at each iteration to new position according to the mathematical equations in SCA and NMS which overcome the problem of local minima.

Table 5 presents a summary of the results. The aggregated results of statistical testing over the functions are shown in Table 5. The symbols (B) and (W) indicate that a given algorithm performed better (B), worse (W), in comparison with PSOSCANMS after the compared algorithm had reached the termination criteria. The maximum number of objective function evaluations is 10,000. All results are based on 51 runs.

4.3 Discussion of the Results Presented in Tables 2, 3 and 4 and Summary Table 5

This section is divided into two parts, and the first part discusses the evaluation of exploitation capability, which is presented in Tables 2, 3 and 4 for the functions (F1–F7). However, the second part presents evaluation of exploration capability and the balance between exploration and exploitation for functions (F8–F23). In addition, it explains the summary of mean results presented in Table 5.

4.3.1 Evaluation of Exploitation Capability (Functions F1–F7)

The results of Tables 2, 3 and 4 and the summary presented in Table 5 show that the proposed algorithm is able to provide very competitive results on the unimodal test functions. These functions do not have a local solution but only one global optimum. However, PSOSCANMS outperformed previous state-of-the-art optimization algorithms (PSO, BOA, ASO and GWO) on all of the seven functions {F1–F7}. Moreover, the compared algorithms have shown the superior results of PSOSCANMS over CHPSO and PSOGWO algorithm, with six functions. The reason for these results is that hybridization between the three algorithms allowed the function to reach the optimum global point. Since all of the seven unimodal testing functions have the best results with the PSOSCANMS algorithm, it proofs that it has strong exploitation behavior which is important for numerous issues of optimization that need to be solved. As already mentioned, unimodal functions are appropriate for benchmarking algorithms. The results therefore show that the PSOSCANMS algorithm is highly operational. Further, the superior of the results over both original PSO algorithm and SCA algorithm proves that the hybridization is very successful and very beneficial for solving the optimization problems with single objective point and in reaching the global optimum.

4.3.2 Evaluation of Exploration Capability and the Balance Between Exploration and Exploitation (Functions F8–F23)

Tested benchmark functions {F8–F13} represent multimodal functions that include many local optima points whose number increases exponentially with the problem size. To evaluate the exploration capacity of optimization algorithms, multimodal functions are used. As shown in Tables 2, 3 and 4 and the summary in Table 5, the PSOSCANMS algorithm highly outperforms (PSO, BOA, ASO, GWO, CHPSO, SCA, PSOGWO and MSA) over at least eleven functions over the tested multimodal functions {F8–F13}. This proves that PSOSCANMS can well balance exploration and exploitation phases. This may be due to the fact that PSOSCANMS provides more exploring points in the search space for the reason of using the two equations of SCA algorithm and the three equations of NMS algorithm. It generates new positions for each particle at each iteration and updates the current particle position to the best position in the different dimensions that guide PSOSCANMS algorithm to reach the global optimum point in the search space. However, PSOSCANMS outperforms PSOGWO over fifteen functions and ASO over fourteen tested functions indicating that PSOSCANMS has also a very good exploration capability. In fact, the present algorithm always appeared the most efficient or the second best algorithm in the majority of test problems. Functions F8 to F23 are divided into two sections, the first is unimodal functions and the second is fixed unimodal functions. However, Tables 2, 3 and 4 show that PSOSCANMS performance over the tested fixed-dimension functions {F14–F23} is better than the compared algorithms. PSOSCANMS outperforms (ASO, GWO and PSOGWO) over nine functions out of ten and outperforms (PSO, BOA, SCA and MSA) in six functions.

These results show that PSOSCANMS can perform good at real-world problems which may not have uniform functions. Further it can be seen that in the fixed-dimension functions {F14-F23}, PSOSCANMS performs better than PSO. The multimodal fixed-dimensional functions have very difficult search spaces and high exploration requirements for the accurate approximation of their global optimal. The results show that the PSOSCANMS algorithm has an excellent scan capability which leads to global optimization. Moreover, high local optimum avoidance of this algorithm is from which these results can be deduced. In addition to multimodal functionality, the algorithm can further explore the search space and find promising search space areas, improving the number of local solutions.

4.4 Stability of PSOSCANMS Algorithm

The plot of the standard deviation values for all functions from F1 to F23 is shown in Fig. 3. Except for F8, all other functions are near zero and this means that POLARPSO is stable over the 51 experiments that were performed, the reason for the high standard deviation value of F8 is due to the very large values in the search space.

4.5 Convergence Curves Analysis and Discussion

The convergence curves of the tested benchmark functions of PSOSCANMS in comparison with PSO are shown in Fig. 4. Please note that the average best solution for every iteration indicates the average best solution obtained up to now in every iteration.

It can be seen in Fig. 4 that the convergence behaviors of PSOSCANMS fall into different categories: First, it tends to be accelerated as iteration increases; second it finds the optimum value at the later stages of the iterations. However, according to Van Den Berg et al. [38], this behavior can guarantee that a population-based algorithm eventually converges to a point and searches locally in a search space. This is an evidence that the proposed hybrid algorithm can successfully improve the fitness of all particles and guarantee finding better solutions as iteration increases. This can be discussed and reasoned according to the hybridization of the proposed algorithm. Since the particles of the swarm tend to move from a high value to global minimum value, similarly to the evolution concepts in PSO, the whole particles and their fitness average tend to be improved over the course of iterations. In addition, we save the best value and move it to the next iteration, so the best value formed so far is always available to improve the fitness of other particles. It can be also observed that when comparing the behavior of PSOSCANMS with PSO, in most of the functions, PSOSCANMS has a higher success rate in finding the optimum global value than PSO and it is faster to reach the global value as it can be seen in {F1, F2, F3, F4 F5, F7, F9, F10, F11, F14, F15, F17, F18, F19, F21}.

The first behavior of the acceleration as iteration increases is due to the increasing number of search points resulted from the hybridization between the three algorithms, which help the particles in the initial stages of the iteration to search for new regions in the search space that speed up finding the optimal values with a minimal number of iterations. This performance of the algorithm can be seen in all of the unimodal functions as well as in most of the multimodal functions {F1, F2, F3, F4 F5, F7, F9, F10, F11, F14, F15, F17, F18, F19, F21}. The second behavior of PSOSCANMS convergence curve is to find the optimum value at the later stages of the iterations. This behavior can be seen in functions {F6, F8, F12, F15, F20, F21, F22}. The explanation of this is probably because PSOSCANMS exploits the solutions, and it has been found so far before exploring new search regions; it benefits from both exploration and exploitation features that are improved in PSOSCANMS, which helps this algorithm find the global optimum. It shows that PSOSCANMS is able to escape local minimum and find the global optimum in the search space.

4.6 Confusion Matrix

The overall confusion matrix of PSOSCANMS using different dimensions over four selected functions (F1, F3, F6, F9) has been calculated since these functions dimension is flexible and not fixed. The results of applying the algorithm over (10, 30, 50) dimension are provided in Table 5. It is known in the optimization field that as the dimension increases the problem will be harder and the accuracy of reaching the optimal point will be lower; however, performance was good at high dimension as illustrated in the confusion matrix (Tables 6, 7, 8 and 9). The confusion matrix has been guided by the method used by [39, 40].

5 PSOSCANMS for Solving Problems in Engineering Design

In this section, a traditional engineering design problem such as spring compression, welded beam is also considered. The PSOSCANMS should be fitted with a limiting technique to optimize restricted issues. These issues are restricted in equal and in inequality measure. In general, limit handling is very difficult when the fitness function directly impacts the search agent’s position update. However, any kind of restrictive constraint can be used for fitness algorithms without changing the algorithm system. While the PSOSCANMS algorithm searches, agents update their positions regarding alpha, beta and delta places. Between the search agents and fitness function, there is no immediate relationship. For example, the simplest limit handling method may be used to effectively handle restrictions of PSOSCANMS when search agents are given high objective function values if they break one of the restrictions. If the alpha, beta or delta are restricted, then the next iteration will automatically replace them with a new search agent. Any kind of feature can be used to penalize search agents on the basis of the rate of infringement. If the penalty is lower than any other, the alpha, beta or delta are substituted automatically in the next iteration by a fresh search agent [41].

5.1 Tension/Compression Spring Design

This problem is aimed at reducing the voltage/pressure source weight as shown in Fig. 4. The minimization method is subject to certain restrictions, including shear stress, floating frequency and minimum floating deflections. Both mathematical and heuristic methods have addressed this problem. A constantly limited issue lays in the tension/compression spring design (TCSD) issue as shown in Fig. 4. The issue is that a coil spring volume V is kept below a steady voltage compression load. Three design factors are as follows: number of spring coils that is active as shown in mathematical Eq. 10, winding diameter as shown in Eq. 11, wire diameter as shown in Eq. 12 [42].

The tension/compression spring design problem mathematically describes as the following (Fig. 5).

minimize subject to:

The upper and lower limit variables are design problems: 2 ≤ X1 ≤ 15, 0.25 ≤ X2 ≤ 1.3, 0.05 ≤ X3 ≤ 2.

This is a convex optimization problem, and the closed-form optimum solution of the problem is f(X) = 0.0126652327883 for X = [x1, x2, x3] = [0.051689156131, 0.356720026419, 11.288831695483] [6].

The numerical optimization (continuous constraints) and mathematical optimization technique are the mathematical methods used to fix tension/compression spring design problem. The results are compared to PSOSCANMS in Table 10. It should be noted that PSOSCANMS uses the same penalty feature to make a fair comparison. It indicates that PSOSCANMS finds a design for this issue with the minimum weight.

Experimental results showed that PSOSCANMS is more successful than PSO and outperforms the other state-of-the-art compared algorithms over the tested optimization problems [6].

5.2 Welded Beam Design

This issue is aimed at reducing the price of manufacturing a welded beam, as shown in Fig. 6 . The figure shows a rigid component which is welded on a beam. At the end of the member, a load is applied. The complete manufacturing price corresponds to the labor costs (depending on the size of the sod) plus the price for the soldering and beams. By changing weld and member dimensions x1, x2, x3 and x4, the beam is to be optimized for minimal costs. Limits to shear stress, bending stress, hinge load and end deflection include restrictions. The x1 and x2 variables are generally 0.0625-inch integer, but are presumed to be constant for this implementation [43].

The following restrictions apply: shear stress (s), beam bending stress (h), bar bending load (Pc), beam deflection end (d) and side constraint. This problem has four variables such as thickness of weld (h), length of attached part of bar (l), the height of the bar (t) and thickness of the bar (b). The mathematical formulation is as follows [44].

Consider minimize subject to [6]:

variable ranges: 0.1 ≤ X1 ≤ 2, 0.1 ≤ X2 ≤ 2, 0.1 ≤ X1 ≤ 10, 0.1 ≤ X3 ≤ 10, 0.1 ≤ X4 ≤ 2.

where

The results of the comparison are shown in Table 11. It indicates that PSOSCANMS over the welded beam design problem finds a design whose costs are minima when comparing it with other designs algorithms.

6 Conclusion

In this paper, a novel hybrid evolutionary algorithm that combines particle swarm optimization (PSO) with sine–cosine optimizer and Nelder–Mead simplex optimization technique has been proposed in order to solve the drawbacks in PSO algorithm in locating local minima rather than global minima, low converge rate and low balance between exploration and exploitation. The proposed algorithm is called PSOSCANMS. The solutions are updated by the proposed algorithm with the best position found by the three cascaded hybrid algorithms. The range of the search points has increased due to using the mathematical equations of SCA and NMS. These equations allow particles to continuously update their position and reassign the solutions that they already have found. Further, they enhance PSO to explore new areas and dimensions in the search space. The proposed algorithm is tested on 23 benchmark functions in order to investigate its performance on the different scale functions. The results showed that PSOSCANMS has high rate in finding the optimum global value and high exploration feature over the original PSO and the other compared algorithms. However, this is due to the fact that cascading the three hybrid algorithms allows particles to examine every possible solution from the initial iteration to the end of the optimization procedure in the different dimensions. PSOSCANMS has demonstrated fast behavior, which simultaneously reaches the optimal global point and a high local optimum avoidance speed. Experimental results of comparison PSOSCANMS showed that PSOSCANMS has outperformed the other state-of-the-art compared algorithms over the compared algorithms and when applying to solve engineering design problems such as tension/compression spring design and welded beam design problems.

References

Krawiec, K., Simons, C., Swan, J., Woodward, J.: Metaheuristic design patterns: new perspectives for larger-scale search architectures. In: Vasant, P., Alparslan-Gok, S.Z., Weber, G. (eds.) Handbook of Research on Emergent Applications of Optimization Algorithms, pp. 1–36. IGI Global, Pennsylvania (2018)

Ong, P.; Chin, D.D.V.S.; Ho, C.S.; Ng, C.H.: Metaheuristic approaches for extrusion manufacturing process: utilization of flower pollination algorithm and particle swarm optimization. In: Handbook of Research on Applied Optimization Methodologies in Manufacturing Systems, pp. 43–56. IGI Global, Pennsylvania (2018)

Hudaib, A.A.; Fakhouri, H.N.: Supernova optimizer: a novel natural inspired meta-heuristic. Mod. Appl. Sci. 12(1), 32 (2017)

Mendes, R.; Kennedy, J.; Neves, J.: The fully imformed particle swarm: Simpler, mabe better. IEEE Trans. Evol. Comput. 8, 204–210. (2004). https://doi.org/10.1109/TEVC.2004.826074

Kennedy, J.: Particle swarm optimization. Encyclopedia of machine learning, pp. 760–766. Springer, US (2011)

Al-Sayyed, R.M.; Fakhouri, H.N.; Rodan, A.; Pattinson, C.: Polar particle swarm algorithm for solving cloud data migration optimization problem. Mod. Appl. Sci. 11(8), 98 (2017)

Altay, E.V.; Alatas, B.: Performance comparisons of socially inspired metaheuristic algorithms on unconstrained global optimization. In Advances in Computer Communication and Computational Sciences, pp. 163–175. Springer, Singapore (2019)

Chegini, S.N.; Bagheri, A.; Najafi, F.: PSOSCALF: a new hybrid PSO based on Sine Cosine Algorithm and Levy flight for solving optimization problems. Appl. Soft Comput. 73, 697–726 (2018)

Mirjalili, S.: SCA: a sine cosine algorithm for solving optimization problems. Knowl. Based Syst. 96, 120–133 (2016)

Eberhart, R.; Kennedy, J.: Particle swarm optimization. In: Proceedings of the IEEE International Conference on Neural Networks, vol. 4, pp. 1942–1948 (1995)

Benítez-Hidalgo, A.; Nebro, A.J.; Durillo, J.J.; García-Nieto, J.; López-Camacho, E.; Barba-González, C.; Aldana-Montes, J.F.: About designing an observer pattern-based architecture for a multi-objective metaheuristic optimization framework. In: International Symposium on Intelligent and Distributed Computing, pp. 50–60. Springer, Cham (2018)

Li, Y.G.; Gui, W.H.; Yang, C.H.; Li, J.: Improved PSO algorithm and its application. J. Central South Univ. Technol. 12(1), 222–226 (2005)

Pham, D.T.; Ghanbarzadeh, A.; Koç, E.; Otri, S.; Rahim, S.; Zaidi, M.: The bee’s algorithm—a novel tool for complex optimization problems. In: Intelligent Production Machines and Systems, pp. 454–459. Elsevier Science Ltd., Amsterdam (2006)

Spendley, W. G. R. F. R.; Hext, G. R.; Himsworth, F. R.: Sequential application of simplex designs in optimisation and evolutionary operation. Technometrics, 4(4), 441–461 (1962)

Nelder, J.A.; Mead, R.: A simplex method for function minimization. Comput. J. 7(4), 308–313 (1965)

Wright, M.H.: Nelder, Mead, and the other simplex method. Doc. Math. 7, 271–276 (2010)

Sörensen, K.; Sevaux, M.; Glover, F.: A history of metaheuristics. In: Handbook of Heuristics, pp. 1–18 (2018)

Beni, G.; Wang, J.: Swarm intelligence in cellular robotic systems. In: Proceedings of NATO Advanced Workshop on Robots and Biological Systems, Tuscany, Italy, June 26–30 (1989). https://doi.org/10.1007/978-3-642-58069-7_38

Dorigo, M.; Di Caro, G.: Ant colony optimization: a new meta-heuristic. In: Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), Vol. 2, pp. 1470–1477. IEEE, Washington (1999)

Yao, X.; Liu, Y.; Lin, G.: Evolutionary programming made faster. IEEE Trans. Evol. Comput. 3(2), 82–102 (1999)

Yang, X.S.; Deb, S.: Cuckoo search via Lévy flights. In: 2009 World Congress on Nature and Biologically Inspired Computing (NaBIC), pp. 210–214. IEEE, Washington (2009)

Karaboga, D.; Basturk, B.: A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J. Global Optim. 39(3), 459–471 (2007)

Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S.: GSA: a gravitational search algorithm. Inf. Sci. 179(13), 2232–2248 (2009)

Yang, X.S.: Firefly algorithm. In: Engineering Optimization, pp. 221–223 (2010)

Mirjalili, S.; Mirjalili, S.M.; Lewis, A.: Grey wolf optimizer. Adv. Eng. Softw. 69, 46–61 (2014)

Kaveh, A.; Farhoudi, N.: A new optimization method: Dolphin echolocation. Adv. Eng. Softw. 59, 53–70 (2013)

Pan, W.T.: A new fruit fly optimization algorithm: taking the financial distress model as an example. Knowl. Based Syst. 26, 69–74 (2012)

Krishnanand, K.N.; Ghose, D.: Glowworm swarm optimization: a new method for optimising multi-modal functions. Int. J. Comput. Intell. Stud. 1(1), 93–119 (2009)

Kiran, M.S.: TSA: tree-seed algorithm for continuous optimization. Expert Syst. Appl. 42(19), 6686–6698 (2015)

Rajabioun, R.: Cuckoo optimization algorithm. Appl. Soft Comput. 11(8), 5508–5518 (2011)

Oftadeh, R.; Mahjoob, M.J.; Shariatpanahi, M.: A novel meta-heuristic optimization algorithm inspired by group hunting of animals: hunting search. Comput. Math Appl. 60(7), 2087–2098 (2010)

Zhao, W.; Wang, L.; Zhang, Z.: Atom search optimization and its application to solve a hydrogeologic parameter estimation problem. Knowl. Based Syst. 163, 283–304 (2019)

Joshi, H.; Arora, S.: Enhanced grey wolf optimization algorithm for global optimization. Fundam. Inf. 153(3), 235–264 (2017)

Qais, M.H.; Hasanien, H.M.; Alghuwainem, S.: Augmented grey wolf optimizer for grid-connected PMSG-based wind energy conversion systems. Appl. Soft Comput. 69, 504–515 (2018)

Fakhouri, S.N., Hudaib, A., Fakhouri, H.N.: Enhanced optimizer algorithm and its application to software testing. J. Exp. Theor. Artif. Intell. (2019). https://doi.org/10.1080/0952813X.2019.1694591

Mohamed, A.A.A.; Mohamed, Y.S.; El-Gaafary, A.A.; Hemeida, A.M.: Optimal power flow using moth swarm algorithm. Electr. Power Syst. Res. 142, 190–206 (2017)

Arora, S.; Singh, S.: Butterfly optimization algorithm: a novel approach for global optimization. Soft. Comput. 23, 715–734 (2018)

Van Den Berg, R. A.; Pogromsky, A. Y.; Leonov, G. A.; Rooda, J. E.: Design of convergent switched systems. In Pettersen K.Y., Gravdahl J.T., Nijmeijer H. (eds.) Group coordination and cooperative control (pp. 291–311). Springer, Berlin, Heidelberg (2006)

Semwal, V.B.; Singha, J.; Sharma, P.K.; Chauhan, A.; Behera, B.: An optimized feature selection technique based on incremental feature analysis for bio-metric gait data classification. Multimed. Tools Appl. 76(22), 24457–24475 (2017)

Semwal, V.B., Gaud, N., Nandi, G.C.: Human gait state prediction using cellular automata and classification using ELM. In: Tanveer, M., Pachori, R. (eds.) Machine Intelligence and Signal Analysis, pp. 135–145. Springer, Singapore (2019)

Kumar, S.; Aaron, J.; Sokolov, K.: Directional conjugation of antibodies to nanoparticles for synthesis of multiplexed optical contrast agents with both delivery and targeting moieties. Nat. Protoc. 3(2), 314 (2008)

Valsange, P.S.: Design of helical coil compression spring: a review. Int. J. Eng. Res. Appl. 2(6), 513–522 (2012)

Deb, K.: Optimal design of a welded beam via genetic algorithms. AIAA J. 29(11), 2013–2015 (1991)

Azqandi, M.S., Delavar, M., Arjmand, M.: An enhanced time evolutionary optimization for solving engineering design problems. Eng. Comput. (2019). https://doi.org/10.1007/s00366-019-00729-w

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Fakhouri, H.N., Hudaib, A. & Sleit, A. Hybrid Particle Swarm Optimization with Sine Cosine Algorithm and Nelder–Mead Simplex for Solving Engineering Design Problems. Arab J Sci Eng 45, 3091–3109 (2020). https://doi.org/10.1007/s13369-019-04285-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13369-019-04285-9