Abstract

This paper develops a coevolutionary model of social coordination and matching in which agents are embedded in an arbitrary fixed network and are matched in pairs to play a coordination game. In each period, based on payoff comparison with their neighbors, agents decide whether to imitate their neighbors’ actions and whether to end their present partnerships. Inertia exists in action revision and partnership updating. Each agent can exit a partnership unilaterally. All separated agents are randomly matched in pairs at the beginning of the next period. Occasionally, agents make mistakes in action revision and partnership updating. When the size of the society is large, in the long run, all agents will play the Pareto-efficient action for a particular subset of networks.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Many day-to-day economic interactions occur between total strangers. These types of interactions include consumer-to-consumer electronic commerce, peer-to-peer lending and loans, trading goods in online communities, peer-to-peer file-sharing and online real-time multi-player games. However, individuals can learn from their friends’ experiences to help them make “correct” or “informed” decisions.

For example, individuals typically have family members, friends, colleagues, business partners and neighbors (collectively referred to as friends) in the real world. Individuals may participate in online real-time multi-player games against anonymous players and face decisions concerning the amount of effort to put in and whether to continue playing, especially if they are playing in a team role. By communicating with friends, an individual can find out how much effort to devote and determine whether it is worthwhile to continue playing.

The present paper aims to capture these particular phenomena and develops a coevolutionary model of social coordination and matching in networks in which agents interact with strangers and learn from neighbors.

Formally, we consider an even number of agents embedded in an arbitrary, fixed network. Agents are matched in pairs to play a \(2\times 2\) coordination game where there is a conflict between Pareto-efficiency and risk dominance. In each period, three things, which are assumed to be independent, occur. First, all separated agents are randomly matched in pairs. Any two separated agents are matched to each other with strictly positive probability. Second, with a strictly positive probability, if each agent’s payoff is strictly less than the maximum of all neighbors’ payoffs, the agent will imitate the action choice of the most successful neighbor. Third, with a strictly positive probability, when each agent performs strictly worse than the most successful neighbor, the agent will exit the present partnership. A single member can end any partnership unilaterally. Occasionally, agents make mistakes in action revision and partnership updating, and the noise rates in these two processes may be different.

First, we explore the convergence of unperturbed dynamics. With probability one, the unperturbed dynamics will converge to one of the limit states. The underlying intuition is as follows. For simplicity, consider a connected network. Assume that there are only two agents in a partnership choosing the Pareto-efficient action; these two agents receive the highest possible payoff. If there is a unique neighbor of the initial two players of the Pareto-efficient action, then with strictly positive probability, the unique neighbor switches to the Pareto-efficient action and is matched to a player of the risk-dominant action, regardless of whether she has an opportunity for partnership updating. Note that the conflict between Pareto-efficiency and risk dominance implies that the payoff of an agent who chooses the Pareto-efficient action and plays against an agent choosing the risk-dominant action is lowest. The unique neighbor of the initial two players of the Pareto-efficient action receives the lowest possible payoff. As a result, the Pareto-efficient action fails to spread to any other agent. Now, we consider the case in which the initial two players of the Pareto-efficient action have two or more neighbors. With strictly positive probability, two neighbors of the initial two players of the Pareto-efficient action choose the Pareto-efficient action and exit from the present partnership. These neighbors they will be matched to each other in the next period. Following this logic, the Pareto-efficient action may spread to the entire population. The case of the complete network falls into the second case. The logic of the contagion of the Pareto-efficient action can be generalized to certain networks that are not complete, in which a concept of widely connected networks is introduced to identify a particular subset of these networks.

Next, we identify the stochastically stable states of perturbed dynamics when the network is connected and the population size is large. If the network is widely connected, only the limit states where all agents choose the Pareto-efficient action are stochastically stable. The underlying intuition is as follows. When the network is widely connected, in any limit state, all agents play the same action. Consider the transition from a limit state where all agents choose the risk-dominant action to one where all agents choose the Pareto-efficient action. Assume that two agents in a partnership switch from the risk-dominant action to the Pareto-efficient action by mistake. As an implication of wide connectedness, two mutants have two or more neighbors. With positive probability, in the next period, the neighbors of the mutants choose the Pareto-efficient action and are matched to each other if possible. Following this logic, the Pareto-efficient action will spread to the entire population. The discussion also suggests that to induce the reverse transition, for each partnership, one member should mutate to choose the risk-dominant action. As a result, when the population size is larger than six, in the long run, all agents will choose the Pareto-efficient action. In addition, we introduce an example in which all agents are distributed along a line. The line is not widely connected. In this example, there exist polymorphic-action limit states. When partnership mutation is much more likely than action mutation, only the limit states where all agents choose the Pareto-efficient action are stochastically stable. When partnership mutation has the same frequency as action mutation, only the limit states where all agents choose the risk-dominant action are stochastically stable.

Our paper contributes to the literature on learning dynamics in local interaction games.Footnote 1 Many existing papers model strategy revision as myopic best response and show the emergence of the risk-dominant convention [2, 7, 8, 11, 27]. However, there also exists another strand of research in which strategy revision is modeled as imitation. Eshel et al. [14] assume that agents are situated around a circle and play Prisoner’s Dilemma with their two immediate neighbors. They find that imitation of actions with the highest average payoffs might help individuals reach a cooperative outcome. Alós-Ferrer and Weidenholzer [1] assume that agents are situated around a circle and play a coordination game with their neighbors, not necessarily two immediate ones. They show that if interactions are neither global nor limited to the immediate neighbors, the Pareto-efficient convention can be uniquely selected provided that agents follow imitation rules. Although Alós-Ferrer and Weidenholzer [3] consider a coordination game and Alós-Ferrer and Weidenholzer [4] consider a minimum-effort game, they both assume that agents located within an arbitrary fixed network can observe the strategies and performances of agents beyond their interaction neighborhoods and imitate the most successful choices. Using this assumption, they derive sufficient conditions for the efficient convention to be unique selected. Cui [9] studies the case where agents located within an arbitrary, fixed network play a coordination game with their neighbors and imitate the strategies of their better-performing neighbors. For each agent, if one neighbor’s payoff from a specific interaction exceeds her average payoff per interaction, the neighbor is perceived as better performing. He shows that if each agent’s neighborhood is large, in the long run, all agents will choose the Pareto-efficient action. Cui and Wang [10] investigate the situation where in each period, a small proportion of agents located within an arbitrary fixed network are randomly chosen to play a minimum-effort game. The agents learn from their own and neighbors’ experiences and imitate the most successful choices. They show that if each agent’s neighborhood is large, in the long run, all agents choose the highest effort level. As a complement, when agents are located within an arbitrary, fixed network, both the present paper and Khan [25] show that (random) matching and imitation might help agents reach the Pareto-efficient convention.

Furthermore, our paper is related to the literature on random matching in learning dynamics in games. In the seminal paper of Robson and Vega-Redondo [29], a fixed even number of agents are randomly matched in pairs, once every period, to play a coordination game and then choose the strategy with the highest (random) average payoff. They then show that the Pareto-efficient convention is selected. Both the present paper and Khan [25] extend Robson and Vega-Redondo [29] by assuming that agents are located within an arbitrary, fixed network in which agents can only observe the action choices and payoffs of neighbors. Although both papers assume that (1) the observation network is fixed and the interaction network varies and (2) agents use imitation rules to revise actions, this paper is distinguished from Khan [25] by introducing (bounded) rationality into the evolution of the interaction network. In Khan [25], in each period, all agents are randomly matched in pairs; that is, the updating of the interaction network is totally random and does not take into account agents’ (bounded) rationality. By contrast, in our setting, each partnership will survive unless some member has a neighbor performing strictly better than herself, and only the separated agents will be randomly matched at the beginning of the next period. Because of this difference, in Khan [25, the proof of Proposition 1], the Pareto- efficient action can spread from two matched agents to the entire population, and there does not exist any polymorphic-action limit state; by contrast, in our paper, there may exist polymorphic-action limit states when the network is not widely connected.

In addition, our paper also contributes to the literature on the coevolution of games and networks in which individuals choose interaction partners in addition to the action.Footnote 2 The great majority of existing papers model action revision as the myopic best response [18, 19, 21, 31,32,33]. However, there exists another strand of research. In Fosco and Mengel [15], agents learn about action choices and link choices by imitating the successful behavior of others. The present paper falls into this second strand. In particular, we assume that based on payoff comparison with neighbors, agents decide whether to imitate neighbors’ action choices and whether to maintain their present partnerships.

The rest of this paper is organized as follows. Section 2 introduces the basic building blocks of the model. Section 3 studies the convergence of the unperturbed dynamics and introduces the definition of wide connectedness to exclude the polymorphic-action component. Section 4 examines the stochastic stability of the perturbed dynamics in widely connected networks and introduces an example (the line) to show that when the network is not widely connected, there exist polymorphic-action limit states, and the stochastically stable states may be different. Section 5 explores the properties of widely connected networks. Section 6 concludes.

2 Model

2.1 The Base Game

The base game is a symmetric \(2\times 2\) coordination game G. Each player has two actions, A and B. Let \(u(x,x^{'})>0\) be the payoff of a player choosing action x given that the other player is choosing action \(x^{'}\). The payoff function is represented by the following table:

where \(a>d\), \(b>c\), \(a>b\) and \(b+d>a+c\). Hence, G has two strict Nash equilibria, (A, A) and (B, B), where (A, A) is Pareto efficient and (B, B) is risk-dominant. In addition, it is assumed that \(b>d\). Thus, an A-player (a B-player) always strictly prefers to interact with an A-player (a B-player). In summary, we have \(a>b>d>c>0\), where \(d>c\) follows from \(b+d>a+c\).

The game G can be normalized as

where \(\alpha =(d-c)/(a-c)\) and \(\beta =(b-c)/(a-c)\). We have \(1>\beta>\alpha >0\) and \(\alpha +\beta >1\).

2.2 Local Observation

Let \({\mathbf {N}}= \{1, \ldots ,n\}\) be the set of agents where \(n\ge 4\) is an even number. For any \(i,j\in {\mathbf {N}}\), the relationship between them is captured by a binary variable, \(g_{ij}\in \{0,1\}\). If i and j are able to observe each other’s action and payoff in the last period, \(g_{ij}=1\); otherwise, \(g_{ij}=0\). Although each agent always knows her action and payoff, for clarity, it is assumed that \(g_{ii}=0\). Thus, \(g=\{(g_{ij}) _{i,j\in {\mathbf {N}}}\}\) defines an undirected graph and is referred to as an observation network over \({\mathbf {N}}\).

For any agent \(i\in {\mathbf {N}}\), the neighborhood\(N(i)=\{j\in {\mathbf {N}}:g_{ij}=1\}\) is the set of agents with whom mutual observation exists. Assume that \(N(i)\ne \emptyset \) for any \(i\in {\mathbf {N}}\). Each element in N(i) is called a neighbor. The neighborhood sized(i) is the cardinality of N(i); that is, \(d(i)=|N(i)|\). The notion of neighborhood can be extended as follows. For any nonempty proper subset \({\mathbf {N}}^{'}\subset {\mathbf {N}}\), \(N({\mathbf {N}}^{'})=\{j\in {\mathbf {N}}{\setminus } {\mathbf {N}}^{'}:\exists i\in {\mathbf {N}}^{'} \text { such that }g_{ij}=1\}\).

For any \(i,j\in {\mathbf {N}}\), a path from i to j is a sequence of distinct elements \((i_{1}, \ldots ,i_{L})\) satisfying \(i=i_{1}\), \(g_{i_{L}j}=1\) and \(g_{i_{l}i_{l+1}}=1\) for any \(1\le l\le (L-1)\). When \(i=j\), a circular path is defined. For any \({\mathbf {N}}^{'}\subset {\mathbf {N}}\), \({\mathbf {N}}^{'}\ne \emptyset \), the subgraph \(\big ({\mathbf {N}}^{'},(g_{ij}) _{i,j\in {\mathbf {N}}^{'}}\big )\) is a component if (1) for any \(i,j \in {\mathbf {N}}^{'}\), \(i\ne j\), there is a path from i to j and (2) there is no strict superset \({\mathbf {N}}^{''}\) of \({\mathbf {N}}^{'}\) such that (1) holds. Let \({\mathbf {c}}\) denote a component. Let \({\mathbf {C}}\) denote the set of components. The network g is connected if \({\mathbf {C}}\) is a singleton. The network g is complete if \(g_{ij}=1\) for any two distinct agents \(i,j\in {\mathbf {N}}\).

2.3 Matching

The following definition describes how agents are matched in pairs.

Definition 1

(Matching) The set of unordered pairs \(m=\{(i,j):i,j \in {\mathbf {N}} \text { and } i\ne j\}\) defines a matching over \({\mathbf {N}}\) if for any \(i\in {\mathbf {N}}\), there exists only one agent \(j\in {\mathbf {N}}\) such that \((i,j)\in m\) holds.

Any pair \((i,j)\in m\) will be referred to as a partnership, where agent j will be referred to as the partner of agent i under the matching m. Let \(m_{i}\) be i’s partner under m. The matching m defines a function \(f_{m}: {\mathbf {N}}\rightarrow {\mathbf {N}}\) by setting \(f_{m}(i)=m_{i}\) for any \(i\in {\mathbf {N}}\). The function \(f_{m}\) is a one-to-one correspondence, and \(f_{m}(f_{m}(i))=i\) for any \(i\in {\mathbf {N}}\). Let \({\mathbf {M}}\) denote the set of all possible matchings over \({\mathbf {N}}\).Footnote 3

2.4 Imitation Dynamics and Endogenous Matching

Time is discrete; i.e., \(t=0,1,2, \ldots \). In each period t, each agent i commits to an action \(a_{i}(t)\in \{A,B\}\). Let \({\mathbf {a}}(t)=(a_{1}(t), \ldots ,a_{n}(t))\) denote the action profile. All agents are matched in pairs to play the game G, where the matching is m(t). By interacting with her partner \(m_{i}(t)\), agent i receives a payoff \(u(a_{i}(t),a_{m_{i}(t)}(t))\). In each period t, three things, which are assumed to be independent, occur.

First, all separated agents are randomly matched in pairs. Suppose that each of the possible ways of pairing up all separated agents is chosen with strictly positive probability.

Second, with common probability \((1-p)\), each agent i receives an opportunity to revise her action. The probability p, \(0<p<1\), is an indicator of action inertia. Having the opportunity of action revision, if i’s payoff is strictly less than the maximum of all neighbors’ payoffs, i will switch to the action choice of the most successful neighbor in period \(t+1\); otherwise, i continues to play \(a_{i}(t)\). Formally,

where \(j_{0}\in \text {arg} \max _{k\in N(i)}u(a_{k}(t),a_{m_{k}(t)}(t))\). Note that \(0<\alpha<\beta <1\). This definition implies that if there are two or more elements in \(\text {arg} \max _{k\in N(i)\cup \{i\}}u(a_{k}(t),a_{m_{k}(t)}(t))\), these agents choose the same action.

Third, with common probability \((1-q)\), each agent i updates her partnership. The probability q, \(0<q<1\), is an indicator of partnership inertia. Having the opportunity of partnership updating, if \(u(a_{i}(t),a_{m_{i}(t)}(t))\ge \max _{k\in N(i)}u(a_{k}(t),a_{m_{k}(t)}(t))\), i is satisfied with the status quo; otherwise, i is dissatisfied. For any partnership \((i,m_{i}(t))\in m(t)\), one member’s dissatisfaction can end the partnership and can leave both i and \(m_{i}(t)\) separated. Thus, to repeat a partnership, when each member has the opportunity of partnership updating, she must have no discontent.

The behavioral rule deserves a brief discussion. There exists inertia in action revision and partnership updating, and whether to revise an action and whether to update the partnership are mutually independent. Thus, agent i may only have the opportunity to revise her action or may only have the opportunity to update her partnership. Furthermore, agents are assumed to be boundedly rational. If \(u(a_{i}(t),a_{m_{i}(t)}(t))\ge \max _{k\in N(i)}u(a_{k}(t),a_{m_{k}(t)}(t))\), agent i will neither alter the action nor update the partnership; otherwise, i will imitate the action choice of the most successful neighbor or exit from the present partnership. When the opportunity of partnership updating is available, each agent updates the partnership following the rule outlined, regardless of whether she has already altered the action. In addition, to repeat the present partnership, mutual consent of both members is required. In other words, either member can break up a partnership unilaterally. The partnership-updating mechanism is analogous to the rule of link formation and deletion in the definition of pairwise stability given by Jackson and Wolinsky [22].

The action-revision and partnership-updating process defines a Markov chain \(\{S(t)\}_{t\in {\mathbb {N}}}\) over \(\varOmega =\big (\prod _{i\in {\mathbf {N}}}\{A,B\}\big )\times {\mathbf {M}}\). We call this process unperturbed dynamics. An absorbing set is a minimal subset of \(\varOmega \) such that once the dynamics reaches the set, the probability of leaving it is zero. An absorbing state is an element that forms a singleton absorbing set. Let \({\bar{\varOmega }}\) be a union of one or more absorbing sets. The basin of attraction\(D({\bar{\varOmega }})\) is the set of states from which the unperturbed dynamics will converge to \({\bar{\varOmega }}\) with probability one.

There exist multiple absorbing sets. For instance, for any \(m\in {\mathbf {M}}\),

is an absorbing state. In fact, given that all agents choose the same action, each agent receives an identical payoff and is satisfied with the status quo. Neither her action nor her partnership will be altered.

To select among all likely absorbing sets, we introduce random noises to identify the long-run equilibria [11, 24, 35]. Suppose that in each period t, when receiving the opportunity of action revision or partnership updating,

-

agent i makes mistakes in action revision with probability \(\epsilon \in (0,1)\);

-

agent i makes mistakes in partnership updating with probability \(\epsilon ^{\lambda }\), \(\lambda >0\);

-

these two processes (of making mistakes) are independent, and random noises are independent across agents and periods.

In the case of action-revision mutation, any element in \(\{A,B\}\) is randomly chosen with strictly positive probability. In the case of partnership-updating mutation, the partnership is broken despite both agents being satisfied with the partnership, or the relationship survives despite one or both agents being unsatisfied. The parameter \(\lambda \) measures the likelihood of partnership-updating mutation relative to that of action-revision mutation. The lower \(\lambda \) is, the more likely that the partnership mutation will occur. This behavior gives rise to perturbed dynamics\(\{S^{\epsilon }(t)\}_{t\in {\mathbb {N}}}\). For any \(\epsilon \in (0,1)\), the Markov chain \(\{S^{\epsilon }(t)\}_{t\in {\mathbb {N}}}\) is irreducible and has a unique invariant distribution \(\mu ^{\epsilon }\). A state \(s\in \varOmega \) is stochastically stable if \(\lim _{\epsilon \rightarrow 0}\mu ^{\epsilon }(s)>0\). Let \(\varOmega ^{*}\) denote the set of all stochastically stable states.

Jackson and Watts [21] model partner choice and action choice as independent processes where only one link may be changed in each period. Furthermore, action-revision mutation occurs with probability \(\epsilon \), and link-updating mutation occurs with probability \(\gamma \), where it is assumed that \(\gamma =f\epsilon \) for some \(f>0\). Thus, action-revision mutation and link-updating mutation vanish at the same rate. As in Jackson and Watts [21], in our model, action revision and partner updating are two independent processes. However, when \(\lambda <1\), partnership-updating mutation vanishes at a lower rate than action-revision mutation; when \(\lambda =1\), partnership-updating mutation and action-revision mutation vanish at the same rate; when \(\lambda >1\), partnership-updating mutation vanishes at a higher rate than action-revision mutation.

3 Convergence of the Unperturbed Dynamics

In this section, we examine the convergence of the unperturbed dynamics. First, we show that the unperturbed dynamics always converges to a limit state where (1) no agent has an incentive to alter her action; (2) at most one A-agent (B-agent) wants to update her partnership; and (3) there exists at most one component such that both actions coexist. Second, we identify a particular class of networks such that for each limit state the coexistence of both actions in any component is impossible.

3.1 General Networks

Consider a component \({\mathbf {c}}\) of the network g. For any \(s\in \varOmega \) and \(a\in \{A,B\}\), let \({\mathbf {I}}_{a}(s,{\mathbf {c}})\) be the set of a-players in \({\mathbf {c}}\) who are motivated to switch to the other action. Let \({\mathbf {P}}_{a}(s,{\mathbf {c}})\) be the set of a-players in \({\mathbf {c}}\) who are willing to continue to choose action a but are dissatisfied with their present partners. Recall that in the game G, \(0<\alpha<\beta <1\). We have \(i\in {\mathbf {I}}_{A}(s,{\mathbf {c}})\) if and only if i plays the game G with a B-player and receives a payoff of 0 and one neighbor plays action B, while any neighbor who plays A also gets a payoff of 0. Formally,

We have \(i\in {\mathbf {I}}_{B}(s,{\mathbf {c}})\) if and only if one neighbor plays A and receives a payoff of 1. Formally,

Following from the discussion immediately after the behavioral rule, \(i\in {\mathbf {P}}_{a}(s,{\mathbf {c}})\) if and only if the partner plays the other action and one neighbor receives a payoff of u(a, a) and is the most successful among all agents in N(i). Formally,

and

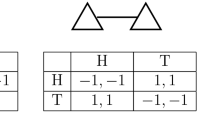

Illustration of \({\mathbf {I}}_{a}(\cdot ,\cdot )\) and \({\mathbf {P}}_{a}(\cdot ,\cdot )\) for any \(a\in \{A,B\}\): (1) each node represents an agent; (2) each solid line indicates a link, whereas a dotted line indicates that two end-agents are matched to each other; and (3) a blank (gray) node indicates that the agent plays action A (B)

The following example clarifies the definitions of \({\mathbf {I}}_{a}(\cdot ,\cdot )\) and \({\mathbf {P}}_{a}(\cdot ,\cdot )\) for any \(a\in \{A,B\}\).

Example 1

Assume that \({\mathbf {N}}=\{1, \ldots ,8\}\) and the network g is given by \(g_{i(i+1)}=1\) for any \(1\le i\le 7\) and \(g_{18}\)=1. Thus, g is a circle. Consider the state s illustrated by Fig. 1 where 1 and 2 play action B and are matched to each other, 5 and 6 play action A and are matched to each other, and A-players 3 and 7 are matched to B-players 4 and 8. Each agent in \(\{3,4,7,8\}\) receives a strictly lower payoff than some neighbor. Either of 7 and 8 plays the same action as the most successful neighbor and is not motivated to alter the action. As a result,

The following theorem explores the convergence of the unperturbed dynamics. The proof is provided in “Appendix”.

Theorem 1

The unperturbed dynamics \(\{S(t)\}_{t\in {\mathbb {N}}}\) will converge to one of the limit states with probability one. The state \(s=\big ({\mathbf {a}},m\big )\in \varOmega \) is a limit state if and only if

-

1.

\({\mathbf {I}}_{A}(s,{\mathbf {c}})= {\mathbf {I}}_{B}(s,{\mathbf {c}})=\emptyset \) for any \({\mathbf {c}}\in {\mathbf {C}}\) and \(\sum _{{\mathbf {c}}\in {\mathbf {C}}} |{\mathbf {P}}_{a}(s, {\mathbf {c}})| \le 1\text { for all } a\in \{A,B\}\);

-

2.

there is at most one component \({\mathbf {c}}_{AB}\) such that both actions are played by its members where if \({\mathbf {c}}_{AB}\) exists, \(|{\mathbf {P}}_{A}(s,{\mathbf {c}}_{AB})|=1\).

The unperturbed dynamics will converge to one of the limit states with certainty. Assume that there are only two A-players, \(i_{0}\) and \(j_{0}\), \(g_{i_{0}j_{0}}=0\), and that they are matched to each other. Any agent in \(N(i_{0})\) (\(N(j_{0})\)) receives a strictly lower payoff than \(i_{0}\) (\(j_{0}\)) and is motivated to switch from B to A and end the present partnership. In the next period, with strictly positive probability, all agents in \(N(i_{0})\cup N(j_{0})\) play A and are matched to each other if possible. Following this logic, the Pareto-efficient action A can spread from \(i_{0}\) and \(j_{0}\) to others. When \(|\cup _{i\in \{i_{0},j_{0}\}}\big (\{i\}\cup N(i)\big )|\) is odd, one A-player \(i_{1}\) is matched to a B-player \(j_{1}\). Furthermore, if \(N(\cup _{i\in \{i_{0},j_{0}\}}\big (\{i\}\cup N(i)\big ))\) is a singleton consisting of \(j_{1}\), A fails to spread from \(i_{1}\) to \(j_{1}\). Then, a polymorphic-action limit state is reached. In addition, for any \(a\in \{A,B\}\), the number of a-players who are discontent with their present partners never exceeds one; otherwise, these a-players will be matched to each other.

When the polymorphic-action component \({\mathbf {c}}_{AB}\) exists, there are two distinct agents \(i_{0}\) and \(j_{0}\) in \({\mathbf {c}}_{AB}\), \(g_{i_{0}j_{0}}=1\), such that \(i_{0}\) is an A-player and \(j_{0}\) is a B-player. As a B-player, \(j_{0}\) receives a payoff of \(\alpha \) or \(\beta \) that is strictly less than 1. Agent \(i_{0}\) is matched to a B-player and receives a payoff of zero, which is strictly less than \(\alpha \) or \(\beta \); otherwise, \(j_{0}\) is motivated to alter the action. This implies that \(i_{0}\) is the unique A-player who is motivated to exit from the present partnership. Note that \(i_{0}\) is not motivated to change her action. Therefore, for agent \(i_{0}\), one neighbor plays A and is matched to an A-player. That is, the number of A-players is greater than three.

As an illustration, the following example presents two limit states where for one of them, there is a polymorphic-action component.

Example 2

Assume that the network g consists of two components:

Figure 2 illustrates two limit states in g. In the left panel, all members in each component choose the same action. Only agent 3 is motivated to end the present partnership because she receives a lower payoff than one neighbor 4. In the right panel, \({\mathbf {c}}_{1}\) is the polymorphic-action component. Due to the poor performance of agent 2, the Pareto-efficient action A fails to spread to all members of \({\mathbf {c}}_{1}\). However, 2 has no incentive to alter action because the most successful neighbor 1 chooses A. Only 2 and 3 are willing to end the present partnerships. Following the partnership-updating mechanism, they continue to be matched.

3.2 Wide Connectedness

When the network g is complete, there is no polymorphic-action limit state. If both actions coexist, the discussion following Theorem 1 implies that there exist two A-players, denoted by \(i_{0}\) and \(j_{0}\), who are matched to each other. Note that a link exists between any two distinct agents. Each B-player has one neighbor \(i_{0}\) or \(j_{0}\) who receives the highest possible payoff by choosing A and is motivated to switch to A. This yields a contradiction.

A fascinating question that arises is whether this conclusion can be extended to more general networks? Fortunately, a positive answer can be given. We now present a particular class of networks that exclude polymorphic-action limit states.

Definition 2

(Wide connectedness) A component \({\mathbf {c}}=\big ({\mathbf {N}}^{'},(g_{ij}) _{i,j\in {\mathbf {N}}^{'}}\big )\) is widely connected if either \(|{\mathbf {N}}^{'}|=2\) or for any proper subset \({\mathbf {N}}^{''}\subset {\mathbf {N}}^{'}\), \(N({\mathbf {N}}^{'}{\setminus } {\mathbf {N}}^{''})\) is not a singleton when \({\mathbf {N}}^{''}\ge 2\). The network g is widely connected if g is connected and the unique component is widely connected.

Consider a component \({\mathbf {c}}=\big ({\mathbf {N}}^{'}, (g_{ij}) _{i,j\in {\mathbf {N}}^{'}}\big )\) where \(|{\mathbf {N}}^{'}|\ge 3\). Informally, wide connectedness implies that for any partition \(\{{\mathbf {N}}^{'}_{1}, {\mathbf {N}}^{'}_{2}\}\) of \({\mathbf {N}}^{'}\), when \(|{\mathbf {N}}^{'}_{1}|\ge 2\), there are at least two agents in \({\mathbf {N}}^{'}_{1}\) who have at least one link with agents in \({\mathbf {N}}^{'}_{2}\). Formally,

Furthermore, when \({\mathbf {N}}^{'}_{1}={\mathbf {N}}^{'}{\setminus }\{i\}\) for any \(i\in {\mathbf {N}}^{'}\), wide connectedness implies that i has at least two neighbors.

The following example shows that the circle is widely connected.

Example 3

Assume that agents are spatially distributed around a circle. Formally, for any agent \(i\in {\mathbf {N}}=\{1, \ldots ,n\}\), \(N(i)=\{i-1,i+1\}\) where \(i\pm 1\) is understood as modulo n. As an illustration, see Fig. 1. This network is widely connected. For any nonempty proper subset \({\mathbf {N}}^{'}\subset {\mathbf {N}}\), \(N({\mathbf {N}}{\setminus } {\mathbf {N}}^{'})\) contains two or more agents.

The following example illustrates a network that is connected but not widely connected.

Example 4

Assume that there are 2m, \(m\ge 2\), agents; that is, \(n=2m\). The network g is defined as follows: \(g_{ij}=1\) for any distinct agents \(i,j\in \{1, \ldots ,m\}\) and \(i,j\in \{m+1, \ldots ,2m\}\), and \(g_{m(m+1)}=1\). This network is not widely connected. In fact, \(N({\mathbf {N}}{\setminus }\{1, \ldots ,m\})=N(\{m+1, \ldots ,2m\})=\{m\}\).

Examples 3 and 4 show that wide connectedness has no relationship with the minimum degree among all agents. Example 3 illustrates a widely connected network where \(d(i)=2\) for any \(i\in {\mathbf {N}}\), whereas Example 4 presents a connected but not widely connected network where \(\min _{i\in {\mathbf {N}}}d(i)\), which is \(m-1\), can be as large as possible when m goes to infinity.

Informally, when there are two members in a component \({\mathbf {c}}\) who play A and are matched to each other, wide connectedness of \({\mathbf {c}}\) implies that the Pareto-efficient action A is able to spread from these two agents to all members in \({\mathbf {c}}\). The following theorem shows that wide connectedness excludes the polymorphic-action limit states. The proof is provided in “Appendix”.

Theorem 2

If a component is widely connected, then all members choose the same action in any limit state.

If a component \({\mathbf {c}}\) is widely connected, for any limit state, the coexistence of both actions is prevented. The intuition is as follows. For a limit state s, when \({\mathbf {c}}\) is the polymorphic-action component, (1) at least two members play action A, and (2) \({\mathbf {P}}_{A}(s,{\mathbf {c}})\) is a singleton. Following from Definition 2, the set of B-players in \({\mathbf {c}}\) has two or more neighbors from the set of A-players in \({\mathbf {c}}\). However, the neighborhood of the set of B-players in \({\mathbf {c}}\) coincides with \({\mathbf {P}}_{A}(s,{\mathbf {c}})\). This result contradicts the fact that \({\mathbf {P}}_{A}(s,{\mathbf {c}})\) is a singleton.

As an immediate result of Theorem 2, we have the following corollary.

Corollary 1

Assume that each component of the network g is widely connected. Then, in any limit state, there exists no component such that both actions coexist.

Wide connectedness denies the possibility of the coexistence of both actions in any component for each limit state. In other words, when the network is widely connected, in any limit state, all members of each component choose the same action.

4 Stochastic Stability of the Perturbed Dynamics

In this section, we identify the stochastically stable states of the perturbed dynamics. Without loss of generality, assume that the network is connected. First, we study the case of widely connected networks. Then, we explore an example where agents are distributed along a line, i.e., a network that is not widely connected.

For clarity, we introduce the following notation. For any \(a\in \{A,B\}\), let \(\varOmega ^{a}\subset \varOmega \) denote the set of states where all agent play action a. Formally,

Each element in \(\varOmega ^{a}\) is a limit state of the unperturbed dynamics \(\{S(t)\}_{t\in {\mathbb {N}}}\), irrespective of the architecture of the network g. Let \(\varOmega ^{AB}\subset \varOmega \) be the set of limit states where both actions A and B coexist.

4.1 Perturbed Dynamics in Widely Connected Networks

Given that the network g is widely connected, the following theorem characterizes the stochastically stable states of the perturbed dynamics.

Theorem 3

Assume that the network g is widely connected. Consider the perturbed dynamics \(\{S^{\epsilon }(t)\}_{t\in {\mathbb {N}}}\), \(\epsilon \in (0,1)\). Then,

Proof

Following from Corollary 1, the set of all limit states of the unperturbed dynamics \(\{S(t)\}_{t\in {\mathbb {N}}}\) is \(\varOmega ^{A}\cup \varOmega ^{B}\).

First, the following lemma investigates the transition from \(\varOmega ^{B}\) to \(\varOmega ^{A}\). The proof is in “Appendix”.\(\square \)

Lemma 1

Assume that g is widely connected. Starting from any limit state in \(\varOmega ^{B}\), after two action mutations followed by the unperturbed dynamics, \(\{S^{\epsilon }(t)\}_{t\in {\mathbb {N}}}\) will arrive at a limit state in \(\varOmega ^{A}\) with positive probability.

Then, the following explores how to traverse all limit states in \(\varOmega ^{a}\) for any \(a\in \{A,B\}\). The proof is in “Appendix”.

Lemma 2

Assume that g is widely connected. For any two distinct limit states \(s, s^{'}\in \varOmega ^{a}\), \(a\in \{A,B\}\), there is a sequence \((s_{0}, \ldots ,s_{\ell })\) of distinct elements in \(\varOmega ^{a}\) such that (1) \(s_{0}=s\) and \(s_{\ell }=s^{'}\); and (2) for any \(0\le \iota \le (\ell -1)\), to transit from \(s_{\iota }\) to \(s_{\iota +1}\), two partnership mutations are required when \(\lambda \le 1\), and two action mutations are required when \(\lambda >1\).

Following from Lemmas 1 and 2, for any \(s\in \varOmega ^{A}\), when \(\lambda \le 1\), the minimum resistance among all s-trees is

where the first term is the resistance of transition from other limit states in \(\varOmega ^{B}\) to one state \(s^{'}\) in \(\varOmega ^{B}\); the second term is the resistance of transition from \(s^{'}\) to a state in \(\varOmega ^{A}\); and the third term is the resistance of transition from other limit states in \(\varOmega ^{A}\) to s; similarly, when \(\lambda >1\), the minimum resistance among all s-trees is \(2 (|{\mathbf {M}}|-1)+2 |{\mathbf {M}}|\).Footnote 4 However, the Proof of Lemma 1 shows that to transit from a limit state in \(\varOmega ^{A}\) to any limit state in \(\varOmega ^{B}\), 0.5n action mutations of resistance 0.5n are required. As a result, if \(n\ge 6\), the set of stochastically stable equilibria is \(\varOmega ^{A}\); if \(n=4\), all limit states are stochastically stable. \(\square \)

Consider the transition from \(\varOmega ^{B}\) to \(\varOmega ^{A}\). When one agent and her partner play action A by mistake, they both receive the highest possible payoff of 1 and are never motivated to switch to action B or end the partnership between them. Then, in the next period, with strictly positive probability, all neighbors of these two agents play action A and are matched to one another if possible, where at most one A-player is matched to a B-player. Note that the network g is widely connected. This widely connected network implies that when the set of B-players is not empty, some B-players observe A-players who are matched to A-players and are motivated to switch to A. Following the same logic, the Pareto-efficient action A can spread from two agents in a partnership to the entire population. Therefore, two action mutations can induce the transition from \(\varOmega ^{B}\) to \(\varOmega ^{A}\). The reasoning also implies that to transit from \(\varOmega ^{A}\) to \(\varOmega ^{B}\), there should be at least one B-player in any partnership, and thus at least 0.5n action mutations are required. According to the radius–coradius theorem in Ellison [12], the radius of \(\varOmega ^{A}\) is at least 0.5n, and its coradius is 2. If \(n\ge 6\), in the long run, only limit states from \(\varOmega ^{A}\) will be observed with strictly positive probability.

Next, consider the transition from \(((a, \ldots ,a),m)\) to \(((a, \ldots ,a),m^{'})\) for any \(a\in \{A,B\}\), \(m\ne m^{'}\), provided that \(\lambda \le 1\). When agents i and \(m^{'}_{i}\), \(m_{i}\ne m^{'}_{i}\), receive the opportunity of partnership updating, they end their present partnerships by mistake. In the next period, with strictly positive probability, i and \(m^{'}_{i}\) are matched to each other. After two partnership mutations, another limit state \(s^{''}\in \varOmega _{a}\) is arrived at where, in comparison with s, \(s^{''}\) has at least one more partnership in common with \(s^{'}\). Thus, any two limit states in \(\varOmega _{a}\) are accessible through transitions between elements in \(\varOmega _{a}\), where two partnership mutations are required for each transition. According to the least-resistance tree construction, when \(n=4\), all limit states are stochastically stable.

4.2 Perturbed Dynamics in a Line

When the network g is connected but not widely connected, there may exist polymorphic-action limit states. The transition from \(\varOmega ^{A}\) to \(\varOmega ^{B}\) may proceed by passing through polymorphic-action limit states. In this subsection, we introduce an example to explore the perturbed dynamics in nonwidely connected networks provided that n is large.

Suppose that all agents are distributed along a line\(g^{l}\). Specifically, \(g^{l}_{i(i+1)}=1\) for any \(1\le i\le n-1\) and \(g^{l}_{ij}=0\) otherwise. Note that there is only one link between \(\{1, \ldots ,i\}\) and \(\{i, \ldots ,n\}\) for any \(1\le i\le n\). The network \(g^{l}\) is not widely connected.

As in the case of widely connected networks, for any \(a\in \{A,B\}\) and \(m\in {\mathbf {M}}\), \(((a, \ldots ,a),m)\) is a monomorphic-action limit state. As an illustration, see Fig. 3.

In addition to the monomorphic-action limit states in \(\varOmega ^{A}\cup \varOmega ^{B}\), there exist polymorphic-action limit states. Following from Theorem 1, for each polymorphic-action limit state, there exists only one A-player \(i_{0}\) such that (1) one neighbor plays B and one neighbor plays A and (2) \(i_{0}\) is matched to a B-player. The set of A-players is either \(\{1,2, \ldots , i_{0}\}\) or \(\{i_{0}, \ldots ,n\}\). Each A-player except \(i_{0}\) is matched to an A-player; otherwise, there is another A-player besides \(i_{0}\) who is motivated to end the present partnership, or \(i_{0}\) is motivated to switch to B. As an illustration, see Fig. 4.

In the remaining part of this subsection, we identify the stochastically stable states of the perturbed dynamics in the line. For clarity, we focus on the case where in any polymorphic-action limit state the set of A-players is \(\{1,2, \ldots , i_{0}\}\).

First, as in the case of widely connected networks (Lemma 2), any two distinct limit states in \(\varOmega ^{a}\), \(a\in \{A,B\}\), are accessible via a path of elements in \(\varOmega ^{a}\) where each transition between two neighboring elements requires two action or partnership mutations, and they have the same stochastic stability.

Second, consider the transition from \(\varOmega ^{A}\) to \(\varOmega ^{AB}\). Suppose that in the initial state, \(n-1\) and n are matched to each other. As an illustration, see the top panel of Fig. 3. Assume that n switches from A to B by mistake. Then, a polymorphic-action limit state with one single B-player is reached.

Third, consider the transition from \(\varOmega ^{B}\) to \(\varOmega ^{AB}\). In any polymorphic-action limit state, there exist two A-players who are matched to each other. The inequality \(0<\alpha<\beta <1\) implies that at least two action mutations are required to induce the transition from \(\varOmega ^{B}\) to \(\varOmega ^{AB}\). Suppose that in the initial state, \(m(i)=i+1\) for any odd i. Assume that 1 and 2 switch from B to A by mistake. In the next period, 1 and 2 receive the highest possible payoff of 1. Then, 3 will play A by imitating the action choice of the most successful neighbor 2. A polymorphic-action limit state with three A-players is reached. The discussion also shows that to leave the basin of attraction of\(\varOmega ^{B}\), two action mutations are required. In addition, two action mutations by 1 and n can induce the transition from \(\varOmega ^{B}\) to \(\varOmega ^{A}\) provided that in the initial state, \(m(i)=n+1-i\) for any i.

Next, consider the transition from \(\varOmega ^{AB}\) to \(\varOmega ^{A}\). Suppose that in the initial state, \(m(i)=i+1\) for any odd i. As an illustration, see the bottom panel of Fig. 4. Assume that \(i_{0}-1\) ends the present partnership by mistake. In the next period, with positive probability, \(i_{0}-1\) and \(i_{0}\) are matched to each other and receive the highest possible payoff of 1, while \(i_{0}+1\) and \(i_{0}-2\) are matched to each other. Then, \(i_{0}+1\) will switch to A because the most successful neighbor \(i_{0}\) chooses A, which implies that \(i_{0}+2\) will switch to A too. A polymorphic-action limit state with two more A-players will be reached. This discussion suggests that any polymorphic-action limit state is connected to a limit state in \(\varOmega ^{A}\)via a path of elements in\(\varOmega ^{AB}\), where each transition requires one single partnership mutation.

Now, consider the transition from \(\varOmega ^{AB}\) to \(\varOmega ^{B}\). Suppose that in the initial state, \(m(i)=i+1\) for any odd i. As an illustration, see the bottom panel of Fig. 4. There are two ways to complete the transition. In the first, assume that \(i_{0}-1\) switches from A to B by mistake. Then, \(i_{0}\) will switch to B because both neighbors play B. After one single action mutation, a polymorphic-action limit state with two more B-players will be reached. In the second, assume that A-player \(i_{0}-2\) ends the partnership with A-player \(i_{0}-1\) by mistake and that B-player \(i_{0}+2\) ends the partnership with B-player \(i_{0}+3\) by mistake. In the next period, with strictly positive probability, A-player \(i_{0}-1\) (\(i_{0}-2\)) is matched with B-player \(i_{0}+3\) (\(i_{0}+2\)). Then, \(i_{0}\) will switch to B because the most successful neighbor \(i_{0}+1\) chooses B, which implies that \(i_{0}-1\) will switch to B too. After two partnership mutations, a polymorphic-action limit state with two moreB-players will be reached. Note that when there is only one B-player in the initial state, only the first route is possible.

When \(\lambda <1\), the partnership mutation is more likely than the action mutation. The above discussion shows that in this case, the resistance of the transition from a polymorphic-action limit state to another polymorphic-action limit state with more A-players is \(\lambda \), whereas the resistance of the transition in the reverse direction is \(\min \{2\lambda , 1\}\). Note that \(\min \{2\lambda , 1\}>\lambda \). Therefore, for any \(s\in \varOmega ^{A}\) and \(s^{'}\in \varOmega ^{B}\), the least resistance of all s-trees is strictly less than the least resistance of all \(s^{'}\)-trees when n is large. The set of stochastically stable states is \(\varOmega ^{A}\) when \(\lambda <1\).

When \(\lambda =1\), the partnership and action mutations occur with the same frequency. In this case, both the resistance of the transition from a polymorphic-action limit state to another polymorphic-action limit state with more A-players and the resistance of the transition in the reverse direction are 1. However, leaving the basin of attraction of \(\varOmega ^{A}\) requires one single action mutation, whereas leaving the basin of attraction of \(\varOmega ^{B}\) requires two action mutations. Thus, the set of stochastically stable states is \(\varOmega ^{B}\) when \(\lambda =1\).

5 Discussion

Wide connectedness plays an important role in the previous two sections. Now, we examine the properties of widely connected networks.

To facilitate the analysis, we first present the following definition.

Definition 3

(Tree) The network g is a tree if g is connected and there is no circular path.

An equivalent definition is as follows. A network g is a tree if for any \(i,j\in {\mathbf {N}}\), when \(g_{ij}=1\), \(g-ij\) is no longer connected, where \(g-ij\) is the network obtained from g by deleting the link between i and j. Let \(g^{t}\) denote a tree.

The line \(g^{l}\) given in Sect. 4.2 is a tree. Recall that \(g^{l}\) is not widely connected. The following lemma shows that this conclusion can be extended to any tree.

Lemma 3

Any tree is not widely connected.

The proof is trivial. For any \(i,j\in {\mathbf {N}}\), if \(g^{t}_{ij}=1\), the network \(g^{t}-ij\) consists of two components with one component \(({\mathbf {N}}^{'},(g_{ij}) _{i,j\in {\mathbf {N}}^{'}})\) satisfying \(|{\mathbf {N}}^{'}|\ge 2\). It is straightforward to conclude that either \(N({\mathbf {N}}{\setminus } {\mathbf {N}}^{'})=\{i\}\) or \(N({\mathbf {N}}{\setminus } {\mathbf {N}}^{'})=\{j\}\) holds. Definition 2 implies that \(g^{t}\) is not widely connected.

Example 3 shows that the circle is widely connected. One may question that the tree is not widely connected because of the nonexistence of a circular path. To address this issue, consider the following example.

Example 5

Consider a society comprising six agents. Assume that the network g is specified as \(g_{i(i+1)}=1\) for any \(i=1, \ldots ,5\); \(g_{13}=1\) and \(g_{ij}=0\) otherwise. Agents 1, 2, 3 and the links between them define a circular path. It is straightforward to determine that \(N(\{4,5,6\})= \{i\in \{1,2,3\}:\exists j\in \{4,5,6\} \text { s.t. } g_{ij}=1 \}=\{3\}\). That is, g is not widely connected. Figure 5 illustrates a polymorphic-action limit state in g.

Example 5 presents a connected network that is neither a tree nor widely connected. This network implies that there is no dichotomy between widely connected networks and trees.

However, the following lemma shows that if there is a circular path passing through all agents, g is widely connected. The proof is trivial, and we omit it here.

Lemma 4

If there exists a circular path passing through all elements of \({\mathbf {N}}\), g is widely connected.

Note that any network containing a circular path passing through all elements of \({\mathbf {N}}\) is denser than the circle. Lemma 4 can also be extended further. The proof is trivial, and we omit it here.

Lemma 5

Consider two networks g and \(g^{'}\) where for any \(i,j\in {\mathbf {N}}\), \(g_{ij}=1\) implies that \(g^{'}_{ij}=1\). Then, if g is widely connected, \(g^{'}\) is widely connected.

Lemma 4 only provides a sufficient condition for wide connectedness. To clarify this point, consider the following example.

Example 6

Assume that the network is a core-periphery network \(g^{cp}\). Formally, there is a partition \(\{{\mathbf {N}}_{1},{\mathbf {N}}_{2}\}\) of \({\mathbf {N}}\) such that \(N(i)={\mathbf {N}}{\setminus } \{i\}\) for any \(i\in {\mathbf {N}}_{1}\) and \(N(j)={\mathbf {N}}_{1}\) for any \(j\in {\mathbf {N}}_{2}\). That is, \({\mathbf {N}}_{1}\) (\({\mathbf {N}}_{2}\)) is the set of core (periphery) agents.Footnote 5 For any core-periphery network \(g^{cp}\), there is no circular path passing through all agents provided that \(|{\mathbf {N}}_{1}|<|{\mathbf {N}}_{2}|\). However, it is straightforward to see that when \(2\le |{\mathbf {N}}_{1}|<|{\mathbf {N}}_{2}|\), \(g^{cp}\) is widely connected.Footnote 6 Figure 6 illustrates a limit state where all agents play A.

6 Concluding Remarks

We consider the coevolution of social coordination and matchings. Agents are located within an arbitrary, fixed network and are matched in pairs to play a \(2\times 2\) coordination game. In each period, by comparing their own and neighbors’ payoffs, agents decide whether to imitate neighbors’ actions and whether to maintain their present partnerships. There is inertia in both action revision and partnership updating. For any partnership, one member’s unwillingness can end it and can separate the two members. All separated agents are randomly matched in pairs at the beginning of the next period. Occasionally, agents make mistakes in action revision and partnership updating.

We first characterize the limit states of the unperturbed dynamics. To exclude the possibility that agents in a component play different actions in a limit state, we introduce the definition of wide connectedness. Wide connectedness of a component implies that for any proper subset of two or more members, two or more elements have links to elements in the complement of the subset. A network is widely connected if it is connected and the unique component is widely connected. Given that the network is widely connected, if the size of the population is four, all monomorphic limit states are stochastically stable; if the size is strictly larger than four, only the limit states where all agents choose the Pareto-efficient action are stochastically stable. We also introduce an example, the line, to show that when the network is not widely connected, the likelihood of partnership mutation relative to that of action mutation matters, and stochastically stable states may be different.

When the network is widely connected, the Pareto-efficient action can spread from two agents in a partnership to the entire population (Lemma 1 in the Proof of Theorem 3). Therefore, the main results can be generalized to the case that agents are matched in pairs to play a 2-person symmetric game where there is a Nash equilibrium strictly Pareto-dominating any other strategy profile. One example is a 2-person minimum-effort game. Another example is a 2-person symmetric game satisfying that there exists a strictly dominant strategy such that when all players play the strictly dominant strategy, each player receives the highest possible payoff.

There are several natural extensions to the research presented here. First, as in Alós-Ferrer and Weidenholzer [3, 4], agents are assumed to be optimistic and to focus on the highest observed payoffs only. It would be desirable to consider other measures of the performance of each action; for instance, agents focus on the lowest observed payoffs of each action only. Second, agents are assumed to be matched in pairs to play a 2-person coordination game. We would be encouraged to consider the case in which agents are matched in groups to play an n-person symmetric game in which \(n\ge 3\). In this case, any proper subgroup of two or more agents may be tempted to regroup to play a “smaller” game. Third, it would be interesting to assess whether or not the main results are supported by laboratory experiments.

Notes

The cardinality of the set \({\mathbf {M}}\) is \((n-1)\times (n-3)\times \cdots \times 3\times 1=(n-1)!!\).

The case that \(|{\mathbf {N}}_{1}|=1\) defines a star network, which is a tree and is not widely connected.

References

Alós-Ferrer C, Weidenholzer S (2006) Imitation, local interactions, and efficiency. Econ Lett 93:163–168

Alós-Ferrer C, Weidenholzer S (2007) Partial bandwagon effects and local interactions. Games Econ Behav 61:179–197

Alós-Ferrer C, Weidenholzer S (2008) Contagion and efficiency. J Econ Theory 143:251–274

Alós-Ferrer C, Weidenholzer S (2014) Imitation and the role of information in overcoming coordination failures. Games Econ Behav 87:397–411

Bhaskar V, Vega-Redondo F (2004) Migration and the evolution of conventions. J Econ Behav Organ 55:397–418

Blume A, Temzelides T (2003) On the geography of conventions. Econ Theory 22:863–873

Blume LE (1993) The statistical mechanics of strategic interaction. Games Econ Behav 5:387–424

Blume LE (1995) The statistical mechanics of best-response strategy revision. Games Econ Behav 11:111–145

Cui Z (2014) More neighbors, more efficiency. J Econ Dyn Control 40:103–115

Cui Z, Wang R (2016) Collaboration in networks with randomly chosen agents. J Econ Behav Organ 129:129–141

Ellison G (1993) Learning, local interaction, and coordination. Econometrica 61:1047–1071

Ellison G (2000) Basins of attraction, long-run stochastic stability, and the speed of step-by-step evolution. Rev Econ Stud 67:17–45

Ely I (2002) Local conventions. Adv Theor Econ 2, Article 1

Eshel I, Samuelson L, Shaked A (1998) Altruists, egoists, and hooligans in a local interaction model. Am Econ Rev 88:157–179

Fosco C, Mengel F (2011) Cooperation through imitation and exclusion in networks. J Econ Dyn Control 35:641–658

Gerber A, Bettzüge M (2007) Evolutionary choice of markets. Econ Theory 30:453–472

Goyal S (2011) Learning in networks. In: Benhabib J, Bisin A, Jackson M (eds) Handbook of social economics, vol 1. North Holland, Amsterdam

Goyal S, Vega-Redondo S (2005) Network formation and social coordination. Games Econ Behav 50:178–207

Hojman D, Szeidl A (2006) Endogenous networks, social games, and evolution. Games Econ Behav 55:112–130

in’t Veld D, van Lelyveld I (2014) Finding the core: network structure in interbank markets. J Bank Finance 49:27–40

Jackson M, Watts A (2002) On the formation of interaction networks in social coordination games. Games Econ Behav 41:265–291

Jackson M, Wolinsky A (1996) A strategic model of social and economic networks. J Econ Theory 71:44–74

Jackson M, Zenou Y (2014) Games on networks. In: Young P, Zamir S (eds) Handbook of game theory with economic applications, vol 4. North Holland, Amsterdam

Kandori M, Mailath G, Rob R (1993) Learning, mutation, and long run equilibria in games. Econometrica 61:29–56

Khan A (2014) Coordination under global random interaction and local imitation. Int J Game Theory 43:721–745

Langfield S, Liu Z, Ota T (2014) Mapping the UK interbank system. J Bank Finance 45:288–303

Morris S (2000) Contagion. Rev Econ Stud 67:57–78

Oechssler J (1997) Decentralization and the coordination problem. J Econ Behav Organ 32:119–135

Robson A, Vega-Redondo F (1996) Efficient equilibrium selection in evolutionary games with random matching. J Econ Theory 70:65–92

Shi F (2015) Long-run technology choice with endogenous local capacity. Econ Theory 59:377–399

Staudigl M (2011) Potential games in volatile environments. Games Econ Behav 72:271–287

Staudigl M (2013) Co-evolutionary dynamics and Bayesian interaction games. Int J Game Theory 42:179–210

Staudigl M, Weidenholzer S (2014) Constrained interactions and social coordination. J Econ Theory 152:41–63

Weidenholzer S (2010) Coordination games and local interactions: a survey of the game theoretic literature. Games 1:551–585

Young HP (1993) The evolution of conventions. Econometrica 61:57–84

Author information

Authors and Affiliations

Corresponding author

Additional information

I am indebted to the associate editor and two anonymous referees for suggesting ways to improve both the content and exposition of this paper. I am also grateful to Fei Shi for helpful comments and suggestions. This work was financially supported in part by the National Science Foundation of China (Nos. 71671010, 71690245) and the Fundamental Research Funds for the Central Universities (YWF-14-JGXY-016).

Appendix

Appendix

1.1 Radius–Coradius Theorem

Here, we present the modified radius-coradius theorem of Ellison [12]. Some preliminary definitions are necessary. Consider the perturbed dynamics \((\{X^{\epsilon }(t)\}_{t\in {\mathbb {N}}},S)\), \(\epsilon \in (0,1)\). For any \(\epsilon \), \(\epsilon \in (0,1)\), \(\{X^{\epsilon }(t)\}_{t\in {\mathbb {N}}}\) is an irreducible Markov chain. For any two states s and \(s^{'}\), the resistance is defined as

For any two subset \({\bar{\varOmega }}\) and \({\bar{\varOmega }}^{'}\) of \(\varOmega \), \({\bar{\varOmega }}\cap {\bar{\varOmega }}^{'}=\emptyset \), a path from \({\bar{\varOmega }}\) to \({\bar{\varOmega }}^{'}\) is a sequence of distinct states \((s^{1}, \ldots ,s^{m})\) with \(s^{1}\in {\bar{\varOmega }}\), \(s^{l}\notin {\bar{\varOmega }}^{'}\) for \(2\le l\le m-1\) and \(s^{m}\in {\bar{\varOmega }}^{'}\). Define the resistance of the path \((s^{1}, \ldots ,s^{m})\) as

Let \(\mathbf {\text {P}}({\bar{\varOmega }},{\bar{\varOmega }}^{'})\) be the set of all paths from \({\bar{\varOmega }}\) to \({\bar{\varOmega }}^{'}\).

For any absorbing set \({\bar{\varOmega }}\), the radius\(R({\bar{\varOmega }})\) is

If a path \((s^{1}, \ldots ,s^{m})\) passes through absorbing sets \({\bar{\varOmega }}^{1}, \ldots ,{\bar{\varOmega }}^{k}\), the modified-resistance is

The notation of modified-resistance can be extended to a point-set concept by setting

For any absorbing set \({\bar{\varOmega }}\), the modified-coradius\(CR^{*}({\bar{\varOmega }})\) is defined by

Theorem 2 in Ellison [12], p.24) shows that for any union of absorbing sets \({\bar{\varOmega }}\), when \(R({\bar{\varOmega }})>CR^{*}({\bar{\varOmega }})\), \({\bar{\varOmega }}\) contains all stochastically stable equilibria. Intuitively, if there is less resistance to enter the basin of attraction \(D({\bar{\varOmega }})\) than to leave it, \({\bar{\varOmega }}\) is relatively stable against the perturbation of random noises and will be observed most of the time when random noises vanish.

1.2 Proofs

Proof of Theorem 1

The proof consists of two parts. The first part shows that a state is a limit state if and only if the conditions in Theorem 1 are satisfied. The proof of sufficiency is trivial. For the sake of space, we only prove the necessity. The second part proves the convergence of the unperturbed dynamics \(\{S(t)\}_{t\in {\mathbb {N}}}\).

\(\mathbf {Part}\)\({\mathbf {I}}\): Characterization of limit states

The state \(s=((a_{1}, \ldots ,a_{n}),m)\) is an absorbing state if and only if (1) no one is motivated to alter her action and (2) the number of agents who are motivated to end the present partnerships is no more than two. If the number is two, then these two agents are in a partnership. Formally, for any agent \(i\in {\mathbf {N}}\), either (i) \(u(a_{i},a_{m_{i}})\ge \max _{j\in N(i)}u(a_{j},a_{m_{j}})\) or (ii) \(u(a_{i},a_{m_{i}})<\max _{k\in N(i)}u(a_{k},a_{m_{k}})=u(a_{j_{0}},a_{m_{j_{0}}})\) for some neighbor \(j_{0}\) with \(a_{i}=a_{j_{0}}\). There exists no agent, only one agent \(i_{0}\) or two agents \(i_{0}\) and \(m_{i_{0}}\) satisfying condition (ii). Note that agents play a \(2\times 2\) coordination game G with partners. When there are three or more agents satisfying condition (ii), two of them choose the same action, and in the next period, with positive probability, they will be matched to each other and will have no incentive to end the present partnership between them. This behavior implies that the number of agents satisfying condition (ii) can be reduced strictly. Accordingly, the proof of Part \({\mathbf {I}}\) is divided into three cases: no agent, only one agent and two agents satisfying condition (ii). We only focus on the first two cases. The last case can be analyzed similarly.

\(\mathbf {Case}\)\(\mathbf {1}\): \(\not \exists i_{0}\) satisfying condition (ii).

This nonexistence means that for any \(i\in {\mathbf {N}}\), \(u(a_{i},a_{m_{i}})\ge \max _{j\in N(i)}u(a_{j},a_{m_{j}})\). As a result, for any \(i,j\in {\mathbf {N}}\), if \(g_{ij}=1\), \(a_{i}=a_{j}\), and \(a_{m_{i}}=a_{m_{j}}\). This result implies that for each component, all members choose the same action, and their partners also choose the same action. Therefore, there is no polymorphic-action component. It is straightforward to conclude that for any component \({\mathbf {c}}\), \({\mathbf {I}}_{A}(s,{\mathbf {c}})={\mathbf {I}}_{B}(s,{\mathbf {c}})=\emptyset \) and \({\mathbf {P}}_{A}(s,{\mathbf {c}})={\mathbf {P}}_{B}(s,{\mathbf {c}})=\emptyset \).

\(\mathbf {Case}\)\(\mathbf {2}\): \(\exists \) only one agent \(i_{0}\) satisfying condition (ii).

Assume that \(i_{0}\) is from the component \({\mathbf {c}}^{'}\). Note that for any \(a,a^{'}\in \{A,B\}\), when \(a\ne a^{'}\), \(u(a,a^{'})<u(a,a)\) holds. It implies that \(a_{i_{0}}\ne a_{m_{i_{0}}}\). The uniqueness of \(i_{0}\) implies that there exists at most one component \({\mathbf {c}}_{AB}\) such that both actions are played by its members.

The component \({\mathbf {c}}^{'}\) is \({\mathbf {c}}_{AB}\) when \({\mathbf {c}}_{AB}\) exists. Note that in any other component, all members play the same action, and their partners also play the same action. Otherwise, there is at least one more agent satisfying condition (ii). As a result, it must be true that \({\mathbf {c}}_{AB}\) coincides with \({\mathbf {c}}^{'}\). Furthermore, for \({\mathbf {c}}^{'}\), \({\mathbf {I}}_{A}(s,{\mathbf {c}}^{'})={\mathbf {I}}_{B}(s,{\mathbf {c}}^{'})=\emptyset \), \({\mathbf {P}}_{a_{i_{0}}}(s, {\mathbf {c}}^{'})=\{i_{0}\}\) and \({\mathbf {P}}_{a_{m_{i_{0}}}}(s, {\mathbf {c}}^{'})=\emptyset \).

Similarly, when \({\mathbf {c}}_{AB}\) does not exist, then for each component, all members choose the same action. Furthermore,

\(\mathbf {Part}\)\(\mathbf {II}\): Convergence of the unperturbed dynamics.

Let \(s(t)=\big ({\mathbf {a}}(t),m(t)\big )\) be the state in period t. For any \(s\in \varOmega \), let \({\mathbf {N}}_{AA}(s)\) be the set of A-players who are matched to A-players. Note that if \(i\in {\mathbf {N}}_{AA}(s)\), then \(m(i)\in {\mathbf {N}}_{AA}(s)\). According to whether \({\mathbf {N}}_{AA}(s(0))\) is empty or not, the proof is divided into two cases.

\(\mathbf {Case}\)\(\mathbf {1}\): \({\mathbf {N}}_{AA}(s(0))\ne \emptyset \).

Note that A is the Pareto-efficient action. In period 0, any agent \(i\in N({\mathbf {N}}_{AA}(s(0)))\) performs strictly worse than some neighbor in \({\mathbf {N}}_{AA}(s(0))\). In period 1, with strictly positive probability, all agents in \(N({\mathbf {N}}_{AA}(s(0)))\) play A, and they are matched into \(\lfloor \frac{|N({\mathbf {N}}_{AA}(s(0)))|}{2}\rfloor \) pairs. The remaining agent is matched to a B-player when \(|N({\mathbf {N}}_{AA}(s(0)))|\) is odd. Furthermore, all agents in \({\mathbf {I}}_{A}(s(0),{\mathbf {c}}){\setminus } N({\mathbf {N}}_{AA}(s(0)))\) switch from A to B for any \({\mathbf {c}}\in {\mathbf {C}}\). It is straightforward to see that \({\mathbf {N}}_{AA}(s(0))\subseteq {\mathbf {N}}_{AA}(s(1))\) and when \(t\ge 1\), \({\mathbf {I}}_{A}(s(t),{\mathbf {c}})=\emptyset \) for any \({\mathbf {c}}\in {\mathbf {C}}\).

Following the same logic, in some period \(t_{1}\ge 1\), \(\{S(t)\}_{t\in {\mathbb {N}}}\) arrives at a state \(s(t_{1})\) where \({\mathbf {N}}_{AA}(s(t_{1}))={\mathbf {N}}_{AA}(s(t_{1}-1))\). The state \(s(t_{1})\) is a limit state. When the number of A-players in \(s(t_{1})\) is even, all A-players constitute \({\mathbf {N}}_{AA}(s(t_{1}))\); otherwise, at least one A-player is motivated to switch to B, which yields a contradiction. The coincidence of \({\mathbf {N}}_{AA}(s(t_{1}))\) and the set of A-players and the equation \({\mathbf {N}}_{AA}(s(t_{1}))={\mathbf {N}}_{AA}(s(t_{1}-1))\) together imply that each agent plays the same action as her partner; for each B-player, all neighbors play action B. That is, in each component, all members play the same action, and for any \(i,j\in {\mathbf {N}}\), if \(g_{ij}=1\), \(u(a_{i}(t_{1}),a_{m_{i}(t_{1})}(t_{1}))= u(a_{j}(t_{1}),a_{m_{j}(t_{1})}(t_{1}))\). Thus, \(s(t_{1})\) is a limit state. When the number of A-players in \(s(t_{1})\) is odd, all A-players except one, denoted by \(i_{0}\), constitute \({\mathbf {N}}_{AA}(s(t_{1}))\), and \(i_{0}\in N({\mathbf {N}}_{AA}(s(t_{1})))\). The equation \({\mathbf {N}}_{AA}(s(t_{1}))={\mathbf {N}}_{AA}(s(t_{1}-1))\) implies that except \(i_{0}\) and her partner, each agent is matched to an agent playing the same action, and for each B-player, all neighbors except \(i_{0}\) play action B. Therefore, \(u(a_{i}(t_{1}),a_{m_{i}(t_{1})}(t_{1}))\ge \max _{j\in N(i)}u(a_{j}(t_{1}),a_{m_{j}(t_{1})}(t_{1}))\) for any \(i\in {\mathbf {N}}{\setminus }\{i_{0},m_{i_{0}}(t_{1})\}\), while neither \(i_{0}\) nor her partner is motivated to alter action. Therefore, \(s(t_{1})\) is also a limit state.

\(\mathbf {Case}\)\(\mathbf {2}\): \({\mathbf {N}}_{AA}(s(0))=\emptyset \).

In this case, in the initial state, all A-players are matched to B-players and receive a common payoff of zero.

Assume that in the initial state, for each A-player, all neighbors play action A. In any component, all members play the same action, and all A-players are matched to B-players. If there are two or more B-players such that the partner of each is an A-player and one neighbor is matched to a B-player, each of these B-players is motivated to exit from the present partnership. In the initial period, with strictly positive probability, two of these B-players, denoted by \(i_{0}\) and \(j_{0}\), have the opportunity to update their partnerships. In the next period, with strictly positive probability, only two A-players \(m_{i_{0}}(0)\) and \(m_{j_{0}}(0)\) are matched to each other. Then, we return to Case 1. If there is a unique B-player satisfying that the partner is an A-player and one neighbor is matched to a B-player, then among all agents, only this B-player wants to exit from the partnership, while nobody is motivated to alter action. The initial state therefore is a limit state.

Assume that there exist two or more A-players such that for each of them, one neighbor plays action B. Each of these A-players receives a strictly lower payoff than some neighbor. This lower payoff implies that she is motivated to exit from the present partnership. In the initial period, with strictly positive probability, two of these A-players only have the opportunity to update their partnerships. In the next period, with strictly positive probability, the two A-players are matched to each other. Thus, we return to Case 1.

Assume that in the initial state, there is a unique A-player, denoted by \(i_{1}\), satisfying that one neighbor plays action B. Agent \(i_{1}\) receives a strictly lower payoff than some neighbor. In the next period, with strictly positive probability, \(i_{1}\) switches to action B. For the state s(1), either the condition in one of the above two paragraph holds, or the condition in this paragraph holds. Following the same logic, after a finite number of periods, only the condition in one of the above two paragraph holds. \(\square \)

Proof of Theorem 2

Assume that there is a limit state \(s=((a_{1}, \ldots ,a_{n}),m)\) such that both actions coexist in a widely connected component \({\mathbf {c}}=\big ({\mathbf {N}}^{'},(g_{ij}) _{i,j\in {\mathbf {N}}^{'}}\big )\). Let \({\mathbf {N}}_{a}(s)\) denote the set of all a-players for any \(a\in \{A,B\}\). Theorem 1 shows that \({\mathbf {P}}_{A}(s,{\mathbf {c}})\) is nonempty. Furthermore, the discussion Theorem 1 implies that there exist at least two A-players in \({\mathbf {c}}\); that is, \(|{\mathbf {N}}^{'}\cap {\mathbf {N}}_{A}(s)|\ge 2\).

Let \(\mathbf {B}(s,{\mathbf {c}})\) denote the set of A-players in \({\mathbf {c}}\) who have neighbors playing B. Formally,

It is straightforward to see that

Note that \(|{\mathbf {N}}^{'}\cap {\mathbf {N}}_{A}(s)|\ge 2\). The wide connectedness of \({\mathbf {c}}\) shows that \(|\mathbf {B}(s,{\mathbf {c}})|=|N({\mathbf {N}}^{'}{\setminus }({\mathbf {N}}^{'}\cap {\mathbf {N}}_{A}(s)))|\ge 2\). In addition, following from the fact that s is a limit state, \({\mathbf {P}}_{A}(s,{\mathbf {c}})=\mathbf {B}(s,{\mathbf {c}}_{AB})\) holds. Theorem 1 shows that \({\mathbf {P}}_{A}(s,{\mathbf {c}})\) is a singleton, which yields a contradiction. \(\square \)

Proof of Lemma 1

Assume that the initial state is \(\displaystyle \big ((B,B, \ldots ,B),m\big )\in \varOmega _{B}\). In the initial period, only agents \(i_{0}\) and \(m_{i_{0}}\) receive the opportunity of action revision and switch from B to A by mistake. No one is provided with the opportunity to update her partnership.

In the next period, both agents \(i_{0}\) and \(m_{i_{0}}\) obtain the highest possible payoff of 1 from the interaction. In the following periods, each of these two agents has no incentive to either switch to B or end the partnership between them.

Following the same logic as in the Proof of Theorem 1, after a finite number of periods, with probability one, \(\{S^{\epsilon }(t)\}_{t\in {\mathbb {N}}}\) will arrive at a limit state in \(\varOmega _{A}\). \(\square \)

To facilitate the Proof of Lemma 2, we introduce the following notation. The distance between any two matchings m and \(m^{'}\) is defined as the number of partnerships between different pairs of agents. Formally,

It is straightforward to see that two matchings m and \(m^{'}\) are identical if and only if \(d(m,m^{'})=0\), and for any two distinct matchings m and \(m^{'}\), \(d(m,m^{'})\ge 2\).

Proof of Lemma 2

We only focus on the case that \(\lambda \le 1\). The case that \(\lambda >1\) can be analyzed in a similar manner. Let s denote \(((a,a, \ldots ,a),m)\), and let \(s^{'}\) denote \(((a,a, \ldots ,a),m^{'})\). Assume that the initial state is s.

Let \({\mathbf {N}}_{p}(m,m^{'})\) be the set of agents whose partners are different in m and \(m^{'}\). Formally, \({\mathbf {N}}_{p}(m,m^{'})\doteq \{i\in {\mathbf {N}}:m_{i}\ne m^{'}_{i}\}\). It is straightforward to see that \(|{\mathbf {N}}_{p}(m,m^{'})|=2\times d(m,m^{'})\).

In the initial period, no one receives the opportunity of action revision, and only \(i_{0}\) and \(m^{'}_{i_{0}}\), \(i_{0}\in {\mathbf {N}}_{p}(m,m^{'})\), receive the opportunity of partnership updating. By two partnership mutations, \(i_{0}\) and \(m^{'}_{i_{0}}\) end their partnerships in m.

Following the unperturbed behavior rule, in the next period, with strictly positive probability, \(i_{0}\) and \(m^{'}_{i_{0}}\) form a partnership, and \(m_{i_{0}}\) and \(m_{m^{'}_{i_{0}}}\) form a partnership. Therefore, another limit state \(((a,a, \ldots ,a),\tilde{m})\in \varOmega ^{a}\) is arrived at where (1) \(\tilde{m}_{i}=m_{i}\) for any \(i\in {\mathbf {N}}{\setminus } \{i_{0},m_{i_{0}},m^{'}_{i_{0}},m_{m^{'}_{i_{0}}}\}\) and (2) \(\tilde{m}_{i_{0}}=m^{'}_{i_{0}}\) and \(\tilde{m}_{m_{i_{0}}}=m_{m^{'}_{i_{0}}}\). Furthermore, for the matching \(\tilde{m}\),

Following the same logic, the sequence \((s_{0}, \ldots ,s_{\ell })\) can be obtained. \(\square \)

Rights and permissions

About this article

Cite this article

Cui, Z. Matching, Imitation, and Coordination in Networks. Dyn Games Appl 9, 47–67 (2019). https://doi.org/10.1007/s13235-018-0243-0

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13235-018-0243-0