Abstract

We develop a two-stage, two-location model to investigate long-run technology choice with endogenous capacity constraints. Rational managers determine the maximum capacities (and mobility constraints). Then, boundedly rational agents play a coordination game with the possibility to migrate. We consider two alternative strategy sets and two different objective functions for the managers and show that they affect the long-run technology choice in a non-trivial way. If the managers only care about efficiency in their respective locations, either coexistence of conventions or global coordination on the risk-dominant equilibrium will be selected, depending on the (effective) capacities of both locations. If they are concerned with scale and can choose mobility constraints, global coordination on the risk-dominant equilibrium without mobility will be selected in the long run. We then change the basic interaction to an \(n\times n\) pure coordination game where mis-coordination results in zero payoff, and show that, regardless of the constraint choices, all the agents will coordinate on the most efficient equilibrium.



1 Introduction

Stochastic learning models provide a rich framework for studying the evolution of human behavior in socioeconomic contexts and hence offer “evolutionary” foundations for the selection of Nash equilibrium. In most of the studies in this field, agents are assumed to be homogeneous and boundedly rational. With this assumption, these studies mainly focus on manipulating the stage game, agents’ interaction structure, the information agents can observe, and the strategy-updating rule, in order to see their effects on behavioral evolution in the long run. Although, in reality, agents are very likely to be heterogeneous (e.g., people may use different behavioral rules, or have different rationality levels), this situation has not been sufficiently explored in the literature on stochastic learning. The purpose of this paper is to model the heterogeneity of players’ rationality levels and to show its crucial effect on strategic interactions in the long run, within the specific framework called “location models.”

Our model consists of two stages and two locations. In stage 1, the rational manager in each location chooses the maximum capacity to optimize a certain objective function, which is related to the payoffs of the agents in his location. Stage 2 then consists of a learning dynamics. In every period, the boundedly rational agents in each location repeatedly play a \(2 \times 2\) coordination game, in which one strategy is more efficient, while the other is safer. Across periods, the agents adjust their choices using a certain behavioral rule, with the opportunity to relocate.

The principal feature of this model is that it involves players with different rationality levels. The rational managers play a one-shot game first, followed by the stochastic learning dynamics of the boundedly rational agents. Although players with different rationality levels do not interact directly, the decisions of the managers in stage 1 will have a non-trivial effect on the strategic choices in the coordination game in the long run. Within this framework, we would like to answer the following questions: What are the capacity choices of the managers? What are the choices of the agents in different locations in the long run?

We present two examples related to the model outlined above. Consider two investors, each of whom plans to build an industrial park for firms that specialize in software development. To do this, each investor has to choose the maximum capacity of his park. Suppose that, for each firm, there are two different development platforms available: one is more efficient, and the other is more compatible. Suppose also that firms are so small that it is beneficial for each of them to use only one platform at a time and to run joint development programs with the others in the same industrial park. Coordination issues then arise when two firms are engaged in a joint program: the payoffs for both firms are higher when they use the same platform than when they use different ones. To obtain higher payoffs, firms can change their platforms and locations across periods. Assume that the profit of the investor of each industrial park is related to the payoffs of the firms within his park in a certain way (e.g., each investor charges a fixed percentage of the payoffs of the firms located in his park). Hence, the investors care about the coordination among the firms and would like to influence the firms’ platform choices within their respective parks by choosing the capacity constraint at the very beginning.Footnote 1

A second example is the interaction between firms’ hiring policies and employees’ choices of unobservable effort levels. Suppose that two firms are recruiting employees in the labor market, and each of them must first decide how many employees to hire. The employees can choose between two effort levels (high and low), which are unobservable. Both firms use the same joint-production technology as described in Khan (2014). That is, the employees have to work in pairs, and the output level of a pair is determined by the minimum effort. The wage of each employee hinges on the output level. Once wages are adjusted for effort levels, this situation can be captured by a coordination game. Coordinating on high effort is preferred to coordinating on low effort. In case of mis-coordination, the payoff of the employee with low effort is higher than that of the employee with high effort. To get higher payoffs, employees can change their effort levels or firms. Assume that the profit of each firm depends on how employees coordinate with their colleagues. Since the managers cannot observe the employees’ effort levels, they cannot simply require them to work with high effort. Hence, to maximize profits, the managers would like to use capacity choices to influence the coordination among the employees.

In either case, we are interested in investigating (i) how the long-run consequences of the learning dynamics influence the rational players’ optimal choices, given that they have access to all the details of the learning dynamics, and, in turn, (ii) how the rational players’ one-shot choices ultimately shape the behavioral pattern of the boundedly rational players in the long run. In doing so, we study the evolution of social behavior in a more realistic situation, where players with heterogeneous rationality levels interact. We illustrate, within our framework, that the interaction among heterogeneous players indeed has a crucial effect on the strategic choices of both the rational players and the boundedly rational ones.

We first consider the case where both managers care about the average of the agents’ payoffs in their respective locations. We identify two classes of Nash equilibria (NE) in the managers’ game. In one class, both managers choose relatively small capacities, which leads to global coordination on the risk-dominant equilibrium in the long run. In the other class, one location is relatively large and the other is relatively small, which leads the agents in the small location to coordinate on the Pareto-efficient equilibrium and those in the large one to coordinate on the risk-dominant equilibrium (coexistence of conventions).

We then extend the basic model in three directions. First, we consider the case where the managers are concerned with the total payoffs of the agents in their respective locations. We find that, in this case, for \(N\) large enough, there is only one candidate NE. Second, we allow the managers to also restrict the mobility of the agents in their locations. We show that, if the managers are only concerned with efficiency, the result is similar to the case where the managers can only choose capacity constraints. However, if they care about scale, they restrict mobility so as to forbid migration completely, which leads agents in both locations to coordinate on the risk-dominant equilibrium. Third, we change the basic interaction to a pure coordination game with more than two strategies. We show that, when mis-coordination leads to zero payoffs, all the agents will coordinate on the most efficient equilibrium in the long run. Focusing on the case where the managers are concerned with scale, we find that, if they can choose capacity constraints only, each of them will set a capacity large enough to accommodate all the agents; if they can also choose mobility constraints, no agents will be allowed to move out.

A series of studies in the literature on stochastic learning are related to this paper. In our model, the basic interactions in the learning dynamics are coordination games. Hence, our model is related to the studies which apply evolutionary models to select equilibrium in coordination games (see, for example, Kandori et al. 1993; Ellison 1993; Robson and Vega-Redondo 1996; Alós-Ferrer and Weidenholzer 2007, 2008; Alós-Ferrer 2008; Alós-Ferrer and Netzer 2014). Our model differs from those studies in that we introduce strategic interaction between rational managers, which has an important impact on equilibrium selection.

Our model involves two different locations, and boundedly rational agents are allowed to change their locations. Hence, it encompasses the multiple location models of Ely (2002) and Anwar (2002).Footnote 2 In Ely (2002), it is assumed that each location is large enough to accommodate all the agents, and there is no mobility constraint. He shows that, in this case, all the agents will agglomerate in one location and coordinate on the Pareto-efficient equilibrium. Anwar (2002) considers the case where both locations have the same restrictive capacity and mobility constraints. He finds that, if the effective capacity of both locations is small, the risk-dominant equilibrium will prevail globally; if the effective capacity of both locations is large, agents in different locations will coordinate on different equilibria. Instead of taking capacity and mobility constraints as exogenous parameters, we allow the managers of both locations to choose these constraints. We find that the optimal choices of the managers do not include the policy arrangements in Ely (2002) and Anwar (2002). This paper is also related to Ania and Wagener (2009). Both of the models share the common feature that there are multiple locations, and each of them has a manager. Further, agents will react to the policies and choose locations if possible. However, the major difference is that, in their study, the managers are not rational. Instead of making a one-shot decision, they use an imitate-the-best rule to change their policies every period.

In this paper, players with different rationality levels interact, and hence, it is related to several studies involving heterogeneous players. Alós-Ferrer et al. (2010) develop a two-stage model where rational platform designers attempt to maximize the profits of their respective platforms, and the boundedly rational traders choose among the platforms by imitating the most successful behavior in the previous period. Similar to our model, rational players make a one-shot decision first, and boundedly rational players are involved in a learning dynamics afterward. Unlike our model, though, the basic interaction in the learning dynamics is Cournot competition rather than a coordination game. Juang (2002) considers the case where players use different behavioral rules to update their strategies in an evolutionary model of coordination games. Gerber and Bettzüge (2007) investigate the evolutionary choice of asset markets when boundedly rational traders have idiosyncratic preferences for the markets. Alós-Ferrer and Shi (2012) explore the effect of asymmetric memory length in the population on the long-run consequences of stochastic learning. Although these three studies also involve heterogeneous players, our model differs from them in that the different types of players in our setup play sequentially.

The remainder of the paper is organized as follows. Section 2 develops a simple two-stage model. Using this model, we analyze the long-run outcome of the learning dynamics and investigate the strategic capacity choices of the managers. Section 3 discusses three extensions. In the first one, we suppose managers are concerned with the scales of their respective locations. In the second one, we allow managers to also choose mobility constraints. In the last one, we consider a general \(n\times n\) pure coordination game with more than two strategies. Section 4 briefly concludes. All the proofs are relegated to the Appendix.

2 The two-stage model

In this section, we develop a simple two-stage model, where rational managers choose capacity constraints and boundedly rational agents are involved in a learning dynamics of coordination games. Then, we use this model to analyze the long-run behavior of the agents and the strategic choices of the managers.

2.1 The setting

2.1.1 Locations

A total of \(2N\) agents are distributed in two different locations \(k \in \{1,2\}\), initially with \(N\) agents in each location. That is, the two locations are identical in the very beginning. Each location \(k\) has a rational manager, referred to as manager \(k\).

2.1.2 Managers

The model involves two stages. Stage 1 consists of a static game between the two rational managers. The managers can neither relocate nor interact with the agents. Instead, to optimize a certain objective function, manager \(k\) can choose a capacity constraint \(c_k \in [1,2]\) such that \(\lfloor c_k N \rfloor \) determines the maximum capacity of location \(k\).Footnote 3 Since the two locations compose the whole world in this model, we assume that each location can accommodate at least \(N\) agents (\(c_k \ge 1\) for both \(k=1,2\)) to avoid the situation where there is no place for some agents to stay (i.e., \(c_1+c_2<2\)). Hence, the capacity of location \(k\) can be represented by \(M_k \equiv \lfloor c_kN \rfloor \), and the minimum number of players in location \(k\) is \(m_k \equiv 2N-M_\ell \), for \(k,\ell \in \{1,2\}, k \ne \ell \).

2.1.3 Learning dynamics of agents

Stage 2 of the model consists of a discrete-time learning dynamics, given the capacity constraints determined by the managers in both locations. It is related to Anwar (2002) and includes Ely (2002) as a particular case.

Basic coordination game Time is discrete, i.e., \(t=1,2,\ldots \). In each period \(t\), agents within each location play a pure coordination game (Table 1) with each other (i.e., a round-robin tournament), where \(e,r,g,h >0\), \(e>r>h\), \(r>g\), and \(h+r>e+g\).Footnote 4 Here, a \(P\)-player earns \(e\) against \(P\) and \(g\) against \(R\), while an \(R\)-player earns \(h\) against \(P\) and \(r\) against \(R\). Hence, \((P,P)\) is the Pareto-efficient equilibrium and \((R,R)\) is the risk-dominant equilibrium. We focus on pure coordination games to capture the fact that if two agents use different technologies, the incompatibility leads their respective payoffs to be lower than what they could obtain by both using the less efficient technology.Footnote 5 Let \(\varPi :S \times S \rightarrow \mathbb {R}^+\) be the payoff function of the game, where \(S=\{P,R\}\) is the strategy set of each player. \(\varPi (s_i,s_j)\) denotes the payoff of playing \(s_i\) against \(s_j\). We denote by \(q^*\) the probability of playing \(P\) in the mixed-strategy Nash equilibrium, i.e., \(q^*=(r-g)/(e-g-h+r)\). Since \((R,R)\) is risk-dominant, \(q^*\) is strictly larger than \(1/2\). To facilitate the discussion later, we also define \(\hat{q}\) through the equality \(\varPi (P,(\hat{q},1-\hat{q}))=r\), which yields \(\hat{q}=(r-g)/(e-g)\). That is, \(\hat{q}\) is the probability of playing \(P\) in a mixed strategy such that playing \(P\) against it gives the same payoff as in the risk-dominant equilibrium. Since every agent repeatedly plays the coordination game with all the others in one period, we assume that each agent’s payoff in one period is the average payoff of all his interactions.
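
The two thresholds can be checked numerically. The sketch below assumes the reading of Table 1 implied by the formulas for \(q^*\) and \(\hat{q}\), namely \(\varPi(P,P)=e\), \(\varPi(P,R)=g\), \(\varPi(R,P)=h\) and \(\varPi(R,R)=r\); the payoff values \(e=5\), \(r=4\), \(g=1\), \(h=3\) and the function names are hypothetical, chosen only to satisfy the stated restrictions. Exact fractions avoid rounding in the indifference checks.

```python
from fractions import Fraction


def payoff(s_i, s_j, e, r, g, h):
    """Payoff of s_i against s_j, assuming Pi(P,P)=e, Pi(P,R)=g, Pi(R,P)=h, Pi(R,R)=r."""
    table = {("P", "P"): e, ("P", "R"): g, ("R", "P"): h, ("R", "R"): r}
    return table[(s_i, s_j)]


def expected_payoff(s, q, e, r, g, h):
    """Payoff of pure strategy s against the mixed strategy playing P with probability q."""
    return q * payoff(s, "P", e, r, g, h) + (1 - q) * payoff(s, "R", e, r, g, h)


def thresholds(e, r, g, h):
    """Return (q_star, q_hat) after checking the parameter restrictions of the game."""
    assert min(e, r, g, h) > 0 and e > r > h and r > g and h + r > e + g
    q_star = Fraction(r - g, e - g - h + r)
    q_hat = Fraction(r - g, e - g)
    return q_star, q_hat


# Hypothetical payoffs, chosen only to satisfy e > r > h, r > g, h + r > e + g.
E, R, G, H = 5, 4, 1, 3
q_star, q_hat = thresholds(E, R, G, H)
print(q_star, q_hat)  # 3/5 3/4
```

With these values, \(q^*=3/5>1/2\) and \(\hat{q}=3/4\): against a population playing \(P\) with probability \(q^*\), both strategies earn \(17/5\), and \(P\) earns exactly \(r\) when the \(P\)-share equals \(\hat{q}\).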

Behavioral rule In every period, agents can adjust their strategies in the coordination game. Further, each agent independently receives an opportunity to relocate with the same positive probability. We assume that agents update their behavior based on myopic best reply to the state. That is, each agent will choose a strategy and a location (if possible) that is a best reply to the strategy profile in the last period.

Formally, let \(q^t_k\) be the proportion of \(P\)-players in location \(k \in \{1,2\}\) in period \(t\). We assume that, in each period \(t+1\), every player observes \(q^t_k\) for both \(k=1,2\), and anticipates the payoff of playing \(s \in \{P,R\}\) in location \(k\), \(\bar{\varPi }^{t+1} (s, k)\), based on the strategy distribution of players in location \(k\) in period \(t\); that is,

\[
\bar{\varPi }^{t+1}(s,k)=q^t_k\,\varPi (s,P)+\bigl(1-q^t_k\bigr)\,\varPi (s,R).
\]

Then, each player will choose a strategy and a location (when such an opportunity arises) that maximizes \(\bar{\varPi }^{t+1} (s, k)\), given the capacity constraints. Specifically, an immobile agent will choose a strategy \(s^{t+1}_i\in \{P,R\}\) in period \(t+1\) given his current location, such that

\[
s^{t+1}_i\in \arg \max _{s\in \{P,R\}}\bar{\varPi }^{t+1}\bigl(s,k^t_i\bigr),
\]

where \(k^t_i\) denotes agent \(i\)’s location in period \(t\). A mobile agent will choose a strategy \(s^{t+1}_i\) and a location \(k^{t+1}_i\), such that

\[
\bigl(s^{t+1}_i,k^{t+1}_i\bigr)\in \arg \max _{(s,k)\in \{P,R\}\times \{1,2\}}\bar{\varPi }^{t+1}(s,k).
\]

If several choices give a player the maximum payoff, he will play each of them with a positive probability. Relocation is restricted by the capacity constraints of the two locations. If the number of agents who attempt to move to location \(k\) is more than the number of vacancies, agents will be randomly chosen from the set of candidates to fill all the vacancies. Those who are not allowed to move out will behave as immobile agents and play the myopic best reply to the state given their current locations.
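
The behavioral rule can be sketched as follows. This is a minimal illustration, not the authors’ implementation: it assumes the Table 1 reading \(\varPi(P,P)=e\), \(\varPi(P,R)=g\), \(\varPi(R,P)=h\), \(\varPi(R,R)=r\) with hypothetical values, the function names are invented for this example, ties are returned as the full list of maximizers (from which the agent would randomize), and the random rationing of would-be movers is left out.

```python
from fractions import Fraction

# Hypothetical payoffs, labelled as Pi(P,P)=e, Pi(P,R)=g, Pi(R,P)=h, Pi(R,R)=r.
PAYOFF = {("P", "P"): 5, ("P", "R"): 1, ("R", "P"): 3, ("R", "R"): 4}


def anticipated_payoff(s, q_k):
    """Anticipated payoff of strategy s in a location whose last-period P-share is q_k."""
    return q_k * PAYOFF[(s, "P")] + (1 - q_k) * PAYOFF[(s, "R")]


def best_replies(q, current_location, mobile):
    """All payoff-maximizing (strategy, location) pairs for one agent.

    q maps each location to its P-share in period t (the agent's own strategy included).
    An immobile agent only chooses a strategy at his current location; rationing of
    agents who want to move into a full location is not modelled here.
    """
    locations = list(q) if mobile else [current_location]
    options = [(s, k) for k in locations for s in ("P", "R")]
    best = max(anticipated_payoff(s, q[k]) for s, k in options)
    return [(s, k) for s, k in options if anticipated_payoff(s, q[k]) == best]


# State Omega(PR): location 1 is all-P, location 2 is all-R.
q = {1: Fraction(1), 2: Fraction(0)}
print(best_replies(q, current_location=2, mobile=True))   # [('P', 1)]
print(best_replies(q, current_location=2, mobile=False))  # [('R', 2)]
```

In \(\varOmega(PR)\) the preferred move to location 1 is blocked because location 1 is already at its capacity \(M_1\), so the agent falls back on the immobile reply \(R\) in location 2; this is why the state is absorbing.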

Remark 1

When computing the best response to the state in the other location, this approach is equivalent to the standard myopic best reply; that is, a player will choose a strategy to maximize his payoff, given the strategies played by all the remaining players in the last period. However, when computing the best response to the state in the current location, this approach differs from the standard myopic best reply in that the player counts his own last-period strategy among those of his opponents. For a large population, this corresponds to the large-population best response of Oechssler (1997). That is, if the population is large enough, it is innocuous for an agent to include himself in the strategy profile of the opponents when he computes his payoff, since the strategy of one player has almost no effect on the strategy distribution of a large population. For a small population, though, this kind of behavior can differ from the standard myopic best reply, as the agent ignores the fact that changing his strategy also changes the population proportions at his location. In particular, when there is only one player in a location, he will behave as if he could play with himself (i.e., self-matching).Footnote 6
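
To make the contrast in Remark 1 concrete, the sketch below computes the \(P\)-share an agent reacts to under the rule above (own last-period strategy included) and under the standard myopic best reply (own strategy excluded). The threshold \(q^*=3/5\) is a hypothetical value, the helper names are invented, and an agent is taken to play \(P\) exactly when the relevant share strictly exceeds \(q^*\) (the tie case is ignored for brevity).

```python
from fractions import Fraction

Q_STAR = Fraction(3, 5)  # hypothetical mixed-equilibrium threshold q*


def share_including_self(n_agents, n_p_players):
    """P-share used by the rule in the text: the agent counts himself as an opponent."""
    return Fraction(n_p_players, n_agents)


def share_excluding_self(n_agents, n_p_players, plays_p):
    """P-share among the other n_agents - 1 agents (standard myopic best reply)."""
    others_p = n_p_players - (1 if plays_p else 0)
    return Fraction(others_p, n_agents - 1)


def reply(share):
    """Play P if the anticipated P-share strictly exceeds q* (ties ignored here)."""
    return "P" if share > Q_STAR else "R"


# Three agents, two of whom played P last period: look at one of the P-players.
print(reply(share_including_self(3, 2)), reply(share_excluding_self(3, 2, plays_p=True)))  # P R
```

In the small location the two rules disagree (\(2/3>q^*\) but \(1/2<q^*\)), whereas with 1000 agents of whom 700 play \(P\) both shares exceed \(q^*\) and the replies coincide, as the remark anticipates.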

2.2 The long-run behavior of agents

This subsection analyzes the learning dynamics in stage 2. We first identify the absorbing sets of the unperturbed learning dynamics. From these candidates, we single out the long-run equilibria (LRE) given the capacity constraints in stage 1.

2.2.1 The absorbing sets

Let \(\omega =(v_1,v_2,n_1)\) represent a state of the dynamics described above, where \(v_k\) denotes the number of \(P\)-players in location \(k\), and \(n_k\) denotes the total number of players in location \(k\) for \(k=1,2\), with \(n_2=2N-n_1\). The state space is given by

\[
\varOmega =\bigl\{(v_1,v_2,n_1)\ \big|\ n_1\in \{m_1,\ldots ,M_1\},\ v_1\in \{0,\ldots ,n_1\},\ v_2\in \{0,\ldots ,2N-n_1\}\bigr\}.
\]

Denote

\[
q_k=\begin{cases} v_k/n_k, & \text{if } n_k>0,\\ 0, & \text{if } n_k=0,\end{cases}\qquad k=1,2.
\]

We can equivalently rewrite a state as \(\omega =(q_1,q_2,n_1)\). The stochastic dynamics above gives rise to a Markov process, whose transition matrix is given by \(P=[P(\omega , \omega ')]_{\omega ,\omega ' \in \varOmega }\). An absorbing set is a minimal subset of states such that, once the process reaches it, it never leaves.
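
For small populations the state space can be enumerated directly. The sketch below assumes that \(\varOmega\) consists of all triples \((v_1,v_2,n_1)\) with \(m_1\le n_1\le M_1\), \(0\le v_1\le n_1\) and \(0\le v_2\le 2N-n_1\), and that \(q_k=0\) for an empty location (the convention used in the absorbing sets of Lemma 1); the helper names are hypothetical. Capacity multipliers are converted to exact fractions so that \(\lfloor c_kN\rfloor\) is not distorted by floating-point rounding.

```python
from fractions import Fraction
from math import floor


def capacities(n, c1, c2):
    """Return (M1, M2, m1, m2) for 2n agents and capacity multipliers c1, c2 in [1, 2]."""
    big_m1, big_m2 = floor(Fraction(c1) * n), floor(Fraction(c2) * n)
    return big_m1, big_m2, 2 * n - big_m2, 2 * n - big_m1


def state_space(n, c1, c2):
    """All states (v1, v2, n1): P-player counts per location and the size of location 1."""
    big_m1, _, small_m1, _ = capacities(n, c1, c2)
    return [(v1, v2, n1)
            for n1 in range(small_m1, big_m1 + 1)
            for v1 in range(n1 + 1)
            for v2 in range(2 * n - n1 + 1)]


def as_proportions(state, n):
    """Rewrite (v1, v2, n1) as (q1, q2, n1), using q_k = 0 for an empty location."""
    v1, v2, n1 = state
    n2 = 2 * n - n1
    q1 = Fraction(v1, n1) if n1 > 0 else Fraction(0)
    q2 = Fraction(v2, n2) if n2 > 0 else Fraction(0)
    return q1, q2, n1


print(capacities(2, Fraction(3, 2), Fraction(3, 2)), len(state_space(2, Fraction(3, 2), Fraction(3, 2))))
```

For \(N=2\) and \(c_1=c_2=3/2\), this gives \(M_1=M_2=3\), \(m_1=m_2=1\) and 25 states; the state \((3,0,3)\) becomes \((1,0,3)\), the single element of \(\varOmega(PR)\).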

Lemma 1

The absorbing sets of the unperturbed process above depend on \(c_k\) for \(k=1,2\) as follows.

(a) If \(c_k<2\) for both \(k=1,2\), there are four absorbing sets: \(\varOmega (PR)\), \(\varOmega (RP)\), \(\varOmega (RR)\) and \(\varOmega (PP)\).

(b) If \(c_1=2\) and \(c_2 < 2\), there are three absorbing sets: \(\varOmega (RO)\), \(\varOmega (PO)\), and \(\varOmega (RP)\). Similarly, if \(c_2=2\) and \(c_1< 2\), the absorbing sets are: \(\varOmega (OR)\), \(\varOmega (OP)\) and \(\varOmega (PR)\).

(c) If \(c_1=c_2=2\), there are four absorbing sets: \(\varOmega (RO)\), \(\varOmega (OR)\), \(\varOmega (PO)\) and \(\varOmega (OP)\).

where \(\varOmega (PR)=\{(1, 0, M_1)\}\), \(\varOmega (RP)=\{(0, 1, m_1)\}\), \(\varOmega (RR)=\{(0,0,n_1)|n_1 \in \{m_1,\ldots ,M_1\} \}\), \(\varOmega (PP)=\{(1,1,n_1)|n_1 \in \{m_1, \ldots , M_1\} \}\), \(\varOmega (RO)=\{(0,0,2N)\}\), \(\varOmega (PO)=\{(1,0,2N)\}\), \(\varOmega (OR)=\{(0,0,0)\}\), and \(\varOmega (OP)=\{(0,1,0)\}\).
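
The case distinction in Lemma 1 can be encoded as a lookup. This sketch merely restates the lemma (it does not verify that the sets are absorbing); states are written as \((q_1,q_2,n_1)\) with \(q_k=0\) for an empty location, matching the definitions above, and the function name is hypothetical.

```python
from fractions import Fraction
from math import floor


def absorbing_sets(n, c1, c2):
    """Absorbing sets of Lemma 1 as sets of states (q1, q2, n1), for 2n agents."""
    c1, c2 = Fraction(c1), Fraction(c2)
    big_m1, big_m2 = floor(c1 * n), floor(c2 * n)
    small_m1 = 2 * n - big_m2               # minimum number of agents in location 1
    sizes = range(small_m1, big_m1 + 1)
    candidates = {
        "PR": {(1, 0, big_m1)}, "RP": {(0, 1, small_m1)},
        "RR": {(0, 0, n1) for n1 in sizes}, "PP": {(1, 1, n1) for n1 in sizes},
        "RO": {(0, 0, 2 * n)}, "PO": {(1, 0, 2 * n)},
        "OR": {(0, 0, 0)}, "OP": {(0, 1, 0)},
    }
    if c1 < 2 and c2 < 2:                    # case (a)
        labels = ["PR", "RP", "RR", "PP"]
    elif c1 == 2 and c2 < 2:                 # case (b), location 1 unconstrained
        labels = ["RO", "PO", "RP"]
    elif c2 == 2 and c1 < 2:                 # case (b), location 2 unconstrained
        labels = ["OR", "OP", "PR"]
    else:                                    # case (c)
        labels = ["RO", "OR", "PO", "OP"]
    return {label: candidates[label] for label in labels}


print(absorbing_sets(2, Fraction(3, 2), Fraction(3, 2)))
```

With \(N=2\) and \(c_1=c_2=3/2\), the coexistence sets are the single states \((1,0,3)\) and \((0,1,1)\), while \(\varOmega(RR)\) and \(\varOmega(PP)\) each contain one state per feasible size \(n_1\in\{1,2,3\}\).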

2.2.2 The equilibrium concept

Following the standard approach in the literature on stochastic learning in games, we introduce mutations in this Markov dynamics. We assume that, in each period, with an independent and identical probability \(\varepsilon \), each agent randomly chooses a strategy and, if possible, a location. The perturbed Markov dynamics is ergodic and hence has a unique invariant distribution \(\mu (\varepsilon )\). We consider small perturbations. It is a well-established result that \(\mu ^{*}=\lim _{\varepsilon \rightarrow 0}\mu (\varepsilon )\) exists and is an invariant distribution of the unperturbed process \(P\). It describes the long-run fraction of time spent in each state when the original dynamics is slightly perturbed and time goes to infinity. The states in its support, \(\{\omega | \mu ^{*}(\omega )>0\}\), are called stochastically stable states or long-run equilibria.
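
The limit \(\mu^*\) can be illustrated numerically on a much simpler chain than the one in this paper: a single location of \(n\) agents who all play the myopic best reply each period (counting themselves, as in the rule above) and then, with probability \(\varepsilon\), mutate to \(P\) or \(R\) with equal probability, in the spirit of Kandori et al. (1993). This toy chain, its parameters and the helper names are assumptions for illustration only, not the two-location model; the sketch computes \(\mu(\varepsilon)\) exactly for a given \(\varepsilon\).

```python
from fractions import Fraction
from math import comb


def transition_matrix(n, q_star, eps):
    """Toy single-location chain: row k = current number of P-players, column j = next number."""
    matrix = []
    for k in range(n + 1):
        share = Fraction(k, n)        # P-share including the agent himself, as in the text
        if share > q_star:
            p = 1 - eps / 2           # all best-reply P; a mutant draws R with probability 1/2
        elif share < q_star:
            p = eps / 2               # all best-reply R; a mutant draws P with probability 1/2
        else:
            p = Fraction(1, 2)        # tie: each agent picks P or R with probability 1/2
        # Agents act independently, so the next number of P-players is Binomial(n, p).
        matrix.append([comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(n + 1)])
    return matrix


def stationary_distribution(matrix):
    """Solve mu = mu * matrix with sum(mu) = 1 by exact Gauss-Jordan elimination."""
    m = len(matrix)
    # Row j: sum_i mu_i * (matrix[i][j] - [i == j]) = 0; the last row becomes sum_i mu_i = 1.
    a = [[matrix[i][j] - (1 if i == j else 0) for i in range(m)] for j in range(m)]
    a[-1] = [Fraction(1)] * m
    b = [Fraction(0)] * (m - 1) + [Fraction(1)]
    for col in range(m):
        piv = next(r for r in range(col, m) if a[r][col] != 0)
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(m):
            if r != col and a[r][col] != 0:
                factor = a[r][col] / a[col][col]
                a[r] = [x - factor * y for x, y in zip(a[r], a[col])]
                b[r] -= factor * b[col]
    return [b[i] / a[i][i] for i in range(m)]


Q_STAR = Fraction(3, 5)   # hypothetical threshold (e.g. e=5, r=4, g=1, h=3)
for eps in (Fraction(1, 10), Fraction(1, 100)):
    mu = stationary_distribution(transition_matrix(10, Q_STAR, eps))
    print(float(eps), float(mu[0]), float(mu[-1]))
```

As \(\varepsilon\) shrinks, the mass on the all-\(R\) state approaches 1: leaving the all-\(R\) state requires 6 simultaneous mutations (a \(P\)-share of \(6/10=q^*\) creates a tie), while leaving the all-\(P\) state requires only 4. This is the standard mutation-counting logic behind stochastic stability; the two-location model adds capacity and migration on top of it.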

For small population size, the analysis of LRE in our model runs into integer problems, which arise because both the number of agents in each location and the number of mutants involved in any considered transition need to be integers. For this reason, the boundaries of the sets in the \((c_1,c_2)\)-space in which different LRE are selected are highly irregular. In the limit, these boundaries become clear-cut, but, for a fixed population size, there is always a small area where long-run outcomes are not clear. However, for large population size, these boundary areas effectively vanish. In order to tackle this difficulty, we introduce the following definition.

Definition 1

\(\tilde{\varOmega }\) is the LRE set for large \(N\) uniformly for the set of conditions \(\{J_z (c_1,c_2)>0\}^Z_{z=1}\) if, for any \(\eta >0\), there exists an integer \(N_\eta \) such that for all \(N>N_\eta \), \(\tilde{\varOmega }\) is the set of LRE for all \((c_1,c_2) \in [1,2]^2\) such that \(\{J_z (c_1,c_2)>\eta \}^Z_{z=1}\).

In words, an LRE set for large population size is such that, given “ideal” boundaries described by certain inequalities (which will be specified in each of our results below), for every point off these boundaries, however close to them, there exists a minimal population size such that the set of LRE coincides with the given set. Further, the definition incorporates a uniform convergence requirement, in the sense that the minimal population size depends only on the distance to the ideal boundaries and not on the individual point. This uniform convergence property is important in order for us to be able to analyze the managers’ game in stage 1.

2.2.3 The LRE in stage 2

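Following the standard approach developed by Freidlin and Wentzell (1988), we construct the minimum-cost tree for each absorbing set given in Lemma 1 and then use these trees to identify the LRE. We summarize the results in Theorem 1 and illustrate them in Fig. 1. Denote \(\bar{q} \equiv 1/q^*-2+2q^*\) and let

\[
\varPhi (c_k)=\begin{cases} 2-\dfrac{1-q^*}{q^*}\,c_k, & \text{if } \hat{q}\le \bar{q},\\[4pt] 2-\dfrac{1-\hat{q}}{2q^*-1}\,c_k, & \text{if } \hat{q}>\bar{q},\end{cases}\qquad k=1,2.
\]

Theorem 1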

The LRE of the learning dynamics in stage 2 depends on \(c_1\) and \(c_2\).

(a) \(\varOmega (RR)\) is the LRE set for large \(N\) uniformly for \(c_1 < \varPhi (c_2)\) and \(c_2 < \varPhi (c_1)\);

(b) \(\varOmega (RP)\) is the LRE set for large \(N\) uniformly for \(c_1 > \varPhi (c_2)\) and \(c_1 > c_2\); and

(c) \(\varOmega (PR)\) is the LRE set for large \(N\) uniformly for \(c_2 > \varPhi (c_1)\) and \(c_1 < c_2\).

Theorem 1 says that, for \(N\) large enough, in the main area of the \((c_1,c_2)\)-space, only the elements in these three absorbing sets (\(\varOmega (RR)\), \(\varOmega (RP)\) and \(\varOmega (PR)\)) can possibly be selected as stochastically stable. If the maximum capacities of both locations are relatively small, the risk-dominant equilibrium will prevail globally (statement (a)). To see the intuition, one can consider the transitions between \(\varOmega (PR)\) and \(\varOmega (RR)\), which play a key role in the selection of \(\varOmega (RR)\). To complete the transition from \(\varOmega (PR)\) to \(\varOmega (RR)\), it is necessary to change the convention from \((P,P)\) to \((R,R)\) in location 1. The transition in the opposite direction requires the reverse change. The absorbing set \(\varOmega (RR)\) will be selected if the number of mutants required for the former transition is smaller than that for the latter one. For this to hold, the maximum capacity in location 1 (determined by \(c_1\)) has to be small enough, and the minimum number of agents in location 1 (determined by \(2-c_2\)) has to be large enough. Hence, both \(c_1\) and \(c_2\) are required to be small enough for the selection of \(\varOmega (RR)\).
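
The mutation count behind this intuition can be written out as a heuristic calculation. Leaving \(\varOmega(PR)\) requires enough \(R\)-mutants to push the \(P\)-share of the full location 1 down to \(q^*\), while leaving \(\varOmega(RR)\) toward \(\varOmega(PR)\) requires enough \(P\)-mutants to raise the \(P\)-share of location 1, at its smallest size, up to \(q^*\). Integer rounding is ignored (\(M_1\approx c_1N\), \(m_1\approx (2-c_2)N\)) and only these two direct transitions are compared, so this is a back-of-the-envelope check rather than the proof in the Appendix. It reproduces the boundary \(c_2=2-\frac{1-q^*}{q^*}c_1\), the expression that the discussion below associates with the case \(\hat{q}\le\bar{q}\); by symmetry, comparing \(\varOmega(RP)\) with \(\varOmega(RR)\) gives \(c_1<2-\frac{1-q^*}{q^*}c_2\).

```latex
\begin{aligned}
\text{cost}\bigl(\varOmega(PR)\to\varOmega(RR)\bigr) &\approx (1-q^*)\,M_1 \approx (1-q^*)\,c_1N,\\
\text{cost}\bigl(\varOmega(RR)\to\varOmega(PR)\bigr) &\approx q^*\,m_1 \approx q^*\,(2-c_2)\,N,\\
(1-q^*)\,c_1N < q^*\,(2-c_2)\,N &\iff c_2 < 2-\frac{1-q^*}{q^*}\,c_1 .
\end{aligned}
```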

However, if the capacity of one location is larger than that of the other, the agents in the larger location will coordinate on the risk-dominant equilibrium, while those in the smaller location will coordinate on the Pareto-efficient one (statements (b) and (c), respectively). The intuition can be understood by considering the transitions between \(\varOmega (PR)\) and \(\varOmega (RP)\), which play a decisive role in the selection of coexistence of conventions. The minimum-cost transition in either direction will be achieved indirectly through \(\varOmega (PP)\). That is, the convention of one location will be changed from \((R,R)\) to \((P,P)\); then, that of the other location will be changed from \((P,P)\) to \((R,R)\). Since the population share of the mutants required for the first-step transition, \(q^*\), is larger than that for the second-step transition, \(1-q^*\), the former transition has a dominant effect. Hence, we can focus only on the transition in the first step. In the transition from \(\varOmega (PR)\) to \(\varOmega (PP)\), the number of mutants is determined by the minimum number of agents in location 2 (determined by \(2-c_1\)). Similarly, for the transition in the opposite direction, the number of mutants is determined by the minimum number of agents in location 1 (determined by \(2-c_2\)). To select \(\varOmega (RP)\) in the long run, the number of mutants required for the transition from \(\varOmega (PR)\) to \(\varOmega (PP)\) has to be less than that for the transition in the reverse direction, which holds for \(c_1>c_2\).
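
Keeping only the dominant first step, the comparison reduces to the following heuristic count. Integer rounding is ignored, with \(m_2\approx (2-c_1)N\) and \(m_1\approx (2-c_2)N\), and the cheaper second step (which needs only a share \(1-q^*\) of mutants) is dropped, so this is an approximation of the argument rather than the proof in the Appendix.

```latex
\begin{aligned}
\text{cost}\bigl(\varOmega(PR)\to\varOmega(PP)\bigr) &\approx q^*\,m_2 \approx q^*\,(2-c_1)\,N,\\
\text{cost}\bigl(\varOmega(RP)\to\varOmega(PP)\bigr) &\approx q^*\,m_1 \approx q^*\,(2-c_2)\,N,\\
q^*\,(2-c_1)\,N < q^*\,(2-c_2)\,N &\iff c_1 > c_2 .
\end{aligned}
```

Leaving \(\varOmega(PR)\) is therefore cheaper than leaving \(\varOmega(RP)\) exactly when location 1 is the larger one, in which case \(\varOmega(RP)\) is selected.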

Further, the parameter regions of the different LRE depend on the values of \(\hat{q}\) and \(\bar{q}\). If \(\hat{q} \le \bar{q}\), the payoff of the risk-dominant equilibrium is relatively low. In this case, \(\varOmega (RR)\) will be selected in a relatively small parameter region. By contrast, if \(\hat{q} > \bar{q}\), the payoff of the risk-dominant equilibrium is relatively high. In this case, \(\varOmega (RR)\) will be selected in a larger parameter region. This is reflected by the fact that, for \(\hat{q}>\bar{q}\), \(2-\frac{1-\hat{q}}{2q^*-1}c_k > 2-\frac{1-q^*}{q^*}c_k\) for \(c_k \in [1,2]\), \(k=1,2\). The reason is that, when the payoff of the risk-dominant equilibrium is larger, the basin of attraction of \((R,R)\) is larger, and the transition toward \(\varOmega (RR)\) requires fewer mutants.
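
The three main regions of Theorem 1 can be sketched as a classifier. The sketch assumes that \(\varPhi\) is the piecewise-linear map whose two branches are the expressions compared above (slope \(\frac{1-q^*}{q^*}\) if \(\hat{q}\le\bar{q}\), slope \(\frac{1-\hat{q}}{2q^*-1}\) otherwise). It describes the limiting regions for large \(N\) only; points on the boundaries, where Proposition 1 applies instead, are reported as "boundary". The function names and example thresholds are hypothetical.

```python
from fractions import Fraction


def phi(c, q_star, q_hat):
    """Assumed boundary map Phi(c) = 2 - slope * c, with the slope chosen by q_hat vs q_bar."""
    q_bar = 1 / q_star - 2 + 2 * q_star
    if q_hat <= q_bar:
        slope = (1 - q_star) / q_star
    else:
        slope = (1 - q_hat) / (2 * q_star - 1)
    return 2 - slope * c


def lre_region(c1, c2, q_star, q_hat):
    """Limiting LRE region of Theorem 1 for (c1, c2) in [1, 2]^2."""
    if c1 < phi(c2, q_star, q_hat) and c2 < phi(c1, q_star, q_hat):
        return "RR"        # global coordination on the risk-dominant equilibrium
    if c1 > phi(c2, q_star, q_hat) and c1 > c2:
        return "RP"        # larger location 1 plays R, smaller location 2 plays P
    if c2 > phi(c1, q_star, q_hat) and c2 > c1:
        return "PR"        # larger location 2 plays R, smaller location 1 plays P
    return "boundary"


Q_STAR, Q_HAT = Fraction(3, 5), Fraction(3, 4)   # hypothetical, from e=5, r=4, g=1, h=3
for c1, c2 in [(1, 1), (Fraction(19, 10), 1), (1, Fraction(19, 10)), (Fraction(3, 2), Fraction(3, 2))]:
    print(c1, c2, lre_region(c1, c2, Q_STAR, Q_HAT))
```

With these thresholds \(\hat{q}=3/4\le\bar{q}=13/15\), so \(\varPhi(c)=2-\frac{2}{3}c\), whose fixed point is \(2q^*=6/5\); above that point the two coexistence regions meet along the diagonal \(c_1=c_2\).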

The LRE in the vanishing areas are not clear-cut; multiple LRE may coexist. This result is summarized in Proposition 1. To facilitate the discussion, we define several subareas in the \((c_1,c_2)\)-space and also illustrate them in Fig. 1. For any \(\eta >0\), denote the area on the border of the three main regions by \(V(\eta ) = [1,2]^2 \setminus (\{\varPhi (c_2)-c_1 > \eta \ \text{ and } \ \varPhi (c_1)-c_2 >\eta \}\cup \{c_1 -\varPhi (c_2)>\eta \ \text{ and } \ c_1 - c_2>\eta \} \cup \{c_2 -\varPhi (c_1)>\eta \ \text{ and } \ c_2 - c_1>\eta \})\), and the subareas of \(V(\eta )\), respectively, by

Note that \(V(\eta )\) is vanishing as \(\eta \) decreases: \(V(\eta )\) will shrink to the lower-dimensional set \(\{(c_1,c_2)|c_1=\varPhi (c_2) \ \text{ for } \ c_2 \in [1,2q^*]\} \cup \{(c_1,c_2)|c_2=\varPhi (c_1) \ \text{ for } \ c_1 \in [1,2q^*]\} \cup \{(c_1,c_2)|c_1=c_2 \ \text{ for } \ c_2 \in [2q^*,2]\}\) as \(\eta \rightarrow 0\).

Proposition 1

For any \(\eta >0\), there exists an integer \(\bar{N}\), such that for all \(N > \bar{N}\), for \((c_1,c_2) \in V(\eta )\), the LRE form a subset of

(a) \(\varOmega (RP) \cup \varOmega (RR)\) if \((c_1,c_2) \in V_{a}(\eta )\);

(b) \(\varOmega (PR) \cup \varOmega (RR)\) if \((c_1,c_2) \in V_{b}(\eta )\);

(c) \(\varOmega (PR)\cup \varOmega (RP)\cup \varOmega (OP) \cup \varOmega (PO) \cup \varOmega (PP)\) if \((c_1,c_2) \in V_c(\eta )\!\setminus \!\{(2, 2)\}\);

(d) \(\varOmega (PR)\cup \varOmega (RP)\) if \((c_1,c_2) \in V_{d}(\eta )\); and

(e) \(\varOmega (PR)\cup \varOmega (RP) \cup \varOmega (RR)\) if \((c_1,c_2) \in V_e(\eta )\).

(f) Further, the LRE are the elements in \(\varOmega (PP)\) for \(c_1=c_2=2\).

2.3 The equilibrium policies

This section analyzes the managers’ optimal choices of capacity constraints. The managers in both locations are assumed to be rational. They have perfect information about the learning dynamics and can accurately anticipate the long-run consequences of the capacity choices in both locations. Given this knowledge, each manager makes a one-shot decision on the capacity constraint \(c_k \in [1,2]\) of his own location to achieve his particular objective.

In this section, we consider the case where the objective function of each manager is associated with the average of the payoffs of the agents in his location in the long run (we explore the case where the managers care about scale in Sect. 3). It captures the situation where only the efficiency of the coordination matters. For example, in a research group, the success of a long-run project is usually determined by how efficiently the group members can coordinate with each other and has little to do with the scale of the group.

Let \(x_k(\omega )\) be the number of agents in location \(k\) in state \(\omega \) and \(y_k(\omega )\) be the number of \(P\)-players in location \(k\) in state \(\omega \). Hence, for any state \(\omega =(v_1,v_2,n_1)\), we have \(x_k(\omega )=n_k\) and \(y_k(\omega )=v_k\). Given a state \(\omega \), denote the payoffs of \(P\)-players and \(R\)-players in location \(k\), respectively, by

\[
\pi ^P_k(\omega )=\frac{(y_k(\omega )-1)\,e+(x_k(\omega )-y_k(\omega ))\,g}{x_k(\omega )-1},\qquad \pi ^R_k(\omega )=\frac{y_k(\omega )\,h+(x_k(\omega )-y_k(\omega )-1)\,r}{x_k(\omega )-1}
\]

for \(x_k(\omega )>1\). Note that \(\pi ^P_k(\omega )\) for \(y_k(\omega )=0\) and \(\pi ^R_k(\omega )\) for \(y_k(\omega )=n_k\) are not defined for any \(n_k\). If there is only one player in a location, then the player cannot find a partner to play the game, and we assume that the payoff of this player is zero.Footnote 7

Hence, the average of the payoffs of the agents in location \(k\) in state \(\omega \) is

\[
\bar{\pi }_k(\omega )=\frac{y_k(\omega )\,\pi ^P_k(\omega )+(x_k(\omega )-y_k(\omega ))\,\pi ^R_k(\omega )}{x_k(\omega )}
\]

for \(x_k(\omega ) > 0\) (\(k=1,2\)), where a term with zero weight is taken to vanish. It is natural to assume that, if a location \(k\) is empty, then its payoff is zero; that is, if \(x_k(\omega )=0\), then \(\bar{\pi }_k(\omega )=0\).
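
The location average can be computed directly from the round-robin description (each agent averages over his matches with everyone else in his location), with the zero-payoff conventions for a lone agent and an empty location. The sketch assumes the Table 1 reading \(\varPi(P,P)=e\), \(\varPi(P,R)=g\), \(\varPi(R,P)=h\), \(\varPi(R,R)=r\); the payoff values and the function name are hypothetical.

```python
from fractions import Fraction

# Hypothetical payoffs: Pi(P,P)=E, Pi(P,R)=G, Pi(R,P)=H, Pi(R,R)=R.
E, R, G, H = 5, 4, 1, 3


def average_location_payoff(x, y):
    """Average round-robin payoff in a location with x agents, y of whom play P."""
    if x <= 1:
        return Fraction(0)  # empty location, or a lone agent without a partner
    total = Fraction(0)
    if y > 0:  # each P-player meets y - 1 other P-players and x - y R-players
        total += y * Fraction((y - 1) * E + (x - y) * G, x - 1)
    if y < x:  # each R-player meets y P-players and x - y - 1 other R-players
        total += (x - y) * Fraction(y * H + (x - y - 1) * R, x - 1)
    return total / x


print(average_location_payoff(3, 1))  # 8/3
```

For example, with three agents of whom one plays \(P\), the \(P\)-player earns \(g=1\), each \(R\)-player earns \((h+r)/2=7/2\), and the location average is \(8/3\); a location where everyone plays \(P\) (resp. \(R\)) averages \(e\) (resp. \(r\)).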

The limit invariant distribution \(\mu ^*\) is a function of the capacity constraints, i.e., \(\mu ^*(c_1,c_2) \in \varDelta (\varOmega )\), where \(\varDelta (\varOmega )\) is the set of probability distributions over \(\varOmega \). The payoff function of manager \(k\) is the long-run average of the payoffs at location \(k\) (\(k=1,2\)):

\[ \sum _{\omega \in \varOmega } \mu ^*(c_1,c_2)(\omega )\, \bar{\pi }_k(\omega ), \qquad (6) \]

where \(\mu ^*(c_1,c_2)(\omega )\) is the probability of \(\omega \) in the limit invariant distribution given \(c_1\) and \(c_2\). We introduce an auxiliary quantity: \(c^*=2q^*\) if \(\hat{q} \le \bar{q}\), and \(c^*=\frac{2(2q^*-1)}{2q^*-\hat{q}}\) if \(\hat{q} > \bar{q}\). Theorem 2 characterizes the NE of the one-shot game between the two managers.
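The manager's objective is a probability-weighted sum over states. The sketch below evaluates it for a given distribution; the distribution, state labels, and payoff values are hypothetical placeholders (the text does not report \(\mu ^*\) numerically), and the optional `size` argument shows how weighting each term by \(x_k(\omega )\) turns the average-payoff objective into the total-payoff objective used in Sect. 3.1.

```python
from fractions import Fraction

def manager_objective(mu, avg_payoff, size=None):
    """Long-run objective of one manager.

    mu:         dict mapping states to their probability under mu*(c_1, c_2)
    avg_payoff: dict mapping states to the average payoff in this manager's location
    size:       optional dict mapping states to x_k(state); when given, each term is
                weighted by location size (total-payoff objective)
    """
    if sum(mu.values()) != 1:
        raise ValueError("mu must be a probability distribution")
    total = Fraction(0)
    for state, prob in mu.items():
        value = avg_payoff[state]
        if size is not None:
            value = value * size[state]
        total += prob * value
    return total

# Hypothetical example for location 1 with N = 10: two long-run states, either all
# 2N agents in location 1 playing P (average payoff 4) or location 1 empty (payoff 0).
mu = {"all_in_1_P": Fraction(1, 2), "all_in_2_P": Fraction(1, 2)}
avg = {"all_in_1_P": 4, "all_in_2_P": 0}
size = {"all_in_1_P": 20, "all_in_2_P": 0}
print(manager_objective(mu, avg))        # average-payoff objective
print(manager_objective(mu, avg, size))  # total-payoff objective
```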

Theorem 2

Let the payoff function of each manager \(k\) be given by (6), and \(c^{*}\) and \(\varPhi (c_k)\) be as defined above.

(a) for any \((c_1,c_2)\) such that \(c_1> \varPhi (c_2)\) and \(c_2<c^*\), or \(c_2>\varPhi (c_1)\) and \(c_1<c^*\), or \(c_1<c^*\) and \(c_2<c^*\), there exists an integer \(\bar{N}\) such that for all \(N>\bar{N}\), \((c_1,c_2)\) is a NE;

(b) for any \((c_1,c_2)\) such that \(c_1< \varPhi (c_2)\) and \(c_2>c^*\), or \(c_2<\varPhi (c_1)\) and \(c_1>c^*\), or \(c_1>c^*\) and \(c_2>c^*\), there is no NE corresponding to \((c_1,c_2)\).

Theorem 2 says that, for \(N\) large enough, if the objective function of both managers is the average of the payoffs of the agents at their respective locations in the long run, there are two different classes of NE. In one class, both managers will choose relatively small capacities (\(c_1<c^*\) and \(c_2<c^*\)), which leads to the selection of global coordination on the risk-dominant equilibrium. In the other class, one manager will choose a large capacity and the other a small one (\(c_k> \varPhi (c_\ell )\) and \(c_\ell <c^*\), \(k, \ell =1,2, k\ne \ell \)), which leads to coexistence of conventions in the long run. Further, the strategy profiles in which both managers choose large capacities are not NE. Figure 2 illustrates these results.

Using Fig. 2, we explain the intuition for why the strategy profiles stated in \((a)\) are NE. Consider first the parameter regions that support coexistence of conventions. The manager of the location coordinating on the Pareto-efficient equilibrium cannot do better and hence has no incentive to deviate. Given this manager's capacity choice, the manager of the other location will not deviate either, because, whatever he chooses, the agents in his location will always coordinate on the risk-dominant equilibrium. Similarly, in the region where global coordination on the risk-dominant equilibrium is selected, no manager can increase his payoff by changing the capacity of his location.

We now explain why choosing large capacities for both locations (\(c_1>c^*\) and \(c_2>c^*\)) is not stable. If such a profile were chosen, the LRE would be either coexistence of conventions or global coordination on the Pareto-efficient equilibrium. However, the manager of the location with the weakly lower payoff would always have an incentive to decrease the capacity of his location, so that all the players in his location coordinate on the Pareto-efficient equilibrium.

3 Extensions

This section discusses several extensions of the basic model. The first extension analyzes the managers' capacity choices when their payoffs are associated with the long-run total payoffs of the agents at their respective locations; in this case, the managers have to consider both the efficiency of coordination and the scale of their locations. In the second extension, the managers have a second choice variable, the mobility constraint, so that each manager chooses both the number of immobile agents and the capacity constraint of his location. The last extension considers pure coordination games in which the agents have more than two strategies.

3.1 When scale matters

In this subsection, we consider the case where the managers are concerned with the total payoff of the players at their locations. For instance, in some evaluation systems for economics departments, the total number of academic publications is regarded as an important factor. Hence, if the dean of a department really cares about the evaluation (or his performance is measured by the evaluation), he may want to both improve the efficiency of the coordination on research among the faculty members and increase the number of researchers in the department.

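The total payoff of location \(k\) in state \(\omega \) is \(x_k(\omega ) \bar{\pi }_k(\omega )\). When the managers care about the total payoffs of the agents at their respective locations, we define the payoff function of manager \(k\) by

\[ \sum _{\omega \in \varOmega } \mu ^*(c_1,c_2)(\omega )\, x_k(\omega )\, \bar{\pi }_k(\omega ). \qquad (7) \]
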
Proposition 2

Let the payoff function of each manager \(k\) be given by (7). For any \((c_1,c_2) \in [1,2]^2\setminus \{(c^*,c^*)\}\), there exists an integer \(\bar{N}\) such that for all \(N>\bar{N}\), there is no NE corresponding to \((c_1,c_2)\).

Proposition 2 says that, if the managers care about the long-run total payoffs of the agents in their locations, then, for \(N\) large enough, the only candidate for a NE of the managers’ game is \((c^*,c^*)\). The other strategy profiles cannot be NE for the following reasons. In the region where coexistence of conventions is selected, the manager of the location in which agents coordinate on the risk-dominant equilibrium has an incentive to decrease \(c_k\) to the point where both locations coordinate on the risk-dominant equilibrium, because this increases the expected population size of his location. In the region where agents in both locations coordinate on the risk-dominant equilibrium, each manager has an incentive to increase \(c_k\), which may either increase the expected population size or lead his location to coordinate on the Pareto-efficient equilibrium.

The only exception is the point \((c^*,c^*)\). As \(N \rightarrow \infty \), \((c^*,c^*)\) is a NE if the payoff of the Pareto-efficient equilibrium is high enough. The reason is that, given this strategy profile, both the Pareto-efficient and the risk-dominant equilibria can be selected in the long run in each location, whereas any unilateral deviation by a manager leads his location to coordinate only on the risk-dominant equilibrium. For any fixed \(N\), whether or not this point is a NE depends crucially on the parameters of the model, and we cannot provide a general result.

3.2 Mobility constraints

In this subsection, we consider the managers' optimal policies when they have an additional choice variable, the mobility constraint. In practice, a mobility constraint can be specified in the employment contract. For instance, airline pilots are usually required by contract to stay with the same company for a relatively long time (e.g., two decades), and leaving before the stated time always incurs a large penalty.

Denote by \(p_k\) the mobility constraint of location \(k\), such that \(\lceil p_k N \rceil \) is the number of immobile players in each period.Footnote 8 Since each location has \(N\) agents at the beginning, we assume that each manager can restrict the mobility of at most \(N\) players (i.e., \(p_k \le 1\)). It is natural to assume that manager \(k\) cannot restrict the mobility of agents who are not in location \(k\) when he makes the decision. A strategy of manager \(k\) is then a pair \((c_k, p_k) \in [1,2]\times [0,1]\).

Given the capacity and mobility constraints, the effective capacity of location \(k\) can be represented by \(M_k \equiv \lfloor d_kN \rfloor \) with \(d_k=\min \{c_k, 2-p_\ell \}\), and the minimum number of players in location \(k\) is \(m_k \equiv 2N-M_\ell \), for \(k,\ell \in \{1,2\}, k \ne \ell \). That is, a location reaches its maximum number of agents either when it hits its capacity constraint or when it hosts all agents except those who are immobile in the other location.
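The effective-capacity formulas can be checked with a few lines of exact arithmetic. The sketch below, with the hypothetical helper name `location_bounds`, computes \(d_k\), \(M_k\), and \(m_k\) from \((c_1,c_2)\), \((p_1,p_2)\), and \(N\); it uses `Fraction` so that floating-point rounding cannot distort the floor and ceiling operations. It also checks that \(\lfloor (2-p_\ell )N\rfloor = 2N-\lceil p_\ell N\rceil \), i.e., the bound coming from mobility equals all agents minus the immobile ones in the other location.

```python
import math
from fractions import Fraction

def location_bounds(c, p, n):
    """Effective capacities in the two-location model.

    c = (c_1, c_2): capacity constraints in [1, 2]
    p = (p_1, p_2): mobility constraints in [0, 1]
    n: N, the initial number of agents per location
    Returns (d, M, m) with d_k = min(c_k, 2 - p_l), M_k = floor(d_k N),
    and m_k = 2N - M_l for l != k.
    """
    d = (min(c[0], 2 - p[1]), min(c[1], 2 - p[0]))
    M = tuple(math.floor(dk * n) for dk in d)
    m = (2 * n - M[1], 2 * n - M[0])
    return d, M, m

# Capacity only (footnote example): c_1 = 1.25, N = 50 gives M_1 = floor(62.5) = 62.
print(location_bounds((Fraction(5, 4), Fraction(2)), (Fraction(0), Fraction(0)), 50))

# Full immobility (p_1 = p_2 = 1) pins both effective capacities at 1.
print(location_bounds((Fraction(2), Fraction(2)), (Fraction(1), Fraction(1)), 10))

# When 2 - p_1 binds, raising c_2 leaves d_2 unchanged.
low = location_bounds((2, Fraction(7, 4)), (Fraction(1, 2), 0), 10)[0][1]
high = location_bounds((2, 2), (Fraction(1, 2), 0), 10)[0][1]
assert low == high == Fraction(3, 2)

# Mobility bound equals all agents minus the immobile agents elsewhere.
for p_l in (Fraction(1, 4), Fraction(3, 10), Fraction(2, 3)):
    assert math.floor((2 - p_l) * 10) == 2 * 10 - math.ceil(p_l * 10)
```

With plain floats, for example, `0.3 * 10` evaluates slightly above 3, so `math.ceil` would return 4 instead of 3; exact fractions avoid that.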

Since \(d_k \in [1,2]\), the absorbing sets of the unperturbed dynamics stated in Lemma 1 remain valid when \(c_k\) is replaced with \(d_k\) \((k=1,2)\). With the same replacement, the LRE are also as given in Theorem 1 and Proposition 1.

Now we explore the policy choice of each manager. We first consider the case where the payoff function of each manager \(k\) is the average of the agents’ payoffs in his location in the long run.

Proposition 3

Let the payoff function of each manager \(k\) be given by (6), \(\varPhi (d_k)\) be as defined above, and \(d^*=2q^*\) if \(\hat{q} \le \bar{q}\) and \(d^*=\frac{2(2q^*-1)}{2q^*-\hat{q}}\) if \(\hat{q} > \bar{q}\).

(a) for any \((d_1,d_2)\) such that \(d_1<d^{*}\) or \(d_2<d^{*}\) there exists an integer \(\bar{N}\) such that for all \(N>\bar{N}\), \((d_1,d_2)\) corresponds to at least one NE, provided that \(d_1 \ne \varPhi (d_2)\) and \(d_2 \ne \varPhi (d_1)\);

(b) for any \((d_1,d_2)\) such that \(d_1>d^{*}\) and \(d_2>d^{*}\) there exists an integer \(\bar{N}\) such that for all \(N>\bar{N}\), there is no NE corresponding to \((d_1,d_2)\).

If the managers can choose both the capacity and the mobility constraints and care about the long-run average of the agents’ payoffs in their locations, the regions of the \((d_1,d_2)\)-space that correspond to NE are similar to the ones obtained when managers can choose capacity constraints only. The difference is that the strategy profiles mapping into the two areas \(d^* < d_1 < \varPhi (d_2)\) and \(d^* < d_2 < \varPhi (d_1)\) are now NE. Consider an arbitrary point in the first area. Manager 1 cannot gain from deviating, because agents in location 1 coordinate on the Pareto-efficient equilibrium. To lead his location to coordinate on the Pareto-efficient equilibrium, manager 2 would like to increase \(d_2\) by choosing a higher \(c_2\). However, this may be ineffective, because \(d_2=\min \{c_2, 2-p_1\}\): if \(d_2=2-p_1\), increasing \(c_2\) does not change \(d_2\) at all. A similar argument holds for any point in the second area.

Now we explore the situation where the managers are concerned with the total payoffs of the agents in their respective locations in the long run. Proposition 4 summarizes the result.

Proposition 4

Let the payoff function of each manager \(k\) be given by (7), and \(d^*\) and \(\varPhi (d_k)\) be as defined above.

(a) \(d_1=d_2=1\) corresponds to at least one NE;

(b) for any \((d_1,d_2) \in [1,2]^2 \setminus (\{(1,1)\} \cup \{(d^{*},d^{*})\})\), there exists an integer \(\bar{N}\) such that for all \(N>\bar{N}\), there is no NE corresponding to \((d_1,d_2)\).

The only difference between this result and Proposition 2 is that \(d_1=d_2=1\) now corresponds to at least one NE. If managers can only choose capacity constraints, \(c_1=c_2=1\) is not a NE, because both managers have an incentive to increase their capacities in order to have a larger expected population. However, since the managers can now also choose mobility constraints and care about the number of agents in their locations, both have an incentive to tighten the mobility constraint to prevent agents from moving out. As a result, both managers set \(p_k=1\), so that no agent can change location and \(d_k=\min \{c_k, 2-p_\ell \}=1\) for both locations. This result confirms the argument in Anwar (2002, footnote 7).

When the managers can choose both capacity and mobility constraints, Anwar’s (2002) result corresponds to the LRE on the diagonal of the \((d_1,d_2)\)-space (the segment connecting \((1,1)\) and \((2,2)\) but not including \((2,2)\))Footnote 9, and Ely’s (2002) result corresponds to the LRE at \((2,2)\). Hence, Propositions 3 and 4 show that the assumptions on capacity and mobility constraints in Ely (2002) and Anwar (2002) are not the optimal choices of the managers.

3.3 Pure coordination game with more than two strategies

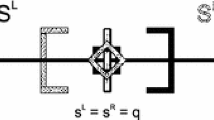

This subsection considers the case where the basic pure coordination game has more than two strategies. Consider the game \(G=\left[ I, \{S^i\}_{i \in I}, \{\pi ^i\}_{i \in I}\right] \), where \(I=\{1,2\}\), \(S^i=\{s_1, s_2, \ldots , s_n\}\), and \(\pi ^i(s^i,s^j)=\tau \) if \(s^i=s^j=s_\tau \), and \(\pi ^i(s^i,s^j)=0\) otherwise. Hence, \(G\) is an \(n\times n\) pure coordination game in which the payoff for mis-coordination is zero. Clearly, every \((s_\tau ,s_\tau )\) for \(\tau \in \{1,2,\ldots ,n\}\) is a strict NE of this game. The rest of the model setup remains the same.

For \(d_1<2\) and \(d_2<2\), the unperturbed learning dynamics has \(n^2\) absorbing sets, in which agents in each location coordinate on a strict NE. For \(d_1=d_2=2\), there are \(2n\) absorbing sets, in which one location is empty and the other location hosts all the agents, coordinating on a strict NE. For \(d_k=2\) and \(d_\ell <2\), the unperturbed dynamics has \(n+n(n-1)/2\) absorbing sets: in \(n\) of them, all the agents are in location \(k\) and coordinate on a strict NE; in the remaining \(n(n-1)/2\), agents in location \(k\) coordinate on a less efficient equilibrium than those in location \(\ell \). We single out the LRE by comparing the minimum transition costs toward these absorbing sets and summarize the result in Proposition 5.
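The counts of absorbing sets can be checked by enumeration. The sketch below simply encodes the characterization stated in the text (it does not simulate the dynamics): strategies are labelled by their payoff \(\tau =1,\ldots ,n\), an absorbing set is written as the pair of conventions in locations 1 and 2, and 0 marks an empty location. The function name and this encoding are illustrative assumptions.

```python
from itertools import product

def absorbing_sets(n, d1_full, d2_full):
    """Absorbing sets of the n x n game G, encoded as (tau_1, tau_2).

    d1_full / d2_full indicate whether d_1 = 2 / d_2 = 2.
    tau_k in 1..n is the convention in location k; 0 marks an empty location.
    """
    taus = range(1, n + 1)
    if not d1_full and not d2_full:
        return list(product(taus, taus))          # any convention in each location
    if d1_full and d2_full:
        return [(t, 0) for t in taus] + [(0, t) for t in taus]
    sets = []
    for t in taus:                                # all agents in the location k with d_k = 2
        sets.append((t, 0) if d1_full else (0, t))
    for a, b in product(taus, taus):
        tau_k, tau_l = (a, b) if d1_full else (b, a)
        if tau_k < tau_l:                         # k less efficient than l
            sets.append((a, b))
    return sets

n = 3
for flags in [(False, False), (True, True), (True, False)]:
    print(flags, len(absorbing_sets(n, *flags)))
```

For \(n=2\), with \(R\) labelled 1 and \(P\) labelled 2, the case \(d_1=2\), \(d_2<2\) returns the three pairs corresponding to \(\varOmega (RO)\), \(\varOmega (PO)\), and \(\varOmega (RP)\) in Lemma 1.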

Proposition 5

If the basic interaction among agents is the \(n \times n\) coordination game \(G\) defined above, the LRE of the learning dynamics in stage 2 of the model are as follows.

(a) For \(d_1<2\) and \(d_2<2\), the LRE are the states in \(\varOmega (s_n,s_n)\).

(b) For \(d_1=2\) and \(d_2<2\), the LRE is the state in \(\varOmega (s_n,0)\). Similarly, for \(d_1<2\) and \(d_2=2\), the LRE is the state in \(\varOmega (0,s_n)\).

(c) For \(d_1=2\) and \(d_2=2\), the LRE are the states in \(\varOmega (s_n,0)\) and \(\varOmega (0,s_n)\),

where \(\varOmega (s_n,s_n)\) is the absorbing set where agents in both locations play \(s_n\), and \(\varOmega (s_n,0)\) (\(\varOmega (0,s_n)\)) is the absorbing set where all the agents agglomerate in location 1 (2) and play \(s_n\).

Proposition 5 says that only the strict NE \((s_n,s_n)\) will be selected in the long run. The reason is that \((s_n,s_n)\) is both Pareto-efficient and risk-dominant and hence has a larger basin of attraction than any other strict NE. That is, within a given location, the transition from any other strict NE to \((s_n,s_n)\) always requires fewer mutants than the transition in the opposite direction.
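The basin-of-attraction comparison can be illustrated with a back-of-the-envelope count. The sketch below is a simplification, not the paper's transition-cost computation: within one location of \(x\) agents all playing \(s_b\), it finds the smallest number of mutants switching to \(s_a\) such that \(s_a\) earns strictly more than \(s_b\) against the resulting state, approximating each strategy's payoff by its coordination payoff times the number of agents using it (i.e., ignoring that an agent is not matched with himself). The helper name `min_mutants` and the values of \(n\) and \(x\) are hypothetical.

```python
def min_mutants(x, a, b):
    """Smallest m with a*m > b*(x - m): with m agents on s_a (payoff a when
    coordinated) and x - m agents on s_b (payoff b), s_a earns strictly more.

    Simplification: payoffs are proportional to the number of agents using each
    strategy in the location; self-matches are not excluded.
    """
    # a*m > b*(x - m)  <=>  m > b*x / (a + b)
    return b * x // (a + b) + 1

n, x = 4, 100  # hypothetical: n strategies, x agents in the location
for tau in range(1, n):
    into_best = min_mutants(x, n, tau)    # from all-s_tau to s_n
    out_of_best = min_mutants(x, tau, n)  # from all-s_n to s_tau
    print(f"tau={tau}: {into_best} mutants into s_n, {out_of_best} out of s_n")
```

Since \(\tau <n\), the threshold \(\tau x/(n+\tau )\) for entering \((s_n,s_n)\) lies below the threshold \(nx/(n+\tau )\) for leaving it, which is the asymmetry the text describes.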

Then, we consider the managers’ game in stage 1. We focus on the case where the managers are concerned with the total payoffs of their respective locations in the long run,Footnote 10 and explore two alternative strategy sets for managers.

Proposition 6

Let the payoff function of each manager \(k\) be given by (7). The NE of the managers’ game in stage 1 are as follows:

(a) If both managers can only choose capacity constraints, \(c_1=c_2=2\) corresponds to the unique NE.

(b) If both managers can choose the capacity and the mobility constraints, \(d_1=d_2=1\) corresponds to at least one NE.

The intuition is that, if the managers can choose capacity constraints only, they always have an incentive to increase the capacities of their respective locations. As a result, each location becomes large enough to accommodate all the agents. If the managers can also choose mobility constraints, they have an incentive both to increase capacity and to restrict mobility. However, since the effective capacity of location \(k\) is \(\min \{c_k, 2-p_\ell \}\), the effect of restricting mobility dominates that of increasing capacity, and in the end the managers completely forbid migration. Regardless of the choices available to the managers, given the basic interaction \(G\), agents always coordinate on the Pareto-efficient NE \((s_n,s_n)\).

4 Conclusion

In this paper, we develop a simple model to study long-run technology choice with endogenous local capacity constraints. We introduce a manager for each location and allow the managers to choose capacity constraints (and mobility constraints). Given these constraints, the agents engage in the learning dynamics of a coordination game. We assume that the managers are rational, while the agents are boundedly rational, which gives rise to a model of “asymmetric rationality.” The managers move first, with perfect knowledge of how different policies affect the long-run outcomes; the agents then take the constraints as given and repeatedly choose strategies in the coordination game. To our knowledge, this is one of the few studies that explore the sequential interaction among agents with different rationality levels within the framework of stochastic learning in games.

Clearly, the strategy set and the objective function of the managers have significant effects on long-run equilibrium selection in coordination games. Within our framework, we consider two alternative strategy sets and two different objective functions. If the managers care about efficiency, there is a set of NE, leading either to coexistence of conventions or to global coordination on the risk-dominant equilibrium. If the managers also care about scale, they may end up with a medium capacity when they can choose capacity constraints only, and they may completely forbid migration when they can also choose mobility constraints. In either case, the symmetric policy arrangements exogenously assumed in Ely (2002) and Anwar (2002) are not stable. Hence, our work tests the validity of the policy-constraint assumptions made in the related literature and also demonstrates how policy adjustments may change the long-run outcomes.

There are many situations in social and economic activities where agents with different rationality levels interact with each other. Hence, in our opinion, further research should focus on developing more realistic models to analyze such interactions in different contexts. A deeper understanding of these issues will provide better insights into the consequences of interactions among heterogeneous agents. This paper takes a step forward by illustrating how the choice of capacity constraints interacts with the long-run technology convention in a non-trivial way; hence, it is necessary to treat policy parameters and their optimality explicitly as important factors in the establishment of social conventions in an organized society.

Notes

Of course, it could be more convenient for each investor to require all the firms in his park to use the same platform. However, the investors normally do not have the authority to do so.

We denote by \(\lfloor x\rfloor \) the maximum integer that is weakly smaller than \(x\), and by \(\lceil x \rceil \) the minimum integer that is weakly larger than \(x\). For instance, if \(c_k=1.25\), \(N=50\), the firm can hire at most \(\lfloor 62.5 \rfloor =62\) agents.

In Anwar (2002), \(e,g,h\) and \(r\) are not required to be larger than zero. We add this assumption here to avoid negative payoffs in the manager’s game. It has no effect on the LRE of the learning dynamics.

Our results do not rely on the nature of pure coordination games. The results are similar if we use stag hunt games.

We use myopic best reply to the state rather than the standard myopic best reply to simplify the analysis. With this behavioral updating rule, all the mobile players have the same best reply, and all the immobile players within the same location have the same best reply, regardless of the strategies they have adopted in the previous period.

Alternatively, as in Ely (2002), we can assume that the loner will obtain some positive payoff that is smaller than the payoff at the risk-dominant equilibrium. We can also assume that if the loner plays \(P\), his payoff is \(\varPi (P,P)\), and if he plays \(R\), his payoff is \(\varPi (R,R)\). None of these assumptions will change the results in the following theorem and propositions.

Denote by \(\lceil x \rceil \) the minimum integer that is weakly larger than \(x\). For instance, if \(p_k=0.25\) and \(N=10\), the number of immobile players is \(\lceil 2.5 \rceil =3\).

The NE for the case where managers care only about the long-run average location payoff are trivial. If managers can only choose capacity constraints, any point in the \((d_1,d_2)\)-space corresponds to at least one NE. If managers can choose capacity and mobility constraints, any point in the \((d_1,d_2)\)-space except those with \(d_1=2\) or \(d_2=2\) corresponds to at least one NE.

References

Alós-Ferrer, C.: Learning, memory, and inertia. Econ. Lett. 101, 134–136 (2008)

Alós-Ferrer, C., Kirchsteiger, G., Walzl, M.: On the evolution of market institutions: the platform design paradox. Econ. J. 120, 215–243 (2010)

Alós-Ferrer, C., Netzer, N.: Robust stochastic stability. Econ. Theory (2014). doi:10.1007/s00199-014-0809-z

Alós-Ferrer, C., Shi, F.: Imitation with asymmetric memory. Econ. Theory 49, 193–215 (2012)

Alós-Ferrer, C., Weidenholzer, S.: Partial bandwagon effects and local interactions. Games Econ. Behav. 61, 179–197 (2007)

Alós-Ferrer, C., Weidenholzer, S.: Contagion and efficiency. J. Econ. Theory 143, 251–274 (2008)

Ania, A.B., Wagener, A.: The Open Method of Coordination (OMC) as an Evolutionary Learning Process, Working Paper (2009)

Anwar, A.W.: On the co-existence of conventions. J. Econ. Theory 107, 145–155 (2002)

Bhaskar, V., Vega-Redondo, F.: Migration and the evolution of conventions. J. Econ. Behav. Organ. 13, 397–418 (2004)

Blume, A., Temzelides, T.: On the geography of conventions. Econ. Theory 22, 863–873 (2003)

Dieckmann, T.: The evolution of conventions with mobile players. J. Econ. Behav. Organ. 38, 93–111 (1999)

Ellison, G.: Learning, local interaction, and coordination. Econometrica 61, 1047–1071 (1993)

Ely, J.C.: Local conventions. Adv. Theor. Econ. 2, 1–30 (2002)

Freidlin, M., Wentzell, A.: Random Perturbations of Dynamical Systems. Springer, New York (1988)

Gerber, A., Bettzüge, M.O.: Evolutionary choice of markets. Econ. Theory 30, 453–472 (2007)

Juang, W.-T.: Rule evolution and equilibrium selection. Games Econ. Behav. 39, 71–90 (2002)

Kandori, M., Mailath, G.J., Rob, R.: Learning, mutation, and long run equilibria in games. Econometrica 61, 29–56 (1993)

Khan, A.: Coordination under global random interaction and local imitation. Int. J. Game Theory (2014). doi:10.1007/s00182-013-0399-1

Oechssler, J.: An evolutionary interpretation of mixed-strategy equilibria. Games Econ. Behav. 21, 203–237 (1997)

Robson, A.J., Vega-Redondo, F.: Efficient equilibrium selection in evolutionary games with random matching. J. Econ. Theory 70, 65–92 (1996)

Shi, F.: Comment on “on the co-existence of conventions” [J. Econ. Theory 107 (2002) 145–155]. J. Econ. Theory 148, 418–421 (2013)

Weidenholzer, S.: Coordination games and local interactions: a survey of the game theoretic literature. Games 1, 551–585 (2010)

Additional information

An early version of this paper with the title “Social Planners and Local Conventions” was written when the author was a PhD student at the University of Konstanz (Germany). The author thanks the editor, two anonymous referees, Carlos Alós-Ferrer, Larry Blume, Johannes Kern, Jaimie Lien, Jianxia Yang, Jie Zheng, and the participants in the World Congress of the Game Theory Society 2012, the European Winter Meeting of the Econometric Society 2011, the UECE Lisbon Meeting in Game Theory and Applications 2011, the 11th SAET conference, and the 6th Spain-Italy-Netherlands Meeting on Game Theory for helpful suggestions and comments. Financial support from the Center for Psychoeconomics at the University of Konstanz and the Department of Economics at Shanghai Jiao Tong University is gratefully acknowledged.

Appendix: Proofs


Proof of Lemma 1

We show that all the sets listed in Lemma 1 are absorbing, i.e., once the dynamics reaches them, it remains there forever, and that all the other states are transient, i.e., there is a positive probability that the dynamics never returns to them. Suppose that, in period \(t\), the dynamics reaches an arbitrary state \(\omega \). Let \((\tilde{k}, s^*)\) be a myopic best reply to \(\omega \) for the agents who can relocate. Then a myopic best reply for the agents who are currently in location \(\tilde{k}\) and cannot relocate is \(s^*\). Denote by \(s'\) a myopic best reply to \(\omega \) for those who are not in location \(\tilde{k}\) and cannot relocate. With positive probability, the agents in location \(\tilde{\ell } \ne \tilde{k}\) who can relocate move to location \(\tilde{k}\) and play \(s^*\), while those staying in location \(\tilde{\ell }\) who cannot relocate play \(s'\). The players in location \(\tilde{k}\) remain there and play \(s^*\). Hence, in period \(t+1\), agents in location \(\tilde{k}\) coordinate on \(s^*\), while those in location \(\tilde{\ell }\) coordinate on \(s'\).

Case 1 \(d_k <2\) for all \(k \in \{1,2\}\). If \(s^* = s'\), then in period \(t+2\) all the players play the same strategy and randomly choose their locations whenever they have the opportunity. This corresponds to the sets \(\varOmega (RR)\) or \(\varOmega (PP)\). They are absorbing because \((R,R)\) and \((P,P)\) are strict NE: once the dynamics reaches any state in \(\varOmega (RR)\) (\(\varOmega (PP)\)), a myopic best reply to the previous state always leads players to play \(R\) (\(P\)) and to randomize their location choices given the capacity constraints.

If \(s^* \ne s'\), players in one location \(k \in \{1,2\}\) must coordinate on \(P\). Then, all the players in location \(\ell \) who have an opportunity to relocate will move to location \(k\) and play \(P\) until the population in location \(k\) reaches \(M_k\). Once there, myopic best replying will lead all the \(P\)-players to stay in the current location and play \(P\). The \(R\)-players would have an incentive to move to the other location and play \(P\), but are not allowed to do so due to the constraints. Hence, they will play \(R\) in their current location. This corresponds to \(\varOmega (PR)\) or \(\varOmega (RP)\) for \(k=1,2\), respectively.

Case 2 \(d_k=2\) and \(d_\ell < 2\). If \(s^*=s'\), with a positive probability, all the players will move to location \(k\) and play \(s^*\). Once there, the dynamics will remain there forever. This corresponds to \(\varOmega (PO)\) or \(\varOmega (RO)\) for \(k=1\), or to \(\varOmega (OP)\) or \(\varOmega (OR)\) for \(k=2\). If \(s^* \ne s'\), agents will coordinate on \(P\) in one location, and on \(R\) in the other location. If the agents in location \(k\) coordinate on \(P\), while those in location \(\ell \) coordinate on \(R\), all the players in location \(\ell \) will move to \(k\) and play \(P\). If the reverse case occurs, all the mobile players in location \(k\) will move to location \(\ell \) until it reaches its effective maximum capacity. Therefore, the absorbing sets in this case are \(\varOmega (PO)\), \(\varOmega (RO)\), and \(\varOmega (RP)\) for \(k=1\), and \(\varOmega (OP)\), \(\varOmega (OR)\), and \(\varOmega (PR)\) for \(k=2\).

Case 3 \(d_k=2\) for both \(k=1,2\). Similarly to Case 2, if \(s^*=s'\), all the players will move to one location and coordinate on \(s^*\). If \(s^* \ne s'\), all the players will move to the location with \(P\)-players and play \(P\). Once there, the dynamics will stay there forever. Hence, the absorbing sets in this case are \(\varOmega (RO)\), \(\varOmega (PO)\), \(\varOmega (OR)\), and \(\varOmega (OP)\). \(\square \)

Proof of Theorem 1 and Proposition 1

The standard approach to identifying the LRE in the literature on learning in games relies on the graph-theoretical techniques developed by Freidlin and Wentzell (1988). In general, we construct minimum-cost transition trees for all the absorbing sets in each of the three cases of Lemma 1 and then identify the absorbing sets whose transition trees have the lowest cost. As an illustration, we show below how to identify the LRE at the point \(d_1=d_2=2\).

By Lemma 1, for \(d_1=d_2=2\), there are four absorbing sets: \(\varOmega (RO)\), \(\varOmega (PO)\), \(\varOmega (OR)\), and \(\varOmega (OP)\). The transitions between \(\varOmega (PO)\) and \(\varOmega (OP)\) (in both directions) require only one mutant, who moves to the empty location without changing her strategy; the same holds for the transitions between \(\varOmega (RO)\) and \(\varOmega (OR)\). The transition from \(\varOmega (OR)\) (\(\varOmega (RO)\)) to \(\varOmega (PO)\) (\(\varOmega (OP)\)) also requires only one mutant, who moves to the empty location and changes her strategy. Consider the following \(\varOmega (PO)\)-tree.

It is easy to see that the cost of this tree is 3. Since a tree over four absorbing sets has three edges and every transition requires at least one mutant, 3 is the minimum cost of any tree. Hence, the element in \(\varOmega (PO)\) is a LRE. Because the transition from \(\varOmega (PO)\) to \(\varOmega (OP)\) also needs only one mutant, one can build an \(\varOmega (OP)\)-tree simply by reversing the direction of the arrow between \(\varOmega (OP)\) and \(\varOmega (PO)\) in the \(\varOmega (PO)\)-tree above. The cost of this \(\varOmega (OP)\)-tree is therefore also 3, and the element in \(\varOmega (OP)\) is a LRE as well.
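The tree argument can be reproduced by brute force. The sketch below enumerates, for each of the four absorbing sets at \(d_1=d_2=2\), every spanning tree directed toward it and returns the minimum total cost. The one-mutant transitions are those stated above; all other transitions, whose costs the text does not list, are assigned a hypothetical common cost `exit_cost`, and the conclusion only requires that this cost exceed 1. The state labels and function names are illustrative.

```python
from itertools import product

STATES = ["RO", "PO", "OR", "OP"]

def transition_costs(exit_cost):
    """Mutant costs between absorbing sets at d_1 = d_2 = 2."""
    cost = {
        ("PO", "OP"): 1, ("OP", "PO"): 1,  # relocation without strategy change
        ("RO", "OR"): 1, ("OR", "RO"): 1,
        ("OR", "PO"): 1, ("RO", "OP"): 1,  # relocation with a switch to P
    }
    for a in STATES:  # hypothetical common cost for every unlisted transition
        for b in STATES:
            if a != b and (a, b) not in cost:
                cost[(a, b)] = exit_cost
    return cost

def reaches_root(succ, root):
    """True if following successors from every node ends at root (no cycles)."""
    for start in succ:
        seen, node = set(), start
        while node != root:
            if node in seen:
                return False
            seen.add(node)
            node = succ[node]
    return True

def min_tree_cost(root, cost):
    """Minimum cost over all spanning trees directed toward root."""
    others = [s for s in STATES if s != root]
    best = None
    for targets in product(STATES, repeat=len(others)):
        succ = dict(zip(others, targets))
        if any(a == b for a, b in succ.items()) or not reaches_root(succ, root):
            continue
        total = sum(cost[(a, b)] for a, b in succ.items())
        best = total if best is None else min(best, total)
    return best

costs = transition_costs(exit_cost=5)
tree_costs = {s: min_tree_cost(s, costs) for s in STATES}
lowest = min(tree_costs.values())
lre = [s for s, v in tree_costs.items() if v == lowest]
print(tree_costs)
print(lre)
```

Any \(\varOmega (RO)\)- or \(\varOmega (OR)\)-tree must contain at least one transition out of a \(P\)-convention, so its cost is at least \(2+\) `exit_cost`, which exceeds 3 whenever `exit_cost` is larger than 1.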

We skip the details for identifying the LRE in the remaining cases, since they use the same techniques and involve cumbersome computations. \(\square \)

Proof of Theorem 2

The proof can be better understood with Fig. 2. We first show that the points in the \((c_1,c_2)\)-space given in (a) of the theorem are NE. Let \(N\) be large enough. Consider first an arbitrary point in the set \(c_1> \varPhi (c_2)\) and \(c_2<c^*\). By Theorem 1, any point \((c_1,c_2)\) in this set corresponds to the LRE set \(\varOmega (RP)\). Since both managers are concerned with the long-run average of the agents’ payoffs in their respective locations, manager 2 has no incentive to deviate, because the agents in location 2 already coordinate on the Pareto-efficient equilibrium \((P,P)\). Manager 1 has no incentive to deviate either, because, given \(c_2\) in this set, for any \(c_1 \in [1,2]\) the agents in location 1 will always coordinate on \((R,R)\) in the long run. A similar analysis shows that any point in the set \(c_2> \varPhi (c_1)\) and \(c_1<c^*\) is a NE. Next, consider an arbitrary point in the set \(c_1<c^*\) and \(c_2<c^*\). In this region, the LRE set is \(\varOmega (RR)\). No manager has an incentive to deviate, because, given the capacity choice of one manager, the agents in the other location will always coordinate on \((R,R)\), whatever capacity their own manager chooses.

Now we show that the set of points in the \((c_1,c_2)\)-space given in item (b) of the theorem are not NE. We still consider large enough \(N\). We first show that the points in the set \(c_1>c^*\) and \(c_2>c^*\) are not NE. For an arbitrary point in this set, the LRE set is \(\varOmega (RP)\) or \(\varOmega (PR)\), or both. The manager of the location in which the agents coordinate on \((P,P)\) with probability one (say, location 1) has no incentive to deviate. The other manager (in location 2), however, always has an incentive to decrease the capacity so that \(c_2<c_1\), because doing so will lead the agents in his location to coordinate on \((P,P)\) with probability one. We then show that the points in the set \(c_1 > c^*\) and \(c_1 <\varPhi (c_2)\) are not NE. For any point \((c_1,c_2)\) in this set, the LRE set is \(\varOmega (RR)\). Manager 2 always has an incentive to increase the capacity \(c_2\) such that \(c_1>\varPhi (c_2)\) and \(c_2<c_1\), because then the agents in location 2 will coordinate on \((P,P)\). Analogously, we can show that the points in the set \(c_2 > c^*\) and \(c_2 <\varPhi (c_1)\) are not NE. \(\square \)

Proof of Proposition 2

We show that, if the managers care about the long-run total payoffs, the only possible candidate for a NE of the managers’ game is \((c^*,c^*)\). It is easy to show that, for \(N\) large enough, the points in the areas stated in (b) of Theorem 2 are not NE. As shown in the proof of Theorem 2, in these areas it is always possible to change the capacity constraint of one location so that the agents in this location coordinate on \((P,P)\) with probability one. This not only improves the average payoff but also increases the number of agents in this location (by attracting agents from the other location), hence increasing the total payoff of this location.

For any point in the remaining area other than \((c^*,c^*)\), the manager of the location in which agents coordinate on \((R,R)\) in the long run always has an incentive to set a larger capacity, because doing so increases the expected population size in his location without decreasing the average payoff, and therefore increases the (expected) total payoff.

The only point left is \((c^*,c^*)\). Whether it is a NE depends on the population size \(N\) and the payoff parameters of the basic coordination game, so no general result can be given. \(\square \)

Proof of Proposition 3

When managers can also choose mobility constraints and care about the average payoff in their locations, the points in the areas stated in (a) of Theorem 2 still correspond to NE for large \(N\). As explained in the proof of Theorem 2, neither manager deviates, either because his location has already coordinated on \((P,P)\), or because changing the effective capacity cannot improve efficiency. As in Theorem 2, the points in the area \(c_1>c^*\) and \(c_2>c^*\) do not correspond to NE for large \(N\): the manager of the location in which agents do not coordinate on \((P,P)\) always has an incentive to decrease the effective capacity (by setting a lower \(c_k\)). As explained in the proof of Theorem 2, this can lead the agents in his location to coordinate on \((P,P)\), hence raising the average payoff in that location.

The only difference from Theorem 2 is that the points in the two areas \(c_1 \ge c^*, c_1 < \varPhi (c_2)\) and \(c_2 \ge c^*, c_2 < \varPhi (c_1)\) now correspond to NE. Consider an arbitrary point in the area \(c_1 \ge c^*, c_1 < \varPhi (c_2)\). If managers cannot choose mobility constraints, manager 2 has an incentive to increase \(c_2\) so that \(c_1>\varPhi (c_2)\) and the agents in location 2 coordinate on \((P,P)\) in the long run. When managers can also choose mobility constraints, however, increasing \(c_2\) may not increase \(d_2\), because the effective capacity of location 2 is then determined by both the capacity constraint of location 2 (\(c_2\)) and the mobility constraint of location 1 (\(p_1\)): \(d_2=\min \{c_2,2-p_1\}\). If \(d_2=2-p_1\), increasing \(c_2\) cannot change \(d_2\); hence, manager 2 has no incentive to deviate. Similarly, one can show that, with mobility constraints, the points in the area \(c_2 \ge c^*, c_2 < \varPhi (c_1)\) correspond to NE as well. \(\square \)
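A minimal sketch of the effective-capacity rule \(d_2=\min \{c_2,2-p_1\}\) used in this argument. The function name and the numbers are hypothetical, and we assume \(c_k\in[1,2]\) and \(p_\ell\in[0,1]\) (an inference that keeps \(d_k\in[1,2]\)). It shows why raising \(c_2\) is useless to manager 2 once the bound \(2-p_1\) binds.

```python
def effective_capacity(c_k: float, p_other: float) -> float:
    """Effective capacity d_k = min{c_k, 2 - p_l} of location k.

    c_k     -- capacity constraint of location k (assumed in [1, 2])
    p_other -- mobility constraint p_l set by the other manager (assumed in [0, 1])
    """
    return min(c_k, 2.0 - p_other)


# Hypothetical numbers: manager 1 sets p_1 = 0.5, so 2 - p_1 = 1.5 caps d_2.
p1 = 0.5
for c2 in (1.2, 1.6, 2.0):
    print(c2, effective_capacity(c2, p1))
# 1.2 1.2  <- c_2 binds, so changing c_2 still moves d_2
# 1.6 1.5  <- 2 - p_1 binds
# 2.0 1.5  <- raising c_2 further leaves d_2 unchanged
```

Once \(d_2=2-p_1\), only manager 1 (through \(p_1\)) can move \(d_2\), which is the source of the additional NE.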

Proof of Proposition 4

Consider any point \((d_1,d_2) \in [1,2]^2 \setminus (\{(1,1)\} \cup \{(d^{*},d^{*})\})\). Each manager \(k\) who cares about the total payoff of his location has an incentive to increase \(p_k\) in order to decrease the effective capacity \(d_\ell \) of the other location, because doing so either increases the expected population of location \(k\) or leads the agents in location \(k\) to coordinate on \((P,P)\) (which also increases the expected population size). This incentive disappears only when both managers choose \(p_k=1\), \(k=1,2\), which corresponds to \((1,1)\) in the \((d_1,d_2)\)-space. Again, whether the point \((d^*,d^*)\) corresponds to a NE is very sensitive to the parameters of the model, and no general result is available. \(\square \)
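The corner \((1,1)\) can be checked on a grid. This sketch uses a hypothetical helper name and assumes \(c_k\in[1,2]\) and \(p_k\in[0,1]\); it shows that \(p_1=p_2=1\) maps every capacity pair to \(d=(1,1)\), and that raising one manager's \(p_k\) shrinks only the other location's effective capacity.

```python
def effective_capacities(c1: float, c2: float, p1: float, p2: float) -> tuple:
    """Return (d_1, d_2) with d_k = min{c_k, 2 - p_l}, l != k."""
    return min(c1, 2.0 - p2), min(c2, 2.0 - p1)


grid = [1.0 + 0.25 * i for i in range(5)]  # c_k in {1.0, 1.25, ..., 2.0}

# With p_1 = p_2 = 1, no capacity choice changes the effective capacities.
corner = {effective_capacities(c1, c2, 1.0, 1.0) for c1 in grid for c2 in grid}
print(corner)  # {(1.0, 1.0)}

# Raising p_1 from 0.5 to 0.75 lowers d_2 but leaves d_1 untouched.
print(effective_capacities(2.0, 2.0, 0.5, 0.5))   # (1.5, 1.5)
print(effective_capacities(2.0, 2.0, 0.75, 0.5))  # (1.5, 1.25)
```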

Proof of Proposition 5

Consider the \(n\times n\) pure coordination game \(G\) defined in Sect. 3.3. We first identify the LRE for \(d_1<2\) and \(d_2<2\). In this case, there are \(n^2\) absorbing sets, in each of which each location coordinates on a strict NE. We then make the following observations. (i) Within a location, the population share of mutants required for a transition toward a more efficient equilibrium is smaller than \(1/2\), and that required for a transition toward a less efficient equilibrium is larger than \(1/2\). (ii) For \(a<b<\tau \), given that a location coordinates on some equilibrium \((s_\tau , s_\tau )\), it takes more mutants to reach \((s_a,s_a)\) than to reach \((s_b,s_b)\), and it takes fewer mutants to reach \((s_\tau , s_\tau )\) from \((s_a,s_a)\) than from \((s_b,s_b)\).
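Observations (i) and (ii) can be checked numerically under an explicit best-response approximation. This sketch assumes that \(G\) pays \(\pi_1<\dots<\pi_n\) on the diagonal and 0 off it, and that, for large \(N\), \(s_j\) becomes a best response in a location at \((s_i,s_i)\) once the share \(q\) of \(s_j\)-players satisfies \(q\pi_j \ge (1-q)\pi_i\), i.e. \(q \ge \pi_i/(\pi_i+\pi_j)\). This threshold rule, the payoff values, and the function name are hypothetical illustrations rather than the exact specification of Sect. 3.3; indices are 0-based.

```python
from fractions import Fraction


def mutant_share(pi, i, j):
    """Share of s_j-mutants needed to move a location from (s_i, s_i) to (s_j, s_j).

    Large-N best-response threshold pi_i / (pi_i + pi_j), assuming payoff pi[k]
    on the diagonal of G and 0 off the diagonal.
    """
    return Fraction(pi[i], pi[i] + pi[j])


pi = [1, 2, 3, 5]  # hypothetical payoffs, increasing in efficiency
n = len(pi)
half = Fraction(1, 2)

# Observation (i): upward transitions need < 1/2, downward ones > 1/2.
for i in range(n):
    for j in range(n):
        if i < j:
            assert mutant_share(pi, i, j) < half
        elif i > j:
            assert mutant_share(pi, i, j) > half

# Observation (ii): for a < b < t, leaving t is costlier toward a than toward b,
# and reaching t is cheaper from a than from b.
for a in range(n):
    for b in range(a + 1, n):
        for t in range(b + 1, n):
            assert mutant_share(pi, t, a) > mutant_share(pi, t, b)
            assert mutant_share(pi, a, t) < mutant_share(pi, b, t)

print(mutant_share(pi, 0, 3), mutant_share(pi, 3, 0))  # 1/6 5/6
```

Exact `Fraction` arithmetic is used so that the comparisons with \(1/2\) are not affected by floating-point rounding.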

We prove that \(\varOmega (s_n,s_n)\) is the LRE by contradiction. Suppose that \(\varOmega (s_\tau ,s_{\tau '})\) with \(\tau \ne n\) or \(\tau ' \ne n\) is a LRE. That is, there exists an \(\varOmega (s_\tau ,s_{\tau '})\)-tree whose cost is minimal among the trees rooted at all absorbing sets. Without loss of generality, suppose \(\tau \ne n\). Then, by (ii), this tree must contain a direct link from \(\varOmega (s_{\tau +1},s_{\tau '})\) to \(\varOmega (s_\tau ,s_{\tau '})\). Reversing the direction of this link yields an \(\varOmega (s_{\tau +1},s_{\tau '})\)-tree. By (i), the reversed link is cheaper, so the new tree has a lower cost than the \(\varOmega (s_\tau ,s_{\tau '})\)-tree, a contradiction. Hence, the LRE is \(\varOmega (s_n,s_n)\).
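The tree argument can be mirrored by brute force for a single location. This sketch is a simplification, not the paper's two-location computation: it takes as hypothetical edge resistances the direct mutant shares \(\pi_i/(\pi_i+\pi_j)\) from a large-\(N\) best-response approximation (a proxy for mutation counts), enumerates every tree rooted at each absorbing state, and reports the root of the least-cost tree, which should be the most efficient convention.

```python
from fractions import Fraction
from itertools import product


def resistance(pi, i, j):
    # Hypothetical large-N proxy: share of s_j-mutants needed to leave (s_i, s_i).
    return Fraction(pi[i], pi[i] + pi[j])


def is_tree(parent, root):
    # Every non-root node must reach the root by following parent links, without cycles.
    for v in parent:
        seen = set()
        while v != root:
            if v in seen:
                return False
            seen.add(v)
            v = parent[v]
    return True


def min_tree_cost(pi, root):
    """Least total resistance over all trees rooted at `root` (brute force, small n)."""
    n = len(pi)
    others = [v for v in range(n) if v != root]
    best = None
    for choice in product(range(n), repeat=len(others)):
        parent = dict(zip(others, choice))
        if any(v == p for v, p in parent.items()):
            continue  # no self-loops
        if not is_tree(parent, root):
            continue
        cost = sum(resistance(pi, v, p) for v, p in parent.items())
        if best is None or cost < best:
            best = cost
    return best


pi = [1, 2, 3, 5]  # hypothetical payoffs, increasing in efficiency
costs = {r: min_tree_cost(pi, r) for r in range(len(pi))}
print(min(costs, key=costs.get))  # 3, i.e. the most efficient convention s_n
```

Brute-force enumeration grows as \(n^{n-1}\), so this is only meant for very small \(n\); it makes the link-reversal logic concrete rather than replacing the proof.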

For \(d_k=2\) and \(d_\ell <2\) (\(k,\ell =1,2, k \ne \ell )\), the unperturbed learning dynamics has \(n+n(n-1)/2\) absorbing sets. There are \(n\) absorbing states, in which \(2N\) agents in location \(k\) coordinate on a strict NE, and location \(\ell \ne k\) is empty. There are \(n(n-1)/2\) absorbing states, in which location \(k\) coordinates on a less efficient equilibrium than location \(\ell \). Hence, by the same analysis as above, one can prove that the unique LRE is the state in \(\varOmega (s_n,0)\) for \(k=1\), or the state in \(\varOmega (0,s_n)\) for \(k=2\).

For \(d_1=d_2=2\), there are \(2n\) absorbing sets, in which one location has \(2N\) agents coordinating on a strict NE, and the other location is empty. Again, by (i) and (ii) and a similar analysis, it is straightforward to show that the states in \(\varOmega (s_n,0)\) and \(\varOmega (0,s_n)\) are the LRE. \(\square \)

Proof of Proposition 6

Consider the \((d_1,d_2)\)-space when the basic interaction is \(G\). For any point \((d_1,d_2)\), all the agents coordinate on \((s_n,s_n)\). The managers are assumed to care about the total payoffs of their respective locations. Hence, if the managers can only choose capacity constraints, each always has an incentive to increase \(c_k\) in order to accommodate more agents. As a result, they choose \(c_1=c_2=2\). Under this strategy profile, the expected total payoff of each location is positive. If manager \(1\) deviates from this profile to a lower \(c_1\), the LRE becomes \(\varOmega (0,s_n)\), and the total payoff in location 1 drops to 0. Similarly, manager 2 has no incentive to deviate. Hence, \(c_1=c_2=2\) is the unique NE in this case.

If both managers can also choose mobility constraints, each has an incentive to increase both his mobility and his capacity constraints, in order to raise the lower and the upper bounds of the population size in his location. Since \(d_k = \min \{c_k,2-p_\ell \}\), what actually determines the effective capacity \(d_k\) of location \(k\) is the mobility constraint \(p_\ell \) of the other location. In the end, both managers choose \(p_1=p_2=1\). We show that any profile with \(p_k=1\) and \(c_k \in [1,2]\) for both \(k=1,2\) is a NE. First, manager \(k\) has no incentive to change \(c_k\), because no agents in the other location can move to location \(k\). Second, manager \(k\) has no incentive to lower \(p_k\) either, because doing so may let the agents in his location move out and hence decrease the (expected) population size in location \(k\). \(\square \)

Cite this article

Shi, F. Long-run technology choice with endogenous local capacity. Econ Theory 59, 377–399 (2015). https://doi.org/10.1007/s00199-014-0838-7