Abstract

The rapid growth of Internet technology in recent years has led to a surge in the number of users and the amount of information generated. This has contributed substantially to the popularity of recommendation systems (RS), which provide personalized recommendations to users based on their interests. An RS assists the user in the decision-making process by suggesting a suitable product from various alternatives. The collaborative filtering (CF) technique is the most prevalent RS approach because of its high accuracy in predicting users' interests. The efficacy of this technique depends mainly on the similarity calculation, which is determined by a similarity measure. However, the traditional and previously developed similarity measures in CF techniques cannot adequately reveal changes in users' interests; therefore, an efficient measure that takes time into account is proposed in this paper. The proposed method and the existing approaches are compared on the MovieLens-100k dataset, showing that the proposed method is more efficient than the comparable methods. Moreover, most CF approaches focus only on users' historical preferences, but in real life, people's preferences change over time. Therefore, a time-based recommendation system using the proposed method is also developed in this paper. We implemented various time decay functions (exponential, convex, linear, power, etc.) at various levels of the recommendation process, namely the similarity computation, rating matrix, and prediction levels. Experimental results on three real datasets (MovieLens-100k, Epinions, and Amazon Magazine Subscription) suggest that the power decay function outperforms the other techniques when applied at the rating matrix level.


1 Introduction

The Internet has become an integral part of people's lives since its inception in the twentieth century. The advent of Web 2.0, social media, and online social networking has caused a continuous data explosion. According to one report, 2.5 quintillion bytes of data were generated per day in 2020, and by 2025 this figure is expected to rise to 463 exabytes (Bulau 2021). As a result of this data explosion, users' capacity to absorb data has reached its limit, and the issue of adverse selection has arisen. Finding the right information in this data is like searching for a needle in a haystack. Here, the recommendation system (RS) (Jain et al. 2020) plays an important role. It is a software technology that serves as an information filtering tool, recommending the most favourable items to users based on their previous personal preferences. The popularity of recommendation systems is continuously rising, and they are deployed in many domains, such as music, movie, news, joke, health care, and article recommendation (Konstan et al. 1997; Jain et al. 2013; Anandhan et al. 2018). On e-commerce platforms (Jin et al. 2020), an RS helps users by suggesting items of interest to them. The Long Tail phenomenon (Suryakant and Mahara 2016) is another prominent reason for the ever-increasing popularity of RS. According to this phenomenon, users can find popular products quickly, but products in the Long Tail are more difficult and time-consuming to find. An RS solves this problem by recommending all related items, even if they are not very popular.

Generally, there are three kinds of recommendation systems: (1) Content-Based (CB), (2) Collaborative Filtering (CF), and (3) Hybrid recommendation. A content-based strategy (Wang et al. 2017) requires sufficient information about users and items to build profiles and provide recommendations. This method recommends the best-matched item after examining the previously rated items. The CB technique can adapt its recommendations very quickly to changes in the user's preferences, but it needs enough information about users and items to create profiles. Unlike the CB technique, the CF technique (Schafer et al. 2007; Jain and Mahara 2019) considers only the user-item ratings. It forecasts the utility of items for the target user based on the items previously rated by other users. The main benefit of CF is that it requires less information about users and items to construct profiles and is more accurate than content-based techniques. It is divided into Model-based (Isinkaye et al. 2015) and Memory-based (Ghazarian and Nematbakhsh 2015) methods. In the model-based approach, partial ratings are used to train a model, and once the model is trained, it is used to generate quick predictions. The memory-based method predicts the missing ratings based on the evaluations from other users/items. In this method, it is important to select a suitable similarity measure, as it helps in finding similar users/items. Many experimental results show that the memory-based method has practical advantages such as simplicity, efficiency, and accuracy. Memory-based methods are classified into User-based (Tan and He 2017) and Item-based (Kant and Mahara 2018) techniques. When the similarity is calculated among users, the method is called user-based CF; otherwise, it is known as item-based CF. After calculating the similarity among users/items, neighbors are determined for the target users to predict their unknown ratings. The hybrid methods (Ghazanfar and Prugel-Bennett 2010; Wang et al. 2017) combine collaborative and content-based approaches.

Most similarity measures in the memory-based approach suffer from the data sparsity problem (Yu and Huang 2017; Kant and Mahara 2018), which occurs when the ratio of the ratings to be predicted to the ratings already available is very high, for instance, when 85% of the ratings must be predicted from the 15% that are available. This problem is aggravated by the continuous increase in users and items. A new similarity measure, iGJ, is proposed in this paper to overcome it. We compared the new method's performance with that of existing methods, and the experimental results show that our proposed method is superior.

In addition, time-aware recommendation systems have been widely researched in recent years, and they have been found to be more successful than standard recommendation systems that ignore time (He and Wu 2009; Campos et al. 2014). Exploiting the context (e.g., location, time, weather, device, and mood) in which users express their preferences has been demonstrated to be very effective in increasing the performance of the recommendation system (Adomavicius et al. 2011). Time-aware recommendation systems (Ding and Li 2005; Koren 2009) build on the idea that users' attraction to items in online systems diminishes over time. This means that users' most recent ratings reflect their current interest in those items. Although there has been a lot of research in this area, only a few studies have described how time-based functions could help increase the recommendation system's performance (Larrain et al. 2015). Most research does not precisely describe which time functions should be used and at which stage they should be integrated. This research aims to address this gap by applying various time decay functions at three levels of the recommendation process: the rating matrix level, the similarity computation level, and the prediction level. The main contributions of this research are as follows:

1. A novel CF-based RS algorithm is proposed to tackle the sparsity issue. The algorithm is validated by applying it to real-world data sets. The results confirm that the method is effective and scalable and outperforms existing CF-based methods.

2. The concept of time was not considered in the traditional CF algorithms, but it is important as the user's preferences change with time. Therefore, various time decay functions are integrated at three levels in the recommendation process to incorporate the time aspect. The experimental results on three real datasets indicate that the proposed time-based method is superior to all other methods.

The outline of the paper is as follows. The related work on CF and time context functions is presented in Sect. 2. The proposed algorithm is described in Sect. 3. The experiments and performance analysis are presented in Sect. 4. Finally, we conclude the paper in Sect. 5.

2 Related work

Collaborative Filtering (CF) (Al-bashiri et al. 2017) is a popular recommendation approach that has been used on a variety of e-commerce platforms. It suggests potential items for target users by automatically learning and analysing their past preferences. In a recommendation system, let \(U = \{U_1, U_2, \ldots, U_M\}\) and \(I = \{I_1, I_2, \ldots, I_N\}\) be the sets of users and items, respectively; all user rating data are regarded as a user–item rating matrix \([r_{ui}]_{M \times N}\). In this matrix, \(M\) and \(N\) represent the number of users and items, respectively, and \(r_{ui}\) is the rating given by the \(u\)th user to the \(i\)th item. This rating matrix is generally sparse, which means that a substantial number of user ratings are unknown. As a result, a significant research focus of memory-based CF is how to construct an effective similarity measure that can deal with the data sparsity problem. Traditional and recent work on similarity measures is discussed in Sect. 2.1. Many time decay functions have been used to analyze system performance, and a brief discussion of them is given in Sect. 2.2.
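The rating matrix and its sparsity can be illustrated with a minimal sketch. The toy triples, the helper names `build_rating_matrix` and `sparsity`, and the convention that 0 marks an unknown rating are assumptions made for illustration only.

```python
import numpy as np

def build_rating_matrix(triples, n_users, n_items):
    """Build an M x N user-item matrix; 0 marks an unknown rating (assumed convention)."""
    R = np.zeros((n_users, n_items))
    for u, i, r in triples:
        R[u, i] = r
    return R

def sparsity(R):
    """Fraction of user-item pairs that carry no rating."""
    return 1.0 - np.count_nonzero(R) / R.size

# Hypothetical toy data: (user index, item index, rating on a 1-5 scale)
triples = [(0, 0, 5), (0, 2, 1), (1, 0, 4), (1, 2, 3), (2, 1, 3)]
R = build_rating_matrix(triples, n_users=3, n_items=4)
print(R)
print(sparsity(R))
```

Here 5 of the 12 cells are filled, so 7/12 of the ratings would have to be predicted; real datasets are typically far sparser.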

2.1 Collaborative filtering‑based recommendation

Collaborative Filtering is one of the most popular RS methods. It takes the users' preferences for items stored in a database (the user-item matrix) and makes recommendations based on the similarities calculated by a similarity measure. A similarity measure is a statistical measure that shows how closely two users or items are related. The CF technique is built on the premise that users who shared interests in the past will also share similar tastes in the future. Data sparsity and cold-start are the two main challenges faced by any CF-based RS (Patra et al. 2014). To alleviate them, many similarity measures, e.g., Cosine, PCC, TMJ, Rating_Jaccard, RJaccard, RJMSD, IPWR_Variance, and Rating_Jaccard_RPB, have been introduced in recent years. Table 1 highlights some of the traditional similarity measures along with newly developed methods.

Cosine (COS) (Su and Khoshgoftaar 2009) is a conventional similarity measure that computes similarity as the cosine of the angle formed between user rating vectors. The main drawbacks of the cosine measure are: (1) it assigns a high degree of similarity to two users regardless of the differences in their ratings; (2) it does not utilize all ratings provided by the users; and (3) it cannot find the relationship between users if the number of common items is too small. Adjusted Cosine (ACOS) (Wang et al. 2017) was proposed to overcome these drawbacks, but it also fails to calculate an effective similarity when users' cardinality is small. Another traditional similarity measure is the Pearson Correlation Coefficient (PCC) (Senior 2017), which determines the similarity by considering only the co-rated items. Its efficiency is impaired when the number of co-rated items is small. Like the COS measure, PCC does not consider the global preference of the users. Some variants of PCC (Al-bashiri et al. 2017), such as CPCC and SPCC, have been suggested to overcome these drawbacks. All the PCC and COS variants suffer from the cold-start problem, the sparsity problem, or both. The Jaccard coefficient (Sun et al. 2017) is the ratio of common ratings to all existing ratings. This technique considers only the common ratings. In contrast, the Mean Squared Difference (MSD) measure (Sun et al. 2017) considers only absolute ratings and ignores the proportion of common ratings. In the Jaccard-Mean Squared Difference (JMSD) measure (Wang et al. 2017), the Jaccard coefficient is combined with the MSD measure. It suffers from the local information and rating utilization problems. Based on the Jaccard, PSS, and URP coefficients, a New Heuristic Similarity Measure (NHSM) was presented (Al-bashiri et al. 2017). It improves the system performance by eliminating the possibility of low similarity values between users who gave the same ratings. This measure also has drawbacks: (1) it fails when many sparse entries are present in the dataset; (2) it does not utilize all users' ratings; and (3) the similarity computation formula used in NHSM is complex.

Among the traditional similarity measures, Jaccard is one of the most popular and frequently used, as it improves the system performance and gives weight to the common ratings. Several researchers have recently used this measure to build new similarity measures. For instance, Sun et al. (2017) integrated the Jaccard and triangle similarities and proposed the Triangle Multiplying Jaccard (TMJ) similarity measure. It considers both the length and the angle of the rating vectors between users. Still, it falls short because it does not consider the user's global preference. Based on the Jaccard measure, Bag et al. (2019) proposed two similarity measures: Relevant Jaccard (RJaccard) and Relevant Jaccard Mean Squared Difference (RJMSD). Their drawbacks are: (1) they compute inaccurate similarity when both users rated the items with equal ratings; and (2) they consider only the frequency of co-rated and non-co-rated items and ignore the intensity in the similarity computation. Apart from this, Ayub et al. (2019) proposed IPWR_Variance and IPWR_SD, which integrate an improved PCC with rating preference behavior (RPB) to calculate the similarity. Ayub et al. (2020) also proposed two further models, Rating_Jaccard and Rating_Jaccard_RPB. These models compute inaccurate similarity when the users do not have equally rated items. To overcome the sparsity issue of the CF technique, a new method, improvised Gower's Jaccard (iGJ), is proposed in this paper. The next section focuses on the time decay functions used in the CF technique.

2.2 Time decay functions in collaborative filtering‑based recommendation

Classic recommendation methods use the rating information to calculate the similarity, whereas time information is not considered. Therefore, the traditional recommendation algorithms may not generate the appropriate nearest neighbor set for the target user, and the recommendation outcomes may have low precision. To overcome this, a time-weighted recommendation system is presented. Since the user's interests change over time, the same item may receive different ratings at different periods. Therefore, several researchers have used a time function in their CF-based methods. In applying a time decay function, two things are crucial: (1) the selection of the appropriate time decay function and (2) the level at which the time decay function is implemented. This section discusses previous studies on time decay functions and the levels at which they can be applied. The list of popular time decay functions is given in Table 2.

The first time-based recommendation algorithm was developed by Zimdars et al. (2013), who reframed the recommendation issue as a time series prediction problem. Since then, much of the subsequent research has centered on time-based recommendations. The most common time decay functions include Exponential, Power, Logistic, Convex, Concave, and Linear (Ding and Li 2005; Larrain et al. 2015; Xu et al. 2019).
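To make the idea of a decay function concrete, the following sketch shows commonly used parameterizations of four of these families. These forms and every parameter name and default (`lam`, `alpha`, `horizon`, `k`, `midpoint`) are illustrative assumptions; they are not necessarily the definitions listed in Table 2, and the concave and convex forms are omitted because their exact shape varies between papers.

```python
import math

def exponential_decay(dt, lam=0.01):
    """exp(-lam * dt): the weight halves every ln(2)/lam time units."""
    return math.exp(-lam * dt)

def power_decay(dt, alpha=0.5):
    """(1 + dt)^(-alpha): heavy-tailed, equal to 1 at dt = 0."""
    return (1.0 + dt) ** (-alpha)

def linear_decay(dt, horizon=365.0):
    """1 - dt/horizon, clipped at 0 once dt exceeds the horizon."""
    return max(0.0, 1.0 - dt / horizon)

def logistic_decay(dt, k=0.05, midpoint=180.0):
    """1 / (1 + exp(k * (dt - midpoint))): S-shaped drop around the midpoint."""
    return 1.0 / (1.0 + math.exp(k * (dt - midpoint)))

# dt is the age of a rating (here assumed to be measured in days).
for dt in (0, 30, 365):
    print(dt, exponential_decay(dt), power_decay(dt),
          linear_decay(dt), logistic_decay(dt))
```

Each function maps the age of a rating to a weight that does not increase with age, so recent ratings count at least as much as older ones.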

Since the taste of users changes over time and old data becomes obsolete, the relevance of time cannot be ignored in the accuracy of prediction algorithms (Ding and Li 2005). Lee et al. (2008) developed a pseudo-rating CF approach based on implicit feedback data. The authors considered the user's purchase time and the item's rating time to find the weight decay and improve suggestion accuracy. Gong and Cheng (2008) implemented a technique for analysing the user's interest change with the CF model, in which a predetermined weight is used to decay all users' ratings based on item rating time. Xia et al. (2010) proposed a dynamic item-based recommendation system using concave, convex, and linear time decay functions. Zheng and Li (2011) used a power decay function to improve the performance of a tag-based recommender after filtering their data based on the recency of tagging interactions. Wu et al. (2012) integrated user-based and item-based collaborative filtering with the power decay function for social tagging label prediction in a digital library. Li et al. (2013) considered the time component and proposed a time weight iteration model based on the principle of memory. Huang and Song (2014) enhanced a tag-based recommender with a two-step filtering method that used a linear decay function to simulate the recency effect of interactions. Chen et al. (2021) expanded the concept of human brain memory to describe the degree of a user's interests (i.e., immediate, short-term, or long-term) and presented the Dynamic Decay Collaborative Filtering (DDCF) method, which modifies the decay function depending on users' actions.

These temporal decay functions can be used at three separate stages of the recommendation process: Similarity Computation (SC), Rating Matrix (RM), and Prediction (P). For instance, Ma et al. (2016) applied the exponential function at the prediction level to predict time-weighted ratings, using a hierarchical structure between items to improve similarity. Xu et al. (2019) applied the exponential function with improved ACOS functions at the similarity computation level. These time-dependent recommendation algorithms generally add time factors in the training phase. Apart from these, Zhang et al. (2019) presented a time-weighted similar-user selection technique that employs the logistic function to weight the scores of users and items. In this technique, the evaluation time of each historical score is first recorded, and the logistic function is then used to calculate the time weighting coefficient from that time. In addition, some researchers use time-relative models to improve the quality of recommendations. For instance, Wang and Zhang (2013) proposed an opportunity model to estimate the probability of purchasing a product at a specific time.

To the best of our knowledge, most of the literature uses only a single decay function to evaluate the changes in user preference. Because a single decay function may not be sufficient to reflect these changes, we studied multiple decay functions in this paper. In addition, the literature describes the implementation of the time decay function at only one level of the recommendation process. In this paper, we applied multiple time decay functions at several levels of the recommendation process, i.e., the similarity computation, rating matrix, and prediction levels, and determined experimentally the level at which the results are most accurate. Table 3 lists all the notations used in the paper.

3 Motivation for the new similarity measure

In the CF approach, researchers have proposed many similarity measures. This section analyses the major shortcomings of existing similarity measures, stated in Table 1.

The cosine measure computes a high correlation between users even when their ratings differ significantly. For instance, it computes the maximum similarity between user 1 (0,0,1,0,0,0) and user 2 (0,0,5,0,0,0). Their rating preferences, however, indicate that this similarity is incorrect, as both have rated only one item (I3) and rated it very differently. Apart from this, the PCC measure calculates zero similarity between user 3 (1,0,1,2,0,0) and user 4 (5,0,1,0,0,0), even though they give similar ratings to certain items. The MSD measure ignores the proportion of common ratings. The JMSD measure computes a lower similarity between user 5 (4,0,3,0,0,0) and user 6 (4,3,3,4,4,3) than between user 4 (5,0,1,0,0,0) and user 5 (4,0,3,0,0,0). This is an inaccurate similarity calculation, as users 5 and 6 have more similarly rated items than users 4 and 5. The drawback of the NHSM measure is that it has a complex formula for similarity computation, and it computes zero similarity between user 7 (0,1,0,0,0,0) and user 8 (0,3,0,5,5,3). Similarly, TMJ computes zero similarity between user 5 (4,0,3,0,0,0) and user 6 (4,3,3,4,4,3). RJaccard and RJMSD both compute inaccurate similarity when both users rated the items with equal ratings. They consider only the frequency of co-rated and un-co-rated items and ignore the intensity in the similarity computation. RJaccard and RJMSD calculate the same similarity for users 3 (1,0,1,2,2,0) and 6 (4,3,3,4,4,3) as for users 6 (4,3,3,4,4,3) and 8 (0,3,0,5,5,3), while their rating preferences indicate that the similarity between users 6 and 8 should be higher. In the same way, Rating_Jaccard and Rating_Jaccard_RPB compute inaccurate similarity when the users do not have equally rated items. For example, for user 7 (0,1,0,0,0,0) and user 8 (0,3,0,5,5,3), Rating_Jaccard and Rating_Jaccard_RPB compute zero similarity. Furthermore, IPWR_Variance and IPWR_SD ignore the user's global preference.
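The first two cases above can be checked numerically. The sketch below assumes that 0 marks an unrated item, that cosine is taken over the full rating vectors, and that PCC is computed over co-rated items using each user's mean over all of that user's rated items; the function names are illustrative, and other PCC variants exist.

```python
import numpy as np

def cosine_sim(a, b):
    """Cosine of the angle between two full rating vectors (0 = unrated)."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def pcc_sim(a, b):
    """Pearson correlation over co-rated items, centred on each user's mean
    over all items that user rated; returns 0.0 when it is undefined."""
    a, b = np.asarray(a, float), np.asarray(b, float)
    co = (a > 0) & (b > 0)
    if not co.any():
        return 0.0
    da = a[co] - a[a > 0].mean()
    db = b[co] - b[b > 0].mean()
    denom = np.sqrt((da ** 2).sum() * (db ** 2).sum())
    return float((da * db).sum() / denom) if denom > 0 else 0.0

user1, user2 = [0, 0, 1, 0, 0, 0], [0, 0, 5, 0, 0, 0]
user3, user4 = [1, 0, 1, 2, 0, 0], [5, 0, 1, 0, 0, 0]

print(cosine_sim(user1, user2))  # maximum similarity despite ratings 1 vs 5
print(pcc_sim(user3, user4))     # zero: the centred co-ratings cancel out
```

With means taken over the co-rated items only, user 3's co-ratings (1, 1) have zero variance, so the correlation is undefined and is conventionally set to zero as well.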

To overcome the drawbacks of the existing measures, a new similarity measure is proposed in the next section. In addition, these measures use historical ratings when computing similarity, although user preferences change over time; therefore, a time-based recommendation system is also developed in this paper using the proposed method together with time functions.

4 Proposed similarity method

The traditional CF-based RS algorithms do not account for the fact that the user's interests change with time. Considering time is therefore crucial for the performance of the recommendation system. With this in mind, this research evaluates various time decay functions by applying them at various stages of the recommendation process using a novel similarity measure, iGJ.

4.1 Improvised Gower Jaccard (iGJ) similarity coefficient

In 1971, J.C. Gower introduced the most common proximity measure for mixed data types, known as Gower's coefficient (Podani 1999). It can work on heterogeneous data such as binary, categorical, and ordinal data and can be applied to both quantitative and qualitative data. It also has the advantage of working effectively when some ratings in the data matrix are missing (Fontecha et al. 2014). Podani (1999) developed an enhanced version of Gower's coefficient, which assessed the similarity between ordinal characters. ben Ali and Massmoudi (2013) presented a study on Gower's coefficient and k-means clustering. Fontecha et al. (2014) used Gower's coefficient to create a novel mobile service system to increase the accuracy of frailty assessments in an elderly population. Gower's coefficient is used to calculate similarity in the following way:
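In its general form, as commonly stated in the literature, Gower's coefficient between users \(u_a\) and \(u_b\) is a weighted average of per-item scores. The expression below reuses the symbols \(W\) and \(D\) from this section and the item count \(N\) from Sect. 2; it is a sketch of the standard definition and may differ in detail from the exact formulation used in this paper.

\[
S_{u_a u_b} = \frac{\sum_{k=1}^{N} W_{u_a u_b k}\, D_{u_a u_b k}}{\sum_{k=1}^{N} W_{u_a u_b k}}
\]

Under this reading, \(W_{u_a u_b k}\) is 1 when both users have rated item \(k\) and 0 otherwise, and \(D_{u_a u_b k} \in [0, 1]\) is the similarity contributed by item \(k\).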

Equation 1 yields a similarity value between 0 and 1, where zero indicates the lowest similarity and one the highest. A similarity of zero should arise only when no co-rated items exist between two users, and the value cannot exceed one. For each user item, the values of \({\mathrm{W}}_{{\mathrm{u}}_{\mathrm{a}}{\mathrm{u}}_{\mathrm{b}}\mathrm{k}}\) and \({\mathrm{D}}_{{\mathrm{u}}_{\mathrm{a}}{\mathrm{u}}_{\mathrm{b}}\mathrm{k}}\) for the various categories of data variables may be determined as follows:

For Categorical Variables:

For Binary Variables:

For Ordinal Variables:

Gower's coefficient was applied to the user-item rating matrix to calculate the similarity among users, which helps determine the neighbours and generate the prediction. We observed that Gower's coefficient computes an incorrect similarity in some instances during the similarity calculation phase. This is the case when the numerator and denominator become equal in Eq. 2. For example, consider two users, user-1 (5,3) and user-2 (2,1); Gower's coefficient calculates a similarity of 0 between these users, which is incorrect. To address this, we suggested an improvised Gower (iG) coefficient in Jain et al. (2021). The following formula is used to compute the similarity using the improvised Gower's coefficient:

where,

The improvised Gower's coefficient computes a non-zero similarity between users who have rated at least one common item.

To give weight to the common items, the Jaccard measure is combined with the improvised Gower's coefficient. The Jaccard coefficient is defined as the ratio of the intersection to the union of sample sets. It considers only the number of common ratings between two users when computing similarity. The similarity using the Jaccard coefficient is computed as follows:
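As an illustration of the Jaccard coefficient on rating vectors, the following sketch treats each user as the set of items they rated, assuming that 0 marks an unrated item; the function name `jaccard_sim` is hypothetical. The user vectors are taken from Sect. 3.

```python
def jaccard_sim(a, b):
    """|items rated by both| / |items rated by either| (0 = unrated)."""
    rated_a = {k for k, r in enumerate(a) if r > 0}
    rated_b = {k for k, r in enumerate(b) if r > 0}
    union = rated_a | rated_b
    return len(rated_a & rated_b) / len(union) if union else 0.0

user5 = [4, 0, 3, 0, 0, 0]
user6 = [4, 3, 3, 4, 4, 3]
user7 = [0, 1, 0, 0, 0, 0]
user8 = [0, 3, 0, 5, 5, 3]

print(jaccard_sim(user5, user6))  # 2 common items out of 6 rated overall
print(jaccard_sim(user7, user8))  # 1 common item out of 4 rated overall
```

Because only the sets of rated items matter, the rating values themselves have no effect on this coefficient, which is why it is combined with a value-sensitive measure.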

Thus, the proposed similarity measure, improvised Gower-Jaccard (iGJ), is the combination of the improvised Gower's and Jaccard similarity measures. The main reason for selecting Gower's coefficient is that it works well even when some entries are missing in the data matrix. Moreover, most of the ratings are absent in the user-item rating matrix, which requires the incorporation of all the common ratings in the similarity computation. For this, Jaccard is the most suitable measure. The similarity computation formula of the proposed improvised Gower's Jaccard (iGJ) similarity measure is given in Eq. 5.

The proposed measure iGJ considers all available rating data while calculating similarity, thereby reducing the sparsity problem.

4.2 Theoretical performance evaluation of iGJ measure

This section shows that the proposed iGJ overcomes the shortcomings of the existing methods described in Sect. 3.

As discussed in Sect. 3, the cosine measure computes the maximum similarity between users when they have only one co-rated item. iGJ overcomes this flaw and computes a similarity of 0.125 rather than the maximum in this case. Additionally, the proposed iGJ method computes a non-zero similarity in the cases where some measures discussed in Sect. 3 compute zero. For example, iGJ calculates a non-zero similarity between users 3 and 4 (= 0.125), between users 5 and 6 (= 0.333), and between users 7 and 8 (= 0.062). iGJ also overcomes the drawback of the MSD measure by considering both the common and the absolute ratings. It also considers the user's global preference. It computes a higher similarity between users 5 and 6 (= 0.333) than between users 4 and 5 (= 0.219) and thus overcomes the flaw of the JMSD measure. It also overcomes the weakness of the RJaccard and RJMSD measures by computing a higher similarity between users 6 and 8 (= 0.333) than between users 3 and 6 (= 0.194). Rating_Jaccard and Rating_Jaccard_RPB compute inaccurate similarity when the users do not have equally rated items; iGJ computes a similarity of 0.062 in this case. On the basis of this discussion, we can state that our proposed method performs better than the existing approaches. Section 5 evaluates the performance of iGJ on a real-world dataset.

In e-commerce, time is also an important factor because individuals' choices change frequently over time. So, to improve system efficiency and give importance to recent data, six time decay functions are used with our proposed measure in the collaborative filtering technique. These time decay functions can be applied in three ways, which are discussed in the next section.

4.3 iGJ coefficient with time decay functions

In CF, a time decay function can be applied at the following three places in the recommendation process: (1) construction of the rating matrix, (2) similarity computation, and (3) prediction of ratings. These three steps are essential for making recommendations with the CF technique; therefore, the time decay functions (TDF) listed in Table 2 are applied at these three levels to determine their accuracy. Each TDF has its own tuning parameters, whose values are obtained experimentally. Since similarity computation is crucial in the recommendation process, we first applied all the time decay functions at the similarity computation level to obtain the best values of the tuning parameters. At the other two levels, i.e., the rating matrix and prediction levels, we used these best values of the tuning parameters. Each level is described in detail below:

4.3.1 Time decay function at similarity computation level (TDF@SCL)

In this approach, iGJ with various time decay functions is applied at the similarity computation level of the recommendation system. To obtain the similarity, the actual ratings present in the dataset are used, and then the iGJ similarity measure is applied together with the various TDFs given in Table 2. The similarity is calculated as follows:

where TDF(Δt) is a time decay function, which can be exponential, power, logistic, linear, concave, or convex. After calculating the similarity using Eq. 6, the nearest neighbors of the target users are determined to generate the prediction using the formula given below.
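A common form of neighbour-based prediction is the mean-centred weighted average over the most similar users who rated the target item. The sketch below assumes that form, which may differ from the prediction formula used in this paper; the function name `predict_rating`, the toy matrix, and the similarity values are hypothetical, and any similarity measure could supply `sims`.

```python
import numpy as np

def predict_rating(R, sims, target, item, k=2):
    """Mean-centred weighted average over the k most similar users who rated `item`.

    R    : user-item matrix, 0 = unrated (assumed convention)
    sims : similarity of every user to the target user
    """
    rated = R > 0
    target_mean = R[target][rated[target]].mean()
    # Candidate neighbours: other users who rated the item, most similar first.
    cands = [v for v in np.argsort(-sims) if v != target and rated[v, item]][:k]
    num = sum(sims[v] * (R[v, item] - R[v][rated[v]].mean()) for v in cands)
    den = sum(abs(sims[v]) for v in cands)
    return float(target_mean + num / den) if den > 0 else float(target_mean)

# Hypothetical toy data: 3 users, 4 items; user 0 has not rated item 3.
R = np.array([[4, 0, 3, 0],
              [4, 3, 3, 4],
              [5, 0, 1, 2]], dtype=float)
sims = np.array([1.0, 0.5, 0.25])  # assumed similarities to user 0
print(predict_rating(R, sims, target=0, item=3))
```

Centring on each user's mean compensates for users who rate systematically high or low; if no neighbour has rated the item, the sketch falls back to the target user's own mean.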

4.3.2 Time decay function at rating matrix level (TDF@RML)

In this approach, we first convert the actual ratings of the dataset into time-weighted ratings using the TDF and then apply the iGJ similarity measure to them to obtain the similarity.

In the time-based RS, the ratings that are closer to the current time receive a larger weighting coefficient. After calculating the similarity and determining the neighbors, a prediction is made for each target user using Eq. 7.
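The rating-matrix-level idea can be sketched as follows. The sketch assumes that each known rating is simply multiplied by its decay weight and uses an illustrative power decay \((1 + \Delta t)^{-\alpha}\); the function names, the value of `alpha`, the time unit, and the toy data are all assumptions rather than this paper's exact formulation.

```python
import numpy as np

def power_decay(dt, alpha=0.5):
    """Illustrative power decay; alpha is a hypothetical tuning parameter."""
    return (1.0 + dt) ** (-alpha)

def time_weighted_matrix(R, T, t_now, decay=power_decay):
    """Scale each known rating by decay(t_now - rating time); unknown ratings stay 0."""
    known = R > 0
    dt = np.where(known, t_now - T, 0.0)
    return np.where(known, R * decay(dt), 0.0)

# Hypothetical toy data: ratings R and their timestamps T (same time unit as t_now).
R = np.array([[5.0, 0.0],
              [4.0, 3.0]])
T = np.array([[0.0, 0.0],
              [3.0, 8.0]])
W = time_weighted_matrix(R, T, t_now=8.0)
print(W)
```

The rating given at the current time keeps its full value, while older ratings shrink toward zero; the similarity measure is then applied to `W` instead of `R`.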

4.3.3 Time decay function at prediction level (TDF@PL)

In a CF-based RS, the prediction step is performed after calculating the similarity and determining the neighbors. In this approach, we first calculate the similarity using our proposed method (Eq. 5) and then determine each target user's neighbor set. After obtaining the neighbors, we incorporate the time decay function into the prediction formula to get the predicted ratings. The prediction formula (Jain et al. 2020) for this approach is given in Eq. 9.

In the next section, the efficiency of iGJ is demonstrated through a comparison with traditional and recently proposed methods. In addition, the performance of each time decay function is examined at the various levels of the recommendation process. Figure 1 shows the experimental model of the traditional and time-based collaborative filtering methods, and the corresponding algorithm is depicted below.

5 Experimental results

The proposed algorithm and the existing algorithms were implemented in Python using the Spyder 3.3.3 scientific development environment. The experiments were run on a machine with an Intel(R) Xeon(R) CPU E5-2680 v2 @ 2.80 GHz, 64 GB RAM, and the Microsoft Windows 10 Education operating system. The experimental setup first describes the datasets used, followed by the prediction algorithm. The evaluation metrics are described in the following subsection, and the proposed method's efficiency is examined in the last part.

5.1 Datasets

The MovieLens-100k, Epinions, and Amazon Magazine Subscription datasets are used for the performance evaluation of iGJ. Table 4 presents the details of the datasets.

MovieLens-100k (Tan and He 2017): The GroupLens Research Group at the University of Minnesota created the MovieLens-100k dataset. In the dataset, every user rated at least 20 movies on a scale of 1–5, with one being worst and five being best. This dataset covers a total of 8 months of data.

Epinions (Manochandar and Punniyamoorthy 2020): Epinions is a system for rating goods and services from various sources. The ratings in the dataset are on a scale of 1–5. The sparsity level of this dataset is very high, and it covers almost 12 years of data.

Amazon Magazine Subscription (Ni et al. 2019): The ratings in the dataset are in the range of 1–5, with one being worst and five being best. This dataset covers more than 22 years of data.

5.2 Evaluation metrics

Determining the accuracy of a recommendation algorithm is challenging because algorithms behave differently depending on the input dataset. Evaluation metrics (Nguyen et al. 2021) used to test such algorithms fall mainly into two classes: predictive accuracy metrics (MAE, RMSE) and classification accuracy metrics (precision, recall, F1-measure, and accuracy). Predictive accuracy metrics are applied in this work. MAE and RMSE compare the predicted ratings with the actual ratings, and lower values indicate better performance. In their standard form, with $N$ the number of test ratings, $p_i$ the predicted rating, and $r_i$ the actual rating, the two metrics are:

$$\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \left| p_i - r_i \right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( p_i - r_i \right)^2}$$
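
For illustration, the two predictive accuracy metrics can be computed as in the short sketch below. It uses the standard definitions of MAE and RMSE; the function names and the toy rating lists are assumptions made for this example.

```python
import math


def mae(predicted, actual):
    """Mean absolute error between predicted and actual ratings."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(actual)


def rmse(predicted, actual):
    """Root mean squared error between predicted and actual ratings."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - a) ** 2 for p, a in zip(predicted, actual))
    return math.sqrt(squared / len(actual))


# Toy example: three test ratings with absolute errors of 1, 0, and 2.
predicted = [3.0, 4.0, 5.0]
actual = [4.0, 4.0, 3.0]
toy_mae = mae(predicted, actual)    # (1 + 0 + 2) / 3
toy_rmse = rmse(predicted, actual)  # sqrt((1 + 0 + 4) / 3)
```

RMSE squares each error before averaging, so it penalizes large individual errors more heavily than MAE does.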

5.3 Experimental results

This section presents the experimental results in two parts. The first part compares the proposed similarity measure iGJ with several existing measures. The second part shows the results of applying the various time decay functions with iGJ at the various levels of the recommendation process, i.e., the rating matrix, similarity computation, and prediction levels. Because the number of neighbors is an important parameter in evaluating a CF-based RS, both experiments assess the results at different neighborhood sizes, i.e., 20, 60, 100, 150, and 200. The MAE and RMSE metrics are used for the evaluation.
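
Varying the neighborhood size amounts to keeping only the k most similar users before prediction. The snippet below is a minimal sketch of that selection; the helper name `top_k_neighbors` and the toy similarity values are hypothetical, and any similarity measure (iGJ or otherwise) could supply the scores.

```python
import heapq


def top_k_neighbors(similarities, k):
    """Return the ids of the k most similar users, most similar first.

    similarities: dict mapping neighbor id -> similarity to the target user.
    """
    return heapq.nlargest(k, similarities, key=similarities.get)


# Toy similarity scores for one target user (illustrative values only).
similarities = {"u1": 0.9, "u2": 0.1, "u3": 0.5, "u4": 0.7}
for k in (1, 2, 3):
    neighbors = top_k_neighbors(similarities, k)
```

In the experiments, the same selection would be repeated for k = 20, 60, 100, 150, and 200, with MAE and RMSE recorded at each size.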

5.3.1 Performance evaluation of iGJ

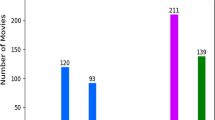

This experiment compares the predictive accuracy of iGJ with that of existing similarity measures, i.e., PCC, Cosine, Jaccard, NHSM, Rating_Jaccard, RJaccard, etc. The widely used ML-100k dataset is employed for this purpose. The MAE and RMSE results on this dataset are displayed in Figs. 2 and 3, respectively.

Both figures show that the proposed method iGJ yields lower MAE and RMSE values than all other methods. After iGJ, the NHSM and Jaccard measures perform well. In the MAE comparison, IPWR_Variance, IPWR_SD, Rating_Jaccard, and Rating_Jaccard_RPB are almost similar at k = 20, while RJMSD shows poor results for the remaining values of k. In the RMSE comparison, Rating_Jaccard_RPB gives the worst results at k = 20, while RJMSD gives the worst results for the remaining values of k.

This experiment shows that iGJ outperforms the other methods, as its MAE and RMSE values are lower for every value of k. The next section presents the results of applying several time decay functions with iGJ at the various levels of the recommendation process.

5.3.2 Experimental results of applying iGJ with time decay function (iGJ-TDF)

In the CF technique, three steps are essential to making a recommendation: construction of the user-item rating matrix, similarity computation, and prediction of ratings for the target users. The time functions listed in Table 2 are applied at these three levels. Since the performance of a recommendation system largely depends on effective similarity calculation, we first apply all the time decay functions at the similarity computation level and then at the rating matrix and prediction levels. To test the performance, we use three datasets, i.e., ML-100k, Epinions, and Amazon Magazine. The best results in each table are boldfaced.

5.3.2.1 Time decay function at similarity computation level (TDF@SC)

Here, the iGJ measure is applied with the exponential, concave, convex, linear, logistic, and power time functions at the similarity computation level. Each time decay function uses a tuning parameter, whose best value in the range of 0.5 to 0.9 is determined experimentally. Experimental results on the ML-100k, Epinions, and Amazon Magazine datasets are shown in Tables 5, 6, and 7, respectively.

The results show that the concave, convex, exponential, linear, logistic, and power functions give their best results at 0.9, 0.5, 0.5, 0.7, 0.9, and 0.6, respectively, on all three datasets. The results also indicate that, among all time functions, the convex function performs best when applied at the similarity computation level. Next, we apply the time functions at the rating matrix and prediction levels using these best tuning parameter values, which are listed in Table 8.
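
The parameter selection described above can be viewed as a simple grid search: evaluate each candidate value and keep the one with the lowest error. The sketch below assumes a grid with a step of 0.1 over the range 0.5 to 0.9 and a caller-supplied `evaluate` function that returns an error such as MAE; both are illustrative assumptions, and the toy error function is not experimental data.

```python
def best_tuning_parameter(evaluate, candidates=(0.5, 0.6, 0.7, 0.8, 0.9)):
    """Return (best_value, best_error) over the candidate tuning parameters.

    evaluate: function mapping a tuning parameter value to an error
              (lower is better), e.g. the MAE of a full CF run.
    """
    scored = [(evaluate(value), value) for value in candidates]
    best_error, best_value = min(scored)   # ties resolve to the smaller value
    return best_value, best_error


def toy_error(value):
    """Stand-in for a real evaluation run; minimal at 0.7 by construction."""
    return abs(value - 0.7)


best_value, best_error = best_tuning_parameter(toy_error)
```

In practice `evaluate` would run similarity computation, neighbor selection, and prediction for one decay function and one dataset, so the search is repeated once per function.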

5.3.2.2 Time decay function at rating matrix level (TDF@RM)

Table 8 lists the best tuning parameter value for each time function, as determined by the experiment in the previous step. This section presents the results of applying the time decay functions at the rating matrix level using only these best values. The original ratings are converted into time-weighted ratings using the time decay functions, and the iGJ similarity measure is then applied to compute the similarity among users. The experimental results in Table 9 indicate that the power function outperforms all other functions on all datasets: its MAE and RMSE values are considerably lower than those of the other functions.
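
A rough sketch of the conversion to time-weighted ratings is given below. The power-law form `(1 + age) ** (-alpha)`, the normalization of age to the range 0 to 1 relative to the newest rating, and the name `time_weighted_ratings` are stand-in assumptions for illustration and are not the power function defined in Table 2.

```python
def time_weighted_ratings(ratings, alpha=0.6):
    """Scale each rating by a hypothetical power-law decay of its age.

    ratings: list of (user, item, rating, timestamp) tuples.
    Returns a dict {(user, item): time-weighted rating}.
    """
    if not ratings:
        return {}
    timestamps = [timestamp for _, _, _, timestamp in ratings]
    newest, oldest = max(timestamps), min(timestamps)
    span = (newest - oldest) or 1              # avoid division by zero
    weighted = {}
    for user, item, rating, timestamp in ratings:
        age = (newest - timestamp) / span      # 0 = newest, 1 = oldest
        weighted[(user, item)] = rating * (1.0 + age) ** (-alpha)
    return weighted


# Two identical ratings by one user, given at different times.
ratings = [("u1", "i1", 4.0, 100), ("u1", "i2", 4.0, 200)]
weighted = time_weighted_ratings(ratings, alpha=1.0)
```

The newer rating keeps its full value while the older one is halved, so a similarity measure computed on the weighted matrix gives more influence to recent preferences.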

5.3.2.3 Time decay function at prediction level (TDF@P)

This section presents the experimental results of applying the time decay functions at the prediction level of the recommendation process. As at the rating matrix level, each TDF is applied with the best value of its tuning parameter. The experimental results displayed in Table 10 show that the convex function gives lower MAE and RMSE than all other time functions on all three datasets.

The best results of iGJ with time functions are compared with those of iGJ without a time function on the ML-100k, Epinions, and Amazon Magazine datasets, and the results are presented in Figs. 4, 5, and 6, respectively. At the similarity computation and prediction levels, the convex function performs best, while at the rating matrix level the power function does. Among these three configurations, iGJ with the power function at the rating matrix level gives the best results. It is therefore recommended, as it also outperforms the iGJ similarity measure without a time function.

6 Conclusion and future scope

To overcome the sparsity issue, a novel CF-based method, iGJ, is proposed in this paper. It outperforms other algorithms, i.e., Rating_Jaccard, RJaccard, RJMSD, Cosine, TMJ, etc. In addition, time decay functions are integrated with the iGJ algorithm at the three levels of the recommendation process. The experimental results indicate that the convex function provides the best results at the similarity computation and prediction levels, whereas the power function performs best at the rating matrix level. Of the three levels, the rating matrix level yields much better results than the other two. iGJ with the power decay function at the rating matrix level also performs better than iGJ without a time function. Therefore, this work concludes that the time-based approach is better than the non-time-based approach and that iGJ performs best with the power decay function at the rating matrix level.

Most existing CF-based recommender systems cannot scale up in a real-time environment. Thus, one direction for future work is the real-time implementation of an RS based on iGJ with the time decay function. Future work also includes deploying the proposed system using contextual information.

References

Adomavicius G, Mobasher B, Ricci F, Tuzhilin A (2011) Context-aware recommender systems. AI Mag 32:67–80. https://doi.org/10.1609/aimag.v32i3.2364

Ahn HJ (2008) A new similarity measure for collaborative filtering to alleviate the new user cold-starting problem. Inf Sci 178:37–51. https://doi.org/10.1016/j.ins.2007.07.024

Al-bashiri H, Abdulgabber MA, Romli A, Hujainah F (2017) Collaborative filtering similarity measures: Revisiting. In: ACM international conference proceeding series part F1312, pp 195–200. https://doi.org/10.1145/3133264.3133299

Anandhan A, Shuib L, Ismail MA, Mujtaba G (2018) Social media recommender systems: review and open research issues. IEEE Access 6:15608–15628. https://doi.org/10.1109/ACCESS.2018.2810062

Ayub M, Ghazanfar MA, Khan T, Saleem A (2020) An effective MODEL for Jaccard coefficient to increase the performance of collaborative filtering. Arab J Sci Eng 45:9997–10017. https://doi.org/10.1007/s13369-020-04568-6

Ayub M, Ghazanfar MA, Mehmood Z et al (2019) Modeling user rating preference behavior to improve the performance of the collaborative filtering based recommender systems. PLoS ONE. https://doi.org/10.1371/journal.pone.0220129

Bag S, Kumar S, Tiwari M (2019) An efficient recommendation generation using relevant Jaccard similarity. Inf Sci. https://doi.org/10.1016/j.ins.2019.01.023

ben Ali B, Massmoudi Y (2013) K-means clustering based on gower similarity coefficient: a comparative study. In: 2013 5th international conference on modeling, simulation and applied optimization, ICMSAO 2013. https://doi.org/10.1109/ICMSAO.2013.6552669

Bulau J (2021) How much data is created every day in 2021? In: techjury. https://techjury.net/blog/how-much-data-is-created-every-day/#gref. Accessed 2 May 2022

Campos PG, Díez F, Cantador I (2014) Time-aware recommender systems: a comprehensive survey and analysis of existing evaluation protocols. User Model User-Adap Inter 24:67–119. https://doi.org/10.1007/s11257-012-9136-x

Chen YC, Hui L, Thaipisutikul T (2021) A collaborative filtering recommendation system with dynamic time decay. J Supercomput 77:244–262. https://doi.org/10.1007/s11227-020-03266-2

Ding Y, Li X (2005) Time weight collaborative filtering. In: International conference on information and knowledge management, proceedings, pp 485–492

Fontecha J, Hervás R, Bravo J (2014) Mobile services infrastructure for frailty diagnosis support based on Gower’s similarity coefficient and treemaps. Mob Inf Syst 10:127–146. https://doi.org/10.3233/MIS-130174

Ghazanfar MA, Prugel-Bennett A (2010) A scalable, accurate hybrid recommender system. In: 3rd international conference on knowledge discovery and data mining, WKDD 2010, pp 94–98. https://doi.org/10.1109/WKDD.2010.117

Ghazarian S, Nematbakhsh MA (2015) Enhancing memory-based collaborative filtering for group recommender systems. Expert Syst Appl 42:3801–3812. https://doi.org/10.1016/j.eswa.2014.11.042

Gong SJ, Cheng GH (2008) Mining user interest change for improving collaborative filtering. In: Proceedings—2008 2nd international symposium on intelligent information technology application, IITA 2008, vol 3, pp 24–27. https://doi.org/10.1109/IITA.2008.385

He L, Wu F (2009) A time-context-based collaborative filtering algorithm. In: 2009 IEEE international conference on granular computing, GRC 2009, pp 209–213

Huang X, Song Z (2014) Clustering analysis on E-commerce transaction based on K-means clustering. J Netw 9:443–450. https://doi.org/10.4304/jnw.9.2.443-450

Isinkaye FO, Folajimi YO, Ojokoh BA (2015) Recommendation systems: principles, methods and evaluation. Egypt Inform J 16:261–273. https://doi.org/10.1016/j.eij.2015.06.005

Jain G, Mahara T (2019) An efficient similarity measure to alleviate the cold-start problem. In: 2019 15th international conference on information processing: Internet of Things, ICINPRO 2019—proceedings. IEEE

Jain G, Mahara T, Sharma SC (2021) A collaborative filtering-based recommendation system for preliminary detection of COVID-19. In: Advances in intelligent systems and computing, pp 27–40

Jain G, Mahara T, Tripathi KN (2020) A survey of similarity measures for collaborative filtering-based recommender system. In: Advances in intelligent systems and computing, pp 343–352

Jain G, Mishra N, Sharma S (2013) CRLRM: category based recommendation using linear regression model. In: Proceedings—2013 3rd international conference on advances in computing and communications, ICACC 2013, pp 17–20. https://doi.org/10.1109/ICACC.2013.11

Jin Q, Zhang Y, Cai W, Zhang Y (2020) A new similarity computing model of collaborative filtering. IEEE Access 8:17594–17604. https://doi.org/10.1109/ACCESS.2020.2965595

Kant S, Mahara T (2018) Merging user and item based collaborative filtering to alleviate data sparsity. Int J Syst Assur Eng Manag 9:173–179. https://doi.org/10.1007/s13198-016-0500-9

Konstan JA, Miller BN, Maltz D et al (1997) Applying collaborative filtering to Usenet news. Commun ACM 40:77–87. https://doi.org/10.1145/245108.245126

Koren Y (2009) Collaborative filtering with temporal dynamics. In: Proceedings of the ACM SIGKDD international conference on knowledge discovery and data mining, pp 447–455. https://doi.org/10.1145/1557019.1557072

Larrain S, Trattner C, Parra D et al (2015) Good times bad times: a study on recency effects in collaborative filtering for social tagging. In: RecSys 2015—proceedings of the 9th ACM conference on recommender systems. Association for Computing Machinery, Inc, pp 269–272

Lee TQ, Park Y, Park YT (2008) A time-based approach to effective recommender systems using implicit feedback. Expert Syst Appl 34:3055–3062. https://doi.org/10.1016/j.eswa.2007.06.031

Li D, Cao P, Guo Y, Lei M (2013) Time weight update model based on the memory principle in collaborative filtering. J Comput (finl) 8:2763–2767. https://doi.org/10.4304/jcp.8.11.2763-2767

Liu H, Hu Z, Mian A et al (2014) A new user similarity model to improve the accuracy of collaborative filtering. Knowl-Based Syst 56:156–166. https://doi.org/10.1016/j.knosys.2013.11.006

Ma H, King I, Lyu MR (2007) Effective missing data prediction for collaborative filtering. In: Proceedings of the 30th annual international ACM SIGIR conference on Research and development in information retrieval—SIGIR ’07, p 39. https://doi.org/10.1145/1277741.1277751

Ma T, Guo L, Tang M et al (2016) A collaborative filtering recommendation algorithm based on hierarchical structure and time awareness. IEICE Trans Inf Syst E99D:1512–1520. https://doi.org/10.1587/transinf.2015EDP7380

Manochandar S, Punniyamoorthy M (2020) A new user similarity measure in a new prediction model for collaborative filtering. Appl Intell. https://doi.org/10.1007/s10489-020-01811-3

Nguyen LV, Nguyen TH, Jung JJ (2021) Tourism recommender system based on cognitive similarity between cross-cultural users. In: Intelligent environments 2021: workshop proceedings of the 17th international conference on intelligent environments. IOS Press, pp 225–232

Ni J, Li J, McAuley J (2019) Justifying recommendations using distantly-labeled reviews and fine-grained aspects. In: EMNLP-IJCNLP 2019—2019 conference on empirical methods in natural language processing and 9th international joint conference on natural language processing, proceedings of the conference, pp 188–197. https://doi.org/10.18653/v1/d19-1018

Patra BK, Launonen R, Ollikainen V, Nandi S (2014) Exploiting Bhattacharyya similarity measure to diminish user cold-start problem in sparse data. In: Lecture notes in computer science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), vol 8777, pp 252–263. https://doi.org/10.1007/978-3-319-11812-3_22

Podani J (1999) Extending Gower’s general coefficient of similarity to ordinal characters. Taxon 48:331–340

Schafer JB, Frankowski D, Herlocker J, Sen S (2007) Collaborative filtering recommender systems. In: Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics) vol 4321. LNCS, pp 291–324 https://doi.org/10.1007/978-3-540-72079-9_9

Senior A (2017) Pearson’s and cosine correlation. In: International conference on trends in electronics and informatics ICEI 2017 calculating 05 June 20, pp 1000–1004

Su X, Khoshgoftaar TM (2009) A survey of collaborative filtering techniques. Adv Artif Intell 2009:1–19. https://doi.org/10.1155/2009/421425

Sun SB, Zhang ZH, Dong XL et al (2017) Integrating triangle and Jaccard similarities for recommendation. PLoS ONE. https://doi.org/10.1371/journal.pone.0183570

Suryakant, Mahara T (2016) A new similarity measure based on mean measure of divergence for collaborative filtering in sparse environment. Procedia Comput Sci 89:450–456. https://doi.org/10.1016/j.procs.2016.06.099

Tan Z, He L (2017) An efficient similarity measure for user-based collaborative filtering recommender systems inspired by the physical resonance principle. IEEE Access 5:27211–27228. https://doi.org/10.1109/ACCESS.2017.2778424

Wang J, Zhang Y (2013) Opportunity models for e-commerce recommendation: right product, right time. In: SIGIR 2013—proceedings of the 36th international ACM SIGIR conference on research and development in information retrieval. ACM, pp 303–312

Wang Y, Deng J, Gao J, Zhang P (2017) A hybrid user similarity model for collaborative filtering. Inf Sci 418–419:102–118. https://doi.org/10.1016/j.ins.2017.08.008

Wu D, Yuan Z, Yu K, Pan H (2012) Temporal social tagging based collaborative filtering recommender for digital library, pp 199–208

Xia C, Jiang X, Liu S et al (2010) Dynamic item-based recommendation algorithm with time decay. In: Proceedings - 2010 6th international conference on natural computation, ICNC 2010. IEEE Computer Society, pp 242–247

Xu G, Tang Z, Ma C et al (2019) A collaborative filtering recommendation algorithm based on user confidence and time context. J Electr Comput Eng. https://doi.org/10.1155/2019/7070487

Yu C, Huang L (2017) CluCF: a clustering CF algorithm to address data sparsity problem. SOCA 11:33–45. https://doi.org/10.1007/s11761-016-0191-8

Zhang L, Zhang Z, He J, Zhang Z (2019) Ur: a user-based collaborative filtering recommendation system based on trust mechanism and time weighting. In: Proceedings of the international conference on parallel and distributed systems—ICPADS. IEEE Computer Society, pp 69–76

Zheng N, Li Q (2011) A recommender system based on tag and time information for social tagging systems. Expert Syst Appl 38:4575–4587. https://doi.org/10.1016/j.eswa.2010.09.131

Zimdars A, Chickering DM, Meek C (2013) Using temporal data for making recommendations. https://doi.org/10.48550/arXiv.1301.2320

Funding

No funding was received to assist with the preparation of this manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Jain, G., Mahara, T. & C.Sharma, S. Effective time context based collaborative filtering recommender system inspired by Gower’s coefficient. Int J Syst Assur Eng Manag 14, 429–447 (2023). https://doi.org/10.1007/s13198-022-01813-z

DOI: https://doi.org/10.1007/s13198-022-01813-z