Abstract

This study evaluates the capability and contribution of backscatter intensity images and textural bands from Sentinel-1A synthetic aperture radar (SAR) data for reducing the limitations of optical image classification and improving classification accuracy. The study was carried out in Theni district of Tamil Nadu, India, which is characterized by very heterogeneous features. The optical multispectral images used were from the Linear Imaging Self-Scanning Sensor-IV (LISS-IV), Sentinel-2A and Landsat 8. A support vector machine (SVM) classifier was applied to different combinations of SAR, optical and texture features. The results showed that the optimal window size was 11 × 11, and that mean and variance were the optimal textural bands derived with the gray-level co-occurrence matrix (GLCM) technique. The best classification result was achieved with the combination of LISS-IV and Sentinel-1A-derived features (backscatter intensity and texture features), with an overall accuracy of 78.49% and a kappa coefficient of 0.68. Combining optical images with Sentinel-1A data decreased the spectral confusion between classes, provided better classification results and reasonably improved the accuracy.

Introduction

Land use change is driven by human activities and natural factors, and determining changes in land cover and green areas in and around urban areas is an important application of such analyses. Land cover is one of the most important datasets for demonstrating the effects of land use change, especially change caused by human activities. Land use maps can be produced from satellite images using different methods. Some studies have produced land cover maps by supervised classification of Landsat imagery and used them to evaluate changes in urban development and green areas over time, as well as the relationship between land cover change and urban population change (Cetin 2015, 2016; Cetin et al. 2018; Kaya et al. 2018).

Generating thematic maps, such as land cover maps, through land use/land cover (LULC) classification is one of the most widely used applications of remote sensing. Remote sensing provides synoptic, repetitive coverage and accurate, detailed observations at various spatial and temporal scales, making it a powerful tool for monitoring the Earth's surface, particularly for LULC classification (Ward et al. 2000). Optical remote sensing has numerous advantages, such as near-nadir image acquisition, high resolution and easily interpretable earth features, and it has been a well-established tool for LULC classification over the last four decades. However, LULC classification with optical sensors remains difficult because of cloud cover (Asner 2001), adverse weather conditions (Lu and Weng 2006), large numbers of mixed pixels, similar spectral reflectance across a landscape (Joshi et al. 2016) and heterogeneous landscapes (Kuzucu and Balcik 2017). In countries such as India, with varied climatic zones, all these factors limit the accuracy of image classification and also make temporal LULC classification very difficult. In this context, a few studies have fused multispectral images with hyperspectral images, which enables more accurate discrimination of land features and improves classification accuracy (Walsh et al. 2008). However, most hyperspectral sensors have a medium spatial resolution (30 m), which is a major drawback for identifying highly heterogeneous environments, low-density targets and small objects; they also have narrow swath widths, and few satellite data sources are available.

Synthetic aperture radar (SAR) is one of the most advanced sensing technologies and has numerous advantages over optical sensors: it can acquire the polarimetric properties of the targets under observation and can be used to determine their physical properties (Zakeri et al. 2017). In addition, SAR data provide information complementary to optical data, which can reduce the limitations of optical image classification and improve classification accuracy (Zhang et al. 2015). A combined optical and SAR product is therefore more informative than either sensor alone: the SAR response depends on the geometry and structure of target features, whereas the optical response depends on the reflectance of the target (Parihar et al. 2016). Consequently, higher image classification accuracy is achievable with combined image products (Solberg et al. 1994; Brisco and Brown 1995; Stramondo et al. 2006).

Furthermore, spectral and backscattering intensity alone are not sufficient for image classification (Wu et al. 2006). Textural features are a significant additional source of information because they describe the spatial relationships among pixel values (Zakeri et al. 2017). Textural bands have been used in addition to spectral bands or backscattering intensity to bring out subclass variation and improve LULC classification accuracy (Haralick et al. 1973; Franklin et al. 2001; Ndi Nyoungui et al. 2002; Wu et al. 2006, 2015; Zakeri et al. 2017; Mishra et al. 2017). The gray-level co-occurrence matrix (GLCM) is one of the most reliable texture methods; it uses a gray-tone spatial dependence matrix to calculate texture values (Zakeri et al. 2017).

Classification is the process of assigning the pixels of an image to LULC classes to create thematic maps (Lillesand et al. 2004). It identifies features in the image in terms of LULC classes that correspond to actual features on the ground. Various methods have been proposed to assist the interpretation and classification of optical and SAR data (Lu and Weng 2007; Mishra et al. 2011). The maximum likelihood (ML) classifier is the most common because it provides acceptable accuracy, despite the limitation that it assumes normally distributed class signatures (Mishra et al. 2014). The support vector machine (SVM) is considered an appropriate technique because it does not require the data to be normally distributed, and it is therefore more suitable for classifying optical and SAR images (Gao 2010). In recent years, SVM classifiers have been frequently used for LULC classification (Fukuda and Hirosawa 2001; Zou et al. 2010; Niu and Ban 2013; Sambodo and Indriasari 2013; Wu et al. 2015; Muthukumarasamy et al. 2017; Mishra et al. 2017). The objective of this study was to assess the capability and contribution of backscatter intensity images and textural bands from Sentinel-1A data for reducing the limitations of optical image classification and improving classification accuracy.

Study area and datasets

Study area

The study area is part of Theni district (9° 50′ N to 9° 54′ N and 77° 20′ E to 78° 24′ E), Tamil Nadu, India, and lies within the Western Ghats system. It is a mostly hilly area with intermittent plains. The region has a salubrious climate, with temperatures ranging from 26.3 to 38.5 °C (Thanabalan and Vidhya 2016). The seasonal Vaigai River meets the major water demand of the region (Magesh et al. 2012). Theni district was selected as the test case because it is characterized by very heterogeneous features such as coconut plantations, cropland, barren land, scrub forest and settlements. At the time of image acquisition, the agricultural land at the site was current fallow, which made classification a big challenge because of the spectral confusion between current fallow land and settlements in the optical data. The study area map is given in Fig. 1.

Satellite data sets

The data used in this research were acquired by the LISS-IV, Landsat 8 and Sentinel series satellites, operated by the Indian Space Research Organisation (ISRO), the National Aeronautics and Space Administration (NASA) and the European Space Agency (ESA), respectively. The specifications and characteristics of the optical and SAR sensors are given in Table 1.

Resourcesat-2 was launched on 20th April 2011 and carries three sensors on a single platform: the high-resolution LISS-IV, the medium-resolution LISS-III and the coarse-resolution AWiFS (NRSC 2011). The LISS-IV image was acquired on 10th February 2017 and includes four multispectral bands at 5.8 m spatial resolution. The Landsat 8 satellite was launched on 11th February 2013 and carries two instruments, the Operational Land Imager (OLI) and the Thermal Infrared Sensor (TIRS) (Zakeri et al. 2017). OLI has nine spectral bands, including one panchromatic band, and TIRS has two spectral bands. The Landsat 8 OLI image was acquired on 19th February 2017 and includes a panchromatic band at 15 m and multispectral bands at 30 m spatial resolution. Sentinel-2A was launched on 23rd June 2015 and carries an optical instrument payload with 13 spectral bands for global land observation (Heiselberg 2016; Ng et al. 2017). The Sentinel-2A image was acquired on 23rd February 2017 and includes four bands at 10 m, six bands at 20 m and three bands at 60 m spatial resolution (Verhegghen et al. 2016; de Oliveira Silveira et al. 2017).

Sentinel-1A was launched on 3rd April 2014 and carries an advanced C-band radar instrument with a center frequency of 5.40 GHz, operating in four exclusive imaging modes with different resolutions (down to 5 m) and coverage (up to 400 km) (Omar et al. 2017; Zakeri et al. 2017). The Sentinel-1A image was acquired on 27th February 2017 in interferometric wide swath (IW) mode, with VH and VV polarization bands, an incidence angle of 34.02° and a spatial resolution of 10 m. Both the optical and SAR images cover the entire study area in a single scene.

Accuracy assessment

Accuracy was assessed using a confusion matrix, which compares the classification result with ground truth information and thus gives the agreement between the classifier and the ground truth regions of interest (ROIs). In this study, the ROIs were selected on the LISS-IV image with the help of ground truth measurements. The ROIs contain polygons of the different land features: agricultural fallow land, plantation, scrub forest, barren land and settlement. The ground truth image (land use/cover map) of the study area was prepared from LISS-IV by visual interpretation, aided by very-high-resolution Google Earth imagery and ground truth verification. The ground truth ROIs, ground truth image and ground truth verification are shown in Fig. 2.
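The overall accuracy, kappa coefficient and producer's accuracy reported later are all derived from such a confusion matrix. A minimal sketch, assuming rows hold the ground truth classes and columns the classified classes (conventions differ between software packages), is given below; the function name and the two-class example matrix are hypothetical and are not data from this study.

```python
def accuracy_metrics(cm):
    """Overall accuracy, Cohen's kappa and producer's accuracies.

    cm[i][j] = number of ground truth pixels of class i that the
    classifier assigned to class j (rows: reference, columns: classified).
    """
    k = len(cm)
    n = sum(sum(row) for row in cm)
    row_totals = [sum(cm[i]) for i in range(k)]                       # reference totals
    col_totals = [sum(cm[i][j] for i in range(k)) for j in range(k)]  # classified totals

    # Observed agreement: share of pixels on the diagonal
    overall = sum(cm[i][i] for i in range(k)) / n
    # Agreement expected by chance, from the row and column marginals
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (overall - chance) / (1 - chance)
    # Producer's accuracy: correctly classified / reference pixels of each class
    producers = [cm[i][i] / row_totals[i] for i in range(k)]
    return overall, kappa, producers


# Hypothetical two-class example (not data from this study)
oa, kappa, pa = accuracy_metrics([[50, 10], [5, 35]])
print(f"OA = {oa:.2%}, kappa = {kappa:.2f}")  # OA = 85.00%, kappa = 0.69
```

Kappa discounts the agreement that would occur by chance, which is why it is lower than the overall accuracy for the same matrix.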

Methods

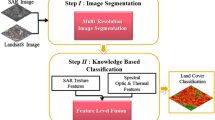

This study involved image pre-processing, textural analysis, image classification and accuracy assessment for land use/cover classification using dual-polarization SAR data and optical multispectral data (Fig. 3).

Pre-processing

The Sentinel-1A ground range detected (GRD) product was preprocessed in the Sentinel Application Platform (SNAP) toolbox through radiometric calibration, speckle filtering and geocoding. Radiometric calibration was performed to convert the digital numbers (DN) into sigma naught and backscatter coefficient (dB) images. The backscatter in dB can be computed as (Omar et al. 2017)

$$\sigma^0(\mathrm{dB}) = 10\,\log_{10}\left(\sigma^0\right)$$

where σ0 (dB) is the backscattering image in dB and σ0 is the sigma naught image.
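As a minimal illustration of the decibel conversion σ0(dB) = 10·log10(σ0), the sketch below converts linear sigma naught values to dB and back. The function names are hypothetical, and in the study this step was done in SNAP; the sketch only makes the arithmetic explicit (for example, a linear value of 0.01 corresponds to -20 dB).

```python
import math


def sigma0_to_db(sigma0):
    """Convert a linear sigma naught value to decibels: 10 * log10(sigma0)."""
    if sigma0 <= 0:
        # The logarithm is undefined for zero or negative (e.g. no-data) pixels
        raise ValueError("sigma naught must be positive")
    return 10.0 * math.log10(sigma0)


def db_to_sigma0(db):
    """Inverse conversion: linear sigma naught = 10 ** (dB / 10)."""
    return 10.0 ** (db / 10.0)


def image_to_db(image):
    """Apply the conversion pixel by pixel to an image stored as a list of rows."""
    return [[sigma0_to_db(v) for v in row] for row in image]


print(image_to_db([[0.01, 0.1], [1.0, 0.5]]))
```

Because the scale is logarithmic, multiplicative speckle in the linear image becomes approximately additive in the dB image.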

To reduce speckle, a Lee filter with a 3 × 3 window was applied to the backscattering (dB) images. The images were then geometrically corrected with the Range Doppler Terrain Correction method using the Shuttle Radar Topography Mission digital elevation model (SRTM DEM) at 30 m spatial resolution. Preprocessed Landsat 8 OLI, Sentinel-2A and LISS-IV images were used in the present study. All images were geometrically rectified using ground control points (GCPs) in ERDAS IMAGINE software, with a root mean square error (RMSE) of less than 0.5 pixels.
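The study reports only the 3 × 3 window of the Lee filter. The sketch below is a minimal version of the additive-noise form of the Lee filter, chosen here because speckle is approximately additive after the dB conversion. The noise variance `noise_var`, the clipping of windows at image borders and all names are assumptions for illustration; this is not SNAP's implementation.

```python
def lee_filter(img, noise_var, size=3):
    """Additive-noise Lee filter over a size x size moving window.

    img: list of equal-length rows (e.g. a backscatter image in dB)
    noise_var: assumed speckle noise variance, in the same units as img
    Windows are clipped at the image borders (an assumption of this sketch).
    """
    rows, cols = len(img), len(img[0])
    half = size // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [img[i][j]
                      for i in range(max(0, r - half), min(rows, r + half + 1))
                      for j in range(max(0, c - half), min(cols, c + half + 1))]
            mean = sum(window) / len(window)
            var = sum((v - mean) ** 2 for v in window) / len(window)
            # Signal variance = local variance minus noise variance, clipped at 0
            signal_var = max(var - noise_var, 0.0)
            # k -> 0 in homogeneous areas (output the local mean);
            # k -> 1 at edges or strong texture (keep the original pixel)
            k = signal_var / var if var > 0 else 0.0
            out[r][c] = mean + k * (img[r][c] - mean)
    return out


spike = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]
print(lee_filter(spike, noise_var=100.0)[1][1])  # 1.0: the spike is smoothed to the mean
```

The adaptive weight is the design point of the Lee filter: unlike a plain mean filter, it preserves pixels whose local variance clearly exceeds the expected speckle variance.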

Texture features

Spatial information in the form of texture features can be used for image classification. Texture is the pattern of intensity variations in an image and can be a valuable tool for improving land cover classification accuracy. The GLCM is computed from the statistics of gray values between pairs of pixels separated by a given distance and angle (Wei et al. 2012). Several texture features can be computed from the GLCM, e.g., contrast, dissimilarity, homogeneity, angular second moment (ASM), energy, entropy, mean, variance and correlation. Window size is the most important parameter for measuring texture features; in this study, window sizes of 5 × 5, 7 × 7, 9 × 9 and 11 × 11 were evaluated experimentally to find the appropriate size. To reduce the influence of direction and improve the accuracy of texture feature extraction, the features were computed at angles of 0°, 45°, 90° and 135° and for the combination of all angles.
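To make the GLCM computation concrete, the following sketch quantizes a window, builds a symmetric, normalized GLCM at distance 1 for each of the four directions, and computes the four features later examined in this study (mean, variance, dissimilarity and homogeneity), averaging them over the directions to mimic the "combination of all angles". The offset convention, the distance of 1, the number of gray levels and the standard Haralick-style feature formulas are assumptions; SNAP and other packages may differ in detail. In a full workflow, the features of each 11 × 11 window would be assigned to its center pixel as the window slides over the image.

```python
# Offsets (row, col) at distance 1 for the four GLCM directions.
# Direction conventions differ between packages; this mapping is an assumption.
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}


def quantize(image, vmin, vmax, levels):
    """Linearly rescale raw values (e.g. dB) to integer gray levels 0..levels-1."""
    span = vmax - vmin
    return [[min(levels - 1, max(0, int((v - vmin) / span * levels))) for v in row]
            for row in image]


def glcm(window, levels, offset):
    """Symmetric, normalized co-occurrence matrix of a quantized window."""
    dr, dc = offset
    rows, cols = len(window), len(window[0])
    counts = [[0] * levels for _ in range(levels)]
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = window[r][c], window[r2][c2]
                counts[i][j] += 1  # pair (reference, neighbour)
                counts[j][i] += 1  # and its mirror, which makes the GLCM symmetric
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Haralick-style GLCM mean, variance, dissimilarity and homogeneity."""
    cells = [(i, j, p[i][j]) for i in range(len(p)) for j in range(len(p))]
    mean = sum(i * v for i, _, v in cells)
    return {
        "mean": mean,
        "variance": sum(v * (i - mean) ** 2 for i, _, v in cells),
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
    }


def all_angle_features(window, levels):
    """Average each feature over 0, 45, 90 and 135 degrees ("all angles")."""
    per_angle = [texture_features(glcm(window, levels, off)) for off in OFFSETS.values()]
    return {name: sum(f[name] for f in per_angle) / len(per_angle) for name in per_angle[0]}


# Example: an 11 x 11 window of hypothetical dB values, quantized to 16 gray levels
window_db = [[-20.0 + ((r * 7 + c * 3) % 11) for c in range(11)] for r in range(11)]
levels = 16
q = quantize(window_db, -20.0, -10.0, levels)
print(all_angle_features(q, levels))
```

A smooth (homogeneous) window concentrates the GLCM on its diagonal, giving homogeneity near 1 and dissimilarity near 0, whereas textured windows spread probability away from the diagonal.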

Feature combination

To evaluate the ability and contribution of backscattering images and texture features extracted from Sentinel-1A data to reduce spectral confusion in optical images and improve classification accuracy, different band combinations were formed (Table 2).

Support vector machine (SVM)

SVM classifiers have been reported to increase classification accuracy significantly in recent years. The SVM is based on statistical learning theory (Vapnik 1999) and provides higher accuracies than other classifiers for LULC classification using polarimetric SAR (PolSAR) data (Fukuda and Hirosawa 2001; Iyyappan et al. 2014; Mishra et al. 2014). The SVM classifier was applied to Sentinel-1A backscattering alone, backscattering with GLCM textural bands, and, for each of the three optical sensors, spectral bands alone and spectral bands with GLCM textural bands. The backscattering images (VV and VH) and the best GLCM bands of Sentinel-1A were then combined with each of the three optical images; for merging, the images were resampled to the lowest image resolution. Accuracy was assessed for all classified image combinations and compared.
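The SVM kernel and parameters used in the study are not stated here. As a self-contained illustration of how an SVM separates classes in a stacked feature space, the sketch below trains a linear soft-margin SVM with the Pegasos stochastic subgradient method and combines the binary classifiers one-vs-rest. This is a substitute technique chosen for brevity, not the study's classifier configuration, and the class names, feature values and parameters (`lam`, `epochs`) are hypothetical.

```python
import random


def train_binary_svm(X, y, lam=0.01, epochs=1000, seed=0):
    """Linear soft-margin SVM trained with Pegasos (labels y in {-1, +1}).

    Minimizes lam/2 * ||w||^2 + mean hinge loss. A constant 1.0 is appended
    to every sample so the last weight acts as a (regularized) bias.
    """
    rng = random.Random(seed)  # fixed seed -> reproducible sample order
    data = [(list(x) + [1.0], t) for x, t in zip(X, y)]
    w = [0.0] * len(data[0][0])
    step = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, t in data:
            step += 1
            eta = 1.0 / (lam * step)  # Pegasos learning-rate schedule
            margin = t * sum(wi * xi for wi, xi in zip(w, x))
            w = [(1.0 - eta * lam) * wi for wi in w]  # regularization shrink
            if margin < 1.0:  # hinge loss is active for this sample
                w = [wi + eta * t * xi for wi, xi in zip(w, x)]
    return w


def train_one_vs_rest(X, labels, **kwargs):
    """One binary SVM per class: that class (+1) against all others (-1)."""
    return {c: train_binary_svm(X, [1 if lab == c else -1 for lab in labels], **kwargs)
            for c in sorted(set(labels))}


def predict(models, x):
    """Assign the class whose decision value w . [x, 1] is largest."""
    xa = list(x) + [1.0]
    return max(models, key=lambda c: sum(wi * xi for wi, xi in zip(models[c], xa)))


# Hypothetical, already standardized 2-feature pixels for three classes
X = [(0, 0), (0, 1), (1, 0), (4, 0), (4, 1), (5, 0), (0, 4), (1, 4), (0, 5)]
labels = ["fallow"] * 3 + ["plantation"] * 3 + ["settlement"] * 3
models = train_one_vs_rest(X, labels)
print(predict(models, (0.3, 0.3)), predict(models, (4.3, 0.3)), predict(models, (0.3, 4.3)))
# expected: fallow plantation settlement
```

In practice, each pixel's feature vector would be the stacked optical, backscatter and texture values of that pixel, and a kernel SVM would usually be preferred when classes are not linearly separable.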

Results and discussion

GLCM textural band analysis of Sentinel-1A data

Figures 4 and 5 show the class separability of Sentinel-1A texture features, measured with the Jeffries–Matusita (JM) distance, used to identify the optimum window size and angle (direction), respectively. JM distance values range from 0 to 2 and indicate how well the selected training samples are statistically separated (Amarsaikhan et al. 2010); values ≥ 1.9 indicate good separability (Wei et al. 2012). The combination of all texture features achieved maximum separability with the 11 × 11 window (JM from a minimum of 1.07 to a maximum of 1.99), and the combination of all angles at this window size gave better results than the individual angles. The lowest separability occurred between agricultural fallow land and barren land, because both are dominated by the same scattering mechanism (single-bounce scattering), and between plantation and settlement, because double-bounce and volume scattering are mixed in both classes. Maximum separability was achieved for all remaining class pairs.
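The JM distance is commonly computed from the Bhattacharyya distance B between two class distributions as JM = 2(1 - e^(-B)), which gives the 0 to 2 range used here. The sketch below assumes Gaussian classes with diagonal covariance matrices (bands treated as uncorrelated), a simplification of the full-covariance formula; the function names and sample values are hypothetical.

```python
import math


def class_stats(samples):
    """Per-band mean and population variance of one class's training pixels."""
    n = len(samples)
    bands = list(zip(*samples))
    means = [sum(b) / n for b in bands]
    variances = [sum((x - m) ** 2 for x in b) / n for b, m in zip(bands, means)]
    return means, variances


def bhattacharyya_diag(mean1, var1, mean2, var2):
    """Bhattacharyya distance between Gaussians with diagonal covariances.

    With diagonal covariances the multivariate formula splits into a sum of
    independent per-band terms. Variances must be strictly positive.
    """
    b = 0.0
    for m1, v1, m2, v2 in zip(mean1, var1, mean2, var2):
        v = (v1 + v2) / 2.0
        b += (m1 - m2) ** 2 / (8.0 * v) + 0.5 * math.log(v / math.sqrt(v1 * v2))
    return b


def jeffries_matusita(mean1, var1, mean2, var2):
    """JM distance on the 0..2 scale: JM = 2 * (1 - exp(-B))."""
    return 2.0 * (1.0 - math.exp(-bhattacharyya_diag(mean1, var1, mean2, var2)))


# Hypothetical two-band training pixels (e.g. two texture bands)
fallow = [(0.10, 0.20), (0.12, 0.22), (0.11, 0.19), (0.09, 0.21)]
settlement = [(0.40, 0.60), (0.42, 0.58), (0.38, 0.61), (0.41, 0.59)]
jm = jeffries_matusita(*class_stats(fallow), *class_stats(settlement))
print(f"JM = {jm:.3f}, good separability: {jm >= 1.9}")  # JM = 2.000, good separability: True
```

The exponential saturates the measure: once B is large, further separation barely changes JM, which is why values close to 2 all indicate well-separated classes.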

Optimum textural band selection

Statistical analysis was carried out to identify the most suitable textural bands of Sentinel-1A. As Figure 6 shows, the highest separability among the classes was observed in the dissimilarity, homogeneity, mean and variance GLCM bands.

Classification of SAR data

The SVM classifier was applied to different combinations of four textural bands (dissimilarity, homogeneity, mean and variance) and two backscattering images (VH and VV). Accuracy was assessed for all SVM-classified images with the help of the ground truth image (Table 3). The Sentinel-1A backscattering images alone, the backscattering images with all textural bands, and the backscattering images with the mean and variance textural bands were classified with overall accuracies of about 61.51%, 67% and 67.72%, and kappa coefficients of 0.44, 0.51 and 0.52, respectively. The land cover classes were well classified with improved accuracy, except for the settlement class. Based on these results, the mean and variance GLCM textural bands contributed most to the classification, followed by dissimilarity and homogeneity. Mean and variance were therefore selected as the optimum GLCM textural features of Sentinel-1A for further analysis.

The classification of multi-sensor data

To investigate the advantages of combining the backscattering images and textural features of Sentinel-1A with optical data, different classifications were carried out with each of the three multispectral images. Classification of the LISS-IV, Sentinel-2A and Landsat 8 spectral bands alone gave overall accuracies of 69.72%, 66.29% and 64.26%, respectively (Table 4). The settlement class was poorly classified with all three sensors because of the current fallow lands: the spectral reflectance characteristics of settlements and of current fallow agricultural land are remarkably similar, so agricultural lands were incorrectly classified as settlements. Settlement and scrub forest were also mostly classified as agricultural fallow land. Therefore, the spectral bands of the three sensors alone do not seem appropriate for classifying the land use/cover of the study area.

To overcome this limitation, the mean and variance GLCM textural bands were extracted from each of the three optical images, and the combination of multispectral and textural bands was classified with the SVM classifier. The overall accuracy improved from 69.72 to 74.41%, from 66.29 to 75.45% and from 64.26 to 68.64% for LISS-IV, Sentinel-2A and Landsat 8, respectively (Table 4). The spectral confusion between classes was reduced, and the classes became more uniform than with the spectral bands alone. The barren land and plantation categories were classified better with the combined spectral and textural bands, whereas the remaining categories still needed improvement. For all three sensors, the overall and producer's accuracies improved when the spectral bands were combined with textural bands. Homogeneous features (very smooth textures) were better classified with spectral bands alone, whereas heterogeneous (textured) features were better classified with spectral bands plus GLCM textural bands.

To reduce the spectral confusion between classes in the optical images and further improve classification accuracy, each of the three optical images was combined with Sentinel-1A-derived features (backscattering and the mean and variance textural bands) and classified. As seen in Table 4, the best classification results were achieved with the combination of LISS-IV and Sentinel-1A-derived features, with an overall accuracy of 78.49% and a kappa coefficient of 0.68. The accuracy achieved by combining Sentinel-2A or Landsat 8 OLI with Sentinel-1A data was lower, with overall accuracies above 75% and kappa coefficients above 0.64. Compared with the combination of spectral and textural bands of the optical sensors, adding the Sentinel-1A-derived features increased the overall accuracy of LISS-IV, Sentinel-2A and Landsat 8 by 4.08, 0.36 and 8.25 percentage points, respectively.
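The accuracy gains above are differences between two overall accuracies, i.e. percentage points rather than relative percentages. The short sketch below makes the distinction explicit using the LISS-IV overall accuracies reported in this section (74.41% with spectral and textural bands, 78.49% with Sentinel-1A-derived features added); the helper name `gain` is hypothetical.

```python
def gain(before, after):
    """Return (percentage-point gain, relative gain in %) between two accuracies."""
    points = after - before
    relative = 100.0 * points / before
    return points, relative


# LISS-IV overall accuracies (%) reported in the text (Table 4)
spectral_texture, with_s1 = 74.41, 78.49
points, rel = gain(spectral_texture, with_s1)
print(f"{points:.2f} percentage points ({rel:.2f}% relative)")
# 4.08 percentage points (5.48% relative)
```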

Agricultural fallow land, scrub forest and settlement are easily confused in spectral classification and were poorly classified with the spectral bands of the three multispectral images. Combining the optical images with Sentinel-1A data decreased the spectral confusion between the classes, provided better classification results and reasonably improved accuracy. SAR backscatter provides supplementary characteristics of these features, such as surface roughness, orientation, signatures, object density and 3D information (Kurosu et al. 1999). SAR data capture the geometry and structure of target features, whereas optical images provide reflectance information from the target (Parihar et al. 2016), so the two data types can exploit the complementarity of their information to improve classification accuracy. Figure 7 shows a close-up of the SVM classifications for the optical spectral bands only, the spectral and textural bands of the optical image, and the optical spectral bands with Sentinel-1A-derived features; the improvement in classification accuracy described above can be seen in this figure.

Conclusion

This study evaluated the capability and contribution of backscattering images and texture features from Sentinel-1A data for land cover classification. For the combination of all texture features, the optimum window size was 11 × 11, and the mean and variance were the optimum GLCM textural bands of the Sentinel-1A data. Combining optical images with Sentinel-1A-derived features reduced the spectral confusion between classes, identified the most favorable bands for classification and improved classification accuracy. A limitation of the GLCM technique is that its results depend mainly on the window size, because each textural band pixel summarizes its neighboring pixels. The selection of optimal GLCM bands may also vary with season and land features. Future work could compare GLCM textural bands with hybrid decomposition parameters of SAR data for reducing limitations of optical image classification such as spectral confusion and pixel mismatching.

References

Amarsaikhan D, Blotevogel HH, van Genderen JL et al (2010) Fusing high-resolution SAR and optical imagery for improved urban land cover study and classification. Int J Image Data Fusion 1(1):83–97. https://doi.org/10.1080/19479830903562041

Asner GP (2001) Cloud cover in Landsat observations of the Brazilian Amazon. Int J Remote Sens 22(18):3855–3862

Brisco B, Brown RJ (1995) Multidate SAR/TM synergism for crop classification in Western Canada. Photogramm Eng Remote Sens 61(8):1009–1014

Cetin M (2015) Using GIS analysis to assess urban green space in terms of accessibility: case study in Kutahya. Int J Sustain Dev World Ecol 22(5):420–424

Cetin M (2016) Sustainability of urban coastal area management: a case study on Cide. J Sustain For 35(7):527–541

Cetin M, Adiguzel F, Kaya O, Sahap A (2018) Mapping of bioclimatic comfort for potential planning using GIS in Aydin. Environ Dev Sustain 20(1):361–375. https://doi.org/10.1007/s10668-016-9885-5

Franklin SE, Wulder MA, Gerylo GR (2001) Texture analysis of IKONOS panchromatic data for Douglas-fir forest age class separability in British Columbia. Int J Remote Sens 22(13):2627–2632. https://doi.org/10.1080/01431160120769

Fukuda S, Hirosawa H (2001) Support vector machine classification of land cover: application to polarimetric SAR data. IEEE Geosci Remote Sens Symp 1(5):187–189. https://doi.org/10.1109/IGARSS.2001.976097

Gao G (2010) Statistical modeling of SAR images: a survey. Sensors 10:775–795. https://doi.org/10.3390/s100100775

Haralick RM, Shanmugam K, Dinstein I (1973) Textural features for image classification. IEEE Trans Syst Man Cybern 3(6):610–621. https://doi.org/10.1109/TSMC.1973.4309314

Heiselberg H (2016) A direct and fast methodology for ship recognition in Sentinel-2 multispectral imagery. Remote Sens 8(12):1033. https://doi.org/10.3390/rs8121033

Iyyappan M, Ramakrishnan SS, Srinivasa Raju K (2014) Study of discrimination between plantation and dense scrub based on backscattering behavior of C band SAR data. Int Arch Photogramm Remote Sens Spat Inf Sci (ISPRS Arch XL) 8:755–760. https://doi.org/10.5194/isprsarchives-xl-8-755-2014

Joshi N, Baumann M, Ehammer A, Fensholt R, Grogan K, Hostert P, Jepsen MR, Kuemmerle T, Meyfroidt P, Mitchard ET, Reiche J (2016) A review of the application of optical and radar remote sensing data fusion to land use mapping and monitoring. Remote Sens 8(1):70

Kaya E, Agca M, Adiguzel F, Cetin M (2018) Spatial data analysis with R programming for environment. Hum Ecol Risk Assess Int J. https://doi.org/10.1080/10807039.2018.1470896

Kurosu T, Uratsuka S, Maeno H, Kozu T (1999) Texture statistics for classification of land use with multitemporal JERS-1 SAR single-look imagery. IEEE Trans Geosci Remote Sens 37(1):227–235. https://doi.org/10.1109/36.739157

Kuzucu AK, Balcik FB (2017) Testing the potential of vegetation indices for land use/cover classification using high resolution data. ISPRS Ann Photogramm Remote Sens Spat Inf Sci 4:279

Lillesand TM, Kiefer RW, Chipman JW (2004) Remote sensing and image interpretation, 5th edn. Wiley, New York

Lu D, Weng Q (2006) Use of impervious surface in urban land-use classification. Remote Sens Environ 102:146–160. https://doi.org/10.1016/j.rse.2006.02.010

Lu D, Weng Q (2007) A survey of image classification methods and techniques for improving classification performance. Int J Remote Sens 28(5):823–870. https://doi.org/10.1080/01431160600746456

Magesh NS, Chandrasekar N, Soundranayagam JP (2012) Delineation of groundwater potential zones in Theni district, Tamil Nadu, using remote sensing, GIS and MIF techniques. Geosci Front 3(2):189–196. https://doi.org/10.1016/j.gsf.2011.10.007

Mishra P, Singh D, Yamaguchi Y (2011) Land cover classification of PALSAR images by knowledge based decision tree classifier and supervised classifiers based on SAR observables. Prog Electromagn Res B 30:47–70. https://doi.org/10.2528/PIERB11011405

Mishra VN, Kumar P, Gupta DK, Prasad R (2014) Classification of various land features using Risat-1 dual polarimetric data. Int Arch Photogramm Remote Sens Spat Inf Sci (ISPRS Arch XL) 8:833–837. https://doi.org/10.5194/isprsarchives-xl-8-833-2014

Mishra VN, Prasad R, Kumar P et al (2017) Dual-polarimetric C-band SAR data for land use/land cover classification by incorporating textural information. Environ Earth Sci. https://doi.org/10.1007/s12665-016-6341-7

Muthukumarasamy I, Shanmugam RS, Kolanuvada SR (2017) SAR polarimetric decomposition with ALOS PALSAR-1 for agricultural land and other land use/cover classification: case study in Rajasthan, India. Environ Earth Sci 76:455. https://doi.org/10.1007/s12665-017-6783-6

Ndi Nyoungui A, Tonye E, Akono A (2002) Evaluation of speckle filtering and texture analysis methods for land cover classification from SAR images. Int J Remote Sens 23(9):1895–1925. https://doi.org/10.1080/01431160110036157

Ng WT, Rima P, Einzmann K et al (2017) Assessing the potential of Sentinel-2 and Pléiades data for the detection of Prosopis and Vachellia spp. in Kenya. Remote Sens. https://doi.org/10.3390/rs9010074

Niu X, Ban Y (2013) Multi-temporal RADARSAT-2 polarimetric SAR data for urban land-cover classification using an object-based support vector machine and a rule-based approach. Int J Remote Sens 34(1):1–26. https://doi.org/10.1080/01431161.2012.700133

NRSC (2011) RESOURCESAT-2 Manual. http://www.euromap.de/download/R2_data_user_handbook.pdf

de Oliveira Silveira EM, de Menezes MD, Acerbi Júnior FW, Santos Terra MCN, de Mello JM (2017) Assessment of geostatistical features for object-based image classification of contrasted landscape vegetation cover. J Appl Remote Sens 11(3):036004. https://doi.org/10.1117/1.JRS.11.036004

Omar H, Misman MA, Kassim AR (2017) Synergetic of PALSAR-2 and Sentinel-1A SAR polarimetry for retrieving aboveground biomass in dipterocarp forest of Malaysia. Appl Sci. https://doi.org/10.3390/app7070675

Parihar N, Rathore VS, Mohan S (2016) Combining ALOS PALSAR and AVNIR-2 data for effective land use/land cover classification in Jharia coalfields region. Int J Image Data Fusion. https://doi.org/10.1080/19479832.2016.1273258

Sambodo KA, Indriasari N (2013) Land cover classification of ALOS PALSAR data using support vector machine. Int J Remote Sens Earth Sci 10(1):9–18

Solberg AHS, Jain AK, Taxt T (1994) Multisource classification of remotely sensed data: fusion of Landsat TM and SAR images. IEEE Trans Geosci Remote Sens 32(4):768–778. https://doi.org/10.1109/36.298006

Stramondo S, Bignami C, Chini M et al (2006) Satellite radar and optical remote sensing for earthquake damage detection: results from different case studies. Int J Remote Sens 27(20):4433–4447. https://doi.org/10.1080/01431160600675895

Thanabalan P, Vidhya R (2016) A synergistic approach for soil moisture estimation using modified Dubois model with dual polarized SAR and optical satellite data. Land Surf Cryosphere Remote Sens III 9877:98770Y. https://doi.org/10.1117/12.2223751

Vapnik VN (1999) An overview of statistical learning theory. IEEE Trans Neural Netw 10(5):988–999

Verhegghen A, Eva H, Ceccherini G et al (2016) The potential of Sentinel satellites for burnt area mapping and monitoring in the Congo Basin forests. Remote Sens 8(12):986. https://doi.org/10.3390/rs8120986

Walsh SJ, McCleary AL, Mena CF, Shao Y, Tuttle JP, González A, Atkinson R (2008) QuickBird and Hyperion data analysis of an invasive plant species in the Galapagos Islands of Ecuador: implications for control and land use management. Remote Sens Environ 112(5):1927–1941

Ward D, Phinn SR, Murray AT (2000) Monitoring growth in rapidly urbanizing areas using remotely sensed data. Prof Geogr 52(3):371–386

Wei L, Hu Z, Gu M et al (2012) Texture feature analysis in oil spill monitoring by SAR image. In: 2012 20th international conference on geoinformatics. IEEE, pp 1–6. https://doi.org/10.1109/Geoinformatics.2012.6270284

Wu S, Xu B, Wang L (2006) Urban land-use classification using variogram-based analysis with an aerial photograph. Photogramm Eng Remote Sens 72(7):813–822. https://doi.org/10.14358/PERS.72.7.813

Wu X, Peng J, Shan J, Cui W (2015) Evaluation of semivariogram features for object-based image classification. Geospat Inf Sci 18(4):159–170. https://doi.org/10.1080/10095020.2015.1116206

Zakeri H, Yamazaki F, Liu W (2017) Texture analysis and land cover classification of Tehran using polarimetric synthetic aperture radar imagery. Appl Sci. https://doi.org/10.3390/app7050452

Zhang H, Lin H, Li Y (2015) Impacts of feature normalization on optical and SAR data fusion for land use/land cover classification. IEEE Geosci Remote Sens Lett 12(5):1061–1065. https://doi.org/10.1109/LGRS.2014.2377722

Zou T, Yang W, Dai D, Sun H (2010) Polarimetric SAR image classification using multifeatures combination and extremely randomized clustering forests. EURASIP J Adv Signal Process. https://doi.org/10.1155/2010/465612

Acknowledgements

We are grateful to NRSC-ISRO, NASA and ESA for providing the optical and SAR datasets for this study. We sincerely thank Dr. Kari Ramu and Dr. Mehmuna Bagum, Scientists, National Centre for Coastal Research (NCCR), Ministry of Earth Sciences, Government of India, for their valuable suggestions.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Muthukumarasamy, I., S. Shanmugam, R. & Usha, T. Incorporation of textural information with SAR and optical imagery for improved land cover mapping. Environ Earth Sci 78, 643 (2019). https://doi.org/10.1007/s12665-019-8654-9

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s12665-019-8654-9