Abstract

Each urban land cover type has a unique thermal pattern. Therefore, thermal remote sensing can be used over urban areas to indicate temperature differences and to compare the relationships between urban surface temperatures and land cover types. Meanwhile, synthetic-aperture radar (SAR) sensors are playing an increasingly important role in land cover classification because they can operate day and night and through cloud cover, and because they capture the structure and dielectric properties of earth surface materials. In this research, a feature-level fusion of a SAR image and all bands (optical and thermal) of Landsat 8 data is proposed to improve the accuracy of urban land cover classification. In the proposed object-based image analysis algorithm, segmented regions of both the Landsat 8 and SAR images are used for knowledge-based classification based on land surface temperatures, spectral relationships between the thermal and optical bands, and SAR texture features measured in the gray-level co-occurrence matrix space. The evaluation shows that feature-level fusion of the Landsat 8 and SAR data improves the overall accuracy by about 2.48 and the kappa coefficient by about 0.06.


Introduction

Meteorological and climatic conditions in urban areas can be controlled or improved through careful urban planning and management. Object recognition and land cover classification based on recent advances in airborne and space-borne sensor technologies and digital imaging techniques are powerful tools for urban planning (Abbate et al. 1995; Alavipanah 2008).

Excessive use of fossil fuel energy, greenhouse gas emissions, and the surface and atmospheric modifications caused by urbanization generally make urban areas warmer than their non-urbanized surroundings. This phenomenon is known as the urban heat island. Because of heat islands, thermal remote sensing data have been used over urban areas to indicate temperature differences and to compare the relationships between urban surface temperatures and land cover types (Voogt and Oke 2003). Meanwhile, synthetic-aperture radar (SAR) sensors are playing an increasingly important role in land cover classification because they can operate day and night and through cloud cover, and because they capture the structure and dielectric properties of earth surface materials (Zhu et al. 2012).

Data fusion is an effective way to synergistically combine information from various sources and thereby provide a better understanding of a given scene (Esteban et al. 2004; Stathaki 2008; Tabib Mahmoudi et al. 2013; Huang and Zhang 2012; Ran et al. 2012; Huang et al. 2012; Ye et al. 2014; Tabib Mahmoudi et al. 2014). Because optical and SAR remote sensing data have different characteristics, they can complement each other. Thus, fusing optical and SAR data increases the capabilities of object recognition algorithms in urban areas (Abbate et al. 1995; Borghys et al. 2007).

Because many land cover classes in an urban environment have similar spectral signatures, textural and structural information such as energy, entropy, contrast and topological relationships must be exploited to produce accurate classification maps. Many researchers have already investigated the potential of object-based image analysis (OBIA) approaches for dealing with high-resolution images and the complexities of urban areas (Blaschke 2010; Laliberte et al. 2012; Tabib Mahmoudi et al. 2013).

In this research, an object-based image analysis methodology is proposed for feature-level fusion of pan-sharpened Landsat 8 optical and thermal bands and a SAR image in order to improve the accuracy of land cover classification maps in urban areas.

Object Recognition Algorithm

The urban object recognition method proposed in this research is an object-based image analysis strategy composed of two main steps: image segmentation and knowledge-based classification of the segmented regions (Fig. 1).

Image Segmentation

In the first step of the proposed object-based image analysis strategy, a multi-resolution segmentation technique is applied separately to the SAR and Landsat 8 images to partition each of them into image regions. The multi-resolution segmentation procedure starts with single-pixel image objects and repeatedly merges pairs of adjacent image objects into larger ones. The merging decision is based on a local homogeneity criterion that describes the similarity between adjacent image objects (Baatz and Schäpe 2000; Tabib Mahmoudi et al. 2014).
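The bottom-up merging idea can be illustrated with a toy region-merging sketch. It is not eCognition's implementation and makes several assumptions: a single band, 4-connectivity, a greedy global choice of the cheapest adjacent pair, and only the spectral (color) part of the heterogeneity criterion; the shape and compactness terms are omitted. The stopping rule (merge while the heterogeneity increase stays below the squared scale parameter) follows common descriptions of the method. The function names are hypothetical, and the loop is only practical for tiny arrays.

```python
import numpy as np


def heterogeneity_increase(v1: np.ndarray, v2: np.ndarray) -> float:
    """Spectral heterogeneity increase caused by merging two regions:
    n_merged * std_merged - (n1 * std1 + n2 * std2), single band only."""
    merged = np.concatenate([v1, v2])
    return float(merged.size * merged.std()
                 - (v1.size * v1.std() + v2.size * v2.std()))


def adjacent_label_pairs(labels: np.ndarray) -> set:
    """Set of (smaller, larger) label pairs sharing a 4-connected edge."""
    pairs = set()
    for a, b in ((labels[:, :-1], labels[:, 1:]), (labels[:-1, :], labels[1:, :])):
        differs = a != b
        for x, y in zip(a[differs], b[differs]):
            pairs.add((min(x, y), max(x, y)))
    return pairs


def region_merge(image: np.ndarray, scale: float) -> np.ndarray:
    """Start from one-pixel regions and repeatedly merge the adjacent pair
    with the smallest heterogeneity increase while it is below scale**2."""
    labels = np.arange(image.size).reshape(image.shape)
    while True:
        best = None
        for a, b in adjacent_label_pairs(labels):
            cost = heterogeneity_increase(image[labels == a], image[labels == b])
            if best is None or cost < best[0]:
                best = (cost, a, b)
        if best is None or best[0] >= scale ** 2:
            break  # single region left, or every possible merge is too costly
        labels[labels == best[2]] = best[1]
    return labels
```

For a 2 × 4 image made of a block of 10s next to a block of 50s, a small scale (e.g. 5) keeps the two blocks as separate regions, while a large scale merges them into one.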

Knowledge-Based Classification

For knowledge-based classification, the properties of each segmented region must be measured from the input data. In this research, a region's properties are its per-segment spectral and textural characteristics. An optimum subset of spectral and textural features must then be selected from all of the generated ones through diversity analysis. In this phase of our investigation, the selection is based on visual inspection by an expert operator and on testing different thresholds for each feature.
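A minimal sketch of deriving per-segment properties follows. It assumes a label image from segmentation and a per-pixel feature image on the same grid, and it uses the segment mean as the region property. The mean is an assumption; other per-segment statistics are possible. `per_segment_mean` and `segments_in_range` are hypothetical helpers. The second function shows a single threshold test of the kind an operator might try for one feature.

```python
import numpy as np


def per_segment_mean(feature: np.ndarray, labels: np.ndarray) -> dict:
    """Mean feature value of every segment, keyed by segment label."""
    # Map arbitrary (possibly non-contiguous) labels to 0..k-1.
    ids, inverse = np.unique(np.ravel(labels), return_inverse=True)
    values = np.ravel(feature).astype(float)
    sums = np.bincount(inverse, weights=values, minlength=ids.size)
    counts = np.bincount(inverse, minlength=ids.size)
    return dict(zip(ids.tolist(), (sums / counts).tolist()))


def segments_in_range(means: dict, low: float, high: float) -> list:
    """Labels of segments whose mean lies in [low, high] (one threshold test)."""
    return sorted(label for label, m in means.items() if low <= m <= high)
```

For example, with labels `[[0, 0, 2], [0, 2, 2]]` and feature values `[[1, 3, 10], [5, 20, 30]]`, the segment means are 3.0 for segment 0 and 20.0 for segment 2.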

Feature Measurement

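In the proposed object-based image analysis algorithm, spectral features in the form of simple ratios (SR) and normalized difference indices (NDI) are measured from the thermal and optical bands of the Landsat 8 images (Eqs. 1, 2), where \( B_{i} \) and \( B_{j} \) denote two Landsat 8 bands:

$$ {\text{SR}}_{i,j} = \frac{B_{i}}{B_{j}} \tag{1} $$

$$ {\text{NDI}}_{i,j} = \frac{B_{i} - B_{j}}{B_{i} + B_{j}} \tag{2} $$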
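The two generic feature forms can be computed per pixel (or per segment) with a short NumPy sketch. The helper names are hypothetical, and returning NaN where the denominator is zero is a design choice of this sketch rather than something stated in the text.

```python
import numpy as np


def _safe_divide(num, den) -> np.ndarray:
    """Element-wise num / den, returning NaN where den == 0."""
    num, den = np.broadcast_arrays(np.asarray(num, dtype=float),
                                   np.asarray(den, dtype=float))
    out = np.full(num.shape, np.nan)
    np.divide(num, den, out=out, where=den != 0)
    return out


def simple_ratio(b_i, b_j) -> np.ndarray:
    """SR_{i,j} = B_i / B_j."""
    return _safe_divide(b_i, b_j)


def normalized_difference(b_i, b_j) -> np.ndarray:
    """NDI_{i,j} = (B_i - B_j) / (B_i + B_j)."""
    b_i = np.asarray(b_i, dtype=float)
    b_j = np.asarray(b_j, dtype=float)
    return _safe_divide(b_i - b_j, b_i + b_j)
```

NDVI is the special case `normalized_difference(nir, red)`. For reflectances NIR = 0.5 and Red = 0.1, it gives 0.4 / 0.6 ≈ 0.667.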

The following optical and thermal spectral features are selected for use in the proposed knowledge base:

- Temperature–vegetation index (TVX) is negatively related to surface moisture conditions. The major advantage of TVX is that it integrates both the reflective and thermal bands of remotely sensed data, which provides more spectral information for drought detection (Amiri et al. 2009; Jiang and Tian 2010; Orhan et al. 2014; Jiang et al. 2015).

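where NDVI is the normalized difference vegetation index computed from the red and near-infrared bands of the Landsat 8 image (Eq. 4):

$$ {\text{NDVI}} = \frac{{\text{NIR}} - {\text{Red}}}{{\text{NIR}} + {\text{Red}}} \tag{4} $$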

A standard algorithm is applied to retrieve the land surface temperature (LST). First, the digital numbers (DN) are converted to spectral radiance using Eq. 5:

$$ L_{\lambda} = \frac{L_{\max} - L_{\min}}{Q_{\text{CalMax}} - Q_{\text{CalMin}}}\left( Q_{\text{Cal}} - Q_{\text{CalMin}} \right) + L_{\min} \tag{5} $$

where \( L_{\max} \) and \( L_{\min} \) are the spectral radiances scaled to \( Q_{\text{CalMax}} \) and \( Q_{\text{CalMin}} \), \( Q_{\text{CalMin}} = 0 \), \( Q_{\text{CalMax}} = 65535 \) and \( Q_{\text{Cal}} \) is the digital number. Then, the temperature is obtained from Eq. 6:

$$ T = \frac{K_{2}}{\ln\left( \frac{K_{1}}{L_{\lambda}} + 1 \right)} \tag{6} $$

where \( K_{1} \) and \( K_{2} \) are the calibration constants of each Landsat 8 thermal band, \( L_{\lambda} \) is the spectral radiance of that band, and \( T \) is the at-sensor (brightness) temperature in kelvin. Finally, the land surface temperature (LST) is calculated in ERDAS Imagine software.

- Chlorophyll vegetation index (CVI) is a spectral feature based on the relations between the near-infrared, red and green bands (Hunt et al. 2013).

$$ {\text{CVI}} = {\text{NIR}}\,\frac{\text{Red}}{{\text{Green}}^{2}} \tag{7} $$

- Coloration index (CI) is a spectral feature based on the relations between the red and blue bands (Vina et al. 2011).

$$ {\text{CI}} = \frac{{\text{Red}} - {\text{Blue}}}{\text{Red}} \tag{8} $$

- SR (b11, b10) is the simple ratio between the two thermal bands (10 and 11) of Landsat 8 data:

$$ {\text{SR}}_{11,10} = \frac{{\text{Band}}_{11}}{{\text{Band}}_{10}} \tag{9} $$
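The thermal and spectral features above can be sketched in NumPy as follows. Several assumptions apply. Eq. 3 (the TVX definition) is not reproduced in the text, so `tvx` assumes the simple-ratio form TVX = LST / NDVI. `dn_to_radiance` follows the linear rescaling form of Eq. 5, and `brightness_temperature` follows Eq. 6, which yields at-sensor brightness temperature rather than emissivity-corrected LST. The band-10 constants are the values that commonly appear in Landsat 8 MTL metadata (`K1_CONSTANT_BAND_10`, `K2_CONSTANT_BAND_10`) and should be checked against each scene's own metadata. All function names are hypothetical.

```python
import numpy as np

# Example TIRS band-10 calibration constants as commonly listed in Landsat 8
# MTL files; verify against the metadata of the scene actually used.
K1_B10, K2_B10 = 774.8853, 1321.0789


def _ratio(num, den) -> np.ndarray:
    """Element-wise num / den, NaN where den == 0."""
    num, den = np.broadcast_arrays(np.asarray(num, dtype=float),
                                   np.asarray(den, dtype=float))
    out = np.full(num.shape, np.nan)
    np.divide(num, den, out=out, where=den != 0)
    return out


def dn_to_radiance(qcal, lmin, lmax, qcal_min=0.0, qcal_max=65535.0):
    """Eq. 5: linear rescaling of digital numbers to spectral radiance."""
    qcal = np.asarray(qcal, dtype=float)
    return (lmax - lmin) / (qcal_max - qcal_min) * (qcal - qcal_min) + lmin


def brightness_temperature(radiance, k1, k2):
    """Eq. 6: T = K2 / ln(K1 / L + 1), in kelvin."""
    radiance = np.asarray(radiance, dtype=float)
    return k2 / np.log(k1 / radiance + 1.0)


def tvx(lst, ndvi):
    """ASSUMED form TVX = LST / NDVI (Eq. 3 is not given in the text)."""
    return _ratio(lst, ndvi)


def cvi(nir, red, green):
    """Eq. 7: CVI = NIR * Red / Green**2."""
    return _ratio(np.asarray(nir, float) * np.asarray(red, float),
                  np.asarray(green, float) ** 2)


def coloration_index(red, blue):
    """Eq. 8: CI = (Red - Blue) / Red."""
    red = np.asarray(red, dtype=float)
    return _ratio(red - np.asarray(blue, dtype=float), red)


def sr_11_10(band11, band10):
    """Eq. 9: simple ratio of TIRS band 11 to band 10."""
    return _ratio(band11, band10)
```

With these constants, a band-10 radiance of 10 W/(m²·sr·µm) corresponds to a brightness temperature of roughly 302.8 K.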

For feature extraction from the SAR image, the gray-value relationships between each pixel and its neighbors within the pre-identified segmented regions are used. Many researchers have used texture analysis methods in SAR-based object recognition algorithms (Zhu et al. 2012). In this paper, entropy, contrast, correlation and mean, measured in the gray-level co-occurrence matrix (GLCM) space, are selected as the optimum SAR features based on their ability to recognize each of the urban object types (Table 1).
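The four GLCM features can be sketched for one segmented region as follows. The sketch uses a symmetric, normalized co-occurrence matrix built only from pixel pairs that lie entirely inside the region mask. It also makes several assumptions: a single non-negative pixel offset (implementations often combine several directions), linear quantization to `levels` gray levels, natural-log entropy, and a correlation of 1.0 reported for a constant region, where it is undefined. The function names are hypothetical.

```python
import numpy as np


def glcm(image, mask, levels=8, offset=(0, 1)):
    """Symmetric, normalized GLCM of the pixel pairs inside `mask`.
    `offset` = (row step, column step), both assumed non-negative."""
    image = np.asarray(image, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    lo, hi = image[mask].min(), image[mask].max()
    if hi > lo:  # quantize the region's gray values into 0..levels-1
        scaled = (image - lo) / (hi - lo) * (levels - 1)
        q = np.clip(np.rint(scaled), 0, levels - 1).astype(int)
    else:
        q = np.zeros(image.shape, dtype=int)
    dr, dc = offset
    rows, cols = image.shape
    a, b = q[: rows - dr, : cols - dc], q[dr:, dc:]
    valid = mask[: rows - dr, : cols - dc] & mask[dr:, dc:]
    counts = np.zeros((levels, levels))
    np.add.at(counts, (a[valid], b[valid]), 1.0)
    counts = counts + counts.T  # count each pair in both directions
    total = counts.sum()
    return counts / total if total > 0 else counts


def glcm_features(p):
    """GLCM mean, contrast, entropy and correlation of a normalized GLCM."""
    i, j = np.indices(p.shape)
    mean = float((i * p).sum())  # row and column means coincide (p symmetric)
    var = float(((i - mean) ** 2 * p).sum())
    contrast = float(((i - j) ** 2 * p).sum())
    nz = p[p > 0]
    entropy = float(-(nz * np.log(nz)).sum())
    correlation = (float(((i - mean) * (j - mean) * p).sum() / var)
                   if var > 0 else 1.0)
    return {"mean": mean, "contrast": contrast,
            "entropy": entropy, "correlation": correlation}
```

As a check, a horizontal 0/1 checkerboard with two gray levels gives contrast 1, entropy ln 2 and correlation −1.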

These measured features form a knowledge base for urban object recognition and classification of the segmented image regions. After the knowledge base is generated, the proposed methodology performs feature-level fusion to exploit the capabilities of both the SAR and Landsat 8 images and improve the accuracy of the classification map. The object classification is performed by encapsulating the knowledge base in a rule set (Table 2).
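A rule set of this kind can be encoded as an ordered list of classes, each with threshold conditions on the fused per-region features. Landsat 8 spectral features and SAR texture features sit side by side in one feature dictionary per region. The rules are checked in order, and the first class whose conditions all hold wins. Every threshold value, comparison direction and feature key below is a HYPOTHETICAL placeholder chosen only to make the sketch runnable. None of them reproduces the paper's Table 2.

```python
import operator
from typing import Dict, List, Tuple

OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge}

# (class label, [(feature name, comparison, threshold), ...])
RuleSet = List[Tuple[str, List[Tuple[str, str, float]]]]

# HYPOTHETICAL placeholder rules and thresholds, NOT the values of Table 2.
EXAMPLE_RULES: RuleSet = [
    ("shadow",     [("sar_glcm_mean", "<", 20.0), ("CVI", "<", 1.0)]),
    ("water",      [("CI", "<", 0.0), ("sar_glcm_entropy", "<", 1.5)]),
    ("vegetation", [("CVI", ">=", 2.0), ("sar_glcm_entropy", ">=", 1.5)]),
    ("building",   [("SR_11_10", "<", 0.98), ("sar_glcm_contrast", ">=", 50.0)]),
    ("road",       [("SR_11_10", "<", 0.98)]),
]


def classify_region(features: Dict[str, float],
                    rules: RuleSet = EXAMPLE_RULES) -> str:
    """Return the first class whose conditions all hold, else 'unclassified'.
    `features` holds the fused Landsat 8 and SAR features of one region."""
    for label, conditions in rules:
        if all(OPS[op](features[name], thr) for name, op, thr in conditions):
            return label
    return "unclassified"
```

Ordering the rules lets classes that are easy to isolate be removed first, so later and broader rules only see the remaining regions.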

Experiments and Results

Dataset

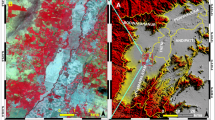

The potential of the proposed object recognition and classification methodology is evaluated on Landsat 8 and SAR data over an urban area in Barcelona, Spain. The SAR data were collected in May 2011 with 2.5 m spatial resolution. They are TerraSAR-X StripMap data in the HH polarization channel with an incidence angle of 35.2°. The Landsat 8 data were collected in June 2014 and pan-sharpened with a SPOT 5 panchromatic image of the same region. Pan-sharpening increases the spatial resolution of the multispectral images and thereby improves object recognition results. The pan-sharpened Landsat 8 image, with all of its optical and thermal spectral channels, has adequate spatial and spectral resolution for object recognition (Fig. 2).
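The text does not state which pan-sharpening method was used. Purely to illustrate how a panchromatic band injects spatial detail, the sketch below implements a Brovey-style ratio fusion. This is a swapped-in technique, not necessarily the authors' choice. It assumes co-registered inputs, a panchromatic grid exactly `factor` times finer than the multispectral grid, nearest-neighbor upsampling, and intensity taken as the band mean. The function names are hypothetical.

```python
import numpy as np


def upsample_nearest(band: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbor upsampling of a 2-D band by an integer factor."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def brovey_pansharpen(ms, pan, factor: int, eps: float = 1e-12) -> np.ndarray:
    """Brovey-style fusion: scale each upsampled multispectral band by
    pan / intensity, where intensity is the mean of the upsampled bands.
    ms: (bands, rows, cols); pan: (rows * factor, cols * factor)."""
    ms = np.asarray(ms, dtype=float)
    pan = np.asarray(pan, dtype=float)
    up = np.stack([upsample_nearest(b, factor) for b in ms])
    if up.shape[1:] != pan.shape:
        raise ValueError("pan must be exactly `factor` times larger than ms")
    intensity = up.mean(axis=0)
    return up * (pan / (intensity + eps))  # eps avoids division by zero
```

If the panchromatic band equals the upsampled intensity, the output reduces to the upsampled multispectral bands. Any extra spatial detail in the panchromatic band is transferred proportionally to every band.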

The study area is a city containing a number of large, tall buildings and a mixture of community parks and private housing.

Obtained Results

In the segmentation stage, the multi-resolution segmentation algorithm is applied to each image individually using eCognition software, with the scale, shape and compactness parameters set to 250, 0.8 and 0.9, respectively. Then, the spectral and textural features described in the “Feature Measurement” section are measured for all image regions of the Landsat 8 and SAR data.
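The scale, shape and compactness parameters enter the merging criterion of multi-resolution segmentation. As commonly described for the method of Baatz and Schäpe (2000), the fusion value \( f \) of two adjacent objects combines color and shape heterogeneity. eCognition's internal implementation may differ in detail. In the formulas below, \( n \) is the pixel count, \( \sigma_{c} \) the standard deviation of band \( c \), \( w_{c} \) the band weight, \( l \) the object perimeter, \( b \) the perimeter of its bounding box, and \( s \) the scale parameter; subscripts 1 and 2 denote the two candidate objects and \( m \) the merged object. Substituting the parameters used here gives:

$$ \begin{aligned} f &= (1 - w_{\text{shape}})\,\Delta h_{\text{color}} + w_{\text{shape}}\,\Delta h_{\text{shape}}, \qquad \Delta h_{\text{shape}} = w_{\text{cmpct}}\,\Delta h_{\text{cmpct}} + (1 - w_{\text{cmpct}})\,\Delta h_{\text{smooth}} \\ \Delta h_{\text{color}} &= \sum_{c} w_{c}\left[ n_{m}\sigma_{c,m} - \left( n_{1}\sigma_{c,1} + n_{2}\sigma_{c,2} \right) \right] \\ \Delta h_{\text{cmpct}} &= n_{m}\frac{l_{m}}{\sqrt{n_{m}}} - \left( n_{1}\frac{l_{1}}{\sqrt{n_{1}}} + n_{2}\frac{l_{2}}{\sqrt{n_{2}}} \right), \qquad \Delta h_{\text{smooth}} = n_{m}\frac{l_{m}}{b_{m}} - \left( n_{1}\frac{l_{1}}{b_{1}} + n_{2}\frac{l_{2}}{b_{2}} \right) \\ f &= 0.2\,\Delta h_{\text{color}} + 0.8\left( 0.9\,\Delta h_{\text{cmpct}} + 0.1\,\Delta h_{\text{smooth}} \right) = 0.2\,\Delta h_{\text{color}} + 0.72\,\Delta h_{\text{cmpct}} + 0.08\,\Delta h_{\text{smooth}} \\ &\text{merge only if } f < s^{2} = 250^{2} = 62500 \end{aligned} $$

With a shape weight of 0.8, only 20% of the criterion depends on spectral homogeneity, so object geometry (mostly compactness) dominates the merging decisions.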

Figure 3 shows the spectral and textural features measured on the input data that are used for classification. The thresholds for each object class are set semi-automatically by an expert operator through quantitative and visual analysis of the features in the feature view of eCognition software.

As Fig. 3 shows, after applying the defined thresholds, the CVI spectral feature can filter out non-vegetation regions such as shadow and ground. CI is used to detect vegetation and water bodies. According to the expert investigations, thermal features such as SR (b11, b10) and TVX can detect warmer structures such as built-up areas, whereas SAR texture images are useful for recognizing vegetation and shadow areas. Therefore, fusing these two kinds of data, which have different natures, can help classify urban objects more accurately.

In the second stage of the proposed object-based image analysis algorithm, buildings, roads, vegetation, water bodies and shadow areas are recognized based on the feature-level fusion of Landsat 8 and SAR data. To investigate the capabilities of the proposed feature fusion, these results are compared with the results of OBIA performed on Landsat 8 using spectral features only (Fig. 4).

The visual comparison shows improvements in road and vegetation detection after feature-level fusion. For the quantitative evaluation of the results, sample segmented regions of the predefined object classes are manually selected by an expert operator and compared with the corresponding results of the object recognition algorithm. The comparison is based on the numbers of correctly detected pixels (true positives), wrongly detected pixels (false positives) and missed pixels (false negatives) after object recognition.
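The per-class criteria can be computed directly from the three pixel counts. This sketch uses the usual definitions, since the paper does not spell out its formulas: correctness = TP / (TP + FP), completeness = TP / (TP + FN) and quality = TP / (TP + FP + FN). Completeness is a commonly paired measure added here for context. The function name is hypothetical.

```python
def object_metrics(tp: int, fp: int, fn: int) -> dict:
    """Pixel-based correctness, completeness and quality for one class."""
    nan = float("nan")
    return {
        "correctness": tp / (tp + fp) if tp + fp else nan,
        "completeness": tp / (tp + fn) if tp + fn else nan,
        "quality": tp / (tp + fp + fn) if tp + fp + fn else nan,
    }
```

For example, 80 true positives, 20 false positives and 10 false negatives give a correctness of 0.80, a completeness of about 0.89 and a quality of about 0.73.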

According to Table 3, adding SAR texture features to the OBIA based on Landsat 8 spectral features improves the overall accuracy by 2.48 and the kappa coefficient by 0.06. In addition, the correctness and quality criteria are determined from the pixel counts of each object class.
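Overall accuracy and the kappa coefficient are typically derived from a confusion matrix. The sketch below uses the standard definitions, where \( p_o \) is the observed agreement and \( p_e \) the chance agreement from the row and column totals, and kappa = \( (p_o - p_e)/(1 - p_e) \). It returns overall accuracy as a fraction; multiply by 100 for percent. The function name and the example matrix are hypothetical.

```python
import numpy as np


def overall_accuracy_and_kappa(confusion):
    """Overall accuracy (fraction) and Cohen's kappa from a square
    confusion matrix (rows: reference classes, columns: predicted)."""
    cm = np.asarray(confusion, dtype=float)
    n = cm.sum()
    p_o = np.trace(cm) / n                                   # observed agreement
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / n ** 2   # chance agreement
    kappa = (p_o - p_e) / (1.0 - p_e)
    return float(p_o), float(kappa)
```

For the two-class matrix `[[45, 5], [10, 40]]`, p_o = 0.85 and p_e = 0.5, so kappa = 0.35 / 0.5 = 0.7.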

The per-class comparison shows improvements in road and vegetation detection after feature-level fusion of the SAR and Landsat 8 data, but no considerable improvement for the other object classes. The building class even shows some degradation because of spectral similarities between asphalt roads and building roofs, a confusion that could be reduced by using elevation data (Fig. 5).

Discussion and Conclusion

An object-based image analysis strategy is proposed for feature-level fusion of Landsat 8 spectral features and SAR texture features. Because of the spectral and textural similarities between urban objects such as building roofs and road surfaces, generating an accurate classification map of urban areas is difficult. Fusing the capabilities of SAR data in deriving soil moisture and surface roughness with the thermal patterns derived from thermal infrared data is investigated as a way to produce a better classification map. The results of the feature fusion of SAR and Landsat 8 data show some improvements in object recognition, especially in the vegetation and road classes, which are the classes affected by the SAR texture features.

Despite the improved accuracy, the method still needs further development in several areas: defining contextual information such as neighborhood relations for each region, using digital elevation models as input data, and applying artificial intelligence techniques such as multi-agent systems to reduce the classification errors caused by segmentation limitations. The proposed feature-level fusion method can be transferred to the object-based image analysis of other remotely sensed datasets. Only the spectral features may need to be adapted to the spectral capabilities of the new datasets.

References

Abbate, G., Kowalewska, H., Borfecchia, F., & Della Rocca, B. (1995). Land cover characteristics of Rome urban and countryside area as observed by Landsat TM (bands 1–4) and ERS-1 SAR. In Proceedings of the 7th URSI Commission F Open Symposium, Wave Propagation and Remote Sensing, Ahmedabad, India, 20–24 Nov.

Alavipanah, S. K. (2008). Thermal remote sensing and its application in the earth sciences. Tehran: University of Tehran Press.

Amiri, R., Weng, Q. H., Alimohammadi, A., & Alavipanah, S. K. (2009). Spatial–temporal dynamics of land surface temperature in relation to fractional vegetation cover and land use/cover in the Tabriz urban area, Iran. Remote Sensing of Environment, 113, 2606–2617.

Baatz, M., & Schäpe, A. (2000). Multi-resolution segmentation: An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informationsverarbeitung XII: Beiträge zum AGIT-Symposium Salzburg, Austria (pp. 12–23).

Blaschke, T. (2010). Object based image analysis for remote sensing. ISPRS Journal of Photogrammetry and Remote Sensing, 65(1), 2–16.

Borghys, D., Shimoni, M., & Perneel, Ch. (2007). Change detection in urban scenes by fusion of SAR and hyperspectral data. In Proceedings of SPIE.

Esteban, J., Starr, A., Willetts, R., Hannah, P., & Bryanston-Cross, P. (2004). A review of data fusion models and architectures: Towards engineering guidelines. Neural Computing & Applications, 14(4), 273–281.

Huang, B., Zhang, H., & Yu, L. (2012). Improving Landsat ETM+ urban area mapping via spatial and angular fusion with MISR multi-angle observations. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 5(1), 101–109.

Huang, X., & Zhang, L. (2012). A multilevel decision fusion approach for urban mapping using very high resolution multi/hyperspectral imagery. International Journal of Remote Sensing, 33(11), 3354–3372.

Hunt, E. R., Doraiswamy, P. C., McMurtrey, J. E., Daughtry, C. S. T., Perry, E. M., & Akhmedov, B. (2013). A visible band index for remote sensing leaf chlorophyll content at the canopy scale. International Journal of Applied Earth Observation and Geoinformation, 21, 103–112.

Jiang, J., & Tian, G. (2010). Analysis of the impact of land use/land cover change on land surface temperature with remote sensing. Procedia Environmental Sciences, 2, 571–575.

Jiang, Y., Fu, P., & Weng, Q. (2015). Assessing the impacts of urbanization-associated land use/cover change on land surface temperature and surface moisture: A case study in the Midwestern United States. Remote Sensing, 7(4), 4880–4898. https://doi.org/10.3390/rs70404880.

Laliberte, A. S., Browning, D. M., & Rango, A. (2012). A comparison of three feature selection methods for object-based classification of sub-decimeter resolution UltraCam-L imagery. International Journal of Applied Earth Observation and Geoinformation, 15, 70–78.

Orhan, O., Ekercin, S., & Dadaser-Celik, F. (2014). Use of Landsat land surface temperature and vegetation indices for monitoring drought in the Salt Lake Basin Area, Turkey. The Scientific World Journal. https://doi.org/10.1155/2014/142939.

Ran, Y. H., Li, X., Lu, L., & Li, Z. Y. (2012). Large-scale land cover mapping with the integration of multisource information based on the Dempster–Shafer theory. International Journal of Geographical Information Science, 26(1), 169–191.

Stathaki, T. (2008). Image fusion: Algorithms and applications. Academic Press (Elsevier).

Tabib Mahmoudi, F., Samadzadegan, F., & Reinartz, P. (2013). Object oriented image analysis based on multi-agent recognition system. Computers & Geosciences, 54, 219–230.

Tabib Mahmoudi, F., Samadzadegan, F., & Reinartz, P. (2014). Object recognition based on the context aware decision level fusion in multi views imagery. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing. https://doi.org/10.1109/jstars.2014.2362103.

Vina, A., Gitelson, A. A., Nguy-Robertson, A. L., & Peng, Y. (2011). Comparison of different vegetation indices for the remote assessment of green leaf area index of crops. Remote Sensing of Environment, 115, 3468–3478.

Voogt, J. A., & Oke, T. R. (2003). Thermal remote sensing of urban climates. Remote Sensing of Environment, 86, 370–384.

Ye, Z., Prasad, S., Li, W., He, M., & Fowler, J. E. (2014). Classification based on 3D DWT and decision fusion for hyperspectral image analysis. IEEE Geoscience and Remote Sensing Letters, 11(1), 173–177.

Zhu, Z., Woodcock, C. E., Rogan, J., & Kellndorfer, J. (2012). Assessment of spectral, polarimetric, temporal, and spatial dimensions for urban and peri-urban land cover classification using Landsat and SAR data. Remote Sensing of Environment, 117, 72–82.


Cite this article

Tabib Mahmoudi, F., Arabsaeedi, A. & Alavipanah, S.K. Feature-Level Fusion of Landsat 8 Data and SAR Texture Images for Urban Land Cover Classification. J Indian Soc Remote Sens 47, 479–485 (2019). https://doi.org/10.1007/s12524-018-0914-8
