Abstract

With data comes uncertainty, which is a widespread and frequent phenomenon in data science and analysis. The amount of information available to us is growing exponentially, owing to never-ending technological advancements. Data visualization is one of the ways to convey that information effectively. Since the error is intrinsic to data, users cannot ignore it in visualization. Failing to observe it in visualization can lead to flawed decision-making by data analysts. Data scientists know that missing out on uncertainty in data visualization can lead to misleading conclusions about data accuracy. In most cases, visualization approaches assume that the information represented is free from any error or unreliability; however, this is rarely true. The goal of uncertainty visualization is to minimize the errors in judgment and represent the information as accurately as possible. This survey discusses state-of-the-art approaches to uncertainty visualization, along with the concept of uncertainty and its sources. From the study of uncertainty visualization literature, we identified popular techniques accompanied by their merits and shortcomings. We also briefly discuss several uncertainty visualization evaluation strategies. Finally, we present possible future research directions in uncertainty visualization, along with the conclusion.

Graphic Abstract

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the exponential rise in data size and complexity, there is a demand for efficient representations of vast amounts of information, called big data. Data visualization involves creating a visual representation of data using images, pictures, diagrams, or animations to communicate abstract and concrete messages. Data visualization has emerged as a potent tool to explore, manage, and understand these massive, incredibly complex data sets. Effective visual representation of data can go a long way in making it more understandable and approachable to analysts and decision-makers by helping them make better and more informed decisions. In most cases, visualization is often thought to be an abstract visual representation of the actual information. However, that’s not always possible in real-world scenarios, as no data can be sufficiently reliable and complete. There is bound to be some degree of uncertainty associated with any data (Pang et al. 1997). Uncertainty is present everywhere, such as economic uncertainties due to the lack of precise measurements, weather uncertainties due to lack of prediction accuracy, medical diagnosis uncertainties due to lack of precision, and sensor data uncertainties due to inaccuracy, error, or lack of completeness in measurements. The key challenge is identifying and understanding the unpredictability involved with the given information and visualizing it efficiently. Therefore, uncertainty visualization is an important research issue with a notable amount of literature on the topic (Johnson 2004).

Uncertainty is a challenging problem that cannot be ignored; otherwise, it may lead to erroneous or ambiguous decision-making. It is a challenging and complex concept (MacEachren et al. 2012), and its visualization has now emerged as an essential component of data science and analytics. Since the error is integral to any data analysis, a mere representation of data will not serve the purpose. Hence, uncertainty and its efficient representation are vital to be included. The visualization community no longer treats uncertainty as trivial and accepts that the uncertainty representation is integral to data analysis and visualization. If new researchers were to look for approaches to the representation of errors in any information, they would not find too many surveys on the topic. Besides, not many studies have tried to group the various techniques into different applicable categories. Novice researchers will find it useful to have the uncertainty representation techniques classified to apply one of the methods based on the type of error their information possesses. Also, there are several open problems in uncertainty representations that future researchers can take up. These open issues, once tackled, can speed up the process of decision making in different domains. These limitations and the lack of enough literature surveys on the topic primarily motivated us to survey uncertainty representation techniques and present them to new researchers in the area.

1.1 Outline and contributions

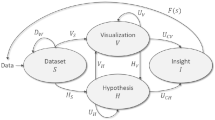

This work aims to review the state-of-the-art methodologies in uncertainty representation and provide classification to help researchers and users select appropriate techniques for their research/application domain. To classify the uncertainty representation techniques into different approaches, we first introduce the concept of uncertainty and its sources in Sect. 2. As a next step, we classify and discuss various uncertainty representation techniques in Sect. 3. Further, we discuss recent advances in the domain of uncertainty in Sect. 4 and summarize notable representative works and their methodologies in Table 1 to help readers identify their domain-appropriate techniques. Afterward, Sect. 5 discusses the evaluation strategies that users can use for the assessment of the representation methodologies, and Sect. 6 discusses open issues and future research directions. The survey then concludes with Sect. 7. To assist the readers better understand the topics discussed in the paper, we have provided a graphic outline of the article in Fig. 1.

Following are the main contribution of this review:

-

A new source of uncertainty called decision uncertainty is introduced, apart from the literature sources.

-

The uncertainty representation techniques have been classified into quantification and visualization approaches.

-

Various techniques, along with their representative works, merits, and drawbacks, have been summarized in a table for readers’ reference, serving as recommendations based on the type/attribute of the data being visualized.

-

A discussion of open problems in the field and suggestions of possible future research directions have been presented.

2 The concept of uncertainty

Multiple definitions of uncertainty exist in the literature as different authors have their interpretations of uncertainty, and there is no precise and simple definition (Wu et al. 2012). Uncertainty is a complicated and challenging concept with multiple facets to it, such as incompleteness, inconsistency, error, and unreliability (MacEachren et al. 2012; Sinton 1978; MacEachren et al. 2005; Riveiro 2007; Gahegan and Ehlers 2000; Hunter and Goodchild 1993). It signifies incomplete information and an extent to which the lack of understanding about the quantity of error is the cause of doubt in final results (Hunter and Goodchild 1993). In one of the earliest works in uncertainty representation, uncertainty was classified as error, range, and statistical (Pang et al. 1997). A measurement of the probability of errors was also termed to calculate uncertainty (Foody and Atkinson 2003; Dungan et al. 2002). Many other definitions exist, such as users’ knowledge imperfections, the extent of clarity, precision, and ignorance, to name a few (Crosetto et al. 2001; Duckham et al. 2001; Gershon 1998).

Information visualization has its classification of uncertainty owing to work done by Skeels et al. (2010). Thomson et al. (2005) proposed a new idea of uncertainty in the area of Intelligence Analysis. Their taxonomy included uncertainty of nine kinds: “Accuracy/Error, Precision, Completeness, Consistency, Lineage, Currency/Timing, Credibility, Subjectivity, and Interrelatedness”. They also discussed mapping these nine categories to different domains and quantitative representation using a probabilistic approach. Though their work was focused more on intelligence analysis, it can be extended to other areas easily. An extension of their typology was proposed by Zuk and Carpendale (2007). The concept of uncertainty is applicable in almost all areas, be it science, geography, cartography, medicine, or business. In the beginning, the representation of uncertainty received a lot of attention in scientific visualization (Pang et al. 1997). Later, researchers realized that there is a lack of certainty in other areas, such as information visualization (Aggarwal and Philip 2009). Visualization scientists and researchers have risen to the challenge of understanding the uncertainty associated with data and efficiently representing this uncertainty and the data. Hence, visualization of error and reliability has become an area of interest for many researchers within the scientific visualization and information visualization communities (Dungan et al. 2003; Johnson and Sanderson 2003; Cedilnik and Rheingans 2000). Uncertainty visualization was first implemented in Geographic Information Systems (GIS) (Hearnshaw and Unwin 1994). Since then, a lot of work has been done in other areas, some of which are fluid flow, geography, and medicine (Hlawatsch et al. 2011; Zukab et al. 2008; MacEachren et al. 2005; Prassni et al. 2010).

Despite the uncertainties associated with any information, data visualization has often assumed information to be mostly accurate. Visualization designers often find uncertainty difficult due to practical problems in visualization construction and uncertainty modeling relative to decision-making. Ignoring uncertainty in data and visualization can be deceptive to analysts, leading to faulty decisions (Olston and Mackinlay 2002; Johnson and Sanderson 2003; Deitrick and Edsall 2006; Thomson et al. 2005). For example, if analysts are trying to figure out the impact of a social networking website data breach, they would only be concerned with the number of people affected to gauge the immediate impact. However, other parameters, such as age, gender, race, and ethnicity, might be needed to assess the data breach’s long-term impact. Therefore, uncertainty in the data affects analysts’ decision-making strategy differently (Thomson et al. 2005). The decision-making strategy depends on the requirements. If the purpose is to find the immediate impact, the analysts will go for fewer parameters, while additional parameters would be required to gauge the long-term impact. An empirical evaluation of the uncertainty visualization in different domains was done by Skeels et al. (2010). They reiterated that incorporating uncertainty can help users better understand the data.

We can briefly summarize the concept of uncertainty into the following main points based on the comprehensive study of the literature:

-

Most authors associate uncertainty with error, incompleteness, and precision.

-

A few taxonomies were proposed that categorized uncertainty into different types.

-

Uncertainty was mostly part of scientific visualization initially and later gained popularity among information visualization researchers.

2.1 Uncertainty in visualization

Before analyzing uncertainty in visualization, the understanding of visualization and related approaches is crucial. Over the years, researchers have presented different methods for data visualization. Ziemkiewicz and Kosara (2008) claimed that the understanding of visualization involves an interaction between external visual metaphors and the user’s internal knowledge representation. To prove this claim, they conducted an experiment to test the effects of visual metaphor and verbal metaphor on understanding tree visualizations. The results of their investigation indeed backed their claim proving that the visual metaphor affects the derivation of information by the user from visualization. Similarly, Nguyen et al. (2012) discussed the readability criteria of visualizing graphs based on the graph drawing algorithm. According to this work, readable pictures of the graph produced by such an algorithm are not enough for graph visualization. They introduced the “faithfulness” criteria, relevant for modern methods that can handle large and complex graphs, and deemed a graph drawing algorithm “faithful” to map different graphs to distinct drawings. Sedlmair et al. (2012), on the other hand, provided definitions, proposed a methodological framework, and guided conducting design studies based on the combined experience of conducting previous design studies. In their work, they defined a design study as a project in which visualization researchers analyze a specific real-world problem, design a visualization system that supports solving the problem, validate the design, and demonstrate lessons learned to improve visualization design guidelines. They further characterized two axes—task clarity axis and information location axis—to help design study contribution, suitability, and uniqueness.

Integrating data visualization with the representation of unreliability is not trivial (Zuk and Carpendale 2006). Several approaches, frameworks, and taxonomies have been proposed in the past to represent uncertainty in data visualization (Pang et al. 1997; Thomson et al. 2005; Parsons and Hunter 1998; Brodlie et al. 1992; Buttenfield and Ganter 1990; Buttenfield and Beard 1994; MacEachren 1992). Parsons and Hunter (1998) covered some of the most important uncertainty formalisms. They discussed numerical and symbolic approaches for handling uncertainty and suggested that a hybrid approach is required to counter uncertainty in most cases. Uncertainty should never be confused with absence. While missing data results in data absence, uncertainty lies between falseness and truth (Smithson 1989). Brodlie et al. (2012) surveyed the newest uncertainty visualization methods and discussed visualizing uncertainty as well as the uncertainty of the visualization process. They attributed the complex nature of uncertainty to practical problems in visualization construction and uncertainty modeling for decision-making. These practical issues result in the absence of uncertainty representation from most visualizations (Johnson and Sanderson 2003; Johnson 2004).

2.2 Visualizing uncertainty

Khan et al. (2017) presented a novel visualization technique to help users gain more information about data transformation. They worked on understanding successful data transformation of desired results for a complex query. To understand data transformation and provide continuous feedback, they introduced the concept of “data tweening,” which involves presenting a series of incremental visual representations of a result set transformation to the user considering the queries in the session. Recently, to generate a visualization of data, Mittal et al. (2019) discussed using computer-implemented machine learning (ML) techniques to automatically determine insights of facts, segments, outliers, or other information associated with a dataset. Further, they proposed that such information be graphically displayed as text, graphs, charts, or other forms, for user interface and further analysis. Similarly, Li et al. (2019) proposed a parallel coordinate method to visualize high-dimensional data using both visual angle and the intrinsic meaning of data. Besides, they also developed color transition and coordinate transformation-based, high-dimensional data visualization methods and applied it to the car and financial data.

Another approach by Wu et al. (2012) depicted the course of uncertainties in analytical processes. In many diverse applications, analytical processes can lead to uncertainties at any stage (Olston and Mackinlay 2002; Slingsby et al. 2011; Wu et al. 2010). Another novel approach in uncertainty visualization of networks has been proposed using probabilistic graph layout techniques (Schulz et al. 2017). On the other hand, Hullman (2019) presented the rhetorical model of uncertainty omission in visualization-based communication. To argue that uncertainty in communication reduces the degree of freedom in the viewer’s statistical inferences, the author adopted a statistical model of how viewers judge the signal strength in a visual to visual-based communication. Similarly, Hullman et al. (2018) presented a taxonomy for characterizing visualization evaluation design decisions. The authors built their taxonomy to differentiate six levels of decisions: behavioral targets of the study, expected effects from uncertainty visualization, evaluation goals, measures, elicitation techniques, and analysis approaches. Also, Kim et al. (2019) proposed a model that served as a guide for improving visualization evaluation and proposed a Bayesian cognitive model for understanding people’s interpretation of visualization, including uncertainty, based on prior beliefs.

Recently, Weatherston et al. (2020) proposed the visualization design space for representing unqualified uncertainty in drug composition during a drug-checking test, using pie and cake charts. The design space generates alternatives for use in a visual drug report expected to improve decision-making concerning illicit drug use. Similarly, Ren et al. (2020) developed a framework for uncertainty analysis of an ensemble vector field. They proposed a robust and accurate metric to measure the pathline uncertainty, based on the classical edit distance on the real sequence and a visual analysis system called UP-Vis (Uncertainty Pathline Visualization), while Cho et al. (2020) proposed an ML-based framework for visualization of temporal representations learned from the deep neural network (DNN). For a given I/O, their work interpreted the decision of temporal DNN by extracting highly activated periods. They also visualized the sub-sequence of input data, where they characterized these sub-sequences with clustering and calculated the uncertainty of suggested type and actual data. In another interesting work, Yang et al. (2019) proposed a method to visualize the uncertainty of 3D geological surface, utilizing a stochastic model where the uncertainty was accessed by independent realization drawn by Monte Carlo sampling. The uncertainty was visualized by the “smooth” movie of evolving surface with the same distribution as Monte Carlo. Besides, Windhager et al. (2019) presented an omnipresent data uncertainty where they discussed a coordinated counter strategy for uncertainty visualization. They also developed the PloyCube framework for collection visualization and showed how multiple uncertainty representation techniques could be assessed and coordinated in a multi-perspective environment. Further, they discussed representing data uncertainty concerning geo-, set-typed-, and relational-temporal collection information.

The following sections discuss various uncertainty sources and how we can put a concept of uncertainty to visualization.

2.3 Uncertainty sources

It is important to note that uncertainty can arise at any stage of the visualization process—collection, transformation, or visualization (Pang et al. 1997). Various sources of uncertainty can be attributed to data sampling, modeling, mathematical manipulation, and visualization (Bonneau et al. 2014), and it is vital to establish the source of uncertainty to represent uncertainty accurately (Masalonis et al. 2004). Different scenarios can bring uncertainty in different ways. For example, uncertainty can result from discrepancies in the data sampling, gathering, or transformation stages. Several possible sources of error and uncertainty can be identified in a system, such as sensor readings, simulations, observations, and numerical transformations such as interpolation and approximations (Jää-Aro 2006). Sources of uncertainty have been classified into three groups in the literature: data sampling, modeling or simulation, and visualization uncertainties (Bonneau et al. 2014). However, the three groups of uncertainty sources have a computational pipeline bias that can be stated as follows. Uncertainty is a property of the relationship between data describing the world and the observed world. Therefore, it accumulates as a phenomenon is measured, data are propagated, and visualization is constructed. A few of the uncertainty typologies can be better stated as “uncertainty is a property of the relationship between data and decision-maker”. Considering this, there is a fourth source of uncertainty that needs to be considered—a relationship between data and usage—i.e., decision uncertainty. Figure 2 shows the four sources of uncertainty and how they affect each other (Bonneau et al. 2014).

Source of uncertainty, extended from Bonneau et al. (2014)

Data and the relationship between data and usage—both possess one or more types of uncertainty. Uncertainty is not only a property of data but also a property of the decision-maker. Visualize different forms of uncertainty effectively is always challenging. Visualization researchers have often focused on visual depictions of uncertainty than the sources of uncertainty. However, various researchers, including Pang et al. (1997), Thomson et al. (2005), Buttenfield and Ganter (1990), Deitrick and Edsall (2006), Pang et al. (1994), Couclelis (2003), Gershon (1992) and Boukhelifa and Duke (2009), have discussed the sources of uncertainty in their works. Figure 3 illustrates the Haber and McNabb model, which depicts data going through a pipeline structure (Haber and McNabb 1990). The following sections briefly discuss the different sources of uncertainty and how they impact each other.

2.3.1 Decision uncertainty

Uncertainty typologies such as completeness, credibility, subjectivity, and interrelatedness require the decision’s measurement. For example, to measure data integrity, decision-makers need to formally define the decision, including the expected decision strategy or criteria for which the data will be used. Moreover, the decision is subjective and can vary from one entity to another. This combination of decisions, along with the decision-makers, can also contribute to uncertainty. Therefore, this fourth source of uncertainty, the relationship between data and usage, needs to be considered and defined as decision uncertainty.

Haber and McNabb model depicting their uncertainty visualization pipeline, reproduced from Haber and McNabb (1990)

One of the latest works on decision-making under uncertainty proposed a simulated uncertainty range evaluation method to help decision-makers in the presence of a high level of uncertainty (Hodgett and Siraj 2019). Their approach used triangular distribution-based simulation to create a plot that visualizes the preferences and overlapping uncertainties of decision alternatives. Similarly, Ai et al. (2019) proposed a simulated annealing algorithm for decision making in map-like visualization, while Raglin et al. (2020) focused on decision making with uncertainty in an immersive system. The latter analyzed the impact of uncertainty on decision-making in multi-domain operational environments. Like the decision-making strategy, there is also uncertainty in how the provided information is taken into account. This involves both cognitive and non-cognitive processes. The decision is made by selecting a sequence of responses in an uncertain environment based on the self-generated action plan (Bechara et al. 1998).

Several methods have been proposed to examine decision-making in the presence of uncertainty, such as the two-choice prediction task (Elliott et al. 1999). In this method, the subject doesn’t have information about correctness and random reinforcement of responses. In contrast, Evenden and Robbins (1983) proposed a win-stay vs. lose-shift decision-making strategy. Under this strategy, subjects select their current response based on the previous response’s outcome, where knowledge of correctness of the previous response enforces selection of the same response. If such strategies are applied in decision-making, it will help different individuals arrive at the same decisions. Another recent work by Shen et al. (2019) presented a research study about incentive-based behavior repetition. During their study, it was found that the individual repeats the behavior more if the incentive is uncertain than certain, even when a certain incentive is financially better. Such research on the relationship between incentive uncertainty and repetition explains how individuals process the incoming information. Apart from work mentioned above, we encourage readers to refer to the recent work by Yager (2019) for a broader understanding of uncertain outcomes during decision making.

2.3.2 Visualization uncertainty

The visualization process itself can lead to uncertainty (Wittenbrink et al. 1996; Pang et al. 1997), and the visualization processes influence the propagation and perception of uncertainty (Riveiro 2007). Procedures such as mapping data of one form, such as ordinal data, into another form, such as categorical data, can cause the data to lose fidelity and add uncertainty in the visualization. Besides, mapping data from one type into another may not align with the users’ mental models. Furthermore, users can sometimes find it challenging to discriminate between categorical sets when a rich ordinal data is mapped into, for example, a thousand colors. Additionally, it depends on how the user perceives the given uncertainty visualization. Individual abilities and levels of understanding among the audience can lead to different types of uncertainty in the visualization. In addition, data sampling and modeling uncertainties can lead to uncertainties in the resulting visualization. For example, too much data can lead to cluttered visualization and add to the uncertainties. Likewise, computational models can give a range of nominal or categorical predictions and lead to variance in the visualization. Therefore, the uncertainties resulting from data collection and design influence one another and contribute to visualization uncertainties.

According to Bonneau et al. (2014), in the process of uncertainty visualization, uncertainty is treated as an unknown quantity. Such a method of visualization generates a more significant error and high variation along with missing information. Moreover, change indicates lowered confidence in the data and does not lead to more informed decision-making. Researchers have listed techniques used in the past, such as comparison, attribute modification, glyphs, and image discontinuity, to visualize data uncertainty. For uncertainty comparison, different methods have been proposed such as side-by-side comparison (Zuk et al. 2005), overlay data to be compared (Joseph et al. 1998), integration of isosurface and volume rendering (Johnson and Sanderson 2003), hierarchical parallel coordinates for the extensive collection of aggregated data set (Fua et al. 1999), and bounded uncertainty represented by boundaries and edges of pie charts, error bars, line charts, etc. (Olston and Mackinlay 2002).

Attribute modification suggests uncertainty visualization of data by mapping uncertainty to free variables in the rendering equation (Chlan and Rheingans 2005; Jones 2003) or by employing actions such as applying the bidirectional reflectance function to change surface reflectance or mapping uncertainty to color, i.e., pseudo-coloring (Sanyal et al. 2009; Strothotte et al. 1999). This method is generally used for communicating uncertainty in the areas of volume rendering, point cloud surface, isosurface, and flow fields (Kniss et al. 2005; Pauly et al. 2004; Pöthkow et al. 2011; Botchen et al. 2005). Glyphs are also used in uncertainty visualization to signal data through parameters such as size, color, orientation, location, and shape. Finally, it has been suggested to interpret image discontinuities as areas with distinct characteristics and use a technique such as animation to highlight the regions of distortion or blur or differences in visualization parameters (Gershon 1992; Haroz et al. 2008).

2.3.3 Data sampling uncertainty

The data gathering or sampling process can sometimes ignore important information or gather extraneous information resulting in uncertainty in data (Pang et al. 1997). Data collection is one of the prime causes of errors or unreliability in data (Wittenbrink et al. 1996). Missing information in the data collection process can lead to gaps in values. Algorithms such as interpolation and extrapolation, which are generally used to fill in the missing values, could also induce error and lead to uncertainty. Likewise, in the case of extraneous information, analysts may get overloaded with the amount of data. This could also lead to uncertainty in visualization due to the cluttered visualization resulting from data overload.

A few other notable contributions in addressing the uncertainty introduced due to data include Pöthkow and Hege (2013), where they used nonparametric models for computation of feature probabilities from uncertain data. Similarly, Demir et al. (2014) proposed bi-directional linking of multi charts and volume visualization to analyze visually 3D scalar ensemble filled at the data level. Demir et al. (2016) proposed a visualization technique for ensembles of isosurfaces based on the screen-space silhouettes while Pfaffelmoser and Westermann (2013) proposed a new visualization technique for iso-contours in ensemble datasets. Recently, Goubergrits et al. (2019) studied the uncertainty of various previously proposed geometric parameters for rupture risk assessment caused by the variability of reconstruction procedures.

Arras et al. (2019) presented a novel algorithm using the Bayesian method that combines cross-calibration, self-calibration, and imaging. The proposed algorithm predicted the sky brightness distribution and provided an estimate of the joint uncertainty that involves both the uncertainty of the calibrations and actual observations. The algorithm used Metric Gaussian Variational Inference as the statistical method. Similarly, Yan et al. (2019) developed a novel measure of uncertainty in data using a metric-space view of the input trees. They studied a structural average of a set of labeled merge trees and used them to encode data. In their work, they computed a 1-center tree that minimized its maximum distance to any other tree in the set of a well-defined metric called interleaving distance. In addition, they provided an interactive visualization system that resembled a numeric calculator where a group of merge trees was supplied as input and presented a tree of their structural average as output.

2.3.4 Modeling uncertainty

Uncertainty in data can be predicted by components in computational models as well. The models are designed to estimate any variations in computations. For example, there could be potential errors in model inputs or parameters. Such cases lead to a range of incorrect predictions from models. Such models may also give figures about the approximated inaccuracy through numerical values with their outputs. In a few cases, some models may give nominal or categorical predictions. Uncertainty can arise in mathematical or computational models and experimental results in several ways, and hence, data modeling is a source of uncertainty (Pang et al. 1997; Riveiro 2007). A few contexts through which uncertainty can enter a computational model are parameter uncertainty, algorithmic uncertainty, experimental uncertainty, and interpolation uncertainty.

3 Uncertainty representation approaches

There has been extensive work on various approaches to uncertainty representation. After an exhaustive literature survey, we concluded that most approaches could be grouped into two categories—quantification and visualization. Quantification approaches primarily deal with modeling data uncertainty through various mathematical models. Visualization approaches, on the other hand, involve displaying data uncertainty visually. Figure 4 shows the two approaches to uncertainty representation, as well as the various popular techniques under these two approaches. Many visualization designers have taken the route of a hybrid approach where they have tried to use quantitative approaches in conjunction with one or more visualization approaches (Schulz et al. 2017; Cedilnik and Rheingans 2000; Karami 2015; Grigoryan and Rheingans 2002, 2004; Djurcilov et al. 2001). For instance, Fig. 5 shows an example where uncertainty at each point was calculated and mapped to the noise/surface perturbation value to distinguish from surface geometry information (Grigoryan and Rheingans 2002). This section first examines the relationship between the quantification and visualization approaches and then presents a brief study of the two approaches separately. This section ends with a summary table that outlines popular visualization techniques and considers their pros and cons.

Hybrid representation approach, reproduced from Grigoryan and Rheingans (2002)

3.1 Uncertainty quantification approach

Several numerical approaches can be used to model and represent uncertainty. This sub-section briefly discusses a few of these mathematical approaches/theories: probability, possibility, and evidence theories (Parsons and Hunter 1998). The three formal ideas are not very different from each other. They are remarkably similar to each other, with a slight variation in their intended meaning and applications. Mostly, it comes down to assigning the degree of belief to various uncertain situations so that we can separate the most unambiguous ones. Belief is allocated to an event using assignment functions based on multiple factors, such as the event’s possibility, subjective analysis by an individual, and statistics related to the event. Traditionally, assigned belief values vary between 0 and 1, where the facts that are known to be false are assigned a value of 0, and 1 represents facts. Some theories, such as probability and evidence theory, do not allow the amount of belief to be higher than 1. In other words, individuals are not supposed to believe in uncertain events more than if they would have liked to think about certain events. Unlike probability and evidence theory, possibility theory does not have such limitations. So, an individual can believe in a set of alternative uncertain events (Parsons and Hunter 1998).

The mathematical modeling of uncertainty can quantify uncertainty and then put the quantified uncertainty to visualization. The past few decades have seen the rise of various numerical models that have been used to identify and quantify uncertainty in data. The classical probability techniques were the first ones to be used to manage data uncertainty. It has since become one of the most popular techniques in this area. Later, other mathematical models were employed for this task. Most notable are the Dempster-Shafer theory and the possibility theory. Apart from these models, researchers also introduced numerous other numerical techniques like probabilistic logic, certainty factors, etc. to tackle uncertainty (Parsons and Hunter 1998). Probability density functions (PDF), multi-value data or bounded data can also depict uncertainty (Brodlie et al. 2012).

In 1933, Andrey Nikolaevich Kolmogorov developed the probability measure, regarded as the classical probability theory (Kolmogorov-Smirnov et al. 1933). It has turned out to be the most popular model to tackle uncertainty. It has developed into a more sophisticated model owing to extensive mathematical studies over the topic (Parsons and Hunter 1998). Kolmogorov introduced the concept of probability space with a set of three values:

where, \(\Omega \) is the total number of probable results of an arbitrary event, \(\Omega \ \epsilon \ F\),

F is a set of non-empty combinations of the total outcomes and

P is the probability of the occurrence of any outcome corresponding to an event.

Later, Glenn Shafer, in collaboration with Arthur Dempster, introduced the concept of evidence theory to counter the restrictions of the classical probability theory (Shafer 1992). To dispose of the idea of precision in classical probability theory, Dempster came up with the concept of indefinite probabilities in 1968 (Dempster 1968). Such indistinct probabilities have multiple likelihood values instead of one value, like probability theory. Each set of probability measures contains lower and upper probabilities. In 1976, Shafer called these lower and upper probabilities belief and plausibility (Shafer 1976). Shafer leveraged the above works to get rid of the myth of completeness in classical probability theory (Parsons and Hunter 1998), and these combined efforts of Dempster and Shafer came to be widely recognized as Dempster-Shafer evidence theory (DSET). On the other hand, Zadeh (1999) introduced a concept of possibility theory to build on the idea of fuzzy sets. Fuzzy sets could translate to a collection where values are degrees rather than absolute numbers (Zadeh 1996). For example, a set of people short in height can be called a fuzzy set. In this case, values are degrees rather than absolute numbers. The possibility function was defined by Zadeh (1996) as a membership function related to each fuzzy set. The possibility measure was the value of the least upper bound of the possibility function associated with fuzzy sets (Bonneau et al. 2014).

Scientists have identified that data carry inherent uncertainty, and this intrinsic uncertainty needed to be modeled. They employed probability distributions extensively in uncertainty visualization research to model the underlying uncertainty (Pfaffelmoser and Westermann 2012; Pöthkow et al. 2011; Bensema et al. 2016; Liu et al. 2012; Thompson et al. 2011). Several methodologies are available today to model uncertainty associated with spatial positions using PDFs. Almost all of these methodologies focus on reducing the amount of data to investigate and visualize PDFs. Clustering techniques have been used to filter the data that have helped determine features such as outliers in the data (Bordoloi et al. 2004). Multi-dimensional, time-varying, multi-variate PDFs mapped to 2D or 3D have also been shown by using volume rendering and streamlines (Luo et al. 2003). Researchers have also used other methods to show spatially varying distributions. In one of the works, the researchers investigated NASA satellite images and LIDAR datasets. Here, the data’s mean was encoded as a 2D color map with the standard deviation as a displacement value (Kao et al. 2001). This work was essentially a case study on the specific data, and similar work was carried out again by (Kao et al. 2005) in another work.

A few other approaches have also been deployed to show spatially varying data. For example, researchers used a slicing approach to indicate the distribution data, while the mean of the distribution functions was encoded onto a color mapped plane. Cutting surfaces near the boundaries allowed for the analysis of distribution data (Kao et al. 2002). Sometimes, histograms were used to depict the PDFs’ density, and color mapped spatial surfaces were used to plot the PDFs.

3.2 Uncertainty visualization approach

The concept of uncertainty put into visualization has been described as a process that suggests that the visualization of reliable and unreliable data can be treated as separate processes integrated into one (Griethe et al. 2006), as shown in Fig. 6. The raw data and the associated uncertainty go through a transformation process to generate a visualization. The visualization of the normal data and its uncertainty can be considered separate for a better understanding of the entire visualization process. The process tries to convey the flow of raw data and raw uncertainty in any visualization process and show how data flows from acquisition to the final visualization. It is a general guideline for data flows in a visualization process and can be used to achieve any visualization task. In addition to the quantification approaches discussed in the previous section, many researchers have also devised various theories and techniques to reveal various types of uncertainties associated with data. Here, we discuss some of these popular visualization theories and techniques. These techniques and theories attempt to integrate the visualization of error or uncertainty information and the actual data, which earlier used to be ignored in data visualization.

Uncertainty visualization process, reproduced from Griethe et al. (2006)

3.2.1 Geometry

Many applications can visualize uncertainty with the inclusion or modification of geometry. In general, geometry is added to represent continuous data. Another technique, glyph, discussed in the subsequent sections, is also a method to add geometry; however, it is added only at discrete locations in the visualization. There are a variety of techniques to add geometry to a rendered scene. Some of the prominent techniques are contour lines, isosurfaces, streamlines, and volumes. Error in a scene can also be represented by modifying the existing geometry using distortion, scaling, or rotation (Pang et al. 1997).

3.2.2 Attributes

According to Pang et al. (1997), one can visualize uncertainty in a scene by changing attributes such as the control of shading, lighting, and coloring of geometry in the rendered scene. Various parameters can be mapped to unreliability with the control of these attributes. In this technique, these attributes can also be varied according to well-defined functions or mappings, such as textures, to give an appealing 3D appearance to visualizations. Users can also vary the polygon’s material properties in texture modification to make it more specular or diffused.

Another property utilized in visualization (or simulation), radiosity, simulates the diffuse propagation of light starting at the light sources when a 3D effect is being utilized. It is interesting to note that under different circumstances, altering the texture may result in an indication of the location of the light source—resulting in the introduction of uncertainty or even false information regarding the light source in the visualization. In some cases, such addition of information might be intentional, such as in computer-generated graphics to be used in an animation. Altering specular coefficients allows the user to focus on planes parallel to the viewing plane if the intention is to show that the light source is tied to the camera or eye position. This method is a better tool if the user is interested in viewing a particular area. On the other hand, altering the diffuse coefficients is better for viewing the error associated with the entire image (Pang et al. 1997). An example of the results of altering these coefficients in the visualization of a 3D metal ball is shown in Fig. 7 as proposed by Gebhardt (2003).

Use of specular (left) and diffuse coefficients for surface modification, reproduced from Gebhardt (2003)

3.2.3 Animation

Animation is another technique that can be used to map unpredictability in information (Pang et al. 1997). Confidence in the given information can be mapped to different variables, including speed, blinking, motion, range, blur, and duration, to show the users’ unreliability (Griethe et al. 2006). The range of movement in different animation frames can express a lack of certainty (Brown 2004). Since there are multiple parameters in animation to which uncertainty can be mapped, it is a powerful, innate, and unambiguous method to display uncertainty to the end-user without cluttering the display.

3.2.4 Visual variables

Various visual variables such as color, hue, brightness, and saturation are commonly used to visualize uncertainty. Figure 8 illustrates different types of visual variables that can be used to indicate uncertainty. One of the techniques involves the use of color gradient. Color progression or color gradient is also an effective way of showing the ambiguous region in a visualization. Figure 9a shows the area of uncertainty using color variation in a display where the solid red line represents data with no uncertainty. It is bounded by the progression of colors that denote the data set’s confidence intervals, with lighter colors representing a higher uncertainty. Another technique that can be used to show uncertainty is a noise-like pattern in a line curve. The noise represents the uncertainty and the amount of error in the values. The use of such a pattern in visualization is demonstrated in Fig. 9b. We used our hand-crafted data to generate these visualizations.

Use of visual variable for uncertainty visualization, reproduced from MacEachren et al. (2012)

3.2.5 Graphical techniques

Graphical methods are the traditional and most popular way of representing a large amount of data in a comprehensible manner. Graphical techniques such as box plots, scatter plots, and histograms help represent data and facilitate a better understanding of the data in cases where it can be used. Such techniques were first proposed by Tukey (1977) to effectively communicate features of a given data. Box plots have been commonly used to display uncertainty in one-dimensional data (Frigge et al. 1989; Haemer 1948; Spear 1952; Potter et al. 2006; Choonpradub and McNeil 2005; Tukey 1977). Box plots display the “minimum and maximum range values, the lower and upper quartiles, and the median” (Potter et al. 2006). Box plots can be modified to show various uncertainties in the data. One of the most common ways to display uncertainty is to make changes to the box plots’ sides to show density information (Benjamini 1988). There are many other variants of the box plots such as vase plot (Benjamini 1988), violin plot (Hintze and Nelson 1998), box-percentile plot (Potter et al. 2010; Potter 2010), dot plots (Wilkinson 1999, 2012), and sectioned density plots (Cohen and Cohen 2006), as shown in Fig. 10. In addition, Fig. 11 demonstrates a 2-D box-plot extension where the lower flat surface visualizes the meanwhile the distortion visualizes the standard deviation. The difference between the quartiles is represented using color. Furthermore, the variance between mean and median is visualized by the upward bars’ size, which has colors in sync with the base surface. Likewise, contours can also be used to depict unreliability (Potter et al. 2009; Prassni et al. 2010).

Different types of box plots depicting error information, reproduced from Bonneau et al. (2014)

2-D box-plot extension, reproduced from Kao et al. (2002)

3.2.6 Glyphs

Various attributes, for instance, size, shape, color, and orientation, as well as location, could be used to describe data in visualization using symbols known as glyphs. Since glyphs are multivariate, they can be used to show uncertainty. Researchers used the multivariate characteristics of glyphs to show the distribution of three features in a data set (Chlan and Rheingans 2005). In another work, the radius of conical glyphs has been used to depict uncertain information (Jones 2003). Attributes in glyphs can also be modified to express unreliable information. In one such approach, a procedure generation algorithm was used to alter the properties of the glyphs already in the visualization (Cedilnik and Rheingans 2000). This work emphasized the distortion of glyphs to visualize uncertainty and error. In another type of glyph, sharp glyphs, the area of low uncertainty was shown with decreasing sharpness. Contours can also be modified as glyphs to express information with errors (Allendes Osorio and Brodlie 2008; Sanyal et al. 2010). Recently, Holliman et al. (2019), in their work, proposed the use of visual entropy to construct an ordered scale of glyphs for representing both uncertainty and value in 2D and 3D environments. They used sample entropy as a numerical measure of visual entropy to construct a set of glyphs using R and Blender.

Glyphs are used to visualize only uncertain information in datasets. Visualizing the complete dataset using glyphs is challenging, e.g., the UISURF system (Joseph et al. 1998) where arrow glyphs were used to denote uncertain magnitude and direction in a scene. These can also be used to show natural phenomena such as hurricane or cloud movement on a map. A few other applications of glyphs include flow visualization, vector visualization, interpolation, and radiosity. Various glyph representations, such as “line, arrow, and ellipsoidal glyphs,” have been used to display unreliability in these and other applications (Li et al. 2007; Lodha et al. 1996a, b; Sanyal et al. 2009; Schmidt et al. 2004; Stokking et al. 2003; Wittenbrink et al. 1996; Zuk et al. 2008; Zehner et al. 2010). An application of glyphs in uncertainty depiction is shown in Fig. 12a, where the magnitude, direction, and length of glyphs represent uncertainty in vector fields. For comparison, Fig. 12b shows a glyph without any uncertainty information.

Glyphs, reproduced from Wittenbrink et al. (1996)

3.3 Relationship between quantification and visualization approaches

In this era of machine learning and big data, a massive amount of work is being performed to understand uncertainties or unknowns in data analytics. Because of this, visualization designers are trying to express the unknowns in different ways, using visualization as a tool after modeling and quantifying uncertainties (Schulz et al. 2017; Li et al. 2007). Most of the uncertainty information can be expressed via probability density functions (PDFs); however, it is challenging to visualize the PDFs using the existing visualization approaches, or the visualization is mostly limited to one-dimensional or two-dimensional displays. Thus, assumptions are usually made about the data, such as aggregating the data to a unique value, for example, standard deviation, to reduce the size or dimension of data. These single values can then be easily visualized without any visual clutter.

Another research study used the PDF as a building block and standard deviation for each node to obtain distribution and then used them as inputs to visualize bubble treemaps for uncertainty (Görtler et al. 2018). The authors obtained a distribution for each node at the leaf level and then computed uncertainty to gauge the model’s characteristics toward the root level. Later, they drew a treemap layout, leaf circles, and contours to encode uncertainty values. Figure 18b demonstrates their novel concept of bubble treemaps, which is used to visualize hierarchical data and the uncertainty within it in the form of contours and blur using PDF as a building block.

There are several approaches to using quantitative uncertainty information in final visualization results. Some researchers have attempted to quantify uncertainty in terms of cumulative density functions (CDFs) and quantile dot-plots to estimate more accurate probability intervals and later visualized them to improve the decision-making of end-users in a transit system (Fernandes et al. 2018). Another notable work that demonstrates the relationship between uncertainty quantification and final visualization is a probabilistic graph layout that may be used to visualize an uncertain network (Schulz et al. 2017). A force-directed layout was then applied, and the uncertain network was visualized using visualization approaches such as node and edge splatting, edge bundling, clustering, node coloring, and labeling, as illustrated in Fig. 13.

Probabilistic graph layout of a network reproduced from Schulz et al. (2017)

Visualization designers have employed uncertainty quantification mapping to visualization tools. Li et al. (2007) tried to estimate and visualize large-scale astronomical data’s positional uncertainty by taking into account the square root of total separation between astronomical objects and earth and the square root of variation of parallax. Figure 14 demonstrates their work of visualizing positional uncertainty through the use of visual cues (called the unified color-coding scheme) by computing the errors from variance values. There have been several other notable works (Karami 2015; Cedilnik and Rheingans 2000; Grigoryan and Rheingans 2004; Djurcilov et al. 2001) that demonstrate the relationship of uncertainty quantification with the visualization techniques as mentioned in Sect. 3.

Positional uncertainty visualization, reproduced from Li et al. (2007)

We can conclude from the above discussion that uncertainty quantification is used in several visualization approaches. It can be inferred that quantitative uncertainty or error values are passed as input to a visualization tool, which in turn visualizes the unknowns through popular visualization theories or techniques such as visual cues like a color map, blur, fuzziness, hue, and animation.

4 Recent advances in uncertainty visualization

Machine learning, ensemble data, and big data have posed new challenges to uncertainty visualization due to the enormous amount of data they process and generate. Large-scale data visualization intends to present a huge amount of data in a user-friendly and understandable manner. However, most of the visualization tends to ignore the uncertainties associated with huge data (Potter et al. 2012). In this section, we present a few instances of state-of-the-art research in the areas of machine learning, ensemble data, and big data with respect to uncertainty visualization.

4.1 For ensemble data

The recent decade is a witness to a noteworthy development in the ensemble visualization area due to the extensive accessibility to the ensemble data and their growing visualization demands. From the survey of ensemble data visualization works, it can be concluded that most of the ensemble visualization research leverages modeling of uncertainty. Researchers from various domains frequently investigate complex physical phenomena such as hurricane and weather forecasting using computer simulations. These simulations operate on modeling configurations, e.g., input values, initial conditions, boundary conditions, etc. Because of the inherent uncertainty, these simulations are performed multiple times varying parameters to produce different results; a collection called an ensemble (Wang et al. 2018). It can be observed from a broader perspective that ensemble data is a type of uncertain data, and uncertainty is usually a result of multiple occurrences of the same experiment. According to Wang et al. (2018), ensemble data has five orthogonal dimensions: ensemble, location, time, variable, and member. They identified four geometry-based visualization techniques for spatial ensemble data based on curve, volume, surface, and point. For non-spatial ensemble data, they suggested traditional information visualization techniques.

The variable associations are elaborate and complicated in multivariate ensemble datasets, making uncertainty analysis of complex variable associations challenging. Lately, researchers have proposed approaches to tackle this problem. Zhang et al. proposed a state-of-the-art method to visualize the uncertainty for variable associations between a reference variable and the associated variable in ensemble data (Zhang et al. 2018). The authors utilized a Gaussian mixture model (GMM) to measure the uncertainty and presented the use of this GMM-based method to filter the uncertainty isosurface of the reference variable, as shown in Figure 15. They proposed a unified rendering method to show the associations between variables. The reliable associations between variables were presented in a switchable view to assist the users in making established decisions. In contrast, the associations that were not credible were visualized with animation to expedite more investigation. Similarly, Hollister and Pang (2015) proposed a direct extension of 1D PDF interpolation using quantile interpolation for the bivariate case. This method was found to be more efficient compared to GMM interpolation. In addition, this method eliminates the ambiguity of GMM with respect to its pair-wise interpolants.

Ensemble data uncertainty visualization a Isosurface of original data, b Uncertainty isosurface using GMM-based method, reproduced from Zhang et al. (2018)

The ensemble data are being generated at a high rate due to the increase in computing power and the need to model more complex real-world phenomena (Hibbard et al. 2002; Thompson et al. 2011). Such complex phenomena and the ensemble data they generate are challenging to understand, and that’s where visualizations can come to the rescue. This necessity has led to numerous ensemble visualization techniques during the past decade to model uncertainty in ensemble data, including the use of probability distributions (Thompson et al. 2011; Bensema et al. 2016; Liu et al. 2012; Otto and Theisel 2012; Pfaffelmoser and Westermann 2012; Pöthkow et al. 2011; Hazarika et al. 2017), curve, isosurface, and contour oriented techniques for spatial ensembles (Diggle et al. 2002; Bensema et al. 2016; Ferstl et al. 2016b, 2015; Mirzargar et al. 2014; Whitaker et al. 2013), and time-series charts and comparative visualization techniques for temporal features (Bruckner and Moller 2010; Ferstl et al. 2016a; Fofonov et al. 2015; Hao et al. 2015; Obermaier et al. 2015; Shu et al. 2016). Due to the spatial-temporal characteristics of ensemble data, visualization is often achieved by leveraging multiple views connected, exploring, and analyzing the data through users’ interactions (Potter et al. 2009; Wang et al. 2016a; Matkovic et al. 2009; Piringer et al. 2012; Höllt et al. 2014; Jarema et al. 2016). Figure 16 shows a few examples of two notable attempts in ensemble data uncertainty visualization reproduced from Bensema et al. (2016) and Ferstl et al. (2016b).

4.2 For big data

Similar to ensemble data, there have been recent attempts to visualize uncertainties in big data. A few researchers have proposed uncertainty-aware visual analytic frameworks in big data. One such notable work is the proposal of a framework for analyzing uncertainty in big data (Karami 2015), which was inspired by earlier work (Correa et al. 2009). Karami (2015) designed a prototype for potent uncertainty visualization of KDD-CUP’99 traffic data. The prototype was developed in MATLAB, and the uncertain input data was fed into the system, then visualized. Uncertainty was visualized by mapping the degree of uncertain information to the node radius and the node color’s uncertainty levels. The developed prototype can be seen in Fig. 17. The blue nodes show normal traffic, and the red nodes represent attack traffic. In the figure, the impurity of the color representation suggests the level of uncertainty. For instance, an uncertainty of 100% is visualized by a color value of 0.5. Likewise, pure blue and red colors indicate certain information. The uncertainty regions are represented by mapping colors to the value of the amount of data uncertainty.

Uncertainty visualization in big data, reproduced from Karami (2015)

Treemap is another popular method to visualize big hierarchical data. Most of the real-world data is hierarchical, and therefore, representing it effectively and efficiently is essential (Schulz 2011. A considerable amount of research has gone into this area, and it has matured from earlier representations of treemaps. Conventional forms of treemaps did not have the option of adding additional information to accommodate unreliable data (Johnson and Shneiderman 1991). In contrast to traditional treemaps, circular treemaps suffered from the problem of space wastage (Zhao and Lu 2015). Other conventional approaches to treemaps such as Voronoi treemaps (Balzer et al. 2005) and squarified treemaps (Bruls et al. 2000) were used for hierarchical data. However, they lacked the flexibility to add extra variables to display uncertainty. An example of a squarified treemap is shown in Fig. 18a. A few researchers have also proposed the use of non-traditional treemaps, such as Gosper flow snake curve-based treemaps, to display hierarchy in data (Auber et al. 2013), which lacked the scalability to encode uncertain data. Schulz et al. (2011) described various ways to represent the hierarchy in data via their survey.

Recently, Görtler et al. (2018) proposed an innovative approach to visualize hierarchical data laced with uncertainty using bubble treemaps. In this work, they used the extra space to add more visual variables to visualize data unreliability. The authors proved the reliability of their approach with the help of distinct cases—one of them being an example of a study on consumer spending. This approach also described the relationship of uncertainty models with hierarchical data. Figure 18b shows this visualization of the U.S. consumer expenditure survey data. The node and leaf sizes in the map are proportional to their values, while the contours’ thickness was used to depict the model error. Notably, the uncertainty in the data was portrayed through blur in the contours. The data categories represented by cyan and yellow have higher error and uncertainty and are consequently illustrated with a more intense blur.

4.3 For machine learning

Recently, ML is being applied to a spectrum of fields, including data science and mining, human–computer interaction, visualization, and computer graphics. Despite its popularity, data scientists and users think of ML models as a black box and are unaware of how and why they work (Mühlbacher et al. 2014; Liu et al. 2017a; Fekete 2013). Therefore, there is a need for approaches that can help users comprehend ML models’ working mechanism—and that’s where visual analytics can play a significant role.

Current research trends point to integrating ML, predictive models, regression models, and visualization techniques to boost the illustration and comprehension of uncertainty in the intended process. Figure 19 depicts predictions of the occupation of individuals that attempt to visualize uncertainty in predictive models (Rheingans et al. 2014). Several occupations are predicted using input values, including age, gender, race, nationality, and income. It can be seen in the figure that predictions are concentrated in a particular region that is indicated by the localization of colors. The figure also suggests that some of the projections have higher uncertainty or error, as the pie/speckle glyphs do not represent the occupation classes. It can also be inferred from the figure that the ML model has a high error and less confidence.

Pie glyph (top), speckle glyph (bottom) depicting ML model prediction uncertainty, reproduced from Rheingans et al. (2014)

As discussed previously, visualization is being used to create explainable systems that can help ML experts understand ML models’ know-how. One such example is illustrated in Fig. 20 where Panorama Visualization can be seen in a data analytics system known as TopicPanorama (Wang et al. 2016b). The figure displays a visualization of the topics corresponding to respective software firms where uncommon topics related to distinct software companies are visualized in contrasting colors. The use of pie charts represents undistinguished topics. The figure also shows an uncertainty glyph, which is used here to examine uncertain matches in results. The angle between the sliders in the uncertainty glyph is used to represent the degree of uncertainty. As seen in the figure, the uncertain matches have uncertainty glyphs and are represented by Fig. 20A and B. In contrast, the correct matching result is shown by Fig. 20C and D in the figure.

A detailed summary of popular uncertainty visualization techniques studied in this survey, along with their advantages and disadvantages, is presented in Table 1. The purpose of this tabular representation is to enable researchers to use it as a quick reference for some of the preferred uncertainty visualization methods. The table also serves as a recommendation for choosing an appropriate visualization technique based on the data’s type or property being visualized. The type and attribute/property of data has been color-coded, as indicated in the table caption. Various visualization methods were ordered based on the data they were dealing with, in the following order—Temporal \( \rightarrow \) Categorical \( \rightarrow \) Numerical \(\rightarrow \) Spatial. Methods that applied to multiple data types were listed based on one of the four types mentioned. Readers can refer to the table in several ways based on their needs. For example, if the reader needs to find techniques used to visualize uncertainty in temporal data, the readers can refer to the rows with the cyan-colored label. Based on additional properties of data, such as data being numerical and having spatial properties, it would further shortlist the techniques that users could apply to such a scenario. On the contrary, for the development of new techniques, readers can note that very few techniques attempt uncertainty visualization for multivariate or hierarchical data. This may be an attractive area of exploration for new researchers.

A visual analytics system called TopicPanorama (left). Dotted section illustrates an uncertainty glyph (middle). A and B represent incorrect matching, C and D show correct matching in matching result. Reproduced from Liu et al. (2017b)

Categorical

Categorical  Numerical

Numerical  Multi-Variate

Multi-Variate  Hierarchical; Property:

Hierarchical; Property:  Spatial

Spatial  Temporal} {ML: Machine Learning, Viz: Visualization, UnViz: Uncertainty visualization}

Temporal} {ML: Machine Learning, Viz: Visualization, UnViz: Uncertainty visualization}5 Evaluation of uncertainty visualization techniques

While a larger body of work has evaluated visualization in general, the area of evaluation of uncertainty visualization techniques relatively remains unexplored. The absence of well-established assessments of these techniques indicates a major need for exploration in this area despite some specific user-studies based assessments in the past. The absence of assessments was particularly evident in traditional visualization domains instead of the latest uncertainty visualization domain. Nevertheless, the latest trends suggest that the researchers concentrate on the assessment aspects of the proposed techniques. The evaluation methods can be broken down mainly into two major classes, based on the available literature:

-

Theoretical assessments, primarily based on Tufte’s theories such as graphical excellence or graphical integrity (Tufte 1985; Tufte et al. 1998; Tufte 2006), Bertin’s work on visual variables (Bertin 1973, 1983), and Chamber’s research on the effect of patterns on visual perception (Chambers 2018).

-

User assessments, based on the study of the impact of uncertainty visualization techniques on end-users using either visual evaluation or task-oriented cognitive evaluation, or both ( Barthelmé and Mamassian 2009; Coninx et al. 2011; Newman and Lee 2004; Deitrick and Edsall 2006; Deitrick 2007).

5.1 Theoretical assessments

Tufte, a pioneer in data visualization, proposed two popular theories on excellent visualization—graphical excellence and graphical integrity.

-

Specific guidelines were proposed to achieve graphical excellence. Some of them include presenting a large amount of data in a small space, avoiding distortion, and encouraging a comparison of the data.

-

Tufte also proposed guidelines to achieve graphical integrity, such as clear labeling of the data, representing numbers proportionally to the numerical quantities and matching the number of data dimensions with the amount of information.

Apart from these popular theories, Tufte also proposed “data-ink maximization principle”, stating that most chunks of data must be visualized using the lowest quantity of ink possible (Tufte 1985; Tufte et al. 1998; Tufte 2006). Other authors, such as Bertin and Chambers, also contributed to the theoretical evaluation of the techniques. Their assessments were based on their analysis of the brain, eye, and picture interaction, which are listed below:

-

Bertin’s perceptual theories suggested categorization of visual variables in the form of (x, y) planes and evident spots above the surface such as color, size, shape, orientation, and value (Bertin 1999, 1983).

-

Another set of perceptual theories were introduced by Chambers, who presented a general technique for plot construction by reducing clutter, removing the structure from the data, and labeling the data to avoid distortion (Chambers 2018).

5.2 User studies

Several task-based user studies have been carried out to evaluate uncertainty visualization techniques. In fact, user-based evaluations are the most popular type of assessments (Deitrick 2007; Deitrick and Edsall 2006; Newman and Lee 2004). Although several techniques are available to convey uncertainty in visualizations, their potency in communicating significant information is a subject of evaluation. Sanyal et al. (2009) presented a user study to evaluate uncertainty’s effectiveness on four common uncertainty visualization techniques in 1-D and 2-D data. The techniques chosen were the size and color of glyphs, the color of the data surface, and error bars on data. Twenty-seven participants were asked search and counting related questions on this 1-D and 2-D data. The users were expected to find the least or most uncertain data points and count the data’s number of data or uncertainty features. This study indicated that the success of uncertainty visualization techniques was dependent on the user task. The size of the uncertainty glyphs and color of the data surface worked fairly well; however, error bars performed poorly in the experiment. Such results could pave the way for future visualization researchers in choosing the right uncertainty visualization technique for their research.

Certain visualizations such as graph visualizations produce a complex network of nodes or graph pictures based on graph drawing algorithms. Their readability criteria have always measured such pictures’ quality; however, such criteria are useful but not sufficient to evaluate graph visualization (Nguyen et al. 2012). Nguyen et al. proposed new criteria called faithfulness and also introduced a model to quantify this new kind of criteria to differentiate it from readability criteria (Nguyen and Eades 2017). Users can comprehend a complex network better when any graph visualization takes care of the nodes’ connectivity with the concept of faithfulness. User studies could play a pivotal role in determining the effectiveness of several uncertainty visualization techniques and help the visualization community with the choice of techniques for their tasks.

6 Future research directions

From this literature survey, it is noteworthy that uncertainty visualization has certainly taken giant strides over the past few decades in data science. The available literature gives an overview of what is available today and what are a few open problems. A few of these open problems need detailed discussion to make existing and new data scientists and researchers aware of the issues and give them a head start. This section primarily attempts to accomplish this goal. The missing solutions range from incompleteness in the involvement of perceptual and cognitive senses to the methodologies’ inefficacy employing a comparison of results to determine the reliability of visualization (Zuk and Carpendale 2006, 2007; Pagendarm and Post 1995; Balabanian et al. 2010).

6.1 Integrated views

Uncertainty at times may require a comparison of different visualizations to conclude. Areas such as weather forecasting or medical imaging may involve comparing various results to conclude by detecting similarities or differences (Malik et al. 2010; Gleicher et al. 2011). Comparative visualization techniques have been employed in the past for decision making in such domains (Urness et al. 2003). Adding a lot of information to the view results in cluttering and makes it difficult to comprehend. Likewise, 3-D visualization techniques may be used to show a few parameters, say three or four, instead of all of them (Cai and Sakas 1999; Fuchs and Hauser 2009; Hagh-Shenas et al. 2007). These constraints pose challenges in depicting uncertainty in visualization in such areas. Future research can be performed in the field of comparative visualization. Another interesting research direction is to find efficient ways to model different types of uncertainties in an integrated system. For example, a possible research direction is to model separate uncertainties associated with the system and the user in a unified system.

6.2 User interaction

Another possible future direction of research is the focus on user interaction. In this case, the users can control when the information related to error or unreliability is displayed and how a visual balance of data and the associated errors are achievable when the entire data is visualized at once. Depending upon the development of new futuristic technologies—such as the crime scene investigation visualization capabilities shown in the movies, e.g., Minority Report, warrant the need for additional user interaction capabilities that may overlap with the area of human–computer interaction.

6.3 Perceptual improvements

Colors are primarily used for various visualization techniques depicting multiple types of data and their attributes. Therefore, people with certain conditions, such as color blindness, might be overwhelmed and challenged to understand data through visualization. Such specific cases lead to uncertainty in visualization. Aside from the usual uncertainty sources, for example, sampling uncertainty, modeling uncertainty, or uncertainty through visualization, uncertainties like these come from the end-users or viewers and their ambiguous interpretations of the visualization. In such cases, the display of additional information like uncertainty information in the visualization could add to the ambiguity and lead to a more challenging decision-making process. More research is needed to create efficient visualizations for individuals with a color deficiency or any other perceptual limitations.

6.4 Reasoning integration

There is a lot of complexity and analytic gaps in the reasoning process. Thus, the integration of data and reasoning visualization is likely to bring good results in cognitive support. This integration would help realize the exact depiction of the whole logical system, thereby reducing gaps in analysis and understanding. Otherwise, it would be challenging to observe the impact of gaps in the integrated analytic system. This would also lead to reduced cognitive load on end-users leading to a possible improvement in team performance.

6.5 Big data

A considerable amount of investigation is ongoing in the world of big data relating to uncertainty visualization. Since big data has large amounts of reliability issues, it is essential to discover efficient visual techniques to understand the uncertainty associated with big data, leading to a better understanding of the data. It is also vital to focus on user evaluations to assess alternative uncertainty visualization techniques to investigate and develop the best options. Besides, more research can be performed to improve the existing uncertainty-aware big data visual analytics systems.

6.6 Less clutter

Another open problem is the ever-growing data and the associated clutter in modern times that need to be visualized. This implies that the need for applying uncertainty visualization techniques to big data is only expected to increase. However, the display of uncertainty, along with the data, already results in a cluttered view. This presents a new challenge of representing more data with higher dimensional uncertainties in a display. Furthermore, existing techniques are used in a few areas, such as cartography and volume/flow visualization. Other areas need attention when it comes to applying these big data uncertainty visualization techniques. Moreover, there is a need to focus on the evaluation of such techniques, as stated earlier. For example, a data scientist must tell when and for what data which techniques are more applicable. Topics of research could range from depicting many data sets simultaneously without clutter for integrated views.

6.7 Empirical evaluation

There is a lot to be explored when it comes to empirical evaluation of the uncertainty visualization. Most of the earlier works discuss uncertainty visualization from the theoretical viewpoint. There is a lack of usable implementation strategies in previous works to represent uncertainty in data visualization. Very few research works describe the implementation of different typologies of uncertainty visualization. Future research works may focus on various implementation strategies for uncertainty visualization keeping the new developments and researchers in mind.

6.8 Machine learning

Ongoing research has shown immense promise in the integration of ML and uncertainty visualization techniques to enhance the ability to understand the uncertainty in ML models, be it in predictive models or classification models. Owing to this, there is a need to design better alternative uncertainty visualization techniques to develop comprehensible ML models for analysts. Future research is required to create alternative visualization techniques in the area of dimension reduction methods. Furthermore, ML models need to be improved further to make better predictive models to improve the whole uncertainty visualization experience in ML. Extensive user studies also need to be carried out to evaluate alternate visualization methods. As discussed earlier, visual analytics can improve the ML models’ human understanding; however, it can also introduce uncertainties into the analytics process. Research shows that uncertainty awareness can lead to better decision-making (Sacha et al. 2016). Therefore, it is essential to measure and estimate uncertainties, which is very challenging. One possible future research direction is to investigate and design visual analytics systems that quantify uncertainties at each stage of an interactive model, such as data processing, training, visualization, and testing.

7 Conclusions