Abstract

The goal of visualization is to effectively and accurately communicate data. Visualization research has often overlooked the errors and uncertainty which accompany the scientific process and describe key characteristics used to fully understand the data. The lack of these representations can be attributed, in part, to the inherent difficulty in defining, characterizing, and controlling this uncertainty, and in part, to the difficulty in including additional visual metaphors in a well designed, potent display. However, the exclusion of this information cripples the use of visualization as a decision making tool due to the fact that the display is no longer a true representation of the data. This systematic omission of uncertainty commands fundamental research within the visualization community to address, integrate, and expect uncertainty information. In this chapter, we outline sources and models of uncertainty, give an overview of the state-of-the-art, provide general guidelines, outline small exemplary applications, and finally, discuss open problems in uncertainty visualization.

What is not surrounded by uncertainty cannot be the truth.

—Richard Feynman

True genius resides in the capacity for evaluation of uncertain, hazardous, and conflicting information.

—Winston Churchill

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Visualization is one window through which scientists investigate, evaluate and explore available data. As technological advances lead to better data acquisition methods, higher bandwidth, fewer memory limits, and greater computational power, scientific data sets are concurrently growing in size and complexity. Because of the reduction of hardware limitations, scientists are able to run simulations at higher resolution, for longer amounts of time, using more sophisticated numerical models. These advancements have forced scientists to become increasingly reliant on data processing, feature and characteristic extraction, and visualization as tools for managing and understanding large, highly complex data sets. In addition, there is becoming a greater accessibility to the error, variance, and uncertainty not only in output results but also incurred throughout the scientific pipeline.

With increased size and complexity of data becoming more common, visualization and data analysis techniques are required that not only address issues of large scale data, but also allow scientists to understand better the processes that produce the data, and the nuances of the resulting data sets. Information about uncertainty, including confidence, variability, as well as model bias and trends are now available in these data sets, and methods are needed to address the increased requirements of the visualization of these data. Too often, these aspects remain overlooked in traditional visualization approaches; difficulties in applying pre-existing methods, escalating visual complexity, and the lack of obvious visualization techniques leave uncertainty visualization an unsolved problem.

Effective visualizations present information in a manner that encourages data understanding through the appropriate choice of visual metaphor. Data are used to answer questions, test hypotheses, or explore relationships and the visual presentation of data must facilitate these goals. Visualization is a powerful tool allowing great amounts of data to be presented in a small amount of space, however, different visualization techniques are better than others for particular types of data, or for answering specific questions. Using the most befitting visualization method based on the data type and motivated by the intended goals of the data results in a powerful tool for scientists and data analysts.

The effective visualization of uncertainty, however, is not always possible through the simple application of traditional visualization techniques. Often, the visualization of the data itself has a high visual complexity, and the addition of uncertainty, even as a scalar value, complicates the display. Issues of visual clutter, data concealment, conflicts in how the data and the uncertainty are represented, and unintentional biases are just some of the problems incurred when visualizing data accompanied by uncertainty. Also, the complexity of these data sets may not lend themselves to the straightforward application of existing visualization methods, and thus, the added burden of uncertainty can be overwhelming.

Uncertainty data are becoming more prevalent and can be found in fields such as medical imaging, geoscience, and mechanical engineering. The simulation of complex systems, compilation of sensor data, and classification of tissue type are but a few sources of uncertainty data and their expression, size, and complexity can drastically vary. Uncertainty can arise in all stages of the analysis pipeline, including data acquisition, transformation, sampling, quantization, interpolation, and visualization. It can be a single scalar value presented alongside the original data, or can be an integral aspect of the data, derived from the description of the data itself. In any case, uncertainty is an imperative component of scientific data sets and should not be disregarded in visualizations.

1.1 Sources of Uncertainty

Uncertainty can mean very different things in different situations, with each driven by different key characteristics and goals. The uncertainty in a data set may result from the process through which the data was gathered or generated, or it may represent variability in the phenomenon represented by the data. We divide data uncertainty sources into three broad classes: uncertainty observed in sampled data, uncertainty measures generated by models or simulations, and uncertainty introduced by the data processing or visualization processes. Variability in the underlying phenomenon could manifest itself in sampled data or be incorporated into models or simulations. A particular data set might be subject to one form of uncertainty or multiple. Different types of uncertainty offer different challenges to effective and truthful visualization. While most of the visualization literature about uncertainty concentrates on issues of visual representation rather than source, a few papers have made a thoughtful analysis of the source of uncertainty, as well [9, 30, 74, 75, 98]. Other useful discussions of the sources of uncertainty can be found in the geo-spatial visualization and GIS literatures [11, 19, 20, 62]. The discussion below draws from all these sources.

1.1.1 Uncertainty in Sampled Data

Uncertainty in data that is gathered through a sampling process might give the appearance of too little information, too much information, or information that just cannot be trusted. Data sets where missing or incomplete instances provide too little information present challenges to many visualization methods. Filtering out data with missing elements can ignore valuable information and produce awkward holes. Filling in missing values or instances by interpolation, imputation, or other estimation techniques from known values can introduce error. In such cases, data quality metrics might indicate the confidence in estimated quantities. For instance, estimating a single missing data value from a dense set of similar instances would be expected to produce a smaller error than an estimation from a sparser or more disparate set. Data sets where multiple, contradictory measurements seem to provide too much data also offer challenges for visualization. Such situations can be caused by noisy data, noisy instruments, human error in the data gathering process, or sampling at a scale different than that natural to the phenomenon. One special case of error in data measurements is that of spatial data where the error might be in the position of a sampled location, rather than in its measured values, resulting in uncertainty about where values should be displayed. Similarly, data with contradictory values might be characterized by data quality metrics based on sample value range, variance, or another measure of variability. Finally, metadata about a data source may cast doubt on its certainty. For instance, data that is old, from an untrusted source, or gathered through a nonstandard process might be regarded with some skepticism (Fig. 1.1).

1.1.2 Models Containing Uncertainty

Sophisticated computational models may contain elements designed to estimate the uncertainty or variability in the model predictions. The sources of this type of uncertainty include residual variability from simplifying abstractions, variability in the mechanism or magnitude of causality and relationships, potential error in model inputs, incorrect model parameters, and imprecision in tacit knowledge incorporated in the model. The range of predictions made by model ensembles, where different component models may make different assumptions or use different parameters, illustrate the potential variability in even the best models.

The output from such a model may include information about estimated error in the form of a single error measure, ranges for expected values, or predicted distributions for values or errors. These measures are applicable to numeric quantities. Alternatively, a model that makes nominal or categorical predictions may also indicate the degree of confidence in its predictions by producing multi-value predictions, where each possible value or classification is associated with a likelihood.

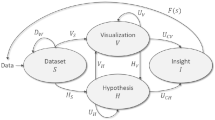

1.1.3 Uncertainty from the Visualization Process

Finally, we should understand how the visualization process impacts the propagation, magnification, perception, and impact of uncertainty. In order to do this, we must understand computational sources and magnifiers of error and uncertainty in input values, perceptual and cognitive influences on the understanding of uncertainty visualization, effects of differences in audience abilities and cultures, requirements imposed by different application tasks and goals, and competing positive and negative consequences of showing uncertainty.

2 Perceptual Uncertainty

Logically, it seems sensible to display information about uncertainty in a manner consistent with our cognitive models of which perceptual elements contain variability or uncertainty. A number of approaches to uncertainty visualization seem to build on this principle, representing uncertainty with such visual elements as blur, flicker, reduced saturation, sketched outlines, or transparency.

There have been relatively few careful evaluations of the effectiveness of uncertainty visualization and its impact on the decision-making process that have appeared in the visualization literature. In some cases, researchers have used quantitative evaluations or user studies to evaluate the ability of subjects to understand uncertain information [33, 109]. Zuk and Carpendale [111] present a framework for the heuristic evaluation of uncertainty visualizations from the perceptual and cognitive principles described by Bertin [6], Tufte [101], and Ware [105]. They use this framework to analyze eight uncertainty visualizations of different types and from different domains. They propose this sort of heuristic evaluation as a rough substitute when more specific evaluations are not practical.

Additional insight into the perceptual and cognitive elements of effective uncertainty representations can be found in the GIS literature. Harrower surveys a collection of evaluations of methods for representing uncertainty in map-based visualizations [38]. He observes that the most common characteristics used to judge a technique are its effects on confidence, speed, and accuracy of judgements. Two principles which may be derived from that set of evaluations are the superiority of displays that integrate value and certainty information over those that show each in a separate display and the preference for static displays over those that toggle between value and certainty. Deitrick describes experiments that show how inclusion of information about uncertainty changes the judgements made by subjects [21].

The field of medical decision-making has also considered the role of uncertainty in the decision-making process. Politi et al. [78] studied the effect of communication of uncertainty on patients engaged in shared decision-making. They reported an aversion to ambiguity in this situation, leading some patients to avoid making decisions in the presence of uncertainty while others engaged in additional information-seeking behaviors. They observed interactions between level of education and decision-making under uncertainty. In particular, less educated patients were more likely to conclude that the inclusion of visual depictions of uncertainty made data less trust-worthy. Patients also tended to interpret uncertain situations in a way that reinforced their initial values and preferences. Finally, Politi et al. suggest that communication of uncertainty may lead to greater ultimate satisfaction in the decision process and a lower likelihood of regret about a decision.

There is evidence that decision-making in the presence of uncertainty takes place in different regions of the brain than decision-making in more certain conditions. Specifically, Paulus et al. [76] observed different patterns of brain activity under fMRI during different decision-making conditions. They suggest that the more complex task of decision-making under uncertainty requires more complex strategies and is more influenced by experiences in the past. The physiological evidence supports this theory by showing increased involvement of brain areas important to strategy formation and adjustment, in particular the prefrontal and parietal cortex, when uncertainty is present.

3 Formal Description

The consideration and quantification of uncertainties is of great importance in many practical applications and is part of the data analysis chain to support decision making. For this reason, we need to understand the data including its shortcomings, value, and relevance, which largely depends on the presence or absence of uncertainty. Our goals are to understand quantified uncertainty and deal with it, as well as independently perform uncertainty quantification ourselves.

3.1 What is Uncertainty?

Uncertainty is the lack of information. It can be due to randomness, such as results by chance, for example the roll of the dice or knowing the exact daily quantity of rain in Seattle. This type of uncertainty is called aleatoric and is objective in that results differ each time an experiment is run. These types of phenomenon are truly random in that the results depend on chance, and thus use probabilistic modeling to describe. Uncertainty can also be due to a lack of knowledge, that is, "knowledge that can in principle could be known," but in practice is not. This type of uncertainty is called epistemic and is subjective, such as not knowing the birth date of the last Chinese Emperor. These uncertainties are due to errors that practically cannot be controlled and can be described by non-probabilistic modeling.

3.2 Mathematical Modeling of Uncertainty

A variety of types of uncertainties occur in practice, including mixtures of different types of uncertainty. Quantification of uncertainties, including mixtures, requires a unifying mathematical framework, which is very difficult to establish and not yet fully accomplished.

3.2.1 Fundamental Setting

From a fundamental standpoint, we are interested in the situation with possible outcomes or occurrences of “events” A, B, C, where A, B, and C are subsets of the set of all elementary events in the universe. The task at hand is to then measure the evidence that A ever happened, the degree of truth of that statement “event A happened”, and the probability that event A will happen. The question is then, how do we measure and what is measurement?

In mathematics, measurement means to assign real numbers to sets. For example, the classical task in metric geometry is to assign numbers to geometric objects for length, area, or volume. The requirement in the measurement task is that the assigned numbers should be invariant under displacement of the respective objects.

In ancient times, the act of measuring was equivalent to comparing with a standard unit. However, it soon became apparent that measurement was more complicated than initially thought in that it involves finite processes and sets. The first tool to deal with this problem was the Riemann integral which enabled the computation of length, areas, and volumes for complex shapes (as well as other measures). However, the Riemann integral has a number of deficiencies, including its applicability only to functions with a finite number of discontinuities, fundamental operations of differentiation and integration are, in general, not reversible, and limit processes, in general, can not be interchanged. In 1898, Émile Borel developed classical measure theory which includes \(\sigma \)-algebra to define a class of sets that is closed under set union of countably many sets and set complement, and defined as additive measure \(\mu \) that associates a number \(\in \mathbb {R} ^+_0\) with each bounded subset in the \(\sigma \)-algebra. Around 1899–1902, Henry Lebesgue defined integrals based on a measure that subsumes the Borel measure, based on a special case. He connected measures of sets and measures of functions.

3.2.2 Quantification

Probability measure was then developed in 1933 by Andrey Nikolaevich Kolmogorov, which used classical measure theory and added the measure of 1 assigned to the universal set. This is thought of as classical probability theory.

The classical probability theory has since become the dominant approach to examine uncertainty and randomness. Extensive mathematical studies followed and resulted in highly sophisticated theories. Its foundation rests on the definition of probability space, which was Kolmogorov’s big achievement. A probability space is a triplet \((\varOmega , F, P)\). Here \(\varOmega \) is a countable event space containing all possible outcomes of a random event. \(F\) is the so-called \(\sigma \)-algebra of \(\varOmega \) and it represents all combinations of the outcomes from \(\varOmega \). Its construction satisfies:

-

It is not empty: \(\emptyset \in F\) and \(\varOmega \in F.\)

-

If a set \(A\in F\), then its complement \(A^c \in F.\)

-

If sets \(A_1, A_2, \dots , \in F\), then \( \bigcup _{i=1}^\infty A_i \in F, \) and \(\bigcap _{i=1}^\infty A_i \in F.\)

\(P\) is the well known probability measure and it is used to assign a real number, i.e., the probability, on the occurrence of any outcomes of the events (from \(\varOmega \)) and their potential combinations (from \(F\)). It satisfies the following important and well known principles.

-

1.

\(0\le P(A)\le 1\), for any \(A\in F\).

-

2.

\(P(\varOmega ) = 1\). That is, the probabilities of all outcomes add up to one.

-

3.

For \(A_1, A_2, \dots \in F\) and \(A_i\cap A_j = \emptyset \), for any \(i\ne j\),

$$ P\left( \bigcup _{i=1}^\infty A_i\right) = \sum _{i=1}^\infty P(A_i). $$

About 50 years later, the additivity requirement became a subject of controversy in that it was too restrictive to capture the full scope of measurement. For example, it works well under idealized, error-free measurements, but is not adequate when measurement errors are unavoidable. In 1954, Gustave Choquet developed a (potentially infinite) family of non-additive measures (capacities), and for each given capacity, there exists a dual “alternating capacity”. An integral based on these measures is non-additive, can be computed using Riemann or Lebesgue integration and is applied specifically to membership functions and capacities.

In 1967, Arthur P. Dempster introduced imprecise probabilities based on the motivation that the precision required in classical probability is not realistic in many applications. Imprecise probabilities deal with convex sets of probability measures rather than single measures. For each given convex set of probability measures he also introduced 2 types of a non-additive measures: lower and upper probabilities, and super- and supra-additive. This allow probabilities to be represented imprecisely by intervals of real numbers.

In 1976, Glenn Shafer analyzed special types of lower and upper probabilities and call then belief and plausibility measures. The theory based on these measures became known as Dempster-Shafer theory (DST) or evidence theory. DST is capable of dealing with interval-based probabilities, such that belief or probability measures are equal to the ranges of admissible probabilities. As it turns out, belief measures are equivalent to Choquet capacities of order \(\inf \) and plausibility measures are equivalent to alternating capacities of order \(\inf \).

The comparison of membership functions of fuzzy sets and probabilities was investigated in 1978 by Michio Sugeno and found to be not directly possible. This led to the generalization of additive measures analogous to generalization such that crisp sets generalize to fuzzy sets, and additive measures generalize to (non-additive) fuzzy measures or monotone measures. The Sugeno integral was then introduced with respect to a monotone measure. That same year, Lofti Zadeh defined a possibility function associated with each fuzzy set that is numerically a membership function, and a possibility measure that is a supremum of the possibility function in each set of concern, for both crisp and fuzzy sets. This is one of several interpretations of the “theory of graded possibilities”. Its connection to DST is that constant plausibility measures are equivalent to possibility measures and constant belief measures are necessity measures.

In summary, the three most utilized uncertainty theories are the Classical Probability Theory, the Dempster-Shafer Theory, and Possibility Theory and can be divided into two classes. The first class uses additive measures in which the addition equal to the union expresses no interaction between events and can be thought of as classical probability combined with measure theory. The second class uses non-additive measures, in which addition greater than the union expresses positive interaction between events, such as synergy, cooperation, coalition, enhancement or amplification, while addition less than the union expresses negative interaction between events such as incompatibility, rivalry, inhibition, downgrading, or condensation. This class combines one of many uncertainty theories with generalized measure theory.

4 Evaluation

Visualization research is too often neglected by industry and other potential expert users. One of the reasons is the lack of a proper evaluation of the results. This lack of evaluation was especially obvious in historical visualization fields such as volume rendering or fluid flow visualization. In the more recent domain of uncertainty visualization, researchers have made a significant effort into the assessment of the proposed techniques. The types of evaluation may be classified into three groups:

-

Theoretical evaluation: the method is analyzed to see if it follows established graphical design principles,

-

Low-level visual evaluation: a psychometric visual user study is performed to evaluate low-level visual effects of the method,

-

Task oriented user study: a cognitive, task-based user study is conducted to assess the efficiency or the usability of the method.

4.1 Theoretical Evaluation

General guidelines and rules regarding visual depiction of data have been established, that have proven their efficiency. Bertin in [5], later translated in [6], has introduced the concept of visual variables. These include among others the location, size, orientation, shape, focus and realism. Furthermore he defined four visual properties, natural ordering, the ability to quantify, the ability to focus user attention (selectivity) and the ability to associate similar elements (associativity). He studied which of these properties are verified by the visual variables. Tufte in [102], through his concepts of graphical excellence and integrity, has proposed a number of guidelines to enhance the precision and the usability of graphical depiction. Chambers et al. in [14] have studied the relative influence of specific patterns on the visual perception, for example straight lines versus curves, dark versus light objects or small versus large patterns. This study leads the authors to define general rules for plot construction.

These graphical design principles may be used to conduct a theoretical evaluation of new uncertainty visualization techniques. As already mentioned in Sect. 1.2, Zuk and Carpendale in [111] have done such an evaluation for eight uncertainty visualization techniques. The same type of theoretical evaluation was followed by Riveiro in [87] to evaluate three uncertainty visualization techniques in the context of information fusion and decision making.

4.2 Low-Level Visual Evaluation

Barthelmé and Mamassian in [2] studied the influence on noise uncertainty in a decision-making task. Based on psychometric and simple task experiments, he proved that users can reliably measure the visual uncertainty and use this information in their decision-making. Coninx et al. in [18] conducted psychometric experiments to measure the impact of contrast sensitivity on the visibility of uncertain noisy patterns. He used this information in order to control the visibility of uncertainty data in a visualization technique based on the perturbation of colormaps by Perlin noise.

4.3 Task-Oriented User Study

Task oriented cognitive user studies are by far the most common way of assessing the efficiency and usability of uncertainty visualization techniques. In this type of evaluation a panel of users is typically asked to perform a task that requires not only low-level visual processing but also high-level cognitive treatment of the visual information. Standard tasks as an example may consist in counting the number of local minima in a dataset, find the location of the maximum or minimum value, find the direction of rotation of a vortex. The task completion time, task completion accuracy, user’s rating of efficiency and usability may be recorded. A statistical analysis of the recorded data is done. Typical analyses include analysis of variance (ANOVA), used to check in particular if the difference in the mean value of two distributions is significant. Examples of uncertainty visualization papers with a task-based evaluation include [20, 21, 69, 91].

5 Review of Current State of the Art

The goal of visualization is to effectively present large amounts of information in a comprehensible manner, however, most visualizations lack indications of uncertainty [42, 43, 63, 83].

5.1 Traditional Representations

Tukey [103] proposed graphical techniques to summarize and convey interesting characteristics of a data set not only to facilitate an understanding of the given data but also to further investigation and hypothesis testing. These tested graphical methods, such as the boxplot, histogram, and scatter plot, provide identifiable representations of a data distribution, and their simplicity allows for quick recognition of important features and comparison of data sets. In addition, they can be substituted for the actual display of data, specifically when data sets are too large to plot efficiently.

5.1.1 1D

One of the most ubiquitous approaches to displaying uncertainty information is the boxplot [28, 34, 94, 103], which is the standard technique for presenting the five-number summary, consisting of the minimum and maximum range values, the upper and lower quartiles, and the median, as illustrated in Fig. 1.2a. This collection of values quickly summarizes the distribution of a data set, including range and expected value, and provides a straightforward way to compare data sets. In addition, the reduced representation afforded by the five-number summary provides a concise tool for data analysis, since only these characteristic values need to be analyzed. Figure 1.2b and c show visual modifications of the boxplot. Surveys on the introduction and evolution of the boxplot can be found in [16, 81].

The box plot is often adapted to include information about the underlying distribution, as demonstrated in Fig. 1.2d–g. The most common modification adds density information, typically through changes to the sides of the plot. The hist plot [4] extends the width of the cross bars at the quartiles and median to express density at these three locations. The vase plot [4] instead varies the “box” continuously to reflect the density at each point in the innerquartile range. Similarly, the box-percentile plot [26] and violin plot [39] show density information for the entire range of the data set. Density can also be shown by adding dot plots [106], which graph data samples using a circular symbol. The sectioned density plot [17] completely reconstructs the box plot by creating rectangles whose colors and size indicate cumulative density, and placement express the location of the quartiles. Sample size and confidence levels can be expressed through changing or notching the width of the plot [67] (Fig. 1.2h) or by using dot-box plots, which overlay dot plots onto box plots [107]. Other descriptors, such as skew and modality, can be added by modifying the width of the median line [67], thickening the quartile lines [16], (Fig. 1.2i) adding beam and fulcrum displays [23] alongside, or overlaying additional glyphs [82] (Fig. 1.2j).

5.1.2 2D

Standard implementations of the boxplot focus on univariate data distributions. The five-number summary is a useful descriptor of not only univariate, but also bivariate data distributions. The main challenge in extending the boxplot for use with higher dimensional data is how to translate the five-number summary values, which are vector values in the bivariate case, into visual metaphors with meaningful spatial positions, while maintaining the simplicity of the original boxplot. A rangefinder boxplot [3], as seen as the solid back lines in Fig. 1.3a, is a simple extension of the boxplot into 2D which determines boxplots for the two dimensions independently and draws lines to show the interquartile ranges and extrema of those plots. This idea was further improved upon, as shown as the thick gray lines in Fig. 1.3a, to emphasize the quartiles rather than the range, by moving the perpendicular lines from the extrema values to the upper and lower quartile positions and extending whisker lines to the extrema value of the variables [53]. Other techniques for extending the boxplot into 2D all use the notion of a hinge that encompasses 50 % of the data and a fence that separates the central data from potential outliers. The distinctions between each of these methods are the way the contour of the hinge and fence are represented, and the methods used to calculate the contours. The 2D boxplot [99], as seen in Fig. 1.3b, computes a robust line through the data by dividing the data into three partitions, finding the median value of the two outer partitions, and using these points as the line. Depending on the relationship between the slope of the line and each variable, the quartile and fence lines are drawn either parallel to the robust line, or parallel to the variables coordinate axis. The lines not comprising the outer-fence and the inner-hinge boxes are removed. The bagplot [88] uses the concept of halfspace depth to construct a bivariate version of the boxplot, as seen in Fig. 1.3c. The relplot and the quelplot [32] use concentric ellipses to delineate between the hinge and fence regions. Both the relplot and quelplot can be seen in Fig. 1.3d.

5.1.3 PDFs

There is a body of research investigating methods for displaying probability distribution functions with spatial positions. Each of these methods takes an exploratory approach to the presentation of the data by filtering down the amount of data, and then providing a user interface for the scientist to explore the data sets. Ehlschlaeger et al. [25] present a method to smoothly animate between realizations of surface elevation. Bordoloi et al. [7] use clustering techniques to reduce the amount of data, while providing ways to find features of the data sets such as outliers. Streamlines and volume rendering have been used by Luo et al. [61] to show distributions mapped over two or three dimensions.

Kao et al. [48] uses a slicing approach to show spatially varying distribution data. This approach is interesting in that a colormapped plane shows the mean of the PDFs, and cutting planes along two edges allow for the interactive exploration of the distributions. Displaced surfaces as well as isosurfaces are used to enhance the understanding of the density of the PDFs.

Case studies of specific data have been performed by Kao et al. [46, 47]. Their data sets come from NASAs Earth Observing System (EOS) Satellite images and Light Detection And Ranging (LIDAR) data. The methods used to show this data include encoding the mean as a 2D color map, and using standard deviation as a displacement value. Histograms are also employed to understand better the density of the PDFs. To explore the mode of specific distributions, a small set of PDFs are plotted onto a color mapped spatial surface.

5.2 Uncertainty Visualization

Many visualization techniques that incorporate uncertainty information treat uncertainty like an unknown or fuzzy quantity; [75] is a survey of such techniques. These methods employ the meaning of the word uncertainty to create the interpretation of uncertainty or unknown to indicate areas in a visualization with less confidence, greater error, or high variation. Ironically, while blurring or fuzzing a visualization accurately indicates the lowered confidence in that data, it does not lead to more informed decision making. On the contrary, it obfuscates the information that leads to the measure of uncertainty. Because it obscures rather than elucidates the quantitative measures leading to the uncertain classification, such a solution to the problem of adding qualitative information to visualization misses important information.

5.2.1 Comparison Techniques

Often, uncertainty describes a comparison that can most clearly be understood visually, such as the difference between surfaces generated using different techniques, or a range of values that a surface might fall in. A simple approach to the visualization of this type of information is a side-by-side comparison of data sets. An example of this type of visualization is presented in Jiao et al. [41] where streamlines computed from various fiber tracking algorithms are interactively displayed along with the global and local difference measures. Another example is the time window, presented in [112], in which temporal uncertainty around archeological sites is displayed, using various visual clues, in an interactive, exploratory system.

However, this approach may not clearly manifest subtle differences when the data are nearly the same, and it becomes harder to perform this comparison as the visualization becomes more complicated. Another simple approach is to overlay the data to be compared [45]. With this technique, the addition of transparency or wire frame can produce a concise, direct comparison of the data sets. A similar approach uses difference images to display areas of variation [108]. These approaches are less effective, however, when the uncertainty can be categorized as more of a range of values rather than just two distinct ones. In such cases, a surface sweep, known as a fat surface [75], can be used to indicate all possible values. Another approach is the integration of isosurface and volume rendering. Here, an opaque isosurface can be used to indicate the most likely value, and a transparent volume rendering surrounding the isosurface can indicate the range of possible values [43]. Uncertainty information for large collections of aggregated data can be presented using hierarchical parallel coordinates [29]. Lee et al. [52] visualize differences in location and sub-tree structure between two hierarchies through color and transparency. Finally, bounded uncertainty, while not effectively visualized in 3D, can be expressed through the ambiguation of boundaries and edges of pie charts, error bars, and other 2D abstract graphs [70] or as modifications to line charts [96].

5.2.2 Attribute Modification

Another standard method to visualize uncertainty involves mapping it to free variables in the rendering equation or modifying the visual attributes of the data. Such methods include modifying the bidirectional reflectance function (BRDF) to change surface reflectance, mapping uncertainty to color or opacity [65, 91, 97], or pseudo-coloring using a look-up table [75]. This technique has been used as a means for conveying uncertainty in the areas of volume rendering [22, 51, 89], point cloud surface data [77], isosurfacing [45, 79, 80, 86] and flow fields [8], and is often combined with other uncertainty visualization methods. An example technique colormaps flowline curvature onto volume rendered surfaces, highlighting areas in which small changes in isovalue lead to large changes in isosurface orientation and thus indicating areas where the isosurface is a poor representation of material boundary [49]. Another example uses height as a free parameter to display uncertainty in 2D vector fields [72]. Texture can be used similarly to convey uncertainty and is also often modified by opacity, hue, or texture irregularities [18, 40, 74]. Sound has also been used as another channel for expressing uncertainty [58].

5.2.3 Glyphs

Glyphs are symbols used in visualization to signify data through parameters such as location, size, shape, orientation, and color. Because of the multivariate nature of glyphs, they can be used in visualization to map uncertainty to a free parameter. One such approach uses glyphs to present the distribution of multivariate aggregated data over a range of values [15]. These glyphs show the average, standard deviation, and distribution of three attributes of the data set. Conical glyphs have also been used to portray fiber tracks from DTI, leveraging the radius of the cone to encode uncertainty in the orientation of bundles [44]. An approach that modifies attributes of glyphs already present in the visualization is presented as a procedural generation algorithm [13]. In this work, the data is sampled on a regular grid and the size, color, and placement of glyphs are taken directly from the data samples. The uncertainty is then used to distort the glyphs so that glyphs with low uncertainty are very sharp, with the sharpness level decreasing as the uncertainty level increases. This distortion provides a clear indication of uncertainty and error while not placing heavy emphasis on areas of high uncertainty. In a similar fashion, contours already present in the visualization can be used [84, 85] or modified [71, 92] to express uncertainty.

Because not all data is visualized effectively using glyphs, the addition of glyphs to convey only uncertainty information is often a preferable approach. A specific example is the UISURF system [45], which visually compares isosurfaces and the algorithms used to generate them. In this system, glyphs are used to express positional and volumetric differences between isosurfaces by encoding the magnitude of the differences in the size of the glyphs. Similarly, line, arrow, and ellipsoidal glyphs can be used to depict uncertainty in radiosity solutions, interpolation schemes, vector fields, flow solvers, astrophysical data and animations through variation of placement, magnitude, radii, and orientation [54, 55, 57, 75, 91, 93, 109, 110, 113].

5.2.4 Image Discontinuity

Uncertainty visualization often relies on the human visual systems ability to quickly pick up an images discontinuities and to interpret these discontinuities as areas with distinct data characteristics. Techniques that utilize discontinuities rely on surface roughness, blurring, oscillations [13, 33, 56, 108], depth shaded holes, noise, and texture [22], as well as on the translation, scaling, rotation, warping, and distortion of geometry already used to visualize the data [75], to visualize uncertainty. Animation can highlight the regions of distortion or blur or highlight differences in visualization parameters [30, 60, 66]. Such techniques have been applied to multivaritate data displayed through scatter plots or parallel coordinates [27, 36].

6 Examples

6.1 Medical Visualization

A fundamental task in medical visualization is segmentation, the partitioning of a given image into regions that correspond to different materials, to different anatomical structures, or to tumors and other pathologies. Medical image acquisition typically introduces noise and artifacts, and we may wish to segment structures for which the data itself provides little contrast. This is a source of data uncertainty. In many cases, segmentation also involves complex computational models and numerous parameters, which introduces model uncertainty.

Traditional volume rendering classifies materials based on scalar intensity or feature vectors that account for first and second derivatives [50]. Lundström et al. [60] introduce probabilistic transfer functions that assign material probabilities to model cases in which the feature ranges of different materials overlap. This results in a distribution of materials at each location in space, which is visualized by an animation in which each material is shown for a duration that is proportional to its probability.

More complex segmentation tasks cannot be achieved based on local image properties alone. They require models that account for more global assumptions or more complex prior knowledge. Such models are also more computationally demanding and are typically run as a pre-process of the visualization. Some of them output class probabilities, from which Kniss et al. [51] derive measures that can be used to define transfer functions that enable exploring the risk associated with binary classifications, or to visualize spatial decision boundaries. Figure 1.4 shows the use of such transfer functions in a visualization of a segmented brain.

The framework of Saad et al. [90] combines volume rendering with tables that list groups of voxels for which the same materials have been found to be most, second most, and third most likely. They demonstrate several examples in which these tuples can be used to detect anomalous subregions within areas that share the most likely material. Follow-up work [89] has concentrated on identifying anomalies or misclassification by considering regions in which the image-based likelihood disagrees with shape and appearance priors.

Finally, work by Torsney-Weir et al. [100] addresses the model uncertainty in segmentation methods by providing a systematic framework to explore the impact of model parameters. This should facilitate finding settings that produce the desired segmentation, and for which the results do not change significantly when slightly changing the exact parameter values.

Fiber tracking, the reconstruction of nerve fiber bundles from diffusion MRI, is another subfield of medical visualization in which uncertainty plays an important role. It is treated in detail in Chap. 8 of this book.

6.2 Weather and Climate

Uncertainties are prolific in weather and climate applications and arise not only from insufficient models, but also from our inability to accurately measure current weather conditions and obtain precise knowledge on parameter settings. The typical approach for mitigating uncertainties in weather and climate applications is to perform multi-run simulations, often using a collection of models, parameter perturbations, and initial conditions to generate outcome results for multiple variables and time steps. While the variables contained in the output of both weather and climate simulations are similar, the main differences between the two domains are the spatial region of interest and the duration of time covered. Weather applications are typically only interested in a small subsection of the planet, such as North America, and run to cover time steps within the near future. In contrast, climate modeling has a spatial interest of the whole planet and is run over hundreds of years.

The EnsembleVis tool [84] for exploring short-range weather forecast data

The uncertainty resulting from these multi-run simulations are typically captured in what is know as “Ensemble data sets”. These ensembles combine the multiple runs such that notions of probability of outcome can be explored. An ensemble consists of multiple simulation realizations and are often generated by pre-defined parameter perturbations. The visualization and analysis of these data sets aims to understand variations between models and effects of parameters and initial conditions, culminating in an understanding of the phenomenon leading to weather and climate events.

An example of a visualization and analysis tool can be seen in Fig. 1.5, which shows a screen shot of the EnsembleVis framework for the exploration of short-range weather forecasting data [84]. This tool uses a multiwindow approach to provide a collection of views for the end user, an approach used by other tools [92]. This approach allows the user to see overviews of a single time step, the progression of the data over time, drill downs to explore interesting spatial locations, including direct data display, and finally query-based exploration for more complex analyses.

6.3 Security and Intelligence

Security and intelligence uncertainty factors are a natural fit for security visualization, where making well-informed decisions is the primary goal. Enforcing security has become a top priority among a wide range of real-life applications, for instance large corporate or government/military networks. However, the task of decision making is notoriously difficult due to the malicious, hidden nature of attacks, sparse sampling of real-time environment, and time-critical requirements. Therefore, in security analysis uncertainty often exists among decisions at all levels, ranging from global scale such as “is there any malicious activity?” to finer scale such as “which entities are malicious?” or “in what order did these events actually occur?”. The results of these decisions are used to make recommendations which can have significant operational impact, as nodes identified as malicious will be quarantined or removed from the network. Previously, both automated attack mitigation and interactive visualization approaches have been developed for security visualization. These existing techniques serve as a good platform for the integration of uncertainty visualizations and interactions. For example, several visual abstractions have been explored for detecting the sybil attack, which is a coordinated attack that can subvert many types of networks [24]. Sybil attacks are challenging to detect due to their variable attack forms and patterns. Because of this, traditional signature-based or behavior-based methods are ineffective, and security analysts must often find these nodes through manual analysis of their network. Visual abstractions from both adjacency matrix of the network connections [59] and spectral space [37] are explored, which can elucidate the signature patterns of an attack and apply automatic pattern matching algorithms or interactive analysis methods to search for similar patterns. As the short paper (Chap. 7) in this chapter describes, the factors of uncertainty can be introduced to existing detection mechanisms to improve the continuing analytic process. Since uncertainty is prevalent in security applications, the impact of uncertainty should be integrated into the entire procedure of data analysis and interactive exploration. Many current security visualization approaches can and should be augmented with interactions and visualizations for specifying and managing analytic uncertainty. By integrating analytic uncertainty in security visualization, analysts are able to make better-informed decisions regarding critical network infrastructure issues.

7 Open Problems

7.1 Perceptual and Cognitive Implications

Since visualization often relies heavily on the use of colors to convey information, it can be quite challenging for individuals with color vision deficiency. For them, even interpreting visualizations that would pose no problems for individuals with normal color vision can be a difficult task. In this case, however, the resulting ambiguity, and therefore, uncertainty, is inherent to the observer, falling outside the broad sources of uncertainty discussed in Sect. 1.1.1 (i.e., uncertainty observed in sampled data, uncertainty measures generated by models or simulations, and uncertainty introduced by the data processing or visualization processes). Thus, individuals with color vision deficiency have to constantly deal with uncertainty visualizations and make decisions based on ambiguous information. For those individuals, the display of additional data that tries to express the amount of uncertainty from various sources may even generate further ambiguities. The issues involving uncertainty visualization and color vision deficiency are discussed in Chap. 2.

7.2 Comparative Visualizations

The visualization of uncertainty may involve a comparison of different results, such as a weather forecast generated with different parameters. To detect similarities or differences in the results a comparative visualization technique [73] can be employed. In 3D a visualization via fusion [10, 12] is not feasible beyond a small number (2 or 3) of data sets, due to clutter and inter-dependence of the different data sets. An alternative to fusion is a side-by-side view of the data sets. This may be problematic in 3D since it is hard to find corresponding reference points in more than two volumes. As an example to control a 3D comparison Balabanian et al. [1] propose to integrate volume visualization into a hierarchical graph structure. These integrated views provide an interactive side-by-side display of different volumes while the parameter space can be explored through the graph structure. In 2D a blending of different results has basically the same issues as a fusion in 3D [31, 35]. There are techniques which allow a comparative visualization of different data sets in a single image. Urness et al. [104] introduced color weaving for flow visualization to compare different flow fields in a single 2D view. In contrast to blending, each pixel of the resulting image represents an unmodified value from one of the data sets. The generated pattern provides a good overview to detect similar or varying regions in the data sets. To compare certain regions in more detail, e.g., borders, it is better to consider larger comparison areas than individual pixels. In this context it is crucial that data sets which should be compared are visualized next to each other to get a direct comparison for a certain area. For only two data sets a checkerboard pattern can be used to achieve screen door transparency [95]. The white squares show one data set and the black squares show the other data set. The attribute block by Miller [68] allows a simultaneous comparison of four data sets. A repeating \(2\times 2\) pattern provides a shared border between all four data sets. An extension to this approach is the comparative visualization technique of Malik et al. [64]. Instead of a rectangular pattern a hexagonal pattern is used to more finely subdivide the image space. This allows the comparison of a larger number of data sets to one central data set since the hexagonal pattern can be subdivided according to the number of data sets to compare. Uncertainty of a measurement, simulation, or process provides an additional data stream which generates further visualization challenges. Uncertainty may be shown at discrete positions through glyphs or icons. For a dense representation of uncertainty, comparative visualization seems to be a promising emerging area. Topics of research will be: integrated views; sparsification of many data sets which shall be shown simultaneously; comparative navigation; visualization of competing, contradictive, or conflicting features.

References

Balabanian, J., Viola, I., Gröller, E.: Interactive illustrative visualization of hierarchical volume data. In: Proceedings of Graphics Interface 2010, Ottawa, Ontario, Canada, pp. 137–144 (2010)

Barthelmé, S., Mamassian, P.: Evaluation of objective uncertainty in the visual system. PLoS Comput. Biol. 5(9), e1000504 (2009)

Becketti, S., Gould, W.: Rangefinder box plots. Am. Stat. 41(2), 149 (1987)

Benjamini, Y.: Opening the box of a boxplot. Am. Stat. 42(4), 257–262 (1988)

Bertin, J.: Sémiologie graphique: Les diagrammes—Les réseaux—Les cartes. Editions de l’Ecole des Hautes Etudes en Sciences (1967)

Bertin, J.: Semiology of Graphics. The University of Wisconsin Press (1983) (Translated by William Berg)

Bordoloi, U., Kao, D., Shen, H.W.: Visualization techniques for spatial probability density function data. Data Sci. J. 3, 153–162 (2005)

Botchen, R.P., Weiskopf, D., Ertl, T.: Texture-based visualization of uncertainty in flow fields. In: IEEE Visualization 2005, pp. 647–654 (2005)

Boukhelifa, N., Duke, D.J.: Uncertainty visualization: why might it fail? In: CHI Extended Abstracts’09, pp. 4051–4056 (2009)

Bürger, R., Hauser, H.: Visualization of multi-variate scientific data. Comput. Graph. Forum 28(6), 1670–1690 (2009)

Buttenfield, B., Ganter, J.: Visualization and gis: what should we see? What might we miss? In: 4th International Symposium on Spatial Data Handling, vol. 1, pp. 307–316 (1990)

Cai, W., Sakas, G.: Data intermixing and multi-volume rendering. Comput. Graph. Forum 18(3), 359–368 (1999)

Cedilnik, A., Rheingans, P.: Procedural annotation of uncertain information. In: IEEE Proceedings of Visualization 2000, pp. 77–84 (2000)

Chambers, J.M., Cleveland, W.S., Kleiner, B., Tukey, P.A.: Graphical Methods for Data Analysis. Wadsworth (1983)

Chlan, E.B., Rheingans, P.: Multivariate glyphs for multi-object clusters. In: Proceedings of InfoVis ’05, pp. 141–148 (2005)

Choonpradub, C., McNeil, D.: Can the box plot be improved? Songklanakarin J. Sci. Technol. 27(3), 649–657 (2005)

Cohen, D.J., Cohen, J.: The sectioned density plot. Am. Stat. 60(2), 167–174 (2006)

Coninx, A., Bonneau, G.P., Droulez, J., Thibault, G.: Visualization of uncertain scalar data fields using color scales and perceptually adapted noise. In: Applied Perception in Graphics and Visualization (2011)

Couclelis, H.: The certainty of uncertainty: GIS and the limits of geographic knowledge. Trans. GIS 7(2), 165–175 (2003)

Deitrick, S., Edsall, R.: The influence of uncertainty visualization on decision making: An empirical evaluation. In: Progress in Spatial Data Handling, pp. 719–738. Springer, Berlin (2006)

Dietrick, S.: Uncertainty visualization and decision making: Does visualizing uncertain information change decisions? In: Proceedings of the XXII International Cartographic Conference (2007)

Djurcilov, S., Kim, K., Lermusiaux, P., Pang, A.: Visualizing scalar volumetric data with uncertainty. Comput. Graph. 26, 239–248 (2002)

Doane, D.P., Tracy, R.L.: Using beam and fulcrum displays to explore data. Am. Stat. 54(4), 289–290 (2000)

Douceur, J.R.: The sybil attack. In: The First International Workshop on Peer-to-Peer Systems, pp. 251–260 (2002)

Ehlschlaeger, C.R., Shortridge, A.M., Goodchild, M.F.: Visualizing spatial data uncertainty using animation. Comput. GeoSci. 23(4), 387–395 (1997)

Esty, W.W., Banfield, J.D.: The box-percentile pot. J. Stat. Softw. 8(17), 1–14 (2003)

Feng, D., Kwock, L., Lee II, Y.: R.M.T.: matching visual saliency to confidence in plots of uncertain data. IEEE Trans. Visual. Comput. Graph. 16(6), 980–989 (2010)

Frigge, M., Hoaglin, D.C., Iglewicz, B.: Some implementations of the box plot. Am. Stat. 43(1), 50–54 (1989)

Fua, Y.H., Ward, M., Rundensteiner, E.: Hierarchical parallel coordinates for exploration of large datasets. In: Proceedings of Vis ’99, pp. 43–50 (1999)

Gershon, N.D.: Visualization of fuzzy data using generalized animation. In: Proceedings of the IEEE Conference on Visualization, pp. 268–273 (1992)

Gleicher, M., Albers, D., Walker, R., Jusufi, I., Hansen, C., Roberts, J.: Visual comparison for information visualization. Inf. Visual. 10(4), 289–309 (2011)

Goldberg, K.M., Iglewicz, B.: Bivariate extensions of the boxplot. Technometrics 34(3), 307–320 (1992)

Grigoryan, G., Rheingans, P.: Point-based probabilistic surfaces to show surface uncertainty. In: IEEE Trans. Visual. Comput. Graph. 10(5), 546–573 (2004)

Haemer, K.W.: Range-bar charts. Am. Stat. 2(2), 23 (1948)

Hagh-Shenas, H., Kim, S., Interrante, V., Healey, C.: Weaving versus blending: a quantitative assessment of the information carrying capacities of two alternative methods for conveying multivariate data with color. IEEE Trans. Visual. Comput. Graph. 13(6), 1270–1277 (2007)

Haroz, S., Ma, K.L., Heitmann, K.: Multiple uncertainties in time-variant cosmological particle data. In: IEEE Pacific Visualization Symposium, pp. 207–214 (2008)

Harrison, L., Hu, X., Ying, X., Lu, A., Wang, W., Wu, X.: Interactive detection of network anomalies via coordinated multiple views. In: Proceedings of the 7th International Symposium on Visualization for Cyber Security, VizSec ’10 (2010)

Harrower, M.: Representing uncertainty: Does it help people make better decisions? In: UCGISWorkshop: Geospatial Visualization and Knowledge Discovery Workshop (2002)

Hintze, J.L., Nelson, R.D.: Violin plots: a box plot-density trace synergism. Am. Stat. 52(2), 181–184 (1998)

Interrante, V.: Harnessing natural textures for multivariate visualization. IEEE Comput. Graph. Appl. 20(6), 6–11 (2000)

Jiao, F., Phillips, J.M., Stinstra, J., Krüger, J., Varma, R., Hsu, E., Korenberg, J., Johnson, C.R.: Metrics for uncertainty analysis and visualization of diffusion tensor images. Lect. Notes Comput. Sci. 6326(2010), 179–190 (2010)

Johnson, C.R.: Top scientific visualization research problems. IEEE Comput. Graph. Appl. 24(4), 13–17 (2004)

Johnson, C.R., Sanderson, A.R.: A next step: visualizing errors and uncertainty. IEEE Comput. Graph. Appl. 23(5), 6–10 (2003)

Jones, D.K.: Determining and visualizing uncertainty in estimates of fiber orientation from diffusion tensor mri. Magn. Reson. Med. 49, 7–12 (2003)

Jospeh, A.J., Lodha, S.K., Renteria, J.C., Pang., A.: Uisurf: Visualizing uncertainty in isosurfaces. In: Proceedings of the Computer Graphics and Imaging, pp. 184–191 (1999)

Kao, D., Dungan, J.L., Pang, A.: Visualizing 2d probability distributions from eos satellite image-derived data sets: A case study. In: Proceedings of the Conference on Visualization ’01, VIS ’01, pp. 457–460 (2001)

Kao, D., Kramer, M., Love, A., Dungan, J., Pang, A.: Visualizing distributions from multi-return lidar data to understand forest structure. Cartograph. J. 42(1), 35–47 (2005)

Kao, D., Luo, A., Dungan, J.L., Pang, A.: Visualizing spatially varying distribution data. In: Information Visualization ’02, pp. 219–225 (2002)

Kindlmann, G., Whitaker, R., Tasdizen, T., Moller, T.: Curvature-based transfer functions for direct volume rendering: Methods and applications. In: Proceedings of the 14th IEEE Visualization 2003 (VIS’03), pp. 67–74 (2004)

Kniss, J., Kindlmann, G., Hansen, C.: Multidimensional transfer functions for interactive volume rendering. IEEE Trans. Visual. Comput. Graph. 8(3), 270–285 (2002)

Kniss, J.M., Uitert, R.V., Stephens, A., Li, G.S., Tasdizen, T., Hansen, C.: Statistically quantitative volume visualization. In: Proceedings of IEEE Visualization 2005, pp. 287–294 (2005)

Lee, B., Robertson, G.G., Czerwinski, M., Parr, C.S.: Candidtree: visualizing structural uncertainty in similar hierarchies. Inf. Visual. 6, 233–246 (2007)

Lenth, R.V.: Comment on rangefinder box plots. Am. Stat. 42(1), 87–88 (1988)

Li, H., Fu, C.W., Li, Y., Hanson, A.J.: Visualizing large-scale uncertainty in astrophysical data. IEEE Trans. Visual. Comput. Graph. 13(6), 1640–1647 (2007)

Lodha, S., Sheehan, B., Pang, A., Wittenbrink, C.: Visualizing geometric uncertainty of surface interpolants. In: Proceedings of the Conference on Graphics Interface ’96, pp. 238–245 (1996)

Lodha, S.K., Faaland, N.M., Charaniya, A.P.: Visualization of uncertain particle movement. In: Proceedings of the Computer Graphics and Imaging Conference, pp. 226–232 (2002)

Lodha, S.K., Pang, A., Sheehan, R.E., Wittenbrink, C.M.: Uflow: Visualizing uncertainty in fluid flow. In: Proceedings Visualization ’96, pp. 249–254 (1996)

Lodha, S.K., Wilson, C.M., Sheehan, R.E.: Listen: sounding uncertainty visualization. In: Proceedings Visualization ’96, pp. 189–195 (1996)

Lu, A., Wang, W., Dnyate, A., Hu, X.: Sybil attack detection through global topology pattern visualization. Inf. Visual. 10(1), 32–46 (2011)

Lundström, C., Ljung, P., Persson, A., Ynnerman, A.: Uncertainty visualization in medical volume rendering using probabilistic animation. IEEE Trans. Visual. Comput. Graph. 13(6), 1648–1655 (2007)

Luo, A., Kao, D., Pang, A.: Visualizing spatial distribution data sets. In: Proceedings of the Symposium on Data Visualisation 2003, VISSYM ’03, pp. 29–38 (2003)

MacEachren, A., Robinson, A., Hopper, S., Gardner, S., Murray, R., Gahegan, M., Hetzler, E.: Visualizing geospatial information uncertainty: what we know and what we need to know. Cartograph. Geograph. Inf. Sci. 32(3), 139–160 (2005)

MacEachren, A.M., Robinson, A., Hopper, S., Gardner, S., Murray, R., Gahegan, M., Hetzler, E.: Visualizing geospatial information uncertainty: what we know and what we need to know. Cartograph. Geograph. Inf. Sci. 32(3), 139–160 (2005)

Malik, M.M., Heinzl, C., Gröller, M.E.: Comparative visualization for parameter studies of dataset series. IEEE Trans. Visual. Comput. Graph. 16(5), 829–840 (2010)

Masuch, M., Freudenberg, B., Ludowici, B., Kreiker, S., Strothotte, T.: Virtual reconstruction of medieval architecture. In: Proceedings of EUROGRAPHICS 1999, Short Papers, pp. 87–90 (1999)

Masuch, M., Strothotte, T.: Visualising ancient architecture using animated line drawings. In: Proceedings of the IEEE Conference on Information Visualization, pp. 261–266 (1998)

McGill, R., Tukey, J.W., Larsen, W.A.: Variations of box plots. Am. Stat. 32(1), 12–16 (1978)

Miller, J.: Attribute blocks: visualizing multiple continuously defined attributes. IEEE Comput. Graph. Appl. 27(3), 57–69 (2007)

Newman, T.S., Lee, W.: On visualizing uncertainty in volumetric data: techniques and their evaluation. J. Vis. Lang. Comput. 15, 463–491 (2004)

Olston, C., Mackinlay, J.D.: Visualizing data with bounded uncertainty. In: Proceedings of the IEEE Symposium on Information Visualization (InfoVis’02), pp. 37–40 (2002)

Osorio, R.A., Brodlie, K.: Contouring with uncertainty. In: 6th Theory and Practice of Computer Graphics Conference, pp. 59–66 (2008)

Otto, M., Germer, T., Hege, H.C., Theisel, H.: Uncertain 2d vector field topology. Comput. Graph. Forum 29(2), 347–356 (2010)

Pagendarm, H., Post, F.: Comparative visualization—approaches and examples. In: 5th Eurographics Workshop on Visualization in Scientific Computing, Rostock, Germany (1994)

Pang, A., Furman, J.: Data quality issues in visualization. In: SPIE Visual Data Exploration and Analysis, vol. 2278, pp. 12–23 (1994)

Pang, A., Wittenbrink, C., Lodha, S.: Approaches to uncertainty visualization. Vis. Comput. 13(8), 370–390 (1997)

Paulus, M., Hozack, N., Zauscher, B., McDowell, J., Frank, L., Brown, G., Braff, D.: Prefrontal, parietal, and temporal cortex networks underlie decision=making in the presence of uncertainty. NeuroImage 13, 91–100 (2001)

Pauly, M., Mitra, N.J., Guibas, L.: Uncertainty and variability in point cloud surface data. In: Symposium on Point-Based Graphics, pp. 77–84 (2004)

Politi, M., Han, P., Col, N.: Communicating the uncertainty of harms and benefits of medical interventions. Med. Decis. Mak. 27(5) 681–695 (2007)

Pöthkow, K., Hege, H.C.: Positional uncertainty of isocontours: condition analysis and probabilistic measures. IEEE Trans. Visual Comput. Graph. PP(99), 1–15 (2010)

Pöthkow, K., Weber, B., Hege, H.C.: Probabilistic marching cubes. Comput. Graph. Forum 30(3), 931–940 (2011)

Potter, K.: Methods for presenting statistical information: The box plot. In: Hagen, H., Kerren, A., Dannenmann, P. (eds.) Visualization of Large and Unstructured Data Sets, GI-Edition, Lecture Notes in Informatics (LNI) S-4, pp. 97–106 (2006)

Potter, K., Kniss, J., Riesenfeld, R., Johnson, C.R.: Visualizing summary statistics and uncertainty. In: Computer Graphics Forum, Proceedings of Eurovis 2010, vol. 29(3), pp. 823–831 (2010)

Potter, K., Rosen, P., Johnson, C.R.: From quantification to visualization: A taxonomy of uncertainty visualization approaches. IFIP Advances in Information and Communication Technology Series p. (To Appear) (2012). (Invited Paper)

Potter, K., Wilson, A., Bremer, P.T., Williams, D., Doutriaux, C., Pascucci, V., Johhson, C.R.: Ensemble-vis: A framework for the statistical visualization of ensemble data. In: IEEE Workshop on Knowledge Discovery from Climate Data: Prediction, Extremes., pp. 233–240 (2009)

Praßni, J.S., Ropinski, T., Hinrichs, K.: Uncertainty-aware guided volume segmentation. IEEE Trans. Visual Comput. Graph. 16(6), 1358–1365 (2010)

Rhodes, P.J., Laramee, R.S., Bergeron, R.D., Sparr, T.M.: Uncertainty visualization methods in isosurface rendering. In: EUROGRAPHICS 2003 Short Papers, pp. 83–88 (2003)

Riveiro, M.: Evaluation of uncertainty visualization techniques for information fusion. In: 10th International Conference on Information Fusion, pp. 1–8 (2007)

Rousseeuw, P.J., Ruts, I., Tukey, J.W.: The bagplot: a bivariate boxplot. Am. Stat. 53(4), 382–387 (1999)

Saad, A., Hamarneh, G., Möller, T.: Exploration and visualization of segmentation uncertainty using shape and appearance prior information. IEEE Trans. Visual. Comput. Graph. 16(6), 1366–1375 (2010)

Saad, A., Möller, T., Hamarneh, G.: Probexplorer: uncertainty-guided exploration and editing of probabilistic medical image segmentation. Comput. Graph. Forum 29(3), 1113–1122 (2010)

Sanyal, J., Zhang, S., Bhattacharya, G., Amburn, P., Moorhead, R.J.: A user study to compare four uncertainty visualization methods for 1d and 2d datasets. IEEE Trans. Visual. Comput. Graph. 15(6), 1209–1218 (2009)

Sanyal, J., Zhang, S., Dyer, J., Mercer, A., Amburn, P., Moorhead, R.J.: Noodles: a tool for visualization of numerical weather model ensemble uncertainty. IEEE Trans. Visual. Comput. Graph. 16(6), 1421–1430 (2010)

Schmidt, G.S., Chen, S.L., Bryden, A.N., Livingston, M.A., Rosenblum, L.J., Osborn, B.R.: Multidimensional visual representations for underwater environmental uncertainty. IEEE Comput. Graph. Appl. 24(5), 56–65 (2004)

Spear, M.E.: Charting Statistics. McGraw-Hill, New York (1952)

Stokking, R., Zubal, I., Viergever, M.: Display of fused images: methods, interpretation, and diagnostic improvements. Semin. Nucl. Med. 33(3), 219–227 (2003)

Streit, A., Pham, B., Brown, R.: A spreadsheet approach to facilitate visualization of uncertainty in information. IEEE Trans. Visual. Comput. Graph. 14(1), 61–72 (2008)

Strothotte, T., Puhle, M., Masuch, M., Freudenberg, B., Kreiker, S., Ludowici, B.: Visualizing uncertainty in virtual reconstructions. In: Proceedings of Electronic Imaging and the Visual Arts, EVA Europe ’99, p. 16 (1999)

Thomson, J., Hetzler, B., MacEachren, A., Gahegan, M., Pavel, M.: A typology for visualizing uncertainty. In: Proceedings of SPIE. vol. SPIE-5669, pp. 146–157 (2005)

Tongkumchum, P.: Two-dimensional box plot. Songklanakarin J. Sci. Technol. 27(4), 859–866 (2005)

Torsney-Weir, T., Saad, A., Möller, T., Hege, H.C., Weber, B., Verbavatz, J.M.: Tuner: principled parameter finding for image segmentation algorithms using visual response surface exploration. IEEE Trans. Vis. Comput. Graph. (TVCG) 17(12), 1892–1901 (2011)

Tufte, E.: The Visual Display of Quantitative Information, 2nd edn. Graphics Press, Cheshire (2001)

Tufte, E.R.: The Visual Display of Quantitative Information. Graphics Press, Cheshire (1983)

Tukey, J.W.: Exploratory Data Analysis. Addison-Wesley, Reading (1977)

Urness, T., Interrante, V., Marusic, I., Longmire, E., Ganapathisubramani, B.: Effectively visualizing multi-valued flow data using color and texture. Proc. IEEE Visual. Conf. 03, 115–121 (2003)

Ware, C.: Information Visualization: Perception for Design, 2nd edn. Morgan Kaufmann Publishers, Los Altos (2004)

Wilkinson, L.: Dot plots. Am. Stat. 53(3), 276–281 (1999)

Wilkinson, L.: The Grammar of Graphics. Springer, New York, Inc. (1999)

Wittenbrink, C., Pang, A., Lodha, S.: Verity visualization: Visual mappings. Technical Report, University of California, Santa Cruz (1995)

Wittenbrink, C.M., Pang, A.T., Lodha, S.K.: Glyphs for visualizing uncertainty in vector fields. IEEE Trans. Visual. Comput. Graph. 2(3), 266–279 (1996)

Zehner, B., Watanabe, N., Kolditz, O.: Visualization of gridded scalar data with uncertainty in geosciences. Comput. Geosci. 36(10), 1268–1275 (2010)

Zuk, T., Carpendale, S.: Theoretical analysis of uncertainty visualization. In: SPIE vol. 6060: Visualization and Data Analysis, vol. 2006, pp. 66–79 (2006)

Zuk, T., Carpendale, S., Glanzman, W.D.: Visualizing temporal uncertainty in 3d virtual reconstructions. In: Proceedings of the 6th International Symposium on Virtual Reality, Archaeology and Cultural Heritage (VAST 2005), pp. 99–106 (2005)

Zuk, T., Downton, J., Gray, D., Carpendale, S., Liang, J.: Exploration of uncertainty in bidirectional vector fields. In: Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, vol. 6809 (2008). Published online

Acknowledgments

The authors gratefully acknowledge research support from the National Science Foundation, Department of Energy, the National Institutes of Health, and the King Abdullah University for Science and Technology.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer-Verlag London

About this chapter

Cite this chapter

Bonneau, GP. et al. (2014). Overview and State-of-the-Art of Uncertainty Visualization. In: Hansen, C., Chen, M., Johnson, C., Kaufman, A., Hagen, H. (eds) Scientific Visualization. Mathematics and Visualization. Springer, London. https://doi.org/10.1007/978-1-4471-6497-5_1

Download citation

DOI: https://doi.org/10.1007/978-1-4471-6497-5_1

Published:

Publisher Name: Springer, London

Print ISBN: 978-1-4471-6496-8

Online ISBN: 978-1-4471-6497-5

eBook Packages: Mathematics and StatisticsMathematics and Statistics (R0)