Abstract

Weed detection plays a critical role in smart and precision agriculture systems by enabling targeted weed management and reducing environmental impact. Unmanned aerial vehicles (UAVs) and their associated imagery have emerged as powerful tools for weed detection. Both traditional and deep learning methods have been explored for this task, with deep learning methods favored for their ability to handle complex patterns. However, deep learning-based weed detection methods still face challenges in accuracy and computation cost. To address this, we propose a method based on the YOLOv5 algorithm that targets high accuracy at low computation cost. The approach generates a model from a custom dataset divided into training, validation, and testing sets. Experimental results and performance evaluation validate the proposed method and indicate that the research contributes to advancing weed detection in smart and precision agriculture systems, leveraging deep learning techniques for enhanced accuracy and efficiency.

Introduction

Smart and precision agriculture is a rapidly evolving field that aims to optimize agricultural practices using advanced technologies [1, 2]. One of its key challenges is the accurate detection and management of weeds [3], which compete with crops for resources, reduce yields, and increase the need for herbicides [4]. Weed detection matters because it enables farmers to identify and target weeds specifically, minimizing the use of herbicides and reducing environmental impact [5, 6].

Several technologies have been employed for automated weed detection in agriculture [7]. Among these, unmanned aerial vehicles (UAVs) and their associated imagery have garnered considerable attention from researchers. UAV-based imagery offers a unique perspective and high-resolution imaging capabilities [8, 9], allowing weeds to be detected with greater precision and efficiency than with other technologies. This has led to a surge of research interest in using UAV imagery for weed detection in agriculture.

Deep learning-based algorithms have demonstrated significant success in a range of computer vision tasks, including object detection and recognition [4, 10, 11]. When combined with UAV imagery, these algorithms can provide robust and accurate weed detection capabilities [12, 13]. By leveraging deep learning, the potential for detecting and managing weeds in precision agriculture can be significantly enhanced.

Previous studies and recent deep-learning methods have made significant contributions to weed detection in agriculture [5, 14]. However, there are still limitations and research gaps that need to be addressed. Existing methods often suffer from limited dataset availability, inadequate generalization, and difficulties in real-time performance [15, 16]. As a result, weed detection applications built on them tend to show inadequate accuracy and high computational complexity. Further research is therefore necessary to overcome these limitations and advance the field of weed detection in precision agriculture.

To address the research gap and enhance the efficiency of weed detection, this study proposes a method based on You Only Look Once (YOLO). YOLO is an effective deep-learning framework known for its real-time object detection capabilities [17, 18]. By leveraging the advantages of YOLO, this study aims to develop an efficient weed detection model that can accurately and rapidly identify weeds in UAV imagery. The proposed method involves generating a custom dataset, followed by training, validation, and testing to create a robust and reliable YOLO model for weed detection.

This research makes several contributions to the field. Firstly, it identifies the research gap in weed detection in precision agriculture and highlights the limitations of existing methods. Secondly, it proposes a YOLO-based model as an effective solution to the identified research gap. Finally, it conducts comprehensive experimental and performance evaluations to validate the proposed method’s effectiveness and demonstrate its potential for improving weed detection in agriculture. Through these contributions, this research aims to advance precision agriculture practices and facilitate sustainable farming methods.

Review of previous studies

This section presents the related works and discusses previous studies in deep learning-based approaches for weed detection.

The paper [19] suggested a method for weed detection in line crops utilizing deep learning with unsupervised data labeling in UAV images. The method involves two main steps: First, unsupervised data labeling is performed to assign labels to the images based on pixel intensity variations, which eliminates the need for manual labeling. Then, a deep learning model is trained using the labeled data to classify the images into weed or non-weed categories. The key features of this approach include its ability to automatically label large datasets without human intervention and the utilization of deep learning for accurate weed detection. Nevertheless, this study’s limitation lies in the assumption that pixel intensity variations are sufficient for unsupervised labeling, which may not hold in all cases.

In [20], a deep learning approach is presented for weed detection in lettuce crops utilizing multispectral images. The method involves three main steps: preprocessing the multispectral images, training a deep convolutional neural network (CNN) model, and classifying the images into weed or non-weed categories. The key features of this approach include the utilization of multispectral images, which capture a broader range of information compared to traditional RGB images, and the use of a deep CNN model for accurate weed detection. Nevertheless, the limitation of this study lies in the requirement for high-quality and well-calibrated multispectral images, which may not always be available in practical scenarios. Additionally, the method’s performance may vary with different weed species and environmental conditions.

In [21], a deep learning-based system was developed for identifying weeds utilizing unmanned aerial system (UAS) imagery. The method utilizes a CNN to detect and classify weeds in the images. Key features of the approach include the use of UAS imagery, which provides high-resolution and comprehensive coverage, and the application of deep learning for accurate weed identification. The findings demonstrate that the proposed system achieves promising results in detecting and classifying weeds, outperforming traditional methods. Nevertheless, the study’s limitation lies in the reliance on high-quality and well-annotated training data, which can be time-consuming and resource-intensive to obtain. Additionally, the performance of the system may be affected by varying lighting conditions and the presence of overlapping or occluded weed instances.

The authors in [22] presented a CNN-based automated weed detection system utilizing UAV imagery. The method involves preprocessing the UAV images, training a CNN model, and utilizing the model to detect and classify weeds. Key features of this approach include the utilization of high-resolution UAV imagery, which enables detailed analysis of the crop fields, and the use of a CNN model for accurate weed detection. The findings demonstrate that the proposed system achieves high accuracy in identifying and localizing weeds in agricultural fields, outperforming traditional methods. Nevertheless, this study’s limitation lies in the dependency on well-annotated training data, which can be time-consuming and labor-intensive to obtain. Additionally, the system’s performance may be influenced by variations in lighting conditions, weather conditions, and the presence of similar-looking objects in the field.

In [23], a UAV-based weed detection method for Chinese cabbage was proposed utilizing deep learning techniques. The method involves capturing aerial images of Chinese cabbage fields with UAVs, preprocessing the images, training a deep learning model, and classifying the images into weed or non-weed categories. Key features of this approach include the use of UAV imagery for comprehensive field coverage and the application of deep learning algorithms for accurate weed detection. The outcomes show that the proposed method achieves high precision in identifying and localizing weeds in Chinese cabbage fields, outperforming traditional methods. Nevertheless, this study’s limitation lies in the reliance on well-annotated training data, which can be time-consuming and labor-intensive to obtain. Additionally, the performance of the system may be affected by variations in lighting conditions, occlusions, and the presence of similar-looking objects in the field.

This paper [24] evaluated the performance of YOLOv7, a deep object detection algorithm, on a real case dataset of crop weeds captured from UAV images. The method involves training the YOLOv7 model on the dataset and evaluating its performance in accurately detecting and localizing crop weeds. Key features of this study include the use of a state-of-the-art deep learning algorithm, which allows for real-time object detection, and the utilization of UAV imagery for comprehensive coverage of the agricultural fields. The findings demonstrate that YOLOv7 achieves high precision and recall in detecting crop weeds, showcasing its effectiveness in automated weed detection. Nevertheless, this study’s limitation lies in the dependence on a specific deep learning model, and its performance may vary with different models or versions. Additionally, the study highlights the challenges of handling class imbalance and overlapping instances of weeds in the dataset, which can impact the model’s performance.

Methodology

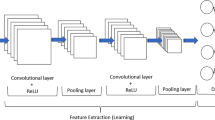

In the present study, a deep learning method based on the YOLOv5 algorithm is proposed for weed detection in UAV images. YOLOv5 is a deep-learning algorithm for object detection that builds upon the success of the YOLO family of models [25, 26]. It uses a single neural network architecture to detect and classify objects in images or videos with exceptional speed and accuracy. YOLOv5 improves upon previous versions by introducing a streamlined architecture, novel training techniques, and advanced data augmentation methods.

To generate an effective YOLOv5 model for weed detection, we can leverage its key features. Firstly, YOLOv5 provides high-speed real-time inference, making it suitable for processing large volumes of UAV images quickly. This allows for rapid weed detection and decision-making in the field. Secondly, YOLOv5 offers a balance between accuracy and efficiency, enabling accurate localization and classification of weed instances while maintaining computational efficiency. Additionally, YOLOv5’s ability to handle multi-class detection allows for the identification of various weed species in agricultural settings. By training the model on a diverse dataset with annotated weed instances, YOLOv5 can learn to recognize the visual characteristics of weeds, including shape, color, and texture. The algorithm’s robustness to varying lighting conditions and occlusions further enhances its effectiveness in weed detection.

Dataset

We built a custom dataset by collecting and labeling images of weeds and non-weeds from internet resources [27]. The dataset contains 2500 images, which we split into three subsets: 70% for training, 20% for validation, and 10% for testing. Figure 1 shows some samples from the dataset.
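
The 70/20/10 split described above can be sketched as follows. This is a minimal illustration rather than the procedure actually used in the study: the shuffling, the fixed seed, the function name `split_dataset`, and the file names are all assumptions introduced here.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.2, seed=0):
    """Shuffle items and split them into train/val/test lists.

    The test subset receives whatever remains after train and val.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # reproducible shuffle
    n_train = int(round(len(shuffled) * train_frac))
    n_val = int(round(len(shuffled) * val_frac))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


# Hypothetical file names standing in for the 2500 collected images.
image_names = [f"img_{i:04d}.jpg" for i in range(2500)]
train, val, test = split_dataset(image_names)
print(len(train), len(val), len(test))  # 1750 500 250
```

With 2500 images, this yields 1750 training, 500 validation, and 250 testing images.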

To extend the dataset, we also apply data augmentation, which is crucial for improving model generalization. The augmentation techniques used include random rotations, flips, scaling, and translation. We also used color jittering, contrast adjustments, and Gaussian noise to make the model more robust to varying lighting conditions and image artifacts. To address class imbalance, we employ techniques like oversampling the minority class (weeds) or applying focal loss during training to assign higher weights to the minority class samples. Additionally, augmenting bounding box annotations along with the image data is essential to ensure accurate localization of weeds during detection. Techniques such as random cropping or resizing while preserving aspect ratio help in this regard.
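
To illustrate why bounding box annotations must be transformed together with the image, the sketch below applies a horizontal flip to both. It assumes the common YOLO label layout (class id, then box center, width, and height normalized to the range 0 to 1). The helper names and the toy image are hypothetical, and the study's actual augmentation pipeline is not specified at this level of detail.

```python
def hflip_image(pixels):
    """Mirror an image stored as a list of rows (left becomes right)."""
    return [list(reversed(row)) for row in pixels]


def hflip_yolo_boxes(boxes):
    """Mirror YOLO-format boxes horizontally.

    Each box is (class_id, x_center, y_center, width, height) with all
    coordinates normalized to [0, 1]. Only x_center changes under a flip.
    """
    return [(c, 1.0 - xc, yc, w, h) for (c, xc, yc, w, h) in boxes]


# Toy 2x4 image with a bright pixel on the left side (assumed data).
image = [[9, 0, 0, 0],
         [0, 0, 0, 0]]
labels = [(0, 0.25, 0.5, 0.2, 0.4)]  # one weed box left of center

flipped_image = hflip_image(image)
flipped_labels = hflip_yolo_boxes(labels)
print(flipped_image[0])   # [0, 0, 0, 9]
print(flipped_labels[0])  # (0, 0.75, 0.5, 0.2, 0.4)
```

If the labels were left unchanged after the flip, the box would still point at the left side of the image while the weed now sits on the right.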

Model training

The training set is used to train the YOLO model with the YOLOv5 training module. In this module, we specify the training dataset, the batch size, the number of epochs, and other training parameters. The hyperparameters used for training the weed detection model are a learning rate of 0.001, a batch size of 16, 50 epochs, and anchor box dimensions tailored to the expected sizes and aspect ratios of weeds in the dataset. YOLOv5 trains the model by optimizing the network’s weights based on the provided training images and ground truth annotations.
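
As a rough sketch, the stated settings could be passed to the `train.py` script of the Ultralytics YOLOv5 repository as shown below. The batch size and epoch count come from the text. The image size of 640, the `yolov5s.pt` starting weights, and the two YAML file names are assumptions made here for illustration. The learning rate is assumed to correspond to the `lr0` entry of the hyperparameter YAML file, which would be a copy of one of the repository's default files with `lr0` set to 0.001.

```python
def build_train_command(data_yaml, hyp_yaml, weights="yolov5s.pt",
                        img_size=640, batch_size=16, epochs=50):
    """Build an argument list for YOLOv5's train.py.

    data_yaml: dataset description file (hypothetical name).
    hyp_yaml: hyperparameter file in which lr0 is assumed to be 0.001.
    weights and img_size are illustrative defaults, not from the paper.
    """
    return [
        "python", "train.py",
        "--img", str(img_size),
        "--batch-size", str(batch_size),
        "--epochs", str(epochs),
        "--data", data_yaml,
        "--hyp", hyp_yaml,
        "--weights", weights,
    ]


cmd = build_train_command("weeds.yaml", "hyp_weeds.yaml")
print(" ".join(cmd))
```

The list can be handed to `subprocess.run` from inside a checkout of the YOLOv5 repository, or the printed line can be run in a shell.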

As illustrated in Fig. 2, the training process based on the YOLOv5 model involves iteratively updating the model’s parameters to improve its performance in weed detection. During training, the model is fed labeled training images and their corresponding ground truth annotations, which consist of bounding box coordinates and class labels for the weed instances. The goal of the training process is to reduce the discrepancy between the ground truth annotations and the bounding boxes predicted by the model.

One way to monitor the progress of training is by analyzing the train/box_loss curve. In this curve, the Y-axis represents the box loss, which is a measure of the discrepancy between the ground truth boxes and the predicted bounding boxes. The box loss captures how accurately the model localizes the weed instances in the images. The X-axis represents the number of training epochs. During the training process, the box loss should decrease over time. A decreasing curve indicates that the model is gradually improving its accuracy in predicting bounding boxes and localizing weeds. Ideally, the curve should converge to a low and stable value, indicating that the model has learned to detect the weed instances in the images accurately.

Model validation

Model validation in the context of YOLOv5 involves assessing the trained model’s performance on a separate validation dataset. This process helps evaluate the model’s ability to detect and localize weeds precisely in unseen data. The validation dataset consists of labeled images with ground truth annotations, similar to the training dataset. The validation set is used to assess the model’s performance during the training process, which helps in monitoring the model’s progress and detecting overfitting. The YOLOv5 validation module is employed to evaluate the performance of the model on the validation dataset. This module compares the predicted bounding boxes with the ground truth annotations and computes metrics such as precision, recall, and mean average precision (mAP).

As shown in Fig. 3, during validation, the model’s predictions on the validation dataset are compared against the ground truth annotations to compute metrics such as box loss. The val/box_loss curve is used to monitor the performance of the model during validation. The Y-axis represents the box loss, which measures the discrepancy between the ground truth boxes and the predicted bounding boxes, indicating the accuracy of localization. The X-axis represents the number of epochs. The val/box_loss curve should exhibit a decreasing trend as the model learns to improve its accuracy in predicting bounding boxes and localizing weeds. A lower box loss indicates better localization performance.
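
One simple way to use a validation loss curve for detecting overfitting is to look for the epoch after which the loss stops improving. The sketch below is an illustration under assumptions: the loss values are invented (they are not read from Fig. 3), and the patience rule is a generic early-stopping heuristic rather than something the study reports using.

```python
def best_epoch(val_losses, patience=3):
    """Return the index of the epoch with the lowest validation loss.

    Scanning stops once `patience` consecutive epochs fail to improve
    on the best loss seen so far, which suggests overfitting or a plateau.
    """
    best_idx = 0
    best_loss = float("inf")
    epochs_since_best = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_idx = epoch
            epochs_since_best = 0
        else:
            epochs_since_best += 1
            if epochs_since_best >= patience:
                break
    return best_idx


# Invented per-epoch validation box losses for illustration only.
losses = [0.090, 0.070, 0.060, 0.055, 0.056, 0.058, 0.060]
print(best_epoch(losses))  # 3
```

A validation loss that rises while the training loss keeps falling is the usual sign that the model has started to memorize the training set.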

Results and discussion

This section presents the results of the generated model and discusses the proposed method for weed detection in UAV images. Firstly, experimental results based on the proposed method are shown. Figure 4 shows samples of these results. Then the model is assessed using popular performance metrics, namely precision, recall, and mAP. The details are discussed in the following sections.

Experimental results

Experimental results in weed detection are demonstrated visually by comparing the model’s predictions with the ground truth annotations. Through these visualizations, we observe accurate localization and classification of weed instances by the model. The predictions should align closely with the actual weed locations and exhibit minimal false positives or false negatives. Some image samples of our experimental results are shown in Fig. 4.

Precision metric

Precision is a vital metric for assessing the performance of the YOLOv5 model in weed detection [28]. It measures the ability of the model to identify weeds while avoiding false positives. A high precision score indicates a low rate of false alarms, ensuring reliable results. Precision is computed by dividing the true positives (correctly identified weeds) by the sum of true positives and false positives (objects incorrectly labeled as weeds). This metric provides valuable insights for optimizing the YOLOv5 model’s performance in distinguishing weeds from other objects or background elements. Figure 5 shows the precision curve for the generated model.

As shown in Fig. 5, the precision curve for the YOLOv5 weed detection model depicts the trend of precision rates over the course of training epochs. The X-axis denotes the number of epochs, which indicates the progress of the model during training. The Y-axis represents the precision rate, which is the proportion of correctly predicted weed instances out of all instances predicted as weeds. By plotting the precision rates against the number of epochs, the curve provides a visual representation of how the precision of the YOLOv5 model evolves and improves over time.

Recall metric

Recall is another important measure for evaluating the YOLOv5 model’s performance in weed detection [29, 30]. Also known as sensitivity or true positive rate, it measures the model’s ability to correctly identify all actual weed instances in the dataset. It quantifies the model’s capability to avoid false negatives, so that as few weeds as possible are missed during detection. A high recall score shows that the model has a low rate of missed detections, providing comprehensive coverage of the weed instances present. Recall is computed by dividing the true positives (correctly predicted weeds) by the sum of true positives and false negatives (weed instances missed by the model). It serves as a crucial indicator of the model’s ability to capture the majority of the weeds, offering valuable insights for optimizing its performance in weed detection tasks. Figure 6 shows the recall curve for the generated model.

As demonstrated in Fig. 6, the recall metric curve for the YOLOv5 weed detection model represents the trend of recall rates as the number of training epochs progresses. The X-axis indicates the number of epochs, representing the model’s training progress. The Y-axis represents the recall rate, which measures the proportion of correctly detected weed instances out of all actual weed instances. By plotting the recall rates against the number of epochs, the curve illustrates how the YOLOv5 model’s recall performance evolves and improves over time.
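
The precision and recall definitions given in this section translate directly into code. The counts in the example below are invented for illustration and are not results from this study.

```python
def precision(true_positives, false_positives):
    """Share of predicted weeds that are actually weeds."""
    predicted = true_positives + false_positives
    return true_positives / predicted if predicted else 0.0


def recall(true_positives, false_negatives):
    """Share of actual weeds that the model found."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


# Invented counts: 80 weeds found, 20 false alarms, 10 weeds missed.
tp, fp, fn = 80, 20, 10
print(precision(tp, fp))         # 0.8
print(round(recall(tp, fn), 3))  # 0.889
```

The two metrics pull in different directions: lowering the confidence threshold usually finds more weeds (higher recall) at the cost of more false alarms (lower precision).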

Mean average precision (mAP) metric

The mAP metric curve for the YOLOv5 weed detection model shows the trend of mAP scores over the course of training epochs [29]. The X-axis represents the number of epochs, indicating the model’s training progress. The Y-axis represents the mAP metric, which combines recall and precision to provide an overall assessment of the model’s performance in detecting weeds. By plotting the mAP scores against the number of epochs, the curve visually illustrates how the YOLOv5 model’s detection accuracy evolves and improves over time. The curve can also be analyzed to identify the number of epochs needed to attain the desired level of mAP for accurate and effective weed detection.

As shown in Fig. 7, "mAP_50" refers to the mAP at an Intersection over Union (IoU) threshold of 0.50. It measures the average precision of the model when considering detections that have at least a 50% overlap with the ground truth bounding boxes of weeds. This metric evaluates the model’s accuracy in detecting weeds with a moderate level of spatial overlap.

On the other hand, "mAP-0.5:0.95" represents the mAP averaged over a range of IoU thresholds, from 0.50 to 0.95 with a step size of 0.05. IoU stands for Intersection over Union, a measure of the overlap between two bounding boxes that is widely used in object detection tasks. It is the ratio of the area of intersection between the ground truth bounding box and the predicted bounding box to the area of their union:

$$\mathrm{IoU} = \frac{\text{Area of intersection}}{\text{Area of union}}$$
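
The intersection-to-union ratio can be sketched in code for axis-aligned boxes. The corner format `(x1, y1, x2, y2)` and the function name `iou` are choices made here for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero when the boxes do not touch.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


ground_truth = (0, 0, 2, 2)
prediction = (1, 1, 3, 3)
print(round(iou(ground_truth, prediction), 3))  # 0.143
```

Here the boxes share a 1 by 1 square, each box has area 4, and the union is 4 + 4 - 1 = 7, so the IoU is 1/7. This detection would not count as a match at the 0.50 threshold.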

Both mAP_50 and mAP-0.5:0.95 are widely utilized metrics to assess object detection models like YOLOv5. They consider recall and precision at various IoU thresholds to capture the ability of the model to detect weeds precisely while considering different levels of spatial overlap. Higher mAP scores indicate better overall performance, with mAP-0.5:0.95 providing a more detailed evaluation across a range of IoU thresholds.
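
The sketch below shows one common way to compute average precision (AP) for a single class from detections ranked by confidence, and how AP values at several IoU thresholds would be averaged. It uses all-point interpolation of the precision-recall curve, which may differ from the interpolation used by the YOLOv5 validation module. The detection flags are invented for illustration.

```python
def average_precision(is_true_positive, num_ground_truth):
    """AP for one class at one IoU threshold.

    is_true_positive: booleans for detections sorted by descending
    confidence; True means the detection matched a ground truth box.
    """
    tp = fp = 0
    recalls, precisions = [], []
    for hit in is_true_positive:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision by the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the area under the resulting step curve.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_over_thresholds(ap_by_threshold):
    """Average AP values keyed by IoU threshold."""
    return sum(ap_by_threshold.values()) / len(ap_by_threshold)


# The ten thresholds from 0.50 to 0.95 in steps of 0.05.
iou_thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
print(iou_thresholds)

# Invented ranking: hit, miss, hit, with two ground truth weeds.
print(round(average_precision([True, False, True], 2), 3))  # 0.833
```

For a multi-class dataset, AP is computed per class and then averaged to obtain mAP. With a single weed class, AP and mAP coincide.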

Discussion

This section discusses our proposed method, based on the generated YOLOv5 model, and the results obtained. The discussion investigates accuracy, computation cost, and model complexity. These investigations are intended to present our achievements in relation to the research challenge addressed in this study.

Accuracy rate

Our YOLOv5 model’s accuracy is validated by its performance on precision, recall, and mAP metrics. A high precision score indicates that the model makes fewer false positive detections, meaning it correctly identifies weeds without many incorrect identifications. A high recall score demonstrates that the model effectively detects a large portion of the actual weed instances in the dataset, minimizing false negatives. Finally, a high mAP score showcases the overall effectiveness of the model in both precision and recall across various detection thresholds. These metrics collectively affirm that our model excels in accurately detecting weeds in diverse conditions, a crucial aspect for precision agriculture.

Low computation cost

Despite its impressive accuracy, our generated YOLOv5 model maintains a low computation cost. YOLOv5’s single-pass architecture and efficient backbone network contribute to its computational efficiency. It processes images in real-time or near-real-time, reducing the computational overhead compared to more complex models. Additionally, YOLOv5’s use of anchor boxes and optimized feature extraction ensures that it achieves accurate detections without requiring extensive computational resources. This makes it suitable for deployment on resource-constrained devices, including drones or edge computing platforms, where computational efficiency is critical.

Model complexity

The YOLOv5 model’s architecture strikes a balance between complexity and accuracy. It benefits from a streamlined design that avoids unnecessary layers and computations. The use of a CSPDarknet53 backbone network is a testament to its efficient architecture, as it extracts relevant features for object detection without excessive complexity. Moreover, YOLOv5 allows for customization and fine-tuning, enabling us to tailor the model to specific weed detection requirements without introducing unnecessary complexity. This combination of an efficient architecture and flexibility in model design underscores its low complexity while maintaining high accuracy.

In conclusion, our generated YOLOv5 model showcases its accuracy in weed detection through precision, recall, and mAP metrics, while simultaneously demonstrating low computation cost and complexity. These qualities make it a robust choice for automated weed detection applications, ensuring accurate results without straining computational resources, and facilitating its deployment in various real-world scenarios.

Methods comparison

To demonstrate the effectiveness of the proposed method, we conducted further experiments based on existing deep learning frameworks. For a fair comparison, the same dataset is used for our proposed method and for the Faster R-CNN, VGGNet-16, and ResNet-50 methods. Table 1 provides the experimental results for this comparison.

Table 1 presents a comparative analysis of four distinct methods for automated weed detection: Faster R-CNN, ResNet-50, VGGNet-16, and the proposed YOLOv5 approach. The evaluation metrics, including precision, recall, and mean Average Precision (mAP), serve as critical indicators of the model’s detection performance. It’s evident that the proposed YOLOv5 method outshines the other three approaches, achieving higher precision and mAP scores. This result showcases YOLOv5’s effectiveness in accurately detecting weeds in images.

The success of the proposed YOLOv5 method can be attributed to several factors. YOLOv5’s single-shot detection architecture enables it to efficiently process images while maintaining high precision and recall rates. Its optimized backbone network, CSPDarknet53, plays a crucial role in feature extraction, aiding in precise weed localization and classification. Furthermore, fine-tuning on the custom dataset tailored specifically for weed detection allows YOLOv5 to recognize and adapt to the unique characteristics of weeds present in the images. Therefore, the results affirm that the proposed YOLOv5 method excels in automated weed detection when compared to Faster R-CNN, ResNet-50, and VGGNet-16. Its balanced performance in terms of precision and recall, coupled with its optimized architecture and training on a custom dataset, positions YOLOv5 as a robust and efficient choice for real-time weed detection applications.

Conclusion

In the present study, an automated weed detection approach was proposed using deep learning and UAV imagery to improve the accuracy of weed detection in smart agriculture systems. The performance of the proposed model was verified using a custom dataset, and the quality of the generated output was evaluated using precision, recall, and mAP metrics. By leveraging deep learning techniques and custom dataset training, our approach enhances the efficiency and accuracy of weed detection in smart agriculture systems. For future work, two potential directions can be considered. Firstly, the proposed method can be further optimized by exploring different variants of the YOLO algorithm or other advanced deep learning architectures to improve accuracy and reduce computation costs. Secondly, investigating the integration of multi-sensor data, such as combining UAV imagery with other sensing modalities like hyperspectral or LiDAR data, could enhance weed detection capabilities by providing complementary information and improving detection accuracy in complex agricultural landscapes.

References

T.A. Shaikh, T. Rasool, F.R. Lone, Towards leveraging the role of machine learning and artificial intelligence in precision agriculture and smart farming. Comput. Electron. Agric. 198, 107119 (2022)

A. Sharma, A. Jain, P. Gupta, V. Chowdary, Machine learning applications for precision agriculture: a comprehensive review. IEEE Access 9, 4843–4873 (2020)

P. Lottes, J. Behley, A. Milioto, C. Stachniss, Fully convolutional networks with sequential information for robust crop and weed detection in precision farming. IEEE Robot. Automat. Lett. 3(4), 2870–2877 (2018)

S.J. Rani, P.S. Kumar, R. Priyadharsini, S.J. Srividya, S. Harshana, Automated weed detection system in smart farming for developing sustainable agriculture. Int. J. Environ. Sci. Technol. 19(9), 9083–9094 (2022)

A.M. Hasan, F. Sohel, D. Diepeveen, H. Laga, M.G. Jones, A survey of deep learning techniques for weed detection from images. Comput. Electron. Agric. 184, 106067 (2021)

A. Wang, W. Zhang, X. Wei, A review on weed detection using ground-based machine vision and image processing techniques. Comput. Electron. Agric. 158, 226–240 (2019)

S. Shanmugam, E. Assunção, R. Mesquita, A. Veiros, P.D. Gaspar, Automated weed detection systems: a review. KnE Eng., pp. 271–284 (2020)

D.C. Tsouros, S. Bibi, P.G. Sarigiannidis, A review on UAV-based applications for precision agriculture. Information 10(11), 349 (2019)

A.N. Veeranampalayam Sivakumar, J. Li, S. Scott, E. Psota, A.J. Jhala, J.D. Luck, Y. Shi, Comparison of object detection and patch-based classification deep learning models on mid- to late-season weed detection in UAV imagery. Remote Sensing 12(13), 2136 (2020)

N. Rai, Y. Zhang, B.G. Ram, L. Schumacher, R.K. Yellavajjala, S. Bajwa, X. Sun, Applications of deep learning in precision weed management: a review. Comput. Electron. Agric. 206, 107698 (2023)

A.H. Al-Badri, N.A. Ismail, K. Al-Dulaimi, G.A. Salman, A. Khan, A. Al-Sabaawi, M.S.H. Salam, Classification of weed using machine learning techniques: a review—challenges, current and future potential techniques. J. Plant Dis. Prot. 129(4), 745–768 (2022)

M.H. Saleem, J. Potgieter, K.M. Arif, Automation in agriculture by machine and deep learning techniques: a review of recent developments. Precision Agric. 22, 2053–2091 (2021)

M.D. Bah, E. Dericquebourg, A. Hafiane, R. Canals, Deep learning based classification system for identifying weeds using high-resolution UAV imagery. In: Intelligent Computing: Proceedings of the 2018 Computing Conference, Volume 2, pp 176–187 (2019).

X. Jin, T. Liu, Y. Chen, J. Yu, Deep learning-based weed detection in turf: a review. Agronomy 12(12), 3051 (2022)

Y. Zhang, M. Wang, D. Zhao, C. Liu, Z. Liu, Early weed identification based on deep learning: a review. Smart Agricult. Technol., 100123 (2022)

B. Tugrul, E. Elfatimi, R. Eryigit, Convolutional neural networks in detection of plant leaf diseases: a review. Agriculture 12(8), 1192 (2022)

Q. Wang, M. Cheng, S. Huang, Z. Cai, J. Zhang, H. Yuan, A deep learning approach incorporating YOLO v5 and attention mechanisms for field real-time detection of the invasive weed Solanum rostratum Dunal seedlings. Comput. Electron. Agric. 199, 107194 (2022)

J. Chen, H. Wang, H. Zhang, T. Luo, D. Wei, T. Long, Z. Wang, Weed detection in sesame fields using a YOLO model with an enhanced attention mechanism and feature fusion. Comput. Electron. Agric. 202, 107412 (2022)

M.D. Bah, A. Hafiane, R. Canals, Deep learning with unsupervised data labeling for weed detection in line crops in UAV images. Remote Sensing 10(11), 1690 (2018)

K. Osorio, A. Puerto, C. Pedraza, D. Jamaica, L. Rodríguez, A deep learning approach for weed detection in lettuce crops using multispectral images. AgriEngineering 2(3), 471–488 (2020)

A. Etienne, A. Ahmad, V. Aggarwal, D. Saraswat, Deep learning-based object detection system for identifying weeds using UAS imagery. Remote Sensing 13(24), 5182 (2021)

M.A. Haq, CNN based automated weed detection system using UAV imagery. Comput. Syst. Sci. Eng. 42(2), 837–849 (2022)

P. Ong, K.S. Teo, C.K. Sia, UAV-based weed detection in Chinese cabbage using deep learning. Smart Agricult. Technol., 100181 (2023)

I. Gallo, A.U. Rehman, R.H. Dehkordi, N. Landro, R. La Grassa, M. Boschetti, Deep object detection of crop weeds: performance of YOLOv7 on a real case dataset from UAV images. Remote Sensing 15(2), 539 (2023)

W. Wu, H. Liu, L. Li, Y. Long, X. Wang, Z. Wang, J. Li, Y. Chang, Application of local fully convolutional neural network combined with YOLO v5 algorithm in small target detection of remote sensing image. PLoS ONE 16(10), e0259283 (2021)

G. Jocher, A. Chaurasia, A. Stoken, J. Borovec, Y. Kwon, K. Michael, J. Fang, Z. Yifu, C. Wong, D. Montes, ultralytics/yolov5: v7.0 - YOLOv5 SOTA realtime instance segmentation. Zenodo (2022)

Roboflow, Grass Weeds Dataset (2023). https://universe.roboflow.com/roboflow-100/grass-weeds

N. Al-Qubaydhi, A. Alenezi, T. Alanazi, A. Senyor, N. Alanezi, B. Alotaibi, M. Alotaibi, A. Razaque, A.A. Abdelhamid, A. Alotaibi, Detection of unauthorized unmanned aerial vehicles using YOLOv5 and transfer learning. Electronics 11(17), 2669 (2022)

B. Yan, P. Fan, X. Lei, Z. Liu, F. Yang, A real-time apple targets detection method for picking robot based on improved YOLOv5. Remote Sensing 13(9), 1619 (2021)

O.G. Ajayi, J. Ashi, B. Guda, Performance evaluation of YOLO v5 model for automatic crop and weed classification on UAV images. Smart Agricult. Technol. 5, 100231 (2023)


Cite this article

Liu, B. An automated weed detection approach using deep learning and UAV imagery in smart agriculture system. J Opt 53, 2183–2191 (2024). https://doi.org/10.1007/s12596-023-01445-x
