Abstract

When the causal effects of multiple exposures on an outcome are of interest, the interaction between the exposures is often of interest as well. In such settings, causal analysis requires modeling the joint distribution of the exposures given pertinent confounding variables. In the most general setting, this may require modeling the effect of confounding variables on the association between exposures via a second-order regression model. We consider joint modeling of exposures for causal analysis via regression adjustment and inverse weighting. In both frameworks, we also investigate the asymptotic bias of estimators when the dependence model for the generalized propensity score incorrectly assumes conditional independence of the exposures or is based on a naive dependence model that does not accommodate the effect of confounders on the conditional association of the exposures. We also consider the problem of a semi-continuous bivariate exposure and propose a two-stage estimation technique to study the effects of prenatal alcohol exposure, specifically the effects of drinking frequency and intensity, on childhood cognition.



1 Introduction

1.1 Overview

Causal analysis is concerned with mitigating the effect of confounding variables on inferences regarding the exposure–disease relationship. With binary exposures, propensity scores capturing the association between confounders and the exposure of interest can be used for matching, stratification, or regression adjustment [1]. Inverse probability of exposure weighting offers another framework for the estimation of causal effects. For continuous exposure variables, generalized propensity scores have been developed [2, 3].

The complex etiology of many chronic conditions motivates joint consideration of multiple exposure variables and their possible interacting effects [4]. Examples include the study of smoking and alcohol consumption on pancreatic cancer [5], the interaction of smoking and drinking with dietary antioxidant intake in oral, pharyngeal, or laryngeal squamous cell carcinoma [6], and environmental studies of several air pollutants and their effects on lung cancer risk [7]. When the joint effects of multiple exposures are under consideration, multivariate propensity scores can play a useful role. Vegetabile et al. [8] describe a joint model for multivariate binary exposures through the specification of a model for a latent multidimensional Gaussian variable. Williams and Crespi [9] develop a generalized propensity score and use it to construct inverse multivariate density-weighted estimating functions. Chen and Zhou [10] propose entropy balancing for multiple continuous treatments as a framework for causal analysis; this approach is best suited to settings in which the dimensions of the exposure and confounding variables are modest.

In settings with a single toxin, interest may lie in the causal effect of different exposure patterns defined in terms of the frequency of exposure, the dose on exposure occasions, and their interaction. Di Credico et al. [11], for example, study the effect of the duration and intensity of smoking on the risk of head and neck cancers. This is the setting of our motivating study assessing the effect of maternal drinking patterns on childhood cognition. Although previous studies show that high levels of prenatal alcohol exposure (PAE) can cause serious developmental problems in children [12,13,14,15], the impact of low levels of exposure is not well understood. Still less is known about the impact of “dose rate”, which refers to the possibility that infants exposed to the same total volume of alcohol over the course of a pregnancy may have very different cognitive deficits depending on whether the mother drinks small amounts relatively frequently or large amounts less frequently (chronic versus binge drinking). This motivates the development and study of methods to better understand the possibly complex causal effects of PAE on cognitive outcomes.

When considering confounding in the causal analysis of multiple exposures, the effect of confounders is usually modeled on each marginal exposure variable, with dependencies between the exposures accommodated through joint models; the conditional associations are typically assumed to be functionally independent of the confounders. We consider a more general framework in which confounders may also affect second moments (i.e., the relation between different features of exposure to a single toxin). Specifically, we develop methods to study the joint effect of the frequency of drinking and the dose of alcohol per drinking occasion in expectant mothers on the cognitive development of their children [16], and we then apply these methods to the motivating study.

The remainder of the paper is organized as follows. In Sect. 2, we define a response model involving the main effects of two binary exposures and their interaction, along with a generalized propensity score. We formulate a general model for the exposures in which confounders affect the first- and second-order moments, so that they explain how the two aspects of exposure vary together. We then describe how this model can be used for regression adjustment and inverse weighting. In Sect. 3, we investigate the importance of modeling the effect of confounders on the association between exposures by evaluating the bias of causal effect estimators from regression adjustment and inverse probability of exposure weighting when naive propensity scores account only for the marginal effects of confounders. In Sect. 4, we consider continuous exposures, including semi-continuous exposures, and carry out an analogous investigation for regression adjustment and inverse density weighting. A two-stage approach for estimating the causal effects of semi-continuous exposures is proposed in Sect. 5. An application to the Detroit Cohort Study [12] is given in Sect. 6, and concluding remarks and topics for future research are given in Sect. 7. We focus on the case of a bivariate exposure, but, as suggested by the notation in Sect. 2.1, the methods generalize naturally to higher-dimensional exposures.

2 Causal Analysis with Multiple Exposures

2.1 Specification of the Full Model

Let Y denote a continuous response variable of interest, \({\varvec{A}}=(A_{1}, \dots , A_{p})^{\prime}\) a \(p \times 1\) vector of exposure variables with \(p \ge 2\), and \({\varvec{B}}=(A_{1}A_{2}, \ldots , A_{p-1}A_{p})^{\prime}\) a \(q\times 1\) vector of all pairwise products, where \(q=p(p-1)/2\). Higher-order interaction terms can be considered, but here we focus on two-way interactions and so let \({\varvec{X}} = ({\varvec{A}}', {\varvec{B}}')'\) contain the exposure data of interest. Furthermore, let \({\varvec{Z}}=(Z_1, \ldots , Z_r)^{\prime}\) be an \(r \times 1\) vector of confounding variables obscuring the effects of \({{\varvec{X}}}\) on the response Y. The data-generating model for the response is taken to have the form

\(Y=\alpha _0+\sum _{j=1}^{p}\alpha _jA_j+{\varvec{B}}'\varvec{\beta }+{\varvec{Z}}'\varvec{\zeta }+\epsilon ,\)   (2.1)

where \(\varvec{\theta } = ( \varvec{\alpha }', \varvec{\beta }')'\) with \(\varvec{\alpha }=(\alpha _0, \alpha _1,\dots ,\alpha _p)'\) and \(\varvec{\beta }=(\beta _1,\ldots ,\beta _q)'\), \(\varvec{\zeta }=(\zeta _1,\ldots ,\zeta _r)'\), and \(\epsilon\) is a mean-zero error term with \({\varvec{X}}, {\varvec{Z}} \perp \epsilon\). Let \({\varvec{A}}_{(-j)} = (A_1,\ldots ,A_{j-1}, A_{j+1},\ldots , A_p)'\) be the vector of all exposure variables excluding \(A_j\), let \({\varvec{B}}_{(-j)}\) be the vector of all products of \(A_j\) with the \(p-1\) other exposures, and let \(\varvec{\beta }_{(-j)}\) be the corresponding vector of coefficients. The causal effect of a one-unit change in \(A_j\) from \(a_{j\circ }\) to \(a_{j\circ } +1\) given \({\varvec{A}}_{(-j)}= {\varvec{a}}_{(-j)}\) and \({\varvec{Z}}\) is \(\alpha _j + {\varvec{a}}'_{(-j)} \varvec{\beta }_{(-j)}\), which does not depend on \(a_{j\circ }\). This model therefore enables one to study the causal interacting effects of the exposures.
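As a small check on the effect formula, the sketch below evaluates \(\alpha _j + {\varvec{a}}'_{(-j)} \varvec{\beta }_{(-j)}\) directly and compares it with the change in the exposure part of the response model when \(A_j\) is raised by one unit; the confounder term \({\varvec{Z}}'\varvec{\zeta }\) cancels in that difference. It assumes the pairwise products in \({\varvec{B}}\) are ordered \((1,2), (1,3), \ldots , (p-1,p)\); the function names and coefficient values are hypothetical.

```python
from itertools import combinations


def _pairs(p):
    # Order of B: (A1A2, A1A3, ..., A_{p-1}A_p), stored as 0-based index pairs
    return list(combinations(range(p), 2))


def exposure_part(alpha, beta, a):
    """alpha_0 + sum_j alpha_j a_j + sum_{j<k} beta_{jk} a_j a_k."""
    value = alpha[0] + sum(alpha[j + 1] * a[j] for j in range(len(a)))
    return value + sum(b * a[j] * a[k] for b, (j, k) in zip(beta, _pairs(len(a))))


def unit_effect(alpha, beta, a, j):
    """alpha_j + a_(-j)' beta_(-j): effect of raising exposure j by one unit."""
    effect = alpha[j + 1]
    for b, (k, m) in zip(beta, _pairs(len(a))):
        if k == j:
            effect += b * a[m]
        elif m == j:
            effect += b * a[k]
    return effect


alpha = [0.0, 2.0, 2.0, 1.0]  # alpha_0, ..., alpha_3 for p = 3 (hypothetical)
beta = [4.0, 0.5, -1.0]       # coefficients of A1A2, A1A3, A2A3 (hypothetical)
a = [1.0, 0.0, 2.0]
j = 1                         # raise A2 (0-based index 1)
bumped = a.copy()
bumped[j] += 1.0
direct = exposure_part(alpha, beta, bumped) - exposure_part(alpha, beta, a)
print(unit_effect(alpha, beta, a, j), direct)  # 4.0 4.0
```

Because the model is linear in each \(A_j\), the same effect is obtained whatever starting value \(a_{j\circ }\) is used.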

When \(\dim \{{\varvec{Z}}\}=r\) is large or one is concerned about getting the functional form of the effects of confounders correct in the response model, regression adjustment on the propensity score can mitigate the effects of confounders. Let \(f_{a \mid z}({\varvec{a}} \mid {\varvec{z}})\) be the conditional joint density or probability mass function of multivariate continuous or discrete exposures given confounders. Imai and Van Dyk [17] defined the generalized propensity score (GPS) as \(G({\varvec{A}}, {\varvec{Z}})=f_{a \mid z}({\varvec{A}} \mid {\varvec{Z}})\) so that \({\varvec{Z}} \perp I({\varvec{A}}={\varvec{a}}) \mid G({\varvec{A}}, {\varvec{Z}})=g\). Additionally, they stated that if there is a finite-dimensional quantity \({\varvec{S}}({\varvec{Z}})\) such that \(G({\varvec{A}}, {\varvec{Z}})\) depends on \({\varvec{Z}}\) only through \({\varvec{S}}({\varvec{Z}})\), then matching or stratification on \({\varvec{S}}({\varvec{Z}})={\varvec{s}}\) is equivalent to matching or stratification on \(G({\varvec{A}}, {\varvec{Z}})\). For a single Bernoulli exposure variable, the generalized propensity score is the conventional propensity score, namely the conditional probability of being exposed given confounders (i.e., \(P(A_1=1 \mid {\varvec{Z}})\)), whereas for a scalar continuous exposure it is the conditional density of the exposure, \(f_{a_1 \mid z}(A_1 \mid {\varvec{Z}})\); based on Assumption 3 of [17], it will often be sufficient to condition on a linear function of confounders such as \({\mathbb {E}}(A_1 \mid {\varvec{Z}})\). For multivariate exposures, the generalized propensity score is the multivariate joint distribution of exposures given confounders, \(G({\varvec{A}}, {\varvec{Z}})=f_{a \mid z}({\varvec{A}} \mid {\varvec{Z}})\).
When the dependence of \({\varvec{A}}\) on \({\varvec{Z}}\) in \(G({\varvec{A}},{\varvec{Z}})\) can be expressed through a low dimensional function \({\varvec{S}}({\varvec{Z}})\), this generalized propensity score \({\varvec{S}}({\varvec{Z}})\) can be considered the effective propensity score; we consider this in what follows.

2.2 Propensity Scores for Two Binary Exposures

2.2.1 Regression Adjustment

Here, we aim to determine the minimal requirements for a potential vector-valued propensity score \({{\varvec{S}}}({\varvec{Z}})\) to ensure valid causal inference regarding the exposure effects conditional on \({{\varvec{S}}}({\varvec{Z}})\). We assume that data are available from independent individual processes and consider properties of a single process in much of what follows. From (2.1), note

Using \(P(\cdot )\) to informally denote a joint probability mass function or density, we write

so if \({{\varvec{S}}}({\varvec{Z}})\) contains enough information to construct the conditional distribution for the exposure variables given \({\varvec{Z}}\) (i.e., if \({\varvec{X}} \perp {\varvec{Z}} \mid {\varvec{S}}\)), then following Assumption 3 of [17], \({\varvec{S}}\) serves as a valid propensity score. In this case, the final term in (2.2) is independent of \({\varvec{X}}\) and a working regression model of the form

will give an estimator \(\hat{\varvec{\lambda }}\) which is consistent for the causal parameter \(\varvec{\theta }\).

Here, we give an illustrative example involving a bivariate binary exposure \({\varvec{A}}=(A_1, A_2)'\), where \(A_k\) indicates exposure status with respect to exposure k, \(k=1, 2\). Let \({\varvec{Z}}\) be the vector of all potential confounders. Suppose the status for exposure k can be characterized by the marginal model \(P(A_k\mid {\varvec{Z}}) = P(A_k\mid {\varvec{Z}}_k)\), where \({\varvec{Z}}_k\) is a subvector of \({\varvec{Z}}\), \(k=1, 2\). We may assume, for example, that \(\text {logit}P(A_k=1\mid {\varvec{Z}}_k)={{\varvec{Z}}}'_k\varvec{\gamma }_k\). While such marginal models are typically used when considering scalar exposures, they may not be sufficient to fully characterize the joint distribution of exposures if some confounding variables exert a second-order effect. Let \({\varvec{Z}}_3\) be another set of confounders characterizing the dependence between \(A_1\) and \(A_2\) given \({\varvec{Z}}_1\) and \({\varvec{Z}}_2\) through a dependence regression model

where

Based on the marginal models for \(A_k \mid {\varvec{Z}}_k\), \(k=1, 2\), and (2.5),

\(P({\varvec{A}} = {{\textbf {1}}} \mid {\varvec{Z}})=\dfrac{a-\sqrt{a^2-4\,\text {OR}\,(\text {OR}-1)P(A_1=1\mid {\varvec{Z}}_1)P(A_2=1\mid {\varvec{Z}}_2)}}{2(\text {OR}-1)}\) for \(\text {OR}\ne 1\), and \(P({\varvec{A}} = {{\textbf {1}}} \mid {\varvec{Z}})=P(A_1=1\mid {\varvec{Z}}_1)P(A_2=1\mid {\varvec{Z}}_2)\) for \(\text {OR}=1\),

where \(a=1-(1-\text {OR})\sum _{k=1}^2P(A_k=1\mid {\varvec{Z}}_k)\) [18] and \(\text {OR}\) denotes \(\text {OR}(A_1, A_2 \mid {\varvec{Z}})\). The generalized propensity score is obtained by noting that \(P(A_{1} = 1, A_{2} = 0 \mid {\varvec{Z}})= P(A_{1} = 1 \mid {\varvec{Z}}_1)-P({\varvec{A}} = {{\textbf {1}}} \mid {\varvec{Z}})\) and \(P(A_{1} = 0, A_{2} = 1 \mid {\varvec{Z}})= P(A_{2} = 1 \mid {\varvec{Z}}_2)-P({\varvec{A}} = {{\textbf {1}}} \mid {\varvec{Z}})\), from which \(P({\varvec{A}} = {{\textbf {0}}} \mid {\varvec{Z}})\) can be obtained. A sufficient propensity score would be \({\varvec{S}}({\varvec{Z}}) = (S_1({\varvec{Z}}_1), S_2({\varvec{Z}}_2), S_3({\varvec{Z}}_3))'\), where \(S_k({\varvec{Z}}_k) = P(A_k=1\mid {\varvec{Z}}_k)\), \(k=1, 2\), and \(S_3({\varvec{Z}}) = P({\varvec{A}} = {{\textbf {1}}}\mid {\varvec{Z}})\); alternatively, one could define \(S_k({\varvec{Z}}_k)={{\varvec{Z}}}'_k \varvec{\gamma }_k\), \(k=1, 2, 3\).
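The joint probability \(P({\varvec{A}} = {{\textbf {1}}} \mid {\varvec{Z}})\) and the remaining cells of the generalized propensity score can be computed from the two marginal propensity scores and the conditional odds ratio. The sketch below assumes the standard Plackett (Dale) solution of the quadratic, which is the form implied by the definition of \(a\) above; the function name and input values are hypothetical.

```python
import math


def joint_binary_probs(p1, p2, odds_ratio):
    """Cell probabilities P(A1 = a1, A2 = a2 | Z) from the marginal propensity
    scores p_k = P(A_k = 1 | Z_k) and the conditional odds ratio OR(A1, A2 | Z)."""
    if abs(odds_ratio - 1.0) < 1e-12:
        p11 = p1 * p2  # conditional independence
    else:
        a = 1.0 - (1.0 - odds_ratio) * (p1 + p2)
        disc = a * a - 4.0 * odds_ratio * (odds_ratio - 1.0) * p1 * p2
        p11 = (a - math.sqrt(disc)) / (2.0 * (odds_ratio - 1.0))
    return {
        (1, 1): p11,
        (1, 0): p1 - p11,             # P(A1 = 1 | Z1) - P(A = 1 | Z)
        (0, 1): p2 - p11,             # P(A2 = 1 | Z2) - P(A = 1 | Z)
        (0, 0): 1.0 - p1 - p2 + p11,  # remaining mass
    }


cells = joint_binary_probs(0.4, 0.5, 4.0)
recovered_or = (cells[(1, 1)] * cells[(0, 0)]) / (cells[(1, 0)] * cells[(0, 1)])
print(round(cells[(1, 1)], 4), round(recovered_or, 6))
```

Evaluating the returned dictionary at an individual's observed \({\varvec{a}}\) gives the generalized propensity score \(P({\varvec{A}}={\varvec{a}} \mid {\varvec{Z}})\), and the (1, 1) cell is \(S_3\).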

Here, \({\varvec{Z}}_1\) and \({\varvec{Z}}_2\) are the sets of confounders that affect the marginal distributions of \(A_1\) and \(A_2\) and hence confound their marginal effects on Y; conditioning on \((S_1,S_2)\) is sufficient if \(A_1\) does not interact with \(A_2\) (i.e., if \(\beta _1=0\)). If \(\beta _1 \ne 0\), then it is necessary to model the association between \(A_1\) and \(A_2\) given \(({\varvec{Z}}_1', {\varvec{Z}}_2')'\) as a function of a possibly different set of confounders \({\varvec{Z}}_3\), as in (2.5). We investigate the implications of not doing this adequately in Sect. 3.

2.2.2 Inverse Probability Weighting

Here, we consider the use of a generalized propensity score as a weight in an inverse probability weighting analysis of two binary exposures. Let \(P({\varvec{A}} \mid {\varvec{Z}}; \varvec{\gamma })\) denote the conditional joint probability of \({\varvec{A}}=(A_1, A_2)'\) given confounders, which satisfies the positivity condition [1] that \(P({\varvec{A}}={\varvec{a}} \mid {\varvec{Z}}; \varvec{\gamma })>0\) for all \({\varvec{a}}\) and all possible values of \({\varvec{Z}}\). The inverse “joint probability”-weighted estimating function [19] is expressed as

where \({\mathbb {E}}^R(\cdot )\) represents an expectation in the setting where \({\varvec{X}} \perp {\varvec{Z}}\) and \({\mathbb {E}}^R(Y \mid {\varvec{X}};\varvec{\varphi })={{\varvec{X}}}'\varvec{\varphi }\) defines a marginal structural model. Here,

To show that (2.6) is an unbiased estimating function, we take the expectation of \(U_1(\varvec{\varphi };\varvec{\gamma })\) with respect to \(Y \mid {\varvec{X}}, {\varvec{Z}}\) to obtain

where \(\varPsi =(\varvec{\theta }', \varvec{\zeta }')'\). The expectation of (2.7) with respect to \({\varvec{Z}} \mid {\varvec{A}}={\varvec{a}}\) gives

Since

we can rewrite (2.8) as

which effectively results in the expectation being taken in the setting where \({\varvec{Z}} \perp {\varvec{A}}\), as it would be in a hypothetical randomized trial [19]. Since (2.6) has expectation zero, solving \(U_1(\varvec{\varphi };\varvec{\gamma })=0\) defines an estimator that is consistent for the causal estimand of interest. In practice, \(\varvec{\gamma }\) must be estimated, but provided a \(\sqrt{n}\)-consistent estimator is used in its place, \(\hat{\varvec{\varphi }}\) remains consistent.
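For the saturated marginal structural model with \({\varvec{X}}=(1, A_1, A_2, A_1A_2)'\), solving \(U_1(\varvec{\varphi };\varvec{\gamma })=0\) amounts to weighted least squares with weights \(1/P({\varvec{A}} \mid {\varvec{Z}};\varvec{\gamma })\), and its solution can be written in terms of weighted cell means. The simulation below is a minimal sketch of that estimator under illustrative assumptions: the exposure model (marginals depending on \(Z_1\) and \(Z_2\), log odds ratio linear in \(Z_3\)), the parameter values, and all function names are hypothetical, and the true \(\varvec{\gamma }\) is plugged in rather than estimated. Because the marginal structural model averages over \({\varvec{Z}}\), its intercept targets \(\alpha _0 + {\mathbb {E}}({\varvec{Z}})'\varvec{\zeta }\), while the remaining coefficients target \(\alpha _1\), \(\alpha _2\), and \(\beta _1\).

```python
import math
import random

CELLS = [(0, 0), (1, 0), (0, 1), (1, 1)]


def expit(x):
    return 1.0 / (1.0 + math.exp(-x))


def joint_binary_probs(p1, p2, odds_ratio):
    # Plackett/Dale cell probabilities given marginals and a conditional odds ratio
    if abs(odds_ratio - 1.0) < 1e-12:
        p11 = p1 * p2
    else:
        a = 1.0 - (1.0 - odds_ratio) * (p1 + p2)
        disc = a * a - 4.0 * odds_ratio * (odds_ratio - 1.0) * p1 * p2
        p11 = (a - math.sqrt(disc)) / (2.0 * (odds_ratio - 1.0))
    return {(1, 1): p11, (1, 0): p1 - p11, (0, 1): p2 - p11,
            (0, 0): 1.0 - p1 - p2 + p11}


def gps(z):
    # Illustrative exposure model: P(A1=1|Z1), P(A2=1|Z2), log OR linear in Z3
    z1, z2, z3 = z
    p1 = expit(-0.764 + math.log(2.0) * z1)
    p2 = expit(-0.112 + math.log(1.25) * z2)
    return joint_binary_probs(p1, p2, math.exp(0.5 + 2.0 * z3))


def simulate(n, theta, zeta, rng):
    data = []
    for _ in range(n):
        z = tuple(int(rng.random() < 0.5) for _ in range(3))
        probs, u, acc = gps(z), rng.random(), 0.0
        for cell in CELLS:  # draw (A1, A2) from the joint conditional distribution
            acc += probs[cell]
            if u <= acc:
                break
        a1, a2 = cell
        mean = (theta[0] + theta[1] * a1 + theta[2] * a2 + theta[3] * a1 * a2
                + sum(c * v for c, v in zip(zeta, z)))
        data.append((rng.gauss(mean, 1.0), cell, z))
    return data


def ipw_saturated(data):
    """Solve sum_i w_i X_i (Y_i - X_i' phi) = 0 with w_i = 1 / P(A_i | Z_i).
    For the saturated X the solution is built from weighted cell means."""
    num = {c: 0.0 for c in CELLS}
    den = {c: 0.0 for c in CELLS}
    for y, cell, z in data:
        w = 1.0 / gps(z)[cell]
        num[cell] += w * y
        den[cell] += w
    m = {c: num[c] / den[c] for c in CELLS}
    return (m[(0, 0)],
            m[(1, 0)] - m[(0, 0)],
            m[(0, 1)] - m[(0, 0)],
            m[(1, 1)] - m[(1, 0)] - m[(0, 1)] + m[(0, 0)])


rng = random.Random(2024)
phi = ipw_saturated(simulate(40000, (0.0, 2.0, 2.0, 4.0), (0.5, 0.5, 0.5), rng))
print([round(v, 2) for v in phi])
```

Replacing the true joint probability in `gps` with a misspecified one (for example, the product of the two marginals) is one way to explore the bias discussed in Sect. 3.2.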

3 Exposure Dependence Model Misspecification

3.1 Regression Adjustment

3.1.1 General Framework

Here, we derive the limiting bias of the estimators of exposure effects obtained through regression adjustment when the dependence model is misspecified through (a) an inappropriate conditional independence assumption or (b) an exchangeable dependence structure that is functionally independent of the confounders; both are defined more formally in what follows.

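Consider the following regression model with a generalized propensity score adjustment:

\({\mathbb {E}}(Y \mid {\varvec{X}}, \tilde{{\varvec{S}}})={{\varvec{X}}}'\varvec{\varphi }+\tilde{{\varvec{S}}}'\varvec{\psi },\)   (3.1)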

where \(\tilde{{\varvec{S}}}=\tilde{{\varvec{S}}}({\varvec{Z}})\) is a vector-valued propensity score which might be insufficient for adjustment under model misspecification, e.g., assuming conditional independence among exposures or using an inadequate dependence model. The contribution to the least squares equation for \(\varvec{\varphi }\) from a single individual based on (3.1) is \({\textbf{U}}(Y\mid {\varvec{X}}, \tilde{{\varvec{S}}};{\varvec{\varphi }}, \varvec{\psi }) = ({\varvec{X}}', \tilde{{\varvec{S}}}')'(Y- {{\varvec{X}}}'{\varvec{\varphi }}-\tilde{{\varvec{S}}}'\varvec{\psi })\). With a sample of n independent individuals yielding data \(\{(Y_i, {\varvec{X}}_i, {\varvec{Z}}_i), i=1,\ldots , n\}\), the least squares estimator of the exposure effect is the solution to \(\sum _{i=1}^n{\textbf{U}}(Y_i \mid {\varvec{X}}_i, \tilde{{\varvec{S}}}_i;{\varvec{\varphi }}, \varvec{\psi })=0\) and is denoted by \(\hat{\varvec{\varphi }}\); this is consistent for the solution to \({\mathbb {E}}\{{\textbf{U}}(Y\mid {\varvec{X}}, \tilde{{\varvec{S}}}; {\varvec{\varphi }}, \varvec{\psi })\}=0\) where the expectation is taken with respect to the full data generation process [20, 21].
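Solving \(\sum _{i=1}^n{\textbf{U}}(Y_i \mid {\varvec{X}}_i, \tilde{{\varvec{S}}}_i;{\varvec{\varphi }}, \varvec{\psi })=0\) is ordinary least squares of \(Y\) on the stacked covariate vector \(({\varvec{X}}', \tilde{{\varvec{S}}}')'\). The dependency-free sketch below solves the normal equations by Gauss-Jordan elimination; the function names and the noise-free toy data are hypothetical. With data generated exactly from a linear model in \(({\varvec{X}}, \tilde{{\varvec{S}}})\), it returns the generating coefficients; when \(\tilde{{\varvec{S}}}\) is insufficient, the same computation converges instead to the limiting value \(\tilde{\varvec{\varphi }}\).

```python
def solve_linear(mat, rhs):
    """Gauss-Jordan elimination with partial pivoting for a small dense system."""
    n = len(rhs)
    aug = [list(row) + [b] for row, b in zip(mat, rhs)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(n):
            if r != col:
                f = aug[r][col] / aug[col][col]
                for c in range(col, n + 1):
                    aug[r][c] -= f * aug[col][c]
    return [aug[r][n] / aug[r][r] for r in range(n)]


def fit_with_score(ys, xs, ss):
    """Solve sum_i (X_i', S_i')' (Y_i - X_i' phi - S_i' psi) = 0; return (phi, psi)."""
    rows = [list(x) + list(s) for x, s in zip(xs, ss)]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    coef = solve_linear(xtx, xty)
    p = len(xs[0])
    return coef[:p], coef[p:]


# Noise-free toy data: Y = 2 A1 + 2 A2 + 4 A1 A2 + 0.7 S (hypothetical values)
xs, ss, ys = [], [], []
for a1 in (0, 1):
    for a2 in (0, 1):
        for s in (0.1, 0.4, 0.8):
            xs.append((1.0, a1, a2, a1 * a2))
            ss.append((s,))
            ys.append(2.0 * a1 + 2.0 * a2 + 4.0 * a1 * a2 + 0.7 * s)
phi, psi = fit_with_score(ys, xs, ss)
print([round(v, 6) for v in phi], [round(v, 6) for v in psi])
```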

Again we consider the bivariate case with \({{\varvec{X}}}=(1, A_1, A_2, A_1A_2)'\) and corresponding coefficient vector \(\varvec{\varphi }=(\varphi _0, \varphi _1,\varphi _2,\varphi _3)'\). The limiting value \(\tilde{\varvec{\varphi }}\) of the estimator \(\hat{\varvec{\varphi }}\) of \(\varvec{\theta }\) in (2.1) has the explicit form

where \(\varvec{\zeta }\) and \(\varvec{\psi }\) are given in (2.1) and (3.1),

\(\Sigma _k=\textrm{cov}({\mathbb {E}}(A_k \mid {\varvec{Z}}), {\varvec{Z}})\), \(\Lambda _k=\textrm{cov}({\mathbb {E}}(A_k\mid {\varvec{Z}}), \tilde{{\varvec{S}}})\), \(\Sigma _3=\textrm{cov}({\mathbb {E}}(A_1 A_2 \mid {\varvec{Z}}), {\varvec{Z}})\), and \(\Lambda _3=\textrm{cov}({\mathbb {E}}(A_1A_2 \mid {\varvec{Z}}),\tilde{{\varvec{S}}} )\). We also have \(\sigma _k^2=\textrm{var}(A_k)\), \(\sigma _3^2=\textrm{var}(A_1A_2)\), \(\rho _{12}=\textrm{corr}(A_1, A_2)\), and \(\rho _{k3}=\textrm{corr}(A_k, A_1A_2)\), \(k=1, 2\); see Online Appendix A for more details.

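With two binary exposures, \(\sigma _k^2=p_k(1-p_k)\) and \(\sigma _3^2=p_{12}(1-p_{12})\), where \(p_k=P(A_k=1)\), \(k=1,2\), and \(p_{12}=P(A_1A_2=1)\). The correlation \(\rho _{12}\) is

\(\rho _{12}=\dfrac{p_{12}-p_1p_2}{\sqrt{p_1(1-p_1)p_2(1-p_2)}}\)

[22], and, since \(\textrm{cov}(A_k, A_1A_2)=p_{12}(1-p_k)\),

\(\rho _{k3}=\dfrac{p_{12}(1-p_k)}{\sqrt{p_k(1-p_k)p_{12}(1-p_{12})}}=\sqrt{\dfrac{p_{12}(1-p_k)}{p_k(1-p_{12})}},\quad k=1, 2.\)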
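For two binary exposures, the variances and correlations entering (3.2) to (3.4) are simple functions of \(p_1\), \(p_2\), and \(p_{12}\); the covariance of \(A_k\) with \(A_1A_2\) is \(p_{12}(1-p_k)\) because \(A_kA_1A_2=A_1A_2\) when the exposures are binary. A minimal sketch (the function name is hypothetical):

```python
import math


def binary_exposure_moments(p1, p2, p12):
    """sigma_k^2, sigma_3^2, rho_12 and rho_k3 for binary A1, A2, given
    p_k = P(A_k = 1) and p12 = P(A1 A2 = 1)."""
    s1, s2 = p1 * (1 - p1), p2 * (1 - p2)
    s3 = p12 * (1 - p12)
    rho12 = (p12 - p1 * p2) / math.sqrt(s1 * s2)
    # cov(A_k, A1 A2) = E(A1 A2) - p_k p12 = p12 (1 - p_k)
    rho13 = p12 * (1 - p1) / math.sqrt(s1 * s3)
    rho23 = p12 * (1 - p2) / math.sqrt(s2 * s3)
    return {"sigma1_sq": s1, "sigma2_sq": s2, "sigma3_sq": s3,
            "rho12": rho12, "rho13": rho13, "rho23": rho23}


m = binary_exposure_moments(0.4, 0.5, 0.2796)
print({k: round(v, 4) for k, v in m.items()})
```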

3.1.2 A Misspecified Dependence Model

If exposure variables are treated as conditionally independent given confounders, one might specify \(\tilde{{\varvec{S}}}\) as \(\tilde{{\varvec{S}}}=(S_1({\varvec{Z}}_1), S_2({\varvec{Z}}_2))'\) or \(\tilde{{\varvec{S}}}=(S_1({\varvec{Z}}_1), S_2({\varvec{Z}}_2), S_1({\varvec{Z}}_1)S_2({\varvec{Z}}_2))'\), where \(S_1({\varvec{Z}}_1)\) and \(S_2({\varvec{Z}}_2)\) are the marginal propensity scores. A naive model using regression adjustment might be specified as

or possibly

In the most general setting, such propensity score regression adjustments are insufficient to render \({\varvec{X}}\perp {\varvec{Z}}\mid \tilde{{\varvec{S}}}\), since the distribution of \(A_1A_2\) given \({\varvec{Z}}\) depends on the joint model of \(A_1\) and \(A_2\) given \({\varvec{Z}}\). As a result, the estimates of the causal effects \(\varphi _1, \varphi _2\), and \(\varphi _3\) are inconsistent, with asymptotic bias given by the second and third terms on the right-hand side of Eqs. (3.2)–(3.4). The bias depends on the second-order association between the exposures and the confounders, the association between the exposures and the generalized propensity scores, and the variances of the exposures.

A more flexible exposure model may recognize the conditional association between exposures (given marginal confounders \({\varvec{Z}}_1\) and \({\varvec{Z}}_2\)), but assume no role of confounders on the second-order dependence. In this case, one might consider a propensity score term based on

where \(\textrm{cov}(A_1, A_2 \mid {\varvec{Z}}_1, {\varvec{Z}}_2)=\rho \sqrt{V(A_1 \mid {\varvec{Z}}_1)V(A_2 \mid {\varvec{Z}}_2)}\) and \(V(A_k \mid {\varvec{Z}}_k)\) is the residual variance from the propensity score for exposure k, \(k=1, 2\). In this case, the regression model adjusted by the generalized propensity score may be,

We explore the impact of such misspecifications in Sect. 3.3.
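For binary exposures, the exchangeable term can be sketched under the assumption that the propensity score term is \({\mathbb {E}}(A_1A_2 \mid {\varvec{Z}}_1, {\varvec{Z}}_2)=S_1S_2+\textrm{cov}(A_1, A_2 \mid {\varvec{Z}}_1, {\varvec{Z}}_2)\) with \(V(A_k \mid {\varvec{Z}}_k)=S_k(1-S_k)\). Comparing it with the Plackett-based \(P({\varvec{A}}={{\textbf {1}}} \mid {\varvec{Z}})\) illustrates the limitation discussed above: for fixed marginal scores the naive term cannot change with \(Z_3\), whereas the true joint probability does. All names and numeric values are hypothetical.

```python
import math


def naive_joint_score(s1, s2, rho):
    """Exchangeable-dependence term: S1 S2 + rho sqrt(V1 V2), V_k = S_k (1 - S_k)."""
    return s1 * s2 + rho * math.sqrt(s1 * (1 - s1) * s2 * (1 - s2))


def true_joint_prob(p1, p2, odds_ratio):
    # P(A1 = 1, A2 = 1 | Z) from marginals and conditional odds ratio (Plackett/Dale)
    if abs(odds_ratio - 1.0) < 1e-12:
        return p1 * p2
    a = 1.0 - (1.0 - odds_ratio) * (p1 + p2)
    disc = a * a - 4.0 * odds_ratio * (odds_ratio - 1.0) * p1 * p2
    return (a - math.sqrt(disc)) / (2.0 * (odds_ratio - 1.0))


s1, s2 = 0.4, 0.5
for z3 in (0, 1):
    odds_ratio = math.exp(0.5 + 2.0 * z3)  # hypothetical second-order confounder effect
    print(z3, round(true_joint_prob(s1, s2, odds_ratio), 4),
          round(naive_joint_score(s1, s2, rho=0.3), 4))
```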

3.2 Inverse Probability Weighting

A misspecified propensity score has a different impact within the inverse propensity score-weighted estimating function framework. Let \(P_0({\varvec{A}}={\varvec{a}} \mid {\varvec{Z}}; \varvec{\delta })\) denote the misspecified (naive) conditional joint density or mass function indexed by \(\varvec{\delta }\), and let \(U_{10}(\varvec{\varphi };\varvec{\delta })\) and \(U_{20}(\varvec{\delta })\) be the following estimating functions:

Let \(\varvec{\delta }^*\) be the solution to \({\mathbb {E}}_{{\varvec{A}},{\varvec{Z}}}\{U_{20}(\varvec{\delta });\varvec{\gamma },\varvec{\tau }\}=0\), the limiting value to which the estimator \(\hat{\varvec{\delta }}\) converges when fitting the misspecified model. The limiting value of \(\hat{\varvec{\varphi }}\) is obtained by setting the following expectation to zero and solving for \(\varvec{\varphi }\):

Under the conditional independence assumption, the misspecified propensity score \(P_0({\varvec{A}}={\varvec{a}} \mid {\varvec{Z}};\varvec{\delta })\) is the product of the two marginal propensity scores, \(P(A_1=a_1 \mid {\varvec{Z}};\varvec{\delta })P(A_2=a_2 \mid {\varvec{Z}};\varvec{\delta })\). In this case, under the present formulation, we have

where

and \(\varvec{\delta }^*=(\varvec{\gamma }_1', \varvec{\gamma }_2')'\) so \(\hat{\varvec{\delta }}\) is consistent for the target parameters \(\varvec{\gamma }_1\) and \(\varvec{\gamma }_2\) but is of insufficient dimension to characterize the second-order dependence.

When the association is assumed functionally independent of confounders, the misspecified propensity score \(P_0({\varvec{A}}={\varvec{a}} \mid {\varvec{Z}};\varvec{\delta })\) is an inadequately modeled conditional joint density or mass function \(P(A_1=a_1, A_2=a_2 \mid {\varvec{Z}}_1, {\varvec{Z}}_2;\varvec{\delta })\). In this case, we have \(U_{20}(\varvec{\delta })=\partial \text {log} P({\varvec{A}}={\varvec{a}} \mid {\varvec{Z}}_1, {\varvec{Z}}_2; \varvec{\delta })/\partial \varvec{\delta }\), and \(\varvec{\delta }^*\) will not be consistent for the target parameters \(\varvec{\gamma }_1\) or \(\varvec{\gamma }_2\).

3.3 Empirical Bias and Efficiency

Here, we report on simulation studies investigating the limiting values of the estimated exposure effects under model misspecification. We consider a bivariate binary exposure and assess the relative asymptotic bias of the estimated exposure effects under the two types of misspecification described in Sect. 3.1.2, for both regression adjustment and IPW analyses.

Suppose a sample of n independent replicates \(\{(Y_i, {\varvec{X}}_i, {\varvec{Z}}_i), i=1,\dots , n\}\) is generated with \(n=500\). Let \({\varvec{Z}}\) contain binary confounding variables with \(Z_1 \perp Z_2\), \(P(Z_{1}=1)=P(Z_{2}=1)=0.5\), and \(P(Z_{3}=1 \mid Z_{1}, Z_{2}) = \text {expit}\{-0.896 +(\log 2) Z_{1}+(\log 3) Z_{2}\}\), giving \(P(Z_{3}=1)=0.5\). We let \(P(A_1=1 \mid Z_1)=\text {expit}(\gamma _{10}+\gamma _{11}Z_1)\), where \(\varvec{\gamma }_1=(\gamma _{10}, \gamma _{11})'=(-0.764, \log 2)'\), so that \({\mathbb {E}}(A_{1})=0.4\), and \(P(A_2=1 \mid Z_2)=\text {expit}(\gamma _{20}+\gamma _{21}Z_2)\) with \(\varvec{\gamma }_2=(\gamma _{20}, \gamma _{21})'=(-0.112, \log 1.25)'\), so that \({\mathbb {E}}(A_{2})=0.5\). Finally, we let \(\log \text {OR}(A_{1}, A_{2}\mid Z_3)=\gamma _{30}+\gamma _{31}Z_3\) with \(\varvec{\gamma }_3=(\gamma _{30}, \gamma _{31})'\); we consider \(\gamma _{31} \in [-5, 5]\), and for each value of \(\gamma _{31}\), we solve for \(\gamma _{30}\) in (2.5) such that \({\mathbb {E}}\{\text {OR}(A_{1}, A_{2})\}=4\). For the response Y, we generate data according to (2.1) with \(\varvec{\theta }=(\alpha _0, \alpha _1, \alpha _2, \beta _1)'=(0, 2,2,4)'\) and \(\varvec{\zeta }=(0.5, 0.5, 0.5)'\).
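The design constants can be checked by direct enumeration over the binary confounders. The sketch also computes \(\gamma _{30}\) for a given \(\gamma _{31}\); it assumes that \({\mathbb {E}}\{\text {OR}(A_{1}, A_{2})\}=4\) means the conditional odds ratio \(\exp (\gamma _{30}+\gamma _{31}Z_3)\) averaged over \(Z_3\), which is an interpretive assumption. The helper name `gamma30_for` is hypothetical.

```python
import math
from itertools import product


def expit(x):
    return 1.0 / (1.0 + math.exp(-x))


# Marginal probabilities implied by the design (Z1, Z2 ~ Bernoulli(0.5), independent)
p_z3 = sum(expit(-0.896 + math.log(2) * z1 + math.log(3) * z2)
           for z1, z2 in product((0, 1), repeat=2)) / 4
e_a1 = sum(expit(-0.764 + math.log(2) * z1) for z1 in (0, 1)) / 2
e_a2 = sum(expit(-0.112 + math.log(1.25) * z2) for z2 in (0, 1)) / 2


def gamma30_for(gamma31, target=4.0):
    """Intercept such that E{exp(gamma30 + gamma31 * Z3)} = target over Z3."""
    # E{OR} = exp(gamma30) * {P(Z3 = 0) + P(Z3 = 1) * exp(gamma31)}
    return math.log(target) - math.log((1 - p_z3) + p_z3 * math.exp(gamma31))


print(round(p_z3, 3), round(e_a1, 3), round(e_a2, 3))  # 0.5 0.4 0.5
print([round(gamma30_for(g), 3) for g in (-5, 0, 5)])
```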

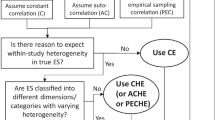

Under the assumption of conditional independence between exposures, the regression model adjusted by the generalized propensity scores can be fitted as in (3.5) or (3.6), denoted Reg1 and Reg2, respectively, in Fig. 1. The IPW approach involves solving (3.10) for the exposure effects. We denote the estimated effects by \(\hat{\varvec{\varphi }}=({\hat{\varphi }}_1, {\hat{\varphi }}_2, {\hat{\varphi }}_3)'\) for \({\varvec{X}}=(A_1, A_2, A_1A_2)'\); the relative biases of the estimated exposure effects, \(({\hat{\varphi }}_1-\alpha _1)/\alpha _1\), \(({\hat{\varphi }}_2-\alpha _2)/\alpha _2\), and \(({\hat{\varphi }}_3-\beta _1)/\beta _1\), are shown in Fig. 1. In the bottom right panel of Fig. 1, we show the relative bias of the causal effect of a one-unit change in \(A_2\) given \(A_{1}=a_1\) (\(a_1=0\) or 1) and \({\varvec{Z}}\), denoted \(({\hat{\varDelta }}_2(A_{1})-\varDelta _2(A_{1}))/\varDelta _2(A_{1})\), where \(\varDelta _2(A_{1})=\alpha _2 + a_{1} {\beta }_{1}\) and \({\hat{\varDelta }}_2(A_{1})={\hat{\varphi }}_2 + a_{1} {{\hat{\varphi }}}_{3}\). The absolute relative bias of the exposure effects increases as the covariate effect on the exposure dependence increases in magnitude. Moreover, regression adjustment leads to less bias than the IPW approach in these settings. Adjusting for \((S_1({\varvec{Z}}), S_2({\varvec{Z}}))'\) or \((S_1({\varvec{Z}}), S_2({\varvec{Z}}), S_1({\varvec{Z}})S_2({\varvec{Z}}))'\) produces similar results. When \(\gamma _{31}=0\) (i.e., the conditional association between the exposures is functionally independent of the confounders), the bias of the estimated exposure effects is negligible when conditional independence is assumed between the two binary exposures.

Fig. 1 Empirical relative bias, with standard errors (shaded bands), of estimators of exposure effects under inadequate propensity score modeling that assumes conditional independence between two binary exposures when the second-order confounder has effect \(\gamma _{31}\); regression adjustment (Reg) and inverse weighting (IPW) are considered; the marginal association between exposures is \(\text {OR}=4\)

We use (3.7) and (3.10) for estimation under the second type of misspecification, that is, an exchangeable dependence structure that assumes no second-order effects of confounders on the exposures. The results are shown in Fig. 2. When no confounder affects the dependence (i.e., \(\gamma _{31}=0\)), the curves pass through the origin, indicating that both regression adjustment and IPW produce unbiased estimates, since the models are then correctly specified. As in Fig. 1, the absolute relative bias of the exposure effects increases as the covariate effect on the exposure dependence (\(\gamma _{31}\)) increases in magnitude, and regression adjustment leads to less bias than the IPW approach.

Fig. 2 Empirical relative bias, with standard errors (shaded bands), of estimators of exposure effects under inadequate propensity score modeling due to the omission of a second-order confounder with effect \(\gamma _{31}\); regression adjustment (Reg) and inverse weighting (IPW) are considered; the marginal association between the two binary exposures is \(\text {OR}=4\)

4 Multivariate Continuous Exposures

In this section, we consider multiple continuous exposures and semi-continuous exposures.

4.1 Multivariate Normal Exposures

4.1.1 Regression Adjustment

Here, we consider \({\varvec{A}} \mid {\varvec{Z}} \sim \textrm{BVN}(\varvec{\mu }({\varvec{Z}}), \Sigma ({\varvec{Z}}))\) with \({\varvec{A}}=(A_1, A_2)'\), \({\varvec{Z}}=({\varvec{Z}}'_1, {\varvec{Z}}'_2, {\varvec{Z}}'_3)'\), \(\varvec{\mu }({\varvec{Z}})=(\mu _1({\varvec{Z}}), \mu _2({\varvec{Z}}))'\), \(\mu _k({\varvec{Z}})={\mathbb {E}}(A_k \mid {\varvec{Z}}) = {\mathbb {E}}(A_k \mid {\varvec{Z}}_k)\), \(\textrm{var}(A_k \mid {\varvec{Z}})=\sigma ^2_k\), \(k=1,2\), and \(\textrm{corr}(A_1, A_2 \mid {\varvec{Z}})= \rho ({\varvec{Z}}_3)\). We suppose \({\mathbb {E}}(A_k \mid {\varvec{Z}}) = {{\varvec{Z}}}'_k\varvec{\gamma }_k\) with a dependence model

Since the variance parameters are functionally independent of \({\varvec{Z}}\), a sufficient propensity score is \({\varvec{S}}({\varvec{Z}}) = (S_1({\varvec{Z}}_1), S_2({\varvec{Z}}_2), S_3({\varvec{Z}}_3))'\), where \(S_k({\varvec{Z}}_k) = {{\varvec{Z}}}_k'\varvec{\gamma }_k\), \(k=1, 2\), and \(S_3({\varvec{Z}}_3) = {{\varvec{Z}}}'_3 \varvec{\gamma }_3\) [17], because

where \(({\varvec{a}}-{\varvec{S}}) = (a_1 - S_1, a_2-S_2)'\) and

An alternative to (4.1) would be to model \(B_1 = A_1 A_2\) given \({\varvec{Z}}\) directly, defining \(S_3({\varvec{Z}}) = {\mathbb {E}}(B_1 \mid {\varvec{Z}}_3)\); in this case, the covariance entries of \(\Sigma\) could be replaced with \(S_3({\varvec{Z}}) - S_1({\varvec{Z}}_1) S_2({\varvec{Z}}_2)\), since \(\textrm{cov}(A_1, A_2 \mid {\varvec{Z}}) = {\mathbb {E}}(A_1A_2 \mid {\varvec{Z}}) - {\mathbb {E}}(A_1 \mid {\varvec{Z}}){\mathbb {E}}(A_2 \mid {\varvec{Z}})\). The key point is that \({\varvec{S}}({\varvec{Z}}) = (S_1({\varvec{Z}}_1), S_2({\varvec{Z}}_2), S_3({\varvec{Z}}_3))'\) contains the relevant information on confounders in the exposure model.
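To make concrete that the generalized propensity score depends on the confounders only through \({\varvec{S}}\), the sketch below evaluates the bivariate normal density \(f({\varvec{a}} \mid {\varvec{Z}})\) from \((S_1, S_2, S_3)\) and the two variances alone. It assumes the dependence model uses the Fisher-z link \(\log \{(1+\rho )/(1-\rho )\}=S_3\), i.e. \(\rho =\tanh (S_3/2)\), the link used for the semi-continuous case in Sect. 4.2.1; the coefficients, covariate values, and function names are hypothetical.

```python
import math


def _dot(u, v):
    return sum(x * y for x, y in zip(u, v))


def bvn_gps(a1, a2, s1, s2, s3, sigma1, sigma2):
    """f(a | Z) for bivariate normal exposures, written through S = (S1, S2, S3).
    Assumes rho = tanh(S3 / 2), i.e. log{(1 + rho) / (1 - rho)} = S3."""
    rho = math.tanh(s3 / 2.0)
    u1, u2 = (a1 - s1) / sigma1, (a2 - s2) / sigma2
    q = (u1 * u1 - 2.0 * rho * u1 * u2 + u2 * u2) / (1.0 - rho * rho)
    norm = 2.0 * math.pi * sigma1 * sigma2 * math.sqrt(1.0 - rho * rho)
    return math.exp(-0.5 * q) / norm


def score_from_z(z1, z2, z3, g1, g2, g3):
    # S_k = Z_k' gamma_k for k = 1, 2, 3
    return _dot(z1, g1), _dot(z2, g2), _dot(z3, g3)


# Hypothetical coefficients; each Z_k carries its own intercept term
g1, g2, g3 = (0.2, 1.0), (-0.1, 0.5), (0.3, 1.2)
s = score_from_z((1, 0.4), (1, 1.5), (1, -0.2), g1, g2, g3)
print(s, bvn_gps(0.5, 0.7, *s, sigma1=1.0, sigma2=0.8))
```

Any two individuals with the same \({\varvec{S}}\) receive the same value of \(f({\varvec{a}} \mid {\varvec{Z}})\), which is what licenses adjusting for \({\varvec{S}}\) in place of \({\varvec{Z}}\).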

4.1.2 Inverse Joint Density Weighting

Inverse density weighting can be used when exposures are continuous [23]. With conditional joint density \(f({\varvec{a}} \mid {\varvec{z}}; \varvec{\gamma })\) as in (2.6), we write the inverse joint density-weighted estimating function as

The expectation of \(U_1(\varvec{\varphi };\varvec{\gamma })\) with respect to \(Y \mid {\varvec{X}}, {\varvec{Z}}\) and \({\varvec{Z}} \mid {\varvec{X}}\) gives

where

Thus, we can rewrite (4.3) as

which results in the expectation being taken in the setting where \({\varvec{Z}} \perp {\varvec{A}}\), so that solving \(U_1(\varvec{\varphi };\varvec{\gamma })=0\) gives a consistent estimator \(\hat{\varvec{\varphi }}\) of the causal effects.

4.2 Multivariate Semi-continuous Exposures

4.2.1 Regression Adjustment

Here we suppose \(A_{1}\) and \(A_{2}\) are non-negative semi-continuous exposure variables with a point mass at zero, and we adopt a two-part generalized propensity score model [24] to address the effects of confounding variables. While these two exposures could in general represent exposures to different agents, here we consider the case where they represent different aspects of exposure to a single agent. Specifically, in the motivating study, we may let \(A_1\) and \(A_2\) represent the drinking frequency (proportion of drinking days per week) and drinking intensity (dose per drinking occasion); among non-drinkers, both are zero. We can therefore let \(A^{+}={\mathbb {I}}(A_1A_2 > 0)\) indicate positive exposure, \(L_{k} = \log A_{k}\) given \(A^{+}=1\), \(k=1,2\), and \(\pi _{1}({\varvec{Z}}_0)={\mathbb {E}}(A^{+} \mid {\varvec{Z}}_{0})\).
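The mapping from observed frequency and intensity to \((A^+, L_1, L_2)\), and on to the design vector \({\varvec{X}}=(1, A^+, A^+L_{1}, A^+L_{2}, A^+L_{1}L_{2})'\) used in the response model for this setting, can be sketched as follows. Because \(L_k\) is defined only when \(A^+=1\), the sketch stores a placeholder of 0 for non-drinkers, which is harmless since \(L_k\) enters \({\varvec{X}}\) only through products with \(A^+\); the function names and example values are hypothetical.

```python
import math


def two_part_exposure(freq, dose):
    """Map frequency A1 and dose per occasion A2 to (A+, L1, L2).
    L_k = log A_k only when A+ = 1; 0.0 is a placeholder otherwise."""
    a_plus = 1 if freq * dose > 0 else 0
    if a_plus:
        return a_plus, math.log(freq), math.log(dose)
    return a_plus, 0.0, 0.0


def design_row(freq, dose):
    # X = (1, A+, A+ L1, A+ L2, A+ L1 L2)'
    a_plus, l1, l2 = two_part_exposure(freq, dose)
    return (1.0, a_plus, a_plus * l1, a_plus * l2, a_plus * l1 * l2)


print(design_row(0.0, 0.0))      # non-drinker
print(design_row(2 / 7, 3.0))    # drinks on 2 of 7 days, 3 units per occasion
```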

We take the true response model to be (2.1) with \({\varvec{X}}=(1, A^+, A^+L_{1}, A^+L_{2}, A^+L_{1}L_{2})'\). We let

where \({{\varvec{Z}}}_0=(1, {\varvec{Z}}_1', {\varvec{Z}}_2')'\), and, given \(A^+=1\), we suppose \(L_k={{\varvec{Z}}}_k'\varvec{\gamma }_k+R_k\), \(k=1, 2\), where \({\varvec{R}}=(R_{1}, R_{2})'\) is bivariate normal with \(\textrm{var}(R_k)=\sigma _k^2\), \(k=1, 2\), \(\rho ({\varvec{Z}})=\textrm{corr}(R_1, R_2 \mid {\varvec{Z}})\), and \(\log \{(1+\rho ({\varvec{Z}}))/(1-\rho ({\varvec{Z}}))\}={{\varvec{Z}}}_3'\varvec{\gamma }_3\). As such, we have

to form the generalized propensity score as \({\varvec{S}}=(\pi _{1}({\varvec{Z}}_0), {\mathbb {E}}(L_{1} \mid {\varvec{Z}}_{0}, {\varvec{Z}}_{1}), {\mathbb {E}}(L_{2} \mid {\varvec{Z}}_{0}, {\varvec{Z}}_{2}), {\mathbb {E}}(L_{1}L_{2} \mid {\varvec{Z}}))'\) or \({\varvec{S}}=(S_0({\varvec{Z}}_0), S_1({\varvec{Z}}_1),S_2({\varvec{Z}}_2),S_3({\varvec{Z}}_3))'\) and use it for adjustment in the regression model (2.4) as

where \({\varvec{X}}=(1, A^+, A^+L_{1}, A^+L_{2}, A^+L_{1}L_{2})'\).
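To make the construction of \({\varvec{S}}\) concrete, here is a minimal sketch for a single subject. It assumes a logistic model for \(\pi_1({\varvec{Z}}_0)\) (the displayed first-part model is not reproduced above), reads the expectations as conditional on \(A^+=1\), and uses the bivariate normal identity \({\mathbb E}(L_1L_2 \mid A^+=1,{\varvec{Z}})=\mu_1\mu_2+\rho({\varvec{Z}})\sigma_1\sigma_2\) with \(\mu_k={\varvec{Z}}_k'\varvec{\gamma}_k\). The function names are hypothetical.

```python
import numpy as np

def expit(x):
    return 1.0 / (1.0 + np.exp(-x))

def rho_from_fisher_z(eta):
    # Inverts log{(1 + rho) / (1 - rho)} = eta, giving rho = tanh(eta / 2)
    return np.tanh(eta / 2.0)

def gps_two_part(z0, z1, z2, z3, g0, g1, g2, g3, sigma1, sigma2):
    """Hypothetical helper: S = (S0, S1, S2, S3) for one subject."""
    s0 = expit(z0 @ g0)                      # P(A+ = 1 | Z0), assumed logistic
    mu1 = z1 @ g1                            # E(L1 | A+ = 1, Z)
    mu2 = z2 @ g2                            # E(L2 | A+ = 1, Z)
    rho = rho_from_fisher_z(z3 @ g3)         # covariate-dependent correlation
    s3 = mu1 * mu2 + rho * sigma1 * sigma2   # E(L1 L2 | A+ = 1, Z) under bivariate normality
    return np.array([s0, mu1, mu2, s3])

# Example with arbitrary illustrative inputs
S = gps_two_part(np.array([1.0, 0.2]), np.array([1.0, 0.2]), np.array([1.0, -0.4]),
                 np.array([1.0, 0.5]), np.array([0.8, 1.0]), np.array([2.0, 0.7]),
                 np.array([0.5, 0.2]), np.array([1.1, 0.6]), 1.0, 0.8)
```

The last component is what distinguishes this score from one built under conditional independence: it carries \(\rho({\varvec{Z}})\), and hence \({\varvec{Z}}_3\).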

4.2.2 Inverse Joint Density Weighting

The inverse density of exposure weighted estimating function is given here by

where \(\varvec{\varGamma }=(\varvec{\gamma }_1', \sigma _1^2, \varvec{\gamma }_2', \sigma _2^2, \varvec{\gamma }_3')'\),

and \(D({\varvec{X}})=(1, A^+, A^+L_{1},A^+L_{2}, A^+L_{1}L_{2})'\).

Stabilization can be used to address extreme weights [19, 25] where

with \(\pi _{2}(\varvec{\gamma }_0^{\dagger })\) and \(f(l_{1},l_{2} \mid A^+=1)\) the unconditional counterparts of the conditional quantities \(\pi _{1}({\varvec{Z}}_{0}; \varvec{\gamma }_0)\) and \(f(l_{1}, l_{2} \mid A^+=1, {\varvec{Z}})\), used to stabilize the weights. The inverse weighted estimating function for \(\varvec{\varphi }\) with stabilized weights is (4.6) with \(w^s({\varvec{X}}, {\varvec{Z}})\) in place of \(w({\varvec{X}}, {\varvec{Z}})\), giving

The estimate of \(\varvec{\varphi }\) is obtained by solving \(U_0(Y \mid {\varvec{X}};\varvec{\varphi },\varvec{\varGamma }, \varvec{\gamma }_0)=0\) or, with stabilized weights, \(U_0(Y \mid {\varvec{X}};\varvec{\varphi },\varvec{\varGamma },\varvec{\varGamma }^{\dagger }, \varvec{\gamma }_0, \varvec{\gamma }_0^{\dagger })=0\); see Online Appendices B and C for details on parameter estimation via second-order generalized estimating equations (GEE2) [26, 27] and for the derivation of the asymptotic covariance of the estimator.
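The stabilized weight display is not reproduced above, so the sketch below assumes the natural two-part form: an exposed subject receives \(\pi_2 f(l_1,l_2\mid A^+=1)/\{\pi_1({\varvec{Z}}_0) f(l_1,l_2\mid A^+=1,{\varvec{Z}})\}\) and an unexposed subject receives \((1-\pi_2)/\{1-\pi_1({\varvec{Z}}_0)\}\), with both densities bivariate normal. It is an illustration under that assumption; the helper names are hypothetical.

```python
import numpy as np

def bvn_pdf(l1, l2, mu1, mu2, s1, s2, rho):
    # Bivariate normal density with means mu1, mu2, SDs s1, s2 and correlation rho
    u1, u2 = (l1 - mu1) / s1, (l2 - mu2) / s2
    q = (u1 ** 2 - 2 * rho * u1 * u2 + u2 ** 2) / (1 - rho ** 2)
    return np.exp(-q / 2) / (2 * np.pi * s1 * s2 * np.sqrt(1 - rho ** 2))

def stabilized_weight(a_pos, l1, l2, pi_z, pi_marg, cond, marg):
    """cond, marg: (mu1, mu2, s1, s2, rho) of the conditional and marginal densities."""
    if a_pos == 0:
        # Unexposed: only the binary part of the two-part model contributes
        return (1 - pi_marg) / (1 - pi_z)
    num = pi_marg * bvn_pdf(l1, l2, *marg)   # unconditional (stabilizing) numerator
    den = pi_z * bvn_pdf(l1, l2, *cond)      # conditional density given confounders
    return num / den

w = stabilized_weight(1, 0.3, -0.2, pi_z=0.75, pi_marg=0.7,
                      cond=(0.1, 0.0, 1.0, 0.9, 0.4), marg=(0.0, 0.0, 1.1, 1.0, 0.5))
```

When the confounders carry no information (conditional equals marginal), every weight is one, which is the sense in which the numerator stabilizes the weights.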

4.3 Misspecification of Propensity Scores

4.3.1 Regression Adjustment and Inverse Density Weighting

If we treat the bivariate continuous or semi-continuous exposures as conditionally independent, the generalized propensity score can be used for adjustment as in regression model (3.5). That is,

where \({\varvec{X}}=(1, A_{1}, A_{2}, A_{1}A_{2})'\) contains the continuous exposures and \(\tilde{{\varvec{S}}}=({\mathbb {E}}(A_{1} \mid {\varvec{Z}}_{1}), {\mathbb {E}}(A_{2} \mid {\varvec{Z}}_{2}), {\mathbb {E}}(A_{1} \mid {\varvec{Z}}_{1}){\mathbb {E}}(A_{2} \mid {\varvec{Z}}_{2}))'\). For semi-continuous exposures, we have \({\varvec{X}}=(1, L_{1}, L_{2}, L_{1}L_{2})'\) and \(\tilde{{\varvec{S}}}=(\pi _1({\varvec{Z}}_0), {\mathbb {E}}(L_{1} \mid {\varvec{Z}}_0, {\varvec{Z}}_{1}), {\mathbb {E}}(L_{2} \mid {\varvec{Z}}_0, {\varvec{Z}}_{2}), {\mathbb {E}}(L_{1} \mid {\varvec{Z}}_0, {\varvec{Z}}_{1}){\mathbb {E}}(L_{2} \mid {\varvec{Z}}_0, {\varvec{Z}}_{2}))'\) in (4.8).

When exposures are continuous, the inverse density of exposure weighted estimating equation used to estimate exposure effects is given by

and when exposures are semi-continuous, the inverse density of exposure weighted estimating equation is given by

where

and \(D({\varvec{X}})=(1, A^+, A^+L_{1},A^+L_{2}, A^+L_{1}L_{2})'\).

When the dependence model assumes that confounders do not affect the association between exposures, the regression model adjusted by the generalized propensity score is similar to (3.7),

where \({\varvec{X}}=(1, A_{1}, A_{2}, A_{1}A_{2})'\) and \(\tilde{{\varvec{S}}}=({\mathbb {E}}(A_{1} \mid {\varvec{Z}}_{1}), {\mathbb {E}}(A_{2} \mid {\varvec{Z}}_{2}), {\mathbb {E}}(A_{1}A_{2} \mid {\varvec{Z}}_{1}, {\varvec{Z}}_{2}))'\). For semi-continuous exposures, we have \({\varvec{X}}=(1, L_{1}, L_{2}, L_{1}L_{2})'\) and \(\tilde{{\varvec{S}}}=(\pi _1({\varvec{Z}}_0), {\mathbb {E}}(L_{1} \mid {\varvec{Z}}_0, {\varvec{Z}}_{1}), {\mathbb {E}}(L_{2} \mid {\varvec{Z}}_0, {\varvec{Z}}_{2}), {\mathbb {E}}(L_{1}L_{2} \mid {\varvec{Z}}_0, {{\varvec{Z}}}_1, {{\varvec{Z}}}_2))'\).
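A one-line calculation shows what each misspecified score leaves out. Assume, as in the continuous-exposure model, that \(A_k={\mathbb E}(A_k\mid {\varvec{Z}}_k)+R_k\) with \({\mathbb E}(R_k\mid{\varvec{Z}})=0\), \(\textrm{var}(R_k)=\sigma_k^2\), and \(\textrm{corr}(R_1,R_2\mid{\varvec{Z}})=\rho({\varvec{Z}})\). Then

```latex
\begin{aligned}
\mathbb{E}(A_1A_2 \mid \boldsymbol{Z})
  &= \mathbb{E}(A_1 \mid \boldsymbol{Z}_1)\,\mathbb{E}(A_2 \mid \boldsymbol{Z}_2)
     + \mathbb{E}(R_1R_2 \mid \boldsymbol{Z}) \\
  &= \mathbb{E}(A_1 \mid \boldsymbol{Z}_1)\,\mathbb{E}(A_2 \mid \boldsymbol{Z}_2)
     + \rho(\boldsymbol{Z})\,\sigma_1\sigma_2 .
\end{aligned}
```

The conditional independence score sets \(\rho({\varvec{Z}})\equiv 0\) and drops the second term; the naive dependence score treats \(\rho\) as constant, so its third component cannot reflect any dependence of \(\rho({\varvec{Z}})\) on \({\varvec{Z}}_3\). In both cases the second-order confounding through \({\varvec{Z}}_3\) is left unadjusted.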

The inverse density weighting approach based on the misspecified joint density of exposures solves the following estimating equation for continuous exposures,

For semi-continuous exposures, the inverse density of exposure weighted approach solves (4.10) with

4.3.2 Empirical Bias and Efficiency

In this section, we report simulation studies based on 1000 simulated samples, each of size 500, to estimate exposure effects when exposures are continuous or semi-continuous. The exposure effects estimated by regression adjustment and by inverse density weighting are assessed in terms of relative bias, \((\hat{\varvec{\varphi }}-\varvec{\theta })/\varvec{\theta }\), computed componentwise.
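A minimal sketch of how the relative bias could be summarized across replications. The standard-error definition used here (SD of the per-replicate relative errors divided by the square root of the number of replications) is an assumption, not necessarily the quantity behind the shaded bands in the figures.

```python
import numpy as np

def relative_bias(estimates, theta):
    """estimates: (n_rep, p) array of phi-hat; theta: (p,) true, nonzero effects."""
    est = np.asarray(estimates, dtype=float)
    theta = np.asarray(theta, dtype=float)
    rel = (est - theta) / theta                  # componentwise relative error per replicate
    rb = rel.mean(axis=0)                        # empirical relative bias
    mc_se = rel.std(axis=0, ddof=1) / np.sqrt(rel.shape[0])  # Monte Carlo standard error
    return rb, mc_se

# Illustration with artificial estimates centred 5% above the truth
rng = np.random.default_rng(0)
theta = np.array([1.0, -0.5, 0.25])
fake = theta * 1.05 + rng.normal(scale=0.1, size=(1000, 3))
rb, se = relative_bias(fake, theta)
```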

When exposures in the true response model (2.1) are bivariate continuous, we let \(A_{k} =\gamma _{k0} +\gamma _{k1}Z_{k}+R_{k}\), \(k=1,2\), where

and \(Z_{k} \perp R_{k}\). Additionally, we have \(\log \{(1+\rho )/(1-\rho )\}=\gamma _{30}+\gamma _{31}Z_{3}\). We let \(\gamma _{10}= 2\) and \(\gamma _{11}=\log 2\) such that \({\mathbb {E}}(A_{1})=2\), let \(\gamma _{20}= 0.5\) and \(\gamma _{21}=\log 1.25\) such that \({\mathbb {E}}(A_{2})=0.5\). We let \(\gamma _{31} \in [-5, 5]\) and solve for \(\gamma _{30}\) such that \({\mathbb {E}}(\rho )=0.5\).
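The calibration of \(\gamma_{30}\) can be carried out numerically. The sketch below assumes \(Z_3\sim N(0,1)\) (the confounder distribution is given in a display not reproduced here), evaluates \({\mathbb E}(\rho)\) by Gauss-Hermite quadrature using \(\rho=\tanh\{(\gamma_{30}+\gamma_{31}Z_3)/2\}\), and solves for \(\gamma_{30}\) by bisection, which is safe because \({\mathbb E}(\rho)\) is increasing in \(\gamma_{30}\).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Probabilists' Gauss-Hermite rule: weights sum to sqrt(2*pi), so rescale for N(0, 1) expectations
NODES, WEIGHTS = hermegauss(60)
WEIGHTS = WEIGHTS / np.sqrt(2 * np.pi)

def mean_rho(g30, g31):
    # E{rho(Z3)} with rho = tanh{(g30 + g31 * Z3) / 2} and Z3 ~ N(0, 1) (assumed)
    return np.sum(WEIGHTS * np.tanh((g30 + g31 * NODES) / 2.0))

def solve_gamma30(g31, target=0.5, lo=-50.0, hi=50.0, tol=1e-10):
    # Bisection on g30; mean_rho is strictly increasing in g30
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean_rho(mid, g31) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

grid = np.linspace(-5, 5, 11)                       # values of gamma_31 in [-5, 5]
g30 = np.array([solve_gamma30(g) for g in grid])
```

As a sanity check, \(\gamma_{31}=0\) gives \(\rho\) constant, and \(\rho=0.5\) corresponds to \(\gamma_{30}=\log 3\).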

Empirical relative bias with standard errors (reflected by shaded bands) of estimators of exposure effects from inadequate propensity score modeling assuming conditional independence between two continuous exposures when the second-order confounder has the effect \(\gamma _{31}\); regression adjustment (Reg) and inverse weighting (IPW) are considered; the marginal association between exposures is \(\rho =0.5\)

The relative biases of exposure effects estimated from (4.8), (4.9), (4.11), and (4.12) under the two types of model misspecification are shown in Figs. 3 and 4. The patterns of relative bias for continuous exposures differ considerably from those for binary exposures, but the main findings are broadly similar. We again see that the relative bias of the exposure effect increases as the covariate effect on the exposure dependence increases. The relative bias is lower for continuous exposures than for binary exposures. Regression adjustment tends to produce smaller bias and higher efficiency than inverse density weighting.

Empirical relative bias with standard errors (reflected by shaded bands) of estimators of exposure effects from inadequate propensity score modeling due to the omission of a second-order confounder with effect \(\gamma _{31}\); regression adjustment (Reg) and inverse weighting (IPW) are considered; the marginal association between two continuous exposures is \(\rho =0.5\)

For semi-continuous exposures, the first part of the model is \(\pi _{1}({\varvec{Z}}_{0})=\text {expit}(\gamma _{00}+\gamma _{01}Z_{1}+\gamma _{02}Z_{2})\) with \(\gamma _{00}=1.484\), \(\gamma _{01}=1\), and \(\gamma _{02}=2\), so that \({\mathbb {E}}(\pi _{1})=0.7\). In the second part, given \(A^+=1\), we suppose \(\log A_{k}=\gamma_{k0}+\gamma_{k1}Z_{k}+R_{k}\), \(k=1, 2\), where

We let \(\gamma _{10}= 2\) and \(\gamma _{11}=\log 2\) such that \({\mathbb {E}}(\log A_{1} \mid A^+=1)=2\), let \(\gamma _{20}= 0.5\) and \(\gamma _{21}=\log 1.25\) such that \({\mathbb {E}}(\log A_{2}\mid A^+=1)=0.5\). Additionally, we have \(\rho =\sigma _{12}/(\sigma _{1}\sigma _{2})\), and \(\log \{(1+\rho )/(1-\rho )\}=\gamma _{30}+\gamma _{31}Z_{3}\), where we let \(\gamma _{31} \in [-5, 5]\) and solve for \(\gamma _{30}\) such that \({\mathbb {E}}\{\rho (A_1, A_2)\}=0.5\).

Figures D1 and D2 in Online Appendix D show the relative biases of semi-continuous exposure effects estimated via (4.8), (4.10), (4.11), and (4.13) under the two model misspecifications. The patterns of relative bias as a function of the covariate effect \(\gamma _{31}\) are similar to those in Figs. 3 and 4, although semi-continuous exposures lead to more variable estimates than binary or continuous exposures, especially under inverse density weighting with the conditional independence assumption. Again, regression adjustment yields smaller bias and greater precision than inverse density weighting in these simulation settings.

5 A Two-Stage Causal Analysis for Bivariate Semi-continuous Exposures

Here, we propose a two-stage approach for causal analysis involving semi-continuous exposures, again considering the special case in which a single binary indicator \(A^+\) indicates that an individual is exposed and, if \(A^+=1\), variables \(A_k\), \(k=1,\ldots , p\), record the different facets of exposure. It is helpful to let \(A_k^c=A^+(A_k-\bar{A}_k)\) denote the centered value of \(A_k\), where \(\bar{A}_k={\mathbb {E}}(A_k \mid A^+=1)\), \(k=1, \dots , p\); \({\varvec{A}}=(A_1^c,\dots , A_p^c)'\) is the vector of exposures, \({\varvec{B}}=(A_1^c A_2^c, \ldots , A_{p-1}^c A_p^c)'\) is the vector of pairwise products of exposures, \({\varvec{X}}=(A^+, {\varvec{A}}^{\prime }, {\varvec{B}}')'\), and \({\varvec{Z}}\) is an \(r \times 1\) vector of confounders. The data-generating model is
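The construction of \({\varvec{X}}\) can be sketched as follows. The sketch assumes the sample mean among the exposed is used in place of \(\bar A_k={\mathbb E}(A_k\mid A^+=1)\), that exposure facets of unexposed subjects are stored as finite placeholders (e.g. 0), and that \(p\ge 2\); the function name is hypothetical. For an analysis like the one in Sect. 6, log-transformed exposures would be passed in.

```python
import numpy as np
from itertools import combinations

def build_design(a_pos, A):
    """Return X = (A+, A^c', B')' for n subjects.

    a_pos: (n,) 0/1 indicator of any exposure.
    A: (n, p) exposure facets, p >= 2; entries for unexposed rows must be finite.
    """
    a_pos = np.asarray(a_pos, dtype=float)
    A = np.asarray(A, dtype=float)
    abar = A[a_pos == 1].mean(axis=0)        # estimate of E(A_k | A+ = 1)
    Ac = a_pos[:, None] * (A - abar)         # A_k^c = A+ (A_k - Abar_k); zero if unexposed
    pairs = combinations(range(A.shape[1]), 2)
    B = np.column_stack([Ac[:, j] * Ac[:, k] for j, k in pairs])  # pairwise products
    return np.column_stack([a_pos, Ac, B])
```

Because the facets are centered among the exposed, the coefficient on \(A^+\) refers to exposure at the average level of each facet.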

5.1 Stage I: The Dose–Response Surface

In stage I, we restrict attention to those with \(A^+=1\) (the exposed) and model the dose–response surface in this subgroup. For a sample \(\{Y_i, {\varvec{X}}_i, {\varvec{Z}}_i, i=1, \dots , n \}\), the estimating function for covariate adjustment is \(U_{1}(\varvec{\theta })=\sum _{i=1}^n U_{i1}(\varvec{\theta })\), where

\(D_1({\varvec{A}}_i, {\varvec{B}}_{i}, {\varvec{Z}}_i)=(1, {\varvec{A}}_{i}^{\prime }, {\varvec{B}}_{i}',{\varvec{Z}}_i')'\), and

Alternatively, if \({\varvec{S}}_A={\mathbb {E}}({\varvec{A}} \mid A^+=1, {\varvec{Z}})\) and \({\varvec{S}}_B={\mathbb {E}}({\varvec{B}} \mid A^+=1, {\varvec{Z}})\), we can set

where \(D_1({\varvec{A}}_i, {\varvec{B}}_i, {\varvec{S}}_{i})=(1, {\varvec{A}}_i', {\varvec{B}}_i', {\varvec{S}}_{i}')'\), and

Solving \(\sum _{i=1}^n U_{i1}(\varvec{\theta })=0\) or \(\sum _{i=1}^n U_{i2}(\varvec{\theta })=0\) gives \(\hat{\varvec{\theta }}\), which estimates the causal effect of the continuous components of exposure.
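A minimal sketch of the covariate-adjusted stage I fit, assuming an identity link so that \(\sum_i U_{i1}(\varvec{\theta})=0\) reduces to least squares among the exposed with \(D_1=(1,{\varvec{A}}',{\varvec{B}}',{\varvec{Z}}')'\) (the displayed estimating function is not reproduced above). The simulated data and parameter values are arbitrary, and `stage1_fit` is a hypothetical name.

```python
import numpy as np

def stage1_fit(y, Ac, B, Z, a_pos):
    """Least-squares solution of sum_i U_i1(theta) = 0 among the exposed."""
    m = a_pos == 1
    D1 = np.column_stack([np.ones(m.sum()), Ac[m], B[m], Z[m]])
    theta, *_ = np.linalg.lstsq(D1, y[m], rcond=None)
    return theta                                   # (intercept, A1, A2, A1*A2, Z)

# Simulated illustration: exposures depend on Z, so Z confounds the dose-response surface
rng = np.random.default_rng(1)
n = 20000
Z = rng.normal(size=(n, 1))
a_pos = rng.binomial(1, 1 / (1 + np.exp(-(0.3 + Z[:, 0]))))
A = np.column_stack([0.5 * Z[:, 0], -0.3 * Z[:, 0]]) + rng.normal(size=(n, 2))
Ac = a_pos[:, None] * (A - A[a_pos == 1].mean(axis=0))   # centered among the exposed
B = Ac[:, [0]] * Ac[:, [1]]
y = (1.0 - 0.5 * a_pos - 1.0 * Ac[:, 0] - 0.5 * Ac[:, 1]
     - 0.2 * B[:, 0] + 0.7 * Z[:, 0] + rng.normal(size=n))
theta_hat = stage1_fit(y, Ac, B, Z, a_pos)
```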

5.2 Stage II: The Effect of Exposure Status

The exposure effects of \({\varvec{A}}\) and \({\varvec{B}}\) are obtained from stage I and used as an offset in stage II. Specifically, we use \({\tilde{\eta }}_0({\varvec{A}},{\varvec{B}}; \varvec{\theta })=\hat{\varvec{\theta }}_2' {\varvec{A}}_{i} + \hat{\varvec{\theta }}_3' {\varvec{B}}_{i}\) as an offset, which takes the value zero for those with \(A^+=0\) because \(A_k^c=0\) when \(A^+=0\). Stage II is directed at assessing the effect of the binary exposure \(A^+\), that is, the effect of exposure at the reference level; many propensity score-based methods can be used for this purpose. We now discuss four possible approaches: simple regression adjustment, propensity score regression adjustment, inverse probability weighting (IPW), and augmented inverse probability weighting (AIPW).

5.2.1 A. Simple regression adjustment

For the simple regression adjustment, we have the estimating function as

where \(\varvec{\alpha }^\circ =(\alpha _0, \alpha _1, \varvec{\alpha }_5')'\), \(D_2(A_i^+, {\varvec{Z}}_i)=(1, A_i^+, {\varvec{Z}}_i')'\), and

The superscript Z in \(\tilde{\eta }^Z_0\) indicates that \(\varvec{\theta }\) is estimated from (5.2) by adjusting for \({\varvec{Z}}\). If \(\varPsi =(\varvec{\alpha }^{\circ \prime }, \varvec{\theta }')'\), we solve the joint estimating equations,

to get \({\hat{\varPsi }}\).
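A minimal sketch of method A, assuming an identity link so that the stage II estimating function reduces to least squares of \(Y-\tilde\eta_0\) on \(D_2=(1,A^+,{\varvec{Z}}')'\). To keep it self-contained, the offset is built from known \(\varvec{\theta}\) rather than from a stage I fit; all values are arbitrary.

```python
import numpy as np

def stage2_regression(y, offset, a_pos, Z):
    """Method A sketch: least squares of Y - offset on D2 = (1, A+, Z')'."""
    D2 = np.column_stack([np.ones(len(y)), a_pos, Z])
    alpha, *_ = np.linalg.lstsq(D2, y - offset, rcond=None)
    return alpha            # alpha[1]: effect of A+ at the reference level of the facets

rng = np.random.default_rng(2)
n = 20000
Z = rng.normal(size=(n, 1))
a_pos = rng.binomial(1, 1 / (1 + np.exp(-(0.3 + Z[:, 0]))))
A1c, A2c = a_pos * rng.normal(size=n), a_pos * rng.normal(size=n)   # centered facets
theta = np.array([-1.0, -0.5, -0.2])                                 # treated as known here
offset = theta[0] * A1c + theta[1] * A2c + theta[2] * A1c * A2c      # zero when A+ = 0
y = 2.0 - 1.5 * a_pos + offset + 0.7 * Z[:, 0] + rng.normal(size=n)
alpha = stage2_regression(y, offset, a_pos, Z)
```

Standard errors from this second fit alone would ignore the uncertainty in \(\hat{\varvec{\theta}}\); stacking both stages in one set of estimating equations, as in the text, accounts for it.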

5.2.2 B. Propensity score regression adjustment

If \(S_0={\mathbb {E}}(A^+ \mid {\varvec{Z}})\), replacing \({\varvec{Z}}\) with \(S_0\) in (5.4), we obtain the estimating function

where \(D_2(A_i^+, S_{i0})=(1, A_i^+, S_{i0})'\), \(D_3({\varvec{Z}}_i)=(1, {\varvec{Z}}_i')'\), \({\mathbb {E}}(A_i^+ \mid {\varvec{Z}}_i; \varvec{\psi })=\text {expit}(\varvec{\psi }'{\varvec{Z}}_i)\), and

The superscript S in \(\tilde{\eta }^S_0\) indicates that \(\varvec{\theta }\) is estimated from (5.3) with adjustment for the generalized propensity score. Letting \(\varPsi =(\varvec{\alpha }^{\circ \prime }, \varvec{\theta }', \varvec{\psi }')'\), we obtain \({\hat{\varPsi }}\) by solving the joint estimating equations
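A minimal sketch of method B, assuming a logistic propensity model fitted by Newton-Raphson and an identity link for the outcome. The offset here is a placeholder that is zero for the unexposed and stands in for the stage I offset; all names and values are hypothetical.

```python
import numpy as np

def fit_logistic(D, a, n_iter=25):
    """Newton-Raphson for logit P(A+ = 1 | Z) = psi' D3(Z)."""
    psi = np.zeros(D.shape[1])
    for _ in range(n_iter):
        p = 1 / (1 + np.exp(-(D @ psi)))
        score = D.T @ (a - p)
        info = D.T @ (D * (p * (1 - p))[:, None])
        psi = psi + np.linalg.solve(info, score)
    return psi

def stage2_ps_regression(y, offset, a_pos, Z):
    D3 = np.column_stack([np.ones(len(y)), Z])
    psi = fit_logistic(D3, a_pos)
    s0 = 1 / (1 + np.exp(-(D3 @ psi)))                 # estimated S0 = E(A+ | Z)
    D2 = np.column_stack([np.ones(len(y)), a_pos, s0])
    alpha, *_ = np.linalg.lstsq(D2, y - offset, rcond=None)
    return alpha, psi

rng = np.random.default_rng(3)
n = 20000
Z = rng.normal(size=(n, 1))
a_pos = rng.binomial(1, 1 / (1 + np.exp(-(0.3 + Z[:, 0]))))
offset = a_pos * rng.normal(size=n)        # placeholder offset, zero when A+ = 0
y = 2.0 - 1.5 * a_pos + offset + 0.7 * Z[:, 0] + rng.normal(size=n)
alpha, psi = stage2_ps_regression(y, offset, a_pos, Z)
```

Adjusting linearly for \(S_0\) works in this example because \({\mathbb E}(A^+\mid S_0)=S_0\) is itself linear in \(S_0\) and the effect of \(A^+\) is constant.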

5.2.3 C. Inverse probability weighting (IPW)

We first consider a marginal structural model with the offset,

Then we define the estimating function

where \(D_4(A_i^+)=(1, A_i^+)'\). Letting \(\varPsi =(\varvec{\phi }', \varvec{\theta }', \varvec{\psi }')'\), we solve the joint estimating equations,
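A minimal sketch of method C: weighted least squares of \(Y-\tilde\eta_0\) on \(D_4=(1,A^+)'\) with weights \(A^+/e+(1-A^+)/(1-e)\). For brevity it uses the true propensity score, whereas the joint estimating equations above estimate \(\varvec{\psi}\); the simulated values are arbitrary.

```python
import numpy as np

def ipw_msm(y_star, a_pos, e):
    """Weighted least squares of (Y - offset) on D4 = (1, A+)' with inverse probability weights."""
    w = a_pos / e + (1 - a_pos) / (1 - e)
    D4 = np.column_stack([np.ones(len(y_star)), a_pos])
    sw = np.sqrt(w)
    phi, *_ = np.linalg.lstsq(D4 * sw[:, None], y_star * sw, rcond=None)
    return phi            # phi[0]: mean if unexposed; phi[1]: effect of A+

rng = np.random.default_rng(4)
n = 50000
z = rng.normal(size=n)
e = 1 / (1 + np.exp(-(0.3 + z)))          # true propensity score (used here for brevity)
a_pos = rng.binomial(1, e)
y_star = 2.0 - 1.5 * a_pos + 0.7 * z + rng.normal(size=n)   # plays the role of Y minus the offset
phi = ipw_msm(y_star, a_pos, e)
```

With \(D_4=(1,A^+)'\), the weighted fit is simply the difference of inverse-probability-weighted means in the exposed and unexposed groups.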

5.2.4 D. Augmented inverse probability weighting (AIPW)

The AIPW approach combines an outcome regression (OR) model and a propensity score (PS) model. AIPW estimators are doubly robust: they are consistent if either the outcome regression model or the propensity score model is correctly specified [28]. To obtain this estimator, we begin by specifying the estimating functions for the outcome regression model through the G-computation approach [29, 30].
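The double robustness property can be illustrated with a minimal sketch of the standard AIPW means, \(\hat\mu_1=n^{-1}\sum_i\{A_i^+Y_i/e_i-(A_i^+-e_i)m_{1i}/e_i\}\) and \(\hat\mu_0=n^{-1}\sum_i\{(1-A_i^+)Y_i/(1-e_i)+(A_i^+-e_i)m_{0i}/(1-e_i)\}\). Here the outcome stands in for \(Y-\tilde\eta_0\), the outcome regressions are linear fits within each arm, and all values are arbitrary; it is not the paper's full stacked system.

```python
import numpy as np

def aipw_means(y, a, e, m1, m0):
    """AIPW estimates of mu1 and mu0 from PS e and outcome-regression predictions m1, m0."""
    mu1 = np.mean(a * y / e - (a - e) / e * m1)
    mu0 = np.mean((1 - a) * y / (1 - e) + (a - e) / (1 - e) * m0)
    return mu1, mu0

def arm_predictions(z, y, mask):
    # Linear outcome regression of y on (1, z) within one arm, predicted for everyone
    D = np.column_stack([np.ones(mask.sum()), z[mask]])
    b, *_ = np.linalg.lstsq(D, y[mask], rcond=None)
    return b[0] + b[1] * z

rng = np.random.default_rng(5)
n = 50000
z = rng.normal(size=n)
e_true = 1 / (1 + np.exp(-(0.3 + z)))
a = rng.binomial(1, e_true)
y = 2.0 - 1.5 * a + 0.7 * z + rng.normal(size=n)      # plays the role of Y minus the offset
m1, m0 = arm_predictions(z, y, a == 1), arm_predictions(z, y, a == 0)

both_ok = aipw_means(y, a, e_true, m1, m0)
ps_wrong = aipw_means(y, a, np.full(n, 0.5), m1, m0)           # wrong PS, correct OR
or_wrong = aipw_means(y, a, e_true, np.zeros(n), np.zeros(n))   # correct PS, wrong OR
```

In all three cases \(\hat\mu_1-\hat\mu_0\) should be close to \(-1.5\); misspecifying both models would generally not be.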

We consider

Then the AIPW estimators \({\hat{\mu }}_1\) and \({\hat{\mu }}_0\) are obtained by solving the estimating equations \(\sum _{i=1}^n U_{i9}(\varvec{\theta },\varvec{\psi }, \mu _1)=0\) and \(\sum _{i=1}^n U_{i,10}(\varvec{\theta },\varvec{\psi }, \mu _0)=0\), where

and

Letting \(\varPsi =(\varvec{\theta }', \varvec{\psi }', \varvec{\lambda }', \varvec{\mu }')'\), we solve the joint estimating equations \(\sum _{i=1}^n {U}_i(\varPsi )={\varvec{0}}\), where

From standard estimating function theory, we have

where \({\mathcal {A}}(\varPsi )=E\{-\partial {U}_i(\varPsi )/\partial \varPsi '\}\) and \({\mathcal {B}}(\varPsi )=E\{{U}_i(\varPsi ){U}_i'(\varPsi )\}\), and the expectation is taken with respect to the true distribution. In practice, we use sample averages to estimate \({\mathcal {A}}(\varPsi )\) and \({\mathcal {B}}(\varPsi )\), and evaluate the resulting expressions at the estimates to obtain \({\mathcal {A}}^{-1}({\hat{\varPsi }}){\mathcal {B}}({\hat{\varPsi }})[{\mathcal {A}}^{-1}({\hat{\varPsi }})]' \;,\) where

and
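The sandwich variance described above can be sketched generically for any stacked estimating function. The sketch below returns \({\mathcal A}^{-1}{\mathcal B}[{\mathcal A}^{-1}]'/n\), the estimated covariance of \(\hat\varPsi\) itself, and illustrates it with the least-squares estimating function \(U_i=x_i(y_i-x_i'\beta)\); the function name is hypothetical.

```python
import numpy as np

def sandwich_cov(U, dU):
    """Sandwich covariance of Psi-hat from stacked estimating functions.

    U:  (n, q) array, row i = U_i(Psi-hat).
    dU: (n, q, q) array, slice i = dU_i(Psi)/dPsi' evaluated at Psi-hat.
    """
    n = U.shape[0]
    A = -dU.mean(axis=0)                 # sample analogue of E{-dU_i/dPsi'}
    B = U.T @ U / n                      # sample analogue of E{U_i U_i'}
    A_inv = np.linalg.inv(A)
    return A_inv @ B @ A_inv.T / n

# Example: least-squares estimating function with heteroscedastic errors
rng = np.random.default_rng(6)
n = 500
X = np.column_stack([np.ones(n), rng.normal(size=n)])
y = X @ np.array([1.0, 2.0]) + rng.normal(size=n) * (1 + np.abs(X[:, 1]))
beta = np.linalg.lstsq(X, y, rcond=None)[0]
U = X * (y - X @ beta)[:, None]
dU = -np.einsum("ij,ik->ijk", X, X)      # derivative of x_i (y_i - x_i' beta) w.r.t. beta'
cov = sandwich_cov(U, dU)
```

For the two-stage estimators, \(U_i\) would stack the stage I, propensity score, and stage II estimating functions, so the resulting variance reflects the estimation of \(\varvec{\theta}\) and \(\varvec{\psi}\).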

5.3 Simulation Studies on the Two-Stage Approach

Here, we evaluate the performance of the two-stage approach for the analysis of a bivariate semi-continuous exposure under the data-generating process of Sect. 4.3.2. We consider stage II estimators based on various combinations of methods in stages I and II. In stage I, we use confounder regression (5.2) and propensity score adjustment (5.3); in stage II, we use methods A) to D) of Sect. 5.2. Table 1 shows that when all models are correctly specified, the empirical bias is negligible for all methods; IPW in stage II yields the estimates with the largest standard errors, and AIPW improves efficiency, as expected.

Table 2 displays the relative bias and empirical standard error of the stage II estimators when models are misspecified in stage II. To induce misspecification, the confounders are transformed as \(V_1 = \exp (Z_1/2)+Z_2\;, V_2 = Z_2/(1+\exp (Z_1))+10\;, V_3 = (Z_1Z_2/10 +0.6)^2\), and \({\varvec{V}}=(V_1, V_2, V_3)'\) is used in place of \({\varvec{Z}}=(Z_1, Z_2, Z_3)'\) in stage II. If either the outcome regression (OR) model or the propensity score (PS) model is misspecified in stage II, the methods that rely on that model give biased estimates. The AIPW estimator exhibits double robustness, remaining nearly unbiased when only one of the two models is misspecified. When both models are misspecified in stage II, the AIPW estimator has a large finite-sample bias and a high standard error.
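The covariate transformation used to induce misspecification can be written directly from the formulas above. The sketch follows them literally, so \(Z_3\) does not enter \({\varvec{V}}\); the function name is hypothetical.

```python
import numpy as np

def transform_confounders(Z):
    """Map Z = (Z1, Z2, Z3) to V = (V1, V2, V3) using the transformations in the text."""
    Z1, Z2 = Z[:, 0], Z[:, 1]
    V1 = np.exp(Z1 / 2) + Z2
    V2 = Z2 / (1 + np.exp(Z1)) + 10
    V3 = (Z1 * Z2 / 10 + 0.6) ** 2
    return np.column_stack([V1, V2, V3])

rng = np.random.default_rng(8)
V = transform_confounders(rng.normal(size=(500, 3)))
```

Models that are linear in \({\varvec{V}}\) are then misspecified with respect to the true models, which are linear in \({\varvec{Z}}\).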

6 Prenatal Alcohol Exposure and Cognition

Here, we consider a large prospective cohort study of 480 inner-city pregnant African-American mothers recruited between September 1986 and April 1989 [12]. Quantitative measures of alcohol consumption during pregnancy were obtained at each maternal prenatal visit using a timeline follow-back interview [31] and converted to three common metrics: the number of standard drinks per drinking occasion, frequency of drinking (days/month), and average ounces of absolute alcohol per day (oz AA/day). Additional variables collected included socioeconomic status (SES), maternal age, maternal years of education, marital status, and family income; maternal smoking (cigarettes/day) and marijuana, cocaine, and other illicit drug use (days/month); maternal history of alcohol abuse or dependence; and the child’s sex, race, and whether s/he was raised by his/her biological mother and/or was in foster care; see Table D1 in Online Appendix D. The mothers and their children were followed for 19–21 years postpartum, with a retention rate of 79.0% (\(N=377\)). A variety of cognitive tests were administered to each child to assess different facets of cognitive function. The results were synthesized by fitting a second-order confirmatory factor analysis, giving a composite measure of cognitive function [32], which we adopt as the response, Y, in the analyses that follow.

Among the 377 mothers in this analysis, 16.2% reported no alcohol consumption during pregnancy, so the alcohol exposure variables are semi-continuous. For mothers who drank alcohol during pregnancy, the two main exposures (oz of absolute alcohol per drinking occasion and the proportion of drinking days during pregnancy) were log-transformed and centered. We evaluate the causal effects in four ways, as discussed in the previous sections: a subgroup analysis of drinkers; analyses based on the bivariate semi-continuous exposure using conventional regression adjustment and the two-part model; and a full sample analysis with the two-stage approach. Our aim is to evaluate the causal effects of any drinking, the two main exposures, and their interaction on child cognitive function while controlling for the measures listed in Table 3.

6.1 Subgroup Analysis Involving Drinkers

We first restrict attention to drinkers and focus on the causal effects of alcohol exposure on children’s cognition. Let \(A_1\) represent the number of standard drinks per drinking occasion, and \(A_2\) represent the proportion of days in each month on which the mother drank (days/month); we refer to these as the intensity and frequency of drinking, respectively. If \(A_i^+ = {\mathbb {I}}(A_{i1}A_{i2} > 0)\) indicates any consumption of alcohol, then to conduct the analyses among drinkers, we consider the regression model

where \(L_{ik}=A_i^+ \{\log A_{ik}- {\mathbb {E}}(\log A_{ik} \mid A_i^+=1)\}\). When using propensity score regression adjustment, we consider

where \({\varvec{S}}_i=(S_{i1}, S_{i2}, S_{i3})'\), with \(S_{ik}={\mathbb {E}}(L_{ik} \mid A_i^+=1, {\varvec{Z}}_i)\), \(k=1, 2\), \(S_{i3}={\mathbb {E}}(L_{i1}L_{i2} \mid A_i^+=1, {\varvec{Z}}_i)\). The estimated effects of each confounder are given in columns 3-5 of Table 3 for each of the respective models.

6.2 Analyses Based on the Bivariate Semi-continuous Exposure Model

The conventional regression model adjusted by all the confounders is fitted as

with \({\varvec{Z}}_i\) the set of confounders. Using a two-part model for the generalized propensity score as in [24], the regression model adjusted by generalized propensity score is

where as in (4.5), \({\varvec{S}}_i=(\pi _{i1}({\varvec{Z}}_i), {\mathbb {E}}(L_{i1} \mid {\varvec{Z}}_i), {\mathbb {E}}(L_{i2} \mid {\varvec{Z}}_i), {\mathbb {E}}(L_{i1}L_{i2} \mid {\varvec{Z}}_i))'\) with

The results are shown in Table 3.

The inverse density weighting approach to estimating causal effects involves solving (4.7) with stabilized weights. When estimating the conditional joint density of the two exposures, we assume that they follow a bivariate normal distribution.

6.3 Full Sample Analysis with the Two-Stage Approach

We also address the effects of confounding variables through the two-stage approach of Sect. 5. In stage I, we define the offset from the subgroup analysis of drinkers, using estimates obtained from (6.1) or (6.2). Let \({\tilde{\eta }}_i={\hat{\alpha }}_1 L_{i1}+{\hat{\alpha }}_2 L_{i2}+{\hat{\alpha }}_3 L_{i1}L_{i2}\) be the offset in stage II; we then use regression adjustment with covariates, regression adjustment with the propensity score, IPW, and AIPW to estimate the effect of being a drinker.

6.4 Results

We assess the covariate balance using Pearson correlation [23, 33]; see Table D2 in Online Appendix D. The Pearson correlations were computed between each exposure and each covariate in Table 3 before generalized propensity score adjustment or weighting adjustment. To evaluate the performance of each approach, we analyzed the covariate balance after conditioning on the generalized propensity score or applying inverse probability/density weighting. All the methods described above substantially reduce the maximum absolute correlation and average absolute correlation compared to the conventional regression approach shown in the third row of Table 3, indicating improvement of covariate balance.
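One way to compute such balance diagnostics is sketched below: the Pearson correlation between an exposure and each covariate, optionally weighted by inverse probability or density weights, summarized by the maximum and average absolute correlation. This is an illustrative sketch, not necessarily the exact computation behind Table D2; the data are simulated and the names hypothetical.

```python
import numpy as np

def weighted_corr(x, z, w=None):
    """Pearson correlation of x and z, optionally weighted (e.g. by inverse density weights)."""
    x = np.asarray(x, dtype=float)
    z = np.asarray(z, dtype=float)
    w = np.ones_like(x) if w is None else np.asarray(w, dtype=float)
    w = w / w.sum()
    dx, dz = x - np.sum(w * x), z - np.sum(w * z)
    return np.sum(w * dx * dz) / np.sqrt(np.sum(w * dx ** 2) * np.sum(w * dz ** 2))

def balance_summary(exposure, Z, w=None):
    """Maximum and average absolute correlation between one exposure and each covariate."""
    r = np.abs([weighted_corr(exposure, Z[:, j], w) for j in range(Z.shape[1])])
    return r.max(), r.mean()

rng = np.random.default_rng(7)
Z = rng.normal(size=(300, 4))
exposure = Z @ np.array([0.5, -0.3, 0.0, 0.2]) + rng.normal(size=300)
max_abs, mean_abs = balance_summary(exposure, Z)
```

Comparing the summary with `w=None` and with estimated weights shows how much weighting reduces the exposure-covariate association.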

In the full sample analysis with the two-part model, regression with generalized propensity score adjustment reduced covariate imbalance more effectively than inverse density weighting. In the full sample analysis with the two-stage approach, the propensity score-based methods in stage II (e.g., IPW) achieved better covariate balance than regression adjustment. Overall, each method improved covariate balance, and which method performed best depended on the analysis.

Table 4 presents the causal effects estimated from both the subgroup analysis and the full sample analyses with the various methods. All estimated effects are negative, including the effect of drinking at the reference level versus not drinking, and the effects of prenatal absolute alcohol exposure per occasion, the proportion of drinking days, and their interaction, confirming that alcohol consumption by expectant mothers has a negative effect on children’s cognitive development. For the subgroup analysis among drinkers, the regression with covariate adjustment and the regression adjusted by generalized propensity scores give very similar results: the child’s cognitive function score decreases as the mother increases the amount of alcohol consumed per drinking occasion or increases her drinking frequency. The interaction term is negative, so the adverse effect of increasing drinking intensity on each occasion is greater when drinking during pregnancy is more frequent, and vice versa. For the full sample analysis, conventional regression adjustment and the regression adjusted by the two-part generalized propensity score also give similar estimates, except for the drinker indicator. The inverse density weighting approach gives a larger effect of alcohol exposure per occasion and smaller effects of drinking frequency and the interaction than the regression methods. In the full sample analysis with the two-stage approach, the effect of drinking estimated by the propensity score-based methods in stage II (regression with propensity score adjustment, IPW, and AIPW) is larger than the estimate from the regression approaches. The IPW approach in stage II has the largest standard error among all the methods, whereas the AIPW approach gives a similar but more precise estimate of the causal effect.

We constructed a dose–response surface from the stage I exposure estimates, obtained using a regression model with generalized propensity score adjustment in the two-stage approach. The resulting surface is shown in the left panel of Fig. 5. The reference level of a child’s cognition corresponds to a mother with average drinking intensity and frequency, that is, 1.81 oz absolute alcohol per occasion and drinking on approximately 4 days per month (a proportion of 0.13). The x-axis and y-axis show increments in drinking frequency and intensity, respectively. The surface shows the expected reduction in the child’s cognitive function as drinking frequency and intensity increase. The right panel of Fig. 5 displays a contour plot of the change in cognition associated with different combinations of changes in drinking intensity and frequency. For instance, drinking on approximately 9 more days per month than at the reference level (an increase of 0.3 in the proportion of drinking days) at the same intensity, or on 3 more days per month while also increasing the dose by 2 oz per drinking occasion, would each result in a 4-unit decrease in the child’s cognitive function score.
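A surface like the one in Fig. 5 can be computed from the stage I coefficients. The sketch assumes the reference corresponds to \(L_1=L_2=0\), so that \(L_k=\log a_k-\log a_k^{\text{ref}}\), and uses placeholder coefficients, not the estimates in Table 4; the function name is hypothetical.

```python
import numpy as np

def cognition_change(a1, a2, a1_ref, a2_ref, alpha):
    """Predicted change in cognition relative to the reference exposure level.

    Assumes the stage I surface alpha1*L1 + alpha2*L2 + alpha3*L1*L2 with
    L_k = log(a_k) - log(a_k_ref), i.e. the reference sits at L1 = L2 = 0.
    """
    l1 = np.log(a1) - np.log(a1_ref)        # intensity (oz AA per occasion)
    l2 = np.log(a2) - np.log(a2_ref)        # frequency (proportion of drinking days)
    return alpha[0] * l1 + alpha[1] * l2 + alpha[2] * l1 * l2

alpha = (-1.0, -2.0, -0.5)                  # placeholder coefficients for illustration only
ref_oz, ref_prop = 1.81, 0.13               # reference levels quoted in the text
d_oz, d_prop = np.meshgrid(np.linspace(0, 4, 41), np.linspace(0, 0.5, 51))
surface = cognition_change(ref_oz + d_oz, ref_prop + d_prop, ref_oz, ref_prop, alpha)
# surface[i, j] can be passed to any contour-plotting routine
```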

7 Discussion

In this article, we describe methods for assessing the effect of two or more exposure variables on a continuous response. Confounding is addressed through the use of a generalized propensity score. In this setting, the generalized propensity score is based on the joint density of exposures and in particular is the vector of linear functions of confounders necessary to construct this joint density. For inverse density weighting, we stabilize the weights via the marginal joint density of the exposures. A key finding is that when confounders affect the joint distribution of the exposure variables, they must be accounted for in the propensity score modeling to ensure consistent estimation and valid inference regarding the interactions between the exposures. Regression adjustment appears to be less sensitive to misspecification of the dependence structure and omission of the second-order effects of confounders, and moreover, it leads to more precise estimation of causal effects. We did not consider matching or stratification on the generalized propensity score but these represent alternative approaches for dealing with confounders [34].

Note that much of the literature on causal analysis is built on the concept of potential outcomes [35, 36] for each unit, here denoted by \(\{Y({\varvec{a}}): {\varvec{a}} \in {\mathcal {A}}\}\), where \({\mathcal {A}}\) may be a multidimensional space of possible exposures. We do not make use of potential outcomes in this paper, but our methods could be reformulated and formalized within that framework.

References

Rosenbaum PR, Rubin DB (1983) The central role of the propensity score in observational studies for causal effects. Biometrika 70(1):41–55

Imbens GW (2000) The role of the propensity score in estimating dose-response functions. Biometrika 87(3):706–710

Hirano K, Imbens GW (2004) The propensity score with continuous treatments. Appl Bayesian Model Causal Inference Incomplete-Data Perspect 226164:73–84

Dominici F, Peng RD, Barr CD, Bell ML (2010) Protecting human health from air pollution: shifting from a single-pollutant to a multi-pollutant approach. Epidemiology 21(2):187

Duell EJ (2012) Epidemiology and potential mechanisms of tobacco smoking and heavy alcohol consumption in pancreatic cancer. Mol Carcinog 51(1):40–52

Suzuki T, Wakai K, Matsuo K, Hirose K, Ito H, Kuriki K, Sato S, Ueda R, Hasegawa Y, Tajima K (2006) Effect of dietary antioxidants and risk of oral, pharyngeal and laryngeal squamous cell carcinoma according to smoking and drinking habits. Cancer Sci 97(8):760–767

Beelen R, Hoek G, van den Brandt PA, Goldbohm RA, Fischer P, Schouten LJ, Armstrong B, Brunekreef B (2008) Long-term exposure to traffic-related air pollution and lung cancer risk. Epidemiology 19:702–710

Vegetabile BG, Gillen DL, Stern HS (2020) Optimally balanced gaussian process propensity scores for estimating treatment effects. J R Stat Soc Ser A 183(1):355–377

Williams JR, Crespi CM (2020a) Causal inference for multiple continuous exposures via the multivariate generalized propensity score. arXiv:2008.13767

Chen J, Zhou Y (2023) Causal effect estimation for multivariate continuous treatments. Biometr J. https://doi.org/10.1002/bimj.202200122

Di Credico G, Edefonti V, Polesel J, Pauli F, Torelli N, Serraino D, Negri E, Luce D, Stucker I, Matsuo K et al (2019) Joint effects of intensity and duration of cigarette smoking on the risk of head and neck cancer: a bivariate spline model approach. Oral Oncol 94:47–57

Jacobson SW, Jacobson JL, Sokol RJ, Chiodo LM, Corobana R (2004) Maternal age, alcohol abuse history, and quality of parenting as moderators of the effects of prenatal alcohol exposure on 7.5-year intellectual function. Alcoholism 28(11):1732–1745

Kodituwakku P (2007) Defining the behavioral phenotype in children with fetal alcohol spectrum disorders: a review. Neurosci Biobehav Rev 31(2):192–201

Lewis CE, Thomas KG, Dodge NC, Molteno CD, Meintjes EM, Jacobson JL, Jacobson SW (2015) Verbal learning and memory impairment in children with fetal alcohol spectrum disorders. Alcoholism 39(4):724–732

Jacobson JL, Dodge NC, Burden MJ, Klorman R, Jacobson SW (2011) Number processing in adolescents with prenatal alcohol exposure and ADHD: differences in the neurobehavioral phenotype. Alcoholism 35(3):431–442

Jacobson JL, Jacobson SW, Sokol RJ, Martier SS, Ager JW, Kaplan-Estrin MG (1993) Teratogenic effects of alcohol on infant development. Alcoholism 17(1):174–183

Imai K, Van Dyk DA (2004) Causal inference with general treatment regimes: generalizing the propensity score. J Am Stat Assoc 99(467):854–866

Lipsitz SR, Laird NM, Harrington DP (1991) Generalized estimating equations for correlated binary data: using the odds ratio as a measure of association. Biometrika 78(1):153–160

Hernán MA, Robins JM (2010) Causal inference

White H (1982) Maximum likelihood estimation of misspecified models. Econometrica 1–25

Rotnitzky A, Wypij D (1994) A note on the bias of estimators with missing data. Biometrics 1163–1170

Yule GU (1912) On the methods of measuring association between two attributes. J R Stat Soc 75(6):579–652

Williams JR, Crespi CM (2020b) Causal inference for multiple continuous exposures via the multivariate generalized propensity score. arXiv:2008.13767

Hocagil TA, Cook RJ, Jacobson SW, Jacobson JL, Ryan LM (2021) Propensity score analysis for a semi-continuous exposure variable: a study of gestational alcohol exposure and childhood cognition. J R Stat Soc 184:1390–1413

Robins JM (2000) Marginal structural models versus structural nested models as tools for causal inference. In: Statistical models in epidemiology, the environment, and clinical trials. Springer, New York, pp 95–133

Zhao LP, Prentice RL (1990) Correlated binary regression using a quadratic exponential model. Biometrika 77(3):642–648

Kalema G, Molenberghs G, Kassahun W (2016) Second-order generalized estimating equations for correlated count data. Comput Stat 31:749–770

Scharfstein DO, Rotnitzky A, Robins JM (1999) Adjusting for nonignorable drop-out using semiparametric nonresponse models. J Am Stat Assoc 94(448):1096–1120

Snowden JM, Rose S, Mortimer KM (2011) Implementation of g-computation on a simulated data set: demonstration of a causal inference technique. Am J Epidemiol 173(7):731–738

Williamson EJ, Forbes A, Wolfe R (2012) Doubly robust estimators of causal exposure effects with missing data in the outcome, exposure or a confounder. Stat Med 31(30):4382–4400

Jacobson SW, Chiodo LM, Sokol RJ, Jacobson JL (2002) Validity of maternal report of prenatal alcohol, cocaine, and smoking in relation to neurobehavioral outcome. Pediatrics 109(5):815–825

Jacobson JL (2022) Effects of prenatal alcohol exposure on cognitive development: a dose-response analysis. Under review

Austin PC (2019) Assessing covariate balance when using the generalized propensity score with quantitative or continuous exposures. Stat Methods Med Res 28(5):1365–1377

Wu X, Mealli F, Kioumourtzoglou MA, Dominici F, Braun D (2022) Matching on generalized propensity scores with continuous exposures. J Am Stat Assoc 1–29

Splawa-Neyman J, Dabrowska DM, Speed TP (1990) On the application of probability theory to agricultural experiments. Essay on principles. Section 9. Stat Sci 465–472

Rubin DB (1974) Estimating causal effects of treatments in randomized and nonrandomized studies. J Educ Psychol 66(5):688

Prentice RL, Zhao LP (1991) Estimating equations for parameters in means and covariances of multivariate discrete and continuous responses. Biometrics 825–839

Acknowledgements

We thank Neil Dodge, Ph.D., for data management support. This research was funded by grants to Sandra W. Jacobson and Joseph L. Jacobson from the National Institutes of Health/National Institute on Alcohol Abuse and Alcoholism (NIH/NIAAA; R01-AA025905) and the Lycaki-Young Fund from the State of Michigan. Richard J. Cook was supported by the Natural Sciences and Engineering Research Council of Canada through grants RGPIN 155849 and RGPIN 04207. Louise Ryan was supported by the Australian Research Council Centre of Excellence for Mathematical and Statistical Frontiers (ACEMS) CE140100049. Data collection for the Detroit Longitudinal Study was supported by grants from NIH/NIAAA (R01-AA06966, R01-AA09524, and P50-AA07606) and NIH/National Institute on Drug Abuse (R21-DA021034).


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, K., Akkaya-Hocagil, T., Cook, R.J. et al. Use of Generalized Propensity Scores for Assessing Effects of Multiple Exposures. Stat Biosci 16, 347–376 (2024). https://doi.org/10.1007/s12561-023-09403-8


DOI: https://doi.org/10.1007/s12561-023-09403-8