Abstract

The aim of this study is to present a new regularized extreme learning machine (ELM) algorithm that can perform variable selection based on the simultaneous use of both ridge and Liu regressions in order to cope with some disadvantages of ELM and its variants such as instability and poor generalization performance and lack of sparsity. The proposed algorithm was compared with the classical ELM as well as the variants based on ridge, Liu, Lasso and Elastic Net approaches by cross-validation process and best tuning parameter over seven different real-world applications and their performances were presented comparatively. The proposed algorithm outperformed ridge, Lasso and Elastic Net algorithms in training performance prediction (average 40%) and stability (average 80%) and in test performance prediction (average 20%) and stability (60%) in the majority of the data. In addition, the proposed ELM was found to be more compact (better sparsity capability) with lower norm values. The results confirmed that the proposed ELM presents more stable and sparse solutions with better generalization performance than any other algorithm under favorable conditions. The findings based on experimental study via real-world applications indicate that the proposed ELM provides effective solutions to the mentioned drawbacks and yields more stable and sparse performance with better generalization capability than its competitors. Consequently, the proposed algorithm represents a powerful alternative both regression and classification tasks in machine learning field due to its theoretical flexibility.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Recent developments in information retrieving field (particularly machine learning) have heightened the need for faster, reliable and generalizable algorithms. Obviously, artificial neural networks is the leading field to achieve these purposes due to the capabilities on modelling complex problem, adaptability, speed and flexibility. Although the training algorithms like back-propagation (BP) are the core components on the performance of any neural network, they have some shortcomings such as local optima. Additionally, these algorithms may cause higher computation-cost because of the need of extensive parameter tuning like weights, biases, momentum, and learning rate. As a solution to deal with these issues, extreme learning machine (ELM) was proposed by Huanget al. [1, 2].

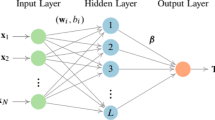

ELM is a single layer feed-forward neural network (SLFN) as an alternative to the conventional learning algorithms (like BP) by considering a simple but elegant idea into the training process of the network. This idea is based on assigning some parameters including the input weights and biases randomly and reducing the problem to a simple linear system which can be obtained via ordinary least squares (OLS) method. ELM has obtained a large and growing attention due to some useful properties: (i) to have a closed-form solution which can be found via an OLS solution, (ii) to eliminate parameter tuning like input weights, biases, momentum, and learning rate. because of the random assignment procedure, (iii) to provide successful results for both regression and classification tasks, (iv) to present faster performance unlike its competitors (i.e., BP), (v) to need less human intervention during training process. Over the past decade, ELM has received major scientific attention as a powerful instrument for modelling various types of problems in supervised, unsupervised and semi-supervised learning.

ELM has some advantages not only from practical capabilities but also theoretical properties including interpolation, universal approximation capability, and generalizability[1, 2]. In addition to these properties, ELM can be used both for regression and classification tasks in real-world applications. Although ELM has been considered in many fields, it has drawbacks like the instability, poor generalizability and insufficient sparsity due to the structural risk minimization which is the key concept of ELM’s learning process. The structure of the hidden layer matrix of ELM seems to be the main reason of these drawbacks. If the hidden layer matrix suffers from the ill-conditioning problem, the performance of ELM is dramatically affected with the non-optimal solution; that is, the ELM solution may have a large variance and the distance from the ELM estimates to the true values may be large. As a result of ill-conditioning, as opposed to Huang et al. [3], ELM does not have the smallest training error and the smallest norm of output weights. The natural result of this particular problem is to produce any solution other than ordinary ELM method.

A number of studies have been carried out to explore the effects of these drawbacks, and attempts have been made to improve ELM to deal with these drawbacks. In the linear regression field, ridge estimator proposed by Hoerl andKennard [4] is one of the most well-known methods to overcome the ill-conditioning problem. Therefore, Deng et al. [5], Li and Niu [6], Huang and Sun [7], Shao and Er [8], Chen and Wang [9], Yu et al. [10], Wang and Li [11], Yıldırım and Özkale [12], and Luo et al. [13] proposed some alternative algorithms based on the ridge estimator to take advantage of its ability to deal with the ill-conditioning problem, and showed that it generally contributes positively to the performance of the ELM in practical applications. Although ridge estimators is the most used shrinkage method in the literature, Liu estimator proposed by Keijian [14] is considered as a powerful alternative to ridge estimator. Because the ridge estimator is not linearly dependent, while the Liu estimator is linearly dependent on the tuning parameter which makes it easier to calculate the Liu estimator than the ridge estimator. In the context of ELM, Yıldırım and Özkale [15] proposed ELM based on Liu estimator with various tuning parameter selection techniques and proved that Liu estimator makes the ELM performance more stable and generalizable.

In addition to ill-conditioning problem, sparsity is another problem. The sparsity is critical property of any algorithm from the perspective of speed and compactness which is simply refers to a matrix of numbers (i.e., matrix of predictors in linear regression) that includes many zeros or values that will not significantly impact the calculation. However, ELM does not have the sparsity property and its variants based on ridge or Liu estimator are not the solutions at this point. Since Lasso regression proposed by Tibshirani [16] in linear regression is a remedy to provide sparse solutions by shrinking some of regression coefficients to exactly zero, Miche et al.[17, 18], Martínez-Martínez et al. [19], Luo et al. [13], Shan et al. [20], Li et al. [21] and Preeti et al. [22] developed ELM-based Lasso algorithms to improve the ELM performance via pruning the irrelevant nodes. Although Lasso enjoys sparsity, it selects as many predictors (p) as the maximum number of observations (n) in the \(p>n\) case, it does not have grouping effect and its performance is dominated by ridge regression if there exists high correlation between predictors. Because of these reasons, Zou and Hastie [23] introduced Elastic Net (ENet) in linear regression. Martínez-Martínez et al. [19] considered the ENet approach which can be seen as the cascade of the ridge and Lasso estimators into the learning process of ELM. Yıldırım and Özkale [24] proposed a novel regularized algorithm called as LL-ELM based on both Liu and Lasso regressions as alternative to ENet.

From a statistical point of view, Liu and ridge estimators have their own characteristics in shrinking the hidden layer structure. They have different objective functions which affect their ability to learn the inherent patterns in the data. Therefore, ridge and Liu-based ELM algorithms act differently, affecting generalization performance [15].

Research Motivation and Enthusiasm

The motivation of this study is based on the idea that a new regularized ELM algorithm with sparsity property can be developed by simultaneously taking advantage of the different properties of the ridge and Liu estimators. Influenced with this idea, we propose a novel regularized ELM algorithm based on the combination of Liu, ridge and Lasso regressions which we call as GO-ELM. The GO-ELM has following major contributions into the existing literature:

-

GO-ELM can easily deal with the multicollinearity problem (i.e., the ill-conditioning).

-

GO-ELM has the sparsity property as a result of \(L_{1}\) norm regularization and may provide more compact and better generalization performance.

-

GO-ELM carries the advantages of ridge and Liu regressions since it carries the shrinkage idea of both ridgeand Liu.

-

GO-ELM has the grouping effect as ENet so that it overcomes Lasso which selects one predictor from the highly correlated group.

-

Although GO-ELM has the sparsity feature like ENet and Lasso, the shrinkage effect is different from ENet and Lasso. Such that while ENet and Lasso shrink the weights to the origin, GO-ELM shrinks to \(d\varvec{\hat{\beta }}_{ELM}\), \(0<d<1\).

-

GO-ELM can be extended to the classification problems. This property makes it usable for various kinds of data-driven machine learning problems.

Review of ELM and Its Variants

ELM is based on a classical SLFN but differs from the point that during the training process, the parameters like input weights and biases in the hidden layer are selected arbitrarily unlike the iterative process in any other neural network. As a result of fixed parameters, the learning problem (classification or regression) is reduced to a system which consists of a series of linear equations. For any linear system, OLS method can be used to find the solution. A SLFN can be written as

where \(\left( \textbf{x}_{j}^{T},\textbf{y}_{j}^{T}\right) \) is the set of N distinct patterns with \(\textbf{x}_{j}\in R^{p}\) and \(\textbf{y}_{j}\in R^{m}\), \(\textbf{y}_{j}\) is the m-dimensional network output, \(\varvec{\omega }_{i}\) is the input weight between the i-th hidden neuron and the hidden layer, \(b_{i}\) is the bias, \(\varphi \) is the number of hidden neurons, \(g\left( .\right) \) is the activation function and \(\varvec{\beta }_{i}\) is the outputweights [1, 2].

Equation (1) can be written in a matrix form as

where

is the output matrix of hidden layer, \(\varvec{\beta }_{ \varphi \times m }=\left( \beta _{1},...,\beta _{\varphi }\right) ^{T}\) and \(\varvec{Y}_{ N\times m }=\left( \textbf{y}_{1},...,\textbf{y}_{N}\right) ^{T}\) are the output weight vector and output value vector, respectively. Here, m corresponds to the number of output layer neurons which is commonly equal to the number of target variable and fixed as 1 in most practical applications. To estimate the \(\beta \) parameter, the objective form of Eq. (2) is defined as:

The \(\varvec{\beta }\) estimator minimizing Eq. (3) is obtained as \(\widehat{\varvec{\beta }}_{ELM}=\textbf{S}^{+}\textbf{Y}\) where \(\textbf{S}^{+}\) is the Moore-Penrose inverse of matrix \(\textbf{S}\) [2]. Some popular ways of calculating the Moore-Penrose inverse are the orthogonal projection method, iterative methods and singular value decomposition [25, 26]. According to the orthogonal projection method, \(\textbf{S}^{+}\) is calculated via \(\textbf{S}^{T}\left( \textbf{SS}^{T}\right) ^{-1}\) if \(\textbf{S}\) is full row rank, else \(\textbf{S}^{+}=\left( \textbf{S}^{T}\textbf{S}\right) ^{-1}\textbf{S}^{T}\) if \(\textbf{S}\) is full column rank.

When there is a multicollinearity problem, inverting the matrix \(\left( \textbf{S}^{T}\textbf{S}\right) ^{-1}\) can sometimes be impossible and sometimes unstable. Therefore, alternative methods to ordinary ELM are recommended. On the other hand, ordinary ELM does not have sparsity property; that is, does not do variable selection. The methods proposed on the basis of ELM as a solution to these two problems are as follows:

Li and Niu [6] considered ridge regression in the context of ELM which was originally proposed by Hoerl and Kennard [4] and defined the ridge-ELM as

where k is the ridge tuning parameter and \(\textbf{I}_{\varphi }\) is the identity matrix with dimension \(\varphi \). Although the value of k affects the performance of R-ELM, there is no single best and suitable method for selecting the ridge tuning parameter. Regression studies literature [6, 27] indicates that R-ELM can provide smaller error than ELM if k is correctly determined.

Yıldırım and Özkale [15] considered the Liu regression in the context of ELM which was originally defined by Kejian [14] and defined the Liu-ELM as

where \(0<\) \(d<1\) is the Liu tuning parameter which shrinks each element of \(\varvec{\hat{\beta }}_{ELM}\) by the same d value. Since \(\varvec{\hat{\beta }}_{Liu-ELM}^{d}\) is in linear form of d, computing \(\varvec{\hat{\beta }}_{Liu-ELM}^{d}\) is much easier and faster than \(\varvec{\hat{\beta }}_{R-ELM}^{k}\) which provides computationally effective and cost minimized solutions for machine learning. \(\varvec{\hat{\beta }}_{Liu-ELM}^{d}\) is also a convex combination of \(\varvec{\hat{\beta }}_{ELM}\) and \(\varvec{\hat{\beta }}_{R-ELM}^{k}\) for k = 1 which claims that \(\varvec{\hat{\beta }}_{Liu-ELM}^{d}\) provides solution paths between \(\varvec{\hat{\beta }}_{ELM}\) and \(\varvec{\hat{\beta }}_{R}^{(k=1)}\) as d goes from1 to 0:

Yıldırım and Özkale [28] proposed OK-ELM which takes the advantages of ridge and Liu regressions as

where \(0<d<1\) and \(k>0\) are the tuning parameters. OK-ELM is a convex combination of R-ELM and ELM:

where d serves as a balance parameter between ELM and R-ELM and refers the relative contributions of them. Basically, while the higher d towards to 1 yields more contribution in favor of ELM, the lower d increases the effect of R-ELM to the solution.

In order to gain the sparsity property to ELM, Miche et al. [17] and Martínez-Martínez et al. [19] defined ELM based on Lasso (\(\varvec{\hat{\beta }}_{Lasso-ELM}\)) as the solution of the objective function

The main idea behind \(Lasso-ELM\) is to select the nodes which minimize the error by shrinking some of the nodes to exactly zero. The sparsity property provides (i) compactness, (ii) stability, (iii) generalization ability, (iv) interpretability and (v) speed. The solution of \(Lasso-ELM\) can be obtained via LARS-EN algorithm [23] or algorithm of Sjöstrandet al. [29] which is based on the LARS algorithm [30] and the algorithm with piecewise linear regularization path proposed by Rosset and Zhu [31]. The details of these algorithms can be found in Sjöstrand et al. [29].

Although Lasso does shrinkage and automatic variable selection simultaneously, it has disadvantages in linear regression. Similarly, in ELM-based studies, Lasso-ELM has same disadvantages. Therefore, Miche et al. [17] proposed ELM based on ENet which has the grouping effect and simultaneously the shrinkage and automatic variable selection properties and has the objective function

To solve the ENet-ELM, Miche et al. [17] and Luo et al. [13] applied the LARS-EN algorithm.

The Proposed Algorithm: GO-ELM

An algorithm holding the sparsity property can easily outperform any other algorithm in terms of speed, compactness and generalization performance. To gain OK-ELM the sparsity capability, we propose a new algorithm called GO-ELM which has triple shrinkage. The first two shrinkage effects are same with those of ridge and Lasso as in ENet and the third shrinkage factor makes the result closer to the real value than ENet. We define the objective function of the GO-ELM as:

In the proposed approach, the characteristic features of both ridge and Liu estimators to handle the multicollinearity problem occurring in the hidden layer of output matrix, are considered in learning process of algorithm. Additionally, the sparsity capability has been added via \(L_{1}\)-norm simultaneously with ridge and Liu estimators.

The objective function of GO-ELM can be written in augmented form given in Eq. (5) as follows:

where \(\tilde{\textbf{S}}=\genfrac(){0.0pt}0{\textbf{S}}{\sqrt{k}\textbf{I}_{\varphi }}\) and \(\tilde{\textbf{Y}}=\genfrac(){0.0pt}0{\textbf{Y}}{\sqrt{k}d\varvec{\hat{\beta }}_{ELM}}\) are the augmented form of the hidden layer and output matrices. The advantage of writing Eq. (6) in form Eq. (5) is to solve the GO-ELM problem as an \(L_1\)-norm problem. Therefore, various types of algorithm like LARS can be used to obtain the optimal solution of Eq. (6) via Eq. (5). The GO-ELM has the following properties which show that it is a generalization of ENet-ELM, Lasso-ELM, Liu-ELM, R-ELM and OK-ELM:

-

As d goes to 0, GO-ELM behaves similar to ENet-ELM

-

When \(\lambda =0\), GO-ELM and OK-ELM give same solutions.

-

When \(k=1\), GO-ELM solution is same with Liu-ELM solution and when \(k=0\), GO-ELM solution is same with Lasso-ELM solution. Then, GO-ELM solutions trace a curve path through the parameter space from the Liu-ELM to Lasso-ELM as k goes from 1 to 0.

-

When \(\lambda =0\) and \(d=0\), GO-ELM gives R-ELM solutions

-

Since GO-ELM solutions can also be obtained from Eq. (6), it does variable selection as Lasso-ELM and ENet-ELM.

-

The GO-ELM is strictly convex which can be seen from the equivalent objective function:

$$\begin{aligned} {\left\| {\textbf{S}{\varvec{\beta }} - \textbf{Y}} \right\| _2} + \lambda _{1}(\frac{1-\alpha }{2}{\left\| {d{{\widehat{\beta }}_{ELM}} - \varvec{\beta } } \right\| _2} + \alpha {\left\| \varvec{\beta } \right\| _1)} \end{aligned}$$where \(\lambda _{1}=k+\lambda \), \(\alpha =\lambda /(\lambda +k)\) and \(\lambda _{1}\ge 0\), \( 0\le \alpha \le 1\) are tuning parameters. The GO-ELM solutions trace a curve path through the parameter space from the OK-ELM to Lasso-ELM as \(\alpha \) goes from 0 to 1.

Choice of Tuning Parameters

GO-ELM depends on three tuning parameters which can be selected similarly to Lasso-ELM and ENet-ELM. First, the d values in the interval (0,1) are selected, and for each d value, a k value is selected for the k grid values in the specified range. Then, for each (d, k) pair, GO-ELM is solved by any algorithm such as LARS-EN [23, 29] to select \(\lambda \) via K-fold cross-validation. The cross-validation is done on a three-dimensional surface. By cross-validating the training data by considering every possible combination of three different tuning parameters, the best performing values were determined as optimum for the current trail. The tuning parameters are then chosen so as to give the smallest cross-validation error.

The pseudo-code for GO-ELM algorithm is as given by Algorithm 1.

Sparsity Property

Figure 1 is given to show the sparsity property of GO-ELM and to compare the coefficient paths for GO-ELM, Lasso-ELM and ENet-ELM under Body Fat data set. In order to give better insights as visually, the number of hidden layer is fixed to 20. The differences between the coefficient paths of Lasso-ELM, ENet-ELM and GO-ELM algorithms are noticeable, but (ii) and (iii) exhibit a similar structure. This is because the value of d is a small value. While k is constant, the value of d is effective in determining the difference between ENet-ELM and GO-ELM algorithms. Figure 1 proves that all three algorithms do variable selection and the ways to select variables are different from each other.

Grouping Property

Grouping effect property of the ENet states that highly correlated features will have similar estimated coefficients. The property is described formally in terms of an upper bound on the difference between two coefficients as it relates to the correlation between the predictors (e.g., Zou and Hastie [23], Theorem 1, Zhou [32], Theorem 2).

The grouping effect of GO-ELM is encouraged from the tuning parameter k and it is stated by Theorem 1.

Theorem 1

Given \((\textbf{Y},\textbf{S})\) and parameters \(\lambda \), k, and d are fixed. If \(\widehat{\beta }_i \widehat{\beta }_t > 0\), then

where \(\widehat{\beta }\) is the GO-ELM solution.

Proof

Let us first take the derivative of Eq.(5) with respect to \(\beta _{i} \) and \(\beta _{t}\) and set the results to zero:

where

and \(s_{i}\) and \(s_{t}\) have the same sign. Subtracting Eq. (8) from Eq. (7), we obtain

Applying the Cauchy-Schwarz inequality to Eq. (9),we get

Since \({{{\widehat{\beta }}}}\) is a minimizer of Eq. (6), by taking \(\beta =0\), we see that \(D({{{\widehat{\beta }}}},k,\lambda )\le \left\| {\textbf{ Y}}\right\| _{2}+\frac{k}{2}{\left\| {d{{\widehat{\beta }}_{ELM}}} \right\| _{2}}\) and we writethe inequality

With respect to Eq. (10), we complete the proof.

If \(h_{i}\), \(i=1,\ldots ,\varphi \) are standardized as \(\sum \limits _{j=1}^{N}h_{ij}=\sum \limits _{j=1}^{N}g\left( \mathbf {\omega }_{i}.\textbf{x}_{j}+b_{i}\right) =0\) and \(\sum \limits _{j=1}^{N}h^2_{ij}=\sum \limits _{j=1}^{N}g^{2}\left( \mathbf {\omega }_{i}.\textbf{x}_{j}+b_{i}\right) =1\), the inequality in Theorem 1 reduces to

where \(h_{i}^{T}h_{t}\) is the correlation between \(h_{i}\) and \(h_{t}\).

When \(h_{i}\) and \(h_{t}\) are highly correlated, \(h_{i}^{T}h_{t}\) goes to 1 (if it goes to -1 then consider \(-h_{t}\)). In this case, Theorem 1 says that the difference between the coefficient paths of \(h_{i}\) and \(h_{t}\) is almost 0 (this result is the same with the linear regression as Zou and Hastie [23] and Zhou [32] stated).

Performance Evaluation

Data Sources

In this study, Body Fat, Boston Housing, Machine CPU, Servo, Fish, Heart and Abalone data sets have been retrieved from the UCI database and have been considered to compare the performance of all algorithms. Their properties are tabulated in Table 1.

Experimental Settings

The design of the experiment plays a critical role on the performance of each algorithm considered in this study. The process followed in this study can be summarized as follows:

-

The inputs were standardized with a mean of zero and a variance of one so that possible performance losses due to data structure (such as scale and unit properties)was avoided.

-

Model training was initialized with random weight and bias values. In order to eliminate the effect of this randomness, each trial was performed using fixed starting points (i.e., seed values) by grid searching over the same range of tuning parameters depending on each algorithm.

-

The sine function, which is one of the commonlyused functions in ELM models, was used as theactivation function.

-

The data set was split into 70% and 30% training and test data, respectively. According to this splitting, the sample size of the training and test data sets for each data set is given in Table 1. The algorithms were trained using the training data and a 5-fold cross-validation (CV) was applied to obtain the tuning parameters on the training data. Then, the performance analysis on the test datawas obtained.

-

Too few neurons in the hidden layers to adequately detect signals in a complex data set can cause poor fit. An excessive number of neurons in the hidden layers can increase the time required to train the ELM. Training time can increase to a point where it is impossible to adequately train the ELM. To reach a compromise between too many and too few neurons in the hidden layers, the number of hidden layer neurons was taken as 100 and fixed across all data sets.

-

The number of trials was set at twenty and the average of the trial results was reported.

-

A grid search approach was used to determine the tuning parameters. Since different parameters are effective in training each algorithm, all algorithms were trained as a whole for all possible combinations of (d, k and \(\lambda \)) values. In Lasso, the conventional tuning parameter is the \(L_{1}\)-norm of the coefficients or the fraction of each regression coefficient estimates to the \(L_{1}\)-norm (s) (e.g., [23]). The advantage of s, is that it is valued within [0, 1]. Therefore, Lasso tuning parameter \(\lambda \) is reported through s. The grid of values for the parameters are picked as:

$$\begin{aligned} \begin{aligned} d\in \;&\big [ 10^{-5},10^{-4},..,10^{-2},0.02, \\&\;\, 0.03...,0.1,0.15,0.2,...,1\big ], \end{aligned} \end{aligned}$$$$\begin{aligned} \begin{aligned} k\in \;&\big [10^{-5},10^{-4},..,10^{-2},0.02, \\&\;\, ...,0.1,0.2,...,1,1.5,2,...,5\big ], \end{aligned} \end{aligned}$$$$\begin{aligned} \begin{aligned} s\in \;&\big [ 10^{-5},10^{-4},..,10^{-2},0.02, \\&\;\, 0.03...,0.1,0.15,0.2,...,1\big ]. \end{aligned} \end{aligned}$$To get the optimum (d, k) pairs for the OK-ELM, 5-fold CV is conducted on two-dimensional space and to get the optimum (d, k, s) pairs for the GO-ELM, 5-fold CV is conducted on three-dimensional space. In each fold, the tuning parameters minimizing the CV error are determined as optimal for the corresponding fold. This process is repeated for twenty trials and the mean values of the tuning parameters calculated as overall for all foldsare reported.

-

The Norm (Mean), Norm (SD), RMSE and SD criteria were used for the performance evaluation. The optimal tuning parameters found through the training data set are used to produce the lowest RMSE value in each trial and the average of the statistics are presented in Table 2.

The number of nodes, the norm values and standard deviations of the obtained coefficients are presented in Table 2.

The changes in the comparisons are also presented by Figs. 2 and 3 where the relative reduction rate is ascomputed as

Some of the results provided through Figs. 2 and 3 with Table 2 are listed as follows:

-

From the view of training RMSE, the GO-ELM algorithm outperforms the ELM, R-ELM, Lasso-ELM and ENet-ELM algorithms for all data sets except the Heart data. The superiority of GO-ELM over ELM, R-ELM, Lasso-ELM and ENet-ELM is more than by an average of \(40\%\) for RMSE (compared to other algorithms, GO-ELM gives a minimum of \(7\%\) and a maximum of \(95\%\) improvement) and more than by an average of \(80\%\) for SD (compared to other algorithms, GO-ELM gives a minimum of \(25\%\) and a maximum of \(99\%\) improvement).

-

GO-ELM is superior over ELM, R-ELM, Lasso-ELM and ENet-ELM with more than by an average of \(20\%\) in the sense of testing RMSE (minimum \(2\%\), maximum \(92\%\) improvement), this superiority is more than by an average of \(60\%\) in the sense of SD (minimum \(54\%\), maximum \(99\%\) improvement).

Results and Discussion

Table 2 gives the performance comparison of all algorithms based on the fixed parameters for the proposed algorithm and the optimal tuning parameter values for each algorithm itself, respectively. The performance measures such as Norm (Mean), Norm (SD), RMSE and SD were all computed on the test data sets using the optimal tuning parameters obtained through training data set. Since the training data sets are used for optimum tuning parameter selection, the comparisons will be made according to the test data. When the performance comparison is performed using the parameters for which the proposed algorithm is optimal, the results given in Table 2 are achieved.

The comprehensive evaluation of the tuning parameters in the grid space using the optimal values for each algorithm is presented in Table 2. The important results from Table 2 are as follows:

-

Considering the test data, GO-ELM outperforms OK-ELM on the RMSE criterion in all other data sets except the MachineCPU data set. The superiority percentage of OK-ELM over GO-ELM in the MachineCPU data set is only \(0.039\%\). According to the test data set, except for the Boston data set, GO-ELM outperforms OK-ELM under the SD criterion. In all test data sets except the Heart test data set, GO-ELM shows better RMSE and SD performances than ELM, R-ELM, Lasso-ELM, and ENet-ELM. The testing RMSE error of GO-ELM for the data sets except Machine CPU and Heart is the smallest value when compared to its competitors.

-

Considering the number of nodes for all data sets, it is seen that ELM, R-ELM and OK-ELM do not select nodes, while Lasso-ELM, ENet-ELM and GO-ELM do node selection. This is an indication that Lasso-ELM, ENet-ELM and GO-ELM have been proposed as solutions to the sparsity problem.

-

The norm of GO-ELM is less than ELM in all data sets. Except for the Servo and MachineCPU data, the mean of the norm of GO-ELM is smaller than the other algorithms, even if the standard error of the norm is not small in all other data sets.

On the test data, the changes of the residual values of the algorithms trained at their optimum tuning parameters are presented in Figs. 4, 5, and 6 for Body, Boston and Abalone data sets.

In order to evaluate the stability of the algorithms, the narrow range of residual values is preferable. Figures 4, 5, and 6 show that the stability of GO-ELM algorithm over the other algorithms are obvious for Body Fat and Abalone data sets. Considering Fig. 5 for the Boston data set, GO-ELM and OK-ELM algorithms show almost the same stability performance and it is seen that both algorithms outperform other algorithms. In order to determine whether the performance of the proposed algorithm is statistically significant compared to other algorithms, paired samples t-test with Bonferroni correction was used on the test data predictions (i.e., errors) and the results are given in Table 3. According to these results, it is mostly observed that the performance difference is statistically significant.

Conclusion

The aim of the current study is to present a new regularized ELM algorithm based on the simultaneous use of both ridge and Liu regression with Lasso regression to improve the ELM and its variants to cope with some drawbacks such as instability, poor generalization performance and lack of sparsity. This new algorithm has been proposed as a solution to multicollinearity and sparsity problems such as Lasso-ELM and ENet-ELM by both node selection and shrinkage. After conducting experimental study via real-world applications, the findings indicate that the proposed algorithm provides effective solutions to the mentioned drawbacks and yields more stable and sparse performance with better generalization capability than its competitors.

Limitations and Ideas for Future Works

Although the proposed algorithm provides significantimprovements on the performance of ELM and its variants, the major limitation of this study is the method of selection of tuning parameters. Some solid and analytical or genetic algorithm-based approaches should be considered in the future works. Secondly, the proposed algorithm cannot be used in high-dimensional data and it should be possible to make an adaptation that can be used in high-dimensional data. Additionally, keeping the number of hidden neurons fixed can be considered as another limitation. Future studies may include finding the optimum number of neurons using ELM and then choosing a sparse model using the number of neurons found.

Data Availability

The datasets generated during and/or analyzed during the current study are available in the UCI repository (https://archive-beta.ics.uci.edu/).

References

Huang GB, Zhu QY, Siew CK. Extreme learning machine: a new learning scheme of feedforward neural networks. In: 2004 IEEE International Joint Conference on Neural Networks (IEEE Cat. No. 04CH37541) (Vol. 2). IEEE; 2004. p. 985–90.

Huang GB, Zhu QY, Siew CK. Extreme learning machine: Theory and applications. Neurocomputing. 2006;70(1–3):489–501.

Huang GB, Zhou H, Ding X, Zhang R. Extreme learning machine for regression and multiclass classification. IEEE Trans Syst Man Cybernet B (Cybernet). 2011;42(2):513–29.

Hoerl AE, Kennard RW. Ridge regression: Applications to nonorthogonal problems. Technometrics. 1970;12(1):69–82.

Deng W, Zheng Q, Chen L. Regularized extreme learning machine. In: 2009 IEEE Symposium on Computational Intelligence and Data Mining. IEEE; 2009. p. 389–95

Li G, Niu P. An enhanced extreme learning machine based on ridge regression for regression. Neural Comput Appl. 2013;22:803–10.

Huang WB, Sun FC. Building feature space of extreme learning machine with sparse denoising stacked-autoencoder. Neurocomputing. 2016;22(174):60–71.

Shao Z, Er MJ. Efficient leave-one-out cross-validation-basedregularized extreme learning machine. Neurocomputing. 2016;19(194):260–70.

Chen YY, Wang ZB. Novel variable selection method based on uninformative variable elimination and ridge extreme learning machine: CO gas concentration retrieval trial. Guang pu xue yu guang pu fen xi= Guang pu. 2017;37(1):299–305.

Yu Q, Miche Y, Eirola E, Van Heeswijk M, Séverin E, Lendasse A. Regularized extreme learning machine for regression with missing data. Neurocomputing. 2013;15(102):45–51.

Wang H, Li G. Extreme learning machine Cox model for high-dimensional survival analysis. Stat Med. 2019;38(12):2139–56.

Yildirim H, Özkale MR. The performance of ELM based ridge regression via the regularization parameters. Expert Syst Appl. 2019;15(134):225–33.

Luo X, Chang X, Ban X. Regression and classification using extreme learning machine based on L1-norm and L2-norm. Neurocomputing. 2016;22(174):179–86.

Kejian L. A new class of blased estimate in linear regression. Commun Stat Theor Methods. 1993;22(2):393–402.

Yıldırım H, Özkale MR. An enhanced extreme learning machine based on Liu regression. Neural Process Lett. 2020;52:421–42.

Tibshirani R. Regression shrinkage and selection via the lasso. J R Stat Soc Ser B Stat Methodol. 1996;58(1):267–88.

Miche Y, Sorjamaa A, Bas P, Simula O, Jutten C, Lendasse A. OP-ELM: Optimally pruned extreme learning machine. IEEE Trans Neural Netw. 2009;21(1):158–62.

Miche Y, Van Heeswijk M, Bas P, Simula O, Lendasse A. TROP-ELM: a double-regularized ELM using LARS and Tikhonov regularization. Neurocomputing. 2011;74(16):2413–21.

Martínez-Martínez JM, Escandell-Montero P, Soria-Olivas E, Martín-Guerrero JD, Magdalena-Benedito R, Gómez-Sanchis J. Regularized extreme learning machine for regression problems. Neurocomputing. 2011;74(17):3716–21.

Shan P, Zhao Y, Sha X, Wang Q, Lv X, Peng S, Ying Y. Interval lasso regression based extreme learning machine for nonlinear multivariate calibration of near infrared spectroscopic datasets. Anal Methods. 2018;10(25):3011–22.

Li R, Wang X, Lei L, Song Y. \( L_ 21 \)-norm based loss function and regularization extreme learning machine. IEEE Access. 2018;18(7):6575–86.

Preeti, Bala R, Dagar A, Singh RP. A novel online sequential extreme learning machine with L 2, 1-norm regularization for prediction problems. Appl Intell. 2021;51:1669–89.

Zou H, Hastie T. Regularization and variable selection via the elastic net. J R Stat Soc Ser B Stat Methodol. 2005;67(2):301–20.

Yıldırım H, Özkale MR. LL-ELM: a regularized extreme learning machine based on L 1-norm and Liu estimator. Neural Comput Appl. 2021;33(16):10469–84.

Rao CR, Mitra SK. Generalized inverse of a matrix and itsapplications. In: Proceedings of the Sixth Berkeley Symposium on Mathematical Statistics and Probability, Volume 1: Theory of Statistics (Vol. 6). University of California Press; 1972. p. 601–21.

Schott JR. Matrix analysis for statistics. John Wiley & Sons; 2016.

Tutz G, Binder H. Boosting ridge regression. Comput Stat Data Anal. 2007;51(12):6044–59.

Yıldırım H, Özkale MR. A combination of ridge and Liu regressions for extreme learning machine. Soft Comput. 2023;27(5):2493–508.

Sjöstrand K, Clemmensen LH, Larsen R, Einarsson G, Ersbøll B. Spasm: a Matlab toolbox for sparse statistical modeling. J Stat Softw. 2018;23(84):1–37.

Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. Ann Stat. 2004;32(2):407–99.

Rosset S, Zhu J. Piecewise linear regularized solution paths. Ann Stat. 2007;1:1012–30.

Zhou DX. On grouping effect of elastic net. Stat Probab Lett. 2013;83(9):2108–12.

Author information

Authors and Affiliations

Contributions

Hasan Yıldırım: methodology, investigation, software, data curation, formal analysis, visualization, writing — original draft. M. Revan Özkale: methodology, conceptualization, supervision, writing — review editing, validation.

Corresponding author

Ethics declarations

Competing Interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yıldırım, H., Özkale, M.R. A Novel Regularized Extreme Learning Machine Based on \(L_{1}\)-Norm and \(L_{2}\)-Norm: a Sparsity Solution Alternative to Lasso and Elastic Net. Cogn Comput 16, 641–653 (2024). https://doi.org/10.1007/s12559-023-10220-w

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12559-023-10220-w