Abstract

Historically, teachers’ uptake and implementation of empirically supported classroom interventions have involved substantial face-to-face consultation. However, most schools do not have the resources to provide this intensive level of support and many teachers may not need it. Thus, evaluation of alternative supports is warranted. In this pilot study, we evaluated the feasibility and effectiveness of an interactive web-based technology [i.e., the Daily Report Card.Online (DRC.O)] designed to facilitate teachers’ use of the DRC with minimal external support and examined individual teacher characteristics associated with DRC use. Elementary school teachers (N = 54) were given access to the DRC.O Web site and asked to use it to implement a DRC with one child. With regard to implementation, 16% were short-term adopters (less than 1 month) and 39% were long-term adopters (1–7 months of use). On average, short-term adopters adhered to 37% of data entry procedures, whereas long-term adopters adhered to 74% of data entry procedures. Higher teacher stress was associated with shorter use and lower adherence. Web site analytics revealed that, on average, long-term adopters completed all steps of DRC development in less than 1 h and spent only 3 min per day engaged in data entry for progress monitoring. The magnitude of change in student target behaviors and Strengths and Difficulties Questionnaire scores was moderate to large. These results reveal the feasibility and promise of the DRC.O and generate hypotheses for future research. Implications for additional evaluation of technology-driven implementation supports for teachers are discussed.

Introduction

In the context of a multi-tiered system of support (Benner, Kutash, Nelson, & Fisher, 2013), elementary school teachers in the USA and Canada are expected to implement universal classroom management strategies (e.g., use of rules, routines, praise) to prevent disruptive student behavior and facilitate academic engagement (Epstein et al., 2008; McLeod et al., 2017). However, 10–20% of students often need additional, more targeted supports within the general education classroom (Forness, Freeman, Paparella, Kauffman, & Walker, 2012). One of the most well-studied and effective targeted interventions for inattentive and disruptive behavior is a daily report card (DRC; Pyle & Fabiano, 2017; Vannest, Davis, Davis, Mason, & Burke, 2010). Despite the existence of effective targeted interventions and the potential benefit for identified students, many teachers report limited use of such strategies (Hart et al., 2017; Martinussen, Tannock, & Chaban, 2011). Barriers to use include contextual factors such as limited access to professional development, inadequate implementation supports, and insufficient time for implementation (Collier-Meeks, Sanetti, & Boyle, 2018; Long et al., 2016), as well as individual factors such as lack of knowledge of behavioral principles (Owens et al., 2017), inadequate skills in data-driven decision making (Farley-Ripple & Buttram, 2015), and stress (Domitrovich et al., 2015).

Studies demonstrate that many teachers can overcome these barriers when provided with consultation (Becker, Bradshaw, Domitrovich, & Ialongo, 2013; Conroy et al., 2015; Owens et al., 2017). In these studies, consultation typically occurred with an external consultant weekly over several months, a practice that exceeds the resources available in most school districts (Kelly & Lueck, 2011). However, without such support, there is a risk of low adoption of effective behavior management strategies, which can contribute to continued disruptive behavior (Owens et al., 2018) and lower student achievement (McLean & Connor, 2015). Thus, research is needed to develop feasible strategies that can overcome barriers to adoption and implementation of strategies like the DRC.

The goals of this pilot study were to (a) evaluate the feasibility and effectiveness of an interactive technology [the Daily Report Card.Online (DRC.O); see www.oucirs.org/daily-report-card-preview] designed to address the above-described barriers to DRC use, and (b) identify teacher characteristics associated with DRC use. The DRC.O is an interactive web-based system that offers access to professional development and mirrors implementation supports of a consultant.

Daily Report Card

When using the DRC, teachers attempt to improve two to four target behaviors (e.g., completes work, respects others) per student. Teachers set individual goals for each behavior, provide daily feedback to students about the behaviors, and record the frequency of each behavior. To foster positive home–school relationships, students take the DRC home to parents who are asked to provide privileges contingent on outcomes. Studies document that the DRC is effective with elementary school students (Pyle & Fabiano, 2017; Vannest et al., 2010), is acceptable to teachers (Girio & Owens, 2009), and can address a variety of behaviors (positive, negative, academic) (Owens et al., 2012). There are also empirical benchmarks for the magnitude of behavior change that can be expected after each month of use (Holdaway et al., 2018; Owens et al., 2012). However, two studies reveal that most teachers report rarely using a DRC and similar interventions (Hart et al., 2017; Martinussen et al., 2011). We hypothesize that interactive technology can be leveraged to overcome barriers to DRC use and achieve positive student outcomes similar to those obtained when using consultation (at least for some teachers).

Leveraging Technology to Enhance Adoption and Implementation

In implementation science frameworks (see Aarons, Hurlburt, & Horwitz, 2011 for review), adoption is defined as the decision to proceed with the implementation of a new strategy. Implementation is defined by several subcomponents, including adherence (the extent to which an intervention is implemented as intended) and dose (the quantity received or duration of the intervention implementation) (see Sanetti & Kratochwill, 2009 for review). By leveraging interactive technology, we may be able to address several of the barriers to teachers’ adoption and implementation of targeted interventions (Collier-Meeks et al., 2018). For example, interactive technologies can provide easy access to relevant professional development content to enhance teacher knowledge without travel or the need for substitute teacher coverage. Interactive technologies can also use data-driven algorithms to recommend intervention modifications. Such tools may enhance capacity in teachers who have limited skills in data-driven decision making (Farley-Ripple & Buttram, 2015) and may offer a problem-solving resource that guides intervention decisions and supports implementation over time (Long et al., 2016).

Online professional development programs for academic instruction have produced equally positive effects on teachers’ knowledge of course content, pedagogical beliefs, and instructional practices when compared to face-to-face formats (Carey et al., 2008; Russell, Carey, Kleiman, & Venable, 2009). Online professional development for classroom management is in its infancy. However, three studies (Becker, Bohnenkamp, Domitrovich, Keperling, & Ialongo, 2014; Bishop, Gile, & Bryant, 2005; Pianta, Mashburn, Downer, Hamre, & Justice, 2008) reveal that online supports in this domain enhance access to relevant content, are acceptable to teachers, and can increase strategy use by teachers. Despite this promise, the online programs in these studies were coupled with substantial face-to-face supports from consultants. Given the limited resources in many schools, intensive consultation is not often feasible.

Recent studies also reveal that professional development is most effective when tailored to the teacher’s individual needs (e.g., Coles et al., 2015; Owens et al., 2017). Some teachers need intensive face-to-face consultation; however, a substantial portion of teachers do not. With additional research, school administrators may be able to reserve face-to-face consultation for teachers who have significant difficulty implementing interventions and/or whose students demonstrate the greatest need, and offer less intensive, technology-driven supports to the majority of teachers with lesser needs.

Daily Report Card.Online (DRC.O)

In response to the need for feasible training and implementation supports, our team created the Daily Report Card.Online (DRC.O) system, an online resource that supports teachers’ adoption and implementation of the DRC intervention. The DRC.O offers the information and materials needed to understand and implement a DRC, including video models of implementation. Teachers can access this content at their preferred time and pace. The DRC.O includes interactive features designed to mirror supports provided by face-to-face consultants. For example, the DRC.O Wizard guides teachers through a development process of selecting and prioritizing target behaviors, collecting baseline data about those behaviors, and using the baseline data to set individualized student goals. The system graphs student progress and has algorithms that offer data-driven decisions, making recommendations about when goals should be changed (i.e., to gradually shape student behavior into the normative range) and by how much (to ensure that the student experiences success). We developed the DRC.O iteratively, repeatedly soliciting feedback from school professionals so that the user interface aligns with user needs.

The initial evaluation of the DRC.O was conducted with 33 elementary teachers in the USA who were provided access to the DRC.O program and asked to implement a DRC with one student (with elevated inattentive and/or disruptive behavior problems) for 2 months using supports from the Web site and brief, in-person consultation, as needed (Mixon, Owens, Hustus, Serrano, & Holdaway, 2019). With regard to adoption, 69.69% of teachers who received access to the Web site adopted the DRC intervention (i.e., completed the DRC Development Wizard and implemented the DRC for at least 1 day). In total, 54.54% (18 of the total sample) of teachers implemented the intervention for at least 1 month and 51.51% (17 of the total sample) implemented it for at least 2 months (as evidenced by entering daily intervention data). These teachers (n = 17) demonstrated acceptable levels of observed implementation integrity (i.e., comparable to that observed in some consultation studies; Owens, Evans et al., 2018), and their students demonstrated improvements in total (d = .53) and hyperactive and inattentive (d = .83) problems on the Strengths and Difficulties Questionnaire (Goodman et al., 2001). Importantly, teachers achieved these outcomes using an average of 32 min of face-to-face consultation; this is a substantial reduction in time compared to studies on DRC effectiveness with traditional consultation, in which teachers participated in 6–10, 30-min meetings (Fabiano et al., 2010; Owens et al., 2012). These results suggest that, for some teachers, online supports offer a more feasible means of supporting DRC implementation.

Factors Associated with Implementation

With the above-noted variability in adoption and implementation of the DRC.O, it is important to explore if there are teacher characteristics associated with success with online supports. Identifying such characteristics may allow school administrators to determine which teachers are most likely to benefit from this type of support. There is some evidence that teacher characteristics may influence intervention implementation (Domitrovich et al., 2015; Owens et al., 2017) and use of self-paced technology-driven supports (Mixon et al., 2019). For example, teacher age and highest degree were associated with longer implementation of the DRC in our first pilot study. Older teachers and those with a master’s degree implemented the DRC longer than younger teachers and those without a master’s degree, respectively (Mixon et al., 2019). In addition, there is evidence that teachers with high and low scores on knowledge of relevant behavioral principles respond differentially to different levels of intensity of consultation (e.g., Owens et al., 2017). Greater foundational knowledge of the principles that underlie the intervention may lead teachers to feel comfortable with the intervention, approach the opportunity, and ultimately have efficacy for ongoing implementation. Thus, greater teacher knowledge of the behavioral principles may predict greater DRC adoption and longer implementation. Lastly, two studies have found that higher levels of teacher stress are associated with lower levels of implementation of other effective classroom management strategies (Domitrovich et al., 2015; Wehby, Maggin, Partin, & Robertson, 2012). Because teachers in the USA and Canada report high levels of daily stress as a function of challenging student behavior (Canadian Teachers’ Federation, 2018; Ingersoll, 2001), this is an important predictor to consider prior to planning for broader dissemination of the DRC.O to more teachers.

Current Study Addressing Limitations in the Literature

There are three limitations to the above-described DRC.O study (Mixon et al., 2019). First, it was conducted in local schools wherein some teachers had worked with the research team on previous projects that included the DRC. Indeed, 24 of 33 teachers reported that they had previously used a DRC and we found that those who had previously implemented a DRC were significantly more likely than those without previous use to implement for more than 8 weeks. Second, although minimal, teachers in this project also had access to face-to-face consultation, if requested. Consultants were in the teachers’ buildings for 1–2 h per week, and 74% of teachers sought brief face-to-face supports. On average, teachers had three brief consultations (SD = 2.03) that lasted an average of 10 min per contact (SD = 4.30). Third, the previous study did not examine the magnitude of change in DRC target behaviors or compare that change to monthly benchmarks (Holdaway et al., 2018; Owens et al., 2012).

To advance our understanding of the feasibility of the DRC.O in facilitating adoption and implementation of the DRC, we sought to replicate the findings of Mixon et al. (2019) under more naturalistic conditions. Namely, we collaborated with school districts that did not have a previous partnership with the researchers and had not previously received professional development on the DRC intervention (per school administrator report). We also observed DRC adoption, Web site use, and DRC implementation in the absence of face-to-face supports from the research team. Lastly, we calculated single-case effect sizes to represent the magnitude of change in each target behavior and compared those to benchmarks.

The aims of the study were to: (1) assess the feasibility of the system, defined as the percent of teachers who adopt the DRC and use each aspect of the DRC.O system, (2) examine the quality and duration of teachers’ implementation over time, (3) identify teacher factors associated with adoption and implementation, and (4) examine student outcomes associated with the DRC intervention. We expected that at least 30% of teachers would use all aspects of the Web site, adopt and implement the DRC, and achieve positive student outcomes. We hypothesized that teachers with higher knowledge of behavioral principles and lower levels of stress would implement the DRC for longer duration and/or with higher quality. We did not make hypotheses about teacher age, because it was confounded with previous DRC use in the past study, and because there is reason to expect that younger teachers (relative to older teachers) may be more comfortable with technology in the absence of consultation.

Method

Participants

The project was conducted by an interdisciplinary partnership between researchers in Southeast Ohio, researchers in Alberta, Canada, and school district personnel in Alberta, Canada. Participants included teacher–student dyads recruited from 10 elementary schools from two school districts in Alberta. In total, 54 teachers (75.9% female) consented to participate and were given access to the Web site (33.3% taught K–second grades; 50% taught third–sixth grades; 7.4% reported teaching multiple grades; and 9.3% did not report the grade they taught). Most teachers (85.2%) were general education teachers; 9.3% were special education teachers; and the remainder did not report their teacher status. With regard to highest degree earned, 18.5% reported having a master’s degree, 75.9% reported having a bachelor’s degree, and the remainder did not report their highest degree. On average, teachers were 39.53 years in age (SD = 11.05) and had 12.08 years of experience in the field (SD = 9.02). As a point of comparison, among elementary school teachers in Alberta, the average age is 42 and 72% are female (Alberta Government, 2013). Because this was a dissemination trial focused on teacher behavior, demographic data about students were not obtained.

Procedures

District administrators provided preliminary information about the research project to elementary school principals in the two participating districts and invited them to participate. Interested principals distributed information to teachers and encouraged interested teachers in their building to participate in the initial DRC.O orientation session. The orientation session was conducted in person by the DRC.O developer in September 2017. Teacher consent was reviewed and collected at the start of the training session, and then teachers were asked to complete the baseline teacher rating scales (described below) prior to the start of the training.

During the 3-h orientation session, the rationale for the DRC was described and teachers were guided through a “case” in which they learned how to develop a DRC (with paper–pencil). Following this interactive activity, teachers were given access to the DRC.O and the developer demonstrated how the DRC.O Wizard could make each of the steps of development easier and more efficient. The remainder of the session was spent guiding teachers through the key elements of the DRC.O, including DRC development, implementation features, data tracking, graphing, target modification, and how to contact the researchers via the Web site.

We designed the remaining procedures to optimize outcome assessment [i.e., use proximal data from the intervention and a short yet broad measure (the Strengths and Difficulties Questionnaire) to monitor change in distal functioning], minimize teacher burden, and mirror typical practice. Thus, at the end of the session, teachers were encouraged to identify one student (demonstrating inattention, hyperactivity, impulsivity, non-compliance, disrespect, or aggression) in their classroom who could likely benefit from the DRC. They were instructed to complete a Strengths and Difficulties Questionnaire (SDQ; Goodman, 2001) to determine student eligibility. If the student’s score fell in the at-risk range or higher on the Hyperactive/Inattention Problems or Conduct Problems subscales, the teacher pursued parent consent. Following receipt of all consent documentation, teachers began using the DRC.O to develop and implement the DRC. Teachers were encouraged to implement the DRC for 2 months and enter daily data into the DRC.O platform. Support from the research team was available to teachers through e-mail only via the “Contact Us” feature on the Web site; the research team responded to all requests within 48 h. Teachers were also encouraged to seek support from personnel in their building or district who typically provide support for behavioral interventions. E-mail prompts and encouragement were sent to teachers by research staff at specific points of implementation (e.g., following baseline tracking; at DRC launch) or during lapses in data entry. Teachers were asked to complete the SDQ after 2 months of implementation or when the DRC was discontinued.

Unexpectedly, one school dropped out after the orientation. Teachers in this building expressed that they did not feel the behavioral approach inherent in the use of a DRC was a good fit for their school. In addition, after the initial orientation, three additional school principals requested to participate. Due to scheduling constraints, the developer oriented teachers in two schools via video conference. (One session was in October and one in December 2017.) An Alberta-based project staff member (trained by the developer) held a face-to-face training in October 2017. These three sessions (one for each school) were shortened to 1–2 h by eliminating the case-based learning activity. All other aspects of the DRC.O orientation remained the same. Three teachers were scheduled for an orientation session, but unforeseen circumstances prevented attendance. They were still given access to the Web site after providing consent.

Daily Report Card.Online (DRC.O)

The DRC.O Web site was developed to provide educators with an accessible, interactive, web-based platform to aid in the development and implementation of a DRC intervention (Mixon et al., 2019). The Web site contains four primary “steps” that guide users through the key elements (Step 1: Orientation to DRCs and the Web site; Step 2: Creating the DRC; Step 3: Preparing to Launch the DRC; and Step 4: Implementing the DRC). Within these steps, users can access videos that provide detailed information and modeling of the skills and download PDFs of helpful resources (e.g., Pre-DRC Launch Tasks; Explaining the DRC to the Child; DRC Rewards Menu Template). The creation of the DRC occurs via an interactive wizard that guides users through the steps of selecting, defining, and prioritizing target behaviors, tracking these behaviors for 5 days to obtain a baseline assessment, using these data to set individualized goals for each target behavior, and printing the DRC. The DRC Wizard provides teachers with a menu of target behaviors that our team has developed with teachers over the last 15 years. All target behaviors are worded in a manner that is specific, objective, and measurable. Once a teacher begins implementing the DRC, the Web site provides a data entry portal depicted as a calendar that shows the days on which data have or have not been entered. The data are automatically graphed, and the graphs provide automated data-driven recommendations for when to modify the goals for each target behavior to facilitate shaping the behavior to normative levels. The Web site also provides tools for modifying target behaviors, a resources page with additional videos and downloadable materials, and a “Contact Us” portal for technological or implementation supports.

Measures

Demographic Questionnaire

At baseline, teachers provided information about their age, gender, educational attainment, current teaching role, and years of experience in the field.

Teacher Knowledge of Behavioral Principles

This 16-item multiple-choice measure assesses teacher knowledge of behavioral principles as applied to children and was developed based on the Behavior Modification Test (Kratochwill, Elliott, & Busse, 1995). In the development process, we retained the 11 items that were sensitive to change as a function of participation in a workshop focused on ADHD and classroom management (Owens, Coles, & Evans, 2014), had discriminant validity in identifying teachers with disparate baseline characteristics (Owens et al., 2017), and had item difficulty levels that prevented ceiling effects (e.g., we dropped items answered correctly by 80% or more of teachers prior to participation in the workshop). Thus, the items retained represent “difficult” items that differentiate teachers with high and low knowledge. A percent correct score was calculated for each teacher.

Teacher Stress

Teachers completed 12 items from the Teacher Concerns Inventory (TCI; Fimian, 1988) that assess work-related stress in the following domains: Time Management (4 items; alpha = .63), General Work-Related Stress (4 items; alpha = .80), and Student Discipline and Motivation (4 items; alpha = .81). Items are scored on a 5-point scale with higher scores indicating greater stress in that domain. The psychometric properties of the measure, including the factor structure and convergent validity, are well demonstrated (see Fimian, 1988, for review). TCI scores have been shown to be associated with teacher burnout, job satisfaction (Fimian, 1988), and counseling sought for work-related issues (Fimian & Krupicka, 1987).

DRC.O Web site Use Metrics

The DRC.O provides several use metrics that were analyzed in this study. First, Web site analytics track the number of visits each user makes to each page and the time spent on a given Web site page. This information was used to document teachers’ use of various Web site features (Aim 1). Second, the Web site has a daily data entry calendar into which teachers enter the student’s daily performance (e.g., number of interruptions or percent work complete). Using these data, we defined “adoption” as completing all steps in the DRC Creation Wizard, downloading the first DRC, and entering daily data for at least 1 day (Aim 1). For Aim 2, we define “implementation adherence” as the number of school days for which there are data entered, divided by the total number of eligible school days (e.g., without student absences and holidays) between the starting date and ending date of the DRC (last day of data entry). In addition, we define “duration” as the longest running target behavior (the target behavior that has the most school days between the first and last day data were entered).
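The adherence and duration definitions above can be illustrated with a minimal sketch. It assumes that eligible school days are weekdays minus an explicit set of excluded dates (student absences, holidays), and the function names and example dates are hypothetical; this is not the DRC.O's actual implementation.

```python
from datetime import date, timedelta

def eligible_school_days(start, end, excluded):
    """Weekdays from start to end (inclusive) that are not in `excluded`."""
    days = []
    current = start
    while current <= end:
        if current.weekday() < 5 and current not in excluded:
            days.append(current)
        current += timedelta(days=1)
    return days

def adherence_percent(entry_dates, excluded):
    """Days with data entered divided by eligible school days, as a percent.

    The window runs from the first to the last day of data entry.
    """
    entered = set(entry_dates)
    eligible = eligible_school_days(min(entered), max(entered), excluded)
    entered_eligible = [d for d in eligible if d in entered]
    return 100.0 * len(entered_eligible) / len(eligible)

def duration_school_days(entries_by_target, excluded):
    """School days spanned by the longest-running target behavior."""
    return max(
        len(eligible_school_days(min(dates), max(dates), excluded))
        for dates in entries_by_target.values()
    )

# Hypothetical example: data entered on 4 of 5 eligible school days.
entries = [date(2017, 10, 2), date(2017, 10, 3), date(2017, 10, 5), date(2017, 10, 10)]
excluded = {date(2017, 10, 6), date(2017, 10, 9)}  # one absence, one holiday
print(adherence_percent(entries, excluded))
print(duration_school_days({"completes work": entries}, excluded))
```

In this hypothetical case, the window contains five eligible school days and data were entered on four of them.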

Strengths and Difficulties Questionnaire (SDQ)

We assessed change in student behavior using the SDQ, a 25-item questionnaire that assesses psychological adjustment of youth in five broad domains: Conduct Problems, Hyperactivity/Inattention, Emotion Problems, Peer Problems, and Prosocial Behaviors (Goodman, 2001). Items are rated on a three-point scale ranging from 0 (not true) to 2 (certainly true). For the first four subscales, higher scores indicate more severe problems; for the Prosocial subscale, lower scores indicate more severe problems. Each subscale contains five questions. A total difficulty score (ranging from 0 to 40) represents the sum of the first four subscales. Students were eligible to participate if the teacher rated the child’s behavior in the borderline range or higher based on the SDQ cutoff scores (available at www.sdqinfo.org). The SDQ has demonstrated acceptable internal consistency when using the total score (α = .87) and by subscale (α = .70–.88; Goodman, 2001). All teachers were asked to complete the SDQ at baseline for eligibility determination and after 2 months of DRC implementation (or at termination if that preceded 2 months). Internal consistencies for this sample were as follows: .64 for Conduct Problems, .77 for Hyperactivity/Inattention, .82 for Emotion Problems, .66 for Peer Problems, .77 for Prosocial Behavior, and .77 for Total Problems.
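As a small illustration of the scoring arithmetic described above, the sketch below sums four problem subscale scores into a total difficulty score. It assumes subscale scores have already been computed (five items rated 0 to 2, so each subscale ranges from 0 to 10); the dictionary keys and function name are hypothetical, and eligibility cutoffs are deliberately omitted because they are published separately at www.sdqinfo.org.

```python
# Hypothetical keys for the four problem subscales; Prosocial is excluded
# from the total difficulty score.
PROBLEM_SUBSCALES = ("conduct", "hyperactivity", "emotion", "peer")

def sdq_total_difficulties(subscale_scores):
    """Sum the four problem subscales (each 0-10) into a 0-40 total."""
    total = 0
    for name in PROBLEM_SUBSCALES:
        score = subscale_scores[name]
        if not 0 <= score <= 10:
            raise ValueError(f"{name} score {score} is outside the 0-10 range")
        total += score
    return total

# Hypothetical ratings for one student.
ratings = {"conduct": 4, "hyperactivity": 9, "emotion": 2, "peer": 3, "prosocial": 6}
print(sdq_total_difficulties(ratings))
```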

DRC Target Behaviors

To compare change in student target behavior to available monthly benchmarks (Holdaway et al., 2018), we calculated a single-case design effect size (ES). For each DRC target behavior, we assessed the number of data points within each month of intervention. If there were at least 10 days of data in a given month, we quantified the change during that month of intervention (Month 1 and Month 2) by calculating a Tau-U or Tau-nonoverlap ES (Parker, Vannest, Davis, & Sauber, 2011); Tau-U is a single-case ES that accounts for possible trends during baseline. If the baseline tau value was ≥ .10 (indicating substantial baseline trend; Vannest & Ninci, 2015), we calculated Tau-U to correct for the baseline trend. Otherwise, Tau-nonoverlap was used to calculate the ES. The following standards (Vannest & Ninci, 2015) were applied to evaluate the magnitude of Tau-U and Tau-nonoverlap effect sizes: ≤ .20 = small; .21–.60 = moderate; .61–.80 = large; > .80 = very large.
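The decision rule above can be sketched as follows. This is a minimal illustration, not the study's analysis code. It assumes the commonly described pairwise formulation: nonoverlap Tau is the sum of pairwise signs between baseline and intervention points divided by the number of pairs, and the trend-corrected Tau-U subtracts the baseline's own Kendall S from that sum. Applying the magnitude labels to the absolute value of the ES is also an assumption made here, so that behaviors targeted for decrease can be labeled.

```python
def _sign(x):
    return (x > 0) - (x < 0)

def s_between(baseline, intervention):
    """Sum of signs over all baseline-intervention pairs."""
    return sum(_sign(b - a) for a in baseline for b in intervention)

def s_within(series):
    """Kendall S for monotonic trend within one phase (time-ordered pairs)."""
    n = len(series)
    return sum(_sign(series[j] - series[i]) for i in range(n) for j in range(i + 1, n))

def baseline_tau(baseline):
    n = len(baseline)
    return s_within(baseline) / (n * (n - 1) / 2)

def tau_effect_size(baseline, intervention, trend_cutoff=0.10):
    """Return (effect size, label), correcting for baseline trend when needed."""
    n_pairs = len(baseline) * len(intervention)
    s_ab = s_between(baseline, intervention)
    if baseline_tau(baseline) >= trend_cutoff:
        return (s_ab - s_within(baseline)) / n_pairs, "Tau-U"
    return s_ab / n_pairs, "Tau-nonoverlap"

def magnitude_label(es):
    """Standards from the text, applied to |ES| (an assumption of this sketch)."""
    size = abs(es)
    if size <= 0.20:
        return "small"
    if size <= 0.60:
        return "moderate"
    if size <= 0.80:
        return "large"
    return "very large"

# Hypothetical data: daily interruptions, 5 baseline days and 10 intervention days.
baseline = [5, 5, 5, 5, 5]
month_1 = [4, 3, 3, 2, 4, 2, 1, 2, 1, 1]
es, method = tau_effect_size(baseline, month_1)
print(method, es, magnitude_label(es))
```

A negative ES here reflects a decrease in a behavior targeted for reduction; how direction was handled in the study is not specified in the text.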

Results

Aim 1: Feasibility (DRC Adoption, Training, and Web site Use)

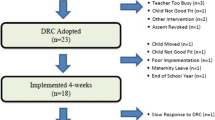

Figure 1 shows how many teachers received the Web site, adopted the DRC (defined as completing all steps of the DRC Wizard, downloading the DRC, and entering data for at least 1 day), and implemented the DRC, as well as reasons for dropout at each phase. In total, 54 teachers received access to the Web site; 55.56% (n = 30) adopted the DRC. Of these teachers, 9 (16.67% of the total sample) implemented the DRC for less than 1 month, 21 (38.89% of the total sample) implemented for at least 1 month, and 11 (20.37% of the total sample) implemented for at least 2 months. Because moderate to large changes in student behavior can be observed within 1 month of use (Owens et al., 2012), we categorized teachers as short-term users (used for less than 1 month; n = 9) or long-term users (used for 1 month or longer; n = 21).
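The percentages in this paragraph all use the 54 teachers who received Web site access as the denominator. A brief sketch of that arithmetic, using only the counts reported above (the dictionary labels are illustrative), is:

```python
TOTAL_TEACHERS = 54  # teachers given access to the Web site

# Counts reported in the text; the labels are illustrative.
counts = {
    "adopted": 30,
    "implemented < 1 month": 9,
    "implemented >= 1 month": 21,
    "implemented >= 2 months": 11,
}

def percent_of_sample(n, total=TOTAL_TEACHERS):
    """Percent of the total sample, rounded to two decimals."""
    return round(100 * n / total, 2)

for label, n in counts.items():
    print(f"{label}: {n} of {TOTAL_TEACHERS} ({percent_of_sample(n)}%)")

# Short-term and long-term users together account for all adopters.
assert counts["implemented < 1 month"] + counts["implemented >= 1 month"] == counts["adopted"]
```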

Table 1 reveals the number of visits associated with various Web site features by teacher adoption status. One-way analyses of variance revealed that there were no group differences related to the use of the initial steps on the Web site (Step 1: Orientation, Step 2: DRC Development, and Step 3: Preparation). However, there were significant differences in use of the DRC Wizard (F(2, 40) = 4.95, p = .012), viewing and editing target behaviors (F(2, 40) = 7.22, p = .002; F(2, 40) = 8.44, p = .001, respectively), the daily data entry calendar (F(2, 40) = 10.11, p < .001), and Step 4: Implementation videos (F(2, 40) = 5.38, p = .009), leading to a significant group difference in total visits (F(2, 40) = 12.34, p < .001) (see Table 1 for group differences based on post hoc testing). In addition, long-term users interacted with the Web site for significantly more total hours (M = 9.49 h; SD = 11.48) than short-term users (M = 5.18 h; SD = 6.70) and non-users (M = 1.68 h; SD = 2.01); F(2, 40) = 3.91, p = .028. Importantly, on average, long-term users spent a total of less than 1 h (M = 57.75 min; SD = 80.54) completing all steps of the DRC development process (i.e., completing the wizard), with all but four teachers completing the wizard in less than 1 h. On average (excluding three outliers), over the course of implementation, long-term users spent 2.46 min per day (SD = 2.24) entering data into the graph.

Because there was unplanned variability in the orientation teachers received, we examined whether orientation type was associated with DRC adoption status. Table 2 reveals the type of orientation (video or face-to-face; by DRC.O developer or project staff; duration of training; and month of training) by non-users and users. The Chi-square test was not significant (although the analysis was underpowered), suggesting that these factors may not be associated with adoption rates following training.

Aim 2: Implementation

The Web site offers two indicators of implementation. First, the Web site captures daily data entry, which indicates the child’s performance on each target each day (e.g., number of interruptions, percent of work complete). Using these data, we calculated percent implementation adherence by dividing the number of days the teacher entered data by the number of eligible school days (e.g., removing child absences, weekends, holidays) between the starting and ending dates of the intervention. On average, short-term users adhered to data entry procedures on 37.11% (SD = 20.34%) of school days, and long-term adopters adhered to data entry procedures on 74.30% (SD = 24.53%) of school days; t(28) = 3.99, p < .001.

Second, we also capture the duration of implementation, the number of school days of the longest running target behavior (as determined by the first and last days of data entry). On average, short-term users used the DRC for 9.00 school days (SD = 5.24) and long-term adopters implemented the DRC for 39.62 school days (SD = 26.61); t(28) = 3.39, p = .002.

Aim 3: Factors Predicting Adoption

First, we conducted bivariate correlations to examine the relationship between teacher characteristics and implementation outcomes (i.e., percent implementation adherence and duration; see Table 3). Then, we conducted two hierarchical linear regressions, one for each implementation variable (see Table 4). On the first step in each model, we entered the non-malleable characteristics of age and highest degree. On the second step in each model, we entered the variables that were significant in the bivariate analysis (i.e., knowledge and the stress discipline/motivation subscale). In both models, neither age nor highest degree was a significant predictor of the outcome. However, stress related to student discipline and motivation was a significant predictor of both percent adherence and DRC duration, accounting for 12% and 36% of the variance in each variable, respectively. Higher stress was associated with shorter use and lower adherence.
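
For readers less familiar with hierarchical regression, the standard quantities behind a two-step model are summarized below. These are textbook definitions rather than formulas taken from the article; the symbols N (sample size), k (number of predictors in the Step 2 model), and m (number of predictors added at Step 2) are notation introduced here, and the text does not specify whether the reported 12% and 36% are incremental or unique variance estimates.

```latex
\Delta R^2 = R^2_{\text{Step 2}} - R^2_{\text{Step 1}},
\qquad
F_{\text{change}}(m,\; N - k - 1)
  = \frac{\Delta R^2 / m}{\left(1 - R^2_{\text{Step 2}}\right) / \left(N - k - 1\right)}
```

Here the change in R-squared is the share of outcome variance explained by the Step 2 predictors beyond age and highest degree, and the F-change statistic tests whether that increment differs from zero.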

Aim 4: Student Outcomes

Strengths and Difficulties Questionnaire

Teachers were instructed to complete the SDQ prior to implementing the DRC and at the end of 2 months. All short-term (n = 9) and long-term (n = 21) teachers completed the SDQ at baseline. The students in each DRC status group were not significantly different in severity at baseline (see pre-SDQ scores for the full subsamples in Table 5). However, only 3 short-term users and 17 long-term adopters completed the SDQ following DRC use. The pre- and post-SDQ scores for these subsamples are also presented in Table 5. Because only 33% of the short-term users completed a post-SDQ, we did not conduct analyses or compute effect sizes for this group. Paired samples t-tests for long-term users reveal significant pre-to-post improvement on all SDQ subscales, except the Emotion Problems subscale; see within-subject effect sizes for the magnitude of this change in Table 5. In addition, 7 of 21 (33%) students (of long-term users who had follow-up data) had SDQ scores move into the normative range during implementation.

DRC Target Behaviors

In total, across all students, 135 target behaviors were created. There were 13 types of behaviors selected from the DRC Wizard, and 5 (3.70%) target behaviors were custom-made by the teacher. The target types most commonly selected were: Interruptions (19.26%); classwork completion (14.81%); respects adults (9.63%); transitions (8.89%), and out of seat/area (6.67%). The remaining targets were selected less than 6% of the time (listed in order of descending frequency): handles disappointments appropriately, completes routines, follows directions, respects others’ space, respects peers, pays attention, uses materials appropriately, and aggression.

For each DRC target behavior, we calculated a Tau nonoverlap effect size (ES). Across all students, there were 75 targets with sufficient data to calculate ES at the end of Month 1. The average ES at the end of Month 1 was .40 (SD = .41). There were 62 (83%) targets that had a positive (improving) ES and 13 (17%) targets that had a negative (or worsening) ES. See Table 6 for the average ES across all targets by month. From a student perspective, 12 of the 24 (50%) students (of short- and long-term users) had all targets improve by the end of implementation, 10 students (42%) had at least one target improve, and 8% (2 students) had all targets decline. Teacher implementation adherence was correlated with Month 1 (r = .42) and Month 2 (r = .67) ES (when averaged across targets for each student).
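
To make the effect size concrete, the sketch below computes a basic Tau nonoverlap index between two phases of daily data: the proportion of cross-phase pairs showing improvement minus the proportion showing worsening. It assumes a simple baseline-versus-intervention comparison with no baseline trend correction; the function name, the `lower_is_better` flag, and the example counts are hypothetical, and the exact phases and software the authors used are not restated in this section.

```python
def tau_nonoverlap(baseline, intervention, lower_is_better=False):
    """Basic Tau nonoverlap between two phases (no trend correction).

    Every baseline value is compared with every intervention value.
    Tau = (improving pairs - worsening pairs) / total pairs; ties add 0.
    """
    if not baseline or not intervention:
        raise ValueError("both phases need at least one data point")
    improving = worsening = 0
    for a in baseline:
        for b in intervention:
            # For targets such as interruptions, a lower count is improvement.
            diff = (a - b) if lower_is_better else (b - a)
            if diff > 0:
                improving += 1
            elif diff < 0:
                worsening += 1
    return (improving - worsening) / (len(baseline) * len(intervention))


# Hypothetical daily interruption counts for one target behavior.
baseline_days = [8, 7, 9, 8, 10]
month_one_days = [6, 7, 5, 4, 8, 3]
tau = tau_nonoverlap(baseline_days, month_one_days, lower_is_better=True)
print(f"Tau = {tau:.2f}")
```

With these made-up counts, 26 of the 30 pairs show improvement and 1 shows worsening, giving Tau = 25/30, or about .83. Values near 0 indicate heavy overlap between phases, and negative values indicate worsening.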

There are two indicators of modification that teachers made over time. First, teachers could have added new targets after the initial set of targets. Among the 29 long-term users, 6 teachers (21%) added new targets. Second, teachers could have modified the goal criteria for a given target (e.g., allowing 5 interruptions at first, then lowering the goal to 3 at a later date). Among the 29 long-term users, 6 teachers (21%) modified the goal criterion of the student’s target behaviors. There was no overlap in the 6 teachers who added targets and those who modified goals.

Discussion

The results suggest that, by leveraging interactive DRC.O technology, a substantial portion of teachers (38.89%) can adopt and implement a DRC intervention for an acceptable period of time (at least 1 month) and achieve positive student outcomes (as indicated by change in SDQ scores and target behaviors). Web site analytics reveal the feasibility of the Web site for facilitating DRC development and progress monitoring. Regression analyses highlight the role of stress as a possible barrier to implementation. We interpret these results in the context of the findings of previous DRC studies and offer hypotheses for future research.

With regard to adoption, 55.56% of teachers completed the DRC Development Wizard and launched the DRC. This rate is lower than that found in the previous DRC.O pilot study (69.69%) (Mixon et al., 2019). The higher rate in the previous study is likely attributable to the fact that some of the teachers had previously participated in DRC projects with the investigator and all teachers had access to some face-to-face supports (e.g., to problem-solve about target behaviors and/or technology), if requested. Collectively, these data suggest that access to some face-to-face supports, even if brief (10 min or less), could help some teachers overcome barriers during the DRC development phase. Still, 55.56% initial uptake with no external face-to-face support bodes well for future dissemination efforts. These preliminary data suggest that, by leveraging technology, we can reach a substantial portion of teachers with relatively low human resource demands.

With regard to implementation, 38.89% of teachers implemented the DRC for at least 1 month. This was lower than that found in the previous pilot study (54.54%), likely as a function of the above-described contextual issues. In addition, in the previous study, the investigators conducted four observations of each teacher’s classroom to assess implementation and student behavior. These observations may have played a role in teachers’ continued use of the DRC. Thus, increased observations may be a factor influencing teachers’ implementation behavior. Nevertheless, previous studies document that moderate to large change in student behavior can be observed within 1 month of use (e.g., Owens et al., 2012). That 38.89% of teachers used the technology to implement the DRC for this duration with high adherence (i.e., entered student data on 74% of school days) and achieved positive student outcomes (see discussion below) without face-to-face support suggests that this tool may help more teachers reach more students in need. In addition, these results suggest that the data entry calendars may be a useful indicator of adherence (i.e., adherence was associated with positive student outcomes) and that the graphing features may be a useful tool for progress monitoring (i.e., target behaviors are sensitive to change in a short period of time). Importantly, the adherence and outcome data are consistent with the previous pilot study (Mixon et al., 2019) and DRC studies that used consultants (e.g., Owens et al., 2008), suggesting that DRC.O is a promising alternative to intensive face-to-face supports, yet brief problem-solving consultation and/or observation may further facilitate use. A randomized controlled assessment of the DRC.O is warranted to draw causal conclusions about these hypothesized impacts.

Web site analytics (Table 1) offer some important insights. First, the Web site offers a validity check for the categorization of short- and long-term users. Long-term users spent more total hours on the Web site (M = 9.49) than short-term users (M = 5.18). Notably, long-term users spent, on average, less than 1 h completing all steps of the online DRC development process. This reveals the efficiency of the Web site over meetings with a consultant. Namely, in previous studies (e.g., Fabiano et al., 2010; Owens et al., 2008), DRC development involved two to three meetings with the teacher of a duration of 30–60 min each. Similarly, on average, long-term users spent less than 3 min per day entering data into the graph. These findings are very encouraging, given that teachers report limited time and challenges managing competing demands as significant barriers to implementation (Collier-Meek et al., 2018). Sharing these findings with teachers during orientation to the DRC.O may help to set expectations for how minimal the data entry process may be, providing benefits (e.g., graphs and data-driven decisions) that outweigh the costs (i.e., teacher time).

With regard to student outcomes, Table 6 reveals that the magnitude of change in student DRC scores is moderate to large and consistent with studies offering monthly benchmarks (Holdaway et al., 2018; Owens et al., 2012). Namely, with a sample of 37 elementary students with a DRC intervention whose teachers received biweekly consultation for 4 months, Holdaway et al. (2018) found an average Tau ES of .37 at Month 1 and .43 at Month 2 and an average standardized mean difference (SMD) effect size (a less conservative effect size) of .94 at Month 1 and 1.13 at Month 2. In addition, 33% of students (of long-term users who had follow-up data) had SDQ scores move into the normative range during implementation. These data are encouraging in that a substantial portion of teachers implemented a DRC without consultation and produced positive student outcomes that are similar in magnitude to those produced by teachers who received consultation in previous studies.

Lastly, the correlation analyses indicated that teacher knowledge of behavioral principles and stress related to discipline and student motivation were associated with implementation. Thus, these factors should be assessed in future implementation trials. However, in partial support of the hypothesis, only one component of stress was associated with lower adherence and use of the DRC. This finding is consistent with previous studies (Domitrovich et al., 2015; Troutman et al., 2018) and suggests that it may be helpful to consider multiple aspects of stress when determining the type of support that may best match teacher need.

Limitations and Future Research

The current study shows promise for leveraging the use of technology to introduce classroom-based interventions like the DRC; however, limitations must be considered. First, although the sample closely aligns in gender and age with teachers in Alberta, the sample may not be representative of the entire population of teachers, as this sample represents the few in each building who were willing to participate. Thus, rates of adoption and implementation may be different among a broader population. Second, this is a naturalistic study design; thus, the data are useful for understanding feasibility, promise, and generating hypotheses, but cannot be used to draw causal conclusions. Third, due to limited resources and our prioritized focus on teacher behavior, we did not gather information about students. There may be student characteristics (e.g., cognitive functioning) that moderate outcomes, even in the context of high-quality implementation. Fourth, we did not assess teacher integrity or student behavior via classroom observation, and these represent more objective and valid indices of implementation and integrity. Fifth, not all teachers completed the SDQ after the end of the DRC intervention. Thus, changes in student behavior reflected here may differ from what actually occurred for all students. Sixth, because the DRC.O does not yet have a parent outreach component, we did not assess parent implementation of DRC review or provision of contingent rewards. With future iterations of the Web site, we plan to develop and evaluate parent-focused components. Lastly, a variety of orientation sessions were provided (video conference, in person, by the developer, by project staff) to meet the needs of schools in the study. That the orientation type did not seem to be associated with adoption rates was encouraging; however, future research should consider systematic evaluation of various orientation modalities for the DRC.O, including determining whether such a session is needed at all.

Despite these limitations, this pilot study produces novel information that can be used to generate hypotheses for future research. For example, there is recent evidence that tailoring professional development to teachers may produce better outcomes for teachers who face barriers (Owens et al., 2017). This evidence suggests that it may be helpful to consider a continuum of professional development for teachers that includes: (a) high levels of technology and no face-to-face support on one end (e.g., like the DRC.O), (b) technology coupled with some face-to-face support or web-based consultation, and (c) intensive face-to-face supports with or without technology on the other end of the continuum. Future research that examines the application of various implementation supports in an adaptive design would advance our understanding of the extent to which matching implementation supports and teacher needs can produce higher-quality implementation and more positive student outcomes than a one-size-fits-all approach to professional development. Supports that could be examined in an adaptive manner include the presence of brief face-to-face supports for problem solving during the development phase, the use of technology for consultation, and/or the use of one or two observations during the implementation phase. In addition, research could examine the impact of either providing a stress management intervention to teachers, or using levels of stress (and perhaps knowledge) to guide decisions about matching more intensive supports to teachers who have higher levels of stress.

In addition, if brief face-to-face supports may help teachers overcome barriers, future research could examine whether internal (rather than external) consultants can be leveraged to efficiently provide this support (e.g., Atkins et al., 2008). Internal consultants could be teachers with experience with a DRC or school psychologists or behavioral consultants who are naturally in the role to offer implementation supports. Teachers may be more receptive to and trusting of internal supports. Further, if school administrators are trained in the system and generate reports from the system, these could be used to identify teachers who may need assistance and to highlight teachers who are experiencing success. Lastly, continued input from teachers can inform future iterations of the Web site and tools. For example, some teachers have recommended incorporating the availability of data entry via mobile devices (in addition to the existing data entry calendar). Such improvements may further enhance efficiency and likely enhance acceptability and use.

Summary

The current best practices for professional development involve consultation that uses a problem-solving approach (Frank & Kratochwill, 2014) with observation and performance feedback (Owens et al., 2017; Solomon et al., 2012). However, most schools do not have the resources to implement this intensive level of support with all teachers. Further, many teachers may not need this level of support. This study (coupled with the previous pilot study; Mixon et al., 2019) suggests that the DRC.O can facilitate teachers’ implementation of a DRC to produce positive student outcomes with minimal to no face-to-face supports. Thus, the strategic pairing of an empirically supported intervention with accessible and user-friendly technology may increase the likelihood that such interventions could be widely disseminated and taken to scale, with the potential of substantially addressing prevalent student need and enhancing services for youth in need. A systematic program of implementation research that explores the hypotheses discussed above will continue to advance this important agenda.

References

Aarons, G. A., Hurlburt, M., & Horwitz, S. M. (2011). Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research,38(1), 4–23.

Alberta Government. (2013). A transformation in progress: Alberta’s K-12 education workforce 2011–2012. Retrieved at https://open.alberta.ca/publications/9781460106884 on December 8, 2018.

Atkins, M. S., Frazier, S. L., Leathers, S. J., Graczyk, P. A., Talbott, E., Jakobsons, L., et al. (2008). Teacher key opinion leaders and mental health consultation in low-income urban schools. Journal of Consulting and Clinical Psychology,76(5), 905–908.

Becker, K. D., Bohnenkamp, J., Domitrovich, C., Keperling, J. P., & Ialongo, N. S. (2014). Online training for teachers delivering evidence-based preventative interventions. School Mental Health,6(4), 225–236.

Becker, K. D., Bradshaw, C. P., Domitrovich, C., & Ialongo, N. S. (2013). Coaching teachers to improve implementation of the good behavior game. Administration and Policy in Mental Health and Mental Health Services Research,40, 482–493.

Benner, G. J., Kutash, K., Nelson, J. R., & Fisher, M. B. (2013). Closing the achievement gap of youth with emotional and behavioral disorders through multi-tiered systems of support. Education and Treatment of Children,36, 15–29.

Bishop, D. C., Giles, S. M., & Bryant, K. S. (2005). Teacher receptiveness toward web-based training and support. Teaching and Teacher Education,21(1), 3–14.

Canadian Teachers’ Federation. (2018). Teacher voices on work-life balance. Available from https://www.ctf-fce.ca/en/Pages/Issues/Teachers-Survey-Infographic.aspx. Retrieved on September 27, 2018.

Carey, R., Kleiman, G., Russell, M., Douglas Venable, J. D., & Louie, J. (2008). Online courses for math teachers: Comparing self-paced and facilitated cohort approaches. The Journal of Technology, Learning and Assessment,7(3), 4–35.

Coles, E. K., Owens, J. S., Serrano, V. J., Slavec, J., & Evans, S. W. (2015). From consultation to student outcomes: The role of teacher knowledge, skills, and beliefs in increasing integrity in classroom management strategies. School Mental Health,7(1), 34–48.

Collier-Meek, M. A., Sanetti, L. M., & Boyle, A. M. (2018). Barriers to implementing classroom management and behavior support plans: An exploratory investigation. Psychology in the Schools,56, 5–17.

Conroy, M. A., Sutherland, K. S., Algina, J. J., Wilson, R. E., Martinez, J. R., & Whalon, K. J. (2015). Measuring teacher implementation of the BEST in CLASS intervention program and corollary child outcomes. Journal of Emotional and Behavioral Disorders,23, 144–155.

Domitrovich, C. E., Pas, E. T., Bradshaw, C. P., Becker, K. D., Keperling, J. P., Embry, D. D., et al. (2015). Individual and school organizational factors that influence implementation of the PAX good behavior game intervention. Prevention Science,16(8), 1064–1074.

Epstein, M., Atkins, M., Culinan, D., Kutash, K., & Weaver, R. (2008). Reducing behavior problems in the elementary school classroom. IES Practice Guide (NCEE 2008-012). U.S. Department of Education, National Center for Education Statistics. Washington, DC: U.S. Government Printing Office.

Fabiano, G. A., Vujnovic, R., Pelham, W. E., Waschbusch, D. A., Massetti, G. M., Yu, J., et al. (2010). Enhancing the effectiveness of special education programming for children with ADHD using a daily report card. School Psychology Review,39, 219–239.

Farley-Ripple, E., & Buttram, J. (2015). The development of capacity for data use: The role of teacher networks in an elementary school. Teachers College Record,117(4), 1–34.

Fimian, M. J. (1988). Teacher stress inventory. Brandon, VT: Clinical Psychology Publishing Company.

Fimian, M. J., & Krupicka, W. M. (1987). Occupational stress and receipt of professional counseling in special education. Perceptual and Motor Skills,65, 995–999.

Forness, S. R., Freeman, S. F., Paparella, T., Kauffman, J. M., & Walker, H. M. (2012). Special education implications of point and cumulative prevalence for children with emotional or behavioral disorders. Journal of Emotional and Behavioral Disorders,20(1), 4–18.

Frank, J. L., & Kratochwill, T. R. (2014). School-based problem-solving consultation. In W. P. Erchul & S. M. Sheridan (Eds.), Handbook of research in school consultation (2nd ed., pp. 18–39). New York: Routledge.

Girio, E. L., & Owens, J. S. (2009). Teacher acceptability of evidence-based and promising treatments for children with attention-deficit/hyperactivity disorder. School Mental Health,1, 16–25. https://doi.org/10.1007/s12310-008-9001-6.

Goodman, R. (2001). Psychometric properties of the strengths and difficulties questionnaire. Journal of the American Academy of Child & Adolescent Psychiatry,40(11), 1337–1345.

Hart, K. C., Fabiano, G. A., Evans, S. W., Manos, M. J., Hannah, J. N., & Vujnovic, R. K. (2017). Elementary and middle school teachers’ self-reported use of positive behavioral supports for children with ADHD: A national survey. Journal of Emotional and Behavioral Disorders,25(4), 246–256.

Holdaway, A. S., Owens, J. S., Evans, S. W., Coles, E. K., Egan, T. E., & Himawan, L. K. (2018, October). Incremental benefits of a daily report card over time for youth with or at-risk for ADHD: Replication and extension. Poster presented at the Annual Conference on Advancing School Mental Health, Las Vegas, NV.

Ingersoll, R. M. (2001). Teacher turnover and teacher shortages: An organizational analysis. American Educational Research Journal,38(3), 499–534.

Kelly, M. S., & Lueck, C. (2011). Adopting a data-driven public health framework in schools: Results from a multi-disciplinary survey on school-based mental health practice. Advances in School Mental Health Promotion,4(4), 5–12.

Kratochwill, T. R., Elliott, S. N., & Busse, R. T. (1995). Behavior consultation: A five-year evaluation of consultant and client outcomes. School Psychology Quarterly,10(2), 87–117.

Long, A. C., Sanetti, L. M. H., Collier-Meek, M. A., Gallucci, J., Altschaefl, M., & Kratochwill, T. R. (2016). An exploratory investigation of teachers’ intervention planning and perceived implementation barriers. Journal of School Psychology,55, 1–26.

Martinussen, R., Tannock, R., & Chaban, P. (2011). Teachers’ reported use of instructional and behavior management practices for students with behavior problems: Relationship to role and level of training in ADHD. Child & Youth Care Forum,40(3), 193–210.

McLean, L., & Connor, C. M. (2015). Depressive symptoms in third-grade teachers: Relations to classroom quality and student achievement. Child Development,86(3), 945–954.

McLeod, B. D., Sutherland, K. S., Martinez, R. G., Conroy, M. A., Snyder, P. A., & Southam-Gerow, M. A. (2017). Identifying common practice elements to improve social, emotional, and behavioral outcomes of young children in early childhood classrooms. Prevention Science,18(2), 204–213.

Mixon, C. S., Owens, J. S., Hustus, C., Serrano, V. J., & Holdaway, A. S. (2019). Evaluating the impact of online professional development on teachers’ use of a targeted behavioral classroom intervention. School Mental Health,11(1), 115–128.

Owens, J. S., Coles, E. K., & Evans, S. W. (2014, September). The role of teacher knowledge, skills, and beliefs in the implementation of classroom management skills. Presentation at the Annual School Mental Health Research Summit, Pittsburgh, PA.

Owens, J. S., Coles, E. K., Evans, S. W., Himawan, L. K., Girio-Herrera, E., Holdaway, A. S., et al. (2017). Using multi-component consultation to increase the integrity with which teachers implement behavioral classroom interventions: A pilot study. School Mental Health,9, 218–234.

Owens, J. S., Evans, S. W., Coles, E. K., Himawan, L. K., Holdaway, A. S., Mixon, C., et al. (2018). Consultation for classroom management and targeted interventions: examining benchmarks for teacher practices that produce desired change in student behavior. Journal of Emotional and Behavioral Disorders. https://doi.org/10.1177/106342661879544.

Owens, J. S., Holdaway, A. S., Zoromski, A. K., Evans, S. W., Himawan, L. K., Girio-Herrera, E., et al. (2012). Incremental benefits of a daily report card intervention over time for youth with disruptive behavior. Behavior Therapy,43, 848–861.

Owens, J. S., Murphy, C. E., Richerson, L., Girio, E. L., & Himawan, L. K. (2008). Science to practice in underserved communities: The effectiveness of school mental health programming. Journal of Clinical Child and Adolescent Psychology,37, 434–447.

Parker, R. I., Vannest, K. J., Davis, J. L., & Sauber, S. B. (2011). Combining nonoverlap and trend for single-case research: Tau-U. Behavior Therapy,42(2), 284–299.

Pianta, R. C., Mashburn, A. J., Downer, J. T., Hamre, B. K., & Justice, L. (2008). Effects of web-mediated professional development resources on teacher-child interactions in pre-kindergarten classrooms. Early Childhood Research Quarterly,23, 431–451.

Pyle, K., & Fabiano, G. A. (2017). Daily report card intervention and attention deficit hyperactivity disorder: A meta-analysis of single-case studies. Exceptional Children,83(4), 378–395.

Russell, M., Carey, R., Kleiman, G., & Venable, J. D. (2009). Face-to-face and online professional development for mathematics teachers: A comparative study. Journal of Asynchronous Learning Networks,13(2), 71–87.

Sanetti, L. M. H., & Kratochwill, T. R. (2009). Toward developing a science of treatment integrity: Introduction to the special series. School Psychology Review,38(4), 445–459.

Solomon, B. G., Klein, S. A., & Politylo, B. C. (2012). The effect of performance feedback on teachers’ treatment integrity: A meta-analysis of the single-case literature. School Psychology Review,41(2), 160–175.

Troutman, A. C., Owens, J. S., Monopoli, W. J., Evans, S. W., & Coles, E. C. (2018, October). School, teacher, and student factors that predict use of effective classroom management practices. Poster presented at the Annual Conference on Advancing School Mental Health, Las Vegas, NV.

Vannest, K. J., Davis, J. L., Davis, C. R., Mason, B. A., & Burke, M. D. (2010). Effective intervention for behavior with a daily behavior report card: A meta-analysis. School Psychology Review,39(4), 654–672.

Vannest, K. J., & Ninci, J. (2015). Evaluating intervention effects in single-case research designs. Journal of Counseling & Development,93(4), 403–411.

Wehby, J. H., Maggin, D. M., Partin, T. C. M., & Robertson, R. (2012). The impact of working alliance, social validity, and teacher burnout on implementation fidelity of the good behavior game. School Mental Health,4(1), 22–33.

Acknowledgements

The authors express their gratitude to the educators, administrators, and families in the participating school districts for their collaboration.

Funding

This study was funded by the PolicyWise for Children & Families, Alberta, Canada (Grant No. 1609SM). The opinions expressed are those of the authors and do not represent views of PolicyWise.

Ethics declarations

Conflict of interest

The first author received no financial benefit from the DRC.O program, but is the primary developer of the Web site. Other authors have no conflicts of interest to disclose.

Ethical Standard

Procedures were approved by the Institutional Review Boards at both universities and within all school districts. All procedures were performed in accordance with the ethical standards as laid down in the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Owens, J.S., McLennan, J.D., Hustus, C.L. et al. Leveraging Technology to Facilitate Teachers’ Use of a Targeted Classroom Intervention: Evaluation of the Daily Report Card.Online (DRC.O) System. School Mental Health 11, 665–677 (2019). https://doi.org/10.1007/s12310-019-09320-6

DOI: https://doi.org/10.1007/s12310-019-09320-6