Abstract

In this paper, a new hybrid algorithm, Hybrid Symbiosis Organisms Search (HSOS), is proposed by combining the Symbiosis Organisms Search (SOS) algorithm with Simple Quadratic Interpolation (SQI). The proposed algorithm behaves more efficiently on real-world and large-scale problems. To verify its performance, 13 (thirteen) well-known benchmark functions and the benchmark suites of the CEC2005 special session on real-parameter optimization and the CEC2010 special session on large-scale global optimization are considered. The results obtained by the proposed method are compared with those of other state-of-the-art algorithms, and the suggested approach is observed to provide an effective and efficient solution in terms of both the quality of the final result and the convergence rate. Moreover, the effect of the algorithm's common control parameters, viz. population size and number of fitness evaluations (number of generations), is investigated by considering different population sizes and numbers of fitness evaluations. Finally, the proposed method is applied to two real-life problems, and its output is found to be satisfactory.


1 Introduction

One of the greatest challenges faced by researchers in the field of optimization is finding techniques that can solve non-linear, complex optimization problems. Gradient-based algorithms are not suitable for problems that include discontinuities, and traditional mathematical programming techniques are not well suited to multi-objective optimization problems or to problems involving a large number of constraints [7]. Nature-inspired optimization algorithms are a strong alternative for these types of problems. Examples in the literature include the Genetic Algorithm (GA) [5], based on the Darwinian principle of natural selection; Particle Swarm Optimization (PSO) [34], based on the foraging behavior of bird flocks; Differential Evolution (DE) [9], based on the Darwinian theory of evolution; the Symbiosis Organisms Search (SOS) algorithm [1], based on the interactions among organisms in an ecosystem; Biogeography-Based Optimization (BBO) [8]; the Artificial Bee Colony algorithm [32]; Memetic Algorithms [21, 33]; and so on. These techniques have been applied to a wide range of real-world problems in almost all branches of the humanities, science and technology. Since heuristic algorithms are not guaranteed to find the optimal solution in a finite amount of time [11], many studies have combined meta-heuristic algorithms with other algorithms, and such hybrid methods have proved effective on specific optimization problems. For this reason, hybrid meta-heuristic methods currently enjoy increasing interest in the optimization community; examples can be found in [4, 6, 38]. The success of an algorithm depends on its exploration and exploitation abilities.
Global exploration ability indicates that the optimization algorithm uses the whole search space effectively, whereas local exploitation ability indicates that the algorithm searches for better solutions in the neighborhood of solutions it has already discovered [15]. SOS is a population-based iterative global optimization method proposed by Cheng and Prayogo [1], inspired by the relationships among organisms in an ecosystem. The algorithm is executed in three phases: the mutualism phase, the commensalism phase and the parasitism phase. It has only the common control parameters, viz. the eco-size (population size) and the number of generations (number of fitness evaluations). However, even with only two common control parameters, it is quite difficult to set their values when executing the algorithm. On the other hand, Simple Quadratic Interpolation (SQI) accelerates the evolution process by producing a new solution vector that lies at the minimum of the quadratic curve passing through three randomly selected solution vectors [22]. In the present study, we propose a hybrid Symbiosis Organisms Search (HSOS) algorithm that combines SOS and SQI. SQI is used to enhance the algorithm's exploration ability and, at the same time, to expedite its convergence. The proposed algorithm is tested on a set of 13 (thirteen) well-known benchmark functions and on the benchmark suites of the CEC2005 special session on real-parameter optimization and the CEC2010 special session on large-scale global optimization. The experimental results are compared extensively with those of other meta-heuristic algorithms available in the literature. The effect of the common control parameters, viz. population size and number of generations, is also discussed. To validate the proposed algorithm, two real-world problems are solved and the results are compared with those of state-of-the-art PSO variants.

The remainder of this paper is organized as follows. Sections 2 and 3 review the basic notions of SOS and SQI, respectively. The proposed HSOS is presented in Sect. 4. Sections 5, 6 and 7 empirically demonstrate the efficiency and accuracy of the hybrid approach in solving unconstrained optimization problems, and Sect. 8 applies it to two real-world problems. Finally, Sect. 9 summarizes the contributions of the paper.

2 Overview of the Symbiosis Organisms Search algorithm

The Symbiosis Organisms Search (SOS) algorithm is a nature-inspired algorithm based on the interactive behavior of organisms in an ecosystem [1]. Different types of symbiotic relationships occur in nature; the most common are mutualism, commensalism and parasitism. If both interacting species benefit from the interaction, the relationship is called ‘Mutualism’. An example is the relationship between bullhorn acacia trees and certain species of ants. ‘Commensalism’ is an association between two different species in which one species benefits and the other is neither significantly affected nor benefited. For example, when a bird builds a nest in a tree, the bird benefits because the tree gives it shelter, while the tree neither benefits nor is harmed. ‘Parasitism’ is a relationship in which one organism benefits and the other is harmed, but not always destroyed; the organism that benefits is called the parasite. For example, a mosquito feeding on a human is a parasite. While feeding, the mosquito may transmit diseases (e.g. malaria) to the human, which may or may not be fatal. The SOS algorithm is implemented by combining three phases that model these relationships. A group of organisms in an ecosystem is analogous to the population in the SOS algorithm, and each organism represents one candidate solution to the problem. Each organism in the ecosystem is associated with a fitness value that reflects its degree of adaptation to the specified objective. SOS requires only common control parameters, such as population size and number of fitness evaluations (generations).

In the initial ecosystem, a set of organisms is generated randomly within the search space. In SOS, new solutions are generated by imitating the biological interactions between two organisms in the ecosystem. The next three subsections briefly discuss the three phases that resemble real-world biological interaction models, viz. the mutualism phase, the commensalism phase and the parasitism phase.

2.1 Mutualism phase

In this phase, an organism \(Org_j\) is selected randomly from the ecosystem to interact with the organism \(Org_i\), the \(i\)th member of the ecosystem. Both organisms interact in a mutualistic relationship with the intention of increasing their mutual survival advantage in the ecosystem. The new organisms \(Org_i^{new}\) and \(Org_j^{new}\), corresponding to \(Org_i\) and \(Org_j\), are calculated from the mutualistic symbiosis between them by Eqs. (1) and (2).

Here, the benefit factors (BF1 and BF2) are determined randomly as either 1 or 2. These factors represent the level of benefit to each organism, i.e., whether an organism gets partial (1) or full (2) benefit from the interaction. Equation (3) defines a vector called “\(\text {Mutual}_{\text {Vector}} \)” that represents the relationship characteristic between organisms \(Org_i\) and \(Org_j\).
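As a hedged illustration, the Python sketch below implements one mutualism interaction using the update rule commonly stated for the original SOS [1]: \(\text{Mutual}_{\text{Vector}}=(Org_i+Org_j)/2\) and \(Org_i^{new}=Org_i+rand(0,1)\cdot(Org_{best}-\text{Mutual}_{\text{Vector}}\cdot BF1)\), with the same form for \(Org_j^{new}\) using BF2. The function name `mutualism`, the list-of-lists ecosystem, the per-dimension random numbers and the minimization convention are assumptions for illustration, not the authors' code.

```python
import random
from typing import Callable, List

Vector = List[float]


def mutualism(eco: List[Vector], fit: List[float], i: int,
              f: Callable[[Vector], float], rng: random.Random) -> None:
    """One mutualism interaction for organism i (minimization, in-place update).

    Assumed SOS rule, with r ~ U(0, 1) drawn per dimension and BF1, BF2 in {1, 2}:
      mutual = (Org_i + Org_j) / 2
      Org_i' = Org_i + r * (Org_best - BF1 * mutual)
      Org_j' = Org_j + r * (Org_best - BF2 * mutual)
    """
    n = len(eco)
    j = rng.choice([k for k in range(n) if k != i])      # random partner, j != i
    best = eco[min(range(n), key=lambda k: fit[k])]      # current best organism
    mutual = [(a + b) / 2.0 for a, b in zip(eco[i], eco[j])]
    bf1, bf2 = rng.randint(1, 2), rng.randint(1, 2)      # benefit factors
    new_i = [x + rng.random() * (g - bf1 * m) for x, g, m in zip(eco[i], best, mutual)]
    new_j = [x + rng.random() * (g - bf2 * m) for x, g, m in zip(eco[j], best, mutual)]
    # Greedy selection: each organism keeps its new position only if fitness improves.
    for k, cand in ((i, new_i), (j, new_j)):
        fc = f(cand)
        if fc < fit[k]:
            eco[k], fit[k] = cand, fc
```

Because both partners are updated greedily, one sweep over the ecosystem can never make any organism's fitness worse.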

2.2 Commensalism phase

In the commensalism phase, organism \(Org_i\) benefits from its interaction with organism \(Org_j\) and increases its beneficial advantage in the ecosystem to a higher degree of adaptation. The new organism \(Org_i^{new}\) is calculated by Eq. (4) and replaces \(Org_i\) in the ecosystem only if its new fitness is better than its pre-interaction fitness.

Here \(\text {Org}_{\text {best}} \) is the best organism in the ecosystem.
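A minimal sketch of the commensalism step follows, assuming Eq. (4) takes the form commonly stated for SOS [1], \(Org_i^{new}=Org_i+rand(-1,1)\cdot(Org_{best}-Org_j)\), with a fresh random number per dimension. The function name and data layout are hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]


def commensalism(eco: List[Vector], fit: List[float], i: int,
                 f: Callable[[Vector], float], rng: random.Random) -> None:
    """Assumed rule: Org_i' = Org_i + r * (Org_best - Org_j), r ~ U(-1, 1) per dimension.

    Only Org_i can change; Org_j is neither helped nor harmed.
    """
    n = len(eco)
    j = rng.choice([k for k in range(n) if k != i])
    best = eco[min(range(n), key=lambda k: fit[k])]
    cand = [x + rng.uniform(-1.0, 1.0) * (g - y) for x, g, y in zip(eco[i], best, eco[j])]
    fc = f(cand)
    if fc < fit[i]:  # accept only if better than the pre-interaction fitness
        eco[i], fit[i] = cand, fc
```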

2.3 Parasitism phase

In this phase, an artificial parasite called “Parasite_Vector” is created by duplicating organism \(Org_i\) in the search space and then modifying randomly selected dimensions of the copy with random values. Another organism \(Org_j\) is selected randomly from the ecosystem to serve as a host to the parasite vector (Parasite_Vector). If the Parasite_Vector has a better fitness value, it kills organism \(Org_j\) and takes its position in the ecosystem. If the fitness value of \(Org_j\) is better, \(Org_j\) is immune to the parasite, and the Parasite_Vector can no longer live in that ecosystem.
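The parasitism step can be sketched as follows. The number of mutated dimensions (drawn uniformly from 1 to D) and the uniform re-sampling inside the bounds `lb` and `ub` are assumptions modeled on commonly distributed SOS code, not details stated in this section.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def parasitism(eco: List[Vector], fit: List[float], i: int,
               f: Callable[[Vector], float], lb: Sequence[float],
               ub: Sequence[float], rng: random.Random) -> None:
    """Org_i spawns a Parasite_Vector that tries to replace a random host Org_j."""
    n, dim = len(eco), len(eco[i])
    j = rng.choice([k for k in range(n) if k != i])   # host organism, j != i
    parasite = list(eco[i])                            # duplicate Org_i
    # Assumption: mutate between 1 and D randomly chosen dimensions with
    # uniform random values inside the search bounds.
    for d in rng.sample(range(dim), rng.randint(1, dim)):
        parasite[d] = rng.uniform(lb[d], ub[d])
    fp = f(parasite)
    if fp < fit[j]:          # the parasite kills the host and takes its place
        eco[j], fit[j] = parasite, fp
    # otherwise the host is immune and the parasite is discarded
```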

The steps of the SOS algorithm are as follows:

- Step 1: Initialize the organisms randomly and evaluate the fitness value of each organism.
- Step 2: Update each organism in the mutualism phase using Eqs. (1) and (2).
- Step 3: Update the organisms in the commensalism phase using Eq. (4).
- Step 4: Update the organisms in the parasitism phase as described in Sect. 2.3.
- Step 5: If the stopping criterion is not satisfied, go to Step 2; otherwise, return the organism with the best fitness value as the solution.

3 The Simple Quadratic Interpolation Method

The Simple Quadratic Interpolation (SQI) operator is used to obtain a set of new organisms after all the steps of the SOS algorithm have been completed in the current iteration. A new organism replaces the corresponding organism in the ecosystem if its fitness value is better. Mathematically, SQI can be formulated as follows:

Consider two organisms \(Org_j\) and \(Org_k\) \((j\ne k)\) from the ecosystem, where \(Org_j=(org_{j,1},org_{j,2},\ldots,org_{j,D})\) and \(Org_k=(org_{k,1},org_{k,2},\ldots,org_{k,D})\). The organism \(Org_i\) is then updated by three-point quadratic interpolation: the \(m\)th dimension of the new organism, \(Org_{i,m}^{New}\), is calculated by Eq. (5)

where \(m=1,2,\ldots,D\), and \(f_i\), \(f_j\) and \(f_k\) are the fitness values of the \(i\)th, \(j\)th and \(k\)th organisms, respectively. SQI is intended to enhance the overall search capability of the algorithm.
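A small sketch of the SQI operator is given below. It assumes Eq. (5) is the standard three-point quadratic interpolation formula of [22], i.e. \(Org_{i,m}^{New}=\tfrac12\,\frac{(org_{j,m}^2-org_{k,m}^2)f_i+(org_{k,m}^2-org_{i,m}^2)f_j+(org_{i,m}^2-org_{j,m}^2)f_k}{(org_{j,m}-org_{k,m})f_i+(org_{k,m}-org_{i,m})f_j+(org_{i,m}-org_{j,m})f_k}\), the vertex of the parabola through the three points. The fallback for a zero denominator is an assumption added to keep the sketch safe.

```python
from typing import List, Sequence


def sqi(org_i: Sequence[float], org_j: Sequence[float], org_k: Sequence[float],
        f_i: float, f_j: float, f_k: float, eps: float = 1e-12) -> List[float]:
    """Per-dimension vertex of the parabola through (org_i,f_i), (org_j,f_j), (org_k,f_k)."""
    new = []
    for a, b, c in zip(org_i, org_j, org_k):
        num = (b * b - c * c) * f_i + (c * c - a * a) * f_j + (a * a - b * b) * f_k
        den = (b - c) * f_i + (c - a) * f_j + (a - b) * f_k
        # Assumed guard: if the three points coincide or are collinear in (x, f),
        # there is no finite vertex, so the coordinate of Org_i is kept.
        new.append(0.5 * num / den if abs(den) > eps else a)
    return new
```

For example, on \(f(x)=(x-1)^2\) with points 0, 2 and 3 (fitness 1, 1 and 4), `sqi([0.0], [2.0], [3.0], 1.0, 1.0, 4.0)` returns `[1.0]`, the exact minimizer. If the parabola opens downward the vertex is a maximum, which is why the new organism is kept only when its fitness improves.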

4 The proposed approach

Exploration and exploitation capabilities play a major role in the development of an algorithm [12]. As noted in Sect. 3, the SQI method can be used for better exploration of the search space. On the other hand, Cheng and Prayogo [1] discussed the strong exploitation ability of SOS for global optimization. Moreover, SQI accelerates the evolution process by producing a new point at the minimum of the quadratic curve passing through the three selected points [22]. In the proposed HSOS method, the exploration capability of SQI and the exploitation potential of SOS are combined to increase the robustness of the algorithm. This combination can improve the algorithm's ability to reach the global optimum. The proposed HSOS approach works as follows: each organism is first updated by the three phases of the SOS algorithm and then by three-point SQI. If an organism violates a boundary condition, it is reflected back into the search space following the rule given in Eq. (6) [4].

where \(LB_i\) and \(UB_i\) are, respectively, the lower and upper bounds of the \(i\)th variable.
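Equation (6) is not reproduced in this text, so the sketch below assumes a common reflection rule: a coordinate that falls below \(LB_i\) is mirrored to \(2LB_i-x\), and one above \(UB_i\) to \(2UB_i-x\). The final clamp is an extra safeguard added here (a point far outside the box can overshoot the opposite bound after one mirror); it may not be part of the authors' rule.

```python
from typing import List, Sequence


def reflect(org: Sequence[float], lb: Sequence[float], ub: Sequence[float]) -> List[float]:
    """Repair an infeasible organism by mirroring violated coordinates back inside."""
    repaired = []
    for x, lo, hi in zip(org, lb, ub):
        if x < lo:
            x = 2.0 * lo - x      # mirror across the lower bound
        elif x > hi:
            x = 2.0 * hi - x      # mirror across the upper bound
        # Assumed safeguard: clamp in case the mirrored value is still outside.
        repaired.append(min(max(x, lo), hi))
    return repaired
```

For instance, with bounds [0, 10] in every dimension, `reflect([-1.5, 0.5, 12.0], [0, 0, 0], [10, 10, 10])` gives `[1.5, 0.5, 8.0]`.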

The pseudocode and flowchart of the HSOS algorithm for solving the benchmark functions are shown in Fig. 1a, b. The steps of the algorithm can be summarized as follows:

- Step 1: Randomly initialize the ecosystem organisms and evaluate the fitness of each organism.
- Step 2: Main loop:
- Step 2.1: Generate new organisms in the mutualism phase using Eqs. (1) and (2), and repair infeasible organisms of the ecosystem using Eq. (6).
- Step 2.2: Generate new organisms in the commensalism phase using Eq. (4), and repair infeasible organisms of the ecosystem using Eq. (6).
- Step 2.3: Generate new organisms in the parasitism phase.
- Step 2.4: Update the organisms by the SQI method using Eq. (5), and repair infeasible organisms of the ecosystem using Eq. (6).
- Step 3: If the stopping criterion is not satisfied, go to Step 2; otherwise, stop and report the best fitness value obtained.
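The steps above can be put together in one compact, self-contained sketch. It is not the authors' MATLAB implementation: the SOS update rules follow their commonly stated forms [1], the SQI step uses the standard three-point formula [22], the repair function is an assumed reflection-plus-clamp stand-in for Eq. (6), and the budget is checked once per generation, so the run may overshoot `max_fe` slightly. All names are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def hsos(f: Callable[[Vector], float], lb: Sequence[float], ub: Sequence[float],
         eco_size: int = 50, max_fe: int = 20_000, seed: int = 0) -> Tuple[Vector, float]:
    """Minimize f over the box [lb, ub]; needs eco_size >= 3. Returns (best, f(best))."""
    rng = random.Random(seed)
    dim, fe = len(lb), 0

    def evaluate(x: Vector) -> float:
        nonlocal fe
        fe += 1
        return f(x)

    def repair(x: Vector) -> Vector:
        # Assumed stand-in for Eq. (6): mirror about the violated bound, then clamp.
        out = []
        for v, lo, hi in zip(x, lb, ub):
            v = 2 * lo - v if v < lo else (2 * hi - v if v > hi else v)
            out.append(min(max(v, lo), hi))
        return out

    def accept(k: int, cand: Vector) -> None:
        fc = evaluate(cand)          # greedy: keep cand only if it improves organism k
        if fc < fit[k]:
            eco[k], fit[k] = cand, fc

    def partner(i: int) -> int:
        return rng.choice([k for k in range(eco_size) if k != i])

    def best() -> Vector:
        return eco[min(range(eco_size), key=fit.__getitem__)]

    # Step 1: random initial ecosystem.
    eco = [[rng.uniform(lo, hi) for lo, hi in zip(lb, ub)] for _ in range(eco_size)]
    fit = [evaluate(x) for x in eco]

    while fe < max_fe:  # Step 3: budget is checked once per generation
        for i in range(eco_size):
            # Step 2.1: mutualism (assumed Eqs. (1)-(3)), then repair.
            j, b = partner(i), best()
            mutual = [(p + q) / 2 for p, q in zip(eco[i], eco[j])]
            bf1, bf2 = rng.randint(1, 2), rng.randint(1, 2)
            new_i = [x + rng.random() * (g - bf1 * m) for x, g, m in zip(eco[i], b, mutual)]
            new_j = [x + rng.random() * (g - bf2 * m) for x, g, m in zip(eco[j], b, mutual)]
            accept(i, repair(new_i))
            accept(j, repair(new_j))
            # Step 2.2: commensalism (assumed Eq. (4)), then repair.
            j, b = partner(i), best()
            accept(i, repair([x + rng.uniform(-1, 1) * (g - y)
                              for x, g, y in zip(eco[i], b, eco[j])]))
            # Step 2.3: parasitism; the random host j is replaced if the parasite is fitter.
            j, parasite = partner(i), list(eco[i])
            for d in rng.sample(range(dim), rng.randint(1, dim)):
                parasite[d] = rng.uniform(lb[d], ub[d])
            accept(j, parasite)
        # Step 2.4: SQI with two distinct random partners per organism, then repair.
        for i in range(eco_size):
            j, k = rng.sample([m for m in range(eco_size) if m != i], 2)
            new = []
            for a, p, q in zip(eco[i], eco[j], eco[k]):
                num = (p * p - q * q) * fit[i] + (q * q - a * a) * fit[j] + (a * a - p * p) * fit[k]
                den = (p - q) * fit[i] + (q - a) * fit[j] + (a - p) * fit[k]
                new.append(0.5 * num / den if abs(den) > 1e-12 else a)
            accept(i, repair(new))

    k = min(range(eco_size), key=fit.__getitem__)
    return eco[k], fit[k]
```

Usage: `hsos(lambda x: sum(v * v for v in x), [-100.0] * 10, [100.0] * 10, eco_size=20)` minimizes a 10-dimensional sphere function. In this sketch each organism consumes five evaluations per generation (two in mutualism, one each in commensalism, parasitism and SQI).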

5 Experimental studies and discussion

To validate the proposed method, thirteen well-known benchmark functions [10] and the benchmark functions of the CEC2005 [13] and CEC2010 [23] special sessions are considered. Details of the thirteen well-known benchmark functions are given in Table 1. The algorithm is coded in MATLAB 7.10.0 (R2010a). In each table, boldface marks the best result found after a given number of function evaluations.

5.1 Comparison with basic SOS

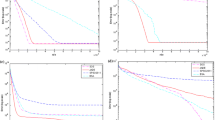

Table 2 compares HSOS with the basic SOS algorithm on the 13 benchmark functions of Table 1 in terms of the absolute error of the function value. The absolute errors are reported after 200,000 fitness evaluations for dimension D \(=\) 30, over 25 runs with Eco-size \(=\) 50. Table 2 also reports the mean and standard deviation of the number of fitness evaluations needed to reach the VTR (value to reach), together with the number of successful runs (SR), where VTR \(=\) 1e−08. From Table 2, the proposed HSOS performs better than SOS on all functions except TF5, and it obtains the optimal result on seven functions (TF1, TF2, TF3, TF4, TF6, TF9 and TF11). Table 2 also shows that the proposed method requires the fewest function (fitness) evaluations to reach the VTR. Figure 2 shows the convergence graphs for nine benchmark functions. Figure 2a–d (functions F1–F4) shows that the convergence curves approach the global optimum linearly as the number of function evaluations increases, while for functions F5, F7, F8, F12 and F13 (Fig. 2e–i) the curves approach the global optimum gradually. It can therefore be concluded that HSOS converges faster than basic SOS.
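The VTR statistics in Table 2 can be computed from per-run convergence traces. The sketch below assumes that SR counts the runs whose absolute error reaches the VTR, that the mean and standard deviation are taken over successful runs only, and that the sample standard deviation (N − 1) is used; the trace format is hypothetical.

```python
import math
import statistics
from typing import List, Optional, Sequence, Tuple

Trace = Sequence[Tuple[int, float]]  # (fitness evaluations used, best absolute error so far)


def fe_to_reach(trace: Trace, vtr: float = 1e-8) -> Optional[int]:
    """First evaluation count at which the absolute error is <= VTR, or None."""
    for fe, err in trace:
        if err <= vtr:
            return fe
    return None


def vtr_summary(traces: List[Trace], vtr: float = 1e-8) -> Tuple[int, float, float]:
    """Return (SR, mean FEs, std FEs); the statistics use successful runs only."""
    hits = [fe for fe in (fe_to_reach(t, vtr) for t in traces) if fe is not None]
    mean = statistics.mean(hits) if hits else math.nan
    std = statistics.stdev(hits) if len(hits) > 1 else math.nan  # sample std (N - 1)
    return len(hits), mean, std
```

With three toy runs that reach the VTR after 2000 and 1000 evaluations and one run that never does, the summary is SR = 2, a mean of 1500 evaluations and a standard deviation of about 707.1.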

5.2 Effect of eco-size (population size) and number of variables (dimension)

To identify the effect of population size (Eco-size) on the 13 benchmark functions given in Table 1, the algorithm is run with population sizes (Eco-size) of 50, 100 and 200. The dimension D is set to 50 and 100, with 200,000 fitness evaluations for D \(=\) 50 and 300,000 for D \(=\) 100. The comparative results of each benchmark function for each strategy (Eco-size), over 25 independent runs, are presented in Tables 3 and 4. Boldface values indicate the best value among the strategies for each function.

Table 3 shows the effect of population size on the 13 benchmark functions with D \(=\) 50 and 200,000 function evaluations, and Table 4 shows the corresponding statistical results for D \(=\) 100 with 300,000 function evaluations. From Table 3, with population size 50, the proposed HSOS produces better results than SOS with the same population size on all functions except F6, F9 and F11. On F6, F9 and F11 both methods produce identical results, so population size has no effect on reaching their global optima with the same number of function evaluations, for either the proposed method or SOS. The proposed method also performs better than SOS when the population size is 100 and 200. Note, however, that neither algorithm achieves a single successful run on any test function with population sizes of 100 and 200, which indicates that a large population size may severely degrade the performance of SOS. This is because, for a fixed budget of function evaluations, the number of iterations decreases significantly as the population size increases. Thus, SOS cannot find high-quality solutions, and incomplete convergence may frequently occur under this condition. That is, if the number of variables is high, the population size should be high, and vice versa. A similar pattern appears in Table 4.
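The trade-off between population size and iterations is simple arithmetic: with a fixed budget, the number of generations is roughly the budget divided by (Eco-size × evaluations per organism per generation). The sketch assumes 4 evaluations per organism per generation for SOS (two in mutualism, one each in commensalism and parasitism) and 5 for HSOS (one extra for SQI), and ignores the evaluations of the initial population; these counts are assumptions, not figures from the paper.

```python
def generations(max_fe: int, eco_size: int, fe_per_organism: int) -> int:
    """Whole generations that fit in the evaluation budget (initial population ignored)."""
    return max_fe // (eco_size * fe_per_organism)


# Hypothetical accounting: SOS ~ 4 evaluations per organism per generation,
# HSOS ~ 5 (the extra one for the SQI step). Budget as in Table 3: 200,000.
for eco_size in (50, 100, 200):
    print(eco_size, generations(200_000, eco_size, 4), generations(200_000, eco_size, 5))
# prints:
# 50 1000 800
# 100 500 400
# 200 250 200
```

Under these assumptions, quadrupling the eco-size from 50 to 200 cuts SOS from 1000 to 250 generations for the same budget.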

6 Experimental results of thirteen benchmark functions (given in Table 1)

Table 5 presents the experimental results on the 13 benchmark functions with dimension D \(=\) 10 and D×10,000 fitness evaluations. These results are compared with state-of-the-art algorithms, namely DE [9], EPSDE [17], PSO [34] and CLPSO [18]. From Table 5, HSOS is superior to DE on ten functions, to EPSDE on seven, to PSO on eleven and to CLPSO on ten. Table 6 shows the statistical ranks of the algorithms obtained by the Friedman test on the mean performance over the 13 benchmark functions. From Table 6, the overall performance of HSOS on these benchmark functions is significantly better than that of the compared algorithms.
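The Friedman ranks used here and in the later tables are typically computed as follows: on each problem the algorithms are ranked by mean error (rank 1 is best, ties share the average rank), the ranks are averaged over problems, and the Friedman statistic \(\chi_F^2=\frac{12N}{k(k+1)}\left[\sum_j R_j^2-\frac{k(k+1)^2}{4}\right]\) is formed from the average ranks \(R_j\) of \(k\) algorithms on \(N\) problems. The sketch below is a generic illustration of that procedure, not the authors' script.

```python
from typing import List, Sequence


def average_ranks(scores: Sequence[Sequence[float]]) -> List[float]:
    """scores[p][a] = mean error of algorithm a on problem p (lower is better)."""
    n_alg = len(scores[0])
    totals = [0.0] * n_alg
    for row in scores:
        order = sorted(range(n_alg), key=lambda a: row[a])
        pos = 0
        while pos < n_alg:
            end = pos
            while end + 1 < n_alg and row[order[end + 1]] == row[order[pos]]:
                end += 1
            tied_rank = (pos + end) / 2 + 1  # 1-based; tied algorithms share the average
            for q in range(pos, end + 1):
                totals[order[q]] += tied_rank
            pos = end + 1
    return [t / len(scores) for t in totals]


def friedman_statistic(avg_ranks: Sequence[float], n_problems: int) -> float:
    """Friedman chi-square statistic from the average ranks."""
    k = len(avg_ranks)
    return 12 * n_problems / (k * (k + 1)) * (sum(r * r for r in avg_ranks)
                                              - k * (k + 1) ** 2 / 4)
```

For a toy 4 × 3 table where the first algorithm wins three of four problems, the average ranks are 1.25, 2.0 and 2.75 and the statistic is 4.5; the algorithm with the lowest average rank receives final rank one.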

6.1 Experimental results of CEC2005 benchmark functions

Table 7 compares the results on the benchmark functions of the CEC 2005 special session on real-parameter optimization with dimension D \(=\) 10. These functions can be divided into four classes:

(1) Unimodal Functions F1–F5;
(2) Basic Multimodal Functions F6–F12;
(3) Expanded Multimodal Functions F13–F14;
(4) Hybrid Composition Functions F15–F25.

These results are compared with other evolutionary algorithms, namely CMA-ES [19], DE/best/2/bin (F \(=\) 0.5, CR \(=\) 0.9) [16] and DE/rand/1/bin (F \(=\) 0.5, CR \(=\) 0.9) [9], with 100,000 fitness evaluations over 25 independent runs. The best result for each function is shown in bold in Table 7, and Table 8 gives the statistical ranks of all algorithms obtained by the Friedman rank test on the mean performance over all functions. From Table 7, the proposed HSOS algorithm performs better than the other algorithms on twelve functions in terms of mean performance, and from Table 8 it obtains rank one in the Friedman test. Hence, HSOS is significantly better than the others from a statistical point of view.

The proposed HSOS method is also tested on twelve CEC2005 benchmark functions with dimension D \(=\) 50. Following reference [20], this experiment uses an eco-size of 50 and 500,000 fitness evaluations over 100 runs. The results are compared with seven state-of-the-art PSO variants (FIPS [26], UPSO [25], CLPSO [18], \(\chi\)PSO [28], BBPSO [29], DMSPSO [30] and DMeSR-PSO [20]) and five meta-heuristic algorithms (GA [5], BBO [8], DE [9], PSMBA [31] and ABC [32]) in terms of the median, mean and standard deviation for each function. Table 9 reports the results for the first six CEC 2005 benchmark functions (F1–F6) with dimension 50; all results except those of HSOS are taken from [20]. From Table 9, HSOS performs better than the other algorithms on F1, F4 and F6; DMeSR-PSO gives the best results on F2 and F3, and DE gives the best result on F5.

Table 10 reports the performance on the next six CEC2005 benchmark functions (F7–F12) in terms of the median, mean and standard deviation of each algorithm. From the table, HSOS performs much better than the other algorithms on F8, F9, F10, F11 and F12 in terms of mean performance, while PSMBA performs best on F7. Table 11 gives the statistical ranks obtained by the Friedman rank test on the mean performance of all algorithms. From Table 11, HSOS has the lowest average rank, so its final rank is one. HSOS therefore performs significantly better than the other algorithms.

6.2 Experimental results on ten well-known and fourteen CEC2005 benchmark functions

In this experiment, 24 benchmark functions from the literature are considered; their details are given in reference [24]. For comparison, the parameter settings are the same as in [24]. The results are presented in Table 12 and compared with state-of-the-art DE variants, namely DE/rand/1/bin (F \(=\) 0.5, CR \(=\) 0.9) [9], ODE [14] and OXDE [24]. In Table 12, all results except those of HSOS are taken from [24], and the best results are highlighted in boldface. Table 12 shows that the proposed method performs better on seventeen functions. The ranks obtained by the Friedman rank test on the mean results over all problems are presented in Table 13; according to these ranks, the proposed method performs significantly better than the other methods.

7 Experimental results of CEC2010 benchmark functions

In this section, the benchmark functions of the CEC2010 special session on large-scale global optimization are used to assess the efficiency of the proposed method. The parameters for this experiment are an eco-size of 50 and 120,000 fitness evaluations over 25 runs. The results are presented in Table 14 in terms of the best, worst, mean, median and standard deviation, and are compared with CLPSO [18], FIPS [26], UPSO [25] and CPSO-H [27]. Comparing mean results, Table 14 shows that the proposed HSOS method performs better than the other methods on fourteen benchmark functions. The statistical ranks from the Friedman rank test on the mean performance over all functions are presented in Table 15, where HSOS obtains rank one. HSOS therefore performs significantly better on large-scale global optimization problems.

7.1 Experimental results on six benchmark functions

In this experiment, six well-known benchmark functions are considered; their details are given in [3]. The results are compared with state-of-the-art PSO variants, namely GPSO [34], LPSO [35], VPSO [36], FIPS [26], DWS-PSO [30], CLPSO [18], APSO [37] and CSPSO [3]. The results are presented in Table 16, where all results except those of HSOS are taken from reference [3] and the best results are shown in bold. From Table 16, HSOS performs better than the other methods on all six problems in terms of mean solution. The ranks obtained by the Friedman test on the mean results over all problems are presented in Table 17; HSOS obtains the lowest average rank and hence final rank one. It can therefore be concluded that HSOS performs significantly better than the other methods.

8 Real world applications

In this section, the formulation of two real world problems and the experimental results of the problems are discussed.

8.1 Problem formulation

The performance of the proposed HSOS method is evaluated on two real-life non-linear optimization problems, namely the frequency-modulated (FM) sound parameter identification problem and the spread spectrum radar polyphase code design problem [2]. The problems are formally stated as follows [2]:

RP 1: Frequency-modulated sound parameter identification problem

Frequency-modulated (FM) sound wave synthesis plays an important role in several modern music systems. The FM synthesizer problem is a six-dimensional parameter optimization problem in which the vector to be optimized is \(X=\{a_1,\omega_1,a_2,\omega_2,a_3,\omega_3\}\), the parameters of the sound wave given in Eq. (7). The goal is to generate a sound, as in Eq. (7), that is similar to the target sound given in Eq. (8); the problem is highly complex and multimodal, with strong epistasis. The optimum value of this problem is \(f(X^*)=0\). The estimated and target sound waves are expressed as

respectively (where \(\theta =\frac{2\pi }{100}\)), and each parameter lies in the range [−6.4, 6.35]. The fitness function is defined as the sum of the squared errors between the estimated wave (Eq. 7) and the target wave (Eq. 8) as follows:
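For concreteness, the sketch below codes the FM objective following the CEC2011 definition [2] as we understand it: \(y(t)=a_1\sin(\omega_1 t\theta+a_2\sin(\omega_2 t\theta+a_3\sin(\omega_3 t\theta)))\), a target wave with parameters (1.0, 5.0, −1.5, 4.8, 2.0, 4.9), and the squared error summed over \(t=0,\ldots,100\). The target values and the summation range come from that report rather than from this text, so treat them as assumptions.

```python
import math
from typing import Sequence

THETA = 2 * math.pi / 100
TARGET = (1.0, 5.0, -1.5, 4.8, 2.0, 4.9)  # assumed (a1, w1, a2, w2, a3, w3) of the target wave


def fm_wave(p: Sequence[float], t: int) -> float:
    """y(t) = a1*sin(w1*t*theta + a2*sin(w2*t*theta + a3*sin(w3*t*theta)))."""
    a1, w1, a2, w2, a3, w3 = p
    return a1 * math.sin(w1 * t * THETA
                         + a2 * math.sin(w2 * t * THETA + a3 * math.sin(w3 * t * THETA)))


def fm_fitness(p: Sequence[float]) -> float:
    """Sum of squared errors between the estimated and target waves for t = 0..100."""
    return sum((fm_wave(p, t) - fm_wave(TARGET, t)) ** 2 for t in range(101))
```

Evaluating `fm_fitness(TARGET)` gives 0.0, matching \(f(X^*)=0\); every target parameter lies inside [−6.4, 6.35].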

RP 2: Spread spectrum radar polyphase code design problem

The problem is based on the properties of the aperiodic autocorrelation function and on the assumption of coherent radar pulse processing in the receiver. This design problem is widely used in radar system design and has no polynomial-time solution. It is modelled as a min–max non-linear, non-convex optimization problem with continuous variables and numerous local optima, and can be expressed as follows:

where \(X=\{(x_1,x_2,\ldots,x_D)\in \mathbb{R}^D \mid 0\le x_j\le 2\pi,\ j=1,2,\ldots,D\}\) and \(m=2D-1\), with

Here, the objective is to minimize the modulus of the largest sample of the so-called autocorrelation function, which is related to the complex envelope of the compressed radar pulse at the optimal receiver output, while the variables represent symmetrized phase differences.
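A sketch of the radar objective is given below, following the CEC2011 formulation [2] as we understand it: \(f(X)=\max\{\varphi_1(X),\ldots,\varphi_{2m}(X)\}\) with \(\varphi_{2i-1}(X)=\sum_{j=i}^{D}\cos\big(\sum_{k=|2i-j-1|+1}^{j}x_k\big)\) for \(i=1,\ldots,D\), \(\varphi_{2i}(X)=0.5+\sum_{j=i+1}^{D}\cos\big(\sum_{k=|2i-j|+1}^{j}x_k\big)\) for \(i=1,\ldots,D-1\), and \(\varphi_{m+i}(X)=-\varphi_i(X)\) for \(i=1,\ldots,m\). These component formulas are taken from that report, not from this text, and should be checked against it.

```python
import math
from typing import List, Sequence


def radar_fitness(x: Sequence[float]) -> float:
    """max(phi_1, ..., phi_2m) with m = 2D - 1; indices in comments are 1-based."""
    d = len(x)
    m = 2 * d - 1
    phi: List[float] = [0.0] * (2 * m)  # phi[0] holds phi_1, phi[1] holds phi_2, ...

    def s(lo: int, hi: int) -> float:   # sum_{k=lo}^{hi} x_k (1-based, inclusive)
        return sum(x[lo - 1:hi])

    for i in range(1, d + 1):           # odd components phi_{2i-1}
        phi[2 * i - 2] = sum(math.cos(s(abs(2 * i - j - 1) + 1, j)) for j in range(i, d + 1))
    for i in range(1, d):               # even components phi_{2i}
        phi[2 * i - 1] = 0.5 + sum(math.cos(s(abs(2 * i - j) + 1, j))
                                   for j in range(i + 1, d + 1))
    for i in range(m):                  # phi_{m+i} = -phi_i, so max(phi) = max |phi_i|
        phi[m + i] = -phi[i]
    return max(phi)
```

Because the second half of the components mirrors the first with the opposite sign, the objective is the largest absolute autocorrelation sample and is never negative. For example, with D = 2 and all phases zero the components are 2, 1.5 and 1, so the objective is 2.0.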

8.2 Results and discussions

RP1 is the six-dimensional parameter estimation problem for frequency-modulated (FM) sound waves, and for RP2 the dimension is set to 20. For each problem, 25 independent runs are performed with Eco-size \(=\) 50 and 150,000 function evaluations. The best, mean and standard deviation for the two problems are reported in Table 18 and compared with those of other state-of-the-art algorithms. From Table 18, HSOS reaches the optimal solution for both RP1 and RP2, and its mean (accuracy) and standard deviation (robustness) are better than those of the other algorithms. The convergence graphs of the two problems are shown in Fig. 3a, b; they show that the proposed hybrid method converges faster than the other algorithms.

9 Conclusion

This paper presents a new hybrid method, HSOS, for large-scale non-linear complex optimization problems. To validate the proposed algorithm, 13 well-known benchmark functions and the benchmark suites of the CEC2005 special session on real-parameter optimization and the CEC2010 special session on large-scale global optimization are considered, together with two real-life problems. In HSOS, the steps of SOS are executed first to enhance its exploitation capability, and the SQI method is then applied to enhance the global search ability of SOS. Since the algorithm has only two common control parameters, population size and number of function evaluations (generations), their effect on performance is investigated by considering different population sizes and numbers of function evaluations. The results of HSOS are compared with those of other state-of-the-art optimization algorithms from the literature on the considered benchmark problems, and extensive experiments are conducted to investigate its performance. The Friedman rank test is applied to the mean performance of all algorithms on each function. On the basis of these investigations, it may be concluded that HSOS outperforms the other algorithms, in terms of both numerical results and statistical tests, on multimodal functions and large-scale optimization problems.

Further, the proposed HSOS algorithm can be applied to constrained and multi-objective engineering optimization problems and in industrial settings, since it is suitable for optimizing systems with a large number of variables and objectives. It can also be applied to safety-critical system performance, business models, power system problems, the modelling, simulation and optimization of complex systems, and service engineering design problems.

References

Cheng MY, Prayogo D (2014) Symbiotic Organisms Search: a new metaheuristic optimization algorithm. Comput Struct 139:98–112

Das S, Suganthan PN (2010) Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems, Technical Report, December. http://www.ntu.edu.sg/home/EPNSugan

Gao W-f, Liu S-y, Huang L-l (2012) Particle swarm optimization with chaotic opposition-based population initialization and stochastic search technique. Commun Nonlinear Sci Numer Simulat 17:4316–4327

Gong W, Cai Z, Ling CX (2011) DE/BBO: a hybrid differential evolution with biogeography-based optimization for global numerical optimization. Soft Comput 15:645–665

Holland JH (1992) Adaptation in natural and artificial systems. University of Michigan Press. ISBN: 0-262-58111-6

Marinaki M, Marinakis Y (2015) A hybridization of clonal selection algorithm with iterated local search and variable neighborhood search for the feature selection problem. Memetic Comput 7:181–201

Osman IH, Laporte G (1996) Metaheuristics: a bibliography. Ann Oper Res 63:513–623

Simon D (2008) Biogeography-based optimization. IEEE Trans Evolut Comput 12(5):702–713

Storn R, Price K (1997) Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J Global Optim 11:341–359

Tsai HC (2015) Roach infestation optimization with friendship centers. Eng Appl Artif Intell 39:109–119

Sorensen K (2015) Metaheuristics–the metaphor exposed. Int Trans Oper Res 22:3–18

Crepinšek M, Liu S-H, Mernik M (2013) Exploration and exploitation in evolutionary algorithms: a survey. ACM Comput Surveys 45(3):35

Suganthan PN, Hansen N, Liang JJ, Deb K, Chen YP, Auger A, Tiwari S (2005) Problem definitions and evaluation criteria for the CEC 2005 special session on real-parameter optimization, Nanyang Tech. Univ., Singapore and KanGAL, Kanpur Genetic Algorithms Lab., IIT, Kanpur, India, Tech. Rep., Rep. No. 2005005, May 2005

Rahnamayan S, Tizhoosh H, Salama M (2008) Opposition-based differential evolution. IEEE Trans Evol Comput 12(1):64–79

Bhattacharjee K, Bhattacharya A, Nee Dey SH (2015) Backtracking search optimization based economic environmental power dispatch problems. Int J Electr Power Energy Syst 73:830–842

Storn R (1996) On the usage of differential evolution for function optimization. In: Biennial Conference of the North American Fuzzy Information Processing Society (NAFIPS), IEEE, Berkeley, pp 519–523

Mallipeddi R, Suganthan PN, Pan QK, Tasgetiren MF (2011) Differential evolution algorithm with ensemble of parameters and mutation strategies. Appl Soft Comput 11:1679–1696

Liang JJ, Qin AK, Suganthan PN, Baskar S (2006) Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans Evol Comput 10(3):281–295

Hansen N, Ostermeier A (2001) Completely derandomized self-adaptation in evolution strategies. Evol Comput 9(2):159–195

Tanweer MR, Suresh S, Sundararajan N (2015) Dynamic mentoring and self-regulation based particle swarm optimization algorithm for solving complex real-world optimization problems. Inf Sci. doi:10.1016/j.ins.2015.07.035

Ong YS, Lim MH, Chen XS (2010) Research frontier: memetic computation—past, present & future. IEEE Comput Intell Mag 5(2):24–36

Deep K, Das KN (2008) Quadratic approximation based hybrid genetic algorithm for function optimization. Appl Math Comput 203(1):86–98

Tang K, Li X, Suganthan PN, Yang Z, Weise T (2010) Benchmark Functions for the CEC’2010 Special Session and Competition on Large-Scale Global Optimization. July 8, 2010

Wang Y, Cai Z, Zhang Q (2012) Enhancing the search ability of differential evolution through orthogonal crossover. Inf Sci 185:153–177

Parsopoulos KE, Vrahatis MN (2004) UPSO: a unified particle swarm optimization scheme. Lect Ser Comput Sci 1:868–873

Mendes R, Kennedy J, Neves J (2004) The fully informed particle swarm: simpler, maybe better. IEEE Trans Evol Comput 8:204–210

van den Bergh F, Engelbrecht AP (2004) A cooperative approach to particle swarm optimization. IEEE Trans Evol Comput 8:225–239

Clerc M, Kennedy J (2002) The particle swarm-explosion, stability, and convergence in a multidimensional complex space. IEEE Trans Evol Comput 6(1):58–73

Kennedy J (2003) Bare bones particle swarms. In: Proceedings of the IEEE SIS, pp 80–87

Liang JJ, Suganthan PN (2005) Dynamic multi-swarm particle swarm optimizer. In: Proceedings of the IEEE SIS, pp 210–224

Mo H, Liu L, Xu L (2014) A power spectrum optimization algorithm inspired by magnetotactic bacteria. Neural Comput Appl 25(7):1823–1844

Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (ABC) algorithm. J Global Optim 39(3):459–471

Chen X, Ong YS, Lim MH, Tan KC (2011) A multi-facet survey on memetic computation. IEEE Trans Evol Comput 15(5):591–607

Shi Y, Eberhart R (1998) A modified particle swarm optimizer. In: IEEE International Conference on Computational Intelligence, pp 69–73

Kennedy J, Mendes R (2002) Population structure and particle swarm performance. In: Proceedings of the IEEE Congress on Evolutionary Computation, Honolulu, HI, pp 1671–1676

Kennedy J, Mendes R (2006) Neighborhood topologies in fully informed and best-of-neighborhood particle swarms. IEEE Trans Syst Man Cybern Part C 36(4):515–519

Zhan ZH, Zhang J, Li Y, Chung HSH (2009) Adaptive particle swarm optimization. IEEE Trans Syst Man Cybern Part B 39(6):1362–1381

Pan I, Das S (2013) Design of hybrid regrouping PSO-GA based sub-optimal networked control system with random packet losses. Memetic Comput 5:141–153

Acknowledgments

The authors would like to thank Dr. P.N. Suganthan for providing the source code of some PSO variants. The authors would also like to express their sincere thanks to the referees and the editor for their valuable comments and suggestions, which have been of immense help in improving the paper.


Cite this article

Nama, S., Kumar Saha, A. & Ghosh, S. A Hybrid Symbiosis Organisms Search algorithm and its application to real world problems. Memetic Comp. 9, 261–280 (2017). https://doi.org/10.1007/s12293-016-0194-1


DOI: https://doi.org/10.1007/s12293-016-0194-1