Abstract

Nature-inspired algorithms take inspiration from living things and imitate their behaviours to build robust systems in engineering and computer science. The symbiotic organisms search (SOS) algorithm is a recent metaheuristic inspired by the symbiotic interactions between organisms in an ecosystem. Organisms develop symbiotic relationships such as mutualism, commensalism, and parasitism in order to survive in the ecosystem. SOS was introduced to solve continuous benchmark and engineering problems. It has been shown to be robust and to converge faster than traditional metaheuristic algorithms such as the genetic algorithm, particle swarm optimization, differential evolution, and artificial bee colony. Researchers' interest in using SOS for optimization problems is growing steadily, owing to its successful application in science and engineering. This paper therefore presents a comprehensive survey of SOS advances and applications, which should benefit researchers studying the SOS algorithm.


1 Introduction

Optimization algorithms are mostly inspired by nature, and many are based on swarm intelligence. Swarm intelligence is an area of artificial intelligence (AI) concerned with collective behaviour within distributed and self-organized systems [1,2,3,4,5]. Different optimization algorithms draw on different inspirations, and they have been widely applied to optimization problems in science, technology, and engineering [6,7,8,9,10]. The traditional nature-inspired optimization algorithms include evolutionary algorithms like GA [11] and DE [12], and swarm intelligence algorithms like PSO [13], bees algorithm (BA) [14], particle bee algorithm (PBA) [15], ant colony optimization (ACO) [16, 17], and artificial bee colony (ABC) [18]. Recently, the area of swarm intelligence has witnessed the development of promising optimization algorithms such as symbiotic organisms search (SOS) [19], cuckoo search (CS) [20], bat algorithm, firefly algorithm (FA) [21], and cat swarm optimization (CSO) [22], while newer algorithms like Beetle Antennae Search (BAS) [23, 24] and Eagle Perching Optimizer (EPO) [25] have emerged very recently. Figure 1 shows the position of the newly introduced SOS within the context of metaheuristics.

The traditional algorithms like PSO, GA, and ACO have proven effective and robust in solving various classes of optimization problems. However, these algorithms have limitations such as entrapment in local minima, high computational complexity, slow convergence, and unsuitability for some classes of objective functions. SOS is a stochastic kind of metaheuristic algorithm that searches for a set of solutions by means of randomization. It is an SI-based optimization technique introduced by Cheng and Prayogo [19], motivated by the interactive behaviour organisms rely on for survival. Some organisms in an ecosystem depend on other species for their survival, and this dependency-based survival is called symbiotic association. Mutualism, commensalism, and parasitism are the main forms of symbiotic association in an ecosystem. Mutualism is when two species interact for mutual benefit, that is, both benefit from the relationship. Commensalism is when two species develop a relationship in which one species benefits and the other is neither helped nor harmed. Parasitism is when two species develop a relationship in which one species benefits while the other is harmed.

In the SOS algorithm, the mutualism and commensalism phases concentrate on generating new organisms and selecting the best organism for survival. These phases enable the search procedure to discover diverse solutions in the search space, thereby improving the exploration ability of the algorithm [26]. The parasitism phase, on the other hand, helps the search procedure avoid being trapped in local optima, thus improving the exploitation ability of the algorithm [26]. The standard SOS algorithm was proposed to handle continuous benchmark and engineering problems, and it was shown to be robust and to converge faster than GA [11], PSO [13], DE [12], BA [14], and PBA [15], which are traditional metaheuristic algorithms. The three phases of the SOS algorithm are simple to operate, with only simple mathematical operations to code. Further, unlike the competing algorithms, SOS does not require algorithm-specific tuning parameters, which enhances its performance stability: GA needs its crossover and mutation rates tuned, and PSO needs its cognitive factor, social factor, and inertia weight tuned. This feature of SOS is considered an advantage, since improper tuning of such parameters could prolong the computation time and cause premature convergence [19, 27].

We thus conclude that the SOS algorithm is robust, easy to implement, and able to solve various numerical optimization problems while using fewer control parameters than competing algorithms. As a result, researchers' interest in using SOS for optimization problems is growing steadily. This paper therefore presents a comprehensive survey of SOS advances and applications, which should benefit researchers studying the SOS algorithm.

The contributions of this paper are as follows:

Comprehensive presentation of SOS algorithm and basic steps of SOS algorithms.

Review of advances of SOS algorithm and its applications.

Review of the modifications of SOS for handling continuous, discrete, multi-objective, and large-scale optimization problems.

Emphasis on the future research direction of SOS.

The structure of the remaining parts of the paper is as follows: Sect. 2 discusses the biological foundations of the SOS algorithm, explains its features, and presents its structure. Section 3.1 covers the evolution of SOS algorithms, under which various modified and hybrid versions are discussed. An extensive review of the areas in which SOS algorithms have been applied is presented in Sect. 4. The prominent application areas include combinatorial, continuous, and multi-objective optimization. Besides optimization, SOS has been applied to engineering and other real-world problems such as power systems, transportation, design and optimization of engineering structures, economic dispatch problems, wireless communication, and machine learning. Section 5 presents discussion and potential future research, and finally, Sect. 6 draws concluding remarks on the paper.

2 Symbiotic organisms search

2.1 Foundations of SOS

Symbiotic organisms search (SOS) was introduced in [19], motivated by the interactive behaviour organisms rely on for survival. The principal idea behind SOS is the simulation of the forms of symbiotic association in an ecosystem, which comprises three stages. In the first stage, called the mutualism phase, a pair of organisms interact for mutual benefit and neither of them is harmed by the interaction. A classic example of mutualism is the interaction between bees and flowers. Bees collect nectar from flowers for the production of honey, and the nectar collection process transfers pollen grains, which aids pollination. Both organisms therefore benefit from the relationship. During the second stage, called the commensalism phase, a pair of organisms engage in a symbiotic relation where one of the organisms benefits from the relationship and the other neither benefits nor is harmed. The relationship between remora fish and sharks is a typical example of commensalism. The remora rides on the shark for food, and the shark neither benefits nor is harmed. During the third stage, called the parasitism phase, a pair of organisms engage in a symbiotic relation where one of the organisms benefits from the relationship and the other is harmed. An example of parasitic association is the relationship between the anopheles mosquito and its human host. An anopheles mosquito transmits the plasmodium parasite to the human host, which could cause the host's death if his or her system cannot fight off the parasite.

2.2 Characteristics of SOS

The SOS algorithm is population (ecosystem) based with a pre-set size called eco_size. An initial ecosystem is randomly generated similar to other evolutionary algorithms (EAs). An organism (individual) \(X_i\) within the ecosystem represents a candidate solution to a given optimization problem. \(X_i\) is a D-dimensional vector of real values, where D is the dimension of a given optimization problem. Then, mutualism, commensalism, and parasitism phases are used to improve certain organisms, where an organism is replaced if its new solution is better than the old one. The algorithm iterates until the stopping criterion is reached.
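The initialization and replacement rule described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names `init_ecosystem`, `sphere`, and `greedy_replace`, the box bounds, and the choice of a minimization objective are all hypothetical and are not taken from [19].

```python
import random


def init_ecosystem(eco_size, lower, upper, rng):
    """Return eco_size organisms, each a D-dimensional list drawn uniformly within the bounds."""
    return [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
            for _ in range(eco_size)]


def sphere(x):
    """Illustrative objective to minimize (an assumption, not part of SOS itself)."""
    return sum(v * v for v in x)


def greedy_replace(old, new, f):
    """Keep the new organism only if it is strictly better (lower f) than the old one."""
    return new if f(new) < f(old) else old


rng = random.Random(0)
eco = init_ecosystem(eco_size=5, lower=[-10.0] * 3, upper=[10.0] * 3, rng=rng)
```

Each phase of SOS then produces a candidate organism and passes it through a rule like `greedy_replace`, so the fitness of any organism never gets worse from one iteration to the next.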

2.2.1 Mutualism phase

During the mutualism phase, the algorithm tries to improve an organism \(X_i\) and an arbitrary organism \(X_j\) \((i \ne j)\) by moving their positions towards the best organism \(X_{\mathrm{best}}\), taking into consideration the current mean (mutual vector) of \(X_i\) and \(X_j\), which represents the qualities of the two organisms in the current generation. Equations (1) and (2) model how an organism's improvement may be influenced by the difference between the best organism and the qualities of the mutual organisms. The parameters \(r_1\), \(r_2\), and \(B_{F}\) provide a source of randomness: \(r_1(0,1)\) and \(r_2(0,1)\) are uniformly generated random numbers in the interval 0 to 1, and \(B_{F}\) is the benefit factor, which is either 1 or 2 and emphasizes the importance of the benefit of the mutual relationship.
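In the standard formulation of [19], the mutualism updates are commonly written as follows. This is a reference sketch: the symbols \(X_{i}^{\mathrm{new}}\), \(X_{j}^{\mathrm{new}}\), \(M_V\), \(B_{F1}\), and \(B_{F2}\), and the assignment of equation numbers, are notational assumptions.

\[ X_{i}^{\mathrm{new}} = X_i + r_1(0,1) \times \left( X_{\mathrm{best}} - M_V \times B_{F1} \right) \qquad (1) \]

\[ X_{j}^{\mathrm{new}} = X_j + r_2(0,1) \times \left( X_{\mathrm{best}} - M_V \times B_{F2} \right) \qquad (2) \]

\[ M_V = \frac{X_i + X_j}{2} \qquad (3) \]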

where \(r_1(0,1)\) and \(r_2(0,1)\) are vectors of uniformly distributed random numbers between 0 and 1; \(i=1,2,3,...,\hbox {ecosize}\); \(j \in \{1, 2, 3, \ldots ,\hbox {ecosize}| j \ne i\}\); ecosize is the number of organisms in the search space.
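A mutualism step along these lines might look as follows in Python. It is a sketch only: the function name `mutualism` and the use of plain lists are assumptions, and the update follows the commonly cited form of the standard SOS (candidate = organism + random vector × (best − mutual vector × benefit factor)).

```python
import random


def mutualism(x_i, x_j, x_best, rng):
    """Return candidate replacements for x_i and x_j after one mutualism step."""
    dim = len(x_i)
    # Mutual vector: element-wise mean of the two interacting organisms.
    mutual = [(a + b) / 2.0 for a, b in zip(x_i, x_j)]
    # Benefit factors are drawn independently as either 1 or 2.
    bf1, bf2 = rng.choice((1, 2)), rng.choice((1, 2))
    # r1 and r2 are vectors of uniform random numbers in [0, 1).
    r1 = [rng.random() for _ in range(dim)]
    r2 = [rng.random() for _ in range(dim)]
    new_i = [x_i[d] + r1[d] * (x_best[d] - mutual[d] * bf1) for d in range(dim)]
    new_j = [x_j[d] + r2[d] * (x_best[d] - mutual[d] * bf2) for d in range(dim)]
    return new_i, new_j
```

The candidates would then be accepted only if they improve on \(X_i\) and \(X_j\), respectively.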

2.2.2 Commensalism phase

During the commensalism phase, an organism \(X_i\) tries to improve itself by interacting with an arbitrary organism \(X_j\), where i is not equal to j. The improvement is attempted by moving the position of \(X_i\) towards the position of the best organism \(X_{\mathrm{best}}\), taking into account the current position of the arbitrary organism \(X_j\). Equation (4) models how the improvement of an organism may be influenced by the difference between the best organism and an arbitrary organism \(X_j\). The parameter r provides a source of randomness; \(r(-1,1)\) is a uniformly generated random number in the interval −1 to 1.
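In the standard formulation of [19], the commensalism update is commonly written as follows. This is a reference sketch: the symbol \(X_{i}^{\mathrm{new}}\) and the equation number are notational assumptions.

\[ X_{i}^{\mathrm{new}} = X_i + r(-1,1) \times \left( X_{\mathrm{best}} - X_j \right) \qquad (4) \]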

where \(r(-\,1,1)\) is a vector of uniformly distributed random numbers between \(-\,1\) and 1. \(i=1,2,3, \ldots ,\hbox {ecosize}\); \(j \in \{1, 2, 3, \ldots ,\hbox {ecosize} | j \ne i\}\); ecosize is the number of organisms in the search space.
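A commensalism step can be sketched in Python as below. The function name `commensalism` is hypothetical, and the update follows the commonly cited standard form (candidate = \(X_i\) + random vector in [−1, 1] × (best − \(X_j\))).

```python
import random


def commensalism(x_i, x_j, x_best, rng):
    """Return a candidate replacement for x_i; x_j itself is left untouched."""
    return [a + rng.uniform(-1.0, 1.0) * (b - c)
            for a, b, c in zip(x_i, x_best, x_j)]
```

Only \(X_i\) can change in this phase, which mirrors the biological picture in which the second organism neither benefits nor is harmed.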

2.2.3 Parasitism phase

In the parasitism phase, an artificial parasite called the parasite vector is created by cloning the ith organism \(X_i\) and modifying it using randomly generated numbers. Then, \(X_j\) is randomly selected from the ecosystem, and the fitness values of the parasite vector and \(X_j\) are computed. If the parasite vector is fitter than \(X_j\), then \(X_j\) is replaced by the parasite vector; otherwise, \(X_j\) survives to the next generation of the ecosystem and the parasite vector is discarded. This phase increases the exploitation and exploration capability of the algorithm by randomly removing inactive solutions and introducing active ones. Consequently, premature convergence could be avoided and the convergence rate could be improved.
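The parasitism step might be sketched as follows. The text does not specify which dimensions of the clone are modified, so this sketch assumes that a random non-empty subset of dimensions is re-drawn uniformly within the bounds; the function name `parasitism` and the minimization convention are also assumptions.

```python
import random


def parasitism(eco, i, f, lower, upper, rng):
    """Run one parasitism step for organism i; mutates eco in place, returns the host index j."""
    parasite = list(eco[i])  # clone X_i
    dim = len(parasite)
    # Assumption: re-draw a random non-empty subset of dimensions within the bounds.
    for d in rng.sample(range(dim), rng.randint(1, dim)):
        parasite[d] = rng.uniform(lower[d], upper[d])
    # Pick a host X_j different from X_i.
    j = rng.choice([k for k in range(len(eco)) if k != i])
    # The parasite replaces the host only if it is fitter (lower f).
    if f(parasite) < f(eco[j]):
        eco[j] = parasite
    return j
```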

2.3 Structure of SOS

The pseudocode of the SOS algorithm is presented in Algorithm 1. The population of organisms is initialized, typically using uniformly generated random numbers. The SOS procedure is performed within the while loop (lines 2–32 in Algorithm 1), which comprises the following steps. Firstly, the mutual vector and benefit factor parameters are computed, and then the mutualism phase modifies the current solution and a randomly selected solution (lines 4–17 in Algorithm 1). Then, the commensalism phase is applied to modify the current solution and introduce exploration into the search space (lines 18–23 in Algorithm 1). Finally, the parasitism phase is applied to prevent the search procedure from getting trapped in local optima (lines 23–30 in Algorithm 1). The process stops when the stopping criteria are reached.
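The overall loop can be sketched compactly in Python. This is not a transcription of Algorithm 1 and its line numbers do not correspond to it; it is an illustrative sketch assuming minimization, box bounds with clipping, a fixed iteration budget as the stopping criterion, and hypothetical names (`sos`, `sphere`, `other`, `try_replace`).

```python
import random


def sphere(x):
    """Illustrative objective to minimize."""
    return sum(v * v for v in x)


def sos(f, lower, upper, eco_size=20, max_iter=100, seed=0):
    """Minimal SOS sketch; returns (best_organism, best_fitness). Requires eco_size >= 2."""
    rng = random.Random(seed)
    dim = len(lower)
    eco = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
           for _ in range(eco_size)]
    fit = [f(x) for x in eco]

    def best():
        return eco[min(range(eco_size), key=fit.__getitem__)]

    def other(i):
        # Random index different from i.
        j = rng.randrange(eco_size - 1)
        return j if j < i else j + 1

    def try_replace(k, cand):
        # Clip to the bounds (an assumption), then accept only if better.
        cand = [min(max(v, lo), hi) for v, lo, hi in zip(cand, lower, upper)]
        fc = f(cand)
        if fc < fit[k]:
            eco[k], fit[k] = cand, fc

    for _ in range(max_iter):
        for i in range(eco_size):
            # Mutualism phase: both X_i and X_j may improve.
            j, xb = other(i), best()
            bf1, bf2 = rng.choice((1, 2)), rng.choice((1, 2))
            mv = [(a + b) / 2.0 for a, b in zip(eco[i], eco[j])]
            new_i = [eco[i][d] + rng.random() * (xb[d] - mv[d] * bf1) for d in range(dim)]
            new_j = [eco[j][d] + rng.random() * (xb[d] - mv[d] * bf2) for d in range(dim)]
            try_replace(i, new_i)
            try_replace(j, new_j)
            # Commensalism phase: only X_i may improve.
            j, xb = other(i), best()
            new_i = [eco[i][d] + rng.uniform(-1.0, 1.0) * (xb[d] - eco[j][d])
                     for d in range(dim)]
            try_replace(i, new_i)
            # Parasitism phase: a mutated clone of X_i challenges X_j.
            j = other(i)
            parasite = list(eco[i])
            for d in rng.sample(range(dim), rng.randint(1, dim)):
                parasite[d] = rng.uniform(lower[d], upper[d])
            try_replace(j, parasite)

    k = min(range(eco_size), key=fit.__getitem__)
    return eco[k], fit[k]


best_x, best_f = sos(sphere, [-5.0] * 3, [5.0] * 3, eco_size=10, max_iter=30, seed=1)
```

Apart from the ecosystem size and the iteration budget, the sketch has no algorithm-specific parameters to tune, which is the property emphasized in Sect. 1.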

3 Taxonomy of SOS advances

Figure 2 presents a taxonomy of the recent advances of the SOS algorithm. The advances are classified into the main SOS algorithm, SOS evolution, and SOS applications. SOS evolution includes modifications and hybridization. SOS applications include multi-objective optimization, combinatorial optimization, continuous optimization, engineering applications, and other application areas.

3.1 Evolution of symbiotic organisms search algorithms

The standard SOS was developed as a global optimizer for continuous optimization problems, and it was shown to be robust and to converge faster than genetic algorithm (GA) [11], particle swarm optimization (PSO) [13], differential evolution (DE) [12], bees algorithm (BA) [14], and particle bee algorithm (PBA) [15], which are traditional metaheuristic algorithms. Difficulty arose when SOS could not obtain efficient solutions for other complex optimization problems, which is in tune with the no-free-lunch theorem [28]. To overcome these difficulties, SOS algorithms have undergone several hybridizations and modifications to provide optimal and efficient solutions for various optimization problems. This section gives an overview of the developments of SOS algorithms in terms of modifications and hybridizations. Figure 3 presents the taxonomy of SOS evolution.

3.1.1 Modified symbiotic organisms search algorithms

Since its introduction, the SOS algorithm has undergone several modifications to provide optimal and efficient solutions for various optimization problems. Modifications have been applied to several components of SOS, such as solution initialization [29, 30], SOS phases [29,30,31,32,33], introduction of new phases [34], and fitness function evaluation [29]. The summary of modified SOS algorithms is presented in Table 1.

A modified symbiotic organisms search (MSOS) algorithm was proposed by [31] to improve the convergence rate and accuracy of the SOS algorithm. In MSOS, the ecosize is divided into three inhabitants, and the integrated inhabitant is executed using predefined probabilities. The inhabitants and probabilities are used to update the mutualism, commensalism, and parasitism phases, respectively. The MSOS algorithm introduces new relations in all of its phases to update the solutions, improving its capacity to identify stable, high-quality solutions in a reasonable time. Furthermore, to increase the exploration capacity of the MSOS algorithm in finding the most promising zones, it is endowed with a chaotic component generated by the logistic map.
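For readers unfamiliar with the logistic map, the following sketch generates a chaotic sequence with the usual recurrence \(x_{n+1} = \mu x_n (1 - x_n)\). The control parameter \(\mu = 4\) is the common fully chaotic setting and is an assumption here; how [31] injects the sequence into the SOS phases is not specified in this section, so the sketch only shows the sequence itself. The function name `logistic_map` is hypothetical.

```python
def logistic_map(x0, n, mu=4.0):
    """Return n successive iterates of the logistic map starting from x0 (x0 itself excluded)."""
    seq, x = [], x0
    for _ in range(n):
        x = mu * x * (1.0 - x)
        seq.append(x)
    return seq


chaotic = logistic_map(0.7, 10)  # values stay in [0, 1] for mu = 4 and x0 in [0, 1]
```

A typical use is to substitute such values for the uniform random numbers in an update rule; starting points such as 0, 0.25, 0.5, 0.75, and 1 are normally avoided because the sequence then collapses to a fixed point.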

New relations for updating the phases of SOS were introduced by [29] to improve the quality of solutions, and a chaotic sequence generated by the logistic map was employed to improve the exploration capability of SOS. In this paper, this technique is referred to as MSOS1. The introduced relations are applied to update the mutualism and commensalism phases. A modification to the benefit factors of the SOS algorithm, known as SOS–AFB, was proposed in [35]. The factors are determined adaptively based on the fitness of the current organism and the best organism. The adaptive benefit factors strengthen the exploration capability when the organisms \(X_i\) or \(X_j\) \((i \ne j)\) are far from the best organism (\(X_{\mathrm{best}}\)), while the exploitation capability is strengthened when the interacting organisms are closer to the best organism. Competition and amensalism interaction strategies were proposed by [34] in addition to the mutualism, commensalism, and parasitism phases of the standard SOS. The idea of the competition phase is to generate an organism that can compete with the current organism \(X_i\). An organism \(X_j\) is randomly selected from the ecosystem, and a new organism called \(X_{\mathrm{competitor}}\) is generated. If \(f(X_{\mathrm{competitor}})\) is better than \(f(X_i)\), then \(X_i\) is updated with \(X_{\mathrm{competitor}}\). The concept of the amensalism phase is similar to that of the competition phase, except that one organism is unaffected by the interaction while the other is negatively affected. This phase introduces diversity into the search space, thereby avoiding premature convergence and local optima entrapment. The amensalism organism \(X_{\mathrm{amensalis}}\) is generated by mutating the randomly selected organism \(X_j\), and \(X_j\) is replaced by \(X_{\mathrm{amensalis}}\).

A predation phase and a random weighted reflective parameter were proposed by [30] to improve the performance of the SOS algorithm. The improved SOS is called ISOS. The proposed predation phase models the interaction between predator and prey in an ecosystem. The predator feeds on the prey, which eventually leads to the death of the prey. The proposed random weighted parameter replaces the generated normally distributed random numbers in the mutualism and commensalism phases. Banerjee and Chattopadhyay [32] present a modified SOS (MSOS) algorithm for optimizing the performance of 3-dimensional turbo code (3D-TC) as an error control coding scheme that provides adequate redundancy in communication systems. The proposed MSOS improves the bit error rate (BER) performance of 3D-TC compared with the 8-state Duo-Binary Turbo Code (DB-TC) of the Digital Video Broadcasting Return Channel via Satellite standard (DVB-RCS) and Serially Concatenated Convolution Turbo Code (SCCTC) structures.

Miao et al. [33] proposed a modified SOS algorithm based on the simplex method (SMSOS) to obtain an optimal flight route for unmanned combat aerial vehicle (UCAV) systems, formulated as a multi-constrained global optimization problem. The modified algorithm adopts the simplex method to improve population diversity and increase exploration and exploitation. The method is added to the mutualism, commensalism, and parasitism phases of the original SOS, which helps prevent the algorithm from converging prematurely to a local optimum. Simulations were conducted in the MATLAB environment, and the results show the efficiency of the modified SOS for UCAV compared with other state-of-the-art algorithms in finding the shortest path that evades randomly generated threat areas with high optimization precision. However, the modified SOS tests the flight route only and does not take into consideration other parameters used by the UCAV. A different modification of SOS that uses the complex method has been developed in order to extend the CSOS diversification, which can lead to high precision in obtaining the global optimum solution [33].

3.1.2 Hybrid symbiotic organisms search algorithms

The traditional SOS algorithm, like other metaheuristic algorithms, suffers from local optima entrapment, which leads to premature convergence [37]. A prominent way to improve the search ability of metaheuristic algorithms is hybridization. Hybridization integrates the advantages of two or more techniques to substantially reduce their disadvantages [37]. Hybrid algorithms result in improvements in convergence speed and quality of solutions [37]. Table 2 summarizes hybrid-based SOS algorithms.

Hybrid SOS algorithms have been proposed by incorporating local search techniques [38, 41]. Hybrid algorithms not only offer faster convergence but also produce better quality solutions. In [38], a hybrid SOS is proposed by combining the SOS algorithm with simple quadratic interpolation (SQI) to enhance the global search of SOS. The performance of the proposed algorithm was tested on real-world and large-scale benchmark functions such as CEC2005 and CEC2010, and the results were compared with those of other classical metaheuristic algorithms. The proposed algorithm outperforms the compared algorithms in terms of quality of solutions and convergence rate. Saha and Mukherjee [39] hybridized SOS with a chaotic local search (CSOS) to improve the solution accuracy and convergence speed of the SOS algorithm; the parasitism phase of the standard SOS is omitted in CSOS in order to reduce the computational complexity. The performance of CSOS was evaluated using twenty-six unconstrained benchmark test functions and a real-world power system problem. The CSOS algorithm obtained superior results over the compared algorithms for both the benchmark function optimization and the power engineering optimization task.

Guha et al. [41] presented a hybrid SOS called the quasi-oppositional symbiotic organism search (QOSOS) algorithm for handling the load frequency control (LFC) problem of power systems. The theory of quasi-oppositional based learning (Q-OBL) is integrated into SOS to avoid entrapment in local optima and improve the convergence rate. The authors considered two-area and four-area interconnected power systems to test the effectiveness of the proposed algorithm. The dynamic performance of the test power systems obtained by QOSOS is better than that obtained by SOS and comprehensive learning PSO. QOSOS also showed better robustness and sensitivity for the test power systems.
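The idea behind quasi-oppositional learning can be illustrated with a short sketch. It uses the usual definition from the opposition-based learning literature: for a coordinate \(x \in [a, b]\), the opposite point is \(a + b - x\), and the quasi-opposite point is drawn uniformly between the interval centre \((a+b)/2\) and the opposite point. How exactly [41] integrates this into SOS is not detailed in this section, and the function name `quasi_opposite` is hypothetical.

```python
import random


def quasi_opposite(x, lower, upper, rng):
    """Return a quasi-opposite point of x, coordinate by coordinate."""
    out = []
    for v, lo, hi in zip(x, lower, upper):
        centre = (lo + hi) / 2.0
        opposite = lo + hi - v
        a, b = min(centre, opposite), max(centre, opposite)
        out.append(rng.uniform(a, b))  # uniform draw between centre and opposite point
    return out


rng = random.Random(4)
q = quasi_opposite([1.0, 8.0], [0.0, 0.0], [10.0, 10.0], rng)
```

A common way to use such points is to evaluate both an organism and its quasi-opposite and keep the fitter of the two, for example at initialization.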

To address the tendency of SOS to become trapped in local optima, researchers have attempted to hybridize the simulated annealing (SA) local search technique with the SOS algorithm [43,44,45,46]. Çelik and Öztürk [46] proposed the hybridization of SOS with simulated annealing (hSOS–SA) for the design of a proportional–integral–derivative (PID) controller for an automatic voltage regulator (AVR), taking into consideration both time and frequency domain specifications. SOS optimizes the PID parameters in order to improve the stability of the system. Furthermore, the hybridization with SA prevents premature convergence, which leads to a stable, fast, and reliable AVR response.

Moreover, [44] proposed the SASOS-I algorithm for task scheduling in a cloud computing environment; SASOS-I avoids the likely entrapment of the SOS procedure in local optima while minimizing task makespan and response time. Ezugwu et al. [45] presented the SOS-SA algorithm for solving travelling salesman problems, and the proposed algorithm reduces execution time while improving the convergence speed of the SOS procedure. Sulaiman et al. [43] presented a symbiotic organism search-based simulated annealing (SASOS-II) local search technique for solving the directional overcurrent relay (DOCR) problem, minimizing the sum of the operating times of all primary relays on standard IEEE 3-, 4-, and 6-bus systems. The SASOS-II algorithm efficiently minimizes the considered models of the problem.
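What simulated annealing contributes to these hybrids is, in essence, its acceptance rule: a worse candidate is occasionally accepted, with a probability that shrinks as the temperature falls. The sketch below shows the classical Metropolis criterion for a minimization problem. The function name `sa_accept` is hypothetical, and the cited hybrids may embed the rule in the SOS phases differently.

```python
import math
import random


def sa_accept(f_current, f_candidate, temperature, rng):
    """Metropolis acceptance test for minimization (temperature must be positive)."""
    if f_candidate <= f_current:
        return True  # improvements are always accepted
    delta = f_candidate - f_current
    # Worse candidates are accepted with probability exp(-delta / T).
    return rng.random() < math.exp(-delta / temperature)


rng = random.Random(5)
accepted = sa_accept(f_current=1.0, f_candidate=0.5, temperature=1.0, rng=rng)
```

Replacing the purely greedy replacement of SOS with such a rule at high temperature allows the search to leave a local optimum, while at low temperature it behaves like the greedy rule again.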

4 Applications of symbiotic organisms search algorithms

The SOS algorithm and its variants have been used to solve various optimization problems in science and engineering. The taxonomy of applications of the SOS algorithm is illustrated in Fig. 4. As seen in the figure, SOS has been applied to combinatorial, continuous, and multi-objective optimization problems. Additionally, it has been used for solving problems in transportation, machine learning, and cloud computing. Finally, the use of SOS algorithms has been increasing in engineering. These areas include environmental/economic dispatch, power systems optimization, design of engineering structures, wireless communications, construction project scheduling, electromagnetic optimization, and reservoir optimization.

4.1 Symbiotic organisms search for classes of optimization problems

Efforts have been made to adapt and modify SOS algorithms to handle various classes of optimization problems, such as multi-objective, constrained, combinatorial, and continuous optimization, which are important in facilitating the design and optimization of various problems in engineering and computer science.

4.1.1 Multi-objective symbiotic organisms search algorithms

Multi-objective optimization problems (MOPs) involve several conflicting objectives, so improving one objective leads to deterioration of others [47,48,49,50,51,52,53,54,55,56]. Consequently, no single solution can optimize all the objectives; instead, there is a set of optimal trade-off solutions, known as Pareto optimal solutions, which are significant for decision-making. Many practical optimization problems are of this kind. Metaheuristic algorithms have proven able to provide approximate solutions to MOPs, and SOS has recently been applied to them. Since the original SOS cannot be applied directly to multi-objective optimization problems, three issues have to be considered when extending it. First, how to choose the global and local best organisms to guide the search of an organism. Second, how to maintain the good solutions found so far. Lastly, how to handle constraints in the case of constrained optimization problems. Table 3 presents multi-objective SOS algorithms.
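The notion of Pareto optimality used throughout this subsection can be made concrete with a small sketch. It assumes that all objectives are minimized; the function names `dominates` and `non_dominated` are illustrative.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (all objectives minimized)."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def non_dominated(points):
    """Return the points that no other point dominates (the Pareto front of the set)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


front = non_dominated([(1, 5), (2, 2), (5, 1), (3, 3), (4, 4)])
```

Here `(3, 3)` and `(4, 4)` are both dominated by `(2, 2)`, so the front consists of the three remaining trade-off points. A multi-objective SOS has to replace the scalar comparison "is the new organism better?" with a test of this kind.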

Dosoglu et al. [57] proposed SOS for handling the economic emission load dispatch (EELD) problem for thermal generators in power systems, minimizing operating cost and emission while satisfying load demand. The multiple objectives are converted into a single objective using a weighted sum approach. The efficiency of the proposed algorithm was tested on the standard IEEE 3-machine 6-bus, IEEE 5-machine 14-bus, and IEEE 6-machine 30-bus test systems, both with and without transmission loss. The results obtained by the proposed algorithm are better than those of other optimization algorithms like GA, DEA, PSO, bees algorithm (BA), mine blast algorithm (MBA), and cuckoo search (CS). Tran et al. [58] present a multi-objective SOS (MSOS) for optimizing the trade-off among project duration, project cost, and the utilization of multiple work shift schedules. An optimal trade-off that maintains availability constraints is essential to the success of the entire construction project. They employed a selection mechanism introduced by [62] for selecting candidate solutions to facilitate the generation of a good Pareto front. The ecosize remains unchanged during the optimization process, the best solutions are chosen from the combined ecosystem, and a two-solution dominance approach is used. Because the combination of the current population and the advanced population is larger than the ecosize, ecosize solutions are selected based on the non-dominated sorting technique [11] and the crowding entropy sorting technique [63]. The performance of MSOS was studied using a case study of construction projects, and the results were compared with known algorithms like non-dominated sorting genetic algorithm II (NSGA-II), multiple objective particle swarm optimization (MOPSO), multiple objective differential evolution (MODE), and multiple objective artificial bee colony (MOABC). MSOS was found to be efficient in solving the trade-off among project duration, cost, and labour utilization, and it finds Pareto optimal solutions that are useful for assisting construction project decision-makers.

An adaptive penalty function and distance measure were proposed by [59] to handle both equality and inequality constraints associated with multi-objective optimization problems. The values of the penalty function and distance measure change according to the fitness and the average constraint violation of an individual, and they are used to modify the objective function. The modified objective function is used at the non-dominated sorting stage to obtain the optimal solution in the feasible and infeasible regions. The performance of the proposed algorithm was evaluated using eighteen benchmark multi-objective functions. The simulation results indicate superior performance of the proposed algorithm over other multi-objective optimization algorithms like multi-objective particle swarm optimization (MOPSO), multi-objective colliding bodies optimization (MOCBO), non-dominated sorting genetic algorithm II (NSGA-II), and two gradient-based multi-objective algorithms, Multi-Gradient Explorer (MGE) and Multi-Gradient Pathfinder (MGP). Ayala et al. [60] proposed a multi-objective SOS (MSOS) algorithm for solving electromagnetic optimization problems; the MSOS algorithm is based on non-dominance sorting and the crowding distance criterion. Furthermore, an improved MSOS (IMSOS) was proposed by replacing the random components of SOS with a Gaussian probability distribution function. The proposed algorithms showed interesting performance compared with NSGA-II.
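The crowding distance criterion mentioned above is usually computed as in NSGA-II: boundary solutions of a front receive an infinite distance, and every interior solution accumulates, per objective, the normalized gap between its two neighbours. The sketch below follows that standard definition; the function name `crowding_distance` is illustrative, and the exact variant used in [60] may differ.

```python
def crowding_distance(front):
    """Return the NSGA-II style crowding distance of each objective vector in front."""
    n = len(front)
    if n == 0:
        return []
    dist = [0.0] * n
    for k in range(len(front[0])):
        # Sort solution indices by the k-th objective.
        order = sorted(range(n), key=lambda i: front[i][k])
        f_min, f_max = front[order[0]][k], front[order[-1]][k]
        # Boundary solutions are always preferred.
        dist[order[0]] = dist[order[-1]] = float("inf")
        if f_max == f_min:
            continue  # no spread in this objective
        for pos in range(1, n - 1):
            gap = front[order[pos + 1]][k] - front[order[pos - 1]][k]
            dist[order[pos]] += gap / (f_max - f_min)
    return dist


d = crowding_distance([(1.0, 5.0), (2.0, 2.0), (5.0, 1.0)])
```

Among solutions of equal non-domination rank, those with a larger distance lie in sparser regions of the front and are kept first, which preserves diversity when the ecosystem is truncated back to its fixed size.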

Abdullahi et al. [61] proposed a chaotic symbiotic organisms search (CMSOS) algorithm for solving the multi-objective large-scale task scheduling optimization problem in an IaaS cloud computing environment. The chaotic sequence technique is employed to ensure diversity among organisms for global convergence. The CMSOS algorithm ensured optimal trade-offs between execution time and cost.

4.1.2 Combinatorial optimization

The SOS algorithm and its variants have been utilized for solving combinatorial optimization problems. Table 4 presents the summary of combinatorial SOS-based algorithms. Cheng et al. [27] proposed a discrete version of SOS for solving multiple resources leveling in the multiple projects scheduling problem (MRLMP) to reduce fluctuation in resource utilization over the span of project implementation. Because SOS was originally designed for continuous optimization and MRLMP is a discrete optimization problem, the proposed optimization model transforms continuous solutions into discrete solutions. The authors compared the performance of SOS with GA, PSO, and DE on the discrete optimization space using accuracy, solution stability, and satisfaction as performance measures. SOS was found to be more reliable and efficient, as indicated by experimental results and statistical tests. Verma et al. [64] proposed a discrete version of SOS for the congestion management (CM) problem in a deregulated electricity market to reduce load rescheduling cost. The proposed algorithm considers line loading and load bus voltage constraints while handling the CM problem to minimize the rescheduling cost of generators. The effectiveness of the proposed method was evaluated in various test cases, and the results obtained by SOS were compared to those of simulated annealing (SA), random search method (RSM), and PSO. The proposed method proved to be efficient on modified IEEE 30- and 57-bus test power systems for CM problems. Eki et al. [65] proposed a discrete version of SOS for handling the capacitated vehicle routing problem (CVRP), using a decoding method to deal with the discrete problem setting of CVRP. The proposed algorithm was tested on a set of classical benchmark problems, and the obtained results showed the superior performance of SOS over the best known results. The authors applied a swap reverse local search technique after the execution of the three phases of SOS to improve the quality of solutions.

Abdullahi et al. [66] present a discrete version of SOS (DSOS) for solving the task scheduling problem in cloud computing environment. It transforms continuous solutions into discrete solutions to fit the task scheduling problem. The proposed method was compared with PSO and its variants, and DSOS outperformed them in terms of convergence rate, quality of solution, and scalability. Abdullahi and Ngadi [44] present hybridized SA and SOS (SASOS) for solving the task scheduling problem in cloud computing environment. The SA algorithm was used to improve the local search ability of SOS. The proposed algorithm was used to improve the task execution makespan by taking into consideration the utilization of computing resources. The performance of SASOS was compared with that of basic SOS, and SASOS performs better in terms of convergence rate, quality of solution, and scalability. Vincent et al. [34] proposed SOS for solving the capacitated vehicle routing problem (CVRP), the aim of which is to minimize the total route cost when deciding the routes for a set of vehicles. The authors proposed two solution representations for transforming the solution from continuous to discrete search space. They further applied a local search strategy and introduced two new interactions called competition and amensalism. Performance of the proposed SOS was evaluated using two sets of known benchmark problems. The results obtained by the proposed SOS are better than those of basic SOS and PSO. Zhang et al. [67] developed a machine learning framework based on regularized extreme learning machine (ELM) and SOS. SOS is employed for optimizing input weights, bias, and regularized factor parameters, while ELM computes the output weights to save computing time. The proposed algorithm was evaluated using data from the University of California, Irvine (UCI) dataset repository, and it was found to perform better than classical classification algorithms like support vector machine (SVM), least-squares support-vector machine (LS-SVM), and back-propagation (BP).
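Several of the discrete variants above rely on transforming a continuous organism into a discrete solution. The following minimal sketch illustrates two common ways this can be done. Both decoders are assumptions chosen for illustration (a ceiling-and-modulo mapping for task-to-machine assignment and a random-key ordering for routing); the function names are hypothetical, and the surveyed papers may use different encodings.

```python
import math


def decode_assignment(position, num_vms):
    """Map each continuous coordinate to a machine index in [0, num_vms - 1].

    Hypothetical decoder: take the absolute value, round up, wrap with modulo.
    """
    return [int(math.ceil(abs(x))) % num_vms for x in position]


def decode_permutation(position):
    """Random-key decoding: visit items in order of increasing key value."""
    return sorted(range(len(position)), key=lambda i: position[i])


# One continuous organism interpreted in two different discrete ways.
organism = [2.7, -0.4, 5.1, 1.3]
print(decode_assignment(organism, num_vms=3))  # task i -> machine index
print(decode_permutation(organism))            # visiting order of items 0..3
```

With such a decoder in place, the SOS phases can keep operating on real-valued vectors while the objective function is evaluated on the decoded discrete solution.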

4.1.3 Continuous optimization

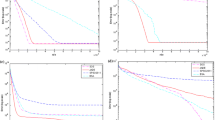

The original SOS was applied to continuous optimization problems, taking into account standard mathematical benchmark functions. Summary of the continuous optimization-based SOS algorithms is presented in Table 5. SOS was originally proposed in [19] for solving unconstrained mathematical and engineering design problems. The evaluation of SOS was carried out on standard numerical benchmark test functions and compared with GA, PSO, PBA, BA, and DE. The results obtained by SOS indicate its superior performance for handling complex numerical optimization problems. SOS was also found to achieve better results when tested with some practical structural design problems.
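To make the continuous setting concrete, the sketch below applies a basic SOS loop (mutualism, commensalism, and parasitism with greedy replacement) to the sphere benchmark function. It follows the commonly cited formulation of [19] as we understand it; the function and parameter names, the default settings, and the clipping of candidates to the bounds are assumptions made for illustration, not the authors' reference implementation.

```python
import random


def sphere(x):
    """Benchmark objective: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def sos_minimize(f, dim, lo, hi, eco_size=20, iterations=100, seed=0):
    rng = random.Random(seed)
    eco = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(eco_size)]
    fit = [f(x) for x in eco]

    def other(i):
        # Pick a random organism index different from i.
        j = rng.randrange(eco_size - 1)
        return j if j < i else j + 1

    def try_replace(k, candidate):
        # Greedy selection: keep the candidate only if it improves organism k.
        candidate = [min(max(v, lo), hi) for v in candidate]  # assumed bound handling
        fc = f(candidate)
        if fc < fit[k]:
            eco[k], fit[k] = candidate, fc

    for _ in range(iterations):
        for i in range(eco_size):
            best = eco[min(range(eco_size), key=fit.__getitem__)]

            # Mutualism: both organisms move using their mutual vector.
            j = other(i)
            bf1, bf2 = rng.choice((1, 2)), rng.choice((1, 2))  # benefit factors
            mutual = [(a + b) / 2 for a, b in zip(eco[i], eco[j])]
            new_i = [a + rng.random() * (b - m * bf1)
                     for a, b, m in zip(eco[i], best, mutual)]
            new_j = [a + rng.random() * (b - m * bf2)
                     for a, b, m in zip(eco[j], best, mutual)]
            try_replace(i, new_i)
            try_replace(j, new_j)

            # Commensalism: organism i benefits from j; j is unaffected.
            j = other(i)
            new_i = [a + rng.uniform(-1, 1) * (b - c)
                     for a, b, c in zip(eco[i], best, eco[j])]
            try_replace(i, new_i)

            # Parasitism: a mutated copy of i tries to replace j.
            j = other(i)
            parasite = list(eco[i])
            for d in rng.sample(range(dim), rng.randint(1, dim)):
                parasite[d] = rng.uniform(lo, hi)
            try_replace(j, parasite)

    k = min(range(eco_size), key=fit.__getitem__)
    return eco[k], fit[k]


best_x, best_f = sos_minimize(sphere, dim=5, lo=-10.0, hi=10.0)
print(best_f)
```

Apart from the ecosystem size and the iteration budget, the only algorithm-specific quantities here are the benefit factors, which are drawn randomly as 1 or 2 in this sketch.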

Kanimozhi et al. [68] proposed SOS for solving Thomson’s problem by determining the optimal configuration of N identical point charges on a unit sphere. The performance of the proposed algorithm was evaluated for values of N ranging from 3 to 300. The SOS algorithm provides better optimum energy values than the evolutionary algorithm and spectral gradient for unconstrained condition (EA_SG2) method. Nama et al. [38] present a hybrid SOS that combines the SOS algorithm with simple quadratic interpolation (SQI) to enhance the global search of SOS. The performance of the proposed algorithm was tested on real-world and large-scale benchmark functions like CEC2005 and CEC2010. The results obtained by the proposed algorithm were compared with other classical metaheuristic algorithms. The proposed algorithm outperforms other state-of-the-art algorithms in terms of quality of solutions and convergence rate.

4.2 SOS algorithms for engineering applications

The growing popularity of SOS as a robust and efficient metaheuristic algorithm has attracted the attention of researchers in using SOS to solve optimization problems arising from various disciplines of science and engineering. While significant success has been achieved in these areas in recent times, optimization of problems in these areas still remains an active research issue. As a result, SOS algorithm has been applied to solve optimization problems in classes like economic dispatch [29, 57, 69], power optimization [31, 32], construction project scheduling [58], design optimization of engineering structures [30, 35, 59, 70], transportation [34, 65], energy optimization [68], wireless communication [71, 72], and machine learning [73, 74]. With this trend of application, SOS has been shown to provide all-purpose principles that can easily be adapted to solve a wide range of optimization problems in various domains.

4.2.1 Economic dispatch

The economic dispatch problem has attracted considerable research attention because of high concerns about environmental pollution. SOS algorithm and its variants have been applied in solving economic dispatch problems by optimising pollution emission and cost of generation. Summary of SOS application to economic dispatch problems is presented in Table 6. Rajathy et al. [75] present a novel method of using the symbiotic organisms search algorithm for solving the security-constrained economic load dispatch problem. The 6-bus system was used to test the efficiency of the proposed algorithm. The presented simulation results for economic load dispatch with and without transmission constraints showed a better convergence rate for the proposed algorithm. Tiwari and Pandit [76] present SOS for handling the bid-based economic dispatch problem for deregulated electricity market. SOS algorithm tries to minimize generator cost while satisfying load demands. The efficiency of the proposed algorithm was tested on the IEEE-30 bus system with six generators, two customers, and two dispatch periods under low, medium, and high bidding strategies. The results obtained by SOS were compared with other algorithms such as DE and PSO. SOS was found to produce better results than DE and PSO.

Sonmez et al. [77] proposed SOS for handling the dynamic economic dispatch problem in modern power systems. They considered constraints like ramp rate limits, prohibited operating zones, and valve-point effects. The proposed algorithm was evaluated using 5-unit, 10-unit, and 13-unit systems as test cases. SOS was found to be more robust and to converge faster than other metaheuristic algorithms like PSO, GA, SA, and DE. Guvenc et al. [69] proposed SOS for solving both classical and non-convex economic load dispatch (ELD) problems. The authors used three different test cases, namely 3-unit, 15-unit, and 38-unit power systems, to show the efficiency and reliability of the proposed algorithm. SOS outperformed other metaheuristic algorithms in terms of solution quality and convergence rate for both classical and non-convex problems. Secui [29] proposed a modified SOS for solving the economic dispatch problem by taking into consideration various constraints such as valve-point effects, the prohibited operating zones (POZ), the transmission line losses, multi-fuel sources, as well as other operating constraints of the generating units and power system. New relations for updating the phases of SOS were introduced, and a chaotic sequence generated by the logistic map was employed to improve the exploration capability of SOS. The performance of the proposed algorithm was tested for various systems with 13, 40, 80, 160, and 320 units. The proposed algorithm showed better performance compared to other optimization techniques for solving economic dispatch problems.
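The valve-point effect mentioned above is what makes the dispatch objective non-convex. A minimal sketch of such an objective is given below, assuming the widely used textbook form in which a rectified sinusoid is added to the quadratic fuel cost, and assuming that the power balance is enforced through a simple penalty term. Transmission losses, ramp limits, and prohibited zones are ignored; the coefficient values and function names are hypothetical and are not taken from the surveyed papers.

```python
import math


def unit_cost(p, a, b, c, e, f, p_min):
    """Fuel cost of one unit: quadratic term plus rectified-sine valve-point term."""
    return a + b * p + c * p * p + abs(e * math.sin(f * (p_min - p)))


def dispatch_cost(powers, units, demand, penalty=1e3):
    """Total cost of a dispatch; power-balance violation is penalized (assumed scheme)."""
    fuel = sum(unit_cost(p, **u) for p, u in zip(powers, units))
    imbalance = abs(sum(powers) - demand)
    return fuel + penalty * imbalance


# Hypothetical two-unit system; coefficients are illustrative only.
units = [
    dict(a=100.0, b=2.0, c=0.010, e=50.0, f=0.06, p_min=50.0),
    dict(a=120.0, b=1.8, c=0.012, e=40.0, f=0.05, p_min=40.0),
]
print(dispatch_cost([150.0, 100.0], units, demand=250.0))
```

A function of this shape can be handed directly to a continuous optimizer such as SOS, with one decision variable per generating unit.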

4.2.2 Power systems optimization

Several related optimization problems have been solved using SOS algorithm, and these problems include optimal power flow, efficient allocation of compensators and capacitors in power systems, optimization of power loss, and power transmission congestion management. Summary of SOS application to power systems optimization problems is presented in Table 7. Duman [78] proposed SOS for solving the optimal power flow (OPF) problem with valve-point effect and prohibited zones in modern power systems. The efficiency of the proposed algorithm was tested on the modified IEEE 30-bus test system using various cases: without valve-point effect and prohibited zones, with prohibited zones, with valve-point effect, and with both valve-point effect and prohibited zones. The results obtained by the proposed method for all the test scenarios were compared with other metaheuristic algorithms in the literature, and SOS proved to be more effective and robust for all the test cases. Banerjee and Chattopadhyay [31] proposed a modified SOS (MSOS) for synthesis of 3-dimensional turbo code for optimization of bit error rate (BER) performance in communication systems. The authors grouped the ecosystem into three inhabitants, each with its associated probability. The simulation results indicate that MSOS performs better in terms of accuracy, speed, and convergence as compared with SOS and the harmony search (HS) algorithm.

Guha et al. [41] proposed a hybrid SOS called quasi-oppositional symbiotic organism search (QOSOS) algorithm for handling the load frequency control (LFC) problem of the power system. The theory of quasi-oppositional based learning (Q-OBL) is integrated into SOS to avoid entrapment in local optima and improve convergence rate. The authors considered two-area and four-area interconnected power systems to test the effectiveness of the proposed algorithm. The dynamic performance of the test power systems obtained by QOSOS is better than that of SOS and comprehensive learning PSO. QOSOS also showed better robustness and sensitivity for the test power systems. Balachennaiah and Suryakalavathi [79] present SOS for solving the optimal power flow (OPF) problem, attempting to optimize the real power loss (RPL) of a transmission system while considering certain constraints. The proposed method was tested on the New England 39-bus system, and the results obtained were compared with those of interior point successive linear programming (IPSLP) and bacteria foraging algorithm (BFA). The authors observed superior performance of the proposed algorithm over IPSLP and BFA. Prasad and Mukherjee [80] proposed SOS for the optimal power flow (OPF) problem of a power system equipped with flexible AC transmission systems. The authors used modified IEEE-30 and IEEE-57 bus test systems to test the efficiency of the proposed algorithm. The OPF was formulated with objective functions such as fuel cost minimization, transmission active power loss minimization, emission reduction, and minimization of combined economic and environmental cost. The simulation results obtained by the proposed algorithm are better than those of hybrid tabu search and simulated annealing (TS/SA) and differential evolution (DE).
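The quasi-oppositional idea used in QOSOS can be sketched as follows, assuming the usual definition in which the opposite of a point x in [lo, hi] is lo + hi - x and the quasi-opposite point is drawn uniformly between the centre of the interval and that opposite point. The function names are hypothetical, and how the quasi-opposite organisms are merged back into the ecosystem in [41] is not shown here.

```python
import random


def opposite(x, lo, hi):
    """Opposite point of x with respect to the box [lo, hi] in every dimension."""
    return [lo + hi - v for v in x]


def quasi_opposite(x, lo, hi, rng=random):
    """Quasi-opposite point: uniform sample between the centre and the opposite point."""
    center = (lo + hi) / 2.0
    result = []
    for v in x:
        opp = lo + hi - v
        result.append(rng.uniform(min(center, opp), max(center, opp)))
    return result


organism = [1.0, -3.0]
print(opposite(organism, -5.0, 5.0))
print(quasi_opposite(organism, -5.0, 5.0, random.Random(0)))
```

A typical use, under these assumptions, is to evaluate both an organism and its quasi-opposite and keep whichever has the better fitness.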

Saha et al. [81] proposed SOS algorithm for solving the directional over-current relays (DORs) coordination optimization problem in power systems. The authors validated the computational capability of the proposed algorithm using IEEE 6-bus and WSCC 9-bus test systems. The obtained results showed a significant reduction in the operating time of relays while maintaining a reliable coordination margin for primary/backup pairs as compared to particle swarm optimization (PSO) and teaching learning-based optimization (TLBO). Guha et al. [41] proposed SOS algorithm for solving the load frequency control (LFC) problem for design and analysis of an interconnected two-area reheat thermal power plant equipped with a proportional–integral–derivative (PID) controller. The proposed algorithm enhanced the stability of the power system as compared to DE and PSO. Saha and Mukherjee [39] hybridized SOS with a chaotic local search (CSOS) to improve the solution accuracy and convergence speed of the SOS algorithm; the parasitism phase of the standard SOS is omitted in the proposed CSOS in order to reduce the computational complexity. The performance evaluation of CSOS was carried out using both twenty-six unconstrained benchmark test functions and a real-world power system problem. The CSOS algorithm obtained superior results over the compared algorithms for both benchmark function optimization and the power engineering optimization task.

4.2.3 Engineering structures

The need for energy-efficient and eco-friendly buildings has been a major concern worldwide. Modern building designs are tailored towards low-carbon emission and energy efficiency. Therefore, optimal design of residential buildings needs to consider conflicting objectives like low cost, energy efficiency, and minimal environmental impact. SOS algorithms have been employed for solving optimization problems arising from such design specification, and the summary of the application is presented in Table 8.

Talatahari [87] presents a discrete version of SOS for solving structural optimization problems. The 3-bay 24-story frame problem was used to evaluate the efficiency of the proposed algorithm. The proposed algorithm performs better than other metaheuristic algorithms like ACO, HS, and imperialist competitive algorithm (ICA). Tejani et al. [35] introduced adaptive benefit factors in basic SOS to keep the balance between exploration and exploitation of the search space. The efficiency of the proposed algorithm was tested on sets of engineering structure optimization problems. Six planar and space trusses subject to multiple natural frequency constraints were used to study the effectiveness of the proposed algorithm. The performance of the proposed algorithm was compared with other metaheuristic algorithms like NHGA, NHPGA, CSS, enhanced CSS, HS, FA, CSS–BBBC, OC, GA, hybrid OC–GA, CBO, 2D–CBO, PSO, and DPSO. Prayogo et al. [70] present SOS algorithm for solving civil engineering problems with many design variables and constraints. The performance of SOS was evaluated using benchmark problems and three civil engineering problems, and the results of simulation indicated that SOS is more effective and efficient than the compared algorithms. The proposed model is a promising tool for assisting civil engineers in making decisions that minimize the expenditure of material and financial resources.

4.2.4 Wireless communication

Improved SOS algorithms have been found to obtain better solutions in terms of coverage and energy efficiency in wireless communication systems than the original SOS and their counterpart swarm intelligence algorithms. Dib [71] proposed SOS for handling the design of linear antenna arrays with low sidelobe level. The proposed algorithm produces a radiation pattern with minimum sidelobe level as compared with other metaheuristic algorithms like PSO, biogeography-based optimization (BBO), and Taguchi. Das et al. [89] present SOS for determining the optimal size and location of distributed generation (DG) in a radial distribution network (RDN) for the reduction in network loss, taking into consideration deterministic load demand. The performance of the proposed algorithm was tested using different RDNs like 33-bus and 69-bus distribution networks. The results obtained by the proposed algorithm are compared with other metaheuristic algorithms such as particle swarm optimization (PSO), teaching-learning-based optimization (TLBO), cuckoo search (CS), artificial bee colony (ABC), gravitational search algorithm (GSA), and stochastic fractal search (SFS). The proposed algorithm offers better solutions in terms of minimum loss and convergence mobility.

Banerjee and Chattopadhyay [32] present a modified SOS (MSOS) algorithm for optimizing the performance of 3-dimensional turbo code (3D-TC), an error control coding scheme that adds adequate redundancy in communication systems. The proposed MSOS improves the bit error rate (BER) performance of 3D-TC as compared to the 8-state Duo-Binary Turbo Code (DB-TC) of the Digital Video Broadcasting Return Channel via Satellite standard (DVB-RCS) and Serially Concatenated Convolution Turbo Code (SCCTC) structures.

4.2.5 Other engineering applications

Application of SOS algorithms to other engineering areas is presented in Table 9. Bozorg-Haddad et al. [90] applied SOS algorithm to single objective and multi-objective reservoir optimization problems. The proposed algorithm outperforms GA and water cycle algorithm (WCA) for solving single objective and multi-objective reservoir formulations. Ayala et al. [60] proposed a multi-objective SOS (MSOS) algorithm for solving electromagnetic optimization problems; the MSOS algorithm is based on non-dominance sorting and a crowding distance criterion. Furthermore, an improved MSOS (IMSOS) was proposed by replacing the random components of SOS with a Gaussian probability distribution function. The proposed algorithms showed promising performance compared with NSGA-II. Nama et al. [30] present an improved version of SOS (I-SOS) for solving unconstrained global optimization problems. The authors proposed an additional phase named predation phase to improve the performance of the algorithm, and they also introduced a random weighted reflection vector to improve the search capability of SOS. The performance of the proposed algorithm was tested on a set of benchmark functions and compared with other state-of-the-art metaheuristic algorithms like PSO, DE, and basic SOS. The results indicate that I-SOS outperformed PSO, DE, and SOS.
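The two ingredients named for the multi-objective variant, non-dominance and crowding distance, can be illustrated with a minimal sketch. It assumes minimization of all objectives and the NSGA-II style definition of crowding distance; it extracts only the first non-dominated front and is not the implementation of [60]. The function names and the sample points are hypothetical.

```python
def dominates(a, b):
    """True if objective vector a is no worse than b everywhere and better somewhere."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def non_dominated(points):
    """Return the points that no other point dominates (first Pareto front)."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


def crowding_distance(front):
    """Crowding distance of each point in a front; boundary points get infinity."""
    n = len(front)
    dist = [0.0] * n
    if n == 0:
        return dist
    for m in range(len(front[0])):
        order = sorted(range(n), key=lambda i: front[i][m])
        lo, hi = front[order[0]][m], front[order[-1]][m]
        dist[order[0]] = dist[order[-1]] = float("inf")
        if hi == lo:
            continue  # all values equal: this objective adds no spread
        for k in range(1, n - 1):
            gap = front[order[k + 1]][m] - front[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist


objectives = [(1, 5), (2, 3), (4, 1), (3, 4), (5, 5)]
front = non_dominated(objectives)
print(front)
print(crowding_distance(front))
```

In a multi-objective SOS, a comparison of this kind would replace the scalar "is the new organism fitter" test used in the single-objective phases.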

Sadek et al. [91] proposed SOS algorithm for controlling the instability and high input nonlinearity of the magnetic levitation system; SOS algorithm was used to provide initial adaptive and control parameters. An adaptive fuzzy backstepping control strategy was used to approximate the uncertain parameters and structures to minimize tracking error and improve stability of the system. The Lyapunov stability theorem was employed to update the parameters of the improved fuzzy backstepping control during each sampling period. The simulation results of the proposed system were verified by experimental results, which support the theoretical background of the proposed control method.

4.3 Other application areas

SOS algorithms have also been applied in solving problems in the areas of machine learning [73, 74], transportation [34, 65], and cloud computing [40, 44, 61, 66, 92, 93].

4.3.1 Machine learning

In recent years, machine learning techniques have been widely used in scientific and industrial applications. However, the main difficulty with these techniques is determining the optimal values of the parameters to be tuned. To address this issue, metaheuristic algorithms have been employed for parameter optimization purposes. SOS algorithms have proved to be efficient in tuning parameters for machine learning techniques.

Wu et al. [74] proposed SOS algorithm for efficient training of feedforward neural networks (FNNs) by optimizing the weights and biases, and the performance of the proposed algorithm was investigated using eight different datasets selected from the UCI machine learning repository. The results obtained on the eight datasets with different characteristics show that the proposed approach trains FNNs efficiently compared to other training methods that have been used in the literature: cuckoo search (CS), PSO, GA, multiverse optimizer (MVO), gravitational search algorithm (GSA), and biogeography-based optimizer (BBO). Nanda and Jonwal [73] proposed SOS algorithm for training the weights of a wavelet neural network (WNN) for equalizer design in order to prevent inter-symbol interference in communication channels. The performance of the SOS-based WNN trained equalizer is compared with WNN trained by cat swarm optimization (CSO), clonal selection algorithm (CLONAL), particle swarm optimization (PSO), and least mean square algorithm (LMS). Furthermore, the performance of the WNN structure-based equalizer was compared with other equalizers with structure based on functional link artificial neural network (trigonometric FLANN), radial basis function network (RBF), and finite impulse response filter (FIR). The results of SOS showed robust performance in handling the burst error conditions.
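Training a network with a metaheuristic usually means encoding all weights and biases as one flat real vector and using the training error as the fitness. The sketch below shows that encoding for a single-hidden-layer network with sigmoid units and mean squared error. The architecture, the layout of the vector, and the function names are assumptions for illustration; they are not taken from [74] or [73].

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def vector_length(n_in, n_hidden):
    """Number of real values needed: hidden weights, hidden biases, output weights, output bias."""
    return n_hidden * n_in + n_hidden + n_hidden + 1


def unpack(vector, n_in, n_hidden):
    """Split a flat organism into the network parameters (assumed layout)."""
    w1 = [vector[h * n_in:(h + 1) * n_in] for h in range(n_hidden)]
    i = n_hidden * n_in
    b1 = vector[i:i + n_hidden]
    w2 = vector[i + n_hidden:i + 2 * n_hidden]
    b2 = vector[i + 2 * n_hidden]
    return w1, b1, w2, b2


def predict(vector, x, n_in, n_hidden):
    w1, b1, w2, b2 = unpack(vector, n_in, n_hidden)
    hidden = [sigmoid(sum(w * v for w, v in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return sigmoid(sum(w * h for w, h in zip(w2, hidden)) + b2)


def mse_fitness(vector, data, n_in, n_hidden):
    """Fitness to be minimized by the optimizer: mean squared error on the data."""
    return sum((predict(vector, x, n_in, n_hidden) - y) ** 2 for x, y in data) / len(data)


data = [([0.0, 0.0], 0.0), ([1.0, 1.0], 1.0)]
organism = [0.0] * vector_length(n_in=2, n_hidden=3)
print(mse_fitness(organism, data, n_in=2, n_hidden=3))
```

Any continuous optimizer, SOS included, can then search over vectors of length `vector_length(n_in, n_hidden)` with `mse_fitness` as the objective.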

4.3.2 Other applications

Other applications of SOS algorithms extend to areas like transportation [34, 65] and cloud computing [40, 44, 61, 66, 92, 93]. Summary of such applications is presented in Table 10.

5 Discussions and future works

In this section, the features of SOS algorithm are discussed. SOS algorithms are suitable for solving both unimodal and multi-modal optimization problems, and they have global and fast convergence, thus obtaining better results on both continuous and discrete optimization problems. The fast convergence of SOS is attributed to the deterioration of diversity among the organisms as the search procedure progresses. For SOS algorithm to be appropriate for large-scale optimization problems, there is a need to establish a balance between local and global search [95, 96].

The results of SOS algorithms are influenced by the best solution found within the ecosystem; therefore, improving the best solution can improve the efficiency of the search mechanism of the ecosystem. Experimental results indicate that SOS algorithms could be successfully applied to complex optimization problems. In some situations, the convergence rate of SOS algorithm could be improved by increasing the diversity of solutions within the search space using chaotic maps. Adaptive tuning of SOS control parameters (benefit factors) could be suitable for improving the global convergence. Incorporation of chaotic maps and weighted parameters into the SOS move function could improve the convergence speed and quality of solutions. However, there is still ample room for further modifications and developments, for instance parallelization using multi-population (multi-ecosystem) and co-evolutionary schemes [97, 98].
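As an illustration of how a chaotic map can supply the random numbers of a metaheuristic, the sketch below generates a logistic-map sequence and uses it to initialize an ecosystem. The control value 4.0, the starting point 0.7, and the function names are assumptions chosen for illustration; the surveyed variants may use other maps or inject the sequence at other points of the search.

```python
def logistic_map(x0=0.7, mu=4.0):
    """Yield the logistic-map sequence x_{k+1} = mu * x_k * (1 - x_k).

    With mu = 4 the values stay in [0, 1]; x0 should avoid 0, 0.25, 0.5, 0.75, and 1,
    which lead to fixed points of the map.
    """
    x = x0
    while True:
        x = mu * x * (1.0 - x)
        yield x


def chaotic_population(size, dim, lo, hi, x0=0.7):
    """Build an initial ecosystem whose coordinates come from the chaotic sequence."""
    gen = logistic_map(x0)
    return [[lo + next(gen) * (hi - lo) for _ in range(dim)] for _ in range(size)]


for organism in chaotic_population(size=3, dim=2, lo=-10.0, hi=10.0):
    print(organism)
```

The same generator could, under these assumptions, replace the uniform random coefficients inside the mutualism or commensalism updates.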

Most of the analyzed papers hybridized SOS algorithm with local search techniques such as simulated annealing (SA), chaotic local search (CLS), simple quadratic interpolation (SQI), and quasi-oppositional based learning (Q-OBL). The SOS algorithm serves as a global optimizer, while the local search techniques further improve the global solutions. However, SOS algorithm can also be hybridized with other robust local search and global search optimizers such as PSO, DE, and GA. Hybridizations that integrate genetic operators or PSO with SOS algorithms are a likely future development [99, 100]. Another possible future development is the hybridization of SOS algorithms with machine learning techniques, where SOS acts as a metaheuristic for providing optimal parameter settings for the machine learning techniques [101,102,103,104,105].

Various combinatorial optimization problems have been solved with SOS algorithm. The algorithm found better solutions in some cases, while the solutions found in other cases are not acceptable. The main issue here is the imbalance between local search and global search during the SOS procedure. This imbalance could be addressed by hybridizing SOS algorithm with local search optimizers like variable neighbourhood search [106] and hill-climbing local search [107]. Another way of controlling the balance between local and global search is through diversity maintenance and control [108]. Maintaining a proper balance between local search and global search indicates new directions for future development of SOS algorithm. Moreover, using SOS algorithm for combinatorial optimization problems requires transforming continuous variables in the search space to discrete variables in the problem space. The SOS algorithms for solving constrained optimization problems treat the constraints through a penalty function that punishes solutions violating the constraints, known as infeasible solutions. The value of the penalty function corresponds to the extent of violation of the constraints, which affects the fitness function. However, there is a need for more effective and efficient methods for penalizing infeasible solutions when using SOS algorithms for solving constrained optimization problems.
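The penalty approach described above can be sketched in a few lines. The example assumes inequality constraints written in the form g(x) <= 0 and a static penalty weight; both the weight and the function names are hypothetical, and adaptive or problem-specific penalty schemes would differ.

```python
def penalized_fitness(objective, constraints, x, weight=1e6):
    """Objective value plus a penalty proportional to the total constraint violation.

    Each constraint g is assumed to be satisfied when g(x) <= 0.
    """
    violation = sum(max(0.0, g(x)) for g in constraints)
    return objective(x) + weight * violation


def cost(x):
    """Toy objective to minimize."""
    return x[0] + x[1]


def min_total(x):
    """Toy constraint x0 + x1 >= 1, rewritten as 1 - x0 - x1 <= 0."""
    return 1.0 - x[0] - x[1]


print(penalized_fitness(cost, [min_total], [1.0, 1.0]))  # feasible point
print(penalized_fitness(cost, [min_total], [0.0, 0.0]))  # infeasible point
```

Because the penalty grows with the amount of violation, an infeasible organism close to the feasible region is ranked ahead of one that violates the constraints badly, which is the behaviour the paragraph above describes.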

In spite of the recorded success of SOS algorithm, there has not been any attempt to present a theoretical analysis of the algorithm. Experimental results showed that SOS algorithms often converge faster to global solutions; however, there is no theoretical analysis to show how fast the algorithm converges. The theoretical developments of SOS algorithms need to be explored in order to gain a thorough understanding of how SOS algorithm works. The theories of complexity and Markov chains can be employed for convergence and stability analysis of the main variants of SOS algorithms. In fact, theoretical analysis is an open problem for all metaheuristic algorithms [109].

Parameter tuning is another open area for all metaheuristic algorithms. The only control parameters of SOS are the benefit factors, which control the extent of the exploitation and exploration ability of the organisms. The values for these parameters are suggested to be statically assigned in the original proposal of SOS algorithm. Therefore, there is a need for studies that determine optimal settings for the algorithm control parameters, so that SOS can be applied to a wider range of optimization problems with little tuning of control parameter values [63]. However, the design of automatic schemes for tuning algorithm control parameters in an intelligent and adaptive manner is still an open optimization problem.

SOS algorithms have been applied to various optimization problems, as is evident from this review, and the applications can be extended to areas like data mining, feature selection, bio-informatics, scheduling, and real-world large-scale optimization [110]. More applications of SOS algorithms are likely to surface in the near future.

6 Conclusions

SOS algorithms have been applied to optimization problems in various domains since the introduction of SOS in 2014. SOS has proven to be efficient for optimizing complex multidimensional search spaces while handling multi-objective and constrained optimization problems. Active research on SOS since its introduction includes hybridization, discrete optimization, and constrained and multi-objective optimization. Hybridization intends to combine the strengths of SOS, like global search ability and rapid optimization, with other related techniques to address some of the issues with SOS performance, like entrapment in local optima. SOS algorithm has been shown to be very effective and easily adaptable to various application requirements, with potential for hybridization and modification. However, SOS still faces challenges like local optima entrapment, imbalance between local search and global search, constraint handling, large-scale optimization, and multi-objective optimization, and these remain important research foci, as is evident from the literature. Further understanding and refinement of SOS algorithm, and of the challenges of using it to solve large-scale optimization problems, are required.

References

Manjarres D, Landa-Torres I, Gil-Lopez S, Del Ser J, Bilbao MN, Salcedo-Sanz S, Geem ZW (2013) A survey on applications of the harmony search algorithm. Eng Appl Artif Intell 26(8):1818–1831

Ma H, Simon D, Fei M, Shu X, Chen Z (2014) Hybrid biogeography-based evolutionary algorithms. Eng Appl Artif Intell 30:213–224

Li B, Li Y, Gong L (2014) Protein secondary structure optimization using an improved artificial bee colony algorithm based on ab off-lattice model. Eng Appl Artif Intell 27:70–79

Sedghizadeh S, Beheshti S (2018) Particle swarm optimization based fuzzy gain scheduled subspace predictive control. Eng Appl Artif Intell 67:331–344

Sarkhel R, Das N, Saha AK, Nasipuri M (2018) An improved harmony search algorithm embedded with a novel piecewise opposition based learning algorithm. Eng Appl Artif Intell 67:317–330

Ghasemi M, Taghizadeh M, Ghavidel S, Aghaei J, Abbasian A (2015) Solving optimal reactive power dispatch problem using a novel teaching-learning-based optimization algorithm. Eng Appl Artif Intell 39:100–108

Lim WH, Isa NAM (2015) Particle swarm optimization with dual-level task allocation. Eng Appl Artif Intell 38:88–110

Haixiang G, Yijing L, Yanan L, Xiao L, Jinling L (2016) Bpso-adaboost-knn ensemble learning algorithm for multi-class imbalanced data classification. Eng Appl Artif Intell 49:176–193

Moosavi SHS, Bardsiri VK (2017) Satin bowerbird optimizer: a new optimization algorithm to optimize anfis for software development effort estimation. Eng Appl Artif Intell 60:1–15

Chen Z-S, Zhu B, He Y-L, Le-An Y (2017) A pso based virtual sample generation method for small sample sets: applications to regression datasets. Eng Appl Artif Intell 59:236–243

Deb K, Pratap A, Agarwal S, Meyarivan TAMT (2002) A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans Evol Comput 6(2):182–197

Qin AK, Huang VL, Suganthan PN (2009) Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans Evol Comput 13(2):398–417

Kennedy J (2011) Particle swarm optimization. In: Encyclopedia of machine learning. Springer, pp 760–766

Pham DT, Ghanbarzadeh A, Koc E, Otri S, Rahim S, Zaidi M (2011) The bees algorithm–a novel tool for complex optimisation. In: Intelligent production machines and systems-2nd I*PROMS virtual international conference (3–14 July 2006)

Cheng M-Y, Lien L-C (2012) Hybrid artificial intelligence-based PBA for benchmark functions and facility layout design optimization. J Comput Civil Eng 26(5):612–624

Doerner K, Gutjahr WJ, Hartl RF, Strauss C, Stummer C (2004) Pareto ant colony optimization: a metaheuristic approach to multiobjective portfolio selection. Ann Oper Res 131(1):79–99

Dorigo M, Birattari M, Stutzle T (2006) Ant colony optimization. IEEE Comput Intell Mag 1(4):28–39

Karaboga D, Basturk B (2007) A powerful and efficient algorithm for numerical function optimization: artificial bee colony (abc) algorithm. J Glob Optim 39(3):459–471

Cheng M-Y, Prayogo D (2014) Symbiotic organisms search: a new metaheuristic optimization algorithm. Comput Struct 139:98–112

Yang X-S, Deb S (2009) Cuckoo search via lévy flights. In: World congress on nature and biologically inspired computing, 2009. NaBIC 2009. IEEE, pp 210–214

Yang X-S (2010) Firefly algorithm, stochastic test functions and design optimisation. Int J Bio-Inspired Comput 2(2):78–84

Chu S-C, Tsai P-W, Pan J-S (2006) Cat swarm optimization. In: Pacific Rim international conference on artificial intelligence. Springer, pp 854–858

Jiang X, Li S (2017) Bas: beetle antennae search algorithm for optimization problems. arXiv preprint arXiv:1710.10724

Jiang X, Li S (2017) Beetle antennae search without parameter tuning (bas-wpt) for multi-objective optimization. arXiv preprint arXiv:1711.02395

Khan AT, Senior SL, Stanimirovic PS, Zhang Y (2018) Model-free optimization using eagle perching optimizer. arXiv preprint arXiv:1807.02754

Crepinsek M, Mernik M, Liu S-H (2011) Analysis of exploration and exploitation in evolutionary algorithms by ancestry trees. Int J Innov Comput Appl 3(1):11–19

Cheng M-Y, Prayogo D, Tran D-H (2015) Optimizing multiple-resources leveling in multiple projects using discrete symbiotic organisms search. J Comput Civ Eng 30(3):04015036

Wolpert DH, Macready WG (1997) No free lunch theorems for optimization. IEEE Trans Evol Comput 1(1):67–82

Secui DC (2016) A modified symbiotic organisms search algorithm for large scale economic dispatch problem with valve-point effects. Energy 113:366–384

Nama S, Saha A, Ghosh S (2016) Improved symbiotic organisms search algorithm for solving unconstrained function optimization. Decis Sci Lett 5(3):361–380

Banerjee S, Chattopadhyay S (2017) Power optimization of three dimensional turbo code using a novel modified symbiotic organism search (MSOS) algorithm. Wirel Pers Commun 92(3):941–968

Banerjee S, Chattopadhyay S (2016) Optimization of three-dimensional turbo code using novel symbiotic organism search algorithm. In: 2016 IEEE annual India conference (INDICON). IEEE, pp 1–6

Miao F, Zhou Y, Luo Q (2018) A modified symbiotic organisms search algorithm for unmanned combat aerial vehicle route planning problem. J Oper Res Soc 70:1–32

Vincent FY, Redi AP, Yang CL, Ruskartina E, Santosa B (2016) Symbiotic organism search and two solution representations for solving the capacitated vehicle routing problem. Appl Soft Comput 52:657–672

Tejani GG, Savsani VJ, Patel VK (2016) Adaptive symbiotic organisms search (SOS) algorithm for structural design optimization. J Comput Des Eng 3(3):226–249

Spendley W, Hext GR, Himsworth FR (1962) Sequential application of simplex designs in optimisation and evolutionary operation. Technometrics 4(4):441–461

Jaszkiewicz A (2002) Genetic local search for multi-objective combinatorial optimization. Eur J Oper Res 137(1):50–71

Nama S, Saha AK, Ghosh S (2016) A hybrid symbiosis organisms search algorithm and its application to real world problems. Memet Comput 9:1–20

Saha S, Mukherjee V (2018) A novel chaos-integrated symbiotic organisms search algorithm for global optimization. Soft Comput 22(11):3797–3816

Abdullahi M, Ngadi MA, Dishing SI (2017) Chaotic symbiotic organisms search for task scheduling optimization on cloud computing environment. In: 2017 6th ICT international student project conference (ICT-ISPC). IEEE, pp 1–4

Guha D, Roy P, Banerjee S (2017) Quasi-oppositional symbiotic organism search algorithm applied to load frequency control. Swarm Evol Comput 33:46–67

Çelik E, Öztürk N (2018) A hybrid symbiotic organisms search and simulated annealing technique applied to efficient design of PID controller for automatic voltage regulator. Soft Comput 22(23):8011–8024

Sulaiman M, Ahmad A, Khan A, Muhammad S (2018) Hybridized symbiotic organism search algorithm for the optimal operation of directional overcurrent relays. Complexity 2018:1–11

Abdullahi M, Ngadi MA (2016) Hybrid symbiotic organisms search optimization algorithm for scheduling of tasks on cloud computing environment. PLoS ONE 11(6):e0158229

Ezugwu AE-S, Adewumi AO, Frîncu ME (2017) Simulated annealing based symbiotic organisms search optimization algorithm for traveling salesman problem. Expert Syst Appl 77:189–210

Çelik E, Öztürk N (2018b) First application of symbiotic organisms search algorithm to off-line optimization of PI parameters for DSP-based DC motor drives. Neural Comput Appl 30(5):1689–1699

Yalcın GD, Erginel N (2015) Fuzzy multi-objective programming algorithm for vehicle routing problems with backhauls. Expert Syst Appl 42(13):5632–5644

Akbari M, Rashidi H (2016) A multi-objectives scheduling algorithm based on cuckoo optimization for task allocation problem at compile time in heterogeneous systems. Expert Syst Appl 60:234–248

Reina DG, Ciobanu R-I, Toral SL, Dobre C (2016) A multi-objective optimization of data dissemination in delay tolerant networks. Expert Syst Appl 57:178–191

Türk S, Özcan E, John R (2017) Multi-objective optimisation in inventory planning with supplier selection. Expert Syst Appl 78:51–63

Bandaru S, Ng AHC, Deb K (2017) Data mining methods for knowledge discovery in multi-objective optimization: part A-survey. Expert Syst Appl 70:139–159

Rao RV, Rai DP, Balic J (2017) A multi-objective algorithm for optimization of modern machining processes. Eng Appl Artif Intell 61:103–125

Savsani V, Tawhid MA (2017) Non-dominated sorting moth flame optimization (NS-MFO) for multi-objective problems. Eng Appl Artif Intell 63:20–32

Zou F, Wang L, Hei X, Chen D, Wang B (2013) Multi-objective optimization using teaching-learning-based optimization algorithm. Eng Appl Artif Intell 26(4):1291–1300

Tolmidis AT, Petrou L (2013) Multi-objective optimization for dynamic task allocation in a multi-robot system. Eng Appl Artif Intell 26(5):1458–1468

Zhang Z, Wang X, Lu J (2018) Multi-objective immune genetic algorithm solving nonlinear interval-valued programming. Eng Appl Artif Intell 67:235–245

Dosoglu MK, Guvenc U, Duman S, Sonmez Y, Kahraman HT (2018) Symbiotic organisms search optimization algorithm for economic/emission dispatch problem in power systems. Neural Comput Appl 29(3):721–737

Tran D-H, Cheng M-Y, Prayogo D (2016) A novel multiple objective symbiotic organisms search (MOSOS) for time-cost-labor utilization tradeoff problem. Knowl Based Syst 94:132–145

Panda A, Pani S (2016) A symbiotic organisms search algorithm with adaptive penalty function to solve multi-objective constrained optimization problems. Appl Soft Comput 46:344–360

Ayala H, Klein C, Mariani V, Coelho L (2017) Multi-objective symbiotic search algorithm approaches for electromagnetic optimization. IEEE Trans Magn 53:1–4

Abdullahi M, Ngadi MA, Dishing SI, Ahmad BI (2019) An efficient symbiotic organisms search algorithm with chaotic optimization strategy for multi-objective task scheduling problems in cloud computing environment. J Netw Comput Appl 133:60–74

Ali M, Siarry P, Pant M (2012) An efficient differential evolution based algorithm for solving multi-objective optimization problems. Eur J Oper Res 217(2):404–416

Wang Y-N, Wu L-H, Yuan X-F (2010) Multi-objective self-adaptive differential evolution with elitist archive and crowding entropy-based diversity measure. Soft Comput 14(3):193–209

Verma S, Saha S, Mukherjee V (2015) A novel symbiotic organisms search algorithm for congestion management in deregulated environment. J Exp Theor Artif Intell 29:1–21

Eki R, Vincent FY, Budi S, Redi AANP (2015) Symbiotic organism search (SOS) for solving the capacitated vehicle routing problem. World Acad Sci Eng Technol Int J Mech Aerosp Ind Mechatron Manuf Eng 9(5):850–854

Abdullahi M, Ngadi MA et al (2016) Symbiotic organism search optimization based task scheduling in cloud computing environment. Future Gener Comput Syst 56:640–650

Zhang B, Sun L, Yuan H, Lv J, Ma Z (2016) An improved regularized extreme learning machine based on symbiotic organisms search. In: 2016 IEEE 11th conference on industrial electronics and applications (ICIEA). IEEE, pp 1645–1648

Kanimozhi G, Rajathy R, Kumar H (2016) Minimizing energy of point charges on a sphere using symbiotic organisms search algorithm. Int J Electr Eng Inform 8(1):29

Guvenc U, Duman S, Dosoglu MK, Kahraman HT, Sonmez Y, Yılmaz C (2016) Application of symbiotic organisms search algorithm to solve various economic load dispatch problems. In: 2016 international symposium on innovations in intelligent systems and applications (INISTA). IEEE, pp 1–7

Prayogo D, Cheng M-Y, Prayogo H (2017) A novel implementation of nature-inspired optimization for civil engineering: a comparative study of symbiotic organisms search. Civ Eng Dimens 19(1):36–43

Dib N (2016) Synthesis of antenna arrays using symbiotic organisms search (SOS) algorithm. In: 2016 IEEE international symposium on antennas and propagation (APSURSI). IEEE, pp 581–582

Dib NI (2016) Design of linear antenna arrays with low side lobes level using symbiotic organisms search. Prog Electromagn Res B 68:55–71

Nanda SJ, Jonwal N (2017) Robust nonlinear channel equalization using WNN trained by symbiotic organism search algorithm. Appl Soft Comput 57:197–209

Wu H, Zhou Y, Luo Q, Basset MA (2016) Training feedforward neural networks using symbiotic organisms search algorithm. Comput Intell Neurosci 2016:1–14

Rajathy R, Taraswinee B, Suganya S (2015) A novel method of using symbiotic organism search algorithm in solving security-constrained economic dispatch. In: 2015 international conference on circuit, power and computing technologies (ICCPCT). IEEE, pp 1–8

Tiwari A, Pandit M (2016) Bid based economic load dispatch using symbiotic organisms search algorithm. In: 2016 IEEE international conference on engineering and technology (ICETECH). IEEE, pp 1073–1078

Sonmez Y, Kahraman HT, Dosoglu MK, Guvenc U, Duman S (2017) Symbiotic organisms search algorithm for dynamic economic dispatch with valve-point effects. J Exp Theor Artif Intell 29(3):495–515

Duman S (2016) Symbiotic organisms search algorithm for optimal power flow problem based on valve-point effect and prohibited zones. Neural Comput Appl 28:1–15

Balachennaiah P, Suryakalavathi M (2015) Real power loss minimization using symbiotic organisms search algorithm. In: 2015 annual IEEE India conference (INDICON). IEEE, pp 1–6

Prasad D, Mukherjee V (2016) A novel symbiotic organisms search algorithm for optimal power flow of power system with FACTS devices. Eng Sci Technol Int J 19(1):79–89

Saha D, Datta A, Das P (2016) Optimal coordination of directional overcurrent relays in power systems using symbiotic organism search (SOS) optimization technique. IET Gener Transm Distrib 10:2681–2688

Zamani MKM, Musirin I, Suliman SI (2017) Symbiotic organisms search technique for SVC installation in voltage control. Indones J Electr Eng Comput Sci 6(2):318–329

Baysal YA, Altas IM (2017) Power quality improvement via optimal capacitor placement in electrical distribution systems using symbiotic organisms search algorithm. Mugla J Sci Technol 3:64–68

Das S, Bhattacharya A (2016) Symbiotic organisms search algorithm for short-term hydrothermal scheduling. Ain Shams Eng J 9(4):499–516

Guha D, Roy PK, Banerjee S (2018) Symbiotic organism search algorithm applied to load frequency control of multi-area power system. Energy Syst 9(2):439–468

Kahraman HT, Dosoglu MK, Guvenc U, Duman S, Sonmez Y (2016) Optimal scheduling of short-term hydrothermal generation using symbiotic organisms search algorithm. In: 2016 4th international Istanbul smart grid congress and fair (ICSG). IEEE, pp 1–5

Talatahari S (2016) Symbiotic organisms search for optimum design of frame and grillage systems. Asian J Civ Eng (BHRC) 17(3):299–313

Nama S, Saha A (2018) An ensemble symbiosis organisms search algorithm and its application to real world problems. Decis Sci Lett 7(2):103–118

Das B, Mukherjee V, Das D (2016) DG placement in radial distribution network by symbiotic organism search algorithm for real power loss minimization. Appl Soft Comput 49:920–936

Bozorg-Haddad O, Azarnivand A, Hosseini-Moghari SM, Loáiciga HA (2017) Optimal operation of reservoir systems with the symbiotic organisms search (SOS) algorithm. J Hydroinformatics 19:jh2017085

Sadek U, Sarjaš A, Chowdhury A, Svečko R (2017) Improved adaptive fuzzy backstepping control of a magnetic levitation system based on symbiotic organism search. Appl Soft Comput 56:19–33

Anwar N, Deng H (2017) Optimization of scientific workflow scheduling in cloud environment through a hybrid symbiotic organism search algorithm. Sci Int 29:499–502

Kumar KP, Kousalya K, Vishnuppriya S (2017) DSOS with local search for task scheduling in cloud environment. In: 2017 4th international conference on advanced computing and communication systems (ICACCS). IEEE, pp 1–4

Ezugwu AE, Adewumi AO (2017) Discrete symbiotic organisms search algorithm for travelling salesman problem. Expert Syst Appl 87:70–78

Yang X-S (2011a) Review of meta-heuristics and generalised evolutionary walk algorithm. Int J Bio-Inspired Comput 3(2):77–84

Fister I, Yang X-S, Brest J (2013) A comprehensive review of firefly algorithms. Swarm Evol Comput 13:34–46

Chen Y-H, Huang H-C (2015) Coevolutionary genetic watermarking for owner identification. Neural Comput Appl 26(2):291–298

Li X, Yao X (2012) Cooperatively coevolving particle swarms for large scale optimization. IEEE Trans Evol Comput 16(2):210–224

Kazemi SMR, Minaei Bidgoli B, Shamshirband S, Karimi SM, Ghorbani MA, Chau K, Kazem Pour R (2018) Novel genetic-based negative correlation learning for estimating soil temperature. Eng Appl Comput Fluid Mech 12(1):506–516

Lee CKH (2018) A review of applications of genetic algorithms in operations management. Eng Appl Artif Intell 76:1–12

Taormina R, Chau K-W, Sivakumar B (2015) Neural network river forecasting through baseflow separation and binary-coded swarm optimization. J Hydrol 529:1788–1797

Moazenzadeh R, Mohammadi B, Shamshirband S, Chau K (2018) Coupling a firefly algorithm with support vector regression to predict evaporation in northern Iran. Eng Appl Comput Fluid Mech 12(1):584–597

Wu CL, Chau KW (2011) Rainfall-runoff modeling using artificial neural network coupled with singular spectrum analysis. J Hydrol 399(3–4):394–409

Zhang S, Chau K-W (2009) Dimension reduction using semi-supervised locally linear embedding for plant leaf classification. In: International conference on intelligent computing. Springer, pp 948–955

Hajikhodaverdikhan P, Nazari M, Mohsenizadeh M, Shamshirband S, Chau K (2018) Earthquake prediction with meteorological data by particle filter-based support vector regression. Eng Appl Comput Fluid Mech 12(1):679–688

Hansen P, Mladenović N, Urošević D (2006) Variable neighborhood search and local branching. Comput Oper Res 33(10):3034–3045

Geng J, Huang M-L, Li M-W, Hong W-C (2015) Hybridization of seasonal chaotic cloud simulated annealing algorithm in a SVR-based load forecasting model. Neurocomputing 151:1362–1373

Črepinšek M, Liu S-H, Mernik M (2013) Exploration and exploitation in evolutionary algorithms: a survey. ACM Comput Surv (CSUR) 45(3):35

Yang X-S (2011) Metaheuristic optimization: algorithm analysis and open problems. In: International symposium on experimental algorithms. Springer, pp 21–32

Zamuda A, Brest J (2012) Population reduction differential evolution with multiple mutation strategies in real world industry challenges. In: Swarm and evolutionary computation. Springer, pp 154–161

Cite this article

Abdullahi, M., Ngadi, M.A., Dishing, S.I. et al. A survey of symbiotic organisms search algorithms and applications. Neural Comput & Applic 32, 547–566 (2020). https://doi.org/10.1007/s00521-019-04170-4

DOI: https://doi.org/10.1007/s00521-019-04170-4