Abstract

In this research paper, we propose a new iterative algorithm for finding a common solution to fixed point problems of demicontractive mapping and variational inequality problems which involves monotone and Lipschitz continuous operators in the framework of real Hilbert spaces. We incorporate a viscosity iterative technique, using subgradient extragradient method, we prove under standard assumptions that the iterative sequence generated from our algorithm strongly converges to the solution set, assuming the solution set is consistent. Furthermore, we adopt a self-adaptive stepsize that is being generated at each iteration, which is independent of the Lipschitz constant of the singled-valued operator. Our result is an improvement and an extension of many results in this direction.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Our purpose in this paper is to study an interesting combination of problems of finding a fixed point of a given nonlinear operator which turns out to solve variational inequalities in real Hilbert spaces. Let C be a nonempty closed convex subset of a real Hilbert space \({\mathcal {H}}\), with inner product \(\langle \cdot , \cdot \rangle \) and an induced norm \(\Vert .\Vert .\) Let \(T: C\rightarrow C\) be nonlinear. The map T has a fixed point if \(Tx=x\) and the set of fixed point of T is denoted by \(F(T):=\lbrace x\in C: Tx=x\rbrace \ne \emptyset .\) The variational inequality problem (VIP) and fixed point problem (FPP)is formulated as:

where f is a single-valued mapping defined on C.

Let the solution set of (1.1) be denoted by \(\Gamma :=VI(C,f) \cap F(T) \ne \emptyset .\) Problem (1.1) is a generalization of many optimization problems and has been studied by many researchers in different capacities (see, [1, 4, 37, 38] and contained references). Basically, (1.1) includes two remarkable and striking problems:

-

1.

The FPP, which can be defined as follows:

$$\begin{aligned} \text{ find } \ \ \ x\in C \ \ \ \text{ such } \text{ that } \ \ \ T(x)=x, \end{aligned}$$(1.2)provided the \(F(T) \ne \emptyset \).

-

2.

Another important problem embeded in problem (1.1) is the well known VIP which has following structure:

$$\begin{aligned} \text{ find }\ \ \ x\in C \ \ \text{ such } \text{ that } \ \ \ \langle f(x), y-x\rangle \ge 0, \ \ \forall y\in C. \end{aligned}$$(1.3)Let VIP(C, f) and SOL VIP(C, f) denote problem (1.3) and its solution set, respectively. It is important to note that problem (1.3) is a unifying and an essential modelling tool in many field such as economics, programming, engineering mechanics and many more, for examples see [2, 5, 16] and references contained therein. This concept was introduced and studied by Stampacchia [34], for the purpose of modelling problems in mechanics.

There are methods for solving (1.3) which include: regularization method and projection method. In what follows, our focus for solving (1.3) is the projection method. This method involves construction of an iterative algorithm of the form:

$$\begin{aligned} x_{n+1} = P_C(x_n - \lambda f x_n), n\ge 1, \end{aligned}$$(1.4)where \(\lambda \) is positive and \(P_C\) is a projection onto closed convex subset C. With this method, it is well known that the problem (1.3) is equivalent to the following fixed point problem:

$$\begin{aligned} \text{ find } \ \ x\in C \ \ \text{ such } \text{ that } \ \ \ x=P_C(x-\lambda f(x)), \end{aligned}$$(1.5)for an arbitrary positive constant, \(\lambda .\) The basic projection method for solving VIP involves the gradient method, which performs only one iteration onto the feasible set. This method requires that the operators are inverse strongly monotone or strongly monotone (see [12]) for the iterative sequence to converge to the solution set. These conditions are very strong and quite restrictive. To circumvent this challenge, Korpelevich [18] while studying sadle point problems introduced a concept called extragradient method. It was further extended to VIPs for both Euclidean and Hilbert spaces. To be precise, Korpelevich [18] constructed the following algorithm:

$$\begin{aligned} {\left\{ \begin{array}{ll} x_0 \in C,\\ y_n = P_C(x_n -\mu Ax_n),\\ x_{n+1} = P_C(x_n - \mu Ay_n), \end{array}\right. } \end{aligned}$$(1.6)where \(\mu \in (0,\frac{1}{L}),\) the singled-valued operator\( A: H\rightarrow H\) is monotone and L-Lipschitz continuous and \(P_C\) is a projection onto C. He proved that the recursive sequence \(\lbrace x_n \rbrace \) generated by (1.6) converged weakly to the solution set SOL VIP(C, A).

Since the the introduction of (1.6), many authors have modified it in various forms (see, [8, 17, 20] and the cited references therein).

In 2006, Nadezhkina and Takahashi [26] used the concept of hybrid and shrinking projection techniques to construct an extragradient based method and obtained a strong convergence. In short, in [31], the following algorithm is presented:

Observe that the computation of the algorithm (1.6) requires computing two projections per iteration. It is known that projections onto closed convex set \(P_C\) has no closed form of expression. To drop the second \(P_C\) from the algorithm (1.6), Censor et al. [5] introduced a subgradient extragradient method and constructed the following iterative scheme:

The authors of [5] considered a projection onto a half space \(P_{T_n}\) which has a closed form of expression. They proved under some mild conditions that the sequence \(\lbrace x_n \rbrace \) generated by (1.8) converged weakly to the solution. They further modified (1.8), using a hybrid and shrinking projection method as contained in (1.7) and obtained a strong convergence(see, [6]). It is pertinent to mention that Censor et al. [40] extended [5] to Euclidean spaces. Based on this improvement in [6, 26], many researchers have used other techniques to obtain a strong convergence (see [14, 19, 23, 37]).

In recent years, there has been a tremendous interest in developing fast convergence of algorithms, especially for the inertial type extrapolation method which was first proposed by Polyak in [31]. This inertial technique is based on a discrete analogue of a second order dissipative methods. This method was not known until the Nesterov’s acceleration gradient methods was published in 1983 (see, [27]) and by 2009, Beck and Teboulle [3] made it very popular. Recently, some researchers have constructed different fast iterative algorithms by means of inertial extrapolation techniques, for example, inertial Mann algorithm [25], inertial forward-backward splitting algorithm [21], inertial extragradient algorithm [22, 36], inertial projection algorithm [35], and fast iterative shrinkage-thresholding algorithm (FISTA) [3]. Recent results on the use of inertial method can be found in [8, 10, 11, 41,42,43] and contained references

Remark 1.1

We note that the algorithms (1.6)–(1.8) have a major drawback on the stepsize \(\mu \) in the sense that, they heavily rely on the Lipschitz constant of the given operator. This dependence on the Lipschitz constant affects the efficiency of the algorithms. In many practical importance, the Lipschitz constants are not known and in several occasions, difficult to estimate.

Based on Remark 1.1, many researchers have worked and improved the already existing results in various forms. In a recent work published by Gibali [9], he introduced an Armijo-like search rule and remarked that, it is for local approximation of the Lipschitz constant. Although, it does not require the knowledge of Lipschitz constant but might involve additional computation of projection operator. Thong and Hieu [37] presented two parallel iterative algorithms for solving a variational inequality problem and fixed point problem for demicontractive mapping using subgradient extragradient technique. They obtained a strong convergence in both schemes under the assumption that the single-valued operator is monotone and Lipschitz continuous. But, their stepsize was dependent on the Lipschitz constant which was a heavy drawback. To improve on this result, in [36] the authors proposed another two inertial self-adaptive stepsizes for solving variational inequailties and obtained strong convergence results under the assumption that the operator is strongly pseudomonotone, which is also a stronger assumption than being monotone and Lipschitz continuous. Shehu et al. [33] studied an inertial typed subgradient extragradient method with self-adaptive stepsize. Under some mild conditions, the authors obtained a weak convergence. Furthermore, they later considered the operator A to be strongly monotone and Lipschitz continuous and obtanied a strong convergence. More so, Ogwo et al. [29] studied relaxed inertial subgradient extragradient methods for solving variational inequality problems involving quasi-monotone operator and obtained weak convergence. For more results in this direction, see for instance [1, 28,29,30, 38] and cited references. Motivated and inspired by the work of [9, 18, 29, 33, 37], we construct a new inertial algorithm that is simple and efficient for approximating solutions of variational inequlity problems and fixed point problems using subgradient extragradient type method.

Our contributions in this research include the following:

-

(a)

A new inertial self-adaptive subgradient extragradient algorithm which does not require the prior knowledge of Lipschitz constant is constructed. The variable stepsize \(\lambda _n\) does not need the \(\lim _{n\rightarrow \infty } \lambda _n=0,\ \text{ or }\ \sum ^{\infty }_{n=1}\lambda _n=0\) as in the case of [36]. It is more applicable than fixed stepsizes.

-

(b)

The inertial term we use improves the rate of convergence greatly. It is quite different from the one considered by [10, 11, 19, 23, 24]. It does not also require computing norm difference between \(x_n\) and \(x_{n-1}\) before choosing the inertial factor, \(\theta _n\).

-

(c)

We obtain that the iterative sequence \(\lbrace x_n \rbrace \) converges strongly to the solution set. Unlike the weak convergences obtained by [5, 29, 38]. We assume that the single-valued operator A is not required to be strongly monotone as in the case of [33] or strong pseudomonotone used by [36]. These two assumptions are stronger than being monotone and Lipschitz continuous that we consider.

-

(d)

The general class of operator called the demicontractive mapping is considered. Many important operators like nonexpansive mapping, pesudocontractivs, k-strictly pseudocontractive, quasi-nonexpansive operators among many others are all embedded in demicontractive mapping (see Remark 2.2 below). It is a more general class of operators than the ones used by [1, 4, 33, 38].

-

(e)

Numerical examples are provided which show the general performance of our algorithm.

The rest of the paper is organized as follows: the preliminaries in Sect. 2 deal with basic definitions of the terms and related lemmas, which we state without their proofs. In Sect. 3, we state our algorithm and assumptions for our operators and control sequences. The convergence analysis is given in Sect. 4 while in Sects. 5 and 6 are devoted for numerical illustartions and conclusion, respectively.

2 Preliminaries

We list some basic concepts and lemmas which are useful for constructing and analysing the convergence of our algorithm.

Definition 2.1

Let H be a real Hilbert space and \(\forall x,y \in H, p \in F(T)\). A map \(T: H\rightarrow H\) is called:

-

(1)

monotone on H if, \(\langle T(x)-T(y), x-y\rangle \ge 0;\)

-

(2)

\(\eta \)-strongly monotone on H if there exists \(\eta > 0\) such that \(\langle T(x) - T(x), x-y\rangle \ge \eta \Vert x-y\Vert ^2; \)

-

(3)

Lipschitz continuous on H if, there exists a constant \(L> 0\) such that \(\Vert T(x) - T(y)\Vert \le L\Vert x-y\Vert ;\)

-

(4)

a nonexpansive mapping if \(\Vert Tx-Tx\Vert \le \Vert x-y\Vert ;\)

-

(5)

a quasi-nonexpansive on H if, \(\Vert T(x)-p\Vert \le \Vert x-p\Vert ;\)

-

(6)

\(\kappa \)-strictly pseudo-contractive on H if, \(\Vert Tx-Ty\Vert ^2\le \Vert x-y\Vert ^2 + \kappa \Vert x-y-(Tx-Ty)\Vert ^2\), for some \(\kappa \in [0,1);\)

-

(7)

\(\sigma \)-demi-contractive if, there exists \(\sigma \in [0,1)\) such that \(\Vert Tx-p\Vert ^2 \le \Vert x-p\Vert ^2 + \sigma \Vert (I-T)x\Vert ^2;\) or equivalently \(\langle Tx-x,x-p\rangle \le \frac{\sigma -1}{2}\Vert x-Tx\Vert ^2;\) or equivalently \(\langle Tx-p,x-p\rangle \le \Vert x-p\Vert ^2 + \frac{\sigma -1}{2}\Vert x-Tx\Vert ^2.\)

Remark 2.2

We observe that:

-

(i)

(7) contains as a special case, nonexpansive mapping, quasi-nonexpansive mapping, \(\kappa \)-strictly pseudo-contractive mapping with a nonempty fixed point.

-

(ii)

All nonexpansive operators are \(\kappa \)-strictly pseudo-contractive mapping with a nonempty fixed point.

-

(iii)

Also, all quasi-nonexpansive mappings are a subclass of 0-demi-contractive mapping.

-

(iv)

Nonexpansive mappings are contained in quasi-nonexpansive mapping.

But the converse of all of these definitions are not necessarily true. To understand this, consider the following examples below.

Example 2.3

[13] We consider a demicontractive mapping which is not neccessarily pseudocontractive or \(\kappa \)-strictly pseudo-contractive. Let \(H={\mathbb {R}}, C= [-1,1]\), let \(T: C \rightarrow C\) be defined by \(Tx = \frac{2}{3}x sin(\frac{1}{x})\) if \(x\ne 0\) and \(T0=0\) otherwise. Observe that the only fixed point of T is zero (0). However, for \(x\in C,\)

for any \(\sigma \in [0,1),\) this shows that T is a demi-contractive.

Let us now show that T is not pseudo-contractive mapping. Let \(x=2/\pi , y=2/3\pi \). Then,

‘It follows that

Example 2.4

[38] We consider a case where T is quasi-nonexpansive but fails to be nonexpansive. Let \(Tx=\frac{x}{2}sinx, \ \text{ if }\ x\ne 0\) and \(Tx=x,\) then we have that \(x=\frac{x}{2}sinx,\) which implies that \(sinx =2,\) impossible. Thus, we obtain that \(x=0,\) which means that \(F(T)=\lbrace 0\rbrace .\)

Now, for all \(x \in H,\)

this shows that T is quasi-nonexpansive. However, setting \(x=2\pi \) and \(y=\frac{3\pi }{2},\) we get

which further means that the operator T is not a nonexpansive mapping.

Example 2.5

[7] We give an example of demi-contractive mapping that is not quasi-nonexpansive and not pseudo-contractive mapping. Let \(f: [-2, 1] \rightarrow [-2, 1]\) be a real-valued function defined by \(f(x)=-x^2-x\). Then, it is demi-contractive on \([-2,1]\) and conitnuous. It is neither quasi-nonexpansive nor pseudo-contractive mapping on \([-2,1]\).

Definition 2.6

The mapping \(P_C: H \rightarrow C\) which assigns to each \(v\in H\), the unique point \(P_C(v)\) such that \(\Vert P_C(v)-v\Vert = \inf \lbrace \Vert w-v\Vert : w\in C\rbrace .\) This is called the projection operator.

The operator \(P_C\) satisfies the following condition:

Also, for

Further implication is that

For details on the metric projections, an interested reader is encouraged to consult [44, section 3].

Lemma 2.7

[39] Let H be a Hilbert space and \(S: H \rightarrow H\) be a nonexpansive mapping with a nonempty fixed point. If \(\lbrace x_n \rbrace \) is a sequence in H that converges weakly to a point \(x^*\) and \(\lbrace (I-S)x_n\rbrace \) converges strongly to y, then \((I-S)x^* =y.\)

Lemma 2.8

Let H be a Hilbert space. Then, the following results hold for all \(x,y\in H, \lambda \in {\mathbb {R}}\):

-

(a)

\(2\langle x,y\rangle =\Vert x\Vert ^2 + \Vert y\Vert ^2- \Vert x-y\Vert ^2= \Vert x+y\Vert ^2 -\Vert x\Vert ^2 - \Vert y\Vert ^2,\)

-

(b)

\(\Vert x-y\Vert ^2 \le \Vert x\Vert ^2 + 2\langle y, x-y\rangle \)

-

(c)

\(\Vert \lambda x +(1-\lambda )y\Vert ^2 = \lambda \Vert x\Vert ^2 + (1-\lambda )\Vert y\Vert ^2 -\lambda (1-\lambda )\Vert x-y\Vert ^2.\)

Lemma 2.9

[32] Let \(\lbrace a_n \rbrace \) be a sequence of non-negative real numbers, \(\lbrace \beta _n \rbrace \) be a sequence of real numbers in (0, 1) with condition \(\sum ^{\infty }_{n=1}\beta _n =0,\) and \(\lbrace d_n\rbrace \) be a sequence of real numbers. Assume that

and

-

(a)

\(\sum ^{\infty }_{n=0}\alpha _n =\infty \)

-

(b)

\(\limsup _{n\rightarrow }d_n\le 0.\)

Then, \(\lim _{n\rightarrow \infty } a_n =0.\)

Lemma 2.10

[24] Let \(\lbrace a_n \rbrace \) be sequence of non-negative real numbers satisfying the following inequality:

where \(\lbrace \beta _n \rbrace \) is a sequence in (0, 1) and \(\lbrace \delta _n \rbrace \) is a sequence of real numbers. suppose that \(\sum ^{\infty }_{n=1}\gamma _n < \infty , \) and \(\delta _n \le \beta _n M\) for some \(M> 0.\) Then, \(\lbrace a_n \rbrace \) is a bounded sequence.

Lemma 2.11

[19] Let \(A:H\rightarrow H\) be a monotone and L-Lipschtiz continuous mapping on H. Let \(S=P_C(I-\mu A),\) where \(\mu > 0.\) If \(\lbrace x_n \rbrace \) is a sequence in H satisfying \(x_n \rightharpoonup q\) and \(x_n -Sx_n \rightarrow 0\) then \(q\in VI(C,A)=F(S).\)

Lemma 2.12

[23] Let \(\lbrace a_n\rbrace \) be a sequence of nonnegative real numbers such that there exists a subsequence \(\lbrace a_{n_{j}} \rbrace \) of \(\lbrace a_n\rbrace \) such that \(a_{n_{j}} \le a_{n_{j}+1}\) for all \(j\in {\mathbb {N}}.\) Then, there exists a nondecreasing sequence \(\lbrace m_k \rbrace \) of \({\mathbb {N}}\) such that \(\lim _{k\rightarrow \infty } m_k =\infty \) and the following properties are satisfied by all (sufficiently large) number \(k\in {\mathbb {N}}:\)

That is, \(m_k\) is the largest number n in the set \(\lbrace 1,2,...,k \rbrace \) such that \(a_n \le a_{n+1}.\)

3 The proposed algorithm

We present the proposed algorithm in this section and state the standard assumptions for the control sequences and the operators.

Remark 3.4:

-

i)

See that Assumption 3.1 (2) requires the operator A to be monotone and Lipschitz continuous.

-

ii)

The stepsize \(\lambda _n\) in (3.2) is self-adaptive. It is being generated at each iteration which makes our algorithm easily implemeneted without the prior knowledge of the Lipschitz constant of operator A.

-

iii)

The inertial term \(\theta _n (x_n - x_{n-1})\) contains an extra-term like Halpern iterative scheme, this greatly improves the rate of convergence of our proposed Alogrithm.

4 Convergence analysis

To establish the main theorem of this paper, the following lemmas should be stated and proved.

Lemma 4.1

Let \(\lbrace x_n \rbrace \) be the recursive sequence generated by Algorithm 3.3 such that Assumptions 3.1 and 3.2 are satisfied, then \(\lbrace x_n \rbrace \) is bounded.

Proof

Let \(x^* \in \Gamma .\) Then by the definition of variational inequality, we obtain that

Since A is monotone, we get that

Thus,

Hence,

We therefore obtain from the difinition of \(T_n\) that

It follows that

Now, using the definition of projection, its characterization and (4.2), we get

Observe from (3.2) that \(\lambda _n\) is a monotone nonincreasing sequence. Without loss of generality, we may assume that the \(\lim _{n\rightarrow \infty } \lambda _n = \lambda .\) Therefore,

It follows from (4.3) and (4.4) that

From the Algorithm 3.3, step 2, using the definition of \(q_n\), condition (d) of Assumption 3.2 and for all \(x^* \in \Gamma ,\) we obtain

Now,

Furthermore

Let \(\gamma _n = \tau _n (1-\rho )\) in (4.8). Since, \(\lim _{n\rightarrow \infty }\frac{\theta _n}{\tau ^2_n}\Vert x_n - x_{n-1}\Vert =0,\) set

and apply Lemma 2.10 in (4.8) to obtain

Thus, we conclude from (4.9) and Lemma 2.10 that the sequence, \(\lbrace x_n \rbrace \) is bounded. Consequently, we obtain that \(\lbrace w_n \rbrace , \lbrace y_n \rbrace , \lbrace z_n \rbrace \) and \(\lbrace q_n \rbrace \) are all bounded sequences and this completes the proof of Lemma 4.1. \(\square \)

Lemma 4.2

For all \(x^* \in \Gamma ,\) we have

where \(\zeta _n=\frac{2\tau _n(1-\rho )}{1-\tau _n \rho }\).

Proof

Let \(x^* \in \Gamma .\) Then,

It follows from (4.10), (3.3) and Lemma 2.8 (b) that

Letting \(M_0=Sup \bigg \lbrace (1+\tau _n \rho )\Vert x_0-x^*\Vert ^2,\frac{\theta _n}{\tau ^2_n}\Vert x_n-x_{n-1}\Vert ^2,\frac{\Vert x_n-x^*\Vert ^2}{2\tau _n}, 2\tau ^2_n\frac{\theta _n}{\tau ^2_n}\Vert x_n -x_{n-1}\Vert ^2; n\in {\mathbb {N}} \bigg \rbrace \) and in (4.11), we conclude that

Putting \(\zeta _n=\frac{2\tau _n(1-\rho )}{1-\tau _n \rho }\) we can re-write (4.12) as follows:

This completes the proof of Lemma 4.2. \(\square \)

Next, we establish the following important lemma.

Lemma 4.3

We prove that

Proof

From the algorithm and for all \(x^* \in \Gamma ,\) we get

Furthermore from the Algorithm and for all \( x^* \in \Gamma ,\)

Thus, it follows from (4.15) that

where \(K_0=\sup \bigg \lbrace \Vert f(w_n)-x^*\Vert ^2, \Vert x_n -x^*\Vert ^2,\tau _n\frac{\theta _n}{\tau ^{2}_n}\Vert x_n - x_{n-1}\Vert ^2, \tau _n \Vert x_0-x^*\Vert ^2: n\in {\mathbb {N}}\bigg \rbrace ,\) completing lemma 4.3. \(\square \)

Lemma 4.4

For any \(x^* \in \Gamma \), we obtain

Proof

The result follows immediately from Lemma 4.3. \(\square \)

We are now ready to establish the main theorem of this paper.

Theorem 4.5

Let C be a nonempty closed convex subset of a real Hilbert space, H. Let A be a monotone and Lipschitz continuous defined on H and \(T: H \rightarrow H\), be a \(\sigma \)-demi-contractive mapping. Let f be a contraction map defined on H. Assume the solution set \(\Gamma \ne \emptyset .\) If the Assumptions 3.1 and 3.2 are satisfied, then the sequence \(\lbrace x_n \rbrace \) converges strongly to the solution set.

Proof

Since \(P_{\Gamma }f\) is a contraction on H, there exists \(q\in \Gamma \) such that \(q=P_{\Gamma }f(q)\). Thus, we prove that the iterative sequence \(\lbrace x_n \rbrace \) converges strongly to \(q=P_{\Gamma }f(q).\) In order to establish this result, we consider the following two cases.

Case 1: Suppose there is \(n_0 \in {\mathbb {N}}\) such that \(\lbrace \Vert x_n -q\Vert \rbrace ^{\infty }_{n=n_{0}}\) is nonincreasing. Then, \(\lim _{n\rightarrow \infty } \lbrace \Vert x_n -q\Vert \rbrace \) exists. It follows from this fact that

From the Algorithm 3.3, we get

It follows from (4.17) and the Assumption 3.2(b–c) that

From Lemma 4.3, we obtain that

It follows from (4.19) that

Subsequently,

Since the \(\lim _{n\rightarrow \infty }\lbrace \Vert x_n-q\Vert \rbrace \) exists,it follows that

That is,

And consequently from the Lemma 4.4, we get

Using the condition on \(\tau _n\) and definition of \(q_n\), we estimate that

It follows from (4.23) and (4.19) that

Now, using the definition of \(q_n\) once again, (4.18), (4.19) and (4.24), we get

And consequently from (4.25), we conclude that

Applying the condition on \(\alpha _n\), the Assumption 3.2(b) and the estimate (4.26), we obtain

Thus, we obtain from (4.27) that

Finally, applying the condition of \(\tau _n\) in the inequality below, using (4.26), we get that

Therefore,

Because \(\lbrace x_n\rbrace \) is bounded (see Lemma 4.1), there exists a subsequence \(\lbrace x_{n_{k}}\rbrace \) of \(\lbrace x_n\rbrace \) such that \(\lbrace x_{n_{k}}\rbrace \) weakly converges to some q as \(k\rightarrow \infty \) and

Since \(x_{n_{k}}\rightharpoonup q\) and \(\Vert w_n - y_n\Vert =\Vert w_n -P_C(w_n -\lambda _n Aw_n)\Vert \rightarrow 0\) by Lemma 2.11, we obtain \(q\in VI(C,A).\) Furthermore, since \(\Vert z_n - x_n\Vert \rightarrow 0\), \(z_{n_{k}}\rightharpoonup q\) and \(\lim _{n\rightarrow \infty }\Vert z_n - Tz_n\Vert =0,\) we get from these facts that \(q\in F(T).\) Therefore, \(q\in VI(C,A)\cap F(T),\) that is, \(q\in \Gamma .\) Also, by \(p=P_{VI(C,A)\cap F(T)}(0)\), we get

By our estimate that \(\Vert x_{n+1}-x_n\Vert \rightarrow 0,\) we obtain

Therefore, using (4.12), (4.13), (4.31) and Lemma 2.9, we find that the sequence \(\lbrace x_n \rbrace \) converges strongly to q.

Case 2: There exists a subsequence \(\lbrace \Vert x_{n_{k}}-p\Vert ^2\rbrace \) of \(\lbrace \Vert x_n - p\Vert ^2\rbrace \) such that \(\Vert x_{n_{k}}-p\Vert ^2 < \Vert x_{n_{k}+1}-p\Vert ^2\) for all \(k\in {\mathbb {N}}.\) It follows from Lemma 2.12 that there exists a nondecreasing sequence \(\lbrace m_k \rbrace \) of \({\mathbb {N}}\) such that \(\lim _{k\rightarrow \infty }\) and the following inequalities hold for all \(k\in {\mathbb {N}}:\)

From Lemma 4.3, we get that

Using (4.33) together with assumptions in \(\lbrace \lambda _n\rbrace \) and \(\lbrace \tau _n \rbrace ,\) it follows that

However, from Lemma 4.4, we obtain

Using Lemma 4.1, it follows from (4.35) that

Furthermore, by the same argument in case 1, we have

It follows from (4.12) and (4.32) that

and hence

Since \(\zeta _{m_{k}}>0\) and using (4.32) we get

Taking the limit in the above inequality as \(k\rightarrow \infty ,\) we conclude that \(x_k\) converges strongly to \(q=P_{\Gamma }f(q).\) This completes the proof of Theorem 4.5. \(\square \)

5 Numerical illustrations

Let \({H}=\left( l _2({\mathbb {R}}), ~||. ||_{ l _2}\right) \), where \( l _2({\mathbb {R}}):=\{x=(x_1, x_2, x_3, \dots ),~x_i\in {\mathbb {R}}:\sum _{i=1}^\infty |x_i|^2<\infty \}\) and \(||x||_{ l _2}:= \left( \sum _{i=1}^\infty |x_i|^2 \right) ^{\frac{1}{2}},~~\forall x \in l _2({\mathbb {R}}).\) Let \({C}=\{x\in { l _2}({\mathbb {R}}):||x-a||_{{ l _2}}\le r\},\) where \(a=(1, \frac{1}{2}, \frac{1}{3}, \cdots )\), \(r=3\). Then C is a nonempty closed and convex subset of \({ l _2}({\mathbb {R}})\). Thus,

Now, define the operators \(A,f,T: { l _2}({\mathbb {R}}) \rightarrow { l _2}({\mathbb {R}})\) by

Then A is Lipschitz continuous and monotone with Lipschitz constant \({L}=1\), f is a contraction with \(\rho =\frac{1}{3}\) and T is a demi-contractive mapping with \(\sigma =\frac{117}{169}\).

Furthermore, we choose \(\lambda _1=1\), \(\mu =0.5\), \(\tau _n=\frac{1}{n+1}\), \(\alpha _n=\frac{2n+3}{50n+100}\), \(\beta _n=\frac{n+1}{100n+2}\) and

where \(e_n=\frac{1}{n^2}\) and \(\theta =0.3\). Then, we consider the following cases for the numerical experiments.

Case 1: Take \(x_1= (1, \frac{1}{2}, \frac{1}{3}, \cdots ) \) and \(x_0=(\frac{1}{2}, \frac{1}{5}, \frac{1}{10}, \cdots )\).

Case 2: Take \(x_1= (\frac{1}{2}, \frac{1}{5}, \frac{1}{10}, \cdots )\) and \(x_0=(1, \frac{1}{2}, \frac{1}{3}, \cdots )\).

Case 3: Take \(x_1= (1, \frac{1}{4}, \frac{1}{9}, \cdots )\) and \(x_0= (\frac{1}{2}, \frac{1}{4}, \frac{1}{8}, \cdots ).\)

The stopping criterion is \(\text{ TOL}_n < 10^{-5}\), where \(\text{ TOL}_n=0.5\Vert x_n - T(PC(x_n - x_n))\Vert ^2\). Note that \(\text{ TOL}_n=0\) implies that \(x_n\) is a solution.

Remark 5.1

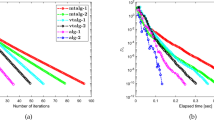

The numerical illustration 5 is provided to check the general performance of our Algorithm 3.3. The Table 1 and Fig. 1 show the time it takes and number of iterations it takes for the recursive sequence to converge in the cases 1 to 3 stated above.

6 Conclusion

In the real Hilbert space setting, we have proposed subgradient extragradient methods for approximating a common solution for variational inequality problems and fixed point problems. We obtained a strong convergence under some mild assumptions, that is, the associated single-valued operator A is monotone and Lipschitz continuous. Furthermore, we have adopted self-adaptive stepsize which is generated at each iteration. An appropriate computational experiment is provided to support our theoritical argument. Our algorithm is an improvement to the recent work of [33, 37, 38] among other already announced results in this direction.

References

Abbas, M., Iqbal, H.: Two inertial extragradient viscosity algorithms for solving variational inequality and fixed point problems. J. Nonlinear Var. Anal. 4, 377–398 (2020)

Baiocchi, C., Capelo, A.: Variational and Quasivariational Inequalities. Applications to Free Boundary Problems. Wiley, New York (1984)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2, 183–202 (2009)

Ceng, L.-C., Shang, M.: Hybrid inertial subgradient extragradient methods for variational inequalities and fixed point problems involving asymptotically nonexpansive mappings. Optimization (2019). https://doi.org/10.1080/02331934.2019.1647203

Censor, Y., Gibali, A., Reich, S.: The subgradient extragradient method for solving variational inequalities in Hilbert space. J. Optim. Theory Appl. 148, 318–335 (2011)

Censor, Y., Gibali, A., Reich, S.: Strong convergence of subgradient extragradient methods for the variational inequality problem in Hilbert space. Optim. Methods Softw. 26, 827–845 (2011)

Chidume, C.E., Măruşter, Ş: Iterative methods for the computation of fixed points of demicontractive mappings. J. Comput. Appl. Math. 234(3), 861–882 (2010). https://doi.org/10.1016/j.cam.2010.01.050

Gibali, A., Jolaoso, L.O., Mewomo, O.T., Taiwo, A.: Fast and simple Bregman projection methods for solving variational inequalities and related problems in Banach spaces. Results Math. 75, Art. No. 179, 36 pp (2020)

Gibali, A.: A new non-Lipschitzian projection method for solving variational inequalities in Euclidean spaces. J. Nonlinear Anal. Optim. 6, 41–51 (2015)

Godwin, E.C., Izuchukwu, C., Mewomo, O.T.: An inertial extrapolation method for solving generalized split feasibility problems in real Hilbert spaces. Boll. Unione Mat. Ital. 14(2), 379–401 (2021)

Godwin, E.C., Izuchukwu, C., Mewomo, O.T.: Image restoration using a modified relaxed inertial method for generalized split feasibility problems Math. Methods Appl. Sci. 46(5), 5521–5544 (2023)

He, B.S., Liao, L.Z.: Improvements of some projection methods for monotone nonlinear variational inequalities. J. Optim. Theory Appl. 112, 111–128 (2002)

Hicks, T.L., Kubicek, J.D.: On the Mann iteration process in a Hilbert space. J. Math. Anal. Appl. 59(3), 498–504 (1977). https://doi.org/10.1016/0022-247x(77)90076-2

Izuchukwu, C, Mebawondu, A.A., Mewomo, O.T.: A new method for solving split variational inequality problems without co-coerciveness. J. Fixed Point Theory Appl. 22(4), Art. No. 98 (2020)

Izuchukwu, C., Ogwo, G.N., Mewomo, O.T.: An inertial method for solving generalized split feasibility problems over the solution set of monotone variational inclusions. Optimization (2020). https://doi.org/10.1080/02331934.1808648

Jolaoso, L.O., Taiwo, A., Alakoya, O.T., Mewomo, O.T.: Strong convergence theorem for solving pseudomonotone variational inequality problem using projection method in a reflexive Banach space. J. Optim. Theory Appl. 185(3), 744–766 (2020)

Khan, S.H., Alakoya, T.O., Mewomo, O.T.: Relaxed projection methods with self-adaptive step size for solving variational inequality and fixed point problems for an infinite family of multivalued relatively nonexpansive mappings in Banach Spaces. Math. Comput. Appl. 25, Art. 54 (2020)

Korpelevich, G.M.: The extragradient method for finding saddle points and other problems. Ekonomikai Matematicheskie Metody 12, 747–756 (1976)

Kraikaew, R., Saejung, S.: Strong convergence of the Halpern subgradient extragradient method for solving variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 163, 399–412 (2014)

Liu, H., Yang, J.: Weak convergence of iterative methods for solving quasimonotone variational inequalities. Comput. Optim. Appl. 77(2), 491–508 (2020)

Lorenz, D., Pock, T.: An inertial forward–backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51, 311–325 (2015)

Luo, Y.L., Tan, B.: A self-adaptive inertial extragradient algorithm for solving pseudo-monotone variational inequality in Hilbert spaces. J. Nonlinear Convex Anal. (in press) (2020)

Maingé, P.E.: A hybrid extragradient-viscosity method for monotone operators and fixed point problems. SIAM J. Control Optim. 47, 1499–1515 (2008)

Mainge, P.E.: Approximation methods for common fixed points of nonexpansive mappings in Hilbert spaces. J. Math. Anal. Appl. 325, 469–479 (2007)

Maingé, P.E.: Convergence theorems for inertial KM-type algorithms. J. Comput. Appl. Math. 219, 223–236 (2008)

Nadezhkina, N., Takahashi, W.: Strong convergence theorem by a hybrid method for nonexpansive mappings and Lipschitz-continuous monotone mappings. SIAM J. Optim. 16, 1230–1241 (2006)

Nesterov, Y.: A method of solving a convex programming problem with convergence rate O(1/k2). Sov. Math. Dokl. 27, 372–376 (1983)

Ogwo, G.N., Izuchukwu, C., Mewomo, O.T.: Inertial methods for finding minimum-norm solutions of the split variational inequality problem beyond monotonicity. Numer. Algorithms 88(3), 1419–1456 (2022)

Ogwo, G.N., Izuchukwu, C., Shehu, Y., Mewomo, O.T.: Convergence of relaxed inertial subgradient extragradient methods for quasimonotone variational inequality problems. J. Sci. Comput. (2022). https://doi.org/10.1007/s10915-021-01670-1

Ogwo, G.N., Izuchukwu, C., Mewomo, O.T.: Relaxed inertial methods for solving split variational inequality problems without product space formulation. Acta Math. Sci. Ser. B (Engl. Ed.) 42, 1701–1733 (2022)

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. Comput. Math. Math. Phys. 4, 1–17 (1964)

Saejung, S., Yotkaew, P.: Approximation of zeros of inverse strongly monotone operators in Banach spaces. Nonlinear Anal. 75, 742–750 (2012)

Shehu, Y., Iyiola, O.S., Reich, S.: A modified inertial subgradient extragradient method for solving variational inequalities. Optim. Eng. 23, 421–449 (2022). https://doi.org/10.1007/s11081-020-09593-w

Stampacchia, G.: Variational inequalities. In: Theory and Applications of Monotone Operators. Proceedings of the NATO Advanced Study Institute, Venice, Italy, Edizioni Odersi, Gubbio, Italy, pp. 102–192 (1968)

Tan, B., Xu, S.S., Li, S.: Inertial shrinking projection algorithms for solving hierarchical variational inequality problems. J. Nonlinear Convex Anal. (in press) (2020)

Thong, D.V., Hieu, D.V.: Inertial extragradient algorithms for strongly pseudomonotone variational inequalities. J. Comput. Appl. Math. 341, 80–98 (2018)

Thong, D.V., Hieu, D.V.: Modified subgradient extragradient algorithms for variational inequality problems and fixed point problems. Optimization 67(1), 83–102 (2017). https://doi.org/10.1080/02331934.2017.1377199

Tian, M., Tong, M.: Self-adaptive subgradient extragradient method with inertial modification for solving monotone variational inequality problems and quasi-nonexpansive fixed point problems. J. Inequal. Appl. (2019). https://doi.org/10.1186/s13660-019-1958-1

Xu, H.K.: Viscosity approximation methods for nonexpansive mappings. J. Math. Anal. Appl. 298, 279–291 (2004)

Censor, Y., Gibali, A., Reich, S.: Extensions of Korpelevich’s extragradient method for the variational inequality problem in Euclidean space. Optim. J. Math. Program. Oper. Res. 61(9), 1119–1132 (2012). https://doi.org/10.1080/02331934.2010.539689

Ezeora, J.N., Francis, O.: Nwawuru: an inertial-based hybrid and shrinking projection methods for solving split common fixed point problems in real reflexive spaces. Int. J. Nonlinear Anal. Appl. 14(1), 2541–2556 (2023). https://doi.org/10.22075/ijnaa.2022.24912.2852

Nwawuru, F.O., Ezeora, J.N.: Inertial-based extragradient algorithm for approximating a common solution of split-equilibrium problems and fixed-point problems of nonexpansive semigroups. J. Inequal. Appl. 2023, 22 (2023). https://doi.org/10.1186/s13660-023-02923-3

Ezeora, J.N., Enyi, C.D., Nwawuru, F.O., Richard, C.: Ogbonna. An algorithm for split equilibrium and fixed-point problems using inertial extragradient techniques. Comput. Appl. Math. 42, 103 (2023). https://doi.org/10.1007/s40314-023-02244-7

Goebel, K., Reich, S.: Uniform Convexity. Hyperbolic Geometry, and Nonexpansive Mappings, Series of Monographs and Textbooks in Pure and Applied Mathematics, vol. 83 (1984)

Acknowledgements

The authors sincerely thank the editor and the anonymous reviewers for their careful reading, constructive comments and fruitful suggestions that substantially improved the manuscript.

Funding

There is no funding for this project.

Author information

Authors and Affiliations

Contributions

The first author conceptualize, design and carry out the computations with the second author while the third author handles the numerical part and equally oversees the correctness of the entire manuscript and gives it a beautiful look.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Nwawuru, F.O., Echezona, G.N. & Okeke, C.C. Finding a common solution of variational inequality and fixed point problems using subgradient extragradient techniques. Rend. Circ. Mat. Palermo, II. Ser 73, 1255–1275 (2024). https://doi.org/10.1007/s12215-023-00978-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12215-023-00978-1

Keywords

- Extragradient

- Subgradient-extragradient

- Variational inequality

- Lipschitz constant

- Viscosity iteration

- Hilbert spaces