Abstract

A class of singularly perturbed parabolic partial differential equations with a large delay and an integral boundary condition is studied. The solution of the problem features a weak interior layer in addition to a boundary layer. This paper presents a higher-order accurate numerical method on a specially designed non-uniform mesh. The technique employs the Crank-Nicolson difference scheme in the temporal variable and an upwind difference scheme in space. The proposed method is unconditionally stable and converges uniformly, independently of the perturbation parameter. Numerical results for two model problems are presented and agree with the theoretical estimates.

1 Introduction

Ordinary and partial differential equations play a vital role in the mathematical modelling of real-world phenomena in science and engineering. To make a model more realistic, it is sometimes necessary to include past states of the system rather than only the current state. Modelling such systems leads to delay differential equations (DDEs). These equations arise in many scientific areas such as control theory [33], nonlinear optics [23], neuroscience [35], population dynamics [21], HIV infection models [13], optical feedback [18], and others [15, 38]. Specific examples of delay systems with large delay include semiconductor lasers with two optical feedback loops of different lengths [34], ring-cavity lasers with optical feedback [18], and others [15, 38]. In a DDE, the evolution of the system at a given time depends on its past history. Introducing such a delay improves the dynamics of the model but increases the complexity of the system. Therefore, studying this class of differential equations (DEs) is important.

When we associate a mathematical model with a physical phenomenon, we often capture the essentials and neglect minor components involving small parameters. A DE in which the highest-order derivative is multiplied by a small parameter \(\varepsilon \) is classified as a singularly perturbed problem (SPP). The main characteristic of such problems is the appearance of boundary and/or interior layers in the solution as \(\varepsilon \rightarrow 0\). These layers are small regions where the solution changes extremely rapidly. The presence of a delay term makes the problem even more challenging. Such phenomena cause significant numerical difficulties that can only be overcome by employing specially designed numerical methods. Unless specially designed meshes are used, conventional numerical methods for SPPs fail to reduce the error below a certain fixed limit. Such meshes are designed based on prior knowledge of the location of the layers under consideration. To overcome these limitations, many researchers have developed parameter-uniform numerical methods that perform well independently of the perturbation parameter.

Many researchers have worked on parameter-uniform numerical methods for singularly perturbed delay differential equations (SPDDEs) [8, 25]. In [2], the authors discussed an SPDDE with Dirichlet boundary conditions, while the authors of [4] studied an SPDDE with a Robin-type boundary condition. In [30], a hybrid difference method is used for parabolic problems with time delay. A stable finite difference scheme for a singularly perturbed problem with delay and advance terms is presented in [29]. In [19], a standard finite difference scheme is presented to solve a parabolic SPDDE on an equidistributed mesh. For a time-delayed convection-diffusion problem, a hybrid scheme is given in [14], while hybrid difference approaches for parabolic differential equations with delay and advance terms are studied in [20, 36]. A considerable amount of literature is available for time-delay problems, but the study of problems with space delay is still at an early stage [7, 26, 28, 40].

In the past few years, interest has grown in SPDDEs with integral boundary conditions owing to their wide applications in science and engineering, such as heat conduction, oceanography, electrochemistry, blood flow models, cellular systems, thermodynamics, and population dynamics [1, 5, 10, 11, 16, 17, 22, 24, 42]. For the existence, uniqueness, and well-posedness of solutions to such problems, readers are referred to [3, 6, 9] and the references therein. The solution of such a problem is not prescribed at the boundary points of the domain; instead, the boundary values are tied to the solution at interior points of the domain. Recently, interest in the numerical solution of this class of problems has increased considerably. In [39], the authors studied an SPDDE with an integral boundary condition using an upwind finite difference method on a piecewise-uniform Shishkin mesh. In [41], a hybrid difference scheme is used to approximate the solution of an SPDDE with large delay and an integral boundary condition. It is proved that the method is almost second-order convergent, which is optimal in comparison with [27]. The theory and numerical approximation of time-dependent SPDDEs with integral boundary conditions are little developed. In [40], a parabolic SPDDE of reaction-diffusion type with an integral boundary condition is studied. The method presented is almost second-order convergent in space and first-order convergent in time. So far, no one in the literature has considered a parabolic SPDDE of convection-diffusion type with an integral boundary condition. Motivated by the above works, this article presents a parameter-uniform numerical method for a singularly perturbed parabolic DDE with an integral boundary condition and a large delay in space.
An implicit numerical scheme comprising the Crank-Nicolson scheme in the time direction and an upwind finite difference scheme in the space direction is used to find an approximate solution. The method is parameter-uniform, with second-order convergence in time and almost first-order convergence in space.

2 The analytical problem

Let \(D=\mu \times \Delta :=(0,2)\times (0,T]\) and consider the following parabolic delay differential equation with integral boundary condition

where \(\varepsilon \ll 1\) is a small positive parameter, g(s, t), p(s), q(s), and r(s) are sufficiently smooth functions. Also, assume that the initial-boundary data \(\psi _1\), \(\psi _2\) and \(\psi _3\) are smooth and bounded functions such that

Here, f(s) is a non-negative, monotonic function such that \(\displaystyle \int _{0}^{2}f(s)ds<1\). Moreover, the given data satisfy the compatibility conditions

Rewriting (2.1) as

where

and

These assumptions ensure the existence and uniqueness of the solution [2, 31]. The solution of (2.1) exhibits a weak interior layer at \(s=1\) and a strong boundary layer at \(s=2\).

Lemma 2.1

Let \({\mathcal {P}}(s,t)\in C^{2,1}({\bar{D}})\). If \({\mathcal {P}}(0,t)\ge 0\), \({\mathcal {P}}(s,0)\ge 0\), \({{\mathcal {K}}}{{\mathcal {P}}}(2,t)\ge 0\), \({{\mathcal {L}}}{{\mathcal {P}}}(s,t)\ge 0\) for all \((s,t)\in D\), and \([{\mathcal {P}}_s](1,t)={\mathcal {P}}_s(1^+,t)-{\mathcal {P}}_s(1^-,t)\le 0\), then \({\mathcal {P}}(s,t)\ge 0\) for all \((s,t)\in {\bar{D}}\).

Proof

Let \((s^k,t^k)\in D\) and \( \displaystyle {\mathcal {P}}(s^k,t^k)=\min \limits _{(s,t)\in {\bar{D}}} {\mathcal {P}}(s,t)\). Consequently,

Suppose \({\mathcal {P}}(s^k,t^k)<0\). It follows that \((s^k,t^k)\notin \Gamma :=\Gamma _1\cup \Gamma _2\cup \Gamma _3\).

Case I: If \(s^k\in (0,1)\), then

Case II: If \(s^k\in (1,2)\), then

Case III: If \(s^k=1\), then

$$\begin{aligned}{}[{\mathcal {P}}_s](s^k,t^k)={\mathcal {P}}_s(s^{k+},t^k)-{\mathcal {P}}_s(s^{k-},t^k)>0 \text{ since } {\mathcal {P}}(s^k,t^k)<0 . \end{aligned}$$

The result thus follows from a contradiction. \(\square \)

As a consequence of Lemma 2.1, obtaining the following stability estimate is straightforward.

Lemma 2.2

The solution of (2.1) satisfies

Proof

Define \(\theta _{\pm }(s,t)= \displaystyle \Vert y\Vert _{\infty ,\Gamma }+\frac{1}{\eta }\Vert {\mathcal {G}} \Vert _{\infty , {\bar{D}}} \pm y(s,t),\;\;(s,t)\in {\bar{D}}\). For \((s,t)\in \Gamma \), \(\theta _{\pm }(s,t)\ge 0\), and if \((s,t)\in D\), it follows that

and the result follows as a consequence of Lemma 2.1. \(\square \)

Generally, we may take homogeneous boundary data \(\psi _1=\psi _2=\psi _3=0\) by subtracting some appropriate smooth function from y that satisfies the original boundary data [37].

Lemma 2.3

Let y be the solution of (2.1). Then there exists a constant C independent of \(\varepsilon \) such that

Proof

For \(i=0\), the result follows from Lemma 2.2. The assumption \(\psi _1(s,t)=\psi _3(s,t)=0\) gives \(y=0\) along the left and right hand sides of D, which implies \( y_t=0\) along these sides. Also, \( \psi _2(s,t)=0\) gives \(y=0\) along the line \(t=0\). Thus \(y_{s}=0=y_{ss}\) along the line \(t=0\). Now, put \(t=0\) in (2.1) to obtain

implying \(y_t(s,0)=g(s,0)\) since \(y_{s}(s,0)=y_{ss}(s,0)=y(s,0)=y(s-1,0)=0\). Thus \(|y_t|\le C\) on \(\Gamma \), as g(s, t) is continuous on \({\bar{D}}\). Applying the differential operator \({\mathcal {L}}\) to \(y_t(s,t)\), we get

An application of Lemma 2.1 yields \(\left| y_t\right| \le C\) on \({\bar{D}}\).

Now \(y_{tt}=0\) on \(\Gamma _1\cup \Gamma _3\) since \(y_t=0\) on \(\Gamma _1\) and \(\Gamma _3\). Differentiating (2.1) with respect to t and putting \(t=0\), we obtain

Since \(y_t(s,0)=g(s,0)\),

and by definition

From (2.5), we have

Since g is continuous on \({\bar{D}}\), for a sufficiently large value of C, \(|y_{tt}|\le C\) on \(\Gamma _2\). Hence, \(|y_{tt}|\le C\) on \(\Gamma \). Moreover, it is easy to verify that

on D and the proof follows from Lemma 2.1. \(\square \)

3 Semi-discretization in time

Let \({{\mathbb {T}}_t}^M=\left\{ t_k=kT/M, k=0:M\right\} \) be an equidistant mesh that partitions the domain [0, T] into M sub-intervals. Next, we semi-discretize (2.1) using the Crank-Nicolson scheme in the time variable. The resulting semi-discrete problem on \({{\mathbb {T}}_t}^M\) reads

where \(s\in [0,2]\) and \(k= 0,1,\ldots , M-1\), such that

Here, \(Y(s,t_{k+1})\) denotes a numerical approximation of the continuous solution y at the \((k+1)\)th time step, \(\Delta t=T/M\) is the time step, \(\displaystyle l(s)=\frac{\Delta t q(s)+2}{2\Delta t}\) and \(\displaystyle m(s)=\frac{2-\Delta t q(s)}{2\Delta t}\).
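The role of the coefficients \(l(s)\) and \(m(s)\) can be illustrated on a scalar model. The following minimal sketch is not part of the analysis: it applies the Crank-Nicolson scheme to the hypothetical test equation \(y'+qy=0\), \(y(0)=1\), with constant q, under the assumption that dropping the spatial and source terms leaves the update \(l\,Y_{k+1}=m\,Y_k\). The function names and the test equation are illustrative assumptions.

```python
import math


def cn_coefficients(q, dt):
    """Return (l, m) with l = (dt*q + 2)/(2*dt) and m = (2 - dt*q)/(2*dt)."""
    l = (dt * q + 2.0) / (2.0 * dt)
    m = (2.0 - dt * q) / (2.0 * dt)
    return l, m


def cn_scalar_decay(q, T, M):
    """Crank-Nicolson for the hypothetical test ODE y' + q*y = 0, y(0) = 1.

    Each step solves l * Y_{k+1} = m * Y_k, i.e. the time discretization
    with the spatial and source terms dropped (an assumption of this sketch).
    """
    dt = T / M
    l, m = cn_coefficients(q, dt)
    y = 1.0
    for _ in range(M):
        y = (m / l) * y
    return y


def observed_order(q=1.0, T=1.0, M=16):
    """Estimate the temporal order by halving the time step once."""
    exact = math.exp(-q * T)
    e_coarse = abs(cn_scalar_decay(q, T, M) - exact)
    e_fine = abs(cn_scalar_decay(q, T, 2 * M) - exact)
    return math.log2(e_coarse / e_fine)
```

Note that \(l-m=q\) and \(l+m=2/\Delta t\). For this model problem, halving the time step should reduce the error by a factor close to four, in line with the second-order behaviour expected of the Crank-Nicolson scheme.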

Writing (3.1) as

where

and

Operator \({\mathfrak {L}}_{CN}\) satisfies the following discrete maximum principle.

Lemma 3.1

Let the function \(\chi (s,t_{k+1})\) satisfy \(\chi (s,t_{k+1})\ge 0\) for \(s=0,2\) and \({\mathfrak {L}}_{CN}\chi (s,t_{k+1})\ge 0\) for all \(s\in \mu \). Then \(\chi (s,t_{k+1})\ge 0\) for all \(s\in {\bar{\mu }}\).

Proof

Let \(\chi (\xi ,t_{k+1})=\min \limits _{s\in \mu }\chi (s,t_{k+1})\) for some \(\xi \in \mu \). Then

Suppose \(\chi (\xi ,t_{k+1})<0\). It follows that \((\xi ,t_{k+1})\notin \Gamma \) since \(\chi (s,t_{k+1})\ge 0\) for \(s =0,2\).

Case I: If \(\xi \in (0,1]\), then

Case II: If \(\xi \in (1,2)\), then

The required result follows from contradiction. \(\square \)

Lemma 3.2

Let \(Y(s,t_{k+1})\) be the solution of (3.1). Then

Proof

Define \(\zeta _{\pm }(s,t_{k+1})=\max \left\{ |Y(0,t_{k+1})|,\frac{\Delta t}{\eta \Delta t+1}\Vert \hat{{\mathcal {G}}}\Vert _{{\bar{\mu }}}\right\} \pm Y(s,t_{k+1})\) for \(s\in [0,1]\). Clearly, \(\zeta _{\pm }(0,t_{k+1})\ge 0\). Moreover, it follows that

Similarly, define \(\displaystyle \zeta _{\pm }(s,t_{k+1})=\max \left\{ |Y(2,t_{k+1})|,\frac{\Delta t}{\eta \Delta t+1}\Vert \hat{{\mathcal {G}}}\Vert _{{\bar{\mu }}}\right\} \pm Y(s,t_{k+1})\) for \(s\in [1,2]\). Clearly, \(\zeta _{\pm }(2,t_{k+1})\ge 0.\) Also, it follows that

An application of Lemma 3.1 yields the desired result. \(\square \)

Next, we compute global errors using local error bounds. From (3.3)

where \({\mathfrak {L}}_{CN}\) is as defined in (3.3), and

with the following boundary conditions

Lemma 3.3

The local truncation error (LTE) \({\hat{e}}_{k+1}:={\tilde{Y}}(s,t_{k+1})-y(s,t_{k+1})\) at the \((k+1)\)th time step satisfies

Proof

For proof, see [12]. \(\square \)

Moreover, the LTE at each time step contributes to the global error \(E_k:=y(s,t_k)-Y(s,t_k)\). It then follows that

As a result, the time semi-discretization procedure achieves uniform convergence.

The solution \(Y(s,t_{k+1})\) of problem (3.1) admits a decomposition into smooth and singular components [32]. We write

Here, the smooth component \(X(s,t_{k+1})\) satisfies

in (0, 1], and in (1, 2) satisfies

where \(X_0(s,t_{k+1})\) satisfies the associated reduced problem, and \(Z(s,t_{k+1})\) satisfies

The solution of (2.1) contains a boundary layer at \(s=2\) and an interior layer at \(s=1\). Further, decompose \(Z(s,t_{k+1})\) as \( Z(s,t_{k+1}):=Z_I(s,t_{k+1})+Z_B(s,t_{k+1})\), where \(Z_B(s,t_{k+1})\) and \(Z_I(s,t_{k+1})\) satisfy

and

The following lemma provides bounds on the derivatives of smooth component \(X(s,t_{k+1})\) and singular component \(Z(s,t_{k+1})\).

Lemma 3.4

For \(k=0,1,2,3\), the smooth component \(X(s,t_{k+1})\) and singular component \(Z(s,t_{k+1})\) satisfy the following estimates

Proof

For proof, see [39]. \(\square \)

4 Spatial discretization

The problem’s solution exhibits a strong boundary layer at \(s=2\) and a weak interior layer at \(s=1\). Consequently, to generate a piecewise-uniform mesh \(\bar{{\mathbb {D}}}_s^N\), we partition the given interval [0, 2] into four subintervals as

where \(\displaystyle \beta =\min \left\{ 0.5,\frac{2\varepsilon \ln N}{p_0^*}\right\} \) is defined as the mesh transition parameter. Each subinterval contains N/4 mesh elements. Therefore, we may write

where
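A minimal sketch of such a piecewise-uniform mesh is given below. It assumes that the break points are \(0\), \(1-\beta \), \(1\), \(2-\beta \), and \(2\), so that the fine cells lie immediately to the left of \(s=1\) and \(s=2\). This placement, the function names, and the argument `p0` (standing for \(p_0^*\)) are assumptions made for illustration.

```python
import math


def transition_parameter(eps, N, p0):
    """beta = min(0.5, 2*eps*ln(N)/p0), the mesh transition parameter."""
    return min(0.5, 2.0 * eps * math.log(N) / p0)


def piecewise_uniform_mesh(eps, N, p0):
    """Mesh on [0, 2] with N/4 uniform elements in each of four subintervals.

    ASSUMPTION: the break points are 0, 1 - beta, 1, 2 - beta, 2.
    Returns the list of N + 1 nodes and the value of beta.
    """
    if N % 4 != 0:
        raise ValueError("N must be a multiple of 4")
    beta = transition_parameter(eps, N, p0)
    breaks = [0.0, 1.0 - beta, 1.0, 2.0 - beta, 2.0]
    n = N // 4
    nodes = []
    for a, b in zip(breaks[:-1], breaks[1:]):
        h = (b - a) / n  # uniform step inside this subinterval
        nodes.extend(a + j * h for j in range(n))
    nodes.append(2.0)
    return nodes, beta
```

With this layout the node with index \(N/2\) is exactly \(s=1\), and shifting a node in \([1,2]\) by the delay lands on the node whose index is smaller by \(N/2\), which is convenient for evaluating the delay term on the mesh.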

For \(i\ge 1\), a mesh function \(V_{i,k+1}\), and step size \(h_i\), we discretize (3.1) using the difference formulas
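A minimal sketch of difference quotients of this kind on a non-uniform mesh follows. The backward and forward quotients match the expansions used later in the proof of Lemma 4.1; the normalisation of the second-order quotient by \(2/(h_i+h_{i+1})\) is the standard choice and is an assumption here, as are the function names.

```python
def backward_diff(V, s, i):
    """D_s^- V_i = (V_i - V_{i-1}) / h_i with h_i = s_i - s_{i-1}."""
    return (V[i] - V[i - 1]) / (s[i] - s[i - 1])


def forward_diff(V, s, i):
    """D_s^+ V_i = (V_{i+1} - V_i) / h_{i+1} with h_{i+1} = s_{i+1} - s_i."""
    return (V[i + 1] - V[i]) / (s[i + 1] - s[i])


def second_diff(V, s, i):
    """delta_s^2 V_i = 2 (D_s^+ V_i - D_s^- V_i) / (h_i + h_{i+1}).

    ASSUMPTION: standard three-point quotient on a non-uniform mesh.
    """
    jump = forward_diff(V, s, i) - backward_diff(V, s, i)
    return 2.0 * jump / (s[i + 1] - s[i - 1])
```

With this normalisation the second-order quotient reproduces the second derivative of a quadratic exactly, even when neighbouring steps differ in size, and the backward quotient is exact for linear functions.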

The discrete problem on \(\bar{{\mathbb {D}}}_s^N\times {{\mathbb {T}}_t}^M\) thus reads

where

and

Moreover, for \(i=N/2\)

with

The operator \({\mathcal {L}}_{CN}^N\) satisfies the following discrete maximum principle.

Lemma 4.1

Let \(Z_{i,k+1}\ge 0\) for \(i=0,N\), \({\mathcal {L}}_{CN}^N Z_{i,k+1}\ge 0\) for \(i= 1,\ldots ,N/2-1,N/2+1,\ldots ,N-1\), and \(D_s^+Z_{N/2,k+1}-D_s^-Z_{N/2,k+1}\le 0\). Then \(Z_{i,k+1}\ge 0\) for \(i= 0, 1,\ldots ,N\).

Proof

Let \(j^*\in \left\{ 0,1,\ldots ,N\right\} \backslash \{N/2\}\) and \(Z_{j^*,k+1}=\min \limits _{\bar{{\mathbb {D}}}_s^N\times {{\mathbb {T}}_t}^M}{Z_{i,k+1}}\). Assume that \(Z_{j^*,k+1}<0\). It follows that \(j^*\notin \left\{ 0,N\right\} \).

Case I: For \(j^*\in \left\{ 1,2,\ldots ,N/2-1\right\} \)

$$\begin{aligned} {\mathcal {L}}_{CN1}^N Z_{j^*,k+1}= & {} \frac{-\varepsilon }{2} \delta _s^2Z_{j^*,k+1}+\frac{p_{j^*}}{2} D_s^-Z_{j^*,k+1}+l_{j^*}Z_{j^*,k+1}\\= & {} \frac{-\varepsilon }{{\hat{h}}_{j^*}}\left\{ \frac{Z_{j^*+1,k+1}-Z_{j^*,k+1}}{h_{j^*+1}}-\frac{Z_{j^*,k+1}-Z_{j^*-1,k+1}}{h_{j^*}} \right\} \\&+\frac{p_{j^*}}{2}\left\{ \frac{Z_{j^*,k+1}-Z_{j^*-1,k+1}}{h_{j^*}} \right\} +l_{j^*}Z_{j^*,k+1}\\< & {} 0. \end{aligned}$$

Case II: For \(j^*\in \left\{ N/2+1,\ldots , N-1\right\} \)

$$\begin{aligned} {\mathcal {L}}_{CN2}^N Z_{j^*,k+1}= & {} \frac{-\varepsilon }{2} \delta _s^2Z_{j^*,k+1}+\frac{p_{j^*}}{2} D_s^-Z_{j^*,k+1}+l_{j^*}Z_{j^*,k+1}+\frac{r_{j^*}}{2}Z_{j^*-N/2,k+1}\\\le & {} \frac{-\varepsilon }{2} \delta _s^2Z_{j^*,k+1}+\frac{p_{j^*}}{2} D_s^-Z_{j^*,k+1}+l_{j^*}Z_{j^*,k+1}+\frac{r_{j^*}}{2}Z_{j^*,k+1}\\= & {} \frac{-\varepsilon }{2} \delta _s^2Z_{j^*,k+1}+\frac{p_{j^*}}{2} D_s^-Z_{j^*,k+1}+\left( l_{j^*}+\frac{r_{j^*}}{2}\right) Z_{j^*,k+1}\\= & {} \frac{-\varepsilon }{2} \delta _s^2Z_{j^*,k+1}+\frac{p_{j^*}}{2} D_s^-Z_{j^*,k+1}+\left( \frac{q_{j^*}+r_{j^*}}{2}+\frac{1}{\Delta t}\right) Z_{j^*,k+1}\\\le & {} \frac{-\varepsilon }{2} \delta _s^2Z_{j^*,k+1}+\frac{p_{j^*}}{2} D_s^-Z_{j^*,k+1}+\left( \eta +\frac{1}{\Delta t}\right) Z_{j^*,k+1}\\= & {} \frac{-\varepsilon }{{\hat{h}}_{j^*}}\left\{ \frac{Z_{j^*+1,k+1}-Z_{j^*,k+1}}{h_{j^*+1}}-\frac{Z_{j^*,k+1}-Z_{j^*-1,k+1}}{h_{j^*}} \right\} \\&+\frac{p_{j^*}}{2}\left\{ \frac{Z_{j^*,k+1}-Z_{j^*-1,k+1}}{h_{j^*}} \right\} +\left( \eta +\frac{1}{\Delta t}\right) Z_{j^*,k+1}\\< & {} 0. \end{aligned}$$

Case III: For \(j^*=N/2\), \(D_s^+Z_{N/2,k+1}-D_s^-Z_{N/2,k+1}>0\).

The required result follows from contradiction. \(\square \)
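The minimum-point argument in Cases I and II relies on the sign pattern of the discrete operator: a positive diagonal coefficient, non-positive off-diagonal coefficients, and a positive row sum. The sketch below assembles the interior rows from the expansions above and checks this pattern numerically. It assumes \(p>0\), \(r\le 0\), \(l+r/2>0\), and the normalisation \(\delta _s^2V_i=2(D_s^+V_i-D_s^-V_i)/(h_i+h_{i+1})\); the coefficient functions passed in and all function names are hypothetical.

```python
def interior_rows(s, eps, dt, p, q, r):
    """Rows of the discrete operator at interior nodes other than i = N/2.

    Row i represents
      -(eps/2) delta^2 Z_i + (p_i/2) D^- Z_i + l_i Z_i,
    plus (r_i/2) Z_{i-N/2} when i > N/2.
    p, q, r are callables of s; returns {i: {column: coefficient}}.
    """
    N = len(s) - 1
    half = N // 2
    rows = {}
    for i in range(1, N):
        if i == half:
            continue  # the interface node is handled by a separate condition
        h_m = s[i] - s[i - 1]
        h_p = s[i + 1] - s[i]
        l_i = (dt * q(s[i]) + 2.0) / (2.0 * dt)
        diff = eps / (h_m + h_p)  # (eps/2) * 2/(h_i + h_{i+1})
        row = {
            i - 1: -diff / h_m - p(s[i]) / (2.0 * h_m),
            i + 1: -diff / h_p,
            i: diff * (1.0 / h_m + 1.0 / h_p) + p(s[i]) / (2.0 * h_m) + l_i,
        }
        if i > half:
            j = i - half  # index of the delayed node s_i - 1
            row[j] = row.get(j, 0.0) + r(s[i]) / 2.0
        rows[i] = row
    return rows


def has_m_matrix_signs(rows):
    """Check: positive diagonal, non-positive off-diagonals, positive row sums."""
    for i, row in rows.items():
        if row[i] <= 0.0:
            return False
        if any(c > 0.0 for j, c in row.items() if j != i):
            return False
        if sum(row.values()) <= 0.0:
            return False
    return True
```

The diffusion and convection parts of each row sum to zero, so the row sum equals \(l_i\) for \(i<N/2\) and \(l_i+r_i/2\) for \(i>N/2\); this is the quantity that is bounded below by \(\eta +1/\Delta t\) in Case II.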

Consequently, the following stability estimate of the discrete operator \({\mathcal {L}}_{CN}^N\) can be obtained.

Lemma 4.2

Let \(Z_{i,k+1}\) be the solution of (4.1). Then

Proof

Let \(\chi _{i,k+1}^{\pm }:=\max \left\{ |Z_{0,k+1}|,\frac{\Delta t}{\eta \Delta t+1}\Vert {\mathcal {L}}_{CN1}^N Z_{i,k+1}\Vert \right\} \pm Z_{i,k+1}\) for \(i=1,\ldots ,N/2-1\). Clearly, \(\chi _{0,k+1}^{\pm }\ge 0\) and

For \(i=N/2+1,\ldots ,N-1\), define \(\chi _{i,k+1}^{\pm }=\max \left\{ |Z_{N,k+1}|,\frac{\Delta t}{\eta \Delta t+1}\Vert {\mathcal {L}}_{CN2}^N Z_{i,k+1}\Vert \right\} \pm Z_{i,k+1}\). Clearly, \(\chi _{N,k+1}^{\pm }\ge 0\) and

Moreover, if \(i=N/2\), \((D_s^+-D_s^-)\chi _{i,k+1}^{\pm }=0.\) Hence, the required result follows from Lemma 4.1. \(\square \)

5 Error analysis

Let us decompose \(Y_{i,k+1}\) into smooth and singular components to obtain a parameter-uniform error estimate. We write \(Y_{i,k+1}:=X_{i,k+1}+Z_{i,k+1}\), where the smooth component X and the singular component Z satisfy

and

The error \(e_{i,k+1}\) is given by

Theorem 5.1

Let \(Y(s_i,t_{k+1})\) and \(Y_{i,k+1}\) be the solutions of (3.1) and (4.1), respectively. Then

Proof

The proof follows along lines similar to those presented in [39] for ordinary differential equations. \(\square \)

Finally, we combine (3.7) and Theorem 5.1 to obtain the principal convergence estimate.

Theorem 5.2

Let y and Y be the solutions of the continuous problem (2.1) and the discrete problem (4.1), respectively. Then

for \(0\le i\le N\) and \(0\le k\le M\).

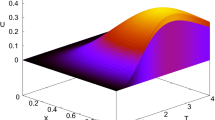

Surface plot of the numerical solution of Example 6.1 with \(M=N=128\) and \(\varepsilon =2^{-4}\)

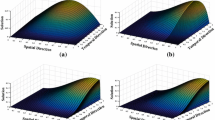

Numerical solutions of Example 6.1 for different values of \(\varepsilon \) at \(t=2\)

Surface plot of the numerical solution of Example 6.2 with \(M=N=128\) and \(\varepsilon =2^{-4}\)

Numerical solutions of Example 6.2 for different values of \(\varepsilon \) with \(t=2\)

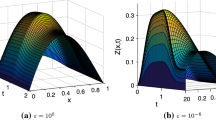

Numerical solution of Example 6.1 for different t when \(\varepsilon =2^{-1}\)

Numerical solution of Example 6.1 for different t when \(\varepsilon =2^{-4}\)

Numerical solution of Example 6.2 for different t when \(\varepsilon =2^{-1}\)

Numerical solution of Example 6.2 for different t when \(\varepsilon =2^{-4}\)

Loglog plot of maximum pointwise error for Example 6.1

Loglog plot of maximum pointwise error for Example 6.2

6 Numerical experiments

In this section, we consider two model problems, present numerical results using the proposed method, and verify the theoretical estimates numerically.

Example 6.1

Consider the following problem with integral boundary condition

Example 6.2

Consider the following problem with integral boundary condition:

The exact solutions of the above examples are not known for comparison. Therefore, the double mesh principle [32] is used to estimate the error and rate of convergence of the proposed method. The maximum absolute error \(E_{N,\bigtriangleup t}\) and the order of convergence \(P_{N,\bigtriangleup t}\) are defined as \( E_{N,\bigtriangleup t}:=\max \left| Y_{N,\bigtriangleup t}(s_i,t_{k+1})-{\tilde{Y}}_{2N,\bigtriangleup t/2}(s_i,t_{k+1})\right| \) and \( P_{N,\bigtriangleup t}:=\displaystyle \log _2\left( \frac{E_{N,\bigtriangleup t}}{E_{2N,\bigtriangleup t/2}}\right) \). Here, \(Y_{N,\bigtriangleup t}(s_i,t_{k+1})\) and \({\tilde{Y}}_{2N,\bigtriangleup t/2}(s_i,t_{k+1})\) denote the numerical solutions on \(\bar{{\mathbb {D}}}_s^N\times {{\mathbb {T}}_t}^M\) and \(\bar{{\mathbb {D}}}_s^{2N}\times {{\mathbb {T}}_t}^{2M}\), respectively.
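The two quantities of the double mesh principle can be computed as in the following minimal sketch. It assumes that the finer grid is obtained by bisecting every cell of the coarser grid in both space and time, so that entry `[k][i]` of the coarse solution and entry `[2k][2i]` of the fine solution refer to the same point; the function names and the nested-list layout are illustrative assumptions.

```python
import math


def double_mesh_error(coarse, fine):
    """Maximum |Y_N - Y_2N| over the points shared by both grids.

    ASSUMPTION: coarse[k][i] and fine[2*k][2*i] are values at the same
    point (s_i, t_k), i.e. the fine grid bisects every coarse cell.
    """
    return max(
        abs(coarse[k][i] - fine[2 * k][2 * i])
        for k in range(len(coarse))
        for i in range(len(coarse[0]))
    )


def convergence_orders(errors):
    """P = log2(E_N / E_2N) for consecutive entries of a list of errors."""
    return [math.log2(a / b) for a, b in zip(errors, errors[1:])]
```

For example, a sequence of errors that halves with each refinement gives an order of one, and a sequence that is quartered gives an order of two.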

The maximum absolute error (\(E_{N,\bigtriangleup t}\)) and the corresponding order of convergence (\(P_{N,\bigtriangleup t}\)) for Examples 6.1 and 6.2 are tabulated for different values of \(\varepsilon \), M, and N in Tables 1 and 3, respectively. In addition, Tables 2 and 4 show the order of convergence in the time variable for Examples 6.1 and 6.2 when \(N=512\) and \(\varepsilon =2^{-6}\).

The presence of both an interior and a boundary layer is apparent from the surface plots of the numerical solution for Examples 6.1 and 6.2 displayed in Figs. 1 and 3, respectively. Figures 2 and 4 further illustrate the presence of the layers at \(t=2\) for Examples 6.1 and 6.2. Figures 5-8 present the solution at different times t and for different values of \(\varepsilon \) for the given examples. As \(\varepsilon \) approaches its limiting value, the problem becomes stiffer and the solution changes exponentially across the interior and boundary layers. The log-log plots of the errors are given in Figs. 9 and 10 for Examples 6.1 and 6.2, respectively. They agree with the expected convergence rate of the proposed method on the specially generated mesh.

7 Conclusion

A class of singularly perturbed parabolic partial differential equations with a large delay and an integral boundary condition is solved numerically. The proposed method consists of an upwind finite difference scheme on a non-uniform mesh in space and a Crank-Nicolson scheme on a uniform mesh in the time variable. The non-uniform mesh in the spatial direction is chosen so that most of the mesh points lie in the regions with rapid transitions. The method is investigated for consistency, stability, and convergence. The error analysis of the proposed method reveals parameter-uniform convergence of almost first order in space and second order in time. Numerical experiments corroborate the theoretical findings.

Data availability

Data sharing not applicable to this article as no datasets were generated or analyzed during the current study.

References

Almomani, R., Almefleh, H.: On heat conduction problem with integral boundary condition. J. Emerg. Trends Eng. Appl. Sci. 3, 977–979 (2012)

Ansari, A.R., Bakr, S.A., Shishkin, G.I.: A parameter robust finite difference method for singularly perturbed delay parabolic partial differential equations. J. Comput. Appl. Math. 205, 552–566 (2007)

Ashyralyev, A., Sharifov, Y.A.: Existence and uniqueness of solutions for nonlinear impulsive differential equations with two-point and integral boundary conditions. Adv. Differ. Equ. 173, 1–11 (2013)

Avudai-Selvi, P., Ramanujam, N.: A parameter uniform difference scheme for singularly perturbed parabolic delay differential equation with Robin type boundary condition. Appl. Math. Comput. 296, 101–115 (2017)

Bahuguna, D., Abbas, S., Dabas, J.: Partial functional differential equation with an integral condition and applications to population dynamics. Nonlinear Anal. 69, 2623–2635 (2008)

Bahuguna, D., Dabas, J.: Existence and uniqueness of a solution to a semilinear partial delay differential equation with an integral condition. Nonlinear Dyn. Syst. Theory 8, 7–19 (2008)

Bansal, K., Sharma, K.K.: Parameter-robust numerical scheme for time-dependent singularly perturbed reaction-diffusion problem with large delay. Numer. Funct. Anal. Optim. 39, 127–154 (2018)

Bashier, E.B.M., Patidar, K.C.: A fitted numerical method for a system of partial delay differential equations. Comput. Math. Appl. 61, 1475–1492 (2011)

Boucherif, A.: Second-order boundary value problems with integral boundary conditions. Nonlinear Anal. 70, 364–371 (2009)

Cahlon, B., Kulkarni, D.M., Shi, P.: Stepwise stability for the heat equation with a nonlocal constraint. SIAM J. Numer. Anal. 32, 571–593 (1995)

Cannon, J.R.: The solution of the heat equation subject to the specification of energy. Quart. Appl. Math. 21, 155–160 (1963)

Clavero, C., Gracia, J.L., Jorge, J.C.: High-order numerical methods for one-dimensional parabolic singularly perturbed problems with regular layers. Numer. Methods Partial Differ. Equ. 21, 148–169 (2004)

Culshaw, R.V., Ruan, S.: A delay differential equation model of HIV infection of CD4+ T-cells. Math. Biosci. 165, 27–39 (2000)

Das, A., Natesan, S.: Uniformly convergent hybrid numerical scheme for singularly perturbed delay parabolic convection diffusion problems on Shishkin mesh. Appl. Math. Comput. 271, 168–186 (2015)

Erneux, T.: Applied Delay Differential Equations. Springer, New York (2009)

Ewing, R.E., Lin, T.: A class of parameter estimation techniques for fluid flow in porous media. Adv. Water Res. 14, 89–97 (1991)

Formaggia, L., Nobile, F., Quarteroni, A., Veneziani, A.: Multiscale modelling of the circulatory system: a preliminary analysis. Comput. Vis. Sci. 2, 75–83 (1999)

Franz, A.L., Roy, R., Shaw, L.B., Schwartz, I.B.: Effect of multiple time delays on intensity fluctuation dynamics in fiber ring lasers. Phys. Rev. E 78, 16208 (2008)

Gowrisankar, S., Natesan, S.: A robust numerical scheme for singularly perturbed delay parabolic initial boundary value problems on equidistributed grids. Electron. Trans. Numer. Anal. 41, 376–395 (2014)

Gupta, V., Kumar, M., Kumar, S.: Higher order numerical approximation for time dependent singularly perturbed differential-difference convection-diffusion equations. Numer. Methods Partial Differ. Equ. 34, 357–380 (2018)

Gurney, W.S.C., Blythe, S.P., Nisbet, R.M.: Nicholson’s blowflies revisited. Nature 287, 17–21 (1980)

Hu, M., Wang, L.: Triple positive solutions for an impulsive dynamic equation with integral boundary condition on time scales. Int. J. Appl. Math. Stats. 31, 43–66 (2013)

Ikeda, K.: Multiple-valued stationary state and its stability of the transmitted light by a ring cavity system. Opt. Commun. 30, 257–261 (1979)

Jankowski, T.: Differential equations with integral boundary conditions. J. Comput. Appl. Math. 147, 1–8 (2002)

Kaushik, A., Sharma, K.K., Sharma, M.: A parameter uniform difference scheme for parabolic partial differential equation with a retarded argument. Appl. Math. Model. 34, 4232–4242 (2010)

Kaushik, A., Sharma, N.: An adaptive difference scheme for parabolic delay differential equation with discontinuous coefficients and interior layers. J. Differ. Equ. Appl. 26, 11–12 (2020)

Kumar, D., Kumari, P.: A parameter-uniform collocation scheme for singularly perturbed delay problems with integral boundary conditions. J. Appl. Math. Comput. 63, 813–828 (2020)

Kumar, D., Kumari, P.: Parameter-uniform numerical treatment of singularly perturbed initial-boundary value problems with large delay. Appl. Numer. Math. 153, 412–429 (2020)

Kumar, K., Chakravarthy, P.P., Ramos, H., Vigo-Aguiar, J.: A stable finite difference scheme and error estimates for parabolic singularly perturbed PDEs with shift parameters. J. Comput. Appl. Math. 405, 113050 (2022)

Kumar, S., Kumar, M.: High order parameter uniform discretization for singularly perturbed parabolic partial differential equations with time delay. Comput. Math. Appl. 68, 1355–1367 (2014)

Ladyzhenskaya, O.A., Solonnikov, V.A., Ural’ceva, N.N.: Linear and quasilinear equations of parabolic type. Translated from the Russian by S. Smith. Translations of Mathematical Monographs, vol. 23. American Mathematical Society, Providence, R.I., (1968)

Linß, T.: Layer Adapted Meshes for Reaction Convection Diffusion Problems. Springer, Berlin (2010)

Mackey, M.C., Glass, L.: Oscillation and chaos in physiological control systems. Sci. New Ser. 197, 287–289 (1977)

Marconi, M., Javaloyes, J., Barland, S., Balle, S., Giudici, M.: Vectorial dissipative solitons in vertical-cavity surface-emitting lasers with delays. Nat. Photon. 9, 450–455 (2015)

Marcus, C.M., Westervelt, R.M.: Stability of analog neural networks with delay. Phys. Rev. A 39, 347–359 (1989)

Ramesh, V.P., Priyanga, B.: Higher order uniformly convergent numerical algorithm for time-dependent singularly perturbed differential-difference equations. Differ. Equ. Dyn. Syst. 29, 239–263 (2021)

Roos, H.-G., Stynes, M., Tobiska, L.: Robust Numerical Methods for Singularly Perturbed Differential Equations. Springer, Berlin (2008)

Schöll, E., Schuster, H.: Handbook of Chaos Control. Wiley, Weinheim (2008)

Sekar, E., Tamilselvan, A.: Singularly perturbed delay differential equations of convection-diffusion type with integral boundary condition. J. Appl. Math. Comput. 59, 701–722 (2019)

Sekar, E., Tamilselvan, A., Vadivel, R., Gunasekaran, N., Zhu, H., Cao, J., Li, X.: Finite difference scheme for singularly perturbed reaction diffusion problem of partial delay differential equation with nonlocal boundary condition. Adv. Differ. Equ. 2021, 151 (2021)

Sharma, A., Rai, P.: A hybrid numerical scheme for singular perturbation delay problems with integral boundary condition. J. Appl. Math. Comput. (2021)

Turkyilmazoglu, M.: Parabolic partial differential equations with nonlocal initial and boundary values. Int. J. Comput. Methods 12, 1550024 (2015)

Ethics declarations

Conflict of Interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

This document is the result of the research project MTR/2021/000117 funded by Science and Engineering Research Board, Department of Science & Technology, Government of India.

About this article

Cite this article

Sharma, N., Kaushik, A. A uniformly convergent difference method for singularly perturbed parabolic partial differential equations with large delay and integral boundary condition. J. Appl. Math. Comput. 69, 1071–1093 (2023). https://doi.org/10.1007/s12190-022-01783-2

DOI: https://doi.org/10.1007/s12190-022-01783-2