Abstract

In this paper, we present a new numerical methods for solving large-scale differential Sylvester matrix equations with low rank right hand sides. These differential matrix equations appear in many applications such as robust control problems, model reduction problems and others. We present two approaches based on extended global Arnoldi process. The first one is based on approximating exponential matrix in the exact solution using the global extended Krylov method. The second one is based on a low-rank approximation of the solution of the corresponding Sylvester equation using the extended global Arnoldi algorithm. We give some theoretical results and report some numerical experiments to show the effectiveness of the proposed methods compared with the extended block Krylov method given in Hached and Jbilou (Numer Linear Algebra Appl 255:e2187, 2018).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider the differential Sylvester matrix equation (DSE in short) of the form

where \(A\in \mathbb {R}^{n\times n}\), \(B\in \mathbb {R}^{p\times p}\), and \(E\in \mathbb {R}^{n\times s}\), \(F\in \mathbb {R}^{p\times s}\), are full rank matrices, with \(s\ll n,p\). The initial condition is given in a factored form as \(X_{0}=Z_{0}\tilde{Z}_{0}^{T}\) and the matrices A and B are assumed to be large and sparse.

Differential Sylvester matrix equations play a fundamental role in many problems in control, filter design theory, model reduction problems, differential equations and robust control problems; see, e.g., [1, 13, 19] and the references therein.

The exact solution of the differential Sylvester matrix Eq. (1) is given by the following result.

Theorem 1

[1] The unique solution of the differential Sylvester equations (1) is defined by

There are several methods for solving small or medium-sized differential Sylvester matrix equations. One can see, for example backward differentiation formula (BDF) and Rosenbrock method [12, 31].

During the last years, there is a large variety of methods to compute the solution of large scale matrix differential equations such as differential Lyapunov matrix equation, differential Sylvester equation and Riccati differential equation. For more details see [4, 5, 7, 18, 19, 26,27,28, 35]. For large-scale problems, the effective methods are based on Krylov subspaces. Some methods have been proposed for solving large matrix equation, see, e.g., [2, 8, 9, 18,19,20, 34]. The main idea employed in these methods is to use a extended Krylov subspace and then apply the Galerkin-type orthogonality condition. In [19], Jbilou and Hached are presented two approaches to solving the large differential Sylvester matrix equation, by using the block extended Krylov subspaces. The main idea in this work is using the extended global Krylov subspaces to solve (1).

The rest of the paper is organized as follows. In the next section, we give expression based on the global Arnoldi process of the unique solution X(t) of the differential Sylvester matrix Eq. (1). In Sect. 3, we recall the extended global Arnoldi algorithm with some of its properties. In Sect. 4, we defined EGA-exp method based on extended global Krylov subspaces and quadrature method to approximate matrix exponential and computing numerical solution of Eq. (1). In Sect. 5, we present a low-rank approximation of solution of differential Eq. (1) using projection onto extended global Krylov subspaces \(\mathcal {K}_{m}^{g}(A,E)\) and \(\mathcal {K}_{m}^{g}(B^T,F)\). Finally, Sect. 6 is devoted to numerical experiments showing effectiveness of proposed methods.

Throughout the paper, we use the following notations. The Frobenius inner product of the matrices X and Y is defined by \(\langle X,Y \rangle _F=tr(X^TY)\), where tr(Z) denotes the trace of a square matrix Z. The associated norm is the Frobenius norm denoted by \(\parallel {\cdot } \parallel _F\). The Kronecker product \(A\otimes B = [a_{i,j} B]\) where \(A=[a_{i,j}]\). This product satisfies the properties: \((A\otimes B)(C\otimes D) = (AC\otimes BD)\) and \((A\otimes B)^T = A^T\otimes B^T\). We also use the matrix product \(\diamond \) defined in [11]. The following proposition gives some properties satisfied by this product.

Proposition 1

Let \(A, \ B, \ C \in \mathbb {R}^{n \times ps}\), \(D \in \mathbb {R}^{n \times n}\), \(L \in \mathbb {R}^{p \times p}\) and \(\alpha \in \mathbb {R}\). Then we have,

- 1.

\((A + B)^T \diamond C = A^T \diamond C + B^T \diamond C\).

- 2.

\( A^T \diamond (B + C) = A^T \diamond B + A^T \diamond C\).

- 3.

\((\alpha A)^T \diamond C = \alpha (A^T \diamond C).\)

- 4.

\((A^T \diamond B)^T = B^T \diamond A.\)

- 5.

\( A^T \diamond (B (L \otimes I_s)) = (A^T \diamond B) L\).

A block matrix \(\mathcal {V}_m = [ V_1, V_2,\ldots , V_m ]\) is F-orthonormal if \(\mathcal {V}_m^T\diamond \mathcal {V}_m =I_m\). We have the following result.

Lemma 1

[24] Let \(\mathcal {V}_m = [ V_1, V_2,\ldots , V_m ]\) be an \(n\times ms\)F-orthonormal block matrix, \(Z\in \mathbb {R}^{m\times s}\) and \(Y\in \mathbb {R}^{ms\times q}\). Then we have

2 Expression of the exact solution of the differential Sylvester equation

In this section we will give the expression of the unique solution X(t) of the differential Sylvester matrix Eq. (1). This expression is based on the global Arnoldi process. The modified global Arnoldi process constructs an F-orthonormal basis \(V_1, \ V_2,\ldots ,V_m\) of the matrix Krylov subspace

Let \(\mathcal {V}_m=\left[ V_1, V_2,\ldots , V_m \right] \) and \(\widetilde{H}^A_m \) is the \((m + 1) \times m\) upper Hessenberg matrix whose entries \(h_{i,j}\) are defined by Algorithm 1 and \(H^A_m\) is the \(m \times m\) matrix obtained from \(\widetilde{H}^A_m \) by deleting its last row. We have the following relation

where \(e_m^T=[0,0,\ldots , 0, 1]\).

Let \(P_A\) be the minimal polynomial of A associated to E and \(P_{B^T}\) be the minimal polynomial of \(B^T\) associated to F, q the degree of \(P_A\) and \(q'\) the degree of \(P_{B^T}\). The following result shows that the solution X of (1) can be expressed in terms of the global Arnoldi basis.

Theorem 2

Let \(\mathcal {V}_q=\left[ V_1, V_2,\ldots , V_q \right] \) and \(\mathcal {W}_{q'}=\left[ W_1, W_2,\ldots , W_{q'}\right] \) be the F-orthonormal block matrices obtained by applying simultaneously q and \(q'\) steps of the global Arnoldi algorithm to the pairs (A, E) and \((B^T, F)\) respectively. Then the unique solution X of (1) can be expressed as:

where \(Y_{qq'}(t)\) is the solution of the low-order differential Sylvester equation

with \(\widetilde{E}_{q}=\Vert E\Vert _Fe_1^{(q)}\) and \(\widetilde{F}_{q'}=\Vert F\Vert _Fe_1^{(q')}\).

Proof

Let Z(t) be the matrix defined by \(\mathcal {V}_{q}(Y_{qq'}(t)\otimes I_s)\mathcal {W}_{q'}^{T}\). Then we have,

Since \(Y_{qq'}\) is the solution of the lower order differential Sylvester equation then \(\dot{Y}_{qq'}(t)-\mathbb {T}_{q,A}Y_{qq'}(t)-Y_{qq'}(t)\left( H^B_{q'}\right) ^{T}-\widetilde{E}_{q}\widetilde{F}_{q'}^{T}=0\). We get

Therefore, using the fact that the solution of (1) is unique, it follows that \(X(t)= \mathcal {V}_{q}(Y_{qq'}(t)\otimes I_s)\mathcal {W}_{q'}^{T}\). \(\square \)

3 The extended global Arnoldi process

In this section, we recall the extended global Krylov subspace and extended global Arnoldi process. Let V be a matrix of dimension \(n \times s\). Then the extended global Krylov subspace associated to (A, V) is given by

The extended global Arnoldi process constructs an F-orthonormal basis \(\left\{ V_1, \ V_2,\ldots , V_m\right\} \) of the extended global Krylov subspace \(\mathcal {K}_{m}^g(A,V)\) [20]. The algorithm is summarized as follows

Let \( {\mathbb V}_m = \left[ V_1,V_2,\ldots ,V_m \right] \) with \(V_i\in \mathbb {R}^{n \times 2s}\) and \(2m \times 2m\) upper block Hessenberg matrix

We have the following relation

where \(\overline{\mathbb {T}}_{m,A}=\mathbb {V}_{m+1}^{T}\diamond (A\mathbb {V}_{m})\), and \(E_m^T=[0_{2\times 2(m-1)},I_2]\) is the matrix formed with the last 2 columns of the \(2m\times 2m\) identity matrix \(I_{2m}\).

4 Matrix exponential approximation and Gauss quadrature method

In this section, we compute a approximation of the solution of the large differential Sylvester matrix Eq. (1). Our approach is based on two steps. First approximation of matrix exponential is given using extended global Krylov subspace and then the Gaussian quadrature method is applied.

The exact solution of (1) is given by

We use extended global Krylov subspace method to approximate \(e^{(t-\tau )A}E\) and \(e^{(t-\tau )B^T}F\).

By Algorithm 2 we compute \(\mathbb {V}_{m}=[V_{1},\ldots ,V_{m}]\) and \(\mathbb {W}_{m}=[W_{1},\ldots ,W_{m}]\) the F-orthonormal matrices whose columns form an basis of the subspace \(\mathcal {K}_{m}^g(A,E)\) and \(\mathcal {K}_{m}^g(B^T,F)\), respectively.

The approximation \(Z_{m,A}\) of \(Z_A=e^{(t-\tau )A}E\) is obtained by

where \(\mathbb {T}_{m,A}=\mathbb {V}_{m}^{T}\diamond (A\mathbb {V}_{m})\) (see [32, 33, 36]).

In the same way, an approximation of \(Z_B=e^{(t-\tau )B^T}F\) is given by

where \(\mathbb {T}_{m,B}=\mathbb {W}_{m}^{T}\diamond (B^T\mathbb {W}_{m})\).

That leads us to the following approximation

Assuming that \(X(t_0) = 0\), then the approximate solution of the differential Sylvester Eq. (1) is obtained by

where

with

Since m is generally very small (\(m<< n\)), the factors \(\mathbb {X}_{m,A}\) and \(\mathbb {X}_{m,B}\) can be computed using the expm function of Matlab, and we calculate the approximation of the integral of (8) by Gauss quadrature formulae.

The next result shows that the \(2m\times 2m\) matrix function \(\mathbb {X}_m(t)\) is solution of a low-order differential Sylvester matrix equation.

Theorem 3

The matrix function \(\mathbb {X}_m(t)\) defined by (8) satisfies the following low-order differential Sylvester matrix equation

where \(\widetilde{E}_{m}=\mathbb {V}_{m}^{T}\diamond E\) and \(\widetilde{F}_{m}=\mathbb {W}_{m}^{T}\diamond F.\)

Proof

The proof can be easily derived from the expression (8) and the result of Theorem 1. \(\square \)

Let \(R_{m}(t)=\dot{X}_{m}(t)-AX_{m}(t)-X_{m}(t)B-EF^{T}\) be the residual associated to the approximation \(X_m(t)\).

Theorem 4

If \(X_{m}(t)=\mathbb {V}_{m}(\mathbb {X}_{m}(t)\otimes I_s)\mathbb {W}_{m}^{T}\) is the approximation obtained at step m by the extended global Arnoldi algorithm. Then the residual \(R_m(t)\) satisfies the inequality

for the 2-norm, we have

where \(\overline{\mathbb {X}}_{m}(t)\) is the \(2\times 2m\) matrix corresponding to the last 2 rows of \(\mathbb {X}_m(t)\).

Proof

We have

Therefore

We use the following properties

and

we obtained

Since \(\mathbb {X}_{m}(t)\) is the exact solution of the equation

Then

If \(\overline{\mathbb {X}}_{m}(t)=E_m^T\mathbb {X}_{m}(t)\), then

The same way for the 2-norm, we have \(\Vert R_{m}(t)\Vert _2\le \max \left\{ \Vert T^A_{m+1,m} \overline{\mathbb {X}}_{m} (t)\Vert _2,\Vert T^B_{m+1,m} \overline{\mathbb {X}}_{m} (t)\Vert _2 \right\} .\)\(\square \)

The following result shows that the approximation \(X_m(t)\) is an exact solution of a perturbed differential Sylvester equation.

Theorem 5

Let \(X_m(t)\) be the approximate solution given by (7). Then we have

where

Proof

By multiplying (9) on the left by \(\mathbb {V}_{m}\) and on the right by \(\mathbb {W}_{m}^{T}\), we obtained

Since

then

Finally

\(\square \)

The next result states that the error \(\mathcal {E}_m(t)=X(t)-X_m(t)\) satisfies also a differential Sylvester matrix equation.

Theorem 6

Let X(t) be the exact solution of (1) and let \(X_{m}(t)\) be the approximate solution obtained at step m of Algorithm 2. The error \(\mathcal {E}_m(t)=X(t)-X_m(t)\) satisfies the following equation

Proof

We have

then

\(\square \)

Notice that from Theorem 6, the error \(\mathcal {E}_m(t)\) can be expressed in the integral form as follows

where \(\mathcal {E}_{m,0}=\mathcal {E}_{m}(t_{0}).\)

Next, we give an upper bound for the norm of the error by using the 2-logarithmic norm defined by \(\mu _2(A)=\frac{1}{2}\lambda _{{ max}}(A+A^T).\)

Theorem 7

Assume that the matrices A and B are such that \(\mu _2(A)+\mu _2(B)\ne 0\). Then at step m of the extended global Arnoldi process, we have the following upper bound for the norm of the error \(\mathcal {E}_m(t)\),

where

The matrix \(\overline{\mathbb {X}}_{m}\) is the \(2\times 2m\) matrix corresponding to the last 2 rows of \(\mathbb {X}_{m}(t)\).

Proof

We first point out that \(\Vert e^{tA}\Vert _2\le e^{\mu _{2}(A)t}.\) Using the expression (13) of \(\mathcal {E}_m(t)\), we obtain the following relation

Therefore, using (13) and the fact that \(\Vert e^{(t-\tau )A}\Vert _2\le e^{(t-\tau )\mu _{2}(A)}\), we get

Using the result of Theorem 4, we obtain \(\displaystyle {\max _{\xi \in [t_0,t]}}\Vert R_m(\xi )\Vert _2\le \alpha _m\) and then

Next, we give another upper bound for the norm of the error \(\mathcal {E}_m(t)\). \(\square \)

Theorem 8

The error \(\mathcal {E}_{m}(t)\) satisfies the following inequality

where

Proof

From the expressions of X(t) and \(X_{m}(t)\), we have

Now as

and since

we also have

We get

\(\square \)

One can use some known results [22, 33] to derive upper bounds for \(\Vert Z_A(\tau )-Z_{m,A}(\tau )\Vert _2\), and \(\Vert Z_B(\tau )-Z_{m,B}(\tau )\Vert _2\), when using the extended global Krylov subspaces.

Lemma 2

where

Proof

We have

then

and

Therefore, the error \(e_{m,A}(\tau )=Z_A(\tau )-Z_{m,A}(\tau )\) is such that

which allows to give the following expression of \(e_{m,A}\):

As \(\tau -u>0\), it follows that

Then, we get

\(\square \)

Notice that if \(\nu _{2}(A)\) is not known but \(\nu _{2}(A)\ge 0\) (which is the case for positive semidefinite matrices) then we get the upper bound

To define a new upper bound for the norm of the global error \(\mathcal {E}_m(t)\), we can use the upper bounds for the errors \(e_{m,A}\) and \(e_{m,B}\) in the expression (15) stated in Theorem 8 to get

and then we obtain

where

The approximate solution \(X_m(t)\) could be given as a product of two matrices of low rank. It is possible to decompose it as \(X_m=Z_1\, Z_2^T\) where the matrix \(Z_1\) and \(Z_2\) are of low rank (lower than 2m). Consider the singular value decomposition of the \(2m\times 2m\) matrix

where \(\varSigma \) is the diagonal matrix of the singular values of \(\mathbb {X}_{m}\) sorted in decreasing order. Let \(\mathbb {X}_{1,l}\) and \(\mathbb {X}_{2,l}\) be the \(2m\times l\) matrices of the first l columns of \( {\widetilde{G} }_1\) and \( {\widetilde{G} }_2\) respectively, corresponding to the l singular values of magnitude greater than some tolerance \(d_{tol}\). We obtain the truncated SVD

where \(\varSigma _l = \mathrm{diag}[\sigma _1, \ldots , \sigma _l]\). Setting \(Z_{1,m}=\mathbb {V}_{m} \, \left( \mathbb {X}_{1,l}\, \varSigma _l^{1/2}\otimes I_s\right) \) and \(Z_{2,m}=\mathbb {W}_{m} \, \left( \mathbb {X}_{2,l}\, \varSigma _l^{1/2}\otimes I_s\right) \) Leads to

This is very important for large problems when one doesn’t need to compute and store the approximation \(X_m\) at each iteration, see [2, 6, 8].

We summarize the above method for solving large differential Sylvester matrix equations (EGA-exp) in following algorithm.

5 Low-rank approximate solutions by extended global Arnoldi algorithm

We present in this section an approach that avoid exponential approximation and also avoid quadrature method that we use in previous sections. This approach is based on extended global Krylov projection of the differential Sylvester matrix Eq. (1). For more details on global Krylov projection method for solving large matrix equations see [2, 8, 9, 24].

Recall that when we Apply the extended global Arnoldi algorithm to the pairs (A, E) and \((B^T,F)\), we get F-orthonormal bases \(\left\{ V_1, V_2,\ldots , V_m \right\} \) and \(\left\{ W_1, W_2,\ldots , W_m\right\} \) of extended global Krylov subspaces \(\mathcal {K}_m^g(A,E)\) and \(\mathcal {K}_m^g(B^T,F)\), respectively and we have

where

We then consider approximate solution of the large differential Sylvester matrix Eq. (1) that have the low-rank form

where \(Y_{m}(t)\) the solution of the reduced differential Sylvester matrix equation

with \(\widetilde{E}_{m}=\Vert E\Vert _Fe_1^{(2m)}\) and \(\widetilde{F}_{m}=\Vert F\Vert _Fe_1^{(2m)}\).

The following theorem gives a result that allows us the computation of the norm of the residual.

Theorem 9

The Frobenius norm of the residual \(R_m(t)\) associated to the approximation \(X_{m}(t)\) satisfies the relation

where \(\overline{Y}_{m}(t)\) is the \(2\times 2m\) matrix corresponding to the last 2 rows of \(Y_m\).

Proof

See Theorem 4. \(\square \)

To solve the reduced order differential Sylvester matrix Eq. (22) one can use Backward Differentiation Formula (BDF) or Rosenbrock method, see [12, 19, 31].

We summarize steps of this approach in the following algorithm

6 Numerical experiments

In this section, we present some numerical experiments of large and sparse differential Sylvester matrix equations. We compare the two approaches proposed in this work [Algorithm 3 (EGA-exp), Algorithm 4 using BDF (EGA–BDF) and Algorithm 4 using Rosenbrock (EGA–ROS)] whit the extended block Arnoldi (EBA-exp) given in [19]. All the experiments were performed on a laptop with an Intel Core i5 processor and 4 GB of RAM using Matlab2014. The n-by-s matrices E and F are given by random values uniformly distributed on [0, 1].

6.1 Example 1

In this first example, the matrices A and B are obtained from the centered finite difference discretization of the operators:

on the unit square \([0,1]\times [0,1]\) with homogeneous Dirichlet boundary conditions. The number of inner grid points in each direction was \(n_0\) and \(p_0\) for the operators \(L_A\) and \(L_B\), respectively. The matrices A and B were obtained from the discretization of the operator \(L_A\) and \(L_B\) with the dimensions \(n = n_0^2\) and \(p=p_0^2\), respectively. The discretization of the operator \(L_A(u)\) and \(L_B(u)\) yields matrices extracted from the Lyapack package [30] using the command fdm_2d_matrix and denoted as \(\mathrm{fdm}(n0,'f\_1(x,y)','f\_2(x,y)','f(x,y)')\). For this experiment, we consider \(A=\mathrm{fdm}(n0,f_1(x,y),f_2(x,y),f(x,y))\) and \(B=\mathrm{fdm}(p0,g_1(x,y),g_2(x,y),g(x,y))\) with \(f_1(x,y)=-e^{xy}\), \(f_2(x,y)=- \sin (xy)\), \(f(x,y)=y^2\), \(g_1(x,y)=-100e^x\), \(g_2(x,y)=-12xy\) and \(g(x,y)=\sqrt{x^2+y^2}\). we used \(s=2\). The time interval considered was [0, 2] and the initial condition \(X_0=X(t_0)\) was \(X_0=Z_0\widetilde{Z}_0^T\), where \(Z_0=0_{n\times 2}\) and \(\widetilde{Z}_0=0_{p\times 2}\). The tolerance was set to \(10^{-7}\) for the stop test on the residual. For the EGA–BDF and Rosenbrock methods, we used a constant timestep \(h=0.1\).

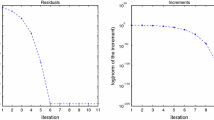

In Fig. 1, we chose a size of \(2500\times 2500\), \(2500\times 2500\) for the matrices A and B, respectively, we plotted the Frobenius norms of the residuals \(\Vert R_m(T_f)\Vert _F\) at final time \(T_f\) versus the number of extended global Arnoldi iterations for the EGA-exp, EGA–BDF and EGA–ROS methods.

In Fig. 2, the matrices A and B are obtained from the discretisation of the operator \(L_A(u)\) and \(L_B(u)\) with dimensions \(n = 10{,}000\) and \(p = 4900\), respectively. we plotted the Frobenius norms of the residuals \(\Vert R_m(T_f)\Vert _F\) at final time \(T_f\) versus the number of extended global Arnoldi iterations for the EGA-exp, EGA–BDF and EGA–ROS methods.

6.2 Example 2

For the second set of experiments, we use the matrices add32, pde2961, and \({ thermal}\) from the University of Florida Sparse Matrix Collection [15] and from the Harwell Boeing Collection (http://math.nist.gov/MatrixMarket). The tolerance was set to \(10^{-7}\) for the stop test on the residual. For the EGA–BDF and EGA–ROS methods, we used a constant timestep \(h=0.01\).

In Fig. 3, the matrices \(A={ thermal}\) and \(B=add32\) with dimensions \(n=3456\) and \(p=4960\), respectively, and \(s=3\). we plotted the Frobenius norms of the residuals \(\Vert R_m(T_f)\Vert _F\) at final time \(T_f\) versus the number of extended global Arnoldi iterations for the EGA-exp, EGA–BDF and EGA–ROS methods.

In Fig. 4, we used the matrices \(A=pde2961\) and \(B=\mathrm{fdm}(90,100e^{x},\sqrt{2x^2+y^2},y^2-x^2)\) with dimensions \(n=2961\) and \(p=8100\), respectively, and \(s=4\). we plotted the Frobenius norms of the residuals \(\Vert R_m(T_f)\Vert _F\) at final time \(T_f\) versus the number of extended global Arnoldi iterations for the EGA-exp, EGA–BDF and EGA–ROS methods.

6.3 Example 3

In this last example, we compare the performances of the extended global Arnoldi method associated to the different techniques for solving the reduced-order problem and the EBA-exp method given in [19].

In Table 1, we list the Frobenius residual norms at final time \(T_f=2\) and the corresponding CPU time for each method. For this experiment, the algorithms are stopped when the residual norms are smaller than \(10^{-9}\).

The numerical results are promising, showing the effectiveness of the global extended exponential method EGA-exp compared with extended block exponential approach EBA-exp given in [19] in terms of precision and computation time.

7 Conclusion

We presented in this paper some iterative methods for computing numerical solutions for large scale differential Sylvester matrix equations with low rank right-hand sides. The first approach arises naturally from the exponential expression of the exact solution and the use of approximation approach of the exponential of a matrix times by extended global Krylov subspaces. The second approach is based on low-rank approximate solutions and extended global algorithm. The approximate solutions are given as products of two low rank matrices and allow for saving memory for large problems. The numerical experiments show that the proposed extended global Krylov based methods are effective for large and sparse problems.

References

Abou-Kandil, H., Frelling, G., Ionescu, V., Jank, G.: Matrix Riccati equations in control and systems theory. In: Systems and Control Foundations and Applications. Birkhäuser, Basel (2003)

Agoujil, S., Bentbib, A.H., Jbilou, K., Sadek, El.M.: A minimal residual norm method for large-scale Sylvester matrix equations. Electron. Trans. Numer. Anal. 43, 45–59 (2014)

Bartels, R.H., Stewart, G.W.: Solution of the matrix equation \(A X + X B = C\), algorithm 432. Commun. ACM 15, 820–826 (1972)

Behr, M., Benner, P., Heiland, J.: Solution formulas for differential Sylvester and Lyapunov equations. arXiv:1811.08327v1

Benner, P., Mena, H.: Rosenbrock methods for solving Riccati differential equations. IEEE Trans. Autom. Control 58(11), 2950–2957 (2013)

Benner, P., Li, R.C., Truhar, N.: On the ADI method for Sylvester equations. J. Comput. Appl. Math. 233, 1035–1045 (2009)

Benner, P., Mena, H.: Numerical solution of the infinite-dimensional \(LQR\)-problem and the associated Riccati differential equations. J. Numer. Math. 26(1), 1–20 (2018)

Bentbib, A.H., Jbilou, K., Sadek, El.M.: On some Krylov subspace based methods for large-scale nonsymmetric algebraic Riccati problems. Comput. Math. Appl. 70, 2555–2565 (2015)

Bentbib, A.H., Jbilou, K., Sadek, El.M.: On some extended block Krylov based methods for large scale nonsymmetric Stein matrix equations. Mathematics 5, 21 (2017)

Bouhamidi, A., Jbilou, K.: A note on the numerical approximate solutions for generalized Sylvester matrix equations with applications. Appl. Math. Comput. 206(2), 687–694 (2008)

Bouyouli, R., Jbilou, K., Sadaka, R., Sadok, H.: Convergence properties of some block Krylov subspace methods for multiple linear systems. J. Comput. Appl. Math. 196, 498–511 (2006)

Butcher, J.C.: Numerical Methods for Ordinary Differential Equations. Wiley, Berlin (2008)

Corless, M.J., Frazho, A.E.: Linear Systems and Control—An Operator Perspective, Pure and Applied Mathematics. Marcel Dekker, New York (2003)

Datta, B.N.: Numerical Methods for Linear Control Systems Design and Analysis. Elsevier, New York (2003)

Davis, T.: The University of Florida sparse matrix collection, NA digest, vol. 97(23) (7 June 1997). http://www.cise.ufl.edu/research/sparse/matrices. Accessed 10 June 2016

Druskin, V., Knizhnerman, L.: Extended Krylov subspaces approximation of the matrix square root and related functions. SIAM J. Matrix Anal. Appl. 19(3), 755–771 (1998)

Golub, G.H., Nash, S., Van Loan, C.: A Hessenberg–Schur method for the problem \(AX+XB=C\). IEEC Trans. Autom. Control AC–24(1979), 909–913 (1979)

Hached, M., Jbilou, K.: Numerical solutions to large-scale differential Lyapunov matrix equations. Numer. Algorithms 79, 741–757 (2017)

Hached, M., Jbilou, K.: Computational Krylov-based methods for large-scale differential Sylvester matrix problems. Numer. Linear Algebra Appl. 255(5), e2187 (2018). https://doi.org/10.1002/nla.2187

Heyouni, M.: Extended Arnoldi methods for large low-rank Sylvester matrix equations. Appl. Numer. Math. 60, 1171–1182 (2010)

Higham, N.J.: The scaling and squaring method for the matrix exponential revisited. SIAM J. Matrix Anal. Appl. 26(4), 1179–1193 (2005)

Hochbruck, M., Lubich, C.: On Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 34, 1911–1925 (1997)

Jagels, C., Reichel, L.: Recursion relations for the extended Krylov subspace method. Linear Algebra Appl. 434(7), 1716–1732 (2011)

Jbilou, K.: Low-rank approximate solution to large Sylvester matrix equations. App. Math. Comput. 177, 365–376 (2006)

Jbilou, K.: ADI preconditioned Krylov methods for large Lyapunov matrix equations. Linear Algebra Appl. 432, 2473–2485 (2010)

Koskela, A., Mena, H.: Analysis of Krylov subspace approximation to large scale differential Riccati equations. arXiv:1705.07507v4

Mena, H., Ostermann, A., Pfurtscheller, L., Piazzola, C.: Numerical low-rank approximation of matrix differential equations. J. Comput. Appl. Math. 340, 602–614 (2018)

Mena, H., Lang, N., Saak, J.: On the benefits of the \(LDL^T\) factorization for large-scale differential matrix equation solvers. Linear Algebra Appl. 480, 44–71 (2015)

Moler, C.B., Van Loan, C.F.: Nineteen dubious ways to compute the exponential of a matrix. SIAM Rev. 20, 801836 (1978)

Penzl, T.: Lyapack a matlab toolbox for large Lyapunov and Riccati equations, model reduction problems, and linear-quadratic optimal control problems. http://www.tu-chemintz.de/sfb393/lyapack

Rosenbrock, H.H.: Some general implicit processes for the numerical solution of differential equations. J. Comput. 5, 329–330 (1963)

Saad, Y.: Numerical solution of large Lyapunov equations. In: Kaashoek, M.A., van Schuppen, J.H., Ran, A.C. (eds.) Signal Processing, Scattering, Operator Theory and Numerical Methods. Proceedings of the International Symposium MTNS-89, vol. 3, pp. 503–511. Birkhäuser, Boston (1990)

Saad, Y.: Analysis of some Krylov subspace approximations to the matrix exponential operator. SIAM J. Numer. Anal. 29, 209–228 (1992)

Simoncini, V.: A new iterative method for solving large-scale Lyapunov matrix equations. SIAM J. Sci. Comput. 29(3), 1268–1288 (2007)

Stillfjord, T.: Low-rank second-order splitting of large-scale differential Riccati equations. IEEE Trans. Autom. Control 60(10), 2791–2796 (2015)

Van Der Vorst, H.: Iterative Krylov Methods for Large Linear Systems. Cambridge University Press, Cambridge (2003)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Sadek, E.M., Bentbib, A.H., Sadek, L. et al. Global extended Krylov subspace methods for large-scale differential Sylvester matrix equations. J. Appl. Math. Comput. 62, 157–177 (2020). https://doi.org/10.1007/s12190-019-01278-7

Received:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s12190-019-01278-7

Keywords

- Extended global Krylov subspaces

- Matrix exponential

- Low-rank approximation

- Differential Sylvester equations