Abstract

The major issues in optimization with genetic algorithms (GAs) are getting stuck at a local optimum and low computational efficiency. In this research, we propose a new real-coded crossover operator based on the Exponentiated Pareto distribution (EPX), which aims to preserve the balance between the two extremes, exploration and exploitation. We used EPX with three of the most reputed mutation operators: the Makinen, Periaux and Toivanen mutation (MPTM), non-uniform mutation (NUM) and power mutation (PM). The experimental results on eighteen well-known benchmark models show that the proposed EPX operator performs better than the competing crossover operators. The comparison is evaluated through the mean, the standard deviation and the performance index. The significance of EPX against the competing operators is examined with a two-tailed t-test. Hence, the new crossover scheme appears to be significant as well as comparable for establishing the crossing among parents for better offspring.

1 Introduction

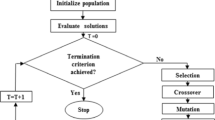

For finding global optima, several other techniques have been discussed in the literature, e.g. simulated annealing (SA), differential evolution (DE), particle swarm optimization (PSO), ant-colony optimization (ACO) and genetic algorithms (GAs) [6]. Detailed descriptions of these algorithms have also been given in further studies [7,8,9]. Among these algorithms, GA has been found to be one of the most powerful for solving optimization problems [10]. Professor Holland first proposed genetic algorithms (GAs) in the early 1970s, see for example [1,2,3,4,5]. A GA is a population-based probabilistic approach that searches for the global optimum of an optimization problem.

There are several applications of GA, such as automatic control, combinatorial optimization, production scheduling problems, optimization problems, planning and design, bioengineering, system engineering, artificial intelligence and 6-6 parallel manipulators [11,12,13,14,15, 55]. Also, many real-life problems have been formulated as mathematical models to optimize their local objective functions, which further require their global optimum solution. This optimum value usually depends upon the decision variables that define the objective function. GA does not guarantee the exact optimum solution, but it gives the best solution among the local optima. Real-life problems are usually constrained optimization problems, but the current research deals with unconstrained optimization problems, which may be stated as:

Minimize \(g(y)\), with \(g : R^{n}\rightarrow R\), where \(y \in G\). In the large search space, \(G\) denotes an n-dimensional rectangular hypercube in \(R^{n}\) identified by \(c_{i}\le y_{i} \le d_{i}, i=1,2,3,...,n\). For evolutionary algorithms, the first step is to build a bridge between the real-world problem and the problem-solving space of the evolutionary technique. This step defines how the possible solutions are represented and stored in a computer. In order to represent the candidates in the search space, an encoding scheme is adopted in which each chromosome is represented by a vector. Here the length of the vector is defined by the number of decision variables that make up the dimensions of the search space. Most often these variables are represented in binary code, as strings of 0’s and 1’s.

The problem with binary-coded GA is that it maps only discrete values in the search set; this works well when an optimization problem has a moderate number of decision variables. But in such cases, the accuracy of the solution is compromised. As the accuracy of the solution is directly linked to the encoding length, higher accuracy results in excessive use of memory and computing, which reduces the computation speed [16].

The idea of real encoding emerged in the early 1990s, where a chromosome is interpreted as a vector of real numbers in a real-coded GA [17,18,19]. The real-coded GA also uses three basic genetic operators, i.e. selection, crossover and mutation. Although it overcomes the problem of binary-coded GA by working directly with continuous variables, it may also face the problem of premature convergence. This may happen due to the GA’s inability to locally exploit the information about the solutions in the population.
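A minimal sketch of the real-coded representation described above, assuming Python and a hypothetical helper `random_population`: each chromosome is a plain vector of floats, one gene per decision variable, drawn uniformly inside the hypercube \(c_{i}\le y_{i} \le d_{i}\). The sphere function is used only as an illustrative objective; it is an assumption of this sketch and not necessarily one of the benchmark problems.

```python
import random

def random_population(lower, upper, size, rng=random):
    """Draw `size` real-coded chromosomes uniformly inside the box [lower, upper]."""
    return [
        [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        for _ in range(size)
    ]

def sphere(y):
    """Illustrative objective g(y) = sum of squares (assumed for this sketch)."""
    return sum(v * v for v in y)

# Hypothetical 3-variable problem with bounds -5 <= y_i <= 5.
lower, upper = [-5.0] * 3, [5.0] * 3
population = random_population(lower, upper, size=30)
best = min(population, key=sphere)  # candidate with the smallest objective value
```

No bit-string decoding step is needed here: the genes are the decision variables themselves, which is the practical difference from binary coding.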

GA has a major drawback: it gets stuck at a local optimum because of premature convergence. This is strongly linked with population diversity. Higher selection pressure means less population diversity, which in turn leads the GA to converge at a local optimum. Maintaining the balance between population diversity and selection pressure remains a major goal, and it has been addressed by various studies [20, 21, 47, 48, 57]. All three operators affect the GA, but crossover has the major impact on it. It uses the information about the current population to direct the search into other regions of the search space. Hence, the exploration ability of GA depends on the crossover operator. Thus, it is essential to choose a suitable real-coded crossover operator to get more accurate results.

To overcome the issues with GAs discussed above, our study proposes an efficient crossover operator for solving complex optimization problems. The current study is organized in seven sections. Section 1 introduces GA as a tool to solve complex optimization problems. Section 2 presents the existing real-coded crossover operators. Section 3 describes the operators used in this study, and Sect. 4 presents the proposed real-coded crossover operator. Section 5 describes the experimental setup. Section 6 discusses the results of the current study, while Sect. 7 concludes with its findings.

2 Existing crossover operators

The performance of GA is highly affected by its operators, i.e. selection, crossover and mutation. Crossover is an important operator for maintaining a balance between the two extremes, i.e. exploration and exploitation. For real-coded GA, many operators have been introduced in the literature. For example, Michalewicz [22] proposed a simple crossover, analogous to genetics, in which randomly selected genes from a parent pair are exchanged to produce the offspring. Radcliffe [23] proposed a flat crossover, which selects the genes from two parents by using the uniform distribution to produce an offspring. The search capabilities of this operator were further enhanced by the extended line and extended intermediate crossover operators, see for example Mühlenbein and Schlierkamp-Voosen [24]. Both of them allow exploration within a pre-decided interval beyond the parents. The ideas of Radcliffe and of Mühlenbein and Schlierkamp-Voosen were further generalized by Eshelman and Schaffer [25]. They introduced the blend crossover operator with a parameter ‘\(\alpha\)’, which directs the exploration in the interval. The interval may lie between the parents’ genes or extend on either side of the parents. It also becomes the extended intermediate crossover for \(\alpha = 0.25\). In 1991, Wright [18] introduced the heuristic crossover (HX) based on the fitness values of the parents. Only one offspring is produced by mating two parents, and it is biased in favor of the relatively better parent.

Several approaches to generating offspring through arithmetical crossovers were suggested by Michalewicz [26]. For example, one of them produces offspring within the interval spanned by the genes of both parents. Another generates one offspring using the uniform distribution between the genes and uses the mean of the parents to generate the second one. The idea of fuzzy recombination operators was combined with heuristic crossover to give heuristic fuzzy connective based crossovers, see for example [27, 28]. These crossovers successfully maintain the population’s diversity with enhanced convergence speed. A simplex multi-parent crossover proposed by Tsutsui [29] produces the offspring from the simplex formed by the parent solutions. An improved version of the Genetic Diversity Evolutionary Algorithm (GeDEA), called GeDEA-II, features a novel crossover operator, the Simplex-Crossover (SPX), and a novel mutation operator, the Shrink-Mutation [56]. The simulated binary crossover (SBX) aims to simulate binary crossover, making it useful for continuous search spaces [30]. A Gaussian distribution based crossover for real-coded GA was proposed by Tutkun [31]. Laplace crossover (LX), suggested by Deep and Thakur [32], produces offspring based on the Laplace distribution. Logistic crossover (LogX) was proposed to produce more near-optimal results based on the Logistic distribution [57].

Some other crossover operators with multiple descendants have also been proposed. A linear crossover operator produces three offspring from two parents [18]. The uni-modal normally distributed crossover operator (UNDX) was introduced by Ono and Kobayashi [33]; in it, three parents participate to produce two or more offspring. Later on, its performance was enhanced by adding the uniform crossover (UX) [34]. Another multi-parent crossover operator, called the parent centric crossover (PCX), was proposed by Deb et al. [35]. It was further modified by Sinha et al. [36]. In the average bound crossover, four offspring are produced and the parents are then replaced by the two best offspring [37]. The hybrid crossover operator produces a number of offspring using different crossover operators. Crossover operators are classified as mean centric if they generate offspring near the centroid of the parents, or as parent centric if they generate offspring near the parents. A detailed analysis was done by Herrera et al. [38]. The simplex crossover, the blend crossover and the unimodal normal distribution crossover are mean centric operators, whereas LX, SBX and fuzzy recombination are parent centric approaches.

The differential evolution crossover (DEX) has been proposed as a multi-parent crossover operator to avoid premature convergence. As part of an improved class of real-coded GAs (RCGAs), it uses a successful-parent strategy, which provides an alternative to parent selection during the DEX process [43]. A new hybrid strategy combined SBX and the simplex crossover to optimize a problem effectively [44]. Another recent variant of RCGA is the improved real-coded genetic algorithm (IRCGA), which uses SBX to improve the convergence speed and solution quality for dynamic economic dispatch [45]. Another approach used a multi-parent crossover operator with a diversity operator instead of a mutation operator to solve constrained optimization problems as well as engineering problems [46]. A new adaptive genetic algorithm has been proposed to maintain the balance between exploration and exploitation; it uses the arithmetic crossover operator in a new adaptive environment [47]. For the optimization of multi-modal test problems with a real-coded genetic algorithm, a novel parent centric crossover operator based on the log-logistic probability distribution has been proposed. The main aim of this Fisk crossover (FX) is a trade-off between selection pressure and population diversity [48]. An improved RCGA uses a new heuristic normal distribution crossover (HNDX), which aims to steer the crossover toward the optimal crossover direction [49]. Direction-based crossover operators have also been proposed, which keep the crossover search direction consistent with the optimal crossover direction [50,51,52,53]. Another RCGA performs conditional breeding based on the difference degree between individuals instead of using crossover and mutation probabilities. It aims to improve the ability of GA to converge to the near-optimal solution [54].

3 Real-coded crossover operators: used in this study

The operators that have been used in the current study are:

3.1 Laplace crossover (LX)

Based on the Laplace distribution, Deep and Thakur [32] proposed a self-adaptive parent centric crossover operator. The Laplace distribution function is as follows.

Following the same steps as mentioned earlier, a parameter ‘\(\beta _{i}\)’ is created using the following formula:

Offspring are generated using the following equations:

The authors suggested assigning a value of zero to the location parameter of this distribution.

3.2 Simulated binary crossover (SBX)

Simulated binary crossover is among the best-known crossover operators; it was proposed by Deb and Agrawal [30]. The cumulative distribution function (CDF) is as follows:

The parameter ‘\(\beta _{i}\)’ is generated using the following equations:

where \(m_{c} \in (0,\infty )\) is the distribution index for SBX. Two offspring \(\theta =(\theta _{1},\theta _{2},...,\theta _{n})\) and \(\eta =(\eta _{1},\eta _{2},...,\eta _{n})\) are generated from a pair of parents \(y=(y_{1},y_{2},...,y_{n})\) and \(z=(z_{1},z_{2},...,z_{n})\) as follows:

3.3 Heuristic crossover (HX)

Heuristic crossover, one of the most common and widely used crossover operators, was proposed by Wright [18]. It aims to solve constrained and unconstrained optimization problems. It works differently from other crossover operators, as it produces a single offspring from a parent pair. The steps to generate the offspring \(\xi = (\xi _{1}, \xi _{2},...,\xi _{n})\) from parents y and z are as follows:

1. Generate a random number \(u_{i}\) between 0 and 1.

2. Find an offspring using the following formula:

$$\begin{aligned} \xi _{i}= (z_{i}-y_{i})u_{i}+z_{i} \end{aligned}$$(7)

where the fitness of parent z is not worse than that of parent y. The produced offspring is therefore biased in the direction of the relatively fitter parent.

If the produced offspring satisfies \(\xi _{i} \le y_{i}^l\) or \(\xi _{i} \ge y_{i}^u\), a new offspring is generated using step 2. If HX fails to produce an offspring within the constraints even after n attempts, the offspring is assigned a random value as:

where \(y_{i}^l\) and \(y_{i}^{u}\) are the lower and upper limits of the \(i^{th}\) component of parent y.
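The HX steps can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the retry is applied per gene, `max_attempts` defaults to 4 as a hypothetical reading of the HX setting ‘k’ reported in Sect. 5.3, and the fallback random value is assumed to be uniform within the bounds.

```python
import random

def heuristic_crossover(y, z, lower, upper, max_attempts=4, rng=random):
    """Single-offspring HX; parent z is assumed to be at least as fit as parent y."""
    child = []
    for yi, zi, lo, hi in zip(y, z, lower, upper):
        for _ in range(max_attempts):
            u = rng.random()
            xi = (zi - yi) * u + zi          # Eq. (7): step beyond the fitter parent
            if lo < xi < hi:                 # accept only strictly inside the bounds
                break
        else:
            # Assumed fallback: uniform random value inside the bounds.
            xi = lo + rng.random() * (hi - lo)
        child.append(xi)
    return child

# Hypothetical usage: z is the fitter parent, so the child moves past z, away from y.
child = heuristic_crossover([1.0, 4.0], [2.0, 3.0], [0.0, 0.0], [5.0, 5.0])
```

The caller is responsible for ordering the parents by fitness before the call, since the formula itself does not evaluate the objective function.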

3.4 Logistic crossover (LogX)

The Logistic crossover is a recently proposed crossover operator based on the Logistic distribution [57]. The CDF of the Logistic distribution is given as:

$$\begin{aligned} F(x)= \frac{1}{1+e^{-(x-\mu )/s}} \end{aligned}$$(9)

where \(\mu\) and \(s>0\) are the location and scale parameters respectively. From a pair of parents \(x=(x_{1},x_{2},...,x_{n})\) and \(y=(y_{1},y_{2},...,y_{n})\), two offspring \(\xi =(\xi _{1},\xi _{2},...,\xi _{n})\) and \(\eta =(\eta _{1},\eta _{2},...,\eta _{n})\) are generated using the following steps:

1. Generate a random number u between 0 and 1.

2. The parameter \(\beta _{i}\) is created by inverting the CDF of the Logistic distribution:

$$\begin{aligned} \beta _{i}= \mu -s\log \left( \frac{1-u}{u}\right) \end{aligned}$$(10)

3. For i=1,2,...,n, the offspring are given by the equations:

$$\begin{aligned} \eta _{i} = 0.5[(x_{i}+y_{i})+\beta _{i}|x_{i}-y_{i}|] \end{aligned}$$(11)

$$\begin{aligned} \xi _{i}= 0.5[(x_{i}+y_{i})-\beta _{i}|x_{i}-y_{i}|] \end{aligned}$$(12)
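A short sketch of the LogX steps, given as an illustration only. It assumes that a fresh random number is drawn for every gene (the text leaves open whether one draw is shared across genes), and the default values of `mu` and `s` are hypothetical placeholders rather than the settings used in the experiments.

```python
import math
import random

def logistic_crossover(x, y, mu=0.0, s=1.0, rng=random):
    """Generate two offspring (xi, eta) from parents x and y via Eqs. (10)-(12)."""
    xi, eta = [], []
    for a, b in zip(x, y):
        u = rng.random()
        while u == 0.0:                      # keep u inside (0, 1) so the log is defined
            u = rng.random()
        beta = mu - s * math.log((1.0 - u) / u)   # Eq. (10): inverse Logistic CDF
        spread = beta * abs(a - b)
        eta.append(0.5 * ((a + b) + spread))      # Eq. (11)
        xi.append(0.5 * ((a + b) - spread))       # Eq. (12)
    return xi, eta

# Hypothetical usage with two 2-variable parents.
xi, eta = logistic_crossover([1.0, 2.0], [3.0, 5.0])
```

By construction the two offspring are symmetric about the parents' midpoint, so each gene pair satisfies \(\xi _{i}+\eta _{i}=x_{i}+y_{i}\).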

3.5 Makinen, Periaux and Toivanen mutation (MPTM)

This mutation operator was suggested by Makinen et al. [39]. It was proposed to solve multidisciplinary shape optimization problems in aerodynamics and electromagnetics. It is also used to solve constrained optimization problems [40]. The mutated individual \(\zeta =(\zeta _{1},\zeta _{2},...,\zeta _{n})\) is created from an individual \(x=(x_{1},x_{2},...,x_{n})\) as follows:

1. A uniform random number, say \(r_{i}\), is generated between 0 and 1.

2. Find

$$\begin{aligned} k_{i}= \frac{x_{i}-x_{i}^{l}}{x_{i}^{u}-x_{i}}, \end{aligned}$$(13)

where \(x_{i}^{l}\) and \(x_{i}^{u}\) are the lower and upper bounds of the \(i^{th}\) decision variable respectively.

3. A parameter \(\hat{k}_{i}\) is created from \(k_{i}\) using the following formula:

$$\begin{aligned} \hat{k}_{i}= {\left\{ \begin{array}{ll} k_{i}-k_{i}\left( \frac{k_{i}-r_{i}}{k_{i}}\right) ^{b}, & if~~r_{i}<k_{i}\\ k_{i}, & if~~r_{i}=k_{i}\\ k_{i}+(1-k_{i})\left( \frac{r_{i}-k_{i}}{1-k_{i}}\right) ^{b}, & if~~r_{i}>k_{i} \end{array}\right. } \end{aligned}$$(14)

where ‘b’ is the index of mutation.

4. The mutated solution is created as follows:

$$\begin{aligned} \zeta _{i}= (1-\hat{k}_{i})x_{i}^{l}+\hat{k}_{i}x_{i}^{u} \end{aligned}$$(15)
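The MPTM steps can be sketched as below. One deliberate assumption differs from Eq. (13) as printed: the sketch normalizes by the full range \(x_{i}^{u}-x_{i}^{l}\), so that the ratio lies in [0, 1] and Eq. (15) is guaranteed to return a point inside the bounds. The default mutation index `b=4.0` is a hypothetical placeholder.

```python
import random

def mptm_mutation(x, lower, upper, b=4.0, rng=random):
    """Mutate each gene following the structure of Eqs. (13)-(15)."""
    mutated = []
    for xi, lo, hi in zip(x, lower, upper):
        r = rng.random()
        # Assumed normalization by the full range (differs from Eq. (13) as printed).
        k = (xi - lo) / (hi - lo)
        if r < k:
            k_hat = k - k * ((k - r) / k) ** b                    # Eq. (14), first case
        elif r > k:
            k_hat = k + (1.0 - k) * ((r - k) / (1.0 - k)) ** b    # Eq. (14), third case
        else:
            k_hat = k                                             # Eq. (14), second case
        mutated.append((1.0 - k_hat) * lo + k_hat * hi)           # Eq. (15)
    return mutated

# Hypothetical usage on a 3-variable individual in [0, 10]^3.
mutant = mptm_mutation([2.0, 5.0, 9.0], [0.0] * 3, [10.0] * 3)
```

Because `k_hat` stays in [0, 1] under this normalization, no repair step is needed after mutation.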

3.6 Non-uniform mutation (NUM)

Non-uniform mutation is one of the most common and widely used mutation operators [41, 42]. In this type of mutation, the strength of mutation decreases as the number of generations increases. In other words, the NUM operator searches the space uniformly in the initial generations, but in the later generations it searches the space locally. The steps to create the mutated solution are:

1. Create uniformly distributed random numbers \(u_{i}\) and \(r_{i}\) between 0 and 1.

2. The mutated solution will be:

$$\begin{aligned} \zeta _{i}= {\left\{ \begin{array}{ll} \epsilon _{i}+(x_{i}^u-\epsilon _{i})(1-u_{i}^{(1-(t/T))})^{b}, & if~~r_{i}\le 0.5\\ \epsilon _{i}-(\epsilon _{i}-x_{i}^l)(1-u_{i}^{(1-(t/T))})^{b}, & otherwise \end{array}\right. } \end{aligned}$$(16)

where \(\epsilon _{i}\) is the \(i^{th}\) component of the individual being mutated and ‘b’ is the parameter of the mutation operator, which determines the strength of mutation. ‘T’ stands for the maximum number of generations, whereas ‘t’ represents the current generation number.
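A minimal sketch of Eq. (16), assuming that \(u_{i}\) and \(r_{i}\) are drawn independently for every gene; the default `b=4.0` is a hypothetical placeholder.

```python
import random

def non_uniform_mutation(x, lower, upper, t, T, b=4.0, rng=random):
    """Apply Eq. (16) to every gene; t is the current generation, T the maximum."""
    mutated = []
    for xi, lo, hi in zip(x, lower, upper):
        u = rng.random()
        r = rng.random()
        step = (1.0 - u ** (1.0 - t / T)) ** b   # shrinks toward 0 as t approaches T
        if r <= 0.5:
            mutated.append(xi + (hi - xi) * step)  # move toward the upper bound
        else:
            mutated.append(xi - (xi - lo) * step)  # move toward the lower bound
    return mutated

# Hypothetical usage: early generation (large steps possible) vs. final generation.
early = non_uniform_mutation([1.0, 2.0], [0.0, 0.0], [5.0, 5.0], t=1, T=1000)
final = non_uniform_mutation([1.0, 2.0], [0.0, 0.0], [5.0, 5.0], t=1000, T=1000)
```

At `t == T` the exponent is zero, the step factor vanishes and the individual is returned unchanged, which illustrates the shift from global to local search.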

3.7 Power mutation (PM)

Power mutation, one of the most frequently used operators, is based on the Power distribution and was originally proposed by Deep and Thakur [32]. Its density function is as follows:

$$\begin{aligned} f(x)= \lambda x^{\lambda -1},~~~0\le x\le 1 \end{aligned}$$(17)

with the following distribution function:

$$\begin{aligned} F(x)= x^{\lambda },~~~0\le x\le 1 \end{aligned}$$(18)

where ‘\(\lambda\)’ is the index of the distribution. The following steps are used to find the mutated solution.

1. A random number ‘\(r_{i}\)’ is created between 0 and 1.

2. Create a random number ‘\(s_{i}\)’ which follows the Power distribution as:

$$\begin{aligned} s_{i}= (r_{i})^{1/\lambda } \end{aligned}$$(19)

3. Create the mutated solution using the following equation:

$$\begin{aligned} \zeta _{i}={\left\{ \begin{array}{ll} \epsilon _{i}-s_{i}(\epsilon _{i}-y_{i}^{l}), & if~~ \frac{y_{i}-y_{i}^{l}}{y_{i}^{u}-y_{i}}<r_{i}\\ \epsilon _{i}+s_{i}(y_{i}^{u}-\epsilon _{i}), & if~~ \frac{y_{i}-y_{i}^{l}}{y_{i}^{u}-y_{i}} \ge r_{i} \end{array}\right. } \end{aligned}$$(20)

where \(r_{i}\) is a uniform random number between 0 and 1, and \(y_{i}^{l}\) and \(y_{i}^{u}\) are the lower and upper bounds of the \(i^{th}\) decision variable respectively.
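The PM steps can be sketched as follows. The sketch assumes that \(\epsilon _{i}\) and \(y_{i}\) both denote the current gene value, that the random number used for \(s_{i}\) and the one used in the branch condition are drawn independently, and that `lam=0.25` is a hypothetical index value.

```python
import random

def power_mutation(x, lower, upper, lam=0.25, rng=random):
    """Mutate each gene following Eqs. (19)-(20)."""
    mutated = []
    for xi, lo, hi in zip(x, lower, upper):
        s = rng.random() ** (1.0 / lam)       # Eq. (19): power-distributed step size
        r = rng.random()                      # assumed independent draw for the branch
        # Relative position of the gene; treated as infinite when the gene sits on
        # the upper bound, to avoid dividing by zero.
        ratio = (xi - lo) / (hi - xi) if hi > xi else float("inf")
        if ratio < r:
            mutated.append(xi - s * (xi - lo))   # move toward the lower bound
        else:
            mutated.append(xi + s * (hi - xi))   # move toward the upper bound
    return mutated

# Hypothetical usage on a 3-variable individual in [0, 10]^3.
mutant = power_mutation([1.0, 5.0, 10.0], [0.0] * 3, [10.0] * 3)
```

Since `s` lies in [0, 1), both branches keep the mutated gene between the current value and the corresponding bound.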

4 The proposed real coded crossover operator based on Exponentiated Pareto distribution

The proposed Exponentiated Pareto crossover (EPX) operator is used in conjunction with the Makinen, Periaux and Toivanen mutation (MPTM). This combination gives rise to a new operator, EPX-MPTM, intended to improve on the performance of existing crossover operators. The density function of the Exponentiated Pareto distribution is given as follows:

$$\begin{aligned} f(t)= \mu \theta \,(1-(1+t)^{-\mu })^{\theta -1}(1+t)^{-(\mu +1)},~~~t>0 \end{aligned}$$(21)

where \(\mu\), \(\theta >0\) are the location and scale parameters respectively. The CDF of the Exponentiated Pareto distribution is given as:

$$\begin{aligned} F(t)= (1-(1+t)^{-\mu })^{\theta } \end{aligned}$$(22)

From parents \(k=(k_{1},k_{2},...,k_{n})\) and \(l=(l_{1},l_{2},...,l_{n})\), two offspring \(\xi =(\xi _{1},\xi _{2},...,\xi _{n})\) and \(\eta =(\eta _{1},\eta _{2},...,\eta _{n})\) are generated using the steps given below:

1. Generate a random number ‘z’, where \(z \in (0,1)\).

2. The parameter ‘\(\alpha _{i}\)’ is created by inverting the CDF of the Exponentiated Pareto distribution, i.e., by equating the area under the curve from \(-\infty\) to \(\alpha _{i}\) to the randomly generated number z as follows:

$$\begin{aligned} F(t)= & \,(1-(1+t)^{-\mu })^{\theta }\nonumber \\ z= & \, (1-(1+\alpha _{i})^{-\mu })^{\theta }\nonumber \\ z^{1/\theta }= & \, 1-(1+\alpha _{i})^{-\mu }\nonumber \\ 1-z^{1/\theta }= & \, (1+\alpha _{i})^{-\mu }\nonumber \\ (1-z^{1/\theta })^{(-1/\mu )}= & \, 1+\alpha _{i} \end{aligned}$$(23)

$$\begin{aligned} \alpha _{i}= & \, ((1-z^{1/\theta })^{(-1/\mu )})-1 \end{aligned}$$(24)

3. For i=1,2,...,n, the offspring are given by the equations:

$$\begin{aligned} {\left\{ \begin{array}{ll} \eta _{i} = 0.5[(k_{i}+l_{i})+\alpha _{i}|k_{i}-l_{i}|] \\ \xi _{i}= 0.5[(k_{i}+l_{i})-\alpha _{i}|k_{i}-l_{i}|] \end{array}\right. } \end{aligned}$$(25)
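The EPX steps can be sketched as follows, as an illustration rather than the authors' implementation. The sketch assumes one random draw per gene; the default values `mu=2.0` and `theta=1.0` are hypothetical placeholders, and `epx_cdf` is included only to check the inversion in Eqs. (23)-(24).

```python
import random

def epx_cdf(t, mu, theta):
    """CDF of the Exponentiated Pareto distribution, F(t) = (1 - (1 + t)^-mu)^theta."""
    return (1.0 - (1.0 + t) ** (-mu)) ** theta

def epx_alpha(z, mu, theta):
    """Eq. (24): invert the CDF at probability z."""
    return (1.0 - z ** (1.0 / theta)) ** (-1.0 / mu) - 1.0

def epx_crossover(k, l, mu=2.0, theta=1.0, rng=random):
    """Generate two offspring (xi, eta) from parents k and l via Eq. (25)."""
    xi, eta = [], []
    for ki, li in zip(k, l):
        z = rng.random()
        while z ** (1.0 / theta) >= 1.0:   # redraw if rounding would zero the base in Eq. (24)
            z = rng.random()
        alpha = epx_alpha(z, mu, theta)
        spread = alpha * abs(ki - li)
        eta.append(0.5 * ((ki + li) + spread))
        xi.append(0.5 * ((ki + li) - spread))
    return xi, eta

# Hypothetical usage with two 2-variable parents.
xi, eta = epx_crossover([1.0, 2.0], [3.0, 5.0])
```

Because \(\alpha _{i}\) is unbounded above, offspring can fall outside the variable bounds; how such offspring are handled is not specified here, so the sketch returns them unchanged.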

The shape of the Exponentiated Pareto distribution is shown in the upper left subplot of Fig. 1. The distribution becomes symmetric for larger values of the parameter ‘\(\theta\)’. The upper right subplot of Fig. 1 clearly depicts that, for a fixed value of ‘\(\mu\)’, a smaller value of the parameter ‘\(\theta\)’ generates offspring near the parents, while a larger value generates offspring far from the parents. A genetic algorithm is a population-based algorithm, and the main purpose of any operator (i.e. selection, crossover and mutation) is to keep a balance between selection pressure and population diversity. The long tail of the Exponentiated Pareto distribution corresponds to high population diversity, which reduces the selection pressure, and vice versa for the high peak. The Exponentiated Pareto distribution nevertheless maintains a better trade-off between population diversity and selection pressure (as seen in the upper left subplot of Fig. 1).

The third subplot shows the distribution of offspring. It shows an increase in the probability of generating offspring away from the parents. It also depicts that the length of the interval in which offspring are generated increases with an increase in ‘\(\theta\)’ (the spread parameter). EPX is very explorative, and its exploration range also increases with an increase in ‘\(\theta\)’ (shown by the long tail in the graph). In this way, the search power of the proposed operator, in terms of the probability of generating offspring, is high.

5 The experimental setup

In this section, experiments are carried out on eighteen benchmark functions in order to validate the proposed algorithm. The aim is to compare it with four other optimization algorithms.

5.1 Mathematical test functions

Eighteen benchmark test problems have been selected to test the performance of the proposed crossover operator. These problems have different levels of difficulty and multi-modality. The problems and their essential information are summarized in the appendix.

5.2 Algorithms for comparison

To evaluate the performance of the proposed algorithm, the optimal fitness values of EPX-MPTM are compared with those of four other evolutionary algorithms. These are LX, SBX, HX and LogX, each in conjunction with the three mutation operators MPTM, NUM and PM.

5.3 Settings for comparison

In order to maintain uniformity of the testing environment, the population size in all cases is set to ten times the number of decision variables. Thirty independent runs are made on different initial populations using each of the algorithms. All the GAs use tournament selection as the selection criterion. Elitism is applied with size one, i.e., the best individual is preserved in the current generation. The value of ‘a’ for LX is set to zero and, for HX, ‘k’ is 4. A maximum of 1000 generations is the stopping criterion for all the algorithms. The values of the parameters are fixed during a complete run. The performance of the proposed algorithm has been evaluated on each test function based on the statistics from the 30 independent runs. A run is said to be successful if the objective function value differs by no more than 5% from its optimal value in the run. In this way, the performance index has been computed.
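The population-size rule and the 5% success criterion above can be sketched as follows. The function names are hypothetical, and one detail is an assumption of this sketch: when the known optimum is zero, a relative tolerance is undefined, so an absolute tolerance of the same size is used instead.

```python
def population_size(n_variables):
    """Population size is ten times the number of decision variables."""
    return 10 * n_variables

def is_successful(best_value, optimum, tol=0.05):
    """A run succeeds if its best value is within 5% of the known optimum."""
    if optimum == 0:
        # Assumed fallback: absolute tolerance when the optimum is zero.
        return abs(best_value) <= tol
    return abs(best_value - optimum) <= tol * abs(optimum)

def success_count(best_values, optimum, tol=0.05):
    """Number of successful runs among the independent runs of one algorithm."""
    return sum(is_successful(v, optimum, tol) for v in best_values)

# Hypothetical results of three runs on a problem whose optimum is 1.0.
print(population_size(30))                      # 300
print(success_count([1.0, 1.04, 1.2], 1.0))     # 2
```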

6 Results and discussion

In this section, the proposed operators EPX-MPTM, EPX-NUM and EPX-PM are compared with other crossover operators in groups of three, i.e., (HX-MPTM, LX-MPTM, SBX-MPTM), (HX-NUM, LX-NUM, SBX-NUM) and (HX-PM, LX-PM, SBX-PM). The mutation operator is the same within each of these groups to maintain uniformity of the comparison process. Results are analyzed in three different ways. The EPX operator performed better than the other crossover operators in most of the test problems. It is also compared with the LogX operator using each of the three mutation operators. Results showed that the proposed operator is more efficient in function optimization than the other crossover operators. Table 1 summarizes the operators used in the study. The parameter settings used for the GA are shown in Table 2.

6.1 First way of analysis

6.1.1 EPX-MPTM vs LX-MPTM, SBX-MPTM, HX-MPTM

The average value of the objective function, the standard error and the number of successful runs are listed in Table 3. Results indicate that the proposed operator, EPX-MPTM, has a smaller value of the mean objective function in all problems. The proposed operator has a 100% success rate in twelve problems, while the other operators solve only ten problems. None of the GAs could solve P14 in all runs.

6.1.2 EPX-NUM vs LX-NUM, SBX-NUM, HX-NUM

The proposed operator EPX-NUM is also compared with the other crossover operators in conjunction with the NUM mutation operator. In 15 problems, EPX-NUM gives results closer to the optimum than the other crossover operators, i.e., LX-NUM, SBX-NUM and HX-NUM. In the other three problems, there is not much difference in the results (Table 4).

6.1.3 EPX-PM vs LX-PM, SBX-PM, HX-PM

Table 5 shows the mean, the standard deviation and the number of successful runs for the third group of comparison. The proposed operator outperforms the other crossover operators in all problems except P4. EPX produces results closer to the optimum than the other crossover operators. From the above comparison, it can be concluded that, on the basis of the mean, the standard deviation (S.D) and the success rate, EPX worked efficiently with the MPTM, NUM and PM operators. It gave results closer to the optimum than the other crossover operators in most of the test problems.

6.1.4 EPX-MPTM vs LogX-MPTM, EPX-NUM vs LogX-NUM and EPX-PM vs LogX-PM

The proposed EPX is compared with the LogX operator in conjunction with MPTM, NUM and PM as mutation operators (Tables 6 and 7). Results show the better performance of EPX in terms of lower average and standard deviation values. EPX solves the problems more successfully than the LogX operator, as indicated by the successful runs reported in Tables 6 and 7.

6.2 Second way of analysis

The second way of analysis is to compare the relative performance of all the GAs simultaneously. The performance index (PI) is calculated in the following manner:

where

where \(i=1,2,...,N_{p}\); \(Sr^{i}\) is the number of successful runs of \(i^{th}\) problem; \(Tr^{i}\) is the total number of runs of \(i^{th}\) problem; \(Mf^{i}\) is the mean objective function value of \(i^{th}\) problem; \(Lmf^{i}\) is the least mean objective function value of \(i^{th}\) problem; \(Sf^{i}\) is the standard deviation of \(i^{th}\) optimization problem; \(Lsf^{i}\) is the least standard deviation value among all GAs of \(i^{th}\) optimization problem and \(N_{p}\) is the total number of problems analyzed.

\(t_{1}, t_{2}\) and \(t_{3}\) are the weights assigned to successful runs, mean objective function value and the standard deviation of the objective function value respectively. Same weights are assigned to two terms at a time so that the behavior of PI can be easily analyzed. The following three cases are considered here:

Case 1: \(t_{1}=w\), \(t_{2}=t_{3}=\frac{1-w}{2}\), \(0\le w \le 1\)

Case 2: \(t_{2}=w\), \(t_{1}=t_{3}=\frac{1-w}{2}\), \(0\le w \le 1\)

Case 3: \(t_{3}=w\), \(t_{1}=t_{2}=\frac{1-w}{2}\), \(0\le w \le 1\)

From Fig. 2, it is evident that the proposed crossover operator i.e., EPX outperforms the other crossover operators.
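The three weighting cases can be sketched as below. The function `weights` follows Cases 1 to 3 directly. The function `performance_index` is an assumption: it uses the weighted-average form commonly found in the real-coded GA literature, with \(\alpha _{1}=Sr/Tr\), \(\alpha _{2}=Lmf/Mf\) and \(\alpha _{3}=Lsf/Sf\) when at least one run succeeds and zero otherwise, and may differ in detail from the formula used in this study. The dictionary keys are hypothetical names that mirror the symbols defined above.

```python
def weights(case, w):
    """Return (t1, t2, t3) for Case 1, 2 or 3 with 0 <= w <= 1."""
    rest = (1.0 - w) / 2.0
    t = [rest, rest, rest]
    t[case - 1] = w            # the selected weight gets w, the other two share 1 - w
    return tuple(t)

def _ratio(least, value):
    """least / value, defined as 1 when both coincide (covers the 0 / 0 case)."""
    return 1.0 if value == least else least / value

def performance_index(problems, t1, t2, t3):
    """Assumed PI: mean over problems of t1*a1 + t2*a2 + t3*a3."""
    total = 0.0
    for p in problems:
        a1 = p["Sr"] / p["Tr"]
        if p["Sr"] > 0:
            a2 = _ratio(p["Lmf"], p["Mf"])
            a3 = _ratio(p["Lsf"], p["Sf"])
        else:
            a2 = a3 = 0.0
        total += t1 * a1 + t2 * a2 + t3 * a3
    return total / len(problems)

# Hypothetical single-problem record for a GA that is best on every measure.
best_ga = [{"Sr": 30, "Tr": 30, "Mf": 0.5, "Lmf": 0.5, "Sf": 0.1, "Lsf": 0.1}]
print(performance_index(best_ga, *weights(1, 0.5)))   # 1.0
```

Sweeping `w` from 0 to 1 for each case and plotting the PI of every GA gives curves of the kind that such a comparison figure would show.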

6.3 Third way of analysis

Student’s t-test has also been applied to test the hypothesis that the mean of the proposed operator is smaller than that of the other three GAs. It further investigates whether the mean of the proposed algorithm is closer to the optimal value than those of the other crossover operators. Results are shown in Table 8.
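As an illustration of the test statistic, the sketch below computes a pooled two-sample t statistic from the final objective values of two algorithms. The pooled (equal-variance) form is an assumption, since the exact variant of the test is not stated, and the sample values are hypothetical.

```python
import math
import statistics

def pooled_t_statistic(a, b):
    """Return (t, degrees of freedom) for a two-sample pooled-variance t-test."""
    n1, n2 = len(a), len(b)
    v1, v2 = statistics.variance(a), statistics.variance(b)   # sample variances
    pooled = ((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(pooled * (1.0 / n1 + 1.0 / n2))
    return t, n1 + n2 - 2

# Hypothetical best objective values from three runs of two competing GAs.
t_value, dof = pooled_t_statistic([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

A negative `t_value` indicates that the first sample has the smaller mean; the corresponding p-value is then read from the t distribution with `dof` degrees of freedom.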

The GA convergence on P9 (axis parallel hyper-ellipsoid) is shown in Figs. 3, 4 and 5. LX and HX take more generations to converge, i.e. they have more population diversity, while SBX converges too quickly because of higher selection pressure. EPX works more effectively than the LogX operator, as it attains the global optimum while maintaining the balance between exploration and exploitation. The same analysis can be carried out on any test problem for all competing strategies (Table 8).

7 Conclusion

In this paper, we proposed a new real-coded GA crossover operator, the “Exponentiated Pareto” crossover. It is used in conjunction with the MPTM, NUM and PM mutation operators. The graphical representation shows that the proposed operator generates offspring near the parents for smaller values of the spread parameter. The problem dimension is fixed at 30, as used in earlier studies.

For the sake of analysis, the GAs are categorized into three groups. In the first group, the EPX operator is compared with the other crossover operators in conjunction with the MPTM mutation operator; the second group compares the crossover operators with the NUM mutation operator; and the third group uses the PM mutation operator with the four crossover operators.

Three kinds of analysis have been performed. In the first, the optimal fitness values of the EPX operator are compared with those of the other crossover operators. The mean and standard deviation results show that the EPX operator is more efficient than the other crossover operators. It has a 100% success rate in almost all problems.

The second kind of analysis is carried out by using the performance index. Figure 2 shows that the EPX operator outperforms the other crossover operators. Finally, the significant t-test results support our hypothesis that the EPX provides more near optimal results.

References

Holland J (1975) Adaptation in natural and artificial systems: an introductory analysis with applications to biology, control, and artificial intelligence. MIT Press, Cambridge

Zheng SR, Lai JM, Liu GL, Gang T (2006) Improved real coded hybrid genetic algorithm. Comput Appl 26(8):1959–1962

Liu HH, Cui C, Chen J (2013) An improved genetic algorithm for solving travel salesman problem. Trans Beijing Inst Technol 33(4):390–393

Jingi W, Yang X, Lei C (2012) The application of GA-based PID parameter optimization for the control of superheated steam temperature. In: International conference on machine learning and cybernetics. vol 3, pp 835–839

Goldberg DE (1989) Genetic algorithms in search, optimization, and machine learning. Addison-Wesley Publishing Company, Boston

Deb K (2001) Nonlinear goal programming using multi-objective genetic algorithms. J Op Res Soc 52(3):291–302

Kirkpatrick S, Gelatt CD, Vecchi MP (1983) Optimization by simulated annealing. Sci 220(4598):671–680

Price K, Storn RM, Lampinen JA (2006) Differential evolution: a practical approach to global optimization. Springer Science and Business Media, Berlin

Eberhart RC, Shi Y, Kennedy J (2001) Swarm intelligence. Elsevier, Netherlands

Bäck T, Schwefel HP (1993) An overview of evolutionary algorithms for parameter optimization. Evol Comput 1(1):1–23

Chen CT, Wu CK, Hwang C (2008) Optimal design and control of CPU heat sink processes. IEEE Trans Compon Packag Technol 31(1):184–195

Chen CT, Chuang YC (2010) An intelligent run-to-run control strategy for chemical-mechanical polishing processes. IEEE Trans Semicond Manuf 23(1):109–120

Dyer JD, Hartfield RJ, Dozier GV, Burkhalter JE (2012) Aerospace design optimization using a steady state real-coded genetic algorithm. Appl Math Comput 218(9):4710–4730

Tsai CW, Lin CL, Huang CH (2010) Microbrushless DC motor control design based on real-coded structural genetic algorithm. IEEE/ASME Trans Mech 16(1):151–159

Valarmathi K, Devaraj D, Radhakrishnan TK (2009) Real-coded genetic algorithm for system identification and controller tuning. Appl Math Model 33(8):3392–3401

Goldberg DE (1990) Real-coded genetic algorithms, virtual alphabets and blocking. University of Illinois at Urbana Champaign, Champaign

Davis L (1991) Handbook of genetic algorithms. Van Nostrand Reinhold, New York

Wright AH (1991) Genetic algorithms for real parameter optimization. Found Genet Algorithms 1:205–218

Janikow CZ, Michalewicz Z (1991) An experimental comparison of binary and floating point representations in genetic algorithms. ICGA

Hussain A, Muhammad YS (2020) Trade-off between exploration and exploitation with genetic algorithm using a novel selection operator. Complex Intell Sys 6(1):1–14

Eiben AE, Schut MC, de Wilde AR (2006) Is self-adaptation of selection pressure and population size possible?–A case study. In: Parallel problem solving from nature-PPSN IX, pp 900–909

Michalewicz Z, Logan T, Swaminathan S (1994) Evolutionary operators for continuous convex parameter spaces. In: Proceedings of the 3rd annual conference on evolutionary programming, pp 84–97

Radcliffe NJ (1991) Equivalence class analysis of genetic algorithms. Complex Syst 5(2):183–205

Mühlenbein H, Schlierkamp-Voosen D (1993) Predictive models for the breeder genetic algorithm in continuous parameter optimization. Evol Comput 1(1):25–49

Eshelman LJ, Schaffer JD (1993) Real-coded genetic algorithms and interval-schemata. Found Genet Algorithms 2:187–202

Michalewicz Z, Janikow CZ (1991) Handling constraints in genetic algorithms. ICGA, pp 151–157

Voigt HM (1992) Fuzzy evolutionary algorithms. International Computer Science Institute

Voigt HM, Mühlenbein H, Cvetkovic D (1995) Fuzzy recombination for the breeder genetic algorithm. In: Proceedings of the sixth international conference on genetic algorithms

Tsutsui S, Yamamura M, Higuchi T (1999) Multi-parent recombination with simplex crossover in real coded genetic algorithms. In: Proceedings of the 1st annual conference on genetic and evolutionary computation, vol 1, pp 657–664

Deb K, Agrawal RB (1995) Simulated binary crossover for continuous search space. Complex Syst 9(2):115–148

Tutkun N (2009) Optimization of multimodal continuous functions using a new crossover for the real-coded genetic algorithms. Expert Syst Appl 36(4):8172–8177

Deep K, Thakur M (2007) A new crossover operator for real coded genetic algorithms. Appl Math Comput 188(1):895–911

Ono I, Kita H, Kobayashi S (2003) A real-coded genetic algorithm using the unimodal normal distribution crossover. In: Advances in evolutionary computing, pp 213–237

Ono I, Kita H, Kobayashi S (1999) A robust real-coded genetic algorithm using unimodal normal distribution crossover augmented by uniform crossover: Effects of self-adaptation of crossover probabilities. In: Proceedings of the 1st annual conference on genetic and evolutionary computation. vol 1, pp 496–503

Deb K, Anand A, Joshi D (2002) A computationally efficient evolutionary algorithm for real-parameter optimization. Evol Comput 10(4):371–395

Sinha A, Tiwari S, Deb K (2005) A population-based, steady-state procedure for real-parameter optimization. IEEE Congr Evol Comput 1:514–521

Ling SH, Leung FH (2007) An improved genetic algorithm with average-bound crossover and wavelet mutation operations. Soft Comput 11(1):7–31

Herrera F, Lozano M, Sanchez AM (2003) A taxonomy for the crossover operator for real-coded genetic algorithms: An experimental study. Int J Intell Syst 18(3):309–338

Mäkinen RA, Périaux J, Toivanen J (1999) Multidisciplinary shape optimization in aerodynamics and electromagnetics using genetic algorithms. Int J Numer Methods Fluids 30(2):149–159

Miettinen K, Mäkelä MM, Toivanen J (2003) Numerical comparison of some penalty-based constraint handling techniques in genetic algorithms. J Global Optim 27(4):427–446

Michalewicz Z (2013) Genetic algorithms + data structures = evolution programs. Springer Science and Business Media, Berlin

Michalewicz Z (1995) Genetic algorithms, numerical optimization, and constraints. In: Proceedings of the sixth international conference on genetic algorithms. vol 195, pp 151–158

Ali MZ, Awad NH, Suganthan PN, Shatnawi AM, Reynolds RG (2018) An improved class of real-coded Genetic Algorithms for numerical optimization. Neurocomput 275:155–166

Jin YF, Yin ZY, Shen SL, Zhang DM (2017) A new hybrid real-coded genetic algorithm and its application to parameters identification of soils. Inverse Probl Sci Eng 25(9):1343–1366

Pattanaik JK, Basu M, Dash DP (2018) Improved real coded genetic algorithm for dynamic economic dispatch. J Electr Syst Inf Technol 5(3):349–362

Elsayed SM, Sarker RA, Essam DL (2014) A new genetic algorithm for solving optimization problems. Eng Appl Artif Intell 27:57–69

Al-Naqi A, Erdogan AT, Arslan T (2013) Adaptive three-dimensional cellular genetic algorithm for balancing exploration and exploitation processes. Soft Comput 17(7):1145–1157

Ahmad I, Almanjahie IM (2020) A novel parent centric crossover with the log-logistic probabilistic approach using multimodal test problems for real-coded genetic algorithms. Math Probl Eng 2020. https://doi.org/10.1155/2020/2874528

Wang J, Cheng Z, Ersoy OK, Zhang P, Dai W, Dong Z (2018) Improvement analysis and application of real-coded genetic algorithm for solving constrained optimization problems. Math Probl Eng 2018. https://doi.org/10.1155/2018/5760841

Chuang YC, Chen CT, Hwang C (2015) A real-coded genetic algorithm with a direction-based crossover operator. Inf Sci 305:320–348

Das AK, Pratihar DK (2019) A directional crossover (DX) operator for real parameter optimization using genetic algorithm. Appl Intell 49(5):1841–1865

Das AK, Pratihar DK (2020) A direction-based exponential crossover operator for real-coded genetic algorithm. In: Singh B, Roy A, Maiti D (eds) Recent advances in theoretical, applied, computational and experimental mechanics. Lecture Notes in Mechanical Engineering. Springer, Singapore

Chuang YC, Chen CT, Hwang C (2016) A simple and efficient real-coded genetic algorithm for constrained optimization. Appl Soft Comput 38:87–105

Zhao Y, Cai Y, Cheng D (2017) A novel local exploitation scheme for conditionally breeding real-coded genetic algorithm. Multimed Tools Appl 76(17):17955–17969

Rolland L, Chandra R (2016) The forward kinematics of the 6–6 parallel manipulator using an evolutionary algorithm based on generalized generation gap with parent-centric crossover. Robotica 34(1):1

Da Ronco CC, Benini E (2013) A simplex crossover based evolutionary algorithm including the genetic diversity as objective. Appl Soft Comput 13(4):2104–2123

Naqvi FB, Yousaf Shad M, Khan S (2021) A new logistic distribution based crossover operator for real-coded genetic algorithm. J Stat Comput Simul 91(4):817–835

Cite this article

Naqvi, F.B., Shad, M.Y. Seeking a balance between population diversity and premature convergence for real-coded genetic algorithms with crossover operator. Evol. Intel. 15, 2651–2666 (2022). https://doi.org/10.1007/s12065-021-00636-4
