Abstract

Data collection and the availability of large data sets have increased over the last decades. In both statistical and machine learning frameworks, two methodological issues typically arise when performing regression analysis on large data sets. First, variable selection is crucial in regression modeling, as it helps to identify an appropriate model with respect to the considered set of conditioning variables. Second, especially in the context of survey data, the handling of missing values, which occur even with state-of-the-art data collection and processing methods, is important for estimation. Within this paper, we provide a Bayesian approach based on a spike-and-slab prior for the regression coefficients, which allows for simultaneous handling of variable selection and estimation in combination with handling of missing values in covariate data. The paper also discusses the implementation of the approach using Markov chain Monte Carlo techniques and provides results for simulated data sets and an empirical illustration based on data from the German National Educational Panel Study. The suggested Bayesian approach is compared to other statistical and machine learning frameworks such as Lasso, ridge regression, and Elastic net, and is shown to perform well in terms of estimation performance and variable selection accuracy. The simulation results demonstrate that ignoring missing values in data sets can lead to biased selection results. Overall, the proposed Bayesian method offers a holistic, flexible, and powerful framework for variable selection in the presence of missing covariate data.


1 Introduction

In regression modeling, a long-standing problem is to select an appropriate model in terms of the considered set of conditioning variables. The selection of appropriate variables is always tied to the selection of models, and various approaches from statistics and machine learning are discussed in the literature to solve this task. While model selection is in principle straightforward in terms of a decision theoretic approach typically pursued in the context of model averaging, see Hansen (2007), implementing such a model selection strategy over all possible model setups is often impossible given available computing capacities. Since the seminal paper of Schwarz (1978), which provides a benchmark criterion for model complexity obeying Occam’s razor and allows for model comparison on a common scale, many papers have addressed variable selection in frequentist and Bayesian model setups, see among others Raftery (1995); Tibshirani (1996); O’Hara and Sillanpää (2009); Bottolo and Richardson (2010); Ishwaran and Rao (2005). Clyde and George (2004) describe variable selection as a special case of model selection, with every subset of covariates corresponding to a distinct model, so that every selection problem is ultimately a description of uncertainty with regard to the data.Footnote 1 To avoid computational difficulties when considering all possible models, selection methods help to pick relevant variables and reduce the number of variables that enter the modeling process.Footnote 2 Further, formalized model selection strategies guard against ad-hoc multiple testing approaches that invalidate the use of p-values and against informal model assessment that potentially results in incorrect inference due to strong multicollinearity among the set of variables. This points to the choices with regard to the set of variables considered within model selection. Next to the set of actually observed variables, say X, any combination of the variables in terms of higher order moments and cross products, or functional transformations thereof, could be considered as well to handle nonlinear relationships. Even for a moderate number of variables P, this increases the number of variables to be considered. While Hansen (2007) and Frühwirth-Schnatter (2010), as is typical for the applied literature, consider complete data scenarios, model selection strategies, at least in the field of survey and administrative data, should also address potentially incomplete data, i.e., the estimation strategy requires handling of missing data.

Typical formalized approaches to variable selection discussed in the statistical and machine learning literature are shrinkage estimators, with Lasso, ridge regression, and Elastic net as the prominent variants. Next to established procedures such as stepwise selection, see Marill and Green (1963), spike-and-slab prior formulations have also been suggested, see Ročková and George (2018) and O’Hara and Sillanpää (2009) for an overview. As pointed out by Korobilis and Shimizu (2022), shrinkage estimators can be well aligned with the Bayesian estimation paradigm, where the different penalization terms correspond to assumed prior distributions, whereas the considered loss functions correspond to likelihood functions. In addition, the Bayesian estimation approach can be readily extended to handle missing values via the device of data augmentation, see Tanner and Wong (1987). Data augmentation in combination with Markov chain Monte Carlo (MCMC) methodology allows for derivation of estimators via sample averages. Further, the more complex the assumed likelihood structures are, the more compelling MCMC approaches to provide estimators may become.Footnote 3 When considering binary data or hierarchical model structures, the involved loss and likelihood functions, serving as optimization criteria in the statistical and machine learning context, become more complex, although they remain in principle straightforward to handle via MCMC techniques, see among others Aßmann and Boysen-Hogrefe (2011).

In this article, we hence illustrate how model selection for binary regression models can be performed simultaneously with the handling of missing values in the considered set of covariate data in a Bayesian framework. A comparison is provided with alternative statistical and machine learning approaches arising in the context of shrinkage estimation, such as Lasso, ridge regression, and Elastic net regression for binary dependent variables. We provide the corresponding Bayesian approach based on an MCMC implementation in which the handling of missing values is accomplished in conjunction with estimation and variable selection, and we review the close relationships to shrinkage estimators. The described approach uses classification and regression trees to approximate the full conditional distribution of missing values. The holistic Bayesian approach allows for the incorporation of prior uncertainty and offers the flexibility to consider any function of observed or augmented data within the set of conditioning variables.

We assess the quality of different variable selection approaches when missing values occur, where the considered shrinkage estimation approaches are combined with multiple imputation, via a simulation study and an empirical illustration. As indicators of quality, we use measures of prediction performance with regard to variable selection. The results suggest that for simple setups in terms of numbers of variables, missing mechanism, and dependency structures, all considered approaches perform well with regard to model selection. The more complex the setups become, the less reliably standard model selection approaches work, whereas inference in particular is more accurate for the suggested Bayesian approach based on spike-and-slab priors and simultaneous consideration of missing values. The empirical application illustrates that the considered Bayesian approach is well suited to provide weights that can be used in subsequent analyses. A main finding of the paper is hence that the quality of variable selection approaches remains high in principle even in the context of incomplete data situations. The paper also documents the computational resources required to apply estimation and model selection of this kind. Finally, the Bayesian approach to handling missing values and variable selection is a powerful and flexible framework that offers several advantages over established methods. By providing a coherent and unified framework, the Bayesian approach enables the comparison of different models on a common scale and the incorporation of prior knowledge and expert opinion. Additionally, standardizing the variables and ranking them based on the effect sizes can provide a simple and intuitive way to interpret the results. However, it is important to consider other concepts of variable importance when interpreting the results to gain a more comprehensive understanding of the relationships between the covariates and the response variable.

The paper is organized as follows. Sect. 2 reviews the relationship between shrinkage estimators and Bayesian estimation and provides the suggested Bayesian approach towards model selection in the presence of missing covariate data in terms of a spike-and-slab approach for binary regression models. The details with regard to posterior sampling and corresponding inference are provided as well. Sect. 3 summarizes the variable selection methods in statistical learning and the handling of missing values in general, and Sect. 4 adds quality assessments of variable selection. Sect. 5 presents results for simulated data sets and Sect. 6 provides the empirical illustration. Sect. 7 concludes.

2 Bayesian estimation for binary regression models with variable selection and handling of missing values

A Bayesian estimation approach can in general be motivated in terms of a decision theoretic approach using a loss function to assess the difference between the parameter of interest taking value \(\theta\) and the corresponding estimator \(\theta^{*}\). A loss function \(L(\theta,\theta^{*})\) is defined as a mapping from each pair of an estimator \(\theta^{*}\) from the set of possible estimators and a parameter value \(\theta\) within the parameter space to the real line. The optimal estimator \(\tilde{\theta}^{*}\) in terms of minimal expected posterior loss is then defined as

where \(f(\theta|D)\) denotes the posterior density of all parameters of interest \(\theta\). The resulting minimization problem is similar to optimization problems arising in the context of penalized estimation if the involved loss function is defined to imply the mode of the posterior distribution and if the target function in penalized estimation (possibly in log scale) is similar to the structure of the posterior distribution proportional to the product of likelihood and prior distribution.Footnote 4
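In the notation of this section, the estimator with minimal expected posterior loss is conventionally written as follows. This is the standard textbook form and is given here as a sketch, assuming that the integral is taken over the parameter space and that \(D\) denotes the data.

$$\tilde{\theta}^{*}=\arg\min_{\theta^{*}}\int L(\theta,\theta^{*})\,f(\theta|D)\,d\theta$$

For quadratic loss \(L(\theta,\theta^{*})=(\theta-\theta^{*})^{2}\) the minimizer is the posterior mean, and for absolute loss \(L(\theta,\theta^{*})=|\theta-\theta^{*}|\) it is the posterior median.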

This framing in terms of a decision theoretic approach points out that all model selection decisions are also an integral part of the estimation process, with various model selection approaches being discussed within the literature. Standard estimation procedures are typically conditional on one specific statistical model and the data set under consideration, where properties of the considered data need to be reflected within the statistical model. These properties refer to the scale type of variables within the data, the dimensionality of the data set, and the completeness. Whereas the scale of the variables is reflected in the considered statistical model, strategies to handle the dimension or incompleteness of the data are typically not an integral part of the estimation routine, but are considered in a sequential manner. The straightforward strategy to address all issues simultaneously, provided by complete enumeration of all possible models (including all possible subsets of variables and incomplete data constellations), is often hindered by the tremendous computational efforts involved and the intractability of the incomplete data likelihood functions. The computational intensity is one of the main reasons why, from a historical viewpoint, strategies such as stepwise variable selection, both forward and backward, have been discussed early in the context of complete data.Footnote 5 Thus, in complete data situations, the model selection process involves at most the evaluation of \(P(P+1)/2\) model specifications instead of \(2^{P}\) model specifications to find a maximum or minimum of the underlying stopping criterion. Thereby, the number of models considered within the selection is dramatically reduced, although at the cost of path dependency.Footnote 6
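The reduction in the number of evaluated specifications can be illustrated with a short calculation. The sketch below assumes a purely one-directional (forward or backward) search without re-entry of variables, so that successive steps compare \(P, P-1, \ldots, 1\) candidates; the function names are illustrative and not taken from the paper.

```python
def stepwise_model_count(p: int) -> int:
    """Upper bound on specifications visited by a one-directional stepwise search."""
    # Step 1 evaluates p candidates, step 2 evaluates p - 1, ..., the last step 1.
    return p * (p + 1) // 2


def enumeration_model_count(p: int) -> int:
    """Number of covariate subsets under complete enumeration."""
    return 2 ** p


for p in (5, 10, 20, 30):
    print(p, stepwise_model_count(p), enumeration_model_count(p))
```

Already for \(P=30\) the complete enumeration comprises more than a billion subsets, whereas the stepwise bound stays in the hundreds.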

For illustration of the mechanisms involved in complete model enumeration, consider a set of models \(\{\mathcal{M}_{m}\}_{m=1}^{M}\). Given data D, prediction or inference can be based on the asymptotic distribution or the posterior distribution of a parameter estimator for \(\theta\) being model specific, i.e., \(f(\theta|D,\mathcal{M}_{m})\) being specific for the considered models \(m=1,\ldots,M\) with \(2^{P}\leq M\) in case model selection coincides with selecting the appropriate subset of covariate variables, or M even larger than \(2^{P}\) when alternative model frameworks or missing data patterns are considered additionally.Footnote 7 A common pitfall is relying on a single model, especially when multiple models are equally likely but provide differing predictions or inferences.

Bayesian model averaging provides a formal mechanism to aggregate estimation results from different models arising when performing a complete enumeration. Aggregated prediction or inference can be obtained via

with

and \(f(\mathcal{M}_{m}|D)\) denoting the posterior and \(f(\mathcal{M}_{m})\) the prior model probability, whereas \(f(D|\mathcal{M}_{m})\) denotes the marginal model specific likelihood implied via

\(\mathcal{L}(D|\theta,\mathcal{M}_{m})\) and \(f(\theta|\mathcal{M}_{m})\) thereby denote the model specific likelihood and prior distributions, respectively. In case the model specification addresses missing values, the likelihood

When a specific model, say \(\mathcal{M}_{m^{\prime}}\), has by far the highest posterior probability, aggregated prediction and inference resemble the inference and prediction conditional on model \(\mathcal{M}_{m^{\prime}}\). In case the posterior model probabilities of different models are similar, the aggregated prediction and inference average over these models and can differ from those conditional on any single model.
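The aggregation can be sketched numerically using the standard relation \(f(\mathcal{M}_{m}|D)\propto f(D|\mathcal{M}_{m})f(\mathcal{M}_{m})\). The example below is a minimal illustration under stated assumptions: the log marginal likelihoods, prior probabilities, and model-specific predictions are hypothetical numbers, the prediction of each model is reduced to a single scalar, and the function names are not taken from the paper.

```python
import math


def posterior_model_probabilities(log_marginal_likelihoods, prior_probabilities):
    """Return f(M_m | D), proportional to f(D | M_m) * f(M_m), computed stably."""
    log_weights = [
        lml + math.log(prior)
        for lml, prior in zip(log_marginal_likelihoods, prior_probabilities)
    ]
    # Subtract the maximum before exponentiating (log-sum-exp trick).
    max_log_weight = max(log_weights)
    unnormalized = [math.exp(lw - max_log_weight) for lw in log_weights]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def averaged_prediction(model_predictions, model_probabilities):
    """Weight model-specific predictions by the posterior model probabilities."""
    return sum(p * w for p, w in zip(model_predictions, model_probabilities))


# Hypothetical values for three models, for illustration only.
log_marginals = [-120.3, -121.0, -135.8]
priors = [1 / 3, 1 / 3, 1 / 3]
predictions = [0.62, 0.48, 0.90]

weights = posterior_model_probabilities(log_marginals, priors)
print([round(w, 3) for w in weights])
print(round(averaged_prediction(predictions, weights), 3))
```

In this toy setting the third model receives a negligible weight, and the aggregated prediction lies between the predictions of the first two models.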

This scheme allows for aggregating inference and prediction from a set of considered models, whether nested or non-nested. However, operationalization and implementation of this scheme require access to the likelihood function as well as tractability of the integration involved to derive the marginal model likelihood. Further, the scheme may be criticized for depending on prior assumptions, although the set of considered models may include different prior settings as well for a given likelihood specification. As the computational efforts can easily become prohibitively large, this strategy is applied in the literature in case only relatively small sets of alternative models are considered, see Aßmann and Boysen-Hogrefe (2011), Aßmann (2012), and Frühwirth-Schnatter and Kaufmann (2008), where the computational issues are tractable.

Given the tremendous efforts possibly involved in this general strategy, alternative strategies are discussed in the literature providing model selection based on adaptive strategies. These alternative strategies may consider a restricted class of statistical models only or use alternative model criteria for selection and aggregation purposes which involve tractable computational efforts. In particular, model selection comes down to variable selection, also referred to as feature selection in the literature, when the statistical model is restricted to take the form of a regression model with the conditional expectation of the dependent variable taking the form \(X\beta\), where \(\beta\) is a \(P\times 1\) vector of parameters. In general, considering all \(2^{P}\) possible regression models requires calculating \(2^{P}\) different comparative measures, e.g., Bayes factors. This leads to model averaging, which yields a posterior distribution that takes into account the uncertainty about all M models and requires computing the posterior distribution over the parameters of interest in each model \(\mathcal{M}_{m}\) (Clyde and George 2004). Additional computation is required for the posterior distribution over all such models. For linear regression models with complete covariate data, Hansen (2007) discusses model averaging that allows for model selection as well. In a Bayesian view, ignoring a model or parameter by setting its prior probability to zero violates Cromwell’s rule (Jackman 2009). Further, for a restricted model class, the model selection issue can be tackled as well by means of shrinkage estimation, often also labeled penalized estimation. In a model averaging perspective, we are interested in all posterior model probabilities for models in which the regression parameters are unequal to zero, which leads to variable selection (George 2000).

In the following, we will consider binary regression models with missing data in covariate variables and discuss shrinkage estimators and Bayesian variable selection approaches to handle the model selection issue arising in the form of selecting the most appropriate subset of conditioning variables. The discussion will also point out how these approaches relate to the general strategy. The considered model framework can be described as follows. Let \(D=\{y,X\}\) comprise an \(N\times 1\) vector \(y=(y_{1},\ldots,y_{N})^{\prime}\) of binary dependent variables and an \(N\times P\) matrix of covariate data \(X=(X_{1}^{\prime},\ldots,X_{N}^{\prime})^{\prime}\) not including a constant. We will introduce the probit specification for the binary regression model in the following, as the involved MCMC sampling scheme is more tractable compared to a corresponding logit specification. Hence, the binary regression model with probit link is given as

where \(e=(e_{1},\ldots,e_{N})^{\prime}\) is an \(N\times 1\) vector of independent standard normally distributed error terms and \(y^{*}=(y_{1}^{*},\ldots,y_{N}^{*})^{\prime}\) is a vector of latent variables. The corresponding likelihood and augmented likelihood functions take the forms

and

where \(\Phi(\cdot)\), \(\phi(\cdot)\), and \(\mathcal{I}(\cdot)\) denote the cumulative distribution function of the standard normal distribution, the density of the standard normal distribution, and the indicator function, respectively.
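How the two functions relate can be sketched in a few lines of code. The example is a minimal illustration, assuming a linear predictor of the form \(\alpha+X_{i}\beta\) with the intercept \(\alpha\) that appears in the prior specification later in this section, and assuming the usual Albert and Chib (1993) form in which \(y_{i}^{*}>0\) corresponds to \(y_{i}=1\). Function names and data are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()


def linear_predictor(x_i, alpha, beta):
    """Assumed linear predictor alpha + x_i' beta."""
    return alpha + sum(x_ij * b_j for x_ij, b_j in zip(x_i, beta))


def probit_log_likelihood(y, X, alpha, beta):
    """Log-likelihood of the probit model based on Phi."""
    log_lik = 0.0
    for y_i, x_i in zip(y, X):
        eta = linear_predictor(x_i, alpha, beta)
        # P(y_i = 1) = Phi(eta); P(y_i = 0) = 1 - Phi(eta) = Phi(-eta).
        prob = STD_NORMAL.cdf(eta) if y_i == 1 else STD_NORMAL.cdf(-eta)
        log_lik += math.log(prob)
    return log_lik


def augmented_log_likelihood(y, y_star, X, alpha, beta):
    """Log of the augmented likelihood: normal density of y* times sign indicator."""
    total = 0.0
    for y_i, ys_i, x_i in zip(y, y_star, X):
        consistent = (ys_i > 0) if y_i == 1 else (ys_i <= 0)
        if not consistent:
            return float("-inf")  # the indicator function equals zero
        total += math.log(STD_NORMAL.pdf(ys_i - linear_predictor(x_i, alpha, beta)))
    return total


# Hypothetical toy data with N = 4 observations and P = 2 covariates.
y = [1, 0, 1, 1]
X = [[0.5, -1.0], [-0.3, 0.8], [1.2, 0.1], [0.0, 0.4]]
print(probit_log_likelihood(y, X, alpha=0.1, beta=[0.7, -0.2]))
print(augmented_log_likelihood(y, [0.9, -0.4, 1.1, 0.2], X, alpha=0.1, beta=[0.7, -0.2]))
```

Integrating the augmented likelihood over the admissible region of each \(y_{i}^{*}\) recovers the probit likelihood, which is why sampling \(y^{*}\) leaves the target posterior of the regression parameters unchanged.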

The consideration of the augmented likelihood function is based on Albert and Chib (1993), as it simplifies the implementation of an MCMC sampling scheme for estimation. To contextualize this for variable selection within binary regression models, a representation of all possible model specifications is required. In general, the model setup is implied via the likelihood and the prior distribution, with the posterior distribution being proportional to the product of the likelihood and the prior distribution. To describe differences between Bayesian estimators and shrinkage or maximum likelihood estimators in general, it may be helpful to recall that Bayesian estimators can be formulated as a decision theoretic problem aiming at minimizing Bayes risk. This risk is associated with an appropriate loss function, see Mood et al. (1974). Depending on the loss function, the posterior mean or the posterior median is the appropriate Bayesian estimator. In this sense, shrinkage estimators may be interpreted as posterior mode estimators, although as pointed out by Gneiting (2011) it might be hard to reconcile this kind of estimator with the decision theoretic duality of loss functions and estimators in case of interaction between penalization and cross-validation. As the posterior distribution is hardly ever accessible by analytical means, Bayesian estimators are typically derived as sample means, where samples from the posterior distribution are obtained using Markov chain Monte Carlo (MCMC) methods. Different MCMC techniques for Bayesian variable and model selection have been developed by varying the prior distribution or the mechanism in the MCMC sampler, for details see Yang et al. (2005).

To arrive at a general model specification encompassing all possible models, the binary regression setup with \(\beta=(\beta_{1},\ldots,\beta_{P})^{\prime}\) described above can be extended as follows. Following Lee et al. (2003), we use a \(P\times 1\) indicator vector \(\gamma\), where each single \(\gamma_{j}\) with \(j=1,\ldots,P\) is defined by

Taking \(\gamma\) as a condition into account, the model described in Eqs. (1) and (2) would become

and

with the diag() operator placing the indicated vector on the main diagonal of the corresponding square matrix. To complete the model setup, priors for \(\alpha\), \(\beta\), and \(\gamma\) need to be specified when variable selection is considered for binary regression models. The implied posterior can be described via

Thereby, all quantitative continuous covariate variables in the data set are considered to be standardized via a z-transformation.Footnote 8 In case an intercept is considered in the model specifications, the intercept is the common parameter for all possible models and represents the overall mean of the model. Following Lamnisos et al. (2009), George and McCulloch (1993), and Lee et al. (2003), we use a normal prior for \(\alpha\) with expected value \(\alpha_{0}\) and variance h, i.e.

with \(\phi(\cdot|\cdot,\cdot)\) denoting the normal distribution with indicated expectation and variance parameter. Typically, h is set as a large value corresponding to an uninformative prior setting with regard to the intercept (Lamnisos et al. 2009). Table 1 outlines the details with regard to hyperparameters of all prior distributions.

The prior setting for \(\beta\) and \(\gamma\) is based on George and McCulloch (1993) assuming an independent marginal conditional setup

The functional forms follow spike-and-slab priors first suggested by Mitchell and Beauchamp (1988) for Bayesian variable selection for normal linear regression models. The corresponding mixture distribution for the coefficients is given as

where \(f_{1}\) and \(f_{2}\) are placeholders for any appropriate continuous or discrete probability density function. Given this mixture form, \(f_{1}\) is used to steer coefficients to zero (spike), e.g., \(f_{1}\) assigns a unit point mass at \(\beta_{j}=0\), and \(f_{2}\) allows for non-zero coefficients (slab) and can be an absolutely continuous density such as a uniform or normal density. Hence, this setup directly incorporates selective shrinkage, i.e., the separating effect on the coefficients caused by the spike-and-slab prior, so that most coefficients are concentrated at zero, whereas relevant coefficients are allowed to differ from zero. A mixture of two normal distributions with different variances is widely used, implying

where typically \(\tau_{2}\gg\tau_{1}\). Note that different prior setups can be found in the literature, such as Laplace priors (Tibshirani 1996), Horseshoe priors (Carvalho et al. 2010), or setting \(f_{1}\) to unit mass at zero (George and McCulloch 1997). Hence, if \(\beta_{j}\) is found to differ substantially from zero, the corresponding variable is kept in the model (slab), and otherwise it is dropped from the model (spike). Note that the different prior setups correspond to different setups of the penalization function in the context of shrinkage estimators.
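Conditional on the indicator \(\gamma_{j}\), a two-component normal mixture of this kind is usually written as shown below. The expression is a sketch under two assumptions that are read off from the inclusion probabilities reported for the Gibbs sampler later in this section: both components are centered at zero, and \(\tau_{1}^{2}\) and \(\tau_{2}^{2}\) are the respective variances.

$$f(\beta_{j}|\gamma_{j})=(1-\gamma_{j})\,\phi(\beta_{j}|0,\tau_{1}^{2})+\gamma_{j}\,\phi(\beta_{j}|0,\tau_{2}^{2}),\quad j=1,\ldots,P$$

With \(\gamma_{j}=0\) the coefficient is drawn from the narrow spike component, and with \(\gamma_{j}=1\) from the diffuse slab component.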

George and McCulloch (1993) introduced the Stochastic Search Variable Selection (SSVS) method, which is a Bayesian approach for variable selection in linear regression models. SSVS uses a mixture of two normal distributions as a prior for the regression coefficients, where one distribution has a very small variance and the other has a large variance. This prior encourages shrinkage of the coefficients towards zero, and the stochastic search algorithm explores the model space to identify the most important variables. For the prior for \(\gamma\) that specifies the model space we follow George and McCulloch (1993, 1997) and Lee et al. (2003) and consider a Bernoulli prior framework given as

with \(w_{j}\in(0,1)\) governing the probability that the j-th column of X is considered within the regression. It is also common to set \(w_{j}=w\) for \(j=1,\dots,P\), thereby assuming homogeneity of the inclusion probabilities.

In this case, the prior distribution of \(\gamma\) is binomial and the a priori expected number of selected variables of X can be modeled in terms of w. A fixed value for w can be set if there is consolidated knowledge. If \(P\gg N\), small values of w are chosen, to bound the number of variables in the model. Hence, the prior penalizes larger models by setting w to a small percentage.Footnote 9 Otherwise, a maximum for the model size \(P_{\text{max}}\) can be set as in Dobra (2009).Footnote 10 In the following, we use the binomial prior on \(\gamma\) with homogeneous inclusion probabilities.
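The role of w as a steering parameter for the a priori model size can be checked by simulating from the prior. The sketch below assumes a common inclusion probability w, zero-centered normal spike and slab components, and treats \(\tau_{1}\) and \(\tau_{2}\) as standard deviations; all numerical values are illustrative and are not the hyperparameters of Table 1.

```python
import random


def draw_from_prior(p, w, tau1, tau2, rng):
    """Draw (gamma, beta) from a spike-and-slab prior with common inclusion probability w."""
    gamma = [1 if rng.random() < w else 0 for _ in range(p)]
    # tau1 (spike) and tau2 (slab) are treated as standard deviations in this sketch.
    beta = [rng.gauss(0.0, tau2 if g == 1 else tau1) for g in gamma]
    return gamma, beta


rng = random.Random(2024)
P, W, TAU1, TAU2 = 50, 0.1, 0.01, 3.0  # illustrative values only

# The model size is the number of ones in gamma; under the prior it is Binomial(P, w).
sizes = [sum(draw_from_prior(P, W, TAU1, TAU2, rng)[0]) for _ in range(5000)]
print("a priori expected model size P * w:", P * W)
print("simulated mean model size:", sum(sizes) / len(sizes))
```

Lowering w shifts the whole prior distribution of the model size towards smaller models, which is the penalization effect described above.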

The model setup so far describes the situation with completely observed data and is, as discussed in the literature, accessible to posterior sampling, see also Albert (1992). Data augmentation can also be used to handle missing values. To perform Bayesian inference, an MCMC sampling scheme, see e.g., Geman and Geman (1984); Gelfand and Smith (1990); Aßmann and Preising (2020), is implemented to generate a sample from the posterior distributions of interest. Handling of latent structures and missing values is conceptually straightforward in the Bayesian context via the device of data augmentation, since the full conditional distributions of missing values can be added as outlined in Aßmann et al. (2022). The prior is thereby also augmented, where we opt for a prior for the missing values proportional to the distribution of observed values, see also below. The parameter vector can be augmented with the missing values, which can then be used as conditioning variables for all other full conditional distributions of interest. In the context of binary probit models, data augmentation involves augmenting the dependent variable y by drawing a new value \(y^{*}\) from a conditional distribution depending on the other current model parameters, so that the observed binary data are augmented with the latent continuous variable. Data augmentation can be operationalized by including a set of appropriately specified full conditional distributions for the missing values within the MCMC sampling scheme. First, the latent variable is drawn and the missing values are handled; then the model parameters are updated using the current augmented data. Finally, this allows the model parameters to be estimated more accurately and flexibly.Footnote 11 This framework has been widely used in various applications, including missing data imputation.

The quantities of interest are hence \(y^{*},\beta,\alpha,\gamma\), and \(X_{\text{mis}}\), where \(X_{\text{mis}}\) denotes the missing values of the covariate data X with \(X=(X_{\text{obs}},X_{\text{mis}})\). The corresponding posterior of interest results from Eq. (4) in combination with the assumed operationalizations of Eq. (6), see also Table 1. The prior for the missing values \(X_{\text{mis}}\) is discussed when providing the assumed full conditional distribution. The starting point for sampling and inference is hence the augmented posterior distribution, see also Aßmann et al. (2022), given as

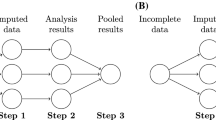

Thus, we draw the posterior values iteratively for \(m=1,\ldots,M\) from the respective full conditional distributions of the considered parameter blocks \(y^{*},\beta,\gamma,X_{\text{mis}}\). After setting appropriate starting values for \(\beta,\gamma,X_{\text{mis}}\), the set of full conditional distributions in the Gibbs sampler is set up as follows. Fig. 1 shows the schematic progression of the Gibbs sampler with the setting of the start values and the sequential structure of the full conditional distributions.

- \(f(y^{*}|\cdot)\):

The full conditional distribution of the latent variables \(y^{*}\) corresponds to a product of truncated normal distributions, since the single elements \(y_{i}^{*}\), \(i=1,\ldots,N\), are mutually independent. Sampling for each element is hence performed from a truncated normal distribution with mean \(\mu_{y_{i}^{*}}=\alpha+X_{i,\gamma}\beta_{\gamma}\) and variance equal to one, with the truncation region ranging from \(-\infty\) to 0 in case \(y_{i}=0\) and from 0 to \(\infty\) in case \(y_{i}=1\).

- \(f(\alpha,\beta|\cdot)\):

Following Albert and Chib (1993), the full conditional distribution follows in principle the standard Bayesian linear regression given the latent continuous variable \(y^{*}\). Given the underlying continuous spike-and-slab prior, the full conditional distribution has the form of a multivariate normal distribution with variance and expectation given as

$$V_{\alpha,\beta}=(D^{-1}+\tilde{X}^{\prime}\tilde{X})^{-1}\quad\text{and}\quad m_{\alpha,\beta}=V_{\alpha,\beta}(D^{-1}(\alpha_{0},\beta_{0}^{\prime})^{\prime}+\tilde{X}^{\prime}y^{*})$$

respectively, where \(D\) is a diagonal matrix with \(D=\text{diag}(h,(\iota_{P}-\gamma)\tau_{1}+\gamma\tau_{2})\), with \(\iota_{\cdot}\) denoting a vector of ones of the indicated size and \(\tilde{X}=(\iota_{N},X)\), see also Biswas et al. (2022) for a discussion of this full conditional distribution.Footnote 12

- \(f(\gamma|\cdot)\):

The full conditional distribution for \(\gamma\) is implied via the assumed prior structure and corresponds to \(P\) independent Bernoulli distributions as the single elements \(\gamma_{j}\), \(j=1,\ldots,P\), are mutually independent. The corresponding implied probabilities are given as

$$p_{j}=\frac{w\tau_{2}^{-1}\exp\left\{-\frac{\beta_{j}^{2}}{2\tau_{2}^{2}}\right\}}{w\tau_{2}^{-1}\exp\left\{-\frac{\beta_{j}^{2}}{2\tau_{2}^{2}}\right\}+(1-w)\tau_{1}^{-1}\exp\left\{-\frac{\beta_{j}^{2}}{2\tau_{1}^{2}}\right\}},\quad j=1,\ldots,P,$$

with the hyperparameters \(0<w<1\) and \(\tau_{2}^{2}\gg\tau_{1}^{2}> 0\), see Biswas et al. (2022) for discussion and Table 1 for chosen values.

- \(f(X_{\text{mis}}|\cdot)\) :

-

Values of \(X_{\text{mis}}\) are sampled sequentially for each column vector of \(X\), i.e., \(X=(X^{(1)},\ldots,X^{(P)})\), based on the non-parametric approximation suggested in the form of classification and sequential regression trees (CART), see Burgette and Reiter (2010). Let \(X_{\text{com}}^{(k)}=(X_{\text{obs}}^{(k)},X_{\text{mis}}^{(k)})\), \(k=1\ldots,P\), denote the completed variables, and \(X_{\text{com}}^{(\setminus k)}\), \(k=1,\ldots,P\), denotes the completed matrix of variables except column \(k\). It is a major advantage of the data augmentation approach that the latent variables possibly serving as kinds of sufficient statistics can be used for the approximation of the full conditional distribution of missing values. In a first step, a decision tree is built for \(X_{\text{com}}^{(k)}\) conditional on the corresponding values of all remaining variables \(X_{\text{com}}^{(\setminus k)}\) as well as conditional on \(y\) and \(y^{*}\) serving as a kind of sufficient statistic for \(y\).Footnote 13 In each iteration the covariates are standardized in the spike-and-slab approach as well as in the imputation for the variable selection. To incorporate a prior uncertainty on the hyperparameters of the sequential partitioning regression trees, we build trees with a randomly varying minimum number of elements within nodes. Every missing observation can then be assigned to a node and thus a grouping of observations implied by the binary partition in terms of the conditioning variables. The values within each node provide access to an empirical distribution function serving as an approximation to the full conditional distribution of a missing value and thus as the key element for running the data generating mechanism for missing values. Thereby, this modeling approach is in line with prior distributions of missing values proportional to observed data densities. 
Draws from the empirical distribution function within a node correspond to draws from the full conditional distributions of missing values, where sampling is performed via the Bayesian bootstrap to account for the estimation uncertainty of the full conditional distribution, see Rubin (1981). The approach further offers the flexibility to include any function of observed or augmented data in the set of conditioning variables. We use the implementation provided by the rpart package for R (R Core Team 2020), see Therneau and Atkinson (2018) for further details. The sampled \(X_{\text{mis}}\) values yield an updated completed matrix of covariates, which is used in all other steps of the MCMC algorithm after a renewed standardization of the covariate data.
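The two sampling steps described above can be illustrated with a minimal Python sketch. It is not the authors' implementation (which relies on R and rpart): the function names, the toy node values, and the hyperparameter values are assumptions chosen for illustration, and the tree-building step is omitted. The first function evaluates \(p_{j}\) on the log scale, the second draws imputations from the observed values of one terminal node via the Bayesian bootstrap.

```python
import math
import random


def slab_probability(beta_j, w, tau1, tau2):
    """Probability p_j that gamma_j = 1 (slab component) given beta_j."""
    # Work on the log scale so that large |beta_j| does not produce 0/0.
    log_slab = math.log(w) - math.log(tau2) - beta_j**2 / (2.0 * tau2**2)
    log_spike = math.log(1.0 - w) - math.log(tau1) - beta_j**2 / (2.0 * tau1**2)
    return 1.0 / (1.0 + math.exp(log_spike - log_slab))


def bayesian_bootstrap_draws(donors, n_draws, rng):
    """Draw imputations from the observed values of one terminal node."""
    # Dirichlet(1, ..., 1) weights obtained by normalising exponential variates.
    raw = [rng.expovariate(1.0) for _ in donors]
    total = sum(raw)
    weights = [r / total for r in raw]
    return rng.choices(donors, weights=weights, k=n_draws)


rng = random.Random(1)
# Hypothetical hyperparameters with tau2^2 >> tau1^2 (not the values of Table 1).
p_small = slab_probability(0.01, w=0.5, tau1=0.1, tau2=10.0)
p_large = slab_probability(2.0, w=0.5, tau1=0.1, tau2=10.0)
node_values = [0.3, -1.2, 0.8, 0.1]  # observed values falling into one leaf
imputed = bayesian_bootstrap_draws(node_values, n_draws=3, rng=rng)
```

A coefficient close to zero is assigned to the spike with high probability, whereas a clearly non-zero coefficient is assigned to the slab. Redrawing the weights in every iteration is what lets the imputations reflect uncertainty about the node-wise distribution.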

Schematic progress of the sequential structure of the full conditional distributions within the Gibbs sampler. Note that the starting values are set once before starting the \(m=1,\dots,M\) iterations. The full conditional distributions in the ellipses refer to the augmented data, whereas the rectangular blocks represent the full conditional distributions that provide the output of interest. After discarding an appropriate burn-in phase, both provide the corresponding estimators based on the median or mean.

Given the MCMC output, estimators are readily defined via corresponding sample statistics, either arithmetic means or medians. Whether arithmetic means or medians are reported depends on the loss function involved in defining a Bayesian point estimator based on a risk function, see Mood et al. (1974). Arithmetic means correspond to quadratic Bayesian loss, whereas medians correspond to absolute Bayesian loss. Furthermore, the model structure implies that estimators conditional on \(\gamma_{j}=1\), \(j=1,\ldots,P\), may be considered as well as unconditional estimators. Whereas unconditional estimators are obtained from the complete MCMC output, conditional estimators are obtained by discarding those draws for which the corresponding element of \(\gamma\) is equal to zero. In this sense, an estimated inclusion probability of at least 50% is required to consider a variable as part of the true underlying data generating process, see also Russu et al. (2012); Bottolo and Richardson (2010); Hans et al. (2007). Finally, note that within the simulation experiments as well as within the empirical illustration, we set M equal to 20,000 after a burn-in phase of 5,000, which was found sufficient to discard the effects of initialization in both the simulation study and the empirical illustration. Next, we discuss variable selection and the handling of missing values in the context of shrinkage estimators and their relation to Bayesian estimation.
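The distinction between unconditional and conditional estimators can be sketched in Python as follows. This is only an illustration: it assumes the Gibbs output for one coefficient is stored as two parallel lists, and the function name and toy draws are hypothetical.

```python
from statistics import mean, median


def summarize_coefficient(beta_draws, gamma_draws, burn_in):
    """Posterior summaries for one coefficient from stored Gibbs draws."""
    beta = beta_draws[burn_in:]
    gamma = gamma_draws[burn_in:]
    # Conditional estimators keep only the draws with gamma_j = 1.
    kept = [b for b, g in zip(beta, gamma) if g == 1]
    return {
        "inclusion_prob": sum(gamma) / len(gamma),
        "uncond_mean": mean(beta),      # quadratic loss
        "uncond_median": median(beta),  # absolute loss
        "cond_mean": mean(kept) if kept else None,
        "cond_median": median(kept) if kept else None,
    }


# Toy output: two burn-in draws followed by six retained draws.
beta_draws = [5.0, 4.0, 0.0, 0.9, 1.1, 0.0, 1.0, 1.0]
gamma_draws = [1, 1, 0, 1, 1, 0, 1, 1]
summary = summarize_coefficient(beta_draws, gamma_draws, burn_in=2)
selected = summary["inclusion_prob"] >= 0.5  # 50% inclusion rule
```

In the toy draws the variable is included in four of the six retained iterations, so it passes the 50% rule, and the conditional mean is larger in absolute value than the unconditional one because the zero draws are left out.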

3 Shrinkage estimation for binary regression models with missing covariate data

Variable selection as a special case of model selection can be performed in terms of shrinkage estimators. The task is to identify a subset of variables that are potential predictors of the dependent variable and that is best with respect to a predefined optimality criterion. The resulting reduced models are easier to interpret, visualize, and handle.

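In general, the shrinkage or penalized estimation approach to variable selection is formulated in terms of a loss function. Hence, with \(\theta=(\alpha,\beta)\), the resulting estimator can be defined as

$$\hat{\theta}=\underset{\theta}{\arg\min}\,\left\{\mathcal{LF}(D,\theta)+p(\beta,\lambda)\right\}.$$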

Here, \(\mathcal{LF}(D,\theta)\) measures the ability of the model to fit the data and usually takes a form close to a likelihood function, whereas \(p(\beta,\lambda)\) penalizes model complexity, i.e., the dimensionality of the parameter vector \(\beta\), and \(\lambda\) steers the magnitude of penalization. The shrinkage estimators discussed in the literature differ with respect to the specifications of \(\mathcal{LF}(D,\theta)\) and \(p(\beta,\lambda)\). In general, the structure of the loss function resembles the Mallows criterion, see Mallows (1973).

A prominent choice for measuring model fit is the residual sum of squares for continuous dependent variables, where the sum of squared residuals is also a constituent part of the log likelihood function under the assumption of multivariate normality or, more generally, an elliptical distribution. The penalization function should be monotonically increasing in the dimension of \(\beta\), where typical functions fulfilling this requirement are quadratic or absolute distance functions. Note that these also play a prominent role in the logarithms of densities, for example the normal and the Laplace density, respectively. Given this, log likelihood functions and log prior distributions operate in the same way as loss and penalty functions, respectively, which in turn makes log likelihood functions and priors natural candidates for defining shrinkage estimators. These relationships are highlighted in more detail when specific shrinkage estimators are discussed below. A final remark relates to the mechanism by which penalty functions cause the selection of a subset of variables. For illustration, consider the case where the function assessing model fit takes the form of an ellipsoid, with fit decreasing with larger distance from the center of the ellipsoid. The penalty function contributes minimum loss when parameters take the value zero. The overall loss function is then minimized by balancing the marginal increase in fit against the marginal loss arising from the penalization in a Lagrangian manner. The point at which the marginal fit and penalization contributions balance depends on the chosen functional forms, where opting for absolute loss may shrink single parameters exactly to zero.

Different specifications of \(p(\beta,\lambda)\) imply different shrinkage estimators. A general specification of the penalization function can be stated in the form of a linear combination of different distance functions or norms, i.e.,

$$p(\beta,\lambda)=\sum_{j=1}^{J}\lambda_{j}\lVert\beta\rVert_{\kappa_{j}}^{\kappa_{j}},$$

where \(\kappa_{j}\) takes values \(1,2,\ldots\), so that the corresponding \(L_{\kappa}\)-norms are involved. The following special cases are prominent in the literature. For \(J=1\) and \(\kappa_{1}=2\), the penalization function involves the quadratic norm \(L_{2}\), which is referred to as ridge regression, i.e., \(p_{\text{ridge}}(\beta,\lambda)=\lambda_{1}\beta^{\prime}\beta\), see Hoerl and Kennard (1970) and Friedman et al. (2010) for a discussion in the context of generalized linear models. \(\lambda_{1}\) controls the impact of the penalization, with higher values shrinking the coefficients more strongly towards zero. If \(\lambda_{1}\to\infty\), then \(\hat{\beta}_{\text{ridge}}\to 0\), so that the model finally consists only of the intercept. With ridge regression, no genuine variable selection is possible because all coefficients stay in the model and are merely shrunk towards zero to a greater or lesser extent. In the context of the binary probit regression model, the loss function is typically chosen as \(\mathcal{LF}(D,\theta)=-\ln\mathcal{L}(D|\theta)=-\ln\mathcal{L}(y|X,\beta)=-\sum_{i=1}^{N}\ln\Phi((2y_{i}-1)(\alpha+X_{i}\beta))\). Given this, the ridge regression approach to binary dependent data resembles a Bayesian estimation approach with a multivariate normal prior, in the sense that the overall objective function of the ridge regression has a functional form that is proportional to the logarithm of the implied unnormalized posterior distribution.
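As a minimal sketch, the probit loss and the ridge-penalized objective described above can be written in plain Python. The function names and the toy data are assumptions for illustration only; the sketch leaves the intercept unpenalized and does not attempt the minimization itself.

```python
import math
from statistics import NormalDist

PHI = NormalDist().cdf  # standard normal distribution function


def probit_loss(y, X, alpha, beta):
    """Negative probit log likelihood: -sum_i ln Phi((2 y_i - 1)(alpha + x_i' beta))."""
    loss = 0.0
    for y_i, x_i in zip(y, X):
        eta = alpha + sum(b * x for b, x in zip(beta, x_i))
        loss -= math.log(PHI((2 * y_i - 1) * eta))
    return loss


def ridge_objective(y, X, alpha, beta, lam):
    """Probit loss plus the ridge penalty lam * beta' beta."""
    return probit_loss(y, X, alpha, beta) + lam * sum(b * b for b in beta)


# Toy data: four observations, two covariates.
y = [1, 0, 1, 0]
X = [[0.5, 1.0], [-1.0, 0.2], [1.5, -0.3], [-0.7, -1.1]]
unpenalised = probit_loss(y, X, alpha=0.0, beta=[1.0, 0.5])
penalised = ridge_objective(y, X, alpha=0.0, beta=[1.0, 0.5], lam=2.0)
```

Up to sign and an additive constant, `ridge_objective` has the same form as the log of an unnormalized posterior with a probit likelihood and independent zero-mean normal priors on the elements of \(\beta\), which is the correspondence noted in the text.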

A similar situation arises when considering the case with \(J=1\) and \(\kappa_{1}=1\), implying the use of absolute values instead of squared ones. Tibshirani (1996) introduced this least absolute shrinkage and selection operator (Lasso) for the linear regression problem, and Park and Hastie (2007) extended it to the generalized linear model. Given this, the penalization function \(p(\beta,\lambda)\) becomes \(p_{\text{Lasso}}(\beta,\lambda)=\lambda_{1}\sum_{p=1}^{P}|\beta_{p}|\). Increasing \(\lambda_{1}\) sets more coefficients to zero, so that fewer variables are selected and the coefficients of the selected variables are shrunk. Because of the \(L_{1}\)-norm penalty, the analytical solution of the Lasso is more complicated than with the \(L_{2}\)-norm (see Footnote 14). Again, the similarity to a Bayesian setup with a Laplace prior distribution should be noted when comparing the functional forms of the logarithm of the unnormalized posterior density and the overall loss function. Friedman et al. (2010) show that both Lasso and ridge regression have drawbacks and advantages in the context of correlated variables and over-fitting. Therefore, Zou and Hastie (2005) proposed the Elastic net approach to combine the strengths of both. This method uses both \(L_{1}\)- and \(L_{2}\)-penalties and is thus a convex combination of the ridge and Lasso approaches to shrinkage. Friedman et al. (2010) extend the Elastic net to generalized linear models, where \(J=2\), \(\kappa_{1}=1\), and \(\kappa_{2}=2\). After reparametrization the Elastic net manifests as

thereby using \(\varphi\) as control parameter for the weight given to the \(L_{1}\)- and \(L_{2}\)-norm driven penalty with \(0<\varphi<1\) and \(\lambda> 0\) as weighting parameter given to the penalty. The combination causes the Lasso penalization term to select among the variables thereby putting all weight on the set of selected variables, whereas the ridge term shrinks coefficients towards each other. Hence, Elastic net finds a sparse model with typically high prediction accuracy. Framing this approach from a Bayesian perspective implies that the penalization function is similar to the kernel of the logarithm of a mixture distribution, where the two mixture components follow normal and Laplace distributions, respectively.
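The role of the control parameter \(\varphi\) can be illustrated with a short Python sketch. It assumes the parametrization used in glmnet by Friedman et al. (2010), in which the \(L_{2}\) part carries a factor of one half; this factor and the function name are assumptions and may differ from the display equation of the paper.

```python
def elastic_net_penalty(beta, lam, phi):
    """lam * (phi * ||beta||_1 + (1 - phi) / 2 * ||beta||_2^2), glmnet-style."""
    l1 = sum(abs(b) for b in beta)
    l2_squared = sum(b * b for b in beta)
    return lam * (phi * l1 + 0.5 * (1.0 - phi) * l2_squared)


beta = [1.5, -0.5, 0.0]
lasso_part = elastic_net_penalty(beta, lam=1.0, phi=1.0)  # pure L1 penalty
ridge_part = elastic_net_penalty(beta, lam=1.0, phi=0.0)  # pure (halved) L2 penalty
mixed = elastic_net_penalty(beta, lam=1.0, phi=0.5)       # equal weights
```

With \(\varphi=0.5\) the penalty is the average of the two boundary cases, which is the convex-combination property referred to above.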

As discussed, the Bayesian approach to handling missing values and variable selection, as well as the established methods, can set regression coefficients to zero where appropriate. Variables are thus selected by the different approaches, but without a ranking of their importance. With standardized variables, however, the size of the regression coefficients offers a convenient and simple way to rank the variables, since all variables are then on the same scale and can be compared directly. This approach is recommended by Kyung et al. (2010) for handling variables measured on different scales. Additionally, as discussed by Friedman et al. (2010), standardizing the variables simplifies the analysis. When ranking variables by the size of their regression coefficients, it is essential to recognize that this ranking reflects effect sizes. Other concepts of variable importance, such as significance and permutation feature importance, can provide additional insights into the relationships between the covariates and the response variable and should be taken into account when interpreting the results (Strobl et al. 2007). Considering multiple perspectives on variable importance allows for a more nuanced understanding of the relationships in the data.

After standardization we resort to various R packages. For Elastic net estimation, the glmnet package (Friedman et al. 2010) provides many setting options for the above-mentioned control and penalty parameters. As control parameter, we set \(\varphi=0.0\) for ridge penalized estimation, \(\varphi=1.0\) for the Lasso penalty, and additionally \(\varphi=0.5\). The strength of the penalty is controlled by the tuning parameter \(\lambda\), which can be fixed before estimation or chosen via cross-validation, the most widely used and implemented option (Friedman et al. 2010). We use k-fold cross-validation with \(k=10\) folds and the mean squared error as the cross-validation loss. The shrinkage parameter \(\lambda\) is chosen from the sequence of candidate values as the largest value whose mean cross-validated error is within one standard error of the minimum, so that the most regularized model within this range is obtained. After the shrinkage parameter has been chosen, the Elastic net is estimated with the set control parameter and the cross-validated shrinkage parameter for each data set m. The presented results are then averaged over the M data sets.
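The one-standard-error rule for choosing \(\lambda\) can be sketched in Python as follows. The candidate sequence, the cross-validated errors, and their standard errors are hypothetical numbers; in practice they would come from the cross-validation routine of the estimation software.

```python
def lambda_one_se(lambdas, cv_mean, cv_se):
    """Largest lambda whose mean CV error is within one SE of the minimum."""
    i_min = min(range(len(lambdas)), key=lambda i: cv_mean[i])
    threshold = cv_mean[i_min] + cv_se[i_min]
    eligible = [lam for lam, err in zip(lambdas, cv_mean) if err <= threshold]
    return max(eligible)


lambdas = [1.0, 0.5, 0.1, 0.05, 0.01]
cv_mean = [0.30, 0.24, 0.21, 0.20, 0.22]  # hypothetical 10-fold CV errors
cv_se = [0.02, 0.02, 0.02, 0.02, 0.02]    # hypothetical standard errors
chosen = lambda_one_se(lambdas, cv_mean, cv_se)
```

In this toy example the minimum error is attained at 0.05, but the rule selects the larger value 0.1 because its error lies within one standard error of the minimum, which gives a more strongly regularized model.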

However, these methods are typically discussed and evaluated under the assumption of fully observed covariate data, which leaves open how missing values are handled; several options exist beyond the automated Bayesian approach. First, the data augmentation method within Gibbs sampling is particularly well suited to missing data problems, since including the missing values in the parameter vector means that all other model quantities are treated as if the data were fully observed. In addition, adding the data augmentation step to the Bayesian estimation routine avoids the need for combination rules (Tanner and Wong 1987). Second, to cope with missing values in covariate data in the other above-mentioned approaches, we make use of multiple imputation, see Rubin (1976), where we use the multiple imputation via chained equations (MICE) approach, following Buuren and Groothuis-Oudshoorn (2011). Within the MICE approach, a full conditional model is specified for each variable with missing values, and imputations are generated by sampling from these full conditional distributions. Given an appropriate implementation scheme, the MICE algorithm repeatedly iterates over the sequence of assumed full conditional distributions, generates imputations via sampling, and hence updates the data. After an appropriately chosen burn-in phase, the obtained draws can be used to build an imputed data set for subsequent analyses. Repeating the MICE algorithm M times provides M imputed data sets, and the shrinkage estimation routines are then applied to each of them.

Furthermore, the treatment of missing values leads to further considerations regarding the evaluation of the quality of the different selection and estimation procedures. Multiple imputation raises a range of issues and challenges, such as convergence problems, imputation model mis-specification, imputation of rare events, large amounts of missing data, and difficulties in reporting, interpreting, or pooling the results. Following Buuren (2018), the general MICE routine of imputing data, analyzing each data set, and pooling results across all M imputed datasets becomes difficult in variable selection because the set of selected variables will differ to some extent between data sets. In the statistical and machine learning literature, there is no standard method for pooling the results and combining the information provided by the M different models. Even for complete datasets, likelihood-based variable selection methods are subject to several limitations (Miller 2019). Different methods are discussed in the literature; for a current overview see Du et al. (2022). Brand (1999) presents a two-step solution that applies stepwise regression to each of the M imputed datasets, excludes variables from a combined supermodel if they were selected in fewer than half of the runs, and pools the results based on pooled likelihood ratio p-values to identify insignificant variables. Alternatively, (Bayesian) model averaging can be used to account for the variability of the selected variables across all imputed data sets (Yang et al. 2005). Buuren (2018) groups the literature into three general approaches, referring to Wood et al. (2008) and Vergouwe et al. (2010): the Majority approach, which counts how often a variable is selected and retains it if it is selected in at least half of the models fitted to the imputed datasets; the Stack approach, in which all imputed datasets are stacked into a single dataset to which variable selection methods are applied with weights; and the Wald approach, used especially for stepwise selection, which pools on the basis of Wald statistics.

Considering the above-mentioned variable selection approaches while handling missing data, we implement the following routines. First, we consider an average-based approach (Average), where the final shrinkage estimator is obtained as the arithmetic mean of the M estimators obtained for each imputed data set, and the combined variance estimator is given as the sum of the within and between variance of the M estimators obtained from the imputed data sets (see Footnote 15). This procedure is a rough way of pooling estimates, but common in daily practice. As a second approach (Majority), we set up an imputation based on the above-mentioned MICE settings and apply the variable selection techniques to each imputed dataset m, resulting in M different selection models with partly different selected variables. For pooling, we extract the selected variables from each model and sum across the imputations to identify variables that were selected in at least half of the imputed datasets. We then estimate a probit model as supermodel with these most frequently selected variables and pool the results according to Rubin's rules for generalized linear models. However, this procedure implicitly involves a loss of information. Finally, following Buuren (2018), we implement a pooling approach based on the Wald test (Wald), which extends the Majority approach by removing redundant variables through testing. After counting how often a variable is selected in the M imputed datasets, we consider the variables appearing in more than 50% of the M models and apply a Wald test to decide which of these variables, ordered by their counts, remain in the model.
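The Average and Majority routines can be sketched in Python as follows. The function names and toy inputs are hypothetical, and the \((1+1/M)\) factor on the between variance follows Rubin's rules; the text above describes the combined variance only as the sum of within and between variance, so this factor is an assumption of the sketch.

```python
from statistics import mean, variance


def pool_average(estimates, variances):
    """Pool M point estimates and their variances for one coefficient."""
    m = len(estimates)
    within = mean(variances)       # average within-imputation variance
    between = variance(estimates)  # sample variance across imputations
    total = within + (1.0 + 1.0 / m) * between
    return mean(estimates), total


def majority_selection(selected_sets, n_imputations):
    """Variables selected in at least half of the imputed data sets."""
    counts = {}
    for selected in selected_sets:
        for name in selected:
            counts[name] = counts.get(name, 0) + 1
    return sorted(v for v, c in counts.items() if c >= n_imputations / 2)


# Toy results for one coefficient across M = 4 imputed data sets.
estimates = [0.9, 1.1, 1.0, 1.2]
variances = [0.04, 0.05, 0.04, 0.03]
pooled_estimate, pooled_variance = pool_average(estimates, variances)

# Toy selection results of one shrinkage method across the same data sets.
selections = [{"x1", "x2"}, {"x1"}, {"x1", "x3"}, {"x1", "x2"}]
majority = majority_selection(selections, n_imputations=4)
```

The supermodel of the Majority approach would then be a probit model on the variables in `majority`, re-estimated on every imputed data set and pooled in the same way as in `pool_average`.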

4 Quality assessments of variable selection while handling missing values

The value of data is generally linked to the ability to make informed decisions based on the information it contains. This value depends on the ability of statistical or machine learning algorithms to use the available information thoroughly. In the context of a binary regression model, the proposed Bayesian approach illustrates how all available information can be integrated into the analysis of factors influencing the binary dependent variable. The proposed method covers the data constellation with many, although incompletely observed, variables and relatively few observations. In a machine-learning-like manner, the proposed algorithm learns the best combination of covariates explaining the dependent variable, thereby addressing the problem of deciding on a subset of variables and their corresponding influence. To assess the quality of the proposed method in terms of statistical efficiency, we present several statistical measures typically used to compare different statistical approaches. In the context of variable selection and missing value imputation, the quality aspects refer to the indicators used to assess how well different approaches select the correct variables and handle missing values. These quality aspects are typically related to the prediction performance and accuracy of the model, i.e., the approaches are evaluated on how well they identify the correct variables while imputing missing values. First, a common quality aspect is the accuracy of selection at both the model and the variable level, i.e., the ability of an approach to select the correct variables and exclude irrelevant ones. The following criteria are used to assess the performance of the different strategies within the different scenarios.
As evaluation diagnostics for the different approaches, we use the precision rate (\(PR\)), the recall rate (\(RR\)), and the F-measure, which assess the number of covariates correctly or incorrectly identified as relevant, i.e., whether the decision to incorporate them in the model is in line with the DGP or not. A true positive (\(TP\)) is a variable that is correctly selected, i.e., a selected variable that is indeed part of the assumed DGP. A true negative (\(TN\)) is an unimportant variable that is correctly not selected. A false positive (\(FP\)) is a variable that is selected as important although it is in fact unimportant. Finally, a false negative (\(FN\)) is a variable that is not selected although it is in fact important. Based on these definitions, the above-mentioned performance measures are defined as

$$PR=\frac{TP}{TP+FP},\quad RR=\frac{TP}{TP+FN},\quad F=\frac{2\cdot PR\cdot RR}{PR+RR}.$$

Note that the F-measure is given as the weighted harmonic mean of precision and recall (sensitivity). The F-measure hence balances both and is particularly informative when the recall \(RR\) is large but the precision \(PR\) is small.
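The selection diagnostics can be computed from the set of selected variables and the set of variables in the DGP, as in the following Python sketch. The variable names are hypothetical, and the F-measure is implemented in its common equally weighted form, which is an assumption about the weighting used.

```python
def selection_metrics(selected, true_support):
    """Precision, recall, and F-measure of a variable selection result."""
    selected, true_support = set(selected), set(true_support)
    tp = len(selected & true_support)  # correctly selected
    fp = len(selected - true_support)  # selected although not in the DGP
    fn = len(true_support - selected)  # in the DGP but not selected
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0.0:
        return precision, recall, 0.0
    f_measure = 2.0 * precision * recall / (precision + recall)
    return precision, recall, f_measure


true_support = {"x1", "x2", "x3", "x4"}  # variables in the assumed DGP
selected = {"x1", "x2", "x7"}            # hypothetical selection result
precision, recall, f_measure = selection_metrics(selected, true_support)
```

Here two of the three selected variables are correct and two of the four relevant variables are found, so precision exceeds recall and the F-measure lies between the two.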

In addition to the aforementioned considerations, the consistency of variable selection across the M imputed datasets represents a further quality aspect in shrinkage estimation approaches. As mentioned above, however, merely counting how often variables are selected does not seem to be a suitable quality measure. Due to space limitations, only the average estimates over the \(M=100\) datasets are reported, from which the biases follow directly. Note that the number of estimates per variable varies across the shrinkage approaches. For instance, ridge regression provides M estimates even for redundant variables because estimates are shrunk towards zero but never set exactly to zero. In contrast, the Elastic net and the Lasso set the coefficients of some variables exactly to zero. The Bayesian spike-and-slab approach, on the other hand, provides M estimates for all variables.

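Generally, the root mean square error (RMSE) over the results from the M datasets is a quality indicator for robustness, not only in the complete cases but also in the handling of missing values. For an estimator \(\hat{\theta}_{p}\) of the parameter \(\theta_{p}\), the RMSE is calculated as

$$\text{RMSE}(\hat{\theta}_{p})=\sqrt{\text{MSE}(\hat{\theta}_{p})}=\sqrt{\frac{1}{D}\sum_{d=1}^{D}\left(\hat{\theta}_{p}^{(d)}-\theta_{p}\right)^{2}},$$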

where MSE denotes the mean square error. In the context of ridge regression and the Bayesian approach, the value of D is equal to M. However, in the case of the Elastic net, Lasso, and stepwise regression, the value of D differs from M for each variable. In these approaches, redundant variables are typically excluded from the model, and in a few datasets, important variables are attenuated, thus D varies.
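A minimal Python sketch of the RMSE with a varying number of contributing data sets is given below. It assumes that a data set in which the variable was excluded is recorded as `None`, which is a convention of this sketch rather than of the paper.

```python
import math


def rmse(estimates, true_value):
    """RMSE over the D data sets in which the variable was estimated."""
    kept = [e for e in estimates if e is not None]  # None: variable excluded
    d = len(kept)
    if d == 0:
        return None
    return math.sqrt(sum((e - true_value) ** 2 for e in kept) / d)


# Hypothetical estimates of one coefficient over M = 4 data sets; the
# variable was dropped in the second data set, so D = 3 here.
estimates = [1.1, None, 0.9, 1.2]
value = rmse(estimates, true_value=1.0)
```

For ridge regression and the Bayesian approach no entry would be `None`, so that D equals M, in line with the description above.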

5 Simulation study

The following simulation experiments aim at a comparison of different strategies to achieve variable selection within a binary probit regression framework. The simulations were implemented in R (R Core Team 2020) and Julia (Bezanson et al. 2017) and compare the performance of the Bayesian variable selection approach, hereafter referred to as Bayesian Spike-and-Slab (SnS), with the stepwise regression (SR) strategy as implemented in the MASS R package (Venables and Ripley 2002) and different variations of Elastic net (EN) such as Lasso and ridge regression as available within the R package glmnet (Friedman et al. 2010). For the shrinkage estimators, we consider different control parameters in the Elastic net setup: \(\varphi=0.0\) for ridge regression (EN.0), \(\varphi=0.5\) for a variation of Elastic net (EN.5), and \(\varphi=1.0\) for Lasso (EN1.). Via cross-validation, \(\lambda\) is chosen as the largest value for which the cross-validated error is within one standard error of its minimum (Friedman et al. 2010). The stepwise regression strategy depends on the choice of the selection criterion, where we opt for the Bayesian information criterion (BIC). For the Bayesian estimation approach, estimates are each based on MCMC chains of length 40,000. After discarding the first 10,000 iterations as burn-in, inference is based on the remaining 30,000 simulated draws from the joint posterior distribution. Convergence is monitored via the Geweke statistics, the Gelman-Rubin statistics, and the effective sample size, see Geweke (1991); Gelman et al. (2023). The convergence diagnostics indicate overall convergence.

The simulated data is generated to follow a probit regression model with \(N=1000\) and \(P=10\). The considered data generating process satisfies the following conditions. Next to a constant, the covariates \(X_{p}\), \(p=1,\dots,9\), are each generated from standard normal distributions. Only the first five of the ten parameters, including the intercept, are set to non-zero values via the corresponding indicator vector. The signs of the 10 parameters are chosen to alternate. For the sake of variation, \(X_{1}\) to \(X_{4}\) are drawn from a multivariate normal distribution with expectation \(\mu=(0,0,0,0)^{\prime}\) and covariance \(\text{vech}(\Sigma)=(1,0.85,0.65,0.45,1,0.45,0.35,1,0.25,1)^{\prime}\), mimicking a situation with correlated covariate data (see Footnote 16). Finally, a total of 100 simulated data sets are generated through replication.
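The correlated block \(X_{1}\) to \(X_{4}\) can be generated as in the following Python sketch, which unpacks \(\text{vech}(\Sigma)\) column by column and draws from the multivariate normal distribution via a Cholesky factor. This is an illustrative reimplementation with hypothetical function names, not the simulation code of the paper.

```python
import math
import random


def unvech(v, dim):
    """Rebuild a symmetric matrix from its column-wise lower triangle."""
    sigma = [[0.0] * dim for _ in range(dim)]
    values = iter(v)
    for col in range(dim):
        for row in range(col, dim):
            sigma[row][col] = sigma[col][row] = next(values)
    return sigma


def cholesky(a):
    """Lower-triangular factor with low * low' = a (a positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = a[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def draw_correlated(low, rng):
    """One draw from N(0, Sigma) using the Cholesky factor of Sigma."""
    z = [rng.gauss(0.0, 1.0) for _ in low]
    return [sum(low[i][k] * z[k] for k in range(i + 1)) for i in range(len(low))]


vech_sigma = [1, 0.85, 0.65, 0.45, 1, 0.45, 0.35, 1, 0.25, 1]
sigma = unvech(vech_sigma, dim=4)
low = cholesky(sigma)
rng = random.Random(42)
x_corr = draw_correlated(low, rng)  # one row (X_1, ..., X_4)
```

The Cholesky step doubles as a check that the stated covariance matrix is positive definite, since `math.sqrt` would raise an error otherwise.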

Based on this data generating process, we consider two different variations for missingness in the covariate data: missing completely at random (MCAR) and missing at random (MAR). Simulating missing values as MCAR, we randomly set \(20\%\) of the values in the covariates \(X_{2}\) and \(X_{3}\) to missing later named as experimental study 1 (Ex 1) and we randomly set \(20\%\) in \(X_{2}\), \(30\%\) in \(X_{3}\) and \(10\%\) in \(X_{3}\) to missing as experimental study 2 (Ex 2). For the MAR variation (Ex 3), we consider a missing generating mechanism for \(X_{2,i}\) where \(X_{2,i}\) is missing if \(F_{U}(U_{i})> 0.75\), where \(F_{U}(U_{i})\) denotes the empirical distribution function of the random variable \(U_{i}\) which is

with \(\omega_{2,i}=0.2X_{2,i}+\rho_{i}\) and \(\rho_{i}\) being standard normally distributed. Thus, a missing rate of \(25\%\) for \(X_{2}\) is generated. For further details on the described missing designs, see Table 2.
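The two kinds of missingness masks can be sketched in Python as follows. The MCAR mask sets a fixed share of randomly chosen entries to missing, and the second mask applies the threshold rule on the empirical distribution function. Because the definition of \(U_{i}\) is not reproduced here, the sketch uses \(\omega_{2,i}\) itself as a stand-in score; this choice and all function names are assumptions.

```python
import random
from bisect import bisect_right


def mcar_mask(n, rate, rng):
    """True marks a value set to missing completely at random."""
    missing = set(rng.sample(range(n), round(rate * n)))
    return [i in missing for i in range(n)]


def ecdf_threshold_mask(u, cutoff):
    """True where the empirical distribution function of u exceeds cutoff."""
    n = len(u)
    sorted_u = sorted(u)
    # F_U(u_i) is the share of observations less than or equal to u_i.
    return [bisect_right(sorted_u, u_i) / n > cutoff for u_i in u]


rng = random.Random(7)
n = 1000
x2 = [rng.gauss(0.0, 1.0) for _ in range(n)]
mcar = mcar_mask(n, rate=0.20, rng=rng)

# Stand-in score: omega_{2,i} = 0.2 * X_{2,i} + rho_i with standard normal rho_i.
omega = [0.2 * x + rng.gauss(0.0, 1.0) for x in x2]
threshold_mask = ecdf_threshold_mask(omega, cutoff=0.75)
x2_with_missing = [None if m else x for x, m in zip(x2, threshold_mask)]
```

With 1000 distinct scores, the threshold of 0.75 marks exactly the 250 observations with the largest scores, which reproduces the stated missing rate of 25%.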

To assess the different estimation strategies in case of missing values, we designed a comprehensive simulation study which is split in different scenarios. First, we consider a benchmark estimation without missing values labeled as before deletion (BD), followed by estimation of complete cases (CC) only, and finally a scenario with missing values (MIS), where missing values are handled either via multiple imputation before estimation as for the shrinkage estimators or embedded within the MCMC algorithm as for the Bayesian approach.

Regarding estimation results, we provide the true parameter values used in the DGP together with mean posterior medians and averaged estimates, respectively, over the 100 replications obtained for the BD, CC, and MIS scenarios. We report on both the regression coefficients and the conditional variance parameters. Besides the averaged estimates, simulation results are also evaluated in terms of the root mean square error (RMSE) and, where possible, the inclusion probabilities. It is therefore important to bear in mind that we assess the models' performance on two levels. First, model selection: regardless of the classification rule, how well do the different routines recover the set of parameters that enter the DGP? Second, estimation: how accurately are the parameters underlying the data generating process estimated?

The results of the different variations are presented in Table 3 for experimental study 1 (Ex 1), Table 4 for experimental study 2 (Ex 2), and Table 5 for experimental study 3 (Ex 3), each summarizing the three scenarios BD, CC, and MIS in terms of average estimates and root mean square errors (RMSEs). Looking at Ex 1, the tables differ only in the estimation and pooling results for the treatment of missing values, which are examined with respect to the quality aspects. As a benchmark, we report results for a simple probit model covering all important variables \((\alpha,\beta_{1},\dots,\beta_{4})\) for the three experimental studies. In the BD scenario, we find overall unbiased results for all parameters, so we can assume that the considered routines are implemented correctly. The different pooling approaches produce very different results in terms of accuracy and variation of the estimates. Surprisingly, simple averaging yields substantial improvements in the estimation results compared to the before deletion values, which serve as the benchmark for the three pooling strategies. The majority method appears to over-fit, as its estimates show suspiciously little bias; this can be seen in all three experimental studies. Compared to our Bayesian spike-and-slab approach, which also treats missing values, the respective pooling approaches sometimes yield markedly different estimation results. However, the Average and Wald methods show comparable patterns in that their RMSEs are lower than in the complete case analysis, whereas their estimates are in part more biased than the before deletion results. The Bayesian spike-and-slab method shows its strengths when data sets contain many missing values in several variables. The RMSE increases slightly for all methods in the CC scenario, whereas the absolute estimation bias decreases for the three Elastic net methods.
The bias of the estimators increases for the stepwise regression method and for the spike-and-slab approach. In the imputation scenario, in contrast, performance as measured by the RMSE decreases for all five approaches. With the Bayesian spike-and-slab approach, the inclusion probability for \(X_{2}\) increases again, so that the variable is selected with very high probability, but the bias also increases, so that the RMSE is high compared to stepwise regression. Interestingly, owing to the combining rules, none of the three Elastic net approaches shows large deviations in the imputation scenario. Thus, the results of stepwise regression and Bayesian Spike-and-Slab are quite similar. Experimental study 2 shows similar results, although more biased results are to be expected because of the higher share of missing values. This shows the strength of our Bayesian approach, which treats the missing values not only in the run-up to selection and estimation but within the estimation itself, making the estimation results more precise and the variable selection more accurate in terms of precision, recall, and F-measure. Experimental study 3 shows less precise estimation and selection results for all presented selection strategies because the data are missing at random (MAR); the Bayesian spike-and-slab approach nevertheless achieves higher precision than the other methods.

However, it must be noted that estimation is more accurate with stepwise regression (SR) and Bayesian Spike-and-Slab (SnS): the bias is small and the RMSE is about half that of the Elastic net (EN) approaches. Table 6 shows the accuracy results, i.e., precision, recall, and F-measure, for the before deletion, complete case, and imputation scenarios obtained with Elastic net, stepwise regression, and Bayesian Spike-and-Slab. Looking at a key measure such as the F-measure shows in principle the same result. The accuracy measures for ridge regression (EN.0) always take the same values because all variables are always included in the model: no selection is made, only shrinkage. The ridge approach thus does not perform selection in the strict sense but merely shrinks the coefficients towards zero, and its accuracy values never reach those of the other estimation methods. The spike-and-slab approach is close to the top performers in terms of F-measure. On the other hand, it is striking that the accuracy values of the Lasso estimates do not approach those of stepwise regression or our Bayesian approach. Stepwise regression, however, also shows its strengths in correctly selecting variables that were involved in the data generating process. In general, accuracy decreases from the before deletion to the complete case scenario and then increases again when missing values are handled, as intended. The Average and Wald pooling methods also yield results comparable to the Bayesian approach and provide the intended accuracy gain from handling missing values relative to the complete cases.

6 Empirical illustration

In order to illustrate the usefulness of the suggested Bayesian spike-and-slab approach in empirical analysis, we provide exemplary applications using the scientific data use file of the German National Educational Panel Study (NEPS), Starting Cohort Grade 9, see NEPS (2021) and Blossfeld and Roßbach (2019). For this purpose, a random sample of schools, stratified by school type, was drawn throughout Germany. Within the schools, all students of two randomly selected ninth grades were invited to participate in the survey. The technical details on weighting are reported for each wave, see e.g., Bergrab (2020). Here, variable selection is applied to select the appropriate variables that describe a student’s probability of participation in a specific wave, where we analyze wave twelve. From the set of available covariates (\(P=16\)), only a few have to be selected in order to determine the participation probabilities and subsequently prepare suitable weights for further analyses. In the starting cohort considered here, all students willing to participate in the NEPS, regardless of whether they are in vocational education or the academic track, are followed up over time. The students entering the academic track usually remain within their school context. In contrast, students entering vocational education leave school for vocational training. By wave twelve, all students have left their school context and are surveyed individually. To account for the wave-specific participation decision of students, response propensity re-weighting is used to provide corresponding weights. To model binary participation decisions, a model with probit link function is used for all three variable selection methods: backward selection, Elastic net, and Bayesian spike-and-slab. By wave twelve, the panel cohort has reduced to \(n=7,911\) students with a mean age of 26.76 years (standard deviation 0.73). For our analysis, we included all students and \(P=16\) variables.
In contrast to the weighting report, all variables were standardized before selection to show comparability with the above-mentioned experimental studies.

Table 7 summarizes the results for stepwise regression, Elastic net, and the Bayesian spike-and-slab (Bayesian SnS) approach. To model individual participation, the glm-function with a probit link provided in R (R Core Team 2020) was used for the stepwise regression. BIC-based backward selection was used and only significant coefficients are reported. For Elastic net, only non-negligible variables are reported. A dash indicates that the variable was not included in the estimation model. The shrinkage parameter \(\lambda\) of Elastic net is set to the largest value such that the cross-validated error is within one standard error of the minimum: \(\lambda_{\min}=0.015\), obtained with the cv.glmnet-function in R. Here, the Elastic net represents a Lasso selection with a control parameter of 1.0. For the results of our Bayesian approach, both the estimates \(\hat{\beta}\), taken as the posterior median, and the corresponding marginal posterior inclusion probabilities \(\hat{\gamma}\) are presented. The bold results in the last two columns show variables with an inclusion probability higher than 50%, whereas the other results are listed for the sake of completeness. The prior settings for the spike-and-slab were \(\tau_{2}=1\gg\tau_{1}=0.015\) and the shrinkage \(w=0.015\). The posterior estimates are based on MCMC chains of length 20,000. After discarding the first 5,000 iterations as burn-in, inference is based on the remaining 15,000 simulated draws from the joint posterior distribution. The convergence diagnostics indicate overall convergence.
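The rule used above to fix \(\lambda\) can be sketched as follows. This is a minimal illustration in Python rather than the R code used for the analysis. It assumes that a grid of candidate values with cross-validated mean errors and their standard errors is already available. The function name and all numbers are hypothetical. For orientation, cv.glmnet returns both the error-minimizing value (`lambda.min`) and the one-standard-error value (`lambda.1se`).

```python
def lambda_one_se(lambdas, cv_mean, cv_se):
    """Return (error-minimizing lambda, one-standard-error lambda).

    The one-standard-error value is the largest lambda whose mean CV error
    does not exceed the minimum mean CV error plus its standard error.
    """
    i_min = min(range(len(cv_mean)), key=cv_mean.__getitem__)
    threshold = cv_mean[i_min] + cv_se[i_min]
    eligible = [lam for lam, err in zip(lambdas, cv_mean) if err <= threshold]
    return lambdas[i_min], max(eligible)


# Hypothetical cross-validation output (not results from the paper).
lambdas = [0.100, 0.050, 0.015, 0.005, 0.001]
cv_mean = [0.700, 0.640, 0.615, 0.600, 0.604]
cv_se = [0.020, 0.020, 0.020, 0.020, 0.020]

lam_at_minimum, lam_one_se = lambda_one_se(lambdas, cv_mean, cv_se)
```

Choosing the larger of the eligible values favors the sparser model among those whose cross-validated error is statistically indistinguishable from the best one.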

Table 7 shows few differences among the three approaches. Participation in the last waves is selected according to all three approaches, except for participation in wave 6, which is not selected by Elastic net. The total estimate and the selection of migration background vary across the three models. The most parsimonious models are determined by Elastic net and our spike-and-slab approach, each of which includes a total of six variables. In contrast, stepwise regression includes only one additional variable in the model. The selection of stepwise regression shows overall significant results, whereas the Bayesian spike-and-slab approach identifies migration background as a redundant variable with an inclusion probability of \(\hat{\gamma}=0.227\). The remaining inclusion probabilities indicate a clear decision in favor of inclusion. The model based on Elastic net does not consider participation in wave 6. However, it includes all other variables that are also selected by stepwise regression and the Bayesian approach. An analysis of the inclusion probabilities reveals that, in addition to the intercept, positive participation in the surveys in waves 9, 10, and 11 also strongly influences the probability of participating in wave 12. The inclusion probabilities are 1 or nearly 1 for all four variables. This demonstrates that stepwise regression and the Bayesian approach yield nearly identical outcomes, as the most crucial variables are selected in a comparable sequence. The advantage of the Bayesian approach is that, in addition to the assessment based on the significance level in stepwise regression, the inclusion probability offers a more direct approach to the evaluation.
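The posterior summaries discussed here (posterior median of a coefficient, marginal inclusion probability, and the 50% selection threshold) can be computed from sampler output as in the following minimal sketch. The draws are simulated stand-ins rather than output of the paper's Gibbs sampler, and the function name `summarize_draws` as well as all numerical settings other than the chain length and burn-in are assumptions made for illustration.

```python
import random
import statistics


def summarize_draws(beta_draws, gamma_draws, burn_in, threshold=0.5):
    """Summarize one coefficient from MCMC output.

    Returns the posterior median of beta, the marginal posterior inclusion
    probability (mean of the 0/1 indicator draws), and whether that
    probability exceeds the threshold.
    """
    kept_beta = beta_draws[burn_in:]
    kept_gamma = gamma_draws[burn_in:]
    beta_hat = statistics.median(kept_beta)
    gamma_hat = sum(kept_gamma) / len(kept_gamma)
    return beta_hat, gamma_hat, gamma_hat > threshold


# Simulated stand-in for sampler output (hypothetical values).
rng = random.Random(1)
n_iter, burn_in = 20_000, 5_000
gamma_draws = [1 if rng.random() < 0.9 else 0 for _ in range(n_iter)]
beta_draws = [
    rng.gauss(0.8, 0.1) if g == 1 else rng.gauss(0.0, 0.015)
    for g in gamma_draws
]

beta_hat, gamma_hat, selected = summarize_draws(beta_draws, gamma_draws, burn_in)
```

The inclusion probability is simply the share of retained iterations in which the indicator equals one, which is what makes it directly interpretable without reference to a significance level.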

It is important to recognize that the Bayesian approach is susceptible to the influence of prior beliefs, which requires further investigation. The impact of the variance priors and of the starting values on the values of \(\beta\) and \(\gamma\) is negligible. However, when holding \(\tau_{2}=1\), gradually adjusting the hyperparameter \(\tau_{1}\) from 0.050 to 0.200 in steps of 0.025 reveals noticeable changes. The results can be found in Table 8. The Bayesian spike-and-slab algorithm is sensitive to the specific choice of the spike, i.e., \(\tau_{1}\); in detail, increasing \(\tau_{1}\) step by step keeps the variables participation in the last wave and migration background in the model with constantly high inclusion probabilities. Furthermore, the estimates of the latter two variables alternate, but the estimate for participation in the previous wave is extremely constant. The other selected variables vanish gradually. It is noteworthy that a similar outcome is achieved when the shrinkage parameter of Elastic net, defined as \(\lambda_{\text{min}}=0.015\), is employed in conjunction with the Bayesian spike-and-slab approach.
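One way to see why the width of the spike matters is the following sketch, which holds \(\tau_{2}=1\) and varies \(\tau_{1}\) over the grid described above. It rests on assumptions that are not confirmed by this section: a two-component normal mixture prior, \(\tau_{1}\) and \(\tau_{2}\) treated as standard deviations, a hypothetical prior inclusion weight of 0.5, and a hypothetical coefficient value of 0.25. It evaluates the inclusion probability conditional on a fixed coefficient value, not the full posterior obtained by MCMC.

```python
import math


def normal_pdf(x, sd):
    """Density of a zero-mean normal distribution with standard deviation sd."""
    return math.exp(-0.5 * (x / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def conditional_inclusion_prob(beta, tau1, tau2, prior_weight):
    """P(gamma = 1 | beta) under an assumed normal mixture prior.

    Spike: N(0, tau1^2) with weight 1 - prior_weight.
    Slab:  N(0, tau2^2) with weight prior_weight.
    """
    slab = prior_weight * normal_pdf(beta, tau2)
    spike = (1.0 - prior_weight) * normal_pdf(beta, tau1)
    return slab / (slab + spike)


# Grid for the spike width: 0.050, 0.075, ..., 0.200.
tau1_grid = [0.050 + 0.025 * k for k in range(7)]

# Hypothetical moderate coefficient; slab width held at 1.
probs = [conditional_inclusion_prob(0.25, t1, 1.0, 0.5) for t1 in tau1_grid]
```

Under these assumptions, a wider spike can absorb a moderate coefficient, so its conditional inclusion probability falls as \(\tau_{1}\) grows, whereas a large coefficient remains far in the tail of the spike and stays included.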

As previously discussed, these methods will set regression coefficients to zero, if necessary. The three approaches compared provide results that are essentially similar, although differences in detail are apparent. The results offer an intriguing perspective on the Bayesian approach to handling missing values, as well as on variable selection and its performance relative to established methods for variable selection. Further investigations regarding the weights calculated with the models were not carried out.

7 Conclusion

This paper provides an assessment of different strategies, i.e., applying statistical as well as machine learning algorithms, for variable selection in the context of binary regression models with missing values in the covariates. We show how our algorithmic strategies can be combined, how they can accommodate inference over the prior inclusion probability, and which prior settings affect the posterior estimates. To handle missing values within the Bayesian estimation paradigm, the device of data augmentation can be used. The discussion of the various strategies highlights similarities and differences between shrinkage estimators and Bayesian estimation approaches. The choice of hyperparameters is a sensitive issue in all methods. The tuning parameter for shrinkage estimators is typically estimated by cross-validation, whereas the hyperparameters in Bayesian estimation are fixed a priori.

From a methodological view, the conceptual strengths of the Bayesian spike-and-slab model and the ridge regression are revealed in the sense that they do not exhaust predictive information in trying to determine which variables have exactly zero effect. Any attempt to select variables after the fact, as is done in Lasso or Elastic net, does not lead to a loss of information or to worse predictions. Therefore, in the Bayesian setting it is important to understand that the set of selected variables that have no predictive effect has a probability of (approximately) zero, whereas all variables with an inclusion probability above a certain threshold can be considered truly predictive. The evidence provided in the empirical illustration suggests that for the purposes of weighting in the \(N> P\) setting, all strategies work well, but with small advantages of the Bayesian approach, especially when missing values occur in the covariate data. As discussed in Du et al. (2022), our Bayesian approach, which simultaneously imputes the missing values and jointly selects and estimates the model parameters, is time-consuming and computationally intensive, and not only for a large amount of missingness. Therefore, we show that dealing with missing values in the context of statistics and machine learning requires a convincing strategy for imputing the missing values. Ignoring the missing data pattern, or averaging imputed estimation results without taking pooling rules into account, leads to a loss of quality, which can be seen, e.g., in biased estimation results or lower variances of the estimators. Case-deletion or complete-case strategies, in which individuals are excluded from the analysis if they are missing any of the variables or items, are frequently used, although the quality of the data analysis suffers as a result. As shown, non-Bayesian variable selection approaches cannot be easily applied within the framework of multiple imputation.
The difficulty lies in how to combine the selection results across the multiply imputed data sets, because non-Bayesian variable selection approaches would commonly identify different redundant coefficients for the various imputed data sets and thus lead to different numbers of coefficients to compare. In conclusion, we present a strategy that clearly combines estimation, shrinkage, and handling of missing values. The Bayesian holistic method for combining variable selection and imputation of missing values in covariates offers several advantages over traditional methods. By treating missing values as parameters and assigning priors to them, this approach provides a more accurate and reliable estimation of regression coefficients. While it may appear to users that the Elastic net and stepwise regression approaches are assumption-free, there are nevertheless assumptions involved regarding the setting of the control and shrinkage parameters, which are set via the priors in the Bayesian approach. Likewise, the pooling step based on chained equations is non-trivial, while in the Bayesian approach this can be implemented as an additional step in the iterative Gibbs sampler.
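The difference between simple averaging and proper pooling can be illustrated with Rubin's combining rules for a single coefficient. The sketch below assumes that these are the pooling rules meant here and that the coefficient is estimated in every imputed data set. The function name `pool_rubin` and all numbers are hypothetical.

```python
import statistics


def pool_rubin(estimates, variances):
    """Pool one coefficient over m imputed data sets with Rubin's rules.

    Returns the pooled estimate and the total variance
    T = W + (1 + 1/m) * B, where W is the mean within-imputation variance
    and B the between-imputation variance of the estimates.
    """
    m = len(estimates)
    pooled = statistics.fmean(estimates)
    within = statistics.fmean(variances)
    between = statistics.variance(estimates)  # sample variance, divisor m - 1
    total = within + (1.0 + 1.0 / m) * between
    return pooled, total


# Hypothetical estimates and squared standard errors from m = 5 imputations.
estimates = [0.42, 0.38, 0.45, 0.40, 0.35]
variances = [0.010, 0.012, 0.011, 0.009, 0.013]

pooled, total_var = pool_rubin(estimates, variances)

# Averaging the variances alone ignores the between-imputation component.
naive_var = statistics.fmean(variances)
```

The total variance exceeds the naive average whenever the estimates differ across imputations, which is the understatement of uncertainty referred to above. The rule also presupposes that the same coefficient is present in every imputed fit, which is exactly what data-set-specific selection does not guarantee.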

There are several approaches in the literature for imputing missing values and selecting variables. Note that Heymans et al. (2007) suggest a bootstrapped variable selection under multiple imputation to overcome the pitfalls of applying the combining rules to stepwise regression, and Panken and Heymans (2022) provide similar frameworks for the logistic setup based on a majority-based approach that divides the imputed data sets into test and training data. Chen and Wang (2013) extend the Lasso to multiple imputation by grouping the imputed data, Liu et al. (2016) combine multiple imputation and random Lasso, and Sabbe et al. (2013) combine the Lasso with the Expectation-Maximization algorithm. The presented Bayesian holistic method highlights the potential of combining imputation with advanced techniques to improve the accuracy and reliability of statistical results and offers promising avenues for future research.

Notes

Model selection is further often related to prediction and estimation performance, which in the sense of model fitness is used as a model selection criterion. In the context of variable selection, prediction and estimation performance relate to the ability to identify the relevant set of covariates and the implied regression relation correctly, with higher prediction and estimation performance indicating higher quality of the applied approach. A side aspect of quality relates to the conceptual stringency and interpretability of the considered approach.

Once a statistical model has been agreed upon, model selection problems can also be classified in terms of the number of variables (P) and number of observations (N). Thereby, the case with \(N\gg P\) typically arises for survey data, whereas the case with \(P\gg N\) can be found for medical data.

With a more complex model structure, the burden of the involved numerical optimization may also rise. In combination with efforts to handle missing values via multiple imputation, see Rubin (1984), the computational costs increase considerably.

A direct correspondence is given when the prior is directly comparable in structure to the penalization term and the function assessing model fitness in the penalized context reflects the properties of the negative (logarithmic) likelihood function.

For completeness, note that backward stepwise selection starts with the most general still manageable model specification involving all \(P\) variables and selects from \(P\) candidate models, where these \(P\) models each leave one variable out. If the predefined model selection criterion detects a better fit among the candidate models, the candidate model with the largest increase in model fit is chosen, and the process is repeated until no further increase in model fit is detected. Stepwise selection, whether forwards or backwards, adds or removes just one variable per step, namely the one that changes the criterion most.
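The backward procedure described in this note can be sketched generically as follows. The sketch is written against an arbitrary criterion function for which lower values indicate better fit (as with the BIC). The toy criterion used to exercise it is hypothetical and merely stands in for refitting a regression model on each candidate subset.

```python
def backward_selection(variables, criterion):
    """Backward stepwise selection for a lower-is-better criterion.

    Starts from the full variable set, evaluates every model that leaves
    one variable out, moves to the best candidate if it improves the
    criterion, and stops as soon as no candidate improves it.
    """
    current = list(variables)
    best = criterion(current)
    while current:
        candidates = [[v for v in current if v != drop] for drop in current]
        scores = [criterion(c) for c in candidates]
        i_best = min(range(len(scores)), key=scores.__getitem__)
        if scores[i_best] >= best:
            break  # no leave-one-out model improves the criterion
        current, best = candidates[i_best], scores[i_best]
    return current, best


# Hypothetical criterion: a large misfit penalty for every omitted relevant
# variable plus a complexity penalty of 1 per included variable.
relevant = {"X1", "X2"}


def toy_criterion(subset):
    missing = len(relevant - set(subset))
    return 10.0 * missing + 1.0 * len(subset)


selected, score = backward_selection(["X1", "X2", "X3", "X4"], toy_criterion)
```

Each pass evaluates as many candidate models as there are variables currently in the model, so the search visits far fewer models than an exhaustive comparison of all subsets.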

Note that a similar strategy is also inherent to other approaches like classification and regression trees, see Breiman et al. (1984), where splitting points are defined in terms of single variables only.

When making predictions or inferences, it is essential to recognize that both are based on a specific model and may be optimal only within the context of the considered models.