Abstract

Logistic regression is a standard model for binary outcome data, and handling missing data in this model is an important practical problem. Based on the idea of Wang D, Chen SX (2009) Empirical likelihood for estimating equations with missing values. Ann Stat, 37:490–517, we propose two types of multiple imputation (MI) estimation methods for the parameters of logistic regression with covariates missing at random (MAR) separately or simultaneously. Each method uses three empirical conditional distribution functions to generate random values that impute the missing data, and then estimates the parameters by using the estimating equations of Fay RE (1996) Alternative paradigms for the analysis of imputed survey data. J Am Stat Assoc, 91:490–498. Both methods are derived under the assumption that the covariates are MAR separately or simultaneously, and they apply exclusively to categorical/discrete data. Simulation studies show that the two proposed methods are computationally efficient. They have similar efficiency and outperform the complete-case, semiparametric inverse probability weighting, validation likelihood, and random forest MI by chained equations (MICE) methods. Although the two proposed methods are comparable with the joint conditional likelihood (JCL) method in efficiency, their calculations are more straightforward and their computing times are shorter than those of the JCL and MICE methods. Two real data examples illustrate the applicability of the proposed methods.


1 Introduction

Missing data are a widespread issue in practical data analysis and frequently appear in many areas of science for various reasons, e.g., survey non-response, data collection conditions, and expensive or long-term experiments. Rubin (1976) formalized missingness through the concept of a missingness mechanism, in which the missingness indicators are treated as random variables described by a distribution. There are three main types of missingness mechanism: missing completely at random (MCAR), missing at random (MAR), and missing not at random (MNAR). MCAR means that the occurrence of missing values is completely independent of all variables, whether or not they have missing observations. MAR means that the missingness is related only to the variables with no missing observations and is unrelated to the variables with missing observations. MNAR, which is neither MAR nor MCAR, implies that the missingness is related to both observed and unobserved values. Missing data can lead to biased parameter estimates, loss of information, decreased statistical power, increased standard errors, and weakened generalizability of findings (Dong and Peng 2013). Various methods for dealing with missing values in regression models have been proposed; see, e.g., Rubin (1976), Little (1992), Zhao and Lipsitz (1992), Wang et al. (2002), Lee et al. (2012), and Lukusa et al. (2016) for more details.

Logistic regression is often applied in studies that investigate the relationship between a binary response variable and covariates (Hosmer et al. 2013). In practice, logistic regression with one or more covariates MAR arises frequently and poses specific challenges. Several studies have addressed this issue. For instance, Lipsitz et al. (1998) derived a modified conditional logistic regression with covariates MAR. Wang et al. (1997) provided the weighted semiparametric estimation method to investigate the properties of regression parameter estimators when the selection probabilities are estimated by kernel smoothers. Wang et al. (2002) proposed the joint conditional likelihood (JCL) method to estimate the parameters of logistic regression with covariates MAR by combining complete-case (CC) (or validation) data, which include the cases without missing observations, and non-complete (or non-validation) data, which include the cases with missing observations. When both the outcome and covariates in logistic regression are MAR, Lee et al. (2012) presented two semiparametric estimation methods, the validation likelihood (VL) and JCL estimation methods, to estimate the logistic regression parameters. Similarly, Hsieh et al. (2013) applied these approaches to estimate the parameters of logistic regression with the outcome and covariates MAR separately or simultaneously. Jiang et al. (2020) developed a stochastic approximation version of the EM (SAEM) algorithm, which is based on Metropolis-Hastings sampling, to perform statistical inference for the parameters of logistic regression with missing covariates, and compared their estimators with those of the random forest multiple imputation by chained equations (MICE) method from the mice package in R (Buuren and Groothuis-Oudshoorn 2011). Tran et al. (2021) recently estimated the parameters of logistic regression with categorical/discrete covariates MAR separately or simultaneously via the JCL estimation method that uses the information from a CC and three non-complete data sets to improve efficiency in estimation.

Based on the results of Tran et al. (2021), although the JCL estimation method outperforms the CC, semiparametric inverse probability weighting (SIPW), and VL estimation methods, its calculations are more complex and, hence, it requires longer computing time. In addition, the estimators of the SAEM approach outperform those of the MICE method, but the SAEM approach takes longer to compute than the MICE method. Moreover, the results of Jiang et al. (2020) show that the MICE estimators underestimate the parameters even when the variables are MCAR. Therefore, we are motivated to develop other estimation methods for logistic regression with covariates MAR separately or simultaneously that are not only comparable with the JCL and MICE estimation methods in terms of efficiency but also simpler and faster in calculation.

In this work, based on the ideas of Fay (1996), Wang and Chen (2009), and Lee et al. (2016, 2020), we develop two different types of MI methods to estimate the parameters of logistic regression with two covariate vectors MAR separately or simultaneously under the assumption that all covariates and surrogates are categorical/discrete. We also compare the two proposed MI methods with the JCL method of Tran et al. (2021) and the MICE method in terms of estimation efficiency and computing time. Our proposed MI methods are two-step procedures based on the suggestion of Fay (1996), which makes the calculation simpler and faster than the three-step procedure of Rubin (1987). In the first step, each type of MI method uses three empirical conditional distribution functions (CDFs) (Wang and Chen 2009) to generate random values to impute missing data: the first type MI (MI1) method uses only the CC data, whereas the second type MI (MI2) method uses both the CC and non-complete data. In the second step, the estimating equations are solved to obtain estimates of the logistic regression parameters (Fay 1996). This is more convenient in practice and shortens computing time because the estimating equations are solved only once, rather than solving M sets of estimating equations, where M is the number of imputations, and pooling the M results as in Rubin (1987). The formulas of Lee et al. (2016, 2020) are applied to estimate the variances of the two proposed MI estimators to improve efficiency in estimation.

Section 2 presents the assumptions and notations used throughout this work. Section 3 reviews the SIPW, VL, JCL, and MICE estimation methods. Two different types of MI estimation methods are proposed in Sect. 4. In Sect. 5, the finite-sample performances of the proposed methods are investigated by conducting extensive simulations under various settings. Two real data sets are used to demonstrate the practical use of the proposed methods. Section 6 presents some discussions and conclusions.

2 Assumptions and notations

Let Y be a binary outcome variable denoting whether an event of interest occurs, where \(Y=1\) if the event occurs and \(Y=0\) otherwise. Suppose that \({{{\mathbf {\mathtt{{X}}}}}}_1=(X_1,X_2,\dots ,X_{r_1})^T\) is a vector of \(r_1\) categorical/discrete covariates, and \({{{\mathbf {\mathtt{{X}}}}}}_2=(X_{r_1+1},X_{r_1+2},\dots ,X_p)^T\) is a vector of \(r_2\) categorical/discrete covariates, where \(p=r_1+r_2\). It is assumed that \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) may be missing separately or simultaneously. In this work, we do not consider the case where only some of the covariates within \({{{\mathbf {\mathtt{{X}}}}}}_1\) or \({{{\mathbf {\mathtt{{X}}}}}}_2\) have missing observations. Thus, when it is said that \({{{\mathbf {\mathtt{{X}}}}}}_s\), \(s=1,2\), is missing, it means that all covariates in \({{{\mathbf {\mathtt{{X}}}}}}_s\) have missing observations simultaneously. Assume that \(\varvec{Z}=(Z_1,Z_2,\dots ,Z_q)^T\) is a vector of q categorical/discrete covariates that are always observed. Let \(\varvec{X}=({{{\mathbf {\mathtt{{X}}}}}}_1^T,{{{\mathbf {\mathtt{{X}}}}}}_2^T)^T\), \({\mathcal {X}}=(1,\varvec{X}^T,\varvec{Z}^T)^T\), and \(\{(Y_i,{\mathcal {X}}_i): i=1,2,\dots ,n\}\) be a random sample. Assume that \(\varvec{X}\) is MAR. The logistic regression model is considered as follows:

$$\begin{aligned} P(Y_i=1|\varvec{X}_i,\varvec{Z}_i)=H(\varvec{\beta }^T{\mathcal {X}}_i)=H(\beta _0+\varvec{\beta }_1^T{{{\mathbf {\mathtt{{X}}}}}}_{1i}+\varvec{\beta }_2^T{{{\mathbf {\mathtt{{X}}}}}}_{2i}+\varvec{\beta }_3^T\varvec{Z}_i), \end{aligned}$$(1)

where \(H(u)=\{1+\exp (-u)\}^{-1}\) and \(\varvec{\beta }=(\beta _0,\varvec{\beta }_1^T,\varvec{\beta }_2^T,\varvec{\beta }_3^T)^T\) is a vector of parameters associated with \({\mathcal {X}}_i\). The main goal is to estimate \(\varvec{\beta }\) when some of the \(\varvec{X}_i\)s are MAR. Let \(\delta _{ij}\), \(j=1,2,3,4\), denote the missingness statuses of \(\varvec{X}_i=({{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T)^T\), in which \(\delta _{i1}=1\) if both \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are observed, and 0 otherwise; \(\delta _{i2}=1\) if \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) is missing and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) is observed, and 0 otherwise; \(\delta _{i3}=1\) if \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) is observed and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) is missing, and 0 otherwise; \(\delta _{i4}=1\) if both \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are missing, and 0 otherwise. Some studies use a surrogate variable, i.e., a variable that is easy to measure and stands in for a variable that cannot be measured or is difficult to measure. A surrogate can supply additional information about an MAR variable in a model and, hence, enhance the performance of estimation and prediction. See, e.g., Wang et al. (1997, 2002), Hsieh et al. (2010, 2013), and Lee et al. (2011, 2012, 2020) for more details. We also consider the possibility of categorical/discrete surrogate vectors \({{{\mathbf {\mathtt{{W}}}}}}_1\) and \({{{\mathbf {\mathtt{{W}}}}}}_2\) for \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\), respectively, such that \({{{\mathbf {\mathtt{{W}}}}}}_1\) and \({{{\mathbf {\mathtt{{W}}}}}}_2\) are dependent on \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\), respectively, and independent of Y given \(\varvec{X}\) and \(\varvec{Z}\). That is, \({{{\mathbf {\mathtt{{W}}}}}}_1\) is correlated with \({{{\mathbf {\mathtt{{X}}}}}}_1\), and \({{{\mathbf {\mathtt{{W}}}}}}_2\) is correlated with \({{{\mathbf {\mathtt{{X}}}}}}_2\). Hence, we can have the logistic regression model \(P(Y_i=1|{{{\mathbf {\mathtt{{X}}}}}}_{1i},{{{\mathbf {\mathtt{{X}}}}}}_{2i},\varvec{Z}_i,{{{\mathbf {\mathtt{{W}}}}}}_{1i},{{{\mathbf {\mathtt{{W}}}}}}_{2i})=P(Y_i=1|{{{\mathbf {\mathtt{{X}}}}}}_{1i},{{{\mathbf {\mathtt{{X}}}}}}_{2i},\varvec{Z}_i) =H(\beta _0+\varvec{\beta }_1^T{{{\mathbf {\mathtt{{X}}}}}}_{1i}+\varvec{\beta }_2^T{{{\mathbf {\mathtt{{X}}}}}}_{2i}+\varvec{\beta }_3^T\varvec{Z}_i)\) as given in (1). Let \(\varvec{W}=({{{\mathbf {\mathtt{{W}}}}}}_1^T,{{{\mathbf {\mathtt{{W}}}}}}_2^T)^T\) and \(\varvec{V}_i=(\varvec{Z}_i^T,\varvec{W}_i^T)^T\), \(i=1,\dots ,n\). The CC data set (\(\delta _{i1}=1\)) consists of \((Y_i,\varvec{X}_i,\varvec{V}_i)\), and the three non-complete data sets include \((Y_i,{{{\mathbf {\mathtt{{X}}}}}}_{2i},\varvec{V}_i)\), \((Y_i,{{{\mathbf {\mathtt{{X}}}}}}_{1i},\varvec{V}_i)\), and \((Y_i,\varvec{V}_i)\) when \(\delta _{i2}\), \(\delta _{i3}\), and \(\delta _{i4}\), respectively, are equal to 1. Under the assumption of the MAR mechanism (Rubin 1976) for \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\), the selection probability model is

$$\begin{aligned} P(\delta _{ij}=1|Y_i,\varvec{X}_i,\varvec{V}_i)=P(\delta _{ij}=1|Y_i,\varvec{V}_i)=\pi _j(Y_i,\varvec{V}_i),\quad j=1,2,3,4, \end{aligned}$$(2)

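with \(\sum _{j=1}^{4}\pi _j(Y_i,\varvec{V}_i)=1\). The \(\pi _j(Y_i,\varvec{V}_i)\)s are unknown nuisance parameters that need to be estimated. In this study, under the assumption that the \(\varvec{V}_i\)s are categorical/discrete vectors, the nonparametric estimators of \(\pi _j(Y_i,\varvec{V}_i)\) are given as follows:

$$\begin{aligned} {\widehat{\pi }}_j(Y_i,\varvec{V}_i)=\dfrac{\sum _{k=1}^{n}\delta _{kj}I(Y_k=Y_i,\varvec{V}_k=\varvec{V}_i)}{\sum _{k=1}^{n}I(Y_k=Y_i,\varvec{V}_k=\varvec{V}_i)},\quad j=1,2,3,4, \end{aligned}$$(3)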

where \(I(\cdot )\) is an indicator function. Note that in this work the requirement of categorical/discrete covariates is for mathematical derivation purposes. The \({\widehat{\pi }}_1(Y_i,\varvec{V}_i)\)s are used as weights for the SIPW and VL estimation methods, and the \({\widehat{\pi }}_j(Y_i,\varvec{V}_i)\)s, \(j=1,2,3,4\), are used as weights to modify the conditional probabilities in the JCL estimation method in Sect. 3. If covariates are continuous, one can instead use kernel estimators of the selection probabilities, following the arguments of Wang and Wang (2001).
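
To illustrate how such cell-based estimators can be computed for categorical data, the following minimal Python sketch tabulates, within each observed \((Y,\varvec{V})\) cell, the proportion of subjects falling into each of the four missingness patterns. It assumes that the estimators are these within-cell proportions; the function name `estimate_selection_probs` and the coding of the pattern as an integer j in 1 to 4 (meaning \(\delta _{ij}=1\)) are hypothetical choices made only for this example.

```python
from collections import defaultdict

def estimate_selection_probs(y, v, pattern):
    """Within-cell proportions of each missingness pattern.

    y       : list of binary outcomes Y_i
    v       : list of hashable tuples V_i (always observed)
    pattern : list of ints j in {1, 2, 3, 4}, meaning delta_ij = 1
    Returns one 4-tuple (pi_hat_1, ..., pi_hat_4) per subject.
    """
    # counts[(y, v)][j-1] = number of subjects in the cell with pattern j
    counts = defaultdict(lambda: [0, 0, 0, 0])
    for yi, vi, j in zip(y, v, pattern):
        counts[(yi, vi)][j - 1] += 1
    probs = []
    for yi, vi in zip(y, v):
        cell = counts[(yi, vi)]
        total = sum(cell)          # cell size; at least 1 by construction
        probs.append(tuple(c / total for c in cell))
    return probs

# Toy data (illustrative only): two (Y, V) cells
y = [1, 1, 1, 1, 0, 0]
v = [(0,), (0,), (0,), (0,), (1,), (1,)]
pattern = [1, 1, 2, 4, 1, 3]
pi_hat = estimate_selection_probs(y, v, pattern)
print(pi_hat[0])  # (0.5, 0.25, 0.0, 0.25)
```

By construction the four estimated probabilities in each cell sum to one, mirroring the constraint on the \(\pi _j(Y_i,\varvec{V}_i)\)s.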

3 Review of estimation methods

This section briefly reviews four well-known estimation methods, the SIPW, VL, JCL, and MICE methods, for logistic regression with covariates MAR separately or simultaneously. These methods serve as benchmarks against which the proposed approaches are compared in the following sections.

3.1 SIPW estimation method

Horvitz and Thompson (1952) proposed a weighted estimator that uses inverse probability weighting (IPW) to reduce estimation bias; it has become known as the H-T estimator. For the case where the selection probabilities are known, Zhao and Lipsitz (1992) extended the H-T estimator to propose the IPW estimator to improve efficiency in estimation. This approach is, however, limited in practice because the selection probabilities are usually unknown. Therefore, some authors, e.g., Wang et al. (1997) and Wang and Wang (2001), suggested the SIPW approach, which uses the inverses of nonparametric estimators of the unknown selection probabilities as weights. See, e.g., Hsieh et al. (2010) and Lee et al. (2012) for further details. Considering the logistic regression model (1) when \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) are MAR separately or simultaneously, one can obtain the SIPW estimator \(\widehat{\varvec{\beta }}_W\) of \(\varvec{\beta }\) by solving the following estimating equations:

$$\begin{aligned} \varvec{U}_W(\varvec{\beta },\widehat{\varvec{\pi }}_1)=\dfrac{1}{\sqrt{n}}\sum _{i=1}^{n}\dfrac{\delta _{i1}}{{\widehat{\pi }}_{1i}}{\mathcal {X}}_i\left( Y_i-H(\varvec{\beta }^T{\mathcal {X}}_i)\right) =\varvec{0}, \end{aligned}$$(4)

where \(\widehat{\varvec{\pi }}_1=\left( {\widehat{\pi }}_{11},\dots ,{\widehat{\pi }}_{1n}\right)\) for \({\widehat{\pi }}_{1i}={\widehat{\pi }}_1(Y_i,\varvec{V}_i)\), given in (3), being the estimator of \(\pi _{1i}=\pi _1(Y_i,\varvec{V}_i)\) defined in (2).
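
As a rough illustration of the SIPW idea, the sketch below solves an inverse-probability-weighted logistic score equation by Newton-Raphson, using only the complete cases and weights \(1/{\widehat{\pi }}_{1i}\). It is a minimal sketch under stated assumptions, not the authors' implementation: the function `solve_sipw`, the simulated data, and the missingness probabilities are all hypothetical, and a single binary covariate stands in for \(\varvec{X}\).

```python
import numpy as np

def solve_sipw(X, y, cc, pi1, n_iter=25, tol=1e-10):
    """Newton-Raphson for sum_i (delta_i1 / pi1_i) X_i (y_i - H(beta'X_i)) = 0.

    X   : (n, p) design matrix with intercept (rows with cc == False may hold NaN)
    y   : (n,) binary outcomes
    cc  : (n,) boolean complete-case indicator delta_i1
    pi1 : (n,) estimated selection probabilities pi_hat_1i
    """
    Xc, yc, w = X[cc], y[cc], 1.0 / pi1[cc]   # only complete cases contribute
    beta = np.zeros(Xc.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-Xc @ beta))
        score = Xc.T @ (w * (yc - p))
        info = (Xc * (w * p * (1.0 - p))[:, None]).T @ Xc
        step = np.linalg.solve(info, score)
        beta = beta + step
        if np.max(np.abs(step)) < tol:
            break
    return beta

# Simulated example (all settings are illustrative assumptions)
rng = np.random.default_rng(0)
n = 2000
x = rng.integers(0, 2, n).astype(float)
z = rng.integers(0, 2, n)
X = np.column_stack([np.ones(n), x, z])
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-(X @ np.array([-0.5, 1.0, 0.5])))))
# MAR: the probability that x is observed depends only on (y, z)
cc = rng.random(n) < 0.5 + 0.2 * y + 0.2 * z
X[~cc, 1] = np.nan
# Nonparametric pi_hat_1: observed fraction within each (y, z) cell
pi1 = np.empty(n)
for yv in (0, 1):
    for zv in (0, 1):
        cell = (y == yv) & (z == zv)
        pi1[cell] = cc[cell].mean()
beta_hat = solve_sipw(X, y, cc, pi1)
print(beta_hat)
```

The Newton step uses the weighted information matrix, so the routine is the usual iteratively reweighted scheme for logistic regression with fixed case weights.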

3.2 VL estimation method

Breslow and Cain (1988) proposed the conditional maximum likelihood (ML) approach to estimate the parameters of logistic regression for two-stage case-control data. They showed that their estimator of \(\varvec{\beta }\) is not only consistent and asymptotically normal but also useful when covariate information is missing for a large part of the sample. Wang et al. (2002), Lee et al. (2012), and Hsieh et al. (2013) applied this approach to provide the VL estimation method, which uses the CC data, to address missing data in logistic regression. When \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) are MAR separately or simultaneously, Tran et al. (2021) estimated \(\varvec{\beta }\) by using the following estimating equations:

where

and \({\widehat{\pi }}_1(Y_i,\varvec{V}_i)\) is given in (3). One can solve \(\widehat{\varvec{U}}_V(\varvec{\beta })=\varvec{0}\) to obtain the VL estimator \(\widehat{\varvec{\beta }}_V\) of \(\varvec{\beta }\).

3.3 JCL estimation method

Both the SIPW and VL approaches use only the CC data set (\(\delta _{i1}=1\)) and therefore may not maximize efficiency in estimation. To overcome this drawback, Wang et al. (2002) proposed the JCL estimation method that combines the CC and non-complete data; see, e.g., Lee et al. (2012) and Hsieh et al. (2013) for more details. Tran et al. (2021) proposed the JCL method to estimate the parameters of logistic regression with covariates MAR separately or simultaneously.

When \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) are MAR separately or simultaneously, one can obtain the JCL estimator \(\widehat{\varvec{\beta }}_J=({\widehat{\beta }}_0,\widehat{\varvec{\beta }}_{J1}^T,\widehat{\varvec{\beta }}_{J2}^T,\widehat{\varvec{\beta }}_{J3}^T)^T\) of \(\varvec{\beta }\) in the logistic regression model (1) by solving the following estimating equations:

where \({\widehat{H}}_1({{{\mathbf {\mathtt{{X}}}}}}_{1i},{{{\mathbf {\mathtt{{X}}}}}}_{2i},\varvec{V}_i;\,\varvec{\beta })\) is defined in (6), and

for

and

3.4 MICE estimation method

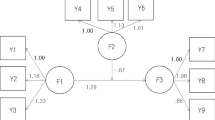

MI (Rubin 1987, 1996) is a simple and powerful method for dealing with missing data and is available in several commonly used statistical software packages. One can use the MI approaches to generate fully imputed (“completed”) data sets by retaining the observed values and replacing the missing data with plausible values from an imputation model. There are various extensions of the MI method of Rubin (1987), which differ mostly in the structural assumptions of the imputation model. See, e.g., Rubin (1987, 1996), Rubin and Schenker (1986), Fay (1996), and Pahel et al. (2011) for details. Their procedures often follow the three basic steps shown in Figure 1A: Step 1) Imputation: impute missing values M times to obtain M imputed (“completed”) data sets; Step 2) Analysis: analyze each of the M imputed (“completed”) data sets by using the chosen statistical methods; Step 3) Pool: combine the M analysis results from Step 2 into one result by using the formula of Rubin (1987). The MI methods differ mainly in how they generate the values used to fill in missing data (Step 1), e.g., mean, regression, hot deck, cold deck, principal component, and chained-equation imputation methods (Little and Rubin 2019).
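
The pooling in Step 3 can be sketched for a single scalar parameter as follows. Rubin's rules average the M point estimates and add the average within-imputation variance to \((1+1/M)\) times the between-imputation variance. The function name `pool_rubin` and the numerical inputs are illustrative assumptions.

```python
from statistics import mean, variance

def pool_rubin(estimates, variances):
    """Pool M scalar estimates and their estimated variances by Rubin's rules."""
    m = len(estimates)
    q_bar = mean(estimates)      # pooled point estimate
    w_bar = mean(variances)      # average within-imputation variance
    b = variance(estimates)      # between-imputation variance (divisor M - 1)
    total = w_bar + (1 + 1 / m) * b
    return q_bar, total

# Three hypothetical imputed-data analyses of the same coefficient
est, tot = pool_rubin([1.0, 1.2, 0.8], [0.04, 0.05, 0.03])
print(est, tot)
```

For a parameter vector the same rule applies with covariance matrices in place of scalar variances.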

The mice package (Buuren and Groothuis-Oudshoorn 2011) is a widely used package that applies MI by chained equations, also known as fully conditional specification or sequential regression MI, in Step 1 to impute values for missing data. Specifically, assume \(\varvec{u}=(u_1,u_2,\dots ,u_k)\) is a vector of k variables with missing observations. Firstly, all missing values of each \(u_i\) are filled in by simple random sampling with replacement from the observed values of \(u_i\). Secondly, construct a regression model of \(u_1\) on \(u_2,\dots ,u_k\). Simulated draws from the corresponding posterior predictive distribution of \(u_1\) are used to replace missing values of \(u_1\). Then, construct a regression model of \(u_2\) on the \(u_1\) variable with imputed values and \(u_3,\dots ,u_k\). Again, simulated draws from the corresponding posterior predictive distribution of \(u_2\) are used to replace missing values of \(u_2\). Repeat the process for each of \(u_3,\dots ,u_k\). This procedure is called a “cycle”. Repeat this cycle several times (e.g., 10 or 20) to create a first imputed (“completed”) data set. Finally, repeat the procedure M times to obtain M imputed (“completed”) data sets. Steps 2 and 3 of this MI method follow the rule of Rubin (1987).

In summary, this section has introduced, for convenience of reference, the four estimation methods used for the benchmark comparisons in this work. Although the simulation results of Tran et al. (2021) showed that the JCL method outperforms the CC, VL, and SIPW methods, the JCL estimates of the logistic regression parameters are difficult to obtain because of complex calculations and long computing time. In addition, the MICE approach can be applied to both continuous and categorical/discrete data and to any missingness mechanism (White et al. 2011), but its estimators for logistic regression with missing covariates underestimate the parameters, although their standard errors were small (Jiang et al. 2020). Moreover, this approach may take considerable time to compute. Therefore, in Sect. 4, we propose two different types of MI methods that not only provide efficient estimation comparable with the JCL and MICE approaches but also have more straightforward computations that shorten computing time.

4 Proposed MI estimation methods

This section introduces two different types of MI methods based on the ideas of Fay (1996), Wang and Chen (2009), and Lee et al. (2016, 2020). The procedure of these two proposed MI methods has two steps (Fay’s type, Figure 1B): Step 1) Impute values for non-complete data by using the empirical CDFs of missing values given the observed data, as done in Wang and Chen (2009), to obtain M imputed (“completed”) data sets; Step 2) Solve the estimating equations only once to obtain the estimates of the logistic regression parameters. The estimated variances of the MI estimators are then obtained by using the formulas of Lee et al. (2016, 2020). The two MI methods differ mainly in how the missing data are imputed in Step 1. As mentioned in Sect. 1, this procedure is more convenient in practice and saves computing time. The details of these two different types of MI methods are stated in the following sections.

4.1 Type 1 MI (MI1) method

Let \(F_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|{{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \(F_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|{{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(F_{\varvec{X}_i}({\mathbf {x}}|Y_i,\varvec{V}_i)\), where \({\mathbf {x}}=({{{\mathbf {\mathtt{{x}}}}}}_1^T,{{{\mathbf {\mathtt{{x}}}}}}_2^T)^T\), be the CDFs of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(\varvec{X}_i=({{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T)^T\) given \((Y_i,\varvec{V}_i)\), respectively. To build the MI1 method, we consider the following empirical CDFs of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(\varvec{X}_i\) given \((Y_i,\varvec{V}_i)\):

respectively. When \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are MAR separately or simultaneously, their missing values are imputed several times by random values generated from \({\widetilde{F}}_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|{{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \({\widetilde{F}}_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|{{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \({\widetilde{F}}_{\varvec{X}_i}({\mathbf {x}}|Y_i,\varvec{V}_i)\). The MI1 procedure is summarized as follows:

- Step 1. Imputation: Generate the vth imputed (“completed”) data (\(v=1,2,\dots ,M\)) based on the missingness status of \(\varvec{X}_i=({{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T)^T\), \(i=1,2,\dots ,n\).

  - i) If \(\delta _{i1}=1\) (\({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are observed), keep the values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). Set \({\mathcal {X}}_i=(1,{{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T,\varvec{Z}_i^T)^T\) for all v.

  - ii) If \(\delta _{i2}=1\) (\({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) is missing and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) is observed), keep the values of \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) and generate \(\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}_{1iv}\) from \({\widetilde{F}}_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|{{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\) to fill the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\). Define \(\widetilde{{\mathcal {X}}}_{2iv}=(1,\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}^T_{1iv},{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T,\varvec{Z}^T_i)^T\).

  - iii) If \(\delta _{i3}=1\) (\({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) is observed and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) is missing), keep the values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and generate \(\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}_{2iv}\) from \({\widetilde{F}}_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|{{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\) to fill the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). Define \(\widetilde{{\mathcal {X}}}_{3iv}=(1,{{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}^T_{2iv},\varvec{Z}^T_i)^T\).

  - iv) If \(\delta _{i4}=1\) (both \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are missing), generate \(\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}_{1iv}\) and \(\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}_{2iv}\) from \({\widetilde{F}}_{\varvec{X}_i}({\mathbf {x}}|Y_i,\varvec{V}_i)\) to fill the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). Define \(\widetilde{{\mathcal {X}}}_{4iv}=(1,\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}^T_{1iv},\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}^T_{2iv},\varvec{Z}^T_i)^T\).

- Step 2. Analysis: Solve the following estimating equations:

$$\begin{aligned} \varvec{U}_{M1}(\varvec{\beta }) =\dfrac{1}{\sqrt{n}} \sum _{i=1}^{n}\left( \delta _{i1}\varvec{S}_i(\varvec{\beta })+\delta _{i2} \widetilde{\varvec{S}}_{2i}(\varvec{\beta })+\delta _{i3}\widetilde{\varvec{S}}_{3i}(\varvec{\beta })+\delta _{i4}\widetilde{\varvec{S}}_{4i}(\varvec{\beta })\right) =\varvec{0}, \end{aligned}$$(18)

where \(\varvec{S}_i(\varvec{\beta })={\mathcal {X}}_i(Y_i-H(\varvec{\beta }^T{\mathcal {X}}_i))\) and \(\widetilde{\varvec{S}}_{ki}(\varvec{\beta })=M^{-1}\sum _{v=1}^{M}\widetilde{{\mathcal {X}}}_{kiv}(Y_i-H(\varvec{\beta }^T\widetilde{{\mathcal {X}}}_{kiv}))\), \(k=2,3,4\), to obtain the MI1 estimator, \(\widehat{\varvec{\beta }}_{M1}\), of \(\varvec{\beta }\). Next, calculate the estimated variance of \(\widehat{\varvec{\beta }}_{M1}\), \(\widehat{{\,\mathrm{Var}\,}}(\widehat{\varvec{\beta }}_{M1})\), by the formula of Lee et al. (2016), which is also a Rubin-type estimated variance (Rubin 1987), as follows:

$$\begin{aligned} \varvec{G}_{M1}^{-1}(\widehat{\varvec{\beta }}_{M1})\left\{ \dfrac{1}{M}\sum _{v=1}^{M}\sum _{i=1}^{n} (\widetilde{\varvec{U}}_{vi}(\widehat{\varvec{\beta }}_{M1}))^{\otimes 2} +\left( 1+\dfrac{1}{M}\right) \dfrac{\sum _{v=1}^{M}(\widetilde{\varvec{U}}_{v} (\widehat{\varvec{\beta }}_{M1}))^{\otimes 2}}{M-1}\right\} (\varvec{G}_{M1}^{-1}(\widehat{\varvec{\beta }}_{M1}))^T, \end{aligned}$$(19)

where \(\varvec{a}^{\otimes 2}=\varvec{a}\varvec{a}^T\) for a column vector \(\varvec{a}\), and \(\varvec{G}_{M1}(\varvec{\beta })\) is the gradient of \(-M^{-1}\sum _{v=1}^{M}\widetilde{\varvec{U}}_{v}(\varvec{\beta })=-\varvec{U}_{M1}(\varvec{\beta })\), for

$$\begin{aligned} \widetilde{\varvec{U}}_{vi}(\varvec{\beta })&=\dfrac{1}{\sqrt{n}}\left( \delta _{i1}\varvec{S}_i(\varvec{\beta }) +\delta _{i2}\widetilde{\varvec{S}}_{2i}(\varvec{\beta })+\delta _{i3}\widetilde{\varvec{S}}_{3i}(\varvec{\beta })+\delta _{i4}\widetilde{\varvec{S}}_{4i}(\varvec{\beta })\right) , \\ \widetilde{\varvec{U}}_{v}(\varvec{\beta })&=\sum _{i=1}^{n}\widetilde{\varvec{U}}_{vi}(\varvec{\beta }). \end{aligned}$$
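
The sandwich form of the variance estimator (19) can be transcribed numerically as in the following sketch. It assumes, as an interpretation made for this example, that \(\widetilde{\varvec{U}}_{vi}\) is available as the score contribution of subject i in the vth completed data set, evaluated at the estimate, and that the gradient matrix has already been computed. The function name `mi_sandwich_variance` and the array layout are hypothetical.

```python
import numpy as np

def mi_sandwich_variance(U, G):
    """Sandwich variance G^{-1} { within + between } G^{-T}.

    U : (M, n, p) array; U[v, i] is the score contribution of subject i
        in the v-th completed data set (assumed layout)
    G : (p, p) gradient of minus the averaged estimating function
    """
    M = U.shape[0]
    # (1/M) * sum_v sum_i U_vi U_vi^T
    within = np.einsum("vip,viq->pq", U, U) / M
    # U_v = sum_i U_vi, one p-vector per imputation
    U_v = U.sum(axis=1)
    # (1 + 1/M) * sum_v U_v U_v^T / (M - 1)
    between = (1.0 + 1.0 / M) * (U_v.T @ U_v) / (M - 1)
    G_inv = np.linalg.inv(G)
    return G_inv @ (within + between) @ G_inv.T

# Tiny numerical check with M = 2, n = 3, p = 1 (illustrative values)
V = mi_sandwich_variance(np.ones((2, 3, 1)), np.array([[2.0]]))
print(V)  # [[7.5]]
```

In the check, the within term is 3, the between term is 27, and pre- and post-multiplying by 1/2 gives 7.5.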

Notice that in Step 1, the imputed data sets are generated by random sampling from the empirical CDFs based on the CC data. The indicator variables \(\delta _{ij}\), \(j=1,2,3,4\), \(i=1,2,\dots ,n\), identify exactly which part of the covariate vector is observed for each subject, so that this observed part can be used as conditioning information in the empirical CDFs. For example, when \(\delta _{i2}=1\) (\(\delta _{i3}=1\)), the observed \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) (\({{{\mathbf {\mathtt{{X}}}}}}_{1i}\)), \(Y_i\), and \(\varvec{V}_i\) are conditioned on to create a set of values for the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) (\({{{\mathbf {\mathtt{{X}}}}}}_{2i}\)); when \(\delta _{i4}=1\), both \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are missing, and only \(Y_i\) and \(\varvec{V}_i\) are conditioned on to create a set of values for the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). In other words, the procedure conditions on all the available information from the observed part of the covariate vector when imputing missing data, and, hence, estimation may be more efficient. In addition, because this method has only two steps and solves the estimating equations only once, it can shorten computing time.
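
The two-step procedure can be sketched in Python as follows. This is a minimal illustration under simplifying assumptions, not the authors' code: \({{{\mathbf {\mathtt{{X}}}}}}_1\), \({{{\mathbf {\mathtt{{X}}}}}}_2\), and \(\varvec{V}\) are each a single binary variable, drawing from an empirical conditional distribution is implemented by sampling a complete-case donor uniformly within the matching cell, and every cell is assumed to contain at least one donor. The names `impute_mi1` and `solve_mi` and all simulation settings are hypothetical.

```python
import numpy as np

def impute_mi1(x1, x2, y, v, pattern, M, rng):
    """Step 1: M completed copies of x1 and x2, each of shape (M, n)."""
    x1_imp = np.tile(x1, (M, 1))
    x2_imp = np.tile(x2, (M, 1))
    cc = pattern == 1
    for i in np.flatnonzero(~cc):
        match = cc & (y == y[i]) & (v == v[i])
        if pattern[i] == 2:                 # x1 missing, x2 observed
            match = match & (x2 == x2[i])
        elif pattern[i] == 3:               # x1 observed, x2 missing
            match = match & (x1 == x1[i])
        donors = np.flatnonzero(match)      # assumed non-empty
        picks = rng.choice(donors, size=M)  # sampling with replacement
        if pattern[i] in (2, 4):
            x1_imp[:, i] = x1[picks]
        if pattern[i] in (3, 4):
            x2_imp[:, i] = x2[picks]        # same donors: joint draw if pattern 4
    return x1_imp, x2_imp

def solve_mi(x1_imp, x2_imp, z, y, n_iter=25):
    """Step 2: solve the imputation-averaged score equation once (Newton)."""
    M, n = x1_imp.shape
    X = np.stack([np.ones((M, n)), x1_imp, x2_imp, np.tile(z, (M, 1))], axis=2)
    X = X.reshape(M * n, 4)                 # stacked copies, each weighted 1/M
    yy = np.tile(y, M)
    beta = np.zeros(4)
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        score = X.T @ (yy - p) / M
        info = (X * (p * (1.0 - p))[:, None]).T @ X / M
        beta = beta + np.linalg.solve(info, score)
    return beta

# Simulated example (illustrative settings only)
rng = np.random.default_rng(1)
n, M = 1000, 10
z = rng.integers(0, 2, n)
x1 = rng.binomial(1, 0.3 + 0.3 * z).astype(float)
x2 = rng.binomial(1, 0.5, n).astype(float)
y = rng.binomial(1, 1.0 / (1.0 + np.exp(-(-1.0 + x1 + 0.5 * x2 + 0.5 * z))))
# MAR: the pattern probabilities depend only on (y, z)
pattern = np.where(rng.random(n) < 0.6 + 0.1 * y + 0.1 * z, 1, rng.integers(2, 5, n))
x1[np.isin(pattern, (2, 4))] = np.nan
x2[np.isin(pattern, (3, 4))] = np.nan
x1_imp, x2_imp = impute_mi1(x1, x2, y, z, pattern, M, rng)
beta_hat = solve_mi(x1_imp, x2_imp, z, y)
print(beta_hat)
```

Because complete cases appear identically in all M stacked copies, weighting each copy by 1/M reproduces their single score contribution, while subjects with imputed values contribute the average of their M imputed scores.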

4.2 Type 2 MI (MI2) method

The MI2 method is quite similar to the MI1 method except for the formulas of empirical CDFs, which are used to generate values to fill missing data. Consider the empirical CDFs of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(\varvec{X}_i\) given \((Y_i,\varvec{V}_i)\) as follows:

respectively. When \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) are MAR separately or simultaneously, their missing values are imputed several times by random observations generated from \(\widetilde{{\widetilde{F}}}_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|{{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \(\widetilde{{\widetilde{F}}}_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|{{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(\widetilde{{\widetilde{F}}}_{\varvec{X}_i}({\mathbf {x}}|Y_i,\varvec{V}_i)\) according to the missingness statuses of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). The procedure of the MI2 method is stated as follows:

- Step 1. Imputation: Generate the vth imputed (“completed”) data (\(v=1,2,\dots ,M\)) according to the missingness status of \(\varvec{X}_i=({{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T)^T\), \(i=1,2,\dots ,n\).

  - i) If \(\delta _{i1}=1\), keep the values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). Set \({\mathcal {X}}_i=(1,{{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,{{{\mathbf {\mathtt{{X}}}}}}_{2i}^T,\varvec{Z}_i^T)^T\) for all v.

  - ii) If \(\delta _{i2}=1\), keep the values of \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) and generate \(\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}_{1iv}\) from \(\widetilde{{\widetilde{F}}}_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|Y_i,\varvec{V}_i)\) to fill the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\). Define \(\widetilde{\widetilde{{\mathcal {X}}}}_{2iv}=(1,\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}^T_{1iv}, {{{\mathbf {\mathtt{{X}}}}}}_{2i}^T,\varvec{Z}^T_i)^T\).

  - iii) If \(\delta _{i3}=1\), keep the values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and generate \(\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}_{2iv}\) from \(\widetilde{{\widetilde{F}}}_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|Y_i,\varvec{V}_i)\) to fill the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). Define \(\widetilde{\widetilde{{\mathcal {X}}}}_{3iv}=(1,{{{\mathbf {\mathtt{{X}}}}}}_{1i}^T,\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}^T_{2iv},\varvec{Z}^T_i)^T\).

  - iv) If \(\delta _{i4}=1\), generate \(\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}_{1iv}\) and \(\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}_{2iv}\) from \(\widetilde{{\widetilde{F}}}_{\varvec{X}_i}({\mathbf {x}}|Y_i,\varvec{V}_i)\) to fill the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\). Define \(\widetilde{\widetilde{{\mathcal {X}}}}_{4iv}=(1,\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}^T_{1iv},\widetilde{\widetilde{{{{\mathbf {\mathtt{{X}}}}}}}}^T_{2iv},\varvec{Z}^T_i)^T\).

- Step 2. Analysis: Solve the following estimating equations:

$$\begin{aligned} \varvec{U}_{M2}(\varvec{\beta }) =\dfrac{1}{\sqrt{n}}\sum _{i=1}^{n} \left( \delta _{i1}\varvec{S}_i(\varvec{\beta })+\delta _{i2}\widetilde{\widetilde{\varvec{S}}}_{2i}(\varvec{\beta }) +\delta _{i3}\widetilde{\widetilde{\varvec{S}}}_{3i}(\varvec{\beta }) +\delta _{i4}\widetilde{\widetilde{\varvec{S}}}_{4i}(\varvec{\beta })\right) =\varvec{0}, \end{aligned}\tag{20}$$

where \(\varvec{S}_i(\varvec{\beta })={\mathcal {X}}_i(Y_i-H(\varvec{\beta }^T{\mathcal {X}}_i))\), \(\widetilde{\widetilde{\varvec{S}}}_{ki}(\varvec{\beta })=M^{-1}\sum _{v=1}^{M}\widetilde{\widetilde{{\mathcal {X}}}}_{kiv}(Y_i-H(\varvec{\beta }^T\widetilde{\widetilde{{\mathcal {X}}}}_{kiv}))\), \(k=2,3,4\), to obtain the MI2 estimator, \(\widehat{\varvec{\beta }}_{M2}\), of \(\varvec{\beta }\). Then, calculate the estimated variance of \(\widehat{\varvec{\beta }}_{M2}\), \(\widehat{{\,\mathrm{Var}\,}}(\widehat{\varvec{\beta }}_{M2})\), by the following formula:

$$\begin{aligned} \varvec{G}_{M2}^{-1}(\widehat{\varvec{\beta }}_{M2})\left\{ \dfrac{1}{M}\sum _{v=1}^{M}\sum _{i=1}^{n} (\widetilde{\widetilde{\varvec{U}}}_{vi}(\widehat{\varvec{\beta }}_{M2}))^{\otimes 2} +\left( 1+\dfrac{1}{M}\right) \dfrac{\sum _{v=1}^{M}(\widetilde{\widetilde{\varvec{U}}}_{v}(\widehat{\varvec{\beta }}_{M2}) )^{\otimes 2}}{M-1}\right\} (\varvec{G}_{M2}^{-1}(\widehat{\varvec{\beta }}_{M2}))^T, \end{aligned}\tag{21}$$

where \(\varvec{G}_{M2}(\varvec{\beta })\) is the gradient of \(-M^{-1}\sum _{v=1}^{M}\widetilde{\widetilde{\varvec{U}}}_{v}(\varvec{\beta })=-\varvec{U}_{M2}(\varvec{\beta })\), with

$$\begin{aligned} \widetilde{\widetilde{\varvec{U}}}_{vi}(\varvec{\beta })&=\dfrac{1}{\sqrt{n}}\left( \delta _{i1}\varvec{S}_i(\varvec{\beta })+\delta _{i2} \widetilde{\widetilde{\varvec{S}}}_{2i}(\varvec{\beta })+\delta _{i3}\widetilde{\widetilde{\varvec{S}}}_{3i}(\varvec{\beta })+\delta _{i4}\widetilde{\widetilde{\varvec{S}}}_{4i}(\varvec{\beta })\right) , \\ \widetilde{\widetilde{\varvec{U}}}_{v}(\varvec{\beta })&=\sum _{i=1}^{n}\widetilde{\widetilde{\varvec{U}}}_{vi}(\varvec{\beta }). \end{aligned}$$
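The imputation step (Step 1) above can be sketched in a few lines of Python for the categorical/discrete case. This is only an illustrative sketch, not the authors' implementation. It assumes that each observation is a dictionary with hypothetical keys `y`, `v`, `x1`, and `x2`, that a missing covariate is stored as `None`, that every \((Y_i,\varvec{V}_i)\) cell contains at least one donor, and that the joint empirical CDF is built from the complete cases only. Drawing uniformly from the donor values in a cell is the same as drawing from the empirical CDF of that cell.

```python
import random

def mi2_impute(rows, M, seed=0):
    """Return M completed copies of `rows` (Step 1 of MI2, discrete case).

    Each row is a dict with keys 'y', 'v' (hashable), 'x1', 'x2';
    None marks a missing covariate (an illustrative convention).
    """
    rng = random.Random(seed)
    # Donor pools indexed by the conditioning cell (Y, V).
    pool_x1, pool_x2, pool_joint = {}, {}, {}
    for r in rows:
        cell = (r["y"], r["v"])
        if r["x1"] is not None:            # delta_1 = 1 or delta_3 = 1
            pool_x1.setdefault(cell, []).append(r["x1"])
        if r["x2"] is not None:            # delta_1 = 1 or delta_2 = 1
            pool_x2.setdefault(cell, []).append(r["x2"])
        if r["x1"] is not None and r["x2"] is not None:
            # delta_1 = 1 (assumed donor set for the joint CDF)
            pool_joint.setdefault(cell, []).append((r["x1"], r["x2"]))

    completed = []
    for _ in range(M):
        data_v = []
        for r in rows:
            cell = (r["y"], r["v"])
            x1, x2 = r["x1"], r["x2"]
            if x1 is None and x2 is None:  # case iv: both missing
                x1, x2 = rng.choice(pool_joint[cell])
            elif x1 is None:               # case ii: only X1 missing
                x1 = rng.choice(pool_x1[cell])
            elif x2 is None:               # case iii: only X2 missing
                x2 = rng.choice(pool_x2[cell])
            data_v.append({**r, "x1": x1, "x2": x2})
        completed.append(data_v)
    return completed
```

Step 2 would then average the logistic score contributions of the M completed values for each incomplete observation and solve the resulting equation once, for instance by Newton-Raphson.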

Notice that each of the two proposed MI methods uses three empirical CDFs of Wang and Chen (2009) to generate random values for \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) MAR separately or simultaneously. However, the two MI approaches differ slightly in how the first and second empirical CDFs draw information from the observed data to replace the missing data, while the third empirical CDFs are the same. The MI1 method uses only the complete cases (\(\delta _{i1}=1\)), but the information of observed \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) enters through the indicator function in \({\widetilde{F}}_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|{{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), and the information of observed \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) enters through the indicator function in \({\widetilde{F}}_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|{{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\). The MI2 method, in contrast, combines \(\delta _{i1}=1\) and \(\delta _{i3}=1\) in \(\widetilde{{\widetilde{F}}}_{{{{\mathbf {\mathtt{{X}}}}}}_{1i}}({{{\mathbf {\mathtt{{x}}}}}}_1|Y_i,\varvec{V}_i)\), and \(\delta _{i1}=1\) and \(\delta _{i2}=1\) in \(\widetilde{{\widetilde{F}}}_{{{{\mathbf {\mathtt{{X}}}}}}_{2i}}({{{\mathbf {\mathtt{{x}}}}}}_2|Y_i,\varvec{V}_i)\), but the information of observed \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) or \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) does not enter the indicator functions \(I(Y_k=Y_i,\varvec{V}_k=\varvec{V}_i)\). Moreover, because of the conditions inside the indicator functions in the empirical CDFs, the MI2 method can be applied to both continuous and categorical/discrete \(\varvec{X}\) data, whereas the MI1 method can only be applied to categorical/discrete \(\varvec{X}\) data, which limits its use in practice.
Despite their methodological differences, both techniques aim to make fuller use of the observed information when imputing missing data and to maximize estimation efficiency. The next section investigates the finite-sample performances of the proposed methods compared to the CC, SIPW, VL, JCL, and MICE estimation methods via extensive simulations.

5 Simulation and real data studies

5.1 Simulation studies

Monte Carlo simulations were conducted to examine the finite-sample performances of the following estimators:

- (1) \(\widehat{\varvec{\beta }}_F\): full data ML estimator used as a benchmark for comparisons

- (2) \(\widehat{\varvec{\beta }}_C\): CC estimator

- (3) \(\widehat{\varvec{\beta }}_W\): SIPW estimator that is the solution of \(\varvec{U}_W(\varvec{\beta },\widehat{\varvec{\pi }}_1)=\varvec{0}\) in (4)

- (4) \(\widehat{\varvec{\beta }}_V\): VL estimator that is the solution of \(\widehat{\varvec{U}}_V(\varvec{\beta })=\varvec{0}\) in (5)

- (5) \(\widehat{\varvec{\beta }}_J\): JCL estimator that is the solution of \(\widehat{\varvec{U}}_J(\varvec{\beta })=\varvec{0}\) in (7)

- (6) \(\widehat{\varvec{\beta }}_{M1}\): MI1 estimator that is the solution of \(\varvec{U}_{M1}(\varvec{\beta })=\varvec{0}\) in (18)

- (7) \(\widehat{\varvec{\beta }}_{M2}\): MI2 estimator that is the solution of \(\varvec{U}_{M2}(\varvec{\beta })=\varvec{0}\) in (20)

- (8) \(\widehat{\varvec{\beta }}_{ME}\): MICE estimator from the mice package in R, used as a benchmark for comparisons.

To evaluate the estimation performance of the proposed methods and compare them with the other estimation methods under various situations, we constructed five scenarios, where the two univariate covariates \(X_1\) and \(X_2\) were uncorrelated in Scenarios 1–4 and correlated in Scenario 5. Specifically, Scenario 1 studied the impact of the sample size on the performance of all the estimation methods under the same selection probabilities. Three sets of selection probabilities were considered in Scenario 2 to examine the influence of the missing rates on the performance of the estimation methods. Scenario 3 had the same aim as Scenario 2, except that the value of \(\varvec{\beta }\) was changed to see whether the efficiencies of the estimators were altered under a different logistic regression model. In Scenario 4, three different numbers of imputations were studied to see how they affected the performance of the two proposed MI estimation methods. Finally, Scenario 5 considered six different correlation coefficients between \(X_1\) and \(X_2\) under the same selection probabilities and sample size to investigate the performance of all the methods in these situations.

For each experimental configuration, 1,000 replications were performed. We used \(M=30\) throughout, except in Scenario 4, where \(M=5\), 25, and 45 were considered. We calculated the bias, standard deviation (SD), asymptotic standard error (ASE), and coverage probability (CP) of a 95% confidence interval for each estimator. To evaluate the relative efficiencies (REs) of the estimators, we computed the ratio of the mean square error (MSE) of each of the other estimators, excluding the full data ML estimator, to those of the MI1 and MI2 estimators, respectively, where the MSE of an estimator was defined as the sum of the squared bias and the squared SD, i.e., \(\text {MSE}=\text {bias}^2+\text {SD}^2\).
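As a small illustration of these summary measures, the following Python sketch computes the bias, SD, MSE, and RE from a list of replicate estimates of one parameter. The function names are hypothetical, and the use of the sample SD with divisor \(n-1\) is an assumption, since the text does not state which divisor was used.

```python
import math

def summarize(estimates, truth):
    """Bias, SD and MSE = bias^2 + SD^2 of one estimator over replications."""
    n = len(estimates)
    mean = sum(estimates) / n
    bias = mean - truth
    # Sample SD with divisor n - 1 (an assumption, see the lead-in).
    sd = math.sqrt(sum((e - mean) ** 2 for e in estimates) / (n - 1))
    return {"bias": bias, "sd": sd, "mse": bias ** 2 + sd ** 2}

def relative_efficiency(other, proposed, truth):
    """RE of `other` to `proposed`: MSE(other) / MSE(proposed)."""
    return summarize(other, truth)["mse"] / summarize(proposed, truth)["mse"]
```

With this convention, an RE larger than 1 means that the proposed estimator has the smaller MSE.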

Scenario 1. The goal was to assess how well all the estimation methods performed when the two covariates \(X_1\) and \(X_2\) were independent, using the same observed selection probabilities and three different sample sizes, \(n = 500\), 1,000, and 2,000. The data of \(X_1\) were generated from the discrete distribution on the four values \((-0.3,-0.1,0.4,1)\) with probabilities (0.2, 0.3, 0.3, 0.2), respectively. The data of \(X_2\) were generated from the discrete distribution on the four values \((-1,-0.4,0.2,0.6)\) with probabilities (0.1, 0.3, 0.3, 0.3), respectively. The data of Z were generated from the Bernoulli distribution with success probability 0.4. The surrogate variable \(W_k\) of \(X_k\) was set to 1 if \(X_k>0\) and 0 if \(X_k\le 0\), \(k=1,2\). The data of Y were generated from the Bernoulli distribution with success probability \(P(Y=1|X_1,X_2,Z)=H(\beta _0+\beta _1X_1+\beta _2 X_2+\beta _3Z)\), where \(\varvec{\beta }=(\beta _0,\beta _1,\beta _2,\beta _3)^T=(1,-0.5,1,\log (2))^T\). The following multinomial logistic regression model

was used to generate the data of \(\delta _{ij}\) given \((Y_i,W_{1i},W_{2i},Z_i)\), \(i=1,2,\dots ,n\), \(j=1,2,3\), where \(\varvec{\alpha }=(\alpha _1,\alpha _2,\alpha _3)^T=(2,0.6,0.6)^T\) and \(\varvec{\gamma }=(\gamma _1,\gamma _2,\gamma _3,\gamma _4)^T=(0.7,-0.2,0.1,-1.2)^T\). Under the three different sample sizes, the observed selection probabilities were similar and about 0.6, 0.15, 0.15, and 0.1, respectively. This means that the percentages of complete cases, only \(X_1\) missing, only \(X_2\) missing, and both of them missing were 60%, 15%, 15%, and 10%, respectively.
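The data-generating process of Scenario 1 can be sketched as follows. The displayed form of the multinomial logistic model is not reproduced in this text, so the sketch assumes a baseline-category form with the both-missing pattern as reference, \(\log \{P(\delta _{ij}=1)/P(\delta _{i4}=1)\}=\alpha _j+\gamma _1Y_i+\gamma _2W_{1i}+\gamma _3W_{2i}+\gamma _4Z_i\), \(j=1,2,3\). This form and the ordering of the \(\gamma\) components are assumptions; they are chosen because they match the dimensions of \(\varvec{\alpha }\) and \(\varvec{\gamma }\) and give selection probabilities close to those reported. The function and key names are hypothetical.

```python
import math
import random

def expit(t):
    return 1.0 / (1.0 + math.exp(-t))

def simulate_scenario1(n, seed=0):
    """Generate n observations; pattern 1 = complete, 2 = only X1 missing,
    3 = only X2 missing, 4 = both missing."""
    rng = random.Random(seed)
    beta = (1.0, -0.5, 1.0, math.log(2))
    alpha = (2.0, 0.6, 0.6)
    gamma = (0.7, -0.2, 0.1, -1.2)
    rows = []
    for _ in range(n):
        x1 = rng.choices((-0.3, -0.1, 0.4, 1.0), weights=(0.2, 0.3, 0.3, 0.2))[0]
        x2 = rng.choices((-1.0, -0.4, 0.2, 0.6), weights=(0.1, 0.3, 0.3, 0.3))[0]
        z = int(rng.random() < 0.4)
        w1, w2 = int(x1 > 0), int(x2 > 0)          # surrogates of X1 and X2
        p_y = expit(beta[0] + beta[1] * x1 + beta[2] * x2 + beta[3] * z)
        y = int(rng.random() < p_y)
        # ASSUMED model form: shared gamma, pattern 4 as reference category.
        eta = gamma[0] * y + gamma[1] * w1 + gamma[2] * w2 + gamma[3] * z
        weights = [math.exp(a + eta) for a in alpha] + [1.0]
        pattern = rng.choices((1, 2, 3, 4), weights=weights)[0]
        rows.append({"y": y, "x1": x1, "x2": x2, "z": z,
                     "w1": w1, "w2": w2, "pattern": pattern})
    return rows
```

Tabulating `pattern` over a large simulated sample gives a quick check that the assumed model yields selection probabilities near 0.6, 0.15, 0.15, and 0.1.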

The simulation results of Scenario 1 are given in Table 1. The full data ML method overall outperformed the other approaches, but it is considered only as a benchmark because it has the practical disadvantage of requiring data with no missing values. The biases of the CC (for \(\beta _0,\beta _3\)) and MICE (for \(\beta _1,\beta _2\)) estimators were the largest, so these two methods performed worst in terms of bias. The performances of the SIPW and VL methods were similar, and the MI1, MI2 and JCL methods had similar performances, particularly when \(n=1,000\) and 2,000. The SD and ASE of each estimator were similar, except for the MICE estimator of \(\beta _1\) and \(\beta _2\), and decreased as the sample size increased. The ASEs of the MI1 and MI2 estimators were similar and the smallest compared to the other estimators except the full data ML estimator. The empirical CPs for all the estimation methods were overall close to the nominal probability 95% except for the CC (for \(\beta _0\) when \(n=2,000\); \(\beta _3\) when \(n=1,000,2,000\)) and MICE (for \(\beta _0\) when \(n=2,000\); \(\beta _1\) when \(n=500, 2,000\); \(\beta _2\) when \(n=1,000,2,000\)) methods. In addition, it can be seen from Table 2 that the relative efficiency values were larger than 1 except for the JCL (for \(\beta _0\) when \(n=500,1,000\); \(\beta _1,\beta _2\) when \(n=500,1,000,2,000\)) and MICE (for \(\beta _0\) when \(n=500,1,000\); \(\beta _1\) when \(n=500\) and MICE versus MI1; \(\beta _3\) when \(n=500,1,000,2,000\)) estimators, which shows that the two proposed MI estimators were comparable with the JCL and MICE estimators (for \(\beta _0\) as \(n=500,1,000\); \(\beta _1\) as \(n=500\); \(\beta _3\) as \(n=500,1,000,2,000\)) in terms of efficiency.
The relative efficiency values of the MICE estimator to the two proposed MI estimators tended to increase and were larger than 1 (for \(\beta _1,\beta _2\)) as the sample size increased, i.e., the two different types of MI estimators became more efficient relative to the MICE estimator for \(\beta _1\) and \(\beta _2\) as the sample size increased.

Scenario 2. In this scenario, we examined the impact of the observed selection probabilities, i.e., of changing the missing rates, on the efficiencies of the estimators with \(n=1,000\) fixed. The values of \(\varvec{\beta }\) and \(\varvec{\gamma }\) and the procedure for generating the data of \(X_1\), \(X_2\), Z, Y, \(W_1\), and \(W_2\) were the same as in Scenario 1. For the multinomial logit model in (22) to generate the data of \(\delta _{ij}\), we set \(\varvec{\gamma }=(0.7,-0.2,0.1,-1.2)^T\) and \(\varvec{\alpha }=(0.7,0.5,0.5)^T\), \((1.5,0.6,0.6)^T\), and \((2.5,0.6,0.6)^T\) to obtain the three sets of observed selection probabilities, (0.31, 0.26, 0.26, 0.17), (0.47, 0.20, 0.20, 0.13), and (0.72, 0.11, 0.11, 0.06), respectively.

Table 3 shows the simulation results of this scenario. The biases, SDs, and ASEs of the last seven estimators overall tended to decrease as the CC percentage increased from 31% to 72%. Serious bias still occurred for the CC (for \(\beta _0,\beta _3\)) and MICE (for \(\beta _1,\beta _2\)) estimators. The performances of the MI1 and MI2 estimation methods were essentially the same, and the ASEs of the two proposed MI estimators were overall the smallest in comparison with the other estimators except the full data ML estimator. The empirical CPs based on all the estimation methods were overall close to the nominal probability 95% except for the CC (for \(\beta _0\) when CC percentage = 0.31, 0.47), SIPW (for \(\beta _3\) when CC percentage = 0.31), MI1 and MI2 (for \(\beta _1,\beta _2\) when CC percentage = 0.31), and MICE (for \(\beta _1\) when CC percentage = 0.31; \(\beta _2\) when CC percentage = 0.31, 0.47, 0.72) methods. The relative efficiency values in Table 4 were still greater than 1 except for the JCL (for \(\beta _0,\beta _1,\beta _2\)) and MICE (for \(\beta _0\) when CC percentage = 0.72; \(\beta _3\)) estimators, and tended to decrease, except for the JCL (for \(\beta _0,\beta _1,\beta _2\)) and MICE (for \(\beta _3\)) estimators, as the CC percentage increased, indicating that the two different types of MI estimators were comparable with the JCL estimator and more efficient than the CC, SIPW, VL, and MICE estimators. When the CC percentage was 0.72, the relative efficiency values were (very) close to 1 for the SIPW, VL, and JCL estimators compared to the two MI estimators.

Scenario 3. In this scenario, we examined whether changing the values of the logistic regression parameters affects the performances of the estimation methods. Therefore, we kept all the settings in Scenario 2 except that \(\varvec{\beta }=(1,-0.5,1,\log (2))^T\) was changed to \(\varvec{\beta }=(-1,1,0.7,-1)^T\). As shown with the simulation results in Tables 5 and 6, the three sets of observed selection probabilities were (0.29, 0.24, 0.24, 0.23), (0.45, 0.19, 0.19, 0.16), and (0.70, 0.10, 0.10, 0.10), respectively, which were quite similar to those in Scenario 2, although in the first set the first three observed selection probabilities (0.29, 0.24, and 0.24) were slightly lower than those in Scenario 2 (0.31, 0.26, and 0.26) and the last one (0.23) was slightly higher than its counterpart (0.17). The performances of all the estimation methods in this scenario were quite similar to those in Scenario 2. One reason might be the similar missing rates in the two scenarios. Hence, in general, changing the value of \(\varvec{\beta }\) had little effect on the selection probabilities and the efficiencies of the proposed estimation methods.

Scenario 4. The purpose of this scenario was to examine the impact of the number of multiple imputations on the performances of the proposed methods when \(X_1\) and \(X_2\) were independent with a fixed sample size. All the settings were the same as in Scenario 1 except that \(M=5\), 25, and 45 were considered, with \(n=1,000\) fixed. The observed selection probabilities were 0.60, 0.15, 0.15, and 0.10.

The simulation results for the MI1, MI2 and MICE methods in Table 7 were essentially the same as those in Table 1 of Scenario 1 when \(n=1,000\). Table 8 shows that the relative efficiency values were also overall the same as those in Table 2 of Scenario 1 for \(n=1,000\). Therefore, this simulation study suggests that the performances of the two proposed MI methods were essentially unaffected by the number of imputations over the range considered (\(M=5\) to 45).

Table 9 provides a summary of the computing time for each estimation method. The JCL and MICE methods had the longest and second longest computing times, respectively. The JCL method took an average of 21.88 seconds to perform one simulation, which is approximately 73, 35.3, and 23 times the time taken by the two proposed MI methods when \(M=5,25\), and 45, respectively. On average, the MICE method took 1.15, 5.73, and 10.30 seconds to perform one simulation when \(M=5,25\), and 45, which is approximately 3.8, 9.2, and 10.8 times the time taken by the two proposed MI methods, respectively. However, the performances of the JCL and two proposed MI methods were essentially the same. Therefore, based on the simulation results, the MI1 and MI2 methods overall outperformed the other methods, except the full data ML and JCL methods, when estimating the parameters of logistic regression with covariates MAR.

Scenario 5. This scenario aimed to examine the performances of all the approaches when \(X_1\) and \(X_2\) were correlated, with the observed selection probabilities and sample size fixed. The settings of M, \(\varvec{\beta }\), and \(\varvec{\gamma }\) in this scenario were the same as those in Scenario 1. We set \(\varvec{\alpha }=(1.15,0.6,0.6)^T\) to compare the efficiencies of all the estimation methods in a situation of higher missing rates, i.e., the observed selection probabilities (0.40, 0.23, 0.23, 0.14), given \(n=1,000\). In addition, to generate the correlated data of \(X_1\) and \(X_2\), the distribution that had the four values \(-0.3,-0.1,0.4\), and 1 with probabilities 0.2, 0.3, 0.3, and 0.2, respectively, was used to first generate the data of \(X_1\). Then, given each value of \(X_1\), the data of \(X_2\), taking the values \((-1,-0.4,0.2,0.6)\), were generated such that the correlations between \(X_1\) and \(X_2\) were \(\rho =-0.21,-0.53,-0.71,0.21,0.51\), and 0.71, respectively. For example, for the case of \(\rho =-0.21\), if \(X_1=-0.3\), the four values of \(X_2\) were generated with probabilities 0.42, 0.1, 0.2, and 0.28, respectively. Similarly, when \(X_1=-0.1,0.4\), and 1, we generated the four values of \(X_2\) with probabilities (0.2, 0.1, 0.4, 0.3), (0.2, 0.4, 0.2, 0.2), and (0.65, 0.05, 0.05, 0.25), respectively. Finally, the surrogate variables \(W_1\) and \(W_2\) of \(X_1\) and \(X_2\), respectively, were set as in Scenario 1.
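As a check on the worked example for \(\rho =-0.21\), the population correlation implied by the marginal distribution of \(X_1\) and the four conditional distributions of \(X_2\) quoted above can be computed directly. The following Python sketch does this; the variable and function names are illustrative only.

```python
import math

X1_VALUES = (-0.3, -0.1, 0.4, 1.0)
X1_PROBS = (0.2, 0.3, 0.3, 0.2)
X2_VALUES = (-1.0, -0.4, 0.2, 0.6)
# Conditional probabilities of the X2 values given each X1 value.
X2_GIVEN_X1 = {
    -0.3: (0.42, 0.10, 0.20, 0.28),
    -0.1: (0.20, 0.10, 0.40, 0.30),
    0.4: (0.20, 0.40, 0.20, 0.20),
    1.0: (0.65, 0.05, 0.05, 0.25),
}

def correlation(x1_values, x1_probs, x2_values, x2_given_x1):
    """Pearson correlation of (X1, X2) under the implied joint distribution."""
    joint = [(a, b, pa * pb)
             for a, pa in zip(x1_values, x1_probs)
             for b, pb in zip(x2_values, x2_given_x1[a])]
    m1 = sum(a * p for a, _, p in joint)
    m2 = sum(b * p for _, b, p in joint)
    cov = sum((a - m1) * (b - m2) * p for a, b, p in joint)
    v1 = sum((a - m1) ** 2 * p for a, _, p in joint)
    v2 = sum((b - m2) ** 2 * p for _, b, p in joint)
    return cov / math.sqrt(v1 * v2)

rho = correlation(X1_VALUES, X1_PROBS, X2_VALUES, X2_GIVEN_X1)
print(round(rho, 2))  # about -0.21
```

The same function could be applied to the conditional distributions used for the other five correlation values, which are not listed in the text.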

Tables 10 and 11 give the simulation results for \(X_1\) and \(X_2\) with negative and positive correlation values, respectively. The biases of the CC and MICE estimators were overall larger than those of the other estimators. The biases overall did not change much as the absolute correlation value increased, except for the MICE estimator (for \(\beta _1\) when \(\rho\) was changed from \(-0.21\) to \(-0.71\); \(\beta _0,\beta _1, \beta _2\) when \(\rho\) was changed from 0.21 to 0.71). The SDs and ASEs of all the estimators, except the estimators of \(\beta _3\), tended to increase with the absolute correlation value. The SD and ASE of the CC estimator were the largest. The SD and ASE of the JCL estimator were comparable with or (slightly) larger than those of the MI1 and MI2 estimators. The empirical CPs based on all the estimation methods were overall close to the nominal probability 95% except for the CC (for \(\beta _0\) when \(\rho =-0.53\); \(\beta _3\) when \(\rho =-0.53,-0.71,0.51, 0.71\)), MI1 (for \(\beta _0\) when \(\rho =0.71\); \(\beta _1,\beta _2\) when \(\rho =-0.71,0.51\)), MI2 (for \(\beta _1\) when \(\rho =-0.71,0.51\)), and MICE (for \(\beta _0\) when \(\rho =-0.21,0.21,0.51,0.71\); \(\beta _1,\beta _2\) for all \(\rho\) values) methods. The relative efficiency values of the CC, SIPW, VL, and MICE estimators to the two different types of MI estimators were greater than 1, except for those of the SIPW to MI2 estimators and the MICE to the two different types of MI estimators (for \(\beta _0\) when \(\rho =-0.53,-0.71\); \(\beta _1\) when \(\rho =-0.21\); \(\beta _3\) when \(\rho =0.21,0.51,0.71\)). The performances of the MI1 and MI2 estimators were comparable with that of the JCL estimator in terms of efficiency (Table 12).

In summary, Scenarios 1–5 show that the performances of the JCL and two proposed MI methods were comparable, but Scenario 4 demonstrates that the MI1 and MI2 methods required less computing time than the JCL and MICE methods.

5.2 Examples

Two real data examples were used to demonstrate the practicality of the two proposed MI methods and CC, SIPW, VL, JCL, and MICE methods. \(M=30\) imputations were used.

5.2.1 Example 1

The first real data example is the data set of the Global Longitudinal Study of Osteoporosis in Women (GLOW500M) (Hosmer et al. 2013). Let Y be a binary outcome variable denoting whether a respondent had any fracture in the first year, where \(Y=1\) if yes; \(Y=0\) if no. Three covariates are considered. \(X_1\) indicates the history of prior fracture (1 if yes; 0 if no) and had missing values. \(X_2\) denotes the self-reported risk of fracture and had missing values, where \(X_2=1\) if less than others of the same age; 2 if same as others of the same age; 3 if greater than others of the same age. Z denotes age at enrollment, with integer values from 55 to 90 and no missing values. The sample size is \(n=500\). The rates of only \(X_1\) missing, only \(X_2\) missing, and both of them missing were 16%, 16%, and 4%, respectively. Thus, the size of the CC data set is 320 (64%). In addition, let W indicate whether the respondent's mother had a hip fracture (1 if yes; 0 otherwise). Because W is not significant in logistic regression with the response Y, and W is correlated with \(X_2\) (Spearman’s rank correlation coefficient is 0.13 with p-value 0.025) under the CC data, W is considered as a surrogate variable of \(X_2\) in this study. Moreover, the self-reported risk of fracture was dichotomized as less than others of the same age (\(X_2= 1\)) versus same as or greater than others of the same age (\(X_2 = 2\) or 3). The dummy variable \(DX_2\) is used for the dichotomized self-reported risk of fracture, which is 1 if \(X_2=1\) and 0 otherwise. Age at enrollment (Z) was categorized into three groups, \(Z\le 60\), \(60<Z\le 70\), and \(Z>70\). Let \(DZ_1\) and \(DZ_2\) be dummy variables for the categorized age at enrollment. \(DZ_1\) is 1 if \(Z\le 60\) and 0 otherwise, and \(DZ_2=1\) if \(60<Z\le 70\) and 0 otherwise. The missingness mechanism of \(X_1\) and \(X_2\) was identified as MAR by Tran et al. (2021).
The following logistic regression model is used to fit the data set:

$$\begin{aligned} P(Y=1|X_1,DX_2,DZ_1,DZ_2)=H(\beta _0+\beta _1X_1+\beta _2DX_2+\beta _3DZ_1+\beta _4DZ_2). \end{aligned}$$

The estimates of \(\beta _k\)s and their corresponding ASEs are given in Table 13. The analysis results indicate that \(\beta _k\), \(k=0,1,2,3,4\), are statistically significantly different from zero based on all the estimation methods except \(\beta _3\) and \(\beta _4\) based on the CC method. Based on the SIPW, VL, JCL, MI1, MI2 and MICE methods, the estimates of \(\beta _k\)s and their ASEs were overall quite similar. According to all the estimation methods, the results of testing \(\beta _1=0\) and \(\beta _2=0\) and their estimates reveal that women with the history of prior fracture were more likely to have fracture(s) in the first year compared to those without the history of prior fracture, and women with self-reported risk of fracture less than others of the same age were less likely to have fracture(s) in the first year compared to those whose self-reported risk of fracture was the same as or greater than others of the same age. The results of testing \(\beta _3=0\) and \(\beta _4=0\) and their estimates based on the SIPW, VL, JCL, MI1, MI2 and MICE estimation methods indicate that women aged 60 years or younger and those older than 60 years and younger than or equal to 70 years were less likely to have fracture(s) in the first year compared to those older than 70 years.

5.2.2 Example 2

The two proposed MI methods are applied to analyze the second real data example, the set of cable television (TV) data collected from the customer survey study of 1,586 residents in three cities in Taiwan (Lee et al. 2011). The satisfaction level of cable TV service is considered as the binary outcome variable, denoted by Y (\(1=\) satisfied; \(0=\) neutral and dissatisfied). The two covariates with missing values are the response, denoted by \(X_1\) (\(1=\) Yes; \(0=\) No), to the question “Have you been given a discount on cable TV?”, and the response, denoted by \(X_2\) (\(0=\) no children under 12 years old; \(1=\) at least one child under 12 years old), to the question “How many children under the age of 12 live with you?”. Another covariate, without missing values, is the response, denoted by Z (\(1=\text {Yes}\); \(0=\text {No}\)), to the question “Are you paying for the fourth channel?”. Because the rates of only \(X_1\) missing, only \(X_2\) missing, and both of them missing were 17.9%, 1.3%, and 0.4%, respectively, the CC data set consists of 1,274 respondents (80.3%). A surrogate variable of \(X_1\) and \(X_2\) is the response, denoted as W (\(1=\) Yes; \(0=\) No), to the question “Would you pay extra money for additional channels?”. We fit the following logistic regression model to the data set:

$$\begin{aligned} P(Y=1|X_1,X_2,Z)=H(\beta _0+\beta _1X_1+\beta _2X_2+\beta _3Z). \end{aligned}$$

The multinomial logistic regression model \(\log \left( \pi _j(Y,W,Z)/\pi _4(Y,W,Z)\right) =\alpha _j+\gamma _{1j}Y+\gamma _{2j}W+\gamma _{3j}Z\), \(j=1,2,3\), is used to examine the effects of Y, W, and Z on the missingness mechanism of \(X_1\) and \(X_2\), i.e., on their selection probabilities. The p-values of Wald chi-squared tests for the effects of Y (i.e., testing \(H_0:\gamma _{1j}=0\), \(j=1,2,3\)) and Z (i.e., testing \(H_0:\gamma _{3j}=0\), \(j=1,2,3\)) are 0.0021 and \(<0.0001\), respectively, so Y and Z are statistically significantly related to the missingness mechanism of \(X_1\) and \(X_2\) and, hence, it is reasonable to assume that \(X_1\) and \(X_2\) are MAR.

Table 14 gives the estimates of \(\beta _k\), \(k=0,1,2,3\), and their corresponding ASEs. The results of testing \(\beta _k=0\), \(k=0,1,2,3\), are statistically significant except for testing \(\beta _1=0\) by using the MICE estimation method. The estimates of \(\beta _1\) and \(\beta _3\) are positive, implying that the respondents were more likely to be satisfied with the cable TV service when they were offered a discount and when they paid for the fourth channel, respectively. Based on all the estimation methods, the estimates of \(\beta _2\) are negative, revealing that the respondents were less likely to report satisfaction with the cable TV service when there were children under 12 years old in their family. The parameter estimates based on the SIPW, VL, JCL, MI1, MI2, and MICE methods are quite similar and different from those based on the CC method. Moreover, the ASEs of the two different types of MI estimators of \(\beta _0\), \(\beta _1\), and \(\beta _3\) are the smallest in comparison with the other estimation methods, which is consistent with the simulation results and with the good performance of the two proposed MI methods.

Moreover, one can also apply the estimation methods to analyze the cable TV data set with artificial missing values and higher missing rates. To this end, let \((\delta _1^0,\delta _2^0,\delta _3^0,\delta _4^0)\) be indicators for the original missingness statuses of \(X_1\) and \(X_2\). Let \((\delta _1^*,\delta _2^*,\delta _3^*,\delta _4^*)\) be indicators for artificial missingness statuses of \(X_1\) and \(X_2\) under the assumption of MAR mechanism. Let \(({\tilde{\delta }}_1,{\tilde{\delta }}_2,{\tilde{\delta }}_3,{\tilde{\delta }}_4)\) be the combination of indicators for the original and artificial missingness statuses of \(X_1\) and \(X_2\), where \({\tilde{\delta }}_{ij}=\delta _{i1}^0\delta _{ij}^*+(1-\delta _{i1}^0)\delta _{ij}^0\), \(i=1,2,\dots ,n\), \(j=1,2,3,4\). The following multinomial logistic regression model

is applied to generate the data of \(\delta _{ij}^*\), \(j=1,2,3,4\), given \((Y_i,W_i,Z_i)\), where \(\varvec{\alpha }^*=(\alpha _1^*,\alpha _2^*,\alpha _3^*)^T=(1.7,-0.5,1)^T\) and \(\varvec{\gamma }^*=(\gamma _1^*,\gamma _2^*,\gamma _3^*)^T=(-0.7,-0.5,-0.3)^T\). One can obtain the selection probabilities of \(({\tilde{\delta }}_1,{\tilde{\delta }}_2,{\tilde{\delta }}_3,{\tilde{\delta }}_4)\) as (0.406, 0.234, 0.231, 0.129), which imply that the percentages of complete cases, only \(X_1\) missing, only \(X_2\) missing, and both of them missing are 40.6%, 23.4%, 23.1%, and 12.9%, respectively.
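The rule \({\tilde{\delta }}_{ij}=\delta _{i1}^0\delta _{ij}^*+(1-\delta _{i1}^0)\delta _{ij}^0\) keeps the original missingness pattern of every originally incomplete case and applies the artificial pattern only to the originally complete cases. A minimal Python sketch of this rule, with a hypothetical function name and indicator vectors stored as 4-tuples, is:

```python
def combine_indicators(delta0, delta_star):
    """Combine original and artificial missingness indicators for one unit.

    delta0, delta_star: 4-tuples of 0/1 with exactly one entry equal to 1,
    ordered as (complete, only X1 missing, only X2 missing, both missing).
    """
    d01 = delta0[0]  # 1 if the unit was originally a complete case
    return tuple(d01 * s + (1 - d01) * o for o, s in zip(delta0, delta_star))

# An originally complete case takes the artificial pattern.
print(combine_indicators((1, 0, 0, 0), (0, 0, 1, 0)))  # (0, 0, 1, 0)
# An originally incomplete case keeps its original pattern.
print(combine_indicators((0, 1, 0, 0), (1, 0, 0, 0)))  # (0, 1, 0, 0)
```

Because the artificial pattern can only remove covariate values from complete cases, the combined complete-case percentage can never exceed the original one.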

The analysis results of the artificial cable TV data set are given in Table 15. In general, these results show some changes compared to those of the original cable TV data set in Table 14 because of the increased missing rates, but they still represent well the performance properties of the proposed estimation methods. The main changes are in the results of testing \(\beta _k=0\), \(k=0,1,2,3\). Testing \(\beta _1=0\) is not statistically significant by using the SIPW, JCL, MI1, MI2, and MICE estimation methods. Testing \(\beta _2=0\) is not statistically significant by using the CC, SIPW, and VL estimation methods. In addition, the ASEs of all the estimators increase, especially those of the CC estimators of all the parameters and of the SIPW and VL estimators of \(\beta _1\) and \(\beta _2\). The ASEs of the two proposed MI estimators change less than those of the other estimators. The (absolute) estimates of \(\beta _0\) and \(\beta _3\) increase, and those of \(\beta _2\) decrease, for almost all the estimation methods. The estimates of \(\beta _1\) increase based on the first three estimation methods and decrease based on the last four estimation methods. Again, the parameter estimates based on the MI1 and MI2 estimation methods change less than those based on the JCL and MICE estimation methods.

In summary, the two real data sets are used to evaluate the applicability of the seven estimation methods and confirm the results in the simulation study section. When the logistic regression model includes only categorical/discrete variables and has two covariates MAR separately or simultaneously, the analytical results are highly consistent with the conclusions in the simulation studies. The biases and ASEs of the MI1, MI2, and JCL estimators are indeed similar and better than those of the SIPW and VL estimators. The results also imply that one may make mistakes in obtaining the fitted logistic regression model if using the CC or MICE (from the mice package) approach. For instance, in real data example 1, the CC estimation method concludes that \(DZ_1\) and \(DZ_2\) are insignificant; in real data example 2, the MICE estimation method suggests that \(X_1\) is not significant at the 5% significance level. Furthermore, the analysis of the artificial cable TV data example shows that the two proposed methods provide more stable results than the other estimation methods.

6 Conclusion

Two different types of MI methods have been proposed to estimate the parameters of logistic regression with covariates missing at random separately or simultaneously. Based on the idea of Wang and Chen (2009), for each type of MI estimation method we have proposed three empirical CDFs to generate the random values for missing data and estimated the logistic regression parameters by using the estimating equations of Fay (1996), which are more convenient in practice than those of Rubin (1987) because they are solved only once. The simulation studies have shown that the performances of the two proposed MI methods were comparable with that of the JCL method, but they had shorter computing times than the JCL and MICE estimation methods and are easily implemented. The two proposed MI methods overall outperformed the CC, SIPW, VL, and MICE methods. Two real data sets have been used to illustrate the practical use of the two proposed MI methods.

Although we have focused on the case where covariates are categorical/discrete, one can also consider the case of continuous covariates by using the nonparametric kernel approach of Wang and Chen (2009) to construct the empirical CDFs. For example, assume that \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) are continuous covariate vectors that are MAR separately or simultaneously, \(\varvec{Z}\) is a categorical/discrete covariate vector that is always observed, and \({{{\mathbf {\mathtt{{W}}}}}}_1\) and \({{{\mathbf {\mathtt{{W}}}}}}_2\) are categorical/discrete surrogate vectors of \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\), respectively, so that \(\varvec{V}=(\varvec{Z}^T,\varvec{W}^T)^T\) is a categorical/discrete covariate vector. The MI2 method still works for this case. For the MI1 method, to impute the missing values of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\), one can construct the empirical CDFs of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\) and \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\) as follows:

Here \({\mathcal {H}}_s\) is a \(p_s\times {p_s}\), \(s=1,2\), symmetric positive definite matrix that depends on n, with \(p_1=r_2=\text {length}({{{\mathbf {\mathtt{{X}}}}}}_2)\) and \(p_2=r_1=\text {length}({{{\mathbf {\mathtt{{X}}}}}}_1)\). \({\mathcal {K}}_{{\mathcal {H}}_s}({\mathbf {u}})=|{\mathcal {H}}_s|^{1/2}{\mathcal {K}}_s({\mathcal {H}}_s^{-1/2}{\mathbf {u}})\) for \({\mathcal {K}}_s(\cdot )\) being a \(p_s\)-variate kernel with \(\int {\mathcal {K}}_s({\mathbf {u}})d{\mathbf {u}}=1\) and \({\mathcal {H}}^{1/2}_s\) the bandwidth matrix. \({\widetilde{F}}_{\varvec{X}_i}^*({\mathbf {x}}|Y_i,\varvec{V}_i)\) is equal to \({\widetilde{F}}_{\varvec{X}_i}({\mathbf {x}}|Y_i,\varvec{V}_i)\) in (17). We can then use the MI1 procedure in Sect. 4.1 to estimate the parameters. Our simulation studies indicate that the two proposed MI methods still work well for this case; the simulation results are not reported here.

Furthermore, to build the MI1 method for the case where both \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\) are continuous covariate vectors and MAR separately or simultaneously, \(\varvec{Z}\) is a continuous covariate vector, and \({{{\mathbf {\mathtt{{W}}}}}}_1\) and \({{{\mathbf {\mathtt{{W}}}}}}_2\) are continuous surrogate vectors of \({{{\mathbf {\mathtt{{X}}}}}}_1\) and \({{{\mathbf {\mathtt{{X}}}}}}_2\), respectively, one can construct the following empirical CDFs of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(\varvec{X}_i\) given \((Y_i,\varvec{V}_i)\):

Here \({\mathcal {H}}_s\) is a \(q_s\times {q_s}\), \(s=1,2,3\), symmetric positive definite matrix that depends on n, with \(q_1=r_2+\text {length}(\varvec{V})\), \(q_2=r_1+\text {length}(\varvec{V})\), and \(q_3=\text {length}(\varvec{V})\). \({\mathcal {K}}_{{\mathcal {H}}_s}({\mathbf {u}})=|{\mathcal {H}}_s|^{1/2}{\mathcal {K}}_s({\mathcal {H}}_s^{-1/2}{\mathbf {u}})\) for \({\mathcal {K}}_s(\cdot )\) being a \(q_s\)-variate kernel with \(\int {\mathcal {K}}_s({\mathbf {u}})d{\mathbf {u}}=1\) and \({\mathcal {H}}_s^{1/2}\) the bandwidth matrix. Similarly, to develop the MI2 method for this case, one can form the empirical CDFs of \({{{\mathbf {\mathtt{{X}}}}}}_{1i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{2i},Y_i,\varvec{V}_i)\), \({{{\mathbf {\mathtt{{X}}}}}}_{2i}\) given \(({{{\mathbf {\mathtt{{X}}}}}}_{1i},Y_i,\varvec{V}_i)\), and \(\varvec{X}_i\) given \((Y_i,\varvec{V}_i)\) as follows:

Here \({\mathcal {H}}\) is a \(q_3\times {q_3}\) symmetric positive definite matrix that depends on n. \({\mathcal {K}}_{{\mathcal {H}}}({\mathbf {u}})=|{\mathcal {H}}|^{1/2}{\mathcal {K}}({\mathcal {H}}^{-1/2}{\mathbf {u}})\) for \({\mathcal {K}}(\cdot )\) being a \(q_3\)-variate kernel with \(\int {\mathcal {K}}({\mathbf {u}})d{\mathbf {u}}=1\) and \({\mathcal {H}}^{1/2}\) the bandwidth matrix.
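
To make the kernel construction concrete, the following Python sketch shows one way a kernel-weighted empirical conditional CDF can be formed and sampled from. It is an illustration under stated assumptions rather than the estimators described above: it uses a Gaussian kernel, a scalar \(X\), a two-dimensional continuous \(\varvec{V}\), exact matching on the binary \(Y\), and a hypothetical bandwidth choice. Because the weights are normalized, the constant multiplying the kernel cancels. All function and variable names are invented for the example.

```python
import numpy as np

def kernel_weights(v_donors, v_target, H):
    """Normalized Gaussian kernel weights based on K(H^{-1/2}(v_j - v)),
    where H is symmetric positive definite and H^{1/2} is the bandwidth matrix."""
    diff = v_donors - v_target                 # shape (n_donors, q)
    L = np.linalg.cholesky(H)                  # H = L L^T
    z = np.linalg.solve(L, diff.T)             # column sums of z**2 equal diff' H^{-1} diff
    k = np.exp(-0.5 * np.sum(z ** 2, axis=0))
    return k / k.sum()

def conditional_cdf(x_grid, x_donors, weights):
    """Weighted empirical CDF: F(x | v) = sum_j weights_j * 1{x_j <= x}."""
    return np.array([weights[x_donors <= x].sum() for x in np.atleast_1d(x_grid)])

def draw_imputations(x_donors, weights, M, rng):
    """Sampling donors with probability equal to their kernel weight is
    equivalent to drawing from the weighted empirical CDF."""
    return rng.choice(x_donors, size=M, replace=True, p=weights)

# Simulated illustration (hypothetical data and bandwidth).
rng = np.random.default_rng(0)
n = 500
v = rng.normal(size=(n, 2))                     # continuous, always observed
x = v[:, 0] + 0.5 * rng.normal(size=n)          # continuous covariate subject to missingness
y = rng.binomial(1, 0.5, n)
complete = rng.random(n) < 0.7                  # indicator of complete cases

target = np.flatnonzero(~complete)[0]           # first incomplete case
donors = complete & (y == y[target])            # complete cases with the same outcome
H = n ** (-2.0 / (2 + 4)) * np.eye(2)           # assumption: H^{1/2} = n^{-1/(q+4)} I with q = 2

wts = kernel_weights(v[donors], v[target], H)
cdf = conditional_cdf(np.array([-1.0, 0.0, 1.0, 10.0]), x[donors], wts)
imputed = draw_imputations(x[donors], wts, M=15, rng=rng)
print(cdf)
print(imputed)
```

In practice the bandwidth matrix would need to be chosen with care, for example by cross-validation; the rate used here is only a placeholder.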

Finally, as an extension, the two proposed MI methods can be applied to estimate the parameters of logistic regression with outcome and covariates missing separately or simultaneously, and to estimate the parameters of a multinomial logit model with the same missing-data issue. These tasks are left for future research.

References

Breslow NE, Cain KC (1988) Logistic regression for two-stage case-control data. Biometrika 75:11–20

Buuren SV, Groothuis-Oudshoorn K (2011) Mice: multivariate imputation by chained equations in R. J Stat Softw 45(3):1–67

Dong Y, Peng CYJ (2013) Principled missing data methods for researchers. Springer, Berlin

Fay RE (1996) Alternative paradigms for the analysis of imputed survey data. J Am Stat Assoc 91:490–498

Horvitz DG, Thompson DJ (1952) A generalization of sampling without replacement from a finite universe. J Am Stat Assoc 47:663–685

Hosmer DW, Lemeshow S, Sturdivant RX (2013) Applied logistic regression, 3rd edn. Wiley, New York

Hsieh SH, Lee SM, Shen PS (2010) Logistic regression analysis of randomized response data with missing covariates. J Stat Plann Infer 140:927–940

Hsieh SH, Li CS, Lee SM (2013) Logistic regression with outcome and covariates missing separately or simultaneously. Comput Stat Data Anal 66:32–54

Jiang W, Josse J, Lavielle M, Group T (2020) Logistic regression with missing covariates: parameter estimation, model selection and prediction within a joint-modeling framework. Comput Stat Data Anal 145:106907

Lee SM, Gee MJ, Hsieh SH (2011) Semiparametric methods in the proportional odds model for ordinal response data with missing covariates. Biometrics 67:788–798

Lee SM, Hwang WH, de Dieu Tapsoba J (2016) Estimation in closed capture-recapture models when covariates are missing at random. Biometrics 72:1294–1304

Lee SM, Li CS, Hsieh SH, Huang LH (2012) Semiparametric estimation of logistic regression model with missing covariates and outcome. Metrika 75:621–653

Lee SM, Lukusa TM, Li CS (2020) Estimation of a zero-inflated Poisson regression model with missing covariates via nonparametric multiple imputation methods. Computat Stat 35:725–754

Lipsitz SR, Parzen M, Ewell M (1998) Inference using conditional logistic regression with missing covariates. Biometrics 54:295–303

Little RJ (1992) Regression with missing X’s: a review. J Am Stat Assoc 87:1227–1237

Little RJ, Rubin DB (2019) Statistical analysis with missing data, 3rd edn. Wiley, New York

Lukusa TM, Lee SM, Li CS (2016) Semiparametric estimation of a zero-inflated Poisson regression model with missing covariates. Metrika 79:457–483

Pahel BT, Preisser JS, Stearns SC, Rozier RG (2011) Multiple imputation of dental caries data using a zero-inflated Poisson regression model. J Public Health Dent 71:71–78

Rubin DB (1976) Inference and missing data. Biometrika 63:581–592

Rubin DB (1987) Statistical analysis with missing data. Wiley, New York

Rubin DB (1996) Multiple imputation after 18+ years. J Am Stat Assoc 91:473–489

Rubin DB, Schenker N (1986) Multiple imputation for interval estimation from simple random samples with ignorable nonresponse. J Am Stat Assoc 81:366–374

Tran PL, Le TN, Lee SM, Li CS (2021) Estimation of parameters of logistic regression with covariates missing separately or simultaneously. Communications in statistics - Theory and methods, in press

Wang CY, Chen JC, Lee SM, Ou ST (2002) Joint conditional likelihood estimator in logistic regression with missing covariate data. Statistica Sinica 12:555–574

Wang CY, Wang S, Zhao LP, Ou ST (1997) Weighted semiparametric estimation in regression analysis with missing covariate data. J Am Stat Assoc 92:512–525

Wang D, Chen SX (2009) Empirical likelihood for estimating equations with missing values. Ann Stat 37:490–517

Wang S, Wang CY (2001) A note on kernel assisted estimators in missing covariate regression. Statistics and Probability Letters 55:439–449

White IR, Royston P, Wood AM (2011) Multiple imputation using chained equations: issues and guidance for practice. Stat Med 30:377–399

Zhao LP, Lipsitz S (1992) Designs and analysis of two-stage studies. Stat Med 11:769–782

Acknowledgements

The authors thank two referees and an Associate Editor for their constructive comments that improved the presentation. The research of S.M. Lee and T.N. Le was supported by Ministry of Science and Technology (MOST) Grant of Taiwan, ROC, MOST-109-2118-M-035-002-MY3.

Cite this article

Lee, SM., Le, TN., Tran, PL. et al. Estimation of logistic regression with covariates missing separately or simultaneously via multiple imputation methods. Comput Stat 38, 899–934 (2023). https://doi.org/10.1007/s00180-022-01250-3

DOI: https://doi.org/10.1007/s00180-022-01250-3