Abstract

Recent advancements in natural language processing (NLP) have catalyzed the development of models capable of generating coherent and contextually relevant responses. Such models are applied across a diverse array of applications, including but not limited to chatbots, expert systems, question-and-answer robots, and language translation systems. Large Language Models (LLMs), exemplified by OpenAI’s Generative Pretrained Transformer (GPT), have significantly transformed the NLP landscape. They have introduced unparalleled abilities in generating text that is not only contextually appropriate but also semantically rich. This evolution underscores a pivotal shift towards more sophisticated and intuitive language understanding and generation capabilities within the field. Models based on GPT are developed through extensive training on vast datasets, enabling them to grasp patterns akin to human writing styles and deliver insightful responses to intricate questions. These models excel in condensing text, extending incomplete passages, crafting imaginative narratives, and emulating conversational exchanges. However, GPT LLMs are not without their challenges, including ethical dilemmas and the propensity for disseminating misinformation. Additionally, the deployment of these models at a practical scale necessitates a substantial investment in training and computational resources, leading to concerns regarding their sustainability. ChatGPT, a variant rooted in transformer-based architectures, leverages a self-attention mechanism for data sequences and a reinforcement learning-based human feedback (RLHF) system. This enables the model to grasp long-range dependencies, facilitating the generation of contextually appropriate outputs. Despite ChatGPT marking a significant leap forward in NLP technology, there remains a lack of comprehensive discourse on its architecture, efficacy, and inherent constraints. Therefore, this survey aims to elucidate the ChatGPT model, offering an in-depth exploration of its foundational structure and operational efficacy. We meticulously examine Chat-GPT’s architecture and training methodology, alongside a critical analysis of its capabilities in language generation. Our investigation reveals ChatGPT’s remarkable aptitude for producing text indistinguishable from human writing, whilst also acknowledging its limitations and susceptibilities to bias. This analysis is intended to provide a clearer understanding of ChatGPT, fostering a nuanced appreciation of its contributions and challenges within the broader NLP field. We also explore the ethical and societal implications of this technology, and discuss the future of NLP and AI. Our study provides valuable insights into the inner workings of ChatGPT, and helps to shed light on the potential of LLMs for shaping the future of technology and society. The approach used as Eco-GPT, with a three-level cascade (GPT-J, J1-G, GPT-4), achieves 73% and 60% cost savings in CaseHold and CoQA datasets, outperforming GPT-4.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The advent of Large Language Models (LLMs) has marked a paradigm shift in the field of Natural Language Processing (NLP) [1,2,3,4,5,6], transforming how we interact with and understand textual data [7]. Among the pioneers in this domain, OpenAI’s Generative Pre-Trained Transformers (GPT) have emerged as a prominent force, reshaping the landscape of NLP [8]. Comprising multiple transformer layers, GPT models employ self-attention mechanisms that allow for the intricate comprehension of language patterns within extensive datasets [9]. This design allows the models to learn semantic meaning from data, thereby achieving remarkable progress in textual understanding. Recent research has delved into the varied applications of GPT technology across multiple sectors, including finance, healthcare, and law. This exploration underscores the wide-ranging applicability and potential of GPT models, highlighting their versatility in addressing distinct industry-specific challenges and requirements [10, 11]. Table 1 presents the list of acronyms used throughout the survey.

To illustrate the market penetration of ChatGPT and its integration with users, we present statistical details. Figure 1 demonstrates the efficacy of the ChatGPT website [12] over the past seven months. This observation is substantiated by the graphical representation, which indicates a notable upswing in the utility of ChatGPT. Specifically, in November 2022, the platform garnered 152.7 million views [13,14,15,16,17,18,19,20]. The number of views escalated to 266 million in December 2022, and then saw a more significant rise to 616 million in January 2023. This upward trend persisted, reaching 1 billion views in February, before surging to 1.6 billion in March 2023. Remarkably, by April 2023, user engagement reached its zenith at 1.8 billion, continuing its upward trajectory into May with a total of 1.9 billion views. These statistics illustrate the increasing integration of ChatGPT into daily activities, highlighting the growing necessity to enhance the performance of GPT LLMs. Furthermore, additional data reveals ChatGPT’s widespread impact across various industries, underscoring its broad applicability and influence.

Figure 2 illustrates the rapid growth of the chatbot sector [12], reaching 100 million users in only two months. This remarkable achievement, when compared to other social media and entertainment platforms, showcases a stark contrast in user base growth. For example, TikTok reached the same number of users within 9 months, whereas platforms such as YouTube, Instagram, Facebook, and Twitter took 18, 30, 54, and 60 months, respectively, to achieve similar milestones. In the music industry, Spotify required a considerably longer period, taking 132 months to reach this mark. Meanwhile, Netflix, a giant in the entertainment sector, achieved the 100 million user milestone in 216 months, further highlighting the unprecedented rapid adoption rate of the platform in question. [21,22,23,24,25,26,27,28].

Since their introduction, GPT models have undergone swift and significant development, beginning with the launch of GPT-1, which established the foundation for a groundbreaking series of models in natural language processing (NLP). This evolution has been instrumental in transforming the landscape of NLP, setting new benchmarks for language understanding and generation. [29]. Following the initial model, subsequent iterations such as GPT-2 and GPT-3 have significantly broadened the scope and enhanced the capabilities of these systems, pushing the frontiers of Machine Learning (ML) and language comprehension to new heights. These advancements have paved the way for more sophisticated applications in various domains, showcasing the progressive potential of GPT models in understanding and generating human-like text. [9]. With the recent unveiling of GPT-4 and the anticipation of future models, the GPT series continues to push the frontiers of NLP, offering unprecedented levels of accuracy and efficiency in language tasks [30]. The evolution from GPT-1 to GPT-4 marks an ongoing endeavor to refine the models’ complexity, responsiveness, and contextual awareness. This journey is pivotal in shaping the future landscape of interactions between machines and humans, demonstrating a commitment to advancing the capabilities of these models to understand and engage with human language more effectively. [31].

Table 2 provides a comprehensive comparison of different generations of GPT models, encompassing GPT (or GPT-1) to GPT-4. The text outlines the evolution of the models in terms of their parameters, underscoring the escalation in complexity and improvements in self-attention mechanisms. Additionally, it traces the incorporation of Reinforcement Learning based Human Feedback (RLHF), illustrating a shift towards more advanced and nuanced training methodologies. This progression reflects a concerted effort to refine the models’ ability to process and understand language through increasingly sophisticated techniques. [32,33,34,35,36,37,38,39,40]. Furthermore, the table illustrates the diversification in applications, demonstrating the growing versatility and adaptability of GPT models in various domains. (Fig. 3).

The core of GPT models’ success lies in the self-attention mechanism, an innovative technique that has revolutionized ML models ability to recognize dependencies across sequences in data [41]. The self-attention mechanism allows each part of an input sequence to focus on different parts of itself, enabling the model to create contextual representations. Alongside self-attention, RLHF adds another dimension to the model’s training, aligning the generated content with human-like reasoning and judgment [42]. By fine-tuning models using RLHF, it is possible to guide the model’s behavior based on human-generated feedback, optimizing performance in specialized domains [43]. This dual application of self-attention and RLHF has contributed to the efficiency of various applications such as text summarization, creative writing, and question-answering systems [44]. For instance, GPT-3 ability to perform legal document analysis has been benchmarked against human experts, revealing astonishing parallels in terms of accuracy and insight [11, 41]. Moreover, the use of self-attention and RLHF has been pivotal in addressing the long-standing challenge of long-range dependencies in sequence data, leading to breakthroughs in machine translation and other complex tasks [45].

The training process of these models is both complex and resource-intensive, leveraging vast amounts of data and specialized computational hardware [46]. By employing a multi-stage approach that combines pre-training on broad data and fine-tuning on specific tasks, GPT models are capable of delivering customized performance across a wide range of applications [47]. This training architecture underscores the adaptability and specialization of GPT models, whilst also illuminating the considerable infrastructure and computational requirements necessary for deployment [48]. The synergistic application of self-attention and RLHF, combined with an intricate training process, encapsulates the scientific innovation and practical challenges inherent in the development and implementation of these advanced language models [49,50,51,52,53,54,55,56].

Despite these remarkable advancements, the implementation of GPT models is not without challenges and concerns. The computational cost associated with training these models is substantial, often requiring highly specialized hardware and vast amounts of energy [44, 57]. This raises significant questions about their sustainability and environmental impact, with recent studies highlighting the considerable carbon footprint associated with training large-scale models [58, 59]. Ethically, the deployment of GPT models has stirred debate around issues such as bias, misinformation, and potential misuse. Research has indicated that these models can inadvertently propagate stereotypes or misrepresentations found within their training data, posing serious ethical considerations [60, 61].

Additionally, the complex architecture and extensive resource requirements of GPT models may limit accessibility for researchers and organizations with constrained resources, raising questions about inclusivity and equitable access to this cutting-edge technology [62, 63]. Notably, the applications of GPT models extend into areas like real-time language translation, medical diagnosis support, and personalized education, underscoring the potential societal impact and the necessity for responsible development and deployment [64, 65]. The intersection of ethical considerations, practical constraints, and far-reaching potential paints a complex picture of the current state of LLMs, necessitating ongoing research, collaboration, and critical evaluation.

Therefore, this article aims to demystify the ChatGPT model, bridging the knowledge gap and contributing a holistic understanding of its architecture, performance, and societal implications. In undertaking this endeavor, the analysis leverages recent research, empirical evidence, and a thoughtful scrutiny of the ethical considerations involved. The objective is to furnish scholars, practitioners, and policymakers with a thorough and groundbreaking examination of ChatGPT, offering insights that span theoretical underpinnings, practical applications, and ethical implications, elucidating its role in shaping the future of technology and society. Through a detailed examination of ChatGPT’s complexities, this study not only enriches academic dialogue but also acts as a roadmap for future innovations and ethical deployment. Combining theoretical insights with practical applications, it contributes to the broader endeavor of leveraging AI’s potential responsibly, with an acute consciousness of its intricacies and obligations. The following sections will investigate ChatGPT’s architecture, its diverse applications, ethical implications, and forward-looking views, thereby setting the stage for a well-informed and thorough grasp of this crucial technology.

1.1 Existing Surveys

This section presents the discussion on existing surveys conducted in different research areas by the researchers. Mondal et al. [66] surveyed the problems that Generative Artificial Intelligence (GAI) and its effects on the economy and society. It exhorts companies to modify their tactics in order to combine physical and virtual meetings through GAI integration. They also provide business managers with a useful foundation for creating successful plans. By identifying the potential for businesses to create value propositions and customer experiences through digital communication and information technology, this also examines the GAIs initial deployments in diverse industries aims to inspire future customer solutions.

Another study by [67], examined the three main areas of the ChatGPT, namely, the ChatGPT responses to questions pertaining to science education; potential Chat-GPT uses in science pedagogy; and the study of ChatGPT as a research tool and its utility. This research, which used a self-study technique, produced outstanding results, with ChatGPT frequently aligning its outputs with the investigation’s main themes. However, this is also important to recognize that ChatGPT runs the risk of taking the position of the supreme epistemic authority. The analysis presents insights that, while unique, lack substantial empirical evidence or the necessary qualifications, a point that is elaborated upon within the survey. From an application-oriented perspective, the use of ChatGPT across various sectors such as healthcare, clinical practice, education, and manufacturing industries is examined, highlighting its versatility and potential impact in these fields [68,69,70,71,72,73,74]. The changes to transformer-based LLMs is presented in the article.

In [75], the authors examined the current trends of GAI models, and associated integrations with the metaverse for precision medicine. The salient advantages and inherent drawbacks are highlighted. GAI is a dynamic branch of AI that can create different content types on its own, such as text, photos, audio, and video. GAI presents fresh opportunities for content production within the metaverse [76], successfully overcoming technological gaps in this rapidly developing digital space. Utilizing ChatGPT can markedly enhance the generation and presentation of virtual objects within the metaverse, potentially steering Industry 5.0 towards a new era of hyper-customization and individualized user experiences. This advancement underscores the technology’s capacity to meet specific user demands through personalized and dynamic virtual environments.

Lv et al. [77] provides an in-depth analysis of how Generative AI (GAI) technologies are employed to create innovative and captivating content in digital and virtual settings. It outlines the diverse applications of GAI, such as converting text into images, audio, and scientific materials, delivering a thorough review of its capabilities in augmenting creative outputs across various formats. In the study [78], the authors showcased the utilization of Generative AI (GAI) in text-to-code tasks, revealing its capacity for economic optimization and the potential to enhance both creative and non-creative endeavors. However, their current framework suffers from notable limitations, including prolonged training periods due to the unavailability of datasets for certain models like text-to-text and text-to-audio. Additionally, these models demand extensive datasets and substantial computational resources, resulting in significant time and expenses for training and execution [79,80,81,82,83,84,85].

Authors in [86] conducted a survey on the application of ChatGPT in education. Initially, the study explores the potential of GPT in academic tasks. Subsequently, it delves into the limitations of GPT, particularly in terms of providing erroneous information and amplifying biases. Furthermore, the survey emphasizes the importance of addressing security concerns during data training and prioritizing privacy considerations. To enhance students’ learning experiences, collaborative efforts among policymakers, researchers, educators, and technology experts are deemed essential. The survey encourages discussions on the safe and beneficial integration of emerging Generative AI (GAI) tools in educational settings.

Xia et al. [87] delved into the potential of Generative AI (GAI) technologies in advancing communication networks, specifically within the framework of Web 3.0 cognitive architectures. Their research emphasized the importance of Semantic Communication (SC), a paradigm poised to optimize data exchange efficiency while maximizing spectrum resource utilization. However, despite notable advancements, current SC systems face challenges related to context comprehension and the integration of prior knowledge [26, 88,89,90,91,92,93,94,95,96]. GAI emerges as a viable solution, providing the ability to automate various tasks and produce personalized content across diverse communication channels. Moreover, within the architecture encompassing cloud, edge, and mobile computing, Semantic Communication (SC) networks play a crucial role, as highlighted by Prasad and colleagues [97]. The implementation of GAI, both globally and locally within these networks, is instrumental in refining the transmission of semantic content, the synergy of source and channel coding, and the adaptability in generating and encoding information. This approach aims to enhance semantic understanding and optimize resource allocation.

Another study conducted by [98] examined the diverse applications of GPT models in Natural Language Generation (NLG) tasks. Drawing inspiration from OpenAI’s groundbreaking GPT-3 innovation, the article provided a comprehensive overview of ChatGPT’s current role in NLG applications. It explored potential uses across various sectors including e-commerce, education, entertainment, finance, healthcare, news, and productivity. A crucial aspect of the analysis involved evaluating the efficacy of implementing GPT-enabled NLG technology to customize content for individual users.

In [99], the authors outlined recent advancements in pre-trained language models and their potential to enhance conversational agents. Their analysis focuses on assessing whether these models can effectively address the challenges encountered by dialogue-based expert systems. The article examines various underlying architectures and customization options. Additionally, it explores the existing challenges encountered by Chatbots and similar systems. The emergence of pre-trained models (PTMs) has sparked a revolution in Natural Language Processing (NLP), significantly impacting the field. Qin et al. [100] offered a comprehensive examination of Pretrained Models (PTMs) in Natural Language Processing (NLP). They provide insights into applying PTM knowledge to downstream tasks and suggest potential research directions for the future. This survey serves as a valuable guide for comprehending, leveraging, and advancing PTMs across various NLP applications.

Authors in [101] delved into the background and technological aspects of GPT, with a specific focus on the GPT model itself. The article explores the utilization of ChatGPT for developing advanced chatbots. Within the study, interview dialogues between users and GPT are showcased across various topics, and subsequently analyzed for semantic structures and patterns. The interview underscores the advantages of ChatGPT, emphasizing its capability to enhance various aspects including search and discovery, reference and information services, cataloging, metadata generation, and content creation. Important ethical issues like bias and privacy are also covered. Another study in [102] discussed the benefits and downsides of using LLMs in the classroom while taking into account the perspectives of both students and teachers.

They place a strong emphasis on the opportunity for creating educational content, raising student engagement, and modifying learning environments. However, the use of LLMs in education has to be backed with effective prompt engineering, to ensure high competency among the students and faculties. Table 3 presents a comparitive study of existing surveys.

1.2 Uniqueness of the Survey

In contrast to previous reviews that predominantly focus on foundational architectures, auxiliary model frameworks, and specific technical details related to GPTs and LLMs, this proposed survey introduces a novel bifurcated methodology. It offers a comprehensive examination of ChatGPT by elucidating its architectural intricacies, delineating its diverse deployment landscapes, and conducting a thorough evaluation of its training methodologies. Thus, the survey provides a holistic perspective on the complex designs and development of ChatGPT-centric models tailored for specific application domains. By juxtaposing ChatGPT with alternative learning frameworks, this study aims to illuminate the distinctive learning mechanisms employed by AI models under various training paradigms and conditions. This addresses a gap in existing literature, which often portrays GPTs solely as conversational agents, by providing empirical support for the utilization of GPT technology in real-time data analysis applications.

The survey provides an in-depth exploration of the pivotal technologies that form the foundation of ChatGPT’s functionality. It acts as a bridge between strategic architectural decisions and the tangible aspects of resource distribution and performance enhancement. A noteworthy case study EcoGPT by Chen et al. [103] is examined to highlight the economic considerations in optimizing GPT models, introducing three principal strategies, namely, the LLM approximation, prompt adaptation, and model cascading. These strategies demonstrate significant cost efficiencies, thereby facilitating the deployment of cost-effective models in practical scenarios. Hence, this survey aims to provide both researchers and industry professionals with invaluable insights to develop superior GPT models. These models are not only customized for specific applications but also effectively balance the cost-scalability equation.

1.3 Survey Methodology

In this subsection, we discuss the potential research questions which the authors brainstormed while preparing the survey. Table 4 presents the research questions, and also presents its practical importance (why these RQs needs to be addressed) Based on the proposed RQs, the authors have done a thorough scan of literature works associated with GPT models from academic databases. A list of possible keywords involved in the search include “ChatGPT”, “GPT”, “LLM + Training”, “GPT models”, “Security for GPT models”, “GPT architecture”, “Model training for LLMs”, “Prompts in LLMs”, “Finetuning LLMs”, “Performance of LLM models”, “GPT in applications”, and others. The search is performed on literary databases like IEEE, Elsevier, Taylor & Francis, Springer, ACM, and Wiley, and also indexing platforms like Google scholar, and preprints like arXiV, bioRxiv, and SSRN were scrutinized.

The initial search yielded a significant pool of approximately 250 academic articles. These articles were subsequently refined using stringent inclusion and exclusion criteria. The first phase involved a thorough review of titles and abstracts, resulting in a reduction to 250 relevant publications. Following this, the introductions of these articles were scrutinized to ensure alignment with our established research topics, leading to a further reduction of 80 publications. The third step involved a meticulous content evaluation tailored to our research objectives, resulting in the identification of 165 papers that met our criteria for academic rigor and relevance. The core of our thorough survey is built on these chosen works.

1.4 Survey Contributions

The research contributions of the article are presented as follows.

-

We delve into the fundamentals of Large Language Models (LLMs) to bolster Chat-GPT models, focusing specifically on the self-attention mechanism, Reinforcement Learning based Human Feedback (RLHF), and prompt adaptation techniques for GPT models.

-

Building upon the foundational concepts of ChatGPT, we elucidate the architectural and functional components of the ChatGPT model. This exploration paves the way for intriguing possibilities in task-specific and training optimizations, fundamentally reshaping the deployment of ChatGPT across various application domains.

-

We also examine the underlying technologies that underpin ChatGPT. This explo-ration not only satisfies academic curiosity but also holds practical implications for industries aiming to incorporate Large Language Models (LLMs) into their operations.

-

We explore the open issues and challenges associated with deploying Chat-GPT, delving deeper into the security and ethical considerations of GPT models. This discussion lays the groundwork for ensuring the secure utilization of GPT technology.

-

Lastly, our case study, EcoGPT, showcases core optimizations aimed at reducing costs and training time for GPT models. Through performance evaluation, we identify practical changes necessary to redesign GPT models for large-scale deployment.

1.5 Layout

The rest of the article is divided into eight sections. Section 2 discusses the preliminaries (nuts and bolts) to design GPT models. Section 3 presents the architectural schematics of the LLMs, and then analyses the ChatGPT model, with discussion on the key AI models. Section Sect. 4 presents the open issues and challenges in GPT model design (in terms of training, resource requirements, and model designs). Section 5 presents the security and ethical considerations to be followed in the GPT design. Section 6 presents the case study, EcoGPT, which renders a cost-effective and fine-tuned LLM design. Section 7 summarizes the key points and discusses the future directions of research in generative AI and GPT models. Finally, 8 presents the final thoughts and concludes the article with potential future scope of work by authors.

2 The Nuts and Bolts of ChatGPT

With its exceptional performance across a broad spectrum of textual outputs, ranging from scientific publications to creative works, ChatGPT stands at the forefront of AI language models. It offers a reliable approach to content recognition by leveraging an AI classifier trained on an extensive corpus of text generated by both humans and AI. Through its provision of contextually aware and enlightening responses, its advanced Natural Language Processing (NLP) capabilities redefine human-AI interaction. Acting as a versatile tool, ChatGPT fosters innovation and enhances efficiency across various industries. Thus, in this section, we delve into the fundamentals, often referred to as the nuts and bolts, of ChatGPT. This lays the groundwork for readers to gain a deeper appreciation and understanding of the ChatGPT architecture and key components discussed in the subsequent sections.

2.1 Evolution of ChatGPT

On November 30, 2022, OpenAI took a significant stride towards democratizing AI technology by releasing ChatGPT for public testing. In just the first week, user adoption surged, with over 200,000 sign-ups, underscoring the immediate appeal and utility of the model. Following this, on December 8, 2022, ShareGPT was introduced, encouraging community participation through shared conversational experiences. This initiative resulted in a notable 15% increase in user-generated content, according to metrics published by OpenAI. As of February 1, 2023, OpenAI shifted towards a more business-oriented approach with the introduction of GPT Plus, a subscription-based service. Garnering an initial uptake of 50,000 subscribers, it provided exclusive benefits, positioning it as a premium option for professionals and enterprises. On February 7, 2023, Microsoft broadened the model’s scope of application by integrating it into the Bing search engine, resulting in a 20% increase in daily search traffic on Bing. Subsequently, OpenAI facilitated more extensive platform integrations on March 1, 2023. Then, on March 9, 2023, Azure OpenAI Services enabled 300 inter-API platform integrations.

On March 14, 2023, GPT-4 became accessible to ChatGPT Plus members, enhancing the context accuracy of the GPT-3.5 model by 10%. Just nine days later, on March 23, 2023, plugins were launched, offering a broader range of customization options. Within a month, subscriptions increased by 25% owing to effective user customization, which provided personalized and on-the-fly services. Subsequently, on April 4, 2023, chat-integrated services-focused firms with Y Combinator backing entered the market, stimulating entrepreneurship activity.

On April 20, 2023, Yokosuka, a Japanese city, began utilizing ChatGPT for administrative tasks, showcasing the model’s global applicability. The code interpreter plugin, which was launched on July 16, 2023, solidified ChatGPT’s status as a versatile tool in the contemporary computing landscape. Figure 1 presents the timeline of ChatGPT’s evolution, illustrating the multifaceted progression of ChatGPT.

2.2 Learning mechanisms integrated with ChatGPT

Table 5 indicates about the various learning techniques that can be compared with the ChatGPT learning mechanism. The framework known as Abductive Learning (ABL) [104], which aims to link ML and logical reasoning. ABL uses a knowledge base that makes use of logical languages like First-Order Logic (FOL), in contrast to traditional supervised learning techniques. Conclusions derived from knowledge-based reasoning offer alternatives to conventional supervised labels. Abductive learning integrates a learning model, such as a neural network, capable of learning from both labeled and unlabeled datasets. This approach embodies a standard process guided by data induction. In contrast, reasoning occurs when predictions from the learning model are applied, benefiting from knowledge-based data. The effectiveness of the learning model is affirmed when there is alignment between the outcomes of the reasoning and the information within the knowledge base. The process entails iteratively executing two steps: induction and deduction, which are akin to learning and reasoning, respectively. These steps persist until logical reasoning, based on the predictions made by the learner, coincides with the knowledge base. However, if achieving consistency becomes unattainable, the learning process is halted and terminated. Another training method known as “parallel learning” involves running several models or learning tasks at once [105]. Parallel learning enables the simultaneous training of many models or learning tasks as opposed to a single model sequentially, which may result in a faster and more effective learning process. This strategy proves particularly beneficial in scenarios with substantial volumes of data. In such cases, learning tasks can be subdivided into smaller components and executed concurrently. To optimize learning effectiveness and leverage computational resources efficiently, parallel learning can be achieved through distributed computing resources or parallel processing algorithms. The parallel learning framework typically consists of three modules: a description module that reconstructs the real world into artificial worlds; a prediction module that runs computational experiments with the learner in the artificial worlds; and a prescription module that applies the prediction (action) to the real world in order to manage and control it.

There is another learning technique which is called as Federated Learning, McMa-han et al. [115] first put up the idea of Federated Learning (FL) in 2016. By creating a decentralized federation, where numerous clients only communicate with a central server, FL aims to preserve data confidentiality and privacy. Each client has a unique dataset that is kept private from other clients. The selected group of clients receives a global model from the server to use as a starting point for training on their individual local datasets. The server then compiles the locally trained models from each of the chosen clients and updates the global model using an average operation. Throughout the FL process, these two steps—model distribution and aggregation—are repeated iteratively. In order to improve machine translation another learning technique was identified and is called dual learning, and was first implemented in 2016 [107]. The primary objective of dual learning is to address the challenge of acquiring extensive labeled data. Unlike active learning, which depends on manual labeling, dual learning leverages the structural resemblance between two tasks, maximizes the utilization of unlabeled data, and enables bidirectional enhancements in learning performance. In the context of dual learning, these two machine learning tasks exhibit structural duality, where one task involves mapping from space X to space Y, and the second task involves mapping from space Y to space X. This inherent duality can be advantageous for various applications, such as Neural Machine Translation (NMT) between two languages, Automatic Speech Recognition (ASR) versus Text-To-Speech (TTS), and picture captioning versus image generation.

Bengio first suggested the idea of curriculum learning in 2009 [107]. The curriculum learning can be explained using an example, where the educational material is constantly arranged in a sequential fashion from elementary school to university, beginning with simpler topics and moving to more difficult ones. Students who have a firm grasp of the fundamentals typically learn more complex material more quickly and effectively. This finding served as the inspiration for Curriculum Learning, which allows learners to start with simpler samples and work their way up to more challenging ones. The learning process in Curriculum Learning is broken down into T distinct stages, designated as ⟨Q1,..., Qt,..., QT⟩ with each step specifying the unique learning criteria for that phase. Here, the Qt is the learning criteria for the learning stage.The criterion in curriculum Learning may include a number of ML-related con-cepts, like as samples, tasks, model capacity, loss, and others. Most curriculum learning frameworks have two components called Difficulty Measurer and Training Scheduler to ensure that each stage, Qt, proceeds from easy to tough. These elements help determine the degree of difficulty of the learning content so that the training process can be scheduled appropriately.

Each sample in the dataset must be given a priority rating by the difficulty measurer in order to determine the order in which they should be learned. The Training Scheduler, meanwhile, decides when and how many difficult examples will be delivered into the learning model. The Difficulty Measurer’s main responsibility is to rate the difficulty of components, such as samples. A number of ways can be used to carry out this evaluation, including manual configuration prior to learning, assessment by an external teacher model, dynamic evaluation depending on the model’s output, or even reinforcement learning methods. A useful strategy that can improve the performance, robustness, and convergence speed of ML models is curriculum learning. The caliber of the curriculum’s design and the dataset being used, however, determine how effectively students learn from a curriculum. To improve the learner’s capacity for generalization, Caruana [109] created Multi-Task Learning (MTL) in 1997. This is accomplished by utilising domain-specific data from related task training data. MTL can be viewed as an inductive transfer mechanism in which the training signal from a second task acts as an inductive bias to speed up learning. The NeurIPS workshop “Learning to Learn: Knowledge Consolidation and Transfer in Inductive Systems” in 1995 helped popularise the Pratt [110] notion of transfer learning. The observation that people may use the knowledge they’ve learned to solve new, related problems more quickly and effectively is what drives transfer learning. Transfer learning seeks to solve issues with less labeled data by loosening the assumption of independent and identically distributed (i.i.d.) training and test data. Its goal is to transfer the information learned from a source domain—such as task Ts on dataset Ds —to a target domain (task Tt and dataset Dt), where there is a lack of labeled data. The final objective is to increase the prediction function’s ft learning performance in the intended domain. Transfer learning can be seen as an extension of Meta learning [111], which is a subset of the more general idea of Learning to Learn. It involves using many source tasks (T1, T2,..., Tn) to help solve a new task, Tt, more quickly. Di, the dataset for each task, has two subsets: Dtr for training and Dts for testing. Depending on how the meta-knowledge is learned and applied to new activities, there are three different types of meta-learning. Model-based, optimization-based, and metric-based approaches are some examples of these categories.

Thrun and Mitchell [112] first proposed the idea of lifelong learning in 1995. It is based on the notion that the experience and knowledge we acquire via ongoing learning throughout our lifetimes can improve our capacity to meet new difficulties in the future. It is because of this realization that lifelong learning is being developed. Lifelong learning entails a continuous and incremental learning process where the learner has completed a number of tasks (T1, T2,..., TN) at a specific period. These tasks may fall under a variety of domains and are linked to the appropriate datasets (D1, D2,..., DN). A knowledge base is used to store and make use of the knowledge obtained from these tasks. The goal of lifelong learning is to use the knowledge that has been gained within the KB to help complete a new task (TN + 1), then to update the KB with the newly acquired knowledge. In conclusion, lifelong learning demonstrates a number of critical features, including the following: (1) it is a continual process of learning; (2) it involves the collection and updating of knowledge; and (3) it makes use of the collected knowledge to support new learning activities. Deep neural network learning’s catastrophic forgetting problem has been addressed via lifelong learning, and learning in open environments has also been studied. Lifelong learning has found use in the field of NLP, helping to improve topic modelling, information extraction, and chatbots’ ability to engage in continuous conversation.

A ML technique known as ensemble learning combines the results of various distinct models to produce a single, more reliable and accurate prediction. Ensemble learning [113] makes use of the variety and combined knowledge of numerous models, as opposed to relying on just one, to enhance overall performance. Ensemble learning involves training multiple base models on the same dataset using various techniques or data sampling alterations. The ensemble method merges the predictions from each individual model in a meaningful manner to generate the final forecast. This combination can be achieved through techniques such as voting, averaging, or weighted averaging. Ensemble learning offers several advantages, including improved generalization and reduced overfitting by averaging the predictions from multiple models to mitigate the impact of individual model biases or errors. Additionally, it might be able to capture many facets of the data and offer a more thorough insight of the underlying trends.

Ensemble learning encompasses several effective techniques, including bagging, boosting, and stacking. Bagging involves combining the outputs from multiple models, with each model trained on different bootstrap samples drawn from the training dataset. In contrast, boosting adopts a sequential approach to model training, where each subsequent model is tailored to correct the errors of its predecessor. Stacking, on the other hand, utilizes a meta-model that optimally weighs the inputs from various base models to generate a consolidated prediction. With the advent and subsequent success of large-scale pretrained models in the realms of Natural Language Processing (NLP) and Computer Vision (CV), the concept of contrastive learning, which has its roots in the work by Hinton in the early 1990s [114], has witnessed a resurgence in popularity, showcasing its relevance and applicability in the modern era of deep learning advancements.

Contrastive Learning has emerged as a crucial tool in unsupervised scenarios, functioning as a form of self-supervised learning. Its core tenet posits that similar examples should exhibit comparable representations, with the learner focusing on discerning distinctive features rather than mastering every aspect of the sample. The objective of contrastive learning is to separate the representations of dissimilar examples while bringing together those of similar examples. This is achieved through the design of a contrastive loss function and appropriate selection of metrics. Contrastive learning has been effectively applied in various tasks, including picture-to-image translation, image captioning, autonomous driving, and visual positioning.

2.3 Transformers: The ChatGPT base

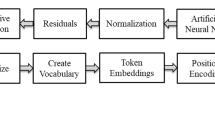

The base architecture of ChatGPT model is the transformer model [8], which is an encoder-decoder structure. The designed models on transformers are trained on billion of parameters, which are systematically arranged into layers and head units. The encoder-decoder fundamentally have several layers, where each layers consists of multiple head units to focus on different parts of the input text. Figure 4 presents the transformer architecture.

2.4 A Round of Encoding Operation

Mathematically, an encoder works on an input sequence X = {× 1, × 2,..., xn}, where n is the sequence length. Every xi is a d-dimensional input embedding. The input embedding is shown mathematically as follows.

where We is the embedding-weight matrix. Next, we have positional encoding P added to the input embeddings E, which is represented as E′, and thus E = E + P. Next, stage is the multi-head self-attention mechanism, where E′ is linearly projected into a query Q, key K, and a value V. The tuple (Q, K, V) are matrices, and are represented as follows.

The values are split into h different heads and scaled dot product attention Hi on each head is computed as follows

These attention heads are concatenated and one more time are linearly transformed, which results in the multi-head attention output, presented as follows.

Once the multi-head self-attention layer is complete, the next step in the encoding process if the Feed-Forward Neural Network (FFN), which is applied position wise in independent fashion to the output. The FFN is basically two linear layers with a Rectified Linear Unit (ReLU) activation in between, presented as follows.

Post the FFN, layer normalization is done, and the processed sequence is passed to the next encoder layer, which applies the same steps of operation. The following encoding process is successively repeated for all encoding layers.

2.4.1 A Round of Decoding Operation

The Decoder also consists of layers of multi-head attention and feed-forward neural networks but has an additional layer of multi-head attention connecting it to the output of the Encoder. First, we have the Masked Multi-Head Self-Attention, which is similar to the Multi-Head Self-Attention but with a mask to prevent future positions from being attended to. After this layer, we have the encoder-decoder attention layer, which takes the output Z of the last Encoder layer and the output Y of the Masked Multi-Head Self-Attention layer of the Decoder. The details are as follows.

where Q = Y · Wq, K = Z · Wk, V = Z · Wv.

At the final layer, the decoder output goes through a linear layer followed by Softmax layer for prediction. It is shown as follows.

The data used for training these models usually consist of large text corpora that contains websites, books, and articles. These are tokenized into subwords or words, followed by embedding into continuous vectors. The model is trained using optimization algorithms like Adam with custom learning rates, often employing techniques like learning rate warm-up and layer normalization.

2.4.2 Tokenization and Embedding

Tokenization segments a sequence of text into discrete units known as “tokens.” These tokens represent words, subwords, or single characters, depending on the specific tokenization approach chosen. This can be defined as:

Input: A textual sequence, denoted as T = {w1, w2,..., wn}, where w1, w2,..., wn are the generated words by the token.

Output: A list of tokens, represented as tokens = {t1, t2,..., tm}, with ‘m’ indicating the number of tokens generated through the tokenization process. The token creation is shown as follows.

Initially, T consisted of words w1, w2,..., wn, and the tokenization process converts each word into the number of tokens. The tokenization splits the text based on the white space or punctuation and employs a byte-pair encoding technique to convert the words into tokes. Word embedding represents words as continuous vectors in a multi-dimensional space while maintaining the semantic relationships between words.

where E(Ttoken) is a high dimensional vector representing semantic information about the tokens, this can be done using cosine similarities and an unsupervised learning algorithm. Two integrated techniques, the self-attention mechanism, and the reinforcement learning strategies to the encoding–decoding process is discussed in next section. Also, ChatGPT allows a rich set of Application Programming Interfaces (APIs) to allow interoperability with different applications, which makes it a gamechanger to connect with open-source LLM designs be developers. The details are discussed in the next subsection.

2.5 APIs and Interoperability

API is a set of rules that allows one software to interact with another application. API makes it easier to utilize the functionality of any application without having implementation knowledge by interacting with servers and back-end systems. The ChatGPT API is powered by the “gpt-3.5-turbo” model, representing OpenAI’s most advanced language model available for public use, devoid of any subscription requirements. This model features an expansive context window of 4096 tokens, marking an enhancement of 96 tokens compared to its predecessor, the Davinci-003 model. Furthermore, there exists a “snapshot” iteration named “gpt-3.5-turbo-0301,” notable for its exemption from automatic updates. This version grants developers the autonomy to integrate OpenAI’s latest model enhancements into their systems at their discretion. OpenAI is committed to the continuous refinement of the ChatGPT API, with scheduled updates and improvements slated to commence from April 2023, ensuring the API remains at the forefront of technological advancements.

The OpenAI GPT-3.5-Turbo API offers developers a multitude of advantages for enriching their applications with natural language processing (NLP) capabilities. One notable advantage is its capacity to generate text responses that closely resemble human language, thereby elevating the overall user experience of these applications. This capability unlocks avenues for creating diverse content, including blog articles, social media posts, and more. Moreover, the OpenAI GPT-3.5-Turbo API is distinguished by its continuous learning and improvement. As the model interacts with more data, its accuracy and efficacy consistently improve. This iterative learning process ensures that developers can depend on the API to provide responses that are not only up-to-date but also highly precise when addressing user inquiries. This dynamic aspect of the API presents a promising trajectory for the advancement of language-centric applications.

2.6 Key enablers for ChatGPT

With cutting-edge developments like Big Data, AI, Cloud Computing, enhanced networking like 5G and beyond, and Human–Computer Interaction, GPT is the culmination of numerous technical advancements [116]. Figure 5 provides an overview of the technologies that enable GPT working with rich interfacing in diverse applications. GPT provides the representation of the essential elements of the GPT models.

-

Bigdata: Big data is the term used to describe the enormous amounts of structured and unstructured data that are produced by organizations, people, and machines. The emergence of new technologies, like the Internet of Things (IoT) [117], has sparked an explosion in the production of data from sources like social media, sensors, and transaction-based systems. The advent of big data has revolutionized how businesses approach data analysis and decision-making. The training it has facilitated for the utilization of advanced models like GPT in the field of natural language processing (NLP) has yielded invaluable insights. GPT models, in particular, leverage deep learning (DL) techniques and big data for natural language creation. They harness vast amounts of data, ranging from millions to even trillions of samples sourced from diverse repositories such as books, papers, websites, and social media platforms.

-

An exemplar of this advancement is the sophisticated and multimodal GPT-4. With the aid of extensive and diverse training data, GPT models, including GPT-4, exhibit heightened accuracy and proficiency in NLP tasks such as questionanswering, text summarization, and language translation. Furthermore, their adaptable and versatile nature allows for customization to specific tasks and domains, and they can even be extended to produce photos and videos.

Big data offers numerous advantages for GPT, such as the capability to train with vast amounts of data. However, it also presents challenges concerning data accuracy, privacy concerns, and ethical data usage. Nevertheless, with the ever-growing accessibility of data, GPT models are poised to advance significantly, heralding a transformative future for NLP. To harness the full potential of big data and GPT models, organizations must prioritize addressing ethical concerns and ensuring data accuracy as technology progresses.

-

Human–Computer Interaction: In the realm of Human–Computer Interaction (HCI), ChatGPT benefits from several key enabling technologies that have significantly augmented its capabilities and improved user experience. Among these, Natural Language Processing (NLP) techniques stand out as fundamental pillars. Transformer-based models, in particular, have played a pivotal role in ChatGPT’s success within HCI. These models leverage attention mechanisms to grasp contextual nuances, resulting in more coherent and contextually relevant responses that mimic human conversation. Moreover, advancements in pre-training techniques have been instrumental. Techniques such as unsupervised learning and large-scale language modeling have empowered ChatGPT to generalize effectively across diverse domains. This versatility makes ChatGPT a valuable tool for various HCI applications, enhancing its adaptability and utility in real-world scenarios.

Another critical technology for ChatGPT (GPT-4 and above) is the integration of multimodal capabilities. Incorporating multiple modalities such as images, sounds, and videos alongside text can greatly enrich user interactions. ChatGPT leverages techniques from computer vision and audio processing to comprehend and generate content across different modalities, resulting in more dynamic and immersive user experiences. This multimodal approach is particularly relevant in Human–Computer Interaction (HCI), especially in the context of virtual assistants. These assistants cater to user interactions through a combination of voice commands, text inputs, and visual elements, making the ability to handle multiple modalities invaluable for enhancing user engagement and satisfaction. Overall, these pivotal technologies, grounded in advancements in Natural Language Processing (NLP) and multimodal integration, have positioned ChatGPT as a robust and adaptable tool for improving user experiences across diverse Human–Computer Interaction (HCI) scenarios.

-

Cloud Computing: In the context of Cloud Computing, key enabling technologies for ChatGPT play a critical role in harnessing the potential of AI and NLP on a scalable and efficient cloud-based infrastructure. One essential component is cloudbased infrastructure [118], which provides the computing resources required for training and deploying large-scale language models like ChatGPT, such as Graphics Processing Units (GPUs), and Tensor Processing Units (TPUs). Cloud platforms offer elasticity, enabling enterprises to allocate resources dynamically as required, facilitating the execution of compute-intensive tasks such as training and deploying advanced AI models like ChatGPT. Additionally, containerization and container orchestration technologies like Docker and Kubernetes are essential for deploying ChatGPT on the cloud [119].

Containers serve to encapsulate the program and its dependencies, ensuring consistent and repeatable deployments across multiple cloud instances. Kubernetes, functioning as an orchestration platform, automates container deployment, scaling, and management, facilitating the seamless and efficient operation of ChatGPT at scale in cloud environments. When coupled with robust cloud-based AI services, these technologies establish a foundation for integrating ChatGPT into cloud computing ecosystems. This integration enables a diverse array of applications, spanning from chatbots to natural language interfaces for cloud-based services and resources [120]. Cloud providers utilize recommender systems to optimize resource provisioning and service allocation for models like ChatGPT. These systems analyze usage patterns, workload characteristics, and performance metrics to suggest appropriate resource allocations, instance types, and configurations. This proactive approach enhances system reliability, scalability, and cost efficiency, ensuring that ChatGPT receives the necessary resources and services to operate effectively within the cloud environment [121].

-

5G and beyond communication networks: The emergence of 5G and 6G technologies presents significant potential for augmenting the functionalities of expansive language models like GPT-3.5 and GPT-4 [122]. The ultra-fast data transfer capabilities of 5G technology provide uninterrupted access to cloud-based models [123], resulting in expedited retrieval of information and enhanced real-time language processing on user devices [124]. The reduced latency observed in these networks also facilitates the lightning response of the model, which enhances the user experience in applications. The integration of 6G networks provides the opportunity for localized language model processing on user devices, hence ensuring privacy and reducing on cloud infrastructure [125, 126]. The aforementioned technical improvements have the potential to expand worldwide accessibility to LLM and enhance the availability of advanced language processing skills. The improved data transfer rates and reduced latency associated with 6G technology contribute to enhanced efficiency in model training, ultimately refining the output response to queries. Moreover, the integration of advanced AI and ML algorithms in 6G networks enables models to access more sophisticated algorithms, leading to more refined and contextually appropriate responses. This transformative capability has the potential to revolutionize interactions with AI across various domains. Additionally, to bolster data privacy, federated learning techniques are integrated with 5G networks. [127].

-

Artificial Intelligence: AI resides at the heart of ChatGPT LLMs, and it plays an essential role in these models and provides services in all area, including healthcare [128] [129], military surveillance [130], and many others. Understanding and producing human-like language necessitates the use of advanced ML algorithms and neural network topologies. ChatGPT can evaluate massive volumes of text data, learn linguistic patterns, and provide contextually relevant responses thanks to AI, particularly DL. Additionally, AI fuels ChatGPT’s ongoing evolution through finetuning and transfer learning, enabling its versatility across diverse domains and applications. Nevertheless, fully unleashing AI’s potential in Large Language Models (LLMs) like ChatGPT demands substantial processing capacity. The sheer scale and intricacy of these models necessitate robust processing capabilities. LLMs boasting billions of parameters rely on access to high-performance GPUs or TPUs for effective training and fine-tuning. To mitigate processing demands during inference and deployment, model optimization techniques like quantization and pruning come into play. These AI-driven optimizations enhance ChatGPT’s resource efficiency while preserving its linguistic proficiency. Moreover, employing ChatGPT across various applications such as virtual assistants, content generation, and language translation underscores the need for scalable cloud-based infrastructure [131]. AI-driven solutions such as auto-scaling and containerization guarantee seamless deployment of ChatGPT across multiple applications, efficiently utilizing computational resources as required. In summary, AI empowers ChatGPT LLMs to excel in natural language understanding and generation. However, effective utilization mandates access to substantial computational resources, meticulous model optimization, and flexible deployment strategies tailored to diverse applications.

3 Demystifying ChatGPT: Insights into OpenAI LLMs

The advent of Large Language Models (LLMs) has ushered in a new era of Natural Language Processing (NLP) and comprehension, with ChatGPT emerging as a prominent exemplar. This study aims to delve into the intricacies of OpenAI’s LLMs, shedding light on their underlying mechanisms, capabilities, and implications. ChatGPT’s development marks a significant milestone in AI research, solidifying Ope-nAI’s commitment to advancing AI in a responsible and ethical manner. Through an exhaustive examination of its architectural design, training methodologies, and ethical considerations, this research endeavors to provide a comprehensive resource for scholars, practitioners, and policymakers alike. One of the primary distinguishing features of ChatGPT is its challenging scale and sophistication. With billions of parameters, it demonstrates remarkable prowess in generating coherent and contextually relevant natural language responses. This study will dissect the underlying architecture responsible for such capabilities, with a particular focus on deep neural networks and Transformer-based models. Furthermore, ethical aspects surrounding ChatGPT’s utilization will be scrutinized, encompassing bias mitigation strategies, content generation guidelines, and the ethical deployment of LLMs in real-world scenarios. This section aims to contribute to the ongoing discourse regarding the responsible development and deployment of advanced language models by elucidating the inner mechanisms of ChatGPT and tackling the inherent challenges.

3.1 Unveiling the Multifaceted Roles of ChatGPT: From Assistant to Innovator

The comprehensive functions of GPT are depicted in Table 6, which intricately delineates its various roles, its corresponding descriptions, and the pertinent user demographics it serves.

ChatGPT plays a versatile role, serving a diverse user base that includes students, learners, content creators/developers, and general information seekers. It excels in providing clear explanations for complex concepts, facilitating learning and content creation. Researchers leveraging ChatGPT’s insights should verify its content against established literature and evidence to ensure accuracy and impartiality in their research papers. Additionally, ChatGPT offers robust support across customer service, education, and entertainment domains, showcasing rapid adaptability to emerging information.

Equipped with assistant-like capabilities, ChatGPT stands poised to revolutionize industries by generating authentic content and making certain professions more accessible. This advancement serves a broad spectrum of users, including individuals seeking up-to-date information, businesses leveraging ChatGPT for enhanced customer interaction, linguists, and those seeking increased accessibility and guidance across various domains and platforms. The content it provides upholds scholarly integrity, ensuring the absence of plagiarism or AI-induced bias, which resonates well with researchers. ChatGPT excels at enhancing knowledge and capabilities. It holds a competitive edge in conversational AI development by its capacity to learn from interactions, adapt, and comprehend diverse topics, delivering contextually appropriate responses. This versatility offers advantages to various stakeholders, including individuals desiring enhanced conversational experiences, conversational AI researchers and developers, industry leaders like OpenAI and its rivals, as well as content creators and data providers enriching ChatGPT’s training data. Such capabilities cater to a wide array of needs and interests within the AI landscape, fostering innovation and progress.

In addition to these, ChatGPT boasts advantages across a myriad of other tasks. Its versatility enables it to undertake a diverse range of roles, including code generation, meal suggestions, enhancing overall quality of life, completing creative tasks, handling user inquiries, offering guidance, serving as a virtual assistant, and transforming software development. Such versatility proves beneficial for software developers, programmers, everyday users seeking assistance and advice, researchers, academics, and industry professionals within the technology sector. [148].

To effectively address customer inquiries, offer recommendations, predict outcomes, assist in code enhancement, foster innovation through personalized bot creation, and facilitate content moderation on social media platforms, ChatGPT assumes a pivotal role in query resolution, seamlessly integrating into business workflows. This feature serves a wide array of stakeholders, including companies, customer service teams, end users, software developers, social media moderators, and administrators, benefiting from its capabilities. In corporate settings, ChatGPT finds practical application in supporting marketing endeavors, fostering audience engagement, and achieving overarching marketing objectives. However, safeguarding sensitive data against potential breaches is paramount, necessitating careful consideration of associated risks by policymakers. Enterprises, marketers, regulatory bodies, employees, organizational users, and the general public are among the various groups poised to reap the benefits of such applications. These entities frequently encounter ChatGPT and generative AI technologies within the realm of business operations. For professionals seeking to enhance their proficiency in digital marketing, ChatGPT proves invaluable, assisting in the creation of content suitable for social media updates, blogs, and other online publications, thereby augmenting campaigns and promoting engagement with target audiences. Moreover, it provides recommendations for tailoring headlines, introductions, and paragraphs to specific keywords. Customer service representatives, seasoned industry specialists, digital marketers, comprehensive marketing teams, diligent market researchers, and data analysts are just a few examples of individuals poised to leverage this technology.

ChatGPT undertakes a multitude of tasks aimed at interpreting and effectively conveying thoughts and concepts. It excels in generating code, facilitating the translation of ideas from natural language to programming languages, and assisting programmers in debugging their code. With capabilities spanning a wide spectrum of natural language processing (NLP) activities, ChatGPT produces outputs comparable to those written by humans, owing to its extensive training on a diverse dataset sourced from the internet. Programmers, developers, students, learners, and anyone interested in programming and NLP stand to benefit significantly from these functionalities. As a versatile tool, ChatGPT offers functionalities such as programming language validation, concept translation, and code generation. ChatGPT’s appeal rests on its user-friendliness and its ability to generate text that closely resembles human writing. Leveraging a vast dataset of internet content, ChatGPT adeptly handles a myriad of natural language processing (NLP) tasks. Its utility extends to various user groups, including enthusiasts seeking improved interpretability in chatbots, researchers and developers exploring interpretability within AI systems, market researchers and data analysts leveraging ChatGPT to enhance survey design and extract invaluable insights from consumer data, among others. By actively incorporating user feedback, Chat-GPT continuously enhances the quality of its responses and overall user engagement, thereby elevating its interpretational capabilities. This dynamic feature extends to intelligent survey creation, facilitating accurate data collection, and analyzing unstructured customer input to unveil intricate patterns in consumer attitudes and behaviors. Such versatility proves beneficial to diverse user segments, ranging from individuals seeking genuine and meaningful conversations to researchers and developers delving into AI advancements, technology enthusiasts keen on emerging developments, and content creators harnessing the untapped potential of dialogue generation.

ChatGPT enhances students’ writing skills by injecting excitement and curiosity into their writing process. It offers personalized assignments, real-time suggestions, and expert guidance, tailoring prompts to suit each student’s unique preferences and skill levels. Leveraging AI technology, this inclusive approach not only bolsters writing proficiency but also cultivates an engaging and enriching writing experience for various stakeholders, including students, educators, and educational institutions. Moreover, when AI models are transparent and explainable, they lend meaning to the decisions they make, instilling trust in the model and its capabilities [149].

ChatGPT’s remarkable linguistic capabilities hold significant implications for the education sector. It transforms student interactions, offers valuable instructional assistance, serves as a user-friendly search tool, and greatly contributes to enhancing writing skills. The educational benefits derived from ChatGPT’s capabilities have the potential to reshape how information is accessed and shared, resonating strongly with students, educators, and anyone who values a conversational approach to searching for information.

ChatGPT fosters the cultivation of outstanding writing abilities by prioritizing progress and advancement. It aids digital marketers in crafting impactful and compelling advertising content, thereby enhancing operational effectiveness, while also delivering precise AI assessments and tailored feedback. This functionality caters to a diverse range of users, including writers, content creators, educators, trainers, and professionals in digital marketing. The invaluable support provided by ChatGPT in skill refinement and the crafting of impactful communication stands to benefit all these stakeholders.

ML serves as the backbone for generative AI systems like ChatGPT, enabling them to generate unique content based on existing information. This transformative capability holds the promise of revolutionizing work environments and offering invaluable insights for market research and relationship-building efforts. A diverse array of beneficiaries stand to gain from this innovation, including knowledge workers, researchers, developers, content creators, market analysts, and everyday consumers seeking insightful and imaginative solutions. Leveraging extensive training on human-generated online content and predictive text capabilities, OpenAI’s ChatGPT generates AI-powered responses spanning a wide spectrum of topics and writing styles. This versatile tool appeals to a broad audience, including art enthusiasts, poets, creative writers, authors, content creators, scholars, students, and researchers. Through the automation of processes, streamlining of workflows, and enhancement of cybersecurity via virtual assistants and generative AI technologies, ChatGPT enhances the execution of routine office operations. The beneficiaries of this capability encompass office staff and employees who rely on seamless support to accomplish their daily tasks effectively.

3.2 ChatGPT Language Generation Model-Attention Model and RL for LLMs

The ChatGPT language generation model is built on a pre-trained transformer architecture, specifically tailored for natural language processing (NLP) tasks, particularly in the domain of conversational AI. The core components of this generation model comprise:

-

Architecture: The architecture of ChatGPT is rooted in transformers, which excel at capturing context from input queries and establishing relationships with prompt sequences. It leverages a self-attention mechanism to focus on different parts of the input sequence, facilitating the generation of responses. This inherent design makes ChatGPT remarkably efficient in producing natural language text.

-

Pre-training: In this phase, ChatGPT learns to predict the next word in the output response by training on a large corpus of text data from the internet. This process enables the model to develop a comprehensive understanding of common language usage and context.

-

Finetuning: After the initial pre-training phase, the model must undergo fine-tuning on a specific dataset to optimize its performance for that particular domain. This fine-tuning process customizes the model’s behavior for specific tasks, such as question-answering and chatbots.

-

Conversational ability: It is engineered to engage in conversations akin to human interactions. With the ability to grasp the user’s queries, comprehend context across multiple exchanges, and generate responses that are contextually relevant. This makes the system suitable for virtual assistants of any business application.

-

Deployment: It finds applications in services demanding human-like responses across multiple queries of the same context, including virtual assistants and customer support, which may be either voice or text-based.

3.2.1 Attention Model

Attention models in transformers play a vital role in capturing dependencies within the input sequence. They calculate the weighted sum of the input query, considering both relevance and position in the sequence, achieved through scaled dot-product attention. This mechanism enables the model to prioritize specific parts of the input data during output generation by assigning varying levels of attention. The three primary attention mechanisms are:

-

Self Attention: It identifies dependencies and relationships among tokens in the input query, enabling the model to learn contextual information effectively.

-

Encode-Decoder Attention: The encoder processes the input, while the decoder handles the output sequence, aligning the input and output by transferring information between them.

-

Multi-Head Attention: It executes the attention mechanism multiple times concurrently. This parallel processing involves utilizing learned parameters from distinct sub-spaces to perform multiple attention operations simultaneously.

The attention score between query Q and key-vector V is defined as follows.

where Q is the query vector derived from the current position of the words, which can be learned by xi.Wq where x ∈ [t1, t2,..., tn] denote tokens of the input query and W is the learned value weight matrix. Key vector K is derived from the position of the token in the input sequence, which is learned by xi.Wk. \(\sqrt {{\text{d}}_{{\text{k}}} }\) is the dimensions of the key vector. The attention weight is calculated by the following equation.

The weighted sum of the value vector is calculated as follows.

The value vector V contains the information associated with each position in the input sequence, which is learned by xi.Wv.

3.2.2 Reinforcement Learning in LLMs

Integration of Reinforcement Learning (RL) in LLMs enables the model to perform sequential decision-making and interaction with the system. It uses a special signal to guide the model to make better decisions. The objective function of integrating RL in LLM can be defined as follows.

where MAXθ defines the optimization that optimize the parameter function θ, and θ are the parameter of LLM. Eπ represents the expectations of which behavior or policy a model would use. \(\sum\nolimits_{\infty }^{{{\text{t}} = 0}} {{\text{is}}}\) summing from time stamp 0 to infinite horizons of RL. Y represents the importance of reward in the future, which ranges from 0 to 1. R(st, at) represents the reward function that assigns a scaler value to the internal state of the model(st) and action of generated text sequence(at).

4 Open Issues and Challenges

In this section, we outline the open issues and research challenges related to the integration of ChatGPT into various domains. The details are as follows:

4.1 Open Challenges

Despite ChatGPT’s major contributions to scientific advances, it is critical to recognize and address the research challenges that have arisen as a result of its adoption. Table 7 presents an overview of future research options and probable directions. This part investigates these problems and evaluates ChatGPT’s potential in the scientific community through emerging developments. The following are common concerns that arise when using ChatGPT for scientific research.

Table 7 indicates the open issues of the ChatGPT. Trust in information generated by AI must be maintained by consistency and precision [150]. Despite ChatGPT’s outstanding humanoid writing skills, certain errors and inaccuracies have been found. It is crucial to increase the accuracy and coherence of AI-generated information in order to guarantee the validity of scientific discoveries. Concerns regarding AI-generated unfairness are raised by the usage of enormous amounts of text to train ChatGPT [151]. The AI model may unintentionally reinforce biases found in the training data, thus affecting subsequent research and studies. In order to stop the spread of unfair and biased information, this emphasizes the necessity of addressing biases in the training data.

The pervasive reliance on advanced AI models such as ChatGPT may potentially compromise researchers’ autonomy and critical thinking skills. Therefore, it is imperative to strike a balance between leveraging AI technology and preserving researchers’ independence and analytical capabilities [152]. While ChatGPT has the capability to generate high-quality language, there is a risk of producing inappropriate or low-quality responses. To ensure consistent production of top-notch content, continuous monitoring, learning, and improvement initiatives are essential [153].

The biases included in the training data can have an impact on ChatGPT’s performance. When utilized to produce predictions, biased data can have negative consequences in industries including healthcare, manufacturing, law enforcement, and employment [154, 166]. It is crucial to take into account the volume and variety of data utilized for training AI models in order to reduce this risk. Due to its massive dataset training, ChatGPT frequently has trouble generalizing to new data [155]. In order to strengthen ChatGPT’s capacity to generalize and perform better, new training methods must be created.

Understanding the ChatGPT model’s decision-making process and spotting its shortcomings is challenging due to its intricacy. Enhancing explainability is crucial to fostering confidence in AI models and making it possible to see any faults [156]. ChatGPT models use a lot of computational resources, which can be harmful to the environment. In order to lessen the ecological impact of ChatGPT models, there is a need to improve their power efficiency. While ChatGPT may produce text quickly, its responses can occasionally be delayed [157]. Users that want quick and flexible interactions would benefit from ChatGPT’s increased speed and adaptability. It is essential to guarantee the security and integrity of content produced by ChatGPT [158]. To stop the formation of detrimental or harmful content, such as intolerance, precautions must be implemented.