Abstract

Brain tumors are considered among the deadliest diseases of the century. At present, neuroscience and artificial intelligence work together in the timely delineation, detection, and classification of brain tumors. Manually classifying and segmenting large volumes of MRI scans is a challenging and laborious task. Therefore, computer-aided diagnosis systems are essential for diagnosing brain tumors in a timely manner. This review focuses on the advances of the last decade in brain tumor segmentation, feature extraction, and classification using the powerful and versatile brain imaging modality of Magnetic Resonance Imaging (MRI), with particular emphasis on deep learning and hybrid techniques. We summarize the work published in the last decade (2010–2019), termed the 10s, and in the present decade (covering only the year 2020), termed the 20s. The decades under review bear witness to a revolutionary paradigm shift in artificial intelligence: from conventional machine learning methods, to emerged deep learning, to emerging hybrid techniques. This review also covers persistent concerns about the choice of classifier and notable trends in the MRI modalities commonly employed for brain tumor diagnosis. Moreover, this study outlines limitations, solutions, and future trends, and highlights open challenges for researchers developing efficient systems with clinically acceptable accuracy that assist radiologists in brain tumor prognosis.

1 Introduction

The prevalence of brain tumors contributes significantly to the global death rate. According to the GLOBOCAN 2020 report, there were 308,102 new brain cancer cases, and brain cancer accounted for 2.5% of all cancer deaths [1]. Tumors originating in the brain can be categorized into four main types: gliomas, meningiomas, pituitary adenomas, and nerve sheath tumors. The World Health Organization (WHO) classifies brain tumors by cell origin and behavior, from least to most aggressive [2]. Low-grade gliomas (LGG; grades I and II) and high-grade gliomas (HGG; grades III and IV) are two major categories of brain tumors. HGGs grow rapidly, with a maximum life expectancy of about two years. In contrast, LGGs grow slowly and sometimes allow patients many years of life expectancy. Brain tumors also vary in location, shape, and size, and often show poor contrast, so their intensity values overlap with those of healthy brain tissue [3]. These characteristics reflect the complexity of tumor growth and influence the predicted extent of resection during surgical planning, which has implications for patient treatment [4]. Therefore, distinguishing tumor from healthy tissue and classifying it precisely is not an easy task. Reliable segmentation and classification are important for determining tumor size, exact position, and type.

Timely detection is essential for treating brain tumors effectively. Diagnostic techniques such as computed tomography (CT), biopsy, cerebral angiography, myelography, positron emission tomography (PET), and MRI play a vital role in brain tumor detection [5]. Among them, MRI and CT, both non-invasive imaging modalities, are the most commonly used. MRI provides a detailed scan that can readily reveal brain tumors and other abnormalities such as infections.

MRI is also the most widely used imaging system for detecting several diseases and for treatment planning in clinical trials, especially for brain tumors [6]. Neurological MR images for brain tumor diagnosis are captured in three views, namely axial, coronal, and sagittal [7], as illustrated in Fig. 1a. Three primary MR sequences, T1-weighted (T1-W), T2-weighted (T2-W), and FLAIR, are used for brain tumor analysis [8], as illustrated in Fig. 1b. Traditionally, brain tumor diagnosis relies on expert radiologists performing a precise analysis and comprehensive review of the images. However, owing to the limited availability of such expertise, this process is time-consuming.

Computer-aided diagnosis (CAD) systems help radiologists diagnose brain tumors quickly, thereby reducing the mortality rate due to brain cancer. The fundamental rationale of CAD is to automate the detection of brain tumors in images with high accuracy and reliability. Many articles on brain tumor detection, classification, and segmentation have been published to date, and the majority focused on conventional machine learning-based approaches. Machine learning (ML) techniques are well suited to big data challenges such as brain tumor segmentation. However, ML-based image recognition generally requires human intervention and expertise [9], because typical ML methods rely on human-designed feature extraction techniques to capture tumor properties in imaging data [10]. For instance, Hu et al. used a decision tree classifier with hand-crafted features to predict underlying tumor molecular alterations [11]; these features were extracted from biopsies of 13 subjects using textural metrics. In contrast, deep learning (DL) techniques do not require pre-selected features because they automatically learn the features most appropriate for identification and prediction. Deep learning mines important features, evaluates patterns, and categorizes information by extracting multi-level features: lower-level features include corners, edges, and basic shapes, while higher-level features include image texture, more complex shapes, and particular image patterns [12]. Deep learning techniques have also been used to extract features from additional information and integrate them into the recommendation process [13]. However, deep learning alone is often unable to maintain spatial consistency and accurate visual delineation of the segmented structures.
Therefore, the research paradigm for brain tumor detection, segmentation, and classification has shifted towards hybrid techniques. A hybrid approach combines the strengths of several classifier systems in a single system to improve overall accuracy.

The paradigm shift from conventional machine learning to deep learning and hybrid approaches in brain tumor analysis inspired us to conduct an extensive review of the last decade (10s) and the present decade (20s). The primary objectives of this work are summarized as follows.

- To summarize previously reported work on brain tumor segmentation, feature extraction, and classification using brain MRI scans.
- To show the development of soft computing, in particular artificial intelligence (AI), across the entire field of brain tumor analysis from both application-driven and methodological perspectives.
- To assist researchers in designing state-of-the-art CAD methods that can help radiologists in the early diagnosis of brain tumors.
- To present current trends in deep learning and hybrid approaches for tumor prognosis.
- To present the key findings in a dedicated discussion section that traces the swing of this paradigm shift.
- To highlight prospects and open research challenges for the successful, fully automatic identification of brain tumors.
- To present a statistical analysis of the literature across various factors, summarized in graphs.
- Lastly, to study and compare the performance of CAD systems for brain tumor segmentation, feature extraction, and classification using multi-modal MR scans over 2010–2020.

In this manuscript, we used search databases including Google Scholar, Scopus, IEEE Xplore, ScienceDirect, and PubMed to find the most relevant papers with different queries, limiting the search to manuscripts published between 2010 and 2020. We used the following queries in various combinations: “brain cancer diagnosis”, “Brats dataset segmentation”, “brain tumor segmentation and classification”, “brain tumor detection using machine learning and deep learning classifiers”, “brain tumor MRI and deep learning”, “brain tumor using Harvard dataset”, “brain tumor detection and BrainWeb dataset”, “brain tumor detection and segmentation using TCIA dataset”, “artificial intelligence and brain tumor”, etc. More than 400 related papers were thoroughly reviewed; among them, the 190 most relevant to brain tumor detection, segmentation, and classification were chosen for this manuscript.

After this introduction, the review is organized as follows. Sect. 2 presents the development of brain tumor segmentation techniques using MRI over 2010–2020. Sect. 3 summarizes the development of brain tumor feature extraction and classification techniques using MRI over the same period. Sect. 4 presents a statistical analysis of the two decades that examines the pros and cons of the published literature for designing a reliable, automated, cost-effective, and robust secondary diagnostic tool, i.e., a CAD system. Sect. 5 presents current trends in deep learning-based brain tumor diagnosis, and Sect. 6 illustrates current trends in hybrid-based diagnosis. Sect. 7 provides a comprehensive discussion that briefly elaborates on the limitations, research findings, and research challenges. Sect. 8 briefly explains future research directions for selecting appropriate techniques, image modalities, and datasets for brain tumor segmentation and classification. Finally, Sect. 9 concludes the review.

2 The Development of Tumor Segmentation Techniques (2010–2020)

Segmentation is the process of partitioning an image into regions of interest (ROIs) to simplify the depiction and characterization of the data. Its critical objective is to locate tumor regions, by changing the representation of the MR images, so that brain tumors can be diagnosed and classified more easily. It separates tumor regions, such as necrosis and edema, from non-tumor regions, mainly white matter (WM) and gray matter (GM) [3], as presented in Fig. 2.

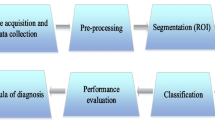

Owing to the complex anatomy and high variability of the brain, segmenting or labeling brain MR scans is challenging. Several conventional techniques have been used for brain tumor segmentation so far, including contour- and shape-based methods, thresholding-based techniques, edge- and region-based algorithms, statistical approaches, and multi-resolution analysis. In this review, the methods are categorized as conventional/ML-based methods (traditional approaches), DL-based methods (emerged methods), and hybrid-based approaches (emerging techniques), as illustrated in Fig. 3. The pros and cons of the most commonly used segmentation and classification techniques are briefly summarized and compared in Table 1.
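
Thresholding is the simplest of the conventional techniques listed above. As a minimal sketch, assuming NumPy and a single 2-D slice containing one bright region, Otsu's method picks the intensity that maximizes the between-class variance of the histogram. The function name `otsu_threshold` and the synthetic image are illustrative and are not taken from any reviewed study.

```python
import numpy as np

def otsu_threshold(image, bins=256):
    """Return the intensity that maximizes between-class variance (Otsu's method).

    Pixels with values >= the returned threshold form the foreground class.
    """
    hist, edges = np.histogram(np.ravel(image), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    p = hist / hist.sum()                  # normalized histogram
    w0 = np.cumsum(p)                      # weight of the class below each candidate split
    w1 = 1.0 - w0                          # weight of the class above it
    mu = np.cumsum(p * centers)            # cumulative first moment
    mu_t = mu[-1]                          # global mean intensity
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu_t * w0 - mu) ** 2 / (w0 * w1)
    sigma_b = np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)
    k = int(np.argmax(sigma_b))            # last histogram bin of the lower class
    return float(edges[k + 1])             # upper edge of that bin

# Synthetic slice: dim background with a bright 20 x 20 "lesion".
rng = np.random.default_rng(0)
img = 0.2 + rng.normal(0.0, 0.02, (64, 64))
img[20:40, 20:40] = 0.8 + rng.normal(0.0, 0.02, (20, 20))
t = otsu_threshold(img)
mask = img >= t
print(f"threshold={t:.3f}, lesion pixels={mask.sum()}")
```

Real MR slices rarely have such clean bimodal histograms, which is one reason the reviewed work moved beyond global thresholding.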

2.1 ML-Based Segmentation

Many researchers have applied ML-based techniques to segment brain tumors. Amin et al. designed an automated segmentation network for brain tumor MR images in which a support vector machine (SVM) classifier with different kernels categorizes images as cancerous or non-cancerous. The model was evaluated on the standard Harvard and Rider datasets, and the experimental results demonstrate that it performed the segmentation task efficiently [14]. Mehmood et al. used a self-organizing map (SOM) clustering algorithm for brain lesion segmentation [15], with a reported accuracy of 0.76. In another report, Demirhan and Guler proposed combining SOM and learning vector quantization (LVQ) to segment WM and GM [16]. Zexuan et al. implemented generalized rough fuzzy C-means clustering for tumor segmentation [17]. As an alternative to fuzzy C-means (FCM) methods, another technique categorized WM, cerebrospinal fluid (CSF) spaces, and GM using adaptive fuzzy K-means (AFKM) clustering; the authors report that AFKM produced superior results to FCM both qualitatively and quantitatively [18].
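
Clustering methods such as FCM assign each pixel a graded membership in every tissue class. The sketch below is a minimal, standard FCM on 1-D intensities, assuming NumPy; it is not the generalized rough FCM of [17] or the AFKM of [18], and the three synthetic intensity groups merely stand in for CSF, GM, and WM.

```python
import numpy as np

def fuzzy_c_means(x, c=3, m=2.0, max_iter=300, tol=1e-6, seed=0):
    """Standard fuzzy C-means on 1-D intensities; returns (centers, memberships)."""
    x = np.asarray(x, dtype=float).ravel()
    rng = np.random.default_rng(seed)
    u = rng.random((c, x.size))
    u /= u.sum(axis=0, keepdims=True)          # each pixel's memberships sum to 1
    for _ in range(max_iter):
        um = u ** m
        centers = um @ x / um.sum(axis=1)      # membership-weighted cluster means
        dist = np.abs(x[None, :] - centers[:, None]) + 1e-12  # avoid division by zero
        # u_ij = 1 / sum_k (d_ij / d_kj) ** (2 / (m - 1))
        ratio = (dist[:, None, :] / dist[None, :, :]) ** (2.0 / (m - 1.0))
        u_new = 1.0 / ratio.sum(axis=1)
        converged = np.max(np.abs(u_new - u)) < tol
        u = u_new
        if converged:
            break
    um = u ** m
    centers = um @ x / um.sum(axis=1)          # centers consistent with final memberships
    return centers, u

# Synthetic intensities for three tissue-like classes (e.g., CSF, GM, WM).
rng = np.random.default_rng(1)
x = np.concatenate([rng.normal(mu, 0.02, 200) for mu in (0.1, 0.5, 0.9)])
centers, u = fuzzy_c_means(x)
labels = u.argmax(axis=0)                      # defuzzify: hard label per pixel
print(np.sort(centers))
```

The fuzzifier `m` controls how soft the memberships are; `m = 2` is a common default rather than a value taken from the cited studies.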

The majority of previous research focused on ML-based approaches, which are well suited to big data challenges such as brain tumor segmentation. However, some ML-based methods rely on manually segmented training images, and manual segmentation is expensive, tedious, and requires a team of expert radiologists. ML therefore generally requires human intervention and expertise [9], as typical ML methods rely on human-designed feature extraction techniques to capture tumor properties in imaging data [10].

2.2 DL-Based Segmentation

DL techniques do not need an initial feature selection step because they automatically learn the features appropriate for identification. DL is a subgroup of ML that automatically mines important features, evaluates patterns, and categorizes information by extracting multi-level features [12]. Various DL models and methods are available for tumor segmentation from MRI scans.

Havaei et al. used a convolutional neural network (CNN) for brain tumor segmentation [19]; the experimental results show a Dice score of 0.88 together with reduced segmentation time. Pereira et al. established a CNN-based automated brain tumor delineation system with a Dice score of 0.88 [20]. A 3D-CNN model for brain lesion segmentation achieved a Dice similarity coefficient (DSC) of 0.89 [21]. An automated deep neural network (DNN) model for brain tumor segmentation in MRI scans achieved a Dice score of 0.72 [22]. A fully convolutional residual neural network (FCR-NN) was implemented for tumor segmentation, with a Dice score of 0.87 [23]. Similarly, [24] employed a DNN-based automated segmentation method with a Dice score of 0.87. In addition, a fully convolutional network (FCN), a type of DNN, was used for pixel-wise representation in tumor semantic segmentation from T1, T1c, T2, and FLAIR scans, which segmented the tumor regions more accurately [25]. Despite the benchmark results achieved by deep learning algorithms in brain tumor segmentation, purely deep learning-based methods still have limitations for accurate automated segmentation, for instance, a limited capacity to delineate visual objects and an inability to account for the spatial consistency and appearance of the segmentation results [26, 27].
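
Because the Dice scores above are fractions between 0 and 1 (a score of 0.88 means 88% overlap), it may help to see how the metric is computed. The following is a minimal sketch assuming NumPy and binary masks; `dice_score` is an illustrative name, not a function from any cited work.

```python
import numpy as np

def dice_score(pred, truth):
    """Dice similarity coefficient 2|A ∩ B| / (|A| + |B|) between two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0                                   # both masks empty: perfect agreement
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)

truth = np.zeros((32, 32), dtype=bool)
truth[10:20, 10:20] = True                           # 100-pixel reference tumor
pred = np.zeros_like(truth)
pred[10:20, 11:21] = True                            # prediction shifted by one column
print(dice_score(pred, truth))                       # 2 * 90 / (100 + 100) = 0.9
```

A one-pixel shift of a small lesion already costs 0.1 Dice, which is why boundary accuracy matters so much in the comparisons above.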

What is needed now is a brain tumor segmentation architecture that segments tumor regions effectively, requires less memory, computes quickly, and improves boundary delineation. Therefore, research has shifted towards efficient hybrid techniques.

2.3 Hybrid-Based Segmentation

The recent success of hybrid techniques in the medical domain reflects researchers' interest in computer vision. Hybrid systems combine two or more methods to overcome issues such as high computational time, low accuracy, and limited effectiveness.

Mittal et al. proposed a combined SWT-CNN framework for brain tumor segmentation to improve the accuracy of CNN-based models [28]. The stationary wavelet transform (SWT) was applied for feature extraction instead of the Fourier transform, because it gives better results for discontinuous data, followed by a random forest (RF) for classification. The technique yields a 2% improvement over a conventional CNN. Bahadure et al. proposed a Berkeley wavelet transform (BWT) with an SVM framework for tumor segmentation [29], in which BWT performs feature extraction and an SVM performs classification. The authors report an accuracy of 96.51%, a specificity of 94.2%, and a sensitivity of 97.72%. In another study, a segmentation method based on the fusion of RF and SVM (RF-SVM) was implemented for tumor lesions; in this two-stage cascaded framework, the RF learns from tumor labels and its output is fed to the SVM to classify the labels [30]. Zhao et al. combined a CNN with conditional random fields (CRFs) for efficient brain tumor segmentation and achieved a Dice score of 0.87 [31]. The progress in brain tumor segmentation over 2010–2020 is reviewed in Table 2, and an overview of freely available databases for brain tumor segmentation is given in Table 3.

This survey, a comparative study of more than fifty segmentation approaches from 2010–2020, leads us to the following findings:

(1) Table 2 shows that various methodologies and algorithms have been developed for brain tumor segmentation in the past few years. Some are fusion/hybrid algorithms, whereas others are modified versions of basic algorithms.

(2) The shift towards hybrid techniques is noticeable. However, some researchers still rely on simple ML and DL algorithms to achieve benchmark performance. Ito et al. segmented brain tumors in MR images using a semi-supervised deep learning technique [32], which attained improved results. Ren et al. developed an automated kernel-based FCM with a weighted fuzzy kernel clustering model that improves brain image segmentation [33]; the combined algorithm achieved a misclassification rate below 2.36%. In the deep learning area, Sundararajan et al. used a CNN algorithm for tumor segmentation with an accuracy of 89% [34]. Deng et al. used a basic CNN model with minor modifications [35], improving accuracy to 90.98%.

(3) Over the last few years, deep learning algorithms, especially DCNNs, have been the top performers [19, 36,37,38]. However, the main limitation of DCNNs is their dependence on massive training data annotated by expert radiologists from different institutions, which is difficult to obtain.

(4) Combining prior knowledge with artificial intelligence has led to framework designs with improved brain tumor segmentation results.

(5) The most commonly employed ML methods are SVM, FCM, and C-means, while the most commonly employed DL methods are CNN and DCNN. Hybrid techniques combine two or more ML or DL techniques; for instance, Thillaikkarasi and Saravanan used a kernel-based CNN with M-SVM for tumor segmentation, achieving a Dice score of 0.85 [39]. The guiding principle of hybrid techniques is to achieve a robust, accurate, and low-cost solution for tumor segmentation.

3 The Development of Brain Tumor Feature Extraction and Classification Techniques (2010–2020)

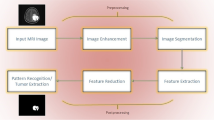

Classification is the process of assigning input features to categories (classes). Before a CAD system can classify and detect brain tumors, a key stage is feature analysis and feature selection. The curse of dimensionality is overcome by reducing redundancy in the feature space through discriminative, relevant, and informative feature sets. The feature extraction step requires many MRI slices (e.g., from the axial, coronal, and sagittal planes) with ground truth.

3.1 Feature Extraction

Feature extraction is the process of converting an MRI scan into a set of features for classification. Extracting a set of distinctive features is challenging. Various feature extraction techniques are used for this purpose, including principal component analysis (PCA), spectral mixture analysis (SMA), texture features, Gabor features, nonparametric weighted and decision boundary feature extraction, wavelet transform-based features, and discriminant analysis (DA) [78], as shown in Fig. 3. Recently, the most efficient CAD systems apply the discrete wavelet transform (DWT) [79] to obtain wavelet coefficients at various levels.
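
As a minimal sketch of DWT-based feature extraction, assuming NumPy and the Haar wavelet (the reviewed CAD systems may use other wavelet families), the code below computes one level of the 2-D orthonormal Haar DWT and collects subband energies over several levels into a compact feature vector. The function names are hypothetical, and the naming of the LH/HL detail bands varies between references.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(image):
    """One level of the orthonormal 2-D Haar DWT; returns (LL, LH, HL, HH)."""
    x = np.asarray(image, dtype=float)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("image dimensions must be even")
    # Filter along rows: scaled pairwise sums (low-pass) and differences (high-pass), downsampled by 2.
    lo = (x[:, 0::2] + x[:, 1::2]) / SQRT2
    hi = (x[:, 0::2] - x[:, 1::2]) / SQRT2
    # Filter the results along columns in the same way.
    ll = (lo[0::2] + lo[1::2]) / SQRT2
    lh = (lo[0::2] - lo[1::2]) / SQRT2
    hl = (hi[0::2] + hi[1::2]) / SQRT2
    hh = (hi[0::2] - hi[1::2]) / SQRT2
    return ll, lh, hl, hh

def wavelet_energy_features(image, levels=2):
    """Mean energy of each detail subband per level, plus the final approximation."""
    feats, ll = [], np.asarray(image, dtype=float)
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)             # recurse on the approximation band
        feats.extend(float(np.mean(b ** 2)) for b in (lh, hl, hh))
    feats.append(float(np.mean(ll ** 2)))
    return np.array(feats)

rng = np.random.default_rng(0)
slice_ = rng.random((16, 16))                       # stand-in for an MRI slice
bands = haar_dwt2(slice_)
features = wavelet_energy_features(slice_, levels=2)
print(features.shape)                               # (7,)
```

Because the transform is orthonormal, the total energy of the four subbands equals that of the input, so the energy features partition the image's signal content across scales.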

Feature reduction is an additional step that lowers the data dimension; independent component analysis (ICA), PCA, and linear discriminant analysis (LDA) are commonly applied for this purpose [80]. Combining feature extraction with feature reduction has led to CAD systems that classify images with clinically acceptable accuracy using a few features computed with low computational resources. Such CAD methods can be used effectively as secondary diagnostic tools for brain tumor classification.
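
Dimensionality reduction with PCA can be sketched in a few lines, assuming NumPy and a feature matrix with one row per image. The synthetic features below are generated from two underlying factors, so two components capture almost all of the variance; the function name `pca_reduce` is illustrative.

```python
import numpy as np

def pca_reduce(features, n_components):
    """Project samples (rows) onto the top principal components via SVD."""
    X = np.asarray(features, dtype=float)
    Xc = X - X.mean(axis=0)                        # PCA operates on mean-centered data
    _, s, vt = np.linalg.svd(Xc, full_matrices=False)
    components = vt[:n_components]                 # principal axes, one per row
    explained = s ** 2 / np.sum(s ** 2)            # variance fraction per component
    return Xc @ components.T, components, explained[:n_components]

rng = np.random.default_rng(0)
latent = rng.normal(size=(100, 2))                 # two hidden factors
mixing = rng.normal(size=(2, 10))
features = latent @ mixing + 0.01 * rng.normal(size=(100, 10))  # 10 correlated features
reduced, comps, ratio = pca_reduce(features, 2)
print(reduced.shape, ratio.sum())
```

In practice, the number of retained components is often chosen so that the cumulative explained-variance ratio passes a preset level.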

Moreover, to reduce the intensity variation of MRI scans, various filters, feature extraction and selection methods, or combinations of them are applied. For instance, Gabor wavelet features capture texture information from the MRI scan, kernel principal component analysis (KPCA) lowers redundancy by retaining only a small number of components, and the Gaussian radial basis function (RBF) helps extract salient information from a set of features [81]. In contrast, methods based on pre-trained CNNs employ fine-tuning-based feature extraction [82].
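
KPCA with a Gaussian RBF kernel can be sketched as follows, assuming NumPy. Here `gamma` is an assumed kernel-width parameter that would need tuning on real MRI features, and the two synthetic feature clusters are illustrative. The kernel matrix is centered in feature space before its leading eigenvectors are taken, and each training sample's projection onto component k equals the square root of the k-th eigenvalue times the corresponding eigenvector entry.

```python
import numpy as np

def rbf_kernel_pca(X, n_components, gamma=1.0):
    """Kernel PCA with K(x, y) = exp(-gamma * ||x - y||^2); returns training projections."""
    X = np.asarray(X, dtype=float)
    sq = np.sum(X ** 2, axis=1)
    d2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X @ X.T, 0.0)  # squared distances
    K = np.exp(-gamma * d2)                        # Gaussian RBF kernel matrix
    n = K.shape[0]
    one = np.full((n, n), 1.0 / n)
    Kc = K - one @ K - K @ one + one @ K @ one     # center the data in feature space
    vals, vecs = np.linalg.eigh(Kc)                # eigenvalues in ascending order
    order = np.argsort(vals)[::-1][:n_components]
    vals, vecs = vals[order], vecs[:, order]
    return vecs * np.sqrt(np.maximum(vals, 0.0))   # projection = sqrt(lambda_k) * v_k

rng = np.random.default_rng(0)
group_a = rng.normal(0.0, 0.1, size=(20, 2))       # e.g., features of one tissue class
group_b = rng.normal(5.0, 0.1, size=(20, 2))       # features of another class
Z = rbf_kernel_pca(np.vstack([group_a, group_b]), n_components=2, gamma=0.5)
print(Z[:3, 0], Z[-3:, 0])                         # opposite signs for the two groups
```

The sign of each component is arbitrary; only the separation between groups is meaningful.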

3.2 Tumor Classification

Tumor classification is the procedure of categorizing a tumor by grade or as benign or malignant. Because tumor cells vary in shape, location, size, and contrast, brain tumor classification is challenging. Good classification depends mainly on extracting an optimal set of features and choosing a suitable classifier; factors such as classification accuracy, computational resources, and algorithm performance should be considered when choosing the classifier.

Input patterns are assigned to classes by two types of classification techniques [83], as illustrated in Fig. 3: (1) unsupervised classification, which includes hierarchical clustering, FCM, K-means clustering, and SOM; and (2) supervised classification, which includes decision trees, SVM, LDA, KNN, and Bayesian classifiers. Unsupervised classification recognizes natural classes or groups in multi-spectral data. It requires no prior knowledge, recognizes classes as distinct units, and has fewer chances of operator error.
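
As a minimal sketch of unsupervised classification, assuming NumPy, Lloyd's k-means algorithm below groups pixel intensities into k clusters without any labels. The synthetic "background" and "tumor" intensity groups and the function name are illustrative only.

```python
import numpy as np

def kmeans(x, k, n_iter=100, seed=0):
    """Lloyd's k-means; x is (n_samples,) or (n_samples, n_features). Returns (labels, centers)."""
    x = np.asarray(x, dtype=float)
    if x.ndim == 1:
        x = x[:, None]                             # scalar intensities as 1-D feature vectors
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)]  # k random samples as initial centers
    for _ in range(n_iter):
        d = np.linalg.norm(x[:, None, :] - centers[None, :, :], axis=2)
        labels = d.argmin(axis=1)                  # hard assignment to the nearest center
        new_centers = np.array([x[labels == j].mean(axis=0) if np.any(labels == j)
                                else centers[j] for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

rng = np.random.default_rng(2)
intensities = np.concatenate([rng.normal(0.2, 0.03, 150),   # "background"
                              rng.normal(0.8, 0.03, 50)])   # "tumor"
labels, centers = kmeans(intensities, k=2)
print(np.sort(centers.ravel()))
```

Unlike FCM, each pixel receives exactly one label, and the cluster indices themselves carry no meaning until they are matched to tissue types.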

In supervised classification, by contrast, samples of known identity are used to classify samples of unknown identity. Supervised classification requires prior knowledge, since labels are provided for the input dataset, and significant errors might be detected. The detailed literature on feature extraction and classification methodologies for MRI images published during 2010–2020 is summarized in Table 4.
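
For contrast, a minimal supervised classifier is sketched below: k-nearest neighbours (KNN), one of the supervised methods listed above, assuming NumPy. The two-dimensional feature vectors and the label coding (0 for benign, 1 for malignant) are hypothetical.

```python
import numpy as np

def knn_predict(X_train, y_train, X_test, k=3):
    """Label each test sample by majority vote among its k nearest training samples."""
    X_train = np.asarray(X_train, dtype=float)
    X_test = np.asarray(X_test, dtype=float)
    y_train = np.asarray(y_train)
    d = np.linalg.norm(X_test[:, None, :] - X_train[None, :, :], axis=2)  # Euclidean distances
    nearest = np.argsort(d, axis=1)[:, :k]         # indices of the k closest training samples
    preds = []
    for votes in y_train[nearest]:
        values, counts = np.unique(votes, return_counts=True)
        preds.append(values[np.argmax(counts)])    # majority vote; ties go to the smaller label
    return np.array(preds)

rng = np.random.default_rng(0)
X_train = np.vstack([rng.normal(0.0, 0.5, (20, 2)),   # labeled benign examples
                     rng.normal(3.0, 0.5, (20, 2))])  # labeled malignant examples
y_train = np.array([0] * 20 + [1] * 20)
X_test = np.array([[0.2, -0.1], [2.8, 3.1]])
print(knn_predict(X_train, y_train, X_test))          # [0 1]
```

The labeled training set is exactly the prior knowledge that distinguishes this from the unsupervised case.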

3.3 ML, DL, and Hybrid-Based Feature Extraction and Classification

Brain tumor classification techniques followed the same paradigm shift as the segmentation techniques described earlier. At the start of the 10s, many researchers focused on conventional ML-based classifiers.

For instance, Alfonse et al. used an SVM for automated tumor classification from MR scans [123]. First, the brain images are segmented using adaptive thresholding. Second, features are extracted using the fast Fourier transform (FFT), and the minimal-redundancy maximal-relevance (mRMR) method is used for feature selection. This technique achieved 98.9% classification accuracy. However, because an SVM separates points by finding a splitting hyperplane through a linear or quadratic optimization problem, it can require considerable execution time.
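
The FFT-plus-mRMR pipeline described above can be sketched as follows, assuming NumPy. Two simplifications are assumptions of this sketch, not of [123]: the FFT features are the magnitudes of the lowest non-negative-frequency coefficients, and relevance and redundancy are measured with absolute Pearson correlation rather than the mutual information used in the original mRMR formulation. All function names are hypothetical.

```python
import numpy as np

def fft_features(image, n=4):
    """Magnitudes of the n x n lowest non-negative-frequency 2-D FFT coefficients."""
    spectrum = np.abs(np.fft.fft2(np.asarray(image, dtype=float)))
    return spectrum[:n, :n].ravel()

def abs_corr(a, b):
    """Absolute Pearson correlation (0 if either vector is constant)."""
    a, b = a - a.mean(), b - b.mean()
    denom = np.sqrt((a @ a) * (b @ b))
    return 0.0 if denom == 0 else float(abs(a @ b) / denom)

def mrmr_select(F, y, n_select):
    """Greedy mRMR (difference form): maximize relevance to y minus mean redundancy."""
    F, y = np.asarray(F, dtype=float), np.asarray(y, dtype=float)
    relevance = np.array([abs_corr(F[:, j], y) for j in range(F.shape[1])])
    selected = [int(np.argmax(relevance))]        # start with the most relevant feature
    while len(selected) < n_select:
        candidates = [j for j in range(F.shape[1]) if j not in selected]
        scores = [relevance[j] - np.mean([abs_corr(F[:, j], F[:, s]) for s in selected])
                  for j in candidates]
        selected.append(candidates[int(np.argmax(scores))])
    return selected

# Synthetic check: feature 1 duplicates feature 0, feature 2 carries independent information.
rng = np.random.default_rng(0)
a, b = rng.normal(size=500), rng.normal(size=500)
y = 2 * a + b
F = np.column_stack([a, a + 0.01 * rng.normal(size=500), b, rng.normal(size=500)])
print(mrmr_select(F, y, 2))
print(fft_features(rng.random((8, 8))).shape)     # (16,)
```

The redundancy penalty is what keeps the near-duplicate feature out of the selected set even though it is almost as relevant as the first pick.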

As the decade progressed, deep learning methods were increasingly employed for classification. In one article, features are extracted using a dense CNN and classified using recurrent neural networks (RNNs) [112]. Convolutional and fully connected networks are the most commonly employed DL-based models for brain tumor classification [124].

Recently (in the 20s), emerging hybrid techniques have become commonly employed classification methods. Hybrid intelligent systems are also used to design classifiers with soft computing approaches; soft computing paradigms such as neural networks and bio-inspired algorithms, e.g., the genetic algorithm (GA), are used to build robust classification systems. Sujan et al. proposed a combined technique using k-means and FCM [125], in which a median filter denoises the MR brain images, a brain surface extractor performs feature extraction, and clustering is done by the integrated hybrid method. Deepak and Ameer developed a CNN-based GoogLeNet transfer learning model to classify brain tumors as glioma, meningioma, or pituitary [126]. The model attained an accuracy of 92.3%, which increased to 97.8% when a multiclass SVM was applied. Mohsen et al. implemented FCM followed by DWT, combined with a DNN, for tumor classification using 66 T2-W MRI scans [108], achieving a 96.97% classification rate. Moreover, Chaplot et al. proposed combining wavelets with SOM and SVM for tumor classification using 52 T2-W MRI scans [127]; the method showed more than 94% accuracy with SOM and 98% with SVM.
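
The median-filter denoising step mentioned above can be sketched as follows, assuming NumPy 1.20 or later (for `sliding_window_view`). Reflective border padding and the 3 x 3 window are assumptions of this sketch, and the isolated impulses stand in for salt-and-pepper noise.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def median_filter(image, size=3):
    """Replace each pixel with the median of its size x size neighbourhood."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    pad = size // 2
    padded = np.pad(np.asarray(image, dtype=float), pad, mode="reflect")  # mirror the borders
    windows = sliding_window_view(padded, (size, size))   # shape (H, W, size, size)
    return np.median(windows, axis=(-2, -1))

img = np.full((16, 16), 0.5)
img[4, 4], img[10, 7] = 1.0, 0.0                  # isolated "salt" and "pepper" impulses
denoised = median_filter(img)
print(np.abs(denoised - 0.5).max())               # 0.0: both impulses removed
```

Unlike a mean filter, the median discards isolated outliers entirely instead of smearing them into neighbouring pixels, which helps preserve tumor boundaries.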

From Table 4 and the literature survey, we observed that:

(1) The discrete wavelet transform, PCA, and texture analysis (TA) are the most commonly employed feature extraction methods.

(2) CNNs attain high classification and prediction performance when a pre-trained network is used as a feature extractor. Deep feature extractors have also played a significant role in predicting overall survival time in brain tumor patients. The CNN + activation feature methodology includes numerous methods that involve feature selection, feature pooling, and data augmentation [124].

(3) Hybrid systems, which combine a pre-feature extractor with different deep learning and machine learning approaches, are commonly employed for efficient tumor classification.

(4) Most CAD systems for brain tumor classification remain imperfect owing to high complexity, high-dimensional feature vectors, and limited generalization capability. Even though significant efforts were made in the last decade, much work is still needed to establish a CAD system with a high success rate.

(5) We believe that hybrid intelligent systems, designed by integrating machine learning approaches with other methodologies, offer highly proficient and accurate classification, with classification accuracies in the range of 95–100%.
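
Data augmentation, mentioned in finding (2), is often implemented with simple geometric transforms of training patches. As a minimal sketch assuming NumPy and 2-D patches, the function below generates the eight rotation/flip variants of a patch; the patch label is unchanged by these transforms. The function name is hypothetical, and real pipelines may use other augmentations, such as arbitrary-angle rotations or intensity perturbations.

```python
import numpy as np

def dihedral_augment(patch):
    """Return the 8 variants of a 2-D patch: 4 rotations by 90 degrees, with and without a flip."""
    patch = np.asarray(patch)
    variants = []
    for base in (patch, np.fliplr(patch)):
        for k in range(4):
            variants.append(np.rot90(base, k))    # rotate counter-clockwise by k * 90 degrees
    return variants

patch = np.arange(16).reshape(4, 4)               # stand-in for an MRI training patch
augmented = dihedral_augment(patch)
print(len(augmented))                             # 8
```

Multiplying the training set eightfold this way is cheap because rotations by multiples of 90 degrees need no interpolation.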

4 Statistical Analysis of Conducted Research

This section aims to answer several questions about the reviewed literature.

4.1 Commonly Employed MRI Modalities

Previous studies have already shown that MRI is the most commonly used modality for brain tumor classification and segmentation [128]. This review additionally identifies which MRI modalities are used most frequently. Among single modalities, T1-W is the most common, used in 18% of the reviewed studies, whereas datasets combining T1, T2, T1-CE, and FLAIR lead overall, appearing in 32% of the publications, as illustrated in Fig. 4.

4.2 Year-wise Increase in Publications on Brain Tumor Analysis

Well-known databases, including IEEE Xplore, ScienceDirect, PubMed, and Scopus, were searched using brain tumor/CNS cancer keywords combined with MRI, covering segmentation, classification, and detection tasks with machine learning, deep learning, and hybrid approaches. The 248 most relevant articles published between 2010 and 2020 were then scrutinized. Despite the benchmark results obtained so far, brain tumor analysis has driven intense research efforts in the last and present decades to develop robust computer vision techniques. The yearly development of soft computing, in particular artificial intelligence, from application-driven and methodological perspectives is reflected in the rapidly increasing number of publications per year, whose distribution is illustrated in Fig. 5. The 20s are likely to be the decade of computer vision for brain tumor segmentation, classification, and detection.

4.3 Commonly Employed Datasets

Acquiring a brain tumor dataset is the primary task. Several datasets are freely accessible for experimentation, such as BrainWeb, BRATS [129], Harvard, The Cancer Imaging Archive (TCIA), and OASIS. The largest share of publications used the BRATS datasets, accounting for 30% of the reviewed articles, while private datasets ranked second with 25%, as shown in Fig. 6. This is because, before the launch of the representative BRATS dataset, the research community used private datasets acquired from local laboratories and hospitals. Nevertheless, researchers still use private datasets to evaluate their proposed models.

4.4 Classifier-Based Publication Statistics

Deep learning has achieved strong practical performance in brain tumor image analysis [70]. The CNN can be regarded as the archetypal classifier owing to its extensive use in diagnosing various diseases, including brain tumor classification, segmentation, and detection [20]. Moreover, the number of CNN-based architectures (CNNs combined with other architectures) grew between 2010 and 2020 [14, 130,131,132], and retrospective analysis reveals that these architectures have improved the accuracy of brain tumor detection. In this review, 64 publications used CNN or CNN-based architectures for brain tumor analysis, forming 26% of the studies considered, while SVM (an ML algorithm) ranked second with 39 publications, 16% of the total. Fig. 7 presents the core statistics of classifier-based publications.

4.5 Most Studied CAD Tasks

The analysis of the two decades shows that brain tumor segmentation is the most studied CAD task so far, with classification and detection ranked second and third, respectively, as shown in Fig. 8. This is because radiologists often find it difficult and time-consuming to segment the tumor from an MR image in order to classify the tumor type. More research has therefore been carried out on brain tumor segmentation to assist radiologists and clinicians in diagnosing brain tumors and their sub-regions. Automated segmentation also distinguishes tumor regions from non-tumor regions quickly.

4.6 Summarization of Previously Reported Works

To the best of our knowledge, this section summarizes the reviews on brain tumor segmentation, classification, and detection published between 2010 and 2020. Most of the existing literature covers conventional ML-based methods for brain tumor analysis, as depicted in Table 5. In contrast, this study covers a few ML-based methods with special emphasis on deep learning and hybrid methods, and thus attempts to address the limitations and gaps of existing surveys.

5 Current Trends on DL-Based Brain Tumor Diagnosis

In contrast to machine learning algorithms, deep learning methods have seen wider use for the segmentation and classification of brain tumor MR images. Herein, we present a comprehensive study showing the significant advances in deep learning approaches over 2010–2020.

A deep learning network has multiple hidden layers that represent the input data at successive levels of abstraction, which mitigates many problems of conventional machine learning methods. Furthermore, deep learning methods are capable of self-learning and generalization, enabling good quantitative analysis of medical imaging features. Owing to these characteristics, deep learning-based techniques achieve accurate detection of neurological disorders and are widely acknowledged in the medical image processing domain [138]. Many CAD systems incorporate deep learning-based segmentation and classification approaches for medical images, including chest, breast, pulmonary nodule, and brain tumor imaging [58, 139,140,141].

Different deep learning-based networks, such as DCNNs [142], CNNs, and auto-encoders [143], have been designed for effective and accurate segmentation, feature detection, and classification of brain tumors in MRI scans. Researchers at many institutes continue to develop new algorithms to improve performance.

A novel brain tumor segmentation technique named WMMFCM was presented to reduce the limitations of FCM by using three stages: wavelet multi-resolution (WM), morphological pyramid (M), and FCM clustering. The BrainWeb (152 MR scans) and BRATS (81 glioma images) datasets were employed to verify the performance of the proposed architecture, which achieved 97.05% and 95.85% accuracy on BrainWeb and BRATS, respectively [144]. Other researchers used semi-automatic software [145] to segment tumors and register 159 multi-modal T1-W and T2-W MR scans of low-grade gliomas [146]. A CNN model was applied for the classification task and obtained a cross-domain accuracy, sensitivity, and specificity of 87.7%, 93.3%, and 97.7%, respectively. In another report, the authors used a CNN with small kernels, which has fewer weights, to overcome overfitting [20]. First, intensity and patch normalization, which are uncommon in CNN-based segmentation, are applied; combined with data augmentation, this proved efficient and effective. Second, the training patches are augmented by artificially rotating the images. Finally, a volumetric threshold is enforced, removing small clusters that may otherwise be predicted as spurious tumors. Experimental results show 84% accuracy for the baseline network and 88% for U-Net.
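The final post-processing step described above, which discards small clusters that fall below a volumetric threshold, can be sketched as follows. This is a minimal illustration and not the implementation of [20]. It assumes a binary 3D NumPy mask and 6-connectivity. The function name `remove_small_clusters` and its `min_voxels` parameter are hypothetical, and the actual threshold value is not given here.

```python
from collections import deque

import numpy as np


def remove_small_clusters(mask: np.ndarray, min_voxels: int) -> np.ndarray:
    """Keep only 6-connected foreground clusters with at least min_voxels voxels."""
    mask = np.asarray(mask, dtype=bool)
    visited = np.zeros(mask.shape, dtype=bool)
    keep = np.zeros(mask.shape, dtype=bool)
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    for start in zip(*np.nonzero(mask)):
        if visited[start]:
            continue
        # Breadth-first search gathers every voxel of one connected cluster.
        cluster = [start]
        visited[start] = True
        queue = deque([start])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in offsets:
                n = (z + dz, y + dy, x + dx)
                inside = all(0 <= n[a] < mask.shape[a] for a in range(3))
                if inside and mask[n] and not visited[n]:
                    visited[n] = True
                    cluster.append(n)
                    queue.append(n)
        # Volumetric constraint: drop clusters that are too small to be tumor.
        if len(cluster) >= min_voxels:
            for voxel in cluster:
                keep[voxel] = True
    return keep


# Hypothetical example: an 8-voxel cluster survives, an isolated voxel is removed.
seg = np.zeros((5, 5, 5), dtype=bool)
seg[0:2, 0:2, 0:2] = True
seg[4, 4, 4] = True
clean = remove_small_clusters(seg, min_voxels=3)
```

In practice a library connected-component routine would replace the hand-written search. It is spelled out here so the volumetric rule is explicit.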

Over the past few years, DCNN models have also advanced significantly. One group designed an automatic DCNN model that combines max-out and drop-out layers to overcome over-fitting [147]. MR scans from the BRATS 2013 dataset were used to evaluate the algorithm, which achieved Dice similarity coefficients of 80%, 67%, and 85% for WT, TC, and ET, respectively. A few studies address segmentation issues using improved versions of DCNN [148, 149]. Another important problem, investigated by a large group of authors, is the presence of multiple tumors [108]. The diversity of tumors in the human brain demands high accuracy, which increases complexity; in this situation, the input MR image and its characteristics play an important role. In another article, the authors proposed a segmentation method based on multi-modal supervoxels with an RF classifier [150]. To evaluate the method, BRATS and a clinical dataset comprising MRI scans and diffusion tensor imaging (DTI) were used to classify each supervoxel as normal or tumorous (core or edema). Sensitivity and Dice score were the performance measures. The clinical dataset yielded 86% and 0.84, while the multi-modal BRATS images yielded better values of 96% and 0.89. Iqbal et al. introduced three approaches for brain tumor segmentation using BRATS 2015 MR scans. The Interpolated Network (IntNet) outperformed Skip-Net and SE-Net, reaching 90%, 88%, and 73% for the Dice coefficient, sensitivity, and specificity, respectively [151].
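Dice, Jaccard, sensitivity, and specificity recur throughout these comparisons, so a short sketch of how they are computed from voxel-wise overlap may help. It assumes binary NumPy masks in which both the tumor and the background are non-empty. `overlap_metrics` is a hypothetical helper, not code from the cited works. Dice and Jaccard are fractions in [0, 1] that are often reported as percentages, and they are related by J = D / (2 - D).

```python
import numpy as np


def overlap_metrics(pred, truth):
    """Dice, Jaccard, sensitivity and specificity for two binary masks.

    Assumes no denominator is zero (some tumor and some background present).
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.sum(pred & truth)    # tumor voxels correctly labeled tumor
    fp = np.sum(pred & ~truth)   # background voxels labeled tumor
    fn = np.sum(~pred & truth)   # tumor voxels missed
    tn = np.sum(~pred & ~truth)  # background correctly labeled background
    return {
        "dice": 2 * tp / (2 * tp + fp + fn),
        "jaccard": tp / (tp + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Toy 1D "masks": one true positive, one false positive, one false negative.
example = overlap_metrics([1, 1, 0, 0], [1, 0, 1, 0])
```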

Moreover, various techniques have been developed to surpass standard CNNs in accuracy, computational time (particularly when handling massive datasets), and hardware requirements [108]. Researchers applied FCM-based segmentation to 66 T2-W MRI scans from four groups: normal, sarcoma, metastatic bronchogenic carcinoma, and glioblastoma. This study combined DWT with a DNN and achieved 96.97% classification accuracy. Improved CNNs are also employed to replace the manual diagnostic process. Another study presented an enhanced CNN (ECNN) integrated with the BAT algorithm to segment brain tumors automatically [152]. This approach also helps control over-fitting through the BAT algorithm's loss function and the small kernels of the ECNN. The accuracy of the ECNN was found to be 3% higher than that of a classical CNN. The authors of [38] proposed an end-to-end incremental EnsembleNet algorithm for glioblastoma segmentation and obtained a Dice score of 0.88 on BRATS 2017.
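The DWT feature-extraction stage that precedes the DNN can be illustrated with a single-level 2D Haar transform applied recursively. This is only a sketch. The cited study's wavelet family, number of decomposition levels, and features are not stated here, so the Haar basis, the subband-energy features, and the helper names `haar_dwt2` and `dwt_energy_features` are all assumptions. The DNN classifier that would consume these feature vectors is omitted.

```python
import numpy as np


def haar_dwt2(image):
    """One level of the orthonormal 2D Haar DWT (image cropped to even size)."""
    img = np.asarray(image, dtype=float)
    h, w = img.shape
    img = img[: h - h % 2, : w - w % 2]
    a, b = img[0::2, 0::2], img[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = img[1::2, 0::2], img[1::2, 1::2]  # bottom-left, bottom-right
    approx = (a + b + c + d) / 2  # low-pass in both directions
    rows = (a + b - c - d) / 2    # top minus bottom: responds to horizontal edges
    cols = (a - b + c - d) / 2    # left minus right: responds to vertical edges
    diag = (a - b - c + d) / 2    # diagonal detail
    return approx, rows, cols, diag


def dwt_energy_features(image, levels=2):
    """Mean energy of each detail subband per level, plus the final approximation."""
    feats = []
    approx = np.asarray(image, dtype=float)
    for _ in range(levels):
        approx, rows, cols, diag = haar_dwt2(approx)
        feats.extend(float(np.mean(band ** 2)) for band in (rows, cols, diag))
    feats.append(float(np.mean(approx ** 2)))
    return np.array(feats)
```

The transform is orthonormal, so the total energy of the four subbands equals that of the even-cropped input. The energy features therefore describe how image detail is distributed across scales and orientations.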

As the 20s decade has progressed, the number of publications combining two or more deep learning or machine learning techniques to overcome the limitations of the individual techniques has increased. In this way, robust hybrid systems with improved performance metrics can be designed.

6 Current Trend on Hybrid-Based Brain Tumor Diagnosis

Hybrid algorithms combine two or more algorithms to achieve better performance than single methods, aiming to overcome the shortcomings of one method with a second, complementary method. The SVM is a widely accepted approach in many applications and has been integrated with both conventional and currently trending approaches.

A novel hybrid system combining the wavelet transform and SOM for the tumor segmentation of 52 axial T2-W MR slices was designed in [127]. An SVM was applied to classify healthy images and unhealthy images affected by Alzheimer's disease. Classification performances of 94% and 98% were reported for SOM and SVM, respectively, demonstrating the effectiveness of the system. In [88], a combination of SVM with GA was presented to segment normal and tumor regions, with the spatial gray level dependence method (SGLDM) applied for texture feature extraction. The Harvard medical dataset of 83 brain images (29 normal, 22 malignant, and 32 benign tumor slices) was used for the experiments. Results show accuracy ranging from 94.44% to 98.14% and sensitivity from 91.9% to 97.3%.
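SGLDM texture features are statistics of a gray-level co-occurrence matrix (GLCM). The GLCM counts how often pairs of quantized gray levels occur at a given pixel offset. The sketch below assumes 8 gray levels, a single horizontal offset, and four common Haralick-style statistics. It does not reproduce the offsets and features actually used in [88], nor that work's GA-based selection and SVM stages. The helper names are hypothetical.

```python
import numpy as np


def glcm(image, levels=8, offset=(0, 1)):
    """Symmetric, normalized gray-level co-occurrence matrix for one offset (dy, dx >= 0)."""
    img = np.asarray(image, dtype=float)
    lo, hi = img.min(), img.max()
    if hi > lo:
        q = np.floor((img - lo) / (hi - lo) * levels).astype(int)
        q = np.clip(q, 0, levels - 1)  # the maximum intensity falls in the top bin
    else:
        q = np.zeros(img.shape, dtype=int)
    dy, dx = offset
    h, w = q.shape
    ref = q[: h - dy, : w - dx]  # reference pixels
    nbr = q[dy:, dx:]            # their neighbors at offset (dy, dx)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1)
    counts = counts + counts.T   # count each pair in both directions
    return counts / counts.sum()


def texture_features(p):
    """A few Haralick-style statistics of a normalized GLCM p."""
    i, j = np.indices(p.shape)
    nz = p[p > 0]
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "energy": float(np.sum(p ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
        "entropy": float(-np.sum(nz * np.log2(nz))),
    }
```

In a full pipeline, several offsets (for example 0, 45, 90, and 135 degrees) would typically be averaged. The resulting feature vectors would then be passed to the selection and classification stages.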

FCM clustering is a well-known technique in the hybrid class. Rajendran and Dhanasekaran proposed an effective region-based fuzzy clustering segmentation approach termed enhanced possibilistic FCM [153], designed to handle the initialization and weak-boundary challenges of region-based methods. It was applied to 15 CE-T1W and FLAIR scans to classify tumors and achieved average similarity and Jaccard indices of 95.3% and 82.1%, respectively. Another study detects and classifies brain tumors by merging FCM clustering with an SVM classifier [28]. Its performance was compared on different sets of images from 120 patients using ANN and SVM. The SVM classifier performs well on small datasets, while ANN performs better on larger datasets. Abdel-Maksoud et al. explored a novel combination called K-means Integrated with Fuzzy C-means (KIFCM) clustering for brain tumor segmentation [154]. They used three datasets: 81 BRATS images, 152 BrainWeb images, and 22 digital imaging and communications in medicine (DICOM) images. K-means reduces computational time, whereas FCM increases the accuracy of brain tumor recognition. KIFCM and FCM reported the same accuracy, but KIFCM requires less execution time. It achieved 90.5%, 100%, and 100% accuracy on the three datasets, respectively. An effective and novel detection model based on SOM combined with LVQ is presented in [155]. Its experiments used MRI scans (T1-W, T2-W, and FLAIR) of 20 patients with glial tumors. This research also applied a skull-stripping algorithm on the IBSR database, which outperforms other algorithms. Experimental results on BRATS 2012 obtained Dice similarities of 91%, 87%, 96%, 61%, and 77% for WM, GM, CSF, tumor, and edema, respectively.
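The KIFCM idea can be sketched on 1D voxel intensities: k-means supplies initial centers, which FCM then refines. This is not the implementation of [154]. The fuzzifier m = 2, the iteration counts, the quantile initialization of k-means, and the names `kmeans_1d`, `fcm_1d`, and `kifcm_1d` are assumptions made for illustration. The membership and center updates follow the standard FCM equations.

```python
import numpy as np


def kmeans_1d(x, k, iters=20):
    """Plain k-means on 1D intensities, initialized at evenly spaced quantiles."""
    centers = np.quantile(x, np.linspace(0, 1, k + 2)[1:-1])
    for _ in range(iters):
        labels = np.argmin(np.abs(x[:, None] - centers[None, :]), axis=1)
        for c in range(k):
            if np.any(labels == c):
                centers[c] = x[labels == c].mean()
    return centers


def fcm_1d(x, centers, m=2.0, iters=50, eps=1e-9):
    """Fuzzy c-means updates starting from the given centers (fuzzifier m > 1)."""
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        dist = np.abs(x[:, None] - centers[None, :]) + eps  # (n, k); eps avoids 0/0
        # Membership u_ij = 1 / sum_l (d_ij / d_il)^(2 / (m - 1)).
        ratio = (dist[:, :, None] / dist[:, None, :]) ** (2.0 / (m - 1.0))
        u = 1.0 / ratio.sum(axis=2)
        # Center update: mean of the intensities weighted by u^m.
        w = u ** m
        centers = (w * x[:, None]).sum(axis=0) / w.sum(axis=0)
    return centers, u


def kifcm_1d(intensities, k=3):
    """K-means provides the starting centers; FCM refines them (KIFCM-style)."""
    x = np.asarray(intensities, dtype=float).ravel()
    centers, u = fcm_1d(x, kmeans_1d(x, k))
    return centers, np.argmax(u, axis=1)
```

Starting FCM from k-means centers means the fuzzy iterations begin near a reasonable solution. This is one plausible reason for the shorter execution time reported for KIFCM.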

Hybrid systems have developed further through the amalgamation of more than two approaches. Fuzzy k-means (FKM) handles large amounts of data poorly. To improve its data handling, FKM was combined with SOM to develop a tumor detection method [156], in which SOM supports early clustering and reduces the dimensionality of the input images. The Harvard brain repository was used to evaluate the model on MRI scans, and the integration achieved 96.18% accuracy and 87.18% sensitivity. Vishnuvarthanan et al. designed a combined optimization technique that integrates bacteria foraging optimization (BFO) with a modified FKM approach to process MR image sequences efficiently [157]. The model produced promising results, with 97.14% sensitivity and 93.94% specificity. Another group designed a hybrid clustering system that merges three algorithms (k-means, FCM, and SOM) to segment brain tissue automatically [158]. First, pixel intensity values are adjusted to enhance the images. Second, a super-pixel algorithm links pixels with similar intensities into objects. Finally, the extracted features and the labels produced by the clustering methods are used to train a neural network (NN) for classification. The reported results were 98.10%, 98.97%, and 79.66% for accuracy and specificity, outperforming other clustering methodologies. Namburu et al. proposed a novel soft fuzzy rough c-means (SFRCM) technique to extract soft tissues such as WM, GM, and CSF [159]. To evaluate SFRCM, 20, 10, and 20 images from the BrainWeb, BRATS, and IBSR databases, respectively, were used. MR scans were also employed to categorize high-grade glioma, achieving 94.04% accuracy.
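Several of the hybrids above ([127], [155], [156], [158]) rely on a self-organizing map (SOM) to cluster inputs and reduce their dimensionality. A minimal SOM with nodes on a 1D lattice is sketched below. The lattice size, the linearly decaying learning rate, the Gaussian neighborhood schedule, the linear weight initialization, and the helper names are assumptions rather than settings from the cited works.

```python
import numpy as np


def train_som_1d(data, n_nodes=4, epochs=20, lr0=0.5, sigma0=None, seed=0):
    """Train a SOM whose nodes lie on a 1D lattice; returns node weight vectors."""
    rng = np.random.default_rng(seed)
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]  # treat scalar intensities as 1-feature vectors
    sigma0 = sigma0 if sigma0 is not None else n_nodes / 2
    # Spread the initial weights evenly between the per-feature min and max.
    weights = np.linspace(data.min(axis=0), data.max(axis=0), n_nodes)
    lattice = np.arange(n_nodes)
    total, step = epochs * len(data), 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / total
            lr = lr0 * (1.0 - frac)                  # linearly decaying learning rate
            sigma = max(sigma0 * (1.0 - frac), 0.5)  # shrinking neighborhood radius
            bmu = np.argmin(np.sum((weights - x) ** 2, axis=1))  # best-matching unit
            h = np.exp(-((lattice - bmu) ** 2) / (2.0 * sigma ** 2))
            weights += lr * h[:, None] * (x - weights)
            step += 1
    return weights


def som_assign(data, weights):
    """Map each sample to the index of its nearest SOM node."""
    data = np.asarray(data, dtype=float)
    if data.ndim == 1:
        data = data[:, None]
    d = ((data[:, None, :] - weights[None, :, :]) ** 2).sum(axis=2)
    return np.argmin(d, axis=1)
```

The node indices returned by `som_assign` act as a coarse clustering. In a hybrid pipeline, a second method such as FKM would refine them further.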

This comprehensive study reveals that, in the 20s decade, hybrid techniques outperform deep learning approaches for brain tumor segmentation and classification. Table 6 summarizes selected hybrid-based approaches together with their MR modalities, tasks, datasets, and dataset sizes, all of which influence performance evaluation. The abbreviations used in this paper are listed in Table 7.

7 Discussion

In retrospect, this review reveals that brain tumor analysis attains state-of-the-art results in the domain of neuroimaging analysis. It reports the substantial diversity of algorithms for brain tumor segmentation, feature extraction, and classification over the last decade and the past year. This scrutiny yields the following observations:

- From 2010 to 2015, conventional machine learning algorithms were the trend for brain tumor diagnosis in all its aspects, viz. image segmentation, feature extraction, and classification. From 2015 to 2019, traditional ML techniques were replaced by deep learning-based algorithms for medical image analysis, especially brain tumor investigation. A critical analysis of Tables 1 and 2 shows that the ML- and DL-based systems have accuracies in the range of 75–95%. For example, Macyszyn et al. classified 105 high-grade glioma (HGG) patients into long- and short-term survival categories using an SVM model [168], with accuracy in the range of 82–85%. In another report, Emblem et al. used an SVM classifier on histogram data from 235 patients to predict the overall survival of glioma patients [169]. The accuracy, sensitivity, and specificity were 79%, 78%, and 81% at six months and 85%, 85%, and 86% at three years, respectively. Sarkiss et al. conducted a systematic literature analysis (2000–2018) of the use of machine learning techniques for glioma detection [170]. They reported sensitivities between 78% and 93% and specificities between 76% and 95%. The 20s, however, are the era of emerging hybrid techniques. Hybrid techniques combine two or more machine learning or deep learning techniques and have been integrated into current neuroimaging analysis pipelines. These techniques have produced impactful results in terms of efficiency and classification accuracy (in the range of 92–100%). For instance, Nie et al.'s findings suggest that hybridizing a traditional ML approach (SVM) with a deep learning framework predicts overall survival (OS) more accurately than either model alone [171]. Specifically, a 3D CNN (deep learning architecture) combined with an SVM (machine learning architecture) attains an accuracy of 96% for OS prediction in 69 HGG patients. The comprehensive analysis investigated the paradigm shift from conventional/machine learning to deep learning to hybrid approaches in brain tumor analysis, as shown in the graph in Fig. 9.

- A well-designed ML, DL, or hybrid architecture is not the sole determinant of significant results. Nevertheless, the literature surveyed in this review makes it possible to identify the highest-accuracy method for each task and its application to specific brain tumor types. The progress and development of high-performance brain tumor CAD systems are presented in Fig. 10. This analysis shows that hybrid-based architectures and deep learning approaches compete in brain tumor segmentation and classification. Where ML- or DL-based systems outperform hybrid systems, the gain may stem from factors outside the network, such as normalization during pre-processing or data augmentation. For instance, Zhang et al. investigated glioma grading in 120 patients [172]. They classified LGG and HGG with 94–96% accuracy by combining an SVM with SMOTE (synthetic minority over-sampling technique). Moreover, across different BRATS challenges, widely varying results were obtained even with similar architectures of the same network type. Accuracy can also be increased by adding more layers to the framework [173, 174].

- Designing a hybrid architecture tailored to the properties of a specific task achieves better results than using a plain machine learning or deep learning architecture, because a hybrid system allows one to select and integrate several methods to attain the desired results. Researchers who obtain robust performance with hybrid approaches may also owe it to well-chosen augmentation and pre-processing techniques. These are an easy way to boost the generalizability of a network without altering its architecture. The key contributors to the performance of any network are data augmentation, pre-processing, and hyperparameter optimization (e.g., learning rate and dropout). Changes to the network's input size and receptive field can also help domain experts achieve good performance. Unfortunately, through the 10s there were no established techniques for choosing the best-suited hyperparameters in practical implementations. In brain image analysis, Bayesian methods for hyperparameter optimization had not been implemented until the 20s.

- In radiotherapy treatment planning, brain tumor segmentation depends on manual delineation by experts, which makes the process slow, arduous, and sensitive to differences of opinion among physicians. Numerous tools and algorithms were proposed in the 10s for the automatic and accurate segmentation of gliomas [175, 176], and the effort continues in the 20s. BRATS (the multi-modal brain tumor segmentation challenge) is organized annually to encourage efficient approaches to this challenging problem [177, 178]. During the second half of the last decade (2015–2019), most approaches submitted to BRATS relied on deep learning architectures, e.g., 3D CNNs [179]. However, the top-performing approaches used ensembles of deep learning architectures [180, 181], or they hybridized deep learning architectures with algorithms such as CRFs (conditional random fields) [182]; the latter are known as emerging hybrid techniques. Moreover, in the BRATS 2017 and 2018 challenges, the top-performing methods included cascaded networks, multi-view and multiscale approaches [183], and a generic U-Net architecture with data augmentation and post-processing [184]. Thus, emerging hybrid techniques are considered a robust and practical way to improve segmentation results.
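Zhang et al. [172] combined SMOTE with an SVM for glioma grading. SMOTE balances classes by creating synthetic minority samples on line segments between a minority sample and one of its nearest minority neighbors. The sketch below shows only that interpolation step. The feature vectors, the default k = 5, and the function name `smote` are assumptions, and the SVM stage is omitted.

```python
import numpy as np


def smote(minority, n_new, k=5, seed=0):
    """Generate n_new synthetic minority samples by SMOTE-style interpolation."""
    rng = np.random.default_rng(seed)
    X = np.asarray(minority, dtype=float)
    k = min(k, len(X) - 1)  # needs at least two minority samples
    dist = np.linalg.norm(X[:, None, :] - X[None, :, :], axis=2)
    np.fill_diagonal(dist, np.inf)  # a sample is not its own neighbor
    neighbors = np.argsort(dist, axis=1)[:, :k]
    synthetic = np.empty((n_new, X.shape[1]))
    for t in range(n_new):
        i = rng.integers(len(X))            # random minority sample
        j = neighbors[i, rng.integers(k)]   # one of its k nearest minority neighbors
        gap = rng.random()                  # position along the segment, in [0, 1)
        synthetic[t] = X[i] + gap * (X[j] - X[i])
    return synthetic
```

The synthetic rows would be appended to the minority class (for example, LGG feature vectors) before the classifier is trained. They should not be added to any held-out evaluation set.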

8 Future Research Directions

Over the last decade, research on brain tumor diagnosis from MRI has turned toward hybrid intelligent systems built by combining different algorithms and networks, as shown in Fig. 10 [19, 20, 38, 41, 45, 49, 50, 52, 57, 70, 160]. Combining methods lets the strengths of one offset the weaknesses of another, leading to more robust CAD system performance. Conventional machine learning and deep learning methods, especially CNNs, have been extensively researched and are popular owing to their high accuracy. Nevertheless, they face various challenges. For example, they need a large amount of training data, which can be difficult to acquire in each domain. Moreover, it can be hard to reach the desired accuracy for a target problem [185]. Furthermore, increasing the number of layers in a deep learning model does not guarantee higher classification accuracy. DL models are also computationally expensive, requiring GPUs and large amounts of RAM. Without sufficient computational power, training takes longer, depending on the size of the training dataset. Thus, employing DL models in real-time scenarios such as clinical practice remains an open challenge [186].

The use of deep learning in neuroimaging analysis suffers from the black-box problem of artificial intelligence (AI): researchers know the inputs and outputs but not the internal representations. In addition, DL methods are strongly affected by problems inherent to medical images, such as noise and illumination variations. One remedy is to introduce pre-processing steps before feeding the input to the model. Moreover, acquiring a massive amount of expert-annotated data from multiple institutions is difficult. The BRATS challenge was organized using pre-operative, multi-institutional MRI scans for detecting brain tumor sub-regions. It provides the research community with a rich set of images and a platform for comparing and evaluating brain tumor algorithms, and the dataset grows every year. ML and DL methods have gained much momentum in terms of accuracy. Even so, emerging hybrid techniques are replacing them and have successfully integrated them into neuroimaging pipelines.

A significant factor hampering the effectiveness of deep learning techniques is the need for a large training dataset, which also demands more computational resources. Acquiring such a large amount of data in the medical domain is challenging. Various architectures have therefore been designed to overcome this problem, for instance, the generative adversarial network (GAN) [187], which can be trained with scarce data. Still, more data generally yields better performance. More publicly available databases with expert labels, like BRATS, could be created to bridge this gap, and data augmentation can be used to enlarge the training dataset. Second, most DL-based frameworks are limited by their restricted capacity to delineate visual objects while accounting for the spatial consistency and appearance of segmentation results [26, 27]. A robust hybrid/fusion (new learning-based) segmentation method is therefore suggested. A hybrid methodology that integrates machine-learned and hand-crafted features can resolve this issue and enable efficient automated segmentation of brain tumors. Some research has already addressed the issue [188, 189], but work in this area continues.
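Data augmentation for segmentation must apply the same geometric transform to an MR slice and to its mask. The sketch assumes 2D NumPy arrays. It restricts itself to 90-degree rotations and horizontal flips, which need no interpolation. The function names and the number of copies are hypothetical choices.

```python
import numpy as np


def augment_pair(image, mask, rng):
    """Apply one random 90-degree rotation and optional flip to an image and its mask."""
    k = int(rng.integers(4))  # number of quarter turns: 0, 1, 2 or 3
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    if rng.random() < 0.5:
        image, mask = np.fliplr(image), np.fliplr(mask)
    # The same transform is applied to both, so labels stay aligned with pixels.
    return image.copy(), mask.copy()


def augment_dataset(images, masks, copies=4, seed=0):
    """Return the originals plus `copies` randomly transformed versions of each pair."""
    rng = np.random.default_rng(seed)
    out_imgs, out_masks = list(images), list(masks)
    for img, msk in zip(images, masks):
        for _ in range(copies):
            a, b = augment_pair(img, msk, rng)
            out_imgs.append(a)
            out_masks.append(b)
    return out_imgs, out_masks
```

Arbitrary-angle rotations, elastic deformations, or intensity perturbations are common extensions. Geometric transforms of this kind would need interpolation, using nearest-neighbor interpolation for the mask.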

The research community still lacks an ideal design for brain tumor MRI segmentation and classification: one that offers superior accuracy, low computational time, and minimal cost without requiring massive training datasets or a group of experts for evaluation. Areas such as accuracy still need extensive research. One report compared the accuracies of different ML and DL methods, including SVM, KNN, LDA, and LR, tested on 163 samples from BRATS 2017. The best recognition performance was attained by a hybrid system fusing LDA with a CNN classifier [190]. The remaining shortcomings of ML and DL methods can be overcome by fusing two or more techniques, which effectively reduces the ambiguity in brain tumor segmentation and classification from brain MRI scans.

9 Conclusion

This study systematically covered brain tumor segmentation, feature extraction, and classification techniques over 2010–2020. The two-decade analysis reveals how the use of artificial intelligence-based approaches for tumor segmentation and classification has developed. These findings can assist radiologists and clinicians in the early diagnosis and treatment planning of brain tumors. Finally, the statistical analysis of the two decades suggests that researchers should make greater use of deep learning, ensemble, or hybrid techniques to design robust CAD systems.

In the future, combining few-shot learning techniques with CNNs could be explored as a more effective way to meet the requirements of brain tumor segmentation and classification. Few-shot learning requires fewer images to train the network. This matters because acquiring a large amount of expert-annotated data from multiple institutions is difficult in every domain. Research could also use other medical modalities, such as computed tomography (CT), for brain tumor detection and classification.
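One possible starting point for the few-shot direction is prototype-based classification, the idea behind prototypical networks. It labels a query by the nearest class-mean embedding computed from a handful of labeled examples. The sketch assumes the embeddings have already been produced by a CNN, which is not shown. The class names and toy vectors are hypothetical.

```python
import numpy as np


def prototype_classify(support_x, support_y, query_x):
    """Nearest-prototype few-shot classification on precomputed embeddings."""
    support_x = np.asarray(support_x, dtype=float)
    support_y = np.asarray(support_y)
    classes = np.unique(support_y)
    # Each class prototype is the mean embedding of its few labeled examples.
    prototypes = np.stack([support_x[support_y == c].mean(axis=0) for c in classes])
    query_x = np.asarray(query_x, dtype=float)
    d = ((query_x[:, None, :] - prototypes[None, :, :]) ** 2).sum(axis=2)
    return classes[np.argmin(d, axis=1)]


# Hypothetical 2-way, 2-shot episode with 2D embeddings.
support = [[0.0, 0.0], [0.0, 1.0], [5.0, 5.0], [5.0, 6.0]]
labels = ["glioma", "glioma", "meningioma", "meningioma"]
predicted = prototype_classify(support, labels, [[0.2, 0.4], [5.1, 5.3]])
```

In a prototypical network, the CNN producing the embeddings is trained over many such episodes, so that class means computed from very few examples become discriminative.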

References

Bray F, Ferlay J, Soerjomataram I, Siegel RL, Torre LA, Jemal A (2020) Erratum: Global cancer statistics 2018: Globocan estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J Clin 70(4):313

Louis DN, Perry A, Reifenberger G, Von Deimling A, Figarella-Branger D, Cavenee WK, Ohgaki H, Wiestler OD, Kleihues P, Ellison DW (2016) The 2016 world health organization classification of tumors of the central nervous system: a summary. Acta Neuropathol 131(6):803–820

Gordillo N, Montseny E, Sobrevilla P (2013) State of the art survey on mri brain tumor segmentation. Magn Reson Imaging 31(8):1426–1438

Ganau L, Paris M, Ligarotti GK, Ganau M (2015) Management of gliomas: overview of the latest technological advancements and related behavioral drawbacks. Behav Neurol 2015:1–8

Jayadevappa D, SrinivasKumar S, Murty DS (2011) Medical image segmentation algorithms using deformable models: a review. IETE Tech Rev 28(3):248–255

Yazdani S, Yusof R, Karimian A, Pashna M, Hematian A (2015) Image segmentation methods and applications in mri brain images. IETE Tech Rev 32(6):413–427

Shijin Kumar PS, Dharun VS (2016) A study of mri segmentation methods in automatic brain tumor detection. Int J Eng Technol 8(2):609–614

Preston DC (2006) Magnetic resonance imaging (mri) of the brain and spine: Basics. https://case.edu/med/neurology/NR/MRI%20Basics.htm

Goodfellow I, Bengio Y, Courville A (2016) Deep learning, vol 1. MIT Press, Cambridge

Zacharaki EI, Wang S, Chawla S, Yoo DS, Wolf R, Melhem ER, Davatzikos C (2009) Classification of brain tumor type and grade using mri texture and shape in a machine learning scheme. Magn Reson Med 62(6):1609–1618

Hu LS, Ning S, Eschbacher JM, Baxter LC, Gaw N, Ranjbar S, Plasencia J, Dueck AC, Peng S, Smith KA et al (2017) Radiogenomics to characterize regional genetic heterogeneity in glioblastoma. Neuro Oncol 19(1):128–137

Zeiler MD, Fergus R (2014) Visualizing and understanding convolutional networks. In: Proceedings of the European conference on computer vision, Zürich, Switzerland, pp 818–833. Springer

Yin C, Shi L, Sun R, Wang J (2020) Improved collaborative filtering recommendation algorithm based on differential privacy protection. J Supercomput 76(7):5161–5174

Amin J, Sharif M, Yasmin M, Fernandes SL (2020) A distinctive approach in brain tumor detection and classification using mri. Pattern Recogn Lett 139:118–127

Mehmood I, Ejaz N, Sajjad M, Baik SW (2013) Prioritization of brain mri volumes using medical image perception model and tumor region segmentation. Comput Biol Med 43(10):1471–1483

Demirhan A, Güler I (2011) Combining stationary wavelet transform and self-organizing maps for brain mr image segmentation. Eng Appl Artif Intell 24(2):358–367

Ji Z, Sun Q, Xia Y, Chen Q, Xia D, Feng D (2012) Generalized rough fuzzy c-means algorithm for brain mr image segmentation. Comput Methods Programs Biomed 108(2):644–655

Sulaiman SN, Non NA, Isa IS, Hamzah N (2014) Segmentation of brain mri image based on clustering algorithm. In: Proceedings of the 2014 IEEE Symposium on Industrial Electronics & Applications (ISIEA), Kota Kinabalu, Malaysia, pp 60–65. IEEE

Havaei M, Davy A, Warde-Farley D, Biard A, Courville A, Bengio Y, Pal C, Jodoin P-M, Larochelle H (2017) Brain tumor segmentation with deep neural networks. Med Image Anal 35:18–31

Pereira S, Pinto A, Alves V, Silva CA (2016) Brain tumor segmentation using convolutional neural networks in mri images. IEEE Trans Med Imaging 35(5):1240–1251

Kamnitsas K, Ledig C, Newcombe VFJ, Simpson JP, Kane AD, Menon DK, Rueckert D, Glocker B (2017) Efficient multi-scale 3d cnn with fully connected crf for accurate brain lesion segmentation. Med Image Anal 36:61–78

De Smedt F, Hulens D, Goedemé T (2015) On-board real-time tracking of pedestrians on a uav. In: Proceedings of the IEEE conference on computer vision and pattern recognition workshops, Boston, pp 1–8. IEEE

Chang PD (2016) Fully convolutional deep residual neural networks for brain tumor segmentation. In: Proceedings of the International workshop on Brainlesion: Glioma, multiple sclerosis, stroke and traumatic brain injuries, Athens, Greece, pp 108–118. Springer

Randhawa RS, Modi A, Jain P, Warier P (2016) Improving boundary classification for brain tumor segmentation and longitudinal disease progression. In: Proceedings of the International Workshop on Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries, Athens, Greece, pp 65–74. Springer

Rao V, Sarabi MS, Jaiswal A (2015) Brain tumor segmentation with deep learning. MICCAI Multimodal Brain Tumor Segmentation Challenge (BraTS) 59:1–4

Zheng S, Jayasumana S, Romera-Paredes B, Vineet V, Su Z, Du D, Huang C, Torr PHS (2015) Conditional random fields as recurrent neural networks. In: Proceedings of the IEEE international conference on computer vision, Santiago, Chile, pp 1529–1537. IEEE

Zhao F, Xie X (2013) An overview of interactive medical image segmentation. Ann BMVA 2013(7):1–22

Singh A et al (2015) Detection of brain tumor in mri images, using combination of fuzzy c-means and svm. In: Proceedings of the 2015 2nd International Conference on Signal Processing and Integrated Networks (SPIN), pp 98–102. IEEE

Bahadure NB, Ray AK, Thethi HP (2017) Image analysis for mri based brain tumor detection and feature extraction using biologically inspired bwt and svm. Int J Biomed Imaging 2017:1–13

Amiri S, Rekik I, Mahjoub MA (2016) Deep random forest-based learning transfer to svm for brain tumor segmentation. In: Proceedings of the 2016 2nd International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Monastir, Tunisia, pp 297–302. IEEE

Zhao X, Wu Y, Song G, Li Z, Fan Y, Zhang Y (2016) Brain tumor segmentation using a fully convolutional neural network with conditional random fields. In: Proceedings of the International Workshop on Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries, Athens, Greece, pp 75–87. Springer

Ito R, Nakae K, Hata J, Okano H, Ishii S (2019) Semi-supervised deep learning of brain tissue segmentation. Neural Netw 116:25–34

Ren T, Wang H, Feng H, Chensheng X, Liu G, Ding P (2019) Study on the improved fuzzy clustering algorithm and its application in brain image segmentation. Appl Soft Comput 81:1–9

Sundararajan RSS, Venkatesh S, Jeya Pandian M (2019) Convolutional neural network based medical image classifier. Int J Recent Technol Eng 8(3):4494–4499

Deng W, Shi Q, Luo K, Yang Y, Ning N (2019) Brain tumor segmentation based on improved convolutional neural network in combination with non-quantifiable local texture feature. J Med Syst 43(6):1–9

Hu Y, Xia Y (2017) 3d deep neural network-based brain tumor segmentation using multimodality magnetic resonance sequences. In: Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, pp 423–434. Springer

AlBadawy EA, Saha A, Mazurowski MA (2018) Deep learning for segmentation of brain tumors: impact of cross-institutional training and testing. Med Phys 45(3):1150–1158

Saouli R, Akil M, Kachouri R et al (2018) Fully automatic brain tumor segmentation using end-to-end incremental deep neural networks in mri images. Comput Methods Programs Biomed 166:39–49

Thillaikkarasi R, Saravanan S (2019) An enhancement of deep learning algorithm for brain tumor segmentation using kernel based cnn with m-svm. J Med Syst 43(4):1–7

Shanthi KJ, Sasikumar MN, Kesavadas C (2010) Neuro-fuzzy approach toward segmentation of brain mri based on intensity and spatial distribution. J Med Imaging Radiat Sci 41(2):66–71

Somasundaram K, Kalaiselvi T (2010) Fully automatic brain extraction algorithm for axial t2-weighted magnetic resonance images. Comput Biol Med 40(10):811–822

Donoso R, Veloz A, Allende H (2010) Modified expectation maximization algorithm for mri segmentation. In: Proceedings of the Iberoamerican Congress on Pattern Recognition, Sao Paulo, Brazil, pp 63–70. Springer

Fazel Zarandi MH, Zarinbal M, Izadi M (2011) Systematic image processing for diagnosing brain tumors: a type-ii fuzzy expert system approach. Appl Soft Comput 11(1):285–294

Zhang N, Ruan S, Lebonvallet S, Liao Q, Zhu Y (2011) Kernel feature selection to fuse multi-spectral MRI images for brain tumor segmentation. Comput Vis Image Underst 115(2):256–269

Ramasamy R, Anandhakumar P (2011) Brain tissue classification of MR images using fast Fourier transform based expectation-maximization Gaussian mixture model. In: Proceedings of the International Conference on Advances in Computing and Information Technology, Berlin, Heidelberg, pp 387–398. Springer

Tanoori B, Azimifar Z, Shakibafar A, Katebi S (2011) Brain volumetry: an active contour model-based segmentation followed by SVM-based classification. Comput Biol Med 41(8):619–632

Noreen N, Hayat K, Madani SA (2011) MRI segmentation through wavelets and fuzzy c-means. World Appl Sci J 13:34–39

Mohsen H, El-Dahshan E-SA, Salem A-BM (2012) A machine learning technique for MRI brain images. In: Proceedings of the 2012 8th International Conference on Informatics and Systems (INFOS), Cairo University, pp 1–161. IEEE

Gasmi K, Kharrat A, Messaoud MB, Abid M (2012) Automated segmentation of brain tumor using optimal texture features and support vector machine classifier. In: Proceedings of the International Conference Image Analysis and Recognition, Aveiro, Portugal, pp 230–239. Springer

Ortiz A, Górriz JM, Ramírez J, Salas-Gonzalez D, Llamas-Elvira JM (2013) Two fully-unsupervised methods for MR brain image segmentation using SOM-based strategies. Appl Soft Comput 13(5):2668–2682

Havaei M, Jodoin P-M, Larochelle H (2014) Efficient interactive brain tumor segmentation as within-brain kNN classification. In: Proceedings of the 2014 22nd International Conference on Pattern Recognition, pp 556–561. IEEE

Charutha S, Jayashree MJ (2014) An efficient brain tumor detection by integrating modified texture based region growing and cellular automata edge detection. In: Proceedings of the 2014 International Conference on Control, Instrumentation, Communication and Computational Technologies (ICCICCT), pp 1193–1199. IEEE

Duraisamy M, Mary F, Jane M (2014) Cellular neural network based medical image segmentation using artificial bee colony algorithm. In: Proceedings of the 2014 International Conference on Green Computing Communication and Electrical Engineering (ICGCCEE), Coimbatore, India, pp 1–6. IEEE

Huang M, Yang W, Yao W, Jiang J, Chen W, Feng Q (2014) Brain tumor segmentation based on local independent projection-based classification. IEEE Trans Biomed Eng 61(10):2633–2645

Agn M, Puonti O, Munck af Rosenschöld P, Law I, Van Leemput K (2015) Brain tumor segmentation using a generative model with an RBM prior on tumor shape. In: Proceedings of the BrainLes 2015, Munich, Germany, pp 168–180. Springer

Zhao L, Jia K (2015) Deep feature learning with discrimination mechanism for brain tumor segmentation and diagnosis. In: Proceedings of the 2015 international conference on intelligent information hiding and multimedia signal processing (IIH-MSP), Adelaide, SA, Australia, pp 306–309. IEEE

Tustison NJ, Shrinidhi KL, Wintermark M, Durst CR, Kandel BM, Gee JC, Grossman MC, Avants BB (2015) Optimal symmetric multimodal templates and concatenated random forests for supervised brain tumor segmentation (simplified) with ANTsR. Neuroinformatics 13(2):209–225

Xiao Z, Huang R, Ding Y, Lan T, Dong RF, Qin Z, Zhang X, Wang W (2016) A deep learning-based segmentation method for brain tumor in MR images. In: Proceedings of the 2016 IEEE 6th International Conference on Computational Advances in Bio and Medical Sciences (ICCABS), Atlanta, GA, USA, pp 1–6. IEEE

Kim G (2017) Brain tumor segmentation using deep fully convolutional neural networks. In: Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, pp 344–357. Springer

Pourreza R, Zhuge Y, Ning H, Miller R (2017) Brain tumor segmentation in MRI scans using deeply-supervised neural networks. In: Proceedings of the International MICCAI Brainlesion Workshop, Granada, Spain, pp 320–331. Springer

Dong H, Yang G, Liu F, Mo Y, Guo Y (2017) Automatic brain tumor detection and segmentation using U-Net based fully convolutional networks. In: Proceedings of the Annual Conference on Medical Image Understanding and Analysis, Edinburgh, United Kingdom, pp 506–517. Springer

Kamrul Hasan SM, Linte CA (2018) A modified U-Net convolutional network featuring a nearest-neighbor re-sampling-based elastic-transformation for brain tissue characterization and segmentation. In: Proceedings of the 2018 IEEE Western New York Image and Signal Processing Workshop (WNYISPW), Rochester, NY, USA, pp 1–5. IEEE

Perkuhn M, Stavrinou P, Thiele F, Shakirin G, Mohan M, Garmpis D, Kabbasch C, Borggrefe J (2018) Clinical evaluation of a multiparametric deep learning model for glioblastoma segmentation using heterogeneous magnetic resonance imaging data from clinical routine. Invest Radiol 53(11):1–8

Zhao X, Wu Y, Song G, Li Z, Zhang Y, Fan Y (2018) A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med Image Anal 43:98–111

Chang J, Zhang L, Gu N, Zhang X, Ye M, Yin R, Meng Q (2019) A mix-pooling CNN architecture with FCRF for brain tumor segmentation. J Vis Commun Image Represent 58:316–322

Chang K, Beers AL, Bai HX, Brown JM, Ly KI, Li X, Senders JT, Kavouridis VK, Boaro A, Su C et al (2019) Automatic assessment of glioma burden: a deep learning algorithm for fully automated volumetric and bidimensional measurement. Neuro Oncol 21(11):1412–1422

Kumar S, Negi A, Singh JN (2019) Semantic segmentation using deep learning for brain tumor MRI via fully convolution neural networks. In: Proceedings of the Information and Communication Technology for Intelligent Systems, pp 11–19. Springer

Sharma A, Kumar S, Singh SN (2019) Brain tumor segmentation using DE embedded Otsu method and neural network. Multidimens Syst Signal Process 30(3):1263–1291

Feng X, Tustison NJ, Patel SH, Meyer CH (2020) Brain tumor segmentation using an ensemble of 3D U-Nets and overall survival prediction using radiomic features. Front Comput Neurosci 14:1–12

Nema S, Dudhane A, Murala S, Naidu S (2020) RescueNet: an unpaired GAN for brain tumor segmentation. Biomed Signal Process Control 55:1–9

Li Q, Yu Z, Wang Y, Zheng H (2020) TumorGAN: a multi-modal data augmentation framework for brain tumor segmentation. Sensors 20(15):1–16

Lin F, Wu Q, Liu J, Wang D, Kong X (2020) Path aggregation U-Net model for brain tumor segmentation. Multimedia Tools Appl 80(15):1–14

Kao P-Y, Shailja F, Jiang J, Zhang A, Khan A, Chen JW, Manjunath BS (2020) Improving patch-based convolutional neural networks for MRI brain tumor segmentation by leveraging location information. Front Neurosci 13:1–14

Zhou C, Ding C, Wang X, Lu Z, Tao D (2020) One-pass multi-task networks with cross-task guided attention for brain tumor segmentation. IEEE Trans Image Process 29:4516–4529

Khalil HA, Darwish S, Ibrahim YM, Hassan OF (2020) 3D-MRI brain tumor detection model using modified version of level set segmentation based on dragonfly algorithm. Symmetry 12(8):1–22

Liu P, Dou Q, Wang Q, Heng P-A (2020) An encoder-decoder neural network with 3D squeeze-and-excitation and deep supervision for brain tumor segmentation. IEEE Access 8:34029–34037

Naser MA, Deen MJ (2020) Brain tumor segmentation and grading of lower-grade glioma using deep learning in MRI images. Comput Biol Med 121:1–8

Khalid S, Khalil T, Nasreen S (2014) A survey of feature selection and feature extraction techniques in machine learning. In: Proceedings of the 2014 science and information conference, Park Inn by Radisson Hotel, London Heathrow, pp 372–378. IEEE

Nayak DR, Dash R, Majhi B (2016) Brain MR image classification using two-dimensional discrete wavelet transform and AdaBoost with random forests. Neurocomputing 177:188–197

Mohan G, Subashini MM (2018) MRI based medical image analysis: survey on brain tumor grade classification. Biomed Signal Process Control 39:139–161

Deepa AR, Emmanuel Sam WR (2019) An efficient detection of brain tumor using fused feature adaptive firefly backpropagation neural network. Multimedia Tools Appl 78(9):11799–11814

Ahmed KB, Hall LO, Goldgof DB, Liu R, Gatenby RA (2017) Fine-tuning convolutional deep features for MRI based brain tumor classification. In: Proceedings of the Medical Imaging 2017: Computer-Aided Diagnosis, Orlando, Florida, USA, vol 10134, pp 1–7. SPIE

Yassin NIR, Omran S, El Houby EMF, Allam H (2018) Machine learning techniques for breast cancer computer aided diagnosis using different image modalities: a systematic review. Comput Methods Programs Biomed 156:25–45

El-Dahshan E-SA, Hosny T, Salem A-BM (2010) Hybrid intelligent techniques for MRI brain images classification. Digital Signal Process 20(2):433–441

Qurat-Ul-Ain, Latif G, Kazmi SB, Jaffar MA, Mirza AM (2010) Classification and segmentation of brain tumor using texture analysis. In: Proceedings of the Recent Advances in Artificial Intelligence, Knowledge Engineering and Data Bases, pp 147–155

Kharrat A, Gasmi K, Messaoud MB, Benamrane N, Abid M (2010) A hybrid approach for automatic classification of brain MRI using genetic algorithm and support vector machine. Leonardo J Sci 17(1):71–82

Zhang Y-D, Wang S, Wu L (2010) A novel method for magnetic resonance brain image classification based on adaptive chaotic PSO. Progress Electromagn Res 109:325–343

Yamamoto D, Arimura H, Kakeda S, Magome T, Yamashita Y, Toyofuku F, Ohki M, Higashida Y, Korogi Y (2010) Computer-aided detection of multiple sclerosis lesions in brain magnetic resonance images: false positive reduction scheme consisted of rule-based, level set method, and support vector machine. Comput Med Imaging Graph 34(5):404–413

Jafari M, Kasaei S (2011) Automatic brain tissue detection in mri images using seeded region growing segmentation and neural network classification. Aust J Basic Appl Sci 5(8):1066–1079

Zöllner FG, Emblem KE, Schad LR (2012) SVM-based glioma grading: optimization by feature reduction analysis. Z Med Phys 22(3):205–214

Jafarpour S, Sedghi Z, Amirani MC (2012) A robust brain MRI classification with GLCM features. Int J Comput Appl 37(12):1–5

Arimura H, Tokunaga C, Yamashita Y, Kuwazuru J (2012) Magnetic resonance image analysis for brain CAD systems with machine learning. In: Machine Learning in Computer-Aided Diagnosis: Medical Imaging Intelligence and Analysis, pp 258–296. IGI Global

Saritha M, Paul Joseph K, Mathew AT (2013) Classification of MRI brain images using combined wavelet entropy based spider web plots and probabilistic neural network. Pattern Recogn Lett 34(16):2151–2156

Kalbkhani H, Shayesteh MG, Zali-Vargahan B (2013) Robust algorithm for brain magnetic resonance image (MRI) classification based on GARCH variances series. Biomed Signal Process Control 8(6):909–919

Sindhumol S, Kumar A, Balakrishnan K (2013) Spectral clustering independent component analysis for tissue classification from brain mri. Biomed Signal Process Control 8(6):667–674

Ibrahim WH, AbdelRhman A, Osman A, Ibrahim Mohamed Y (2013) MRI brain image classification using neural networks. In: Proceedings of the 2013 International Conference on Computing, Electrical and Electronic Engineering (ICCEEE), Khartoum, Sudan, pp 253–258. IEEE

Amsaveni V, Albert Singh N, Dheeba J (2013) Computer aided detection of tumor in MRI brain images using cascaded correlation neural network. In: Proceedings of the IET Chennai Fourth International Conference on Sustainable Energy and Intelligent Systems (SEISCON 2013), Chennai, India, pp 527–532. IET

Bhanumurthy MY, Anne K (2014) An automated detection and segmentation of tumor in brain MRI using artificial intelligence. In: Proceedings of the 2014 IEEE International Conference on Computational Intelligence and Computing Research, Coimbatore, India, pp 1–6. IEEE

Babu Nandpuru H, Salankar SS, Bora VR (2014) MRI brain cancer classification using support vector machine. In: Proceedings of the 2014 IEEE Students’ Conference on Electrical, Electronics and Computer Science, Bhopal, India, pp 1–6. IEEE

Preethi G, Sornagopal V (2014) MRI image classification using GLCM texture features. In: Proceedings of the 2014 International Conference on Green Computing Communication and Electrical Engineering (ICGCCEE), Coimbatore, India, pp 1–6. IEEE

Nasir M, Khanum A, Baig A (2014) Classification of brain tumor types in MRI scans using normalized cross-correlation in polynomial domain. In: Proceedings of the 2014 12th International Conference on Frontiers of Information Technology, Islamabad, Pakistan, pp 280–285. IEEE

Xu Y, Jia Z, Ai Y, Zhang F, Lai M, Chang EI-C (2015) Deep convolutional activation features for large scale brain tumor histopathology image classification and segmentation. In: Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, pp 947–951. IEEE

Yang G, Zhang Y, Yang J, Ji G, Dong Z, Wang S, Feng C, Wang Q (2016) Automated classification of brain images using wavelet-energy and biogeography-based optimization. Multimedia Tools Appl 75(23):15601–15617

Machhale K, Babu Nandpuru H, Kapur V, Kosta L (2015) MRI brain cancer classification using hybrid classifier (SVM-KNN). In: Proceedings of the 2015 International Conference on Industrial Instrumentation and Control (ICIC), Pune, India, pp 60–65. IEEE

Kharrat A, Ben Halima M, Ben Ayed M (2015) MRI brain tumor classification using support vector machines and meta-heuristic method. In: Proceedings of the 2015 15th International Conference on Intelligent Systems Design and Applications (ISDA), Marrakech, Morocco, pp 446–451. IEEE

Rajesh Chandra G, Ramchand K, Rao H (2016) Tumor detection in brain using genetic algorithm. Procedia Comput Sci 79:449–457

Ishikawa Y, Washiya K, Aoki K, Nagahashi H (2016) Brain tumor classification of microscopy images using deep residual learning. In: Proceedings of the SPIE BioPhotonics Australasia, Adelaide, Australia, vol 10013, pp 1–10. SPIE

Mohsen H, El-Dahshan E-SA, El-Horbaty E-SM, Salem A-BM (2018) Classification using deep learning neural networks for brain tumors. Future Comput Inf J 3(1):68–71

Paul JS, Plassard AJ, Landman BA, Fabbri D (2017) Deep learning for brain tumor classification. In: Proceedings of the Medical Imaging 2017: Biomedical Applications in Molecular, Structural, and Functional Imaging, Orlando, Florida, USA, vol 10137, pp 1–16. SPIE