Abstract

Images acquired in poor weather conditions (haze, fog, smog, mist, etc.) are often severely degraded. Two types of particles exist in the atmosphere: dry particles (dust, smoke, etc.) and wet particles (water droplets, rain, etc.). Scattering and absorption by these particles introduce various adverse effects in the image, including reduced visibility, reduced contrast, and color distortions. Such degraded images are not acceptable for many computer vision applications, such as smart transportation, video surveillance, weather forecasting, and remote sensing. The computer vision task of mitigating this effect is known as image dehazing. These applications need a high-quality, haze-free input image to work accurately, and image dehazing methods supply it. The haze effect in the captured image depends on the distance from the observer to the scene. In addition, scattering by particles adds non-linear, data-dependent noise to the captured image. Single image dehazing relies on the physical model of hazy image formation, in which the depth or transmission is the key quantity that must be estimated to obtain a haze-free image. This review article groups recent dehazing methods into categories and elaborates on the popular methods in each category. The category-wise analysis reveals that deep learning methods and restoration-based methods with priors have attracted researchers' attention in recent years for solving two challenging problems of image dehazing: dense haze and non-homogeneous haze. Hardware implementation-based methods have also been introduced recently to assist smart transportation systems. This paper provides in-depth knowledge of the field, summarizes the progress made to date, and compares the performance (both qualitative and quantitative) of the latest works. It covers a detailed description of dehazing methods, motivation, popular and challenging datasets used for testing, metrics used for evaluation, and issues/challenges in this field from a new perspective. This paper will be useful to researchers at all levels, from novice to highly experienced. It also identifies research gaps where recent methods are lacking.


1 Introduction

Computer vision is the field of study that develops techniques to help computers gain a high-level understanding of digital images or videos. It automates various tasks and extracts useful information from images/videos with the help of artificial intelligence systems. Numerous computer vision applications, including smart transportation systems, video surveillance, object detection, and weather forecasting [1], require high-quality input images or videos to “see” and analyze the contents. Unfortunately, poor weather conditions (haze, fog, rain, etc.) diminish visibility and lead to the failure of these applications. An image captured under these circumstances suffers from various degradations, namely low contrast, faded colors and, most importantly, reduced visibility. These degradations arise because light is scattered by atmospheric particles (aerosols, water droplets, molecules, etc.) suspended in the air.

The role of image dehazing is to improve the visual quality of a degraded image and remove the influence of the weather. Therefore, an image dehazing algorithm acts as a preprocessing tool for many computer vision applications, as shown in Fig. 1.

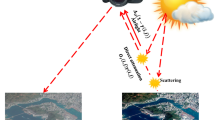

Fog, mist, and haze are atmospheric phenomena that reduce visibility in an image. Fog and mist both occur when the air contains wet particles or water droplets. The two terms are almost the same; the only difference is how far one can see. The term fog is generally used when visibility is less than 1 km; if one can see more than 1 km away, the condition is considered mist. Haze is a slightly different phenomenon, in which extremely small, dry particles (for example, air pollutants, dust, smoke, and chemicals) are suspended in the air. These dry particles are invisible to the naked eye but sufficient to degrade the quality of the image in terms of visibility, contrast, and color. Visibility is less than 1.25 miles in the presence of haze. These dry particles are generated by various sources, including farming, traffic, industry, and wildfires. Figure 2 shows example images of fog, mist, and haze, along with various sources of hazy image formation.

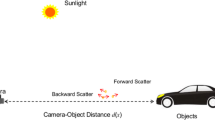

The hazy effect in the captured image is expressed by the atmospheric scattering model (ASM), or the physical model of hazy image formation, as shown in Fig. 3. When incident light is reflected from an object, the reflected light is attenuated over the distance between the observer and the scene. In addition, scattering by particles introduces airlight into the camera. Therefore, a hazy image is composed of direct attenuation and airlight. Direct attenuation distorts the color, whereas airlight reduces the visibility. The physical model is given as follows [2]:

\[I_{hazy}^{c}(x) = J_{haze - free}^{c}(x)\,T_{r}(x) + A_{t}^{c}\left(1 - T_{r}(x)\right)\]

where \(c \in \{ r,g,b\}\) is the color channel, \(I_{hazy}^{c}\) is the captured hazy image, \(J_{haze - free}^{c}\) is the haze-free image, \(A_{t}^{c}\) is the atmospheric light, \(T_{r}\) is the transmission medium, and x is a pixel position. The transmission describes the portion of light that reaches the camera directly, without being scattered. The value of the transmission medium lies in the range [0, 1]. Furthermore, it is expressed as an exponential function that depends on two parameters, the distance d and the scattering coefficient \(\beta\), as follows:

\[T_{r}(x) = e^{-\beta d(x)}\]

The haze-free image \(J_{haze - free}^{c}\) can be obtained by inverting the model as follows:

\[J_{haze - free}^{c}(x) = \frac{I_{hazy}^{c}(x) - A_{t}^{c}}{T_{r}(x)} + A_{t}^{c}\]

Single image dehazing (SID) is an ill-posed problem because two key parameters, \(A_{t}^{c}\) and \(T_{r}\), must be estimated from \(I_{hazy}^{c}\) alone to find the haze-free image \(J_{haze - free}^{c}\). The performance of a dehazing method therefore depends on how accurately these key parameters are estimated.
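To make the roles of the atmospheric light and the transmission concrete, the following minimal sketch applies the atmospheric scattering model in both directions on a toy two-pixel image. It assumes the commonly used form of the model (hazy = clean · T + A · (1 − T), with T = exp(−βd)); the function names, the depth values, the airlight, and the value of β are all hypothetical choices made for illustration, and plain Python lists are used only to keep the sketch dependency-free.

```python
import math

def transmission(depth, beta):
    """T_r(x) = exp(-beta * d(x)) for a 2-D depth map given as nested lists."""
    return [[math.exp(-beta * d) for d in row] for row in depth]

def add_haze(clean, trans, airlight):
    """Forward model: I = J * T + A * (1 - T), per pixel and per channel."""
    return [[tuple(j * t + a * (1.0 - t) for j, a in zip(pixel, airlight))
             for pixel, t in zip(row_j, row_t)]
            for row_j, row_t in zip(clean, trans)]

def remove_haze(hazy, trans, airlight):
    """Inverse model: J = (I - A) / T + A, valid when A and T are known."""
    return [[tuple((i - a) / t + a for i, a in zip(pixel, airlight))
             for pixel, t in zip(row_i, row_t)]
            for row_i, row_t in zip(hazy, trans)]

# Toy 1x2 image: the same surface color seen at a near (d = 1) and a far (d = 5) distance.
clean = [[(0.2, 0.4, 0.6), (0.2, 0.4, 0.6)]]
depth = [[1.0, 5.0]]
airlight = (0.9, 0.9, 0.9)  # assumed known here; it must be estimated in practice
beta = 0.5                  # assumed scattering coefficient

trans = transmission(depth, beta)
hazy = add_haze(clean, trans, airlight)
restored = remove_haze(hazy, trans, airlight)
```

With the true airlight and transmission, `restored` matches `clean` up to rounding, while the far pixel of `hazy` is pulled much closer to the airlight than the near pixel. In single image dehazing neither quantity is given, which is why the estimation step dominates the quality of the result.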

In the past, many dehazing methods have been proposed that use various priors or assumptions to compute the depth information. However, the performance of these methods depends on the validity of the priors, and violations may lead to issues such as color distortions, incomplete haze removal, and halo artifacts. Image enhancement-based dehazing methods have also been reported in the literature; they do not require estimation of the transmission or its costly refinement. Because they do not take the degradation mechanism into account while recovering an image, they suffer from over/under enhancement, over-saturation, and loss of information, and they are unable to deal with dense hazy images. To overcome the problems of restoration- and enhancement-based methods, many machine learning and deep learning methods have been successfully applied to compute an accurate transmission map. These methods require a vast number of hazy images and corresponding clean images to train the model. However, it is very difficult to obtain hazy images together with their ground-truth (GT) images in the real world. The related work section describes the recent dehazing methods of each category along with their pros and cons.

In this review article, we mainly focus on single image haze removal methods proposed from 2016 onwards. The major contributions are as follows:

(1) This paper provides an extensive study of various recent state-of-the-art dehazing methods. It classifies these methods into twelve categories: image enhancement, image restoration with prior, image fusion, superpixel, machine learning, deep learning, polarization, DCP based, airlight estimation, hardware implementation, non-homogeneous, and miscellaneous. All these methods are investigated on various dehazing parameters, namely key technique, dataset, issues of dehazing, evaluation metrics, etc.

(2) It provides a comprehensive study of various datasets used in image dehazing to date. It also discusses datasets of various haze densities, from thin haze to very dense haze, including real and synthetic hazy images. These datasets are assessed on various parameters, namely haze concentration, number of images, and performance of recent dehazing methods.

(3) This paper also explores different metrics introduced in recent works for the evaluation of dehazing algorithms, with their merits and demerits.

(4) Furthermore, this paper focuses on the latest technological advancements and developments in this field from the perspective of non-homogeneous haze removal, dense haze, hardware architecture, ensemble networks, and deep learning methods.

(5) Finally, it identifies research gaps in single image dehazing where recent state-of-the-art methods are lacking.

A few survey papers are available in the field of single image dehazing; however, they are limited to certain aspects. For instance, [3] concentrated on discussing various haze removal methods and quantitative results. Later, Wang et al. [4] added a description of different evaluation metrics. Singh et al. [5] explained numerous categories of dehazing methods with their pros and cons and analyzed the methods based on issues of dehazing. However, that survey did not provide a qualitative and quantitative analysis of dehazing methods, nor did it discuss the standard dehazing datasets available for assessment. In 2020, two survey papers [6, 7] were reported. However, they consider only a few recent papers from 2017 to 2020. This article considers approximately 150 recent papers, compared with 46 in [7] and 51 in [6]. The comparison with existing survey/review papers is given in Table 1, which shows the strengths of each survey on various aspects of image dehazing. In addition to the previous research, this paper explores various untouched haze removal techniques for handling the most challenging problems of dehazing, such as removal of non-homogeneous haze, superpixels, dense haze, and real-time applicability (hardware implementation). This article provides an extensive review of recent and popular dehazing techniques based on qualitative and quantitative comparisons, challenges in dehazing, available datasets, and evaluation metrics. It aspires to serve as a guide to all aspects of image dehazing and to help researchers find a direction for their work.

2 Applications of Image Dehazing

Image dehazing is an important area of research. The output of dehazing algorithms acts as an input to various vision applications. Some of the motivations are shown in Fig. 4 and discussed as follows:

2.1 Video Surveillance

A video surveillance system is a key component in the field of security. The effectiveness and accuracy of a visual surveillance system depend on the quality of its visual input. However, poor weather conditions affect the quality of that input. The video captured by a surveillance camera is degraded by scattering and absorption of light in the atmosphere. For example, video recorded in hazy weather has limited visibility, which could be problematic for police investigating a crime. Thus, these systems perform poorly in hazy weather conditions, and a robust surveillance system is required.

2.2 Intelligent Transportation System

Foggy weather conditions impair the driver’s capabilities and, because of limited visibility, significantly increase the risk of accidents and the travel time. In past years, fog-related road fatalities have increased significantly. Road crashes, injuries, or deaths on highways caused by poor weather conditions such as thick fog run into the thousands every year, and this number is increasing every year [8].

In addition to roads and highways, fog also affects other transportation systems such as airplanes and railways. Takeoff and landing of airplanes become very challenging in a hazy environment, so many flights are delayed or sometimes canceled. Similarly, in railway transportation, thick fog is a hazard to passengers and crew members that could easily result in loss of life, because the driver may miss signals due to impaired visibility. Therefore, an intelligent transportation system is required that can provide a clear view to the driver of these vehicles to save life and property.

2.3 Underwater Image Enhancement

Underwater imaging often suffers from poor visibility and color distortions. The poor visibility is produced by the haze effect caused by multiple scattering of light by water particles. Color distortion is due to the attenuation of light and gives the image a bluish cast. Therefore, an underwater vision system requires an image dehazing algorithm as a preprocessing step so that a human can see the underwater objects.

2.4 Remote Sensing

In remote sensing, images are captured to obtain information about objects or areas. These images are usually taken from satellites or aircraft. Because of the large distance between the camera and the scene, the haze effect is introduced in the captured image. Therefore, this application also demands image dehazing as a preprocessing tool to improve the visual quality of an image before analysis.

Besides these applications, image dehazing also plays an important role in other applications, such as astronomy, medical science, agronomy, border security, archaeology, environmental studies and many more.

Therefore, it is important for computer vision applications to improve the visual quality of the image and highlight the image details. With respect to hardware aspects of camera sensors, many super-telephoto lenses are designed to incorporate scientific filtering and coating to enhance the contrast of the image. However, these lenses are very expensive and bulky and not applicable in daily life. Therefore, the restoration of hazy images or videos has attracted increasing interest in the last few years.

3 Issues/Challenges of Image Dehazing

The dehazed image may suffer from various types of issues like color shift, over enhancement, structure damage or incomplete haze removal, as shown in Fig. 5.

Various issues of image dehazing [9]: a incomplete haze removal, b structure damage, c color shift, d over enhancement

3.1 Under/Over Enhancement

Restoration of hazy images often leads to one of two phenomena: under enhancement or over enhancement, as shown in Fig. 6. In under enhancement, haze is not completely removed from the original image, so visibility is not improved as desired. In over enhancement, the original information is changed in haze-free regions and a color shift is caused in hazy regions during the dehazing process [9]. This problem is generally observed in dense hazy regions, which have low contrast. Over dehazing makes the colors much darker and causes saturation of pixels.

An image dehazing algorithm must keep the information in haze-free regions unchanged while improving the visibility in hazy regions without color distortions.

3.2 Halo Artifact and Noise Amplification

Existing image dehazing methods generally use patch-based operations to estimate the transmission used to recover the hazy image. Inaccurate estimation of the transmission may lead to distortions in the dehazed image, as shown in Fig. 7. Most methods also assume that local patches have similar depth, so depth discontinuities or abrupt jumps in an image cause halo artifacts. To address halo artifacts, various refinement methods, such as guided filtering, contextual regularization, and total variation, are used in many works. Still, the problem persists: halo artifacts are reduced but not completely removed.

Moreover, in the presence of dense haze, noise and artifacts are not visible in the hazy images. Existing methods may amplify this noise and these artifacts during dehazing, depending on the depth and concentration of the haze [10]. Some of them introduce other distortions, such as a blurring effect, in the dehazed images.

3.3 Dense Fog Removal/ Different Foggy Weather Conditions

Among state-of-the-art dehazing methods, there is so far no single method that can handle all varying and challenging weather conditions, such as all types of haze ranging from thin to very thick, night-time haze, and non-homogeneous haze (uneven distribution of haze), as shown in Fig. 8.

Most methods work well on daytime scenes but fail in night-time hazy conditions due to inaccurate estimation of the airlight. Generally, the airlight is estimated from the brightest pixels. This estimator faces two challenges when applied to night-time scenes: (1) it is estimated globally over the entire image, whereas night-time scenes contain multiple local light sources that are non-uniform in nature; (2) it selects white pixels, which are the brightest pixels in a daytime hazy image, but this assumption does not hold for night-time scenes that exhibit strongly colored lighting [11].
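The failure mode of the brightest-pixel estimator can be illustrated with a small sketch. The function below is a generic, hypothetical version of such an estimator (it averages the brightest fraction of pixels, with the fraction chosen arbitrarily here); it is not the procedure of any specific method discussed in this review.

```python
def estimate_airlight(image, fraction=0.001):
    """Average the colors of the brightest `fraction` of pixels.

    `image` is a list of rows of (r, g, b) tuples with values in [0, 1].
    The default fraction is an assumed value for illustration.
    """
    pixels = [p for row in image for p in row]
    # Rank pixels by mean intensity, brightest first.
    pixels.sort(key=lambda p: sum(p) / 3.0, reverse=True)
    k = max(1, int(len(pixels) * fraction))
    top = pixels[:k]
    return tuple(sum(p[c] for p in top) / k for c in range(3))

# Toy night-time scene: dim bluish haze everywhere, plus one orange street lamp.
haze = (0.2, 0.2, 0.3)
lamp = (1.0, 0.6, 0.1)
night_scene = [[haze, haze, haze],
               [haze, lamp, haze],
               [haze, haze, haze]]

airlight = estimate_airlight(night_scene)
```

In this toy scene the single global estimate takes on the orange color of the lamp instead of the color of the haze, which corresponds to both challenges listed above: one value is used for the whole image, and the brightest pixel is not white.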

The majority of methods are able to remove mild or thin haze. In the presence of dense haze, they either fail to remove the haze completely or lose information through saturation of pixels. In thick fog, the scene reflection reaching the camera becomes very small because the transmission is small. The transmission is small because the scattering coefficient is large; meanwhile, the proportion of airlight increases significantly. Therefore, removing thick haze from such a minuscule reflection is very challenging.
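A short numerical sketch shows how quickly the scene contribution vanishes as the scattering coefficient grows. It assumes the exponential transmission exp(−βd) of the atmospheric scattering model; the three β values, the scene intensity, the airlight, and the distance are hypothetical numbers chosen only to contrast thin, moderate, and dense haze.

```python
import math

def direct_share(j, a, beta, d):
    """Return the transmission and the share of the observed intensity
    that comes from the attenuated scene (J * T) rather than from airlight."""
    t = math.exp(-beta * d)
    direct = j * t
    air = a * (1.0 - t)
    return t, direct / (direct + air)

for beta in (0.2, 1.0, 3.0):  # hypothetical thin, moderate, and dense haze
    t, share = direct_share(j=0.5, a=1.0, beta=beta, d=2.0)
    # 1 / T is the factor by which inversion scales the signal and any noise.
    print(f"beta={beta}: T={t:.4f}, direct share={share:.4f}, gain 1/T={1.0 / t:.1f}")
```

As β increases, the transmission and the direct share both fall towards zero while the gain 1/T needed to invert the model grows, which is consistent with the noise amplification discussed in Sect. 3.2.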

3.4 Adaptive Parameter Setting

The performance of dehazing methods depends greatly on the selection of different parameters, namely patch size, dehazing control parameter, gamma correction, filter size, regularization term, scaling factor, number of superpixels, etc. For example, if the patch size is small, the method may underestimate the transmission, especially in regions with bright and white objects, which may lead to over enhancement. By contrast, if the patch size is large, it may introduce halo artifacts at depth discontinuities and will also increase the computation [12]. Therefore, for a good recovery result, the patch size must be selected adaptively depending on the pixels. Another parameter used by most methods is the dehazing control parameter, as shown in Fig. 9.
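The patch-size trade-off can be reproduced on a toy example. The sketch below assumes the widely used dark-channel formulation, in which the transmission is one minus ω times the dark channel of the airlight-normalized image; the value ω = 0.95, the patch radii, and the pixel values are assumptions made for illustration and are not taken from a particular method in this review.

```python
def dark_channel(image, radius):
    """Minimum over the color channels, then over a (2*radius+1)^2 patch."""
    h, w = len(image), len(image[0])
    min_rgb = [[min(p) for p in row] for row in image]
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            out[y][x] = min(
                min_rgb[yy][xx]
                for yy in range(max(0, y - radius), min(h, y + radius + 1))
                for xx in range(max(0, x - radius), min(w, x + radius + 1))
            )
    return out

def dcp_transmission(image, airlight, radius, omega=0.95):
    """Dark-channel transmission estimate: 1 - omega * dark(I / A)."""
    normalized = [[tuple(c / a for c, a in zip(p, airlight)) for p in row]
                  for row in image]
    dark = dark_channel(normalized, radius)
    return [[1.0 - omega * v for v in row] for row in dark]

near = (0.3, 0.2, 0.1)      # nearby colored surface under thin haze
white = (0.95, 0.95, 0.95)  # nearby white object
far = (0.8, 0.8, 0.8)       # distant region dominated by haze
row = [[near, white, near, near, far, far, far]]
airlight = (1.0, 1.0, 1.0)

t_small = dcp_transmission(row, airlight, radius=0)  # per-pixel patch
t_large = dcp_transmission(row, airlight, radius=1)  # 3x3 patch
```

With the per-pixel patch, the nearby white object (index 1) receives a very low transmission, as if it were dense haze. With the larger patch, that error disappears, but the first distant pixel next to the depth edge (index 4) inherits the high transmission of the nearby surface, which is the kind of error that shows up as a halo.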

Restored images with different δ by method [30]. a Original image, b δ = 1.0, c δ = 0.8, d δ = 0.6, e δ = 0.4

All these parameters are set manually according to the experimental setup and may not suit the different degrees of haze present in images. They must be set adaptively to improve performance, because the haze density and the atmospheric veil vary from image to image.

3.5 Speed of Dehazing

Another drawback of existing dehazing methods is the computational complexity of the dehazing process. Dehazing an image/video in real time, which would benefit vision applications such as intelligent transportation systems or video surveillance, is still very challenging. The time complexity can be reduced by jointly estimating the airlight and transmission and by avoiding the costly refinement of the transmission.

4 Related Works

In recent years, significant progress has been made in the field of image dehazing. We present recent and popular dehazing methods in this section. For convenience, we have divided these methods into the following categories: (1) image enhancement based, (2) image restoration with priors, (3) image fusion based, (4) non-homogeneous haze, (5) hardware implementation based, (6) polarization based, (7) traditional learning based, (8) deep learning based, and (9) superpixel based. Furthermore, subcategories of each category are identified, as shown in Fig. 10.

4.1 Image Enhancement based Methods

Image enhancement-based methods can be divided into two sub-categories. (1) Methods that do not consider the atmospheric scattering model or degradation mechanism when enhancing the visual quality of hazy images; they therefore do not estimate the transmission or atmospheric light. (2) Methods in which image enhancement operations are used to estimate the transmission or airlight; these may also fall into other categories, such as restoration-based or fusion-based methods. Both sub-categories use various image enhancement techniques, including histogram equalization [13, 14], bi-histogram modification [15], weighted histograms [16], gamma correction [13, 17,18,19,20], multi-scale Retinex [21], wavelet decomposition [22,23,24,25], multi-scale gradient domain contrast enhancement [17], texture filtering [26], bilateral filter [26, 27], white balance method [26, 28], median filtering [28, 29], linear transformation [30], morphological constructions [31], discrete cosine transform [14], guided filter [32,33,34,35,36,37,38,39], anisotropic diffusion [40, 41], contrast enhancement [42,43,44], quadtree decomposition [30, 45, 46], contextual regularization [45, 47,48,49], weighted L1-norm regularization [50], and total variation [51,52,53] (Table 2).

Wang et al. [21] proposed a multi-scale Retinex-based algorithm with color restoration to compute the transmission. The authors estimated the atmospheric light from the dark channel image and a decision image obtained with a threshold. However, the dehazed image contains small halos and also appears dark in regions of small gradients and in bright areas. Cui et al. [50] proposed a SID method based on region segmentation, which separates the hazy image into bright and non-bright regions. This addresses the DCP method's overestimation of the transmission in non-bright regions and underestimation in bright regions. Weighted L1-norm regularization is used to refine the transmission. However, this method suffers from over-saturation. Moreover, it underestimates the transmission for objects that resemble dense haze, which leads to over enhancement. Liu et al. [53] addressed two challenging problems of existing dehazing methods: (1) halo artifacts due to insufficient edges in the estimated transmission and (2) amplification of noise and artifacts in the presence of dense haze. This method estimates the initial transmission with a boundary constraint and refines it with non-local total variation (NLTV) regularization. However, it fails in the presence of white objects such as clouds and dense haze, and as a result the dehazed image looks darker. Furthermore, a post-processing step is required to improve the quality of the haze-free image. Moreover, its SSIM and CIEDE2000 scores indicate that its performance on synthetic hazy images is not satisfactory. Raikwar et al. [47] estimated a lower bound on the transmission by considering the difference between the minimum channels of the hazy and haze-free images. The lower bound is characterized by a bounding function and a quality control parameter. The bounding function is estimated by a non-linear model, and the control parameter controls the degree of dehazing. However, this method is unable to increase the contrast of dense hazy images. Wu et al. [54] proposed a variational model to remove artifacts caused by noise in the hazy image. They proposed a transmission-aware non-local regularization that suppresses noise and preserves the fine details of the dehazed image. In addition, a semantic-guided regularization is proposed to smooth the transmission. This method provides satisfactory results without amplifying noise. However, it fails on non-homogeneous hazy images. Furthermore, when objects lie in the same plane and look similar, vanishing lines are falsely estimated and the segmentation cannot be updated. In this case, the method wrongly estimates the transmission, scene radiance, and segmentation map of the hazy image.

In summary, image enhancement-based methods do not use the physical model of haze formation and do not concentrate on image quality. They highlight only certain details of the image and may reduce or remove some information from the dehazed image. These methods suffer from over-saturation of pixels and over enhancement. In addition, they are not able to remove dense haze. However, when image enhancement-based techniques are combined with a physical model, as in [22, 30, 45], their performance improves considerably.

4.2 Image Fusion Based Methods

Image fusion is an image processing technique that selects the best regions from multiple images and combines them into a single high-quality image. A fused image is generated in a transformed domain, such as Gaussian and Laplacian pyramids, gradient domain, linear, high-boost filtering, guided filtering, variation based, etc. (Table 3).

The method in [52] uses multiple priors to estimate the global atmospheric light. Three priors (color saturation, brightness, and gradient map) are combined to judge whether a pixel belongs to the atmospheric light. This method computes two coarse transmission maps: a pixel-based transmission map (PTM) and a block-level transmission map (BTM). A fusion procedure is employed to combine these two transmissions as follows:

A Laplacian pyramid is used to compute the transmission map, where N is the number of decomposition levels in the Laplacian pyramid, Pi and Bi denote the decomposition results of the PTM and BTM, respectively, and Fi is the linear fusion of the two transmissions Pi and Bi. The fused transmission is then refined by total variation. This method suffers from various problems, e.g., incomplete haze removal, inability to highlight the local details of the image, and inability to remove dense haze.

Existing deep learning methods are typically trained on synthetic indoor hazy images. Therefore, their performance is not satisfactory on outdoor hazy images. Park et al. [55] proposed a heterogeneous generative adversarial network (GAN), consisting of a CycleGAN and a conditional GAN, for restoring a haze-free image while preserving texture details. In Phase 1, a CycleGAN is trained on unpaired outdoor synthetic hazy images. Phase 2 utilizes several networks: atmospheric light estimation, transmission map estimation, and a fusion CNN. Finally, these three networks are trained through adversarial learning. The fusion CNN combines the outputs of Phase 1 and Phase 2 to produce the dehazed image.

Zhu et al. [56] proposed a fusion-based algorithm that solves the image dehazing problem without considering the degradation mechanism. A set of under-exposed images is generated using gamma correction coefficients. A guided filter is used to decompose each under-exposed image into local and global components. For the local components, the exposure quality of the image is measured by applying an average filter to the luminance component. The global components reflect the structural information of the image, and their weight is calculated from the initial global components and a quadratic function of the average luminance. Once the weights for the under-exposed images are ready, the images are fused pixel-wise. The global components Bi and local components Di of the multiple gamma-corrected input images are fused as shown:

where \(\alpha \ge 1\) controls the local details in the fused image. Finally, to improve the quality of the dehazed image in terms of color quality, saturation adjustment is performed. The framework of this method is shown in Fig. 11. The overall performance of this method is good and achieves satisfactory results with computational efficiency.
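The first step of such a multi-exposure approach, generating under-exposed versions of the hazy input by gamma correction, can be sketched as follows. This is only an illustration of the gamma-correction step under assumed settings: the gamma values and the toy image are hypothetical, and the guided-filter decomposition, weighting, and fusion steps of [56] are not reproduced here.

```python
def gamma_correct(image, gamma):
    """Apply I ** gamma to every channel; for values in [0, 1], gamma > 1 darkens."""
    return [[tuple(c ** gamma for c in p) for p in row] for row in image]

def under_exposed_stack(image, gammas=(2.0, 3.0, 4.0)):
    """Build a set of artificially under-exposed images (gammas are assumed values)."""
    return [gamma_correct(image, g) for g in gammas]

def mean_luminance(image):
    """Average of the per-pixel channel means, used here as a simple brightness measure."""
    values = [sum(p) / 3.0 for row in image for p in row]
    return sum(values) / len(values)

# Toy hazy image: bright and washed out, as is typical for haze.
hazy = [[(0.7, 0.7, 0.75), (0.8, 0.8, 0.85)],
        [(0.6, 0.65, 0.7), (0.9, 0.9, 0.9)]]

stack = under_exposed_stack(hazy)
luminances = [mean_luminance(img) for img in stack]
```

Each image in `stack` is darker than the previous one, and darker intensities are spread over a wider range, which is why under-exposed versions of a hazy image tend to reveal more contrast in washed-out regions before fusion.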

The framework of the method [56]

Yuan et al. [57] proposed a transmission fusion strategy for handling normal and bright regions of the hazy image. They use soft segmentation based on image matting to segment the image. Means and variances of local patches are calculated, and binary classification is performed to generate the trimap. In the next step, image matting segments the hazy image into normal and bright regions. For normal regions, the transmission is calculated by DCP, while the transmission for bright regions is calculated by the atmospheric veil correction (AVC) method. Finally, a fuzzy fusion method fuses the two transmissions obtained by DCP and AVC. The framework of the method [57] is shown in Fig. 12. This method is tested on various challenging hazy images. However, it has high computational complexity due to the estimation of two transmissions, binary classification, and fuzzy fusion. It also suffers from over enhancement and halo artifacts.

The framework of the method [57]

Ma et al. [58] proposed a method to enhance the visibility of sea fog images. In the fusion process, the first image is obtained by a linear transformation, and the second image is generated by a high-boost filtering algorithm based on a guided filter. A simple fusion process combines these two images. The dehazed image is obtained by performing white balancing on the fused image. However, this method produces halo artifacts and is unable to remove noise in the dehazed image.

Son et al. [59] proposed a near-infrared fusion model to deal with color distortions and haze removal. This method adds color and depth regularizations to the traditional degradation model of haze. The color regularization assigns colors to the haze-free image based on colors from the colorized near-infrared image and the visible color image. The depth regularization estimates the depth of the colorized near-infrared image. Finally, both regularizations transfer the visibility and colors into a dehazed version of the captured visible image. Shibata et al. [60] focused on developing an application-adaptive importance measure for image fusion that can be applied to many applications, including night vision, temperature-perceptible fusion, depth-perceptible fusion, haze removal, and image restoration. This method is a learning-based framework that extracts various features (Gabor, intensity, local contrast, gradient) from the decomposed images and learns the important areas of the image without knowing the application. Zhao et al. [61] handled two problems of dehazing: misestimation of the transmission and oversaturation. The method first identifies the edges at which the transmission is misestimated, called TME. Accordingly, the hazy image is divided into TME and non-TME regions. Multi-scale fusion is used to fuse the patch-wise and pixel-wise transmissions. This method greatly enhances the visibility of the hazy image. However, it has a high computation time. Moreover, two post-processing methods (Fast Gradient Domain GIF and exposure enhancement) must be applied to the fused image to obtain the final haze-free image.

Agrawal et al. [62] proposed a fusion method based on the joint cumulative distribution function (JCDF). This method dehazes long-shot hazy images without color distortions in nearby regions while enhancing visibility in faraway regions. It generates multiple images from different modules, such as faraway, nearby, and CLAHE. Finally, these images are fused into a single high-quality, artifact-free image in the gradient domain.

The method uses the following JCDF equation to generate multiple images in nearby and faraway modules:

where \(z = x_{1} + x_{2} = x^{{d_{\min } }} + x^{{d_{\max } }}\), \(d_{\min }\) deals with the fog in nearby regions, and \(d_{\max }\) deals with the fog in faraway regions. The parameters \(d_{\min }\) and \(d_{\max }\) are set to 2 and 10, respectively. \(\lambda\) is the dehazing parameter and is used to generate the images for the fusion process. The method generates one image with \(\lambda = 2\) in the faraway region and three images in the nearby region with \(\lambda = 5,8,40\) to avoid over-saturation and color distortions. Furthermore, to increase the contrast, CLAHE is used to generate one more image. Finally, all these images are fused into a single dehazed image in the gradient domain, as shown in Fig. 13.

Recently, several effective fusion-based techniques were introduced which combine the multiple images generated from image enhancement or restoration-based methods. These methods successfully solve the problem of DCP, edge preservation, dense haze removal and halo artifacts. However, the fusion procedure may be complex and the dehazing speed may be decreased due to the generation of multiple images from enhancement-based operators.

4.3 Superpixel Based Dehazing

Another category of dehazing method introduced recently is superpixel based. The superpixels are utilized in dehazing methods in two ways. First, they are used to segment the sky and non-sky regions to remove the problem of color distortions or color artifacts of DCP in sky regions. Another use of superpixel segmentation is to replace the patch-based operations with a superpixel. It offers two advantages: good dehazing speed and reduction of the halo artifacts (Table 4).
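As an illustration of the second use, the sketch below computes a dark channel in which the minimum is taken over each superpixel rather than over a square patch. It is a minimal NumPy sketch, not the procedure of any specific cited method: the label map `labels` is a hypothetical input assumed to come from any superpixel segmentation algorithm, and the function name is illustrative.

```python
import numpy as np

def superpixel_dark_channel(image, labels):
    """Dark channel with the minimum taken per superpixel instead of per patch.

    image  : float array (H, W, 3) with values in [0, 1]
    labels : int array (H, W), superpixel index of each pixel
             (hypothetical input from any segmentation algorithm)
    """
    per_pixel_min = image.min(axis=2)            # minimum over the color channels
    n_regions = int(labels.max()) + 1
    region_min = np.full(n_regions, np.inf)
    # Unbuffered in-place minimum: each pixel updates the entry of its own region.
    np.minimum.at(region_min, labels.ravel(), per_pixel_min.ravel())
    return region_min[labels]                    # map region values back to pixels

# Toy 4x4 image split into two "superpixels" (left and right halves).
image = np.full((4, 4, 3), 0.8)
image[1, 0] = [0.1, 0.5, 0.9]                    # one dark pixel in the left region
labels = np.zeros((4, 4), dtype=int)
labels[:, 2:] = 1
dark = superpixel_dark_channel(image, labels)
```

Because each region is processed once and region boundaries follow image edges, this kind of replacement is what gives superpixel methods their reduced halo artifacts compared with fixed square patches.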

Two problems are associated with superpixel-based approaches: over-enhancement and time complexity. In these approaches, the number of superpixels is decided manually. A higher number of superpixels may darken the colors, while a smaller number may not be sufficient to remove the haze. Another problem is the selection of a superpixel segmentation algorithm. Some algorithms have high computational complexity. Therefore, it is advisable to select an algorithm that extracts the superpixels in real time.

4.4 Prior Based Methods

The restoration-based methods use the physical model of haze formation. They compute the transmission map or depth map based on priors or assumptions, such as dark channel prior [63], color attenuation prior [64], average saturation prior [65], non-local prior [66], gradient profile prior [27], color ellipsoid prior [67], etc.

Berman et al. [66] proposed a non-local prior as opposed to priors based on local patches. According to this prior, a haze-free image can be expressed by a few hundred colors from the RGB cluster and these pixels of RGB clusters are spread over the entire image. Each cluster in RGB space can be represented using lines termed haze-lines. These haze lines are used to estimate the atmospheric light, distance map and haze-free image. The failure case of this method is the non-uniform lighting which may lead to over enhancement and artifacts (Table 5).

Singh et al. [40] handle the problem of preserving texture details in the presence of a complex background and a large haze gradient. They proposed a new prior, called the gradient profile prior, to evaluate the depth map. The transmission map is refined by anisotropic diffusion and an iterative learning-based image filter. The image gradient gives the direction and magnitude and is calculated as follows:

where \(\frac{\partial I}{{\partial m}}\) represents the partial derivative of the image in the m direction and \(\frac{\partial I}{{\partial n}}\) the partial derivative in the n direction. \(\frac{\partial I}{{\partial m}}\) is computed at each pixel as the difference between the pixels before and after it, as follows:

and similarly, \(\frac{\partial I}{{\partial n}}\) is written as:

The maximum gradient values in I are taken as the global atmospheric light, which is estimated as follows:

and transmission map is estimated as follows:

where \(\Delta n \in \Omega (j)\left( {\Delta c\frac{{I_{m}^{c} (n)}}{{A_{l}^{c} }}} \right)\) is the gradient profile prior of the normalized image. This overcomes the sky-region problem of the DCP method because, in sky regions, the prior tends toward 1 and \(t(j)\) tends toward 0. A small amount of haze, controlled by \(\beta\), is retained so that the image looks more natural.
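The gradient computation described above (a difference between the pixels before and after each position) can be sketched as follows. This is a minimal NumPy illustration, not the implementation of [40]: the factor 1/2, the zero gradient at the borders and the mapping of m to rows and n to columns are assumptions.

```python
import numpy as np

def image_gradient(I):
    """Central-difference gradient of a 2-D image I (assumed: m = rows, n = columns)."""
    I = np.asarray(I, dtype=float)
    dI_dm = np.zeros_like(I)
    dI_dn = np.zeros_like(I)
    # Difference between the pixel after and the pixel before, halved (assumption).
    dI_dm[1:-1, :] = (I[2:, :] - I[:-2, :]) / 2.0
    dI_dn[:, 1:-1] = (I[:, 2:] - I[:, :-2]) / 2.0
    magnitude = np.hypot(dI_dm, dI_dn)           # gradient magnitude
    direction = np.arctan2(dI_dn, dI_dm)         # gradient direction in radians
    return dI_dm, dI_dn, magnitude, direction

I = np.array([[0.0, 0.0, 0.0],
              [1.0, 2.0, 3.0],
              [4.0, 4.0, 4.0]])
gm, gn, mag, ang = image_gradient(I)
```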

Most of the prior based methods follow a physical model of haze formation which assumes single scattering under the homogeneous haze. However, in a realistic environment, haze behavior is non-homogeneous and there are multiple sources of scattering [68]. Besides, the dehazing results depend on the validity of priors. If assumptions or priors do not hold, it may result in various issues, such as incomplete haze removal, color distortions or artifacts due to the wrong estimation of the transmission.

4.5 Polarization Based Dehazing

The polarization-based methods utilize the polarization characteristics of light. They restore the depth information of the hazy image using multiple images captured at different polarizer orientations, generally 0° and 90° and represented as I0 and I90. Some methods in this category are listed in Table 6.

Polarization-based dehazing methods have a great advantage in terms of high efficiency and low computational complexity. These methods are effective in all kinds of turbid media, including haze, fog, water, etc. They are also capable of restoring dense hazy images with detailed information. However, they require a precise selection of image regions, such as the sky region, to estimate the key parameters, which is not always possible in real-world scenes. Also, photon noise, a well-known quantum-mechanical effect, is ignored by most of the existing polarization-based methods, resulting in amplification of noise in the dehazed image.
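To make the role of the two polarized images and of the sky-derived parameters concrete, the sketch below follows the classical two-image polarization formulation. It is a simplified illustration under stated assumptions, not the procedure of any method in Table 6: `i0` is assumed to be the orientation with the most airlight and `i90` the one with the least, and the degree of polarization of the airlight `p` and the airlight at infinity `a_inf` are assumed to have been estimated beforehand (for example from a sky region).

```python
import numpy as np

def polarization_dehaze(i0, i90, p, a_inf, t_min=0.1):
    """Recover scene radiance from two polarized images (illustrative sketch).

    i0, i90 : images at the two polarizer orientations (i0 assumed brighter)
    p       : degree of polarization of the airlight (assumed known)
    a_inf   : airlight at infinite distance (assumed known)
    """
    total = i0 + i90                                   # total intensity
    airlight = np.clip((i0 - i90) / p, 0.0, a_inf)     # airlight from the polarized difference
    t = np.clip(1.0 - airlight / a_inf, t_min, 1.0)    # transmission, floored to limit noise
    radiance = (total - airlight) / t
    return radiance, t

# Synthetic check: radiance 0.4 seen through transmission 0.5 with a_inf = 1, p = 0.5.
true_j, true_t, a_inf, p = 0.4, 0.5, 1.0, 0.5
air = a_inf * (1.0 - true_t)
direct = true_j * true_t                               # assumed unpolarized, split equally
i0 = np.full((2, 2), direct / 2 + air * (1 + p) / 2)
i90 = np.full((2, 2), direct / 2 + air * (1 - p) / 2)
radiance, t = polarization_dehaze(i0, i90, p, a_inf)
```

The division by `p` and by `t` is where noise amplification enters: when either value is small, small errors in the measured difference are magnified, which is why the sketch floors the transmission at `t_min`.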

4.6 DCP Based Dehazing

Dark channel prior (DCP) is a very simple and popular prior for haze removal. It is based on the observation that, in haze-free images, at least one color channel is significantly dark, i.e., the minimum color channel in a haze-free image is very close to 0 except in sky regions. This prior was introduced in 2010. Since then, a lot of research has been carried out to improve the performance of DCP. In this section, we discuss recent methods based on DCP along with the problem of DCP each of them solves (Table 7).
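For reference, the original prior can be sketched in a few lines: the dark channel is the minimum over the color channels followed by a minimum over a local patch, and the transmission is estimated from the dark channel of the airlight-normalized image. This is a minimal NumPy sketch of the commonly used formulation; the patch size, the edge padding and the factor `omega` are illustrative choices, and the atmospheric light is assumed to be given.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def dark_channel(image, patch=3):
    """Minimum over color channels, then minimum over a patch x patch window."""
    pad = patch // 2
    min_rgb = image.min(axis=2)
    padded = np.pad(min_rgb, pad, mode="edge")          # replicate borders (assumption)
    windows = sliding_window_view(padded, (patch, patch))
    return windows.min(axis=(2, 3))

def dcp_transmission(hazy, airlight, omega=0.95, patch=3):
    """Transmission estimate t = 1 - omega * dark_channel(hazy / airlight)."""
    normalized = hazy / airlight                        # airlight has shape (3,)
    return 1.0 - omega * dark_channel(normalized, patch)

# Haze-free image whose blue channel is zero, seen through transmission 0.6.
clean = np.zeros((5, 5, 3))
clean[..., 0], clean[..., 1] = 0.7, 0.5
airlight = np.array([1.0, 1.0, 1.0])
hazy = clean * 0.6 + airlight * 0.4
t_clean = dcp_transmission(clean, airlight)
t_hazy = dcp_transmission(hazy, airlight)
```

With `omega` below 1, the estimate is deliberately slightly higher than the true transmission, which keeps a trace of haze in distant regions.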

Atmospheric particles degrade image quality through blurring, distortion and color attenuation, and cause low visibility. The method [69] proposed an improved version of DCP to handle the artifacts of the original DCP method. This method calculates the dark channel over a square window α of size l as follows:

where α is a square window of size l and calculated as follows:

This method manages to remove the artifacts, but it does not match the DCP method in quantitative evaluation.

Chen et al. [51] proposed a DCP-based method for suppressing artifacts and noise using gradient residual minimization. However, due to the ambiguity between artifacts and objects, it is unable to increase the contrast of objects located far away, and it slightly blurs the details.

In summary, many researchers have addressed the problems of DCP and presented their solutions accordingly. For example, the method [31] proposed an alternative method for fast computation of the transmission map using morphological reconstruction. Since the performance of DCP is not good in sky regions, the method [46] proposed a solution using quadtree decomposition and a region-wise transmission map. The method [70] removes the color distortions of bright white objects using superpixels. The method [71] removes the halo artifacts of DCP using energy minimization.

4.7 Airlight Based Methods

The existing dehazing methods focus on estimating the transmission only and ignore the contribution of airlight in the dehazing process. These methods produce over-smoothed images without fine details. Two factors contribute to this problem: wrong estimation of airlight and neglect of multiple scattering. Besides, inaccurate airlight is also responsible for color distortions in the dehazed image. Therefore, some works related to the estimation of airlight have recently been reported in the literature (Table 8).

Therefore, the estimation of the airlight is as important as the estimation of the transmission. Inaccurate estimation of the atmospheric light may make the haze-free image look unrealistic and cause color distortions in the dehazed image.

4.8 Hardware Implementation Based Methods

In recent years, significant progress has been made toward the development of real-time dehazing applications. Real-time dehazing is in high demand in smart transportation systems and advanced driver assistance systems (ADAS). These applications demand a high frame rate, low-cost hardware and low power consumption. To date, methods that fulfill these requirements are very rare. Image dehazing consists of many steps (estimation of the transmission and airlight, refinement of the transmission, recovery of the haze-free image and an optional post-processing operation on the haze-free image), which leads to computational complexity. Many hardware platforms and technologies have been used, such as the Cortex A8 processor, field-programmable gate array (FPGA), TSMC 0.13-μm, TSMC 0.18-μm, DSP processor, graphics processing unit (GPU) and application-specific integrated circuit (ASIC). Therefore, a dehazing method requires hardware implementation for resource-constrained embedded systems to meet the real-time challenge. This section discusses the state-of-the-art methods in terms of hardware architecture (Table 9).

Shiau et al. [72] proposed an extremum approximate method to estimate the atmospheric light; it uses a 3 × 3 minimum filter to obtain the dark channel and contour-preserving estimation to calculate the transmission. This method is implemented on an 11-stage pipeline architecture for real-time applications. The architecture is divided into four modules: register bank, atmospheric light estimation, transmission estimation and scene recovery, as shown in Fig. 14. It can process one pixel per clock cycle and achieves 200 MHz with a gate count of 12,816 in TSMC 0.13-μm technology. The power consumption is 11.9 mW.

Fig. 14 General framework of hardware-based implementation [72]

The register bank module provides the nine pixel values of the current 3 × 3 window as input to the atmospheric light estimation (ALE) module. Line buffers are used to store the pixel values of two rows of the input hazy image. Because ALE and transmission estimation (TE) are independent, clock gating helps to switch between them to save power.
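The line-buffer and register-bank arrangement can be mimicked in software to see why one output per incoming pixel is possible. The following pure-Python sketch is a behavioral model only, not the circuit of [72]: the function name, the handling of borders (only interior pixels produce an output) and the data layout are assumptions.

```python
def streaming_min3x3(rows, width):
    """3x3 minimum filter over a raster-scanned image using two line buffers.

    Returns a list of (row, col, minimum) for every interior pixel.
    """
    line1 = [0] * width                      # line buffer holding row r-1
    line2 = [0] * width                      # line buffer holding row r-2
    window = [[0] * 3 for _ in range(3)]     # register bank: three 3-pixel columns
    out = []
    for r, row in enumerate(rows):
        for c, pixel in enumerate(row):      # one new pixel per "clock cycle"
            column = [line2[c], line1[c], pixel]
            window = [window[1], window[2], column]   # shift the window one column
            line2[c] = line1[c]              # age the line buffers
            line1[c] = pixel
            if r >= 2 and c >= 2:            # window is fully inside the image
                out.append((r - 1, c - 1, min(min(col) for col in window)))
    return out

img = [[5, 3, 8, 1],
       [4, 7, 2, 9],
       [6, 1, 5, 3],
       [9, 9, 0, 9]]
result = streaming_min3x3(img, width=4)
```

Only two image rows are ever stored, regardless of image height, which matches the two-row line buffers described above. At one pixel per clock cycle, a 200 MHz clock corresponds to 200 million pixels per second.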

4.9 Supervised Learning/Machine Learning Based Methods

Although numerous methods have been proposed in the literature, most are restricted to hand-crafted features, and effective and reliable restoration of a hazy image is still an open challenge. The accuracy of the restoration-based methods depends on the validity of the prior. When the prior fails, they may cause various issues, such as residual haze or an unrealistic dehazed image. Therefore, efforts have been made toward developing machine learning methods for reliable estimation of the transmission for restoring a haze-free image. However, these techniques require a vast amount of hazy images with their ground truth images, which is not available. For training the model, a large amount of synthetic data is generated using Eq. 1, which limits the performance when the models are tested on natural or realistic hazy images. For ease of understanding, machine learning methods are further categorized as traditional (simple) learning and deep learning. This section focuses on simple learning techniques. These techniques use linear and non-linear regression, support vector regression, linear models, radial basis functions, conditional random fields, etc. (Table 10).

4.10 Deep Learning Based Methods

Recently, deep learning-based methods have attracted researchers and have been successfully applied to dehazing. These techniques not only remove the haze from an image but also produce a high-quality dehazed image quickly. Two types of deep learning methods exist in the literature: those that utilize the physical model [73,74,75] and those that do not [76,77,78,79,80]. Furthermore, some techniques [73,74,75, 77, 79] require pairs of hazy images and their corresponding GT images for training the model, while other techniques do not need paired hazy and haze-free images for training [76, 80, 81]. Several deep learning-based techniques have been reported, including multi-scale convolutional neural network (MSCNN) [73], Dehaze Net [74], All-in-One Dehazing Network (AOD-Net) [75], Cycle-Dehaze [76], Gated Fusion Network [77], Generic Model-Agnostic (GMAN) [78], back projected pyramid network [79] and Double DIP [80] (Table 11).

The method in [82] proposes a variational and deep CNN-based dehazing approach for estimating the transmission, airlight and dehazed image simultaneously. The deep CNN is employed to learn haze-relevant priors (fidelity terms and prior terms). Furthermore, an iterative optimization method based on gradient descent is utilized to solve the variational model.

The method [83] proposed a GAN-based method that jointly learns the transmission and the haze-free image using two loss functions (perceptual loss and Euclidean distance). In the first step, the transmission is estimated from the hazy image and combined with high-dimensional features. Afterward, both features and transmission are fed to the guided dehazing module to recover a haze-free image. This approach is shown in Fig. 15.

Fig. 15 A framework of the GAN-based image dehazing method [83]

The traditional methods used hand-crafted features such as contrast maximization, dark channel, etc. The method [84] used an encoder-decoder based structure called gated context aggregation network (GCANet) to directly recover a haze-free image. This architecture utilized smoothed dilated convolution to avoid the artifact. Moreover, a subnetwork is proposed to fuse the features at different levels.

Zhang et al. [85] presented a multi-scale dehazing network called the perceptual pyramid deep network. This encoder-decoder method directly learns the mapping between a hazy and a clear image without estimating the transmission map. The encoder is constructed from dense blocks and residual blocks, while the decoder consists of a dense residual block with a pyramid pooling module to retain the contextual information of the scene, as shown in Fig. 16. The network is optimized with mean squared error and perceptual losses.

Fig. 16 Encoder-decoder structure framework of image dehazing [85]

Qin et al. [86] proposed FFA-Net (feature fusion attention network) to obtain a haze-free image. This method consists of three modules: a feature attention module (which combines channel attention and pixel attention and focuses on thick haze removal), local residual learning (which deals with thin haze) and feature fusion attention (which adaptively learns the weights from the feature attention module). As shown in Fig. 17, a hazy image is provided as input to a shallow feature extraction module. After that, it is fed into an N-block structure with skip connections, and the outputs are fused in a feature fusion module. Finally, global residual learning is used to restore a haze-free image.

Fig. 17 Feature fusion attention network [86]
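The two attention mechanisms combined in the feature attention module can be sketched with plain NumPy. This is an illustrative forward pass under assumptions, not the released FFA-Net code: the layer order (1 × 1 convolution, ReLU, 1 × 1 convolution, sigmoid), the channel reduction and the random weights are stand-ins chosen for the example.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def conv1x1(x, w, b):
    """1x1 convolution on (C_in, H, W) features; w is (C_out, C_in), b is (C_out,)."""
    return np.einsum("oc,chw->ohw", w, x) + b[:, None, None]

def channel_attention(x, w1, b1, w2, b2):
    """One weight per channel, derived from globally pooled features."""
    pooled = x.mean(axis=(1, 2), keepdims=True)            # global average pooling
    hidden = np.maximum(conv1x1(pooled, w1, b1), 0.0)      # ReLU
    weights = sigmoid(conv1x1(hidden, w2, b2))             # shape (C, 1, 1)
    return x * weights

def pixel_attention(x, w1, b1, w2, b2):
    """One weight per spatial position, shared across channels."""
    hidden = np.maximum(conv1x1(x, w1, b1), 0.0)           # ReLU
    weights = sigmoid(conv1x1(hidden, w2, b2))             # shape (1, H, W)
    return x * weights

rng = np.random.default_rng(0)
C, R = 8, 2                                                # channels, reduced channels
x = rng.normal(size=(C, 6, 6))
ca = channel_attention(x, rng.normal(size=(R, C)), np.zeros(R),
                       rng.normal(size=(C, R)), np.zeros(C))
pa = pixel_attention(ca, rng.normal(size=(R, C)), np.zeros(R),
                     rng.normal(size=(1, R)), np.zeros(1))
```

Channel attention rescales whole feature maps, whereas pixel attention rescales individual locations, which is what lets the module treat thick-haze and thin-haze regions differently.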

The prior based methods estimate the transmission on the basis of haze-relevant priors. As a result, dehazed image may suffer from darkened or brightened artifacts.

Recently, end-to-end CNN-based deep learning methods have shown great potential in image dehazing. However, these methods fail to handle non-homogeneous haze. In addition, the existing popular multi-scale approaches solve various issues of dehazing, namely color distortions and artifacts, and some of them can also handle dense haze, but they are not computationally and memory efficient. Deep learning methods produce a visually pleasing result for most hazy images. However, their performance relies heavily on the number of training samples and the quality of these sample images.

4.11 Miscellaneous Category

In this section, we present the miscellaneous category of dehazing methods. This category includes semi-supervised, unsupervised and ensemble networks. In semi-supervised learning, both supervised and unsupervised approaches are utilized in a deep CNN. For example, in [87], supervised learning is performed on synthetic images using supervised losses (mean squared, adversarial and perceptual losses) between the clean and hazy images, and unsupervised learning is performed on real images using DCP and a gradient prior.

Unsupervised learning does not require hazy and haze-free image pairs for training the deep neural network. These methods avoid the need for the large-scale synthetic dataset otherwise required for training the model. Recent learning-based methods utilize a deep learning model to establish the relationship between hazy and clear images. However, it is difficult to collect a vast amount of hazy and clear images for training. Therefore, these models are trained on synthetic images, generated using indoor images and the corresponding depth images. The performance of these methods degrades on outdoor hazy images. Some research works use unsupervised learning, which does not require hazy images and the corresponding GT images during the training phase [88]. It uses only a single captured hazy image to learn and infer the haze-free image.

Another interesting category of image dehazing methods is the ensemble, where multiple deep CNNs are exploited. For example, in method [89], multiple neural networks are utilized to estimate the transmission to solve the problem of overfitting. Yu et al. [90] proposed three ensemble models: EDN-AT, EDN-EDU and EDN-3J. One of them, EDN-EDU, is an ensemble (encoder-decoder and U-Net) of two different sequential hierarchical dehazing networks. The ensemble networks can remove non-homogeneous haze (Table 12).

The atmospheric model assumes the global airlight and scattering coefficient. Therefore, it introduces unrealistic color distortions in dehazed images. The method [91] proposed a color constrained dehazing model to produce a realistic haze-free image. This method solves the dehazing problem as an optimization problem where cost function considers color, local smoothness of transmission and airlight. Moreover, this method can be developed as a semi-supervised dehazing model. It is modeled as three networks by training on synthetic datasets for estimating airlight, transmission and haze-free image. The proposed loss function considers loss in the reconstruction of the hazy image, reconstruction loss of haze-free image, smoothing loss of airlight and transmission map. Golts et al. [92] proposed a deep energy method that offers an unsupervised energy function that replaces the supervised loss. This deep neural network performs training on real world input without the requirement of manually annotated labels. This method is used in three different tasks: Single image dehazing, image matting and seeded segmentation. Experiments are performed on RESIDE dataset.

Li et al. [93] proposed an unsupervised and untrained neural network for image dehazing, called you only look yourself (YOLY). This method utilizes three subnetworks to decompose the hazy image into three latent layers, i.e., the haze-free layer, the transmission layer and the airlight layer.

Figure 18 shows how the input hazy image x is decomposed into three layers using three joint subnetworks. This approach feeds x simultaneously into a haze-free estimation network (J-net), a transmission network (T-net) and an airlight network (A-net). After that, the hazy image is reconstructed through the atmospheric scattering model. In this way, the model is learned in an unsupervised manner, and the networks are optimized by the loss function. For the J-net, the loss function minimizes the difference between brightness and saturation.

Fig. 18 General framework of unsupervised image dehazing [93]
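The brightness-saturation term used for the J-net can be illustrated as below. This is a hedged sketch rather than the loss of [93] as implemented: brightness and saturation are taken as the HSV value and saturation, and the squared difference averaged over pixels is an assumption about the norm.

```python
import numpy as np

def brightness_saturation_loss(j, eps=1e-6):
    """Mean squared difference between HSV brightness and saturation of image j.

    j : float array (H, W, 3) with values in [0, 1]
    """
    value = j.max(axis=2)                                   # HSV brightness V
    saturation = (value - j.min(axis=2)) / (value + eps)    # HSV saturation S
    return float(np.mean((value - saturation) ** 2))

# A vivid (haze-free looking) patch versus a bright, washed-out (hazy looking) patch.
vivid = np.tile(np.array([0.9, 0.1, 0.1]), (4, 4, 1))
washed_out = np.tile(np.array([0.8, 0.75, 0.78]), (4, 4, 1))
loss_vivid = brightness_saturation_loss(vivid)
loss_washed_out = brightness_saturation_loss(washed_out)
```

Haze raises brightness and lowers saturation, so the washed-out patch receives the larger loss, and minimizing this term pushes the J-net output away from hazy appearances without needing a ground truth image.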

4.12 Non-Homogeneous Haze

Although deep learning-based methods have been successfully applied to image dehazing, one of the most challenging problems is the removal of non-homogeneous haze. Most methods work effectively in the presence of homogeneous haze. However, in a real scenario, haze is not homogeneous, i.e., not evenly distributed across the image. A dehazing method is required to enhance the visibility without color distortions under non-uniform airlight (Table 13).

Traditional methods, whether they recover the haze-free image (J) directly through image enhancement or fusion or estimate the transmission map and airlight through restoration, fail in the case of non-homogeneous haze, where haze is unevenly distributed in the image, i.e., some parts of the image are covered with dense haze and other parts with thin haze. The method [94] takes advantage of both approaches by estimating a weight map w, which combines the directly estimated J with the J obtained through the physical model. This architecture uses one encoder and four decoders to estimate the dehazing parameters J, A, t and w, as shown in Fig. 19. Channel attention is added to generate unique feature maps for these decoders. Moreover, dilation inception is proposed to fill in the missing information using non-local features.

Fig. 19 U-net structure for non-homogeneous haze removal [94]
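The role of the weight map can be illustrated with a per-pixel blend of the two estimates. The sketch below is an assumption about how such a combination may look, not the formulation of [94]: the convex blend, the transmission floor `t_min` and all function names are hypothetical, and the four decoder outputs are replaced by plain arrays.

```python
import numpy as np

def recover_from_physical_model(hazy, a, t, t_min=0.1):
    """Invert I = J * t + A * (1 - t) for J, with the transmission floored at t_min."""
    return (hazy - a) / np.maximum(t, t_min) + a

def fuse(j_direct, hazy, a, t, w):
    """Blend the directly estimated J with the physically recovered J using weights w."""
    j_model = recover_from_physical_model(hazy, a, t)
    return w * j_direct + (1.0 - w) * j_model      # convex blend (assumption)

rng = np.random.default_rng(0)
clean = rng.uniform(0.0, 1.0, size=(4, 4, 3))
a = 0.9
t = np.full((4, 4, 1), 0.5)
hazy = clean * t + a * (1.0 - t)
j_direct = np.clip(clean + 0.05, 0.0, 1.0)        # stand-in for a network's direct output

only_model = fuse(j_direct, hazy, a, t, w=np.zeros((4, 4, 1)))
only_direct = fuse(j_direct, hazy, a, t, w=np.ones((4, 4, 1)))
```

A spatially varying `w` lets the result rely on the physical model where the homogeneous-haze assumption roughly holds and on the direct estimate where it does not.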

Wu et al. [95] proposed a knowledge transfer dehazing network (KTDN), which consists of two networks, i.e., a teacher network and a dehazing network, as shown in Fig. 20. The teacher network learns knowledge about clear images and transfers this knowledge to the dehazing network. Furthermore, a feature attention module comprising channel attention and pixel attention is employed to extract important details of the image. Finally, an enhancing module is developed to refine the texture details.

Fig. 20 The dual network (knowledge transfer dehazing network) for non-homogeneous haze removal [95]

5 Datasets Used for Image Dehazing

At the beginning of this field, very few datasets were available, and they were very small. Researchers used only a few images to validate the performance of their proposed haze removal algorithms, downloading hazy images from the Internet for the dehazing task. The drawback of this approach is that these images have no ground truth. The lack of ground truth images poses a great challenge for researchers in evaluating their methods qualitatively and quantitatively. Therefore, various blind dehazing metrics were introduced, but these metrics were not accepted by the wider community as conclusive because of the lack of haze-free images.

Nowadays, two types of datasets are used in this field: natural hazy images without reference images, known as real images, and synthetic hazy images along with depth or ground truth images. The assessment methods also differ between the two types of hazy images, as discussed in the next section. We discuss all the datasets used in this field based on various parameters, namely the process of hazy image generation, number of images, types of hazy images, etc. The performance of different dehazing methods on these datasets is also explained in the experiment and results section.

5.1 Frida Dataset [96]

The Foggy Road Image DAtabase (FRIDA) consists of 90 synthetic images of 18 urban road scenes. Frida2 comprises 330 synthetic images of 66 diverse road scenes. Each fog-free image is accompanied by 4 foggy images and a depth map, as shown in Fig. 21. The dataset considers four types of fog: uniform fog, heterogeneous fog, cloudy fog, and cloudy heterogeneous fog. Uniform fog is synthesized according to the physical model, and Perlin noise between 0 and 1 is added to simulate heterogeneous fog. This dataset helps to improve the performance of camera-based driver assistance systems, whose objective is to provide a clearer view of the road in the presence of fog to minimize accidents.

5.2 Fattal’s Dataset [97]

This is the most popular dataset available to the research community for the assessment of dehazing capability. It provides 12 synthetic hazy images along with 31 realistic hazy images. It contains various benchmark hazy images that pose several challenges: night-time haze, heavily dense haze, white objects, depth discontinuities, different illumination conditions, sky regions, etc. Some sample images from this dataset are shown in Fig. 22a.

5.3 Waterloo IVC [98]

The dataset consists of 25 hazy images of diverse scenes in outdoor and indoor environments. There are 22 outdoor real-world hazy images, captured at different haze concentrations, while 3 indoor images are simulated using the physical model. This dataset is widely used in single image dehazing to evaluate performance. Some sample images from this dataset are shown in Fig. 22b.

5.4 500 Foggy Images [99]

The dataset consists of 500 natural foggy images, used in many research papers for evaluation of their method. These images comprise different sizes, different fog densities ranging from light fog to dense fog, and diverse image contents. Some sample images from this dataset are shown in Fig. 22c.

5.5 D-Hazy [100]

This dataset contains 1400+ pairs of synthetic hazy and haze-free images of indoor scenes. This dataset is generated using Middlebury and NYU depth datasets, containing their corresponding depth maps. For each image, the transmission map is computed based on atmospheric light and the scattering coefficient. Atmospheric light is assumed to be pure white [101] and the scattering coefficient is set by default as 1. Some sample images from this dataset are shown in Fig. 23a.

5.6 Semantic Understanding of Foggy Scenes [102]

Sakaridis et al. [102] presented two distinct datasets: Foggy Cityscapes and Foggy Driving. The Foggy Cityscapes dataset is derived from the Cityscapes dataset and contains outdoor synthetic hazy images with different scattering coefficients. It preserves the semantic annotations of the original images. Foggy Driving comprises 101 real-world foggy road scenes with annotations and a maximum resolution of 960 × 1280 pixels, as shown in Fig. 23b.

5.7 Haze RD Dataset [103]

This dataset contains 15 outdoor scenes with realistic hazy conditions. Each hazy scene is simulated under five different weather conditions, ranging from thin haze to dense haze, with the visual range varying from 50 to 1000 m, as shown in Fig. 24. These images are of high resolution and are consistent with the scattering theory of the physical model. A depth map of each hazy scene is estimated by fusing structure from motion and lidar.
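A visual range can be converted into a scattering coefficient through the Koschmieder relation, which helps to interpret what a range of 50 to 1000 m means for the transmission. The sketch below is illustrative: the contrast threshold of 0.05 is an assumed convention (a threshold of 0.02 is also common and gives the familiar 3.912 / V), and it is not confirmed here which one the dataset uses.

```python
import math

def scattering_coefficient(visual_range_m, contrast_threshold=0.05):
    """Koschmieder relation: beta = -ln(threshold) / visual range (per meter)."""
    return -math.log(contrast_threshold) / visual_range_m

def transmission(depth_m, visual_range_m, contrast_threshold=0.05):
    """Transmission t = exp(-beta * depth) for a scene point at the given depth."""
    beta = scattering_coefficient(visual_range_m, contrast_threshold)
    return math.exp(-beta * depth_m)

# Transmission of a point 100 m away at the two extremes quoted above.
t_dense = transmission(100.0, 50.0)
t_thin = transmission(100.0, 1000.0)
```

By construction, a point located exactly at the visual range has a transmission equal to the contrast threshold, so the 50 m condition leaves a 100 m distant object almost entirely hidden, while the 1000 m condition attenuates it only mildly.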

5.8 I-Haze Dataset [104]

The dataset contains 35 indoor image pairs of hazy and corresponding haze-free images. The real haze appearance is produced by a professional haze machine and captured in a controlled environment under the same illumination for both hazy and haze-free images. Some sample images along with their GT images from this dataset are shown in Fig. 25a.

5.9 O-Haze [105]

This dataset is an outdoor scene dataset comprising pairs of real hazy and corresponding haze-free images. O-Haze contains 45 different outdoor scenes in which real haze is produced by a professional haze machine that simulates a hazy environment. These scenes were captured on cloudy days, in the morning or at sunset, and when the wind speed was below 3 km/h. Some sample images along with GT images from this dataset are shown in Fig. 25b.

5.10 Dense-Haze [106]

Ancuti et al. [106] proposed the Dense-Haze dataset containing real-world hazy images, characterized by dense and homogeneous haze. It consists of 33 pairs of real hazy and corresponding haze-free images. Some sample images along with GT images from this dataset are shown in Fig. 25c.

5.11 RESIDE [107]

This is a recent, large-scale dataset of hazy images containing both synthetic and realistic hazy images, called realistic single image dehazing (RESIDE). This dataset is available as RESIDE standard and RESIDE-β. The standard RESIDE contains three subsets: indoor training set (ITS), synthetic objective testing set (SOTS), and hybrid subjective testing set (HSTS). The ITS contains 13,990 synthetic hazy images generated using 1399 haze-free images from the NYU2 and Middlebury stereo indoor datasets. For each haze-free image, 10 synthetic hazy images are generated. The atmospheric light is sampled uniformly at random from [0.7, 1.0], and the scattering coefficient is sampled uniformly at random from [0.6, 1.8]. Testing sets are designed for evaluation purposes. The SOTS contains 500 different images with white scenes and dense haze synthesized from NYU2, which are not used in the training set. HSTS selects 10 synthetic outdoor hazy images, together with 10 realistic hazy images. Besides, RESIDE-β provides two more subsets: outdoor training set (OTS) and real-world task-driven testing set (RTTS). The OTS contains 72,135 hazy images and RTTS contains 4322 images.
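The ITS generation procedure described above can be sketched as follows. This is a minimal illustration of the atmospheric scattering model with the stated sampling ranges, not the dataset's actual generation script: the function names, the random seed and the assumption that depth is already scaled to a suitable unit are hypothetical.

```python
import numpy as np

def synthesize_haze(clean, depth, airlight, beta):
    """Apply I = J * t + A * (1 - t) with t = exp(-beta * depth)."""
    t = np.exp(-beta * depth)[..., None]           # (H, W, 1), broadcast over channels
    return clean * t + airlight * (1.0 - t), t[..., 0]

def make_training_set(clean, depth, n_variants=10, seed=0):
    """Generate n_variants hazy versions of one clean image with random A and beta."""
    rng = np.random.default_rng(seed)
    samples = []
    for _ in range(n_variants):
        airlight = rng.uniform(0.7, 1.0)           # atmospheric light range from the text
        beta = rng.uniform(0.6, 1.8)               # scattering coefficient range from the text
        hazy, _ = synthesize_haze(clean, depth, airlight, beta)
        samples.append((hazy, airlight, beta))
    return samples

rng = np.random.default_rng(1)
clean = rng.uniform(0.0, 1.0, size=(8, 8, 3))      # stand-in for a haze-free indoor image
depth = rng.uniform(0.5, 3.0, size=(8, 8))         # stand-in for its depth map (assumed scale)
samples = make_training_set(clean, depth)
```

With 10 variants per clean image, 1399 haze-free images yield the 13,990 hazy images quoted for the ITS.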

This dataset provided a new dimension in the single image dehazing for the evaluation of various dehazing methods on a large-scale dataset in terms of full reference metric, no-reference metric, and human subjective rating in visual analysis. The sample images from each part of the RESIDE datasets are shown in Fig. 26.

Fig. 26 Sample images from different categories of the RESIDE dataset [107]: a ITS, b SOTS, c HSTS, d OTS, e RTTS

5.12 NH-Haze [108]

In the previous datasets, haze is homogeneous over the entire image. Since haze is not distributed uniformly across the scene in reality, Ancuti et al. [108] proposed a non-homogeneous realistic dataset. This dataset contains 55 real outdoor hazy images along with their corresponding haze-free images; the haze is generated by a professional haze machine that simulates real conditions, as shown in Fig. 27.

Fig. 27 Non-homogeneous hazy images and GT images from [108]: a hazy images, b GT images

Table 14 illustrates the different datasets used in the state-of-the-art methods. Two types of datasets are available for evaluation: real hazy images and synthetic hazy images. For real images, no GT image or depth map is available. Many works are reported on these datasets. After analysis of the dataset used by the recent methods, we found that Fattal’s dataset [97] and RESIDE [107] are the first choices for real and synthetic images, respectively.

6 Evaluation Metrics

Several evaluation metrics are used for testing the capability of dehazing algorithms (DHA). At present, the images used in assessment can be divided into two categories: those for which a ground truth image is available and those for which it is not. Accordingly, two categories of quantitative metrics are used: full-reference metrics and no-reference metrics, as shown in Fig. 28. Since it is difficult to obtain a haze-free image of the same scene, no-reference metrics are often used for the assessment of DHA.

Fig. 28 Assessment criteria of real and synthetic hazy images [9]

During dehazing, various issues may remain unresolved, including residual haze, structure damage, color distortions, over enhancement, halo artifacts, noise amplification, blurring effects, edge preservation, etc. To measure these distortions, many dehazing quality assessment methods were introduced in the literature. In this section, we will explore all these metrics.

6.1 No-Reference Metrics

A good DHA must ensure the following qualities in the dehazed image: improved visibility, absence of artifacts and over-enhancement, contrast enhancement, structure preservation and edge preservation. By considering these qualities, many dehazing metrics were introduced. Unfortunately, no single metric can test all the dehazing capabilities. In this section, we discuss some well-known dehazing metrics introduced in recent years.

6.1.1 Blind Contrast Enhancement Assessment [110]

The contrast of the image under adverse weather conditions is reduced significantly due to the scattering of the particles. This method is widely accepted in many dehazing works where the reference image is not available. This method is based on the assessment of contrast in terms of visible edges before and after restoration. It uses three descriptors: rate of new visible edges (e), the gain of visibility level (r), and saturated pixel ration (σ). The value of the e metric specifies the ability of the dehazing method in terms of new visible edges in the restored image that are not seen in the original hazy image. It is calculated as follows:

\[ e = \frac{n_{hf} - n_{h}}{n_{h}} \]

where \(n_{h}\) and \(n_{hf}\) represent the cardinality of the sets of visible edges in the hazy and haze-free images, respectively.

The second metric r is the ratio of the visibility level of objects in the restored image to the visibility level of objects in the hazy image. This metric considers both visible and invisible edges of the hazy image and is calculated as follows:

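\[ r = \exp\left( \frac{1}{n_{hf}} \sum_{p_{i} \in \psi_{hf}} \log r_{i} \right) \]

where \(\psi_{hf}\) represents the set of visible edges in the haze-free image and \(r_{i}\) is the ratio of the gradient at pixel \(p_{i}\) in the haze-free image to the gradient at the corresponding pixel in the hazy image.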

The third metric is the saturated pixel ratio σ. It measures the fraction of pixels that become saturated (black or white) after the dehazing process:

\[ \sigma = \frac{n_{s}}{dim_{x} \times dim_{y}} \]

where \(n_{s}\) is the number of saturated pixels and \(dim_{x}\) and \(dim_{y}\) represent the width and height of the image, respectively.

High values of e and r indicate good quality of a dehazed image in terms of edge preservation and contrast enhancement, while a small value of σ indicates that the dehazed image has few newly saturated pixels and hence fewer color distortions.
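As a minimal sketch of two of these descriptors, the code below computes e from given edge counts and σ from a pair of 8-bit grayscale images. The function names, the saturation thresholds (0 and 255) and the rule that only newly saturated pixels are counted are assumptions made for illustration. The visible-edge detection of [110], which produces \(n_{h}\) and \(n_{hf}\), is not implemented here.

```python
import numpy as np


def rate_of_new_visible_edges(n_h: int, n_hf: int) -> float:
    """e = (n_hf - n_h) / n_h, given the visible-edge counts of both images."""
    if n_h <= 0:
        raise ValueError("n_h must be positive")
    return (n_hf - n_h) / n_h


def saturated_pixel_ratio(hazy, dehazed, low=0, high=255) -> float:
    """sigma = n_s / (dim_x * dim_y) for two grayscale images of equal size.

    Assumption: n_s counts pixels that are saturated (<= low or >= high)
    in the dehazed image but were not saturated in the hazy image.
    """
    hazy = np.asarray(hazy)
    dehazed = np.asarray(dehazed)
    if hazy.shape != dehazed.shape or hazy.ndim != 2:
        raise ValueError("expected two 2-D images of the same shape")
    saturated_before = (hazy <= low) | (hazy >= high)
    saturated_after = (dehazed <= low) | (dehazed >= high)
    n_s = np.count_nonzero(saturated_after & ~saturated_before)
    return n_s / dehazed.size
```

For example, if a hazy image has 100 visible edges and its dehazed version has 150, then e = 0.5.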

6.1.2 No-Reference Image Quality Assessment Based on Blockiness and Luminance Change (BALC) [111]

This metric is designed to measure two distortions in an image: blocking artifacts and improper luminance change. It is a no-reference metric that derives the quality score of a dehazed image from these distortions, both of which are estimated from image gradients. Halo artifacts usually appear in the image at depth discontinuities. The method divides the image into 8 × 8 non-overlapping blocks. The blockiness of a block is measured by taking the average of the discontinuities along its four boundaries. For the luminance change, or blurring effect, it calculates the average of the gradients inside the block. Finally, the two measures are combined into a single metric as follows:

where \(B_{hf}\) and \(L_{hf}\) denote the blockiness (artifact) and luminance change (blurring) measures of the haze-free image, respectively, and \(\lambda \ge 0\) is a parameter used to adjust the relative importance of these two distortions.

A small value of BALC indicates the good quality of the haze-free image in terms of artifacts and blurring effects.
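The two gradient-based measures described above can be illustrated with a simplified sketch. It is not the implementation of [111]: the function name is hypothetical, the measures are averaged over the whole image rather than per block, and the final combination with \(\lambda\) is deliberately left out.

```python
import numpy as np


def blockiness_and_luminance_change(img, block=8):
    """Return (B, L) for a 2-D grayscale image.

    B: mean absolute difference across block boundaries (blockiness).
    L: mean absolute difference between neighbours inside blocks.
    Simplification (assumption): image-level averages, not per-block values.
    """
    img = np.asarray(img, dtype=np.float64)
    if img.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    h, w = img.shape
    if h < 2 * block or w < 2 * block:
        raise ValueError("image must contain at least 2 x 2 blocks")

    # dh[:, j] = |img[:, j + 1] - img[:, j]|, dv[i, :] = |img[i + 1, :] - img[i, :]|
    dh = np.abs(np.diff(img, axis=1))
    dv = np.abs(np.diff(img, axis=0))

    # A difference crosses a block boundary when its second pixel starts a block.
    col_boundary = (np.arange(1, w) % block) == 0
    row_boundary = (np.arange(1, h) % block) == 0

    boundary = np.concatenate([dh[:, col_boundary].ravel(),
                               dv[row_boundary, :].ravel()])
    inside = np.concatenate([dh[:, ~col_boundary].ravel(),
                             dv[~row_boundary, :].ravel()])
    return float(boundary.mean()), float(inside.mean())
```

An image made of flat 8 × 8 tiles gives L = 0 and B > 0, whereas a smooth ramp gives equal values for both measures.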

6.1.3 Blur Metric [112]

Some dehazing methods introduce a blurring effect in the haze-free image. Many recent works use this metric to check the quality of the dehazed image in terms of blur perception.

This metric applies a low-pass filter to the dehazed image to obtain a blurred version of it. Comparing the intensity variations between neighboring pixels in the two images (the dehazed image and its blurred version) indicates the blur annoyance. A large change in these variations signifies that the dehazed image is not blurred, whereas a small change indicates that the dehazed image is already blurred.

The blur metric yields a score between 0 and 1, where 0 represents the best and 1 the worst quality in terms of blur perception.

6.1.4 Blind Image Quality Assessment (BLIIND-II) [113]

BLIIND-II is a no-reference image quality assessment metric based on a probabilistic model that predicts the quality score of an image. This metric uses a natural scene statistics (NSS) model that relies on discrete cosine transform (DCT) coefficients. The NSS model is built from undistorted natural scenes and requires only a small number of training examples. The estimation of the predicted score consists of four stages. In the first stage, the image is divided into n × n blocks and the DCT coefficients are computed for each block. In the second stage, a generalized Gaussian density model is fitted to each block, which provides the model parameters. In the third stage, four features are extracted from the model parameters: the shape parameter, the coefficient of variation, the energy subband ratio measure and the orientation features. Finally, the fourth stage consists of a Bayesian model that predicts the perceptual quality of the dehazed image. The steps for the computation of this metric are shown in Fig. 29.

Steps of computing BLIIND-II [113] metric

It considers various types of distortions, such as artifacts, white noise, Gaussian blur, fast fading channel errors, etc., in the estimation of the quality score. The values of this metric are in the range [0, 100]. A higher value of BLIIND-II indicates poorer quality, i.e. more distortion in the image. During dehazing, periodic patterns (checkerboard and blocking artifacts) are often generated in the haze-free image, and this metric can be used to identify such distortions.
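The first stage (block-wise DCT) can be sketched with plain NumPy as below. The block size n = 5, the use of non-overlapping blocks and the function names are assumptions for illustration. The later stages (generalized Gaussian fitting, feature extraction and the Bayesian predictor) are not shown.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n)[:, None]   # frequency index
    x = np.arange(n)[None, :]   # sample index
    m = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    m[0, :] = np.sqrt(1.0 / n)  # DC row has a different scale factor
    return m


def block_dct(img, n=5):
    """Split a 2-D image into non-overlapping n x n blocks and return their
    2-D DCT coefficients as an array of shape (rows, cols, n, n)."""
    img = np.asarray(img, dtype=np.float64)
    if img.ndim != 2:
        raise ValueError("expected a 2-D grayscale image")
    h, w = img.shape
    h, w = h - h % n, w - w % n  # drop incomplete border blocks
    blocks = img[:h, :w].reshape(h // n, n, w // n, n).swapaxes(1, 2)
    c = dct_matrix(n)
    return c @ blocks @ c.T      # separable 2-D DCT applied to every block
```

For a constant block, all AC coefficients are zero and only the DC coefficient is non-zero, which is a quick sanity check for the transform.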

6.1.5 Blind/No-Reference Image Spatial Quality Evaluator (BRISQUE) [114]

Mittal et al. [114] proposed the blind/no-reference image spatial quality evaluator (BRISQUE), a metric that measures the loss of naturalness of an image without computing distortion-specific features such as blocking artifacts, blur or ringing artifacts. It computes locally normalized luminance coefficients, and the authors observed that these coefficients follow a Gaussian distribution for natural scenes. They extracted 36 natural scene statistics features at two scales (18 features per scale), which are used to identify all types of distortions. Finally, a regression module based on support vector regression is used to calculate the quality score of an image. The model was tested on the LIVE IQA database, which consists of 29 reference images and 779 distorted images spanning different types of distortions.

6.1.6 Fog Aware Density Evaluator (FADE) [99]

This metric is specially designed for evaluating DHA by judging the visibility of the restored image. Unlike earlier approaches, the fog-aware density evaluator (FADE) does not rely on estimation of the transmission, salient regions, human-rated opinions, etc. Instead, it judges visibility based on deviations, in the spatial domain, from the statistical regularities seen in hazy and haze-free images. A set of fog-aware statistical features, namely MSCN (mean subtracted contrast normalized) coefficients, sharpness, contrast energy, colorfulness, color saturation, image entropy and dark channel prior, are extracted from foggy images. The authors used 500 foggy and 500 fog-free images to extract these features. A test foggy image is divided into p × p patches, and the average values of the statistical features are extracted for each patch. The features are modeled with a multivariate Gaussian (MVG) probability density in d dimensions, both for the test foggy image and for the 500 natural fog-free images, as follows:

\[ MVG(f) = \frac{1}{(2\pi)^{d/2} |\sigma|^{1/2}} \exp\left( -\frac{1}{2} (f - \mu)^{T} \sigma^{-1} (f - \mu) \right) \]

where f represents the fog-aware features while \(\mu\) and \(\sigma\) denote the mean and covariance, respectively. In the next step, a Mahalanobis-like distance is computed between the MVG fit to the features extracted from a test foggy image and the MVG model of the 500 fog-free images as follows:

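\[ D_{f}(\mu_{1}, \mu_{2}, \sigma_{1}, \sigma_{2}) = \sqrt{ (\mu_{1} - \mu_{2})^{T} \left( \frac{\sigma_{1} + \sigma_{2}}{2} \right)^{-1} (\mu_{1} - \mu_{2}) } \]

where \(\mu_{1} ,\mu_{2}\) and \(\sigma_{1} ,\sigma_{2}\) are the means and covariances of the MVG model of the 500 fog-free images and of the test foggy image, respectively. Similarly, \(D_{ff}\) is calculated between the MVG of the 500 foggy images and the test image. Finally, the fog density of a hazy image is calculated as follows: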

\[ D = \frac{D_{f}}{D_{ff} + 1} \]

The constant 1 is added to the denominator to prevent division by zero. Smaller values of D represent lower fog density, i.e., the DHA improves the visibility of the hazy image to a greater extent.

A smaller FADE value indicates less residual haze in the dehazed result. Residual haze, artifacts and noise in an image worsen its FADE score. However, bright scenes may be mistaken for residual haze by FADE, which increases its value.
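The final two steps of FADE can be sketched as below, assuming the MVG parameters (means and covariances) have already been estimated from the fog-aware features. The function names are hypothetical. The pseudo-inverse is used as a numerically robust choice for inverting the pooled covariance and is an assumption of this sketch.

```python
import numpy as np


def mvg_distance(mu1, cov1, mu2, cov2):
    """Mahalanobis-like distance between two MVG models:
    sqrt((mu1 - mu2)^T ((cov1 + cov2) / 2)^-1 (mu1 - mu2))."""
    mu1 = np.asarray(mu1, dtype=np.float64)
    mu2 = np.asarray(mu2, dtype=np.float64)
    pooled = (np.asarray(cov1, dtype=np.float64)
              + np.asarray(cov2, dtype=np.float64)) / 2.0
    diff = mu1 - mu2
    # Pseudo-inverse (assumption) tolerates a singular pooled covariance.
    return float(np.sqrt(diff @ np.linalg.pinv(pooled) @ diff))


def fog_density(d_f, d_ff):
    """D = D_f / (D_ff + 1): D_f is the distance to the fog-free model,
    D_ff the distance to the foggy model."""
    return d_f / (d_ff + 1.0)
```

An image that lies far from the fog-free model (large \(D_{f}\)) and close to the foggy model (small \(D_{ff}\)) receives a high fog density D.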

6.1.7 Natural Image Quality Evaluator (NIQE) [115]

This is another no-reference metric used to measure the distortion introduced during the dehazing process. The natural image quality evaluator (NIQE) is based on quality-aware features of a natural scene statistics model, which are extracted from a corpus of undistorted natural images. To score a dehazed image, the image is divided into p × p patches, 36 features are extracted, and the MVG fit of these features is compared with the MVG model of the natural images.

6.1.8 Dehazing Quality (DHQ) [116]

Min et al. [116] proposed an objective measure for the quantitative evaluation of dehazed images. To assess overall dehazing quality, they first constructed a database of 1750 dehazed images generated from 250 real hazy images of different haze densities using 7 dehazing algorithms. A subjective quality evaluation was then conducted on this dataset. Finally, a regression module predicts the dehazing quality (DHQ) from several features extracted from a dehazed image. The overall dehazing quality is measured in three aspects: haze removal, structure preservation and over-enhancement, as shown in Fig. 30.

Quantitative evaluation to measure overall issues of dehazing in real hazy images using non-reference based metric DHQ [116]

The haze-removing features are haze-relevant descriptors designed to evaluate the haze removal effect. Five features are considered: pixel-wise DCP, image entropy, local variance, normalized local variance and contrast energy. The second aspect used to judge the quality of the dehazed image is structure preservation, since the dehazing process can sometimes introduce structure degradation or artifacts. To account for structure preservation, features such as variance similarity, normalized variance similarity and normalized image similarity are used. The third quality indicator is the identification of over-enhancement in dehazed images. During the dehazing process, details in low-contrast areas may be darkened, colors may be distorted, or structural artifacts may be introduced. Over-enhancement is measured in terms of low-contrast areas and blockiness.

6.2 Full-Reference Metrics

Full-reference metrics are used to evaluate a method when a ground truth (GT) image is available. They are therefore applicable for testing performance on synthetic images. Several metrics, namely PSNR, SSIM, LPIPS, CIEDE 2000 and SHRQ, have been used in many recent works. This section describes these metrics.

6.2.1 Learned Perceptual Image Patch Similarity Metric (LPIPS) [117]

Pixel-wise metrics such as PSNR and SSIM often disagree with human judgment in assessing the perceptual quality of the dehazed image. Therefore, Zhang et al. [118] proposed the learned perceptual image patch similarity metric (LPIPS), which measures the perceptual similarity between two images in a way that resembles human opinion. It is based on deep features from networks trained in different settings, such as supervised, self-supervised and unsupervised learning. This metric can identify a wide range of distortions in the image, including photometric distortions (color shift, contrast, saturation), noise (white noise, artifacts), blur, and compression. Three network architectures, AlexNet, SqueezeNet and VGG, are considered for supervised training. The overall framework of this metric is shown in Fig. 31.

This diagram shows how the distance between two patches x (patch of the GT image) and x0 (patch of the dehazed image) is calculated by a network F. Features are extracted from several layers, normalized in the channel dimension and scaled channel-wise by a vector w, and the l2 distance between them is computed. Finally, the result is averaged over the spatial dimensions and aggregated across layers. G is a network trained to predict the perceptual judgment h from the distance pair d0 and d1.

The lower LPIPS score indicates a higher similarity between the two images.
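The distance computation can be sketched as follows, assuming the per-layer feature maps have already been extracted by some network F. No real network is used here: the feature maps and the weights w are hypothetical inputs, and the function name is illustrative. The sketch sums the per-layer values, which is one common way to aggregate across layers.

```python
import numpy as np


def lpips_style_distance(feats_x, feats_x0, weights, eps=1e-10):
    """Perceptual distance from per-layer feature maps.

    feats_x, feats_x0: lists of arrays of shape (C, H, W), one per layer.
    weights: list of 1-D arrays of length C (channel scaling vector w).
    """
    total = 0.0
    for fx, fx0, w in zip(feats_x, feats_x0, weights):
        fx = np.asarray(fx, dtype=np.float64)
        fx0 = np.asarray(fx0, dtype=np.float64)
        w = np.asarray(w, dtype=np.float64)
        # Unit-normalize each spatial position along the channel dimension.
        nx = fx / (np.sqrt((fx ** 2).sum(axis=0, keepdims=True)) + eps)
        nx0 = fx0 / (np.sqrt((fx0 ** 2).sum(axis=0, keepdims=True)) + eps)
        # Scale channels by w and take the squared l2 norm over channels.
        sq = ((w[:, None, None] * (nx - nx0)) ** 2).sum(axis=0)  # (H, W)
        total += sq.mean()  # spatial average, summed over layers
    return float(total)
```

Identical feature maps give a distance of exactly 0, and the distance is symmetric in its two inputs.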

6.2.2 Peak Signal to Noise Ratio (PSNR) [119]

Peak signal to noise ratio (PSNR) measures the degree of signal distortion between the haze-free image obtained by a DHA and the GT image. A high value of PSNR signifies good quality of the dehazed image. It is calculated as:

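\[ PSNR = 10 \log_{10} \left( \frac{MAX^{2}}{MSE} \right) \]

where MAX is the maximum possible pixel value (255 for 8-bit images) and MSE is the mean squared error between the dehazed image and the ground truth image. A smaller MSE is better, and it is calculated as follows: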

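\[ MSE = \frac{1}{M N} \sum_{x=1}^{M} \sum_{y=1}^{N} \left( G(x, y) - I_{hf}(x, y) \right)^{2} \]

where G and \(I_{hf}\) are the ground truth and dehazed images, respectively, and M × N is the size of the image.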
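A minimal NumPy sketch of both quantities is given below. It assumes 8-bit images (peak value 255) and returns infinity for identical images. The function names are chosen for illustration.

```python
import numpy as np


def mse(gt, dehazed):
    """Mean squared error between ground truth G and dehazed image I_hf."""
    gt = np.asarray(gt, dtype=np.float64)        # cast first: avoids uint8 wrap-around
    dehazed = np.asarray(dehazed, dtype=np.float64)
    if gt.shape != dehazed.shape:
        raise ValueError("images must have the same shape")
    return float(np.mean((gt - dehazed) ** 2))


def psnr(gt, dehazed, max_value=255.0):
    """PSNR = 10 * log10(MAX^2 / MSE), in dB."""
    err = mse(gt, dehazed)
    if err == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / err))
```

As a sanity check, an all-black image compared with an all-white 8-bit image has MSE = 255² and therefore a PSNR of 0 dB.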

6.2.3 Structural Similarity Index Metric (SSIM) [120]

Since PSNR does not agree well with human visual judgment, many researchers use the structural similarity index metric (SSIM), which evaluates the dehazing performance in terms of contrast, luminance and structure between the ground truth and dehazed images. It is calculated as follows:

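\[ SSIM(r, i) = \frac{(2\mu_{r}\mu_{i} + c_{1})(2\sigma_{ri} + c_{2})}{(\mu_{r}^{2} + \mu_{i}^{2} + c_{1})(\sigma_{r}^{2} + \sigma_{i}^{2} + c_{2})} \]

Here, \(\mu_{r}\) and \(\mu_{i}\) are the means of r (restored image) and i (GT image), respectively, \(\sigma_{r}^{2}\) and \(\sigma_{i}^{2}\) are the variances of r and i, and \(\sigma_{ri}\) is the covariance between r and i. The constants \(c_{1} = (k_{1}L)^{2}\) and \(c_{2} = (k_{2}L)^{2}\) stabilize the division, where L is the dynamic range of the pixel values and the default values of \(k_{1}\) and \(k_{2}\) are 0.01 and 0.03.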

SSIM typically yields a score between 0 and 1 (negative values are possible when the two images are anti-correlated). A score of 1 indicates that the two images are identical. SSIM is highly sensitive to variations in contrast and illumination. Therefore, it can reveal dehazing issues such as incomplete haze removal or over-saturation of pixels.
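The SSIM expression can be illustrated with a simplified single-window sketch in which the statistics are computed over the whole image. This is an assumption made for brevity: practical implementations compute SSIM in local windows and average the resulting map. The constants are formed as (kL)² with k1 = 0.01, k2 = 0.03 and L the dynamic range, following the original SSIM formulation.

```python
import numpy as np


def global_ssim(r, i, data_range=255.0, k1=0.01, k2=0.03):
    """SSIM computed from global image statistics (single window)."""
    r = np.asarray(r, dtype=np.float64)
    i = np.asarray(i, dtype=np.float64)
    if r.shape != i.shape:
        raise ValueError("images must have the same shape")
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_r, mu_i = r.mean(), i.mean()
    var_r, var_i = r.var(), i.var()
    cov_ri = ((r - mu_r) * (i - mu_i)).mean()  # covariance between r and i
    numerator = (2.0 * mu_r * mu_i + c1) * (2.0 * cov_ri + c2)
    denominator = (mu_r ** 2 + mu_i ** 2 + c1) * (var_r + var_i + c2)
    return float(numerator / denominator)
```

Comparing an image with itself gives 1, while comparing it with its photometric inverse gives a negative value because the covariance term changes sign.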

6.2.4 CIEDE 2000 [121, 122]

During the dehazing process, color distortions may be introduced in the restored image. These cannot be reliably evaluated by PSNR or SSIM. Therefore, researchers in this field also use CIEDE 2000, an accurate color difference metric that assesses dehazing in terms of color restoration and agrees more closely with the color differences perceived by the human eye.

It yields values in the range [0, 100], with smaller values indicating better color preservation and values less than 1 corresponding to color differences that are imperceptible to the human eye. A value of 100 indicates that the colors in the two images are opposite.

6.2.5 Synthetic Haze Removing Quality (SHRQ) [9]

Min et al. [123] proposed a full-reference metric called synthetic haze removing quality (SHRQ) to evaluate the overall quality of a dehazed image. The proposed dehazing quality evaluator integrates several quality aspects that arise during the dehazing process, namely structure recovery, color rendition and over-enhancement. The authors first created an SHRQ database that consists of two subsets: regular and aerial images. The regular image dataset consists of 45 haze-free images, while the aerial dataset contains 30 high-quality aerial images. The ASM model is used to generate the synthetic hazy images, which are then processed by eight state-of-the-art methods. The overall quality of a dehazed image is estimated as follows: