Abstract

This paper presents a novel adaptive structure for audio noise removal, aiming to enhance the performance of noise reduction. The proposed structure consists of a bank of parallel least-mean-squares, time-domain adaptive filters. Multiple microphones are employed to capture the noise source signal, while another microphone records the corrupted speech signal. By passing the recorded noise signals through the parallel adaptive filter bank structure and subtracting the results from the speech signal, the noise is effectively suppressed. Additionally, the noise removal performance is further improved by linearly combining the error signals, which include the noise-free speech signal. The effectiveness of the proposed adaptive structure is demonstrated through theoretical analysis and numerical simulations, highlighting its superior noise removal performance compared to traditional acoustic noise cancellation approaches.

1 Introduction

Audio noise removal techniques eliminate acoustic noise from a speech signal and thereby enhance its quality. Many research works have addressed acoustic noise cancellation (ANC) by applying adaptive filters [1,2,3,4,5,6,7,8,9,10,11,12,13,14]. The idea of noise cancellation via an adaptive algorithm was first proposed by Bernard Widrow [1]. Since then, several adaptive cancellation algorithms have been proposed to address stability, misadjustment, and computational complexity.

In [2], the least-mean-squares (LMS) algorithm with variable step size is proposed in which the adaptive weights are frozen when the target signal is strong. In [3], a robust variable step-size normalized LMS algorithm is presented. A constrained optimization problem is derived by minimizing the \(l_{2}\) norm of the error signal with a constraint on the filter weights. A novel LMS-based adaptive algorithm for ANC application is introduced in [4] which applies nonlinearities to the input and error signals by using the Lagrange multiplier method.

In some ANC applications, there is a secondary path between the output of the adaptive filter and the error signal. The secondary path causes phase shifts or delays in signal transmission. Conventional LMS algorithms cannot compensate for the effect of the secondary path. For those applications, the filtered-x LMS (FXLMS) algorithm is proposed [5]. The adaptive Volterra filtered-x least-mean-squares (VFXLMS) algorithm has been derived in [6] for ANC applications with nonlinear effects. The adaptive filtered-s least-mean-squares (FSLMS) algorithm proposed in [7] has better noise cancellation performance with less computational complexity than the second-order VFXLMS algorithm. In [8], adaptive noise cancellation based on multiple sub-filters is studied; it improves the convergence performance but degrades the steady-state performance compared to single-filter approaches. A novel adaptive algorithm for cancelling residual echo is proposed in [9], where the complex-valued residual echo is estimated and corrected. Unlike conventional single-channel echo cancellation algorithms, this approach considers both the amplitude and the phase of the far-end signal. Multichannel acoustic echo cancellation is addressed in [10] by using multiple-input multiple-output (MIMO) adaptive filtering. In [11], multiple-reference adaptive filtering for ANC in propeller aircraft has been studied. A multichannel structure for ANC has been proposed in [12], where a filtered-x affine projection algorithm is derived for noise cancellation. An adaptive Bayesian algorithm is proposed in the frequency domain [13] to address the multichannel acoustic echo cancellation problem. The echo paths between the loudspeakers and the near-end microphone are modelled as a multichannel random variable with a first-order Markov property. Multi-microphone speech enhancement methods are applied to remove background noise and undesired echoes in order to achieve high-quality speech [14].

In this paper, we propose a novel structure for ANC in which multiple adaptive filters are used to eliminate the acoustic noise from the speech signal. We measure the noise source with multiple microphones and use a bank of time-domain adaptive filters to remove the noise from the speech. Since the measurement noises of the microphones are uncorrelated with each other, we can enhance the filtering performance by using a linear combination of the error signals. The steady-state mean-square deviation (MSD) performance is also analysed. Computer simulation results verify the theoretical findings.

The remainder of this paper is organized as follows. In Sect. 2, the problem formulation of ANC is introduced. In Sect. 3, we introduce our proposed time-domain adaptive filter bank for cancelling the noise signal. Section 4 discusses the theoretical performance analysis of the proposed algorithm. Computer simulation results are presented in Sect. 5. Finally, Sect. 6 concludes the paper.

Notations: In this paper, small letters with a subscript, e.g. \(x_{i}\), denote vectors; capital letters, e.g. \(R_{i}\), denote matrices; and small letters with parentheses, e.g. x(i), denote scalars. The superscript \((.)^{T}\) represents the transpose of a matrix or vector. All vectors are column vectors. Random variables are written in boldface; e.g. random scalars, vectors, and matrices are denoted by \(\varvec{x}(i)\), \(\varvec{w}_{i}\), and \(\varvec{R}_{i} \), respectively. The \(\textrm{Tr}\big (.\big )\) symbol denotes the trace operator, and the \(\textrm{E}\big (.\big )\) symbol denotes the expectation operator. The \(\textrm{vec}(.)\) operator stacks the columns of a matrix on top of each other into a single vector. The \(\lambda _\textrm{max}(.)\) symbol denotes the largest eigenvalue of its matrix argument.

2 Problem formulation

Figure 1 shows the configuration of a noise cancellation system. It consists of one adaptive filter \(\varvec{w}_{i}\) and two microphones, MIC1 and MIC2. MIC1 captures the noise source signal and MIC2 captures the speech signal. The recordings of both microphones are contaminated by measurement noise. The measurement noise of MIC1 is denoted by \(\varvec{v}(i)\) and the measurement noise of MIC2 is denoted by \(\varvec{z}(i)\). Assume that the noise source signal \(\varvec{x}(i)\) passes through the acoustic channel \(w^{o}\) with the following impulse response

By sorting the channel coefficients \(w^{o}(i)\) into the column vector \(w^{o}\), the acoustic channel impulse response can be expressed as,

where \((.)^{T}\) represents the transpose operator. According to Fig. 1, the second microphone, MIC2, measures the sum of the channel output and the speech signal, \(\varvec{s}(i)\). Therefore, the desired signal at the adaptive filter is obtained as

where \(\varvec{z}(i)\) is the measurement noise of the second microphone and

is the vector form of the input signal \(\varvec{x}(i)\) which is measured by the first microphone MIC1.

The input signal of the adaptive filter is

where \(\varvec{v}(i)\) is the measurement noise of the first microphone MIC1. The output signal of the adaptive filter is denoted by \(\varvec{y}(i)\), that is

where \(\varvec{u}_{i}\) is a vector of input signal \(\varvec{u}(i)\) as follows:

and \(\varvec{w}_{i}\) is a vector of filter weights

The error signal \(\varvec{e}(i)\) is obtained by subtracting the filter output signal \(\varvec{y}(i)\) from the desired signal \(\varvec{d}(i)\) as

The LMS algorithm is used to estimate the acoustic channel impulse response \(w^{o}\) by updating the weight vector \(\varvec{w}_{i}\) of the adaptive filter [15] as

where \(\mu \) is the step-size parameter. For a sufficiently long time, the adaptive filter weight vector \(\varvec{w}_{i}\) converges to the optimal vector \(w^{o}\). As a result, the filter’s output signal is

Therefore, according to (3), (5), (9), and (11), the error signal is

In the ideal case, where the measurement noises \(\varvec{z}(i)\) and \(\varvec{v}_{i}\) are zero, the error signal \(\varvec{e}(i)\) is a good estimate of the noise-free speech signal, such that

In order to decrease the effect of measurement noise, we can use multiple microphones with uncorrelated measurement noises. In this paper, we propose a novel structure for ANC in which the effect of input measurement noise is alleviated by using multiple microphones to measure the noise source.
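The single-filter canceller described in this section can be sketched as follows. This is a minimal illustration, not the author's code: it assumes the usual LMS recursion (the error is computed with the current weights, then the weights move by \(\mu\) times the regressor times the error), a hypothetical four-tap channel `w_o`, and a sinusoid standing in for the speech signal. The step size and noise variances are borrowed from Sect. 5; everything else is an assumption.

```python
import numpy as np


def regressors(x, M):
    """Return a (T, M) array whose row i is [x(i), x(i-1), ..., x(i-M+1)]."""
    padded = np.concatenate([np.zeros(M - 1), x])  # zeros before the first sample
    return np.stack([padded[M - 1 - m: len(padded) - m] for m in range(M)], axis=1)


def lms_anc(d, u, M, mu):
    """Single-filter LMS canceller: returns the error signal and final weights."""
    U = regressors(u, M)
    w = np.zeros(M)
    e = np.empty(len(d))
    for i in range(len(d)):
        e[i] = d[i] - U[i] @ w        # error = desired signal minus filter output
        w = w + mu * U[i] * e[i]      # LMS weight update
    return e, w


rng = np.random.default_rng(0)
T, M, mu = 20000, 4, 0.01
w_o = np.array([0.8, -0.4, 0.2, 0.1])              # hypothetical acoustic channel
x = rng.standard_normal(T)                         # noise source signal
s = 0.5 * np.sin(2 * np.pi * 0.01 * np.arange(T))  # stand-in for speech (assumption)
z = np.sqrt(0.001) * rng.standard_normal(T)        # measurement noise of MIC2
v = np.sqrt(0.029) * rng.standard_normal(T)        # measurement noise of MIC1

d = regressors(x, M) @ w_o + s + z                 # signal recorded by MIC2
u = x + v                                          # signal recorded by MIC1
e, w = lms_anc(d, u, M, mu)

tail = slice(T // 2, None)                         # samples after convergence
print("noise power before:", np.mean((d[tail] - s[tail]) ** 2))
print("noise power after: ", np.mean((e[tail] - s[tail]) ** 2))
```

The residual after cancellation does not vanish because the regressor noise \(\varvec{v}(i)\) leaks through the filter, which is the effect the multi-microphone structure is meant to reduce.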

3 A bank of parallel adaptive filters

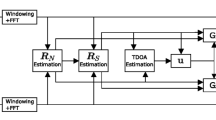

In this section, we propose a novel structure for noise cancellation in which there are multiple adaptive filters each of which is connected to a microphone to capture the noise source signal \(\varvec{x}(i)\) as shown in Fig. 2. The LMS adaptation rule of each adaptive filter is as follows:

where \(\varvec{w}_{k,i}\) is the kth adaptive filter weight vector at time instant i, and \(\varvec{u}_{k,i}\) is the input signal of the kth adaptive filter. Since the measurement noises \(\varvec{v}_{k}(i)\) are uncorrelated, we can reduce the noise power by linearly combining the error signals \(\varvec{e}_{k}(i)\) as

where \(\varvec{e}_{A}(i) \) is the average error signal of the adaptive filter bank, which is approximately equal to the noise-free speech signal. In order to compute the error signal \(\varvec{e}_{k}(i)\) at the kth adaptive filter, we need to define some variables as follows:

where \(\varvec{d}(i)\) is defined in (3) and \(\varvec{y}_{k}(i)\) is the output signal of the kth adaptive filter, given as

Inserting (3) and (17) in (16), we obtain the kth error signal

where we define the error vector \(\tilde{ \varvec{w} }_{k,i}\) as follows:

By substituting (18) into (15), we have

The variance of the average error signal is equal to

where the input signals \(\varvec{u}_{k,i}\) and the noise vectors \(\varvec{v}_{k,i}\) are random variables with the following \(M\times M\) covariance matrices

The noise scalar \(\varvec{z}(i)\) is a zero-mean Gaussian random variable with variance \(\sigma _{z}^2\), and the speech signal \(\varvec{s}(i)\) has the following variance \(\sigma _{s}^2\)

It should be noted that the following quantities are mutually independent, because they originate from separate sources

In order to calculate the signal-to-noise ratio (SNR) of the cleaned signal, we need to derive the variance of the local error signal (18) as

Therefore, we can obtain the SNR of local error signal as

On the other hand, according to (21), the SNR of the average error signal (20) is

Comparing the SNR of the local error signal (26) with the SNR of the average error signal (27), we conclude that the denominator of the SNR fraction is smaller in our proposed ANC structure. In other words, linearly combining the local error signals leads to a better output SNR.
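The averaging gain can be checked numerically in the simplest case. The sketch below assumes that every filter has already converged exactly to \(w^{o}\), so that each local error reduces to speech plus \(\varvec{z}(i)\) minus the regressor-noise term, and that the regressor noise vectors have covariance \(\sigma_{v}^{2} I_{M}\) and are independent across microphones. Under those assumptions the residual noise variance is \(\sigma_{z}^{2} + \sigma_{v}^{2}\Vert w^{o}\Vert^{2}\) for a single filter and \(\sigma_{z}^{2} + \sigma_{v}^{2}\Vert w^{o}\Vert^{2}/N\) for the average. The channel `w_o` is hypothetical, and the expressions are a simplified special case rather than the paper's (26) and (27).

```python
import numpy as np

rng = np.random.default_rng(1)
M, N, T = 4, 7, 50_000
w_o = np.array([0.8, -0.4, 0.2, 0.1])   # hypothetical channel (assumption)
sigma_v2, sigma_z2 = 0.029, 0.001       # noise variances used in Sect. 5

# z(i) is shared by all filters; each microphone has its own regressor noise v_k.
z = np.sqrt(sigma_z2) * rng.standard_normal(T)
V = np.sqrt(sigma_v2) * rng.standard_normal((N, T, M))

# Residual noise in each local error when w_k = w_o:  e_k(i) - s(i) = z(i) - v_k^T w_o
local_noise = z[None, :] - V @ w_o      # shape (N, T)
avg_noise = local_noise.mean(axis=0)    # residual noise in the averaged error

theory_local = sigma_z2 + sigma_v2 * (w_o @ w_o)
theory_avg = sigma_z2 + sigma_v2 * (w_o @ w_o) / N

print("local  : simulated", local_noise.var(axis=1).mean(), "theory", theory_local)
print("average: simulated", avg_noise.var(), "theory", theory_avg)
print("SNR gain in dB:", 10 * np.log10(theory_local / theory_avg))
```

Only the microphone-specific term shrinks with N; the shared noise \(\varvec{z}(i)\) sets a floor that averaging cannot remove.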

4 Performance analysis

In this section, the behaviour of the error vector \(\tilde{ \varvec{w} }_{k,i}\) in the mean sense and in the mean-square sense is analysed. By substituting Eq. (18) into the adaptation rule (14), we have

Subtracting both sides of (28) from \(w^{o}\), the error vector of the kth adaptive filter is obtained as

where \(I_{M}\) is the identity matrix with dimension \(M\times M\) and

4.1 The mean analysis

By computing the expected value of both sides of (29), we have

Since the measurement noises \(\varvec{v}_{k,i}\), \(\varvec{z}(i)\), and the noise source \(\varvec{x}_{i}\) are zero-mean and mutually independent, as stated in (24), we can simplify Eq. (31) as

where we have defined

Since the error vector \(\tilde{\varvec{w}}_{k,i}\) depends on the data up to time \(i-1\), and the matrix \(\varvec{B}_{k,i}\) is a function of the input data at time i, we consider the independence assumption of \(\varvec{B}_{k,i}\) and \(\tilde{\varvec{w}}_{k,i}\) in Eq. (31). The mean equation of the error vector can be further simplified as

where we use the following assumptions

By taking the limit of the mean relation (35) in steady-state regime, when \(i\rightarrow \infty \), and using the following approximation in steady-state regime

we can group the two terms with expectation and thus the mean relation of the error vector results in

This relation shows that when measurement noise \(\varvec{v}_{k,i}\) is present in the ANC problem, the mean of the error vector is nonzero and therefore the estimate is biased. The bias is directly related to the measurement noise power \(R_{v,k}\). We can reduce the bias by linearly combining the error signals of the adaptive filters, since the noise power decreases from \(R_{v,k}\) to \(\frac{1}{N}R_{v,k}\).
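The size of the bias for one filter can be illustrated with a short calculation. The sketch below does not reproduce the paper's expression; it uses the standard fixed-point argument for LMS with a noisy regressor, under the assumptions that \(R_{u,k} = R_{x} + R_{v,k}\) and that the mean weight vector settles where \(R_{u,k}\,w = R_{x}\,w^{o}\). The channel `w_o` is hypothetical; the covariances are those used in Sect. 5.

```python
import numpy as np

M = 4
w_o = np.array([0.8, -0.4, 0.2, 0.1])   # hypothetical channel (assumption)
R_x = np.eye(M)                          # noise-source covariance from Sect. 5
R_v = 0.029 * np.eye(M)                  # measurement-noise covariance from Sect. 5
R_u = R_x + R_v                          # covariance of the noisy regressor

# Mean convergence point of LMS with noisy regressors: R_u w = E[u d] = R_x w_o
w_inf = np.linalg.solve(R_u, R_x @ w_o)

# Steady-state mean of the error vector w_o - w; equals R_u^{-1} R_v w_o
bias = w_o - w_inf

print("steady-state mean weights:", w_inf)
print("bias:", bias)
print("relative bias:", np.linalg.norm(bias) / np.linalg.norm(w_o))
```

With white signals the bias is simply a shrinkage of every tap by the factor \(\sigma_{v}^{2}/(\sigma_{x}^{2}+\sigma_{v}^{2})\), which is about 2.8% for the values above.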

4.2 The mean-square analysis

By inserting (34) into (29), we can rewrite the error vector as

In this section, we study the behaviour of the error vector variance by analysing the mean of weighted squared norm given as

in which other terms are discarded because \(\varvec{v}_{k,i}\), \( \varvec{z}(i)\) and \(\varvec{x}_{i}\) are mutually independent and zero-mean samples. According to the LMS adaptation rule, the estimation vector \(\varvec{w}_{k,i}\) depends on the data up to time \(i-1\), \(\varvec{u}_{k,i-1}\). Thus, we can assume that the error vector \(\tilde{\varvec{w}}_{k,i}\) is independent of the input data \(\varvec{u}_{k,i}\). Therefore, we conclude that the error vector \(\tilde{\varvec{w}}_{k,i}\) is independent of matrix \(\varvec{ B}_{k,i}\), where \(\varvec{ B}_{k,i}\) is defined in (30). Thus, the first expectation term in (40) is

where the weighting matrix \(\Sigma ^{\prime }\) is defined as follows:

In linear algebra, we have the following property for Kronecker product [16]:

where

Applying the \(\textrm{vec}(.)\) operation to both sides of (42), we have

where

By substituting \( \varvec{ B}_{k,i}\) from (30) into (46), we have

One can conclude that the expectation in (47) is time-invariant and, thus,

where \(\mathcal {O}(\mu ^{2})\) denotes

Calculating (49) requires knowledge of fourth-order statistics, which are not available. The effect of this term can be ignored by assuming a sufficiently small step size, as done in [16]. Therefore, matrix \(\mathcal {F}\) becomes time-invariant and can be approximated as

Using the property \(\textrm{Tr}[AB] = \textrm{Tr}[BA]\), we can rewrite the second expectation of (40) as

where \(R_{u,k}\) and \(\sigma _{s}^2\) are defined in (22) and (23), respectively. Similar to (51), the third expectation of (40) can be derived as

The fourth expectation term of (40) is also calculated as

where we used the \(R_{v,k}\) definition in (22) and the following property of the trace operator

The fifth expectation term of (40) is approximated as the following

Since the error vector \(\tilde{\varvec{w}}_{k,i}\), the matrix \(\varvec{B}_{k,i}\), and the noise vector \(\varvec{v}_{k,i}\) are mutually independent, we can separate the sixth expectation term of (40) as

By considering the steady-state mode of the mean of the error vector (38), the expectation in (56) is obtained in steady-state mode as

Finally, substituting the results of (41), (51), (52), (53), (55), and (57) into (40) yields

Using the following property of the trace operator

we can write the variance (58) in vector form as

By taking the limit of the variance (58) in the steady-state regime, when \(i\rightarrow \infty \), and using the following approximation

we can group the two terms with expectation in (58), and thus the variance of the error vector becomes

In order to compute the variance of the average error signal (21), we should choose the vector \(\sigma \) as

By solving (63) for \(\sigma \), we have

Inserting (64) into (62), the steady-state MSD performance is obtained as

Using the following property of the Kronecker product [17],

and considering (50), we can write

Thus, we conclude that matrix \(\mathcal {F}\) is stable when matrix \(B_{k}\) is stable, that is

In order to guarantee this stability, the following condition needs to be satisfied for the step size

where \(R_{u,k}\) is defined in (22). Inserting (65) into (21), the variance of the total error signal in steady state equals

From (70), we conclude that the influencing factors on the mean-square behaviour of the performance are: (i) the step size, \(\mu \), (ii) the signal power, \(\sigma _{s}^{2}\), (iii) the measurement noise power, \(\sigma _{z}^{2}\), (iv) the noise covariance matrices \(R_{x}\) and \(R_{v,k}\), (v) the input regressors via matrix \( B_{k} \), and (vi) the optimal vector of the acoustic channel \(w^{o}\).
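The two Kronecker-product facts used in this section, and the resulting step-size bound, can be checked numerically. The sketch below makes several assumptions that are not confirmed by this copy of the paper: that \(B_{k} = I_{M} - \mu R_{u,k}\), that the small-step-size approximation is \(\mathcal{F} \approx B_{k}^{T} \otimes B_{k}\), and that the stability condition is the standard LMS bound \(0< \mu < 2/\lambda_\textrm{max}(R_{u,k})\). The helper names are illustrative.

```python
import numpy as np


def vec(X):
    """Stack the columns of X on top of each other (column-major order)."""
    return X.reshape(-1, order="F")


def spectral_radius(X):
    return np.max(np.abs(np.linalg.eigvals(X)))


def f_radius(mu, R_u):
    """Spectral radius of the assumed F = B^T kron B with B = I - mu * R_u."""
    B = np.eye(R_u.shape[0]) - mu * R_u
    return spectral_radius(np.kron(B.T, B))


rng = np.random.default_rng(2)
M, mu = 4, 0.01
R_u = 1.029 * np.eye(M)                 # R_x + R_v with the Sect. 5 values

# Property 1: vec(A S C) = (C^T kron A) vec(S)
A, S, C = (rng.standard_normal((M, M)) for _ in range(3))
lhs = vec(A @ S @ C)
rhs = np.kron(C.T, A) @ vec(S)
print("vec identity holds:", np.allclose(lhs, rhs))

# Property 2: eigenvalues of a Kronecker product are products of eigenvalues,
# so the spectral radius of B^T kron B is the square of that of B.
B = np.eye(M) - mu * R_u
F = np.kron(B.T, B)
print("rho(F) =", spectral_radius(F), " rho(B)^2 =", spectral_radius(B) ** 2)

# Step-size bound: F is stable exactly when B is stable.
mu_max = 2.0 / np.max(np.linalg.eigvalsh(R_u))
print("mu_max =", mu_max)
print("stable at 0.99*mu_max:", f_radius(0.99 * mu_max, R_u) < 1)
print("stable at 1.01*mu_max:", f_radius(1.01 * mu_max, R_u) < 1)
```

The step size \(\mu = 0.01\) used in Sect. 5 sits far below this bound, which is consistent with the small-step-size approximation made for \(\mathcal{F}\).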

5 Simulation results

In the first part of the simulations, we verify the theoretical MSE expression of the proposed ANC in (70). To this end, the noise source signal \(\varvec{x}_{i}\) and the measurement noises \(\varvec{v}_{k,i}\) and \(\varvec{z}(i)\) are generated as zero-mean Gaussian random variables with \(R_{x}= I_{M}\), \(R_{v,k}=0.029I_{M}\), and \(\sigma _{z}^{2}=0.001\), respectively. We assume the adaptive time-domain filter bank has \(N=7\) filters. In order to compare the theoretical findings with computer simulation results, we assume that the speech signal \(\varvec{s}(i)\) is a zero-mean Gaussian process. The step size is set to \(\mu =0.01\). Figure 3 compares the numerical learning behaviour of the proposed filter bank method with that of single adaptive filtering [4]. We also include the theoretical MSE of the proposed method derived in (70) and that of a single LMS filter, where \(N=1\). The numerical results are averaged over 250 experiments. The acoustic channel is modelled as

where \(M=4\).

As seen in Fig. 3, the theoretical result (70) matches the numerical results well.

We repeat the same experiment with \(N=2\) in the proposed method. The results are presented in Fig. 4. By comparing Figs. 3 and 4, one can conclude that, in the proposed method, the larger N is, the better the noise cancellation.

In the next simulation scenario, we run the proposed ANC algorithm for different numbers of adaptive filters N. As seen in Fig. 5, the MSE for this experiment improves as the number of adaptive filters N increases.
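A reduced version of this experiment can be sketched as follows. It uses the parameters stated above (\(M=4\), \(\mu =0.01\), \(R_{x}=I_{M}\), \(R_{v,k}=0.029I_{M}\), \(\sigma _{z}^{2}=0.001\)) but relies on several assumptions: the channel `w_o` is hypothetical because the channel model is not reproduced in this copy, the speech variance of 0.25 is arbitrary, a single run replaces the 250-run average, and the reported metric is the residual noise power of the averaged error after convergence rather than the paper's MSE curve.

```python
import numpy as np


def regressors(x, M):
    """Return a (T, M) array whose row i is [x(i), x(i-1), ..., x(i-M+1)]."""
    padded = np.concatenate([np.zeros(M - 1), x])
    return np.stack([padded[M - 1 - m: len(padded) - m] for m in range(M)], axis=1)


def bank_residual_noise(N, M, mu, T, w_o, rng):
    """Run N parallel LMS filters and return the residual noise power of e_A."""
    x = rng.standard_normal(T)                    # noise source, R_x = I
    s = 0.5 * rng.standard_normal(T)              # Gaussian stand-in for speech
    z = np.sqrt(0.001) * rng.standard_normal(T)   # MIC2 measurement noise
    d = regressors(x, M) @ w_o + s + z            # desired signal shared by all filters

    e_avg = np.zeros(T)
    for _ in range(N):
        u_k = x + np.sqrt(0.029) * rng.standard_normal(T)  # kth noise microphone
        U = regressors(u_k, M)
        w = np.zeros(M)
        for i in range(T):
            e = d[i] - U[i] @ w                   # local error of the kth filter
            w += mu * U[i] * e                    # independent LMS update
            e_avg[i] += e / N                     # accumulate the average error
    tail = slice(T // 2, None)                    # discard the transient
    return np.mean((e_avg[tail] - s[tail]) ** 2)


M, mu, T = 4, 0.01, 5000
w_o = np.array([0.8, -0.4, 0.2, 0.1])             # hypothetical channel (assumption)

results = {}
for N in (1, 2, 7, 10):
    rng = np.random.default_rng(3)                # same x, s, z for every N
    results[N] = bank_residual_noise(N, M, mu, T, w_o, rng)
    print(f"N = {N:2d}: residual noise power = {results[N]:.4f}")
```

In this sketch the improvement flattens as N grows, because the terms common to all filters (the noise \(\varvec{z}(i)\) and the weight fluctuations driven by the shared speech signal) are not reduced by averaging.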

In the next part of the simulations, we use the proposed ANC method with \(N=10\) and compare it to the conventional ANC in [11] on a real speech signal. The speech and the noise source signals are shown in Figs. 6a and b, respectively. The results for the conventional and the proposed ANC methods are presented in Figs. 6c and d, respectively. As seen, the output of the conventional ANC with one filter is still noisy, whereas the result of the proposed method is less noisy and very similar to the original speech signal in Fig. 6a.

The MSE for this experiment is also provided in Fig. 7. The results in Fig. 6 and Fig. 7 indicate how the proposed method improves the noise cancellation performance with \(N=8\) filters.

In the next scenario, we have attempted to replicate real-life conditions as accurately as possible. The captured audio signal utilized is depicted in Fig. 8. The noise signal corresponds to the sound of a vacuum cleaner, and its non-stationary characteristics are evident in Fig. 9. Figure 10 showcases the captured speech signal in the presence of the vacuum cleaner noise.

The parameters of the weight update equations were carefully selected to ensure a rapid convergence rate. Figure 11 presents the output signals of a single adaptive filter (bottom figure) for noise cancellation, contrasting it with the scenario where we employ \(N=10\) parallel adaptive filters (top figure). It is evident that the top figure exhibits superior noise cancellation performance, as indicated by the remaining noise level highlighted by a red arrow.

6 Conclusion

This work deals with the acoustic noise cancellation problem where multiple microphones are used to record the noise source signal. A bank of least-mean-squares (LMS) time-domain adaptive filters is proposed to enhance the noise cancellation performance. The recorded noise signals are filtered by the adaptive filter bank. We show that the noise cancellation performance is enhanced by linearly combining the error signals of the adaptive filters. We derived an expression for the noise cancellation behaviour in terms of the steady-state mean-square error performance. Numerical simulations verify the theoretical derivations. According to the simulation results, the proposed adaptive structure has better noise cancellation performance than the traditional ANC structure.

Data availability

The speech signal of an airplane captain depicted in Fig. 6 can be downloaded from the following website: https://www.audiomicro.com/sound-effects/voice-prompts-and-spoken-phrases/pilots

References

[1] Widrow, B., et al.: Adaptive noise cancelling: principles and applications. Proc. IEEE 63(12), 1692–1716 (1975)

[2] Greenberg, J.E.: Modified LMS algorithms for speech processing with an adaptive noise canceller. IEEE Trans. Speech Audio Process. 6(4), 338–351 (1998)

[3] Zhang, S., Zhang, J., Han, H.: Robust variable step-size decorrelation normalized least-mean-square algorithm and its application to acoustic echo cancellation. IEEE/ACM Trans. Audio Speech Lang. Process. 24(12), 2368–2376 (2016)

[4] Gorriz, J.M., et al.: A novel LMS algorithm applied to adaptive noise cancellation. IEEE Signal Process. Lett. 16(1), 34–37 (2009)

[5] Bjarnason, E.: Analysis of the filtered-x LMS algorithm. IEEE Trans. Speech Audio Process. 3(6), 504–514 (1995)

[6] Tan, L., Jiang, J.: Adaptive Volterra filters for active control of nonlinear noise processes. IEEE Trans. Signal Process. 49(8), 1667–1676 (2001)

[7] Das, D.P., Panda, G.: Active mitigation of nonlinear noise processes using a novel filtered-s LMS algorithm. IEEE Trans. Speech Audio Process. 12(3), 313–322 (2004)

[8] Barik, A., et al.: LMS adaptive multiple sub-filters based acoustic echo cancellation. In: International Conference on Computer and Communication Technology (ICCCT), 17–19 Sept. Allahabad, India (2010)

[9] Emura, S.: Residual echo reduction for multichannel acoustic echo cancelers with a complex-valued residual echo estimate. IEEE/ACM Trans. Audio Speech Lang. Process. 26(3), 485–500 (2018)

[10] Malik, S., et al.: Double-talk robust multichannel acoustic echo cancellation using least-squares MIMO adaptive filtering: transversal, array, and lattice forms. IEEE Trans. Signal Process. 68(1), 4887–4902 (2020)

[11] Johansson, S., et al.: Evaluation of multiple reference active noise control algorithms on Dornier 328 aircraft data. IEEE Trans. Speech Audio Process. 7(4), 473–477 (1999)

[12] Sicuranza, G.L., Carini, A.: Filtered-x affine projection algorithm for multichannel active noise control using second order Volterra filters. IEEE Signal Process. Lett. 11(11), 853–857 (2004)

[13] Malik, S., Enzner, G.: Recursive Bayesian control of multichannel acoustic echo cancellation. IEEE Signal Process. Lett. 18(11), 619–622 (2011)

[14] Valero, M.L., Habets, E.A.P.: Low-complexity multi-microphone acoustic echo control in the short-time Fourier transform domain. IEEE/ACM Trans. Audio Speech Lang. Process. 27(3), 595–609 (2019)

[15] Sayed, A.H.: Adaptive Filters. Wiley, New Jersey (2008)

[16] Hajiabadi, M., Hodtani, G.A., Khoshbin, H.: Robust learning over multitask adaptive networks with wireless communication links. IEEE Trans. Circuits Syst. II Express Briefs 66(6), 1083–1087 (2019)

[17] Laub, A.J.: Matrix Analysis for Scientists and Engineers, p. 184. SIAM Society for Industrial and Applied Mathematics, Philadelphia (2005)

Funding

There is no grant for this research work.

Contributions

All tasks related to this article, including writing the paper, preparing figures, computer simulations, and theoretical analyses, have been carried out by the responsible author of the article. Mojtaba Hajiabadi wrote the main manuscript text and prepared all figures and computer simulations.

Ethics declarations

Conflict of interest

The interest of author lies in adaptive filters, and this research has been conducted to study this field. The author declares that he has no competing interests as defined by Springer, or other interests that might be perceived to influence the results and/or discussion reported in this paper.

Ethical approval

The manuscript is not submitted to more than one journal for simultaneous consideration. The submitted work is original and has not been published elsewhere in any form or language.

About this article

Cite this article

Hajiabadi, M. Exploring the potential of parallel adaptive filters for audio noise removal. SIViP 18, 445–454 (2024). https://doi.org/10.1007/s11760-023-02771-0
