Abstract

Echocardiography-based cardiac boundary tracking provides valuable information about the heart condition for interventional procedures and intensive care applications. Nevertheless, echocardiographic images suffer from several issues that make it challenging to develop a tracking and segmentation algorithm that is robust to shadows, occlusions, and heart rate changes. We propose an autonomous tracking method to improve the robustness and efficiency of echocardiographic tracking. The method, denoted hybrid Condensation and adaptive Kalman filter (HCAKF), is designed to overcome tracking challenges of echocardiograms such as variable heart rate and sensitivity to the initialization stage. The tracking process is initialized with an active shape model, which provides the tracking methods with a number of features to track. The procedure tracks the endocardial borders and adapts to changes in the velocity and visibility of the cardiac boundaries. HCAKF requires far fewer samples than the Condensation algorithm alone without sacrificing tracking accuracy. Furthermore, despite combining the two methods, our complexity analysis shows that HCAKF can produce results in real time. The obtained results demonstrate the robustness of the proposed method to changes in the heart rate, yielding a Hausdorff distance of \(1.032\pm 0.375\) while providing adequate efficiency for real-time operation.


1 Introduction

Cardiovascular diseases (CVDs) are among the major causes of death for both genders, with over 17.8 million fatalities in 2017 (\(\sim 31\%\) of all deaths) according to the World Health Organization [1]. Therefore, fast and reproducible methods to evaluate cardiac function are paramount to early detection of many heart diseases and prevention of related fatalities. Imaging modalities such as magnetic resonance imaging (MRI) and echocardiography can provide cardiac images to evaluate cardiac function. Among those, echocardiography offers several advantages, including cost-effectiveness and portability, which enable examination of the patient’s heart outside clinics. For these applications, an accurate, robust, and efficient tracking algorithm is key to the successful assessment of cardiac function. Additionally, real-time tracking of the cardiac borders is very desirable for interventional procedures and intensive care unit applications with continuous monitoring requirements [2].

The primary challenge in detecting echocardiographic boundaries is the presence of shadows and occlusions [3]. Temporal consistency methods such as optical flow [4], speckle tracking echocardiography [5, 6], and combined methods [7] track cardiac boundaries by calculating the current position based on the previously detected position. However, these methods are susceptible to shadows and occlusions. They are also computationally demanding and are not robust to changes in the measurements and system dynamics.

Because the object of interest moves in cardiac boundary tracking, it is natural to treat echocardiographic tracking as a spatio-temporal problem. State estimation methods introduced for tracking cardiac boundaries are often based on Blake’s framework [8], which uses a Kalman filter to track B-spline contours deformed within a model-specific “shape space.” Jacob et al. [9] introduced real-time tracking based on state estimation methods that utilize a shape-space model. Their spatio-temporal contour model consists of a shape model and a training set. However, their approach is restricted to linear transformations because of the use of a shape-space model. Condensation algorithms, on the other hand, are alternative tracking methods that do not require a linear parameterization [10] and can also be used with nonlinearly parameterized kinematics. However, Condensation algorithms are generally not efficient: their accuracy depends strongly on the number of samples, so higher accuracy comes at a higher computational cost.

In this paper, we propose a novel hybrid Adaptive Kalman Filter (AKF) and Condensation technique to achieve robust, real-time cardiac boundary tracking. Thanks to the combination of AKF and the Condensation algorithm, the resulting approach is robust against noise and changes in the heart beat rate. The integration of AKF circumvents the accuracy-efficiency trade-off of the Condensation algorithm by reducing the number of samples. Furthermore, the adaptation capability of AKF makes it possible to cope with changes in the measurement and system dynamics. Compared with the Condensation algorithm alone, the proposed hybrid algorithm provides superior accuracy: the introduced structure uses a smaller number of samples yet produces sufficient accuracy and robustness to dynamic changes. A side contribution of this work relates to the initialization (segmentation) procedure. To the best of the authors’ knowledge, this paper is the first to integrate an active shape model (ASM) for initializing echocardiogram tracking. Because manual segmentation is time-consuming and gives inconsistent results [11], automated segmentation by ASM could potentially improve convergence and accuracy. Therefore, ASM is used in this manuscript to feed the tracking algorithm with a number of positions (features) to track.

The paper is structured as follows. Section 2 reviews the Condensation algorithm and the Kalman filter. Section 3 introduces the framework for the hybrid tracking algorithm. Section 4 provides the simulation results. Finally, Section 5 summarizes the major conclusions and perspectives.

2 Background

2.1 Condensation tracking

The Condensation algorithm employs samples spread around a specified image feature to estimate the current object position. The tracking result for an image feature n, \(\hat{\varPsi }^{i}(n,:)\), is row n of the two-column position matrix \(\hat{\varPsi }^{i}\), and estimating it requires a set of randomly distributed samples around it, \(S^{n}(:,:)\), to predict the current position of the tracked point. We use N samples to ensure that the algorithm is efficient and capable of producing acceptable results.

The Condensation algorithm is built on the assumption that, given a learned prior, an observation density z, and a curve state \(\varPsi ^{i}\), a posterior distribution can be estimated for \(\varPsi ^{i}(n,:)\) given \(S^{n}(:,:)\) at each successive time i. The algorithm keeps track of the point by updating the state density, which is estimated as follows:

1. Generate a random sample set \(S^{n}(N,:)\) from a distribution that approximates the conditional state density \(p(z^{i}(n,:)|\varPsi ^{i-1}(n,:))\) at frame i, where z represents the detected points.

2. Calculate the weight of each sample as in (2).

3. Regenerate the sample distribution \(\hat{S}^{n}(l,:)\) based on the calculated weights \(\Pi ^{n}(S^{n}(:,:))\).

4. Calculate the average position using the calculated weights and the samples as follows:

and the observation data \(z^{i}\) are defined as

This procedure will approximate the posterior distribution, which defines the current state of the Condensation algorithm. The distributed samples will be used to initiate the tracking in the next frame.
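The four steps above can be illustrated for a single tracked point with a short sketch. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `condensation_step`, the Gaussian sample cloud, the `spread` value, and the use of the gradient magnitude of the nearest pixel as the sample weight are all assumptions made for the example.

```python
import numpy as np

def condensation_step(prev_pos, grad_mag, n_samples=100, spread=3.0, rng=None):
    """One sample / weight / resample / average pass for a single tracked point.

    prev_pos : (x, y) position estimated in the previous frame.
    grad_mag : 2-D array of gradient magnitudes for the current frame.
    spread   : standard deviation in pixels of the sample cloud (assumed value).
    """
    rng = np.random.default_rng() if rng is None else rng
    height, width = grad_mag.shape

    # Step 1: spread samples around the previous position (Gaussian cloud assumed).
    samples = np.asarray(prev_pos, dtype=float) + rng.normal(0.0, spread, (n_samples, 2))
    samples[:, 0] = np.clip(samples[:, 0], 0, width - 1)
    samples[:, 1] = np.clip(samples[:, 1], 0, height - 1)

    # Step 2: weight each sample by the gradient magnitude of its nearest pixel
    # (assumed observation model: stronger edges receive larger weights).
    cols = np.rint(samples[:, 0]).astype(int)
    rows = np.rint(samples[:, 1]).astype(int)
    weights = grad_mag[rows, cols].astype(float) + 1e-12  # avoid an all-zero sum
    weights /= weights.sum()

    # Step 3: redraw the sample set in proportion to the weights.
    resampled = samples[rng.choice(n_samples, size=n_samples, p=weights)]

    # Step 4: the weighted average of the samples is the position estimate.
    estimate = weights @ samples
    return estimate, resampled
```

The resampled set returned alongside the estimate plays the role of the distributed samples that seed the next frame.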

The Condensation algorithm suffers from degeneracy after a few iterations of the tracking procedure. In the simulations and results section, we also evaluate the regularized Condensation algorithm, which mitigates this degeneracy.

2.2 Kalman filter smoother

The Kalman filter (KF) estimates the state of a linear system whose noise follows a Gaussian distribution. The filter processes the observed data and the states to provide a smooth prediction of the current state. It is composed of two steps: prediction and assimilation of the observation. To calculate the next position using the previous output \(\hat{\varPsi }^{i}(n,:)\) from (1), the following dynamic equation is used:

where \(w^{i}\sim N(0,Q^{i})\) is white process noise, \(Q^{i}\) is the process noise covariance matrix, and A represents the transition model.

The measurement residual is the error between the result of the detection method and the predicted position. The relationship between the state and the measurement at the current step is calculated as follows:

where the observation model H maps the state to the measured quantities used in the tracking process and is defined as

where \(v\sim N(0,R)\), and R is the observation noise covariance matrix.
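The prediction and assimilation steps described in this subsection can be sketched as follows. This is a generic textbook KF, shown only to make the two steps concrete. The constant-velocity state \([x, y, v_x, v_y]\), the helper names, and the particular A and H matrices are assumptions for illustration; the definitions of A and H used in the paper are not reproduced in this text.

```python
import numpy as np

def constant_velocity_model(dt=1.0):
    """Assumed transition model A and observation model H for one tracked point."""
    A = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)  # only the position is observed
    return A, H

def kf_predict(x, P, A, Q):
    """Prediction: propagate the state and its covariance through the model."""
    x_pred = A @ x
    P_pred = A @ P @ A.T + Q
    return x_pred, P_pred

def kf_update(x_pred, P_pred, z, H, R):
    """Assimilation: correct the prediction with the measurement z."""
    residual = z - H @ x_pred                 # measurement residual
    S = H @ P_pred @ H.T + R                  # residual covariance
    K = P_pred @ H.T @ np.linalg.inv(S)       # Kalman gain
    x = x_pred + K @ residual
    P = (np.eye(len(x)) - K @ H) @ P_pred
    return x, P, residual
```

In a tracking loop, `kf_predict` would be called once per frame, followed by `kf_update` with the position reported by the detection step.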

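The final results of the tracked points at the current frame i are then stored in matrix \(\varPsi ^{i}\). That is

\[ \varPsi ^{i} = \begin{bmatrix} x_{1}^{i} & y_{1}^{i} \\ \vdots & \vdots \\ x_{b}^{i} & y_{b}^{i} \end{bmatrix}, \quad i = 1, \dots , k, \]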

where \(x_{b}^{i},y_{b}^{i}\) represent the position of a tracked point b at frame i. \(\varPsi ^{i}\) is a matrix that represents the state of b tracked points along the contour in frame i and k is the total number of frames.

3 Hybrid tracking algorithm

This section explains the hybrid algorithm in detail.

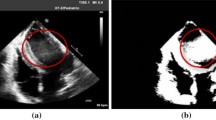

3.1 Initialization step

Conventional ASM requires manual initialization with an average shape in order to initiate the convergence process. This manual initialization is not appropriate in this application because it relies on an average shape created during the training phase, which has several drawbacks. First, the average shape is unlikely to be near the boundary of the testing shape. Second, it has soft edges because it averages all the training data. These issues slow down shape convergence, making it difficult to achieve satisfactory results in a timely manner. Therefore, the ASM initialization must be modified to provide an initial shape that is close to the boundary of the test subject and has a structure similar to its border. The modification replaces the average shape created during ASM training with a shape that resembles the current cardiac boundary. A multilayer perceptron trained with backpropagation is used as the artificial neural network (ANN) to predict the nearest possible fit for the left ventricle (LV) boundary. This network is made up of three layers (an input layer, a hidden layer, and an output layer), and cross-entropy is used as the cost function. The inputs are echocardiogram pixel values; because the images are 128\(\times \)128, the input layer has 16,384 units. The output layer is a vector of eight numbers that represent the landmark positions in the current echocardiogram. The network is trained on 200 echocardiograms acquired at St. Michael’s Hospital (160 training, 40 testing), which were chosen so that their boundaries were not obscured by shadows (good-quality images). On the testing echocardiograms, the model achieved 93 percent accuracy.

The initial shape provided by the ANN is then used by ASM. During its initial stage, ASM normally zeroes its parameter vector, which results in the average shape being used as the initial shape. Here, the shape proposed by the ANN is used for initialization instead. Because this shape is close to the boundaries of the test subject, ASM needs very few iterations to converge to the final shape, and the convergence time is minimal compared with conventional ASM. The resulting shape is used by the tracking method to start tracking the cardiac boundary.

3.2 Hybrid condensation algorithm and Kalman filter (HCKF)

The Condensation algorithm employs samples spread around a specified image feature to estimate its current position. The number of samples is crucial: more samples give better results but also increase the processing time. Therefore, for the Condensation algorithm to produce results in real time, some trade-off between robustness and efficiency is needed. However, a small number of samples introduces another issue, namely the inability to handle changes in measurement and system dynamics, so simply reducing the number of samples is not effective because the tracking error would increase. Accordingly, a KF is used alongside the Condensation algorithm to smooth the estimates and reduce the sensitivity to changes in the system dynamics. The resulting hybrid tracking algorithm (HCKF: Hybrid Condensation algorithm and Kalman filter) is introduced to track the endocardium boundary. This requires a suitable framework for carrying out segmentation and tracking.

Tracking a number of points on the cardiac boundary through a frame sequence requires a notation system to describe the tracking process and the comparison with ground truth. Two matrices are therefore necessary: the first matrix \(\varPsi ^{i}\) holds the results of the tracking method, and the second matrix \(\varOmega ^{i}\) contains the ground truth data. That is

\[ \varOmega ^{i} = \begin{bmatrix} \overline{x}_{1}^{i} & \overline{y}_{1}^{i} \\ \vdots & \vdots \\ \overline{x}_{b}^{i} & \overline{y}_{b}^{i} \end{bmatrix}, \quad i = 1, \dots , k, \]

where \(\overline{x}_{b}^{i},\overline{y}_{b}^{i}\) represent the ground-truth position of the tracked point b at frame i. To calculate the distance between the ground truth \(\varOmega ^{i}\) and the produced points \(\varPsi ^{i}\), the Frobenius norm is used. This aids in quantifying any improvement in the accuracy of the tracking algorithm.
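As a small illustration of this distance, the sketch below computes the Frobenius norm of the difference between a tracked matrix and a ground-truth matrix. It assumes each matrix stores one (x, y) row per tracked point; the function name `tracking_error` is hypothetical.

```python
import numpy as np

def tracking_error(psi, omega):
    """Frobenius norm of the difference between tracked and ground-truth points.

    psi, omega : arrays of shape (b, 2), one (x, y) row per tracked point.
    """
    psi = np.asarray(psi, dtype=float)
    omega = np.asarray(omega, dtype=float)
    return np.linalg.norm(psi - omega, ord="fro")
```

For eight tracked points that are each off by one pixel in both x and y, the sixteen unit differences give a norm of 4.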

3.2.1 Tracking procedure

The gray value distribution in echocardiographic images is highly nonlinear and non-Gaussian, which is the reason for using a Condensation algorithm in this research. Gradient values are used to weight the samples during the sample importance resampling (SIR) process by giving higher weights to the samples on edges. As a result, the average of those samples is likely to be a point located on the cardiac boundary. Moreover, this restricts the samples that matter to the ones near edges, which reduces the number of samples required and hence the computation time. Furthermore, KF is used to overcome the disadvantage of using a small number of samples in the Condensation algorithm and to provide a smooth tracking path of the cardiac boundary. To track several points on a cardiac boundary, we initialize the process using ASM, which provides the system with eight different positions \(\varPsi ^{0}\). These positions are chosen to accomplish two objectives: first, to keep track of the cardiac boundary; and second, to enable the system to provide results in real time (since our system cannot track more than eight features simultaneously). The Condensation algorithm uses those positions as the current points to be tracked. The output of the Condensation algorithm is then sent to KF, and the Condensation algorithm in turn uses the KF outputs during the SIR stage. The steps of tracking a single position on a cardiac boundary in our approach are depicted in Fig. 1.

The use of KF improves the tracking process, as it reduces the error between the generated points and the ground truth data. Compared with the time expected after increasing the number of samples, HCKF returned results in less than 0.0050 seconds, well within the 0.04-second frame period of the 25 frames per second frame rate, making it suitable for real-time tracking applications. The roles of KF and the Condensation algorithm are complementary. As a result, combining the Condensation algorithm with a KF smoother provides good tracking with a small number of samples while avoiding drawbacks such as high computation time.
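The interplay between the two trackers can be sketched for a single point as a loop in which the Condensation estimate serves as the KF measurement and the KF prediction centres the next sample cloud. This is one possible reading of the coupling described above, not the authors' code: the function name `track_point`, the constant-velocity state, the Gaussian sample cloud, and the values of `spread`, `q`, and `r` are all assumptions.

```python
import numpy as np

def track_point(weight_maps, start, n_samples=100, spread=3.0, q=0.1, r=1.0, seed=0):
    """Track one point through a sequence of per-frame weight maps.

    weight_maps : iterable of 2-D arrays (e.g. gradient magnitudes), one per frame.
    start       : initial (x, y) position, e.g. a landmark from the initialization.
    """
    rng = np.random.default_rng(seed)
    A = np.array([[1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0], [0, 0, 0, 1]], dtype=float)
    H = np.array([[1, 0, 0, 0], [0, 1, 0, 0]], dtype=float)
    Q, R = q * np.eye(4), r * np.eye(2)
    x = np.array([start[0], start[1], 0.0, 0.0])  # position and velocity
    P = np.eye(4)
    path = []
    for g in weight_maps:
        height, width = g.shape
        # KF prediction; its position centres the sample cloud for this frame.
        x, P = A @ x, A @ P @ A.T + Q
        samples = x[:2] + rng.normal(0.0, spread, (n_samples, 2))
        samples[:, 0] = np.clip(samples[:, 0], 0, width - 1)
        samples[:, 1] = np.clip(samples[:, 1], 0, height - 1)
        # Condensation part: weight samples by the map value under them and average.
        rows = np.rint(samples[:, 1]).astype(int)
        cols = np.rint(samples[:, 0]).astype(int)
        w = g[rows, cols].astype(float) + 1e-12
        w /= w.sum()
        z = w @ samples
        # KF assimilation of the Condensation estimate.
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (z - H @ x)
        P = (np.eye(4) - K @ H) @ P
        path.append(x[:2].copy())
    return np.array(path)
```

Because the filter carries a velocity estimate between frames, the sample cloud follows the moving feature, which is why a modest number of samples can suffice in this sketch.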

3.3 Hybrid condensation algorithm with adaptive Kalman filter (HCAKF)

Another objective of this work is to create a robust tracking method that can handle the change in the heart beat rate. HCKF is not able to adapt to the change in the heart beat, which increases the tracking error. Therefore, an adaptive Kalman filter (AKF) will be used instead of the conventional KF as shown in Fig. 1 and will update the covariance matrices to keep up with the sudden changes in the heart beat rate.

3.4 Adaptive Kalman filter

The problem with using the conventional KF is that prior knowledge of the process noise covariance matrix (\(Q_{n}^{i}\)) is required. The observation noise covariance matrix (R) depends on the feature used for tracking [12]. In contrast, the process noise covariance matrix depends on the motion of the tracked objects, and because this motion is unknown, determining \(Q_{n}^{i}\) is not an easy task. Several existing methods can be used to estimate this covariance matrix; they can be grouped into correlation, Bayesian, covariance matching, and maximum likelihood methods. In this work, the maximum likelihood approach is used to update the noise statistics, as it is computationally efficient compared with the other methods. This method estimates the covariance matrix \(Q_{n}^{i}\) by calculating the residual:

and the residual between the estimated state errors in the current and the previous frame, as

where \(P_{n}^{i-1}\) is the a posteriori covariance matrix and

Here, \(\bar{r}_{n}^{i}\) is the average value of the calculated residuals for frame i and J denotes the number of past measurements (the window size). The performance of the algorithm depends on J, which is usually chosen empirically. While a large window size offers a more accurate approximation, it reduces the ability of the filter to follow rapid changes in the system dynamics. The number of computations performed can be greatly reduced by using the limited memory filter algorithm [12].

where \(W^{i}\) is a weighting element.

In the initial stages of the KF, the covariance matrix and the state vector are initialized with values in which there is little confidence. Since the initial samples in the KF are not accurate, a weighting function is required to minimize the effect of those samples on the calculated covariance matrix and to increase the confidence in the samples over time. Thus,

It is clear from (4) that there is no control input for the heart motion; the change of heart beat rate is instead followed by adaptively changing the process noise covariance matrix Q (the covariance matrix of the motion error). The AKF adapts to an increase in the velocity of the tracked point, which makes it robust to changes in the system dynamics. The AKF receives the Condensation output as its input and filters and smooths it to keep track of the cardiac boundaries. As a result, the AKF resolves the issue of heart rate changes by adapting to the changes that happen during the tracking process. Furthermore, the computational requirement of the AKF depends on the window size (J), and using a limited memory filter algorithm significantly reduces the required computation time [12].
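A windowed estimate of the process noise covariance can be sketched as below. The sketch follows a common limited-memory form from the adaptive filtering literature (the sample covariance of the state residuals over the last J frames, minus the covariance already explained by the filter); it is an assumed stand-in, since the exact expressions and the weighting function \(W^{i}\) used in the paper are not reproduced in this text. The class name, the `floor` parameter, and the eigenvalue clipping are additions for illustration.

```python
from collections import deque

import numpy as np

class ProcessNoiseEstimator:
    """Windowed estimate of the process noise covariance Q (assumed limited-memory form)."""

    def __init__(self, A, window=10, floor=1e-9):
        self.A = np.asarray(A, dtype=float)
        self.history = deque(maxlen=window)  # keeps only the last J frames
        self.floor = floor

    def update(self, x_prev, P_prev, x_post, P_post):
        """Add one frame; return the current estimate of Q, or None if too few frames."""
        # State residual: gap between the new estimate and the propagated old one.
        residual = x_post - self.A @ x_prev
        # Part of the spread that the filter covariances already account for.
        explained = self.A @ P_prev @ self.A.T - P_post
        self.history.append((residual, explained))
        n = len(self.history)
        if n < 2:
            return None

        residuals = np.array([h[0] for h in self.history])
        centered = residuals - residuals.mean(axis=0)  # subtract the window average
        scatter = centered.T @ centered / (n - 1)
        mean_explained = sum(h[1] for h in self.history) / n
        Q_hat = scatter - mean_explained

        # Safeguard (an addition): symmetrize and clip eigenvalues so that the
        # returned matrix is a valid covariance.
        Q_hat = 0.5 * (Q_hat + Q_hat.T)
        vals, vecs = np.linalg.eigh(Q_hat)
        return (vecs * np.clip(vals, self.floor, None)) @ vecs.T
```

A shorter window lets the estimate react faster to a sudden change in motion, at the cost of a noisier covariance, which mirrors the trade-off on J discussed above.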

3.5 Complexity analysis

The computational complexity of the Condensation algorithm is of order \(\mathcal {O}\)(\(N\log N\)), where N is the number of samples [10]. The computational complexity of the KF, on the other hand, is known to be of order \(\mathcal {O}\)(\(n^{3}\)), where n is the dimension of the state vector. Because the two algorithms are cascaded in HCAKF, its computational complexity is the sum of these two complexities. However, introducing the KF states allows us to reduce the number of samples in the Condensation algorithm. Our simulation results show that reducing the samples by a factor of ten maintains accuracy and efficiency at levels acceptable for many clinical applications.

4 Simulation results

This section evaluates the effectiveness of the proposed hybrid tracking method and its performance and robustness to noise and heart beat rate change. The mean square error (MSE) and the Hausdorff metric (HD) are used to measure the distance between two subsets (surfaces); HD specifies the maximum distance between two surfaces. It first measures the distance from each point on one surface (the first surface) to all points on the other surface (the second surface). This produces a \(b\times b\) matrix (assuming both surfaces have the same number of points). The method then picks the minimum distance for each point, resulting in a \(b\times 1\) vector, and the maximum of those b distances is selected. The same process is applied to the second surface. This results in two values, and the larger one is selected. Hence,

\[ \mathrm {HD}(\varPsi ^{i},\varOmega ^{i}) = \max \Big \{ \max _{p}\min _{q}\Vert \varPsi ^{i}(p,:)-\varOmega ^{i}(q,:)\Vert ,\; \max _{q}\min _{p}\Vert \varPsi ^{i}(p,:)-\varOmega ^{i}(q,:)\Vert \Big \}, \]

where p and q index the b points of each surface.
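The Hausdorff procedure described above maps directly onto a few array operations. The sketch below is a minimal illustration that assumes Euclidean distance between (x, y) points and equal-length point lists; the function name `hausdorff_distance` is hypothetical.

```python
import numpy as np

def hausdorff_distance(first, second):
    """Symmetric Hausdorff distance between two sets of (x, y) points.

    first, second : arrays of shape (b, 2).
    """
    first = np.asarray(first, dtype=float)
    second = np.asarray(second, dtype=float)
    # b x b matrix: entry (p, q) is the distance from first[p] to second[q].
    diff = first[:, None, :] - second[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Nearest point on the other surface for each point, then the worst case.
    first_to_second = dist.min(axis=1).max()
    second_to_first = dist.min(axis=0).max()
    return max(first_to_second, second_to_first)
```

For the point sets {(0, 0), (1, 0)} and {(0, 0), (4, 0)}, the two directed values are 1 and 3, so the function returns 3.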

Five simulation scenarios were created, each tracking eight different positions on the LV endocardium through 28 echocardiographic sequences of 10 patients acquired at St. Michael’s Hospital (SMH). These echocardiograms were not affected by shadows, and each sequence represents a single cardiac cycle. In Case 1 (CondA-C), the Condensation algorithm with 100 samples is used; Case 2 (CondA-HCKF) adds the KF to Case 1; and Case 3 (CondB-C) uses the Condensation algorithm with 1000 samples. Case 4 (CondA-CR) is the same as Case 1, except that it uses a different resampling approach to reduce the degeneracy issue. The results presented in Table 1 indicate that the use of HCKF did not increase the computation time significantly, which remained in the same order of magnitude as that of the Condensation algorithm with 100 samples. In contrast, in both Case 3 and Case 4 the time increased significantly because more operations are required. This work focuses on providing an accurate method that is inexpensive in terms of computation time so that it can provide real-time results. Therefore, to achieve a reasonable computational complexity, we used 100 samples, with which the points were tracked in 0.0034 seconds. Furthermore, as shown in Table 2, the use of the KF improved the tracking results in terms of HD and MSE and helped the Condensation algorithm concentrate its samples around the tracked feature. Additionally, applying the regularized resampling to Case 1 (that is, Case 4) produced better results; however, it required more computation time than the conventional Condensation algorithm, as shown in Table 1. Moreover, we tested Case 4 with only 42 samples, and it provided results similar to those of the Condensation algorithm in Case 1, but its computation time was still higher than that of the original Condensation algorithm with 100 samples.

Table 2 shows that the use of hybrid tracking methods considerably reduces the error of the Condensation algorithm when using 100 samples. Figure 2 shows the results of HCAKF after 9 iterations on one patient (red dots are the Condensation algorithm output, and green line is the output of the KF).

The heart rate can change as a result of internal or external effects, such as stress, sickness, or administered medications. Such changes affect the tracking approach, which then produces a higher error than usual. The adaptive Kalman filter provides a real-time update of the process noise covariance matrix based on the tracking results in a specified window, which is usually chosen empirically. The results show that using an adaptive method enhances the tracking process by reducing the overall tracking error compared to the Condensation algorithm, which cannot handle the increase in heart beat rate, as shown in Fig. 3. Case 5 (HCAKF) represents the results of the HCAKF algorithm using 100 samples. Moreover, Fig. 3 demonstrates the superiority of HCAKF, as it is robust to changes in the system dynamics, for instance the sudden change at frame 115.

Additionally, we tested HCAKF using a smaller sample size in the Condensation algorithm. The outcome was unsatisfactory, as the landmarks began to lose track of the features, as in Fig. 4. Another issue we encountered was during the initialization stage, when the ANN was unable to correctly identify one of the tracked features (Table 3).

We also tested our method against one of the recent successful techniques, namely B-Spline Explicit Active Surface (BEAS) [7], with the results shown in Table 4. The key concept of BEAS is to consider the boundary of a deformable interface as an explicit function. Surface initialization on the first frame is performed through an ellipsoid. Propagation of the LV segmentation to the following temporal frames is then performed using an anatomically affine optical flow framework. Two datasets were used: one from SMH and the other the Stanford dataset [13]. On the first dataset, HCAKF outperformed BEAS and successfully maintained the cardiac boundaries, as in Fig. 5, while still producing results in real time. However, HCAKF lagged behind BEAS on the Stanford dataset because of the low image quality of that dataset. Nonetheless, the results are acceptable and comparable to competitive methods. Physicians tolerate an error with a specified variance, expressed in millimeters as the mean surface error. Leclerc et al. [14] addressed the subjectivity of physician opinion by performing a cross-validation of manual segmentations performed by three different experts and found that the results were not equivalent. Moreover, the differences between the HCAKF and BEAS results are smaller than those observed in the cross-validation of Leclerc et al.

In short, the proposed hybrid approach (HCAKF) managed to keep track of the cardiac boundary despite the change in the system dynamics as shown in Table 3. Moreover, HCAKF was able to track cardiac boundaries and provide its results in real-time.

The study that provided the images analyzed in this manuscript was approved by the Institutional Review Board of St. Michael’s Hospital. The method was implemented in MATLAB R2018a on a PC with an Intel(R) Core i7 processor and 16 GB of RAM.

To investigate the accuracy-efficiency trade-off, we also compared the proposed ANN-based cardiac segmentation technique with the case in which the ANN is replaced with the previously developed ResU technique [15]. The 40 images from the SMH dataset were used for the comparison. While the accuracy of ResU-based segmentation was slightly higher (0.951 ± 0.201 vs. 0.92 ± 0.033 Dice), the ANN-based segmentation was significantly faster (0.0042 ± 0.002 vs. 0.051 ± 0.033 s). Additionally, ResU requires a significantly larger training set.

5 Conclusion

To provide accurate yet efficient tracking of boundaries in echocardiograms, a hybrid algorithm was proposed. To address the efficiency problem of the Condensation algorithm, a KF is integrated so that a smaller number of samples can be used. An ASM framework is also introduced to initialize the hybrid tracking method with a good estimate of the current position. To enhance the robustness of the method to changes in the target dynamics (e.g., due to changes in heart beat rate), an adaptation method is introduced into the KF (yielding the AKF) to estimate the noise covariance matrices during tracking. Several scenarios were presented in this paper to demonstrate the effectiveness of the proposed hybrid method. In future research, the initialization process could be improved by introducing a deep learning approach. In addition, a kernel KF could be utilized to enable the use of linear estimation methods for nonlinear estimation problems. Using a kernel KF rather than the Condensation algorithm could improve the efficiency of the proposed model even further.

References

1. Roth, G.A., et al.: Global, regional, and national age-sex-specific mortality for 282 causes of death in 195 countries and territories, 1980–2017: a systematic analysis for the Global Burden of Disease Study 2017. Lancet 392(10159), 1736–1788 (2018)

2. Hatt, C.R., et al.: MRI-3D ultrasound-X-ray image fusion with electromagnetic tracking for transendocardial therapeutic injections: in-vitro validation and in-vivo feasibility. Comput. Med. Imaging Graph. 37(2), 162–173 (2013)

3. Belaid, A., Boukerroui, D.: Local maximum likelihood segmentation of echocardiographic images with Rayleigh distribution. SIViP 12(6), 1087–1096 (2018)

4. Pratiwi, A.A., et al.: Improved ejection fraction measurement on cardiac image using optical flow. In: 2017 International Electronics Symposium on Knowledge Creation and Intelligent Computing (IES-KCIC), pp. 295–300. IEEE (2017)

5. Joos, P., et al.: High-frame-rate speckle-tracking echocardiography. IEEE Trans. Ultrason. Ferroelectr. Freq. Control 65(5), 720–728 (2018)

6. Papangelopoulou, K., et al.: High frame rate speckle tracking echocardiography to assess diastolic function. Eur. Heart J. 42(Supplement–1), 724-ehab031 (2021)

7. Pedrosa, J., et al.: Fast and fully automatic left ventricular segmentation and tracking in echocardiography using shape-based B-spline explicit active surfaces. IEEE Trans. Med. Imaging 36(11), 2287–2296 (2017)

8. Blake, A., Curwen, R., Zisserman, A.: A framework for spatiotemporal control in the tracking of visual contours. Int. J. Comput. Vis. 11(2), 127–145 (1993)

9. Jacob, G., et al.: A shape-space-based approach to tracking myocardial borders and quantifying regional left-ventricular function applied in echocardiography. IEEE Trans. Med. Imaging 21(3), 226–238 (2002)

10. Isard, M., Blake, A.: Condensation conditional density propagation for visual tracking. Int. J. Comput. Vis. 29(1), 5–28 (1998)

11. Bernier, M., et al.: Graph cut-based method for segmenting the left ventricle from MRI or echocardiographic images. Comput. Med. Imaging Graph. 58, 1–12 (2017)

12. Ficocelli, M., Janabi-Sharifi, F.: Adaptive filtering for pose estimation in visual servoing. In: Proc. 2001 IEEE/RSJ International Conference on Intelligent Robots and Systems. Expanding the Societal Role of Robotics in the Next Millennium (Cat. No. 01CH37180), vol. 1, pp. 19–24. IEEE, Maui, USA (2001)

13. Ouyang, D., et al.: Video-based AI for beat-to-beat assessment of cardiac function. Nature 580(7802), 252–256 (2020)

14. Leclerc, S., et al.: Deep learning for segmentation using an open large-scale dataset in 2D echocardiography. IEEE Trans. Med. Imaging 38(9), 2198–2210 (2019)

15. Ali, Y., Janabi-Sharifi, F., Beheshti, S.: Echocardiographic image segmentation using deep Res-U network. Biomed. Signal Process. Control 64, 102248 (2021)

Funding

The funding was provided by Natural Sciences and Engineering Research Council of Canada (Grant No. 2017–06930).

Cite this article

Ali, Y., Beheshti, S., Janabi-Sharifi, F. et al. A hybrid approach for tracking borders in echocardiograms. SIViP 17, 453–461 (2023). https://doi.org/10.1007/s11760-022-02250-y


DOI: https://doi.org/10.1007/s11760-022-02250-y