Abstract

In this paper, an object blur detection and deblurring technique is proposed to restore multi-directional motion-blurred objects in a single image. We propose a local blur angle detection method based on the Radon transform (RT) and the Laplacian of Gaussian (LoG). Motion blur in captured images arises mainly from movement of either the objects or the camera; here, we focus on restoring objects blurred by their own motion. The likely blur directions in the blurred image are estimated using RT and gradient operators. To detect the blur angle locally at each pixel, a new local blur angle estimator based on RT and LoG has been developed. Numerical experiments have been carried out for the proposed method, and the results are compared with state-of-the-art methods.

1 Introduction

In classical digital image processing, image restoration is a challenging task that has been studied for the last three decades [1,2,3,4]. Two kinds of motion blur are studied in image restoration: blur due to camera motion and blur due to object motion. Camera motion blur has received considerable attention, whereas comparatively little work in the literature addresses object motion blur. In this paper, we focus on the detection and restoration of blur caused by object motion. Object motion blur arises when the object moves appreciably during the camera's exposure time, i.e., when the object is too fast for the shutter speed used to capture the image. Image restoration is the area of digital image processing in which researchers try to remove blur by developing blur models and deblurring techniques. Image restoration techniques fall mainly into two categories: blind image deconvolution (BID), when the blur function (PSF) is unknown, and non-blind image deconvolution (NBID), when the PSF is known. The most familiar blur, caused by uniform camera motion, is modeled as

\[ g(x,y) = h(x,y) * f(x,y) + n(x,y) \tag{1} \]

where h(x, y) is the degradation function (also called the blur function, point spread function or blur kernel), \( f(x,y)\) is the original image, \( n(x,y)\) is the noise, \( g(x,y)\) is the degraded image, and \(*\) denotes the two-dimensional convolution operator. The blur function represents the cumulative effect of distortions caused by uniform movement of either the camera or the object, as well as all optical and electronic aberrations produced by imperfect sensing and recording equipment. Identifying the degradation function is the first and most important step in restoring the original image \( f(x,y)\) from the degraded image \( g(x,y)\). In BID, estimating both \(f(x,y)\) and \( h(x,y)\) is difficult in general and is an ill-posed problem in the absence of prior knowledge about the actual image and the blur function. Figure 1 shows different kinds of motion-blurred images produced by various blur kernels. The blur model of Eq. (1) applies when the camera is in linear motion. However, it is inappropriate when more than one object in the image moves simultaneously with different speeds and directions, as shown in Fig. 2. In our work, we consider linear motion of the moving objects, as shown in Fig. 2a. In general, object motion in a scene can be a combination of linear and nonlinear motions, in the same or in different directions, with various motion parameters, as shown in Fig. 2b–d.
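A minimal NumPy sketch of the degradation model in Eq. (1) may help when generating test data. It assumes a centred, odd-sized kernel, periodic (circular) boundaries, so that the convolution can be computed with the FFT, and additive zero-mean Gaussian noise; the function name `degrade` and its parameters are illustrative, not taken from the paper.

```python
import numpy as np


def degrade(f, h, noise_sigma=0.0, seed=0):
    """Simulate g = h * f + n (Eq. (1)) with circular 2-D convolution.

    The kernel h is assumed centred (odd size); boundaries are periodic
    because the convolution is computed with the FFT, and n is zero-mean
    Gaussian noise with standard deviation noise_sigma.
    """
    f = np.asarray(f, dtype=float)
    h = np.asarray(h, dtype=float)
    kh, kw = h.shape
    # Zero-pad the kernel to the image size and move its centre to (0, 0)
    # so that the convolution does not shift the image.
    h_pad = np.zeros_like(f)
    h_pad[:kh, :kw] = h
    h_pad = np.roll(h_pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    g = np.real(np.fft.ifft2(np.fft.fft2(f) * np.fft.fft2(h_pad)))
    noise = noise_sigma * np.random.default_rng(seed).standard_normal(f.shape)
    return g + noise
```

With a delta kernel and `noise_sigma=0` the image is returned unchanged; with a normalised kernel the total intensity is preserved, as expected for a blur that only redistributes energy.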

This paper describes a novel algorithm for detecting and restoring multiple objects that have been blurred by motion. We estimate the global blur parameters of the blurred objects in the image by localization: we propose a new technique that applies LoG and the directional derivative at each pixel, followed by RT [5], to find the blur parameters. We make three assumptions: (i) the blurred region is richly textured, (ii) each object is blurred uniformly, and (iii) object motions are parallel to the image plane. In addition, we use the Chan–Vese (CV) segmentation method [6] to segment objects having different motions.

In our approach, the blur direction measured locally by RT at each pixel replaces the intensity in the fidelity term of the Chan–Vese segmentation technique. We assess the accuracy of the results through the cumulative frequency of absolute errors over a large number of samples, and we validate the algorithm through simulation by comparing its root-mean-square error (RMSE) with that of state-of-the-art methods [7, 8].

The rest of this paper is organized as follows. Section 2 reviews earlier work on the deconvolution of camera motion blurred and object motion blurred images, along with a few segmentation techniques. Section 3 describes the blur model used to construct synthetic images. Section 4 presents the proposed technique for estimating the blur parameters of multiple blurred objects. Section 5 gives the implementation and experimental results, and Sect. 6 presents the conclusion and future scope.

2 Earlier works

In this section, we review earlier work on blind image deblurring. We divide motion blur into two categories, blur due to camera motion and blur due to object motion, and we also review a few contour-based segmentation techniques.

2.1 Earlier works on camera motion deblurring

Many researchers have developed parametric blur models for estimating the PSF shown in Fig. 1a. With these models, a blurred image is easier to restore than in the case of the nonlinear motion blur shown in Fig. 1b. For linear motion of the sensor, parametric linear motion blur is modeled as the convolution of the blur function with the latent image, as expressed in Eq. (1) (see [9]). In [10], Cai et al. removed camera shake using regularization and the split Bregman method, with sparsity-based regularization terms on both the image and the motion blur kernel, extending the work in [11,12,13]. In [9], Almeida et al. exploited the sparsity of edges in blurred and deblurred images within a regularization technique to restore natural images. In [14], Cho et al. proposed a maximum a posteriori (MAP) approach that uses RT to detect the blur direction in uniformly motion-blurred images. Subsequently, Ji et al. [15] did similar work based on image gradients. In [16,17,18], the authors combined RT with image gradients to deblur noisy blurred images using Richardson–Lucy deblurring and Wiener filtering. In [19], Sakano et al. used the Hough transform on gradient vectors for PSF estimation. In [20], Gupta et al. used a motion density function (MDF) to record camera motion; with the MDF, they estimated spatially varying motion by approximating the six degrees of freedom of camera motion with three. Because of this approximation, the method works only for small motions. Similar work was discussed in [21], where the authors estimated spatially varying motion blur using a hybrid camera, user interaction and an alpha map. In [5], Oliveira et al. exploited the spectral behavior of natural images: linear motion blur produces a sinc-like pattern in the Fourier domain, and applying RT to the Fourier transform (FT) yields the orientation and length of the motion blur. In [22], Goldstein et al. pointed out spectral irregularities caused by strong edges. In [8], Oliveira et al. proposed a modified RT, called the Radon-d transform, and its approximation by a cubic polynomial to avoid these irregularities. In [7], Krahmer et al. proposed cepstrum- and steerable-filter-based methods for estimating blur parameters. In [23], Oyamada et al. used these techniques extensively to estimate piecewise linear and nonlinear motion blur parameters. In [24], Kheradmand et al. used a kernel similarity-based algorithm to restore linear/nonlinear blurred images, minimizing the objective function with conjugate gradient (CG) to obtain the corresponding parameter estimates, and compared it with the other nonlocal regularization methods described in [25, 26].

2.2 Earlier works on object motion deblurring

Because dynamic scene deblurring is more complex than static scene deblurring, little work has been done in this field. Figure 2a–d shows various kinds of blur motion for two moving objects in a single image. In [27], Kim et al. addressed the deblurring of dynamic scenes containing multiple moving objects. In [28], Harmeling et al. proposed a method that restores overlapping patches of the blurred image, but it fails at the boundaries of moving objects because it does not segment the motion blur. Similar work was done in [29] with a two-stage approach, one stage for the object and the other for the background, similar to Levin's work [26]. In [30], Almeida et al. discussed a similar concept by extending their previous single-layer method presented in [9, 31]. Complex dynamic scene deblurring was also attempted in [27] by Kim et al., who inferred optical flow from videos blurred by globally and locally varying blurs arising from moving objects, camera shake, depth variation and defocus. A MAP framework was used to segment regions with different blurs in [32], where the authors incorporated a soft-segmentation method so that both moving objects and background regions are taken into account during kernel estimation. A similar method was discussed in [33, 34], where the authors deblurred using regularization without segmentation. In [35], Couzinie-Devy et al. cast the problem as multi-label segmentation and estimated locally varying blur.

In [36], Favaro et al. proposed motion deblurring and scene reconstruction for multiple moving objects. However, they used multiple images to avoid assumptions on the blur parameters, whereas Kang et al. [37, 38] used a single image together with such parametric assumptions. In [39], McGuire et al. presented a reconstruction filter for plausible object motion blur in game-like applications.

Chan and Vese [6] proposed a model for active contours that detects objects in a given image. The technique builds on curve evolution, the Mumford–Shah functional for segmentation [40] and level sets [41], and uses a region-based stopping criterion instead of the edge-detection-based stopping criteria of the active contour models in [42, 43].

3 Blur model

The commonly used blur model for unidirectional linear motion blur is given in Eq. (2).

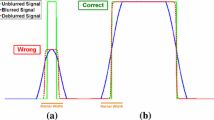

where L is the blur length (proportional to the motion velocity and the camera exposure time), \(\theta \) is the blur angle, and x, y are pixel positions. Figure 3b shows the original image of Fig. 3a uniformly motion blurred with Eq. (2) in the direction of \(45^{\circ }\) with a blur length of 80 pixels. Applied to the whole image, however, this model cannot generate blurred images in which one or more objects are moving. Once the PSF is known, the original image can be estimated by deconvolution using the convolution theorem. To generate synthetic images for testing and validating the proposed method, we apply the blur model of Eq. (2) locally. This kind of motion occurs in images of moving objects, under the assumption that all parts of an object undergo the same motion during the exposure time. The resulting blurred image with two motion-blurred objects is shown in Fig. 4b.
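Because the paper does not state how Eq. (2) is discretised, the following NumPy sketch makes one choice explicit: the segment is sampled densely and each sample is accumulated at its nearest pixel, with θ measured counter-clockwise from the x-axis and image rows growing downwards. The hypothetical helper `blur_objects` then applies one PSF per object mask, mimicking the local use of the blur model for synthetic test images; its hard compositing does not model the smearing of object boundaries.

```python
import numpy as np


def linear_motion_psf(length, angle_deg, oversample=10):
    """Discrete linear-motion PSF in the spirit of Eq. (2): uniform weight along
    a segment of `length` pixels at `angle_deg`, normalised to sum to 1.
    Nearest-pixel rasterisation is an assumption of this sketch."""
    theta = np.deg2rad(angle_deg)
    half = length / 2.0
    size = 2 * int(np.ceil(half)) + 1          # odd size so the centre is a pixel
    c = size // 2
    psf = np.zeros((size, size))
    # Sample the segment densely and accumulate each sample at its nearest pixel.
    for t in np.linspace(-half, half, int(oversample * length) + 1):
        col = int(round(c + t * np.cos(theta)))
        row = int(round(c - t * np.sin(theta)))  # image rows grow downwards
        psf[row, col] += 1.0
    return psf / psf.sum()


def _circular_conv(image, kernel):
    """Circular 2-D convolution of `image` with a centred `kernel` via the FFT."""
    kh, kw = kernel.shape
    k_pad = np.zeros(image.shape)
    k_pad[:kh, :kw] = kernel
    k_pad = np.roll(k_pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.real(np.fft.ifft2(np.fft.fft2(image) * np.fft.fft2(k_pad)))


def blur_objects(image, masks, psfs):
    """Hypothetical helper: blur the whole image with each object's PSF and
    paste the result back inside that object's boolean mask (hard compositing)."""
    image = np.asarray(image, dtype=float)
    out = image.copy()
    for mask, psf in zip(masks, psfs):
        out[mask] = _circular_conv(image, psf)[mask]
    return out
```

For the 80-pixel blur of Fig. 3b this construction yields an 81 × 81 PSF, so the image must be at least that large for the circular convolution to be meaningful.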

4 Proposed technique

In this section, we propose local blur angle estimators and introduce the RT and the Chan–Vese segmentation technique.

4.1 Step 1: blur detection technique

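RT is the integral of a function along straight lines [44]. For a two-dimensional function \( I(x, y)\), RT is defined as

\[ R(\rho,\theta) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} I(x,y)\,\delta(\rho - x\cos\theta - y\sin\theta)\,\mathrm{d}x\,\mathrm{d}y \tag{3} \]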

Equation (3) integrates the image along the line at distance \( \rho \) from the origin whose normal makes an angle \( \theta \) with the x-axis [44]. The angle at which the RT attains its largest value over \([0^{\circ },180^{\circ }]\) determines the blur angle [45]. A variant of RT, the Radon-d transform, is proposed in [5] and defined as

where \( R_{d}\) is the modified RT, called the Radon-d transform, which restricts the integration limits of RT to the maximum inscribed square, with \( d=m/\sqrt{2}\) and \(m=\text {min}\{M,N\}\) for an \( M \times N \) image. The classical approach computes the RT of the FT of an image to find the blur direction, but it fails on small images: their FT does not contain enough of the parallel frequency lines (the sinc-like structure produced by the motion) on which the RT relies to estimate the blur angle. Moreover, computing the spectrum with the FFT introduces spectral irregularities [22] as well as high-frequency content along the \(0^{\circ }\) and \(90^{\circ }\) lines, which stems from the discontinuities at the image borders. To avoid these problems, [8] approximates the Radon-d transform by fitting a third-order polynomial to estimate the blur angle, i.e.,

In our approach, we compute the RT of the directional derivative over a small region of the blurred image to determine the likely blur angles in the image. To calculate the likely blur directions, we propose the following blur angle estimators:

where \(\nabla \) is the gradient operator, \( {R}\) denotes the RT, and \( \nabla _{v} \) is the derivative along the vertical direction of the RT. Once the first likely direction has been estimated using Eq. (6), the second direction can be estimated as follows:

Similarly, once the first, second, \(\ldots \), and \((n-1)\)th likely directions have been estimated, the \(n\)th direction can be estimated by

Here \(\hat{\theta }_{1}^{g},\hat{\theta }_{2}^{g},\ldots ,\hat{\theta }_{n}^{g} \) are the estimated blur directions of the blurred objects in the image. The gradient operator is used to emphasize the edges created by blurring in the objects. Local maximum variations in the RT give the likely blur angle of each object, as shown in Fig. 4a. Each estimated likely blur direction is then used, through the directional derivative, to determine whether and where that direction occurs in the image. The directional derivative of a function \(I( x,y)\) at \((a,b)\) in the direction of a unit vector \( u=(u_{1},u_{2}) \) in the xy-plane is defined as

\[ D_{u}I(a,b) = \nabla_{x} I(a,b)\,u_{1} + \nabla_{y} I(a,b)\,u_{2} \tag{9} \]

where \(\nabla _{x} \text { and } \nabla _{y}\) are the derivative operators with respect to the x-axis and the y-axis, respectively. If prior knowledge about the blur directions present in the blurred image is available, the directional derivative can be used to emphasize local detection of the blur angle in those directions. The pixelwise blur angle is then determined by applying LoG to the directional derivative, followed by RT. LoG is defined as follows:

\[ \mathrm{LoG}(x,y) = -\frac{1}{\pi\sigma^{4}}\left[1-\frac{x^{2}+y^{2}}{2\sigma^{2}}\right]e^{-\frac{x^{2}+y^{2}}{2\sigma^{2}}} \tag{10} \]

where \( \sigma \) is the standard deviation of the Gaussian, and x and y are pixel locations. The proposed pixelwise blur angle estimator is derived as follows:

where \(\tilde{\theta }^{l} \) is the blur direction estimated locally for each pixel by selecting the angle with the lowest error. The accuracy of the blur angle estimator depends on the window size chosen around each pixel. The FT-based techniques reviewed in Sect. 2 do not work for small images, whereas the proposed local blur angle estimator of Eq. (11) can estimate the blur angle in small images or locally. The proposed estimators perform better than the FT-based estimators [5, 7, 8]; experimental results are discussed in Sect. 5. Pseudocode of the proposed blur angle detector is given in Algorithm 1.
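The building blocks of this step can be sketched in NumPy as follows: a nearest-bin discrete approximation of the RT in Eq. (3), the directional derivative defined above, and a sampled LoG kernel. Image-centred coordinates with y pointing up, the 3σ kernel radius and zero-padded filtering are assumptions of this sketch, and all function names are hypothetical. The pixelwise selection rule of Eq. (11) is not reproduced; the helpers only produce the quantities it operates on.

```python
import numpy as np


def radon(image, angles_deg):
    """Discrete approximation of Eq. (3): pixels are binned by their signed
    distance rho = x cos(theta) + y sin(theta), rounded to the nearest integer,
    and their values are summed per bin (rows = rho bins, columns = angles)."""
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape
    y, x = np.mgrid[0:rows, 0:cols].astype(float)
    x -= (cols - 1) / 2.0                      # centre the coordinates
    y = (rows - 1) / 2.0 - y                   # flip so y increases upwards
    r_max = int(np.ceil(np.hypot(rows, cols) / 2.0))
    n_bins = 2 * r_max + 1
    sino = np.zeros((n_bins, len(angles_deg)))
    for k, a in enumerate(np.deg2rad(angles_deg)):
        bins = np.rint(x * np.cos(a) + y * np.sin(a)).astype(int) + r_max
        sino[:, k] = np.bincount(bins.ravel(), weights=image.ravel(),
                                 minlength=n_bins)
    return sino


def peak_angle(image, angles_deg):
    """Angle (degrees) at which the discrete RT reaches its largest value."""
    angles_deg = np.asarray(angles_deg)
    return angles_deg[np.argmax(radon(image, angles_deg).max(axis=0))]


def directional_derivative(image, angle_deg):
    """D_u I = u1 dI/dx + u2 dI/dy with u = (cos a, sin a), using central
    differences; the row derivative is negated because y points up."""
    d_rows, d_cols = np.gradient(np.asarray(image, dtype=float))
    a = np.deg2rad(angle_deg)
    return np.cos(a) * d_cols + np.sin(a) * (-d_rows)


def log_kernel(sigma, radius=None):
    """Sampled LoG on a (2r+1) x (2r+1) grid, r = ceil(3 sigma) by default.
    The mean is subtracted so the truncated kernel sums to zero, as the
    continuous LoG integrates to zero."""
    r = int(np.ceil(3 * sigma)) if radius is None else radius
    y, x = np.mgrid[-r:r + 1, -r:r + 1]
    q = (x ** 2 + y ** 2) / (2.0 * sigma ** 2)
    k = -1.0 / (np.pi * sigma ** 4) * (1.0 - q) * np.exp(-q)
    return k - k.mean()


def filter_same(image, kernel):
    """'Same'-size correlation with zero padding; for the symmetric LoG kernel
    this equals convolution."""
    image = np.asarray(image, dtype=float)
    r0, r1 = kernel.shape[0] // 2, kernel.shape[1] // 2
    padded = np.pad(image, ((r0, r0), (r1, r1)))
    out = np.zeros(image.shape)
    for i in range(kernel.shape[0]):
        for j in range(kernel.shape[1]):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out
```

For a window `W` around a pixel, `radon(filter_same(directional_derivative(W, a), log_kernel(1.0)), np.arange(180))` gives the sinogram on which a selection rule such as Eq. (11) would operate. Note that the peak angle refers to the normal of the integration lines, so a horizontal bright line peaks at 90°.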

4.2 Step 2: blurred object segmentation and deconvolution

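In this subsection, we segment the individual objects that share the same blur angle using the Chan–Vese (CV) segmentation technique. CV segmentation is based on Mumford–Shah segmentation, the level set method and curve evolution [6, 40, 41]. The basic idea is to minimize the following functional:

\[ F(c_{1},c_{2},C) = \mu\,\mathrm{Length}(C) + \gamma\,\mathrm{Area}(\mathrm{inside}(C)) + \alpha_{1}\int_{\mathrm{inside}(C)} |I(x,y)-c_{1}|^{2}\,\mathrm{d}x\,\mathrm{d}y + \alpha_{2}\int_{\mathrm{outside}(C)} |I(x,y)-c_{2}|^{2}\,\mathrm{d}x\,\mathrm{d}y \tag{12} \]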

where \(c_{1}\) and \(c_{2}\) are the average intensity values inside and outside the contour C, respectively; \( \alpha _{1} \) and \(\alpha _{2}\) are constant control parameters; \(\mu \) and \(\gamma \) are constant parameters weighting the length and area terms (usually \(\mu = \gamma = 1\)); C is the boundary of the object; and I is the input image.
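The discrete sketch below only evaluates the functional of Eq. (12) for a given binary mask; it does not evolve the contour (the level-set evolution of [6, 41] is omitted). For a fixed contour, the optimal \(c_{1}\) and \(c_{2}\) are the region means, and the length term is approximated by counting label changes between 4-neighbours, an assumed and anisotropic discretisation. The function name is hypothetical.

```python
import numpy as np


def chan_vese_energy(I, inside, mu=1.0, gamma=1.0, alpha1=1.0, alpha2=1.0):
    """Evaluate a discrete version of Eq. (12) for the boolean mask `inside`
    (True inside C). Returns (energy, c1, c2)."""
    I = np.asarray(I, dtype=float)
    inside = np.asarray(inside, dtype=bool)
    outside = ~inside
    # For a fixed contour, the region means minimise the two fidelity terms.
    c1 = I[inside].mean() if inside.any() else 0.0
    c2 = I[outside].mean() if outside.any() else 0.0
    # Length(C): number of vertical plus horizontal label changes.
    length = (np.count_nonzero(inside[1:, :] != inside[:-1, :])
              + np.count_nonzero(inside[:, 1:] != inside[:, :-1]))
    area = np.count_nonzero(inside)
    fidelity = (alpha1 * np.sum((I[inside] - c1) ** 2)
                + alpha2 * np.sum((I[outside] - c2) ** 2))
    return mu * length + gamma * area + fidelity, c1, c2
```

Passing the estimated blur-angle map in place of I gives the angle-based functional used for segmentation below, with the returned means playing the role of \(\theta_{1}\) and \(\theta_{2}\). In the usage check, a mask that matches a piecewise-constant angle map has zero fidelity cost, while a shifted mask costs far more.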

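To segment objects based on blur angle information, we replace the intensity values in Eq. (12) with the blur angles estimated by Eq. (11). The modified functional is

\[ F(\theta_{1},\theta_{2},C) = \mu\,\mathrm{Length}(C) + \gamma\,\mathrm{Area}(\mathrm{inside}(C)) + \alpha_{1}\int_{\mathrm{inside}(C)} |\tilde{\theta}(x,y)-\theta_{1}|^{2}\,\mathrm{d}x\,\mathrm{d}y + \alpha_{2}\int_{\mathrm{outside}(C)} |\tilde{\theta}(x,y)-\theta_{2}|^{2}\,\mathrm{d}x\,\mathrm{d}y \tag{13} \]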

Here \(\theta _{1}\) and \(\theta _{2}\) are the average angles inside and outside the contour C, respectively, \(\tilde{\theta }\) is the estimated blur angle, and \( \alpha _{1} \), \(\alpha _{2}\), \(\mu \), \(\gamma \) and C are as in Eq. (12). Once the objects have been segmented using their estimated blur angles, the blur length of each object can be determined with the technique from [5]. Finally, each blurred object is restored with the Wiener filter. Pseudocode of the segmentation and deconvolution steps is given in Algorithm 2.
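A minimal frequency-domain Wiener filter can be sketched as follows. The paper does not specify how its Wiener filter is parameterised, so the constant noise-to-signal power ratio `k`, the circular boundaries and the function name are assumptions of this sketch.

```python
import numpy as np


def wiener_deconvolve(g, h, k=1e-3):
    """Wiener estimate F_hat = conj(H) / (|H|^2 + k) * G with a constant
    noise-to-signal ratio k. The centred kernel h is moved to pixel (0, 0)
    before the FFT so that the restored image is not shifted."""
    g = np.asarray(g, dtype=float)
    h = np.asarray(h, dtype=float)
    kh, kw = h.shape
    h_pad = np.zeros_like(g)
    h_pad[:kh, :kw] = h
    h_pad = np.roll(h_pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    H = np.fft.fft2(h_pad)
    F_hat = np.conj(H) / (np.abs(H) ** 2 + k) * np.fft.fft2(g)
    return np.real(np.fft.ifft2(F_hat))
```

A larger `k` suppresses noise amplification at frequencies where \(|H|\) is small (a linear-motion PSF has many near-zeros), at the cost of residual blur; as `k` tends to zero the filter approaches plain inverse filtering.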

5 Experimental results

In this section, we discuss the experimental results of the proposed method and of state-of-the-art methods. Tables 1 and 2 show the mean and standard deviation of the blur angle estimated using the LoG-based method and other edge-sharpening filters for the test image Lena, blurred with a blur angle of \(30^{\circ }\) and a blur length of 60 pixels. LoG proved to be the most suitable edge-sharpening filter for both noisy and noiseless images.

To test the proposed blur angle estimator, we ran simulations on the four standard test images shown in Fig. 5. The observation test set (i.e., the blurred test images) was generated with blur angles ranging from \(10^{\circ }\) to \(90^{\circ }\) and blur lengths from 10 to 60 pixels. From each blurred test image, about 200,000 windows (subimages) with sizes ranging from 10 \(\times \) 10 to 90 \(\times \) 90 pixels were selected at random. Figure 6 compares the RMSE of the proposed method with that of the state-of-the-art methods [7, 8]; for all test images, the proposed method yields the smallest RMSE.
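The evaluation protocol described above can be sketched as follows: random square windows that fit inside a blurred image, and the RMSE of the estimated angles against the ground truth. The helper names, the uniform distribution of window sizes and the absence of angle wrap-around (0° and 180° are treated as different angles) are assumptions of this sketch.

```python
import numpy as np


def sample_windows(shape, n, min_size=10, max_size=90, seed=0):
    """Draw n random square windows as rows of (row, col, size) that fit
    entirely inside an image of the given shape."""
    rows, cols = shape
    if max_size > min(rows, cols):
        raise ValueError("max_size exceeds the image size")
    rng = np.random.default_rng(seed)
    sizes = rng.integers(min_size, max_size + 1, size=n)
    # Upper bounds are exclusive, so r0 + size <= rows and c0 + size <= cols.
    r0 = rng.integers(0, rows - sizes + 1)
    c0 = rng.integers(0, cols - sizes + 1)
    return np.stack([r0, c0, sizes], axis=1)


def rmse(estimated, true):
    """Root-mean-square error between estimated and true blur angles."""
    e = np.asarray(estimated, dtype=float) - np.asarray(true, dtype=float)
    return float(np.sqrt(np.mean(e ** 2)))
```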

Figure 7 compares the cumulative frequency plots of the absolute errors of the blur angles detected by the proposed method and by the methods in [7, 8]; the errors of the proposed method are the smallest. Table 3 shows the performance measure derived from Jaccard's similarity index [46], i.e., the probabilistic similarity value with its standard deviation (a similarity value of 1 means a 100% match). The proposed method achieves \(0.5 \pm 0.3\) (i.e., a 50% match), far better than the values of \(0.05\pm 10.0\) (5% match) obtained by the two existing methods.
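Both evaluation measures are simple to compute. The sketch below assumes that the cumulative frequency is the fraction of samples whose absolute error does not exceed a threshold, and that the Jaccard index is taken between binary masks (for example, the pixels assigned to one object's blur angle versus that object's ground-truth region). How Table 3 aggregates the index into a mean and standard deviation is not specified, so that step is omitted; the function names are hypothetical.

```python
import numpy as np


def cumulative_error_frequency(abs_errors, thresholds):
    """Fraction of samples whose absolute error is <= each threshold."""
    e = np.sort(np.asarray(abs_errors, dtype=float))
    return np.searchsorted(e, thresholds, side="right") / e.size


def jaccard(mask_a, mask_b):
    """Jaccard similarity |A & B| / |A | B| between two binary masks
    (defined as 1.0 when both masks are empty)."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.count_nonzero(a | b)
    return 1.0 if union == 0 else np.count_nonzero(a & b) / union
```

For example, absolute errors of 0.5°, 1°, 2° and 4° give cumulative frequencies of 0, 0.5, 0.75 and 1.0 at thresholds of 0°, 1°, 3° and 5°.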

Figure 9 shows the blur angles detected locally by the proposed method and by the two state-of-the-art methods in images with two and three blurred objects. Figure 9c contains two blurred objects with blur angles of \(8^{\circ }\) and \(45^{\circ }\) and a blur length of 30 pixels. Figure 9d contains three blurred objects with blur angles of \(10^{\circ }, 40^{\circ }, 67^{\circ }\) and blur lengths of 25, 30 and 37 pixels. Figure 9e, f shows the blur angles estimated by the proposed method from the input images in Fig. 9c, d. The blur angle estimated by the proposed method is uniform throughout each object region and distinguishable from that of the other objects, whereas the blur angles estimated by the existing methods are less accurate and cannot be told apart between objects. The white lines superimposed on the objects in Fig. 9e mark the actual boundaries of the blurred objects; detections extending beyond these boundaries are due to the window size used for detection. We recommend window sizes in the range \(10 \times 10\) to \(20 \times 20\); larger windows degrade performance, increasing computation time and causing over-detection. Figure 8 shows the restoration of the two blurred objects of the image in Fig. 9c.

6 Conclusion and future scope

In this paper, a new method is proposed to estimate the parameters of multi-directional object motion blur. To identify the likely patterns of linear motion blur locally, we apply RT to the LoG of the directional derivative over a small window around each pixel. The likely blur directions are identified with the RT of the gradient of the blurred image. The correctness of the proposed method is validated by simulations on synthetic images, and the results are compared with state-of-the-art methods for blur parameter estimation. The results show that the proposed method detects local blur angles more accurately than the existing methods it was compared with. The method can be further extended to different nonuniform object motions.

References

[1] Gonzalez, R.C., Wintz, P.: Digital Image Processing. Addison-Wesley, New York (1987)

[2] Pratt, W.K.: Digital Image Processing. Wiley, New York (1991)

[3] Jain, A.K.: Fundamentals of Digital Image Processing. Prentice-Hall, Englewood Cliffs (1989)

[4] Lagendijk, R.L., Biemond, J.: Iterative Identification and Restoration of Images. Kluwer, Boston (1991)

[5] Oliveira, J.P., Figueiredo, M.A.T., Bioucas-Dias, J.M.: Blind estimation of motion blur parameters for image deconvolution. In: Iberian Conference on Pattern Recognition and Image Analysis (IbPRIA), pp. 604–611 (2007)

[6] Chan, T.F., Vese, L.A.: Active contours without edges. IEEE Trans. Image Process. 10(2), 266–277 (2001)

[7] Krahmer, F., Lin, Y., McAdoo, B., Ott, K., Wang, J., Widemann, D.: Blind image deconvolution: motion blur estimation. Technical report, Institute for Mathematics and its Applications, University of Minnesota, Minneapolis, Minnesota (2006)

[8] Oliveira, J.P., Figueiredo, M.A.T., Bioucas-Dias, J.M.: Parametric blur estimation for blind restoration of natural images: linear motion and out-of-focus. IEEE Trans. Image Process. 23(1), 466–477 (2014)

[9] Almeida, M.S.C., Almeida, L.B.: Blind and semi-blind deblurring of natural images. IEEE Trans. Image Process. 19(1), 36–52 (2010)

[10] Cai, J.F., Ji, H., Liu, C., Shen, Z.: Framelet based blind motion deblurring from a single image. IEEE Trans. Image Process. 21(2), 562–572 (2012)

[11] Cai, J.F., Osher, S., Shen, Z.: Linearized Bregman iterations for frame-based image deblurring. SIAM J. Imaging Sci. 2(1), 226–252 (2009)

[12] Cai, J.F., Osher, S., Shen, Z.: Split Bregman method and frame based image restoration. Multiscale Model. Simul. 8(2), 337–369 (2009)

[13] Cai, J.F., Ji, H., Liu, C., Shen, Z.: Blind motion deblurring from a single image using sparse approximation. In: CVPR (2009)

[14] Cho, T.S., Paris, S., Horn, B.K.P., Freeman, W.T.: Blur kernel estimation using the radon transform. In: CVPR, pp. 241–248 (2011)

[15] Ji, H., Liu, C.: Motion blur identification from image gradients. In: CVPR, pp. 1–8 (2008)

[16] Richardson, W.H.: Bayesian-based iterative method of image restoration. J. Opt. Soc. Am. 62(1), 55–59 (1972)

[17] Sun, H., Desvignes, M., Yan, Y., Liu, W.: Motion blur parameters identification from radon transform image gradients. In: Proceedings of the Conference on Industrial Electronics (2009)

[18] Tai, Y.W., Tan, P., Brown, M.S.: Richardson–Lucy deblurring for scenes under a projective motion path. IEEE TPAMI 33(8), 1603–1618 (2011)

[19] Sakano, M., Suetake, N., Uchino, E.: Robust identification of motion blur parameters by using angles of gradient vectors. In: Proceedings of ISPACS, pp. 522–525 (2006)

[20] Gupta, A., Joshi, N., Zitnick, C.L., Cohen, M.F., Curless, B.: Single image deblurring using motion density functions. In: ECCV, pp. 171–184 (2010)

[21] Tai, Y.W., Du, H., Brown, M., Lin, S.: Image/video deblurring using a hybrid camera. In: Proceedings of IEEE CVPR (2008)

[22] Goldstein, A., Fattal, R.: Blur-kernel estimation from spectral irregularities. In: Proceedings of ECCV, pp. 622–635 (2012)

[23] Oyamada, Y., Asai, H., Saito, H.: Blind deconvolution for a curved motion based on cepstral analysis. IPSJ Trans. Comput. Vis. Appl. (CVA) 3, 32–43 (2011)

[24] Kheradmand, A., Milanfar, P.: A general framework for regularized, similarity-based image restoration. IEEE Trans. Image Process. 23(12), 5136–5151 (2014)

[25] Ji, H., Wang, K.: A two-stage approach to blind spatially-varying motion deblurring. In: CVPR (2012)

[26] Levin, A.: Blind motion deblurring using image statistics. In: Proceedings of Advances in NIPS, pp. 841–848 (2006)

[27] Kim, T.H., Lee, K.M.: Segmentation-free dynamic scene deblurring. In: CVPR, pp. 2766–2773 (2014)

[28] Harmeling, S., Hirsch, M., Schölkopf, B.: Space-variant single-image blind deconvolution for removing camera shake. In: NIPS (2010)

[29] Kim, T.H., Nah, S., Lee, K.M.: Dynamic scene deblurring using a locally adaptive linear blur model. In: Computer Vision (2016)

[30] Almeida, M.S.C., Almeida, L.B.: Blind deblurring of foreground background images. In: International Conference on Image Processing (ICIP), pp. 1301–1304 (2009)

[31] Almeida, M.S.C., Figueiredo, M.A.: Parameter estimation for blind and nonblind deblurring using residual whiteness measures. IEEE Trans. Image Process. 22(7), 2751–2763 (2013)

[32] Pan, J., Hu, Z., Su, Z., Lee, H.-Y., Yang, M.-H.: Soft-segmentation guided object motion deblurring (2015)

[33] Mignotte, M.: An adaptive segmentation-based regularization term for image restoration. In: IEEE International Conference on Image Processing, ICIP, vol. 1, p. I9014 (2005)

[34] Zhang, X., Burger, M., Bresson, X., Osher, S.: Bregmanized nonlocal regularization for deconvolution and sparse reconstruction. SIAM J. Imaging Sci. 3(3), 253–276 (2010)

[35] Couzinie-Devy, F., Sun, J., Alahari, K., Ponce, J.: Learning to estimate and remove non-uniform image blur. In: CVPR (2013)

[36] Favaro, P., Soatto, S.: A variational approach to scene reconstruction and image segmentation from motion-blur cues. In: CVPR (2004)

[37] Kang, S., Choung, Y., Paik, J.: Segmentation-based image restoration for multiple moving objects with different motions. In: ICIP, pp. 376–380 (1999)

[38] Kang, S., Min, J., Paik, J.: Segmentation-based spatially adaptive motion blur removal and its application to surveillance systems. In: ICIP, pp. 245–248 (2001)

[39] McGuire, M., Hennessy, P., Bukowski, M., Osman, B.: A reconstruction filter for plausible motion blur (2012)

[40] Mumford, D., Shah, J.: Optimal approximation by piecewise smooth functions and associated variational problems. Commun. Pure Appl. Math. 42, 577–685 (1989)

[41] Osher, S., Sethian, J.A.: Fronts propagating with curvature-dependent speed: algorithms based on Hamilton–Jacobi formulations. J. Comput. Phys. 79(1), 12–49 (1988)

[42] Kass, M., Witkin, A., Terzopoulos, D.: Snakes: active contour models. Int. J. Comput. Vis. 1(4), 321–331 (1988)

[43] Xu, C., Prince, J.L.: Snakes, shapes, and gradient vector flow. IEEE Trans. Image Process. 7(3), 359–369 (1998)

[44] Bracewell, R.: Two-Dimensional Imaging. Prentice-Hall, Upper Saddle River (1995)

[45] Moghaddam, M., Jamzad, M.: Motion blur identification in noisy images using fuzzy sets. In: Proceedings of the 5th IEEE International Symposium on Signal Processing and Information Technology, pp. 862–866 (2005)

[46] McCormick, W.P., Lyons, N.I., Hutcheson, K.: Distributional properties of Jaccard's index of similarity. Commun. Stat. Theory Methods 21, 51–68 (1992)

Acknowledgements

The authors would like to thank the Defence Institute of Advanced Technology, Pune, and the Centre for Airborne Systems, Bangalore, for providing the infrastructure to carry out this research.

Cite this article

Kapuriya, B.R., Pradhan, D. & Sharma, R. Detection and restoration of multi-directional motion blurred objects. SIViP 13, 1001–1010 (2019). https://doi.org/10.1007/s11760-019-01438-z
