Abstract

Despite the widespread use of generalized additive models (GAMs) to remedy the “curse of dimensionality”, the existing literature offers no well-grounded methodology for simultaneous inference and variable selection in GAMs, although both are essential for enhancing the capability of statistical models. To this end, we establish simultaneous confidence corridors (SCCs) and a type of Bayesian information criterion (BIC) through the spline-backfitted kernel smoothing techniques proposed in recent articles. To characterize the global features of each nonparametric component, SCCs are constructed for testing its overall trend and entire shape. By extending the BIC for additive models with identity/trivial link, an asymptotically consistent BIC approach for variable selection is built for GAMs to improve the parsimony of the model without loss of prediction accuracy. Simulations and a real example corroborate the above findings.

1 Introduction

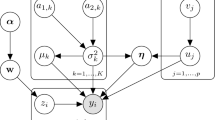

The generalized additive model (GAM) has gained popularity for addressing the curse of dimensionality in multivariate nonparametric regression with non-Gaussian responses. The GAM was developed by Hastie and Tibshirani (1990) to blend the generalized linear model with nonparametric additive regression; it stipulates that a data set \(\left\{ Y_{i},\mathbf {X} _{i}^{T}\right\} _{i=1}^{n}\) consists of iid copies of \(\left\{ Y,\mathbf {X} ^{T}\right\} \) that satisfies

where the response Y is one of certain types, such as Bernoulli, Poisson and so forth, the vector \(\mathbf {X}=\left( X_{1},X_{2},\ldots ,X_{d}\right) ^{T} \) consists of the predictors, \(m_{l}(\cdot ),1\le l\le d\) are unknown smooth functions, the white noise \(\varepsilon \) satisfies that \( \mathop {\mathsf{E}}\left( \varepsilon \vert \mathbf {X} \right) =0\) and \(\mathop {\mathsf{E}}\left( \varepsilon ^{2}\vert \mathbf {X} \right) =1\), while c is an unknown constant, \(a\left( \phi \right) \) is a nuisance parameter that quantifies overdispersion, and the known inverse link function \(b^{\prime }\) satisfies that \(b^{\prime }\in C^{2}\left( \mathbb {R}\right) ,b^{\prime \prime }\left( \theta \right) >0,\theta \in \mathbb {R}\), see Assumption (A2) in the Appendix. In particular, if one takes the identity/trivial link, model (1) becomes a common additive model, see Huang and Yang (2004).

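The joint density \(f\left( \mathbf {x}\right) \) of \(\left( X_{1},\ldots ,X_{d}\right) \) is assumed to be continuous and

\[0<c_{f}\le \inf _{\mathbf {x}\in \left[ 0,1\right] ^{d}}f\left( \mathbf {x}\right) \le \sup _{\mathbf {x}\in \left[ 0,1\right] ^{d}}f\left( \mathbf {x}\right) \le C_{f}<\infty ,\]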

see Assumption (A4) in the Appendix. Furthermore, for each \(1\le l\le d\), the marginal density function \(f_{l}\left( x_{l}\right) \) of \(X_{l}\) has continuous derivatives on \(\left[ 0,1\right] \) and the same uniform bounds \( C_{f}\) and \(c_{f}\). There exists a \(\sigma \)-finite measure \(\lambda \) on \( \mathbb {R}\) such that the distribution of \(Y_{i}\) conditional on \(\mathbf {X}_{i}\) has a probability density function \(f_{Y\vert \mathbf {X}}\left( y;b^{\prime }\left\{ m\left( \mathbf {x}\right) \right\} \right) \) relative to \(\lambda \) whose support for y is a common \(\varOmega \), and which is continuous in both \(y\in \varOmega \) and \(\mathbf {x}\in \left[ 0,1\right] ^{d}\).

It is often the case that in model (1) the probability density function of \(Y_{i}\) conditional on \(\mathbf {X}_{i}\) with respect to a fixed \( \sigma \)-finite measure forms an exponential family:

Nonetheless, such an assumption is not necessary in this paper. Instead, we only stipulate that the conditional variance and conditional mean are linked by

For identifiability, one needs

\[\mathop {\mathsf{E}}\left\{ m_{l}\left( X_{l}\right) \right\} =0,\quad 1\le l\le d,\]

which leads to a unique additive representation \(m\left( \mathbf {x}\right) =c+\sum \nolimits _{l=1}^{d}m_{l}\left( x_{l}\right) \). Without loss of generality, \(\mathbf {x}\) takes values in \(\chi =\left[ 0,1\right] ^{d}\).

Model (1) has numerous applications. In corporate credit rating, for instance, one is interested in modelling how the default or non-default of a given company depends on the additive effects of the covariates in its financial statements, i.e., the response \(Y=0,1\) with 1 indicating default and 0 indicating non-default, and the predictors are selected from financial statements with a \(\mathop {\mathrm{logit}}\nolimits \)-link \(\left( b^{\prime }\right) ^{-1}(x)=\log \left\{ x/\left( 1-x\right) \right\} \). Our method has been applied to 3472 companies in Japan within a 5-year default horizon (2005–2010), and it has been discovered that the current liabilities and the stock market returns at the current time and at 3 and 6 months prior to default are very significant as rating factors. The default impact of the selected factors is examined via the simultaneous confidence corridors (SCCs) in Fig. 1a–c. More details of this example are contained in Sect. 6.

Fig. 1 Plots of the rating factors in a–c: SBK estimators (thin), \( 95~\% \) CIs (dashed) and \(95~\%\) SCCs (thick). Plot of the CAPs defined as (24) in d: perfect (dashed), GAM (thick solid), GLM (thin solid), noninformative (dotted). a Current liability. b 3 months earlier return. c 6 months earlier return. d The CAP curves

The smooth functions \(\left\{ m_{l}(x_{l})\right\} _{l=1}^{d}\) in (1) can be estimated by, for instance, kernel methods in Linton and Härdle (1996), Linton (1997) and Yang et al. (2003), B-spline methods in Stone (1986) and Xue and Liang (2010), and two-stage methods in Horowitz and Mammen (2004). To make statistical inference on these functions individually and collectively, however, the proper tools are nonparametric simultaneous confidence corridors (SCCs) and consistent variable selection criteria, both of which are absent in the literature.

Nonparametric SCC methodology has become increasingly important in the statistical literature, see Xia (1998), Fan and Zhang (2000), Wu and Zhao (2007), Zhao and Wu (2008), Ma et al. (2012), Wang et al. (2014), Zheng et al. (2014), Gu et al. (2014), Cai and Yang (2015) and Gu and Yang (2015) for recent theoretical works on nonparametric SCCs. Capturing global shape properties of the functions \(\left\{ m_{l}(x_{l})\right\} _{l=1}^{d}\) in GAM (1) by SCCs is of prime importance. A nonparametric component can be replaced by a parametric one covered entirely within the SCCs, significantly decreasing the estimation variance, see He et al. (2002, 2005) for discussions. As far as we know, SCCs have not been established for the functions \( \left\{ m_{l}(x_{l})\right\} _{l=1}^{d}\) in GAM (1) due to the lack of estimators that fit into Gaussian process extreme value theory. Using the spline-backfitted kernel (SBK) smoothing of Liu et al. (2013), we extend the SCC works for univariate nonparametric regression in Bickel and Rosenblatt (1973) and Härdle (1989) to GAM. The SBK smoothing has been well developed in Wang and Yang (2007), Wang and Yang (2009), Liu and Yang (2010) and Ma and Yang (2011) for the much simpler additive model (i.e., GAM with \(b^{\prime }\left( x\right) \equiv x\)), including the construction of SCCs, but ours is the first work on SCCs for GAM with a nonlinear link.

While variable selection for the nonparametric additive model has been investigated under different settings, see Wang et al. (2008), there is a lack of theoretically reliable variable selection approaches for GAM. To the best of our knowledge, only Zhang and Lin (2006) proposed a sound method named “COSSO”, which stands for components (CO) LASSO using the penalized likelihood method, for selecting components in nonparametric regression with exponential families, but it leaves the asymptotic distributions and variable selection consistency to be desired. Instead, we tackle this issue by building a BIC-type criterion based on spline pre-smoothing (the first stage of the SBK), which is asymptotically consistent and easy to compute. Our work extends the BIC for additive models (trivial link) in Huang and Yang (2004). Such an extension is challenging since a much more complicated quasi-likelihood is used in GAM with a possibly nonlinear link instead of the log mean squared error for the trivial link, see the Appendix for details.

The rest of the paper is organized as follows. The SBK estimator and its oracle property are briefly described in Sect. 2. The asymptotic extreme value distribution of the SBK estimator is investigated in Sect. 3 and used to construct the SCCs of the component functions. Section 4 introduces a BIC in the GAM setting and provides results on consistent component selection as well as the implementation, followed by the Monte Carlo simulations in Sect. 5. Section 6 illustrates the application of our SCC and BIC methods to predicting the default of nearly 3500 listed companies in Japan. Technical assumptions and proofs are presented in the Appendix.

2 Spline-backfitted kernel smoothing in GAM

In this section, we briefly describe the spline-backfitted kernel (SBK) estimator for GAM (1) and its oracle properties obtained in Liu et al. (2013). Let \(\left\{ \mathbf {X}_{i},Y_{i}\right\} _{i=1}^{n}\) be i.i.d. observations following model (1). Without loss of generality, one denotes \(\mathbf {x}_{\_1}=\left( x_{2},\ldots ,x_{d}\right) \) and \(m_{\_1}\left( \mathbf {x}_{\_1}\right) =c+\sum _{l=2}^{d}m_{l}\left( x_{l}\right) \) and estimates \(m_{1}\left( x_{1}\right) \).

As a benchmark of efficiency, we introduce the “oracle smoother” by treating the constant c and the last \(d-1\) components \(\left\{ m_{l}\left( x_{l}\right) \right\} _{l=2}^{d}\) as known, then the only unknown component \(m_{1}\left( x_{1}\right) \) may be estimated by the following procedure. Although the exponential family Eq. (2) does not necessarily hold, one still defines, as in Severini and Staniswalis (1994), for each \(x_{1}\in \left[ h,1-h\right] \) a local log-likelihood function \(\tilde{l}\left( a\right) =\tilde{l}\left( a,x_{1}\right) \) as

where \(a\in A\), a set whose interior contains \(m_{1}\left( \left[ 0,1\right] \right) \). The oracle smoother of \(m_{1}\left( x_{1}\right) \) is

Although \(\widetilde{m}_{\mathop {\mathrm{K}},1}\left( x_{1}\right) \) is not a statistic since c and \(\left\{ m_{l}\left( x_{l}\right) \right\} _{l=2}^{d} \) are actually unknown, its asymptotic properties serve as a benchmark for estimators of \(m_{1}\left( x_{1}\right) \) to achieve.

To define the SBK, we introduce the linear B spline basis for smoothing: \( b_{J}\left( x\right) =\left( 1-\vert x-\xi _{J}\vert /H\right) _{+},\) \(0\le J\le N+1\) where \(0=\xi _{0}<\xi _{1}<\cdots <\xi _{N}<\xi _{N+1}=1\) are a sequence of equally spaced points, called interior knots, on interval \(\left[ 0,1\right] \). Denote by \(H=\left( N+1\right) ^{-1}\) the width of each subinterval \(\left[ \xi _{J},\xi _{J+1}\right] ,0\le J\le N\) and the degenerate knots by \(\xi _{-1}=0,\xi _{N+2}=1\). The space of l-empirically centered linear spline functions on [0, 1] is

with empirical expectation \(\mathop {\mathrm{E}}\nolimits _{n}\left\{ g_{l}\left( X_{l}\right) \right\} =n^{-1}\sum _{i=1}^{n}g_{l}\left( X_{li}\right) \). The space of additive spline functions on \(\chi =\left[ 0,1\right] ^{d}\) is

The SBK method is defined in two steps. One first pre-estimates the unknown functions \(\left\{ m_{l}\left( x_{l}\right) \right\} _{l=2}^{d}\) and the constant c by linear spline smoothing. We define the log-likelihood function \(\widehat{L}\left( g\right) \) as

According to Lemma 14 of Stone (1986), (5) has a unique maximizer with probability approaching 1. Therefore, the multivariate function \(m\left( \mathbf {x}\right) \) can be estimated by an additive spline function:

The spline estimator is asymptotically consistent, and can be computed efficiently via generalized linear models. However, as stated in Wang and Yang (2007) and Liu et al. (2013), spline methods only provide convergence rates but no asymptotic distributions, so no measures of confidence can be assigned to the estimators. To overcome this problem, we adopt the SBK estimator, which combines the strengths of kernel smoothing and regression splines. One then rewrites \(\widehat{m}\left( \mathbf {x} \right) =\hat{c}+\sum _{l=1}^{d}\widehat{m}_{l}\left( x_{l}\right) \) for \( \widehat{c}\in \mathbb {R}\) and \(\widehat{m}_{l}\left( x_{l}\right) \in G_{n,l}^{0}\) and defines a univariate quasi-likelihood function similar to \( \widetilde{l}\left( a,x_{1}\right) \) in (3) as

with \(\widehat{m}_{\_1}\left( \mathbf {x}_{\_1}\right) =\widehat{c} +\sum _{l=2}^{d}\widehat{m}_{l}\left( x_{l}\right) \) being the pilot spline estimator of \(m_{\_1}\left( \mathbf {x}_{\_1}\right) \). Consequently, the spline-backfitted kernel (SBK) estimator of \(m_{1}\left( x_{1}\right) \) is

We now introduce some useful results and definitions from Liu et al. (2013). Under Assumptions (A1)–(A7) in the Appendix, as \(n\rightarrow \infty \),

in which the higher order remainder \(r_{\mathop {\mathrm{K}},1}\left( x_{1}\right) \) satisfies

The scale function \(D_{1}\left( x_{1}\right) \) and bias function \(\mathop {\mathrm{ bias}}\nolimits _{1}\left( x_{1}\right) \) are defined in Liu et al. (2013) as:

where \(\left\| K\right\| _{2}^{2}=\int K^{2}\left( u\right) du\), \(\mu _{2}\left( K\right) =\int K\left( u\right) u^{2}du\). The above Eqs. (8), (9) and (10) lead one to a simplifying decomposition of the estimation error \(\widehat{m}_{\mathop {\mathrm{SBK}}\nolimits ,1}\left( x_{1}\right) -m_{1}\left( x_{1}\right) \)

The decomposition in (12) is fundamental for constructing SCCs in Sect. 3, and it follows from Theorems 1 and 4 of Liu et al. (2013), which were proved under weak dependence. A similar Theorem 2 in Horowitz and Mammen (2004) for the two-stage estimator was established only for a fixed \(x_{1}\), not uniformly for \(x_{1}\) in the growing interval \(\left[ h,1-h\right] \), and exclusively for iid data, not dependent data, see detailed discussion on page 621 of Liu et al. (2013).

3 GAM inference via simultaneous confidence corridor

In this section, we propose SCCs for GAM components based on the SBK smoothing, extending the works for univariate nonparametric function estimation in Bickel and Rosenblatt (1973) and Härdle (1989).

3.1 Main results

Denote \(a_{h}=\sqrt{-2\mathop {\mathrm{log}}h},C\left( K\right) =\left\| K^{\prime }\right\| _{2}^{2}\left\| K\right\| _{2}^{-2}\) and for any \(\alpha \in \left( 0,1\right) \), the quantile

Also with \(D_{1}\left( x_{1}\right) \) and \(v_{1}^{2}\left( x_{1}\right) \) given in (11), we define

Theorem 1

Under Assumptions (A1)–(A7), as \(n\rightarrow \infty \)

A \(100\left( 1-\alpha \right) \%\) simultaneous confidence corridor for \( m_{1}\left( x_{1}\right) \) is

The above SCC for the component function \(m_{1}\left( x_{1}\right) \) resembles the SCCs in Bickel and Rosenblatt (1973) and Härdle (1989) for estimating an unknown univariate nonparametric function, although it is constructed for multivariate nonparametric regression.

3.2 Implementation

To satisfy Assumption (A4), one could use the transformed \( U_{il}=F_{nl}\left( X_{il}\right) \) instead of \(X_{il}\) as predictors for each \(l=1,\ldots ,d\) and \(i=1,\ldots ,n,\) where \(F_{nl}\) is the empirical distribution of \((X_{1l},\ldots ,X_{nl})\). We still use symbol X instead of U to avoid involving new symbols, but the X variates have been transformed in simulation study and applications.
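The transformation \(U_{il}=F_{nl}\left( X_{il}\right) \) described above can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `ecdf_transform` is hypothetical, the data are assumed to be stored as an \(n\times d\) NumPy array, and the empirical distribution is taken in its plain form \(F_{nl}(x)=n^{-1}\#\{j:X_{jl}\le x\}\).

```python
import numpy as np

def ecdf_transform(X):
    """Map each column of X to U_il = F_nl(X_il), the column-wise empirical CDF.

    X is assumed to be an (n, d) array of raw predictors (hypothetical layout).
    """
    X = np.asarray(X, dtype=float)
    n, d = X.shape
    U = np.empty_like(X)
    for l in range(d):
        col_sorted = np.sort(X[:, l])
        # searchsorted(..., side="right") counts the observations <= each value
        U[:, l] = np.searchsorted(col_sorted, X[:, l], side="right") / n
    return U

# Small usage example: three observations, two predictors.
X_raw = np.array([[3.0, 10.0], [1.0, 30.0], [2.0, 20.0]])
U = ecdf_transform(X_raw)
```

With this plain definition the largest observation in each column is mapped to 1; a variant dividing by \(n+1\) would keep all values strictly inside \(\left( 0,1\right) \), which is a design choice rather than something prescribed by the text.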

To construct the SCC for \(m_{1}\left( x_{1}\right) \) in (15), one needs to select the bandwidth h and the number of knots N to evaluate \(m_{\mathop {\mathrm{SBK}}\nolimits ,1}\left( x_{1}\right) ,\) \(Q_{h}\left( \alpha \right) \) and \(\sigma _{n}\left( x_{1}\right) \) given in (7), (13) and (14).

Assumption (A6) requires that the bandwidth for SCCs be different from the mean square optimal bandwidth \(h_{\mathop {\mathrm{opt}}}\sim n^{-1/5}\) (minimizing AMISE) in Liu et al. (2013). This is because the two conflicting goals in SCC construction, coverage of the true curve and narrowness of the corridor, cannot be quantified by a single measure to minimize, such as the mean integrated squared error. We, therefore, take \(h=h_{ \mathop {\mathrm{opt}}}(\log n)^{-1/4}\) as a data-driven undersmoothing bandwidth for SCC construction to fulfill Assumption (A6), where \(h_{\mathop {\mathrm{opt}}}\) is computed as in Liu et al. (2013), pages 623–624. Recent articles on SCCs for time series, such as Wu and Zhao (2007) and Zhao and Wu (2008), have used similar undersmoothing bandwidths.
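As a rough numerical illustration of the undersmoothing rule \(h=h_{\mathop {\mathrm{opt}}}(\log n)^{-1/4}\), the sketch below computes h from a given \(h_{\mathop {\mathrm{opt}}}\) and then a critical value of extreme-value type. The function names are hypothetical, \(h_{\mathop {\mathrm{opt}}}\) is taken as an input rather than computed, and the quantile expression is the classical form used in kernel-based SCC work in the spirit of Bickel and Rosenblatt (1973) and Härdle (1989); it is an assumption of this sketch and not necessarily identical to (13).

```python
import math

def scc_bandwidth(h_opt, n):
    """Undersmoothing bandwidth h = h_opt * (log n)^(-1/4)."""
    return h_opt * math.log(n) ** (-0.25)

def scc_quantile(h, alpha, C_K):
    """Extreme-value type critical value (ASSUMED classical form):

        Q_h(alpha) = a_h + a_h^{-1} [ log{ sqrt(C_K) / (2 pi) }
                                      - log{ -log sqrt(1 - alpha) } ],

    with a_h = sqrt(-2 log h) and C_K = ||K'||_2^2 / ||K||_2^2.
    Requires 0 < h < 1 and 0 < alpha < 1.
    """
    a_h = math.sqrt(-2.0 * math.log(h))
    inner = math.log(math.sqrt(C_K) / (2.0 * math.pi)) \
        - math.log(-math.log(math.sqrt(1.0 - alpha)))
    return a_h + inner / a_h

# Usage: n = 1000, a hypothetical h_opt = 0.2, and C_K = 3,
# which is the value of ||K'||^2 / ||K||^2 for the quartic kernel.
h = scc_bandwidth(0.2, 1000)
q95 = scc_quantile(h, 0.05, 3.0)
q99 = scc_quantile(h, 0.01, 3.0)
```

Since \((\log n)^{-1/4}<1\) for \(n\ge 3\), the resulting h is always smaller than \(h_{\mathop {\mathrm{opt}}}\), which is the sense in which the bandwidth undersmooths.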

For a given l and a chosen bandwidth h, one can easily estimate \(m_{ \mathop {\mathrm{SBK}}\nolimits ,1}\left( x_{1}\right) \) and \(Q_{h}\left( \alpha \right) \) as in (7), (13). To evaluate \(\sigma _{n}\left( x_{1}\right) \), one needs to estimate \(v_{1}\left( x_{1}\right) \) and \( D_{1}^{-1}\left( x_{1}\right) \) given in (11), i.e., estimating \( f\left( x_{1}\right) ,\sigma _{b}^{2}\left( x_{1}\right) \) and \(\sigma ^{2}\left( x_{1}\right) \). The density function \(f\left( x_{1}\right) \) is estimated by \(\widehat{f}\left( x_{1}\right) =\) \(n^{-1}\sum _{i=1}^{n}K_{h_{ \mathop {\mathrm{ROT}}\nolimits }}\left( X_{i1}-x_{1}\right) \), where \(h_{\mathop {\mathrm{ROT}}\nolimits }\) is the rule-of-thumb bandwidth in equation (5.8), page 200 of Fan and Yao (2003), namely \(h_{\mathop {\mathrm{ROT}}\nolimits }=\left( 8\sqrt{\pi }/3\right) ^{1/5}\mu _{2}\left( K\right) \left\| K\right\| _{2}^{2/5}n^{-1/5}\hat{\sigma }\), in which \( \hat{\sigma }\) is the sample standard deviation of \(\left\{ X_{i1}\right\} _{i=1}^{n}\). We further illustrate the spline estimates of \(\sigma _{b}^{2}\left( x_{1}\right) \) and \(\sigma ^{2}\left( x_{1}\right) \) below:

One partitions \(\min _{i}X_{i1}=t_{1,0}<\cdots <t_{1,N+1}=\max _{i}X_{i1}\) where N is the number of spline interior knots, i.e.,

which satisfies Assumption (A7) in the Appendix. Then \(\sigma _{b}^{2}\left( x_{1}\right) \) can be estimated as \(\sum _{k=0}^{3}\widehat{a} _{1,k}x_{1}^{k}+\sum _{k=4}^{N+3}\widehat{a}_{1,k}\left( x_{1}-t_{1,k-3}\right) ^{3}_{+}\) where \(\left\{ \widehat{a}_{1,k}\right\} _{k=0}^{N+3}\) minimize

and \(\sigma ^{2}\left( x_{1}\right) \) can be estimated as \(\sum _{k=0}^{3} \widehat{a}_{1,k}x_{1}^{k}+\sum _{k=4}^{N+3}\widehat{a}_{1,k}\left( x_{1}-t_{1,k-3}\right) ^{3}_{+}\) where \(\left\{ \widehat{a}_{1,k}\right\} _{k=0}^{N+3}\) minimize

The resulting estimate \(\hat{\sigma }_{n}\left( x_{1}\right) \) of \(\sigma _{n}\left( x_{1}\right) \), using (17) and (18), satisfies \(\sup _{x_{1}\in \left[ h,1-h\right] }\big \vert \hat{\sigma }_{n}\left( x_{1}\right) -\sigma _{n}\left( x_{1}\right) \big \vert =\mathcal {O}_{p}\left( n^{-\gamma }\right) \) for some \(\gamma >0\), see Liu et al. (2013) Sect. 5 for details. This consistency and Slutsky’s theorem ensure that

as \(n\rightarrow \infty \), and therefore \(\widehat{m}_{\mathop {\mathrm{SBK}}\nolimits ,1}\left( x_{1}\right) \pm \hat{\sigma }_{n}\left( x_{1}\right) Q_{h}\left( \alpha \right) \) is a \(100\left( 1-\alpha \right) \%\) simultaneous confidence corridor for \(m_{1}\left( x_{1}\right) \). The SCCs for the other components \(m_{2}\left( x_{2}\right) ,\ldots ,m_{d}\left( x_{d}\right) \) are constructed similarly. It is worth emphasizing that, based on extensive simulation experiments, the estimators \(\widehat{m}_{\mathop {\mathrm{SBK}}\nolimits ,1}\left( x_{1}\right) ,\) \(\widehat{Q}_{h}\left( \alpha \right) ,\widehat{f}\left( x_{1}\right) \) and \(\hat{\sigma }_{n}\left( x_{1}\right) \) remain stable if h and N vary slightly.

4 Variable selection in GAM

In this section, we propose a Bayesian information criterion (BIC) for component function selection based on the spline smoothing in step one of the SBK estimation for GAM, followed by an efficient implementation.

4.1 Main results

According to Stone (1985), p. 693, the space of l-centered square integrable functions on \(\left[ 0,1\right] \) is defined as

and the model space \(\mathcal {M}\) is

To introduce the proposed BIC, let \(\left\{ 1,\ldots ,d\right\} \) denote the complete set of indices of d tuning variables \(\left( X_{1},\ldots ,X_{d}\right) \). For each subset \(S\subset \left\{ 1,\ldots ,d\right\} \), define a corresponding model space \(\mathcal {M}_{S}\) for S as

with \(\mathcal {H}_{l}^{0}\) given in (19), and the space of the additive spline functions as

with \(G_{n,l}^{0}\) given in (4). Following Definition 1 of Huang and Yang (2004), the set \(S_{0}\) of significant variables is defined as the minimal set \(S\subset \left\{ 1,\ldots ,d\right\} \) such that \(m\in \mathcal {M}_{S}\). According to Lemma 1 of Huang and Yang (2004), the set \(S_{0}\) is uniquely defined. Standard theory of Hilbert space and subspace projection implies that the set \(S_{0}\) is also the minimal set \(S\subset \left\{ 1,\ldots ,d\right\} \) such that \(\mathop {\mathrm{E}}\{m\left( \mathbf {X}\right) -m_{S}\left( \mathbf {X}\right) \}^{2}=0\) in which the least squares projection of function m in \(\mathcal {M}_{S}\) is

To identify \(S_{0}\), one computes for an index set S the BIC as

where \(\widehat{L}\) \(\left( \cdot \right) \) is given in (5), \(\widehat{m}_{S}\left( \mathbf {x}\right) \) \(\in G_{n,S}^{0}\) is the pilot spline estimator as in (6), \(N_{S}=1+\left( N+1\right) \#\left( S\right) \) with N the number of interior knots as defined in (16), \(\#\left( S\right) \) the cardinality of S.

Our variable selection rule takes the subset \(\widehat{S}\subset \left\{ 1,\ldots ,d\right\} \) that minimizes BIC\(_{S}\).

Theorem 2

Under Assumptions (A1)–(A5), (A7), \(\lim _{n\rightarrow \infty }P\left( \widehat{S}=S_{0}\right) =1\).

According to Theorem 2, the variable selection rule based on the BIC in (22) is consistent. The nonparametric version of BIC was first established in Huang and Yang (2004) for the additive autoregression model, and adapted to the additive coefficient model by Xue and Yang (2006) and to the single index model by Wang and Yang (2009). Our proposed BIC differs from all of the above as it is based on quasi-likelihood rather than mean squared error, which makes the technical proof of consistency much more challenging. To the best of our knowledge, it is the first theoretically reliable information criterion in this setting.

4.2 Implementation

We have not implemented the BIC variable selection by an exhaustive search through all possible subsets. Instead, a forward stepwise procedure with minimal BIC as the criterion is used, since it is more common that only a few variables are significant among many variables. We have also experimented with backward as well as forward–backward stepwise procedures, which have yielded similar outcomes in simulation examples.
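A forward stepwise search of this kind can be sketched as follows. This is a generic illustration rather than the implementation used for the reported results: the function name `forward_stepwise` is hypothetical, and the criterion is passed in as a user-supplied callable `bic` that returns the value of BIC\(_{S}\) for an index set S (including the empty set, i.e., the constant-only model).

```python
def forward_stepwise(d, bic):
    """Forward stepwise selection over indices 1..d.

    `bic` is a hypothetical callable mapping a frozenset S of indices to the
    criterion value BIC_S; the search stops when no single addition lowers it.
    """
    selected = frozenset()
    best = bic(selected)
    while len(selected) < d:
        # Try adding each variable that is not yet in the model.
        candidates = [selected | {l} for l in range(1, d + 1) if l not in selected]
        scores = [bic(S) for S in candidates]
        i = min(range(len(scores)), key=scores.__getitem__)
        if scores[i] >= best:
            break  # no improvement: keep the current subset
        selected, best = candidates[i], scores[i]
    return selected

# Toy criterion for illustration only: penalize missing true variables heavily
# and superfluous variables lightly.
true_set = frozenset({3, 4})
toy_bic = lambda S: 10 * len(true_set - S) + len(S - true_set)
chosen = forward_stepwise(6, toy_bic)
```

Each pass evaluates at most d candidate subsets, so the search costs at most of order \(d^{2}\) criterion evaluations, compared with \(2^{d}\) for a search over all subsets.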

5 Simulation

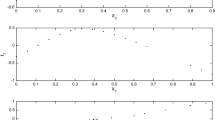

This section studies, in a simulated setting, the performance of the proposed procedures, including the computational cost of the SBK, the consistency of selecting variables via BIC and the coverage frequency of the SCCs. The data are generated from

with \(d=10,c=0,m_{3}\left( x\right) =\sin \left( 4\pi x\right) ,m_{4}\left( x\right) =m_{5}\left( x\right) =\sin \left( \pi x\right) ,\) \(m_{6}\left( x\right) =x,m_{7}\left( x\right) =e^{x}-(e-e^{-1})\) and \(m_{l}\left( x\right) =0\) for \(l=1,2,8,9,10\). The predictors are generated by

where \(\varPhi \) is the standard normal c.d.f. and \(\varSigma =\left( 1-r\right) \mathbf {I}_{d\times d}+r\mathbf {1}_{d}\mathbf {1}_{d}^{T}\). The parameter r (\(0\le r<1\)) controls the correlation between \(Z_{il},1\le l\le d\). To examine the computing advantage of BIC for large d, we have also included results for \(d=50\) with \(m_{3},\ldots ,m_{7}\) as above and all the other component functions equal to 0.
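A predictor design of this type can be simulated as in the sketch below. It assumes, as the description of \(\varPhi \) and \(\varSigma \) suggests, that \(X_{il}=\varPhi \left( Z_{il}\right) \) with \(\mathbf {Z}_{i}\) drawn from a d-variate normal distribution with mean zero and covariance \(\varSigma \); the function name `simulate_predictors` and the seed argument are hypothetical.

```python
import math
import numpy as np

# Standard normal c.d.f. applied elementwise, written via the error function.
_std_normal_cdf = np.vectorize(lambda z: 0.5 * (1.0 + math.erf(z / math.sqrt(2.0))))

def simulate_predictors(n, d, r, seed=None):
    """Draw an (n, d) predictor matrix with X_il = Phi(Z_il) (ASSUMED design).

    Z_i ~ N(0, Sigma) with Sigma = (1 - r) I + r 1 1^T, 0 <= r < 1, so every
    pair of coordinates of Z_i has correlation r and unit variance.
    """
    rng = np.random.default_rng(seed)
    Sigma = (1.0 - r) * np.eye(d) + r * np.ones((d, d))
    Z = rng.multivariate_normal(np.zeros(d), Sigma, size=n)
    return _std_normal_cdf(Z)

# Usage: n = 2000 observations of d = 10 predictors with r = 0.5.
X_sim = simulate_predictors(2000, 10, 0.5, seed=0)
```

Because each \(Z_{il}\) is standard normal, every simulated predictor is marginally uniform on \(\left[ 0,1\right] \), while r governs the dependence between predictors.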

COSSO is a penalized likelihood method proposed in Zhang and Lin (2006) for LASSO-type component selection and nonparametric regression in exponential families. In what follows, the performance of BIC and COSSO is first compared, followed by a computational comparison between the SBK and a kernel method in GAM, and finally a report on the SCC coverage frequency for the component functions (the frequency with which the SCCs cover the entire curve on the domain). We have tried numbers of knots different from the one in (16) with similar results, so our conclusion is that the performance of BIC is rather insensitive to the number of knots.

Table 1 shows the simulation results from 500 replications, where the outcome is defined in accuracy as correct fitting, if \(\widehat{S} =S_{0}\); overfitting, if \(S_{0}\subset \widehat{S}\); and underfitting, if \(S_{0}\nsubseteq \widehat{S}\). It is clear that the performance of BIC on selecting 5 significant variables \(m_{l}\left( X_{l}\right) ,l=3,\ldots ,7,\) is quite satisfactory. The selection accuracy becomes higher as the sample size increases and/or the correlation decreases; it is poorer with higher dimension d (\(=\!50\)) but still high when sample size \(n=1000\). The accuracy and computing time of COSSO are also listed for comparison (Platform: R; PC: Intel 3.1 GHz processor and 8 GB RAM). It is shown in Table 1 that the BIC significantly outperforms the COSSO in terms of accuracy and computing time, and the advantage in computing time widens significantly for \(d=50\).
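The three outcome categories used in Table 1 translate directly into set comparisons. The sketch below is a minimal illustration with a hypothetical function name; it follows the definitions above, with overfitting read as \(S_{0}\) being a proper subset of \(\widehat{S}\).

```python
def classify_selection(S_hat, S0):
    """Label a selected index set relative to the true set S0.

    "correct"  if S_hat == S0,
    "overfit"  if S0 is a proper subset of S_hat,
    "underfit" if S0 is not contained in S_hat.
    """
    S_hat, S0 = set(S_hat), set(S0)
    if S_hat == S0:
        return "correct"
    if S0 < S_hat:  # proper-subset comparison on Python sets
        return "overfit"
    return "underfit"

# Usage with the true set {3, ..., 7} of the simulation design.
S0 = {3, 4, 5, 6, 7}
labels = [
    classify_selection({3, 4, 5, 6, 7}, S0),
    classify_selection({1, 3, 4, 5, 6, 7}, S0),
    classify_selection({3, 4, 5, 6}, S0),
]
```

A selected set that misses a true variable counts as underfitting even if it also contains superfluous variables, which matches the definition \(S_{0}\nsubseteq \widehat{S}\).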

In addition to the above comparison for model selection, we have also conducted numerical comparison between COSSO and our proposed SBK estimation method in terms of probability prediction. The proposed SBK method has higher prediction accuracy in almost all cases, see Table 4 in the Supplement. Comparison regarding SCC has not been made against COSSO because it does not produce one.

The SCC coverage frequency for \(m_{l}\left( x_{l}\right) ,l=1,\ldots ,7\) is reported in Table 2. Among the zero functions, we have omitted the results for \(m_{8},m_{9}\) and \(m_{10}\) because they are very similar to those for \(m_{1}\) and \(m_{2}\). The empirical coverage approaches the nominal confidence levels as n increases, and better coverage occurs when the correlation is lower. The coverage frequencies vary slightly when d increases; the corresponding numerical results have not been included for brevity. We have also compared the coverage frequency of the SCC with that of the VOT (Volume of Tube) method in the same setup as simulation 1 in Wiesenfarth et al. (2012), which considered only the case of the trivial link function. The performance of our proposed SCC is quite similar to that of the VOT method of Wiesenfarth et al. (2012), see Table 3 in the Supplement.

The above studies indicate the reliability of our methodology, namely the high selection accuracy of the BIC and the desired coverage frequency of the SCCs. This supports their application to credit rating modelling in the following section.

6 Application

We now return to forecasting the default probabilities of the listed companies in Japan. The data, taken from the Risk Management Institute, National University of Singapore, include the comprehensive financial statements and the credit events (default or bankruptcy) from 2005 to 2010 of 3583 Japanese firms.

Berg (2007) found that the liability status was important to indicate the creditworthiness of a company, while Bernhardsen (2001) and Ryser and Denzler (2009) proposed to consider the “leverage effect” expressed by the financial statement ratios. Therefore, we have pooled two situations by considering \(X_{1}\): Current liability, \(X_{2}\): Current stock return, \(X_{3}\): Long-term borrow, \(X_{4}\): Short-term borrow, \(X_{5}\): Total asset, \(X_{6}\): Non-current liability, \(X_{7}\): 3 months earlier (stock) return, \(X_{8}\): 6 months earlier (stock) return, \(X_{9}\): Current ratio, \(X_{10}\): Net liability to shareholder equity, \(X_{11}\): Shareholder equity to total liability and equity, \(X_{12}\): TCE ratio, \(X_{13}\): Total debt to total asset, \(X_{14}\): Quick ratio.

Selecting the rating factors via the BIC given in (22), we have found that \(X_{1}\): Current liabilities, \(X_{7}\): 3 months earlier return, \(X_{8}\): 6 months earlier return are significant. Similar rating covariates were also discovered in Shina and Moore (2003), Berg (2007) and Ryser and Denzler (2009). However, Berg (2007) selected 23 variables which led to a non-parsimonious GAM. In contrast, Ryser and Denzler (2009) had found that 3 financial ratios (capital turnover, long-term debt ratio, return on total capital) were significant based on the blockwise cross-validation (CV) method which is nonetheless extremely time consuming in comparison to the proposed BIC.

Figure 1a–c depicts the SBK estimator of each factor’s default impact curve on its domain, while the \(95~\%\) CIs and the \(95~\%\) SCCs present, respectively, the pointwise and global uncertainty of the whole curve. The SBK estimators indicate the overall monotonicity of each rating factor, and the SCCs turn out to be narrow enough to warrant the global nonlinearity of the factors’ curves, which reveals the underlying nonlinear features in different segments of the domain.

As for the model evaluation, the cumulative accuracy profile (CAP) is plotted in Fig. 1d. For any score function S, one defines its alarm rate \(F\left( s\right) =P\left( S\le s\right) \) and the hit rate \(F_{\mathop {\mathrm{D}}}\left( s\right) =P\left( S\le s\vert \mathop {\mathrm{D}}\right) \) where \(\mathop {\mathrm{D}}\) represents the conditioning event of “default”. One then defines the \(\mathop {\mathrm{CAP}}\) curve as

\[\mathop {\mathrm{CAP}}\left( u\right) =F_{\mathop {\mathrm{D}}}\left\{ F^{-1}\left( u\right) \right\} ,\quad u\in \left( 0,1\right) , \tag{24}\]

which is the percentage of defaulting obligors that are found among the first (according to their scores) \(100u~\%\) of all obligors. A satisfactory model’s CAP would be expected to approach that of the perfect model (i.e., \(\mathop {\mathrm{CAP}}_{\mathop {\mathrm{P}}}\left( u\right) =\min \left( u/p,1\right) ,u\in \left( 0,1\right) \) where p is the unconditional default probability) and to be always better than the noninformative one. In contrast, a noninformative rating method with zero discriminatory power displays a diagonal line \(\mathop {\mathrm{CAP}}_{\mathop {\mathrm{N}}}\left( u\right) \equiv u,u\in \left( 0,1\right) \). See details of the CAP in Engelmann et al. (2003).

The accuracy ratio (AR) is the ratio of two areas \(a_{R}\) and \(a_{P}\). The area between the given CAP curve and the noninformative diagonal \(\mathop {\mathrm{CAP}}_{\mathop {\mathrm{N}} }\left( u\right) \equiv u\) is \(a_{R}\), whereas \(a_{P}\) is the area between the perfect CAP curve \(\mathop {\mathrm{CAP}}_{\mathop {\mathrm{P}}}\left( u\right) \) and the noninformative diagonal \(\mathop {\mathrm{CAP}}_{\mathop {\mathrm{N}}}\left( u\right) \). Thus,

\[\mathop {\mathrm{AR}}=\frac{a_{R}}{a_{P}}=\frac{2\int _{0}^{1}\mathop {\mathrm{CAP}}\left( u\right) du-1}{1-p},\]

where \(\mathop {\mathrm{CAP}}\left( u\right) \) is given in (24). The AR takes values in \(\left[ 0,1\right] \), with 0 corresponding to noninformative scoring and 1 to the perfect scoring method. A higher AR indicates an overall higher discriminatory power of a method.
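An empirical version of the CAP curve and the AR can be sketched as follows. The function names are hypothetical, and the sketch adopts the convention of the alarm rate \(F\left( s\right) =P\left( S\le s\right) \), so that obligors with the lowest scores are flagged first. The AR is computed from the area definitions above: since the area under the diagonal is 1/2 and the area under the perfect CAP is \(1-p/2\), one has \(a_{R}=\int _{0}^{1}\mathop {\mathrm{CAP}}\left( u\right) du-1/2\) and \(a_{P}=\left( 1-p\right) /2\).

```python
import numpy as np

def cap_curve(scores, defaults):
    """Empirical CAP: fraction of defaults among the 100u% lowest-scored obligors.

    `scores` are rating scores (low score = flagged first, ASSUMED convention);
    `defaults` are 0/1 indicators of default. Ties are ordered arbitrarily.
    """
    scores = np.asarray(scores, dtype=float)
    defaults = np.asarray(defaults, dtype=bool)
    order = np.argsort(scores, kind="stable")
    hits = np.cumsum(defaults[order])
    u = np.arange(len(scores) + 1) / len(scores)
    cap = np.concatenate(([0.0], hits / defaults.sum()))
    return u, cap

def accuracy_ratio(scores, defaults):
    """AR = a_R / a_P = (2 * integral of CAP - 1) / (1 - p)."""
    u, cap = cap_curve(scores, defaults)
    p = np.mean(np.asarray(defaults, dtype=bool))
    # Trapezoidal rule for the area under the empirical CAP curve.
    area = np.sum(np.diff(u) * (cap[1:] + cap[:-1]) / 2.0)
    return (2.0 * area - 1.0) / (1.0 - p)

# Usage: a scoring that ranks both defaulters ahead of all survivors.
ar_perfect = accuracy_ratio([0.1, 0.2, 0.3, 0.4, 0.5], [1, 1, 0, 0, 0])
```

If a fitted model outputs default probabilities, where a high value signals risk, the negated probabilities can be passed as scores to match the convention used here.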

Using both the GAM and the GLM fitted on the first 2000 companies to predict the default rate of the remaining 1583 companies, the accuracy ratio is \(97.56~\%\) for GAM, much higher than the \(89.76~\%\) for GLM. We have also applied the COSSO method to the same data, and the following error message appeared: “Error in solve.QP(GH$H, GH$H %*% old.theta - GH$G, t(Amat), bvec): matrix D in quadratic function is not positive definite!”, which once again illustrates the advantage of the proposed BIC procedure over the existing method.

References

Berg D (2007) Bankruptcy prediction by generalized additive models. Appl Stoch Models Bus Ind 23:129–143

Bernhardsen E (2001) A model of bankruptcy prediction. Norges Bank, WP

Bickel PJ, Rosenblatt M (1973) On some global measures of the deviations of density function estimates. Ann Stat 1:1071–1095

Cai L, Yang L (2015) A smooth simultaneous confidence band for conditional variance function. TEST 24:632–655

Engelmann B, Hayden E, Tasche D (2003) Testing rating accuracy. Risk 16:82–86

Fan J, Yao Q (2003) Nonlinear Time Series: Nonparametric and Parametric Methods. Springer-Verlag, Berlin

Fan J, Zhang WY (2000) Simultaneous confidence bands and hypothesis testing in varying-coefficient models. Scand J Stat 27:715–731

Gu L, Wang L, Härdle W, Yang L (2014) A simultaneous confidence corridor for varying coefficient regression with sparse functional data. TEST 23:806–843

Gu L, Yang L (2015) Oracally efficient estimation for single-index link function with simultaneous confidence band. Electr J Stat 9:1540–1561

Härdle W (1989) Asymptotic maximal deviation of M-smoothers. J Multivariate Anal 29:163–179

Hastie TJ, Tibshirani RJ (1990) Generalized additive models. Chapman and Hall, London

He X, Fung W, Zhu Z (2005) Robust estimation in generalized partial linear models for clustered data. J Am Stat Assoc 100:1176–1184

He X, Zhu Z, Fung W (2002) Estimation in a semiparametric model for longitudinal data with unspecified dependence structure. Biometrika 89:579–590

Horowitz J, Mammen E (2004) Nonparametric estimation of an additive model with a link function. Ann Stat 32:2412–2443

Huang JZ, Yang L (2004) Identification of nonlinear additive autoregression models. J R Stat Soc Ser B Stat Methodol 66:463–477

Linton OB (1997) Efficient estimation of additive nonparametric regression models. Biometrika 84:469–473

Linton OB, Härdle W (1996) Estimation of additive regression models with known links. Biometrika 83:529–540

Liu R, Yang L (2010) Spline-backfitted kernel smoothing of additive coefficient model. Econom Theory 26:29–59

Liu R, Yang L, Härdle W (2013) Oracally efficient two-step estimation of generalized additive model. J Am Stat Assoc 108:619–631

Ma S, Yang L (2011) Spline-backfitted kernel smoothing of partially linear additive model. J Stat Plan Inference 141:204–219

Ma S, Yang L, Carroll RJ (2012) Simultaneous confidence band for sparse longitudinal regression. Stat Sin 22:95–122

Ryser M, Denzler S (2009) Selecting credit rating models: a cross-validation-based comparison of discriminatory power. Financ Mark Portf Manag 23:187–203

Severini T, Staniswalis J (1994) Quasi-likelihood estimation in semiparametric models. J Am Stat Assoc 89:501–511

Shina Y, Moore W (2003) Explaining credit rating differences between Japanese and U.S. agencies. Rev Finan Econ 12:327–344

Stone CJ (1985) Additive regression and other nonparametric models. Ann Stat 13:689–705

Stone CJ (1986) The dimensionality reduction principle for generalized additive models. Ann Stat 14:590–606

Tusnady G (1977) A remark on the approximation of the sample distribution function in the multidimensional case. Period Math Hungar 8:53–55

Wang J, Liu R, Cheng F, Yang L (2014) Oracally efficient estimation of autoregressive error distribution with simultaneous confidence band. Ann Stat 42:654–668

Wang L, Yang L (2007) Spline-backfitted kernel smoothing of nonlinear additive autoregression model. Ann Stat 35:2474–2503

Wang L, Yang L (2009) Spline estimation of single index model. Stat Sin 19:765–783

Wang L, Li H, Huang J (2008) Variable selection in nonparametric varying-coefficient models for analysis of repeated measurements. J Am Stat Assoc 103:1556–1569

Wiesenfarth M, Krivobokova T, Klasen S, Sperlich S (2012) Direct simultaneous inference in additive models and its application to model undernutrition. J Am Stat Assoc 107:1286–1296

Wu W, Zhao Z (2007) Inference of trends in time series. J R Stat Soc Ser B Stat Methodol 69:391–410

Xia Y (1998) Bias-corrected confidence bands in nonparametric regression. J R Stat Soc Ser B Stat Methodol 60:797–811

Xue L, Yang L (2006) Additive coefficient modeling via polynomial spline. Stat Sin 16:1423–1446

Yang L, Sperlich S, Härdle W (2003) Derivative estimation and testing in generalized additive models. J Stat Plan Inference 115:521–542

Zhang H, Lin Y (2006) Component selection and smoothing for nonparametric regression in exponential families. Stat Sin 16:1021–1042

Zhao Z, Wu W (2008) Confidence bands in nonparametric time series regression. Ann Stat 36:1854–1878

Zheng S, Yang L, Härdle W (2014) A smooth simultaneous confidence corridor for the mean of sparse functional data. J Am Stat Assoc 109:661–673

Acknowledgments

This work is supported in part by the Jiangsu Specially Appointed Professor Program SR10700111, the Jiangsu Key Discipline Program (Statistics) ZY107002, ZY107992, National Natural Science Foundation of China award 11371272, Research Fund for the Doctoral Program of Higher Education of China award 20133201110002, United States NSF awards DMS 0706518, DMS 1007594, a Michigan State University Dissertation Continuation Fellowship, funding from the National University of Singapore, the Deutsche Forschungsgemeinschaft (DFG) via SFB 649 “Economic Risk”, and the International Research Training Group (IRTG) 1792. The helpful comments from two Reviewers and an Associate Editor are gratefully acknowledged.

Appendix

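In what follows, we take \(\left\| \cdot \right\| \) and \(\left\| \cdot \right\| _{\infty }\) as the Euclidean and supremum norms, respectively, i.e., for any \(\mathbf {x}=\left( x_{1},x_{2},\ldots ,x_{d}\right) ^{T}\in \mathbb {R}^{d}\), \(\left\| \mathbf {x} \right\| =\left( \sum _{l=1}^{d}x_{l}^{2}\right) ^{1/2}\) and \(\left\| \mathbf {x}\right\| _{\infty }=\max \limits _{1\le l\le d}\big \vert x_{l}\big \vert \). For any interval \(\left[ a,b\right] \), denote the space of pth order smooth functions by \(C^{\left( p\right) }[a,b]=\left\{ g\big \vert g^{\left( p\right) }\in C\left[ a,b\right] \right\} \), and the class of Lipschitz continuous functions by

$$\begin{aligned} \mathop {\mathrm{Lip}}\left( \left[ a,b\right] ,C\right) =\left\{ g\big \vert \left| g\left( x\right) -g\left( x^{\prime }\right) \right| \le C\left| x-x^{\prime }\right| ,\forall x,x^{\prime }\in \left[ a,b\right] \right\} \end{aligned}$$

for constant \(C>0\). Lastly, define the following latent regression errors

$$\begin{aligned} \xi _{i}=Y_{i}-b^{\prime }\left\{ m\left( \mathbf {X}_{i}\right) \right\} ,\quad 1\le i\le n. \qquad (26) \end{aligned}$$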

1.1 Technical assumptions

We need the following technical assumptions:

(A1) The additive component functions \(m_{l}\in C^{\left( 1\right) }\left[ 0,1\right] ,1\le l\le d\): \(m_{1}\in C^{\left( 2\right) }\left[ 0,1\right] \), \(m_{l}^{\prime }\in \mathop {\mathrm{Lip}}\left( \left[ 0,1\right] ,C_{m}\right) ,2\le l\le d\) for some constant \(C_{m}>0\).

(A2) The inverse link function \(b^{\prime }\) satisfies that \(b^{\prime }\in C^{2}\left( \mathbb {R}\right) ,b^{\prime \prime }\left( \theta \right) >0,\theta \in \mathbb {R}\). For a compact interval \(\Theta \) whose interior contains \(m\left( \left[ 0,1\right] ^{d}\right) \), \(C_{b}>\max _{\theta \in \Theta }b^{\prime \prime }\left( \theta \right) \ge \min _{\theta \in \Theta }b^{\prime \prime }\left( \theta \right) >c_{b}\) for constants \(0<c_{b}<C_{b}<\infty \).

(A3) The conditional variance function \(\sigma ^{2}\left( \mathbf {x}\right) \) is continuous and positive for \(\mathbf {x}\in \left[ 0,1\right] ^{d}\). The errors \(\left\{ \varepsilon _{i}\right\} _{i=1}^{n}\) satisfy that \(\mathop {\mathrm{E}}\left( \varepsilon _{i}\vert \mathbf {X}_{i} \right) =0\), \(\mathop {\mathrm{E}}\left( \vert \varepsilon _{i}\vert ^{2+\eta }\right) \le C_{\eta }\) for some \(\eta \in \left( 1/2,1\right] \).

(A4) The joint density \(f\left( \mathbf {x}\right) \) of \(\left( X_{1},\ldots ,X_{d}\right) \) is continuous and

$$\begin{aligned} 0<c_{f}\le \inf \nolimits _{\mathbf {x}\in \left[ 0,1\right] ^{d}}f\left( \mathbf {x}\right) \le \sup \nolimits _{\mathbf {x}\in \left[ 0,1\right] ^{d}}f\left( \mathbf {x}\right) \le C_{f}<\infty . \end{aligned}$$

For each \(1\le l\le d\), the marginal density function \(f_{l}\left( x_{l}\right) \) of \(X_{l}\) has continuous derivatives on \(\left[ 0,1\right] \) and the same uniform bounds \(C_{f}\) and \(c_{f}\). There exists a \(\sigma \)-finite measure \(\lambda \) on \(\mathbb {R}\) such that the distribution of \(Y_{i}\) conditional on \(\mathbf {X}_{i}\) has a probability density function \(f_{Y\vert \mathbf {X}}\left( y;b^{\prime }\left\{ m\left( \mathbf {x}\right) \right\} \right) \) relative to \(\lambda \) whose support for y is a common \(\varOmega \), and is continuous in both \(y\in \varOmega \) and \(\mathbf {x}\in \left[ 0,1\right] ^{d}\).

(A5) \(\left\{ \mathbf {Z}_{i}=\left( \mathbf {X}_{i}^{T},\varepsilon _{i}\right) \right\} _{i=1}^{n}\) are independent and identically distributed.

(A6) The kernel function \(K\left( x\right) \) is a symmetric probability density function supported on \([-1,1]\) and \(K\in C^{1}[-1,1]\). The bandwidth \(h=h_{n}\) satisfies that \(h=o\left( n^{-1/5}(\log n)^{-1/5}\right) \), \(h^{-1}=\mathcal {O}\left( n^{1/5}\left( \log n\right) ^{\delta }\right) \) for some constant \(\delta >1/5\).

(A7) The number of interior knots N satisfies \(c_{N}n^{1/4}\log n\le N\le C_{N}n^{1/4}\log n\) for some constants \(c_{N},C_{N}>0\).

Assumptions (A1)–(A7) are standard in the GAM literature; see Stone (1986) and Xue and Yang (2006). The i.i.d. assumption is technically acceptable if the data are collected across a large number of sections, as in our real example in Sect. 6. Assumptions (A5) and (A6) are more restrictive than those in Liu et al. (2013) for the purpose of constructing simultaneous confidence corridors, but are unnecessary for Theorem 2 on the consistency of BIC. All these assumptions are satisfied by the simulation example in Sect. 5.
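To make the rate conditions in (A6) and (A7) concrete, the Python sketch below computes one admissible pair of knot number and bandwidth for a given sample size. The constants `c_knots = 1` and `delta = 1/4` are assumptions chosen purely for illustration, and the function name is hypothetical. The choice \(h=n^{-1/5}\left( \log n\right) ^{-\delta }\) with \(\delta >1/5\) is only one bandwidth sequence that satisfies both requirements in (A6); for it, \(-2\log h=\left( 2/5\right) \log n+2\delta \log \log n\), so \(\left( -2\log h\right) ^{1/2}\) is of the same order as \(\left( \log n\right) ^{1/2}\).

```python
import math


def knots_and_bandwidth(n, c_knots=1.0, delta=0.25):
    """Illustrative N and h obeying the rates in (A6)-(A7); constants are assumptions."""
    if n < 3:
        raise ValueError("n must be at least 3 so that log n > 1")
    if delta <= 0.2:
        raise ValueError("(A6) requires delta > 1/5")
    log_n = math.log(n)
    # (A7): N is of exact order n^{1/4} log n.
    num_knots = max(1, math.floor(c_knots * n ** 0.25 * log_n))
    # (A6): h = n^{-1/5} (log n)^{-delta} is o(n^{-1/5} (log n)^{-1/5}) when delta > 1/5.
    bandwidth = n ** (-0.2) * log_n ** (-delta)
    return num_knots, bandwidth
```

With \(n=2000\), the size of the training sample in Sect. 6, these illustrative constants give 50 interior knots and a bandwidth of roughly 0.13. Any practical implementation would tune the constants rather than fix them at these values.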

1.2 Preliminaries

Throughout this section, C denotes some generic positive constant unless stated otherwise. Define

where \(W\left( x\right) \) is a Wiener process defined on \(\left( 0,\infty \right) \) and denote

with \(C\left( K\right) \) given in (13).

Lemma 1

Under Assumption (A6), for any \(x\in \mathbb {R}\)

Proof

One simply applies the same steps as in the proof of Lemma 2.2 of Härdle (1989). \(\square \)

Denote by \(T_{i}\) the random variable \(b^{\prime }\left\{ m\left( \mathbf {X} _{i}\right) \right\} \), and the Lebesgue measure on \(\mathbb {R}^{d}\) as \(\mu ^{\left( d\right) }\). By Assumption (A4), \(\mathbf {X}_{i}\) has pdf w.r.t. the Lebesgue measure \(\mu ^{\left( d\right) }\), and Assumptions (A1) and (A2) ensure that functions \(b^{\prime }\) and m are at least \(C^{1}\), thus the random vector \(\left( T_{i},X_{i1}\right) \) has a joint pdf w.r.t. the Lebesgue measure \(\mu ^{\left( 2\right) }\), which one denotes as \(f_{T,X_{1}}\left( t,x_{1}\right) \).

Lemma 2

Under Assumptions (A1)–(A5), for \(\xi _{i}\) in (26), the distribution of \(\left( \xi _{i},X_{i1}\right) \) has joint pdf w.r.t. \(\mu ^{\left( 2\right) }\) as

Proof

The joint pdf of \(\left( Y_{i},T_{i},X_{i1}\right) \) w.r.t. \(\lambda \times \mu ^{\left( 2\right) }\) is \(f_{Y\vert \mathbf {X}}\left( y;t\right) f_{T,X_{1}}\left( t,x_{1}\right) \). For any \(\left( z,x_{1}\right) \in \mathbb {R}\times \left[ 0,1\right] \), and \(\triangle z,\triangle x_{1}>0\), one has

Applying the dominated convergence theorem, one has as \(\max \left( \triangle z,\triangle x_{1}\right) \rightarrow 0,\)

hence the joint pdf of \(\left( \xi _{i},X_{i1}\right) \) w.r.t. \(\mu ^{\left( 2\right) }\) is \(\int _{\varOmega }f_{Y\vert \mathbf {X}}\left( y;y-z\right) f_{T,X_{1}}\left( y-z,x_{1}\right) d\lambda \left( y\right) \). \(\square \)

For theoretical analysis, we write \(c_{J,l}=\mathop {\mathrm{E}}b_{J}\left( X_{l}\right) =\int b_{J}\left( x_{l}\right) f_{l}\left( x_{l}\right) dx_{l}\) and define the centered B-spline basis \(b_{J,l}\left( x_{l}\right) \) and the standardized B-spline basis \(B_{J,l}\left( x_{l}\right) \) respectively as

so that \(\mathop {\mathrm{E}}B_{J,l}\left( X_{l}\right) \equiv 0\), \(\mathop {\mathrm{E}} B_{J,l}^{2}\left( X_{l}\right) \equiv 1\).

With slight abuse of notation, the log-likelihood \(\widehat{L}\left( g\right) \) in (5) is

with \(g\left( \mathbf {X}_{i}\right) =\mathbf {\lambda }^{T}\mathbf {B} \left( \mathbf {X}_{i}\right) \in G_{n}^{0}\), \(\mathbf {\lambda }=\left( \lambda _{0},\lambda _{J,l}\right) _{1\le J\le N+1,1\le l\le d}^{T}\in \mathbb {R}^{N_{d}}\) with \(N_{d}=\left( N+1\right) d+1\), \(\mathbf {B}\left( \mathbf {x}\right) =\left\{ 1,B_{1,1}\left( x_{1}\right) ,\ldots ,B_{N+1,d}\left( x_{d}\right) \right\} ^{T}\) and \(B_{J,l}\left( x_{l}\right) \) as given in (28). It is straightforward to verify that the gradient and Hessian of \(\widehat{L}\left( \mathbf {\lambda }\right) \) are

Proposition 1

Under Assumptions (A1)–(A5) and (A7), for \(m\in M\) with M given in (20) and \(\widehat{m}\) as in (6), as \(n\rightarrow \infty \), \(\left\| m-\widehat{m}\right\| _{2,n}+\left\| m-\widehat{m}\right\| _{2}=\mathcal {O}_{a.s.}\left( N^{1/2}n^{-1/2}\log n\right) \) and \(\left\| m-\widehat{m}\right\| _{\infty }=\mathcal {O}_{a.s.}\left( Nn^{-1/2}\log n\right) \). With probability approaching 1, the Hessian matrix \(\nabla ^{2}\hat{L}\left( \mathbf {\lambda }\right) \) satisfies that \(\nabla ^{2}\hat{L}\left( \mathbf {\lambda }\right) <\mathbf {0},\forall \mathbf {\lambda }\) and \(\nabla ^{2}\hat{L}\left( \mathbf {\lambda }\right) \le -c_{b}c_{V}\mathbf {I}\) if \(\mathbf {\lambda }^{T}\mathbf {B}\left( \mathbf {X}_{i}\right) \in \Theta ,1\le i\le n \).

Proof

See Lemma A.13 of Liu et al. (2013), Assumption (A2), Eq. (29) and Lemma A.11 of Liu et al. (2013). \(\square \)

1.3 Proof of Theorem 1

Define a stochastic process \(\widehat{\varepsilon }_{n}\left( x_{1}\right) =n^{-1}\sum \nolimits _{i=1}^{n}K_{h}\left( X_{i1}-x_{1}\right) \xi _{i},x_{1}\in \left[ 0,1\right] \) with \(\xi _{i}\) given in (26), then (9) and (10) show that

which, together with (8), lead to

Using \(v_{1}\left( x_{1}\right) \) given in (11), one can standardize \(\widehat{\varepsilon }_{n}\left( x_{1}\right) \) to obtain

Assumptions (A5), (A8) imply that the following Rosenblatt transformation to the two-dimensional sequence \(\left\{ X_{i1},\xi _{i}\right\} _{i=1}^{n}\) produces \(\left\{ X_{i1}^{\prime },\xi _{i}^{\prime }\right\} _{i=1}^{n}\) with \(\left( X_{i1}^{\prime },\xi _{i}^{\prime }\right) \) uniformly distributed on \(\left[ 0,1\right] ^{2}\):

Denote \(Z_{n}\left( x_{1},\xi \right) =\sqrt{n}\left\{ F_{n}\left( x_{1},\xi \right) -F\left( x_{1},\xi \right) \right\} \), where \(F_{n}\left( x_{1},\xi \right) \) is the empirical distribution of \(\left\{ X_{i1},\xi _{i}\right\} _{i=1}^{n}\); then one can rewrite \(\widehat{\zeta }_{n}\left( x_{1}\right) \) as

By the strong approximation theorem in Tusnady (1977), there exists a version of the two-dimensional Brownian Bridge \(B_{n}\left( x_{1}^{\prime },\xi ^{\prime }\right) \) such that

Applying standard techniques used in Bickel and Rosenblatt (1973), Härdle (1989), one can show that

for a version of the \(M_{h}\left( t\right) \) given in (27). A similar result can be found in Xia (1998). Furthermore, (30) and (31) imply that

with \(\sigma _{n}\left( x\right) \) given in (14). Under Assumption (A6), which entails that \(\left( -2\log h\right) ^{1/2}\) is of the same order as \(\left( \log n\right) ^{1/2}\), (32) and (33) show that

Finally, Theorem 1 follows from Lemma 1 and Slutsky’s Theorem.
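The process \(\widehat{\varepsilon }_{n}\left( x_{1}\right) =n^{-1}\sum \nolimits _{i=1}^{n}K_{h}\left( X_{i1}-x_{1}\right) \xi _{i}\) that drives the proof is a kernel-weighted average of the latent errors and is simple to evaluate on a grid. The Python sketch below is a minimal illustration under two assumptions: the rescaled kernel is taken as \(K_{h}\left( u\right) =K\left( u/h\right) /h\), and the quartic kernel is used as one example of a symmetric density supported on \([-1,1]\), as (A6) requires. The function names are hypothetical, and in practice the \(\xi _{i}\) are unobservable, so they would have to be simulated or replaced by residuals.

```python
import numpy as np


def quartic_kernel(u):
    """Quartic kernel: a symmetric probability density supported on [-1, 1]."""
    u = np.asarray(u, dtype=float)
    return np.where(np.abs(u) <= 1.0, 15.0 / 16.0 * (1.0 - u ** 2) ** 2, 0.0)


def eps_hat(x_grid, x_obs, xi, h, kernel=quartic_kernel):
    """Evaluate n^{-1} sum_i K_h(X_i1 - x_1) xi_i at every point of x_grid."""
    x_grid = np.atleast_1d(np.asarray(x_grid, dtype=float))
    x_obs = np.asarray(x_obs, dtype=float)
    xi = np.asarray(xi, dtype=float)
    # Rows index grid points, columns index observations.
    diffs = x_obs[None, :] - x_grid[:, None]
    weights = kernel(diffs / h) / h  # K_h(u) = K(u / h) / h (assumed convention)
    return weights @ xi / len(xi)
```

Because the kernel has compact support, only observations with \(\left| X_{i1}-x_{1}\right| \le h\) contribute at a given \(x_{1}\), which is why the bandwidth conditions in (A6) control the variability of the process.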

1.4 Proof of Theorem 2

See the Supplement.

Cite this article

Zheng, S., Liu, R., Yang, L. et al. Statistical inference for generalized additive models: simultaneous confidence corridors and variable selection. TEST 25, 607–626 (2016). https://doi.org/10.1007/s11749-016-0480-8
