Abstract

In this article, we propose two classes of semiparametric mixture regression models with single-index for model based clustering. Unlike many semiparametric/nonparametric mixture regression models that can only be applied to low dimensional predictors, the new semiparametric models can easily incorporate high dimensional predictors into the nonparametric components. The proposed models are very general, and many of the recently proposed semiparametric/nonparametric mixture regression models are indeed special cases of the new models. Backfitting estimates and the corresponding modified EM algorithms are proposed to achieve optimal convergence rates for both parametric and nonparametric parts. We establish the identifiability results of the proposed two models and investigate the asymptotic properties of the proposed estimation procedures. Simulation studies are conducted to demonstrate the finite sample performance of the proposed models. Two real data applications using the new models reveal some interesting findings.

1 Introduction

Mixtures of regression models are commonly used as “model based clustering” methods to reveal the relationship among variables of interest when the population consists of several homogeneous subgroups. This type of application is common in econometrics, where such models are also known as switching regression models (Wedel and DeSarbo 1993; Frühwirth-Schnatter 2001), and in various other fields such as epidemiology (Green and Richardson 2002). Another wide application of finite mixtures of regressions is in outlier detection and robust regression estimation (Young and Hunter 2010). Traditional mixtures of linear regression models require strong parametric assumptions: linear component regression functions, constant component variances, and constant component proportions. In machine learning, the fully parametric hierarchical mixtures of experts model (Jordan and Jacobs 1994) has been proposed to allow the component proportions to depend on the covariates. Recently, many semiparametric and nonparametric mixture regression models have been proposed to relax the parametric assumptions of mixtures of regression models. See, for example, Young and Hunter (2010), Huang and Yao (2012), Cao and Yao (2012), Huang et al. (2013, 2014), Hu et al. (2017), Xiang and Yao (2018), among others. Xiang et al. (2019) provided a good review of many semiparametric regression models. However, most of the existing semiparametric or nonparametric mixture regressions can only be applied to low dimensional predictors due to the “curse of dimensionality”. It is therefore desirable to relax the parametric assumptions of traditional mixtures of regression models when the dimension of the predictors is high.

In this article, we propose a mixture of single-index models (MSIM) and a mixture of regression models with varying single-index proportions (MRSIP) to reduce the dimension of high dimensional predictors before modeling them nonparametrically. Many existing popular models can be considered as special cases of the proposed two models. Huang et al. (2013) proposed the nonparametric mixture of regression models

$$\begin{aligned} Y|x\sim \sum _{j=1}^k\pi _j(x)\phi (Y|m_j(x),\sigma _j^2(x)), \end{aligned}$$

where \(\pi _j(x), m_j(x),\) and \(\sigma _j^2(x)\) are unknown smoothing functions, and \(\phi (y|\mu ,\sigma ^2)\) is the normal density with mean \(\mu \) and variance \(\sigma ^2\). Their proposed model can drastically reduce the modeling bias when the strong parametric assumption of traditional mixture of linear regression models does not hold. However, the above model is not applicable to high dimensional predictors due to the kernel estimation used for nonparametric parts. To solve the above problem, we propose a mixture of single-index models

$$\begin{aligned} Y|{{\varvec{x}}}\sim \sum _{j=1}^k\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\phi (Y|m_j({\varvec{\alpha }}^\top {{\varvec{x}}}),\sigma _j^2({\varvec{\alpha }}^\top {{\varvec{x}}})), \end{aligned}$$(1)

in which the single index \({\varvec{\alpha }}^\top {{\varvec{x}}}\) transfers the high dimensional nonparametric problem to a univariate nonparametric problem. When \(k=1\), model (1) reduces to a single index model (Ichimura 1993; Härdle et al. 1993). If \({{\varvec{x}}}\) is a scalar, then model (1) reduces to the nonparametric mixture of regression models proposed by Huang et al. (2013). Zeng (2012) also applied the single index idea to the component means and variances and assumed that component proportions do not depend on the predictor \({{\varvec{x}}}\). However, Zeng (2012) did not give any theoretical properties of their proposed estimates.

Young and Hunter (2010) and Huang and Yao (2012) proposed a semiparametric mixture of regression models

$$\begin{aligned} Y|{{\varvec{x}}}\sim \sum _{j=1}^k\pi _j({{\varvec{x}}})\phi (Y|{{\varvec{x}}}^\top {\varvec{\beta }}_j,\sigma _j^2), \end{aligned}$$

where \(\pi _j({{\varvec{x}}})\)’s are unknown smoothing functions, to combine nice properties of both nonparametric mixture regression models and traditional parametric mixture regression models. Their semiparametric mixture models assume that component proportions depend on covariates nonparametrically to reduce the modeling bias while component regression functions are still assumed to be linear to have better model interpretation. However, their estimation procedures cannot be applied if the dimension of predictors \({{\varvec{x}}}\) is high due to the kernel estimation used for \(\pi _j({{\varvec{x}}})\). We propose a mixture of regression models with varying single-index proportions

$$\begin{aligned} Y|{{\varvec{x}}}\sim \sum _{j=1}^k\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\phi (Y|{{\varvec{x}}}^\top {\varvec{\beta }}_j,\sigma _j^2), \end{aligned}$$(2)

which uses the idea of single index to model the nonparametric effect of predictors on component proportions, while allowing easy interpretation of linear component regression functions. When \(k=1\), model (2) reduces to the traditional linear regression model. If \({{\varvec{x}}}\) is a scalar, then model (2) reduces to the semiparametric mixture models considered by Young and Hunter (2010) and Huang and Yao (2012). Modeling component proportions nonparametrically can reduce the modeling bias and better cluster the data when the traditional parametric assumptions of component proportions do not hold (Young and Hunter 2010; Huang and Yao 2012).

We prove the identifiability results of the two models under some mild conditions. We propose a modified EM algorithm, which combines the ideas of backfitting algorithm, kernel estimation, and local likelihood, to estimate both the global parameters and the nonparametric functions. In addition, the asymptotic properties of the proposed estimation procedures are also investigated. Simulation studies are conducted to demonstrate the finite sample performance of the proposed models. Two real data applications reveal some new interesting findings.

The rest of the paper is organized as follows. In Sect. 2, we introduce the MSIM and study its identifiability. A one-step estimate and a fully iterated backfitting estimate are proposed, and their asymptotic properties are studied. In Sect. 3, the MRSIP is proposed, and the identifiability result and the asymptotic properties of the proposed estimates are given. In Sects. 4 and 5, Monte Carlo studies and two real data examples are presented to demonstrate the finite sample performance of the two models. A discussion is given in Sect. 6, and we defer the technical conditions and proofs to the “Appendix”.

2 Mixture of single-index models

2.1 Model definition and identifiability

Assume that \(\{({{\varvec{x}}}_i,Y_i),i=1,\ldots ,n\}\) is a random sample from the population \(({{\varvec{x}}},Y)\), where \({{\varvec{x}}}\) is p-dimensional and Y is univariate. Let \(\mathscr {C}\) be a latent variable with the discrete distribution \(P(\mathscr {C}=j|{{\varvec{x}}})=\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\) for \(j=1,\ldots ,k\). Conditional on \(\mathscr {C}=j\) and \({{\varvec{x}}}\), Y follows a normal distribution with mean \(m_j({\varvec{\alpha }}^\top {{\varvec{x}}})\) and variance \(\sigma _j^2({\varvec{\alpha }}^\top {{\varvec{x}}})\). Without observing \(\mathscr {C}\), the conditional distribution of Y given \({{\varvec{x}}}\) can be written as:

$$\begin{aligned} Y|{{\varvec{x}}}\sim \sum _{j=1}^k\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\phi (Y|m_j({\varvec{\alpha }}^\top {{\varvec{x}}}),\sigma _j^2({\varvec{\alpha }}^\top {{\varvec{x}}})). \end{aligned}$$

The above model is the proposed mixture of single-index models. Throughout the paper, we assume that k is fixed, and refer to model (1) as a finite semiparametric mixture of regression models, since \(\pi _j(\cdot )\), \(m_j(\cdot )\) and \(\sigma _j^2(\cdot )\) are all nonparametric. In model (1), we use the same index \({\varvec{\alpha }}\) for all components. However, our proposed estimation procedure and asymptotic results can be easily extended to the case where the components have different indices.

Compared to Huang et al. (2013), the appeal of the proposed MSIM is that by using an index \({\varvec{\alpha }}^\top {{\varvec{x}}}\), the so-called “curse of dimensionality” in fitting multivariate nonparametric regression functions is avoided. The model has a dimension-reduction structure in the sense that, given the estimate of \({\varvec{\alpha }}\), denoted by \(\hat{{\varvec{\alpha }}}\), we can use the univariate \(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}\) as the covariate and reduce model (1) to the nonparametric mixture regression model proposed by Huang et al. (2013). Therefore, model (1) is a reasonable compromise between fully parametric and fully nonparametric modeling.

Identifiability is a major concern for most mixture models. Some well known identifiability results for finite mixture models include: mixtures of univariate normals are identifiable up to relabeling (Titterington et al. 1985), and finite mixtures of regression models are identifiable up to relabeling provided that the covariates have a certain level of variability (Hennig 2000). Wang et al. (2014) established some general identifiability results for many existing nonparametric or semiparametric mixture regression models. The following theorem establishes the identifiability of model (1); its proof is given in the “Appendix”.

Theorem 1

Assume that

1. \(\pi _j(z)\), \(m_j(z)\), and \(\sigma _j^2(z)\) are differentiable and not constant on the support of \({\varvec{\alpha }}^\top {{\varvec{x}}}\), \(j=1,\ldots ,k\);

2. The components of \({{\varvec{x}}}\) are continuously distributed random variables that have a joint probability density function;

3. The support of \({{\varvec{x}}}\) is not contained in any proper linear subspace of \(\mathbb {R}^p\);

4. \(\Vert {\varvec{\alpha }}\Vert =1\) and the first nonzero element of \({\varvec{\alpha }}\) is positive;

5. For any \(1\le i\ne j\le k\),

$$\begin{aligned} \sum _{l=0}^{1} \Vert m^{(l)}_i(z)-m^{(l)}_j(z)\Vert ^2+ \sum _{l=0}^{1}\Vert \sigma ^{(l)}_i(z)-\sigma ^{(l)}_j(z)\Vert ^2\ne 0, \end{aligned}$$

for any z, where \(g^{(l)}\) is the lth derivative of g and equal to g if \(l=0\).

Then, model (1) is identifiable.
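
Condition 4 fixes the scale and the sign of the index. As a minimal illustration, assuming NumPy, the constraint can be imposed on any candidate vector as sketched below; the function name `normalize_index` and the tolerance `tol` are hypothetical and not part of the paper.

```python
import numpy as np

def normalize_index(alpha, tol=1e-12):
    """Rescale alpha to unit Euclidean norm and flip its sign so that
    the first nonzero element is positive (condition 4 of Theorem 1)."""
    alpha = np.asarray(alpha, dtype=float)
    norm = np.linalg.norm(alpha)
    if norm <= tol:
        raise ValueError("alpha must be a nonzero vector")
    alpha = alpha / norm
    # Entries below tol in absolute value are treated as zero (an assumption).
    nonzero = np.flatnonzero(np.abs(alpha) > tol)
    if alpha[nonzero[0]] < 0:
        alpha = -alpha
    return alpha

# The first nonzero entry is negative here, so the sign is flipped.
a = normalize_index([0.0, -2.0, 2.0, 1.0])
```

Any estimate of \({\varvec{\alpha }}\) returned by an optimizer or by a dimension reduction method can be passed through such a step before estimates are compared across runs.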

2.2 Estimation procedure

In this subsection, we propose a one-step estimation procedure and a backfitting algorithm to estimate the nonparametric functions and the single index of the model (1).

Let \(\ell ^{*(1)}({\varvec{\pi }},{{\varvec{m}}},{{\varvec{\sigma }}}^2,{\varvec{\alpha }})\) be the log-likelihood of the collected data \(\{({{\varvec{x}}}_i,Y_i),i=1,\ldots ,n\}\) from the model (1). That is:

$$\begin{aligned} \ell ^{*(1)}({\varvec{\pi }},{{\varvec{m}}},{{\varvec{\sigma }}}^2,{\varvec{\alpha }})=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}}_i)\phi (Y_i|m_j({\varvec{\alpha }}^\top {{\varvec{x}}}_i),\sigma _j^2({\varvec{\alpha }}^\top {{\varvec{x}}}_i))\right\} , \end{aligned}$$(3)

where \(\phi (y|\mu ,\sigma ^2)\) is the normal density with mean \(\mu \) and variance \(\sigma ^2\), \({\varvec{\pi }}(\cdot )=\{\pi _1(\cdot ),\ldots ,\pi _{k-1}(\cdot )\}^\top \), \({{\varvec{m}}}(\cdot )=\{m_1(\cdot ),\ldots ,m_k(\cdot )\}^\top \), and \({{\varvec{\sigma }}}^2(\cdot )=\{\sigma _1^2(\cdot ),\ldots ,\sigma _k^2(\cdot )\}^\top \). Since \({\varvec{\pi }}(\cdot )\), \({{\varvec{m}}}(\cdot )\) and \({{\varvec{\sigma }}}^2(\cdot )\) consist of nonparametric functions, (3) cannot be maximized directly.

Note that for model (1), the space spanned by the single index \({\varvec{\alpha }}\) is in fact the central mean subspace of \(Y|{{\varvec{x}}}\) (Cook and Li 2002) in the literature of sufficient dimension reduction. Therefore, we can employ existing sufficient dimension reduction methods to find an initial estimate of \({\varvec{\alpha }}\). See, for example, Li (1991), Li et al. (2005), Wang and Xia (2008), Luo et al. (2009), Wang and Yao (2012), Ma and Zhu (2012, 2013), and Yao et al. (2019). In this article, we simply employ sliced inverse regression (Li 1991) to obtain an initial estimate of \({\varvec{\alpha }}\), denoted by \(\tilde{{\varvec{\alpha }}}\).
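
The SIR initialization can be sketched as follows. This is a minimal NumPy illustration of the standard sliced inverse regression recipe (standardize the predictors, slice by the response, take the leading eigenvector of the covariance of the slice means), not the authors' code; the function name `sir_direction`, the number of slices, and the simulated single-index data are assumptions made for the example.

```python
import numpy as np

def sir_direction(X, y, n_slices=10):
    """Leading sliced inverse regression direction (Li 1991).
    Returns a unit vector; its sign is not determined by SIR itself."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    # Standardize X with the inverse square root of its sample covariance.
    evals, evecs = np.linalg.eigh(np.cov(X, rowvar=False))
    inv_sqrt = evecs @ np.diag(evals ** -0.5) @ evecs.T
    Z = (X - X.mean(axis=0)) @ inv_sqrt
    # Slice the sample by the order of the response.
    slices = np.array_split(np.argsort(y), n_slices)
    M = np.zeros((p, p))
    for idx in slices:
        zbar = Z[idx].mean(axis=0)
        M += (len(idx) / n) * np.outer(zbar, zbar)
    # eigh sorts eigenvalues in ascending order, so the last column leads.
    _, vecs = np.linalg.eigh(M)
    direction = inv_sqrt @ vecs[:, -1]   # map back to the original x scale
    return direction / np.linalg.norm(direction)

# Hypothetical single-index data with a monotone link, for illustration only.
rng = np.random.default_rng(1)
X = rng.normal(size=(2000, 5))
alpha_true = np.array([1.0, 1.0, 1.0, 0.0, 0.0]) / np.sqrt(3.0)
y = np.exp(X @ alpha_true) + 0.1 * rng.normal(size=2000)
alpha_init = sir_direction(X, y)
```

Because SIR identifies the direction only up to sign, the sign convention of the identifiability conditions still has to be applied to the output.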

Given the estimated single index \(\tilde{{\varvec{\alpha }}}\), the nonparametric functions \({\varvec{\pi }}(z)\), \({{\varvec{m}}}(z)\) and \({{\varvec{\sigma }}}^2(z)\) can then be estimated by maximizing the following local log-likelihood function:

$$\begin{aligned} \ell ^{(1)}_1({\varvec{\pi }},{{\varvec{m}}},{{\varvec{\sigma }}}^2)=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\pi _j\phi (Y_i|m_j,\sigma _j^2)\right\} K_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z), \end{aligned}$$(4)

where \(K_h(z)=\frac{1}{h}K(\frac{z}{h})\), \(K(\cdot )\) is a kernel density function, and h is a tuning parameter (the bandwidth). Let \(\hat{{\varvec{\pi }}}(\cdot )\), \(\hat{{{\varvec{m}}}}(\cdot )\) and \(\hat{{{\varvec{\sigma }}}}^2(\cdot )\) be the estimates that maximize (4). These are the proposed one-step estimates.

We propose a modified EM-type algorithm to maximize \(\ell _1^{(1)}\). In practice, we usually want to evaluate unknown functions at a set of grid points, which in this case, requires us to maximize local log-likelihood functions at a set of grid points. If we simply employ the EM algorithm separately for each grid point, the labels in the EM algorithm may change at different grid points, and we may not be able to get smoothed estimated curves (Huang and Yao 2012). Therefore, we propose the following modified EM-type algorithm, which estimates the nonparametric functions simultaneously at a set of grid points, say \(\{u_t,t=1,\ldots ,N\}\), and provides a unified label for each observation across all grid points.

Algorithm 1

Modified EM-type algorithm to maximize (4) given the single index estimate \(\tilde{{\varvec{\alpha }}}\).

E-step: Calculate the expectations of component labels based on estimates from the lth iteration:

$$\begin{aligned} p_{ij}^{(l+1)}=\frac{\pi _j^{(l)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\phi (Y_i|m_j^{(l)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i),\sigma _j^{2(l)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i))}{\sum _{j=1}^k \pi _j^{(l)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\phi (Y_i|m_j^{(l)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i),\sigma _j^{2(l)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i))}, \end{aligned}$$(5)

where \(i=1,\ldots ,n,j=1,\ldots ,k.\)

M-step: Update the estimates

$$\begin{aligned}&\pi _j^{(l+1)}(z)=\frac{\sum _{i=1}^np_{ij}^{(l+1)}K_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}{\sum _{i=1}^nK_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}, \end{aligned}$$(6)

$$\begin{aligned}&m_j^{(l+1)}(z)=\frac{\sum _{i=1}^np_{ij}^{(l+1)}Y_iK_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}{\sum _{i=1}^np_{ij}^{(l+1)}K_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}, \end{aligned}$$(7)

$$\begin{aligned}&\sigma _j^{2(l+1)}(z)=\frac{\sum _{i=1}^np_{ij}^{(l+1)}(Y_i-m_j^{(l+1)}(z))^2K_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}{\sum _{i=1}^np_{ij}^{(l+1)}K_h(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}, \end{aligned}$$(8)

for \(z\in \{u_t,t=1,\ldots ,N\}\) and \(j=1,\ldots ,k\). We then update \(\pi _j^{(l+1)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\), \(m_j^{(l+1)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\) and \(\sigma _j^{2(l+1)}(\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\), \(i=1,\ldots ,n\), by linearly interpolating \(\pi _j^{(l+1)}(u_t)\), \(m_j^{(l+1)}(u_t)\) and \(\sigma _j^{2(l+1)}(u_t)\), \(t=1,\ldots ,N\), respectively.

Note that in the M-step, the nonparametric functions are estimated simultaneously at a set of grid points, and therefore, the classification probabilities in the E-step can be estimated globally to avoid the label switching problem (Stephens 2000; Yao and Lindsay 2009). If the sample size n is not too large, one can also take all \(\{\tilde{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i, i=1,\ldots ,n\}\) as grid points for z in the M-step.
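
The updates (5)–(8) can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions rather than the authors' implementation: the Gaussian kernel, the variance floor `var_floor`, the fixed iteration count, the function names, and the simulated data are all choices made for the example. The loop starts from initial classification probabilities, so the M-step appears before the E-step in the code.

```python
import numpy as np

def normal_pdf(y, mean, var):
    """Normal density phi(y | mean, var)."""
    return np.exp(-0.5 * (y - mean) ** 2 / var) / np.sqrt(2.0 * np.pi * var)

def modified_em(z, y, grid, h, p_init, n_iter=30, var_floor=1e-6):
    """Local-constant EM on a shared grid.
    z: index values alpha_tilde^T x_i, shape (n,); grid: increasing, shape (N,);
    p_init: initial classification probabilities, shape (n, k)."""
    z, y, grid = (np.asarray(a, dtype=float) for a in (z, y, grid))
    p = np.array(p_init, dtype=float)
    k = p.shape[1]
    # Gaussian kernel weights K_h(z_i - u_t), shape (N, n); the kernel is an assumption.
    W = np.exp(-0.5 * ((z[None, :] - grid[:, None]) / h) ** 2) / (h * np.sqrt(2.0 * np.pi))

    def to_observations(f):
        # Linear interpolation from the grid to the observed index values;
        # np.interp holds the end values constant outside the grid range.
        return np.column_stack([np.interp(z, grid, f[:, j]) for j in range(k)])

    for _ in range(n_iter):
        # M-step, equations (6)-(8), at all grid points at once.
        Wp = W @ p                                          # sum_i p_ij K_h, shape (N, k)
        pi = Wp / W.sum(axis=1, keepdims=True)
        m = (W @ (p * y[:, None])) / Wp
        resid2 = (y[None, :, None] - m[:, None, :]) ** 2    # shape (N, n, k)
        sigma2 = np.einsum("tn,nk,tnk->tk", W, p, resid2) / Wp
        sigma2 = np.maximum(sigma2, var_floor)              # safeguard, not in the paper
        # E-step, equation (5): one set of labels shared by all grid points.
        dens = to_observations(pi) * normal_pdf(
            y[:, None], to_observations(m), to_observations(sigma2))
        p = dens / dens.sum(axis=1, keepdims=True)
    return pi, m, sigma2, p

# Hypothetical two-component data on a known index, for illustration only.
rng = np.random.default_rng(0)
n = 400
z = rng.uniform(0.0, 1.0, n)
in_first = rng.random(n) < 0.3 + 0.4 * z
y = np.where(in_first, 3.0 + z, -3.0 - z) + 0.5 * rng.normal(size=n)
p_init = np.where(y[:, None] > 0, [0.9, 0.1], [0.1, 0.9])
grid = np.linspace(0.05, 0.95, 20)
pi, m, sigma2, p = modified_em(z, y, grid, h=0.1, p_init=p_init)
```

Because a single matrix of classification probabilities `p` feeds every grid point, component j refers to the same subgroup along the whole curve, which is the purpose of the modification.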

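The initial estimate \(\tilde{{\varvec{\alpha }}}\) by SIR does not make use of the mixture information and thus is not efficient. Given the one-step estimates \(\hat{{\varvec{\pi }}}(\cdot )\), \(\hat{{{\varvec{m}}}}(\cdot )\) and \(\hat{{{\varvec{\sigma }}}}^2(\cdot )\), we can further improve the estimate of \({\varvec{\alpha }}\) by maximizing

$$\begin{aligned} \ell ^{(1)}_2({\varvec{\alpha }})=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\hat{\pi }_j({\varvec{\alpha }}^\top {{\varvec{x}}}_i)\phi (Y_i|\hat{m}_j({\varvec{\alpha }}^\top {{\varvec{x}}}_i),\hat{\sigma }_j^2({\varvec{\alpha }}^\top {{\varvec{x}}}_i))\right\} \end{aligned}$$(9)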

with respect to \({\varvec{\alpha }}\). The proposed fully iterative backfitting estimator of \({\varvec{\alpha }}\), denoted by \(\hat{{\varvec{\alpha }}}\), iterates the above two steps until convergence.

Algorithm 2

Fully iterative backfitting estimator (FIB)

Step 1: Apply sliced inverse regression (SIR) to obtain an initial estimate of the single index parameter \({\varvec{\alpha }}\), denoted by \(\tilde{{\varvec{\alpha }}}\).

Step 2: Given \(\tilde{{\varvec{\alpha }}}\), apply the modified EM-algorithm (5)–(8) to maximize \(\ell ^{(1)}_1\) in (4) to obtain the estimates \(\hat{{\varvec{\pi }}}(\cdot )\), \(\hat{{{\varvec{m}}}}(\cdot )\), and \(\hat{{{\varvec{\sigma }}}}^2(\cdot )\).

Step 3: Given \(\hat{{\varvec{\pi }}}(\cdot )\), \(\hat{{{\varvec{m}}}}(\cdot )\), and \(\hat{{{\varvec{\sigma }}}}^2(\cdot )\) from Step 2, update the estimate of \({\varvec{\alpha }}\) by maximizing \(\ell ^{(1)}_2\) in (9).

Step 4: Iterate Steps 2–3 until convergence.
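
Step 3 requires evaluating the objective in (9) at candidate values of \({\varvec{\alpha }}\). A minimal NumPy sketch of that evaluation is given below, assuming the fitted curves are stored on a grid and linearly interpolated; the function name `index_loglik`, the array layout, and the simulated curves are hypothetical, and the choice of numerical optimizer is left open.

```python
import numpy as np

def index_loglik(alpha, X, y, grid, pi_hat, m_hat, sigma2_hat):
    """Mixture log-likelihood as a function of the index alpha, with the
    nonparametric curves held fixed. Each curve array has shape (N, k)."""
    z = X @ alpha
    mix = np.zeros(len(y))
    for j in range(pi_hat.shape[1]):
        # Interpolate each fitted curve at the candidate index values.
        pj = np.interp(z, grid, pi_hat[:, j])
        mj = np.interp(z, grid, m_hat[:, j])
        vj = np.interp(z, grid, sigma2_hat[:, j])
        mix += pj * np.exp(-0.5 * (y - mj) ** 2 / vj) / np.sqrt(2.0 * np.pi * vj)
    return np.sum(np.log(mix))

# Hypothetical curves and data, for illustration only.
rng = np.random.default_rng(5)
n = 500
X = rng.uniform(size=(n, 3))
alpha_true = np.ones(3) / np.sqrt(3.0)
z_true = X @ alpha_true
grid = np.linspace(0.0, np.sqrt(3.0), 50)
pi_hat = np.full((50, 2), 0.5)
m_hat = np.column_stack([3.0 * grid, -3.0 * grid])
sigma2_hat = np.full((50, 2), 0.25)
in_first = rng.random(n) < 0.5
y = np.where(in_first, 3.0 * z_true, -3.0 * z_true) + 0.5 * rng.normal(size=n)

ll_true = index_loglik(alpha_true, X, y, grid, pi_hat, m_hat, sigma2_hat)
alpha_wrong = np.array([1.0, -1.0, 0.0]) / np.sqrt(2.0)
ll_wrong = index_loglik(alpha_wrong, X, y, grid, pi_hat, m_hat, sigma2_hat)
```

In this toy setting the objective is larger at the data-generating index than at a direction orthogonal to it, which is the behavior the maximization step relies on. Any constrained or reparameterized optimizer that keeps \(\Vert {\varvec{\alpha }}\Vert =1\) could be applied to such a function.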

2.3 Asymptotic properties

The asymptotic properties of the proposed estimates are investigated below. Let \({\varvec{\theta }}(z)=({\varvec{\pi }}^\top (z),{{\varvec{m}}}^\top (z),({{\varvec{\sigma }}}^2)^\top (z))^\top \). Define

Under further conditions defined in the “Appendix”, the asymptotic properties of the one-step estimates \(\hat{{\varvec{\pi }}}(\cdot )\), \(\hat{{{\varvec{m}}}}(\cdot )\), and \(\hat{{{\varvec{\sigma }}}}^2(\cdot )\) are given in the following theorem.

Theorem 2

Assume that conditions (C1)–(C7) in the “Appendix” hold. Then, as \(n\rightarrow \infty \), \(h\rightarrow 0\) and \(nh\rightarrow \infty \), we have

where

with \(f(\cdot )\) the marginal density function of \({\varvec{\alpha }}^\top {{\varvec{x}}}\), \(\kappa _l=\int t^lK(t)dt\) and \(\nu _l=\int t^lK^2(t)dt\).

Note that the asymptotic variance of \(\hat{{\varvec{\theta }}}(z)\) is the same as that given in Huang et al. (2013). Thus, the nonparametric functions can be estimated with the same accuracy as if the single index \({\varvec{\alpha }}^\top {{\varvec{x}}}\) were known. This is expected since the index \({\varvec{\alpha }}\) can be estimated at a root-n convergence rate, which is faster than the rate of \(\hat{{\varvec{\theta }}}(z)\). In addition, note that the one-step estimates of \({\varvec{\theta }}(z)\) have the same asymptotic variance (up to the first order) as the fully iterative backfitting estimates but require much less computation. Our simulation results in Sect. 4 further confirm this result.

The next theorem gives the asymptotic properties of the estimate \(\hat{{\varvec{\alpha }}}\) obtained from the fully iterative backfitting algorithm.

Theorem 3

Assume that conditions (C1)–(C8) in the “Appendix” hold. Then, as \(n\rightarrow \infty \), \(nh^4\rightarrow 0\), and \(nh^2/\log (1/h)\rightarrow \infty \),

where

3 Mixtures of regression models with varying single-index proportions

3.1 Model definition and identifiability

The MRSIP assumes that \(P(\mathscr {C}=j|{{\varvec{x}}})=\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\) for \(j=1,\ldots ,k\), and conditional on \(\mathscr {C}=j\) and \({{\varvec{x}}}\), Y follows a normal distribution with mean \({{\varvec{x}}}^\top {\varvec{\beta }}_j\) and variance \(\sigma ^2_j\). That is,

$$\begin{aligned} Y|{{\varvec{x}}}\sim \sum _{j=1}^k\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\phi (Y|{{\varvec{x}}}^\top {\varvec{\beta }}_j,\sigma _j^2). \end{aligned}$$

Since \(\pi _j(\cdot )\)’s are nonparametric, model (2) is also a finite semiparametric mixture of regression models. The linear component regression functions \({{\varvec{x}}}^\top {\varvec{\beta }}_j\) enjoy simple interpretation, while nonparametric functions \(\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}})\) can incorporate the effects of predictors on component proportions more flexibly to reduce the modeling bias. See Young and Hunter (2010), Huang et al. (2013) for more information. We first prove the identifiability result of model (2) in the following theorem and defer its proof to the “Appendix”.

Theorem 4

Assume that

1. \(\pi _j(z)>0\) are differentiable and not constant on the support of \({\varvec{\alpha }}^\top {{\varvec{x}}}\), \(j=1,\ldots ,k\);

2. The components of \({{\varvec{x}}}\) are continuously distributed random variables that have a joint probability density function;

3. The support of \({{\varvec{x}}}\) contains an open set in \(\mathbb {R}^p\) and is not contained in any proper linear subspace of \(\mathbb {R}^p\);

4. \(\Vert {\varvec{\alpha }}\Vert =1\) and the first nonzero element of \({\varvec{\alpha }}\) is positive;

5. \(({\varvec{\beta }}_j,\sigma _j^2)\), \(j=1,\ldots ,k\), are distinct pairs.

Then, model (2) is identifiable.

3.2 Estimation procedure

The log-likelihood of the collected data for model (2) is:

$$\begin{aligned} \ell ^{*(2)}({\varvec{\pi }},{\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\pi _j({\varvec{\alpha }}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top {\varvec{\beta }}_j,\sigma _j^2)\right\} , \end{aligned}$$(12)

where \({\varvec{\pi }}(\cdot )=\{\pi _1(\cdot ),\ldots ,\pi _{k-1}(\cdot )\}^\top \), \({{\varvec{\sigma }}}^2=\{\sigma _1^2,\ldots ,\sigma _k^2\}^\top \), and \({\varvec{\beta }}=\{{\varvec{\beta }}_1,\ldots ,{\varvec{\beta }}_k\}^\top \). Since \({\varvec{\pi }}(\cdot )\) consists of nonparametric functions, (12) cannot be maximized directly. We propose a backfitting algorithm that iterates between estimating the parameters \(({\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)\) and the nonparametric functions \({\varvec{\pi }}(\cdot )\).

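Given estimates of \(({\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)\), say \((\hat{{\varvec{\alpha }}},\hat{{\varvec{\beta }}},\hat{{{\varvec{\sigma }}}}^2)\), \({\varvec{\pi }}(\cdot )\) can be estimated locally by maximizing the following local log-likelihood function:

$$\begin{aligned} \ell ^{(2)}_1({\varvec{\pi }})=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\pi _j\phi (Y_i|{{\varvec{x}}}_i^\top \hat{{\varvec{\beta }}}_j,\hat{\sigma }_j^2)\right\} K_h(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z). \end{aligned}$$(13)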

Let \(\hat{{\varvec{\pi }}}(\cdot )\) be the estimate that maximizes (13). We can then further update the estimate of \(({\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)\) by maximizing

$$\begin{aligned} \ell ^{(2)}_2({\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\hat{\pi }_j({\varvec{\alpha }}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top {\varvec{\beta }}_j,\sigma _j^2)\right\} . \end{aligned}$$(14)

The backfitting algorithm, which iterates the above two steps, can be summarized as follows.

Algorithm 3

Backfitting algorithm to estimate the model (2).

Step 1: Obtain an initial estimate of \(({\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)\).

Step 2: Given \((\hat{{\varvec{\alpha }}},\hat{{\varvec{\beta }}},\hat{{{\varvec{\sigma }}}}^2)\), use the following modified EM-type algorithm to maximize \(\ell ^{(2)}_1\) in (13).

E-step: Calculate the expectations of component labels based on estimates from the lth iteration:

$$\begin{aligned} p_{ij}^{(l+1)}=\frac{\pi ^{(l)}_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top \hat{{\varvec{\beta }}}_j,\hat{\sigma }_j^2)}{\sum _{j=1}^k\pi ^{(l)}_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top \hat{{\varvec{\beta }}}_j,\hat{\sigma }_j^2)}, \end{aligned}$$(15)

where \(i=1,\ldots ,n, j=1,\ldots ,k\).

M-step: Update the estimate

$$\begin{aligned} \pi _j^{(l+1)}(z)=\frac{\sum _{i=1}^np_{ij}^{(l+1)}K_h(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)}{\sum _{i=1}^nK_h(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i-z)} \end{aligned}$$(16)

for \(z\in \{u_t,t=1,\ldots ,N\}\). We then update \(\pi _j^{(l+1)}(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\), \(i=1,\ldots ,n\), by linearly interpolating \(\pi _j^{(l+1)}(u_t)\), \(t=1,\ldots ,N\).

Step 3: Given \(\hat{{\varvec{\pi }}}(\cdot )\) from Step 2, update \((\hat{{\varvec{\alpha }}},\hat{{\varvec{\beta }}},\hat{{{\varvec{\sigma }}}}^2)\) by maximizing (14). We propose to iterate between updating \({\varvec{\alpha }}\) and \(({\varvec{\beta }}, {{\varvec{\sigma }}}^2)\).

Step 3.1: Given \(\hat{{\varvec{\alpha }}}\), update \(({\varvec{\beta }},{{\varvec{\sigma }}}^2)\).

E-step: Calculate the classification probabilities:

$$\begin{aligned} p_{ij}^{(l+1)}=\frac{\hat{\pi }_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top {\varvec{\beta }}^{(l)}_j,\sigma ^{2(l)}_j)}{\sum _{j=1}^k\hat{\pi }_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top {\varvec{\beta }}^{(l)}_j,\sigma ^{2(l)}_j)},\quad j=1,\ldots ,k. \end{aligned}$$(17)

M-step: Update \({\varvec{\beta }}\) and \({{\varvec{\sigma }}}^2\):

$$\begin{aligned} {\varvec{\beta }}_j^{(l+1)}&=({{\varvec{S}}}^\top {{\varvec{R}}}_j^{(l+1)}{{\varvec{S}}})^{-1}{{\varvec{S}}}^\top {{\varvec{R}}}_j^{(l+1)}{{\varvec{y}}}, \end{aligned}$$(18)

$$\begin{aligned} \sigma _j^{2(l+1)}&=\frac{\sum _{i=1}^np_{ij}^{(l+1)}(Y_i-{{\varvec{x}}}_i^\top {\varvec{\beta }}_j^{(l+1)})^2}{\sum _{i=1}^np_{ij}^{(l+1)}}, \end{aligned}$$(19)

where \(j=1,\ldots ,k\), \({{\varvec{R}}}_j^{(l+1)}=diag\{p_{1j}^{(l+1)},\ldots ,p_{nj}^{(l+1)}\}\), and \({{\varvec{S}}}=({{\varvec{x}}}_1,\ldots ,{{\varvec{x}}}_n)^\top \).

Step 3.2: Given \((\hat{{\varvec{\beta }}},\hat{{{\varvec{\sigma }}}}^2)\), update \({\varvec{\alpha }}\) by maximizing the following log-likelihood

$$\begin{aligned} \ell ^{(2)}_3({\varvec{\alpha }})=\sum _{i=1}^n\log \left\{ \sum _{j=1}^k\hat{\pi }_j({\varvec{\alpha }}^\top {{\varvec{x}}}_i)\phi (Y_i|{{\varvec{x}}}_i^\top \hat{{\varvec{\beta }}}_j,\hat{\sigma }^2_j)\right\} . \end{aligned}$$

Step 3.3: Iterate Steps 3.1–3.2 until convergence.

Step 4: Iterate Steps 2–3 until convergence.
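
The M-step updates (18) and (19) in Step 3.1 are weighted least squares fits, one per component. A minimal NumPy sketch follows, assuming the design matrix already contains any intercept column; the function name `update_beta_sigma2` and the toy data are hypothetical.

```python
import numpy as np

def update_beta_sigma2(S, y, p):
    """Weighted least squares update of the component coefficients and variances.
    S: design matrix, shape (n, d); y: responses, shape (n,);
    p: classification probabilities, shape (n, k)."""
    n, d = S.shape
    k = p.shape[1]
    beta = np.empty((k, d))
    sigma2 = np.empty(k)
    for j in range(k):
        w = p[:, j]
        SW = S.T * w                  # S^T R_j without forming the n x n diagonal matrix
        beta[j] = np.linalg.solve(SW @ S, SW @ y)
        resid = y - S @ beta[j]
        sigma2[j] = np.sum(w * resid ** 2) / np.sum(w)
    return beta, sigma2

# Toy check with noiseless responses and hard 0/1 weights.
rng = np.random.default_rng(4)
n = 60
S = np.column_stack([np.ones(n), rng.uniform(size=(n, 2))])
b1 = np.array([3.0, 0.0, 3.0])
b2 = np.array([-3.0, 2.0, 0.0])
in_first = np.arange(n) < n // 2
y = np.where(in_first, S @ b1, S @ b2)
p = np.column_stack([in_first, ~in_first]).astype(float)
beta, sigma2 = update_beta_sigma2(S, y, p)
```

Solving the normal equations with `np.linalg.solve` avoids forming the explicit inverse that appears in (18), while giving the same solution when the weighted Gram matrix is nonsingular.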

There are many ways to obtain an initial estimate of \(({\varvec{\alpha }},{\varvec{\beta }},{{\varvec{\sigma }}}^2)\). In our numerical studies, we obtain an initial estimate of \(({\varvec{\beta }},{{\varvec{\sigma }}}^2)\) by fitting a traditional mixture of linear regression models. Using the resulting hard-clustering labels as a new response variable, we then apply SIR to obtain an initial estimate of \({\varvec{\alpha }}\).

3.3 Asymptotic properties

Let \((\hat{{\varvec{\pi }}}(z),\hat{{\varvec{\alpha }}},\hat{{\varvec{\beta }}},\hat{{{\varvec{\sigma }}}}^2)\) be the estimates resulting from Algorithm 3. In this section, we investigate their asymptotic properties. Let \({{\varvec{\eta }}}=({\varvec{\beta }}^\top ,({{\varvec{\sigma }}}^2)^\top )^\top \) and \({{\varvec{\lambda }}}=({\varvec{\alpha }}^\top ,{{\varvec{\eta }}}^\top )^\top \). Define

Similarly, define \(q_{\lambda }\), \(q_{\lambda \lambda }\), and \(q_{\pi \eta }\). Denote \(\mathscr {I}^{(2)}_\pi (z)=-E[q_{\pi \pi }(Z)|Z=z]\) and \(\varLambda _2(u|z)=E[q_{\pi }(z)|Z=u]\).

Under some regularity conditions, the asymptotic properties of \(\hat{{\varvec{\pi }}}(z)\) are given in the following theorem and its proof is given in the “Appendix”.

Theorem 5

Assume that conditions (C1)–(C4) and (C9)–(C11) in the “Appendix” hold. Then, as \(n\rightarrow \infty \), \(h\rightarrow 0\) and \(nh\rightarrow \infty \), we have

where

The asymptotic property of the parametric estimate \(\hat{{{\varvec{\lambda }}}}\) is given in the following theorem.

Theorem 6

Assume that conditions (C1)–(C4) and (C9)–(C12) in the “Appendix” hold. Then, as \(n\rightarrow \infty \), \(nh^4\rightarrow 0\), and \(nh^2/\log (1/h)\rightarrow \infty \),

where,

4 Simulation studies

In this section, we conduct simulation studies to test the performance of the proposed models and estimation procedures.

The performance of the estimates is measured via the absolute bias (AB), standard deviation (SD), and root mean squared error (RMSE), which is \((AB^2+SD^2)^{1/2}\). Specifically, the AB and SD of the mean functions \(m_j(\cdot )\)’s in model (1) are defined as:

and

where \(\hat{m}_j(\cdot )\) is an estimator of \(m_j(\cdot )\), and \(E\hat{m}_j(u_t)\) is estimated by the average of \(\hat{m}_j(u_t)\) over the simulation replicates. Similarly, we can define the AB and SD for the variance functions \(\sigma _j^2(\cdot )\)’s and the proportion functions \(\pi _j(\cdot )\)’s. In the following numerical studies, we set \(N=100\).

In addition, a conditional bootstrap procedure is applied to estimate the standard error of estimates and construct confidence intervals for the parameters.

Example 1

Generate data from the following two-component MSIM:

where \(z_i={\varvec{\alpha }}^\top {{\varvec{x}}}_i\), \({{\varvec{x}}}_i\) is an eight-dimensional random vector with independent uniform (0,1) components, and the direction parameter is \({\varvec{\alpha }}=(1,1,1,0,0,0,0,0)^\top /\sqrt{3}\). We consider sample sizes \(n=200\) and \(n=400\), each with 500 repetitions. To estimate \({\varvec{\alpha }}\), we use sliced inverse regression (SIR) and the fully iterative backfitting estimate (MSIM). To estimate the nonparametric functions, we apply the one-step estimate (OS) and MSIM. For MSIM, we use both the true value (T) and SIR (S) as the initial values.

First, a proper bandwidth for estimating \({\varvec{\pi }}(\cdot )\), \({{\varvec{m}}}(\cdot )\) and \({{\varvec{\sigma }}}^2(\cdot )\) is selected. Based on Theorem 2, one can calculate the theoretical optimal bandwidth by minimizing asymptotic mean squared errors. However, the theoretical optimal bandwidth depends on many unknown quantities, which are not easy to estimate in practice. In our examples, we propose to use the following cross-validation (CV) method to choose the bandwidth. Let \(\mathscr {D}\) be the full data set, and divide \(\mathscr {D}\) into a training set \(\mathscr {R}_l\) and a test set \(\mathscr {T}_l\). That is, \(\mathscr {R}_l\cup \mathscr {T}_l=\mathscr {D}\) for \(l=1,\ldots ,L\). We use the training set \(\mathscr {R}_l\) to obtain the estimates \(\{\hat{{\varvec{\pi }}}(\cdot ),\hat{{{\varvec{m}}}}(\cdot ),\hat{{{\varvec{\sigma }}}}^2(\cdot ),\hat{{\varvec{\alpha }}}\}\), then evaluate \({\varvec{\pi }}(\cdot )\), \({{\varvec{m}}}(\cdot )\) and \({{\varvec{\sigma }}}^2(\cdot )\) for the test data set \(\mathscr {T}_l\). For each \(({{\varvec{x}}}_t,y_t)\in \mathscr {T}_l\), calculate the classification probability as

$$\begin{aligned} \hat{p}_{tj}=\frac{\hat{\pi }_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t)\phi (y_t|\hat{m}_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t),\hat{\sigma }_j^2(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t))}{\sum _{j=1}^k\hat{\pi }_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t)\phi (y_t|\hat{m}_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t),\hat{\sigma }_j^2(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t))} \end{aligned}$$

for \(j=1,\ldots ,k\). The regular CV is considered, which is defined by

$$\begin{aligned} CV(h)=\sum _{l=1}^L\sum _{t\in \mathscr {T}_l}(y_t-\hat{y}_t)^2, \end{aligned}$$

where \(\hat{y}_t=\sum _{j=1}^k\hat{p}_{tj}\hat{m}_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}_t)\). We also implemented likelihood-based cross-validation to choose the bandwidth; the results are similar but require more computation.

Throughout the simulation, \(L=10\) and the data are randomly partitioned. The procedure is repeated 30 times, and the average of the selected bandwidth is taken as the optimal bandwidth, denoted by \(\hat{h}\).
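
The repeated random-partition scheme described above can be sketched as follows. This is a generic NumPy illustration, not the authors' code: `fit_predict` is a hypothetical user-supplied callable that fits the model on the training part and returns predictions for the test part, and a plain Gaussian kernel smoother is swapped in as a stand-in for the mixture fit so that the example runs on its own. The candidate bandwidths and the simulated data are also assumptions.

```python
import numpy as np

def cv_score(fit_predict, X, y, h, folds):
    """Sum of squared prediction errors over the test sets of one partition."""
    score = 0.0
    for test_idx in folds:
        train = np.ones(len(y), dtype=bool)
        train[test_idx] = False
        y_hat = fit_predict(X[train], y[train], X[test_idx], y[test_idx], h)
        score += np.sum((y[test_idx] - y_hat) ** 2)
    return score

def select_bandwidth(fit_predict, X, y, candidates, n_folds=10, n_repeats=30, seed=0):
    """Average, over repeated random partitions, of the CV-minimizing bandwidth."""
    rng = np.random.default_rng(seed)
    candidates = np.asarray(candidates, dtype=float)
    selected = []
    for _ in range(n_repeats):
        # One random partition per repetition, shared by all candidate bandwidths.
        folds = np.array_split(rng.permutation(len(y)), n_folds)
        scores = [cv_score(fit_predict, X, y, h, folds) for h in candidates]
        selected.append(candidates[int(np.argmin(scores))])
    return float(np.mean(selected))

def kernel_smoother(x_train, y_train, x_test, y_test, h):
    """Stand-in predictor (Nadaraya-Watson); y_test is unused here, whereas the
    mixture prediction in the text uses it through the classification probabilities."""
    w = np.exp(-0.5 * ((x_test[:, None] - x_train[None, :]) / h) ** 2)
    return (w @ y_train) / w.sum(axis=1)

# Hypothetical one-dimensional data, for illustration only.
rng = np.random.default_rng(2)
x = rng.uniform(0.0, 1.0, 200)
y = np.sin(2.0 * np.pi * x) + 0.2 * rng.normal(size=200)
h_hat = select_bandwidth(kernel_smoother, x, y, [0.02, 0.05, 0.1, 0.3, 1.0], n_repeats=5)
```

Reusing one partition for all candidate bandwidths within a repetition keeps the comparison between bandwidths free of partition-to-partition noise; averaging across repetitions then reduces the dependence on any single random split.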

Tables 1 and 2 present the AB, SD, and RMSE for the estimates. We summarize our findings as follows. The AB, SD, and RMSE of the index parameter estimates from MSIM are consistently smaller than the OS estimates, which indicates that the MSIM is superior to the OS. This is reasonable, since the MSIM makes use of mixture information while SIR does not. Furthermore, the performance of MSIM(T) is slightly better than MSIM(S), but the improvement is negligible, indicating that the sliced inverse regression provides good initial values for our method. In addition, the analysis results about nonparametric function estimates demonstrate that MSIM works slightly better than OS. This verifies the theoretical results stated in Sect. 2.3.

In addition, to see how the selected bandwidth works, we also ran simulations for two other bandwidths, \(\hat{h}\times n^{-2/15}\) and \(1.5\hat{h}\), which correspond to the under-smoothing and over-smoothing conditions, respectively. The results are summarized in Tables 3 and 4. We can see that the proposed bandwidth selection procedure works reasonably well since the corresponding AB, SD, and RMSE are often the smallest.

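Next, we test the accuracy of the standard error estimation and the confidence interval constructed for \({\varvec{\alpha }}\) via a conditional bootstrap procedure. Given the covariate \({{\varvec{x}}}\), the response Y can be generated from the estimated distribution

$$\begin{aligned} Y|{{\varvec{x}}}\sim \sum _{j=1}^k\hat{\pi }_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}})\phi (Y|\hat{m}_j(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}),\hat{\sigma }_j^2(\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}})). \end{aligned}$$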

For simplicity of presentation, only the results from MSIM(S) are reported. We apply the proposed estimation procedure to each of the 200 bootstrap samples and then obtain the confidence intervals. Table 5 summarizes the performance of the bootstrap procedure. The average and standard deviation of the 500 estimated standard errors are reported, and are denoted by SE and STD, respectively. The actual coverage probabilities based on the constructed confidence intervals are also reported. From Table 5, we can see that the actual coverage probabilities are usually very close to the nominal coverage probabilities, although they are conservative for some parameters.
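
The resampling step of the conditional bootstrap, in which the covariates are held fixed and only the responses are regenerated from the fitted mixture, can be sketched as follows. This is a minimal NumPy illustration; the function name `conditional_bootstrap_sample` and the array layout (fitted values evaluated at each observation) are assumptions, and refitting the model on each bootstrap sample is not shown.

```python
import numpy as np

def conditional_bootstrap_sample(pi_hat, m_hat, sigma2_hat, rng):
    """Draw one bootstrap response per observation with covariates held fixed.
    pi_hat, m_hat, sigma2_hat: fitted proportions, means and variances at each
    observation, each of shape (n, k)."""
    n, k = pi_hat.shape
    # Sample a component label for each observation from its fitted proportions.
    cum = np.cumsum(pi_hat, axis=1)
    u = rng.random(n)
    labels = (u[:, None] > cum).sum(axis=1)
    labels = np.minimum(labels, k - 1)   # guard against rounding in the cumulative sums
    rows = np.arange(n)
    # Draw the response from the normal component selected for each observation.
    return rng.normal(m_hat[rows, labels], np.sqrt(sigma2_hat[rows, labels]))

# Hypothetical fitted values, for illustration only.
rng = np.random.default_rng(3)
n = 10000
pi_hat = np.tile([0.3, 0.7], (n, 1))
m_hat = np.tile([10.0, -10.0], (n, 1))
sigma2_hat = np.full((n, 2), 1.0)
y_star = conditional_bootstrap_sample(pi_hat, m_hat, sigma2_hat, rng)
```

Repeating this draw and re-estimating the model on each regenerated sample gives the bootstrap replicates from which standard errors and confidence intervals are computed.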

In the following, we explore the performance of MSIM in even higher dimensional spaces. To be more specific, \({\varvec{\alpha }}\) is now set to be a 50-dimensional vector, whose first three elements are \(1/\sqrt{3}\) and the others are zeros. \({{\varvec{x}}}_i\) and the nonparametric functions are generated in a similar manner as before. That is to say, we are now exploring the cases when \(n=200, p=50\) and \(n=400, p=50\). The AB, SD, and RMSE for the nonparametric functions are reported in Table 6. For the global vector \({\varvec{\alpha }}\), we report the pooled AB, SD, and RMSE, which are defined by

The results are based on MSIM(S). The results from the previous \(n=200, p=8\) and \(n=400, p=8\) cases are also reported for comparison. It can be seen that when we increase p to 50, the MSIM still provides reasonable, although slightly worse, estimates.

Example 2

Next, we consider a two-component MRSIP:

where \(m_1({{\varvec{x}}})\) and \(m_2({{\varvec{x}}})\) are the regression functions for the first and second components, respectively, and \({{\varvec{x}}}_i\) is an eight-component random vector with independent uniform (0,1) components. Let \({\varvec{\beta }}_1=(3,0,3,0,0,0,0,0,0)^\top \), \({\varvec{\beta }}_2=(-3,2,0,3,0,0,0,0,0)^\top ,\) and \({\varvec{\alpha }}=(1,1,1,0,0,0,0,0)^\top /\sqrt{3}\). MRSIP with true value (T) and SIR (S) as initial values are used to fit the data, and the results are compared to the traditional mixture of linear regression models (MLR). The bandwidth for MRSIP is chosen based on the cross-validation, similar to Example 1.

Tables 7 and 8 report the AB, SD, and RMSE for the estimates. From both tables, we can see that MRSIP performs comparably to MLR when the sample size is small, and outperforms MLR when the sample size is large. It is clear that the MRSIP provides better estimates of component proportions than MLR, since the assumption of constant component proportions made by MLR is violated. By reducing the modeling bias of component proportions, MRSIP is able to better classify observations into two components and thus provide better estimates of the component regression parameters. In addition, we can see that MRSIP(S) provides similar results to MRSIP(T), which demonstrates that SIR provides good initial values for MRSIP.

Next, the standard error estimation and the confidence interval construction for \({\varvec{\beta }}_1, {\varvec{\beta }}_2, \sigma _1^2, \sigma _2^2\) and \({\varvec{\alpha }}\) are reported in Table 9 via the conditional bootstrap procedure introduced in Example 1. The results are based on MRSIP(S). From Table 9, we can see that the actual coverage probabilities are usually close to the nominal coverage probabilities.

We now illustrate the performance of the MRSIP model for a high dimensional setting when p is increased to 50. In this case, \({\varvec{\alpha }}=(1,1,1,0,\ldots ,0)^\top /\sqrt{3}\) is a 50 dimensional vector, \({\varvec{\beta }}_1=(3,0,3,0,\ldots ,0)^\top \) and \({\varvec{\beta }}_2=(-3,2,0,3,0,\ldots ,0)^\top \) are 51-dimensional vectors. \(\pi _j(\cdot )\) and \(\sigma _j^2\), \(j=1,2\) are still the same as before. The results are summarized in Table 10, where the AB and SD of \({\varvec{\beta }}\) are defined as

The results are based on MRSIP(S). From the table, we can see that, given the same sample size, increasing the number of predictors degrades the performance of MRSIP. However, if we increase the sample size to 800, even with 50 predictors, the MRSIP still works very well.

5 Real data examples

Example 1

(NBA data) We illustrate the proposed methodology by an analysis of “The effectiveness of National Basketball Association guards”. There are many ways to measure the (statistical) performance of guards in the NBA. Of interest is how the height of the player (Height), minutes per game (MPG) and free throw percentage (FTP) affect points per minute (PPM) (Chatterjee et al. 1995).

The data set contains some descriptive statistics for all 105 guards for the 1992–1993 season. Since players playing very few minutes are quite different from those who play a sizable part of the season, we only look at those players playing 10 or more minutes per game and appearing in 10 or more games. In addition, Michael Jordan is an outlier, so we also omit him from our data analysis. These criteria exclude 10 players (Chatterjee et al. 1995). We divide each variable by its corresponding standard deviation, so that they have comparable numerical scales.

To evaluate the prediction performance of the proposed models and compare them to the linear regression model (Linear), the mixture of linear regression models (MLR), the nonparametric mixture of regression models (MNP, Huang et al. 2013), the mixture of regression models with varying mixing proportions (VaryPr1, Huang and Yao 2012), and the mixtures of regressions with predictor-dependent mixing proportions (VaryPr2, Young and Hunter 2010), we used d-fold cross-validation with \(d=5\), 10, and the Monte-Carlo cross-validation (MCCV) with \(d=10\), 20 (Shao 1993). In MCCV, the data were partitioned 500 times into disjoint training subsets (with size \(n-d\)) and test subsets (with size d). The mean squared prediction errors evaluated on the test sets are reported as boxplots in Fig. 1b. The MSIM has better prediction performance than the other models, followed by MNP. The average improvement rates of MSIM over MNP, for the four cross-validation methods, are 46.64%, 15.16%, 22.87%, and 25.22%, respectively.
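The MCCV scheme described above can be sketched in a few lines. This is an illustration only: the least-squares line is a stand-in for whichever model is being evaluated, the data are simulated toy data rather than the NBA data, and the function names are hypothetical.

```python
import random


def fit_simple_linear(train):
    """Least-squares line y = a + b*x, a stand-in for any model under comparison."""
    n = len(train)
    mean_x = sum(x for x, _ in train) / n
    mean_y = sum(y for _, y in train) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in train)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in train)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x


def mccv(data, d, n_splits, fit, rng):
    """Monte-Carlo CV: random test sets of size d, training sets of size n - d."""
    indices = list(range(len(data)))
    errors = []
    for _ in range(n_splits):
        test_idx = set(rng.sample(indices, d))
        train = [data[i] for i in indices if i not in test_idx]
        test = [data[i] for i in indices if i in test_idx]
        predict = fit(train)
        # Mean squared prediction error on the held-out observations.
        errors.append(sum((y - predict(x)) ** 2 for x, y in test) / d)
    return errors


# Toy data (not the NBA data): a noisy straight line with 95 observations.
rng = random.Random(0)
data = [(x, 1.0 + 2.0 * x + rng.gauss(0.0, 0.1))
        for x in (rng.random() for _ in range(95))]
errors = mccv(data, d=10, n_splits=500, fit=fit_simple_linear, rng=rng)
```

The list `errors` holds one mean squared prediction error per split, which is the kind of quantity summarized by a boxplot for each model.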

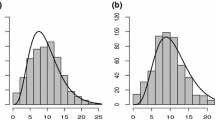

Next, we give a detailed analysis of this dataset through the MSIM. An optimal bandwidth of 0.344 is selected by the CV procedure. Figure 1a contains the estimated mean functions and hard-clustering results, denoted by dots and squares, respectively. The 95% confidence intervals for the three components of \(\hat{{\varvec{\alpha }}}\) are (0.134,0.541), (0.715,0.949) and (0.202,0.679). Therefore, MPG is the most influential factor on PPM. This might be partly explained by the fact that coaches tend to let good players with higher PPM play longer minutes per game (i.e., higher MPG). The two groups of guards found by our new models might be explained by the difference between shooting guards and passing guards.

Example 2

(Corporations core competition data) We now analyze a corporations core competition dataset, which includes descriptive statistics and financial data of 196 listed manufacturing companies for the year 2017. Of interest is what determines the value of a corporation. The response variable is the market value Y, and the independent variables are general assets (\(X_1\)), sales revenue (\(X_2\)), sales revenue growth rate (\(X_3\)), income per capita (\(X_4\)), earning cash flow (\(X_5\)), inventory turnover ratio (\(X_6\)), accounts receivable turnover (\(X_7\)), earning per share (\(X_8\)), return on equity (\(X_9\)), research and development expenditure (\(X_{10}\)), proportion of scientific research personnel (\(X_{11}\)), proportion of staff with undergraduate degrees (\(X_{12}\)), rate of production equipment updates (\(X_{13}\)), sales revenue within industry (\(X_{14}\)), and market share (\(X_{15}\)). Each variable is scaled before further analysis.

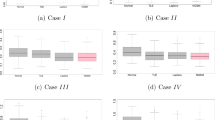

Similar to the previous example, we compare the prediction performance of the proposed models to the five existing models. Both CV and MCCV are applied to this dataset and the mean squared prediction errors are shown in Fig. 2. We can see that the MRSIP and the MLR are the best two models for this dataset. To better compare these two methods, we also compute the average improvement rates of MRSIP over MLR, for the four cross-validation methods, which are 11.19%, 6.2%, 6.8%, and 27.10%, respectively. In conclusion, MRSIP is the best choice for this dataset, followed by the mixture of linear regressions.

Table 11 shows the standard errors and 95% confidence intervals for \(\alpha \), \(\beta _1\), and \(\beta _2\), assuming the MRSIP. It can be seen that \(X_1, X_2\), and \(X_4\) have significant effects on mixing proportions. Note that there are variables, such as \(X_3\), that do not have a significant effect on \(\alpha \) or the \(\beta \)’s; therefore, variable selection methods might help to further improve the accuracy and homogeneity of the model estimation. Hard clustering shows that most companies in the first component are innovative companies, which spend large amounts of money on recruiting high-end talent and developing new products, while the second component contains companies that are mostly more traditional and conservative.

6 Discussion

In this paper, we propose two semiparametric finite mixture of regression models and provide modified EM algorithms to estimate them. We establish the identifiability results of the new models and investigate the asymptotic properties of the proposed estimation procedures. Throughout the article, we assume that the number of components is known and fixed; more research is required on how to select the number of components for the proposed semiparametric mixture models. It will be interesting to know whether the recently proposed EM test (Chen and Li 2009; Li and Chen 2010) can be extended to the proposed semiparametric mixture models. In addition, it would also be interesting to build a formal model selection procedure to compare different semiparametric mixture models. In the real data applications, we use cross-validation criteria to compare different models. When the models are nested, one might use the generalized likelihood ratio statistic proposed by Fan et al. (2001) to test any parametric assumption for the semiparametric models. Furthermore, the assumption of a fixed dimension of predictors can be relaxed and the proposed models can be extended to the cases where the dimension of predictors p also diverges with the sample size n. This might be done by using the idea of penalized local likelihood if a sparsity assumption is added on the predictors. We employed the kernel regression method to estimate the nonparametric functions. One can also use a local polynomial regression method (such as local linear) to possibly reduce the bias and boundary effects of the estimators.

References

Cao J, Yao W (2012) Semiparametric mixture of binomial regression with a degenerate component. Statistica Sinica 22:27–46

Chatterjee S, Handcock MS, Simonoff JS (1995) A casebook for a first course in statistics and data analysis. Wiley, New York

Chen J, Li P (2009) Hypothesis test for normal mixture models: the EM approach. Ann Stat 37:2523–2542

Cook RD, Li B (2002) Dimension reduction for conditional mean in regression. Ann Stat 30:455–474

Fan J, Zhang C, Zhang J (2001) Generalized likelihood ratio statistics and Wilks phenomenon. Ann Stat 29:153–193

Frühwirth-Schnatter S (2001) Markov chain Monte Carlo estimation of classical and dynamic switching and mixture models. J Am Stat Assoc 96:194–209

Green PJ, Richardson S (2002) Hidden Markov models and disease mapping. J Am Stat Assoc 97:1055–1070

Härdle W, Hall P, Ichimura H (1993) Optimal smoothing in single-index models. Ann Stat 21:157–178

Hennig C (2000) Identifiability of models for clusterwise linear regression. J Classif 17:273–296

Hu H, Yao W, Wu Y (2017) The robust EM-type algorithms for log-concave mixtures of regression models. Comput Stat Data Anal 111:14–26

Huang M, Yao W (2012) Mixture of regression models with varying mixing proportions: a semiparametric approach. J Am Stat Assoc 107:711–724

Huang M, Li R, Wang S (2013) Nonparametric mixture of regression models. J Am Stat Assoc 108:929–941

Huang M, Li R, Wang H, Yao W (2014) Estimating mixture of Gaussian processes by kernel smoothing. J Bus Econ Stat 32:259–270

Ichimura H (1993) Semiparametric least squares (SLS) and weighted SLS estimation of single-index models. J Econom 58:71–120

Jordan MI, Jacobs RA (1994) Hierarchical mixtures of experts and the EM algorithm. Neural Comput 6:181–214

Li K (1991) Sliced inverse regression for dimension reduction. J Am Stat Assoc 86(414):316–327

Li P, Chen J (2010) Testing the order of a finite mixture. J Am Stat Assoc 105:1084–1092

Li B, Zha H, Chiaromonte F (2005) Contour regression: a general approach to dimension reduction. Ann Stat 33:1580–1616

Luo R, Wang H, Tsai CL (2009) Contour projected dimension reduction. Ann Stat 37:3743–3778

Ma Y, Zhu L (2012) A semiparametric approach to dimension reduction. J Am Stat Assoc 107(497):168–179

Ma Y, Zhu L (2013) Efficient estimation in sufficient dimension reduction. Ann Stat 41:250–268

Shao J (1993) Linear model selection by cross-validation. J Am Stat Assoc 88:486–494

Stephens M (2000) Dealing with label switching in mixture models. J R Stat Soc B 62:795–809

Titterington D, Smith A, Makov U (1985) Statistical analysis of finite mixture distributions. Wiley, New York

Wang H, Xia Y (2008) Sliced regression for dimension reduction. J Am Stat Assoc 103:811–821

Wang Q, Yao W (2012) An adaptive estimation of MAVE. J Multivar Anal 104:88–100

Wang S, Yao W, Huang M (2014) A note on the identifiability of nonparametric and semiparametric mixtures of GLMs. Stat Probab Lett 93:41–45

Wedel M, DeSarbo WS (1993) A latent class binomial logit methodology for the analysis of paired comparison data. Decis Sci 24:1157–1170

Xiang S, Yao W (2018) Semiparametric mixtures of nonparametric regressions. Ann Inst Stat Math 70:131–154

Xiang S, Yao W, Yang G (2019) An overview of semiparametric extensions of finite mixture models. Stat Sci 34:391–404

Yao W, Lindsay BG (2009) Bayesian mixture labeling by highest posterior density. J Am Stat Assoc 104:758–767

Yao W, Nandy D, Lindsay B, Chiaromonte F (2019) Covariate information matrix for sufficient dimension reduction. J Am Stat Assoc 114:1752–1764

Young DS, Hunter DR (2010) Mixtures of regressions with predictor-dependent mixing proportions. Comput Stat Data Anal 54:2253–2266

Zeng P (2012) Finite mixture of heteroscedastic single-index models. Open J Stat 2:12–20

Acknowledgements

The authors are grateful to the editor, the guest editor, and two referees for numerous helpful comments during the preparation of the article. Funding was provided by National Natural Science Foundation of China (Grant No. 11601477), National Science Foundation (USA) (Grant No. DMS-1461677), Department of Energy (Grant No. 10006272), the First Class Discipline of Zhejiang - A (Zhejiang University of Finance and Economics-Statistics), China (Grant No. NA) and Natural Science Foundation of Zhejiang Province (Grant No. LY19A010006).


Appendix A

Technical conditions

(C1) The sample \(\{({{\varvec{x}}}_i,Y_i),i=1,\ldots ,n\}\) is independent and identically distributed from its population \(({{\varvec{x}}},Y)\). The support for \({{\varvec{x}}}\), denoted by \(\mathscr {X}\), is a compact subset of \(\mathbb {R}^p\).

(C2) The marginal density of \({\varvec{\alpha }}^\top {{\varvec{x}}}\), denoted by \(f(\cdot )\), is twice continuously differentiable and positive at the point z.

(C3) The kernel function \(K(\cdot )\) has a bounded support, and satisfies that

$$\begin{aligned}&\int K(t)dt=1,\qquad \int tK(t)dt=0,\qquad \int t^2K(t)dt<\infty ,\nonumber \\&\int K^2(t)dt<\infty ,\qquad \int |K^3(t)|dt<\infty . \end{aligned}$$

(C4) \(h\rightarrow 0\), \(nh\rightarrow \infty \), and \(nh^5=O(1)\) as \(n\rightarrow \infty \).

(C5) The third derivative \(|\partial ^3\ell ({\varvec{\theta }},y)/\partial \theta _i\partial \theta _j\partial \theta _k|\le M(y)\) for all y and all \({\varvec{\theta }}\) in a neighborhood of \({\varvec{\theta }}(z)\), and \(E[M(y)]<\infty \).

(C6) The unknown functions \({\varvec{\theta }}(z)\) have continuous second derivatives. For \(j=1,\ldots ,k\), \(\sigma _j^2(z)>0\), and \(\pi _j(z)>0\) for all \({{\varvec{x}}}\in \mathscr {X}\).

(C7) For all i and j, the following conditions hold:

$$\begin{aligned} E\left[ \left| \frac{\partial \ell ({\varvec{\theta }}(z),Y)}{\partial \theta _i}\right| ^3\right]<\infty ,\qquad E\left[ \left( \frac{\partial ^2\ell ({\varvec{\theta }}(z),Y)}{\partial \theta _i\partial \theta _j}\right) ^2\right] <\infty . \end{aligned}$$

(C8) \({\varvec{\theta }}_0''(\cdot )\) is continuous at the point z.

(C9) The third derivative \(|\partial ^3\ell ({\varvec{\pi }},y)/\partial \pi _i\partial \pi _j\partial \pi _k|\le M(y)\) for all y and all \({\varvec{\pi }}\) in a neighborhood of \({\varvec{\pi }}(z)\), and \(E[M(y)]<\infty \).

(C10) The unknown functions \({\varvec{\pi }}(z)\) have continuous second derivatives. For \(j=1,\ldots ,k\), \(\pi _j(z)>0\) for all \({{\varvec{x}}}\in \mathscr {X}\).

(C11) For all i and j, the following conditions hold:

$$\begin{aligned} E\left[ \left| \frac{\partial \ell ({\varvec{\pi }}(z),Y)}{\partial \pi _i}\right| ^3\right]<\infty ,\qquad E\left[ \left( \frac{\partial ^2\ell ({\varvec{\pi }}(z),Y)}{\partial \pi _i\partial \pi _j}\right) ^2\right] <\infty . \end{aligned}$$

(C12) \({\varvec{\pi }}''(\cdot )\) is continuous at the point z.
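As a concrete check of the kernel condition (C3), the sketch below numerically integrates the Epanechnikov kernel, which has bounded support on \([-1,1]\). The choice of this kernel and the midpoint-rule integrator are assumptions made for illustration; the conditions above do not single out a particular kernel.

```python
def epanechnikov(t):
    """Epanechnikov kernel K(t) = 0.75 * (1 - t^2) on [-1, 1], zero elsewhere."""
    return 0.75 * (1.0 - t * t) if abs(t) <= 1.0 else 0.0


def integrate(f, a=-1.0, b=1.0, n=20000):
    """Midpoint-rule approximation of the integral of f over [a, b]."""
    step = (b - a) / n
    return step * sum(f(a + (i + 0.5) * step) for i in range(n))


total_mass = integrate(epanechnikov)                               # int K(t) dt
first_moment = integrate(lambda t: t * epanechnikov(t))            # int t K(t) dt
second_moment = integrate(lambda t: t * t * epanechnikov(t))       # int t^2 K(t) dt
squared_integral = integrate(lambda t: epanechnikov(t) ** 2)       # int K^2(t) dt
cubed_integral = integrate(lambda t: abs(epanechnikov(t) ** 3))    # int |K^3(t)| dt
```

For this kernel the exact values are 1, 0, 1/5, and 3/5 for the first four integrals, so the normalization, symmetry, and finiteness requirements in (C3) all hold.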

Proof of Theorem 1

Ichimura (1993) has shown that under conditions (i)–(iv), \({\varvec{\alpha }}\) is identifiable. Further, Huang et al. (2013) showed that with condition (v), the nonparametric functions are identifiable. This completes the proof. \(\square \)

Proof of Theorem 2

Let

Define \(\hat{{\varvec{\pi }}}^*=(\hat{\pi }_1^*,\ldots ,\hat{\pi }_{k-1}^*)^\top \), \(\hat{{{\varvec{m}}}}^*=(\hat{m}_1^*,\ldots ,\hat{m}_k^*)^\top \), \(\hat{{{\varvec{\sigma }}}}^*=(\hat{\sigma }_1^*,\ldots ,\hat{\sigma }_k^*)^\top \) and denote \(\hat{{\varvec{\theta }}}^*=(\hat{{\varvec{\pi }}}^{*\top },\hat{{{\varvec{m}}}}^{*\top },(\hat{{{\varvec{\sigma }}}}^{*2})^\top )^\top \). Let \(a_n=(nh)^{-1/2}\) and

If \((\hat{{\varvec{\pi }}},\hat{{{\varvec{m}}}},\hat{{{\varvec{\sigma }}}}^2)^\top \) maximizes (4), then \(\hat{{\varvec{\theta }}}^*\) maximizes

with respect to \({\varvec{\theta }}^*\). By a Taylor expansion,

where

and

By WLLN, it can be shown that \({{\varvec{A}}}_{1n}=-f(z)\mathscr {I}^{(1)}_\theta (z)+o_p(1)\). Therefore,

Using the quadratic approximation lemma (see, for example, Fan and Gijbels 1996), we have that

Note that

where

Since \(\sqrt{n}(\tilde{{\varvec{\alpha }}}-{\varvec{\alpha }})=O_p(1)\), it can be shown that

and

Therefore,

To complete the proof, we now calculate the mean and variance of \({{\varvec{W}}}_n\). Note that

Similarly, we can show that

where \(\kappa _l=\int t^lK(t)dt\) and \(\nu _l=\int t^lK^2(t)dt\). The rest of the proof follows a standard argument. \(\square \)

Proof of Theorem 3

Denote \(Z={\varvec{\alpha }}^\top {{\varvec{x}}}\) and \(\hat{Z}=\hat{{\varvec{\alpha }}}^\top {{\varvec{x}}}\). Let \(\ell ({\varvec{\theta }}(z),X,Y)=\log \sum _{j=1}^k\pi _j(z)\phi (Y|m_j(z),\sigma _j^2(z))\). If \(\hat{{\varvec{\theta }}}(z_0;\hat{{\varvec{\alpha }}})\) maximizes (4), then it solves

Applying a Taylor expansion and using the conditions on h, we obtain

By similar argument as in the previous proof,

Note that

where the second part is handled by (27).

Since \(\hat{{\varvec{\alpha }}}\) maximizes (9), it is the solution to

where \(\lambda \) is the Lagrange multiplier. By the Taylor expansion and using (28), we have that

Define

and apply (27),

Interchanging the summations in the last term, we get

Let \(\varGamma _\alpha =I-{\varvec{\alpha }}{\varvec{\alpha }}^\top +o_p(1)\). Combining (29) and (30), and multiplying by \(\varGamma _\alpha \), we have

It can be shown that the right-hand side of (31) has the covariance matrix \(\varGamma _\alpha {{\varvec{Q}}}_1\varGamma _\alpha \), which completes the proof. \(\square \)
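The role of the matrix \(I-{\varvec{\alpha }}{\varvec{\alpha }}^\top \) in the proof above can be illustrated numerically. The sketch below drops the \(o_p(1)\) term and assumes a unit-length \({\varvec{\alpha }}\), using the value \((1,1,1,0,\ldots ,0)^\top /\sqrt{3}\) with \(p=8\) from the simulations; the helper names are hypothetical.

```python
import math


def projection_complement(alpha):
    """Return I - alpha alpha^T as a list of rows."""
    p = len(alpha)
    return [[(1.0 if i == j else 0.0) - alpha[i] * alpha[j] for j in range(p)]
            for i in range(p)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


alpha = [1 / math.sqrt(3)] * 3 + [0.0] * 5   # unit-length index vector, p = 8
gamma = projection_complement(alpha)

# The direction alpha itself is annihilated.
gamma_alpha = matvec(gamma, alpha)

# Applying the matrix twice gives the same result as applying it once.
v = [float(i + 1) for i in range(8)]
once = matvec(gamma, v)
twice = matvec(gamma, once)
```

Because the matrix removes the component along \({\varvec{\alpha }}\), a covariance of the form \(\varGamma _\alpha {{\varvec{Q}}}_1\varGamma _\alpha \) is degenerate in that direction, which is in line with the constraint handled by the Lagrange multiplier.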

Proof of Theorem 4

Ichimura (1993) has shown that under conditions (i)–(iv), \({\varvec{\alpha }}\) is identifiable. Furthermore, Huang and Yao (2012) showed that with condition (v), \(({\varvec{\pi }}(\cdot ),{\varvec{\beta }},{{\varvec{\sigma }}}^2)\) are identifiable. This completes the proof. \(\square \)

Proof of Theorem 5

This proof is similar to the proof of Theorem 2.

Let \(\hat{\pi }_j^*=\sqrt{nh}\{\hat{\pi }_j-\pi _j(z)\}\), \(j=1,\ldots ,k-1\), and \(\hat{{\varvec{\pi }}}^*=(\hat{\pi }_1^*,\ldots ,\hat{\pi }_{k-1}^*)^\top \). It can be shown that

where

To complete the proof, notice that

and Cov\(({{\varvec{W}}}_{2n})=f(z)\mathscr {I}^{(2)}_\pi (z)\nu _0+o_p(1)\). The rest of the proof follows a standard argument. \(\square \)

Proof of Theorem 6

The proof is similar to the proof of Theorem 3. It can be shown that

and therefore,

Since \(\hat{{{\varvec{\lambda }}}}\) maximizes (14), it is the solution to

where \(\gamma \) is the Lagrange multiplier. By Taylor series and (32)

where \({{\varvec{\varLambda }}}_{1i}=\begin{pmatrix}{{\varvec{x}}}_i{\varvec{\pi }}'(Z_i)\\ \mathbf{I} \end{pmatrix}\), and the last equation is the result of interchanging the summations. Let \(\varGamma _\alpha =\begin{pmatrix}{{\varvec{I}}}-{\varvec{\alpha }}{\varvec{\alpha }}^\top &{}\mathbf 0 \\ \mathbf 0 &{}{{\varvec{I}}}\end{pmatrix}+o_p(1)\). By (33), and multiplying by \(\varGamma _\alpha \), we have

It can be shown that the right-hand side of (34) has the covariance matrix \(\varGamma _\alpha {{\varvec{Q}}}_2\varGamma _\alpha \), which completes the proof. \(\square \)

Cite this article

Xiang, S., Yao, W. Semiparametric mixtures of regressions with single-index for model based clustering. Adv Data Anal Classif 14, 261–292 (2020). https://doi.org/10.1007/s11634-020-00392-w
