Abstract

This paper explores aperiodic stochastic resonance in neural systems driven by colored noise. For nonlinear dynamical systems driven by Gaussian colored noise, we prove that the stochastic sample trajectory converges in mean square to the corresponding deterministic trajectory as the noise intensity tends to zero, under global and local Lipschitz conditions, respectively. Then, following the forbidden interval theorem, we predict the phenomenon of aperiodic stochastic resonance in bistable and excitable neural systems. Two neuron models are used to verify the theoretical prediction. Moreover, we disclose aperiodic stochastic resonance induced by the correlation time; this finding suggests that adjusting the noise correlation might be a biologically more plausible mechanism in neural signal processing.

Introduction

Dynamical models from the cellular level to the network and cortical levels play an essential role in cognitive neuroscience (Levin and Miller 1996; Wang et al. 2014; Déli et al. 2017; Mizraji and Lin 2017; Song et al. 2019). Owing to the random release of neurotransmitters, the stochastic bombardment of synaptic inputs and the random opening and closing of ion channels, noise is ubiquitous in neural systems. Various noise-induced non-equilibrium phenomena disclosed in experiments or dynamical models, such as stochastic synchronization (Kim and Lim 2018), noise-induced phase transition (Lee et al. 2014) and stochastic integer multiple discharge (Gu and Pan 2015), are helpful in explaining the biophysical mechanisms underlying neural information processing and coding.

Stochastic resonance, initially proposed to explain the periodicity of the continental ice volume in the Quaternary era (Benzi et al. 1981), is one such counterintuitive phenomenon (Gammaitoni et al. 1998; Nakamura and Tateno 2019; Xu et al. 2020; Zhao et al. 2020), in which a weak coherent signal can be amplified by noise through a certain nonlinearity. In general, a suitable external weak signal is a prerequisite for stochastic resonance. When the external weak signal is absent or replaced by an intrinsic periodicity, the phenomenon is referred to as coherence resonance (Guan et al. 2020), which often appears in systems close to a Hopf bifurcation. When the external weak signal is not periodic, it is called aperiodic stochastic resonance (Collins et al. 1995; 1996a, b; Tiwari et al. 2016).

Because the weak signal is aperiodic, the spectral amplification factor and the output signal-to-noise ratio, typical for periodic signals (Liu and Kang 2018; Yan et al. 2013), are no longer suitable as quantifying indexes. In fact, aperiodic stochastic resonance emphasizes shape matching rather than frequency matching; thus the cross-correlation measure (Collins et al. 1995; 1996a, b) and the input–output mutual information (Patel and Kosko 2005, 2008) are the commonly used indexes. Although the quantification is seemingly complex, the principle of aperiodic stochastic resonance has found much significance in neural processing and coding, since the spike trains of action potentials observed in hearing enhancement (Zeng et al. 2000) and visual perception experiments (Dylov and Fleischer 2010; Liu and Li 2015; Yang 1998) tend to be nonharmonic. Very recently, the principle of aperiodic stochastic resonance has been effectively applied to design a visual perception algorithm using spiking networks (Fu et al. 2020).

Noise correlation is common in cortical firing activities. Nevertheless, most of the literature takes Gaussian white noise for granted; only a few studies (Averbeck et al. 2006; Guo 2011; Sakai et al. 1999) have paid attention to the “color” of noise, which is far from enough. Therefore, in this paper we investigate the effect of Gaussian colored noise of Ornstein–Uhlenbeck type (Floris 2015; Wang and Wu 2016), with nonzero correlation time, on aperiodic stochastic resonance. As a starting point, we generalize the existing zeroth-order perturbation results (Freidlin et al. 2012) for nonlinear dynamical systems from Gaussian white noise to Gaussian colored noise. We then follow the “forbidden interval” theorem (Kosko et al. 2009) and use direct simulation to explore aperiodic stochastic resonance in bistable and excitable neural systems.

The paper is structured as follows. In “General results” section, we introduce some preliminaries and main results. In “Proof of general results”, we provide the proof for the perturbation property under global and local Lipschitz conditions, respectively. And then we predict the phenomenon of aperiodic stochastic resonance based on information theory measure through Theorem 3 in the same section. In “Numerical verification” section, numerical results based on two types of neuron models are shown to disclose the functional role of noise correlation time. Finally, conclusions are drawn in “Conclusion and discussion” section.

General results

Suppose that \(X (t )\) satisfies the general d-dimensional stochastic differential equation driven by an m-dimensional Ornstein–Uhlenbeck process

where \(X_{t} = (X^{1} (t)\;\;X^{2} (t) \;\ldots \;X^{d} (t))'\) is the state vector, \(f(X_{t} ,t) = (f^{1} (X_{t} ,t)\;\;\;f^{2} (X_{t} ,t) \;\ldots\; f^{d} (X_{t} ,t))'\) is the field vector, the function matrix \(g(X_{t} ,t) = (g_{j}^{i} (X_{t} ,t))_{d \times m}\) describes the noise intensity, and \(U(t) = (u_{1} (t)\;\;u_{2} (t) \;\ldots\; u_{m} (t)\;)'\) is the m-dimensional Ornstein–Uhlenbeck process. Equation (1) is essentially shorthand for the following equations

for \(i = 1,\,2, \ldots ,d\) and \(j = 1,2, \ldots ,m\). Here, each scalar Ornstein–Uhlenbeck process \(u_{j} (t)\), also referred to as Gaussian colored noise, is defined on a complete probability space \((\varOmega ,\mathscr{F},\{ {\mathscr{F}}_{t} \}_{t \ge 0} ,P)\) with a filtration \(\{ {\mathscr{F}}_{t} \}_{t \ge 0}\) that satisfies the usual conditions (Øksendal 2005; Mao 2007): it is increasing and right continuous, and \({\mathscr{F}}_{0}\) contains all P-null sets. In Eq. (1b), \(W_{i} (t)\) (\(1 \le i \le m\)) are statistically independent Wiener processes satisfying

In this paper, we assume that the Ornstein–Uhlenbeck process \(u_{j} (t)\) is stationary, that is, \({{u}_{j}}(t)\sim N(0,0.5\tau {{\sigma }^{2}})\) for all \(t \ge 0\). It is also known from the Itô formula that

Suppose that \(\hat{X}(t)\) satisfies

Then, the following main results in Theorems 1 and 2 state that

as \(\sigma \to 0\) under the global and local Lipschitz conditions, respectively.

Theorem 1

Let \(f^{i} :\;R^{d} \to R\) and \(g_{j}^{i} :\;R^{d} \to R\) in the system (1) be Borel measurable functions. Assume that there is a positive constant \(L\) such that \(f^{i}\) and \(g_{j}^{i}\) satisfy

for all \(x_1, x_2 \in R^d\), namely, \(f^{i}\) and \(g_{j}^{i}\) are globally Lipschitz continuous. Also, assume that there is a pair of positive constants \(K\) and \(\gamma \in (0,1)\) such that \(f^{i}\) and \(g_{j}^{i}\) satisfy the global growth conditions

for all \((x,t) \in R^d \times [0, T]\). Here \(i = 1,2, \ldots ,d\) and \(j = 1,2, \ldots ,m\). Then, for every \(T > 0\), there exist positive constants \(a_{4}\) and \(b_{4}\) (see Eqs. (11) and (12) in “Proof of general results” section, respectively) such that

where \(A_{ 1} = 2dm(m + 1)T^{2} K^{2} (4\xi_{1} + \sqrt {\xi_{2} a_{4} \exp (b_{4} T)} )\), \(B_{ 1} = (m + 1)dTL\), and

for \(k = 1,2, \ldots\).

Theorem 2

Let \(f^{i} :\;R^{d} \to R\) and \(g_{j}^{i} :\;R^{d} \to R\) in the system (1) for all \(i = 1,2,\ldots,d\) and \(j = 1,2,\ldots,m\) be Borel measurable functions, satisfying the local Lipschitz condition

for all \(x_{1}\),\(x_{2} \in R^{d}\) with \(\left\| {x_{1} } \right\| \le N\) and \(\left\| {x_{2} } \right\| \le N\) and the growth conditions (5) . Here \(L_{N}\) is a positive constant for any \(N > 0\). Then, for every \(T > 0\), there holds \(E\left[ {\;\mathop {\sup }\limits_{0 \le t \le T} \left| {X_{t} - \hat{X}_{t} } \right|^{2} } \right] \to 0\) as \(\sigma \to 0\).

Throughout the paper, we use \(\;\left| {\; \cdot \;} \right|\) to denote the Euclidean norm in \(R^{d}\) or the trace norm of matrices; that is, for a vector \(X\), \(\left| X \right|^{2} = \sum\nolimits_{i} {\left| {X_{i} } \right|^{2} }\) and for a matrix \(A\), \(\left| A \right|^{2} = \sum\nolimits_{i,j} {\left| {A_{ij} } \right|^{2} }\). We remark that Theorem 1 states that the solution of the perturbed system (1), under the global Lipschitz condition and the growth condition, can be approximated by the solution of the unperturbed system when the intensity of the Gaussian colored noise tends to zero, while Theorem 2 states the same conclusion but relaxes the global Lipschitz condition to the local Lipschitz condition. Both can be regarded as generalizations of the perturbation results associated with the zeroth-order approximation (Freidlin et al. 2012). More precisely, the corresponding perturbation result in the book of Freidlin and Wentzell can be recovered from Theorem 1 when \(\tau \to 0\) (i.e. in the Gaussian white noise limit). Using the two theorems, we provide in Theorem 3 below an assertion on the existence of aperiodic stochastic resonance in certain nonlinear systems with Gaussian colored noise.
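The convergence stated in Theorems 1 and 2 can be illustrated numerically. The following is a minimal Monte Carlo sketch, not the authors' code. It assumes a scalar system with the logistic drift \(f(x) = -x + h(x) + s\) (the bistable neuron used in the numerical section, with a constant input \(s\)), additive noise \(g = 1\), and an Ornstein–Uhlenbeck process written as \(du = -(u/\tau)\,dt + \sigma\,dW\), a form chosen here because it reproduces the stationary variance \(0.5\tau\sigma^{2}\) stated above. The function names, step size, number of paths and seed are illustrative assumptions.

```python
import numpy as np

def h(x, a=8.0):
    """Logistic activation h(x) = 1 / (1 + exp(-a x))."""
    return 1.0 / (1.0 + np.exp(-a * x))

def drift(x, s=-0.5):
    # Assumed drift f(x) = -x + h(x) + s with a constant (hypothetical) input s.
    return -x + h(x) + s

def mean_sup_sq_error(sigma, tau=0.4, T=10.0, dt=0.01, n_paths=500,
                      x0=-0.5, seed=0):
    """Monte Carlo estimate of E[ sup_{0<=t<=T} |X_t - Xhat_t|^2 ]."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(T / dt))
    x = np.full(n_paths, x0)      # perturbed paths X_t
    x_hat = np.full(1, x0)        # deterministic path Xhat_t
    # Stationary start: u(0) ~ N(0, tau * sigma^2 / 2).
    u = sigma * np.sqrt(0.5 * tau) * rng.standard_normal(n_paths)
    sup_sq = np.zeros(n_paths)
    for _ in range(n_steps):
        # Euler step for the state driven by colored noise u.
        x = x + dt * (drift(x) + u)
        x_hat = x_hat + dt * drift(x_hat)
        # Euler-Maruyama step for the assumed OU equation.
        u = u - dt * u / tau + sigma * np.sqrt(dt) * rng.standard_normal(n_paths)
        sup_sq = np.maximum(sup_sq, (x - x_hat) ** 2)
    return float(sup_sq.mean())

if __name__ == "__main__":
    for sigma in (0.4, 0.2, 0.1, 0.05):
        print(sigma, mean_sup_sq_error(sigma))
```

Because the same seed is reused for every \(\sigma\), the runs share their random numbers, so the estimated error should shrink steadily as \(\sigma\) decreases, in line with the \(\sigma^{2}\) factor that appears in the bounds used in the proofs.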

Aperiodic stochastic resonance usually refers to a special kind of stochastic resonance in which the weak driving signal is aperiodic. As pointed out in the Introduction, the mutual information is better suited than the signal-to-noise ratio as an index for quantifying aperiodic stochastic resonance. To this end, we suppose that the nonlinear system receives a binary random signal, denoted by \(S (t )\in \left\{ {s_{1} ,s_{2} } \right\}\), and that its output \(Y (t )\in \left\{ {0 ,\;1} \right\}\) is a quantized signal as well, depending on whether the output response \(x (t )\) is below or above a certain threshold. We emphasize that this kind of quantization is very common in the context of stochastic resonance and neural dynamics.

Let \(I(S,Y)\) be the Shannon mutual information of the discrete input signal \(S\) and the discrete output signal \(Y\), then it can be defined by the difference of the output’s unconditional entropy and conditional entropy (Cover and Thomas 1991), namely \(I(S,Y) = H(Y) - H(Y\left| S \right.)\). Denote by \(P_{S} (s)\) the probability density of the input signal, \(P_{Y} (y)\) the probability density of the output signal, \(P_{Y\left| S \right.} (y\left| s \right.)\) the conditional density of the output given the input, and \(P_{S,Y} (s,y)\) the joint density of the input and the output. Then,

From the final equation above, it is clear that \(I(S,Y) = 0\) if and only if \(P_{S,Y} (s,y) = P_{S} (s)P_{Y} (y)\). Moreover, by means of Jensen’s inequality one finds that \(I(S,Y) \ge 0\), where equality holds if and only if the input signal and the output signal are mutually independent. Hence, Shannon mutual information, which measures the statistical dependence between the input and output signals, is suitable for detecting how much of the subthreshold aperiodic signal is contained in the output spike trains. Noise usually deteriorates the transmission performance of dynamical systems; however, when aperiodic stochastic resonance occurs, the transmission capacity is optimally enhanced at an intermediate noise level.
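As a concrete illustration of these properties, the sketch below estimates \(I(S,Y)\) from two discrete sample sequences by replacing the probabilities with relative frequencies. It is a minimal sketch under stated assumptions: the function name `mutual_information` is hypothetical, and the logarithm is taken to base 2 (bits), which the text does not specify.

```python
import numpy as np

def mutual_information(s, y):
    """Empirical Shannon mutual information (in bits) of two discrete sequences.

    Probabilities are approximated by relative frequencies; terms with zero
    joint frequency contribute nothing (0 * log 0 := 0).
    """
    s = np.asarray(s)
    y = np.asarray(y)
    mi = 0.0
    for sv in np.unique(s):
        p_s = np.mean(s == sv)
        for yv in np.unique(y):
            p_y = np.mean(y == yv)
            p_sy = np.mean((s == sv) & (y == yv))
            if p_sy > 0.0:
                mi += p_sy * np.log2(p_sy / (p_s * p_y))
    return float(mi)

if __name__ == "__main__":
    s = [0, 0, 1, 1]
    print(mutual_information(s, s))             # output copies the input
    print(mutual_information(s, [0, 1, 0, 1]))  # empirically independent
    print(mutual_information(s, [0, 0, 0, 0]))  # silent output
```

In this toy example the identical pair gives the full input entropy of one bit, while the independent pair and the constant (silent) output both give zero, matching the statement that \(I(S,Y) = 0\) exactly when the joint law factorizes.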

Note that a nonmonotonic dependence of the input–output mutual information on noise intensity signifies the occurrence of aperiodic stochastic resonance; thus a direct proof of the existence of aperiodic stochastic resonance would require locating the extreme point of the mutual information. However, explicit formulas for the mutual information are often hard to obtain, so such a direct proof is almost impossible. To make our results generally applicable, we adopt an indirect proof based on the “forbidden interval” theorem (Patel and Kosko 2008), as stated in Theorem 3.

Theorem 3

Consider stochastic resonant systems of the form in Eq. (1) with \(f(X_{t} ,t) = \tilde{f}(X_{t} ) + \tilde{S}(t)\) and \(\tilde{S}(t) = [S(t)\;\;0\;\; \ldots \;\;0]'\). Suppose that \(\tilde{f}(x)\) and \(g(x)\) satisfy the local Lipschitz condition and the growth condition (5). Suppose that the input signal \(S(t) \in \{ s_{1} ,s_{2} \}\) is subthreshold, that is, \(S(t) < \theta\) with \(\theta\) being some crossing threshold. Suppose that for some sufficiently large noise intensity there is some statistical dependence between the binary input and the impulsive output, that is to say, \(I(S,Y) > 0\) holds true for some \(\sigma_{0} > 0\). Then, the stochastic resonant systems exhibit the aperiodic stochastic resonance effect in the sense that \(I(S,Y) \to 0\) as \(\sigma \to 0\).

Theorem 3 gives a sufficient condition for aperiodic stochastic resonance in the system (1) with subthreshold signals. It is known from Jensen’s inequality that \(I(S,Y) \ge 0\), and that \(I(S,Y) = 0\) if and only if \(S\) and \(Y\) are statistically independent. We can therefore reasonably suppose that there exists some \(\sigma_{0} > 0\) such that \(I(S,Y) > 0\). The “forbidden interval” theorem states that what goes down must go up (Patel and Kosko 2005, 2008; Kosko et al. 2009), thus the assertion in Theorem 3 is proven once one verifies that \(I(S,Y) \to 0\) as \(\sigma \to 0\). Consequently, starting from the zero-noise limit, an increase of the noise intensity leads to an increase of the mutual information and thus enhances the ability to discriminate subthreshold signals.

Proof of general results

In this section we give the proofs of the above theorems. To avoid lengthy and tedious deductions, we only state the lemmas involved here and move their proofs to the appendix.

Lemma 1

Let \(k \ge 1\) be an integer. The stationary OU process (1b) has the property that for all \(T \ge 0\),

Lemma 2

Let \(f^{i} :\;R^{d} \to R\) and \(g_{j}^{i} :\;R^{d} \to R\) in Eq. (1) be Borel measurable functions that satisfy the global Lipschitz condition (4) or the local Lipschitz condition (8) and the growth conditions (5). Then for any initial value \(X_{0} \in R^{d}\), Eq. (1) has a unique global solution \(X_{t}\) on \(t \ge 0\). Moreover, for any integer \(p \ge 2\), the solution has the property that

with

and \(\bar{k}\) is an integer satisfying

Proof of Theorem 1

Fix \(T > 0\) arbitrarily. Using the elementary inequality \(u^{\gamma } \le 1 + u\) for any \(u \ge 0\), we see from (5) that

To show the assertion (6), let us start with the scalar equation

Using the inequality \((u_{1} + \cdots + u_{n} )^{2} \le n(u_{1}^{2} + \cdots + u_{n}^{2} )\), we get

for \(0 < t < T\). We emphasize that the inequality (14) has been used here. As the right-hand-side terms are increasing in \(t\), we derive

An application of the Gronwall inequality implies the required assertion (6). □

Lemma 3

Let \(f^{i} :\;R^{d} \to R\) in Eq. (1) be Borel measurable functions that satisfy the local Lipschitz condition (8) and the growth condition (5). Then for any initial value \(X_{0} \in R^{d}\), Eq. (3) has a unique global solution \(\hat{X}_{t}\) on \(t \ge 0\). Moreover, for any \(T > 0\), the solution has the property that

with \(c_{p} = d^{{\frac{p}{2}}} \left( {2^{p - 1} \left| {X_{0} } \right|^{p} + T^{p} 2^{2(p - 1)} d^{{\frac{p}{2}}} K^{p} T^{p} } \right)\).

Proof of Theorem 2

The local Lipschitz condition and the growth condition ensure the existence of a unique solution of the system (1). We use a technique adapted from the work of Mao and Sabanis (2003) to show the required assertion (3). For each \(N > \sqrt d (\left| {X_{0} } \right| + TK)\exp (\sqrt d KT)\), Lemma 3 gives \(\mathop {\sup }\limits_{0 \le t \le T} \left| {\hat{X}_{t} } \right| < N\). Let us define the stopping time \(\tau_{N} = \inf \{ t \ge 0:\left| {X_{t} } \right| \ge N\}\). Clearly

where \(1_{A}\) is the indicator function of set \(A\).

Let us estimate the first term on the right-hand side of Eq. (16). Noting that the Young inequality (Prato and Zabczyk 1992) \(\alpha \beta \le \eta \frac{{\alpha^{\mu } }}{\mu } + \frac{1}{{\eta^{{\frac{v}{\mu }}} }}\frac{{\beta^{v} }}{v}\) holds true for all \(\alpha ,\beta ,\eta > 0\) and \(\mu ,v > 1\) with \(\mu^{ - 1} + v^{ - 1} = 1\), we have

where \(p > 2\) is an integer and \(\eta\) is a positive number from which it can be deduced that

We know

from Lemma 2 and

from Lemma 3, then,

Substitution of Eqs. (18) and (19) into Eq. (17) yields

Next, we estimate the second term on the right-hand side of Eq. (16). The procedure is very close to the proof of Theorem 1; we list the details here for the reader’s convenience. Clearly,

Noting that \(\left| {X_{{t \wedge \tau_{N} }} - \hat{X}_{{t \wedge \tau_{N} }} } \right|^{2} = \left| {\int_{0}^{{t \wedge \tau_{N} }} {(f^{i} (X_{s} ,s) - f^{i} (\hat{X}_{s} ,s))ds} + \sum\limits_{j = 1}^{m} {\int_{0}^{{t \wedge \tau_{N} }} {g_{j}^{i} (X_{s} ,s)u_{j} (s)ds} } } \right|^{2}\), and using the Hölder inequality, the local Lipschitz condition (8) and the growth condition (5) in turn, we arrive at

As the right-hand-side terms are increasing in \(t\), we derive

and then \(E\left[ {\mathop {\sup }\limits_{0 \le s \le t} \left| {X_{{s \wedge \tau_{N} }} - \hat{X}_{{s \wedge \tau_{N} }} } \right|^{2} } \right] \le d(m + 1)\left( {TL_{N} \int_{0}^{t} {E\left[ {\mathop {\sup }\limits_{0 \le r \le s} \left| {X_{{r \wedge \tau_{N} }} - \hat{X}_{{r \wedge \tau_{N} }} } \right|^{2} } \right]ds} + 2\sigma^{2} mT^{2} K^{2} \left( {4\xi_{1} + \sqrt {\xi_{2} a_{4} \exp (b_{4} T)} } \right)} \right)\). By the Gronwall inequality we obtain

Combination of Eqs. (21) and (22) yields

With Eqs. (20) and (23) substituted into Eq. (16), it is obtained that

For any \(\varepsilon > 0\), we choose \(\eta\) sufficiently small to get \(\frac{{2^{p} \eta }}{p}\left( {a_{p} \exp (b_{p} T) + c_{p} } \right) < \frac{\varepsilon }{3}\) and \(N\) sufficiently large such that \(\frac{p - 2}{{p\eta^{{{2 \mathord{\left/ {\vphantom {2 {(p - 2)}}} \right. \kern-0pt} {(p - 2)}}}} }}\frac{1}{{N^{p} }}a_{p} \exp (b_{p} T) < \frac{\varepsilon }{3}\) . Then, we can choose \(\sigma\) small enough to ensure \(2d\sigma^{2} m(m + 1)T^{2} K^{2} \left( {4\xi_{1} + \sqrt {\xi_{2} a_{4} \exp (b_{4} T)} } \right)\exp \left( {d(m + 1)TL_{N} } \right) < \frac{\varepsilon }{3}.\) Hence, there exists a critical value \(\sigma_{c}\) such that \(E\left[ {\mathop {\sup }\limits_{0 \le t \le T} \left| {X_{t} - \hat{X}_{t} } \right|^{2} } \right] < \varepsilon\) when \(\sigma < \sigma_{c}\). □

Lemma 4

Consider a nonlinear system with \(f(X_{t} ,t) = \tilde{f}(X_{t} ) + S(t)\). Assume that \(\tilde{f}\left( x \right)\) and \(g\left( x \right)\) satisfy the local Lipschitz condition and that \(g\left( x \right)\) obeys the growth condition. Suppose that the system receives a binary input \(S(t) \in \{ s_{1} ,s_{2} \}\). Then for every \(T > 0\) and \(\varepsilon > 0\), as \(\sigma \to 0\) there hold

and

Proof of Theorem 3

Let \(\left\{ {\sigma_{k} } \right\}_{k = 1}^{\infty }\) be an arbitrary decreasing sequence of intensity parameters of the Gaussian colored noise such that \(\sigma_{k} \to 0\) as \(k \to \infty\). Denote by \(X_{k} (t)\) and \(Y_{k} (t)\) the corresponding solution process and the discrete output process of “0” and “1” obtained with the noise parameter \(\sigma_{k}\) in place of \(\sigma\). Recalling that \(I(S,Y) = 0\) if and only if \(S\) and \(Y\) are statistically independent, one only needs to show that \(F_{S,Y} (s,y) = F_{S} (s)F_{Y} (y)\) or \(F_{Y\left| S \right.} (y\left| s \right.) = F_{Y} (y)\) as \(\sigma \to 0\) for signal symbols \(s \in \left\{ {s_{1} ,s_{2} } \right\}\) and for all \(y \ge 0\). Here \(F_{S,Y}\) denotes the joint distribution function and \(F_{Y\left| S \right.}\) the conditional distribution function.

Note that \(y \ge 0\) means that \(X^{1} (t)\) is capable of crossing the firing threshold from below, then

\(P(Y_{k} (t)\left| {S = s_{i} } \right.) \le P\left( {\mathop {\sup }\limits_{{t_{1} \le t \le t_{2} }} X_{k}^{1} (t) > \theta \left| {S = s_{i} } \right.} \right)\),

and by Lemma 4,

where the first equality is owing to that the input signal is subthreshold. Thus,

or equivalently

Then, using the total probability formula,

Taking the limit \(k \to \infty\) on both sides of this equation, we arrive at

This demonstrates that \(S\) and \(Y\) are statistically independent, and hence \(I(S,Y) = 0\) as \(\sigma \to 0\).□

Numerical verification

Theorem 3 builds a bridge between the perturbation theorem and the existence of stochastic resonance. To verify Theorem 3 intuitively, let us consider two examples. The first example is the noisy feedback neuron model with a quantized output (Patel and Kosko 2008; Gao et al. 2018). Let \(x\) denote the membrane voltage, then

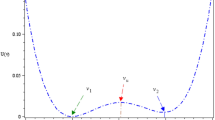

where the logistic function \(h(x) = (1 + e^{ - ax} )^{ - 1}\) (\(a = \text{8}\)) gives a bistable artificial neuron model, and the signal \(S (t )\in \left\{ {A ,\;B} \right\}\) represents the net excitatory or inhibitory input. Here the value of \(S (t )\) is drawn from the binary distribution \(P (S (t )= A )= p\), \(P (S (t )= B )= 1 - p\), and the duration time of each value of \(S (t )\) is considerably larger than the decay time constant \(\tau\). More details can be found in the subsequent figures and in the numerical steps for the mutual information. The neuron feeds its activation back to itself through \(- x(t) + h(x(t))\), and an action potential (spike) is generated if the membrane potential is larger than zero. Note that the vector field is \(f(x) = - x(t) + h(x(t))\). According to the graphical method, it can be seen in Fig. 1 that if the input signal \(S (t )\in \left\{ {A ,\;B} \right\}\) takes values between the two dotted lines, that is, \(- 0.63 < A < B < { - }0.37\), then the neuron has three equilibrium points and, by linear stability analysis, two of them are stable and one is unstable. Since neural information is mainly transmitted by the spike train, the quantized output \(y(t)\) can be defined as

Fig. 1 Schematic of the vector field function \(f(x)\) (blue solid line). The value of the upper dotted line is 0.63, and the value of the lower dotted line is 0.37. The intersections of a dashed line with the S-shaped curve are the equilibrium points, and the first derivative of the vector field is the slope of the tangent line there. Since two of the three slopes are negative and one is positive, two of the three equilibrium points are stable and one is unstable. (Color figure online)

Note that

and

The two inequalities together imply that the vector field \(f(x)\) of the bistable neuron model satisfies the global Lipschitz condition (4) and the growth condition (5). Note that the global Lipschitz condition implies the local Lipschitz condition, which assures the existence and uniqueness of the solution of the model; thus, according to Theorem 3, aperiodic stochastic resonance should exist for a subthreshold input signal. Here, “subthreshold” means that the weak signal cannot evoke an action potential without the help of noise. When the constant value of the input signal keeps the model bistable, the input signal is guaranteed to be subthreshold.

Before exhibiting the numerical results of aperiodic stochastic resonance, let us list the numerical steps for mutual information calculation for the sake of the reader’s reference.

(I) Initialize the parameters A, B, p and \(x(0)\).

(II) Set the time step \(\Delta t = 0.01\) and a series of duration times \(T_{i}\) (\(i \ge 1\)).

(III) For each time span of duration \(T_{i}\), generate a uniformly distributed number r, and let \(S(t) = A\) if \(r > p\); otherwise, let \(S(t) = B\).

(IV) Apply the Euler difference scheme and the Box–Muller algorithm to Eq. (27) (or Eq. (28)) to generate the output spike train \(y(t)\).

(V) Calculate the marginal probability laws \(P(S(t))\) and \(P(y(t))\) and the joint probability law \(P(S(t),\;y(t))\).

(VI) Substitute the above probability laws into Eq. (9) for the mutual information.
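Steps (I) to (VI) can be sketched for the bistable neuron as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the model is taken as \(\dot{x} = -x + h(x) + S(t) + u(t)\) with quantized output \(y = 1\) when \(x > 0\), the Ornstein–Uhlenbeck noise is written as \(du = -(u/\tau)\,dt + \sigma\,dW\) (consistent with the stationary variance \(0.5\tau\sigma^{2}\)), the symbol A is drawn with probability p as in the stated input distribution, and the mutual information is measured in bits. All function names, the initial value \(x(0) = -0.5\) and the seeds are hypothetical.

```python
import math
import numpy as np

def box_muller(rng, n):
    """Standard normal samples from uniform ones via the Box-Muller transform."""
    u1 = 1.0 - rng.random(n)          # in (0, 1], avoids log(0)
    u2 = rng.random(n)
    return np.sqrt(-2.0 * np.log(u1)) * np.cos(2.0 * np.pi * u2)

def simulate_trial(sigma, tau=0.4, A=-0.6, B=-0.4, p=0.7, duration=40.0,
                   n_durations=50, dt=0.01, x0=-0.5, a=8.0, seed=0):
    """Steps (I)-(IV): return the input s and the quantized output y."""
    rng = np.random.default_rng(seed)
    steps = int(round(duration / dt))
    # Step (III): one symbol per duration, with P(S = A) = p.
    symbols = np.where(rng.random(n_durations) < p, A, B)
    s = np.repeat(symbols, steps)
    xi = box_muller(rng, s.size + 1)
    x = x0
    u = sigma * math.sqrt(0.5 * tau) * float(xi[0])   # stationary start
    y = np.zeros(s.size, dtype=int)
    s_list, xi_list = s.tolist(), xi[1:].tolist()
    for k in range(s.size):
        # Step (IV): Euler step for the assumed model and the assumed OU noise.
        x_new = x + dt * (-x + 1.0 / (1.0 + math.exp(-a * x)) + s_list[k] + u)
        u += -dt * u / tau + sigma * math.sqrt(dt) * xi_list[k]
        x = x_new
        y[k] = 1 if x > 0.0 else 0
    return s, y

def mutual_information(s, y):
    """Steps (V)-(VI): frequencies in place of probabilities, result in bits."""
    mi = 0.0
    for sv in np.unique(s):
        p_s = np.mean(s == sv)
        for yv in np.unique(y):
            p_y = np.mean(y == yv)
            p_sy = np.mean((s == sv) & (y == yv))
            if p_sy > 0.0:
                mi += p_sy * np.log2(p_sy / (p_s * p_y))
    return float(mi)

def averaged_mi(sigma, n_trials=100, **kwargs):
    """Average the mutual information over independent trials."""
    return float(np.mean([mutual_information(*simulate_trial(sigma, seed=k, **kwargs))
                          for k in range(n_trials)]))

if __name__ == "__main__":
    for sigma in (0.0, 0.1, 0.4, 1.0):
        print(sigma, averaged_mi(sigma, n_trials=5, n_durations=10))
```

The explicit loop keeps the sketch close to the listed steps; for large parameter sweeps a vectorized or compiled version would be preferable.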

We remark that in Step (V) above, the probabilities involved (see also Table 1) are approximated by statistical frequencies. In all numerical experiments except Fig. 4, the dimensionless duration time of the input signal \(S(t)\) is fixed at \(T = 40\), and the simulated time span consists of 50 such constant duration times. Over one time span, one membrane voltage trajectory or output spike train is tracked, and the mutual information is then obtained from one trial. Note that the definition in Eq. (9) can be rewritten as

thus the mutual information is actually the mathematical expectation of the random variable \(\log \frac{{P_{SY} (s,y)}}{{P_{S} (s)P_{Y} (y)}}\) (Patel and Kosko 2008). So, to improve the accuracy of the above calculation, for each set of given parameters we average the mutual information over 100 trials, as shown in all the relevant figures.

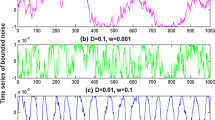

The non-monotonic dependence of mutual information on noise intensity signifies the occurrence of stochastic resonance, as shown in Fig. 2. Since the binary input is subthreshold, there is no spike in the absence of Gaussian colored noise (Fig. 2b). When a small amount of noise is added, the neuron starts to spike (Fig. 2c), but the output signal is quite different from the binary input (Fig. 2a). When the noise is at an appropriate level, the output signal closely resembles the input signal in shape (Fig. 2d), but the resemblance is gradually lost as excessive noise causes too frequent spikes (Fig. 2e). The non-monotonic dependence of the input–output mutual information on noise intensity exactly reflects this change in resemblance (Fig. 2f), thus the phenomenon of stochastic resonance is confirmed.

Fig. 2 Stochastic resonance in the bistable neuron model with quantized output. The binary signal is shown in panel (a). Here \(A = { - }0.6\), \(B = { - }0.4\) and \(p = 0.7\). Since the input signal is subthreshold, there is no “1” in the quantized output when the Gaussian colored noise is absent (\(\sigma = 0\), \(\tau = 0.4\)), as shown in panel (b). As the noise intensity increases, more and more “1s” occur in the quantized output, as shown in panels (c) (\(\sigma = 0.1\), \(\tau = 0.4\)), (d) (\(\sigma = 0.4\), \(\tau = 0.4\)) and (e) (\(\sigma = 1\), \(\tau = 0.4\)), but too much Gaussian colored noise reduces the input–output coherence, so the curves of mutual information versus noise intensity have a single-peak structure, as shown in panel (f): \(\tau = 0.2\) (blue dotted curve), \(\tau = 0.4\) (red dashed curve) and \(\tau = 0.6\) (green solid curve). (Color figure online)

From Fig. 2f one further sees that the correlation time has a definite effect on the bell-shaped curve of the input–output mutual information: the peak height of the mutual information is a decreasing function of the correlation time, while the optimal noise intensity at which the resonant peak is located shifts to a weaker noise level. To disclose the influence of colored noise more systematically, we plot the mutual information as a function of correlation time in Fig. 3. Surprisingly, aperiodic stochastic resonance induced by the correlation time is observed for a given noise intensity, and there exists an optimal correlation time at which the shape matching between the input signal and the output signal is best. Moreover, as the noise intensity increases, the optimal correlation time of the maximal mutual information decreases. The similarity between Fig. 2 and Fig. 3 suggests that noise intensity and correlation time play a similar role. In fact, this conjecture can be confirmed by checking the steady-state fluctuation of the Gaussian colored noise. A simple calculation shows that the stationary noise variance is proportional to the correlation time and to the square of the noise intensity, namely \(D(u) = {{\tau \sigma^{2} } \mathord{\left/ {\vphantom {{\tau \sigma^{2} } 2}} \right. \kern-0pt} 2}\). Although this finding differs somewhat from the observation for conventional (periodic) stochastic resonance induced by Gaussian colored noise (Gammaitoni et al. 1998), where the resonant peak tends to shift to a larger noise level as the correlation time increases, it is meaningful: in neural circuit design the noise intensity may be hard to change, and one may instead tune the correlation time to enhance the information capacity. Additionally, the influence of the duration time of the input signal on aperiodic stochastic resonance is checked in Fig. 4. It is observed that as the duration time decreases, the resonance effects induced by both the noise intensity and the correlation time become weak. This is in common with conventional stochastic resonance, where only a slowly varying periodic signal, rather than a high-frequency signal, can be amplified by noise (Kang et al. 2005).
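The variance relation \(D(u) = \tau\sigma^{2}/2\) can be checked with a short simulation. The sketch below assumes the Ornstein–Uhlenbeck equation \(du = -(u/\tau)\,dt + \sigma\,dW\), which is the form consistent with that variance, and uses the exact one-step update of this linear equation rather than the Euler scheme, so that no discretization bias enters. The function name and all numerical settings are illustrative assumptions.

```python
import numpy as np

def ou_stationary_variance(sigma, tau, dt=0.01, n_paths=20000, seed=0):
    """Sample variance of the assumed OU process after relaxing for 10 * tau."""
    rng = np.random.default_rng(seed)
    n_steps = int(round(10.0 * tau / dt))
    decay = np.exp(-dt / tau)
    # Exact transition: u(t + dt) = decay * u(t) + step_std * N(0, 1).
    step_std = sigma * np.sqrt(0.5 * tau * (1.0 - decay ** 2))
    u = np.zeros(n_paths)             # all paths start from u(0) = 0
    for _ in range(n_steps):
        u = decay * u + step_std * rng.standard_normal(n_paths)
    return float(u.var())

if __name__ == "__main__":
    for sigma, tau in ((0.3, 0.4), (0.6, 0.4), (0.3, 1.6)):
        print(sigma, tau, ou_stationary_variance(sigma, tau), 0.5 * tau * sigma ** 2)
```

In this example the pairs \((\sigma, \tau) = (0.6, 0.4)\) and \((0.3, 1.6)\) share the same theoretical variance 0.072, which illustrates how a longer correlation time can stand in for a larger noise intensity as far as the stationary fluctuation is concerned.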

Fig. 3 Stochastic resonance in the bistable neuron model with quantized output. The binary signal is shown in panel (a). Here \(A = { - }0.6\), \(B = { - }0.4\) and \(p = 0.7\). There is no “1” in the quantized output when the correlation time constant of the Gaussian colored noise is close to zero (\(\tau = 0.001\), \(\sigma = 0.3\)), as shown in panel (b). As the correlation time constant increases, more and more “1s” occur in the quantized output, as shown in panels (c) (\(\tau = 0.2\), \(\sigma = 0.3\)), (d) (\(\tau = 0.5\), \(\sigma = 0.3\)) and (e) (\(\tau = 1.5\), \(\sigma = 0.3\)), but too large a correlation time constant reduces the input–output coherence, so the curves of mutual information versus correlation time constant have a single-peak structure, as shown in panel (f): \(\sigma = 0.3\) (blue dotted curve), \(\sigma = 0.5\) (red dashed curve) and \(\sigma = 0.7\) (green solid curve). (Color figure online)

The second example is the FitzHugh–Nagumo neuron model (Capurro et al. 1998), governed by

where \(v\) stands for the transmembrane voltage, \(w\) denotes a slow recovery variable, and the input signal \(S (t )\in \left\{ {A ,\;B} \right\}\) is again taken as the subthreshold binary signal. Whenever the membrane voltage crosses the threshold value \(\theta = 0.5\) from below, the neuron emits a spike, and the output spike train can be formulated as

with \(t_{i}\) being the occurrence time of the ith spike.

Note that

and

for all \(v_{1} ,\,v_{2} \in R\) with \(\left| {v_{1} } \right| \le N\) and \(\left| {v_{2} } \right| \le N\). Here, the region-dependent Lipschitz constant is \(L_{N} = 4(9N^{4} + 4N^{2} + a^{2} + 1)\). Thus, the vector field of the FitzHugh–Nagumo model is locally Lipschitz. Actually, the local but not global Lipschitz property of the vector field has been proven by the mean value theorem (Patel and Kosko 2008). On the other hand, since the transmembrane voltage and the slow recovery variable are always bounded, one can assume that there exists a constant \(C\) such that for any \(t > 0\), \(\hbox{max} (\left| v \right|,\left| w \right|) \le C\), then

and

that is, the growth condition is satisfied. Again we can choose \(g(v) = 1\) (Figs. 5 and 6) to represent additive noise, or \(g(v) = \frac{{v^{2} }}{{\sqrt {1 + v^{4} } }}\) (Fig. 7) to represent multiplicative noise, for which the Lipschitz and growth conditions are easy to verify. Then according to Theorem 3, the Gaussian colored noise induced aperiodic stochastic resonance in the neuron model can be anticipated.

Stochastic resonance in the FitzHugh–Nagumo neuron model. Here \(g(v) = 1\), \(A = -0.035\), \(B = -0.125\) and \(p = 0.7\). (a) The subthreshold binary signal. (b) Output spikes when the Gaussian colored noise is absent (\(\sigma = 0\), \(\tau = 0.4\)). (c) Output spikes when the noise intensity is small (\(\sigma = 0.003\), \(\tau = 0.4\)). (d) Stochastic resonance effect: output spikes when the noise intensity is moderate (\(\sigma = 0.01\), \(\tau = 0.4\)). (e) Output spikes when the noise intensity is large (\(\sigma = 0.04\), \(\tau = 0.4\)). Too much Gaussian colored noise reduces the input–output coherence, so the curves of mutual information versus noise intensity in panel (f) have a single peak: \(\tau = 0.2\) (blue dotted curve), \(\tau = 0.4\) (red dashed curve) and \(\tau = 0.6\) (green solid curve). (Color figure online)

Stochastic resonance in the FitzHugh–Nagumo neuron model. Here \(g(v) = 1\), \(A = -0.035\), \(B = -0.125\) and \(p = 0.7\). (a) The subthreshold binary signal. (b) Output spikes when the correlation time constant of the Gaussian colored noise is close to zero (\(\tau = 0.001\), \(\sigma = 0.03\)). (c) Output spikes when the correlation time constant is small (\(\tau = 0.01\), \(\sigma = 0.03\)). (d) Stochastic resonance effect: output spikes when the correlation time constant is moderate (\(\tau = 0.05\), \(\sigma = 0.03\)). (e) Output spikes when the correlation time constant is large (\(\tau = 0.2\), \(\sigma = 0.03\)). Too large a correlation time constant reduces the input–output coherence, so the curves of mutual information versus correlation time constant in panel (f) have a single peak: \(\sigma = 0.03\) (blue dotted curve), \(\sigma = 0.05\) (red dashed curve) and \(\sigma = 0.07\) (green solid curve). (Color figure online)

In the numerical simulation of the second example, we take \(a = 0.5\), \(A_{0} = 0.04\), \(\varepsilon = 0.005\), \(b = 0.2466\) and \(\Delta t = 0.001\), and the duration of \(S(t)\) is again taken as 40 time units. We point out that the input binary signals in Figs. 5a and 6a are still subthreshold, although in the absence of noise a spike is generated at the moment the signal switches from one value to the other in Figs. 5b and 6b (Patel and Kosko 2005). Figures 5d and 6d demonstrate again that the best shape matching occurs at a suitable noise intensity or correlation time, at which the input–output mutual information in Figs. 5f and 6f attains its maximum. Thus, the aperiodic stochastic resonance induced by Gaussian colored noise is confirmed. Moreover, Fig. 7 shows that this phenomenon can also be induced by multiplicative Gaussian colored noise, and a similar effect of the correlation time on the resonant peak is observed there. This similarity implies that increasing the correlation time inhibits the aperiodic stochastic resonance effect but reduces the optimal noise intensity. This feature indicates that the noise intensity and the correlation time play the same role here. Note that the “color” of the Gaussian noise always restrains the effect of conventional periodic stochastic resonance and shifts the resonant peak to larger noise intensity (Gammaitoni et al. 1998); thus, the properties of aperiodic stochastic resonance do not seem to be directly generalizable from those of conventional stochastic resonance. In fact, we infer that the properties of aperiodic stochastic resonance should be similar to those of stochastic synchronization, since both can be quantified by the same index.
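For readers who wish to reproduce the noise source numerically, Gaussian colored noise with correlation time \(\tau\) can be sampled from a stationary Ornstein–Uhlenbeck process. The sketch below is only an illustration under stated assumptions: the normalization (stationary variance `intensity / tau`) is one common convention and may differ from the scaling used in Eq. (1), and the names `ou_colored_noise` and `intensity` are hypothetical. It uses the exact one-step transition of the process rather than an Euler step, so it remains valid even when \(\Delta t\) is not small compared with \(\tau\).

```python
import math
import random
from typing import List


def ou_colored_noise(n_steps: int, dt: float, tau: float,
                     intensity: float, seed: int = 0) -> List[float]:
    """Sample a stationary Ornstein-Uhlenbeck path on a uniform time grid.

    Assumed convention (not taken from the paper): the stationary variance is
    intensity / tau and the autocorrelation is (intensity / tau) * exp(-|s| / tau).
    """
    rng = random.Random(seed)
    var = intensity / tau
    decay = math.exp(-dt / tau)                     # exact one-step decay factor
    kick = math.sqrt(var * (1.0 - decay * decay))   # std of the one-step Gaussian increment
    eta = rng.gauss(0.0, math.sqrt(var))            # start in the stationary distribution
    path = [eta]
    for _ in range(n_steps - 1):
        eta = decay * eta + kick * rng.gauss(0.0, 1.0)
        path.append(eta)
    return path


if __name__ == "__main__":
    # 40 time units at dt = 0.001, matching the step size and duration quoted above.
    noise = ou_colored_noise(n_steps=40_000, dt=0.001, tau=0.4, intensity=0.01)
    print(len(noise), min(noise), max(noise))
```

With this convention, letting `tau` tend to zero at fixed `intensity` approaches the white-noise limit, while increasing `tau` lowers the variance and lengthens the memory of the noise.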

The above neuron models have verified the assertion of Theorem 3. In fact, Theorem 3 gives necessary conditions for the aperiodic stochastic resonance effect of Gaussian colored noise in neuron models with subthreshold input signals. By means of Theorem 3, the investigation of aperiodic stochastic resonance under Gaussian colored noise reduces to the simple task of showing that the input–output mutual information has a zero limit. Then, just like the theorems stated in the work of Patel and Kosko (2005, 2008) and Kosko et al. (2009), Theorem 3 again acts as a type of screening device for deciding whether noise benefits the detection of subthreshold signals, as measured by mutual information.
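The input–output mutual information used as the performance measure can be estimated from paired discrete samples with the standard plug-in (empirical frequency) estimator of \(I(S;Y)\) (Cover and Thomas 1991). The sketch below is a minimal illustration and not the authors' code; the function name `mutual_information` and the per-bin pairing of input and output symbols are assumptions.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(s: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Plug-in estimate of I(S; Y) in bits from paired samples (s[k], y[k])."""
    if len(s) != len(y) or len(s) == 0:
        raise ValueError("s and y must be non-empty and of equal length")
    n = len(s)
    joint = Counter(zip(s, y))   # empirical joint counts
    count_s = Counter(s)         # empirical marginal counts of the input
    count_y = Counter(y)         # empirical marginal counts of the output
    mi = 0.0
    for (a, b), c in joint.items():
        # p(a, b) * log2( p(a, b) / (p(a) * p(b)) ), written with raw counts
        mi += (c / n) * math.log2(c * n / (count_s[a] * count_y[b]))
    return mi


if __name__ == "__main__":
    signal = [0, 0, 1, 1]
    print(mutual_information(signal, [0, 0, 1, 1]))  # output copies the input
    print(mutual_information(signal, [0, 0, 0, 0]))  # output never fires
```

An output that never changes (for instance, no spikes at all in the noise-free subthreshold case) carries zero information about the input, which is the zero limit referred to above; an output that copies a balanced binary input carries one bit.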

Conclusion and discussion

After proving that, under certain conditions, the solution of a nonlinear dynamical system perturbed by Gaussian colored noise converges to the solution of its deterministic counterpart as the noise intensity tends to zero, we theoretically predicted the occurrence of aperiodic stochastic resonance induced by Gaussian colored noise in bistable and excitable neuron systems based on the “forbidden interval” theorem. The theoretical prediction provides a technical tool that screens for whether mutual-information-measured stochastic resonance occurs in the detection of subthreshold signals against a background of Gaussian colored noise. The simulation results with two typical neuron models further verified the occurrence of aperiodic stochastic resonance for weak input signals. In particular, we disclosed the novel inhibitive effect of the correlation time of Gaussian colored noise on aperiodic stochastic resonance, and found that the “color” of the noise plays the same role as the noise intensity. Since the noise intensity is not always easy to tune in the design of neural circuits, our finding provides an alternative way to exploit aperiodic stochastic resonance, namely by adjusting the correlation time.

Finally, let us stress the main difference from the existing theoretical proofs and outline some prospects. As is well known, Gaussian white noise, as the formal derivative of the Wiener process with stationary independent increments, cannot describe the correlation of environmental fluctuations. Fractional Gaussian noise, as the formal derivative of fractional Brownian motion, has a power-law power spectral density and can model fluctuations with long-range temporal correlation, while Gaussian colored noise, generated by the Ornstein–Uhlenbeck process, is suitable for modeling short-time correlation. Thus, the present work narrows the gap between aperiodic stochastic resonance induced by Gaussian white noise (Patel and Kosko 2005) and that induced by fractional Gaussian noise (Gao et al. 2018). Moreover, Lévy noises are the formal derivatives of jump-diffusion Lévy processes with stationary independent increments, so the study of aperiodic stochastic resonance with Lévy noise (Patel and Kosko 2008) did not consider the effect of “color”. Note that Gaussian colored noise is only a special member of the family of Lévy colored noise (Lü and Lu 2019), which is capable of describing the subquantal release of neurotransmitter; it will therefore be meaningful to explore the beneficial role of the more general Lévy colored noise in neural processing in the future.

References

Averbeck BB, Latham PE, Pouget A (2006) Neural correlations, population coding and computation. Nat Rev Neurosci 7(5):358–366

Benzi R, Sutera A, Vulpiani A (1981) The mechanism of stochastic resonance. J Phys A 14(11):L453–L457

Capurro A, Pakdaman K, Nomura T, Sato S (1998) Aperiodic stochastic resonance with correlated noise. Phys Rev E 58(4):4820–4827

Collins JJ, Chow CC, Imhoff TT (1995) Aperiodic stochastic resonance in excitable systems. Phys Rev E 52(4):R3321–R3324

Collins JJ, Chow CC, Capela AC, Imhoff TT (1996a) Aperiodic stochastic resonance. Phys Rev E 54(5):5575–5584

Collins JJ, Imhoff TT, Grigg P (1996b) Noise-enhanced information transmission in rat SA1 cutaneous mechanoreceptors via aperiodic stochastic resonance. J Neurophysiol 76(1):642–645

Cover TM, Thomas JA (1991) Elements of information theory. Wiley, New York

Déli E, Tozzi A, Peters JF (2017) Relationships between short and fast brain timescales. Cogn Neurodyn 11(6):539–552

Dylov DV, Fleischer JW (2010) Nonlinear self-filtering of noisy images via dynamical stochastic resonance. Nat Photon 4(5):323–328

Floris C (2015) Mean square stability of a second-order parametric linear system excited by a colored Gaussian noise. J Sound Vib 336:82–95

Freidlin MI, Wentzell AD (2012) Random perturbations of dynamical systems (trans: Szücs J). Springer, Berlin

Fu YX, Kang YM, Chen GR (2020) Stochastic resonance based visual perception using spiking neural networks. Front Comput Neurosci 14:24

Gammaitoni L, Hänggi P, Jung P, Marchesoni F (1998) Stochastic resonance. Rev Mod Phys 70(1):223–287

Gao FY, Kang YM, Chen X, Chen GR (2018) Fractional Gaussian noise enhanced information capacity of a nonlinear neuron model with binary input. Phys Rev E 97(5):052142

Gu HG, Pan BB (2015) Identification of neural firing patterns, frequency and temporal coding mechanisms in individual aortic baroreceptors. Front Comput Neurosci 9:108

Guan LN, Gu HG, Jia YB (2020) Multiple coherence resonances evoked from bursting and the underlying bifurcation mechanism. Nonlinear Dyn 100:3645–3666

Guo DQ (2011) Inhibition of rhythmic spiking by colored noise in neural systems. Cogn Neurodyn 5(3):293–300

Kang YM, Xu JX, Xie Y (2005) Signal-to-noise ratio gain of a noisy neuron that transmits subthreshold periodic spike trains. Phys Rev E 72(2):021902

Kim SY, Lim W (2018) Effect of spike-timing-dependent plasticity on stochastic burst synchronization in a scale-free neuronal network. Cogn Neurodyn 12(3):315–342

Kosko B, Lee I, Mitaim S, Patel A, Wilde MM (2009) Applications of forbidden interval theorems in stochastic resonance. In: Applications of Nonlinear Dynamics. Springer, New York

Lee KE, Lopes MA, Mendes JFF, Goltsev AV (2014) Critical phenomena and noise-induced phase transitions in neuronal networks. Phys Rev E 89(1):012701

Levin JE, Miller JP (1996) Broadband neural encoding in the cricket cercal sensory system enhanced by stochastic resonance. Nature 380(6570):165–168

Liu RN, Kang YM (2018) Stochastic resonance in underdamped periodic potential systems with alpha stable Lévy noise. Phys Lett A 382(25):1656–1664

Liu J, Li Z (2015) Binary image enhancement based on aperiodic stochastic resonance. IET Image Process 9(12):1033–1038

Lü Y, Lu H (2019) Anomalous dynamics of inertial systems driven by colored Lévy noise. J Stat Phys 176(4):1046–1056

Mao XR (2007) Stochastic differential equations and applications, 2nd edn. Woodhead Publishing Limited, London

Mao XR, Sabanis S (2003) Numerical solutions of stochastic differential delay equations under local Lipschitz condition. J Comput Appl Math 151(1):215–227

Mizraji E, Lin J (2017) The feeling of understanding: an exploration with neural models. Cogn Neurodyn 11(2):135–146

Nakamura O, Tateno K (2019) Random pulse induced synchronization and resonance in uncoupled non-identical neuron models. Cogn Neurodyn 13(3):303–312

Øksendal B (2005) Stochastic differential equations: an introduction with applications, 6th edn. Springer, Berlin

Patel A, Kosko B (2005) Stochastic resonance in noisy spiking retinal and sensory neuron models. Neural Netw 18(5–6):467–478

Patel A, Kosko B (2008) Stochastic resonance in continuous and spiking neuron models with Levy noise. IEEE Trans Neural Netw 19(12):1993–2008

Prato GD, Zabczyk J (1992) Stochastic equations in infinite dimensions. Cambridge University Press, Cambridge

Sakai Y, Funahashi S, Shinomoto S (1999) Temporally correlated inputs to leaky integrate-and-fire models can reproduce spiking statistics of cortical neurons. Neural Netw 12(7–8):1181–1190

Song JL, Paixao L, Li Q, Li SH, Zhang R, Westover MB (2019) A novel neural computational model of generalized periodic discharges in acute hepatic encephalopathy. J Comput Neurosci 47(2–3):109–124

Tiwari I, Phogat R, Parmananda P, Ocampo-Espindola JL, Rivera M (2016) Intrinsic periodic and aperiodic stochastic resonance in an electrochemical cell. Phys Rev E 94(2):022210

Wang HY, Wu YJ (2016) First-passage problem of a class of internally resonant quasi-integrable Hamiltonian system under wide-band stochastic excitations. Int J Nonlin Mech 85:143–151

Wang RB, Wang GZ, Zheng JC (2014) An exploration of the range of noise intensity that affects the membrane potential of neurons. Abstr Appl Anal 2014:801642

Xu Y, Guo YY, Ren GD, Ma J (2020) Dynamics and stochastic resonance in a thermosensitive neuron. Appl Math Comput 385(15):125427

Yan CK, Wang RB, Pan XC (2013) A model of hippocampal memory based on an adaptive learning rule of synapses. J Biol Syst 21(03):1350016

Yang T (1998) Adaptively optimizing stochastic resonance in visual system. Phys Lett A 245:79–86

Zeng FG, Fu QJ, Morse R (2000) Human hearing enhanced by noise. Brain Res 869:251–255

Zhao J, Qin YM, Che YQ, Ran HYQ, Li JW (2020) Effects of network topologies on stochastic resonance in feedforward neural network. Cogn Neurodyn 14:399–409

Acknowledgements

This work is financially supported by the National Natural Science Foundation of China (Grant No. 11772241).

Appendix

Proof of Lemma 1

Proof

Fix \(T \ge 0\) arbitrarily. The Itô formula (Øksendal 2005; Mao 2007) shows that

for \(0 \le t \le T\). By the moment property (3) of the stationary OU process, we get

By the Burkholder–Davis–Gundy inequality (Prato and Zabczyk 1992),

Using the Hölder inequality we then derive

□

Proof of Lemma 2

Proof

It is well known that almost all sample paths of the Ornstein–Uhlenbeck process are continuous. It is therefore easy to see from the classical theory of ordinary differential equations that for any initial value \(X_{0} \in R^{d}\), Eq. (1) has a unique global solution \(X_{t}\) on \(t \ge 0\). Fix \(T \ge 0\) arbitrarily. According to Lemma 1,

with \(\xi_{k}\) given by Eq. (7).

Define the stopping times \(\tau_{h} = \inf \{ t \ge 0:\left| {X_{t} } \right| \ge h\}\) for all integers \(h > \left| {X_{0} } \right|\), where throughout this paper we set \(\inf \varPhi = \infty\). Here \(\varPhi\) stands for the empty set. Clearly, \(\tau_{h} \to \infty\) almost surely as \(h \to \infty\). For \(t \in \left[ {0,T} \right]\), it follows from Eq. (1a) that

Here, the first inequality is due to \((a_{1} + \cdots + a_{m} )^{p} \le m^{p - 1} (\left| {a_{1} } \right|^{p} + \cdots + \left| {a_{m} } \right|^{p} )\); the second inequality is owing to the Hölder inequality; the growth conditions are used for the second-to-last inequality; and the inequality \((\left| a \right| + \left| b \right| )^{p} \le 2^{p - 1} (\left| a \right|^{p} + \left| b \right|^{p} )\) is used in the last inequality. As the right-hand-side terms are increasing in \(t\), we easily see that

and then by \(\left| {X_{ 0}^{i} } \right|^{p} = \left( {\left| {X_{ 0}^{i} } \right|^{ 2} } \right)^{{\frac{p}{2}}} \le \left( {\sum\limits_{i = 1}^{d} {\left| {X_{ 0}^{i} } \right|^{ 2} } } \right)^{{\frac{p}{2}}} = \left| {X_{0} } \right|^{p} ,\)

By the well-known Young inequality \(xy \le \frac{{x^{p} }}{p} + \frac{{y^{q} }}{q}\) for \(x,y \ge 0\) and \(p,q > 0\) with \(\frac{1}{p} + \frac{1}{q} = 1\),

while recalling that \(\bar{k} \ge \frac{p}{2(1 - \gamma )}\) in Eq. (13), then by the Hölder inequality,

Hence, by Eq. (30),

Considering

then

Here, the distribution property for the maximum of multiple mutually independent random variables is adopted. Then for any \(0 \le t \le T\),

with \(a_{p}\) and \(b_{p}\) given in Eqs. (11) and (12). And then, the application of the Gronwall inequality to Eq. (31) yields

Letting \(h \to \infty\) implies the required assertion (10). □
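For reference, the form of the Gronwall inequality typically invoked at such a step is recalled here. The symbols \(u\), \(\alpha\) and \(\beta\) are generic placeholders introduced for this statement only and are not the paper's constants \(a_{p}\) and \(b_{p}\). If \(u\) is a nonnegative, bounded, Borel measurable function on \([0,T]\) and \(\alpha ,\beta \ge 0\) are constants, then

\[
u(t) \le \alpha + \beta \int_{0}^{t} {u(s)\,ds} \;\;{\text{for all}}\;0 \le t \le T\quad \Longrightarrow \quad u(t) \le \alpha e^{\beta t} \;\;{\text{for all}}\;0 \le t \le T.
\]

Because the resulting bound does not depend on the stopping level \(h\), passing to the limit \(h \to \infty\) preserves it.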

Proof of Lemma 3

Proof

Note that inequality (15) can be proven with a technique parallel to that of Lemma 2. It is well known that under the given conditions Eq. (3) has a unique global solution \(\hat{X}_{t}\) on \(t \ge 0\). Define the sequence of stopping times \(v_{h} = \inf \{ t \ge 0:\left| {\hat{X}_{t} } \right| \ge h\}\) for all integers \(h \ge \left| {X_{0} } \right|\), with \(\inf \varPhi = \infty\) for the empty set \(\varPhi\). Clearly, \(v_{h} \to \infty\) almost surely as \(h \to \infty\). For \(t \in \left[ {0,T} \right]\), it can be deduced from (3) that

Then, the Gronwall inequality implies

Letting \(h \to \infty\) implies the assertion (15) immediately. □

Proof of Lemma 4

Proof

Recalling the duplicate property of the conditional probability distribution

we obtain

from which it can be deduced that

and thus, by Theorem 2, Eq. (25) holds. Then, an application of Markov’s inequality immediately gives Eq. (26). □

Cite this article

Kang, Y., Liu, R. & Mao, X. Aperiodic stochastic resonance in neural information processing with Gaussian colored noise. Cogn Neurodyn 15, 517–532 (2021). https://doi.org/10.1007/s11571-020-09632-3
