Abstract

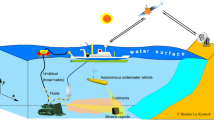

In recent years, deep-sea and ocean exploration has attracted increasing attention in the marine industry. Most marine vehicles, including robots, submarines, and ships, can be equipped with automatic imaging of deep-sea layers. However, the quality of the images taken by underwater devices is not optimal because of the properties of water and the impurities it contains. Water absorbs part of the color spectrum, which makes processing more difficult. The scattering and absorption of light in underwater imaging are together called light attenuation in water. Previous studies have shown that some inherent limitations arise from artifacts and environmental noise in underwater images. As a result, it is hard to distinguish objects from their backgrounds in such images in a real-time system. This paper discusses the effect of the software and hardware components on underwater images, surveys state-of-the-art strategies and algorithms for underwater image enhancement, and measures algorithm performance from various aspects. We also consider important studies in the field of quality enhancement for underwater images. We analyze the methods from five perspectives: (a) hardware and software tools, (b) underwater imaging techniques, (c) real-time image quality improvement, (d) identification of specific targets in underwater imaging, and (e) assessment. Finally, the advantages and disadvantages of the presented real-time and non-real-time image processing techniques for improving underwater image quality are discussed. This systematic review provides an overview of the major underwater image algorithms and real/non-real-time processing.


1 Introduction

In recent years, researchers have extensively studied image processing techniques in the field of automated real-time processing. These studies have covered identifying, detecting, and analyzing objects and living organisms at the macro and sometimes micro level.

Dynamic light scattering is a physical method used to determine the size distribution of particles in solutions and suspensions. This fast, non-destructive method determines particle sizes in the range of a few nanometers to microns. It is also known that the emission wavelength in a given medium is related to the amount of its deviation [1].

Light propagation is also affected by the accumulation of particles in the water, which can further increase the angular deviation of light and alter its direct path through the water. In other words, the wavelengths that are absorbed depend on the geographic location of the seawater, so the angular deviation of light and the change in wavelength in water are highly dynamic [2]. A reduced dynamic range of intensities at the image plane can be a significant problem, because a human observer needs a contrast of at least 2–4% to detect an object [3].
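The contrast threshold can be made concrete with a small check. This sketch assumes the Weber definition of contrast, C = (L_object − L_background) / L_background, which the source does not specify; the function names and the 0.02 default (the lower end of the quoted range) are illustrative choices.

```python
def weber_contrast(object_luminance: float, background_luminance: float) -> float:
    """Weber contrast (L_o - L_b) / L_b; the Weber definition is an assumption here."""
    if background_luminance <= 0:
        raise ValueError("background luminance must be positive")
    return (object_luminance - background_luminance) / background_luminance


def is_detectable(object_luminance: float, background_luminance: float,
                  threshold: float = 0.02) -> bool:
    """True if |contrast| reaches the threshold (0.02 = lower end of the 2-4% range)."""
    return abs(weber_contrast(object_luminance, background_luminance)) >= threshold


# An object 3% brighter than its background clears a 2% threshold; one 1% brighter does not.
print(is_detectable(103.0, 100.0), is_detectable(101.0, 100.0))
```

Attenuation and backscatter in water push object and background luminances toward each other, so the same object can fall below the threshold as the viewing distance grows.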

Real-time image processing techniques are in high demand in automated systems such as remote sensing, manufacturing processes, and multimedia applications, all of which require high performance. Based on that requirement, this paper investigates image processing and machine learning systems by discussing real-time procedures for underwater imaging.

The main idea of this paper is to analyze automated frameworks that adapt to dynamic conditions in a real-time or non-real-time manner. When the conditions are governed by the underwater imaging environment, real-time automated image analysis can be effective only if it satisfies all of these constraints together.

This paper presents a general overview of methodical studies from recent years that can be used to improve the quality of future studies. First, we present a classification of previous image enhancement strategies. Then, we discuss their advantages and disadvantages and the extent to which they are used, to make the review more comprehensive. Hardware implementation, one of the most important aspects of real-time image processing, is also addressed.

This systematic review gives an overview of the major underwater image algorithms and real/non-real-time processing in various applications. We analyze the application of underwater images in different fields, including underwater target detection, underwater navigation, Intelligent Underwater Vehicles (IUV), and remotely controlled marine technologies.

The rest of the paper is structured as follows. In Sect. 2, a categorization of related studies is introduced and several real/non-real-time underwater image enhancement methods are discussed. In Sect. 3, underwater imaging techniques and their improvement through image processing are compared. Finally, in Sect. 4, the overall conclusion is presented.

2 Underwater imaging

This section discusses underwater imaging. Modeling water as a Linear Position Invariant (LPI) system [4] and image recovery by deconvolution [5, 6] are among the automated models that have been presented. Many image recovery methods, such as blind deconvolution [7, 8] and the Wiener filter [9], are based on conventional techniques.

2.1 Real-time processing

Real-time processing is defined as a system responding within milliseconds or microseconds. Sometimes we can design processing algorithms that produce outputs in a short time relative to the input. To achieve real-time processing, we may have to give up some processing features, for example by reducing the running time and the number of loops and instructions in the processing program. However, this is not always possible because of system load, expected results, and hardware limitations. As a result, a trade-off is needed between software, hardware, and real-time performance.
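The trade-off described above, dropping some processing steps to stay within a time limit, can be sketched as a frame-budget check. The stage names, their latencies, the 30 fps rate, and the greedy selection rule are all hypothetical; a real system would measure latencies on the target hardware.

```python
from typing import List, Tuple

Stage = Tuple[str, float, bool]  # (name, latency in ms, required?)


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame, in milliseconds."""
    return 1000.0 / fps


def select_stages(stages: List[Stage], fps: float) -> Tuple[List[str], float]:
    """Keep all required stages, then add optional ones in priority order while they fit."""
    budget = frame_budget_ms(fps)
    kept = [name for name, _, required in stages if required]
    total = sum(latency for _, latency, required in stages if required)
    if total > budget:
        raise ValueError("required stages alone exceed the frame budget")
    for name, latency, required in stages:
        if not required and total + latency <= budget:
            kept.append(name)
            total += latency
    return kept, total


# Hypothetical per-stage latencies (ms) for a 30 fps camera (budget of about 33.3 ms).
pipeline = [("denoise", 10.0, True), ("color_correct", 8.0, False),
            ("dehaze", 25.0, False), ("sharpen", 5.0, False)]
print(select_stages(pipeline, fps=30.0))
```

Here the expensive de-hazing stage is the one sacrificed, which mirrors the idea of giving up some processing benefits to keep the response time within bounds.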

2.2 Hardware and software

Some studies have examined the hardware and software aspects of real-time object detection in underwater images [1,2,3, 10]. Optical imaging analysis through real-time automated processing has been partly studied [11,12,13].

A classification of underwater imaging systems based on Jaffe et al. [11] is shown in Fig. 1. Based on the lighting arrangement, the figure shows, from left to right, systems with the camera and light close together, systems with the camera and light separated, range-gated systems, and synchronous scan systems. In [14], a synopsis of research and technical innovations was presented, organized in much the same way as the previous report of Kocak and Caimi [15]. They investigated image formation and image processing methods, extended-range imaging techniques, imaging using spatial coherency (e.g., holography), and multi-dimensional image acquisition [12].

Source: Jaffe et al. [11]

The relationship between camera-light separation, more exotic imaging systems (range-gated and synchronous scan), and the obtainable viewing distances is shown.

Simultaneous attention to hardware and software in achieving a real-time underwater imaging model is also found in [16]. Bouchette et al. [16] suggested an integrated structure for investigating the performance and phenomenology of Electrical Impedance Tomography (EIT) for underwater applications.

Color correction of underwater images for aquatic robot inspection [17] is one of the fast models; it combines color-matching correspondences from training data with local context via belief propagation. The authors' aquatic robot [18] swims through the ocean, captures video images, and enhances them in real time.

The use of structured illumination to increase the resolution of underwater optical images was proposed in [19]. In Jaffe's work, this goal was achieved, though not in real time, by employing a set of computer programs to implement both one- and two-dimensional scanned images. His proposed method transmits a short pulse of light in a grid-like pattern of multiple narrow, delta-function-like beams.

McLeod et al. [20] introduced autonomous inspection using underwater 3D Light Detection and Ranging (LiDAR). Benefits such as improved safety and reliability and reduced risk give users and operators significant improvements over general visual inspection. This is made possible by adding sensors that can construct real-time 3D models of the structure being inspected.

2.3 Types of underwater imaging methods

Some researchers try to improve image quality for real-time applications using basic tools, so that high-quality images can be analyzed [21,22,23,24,25]. Tran et al. [21] developed a method for the shape optimization of acoustic lenses for underwater imaging using geometrical and wave acoustics. They showed that the pressure at the focal point can be corrected by using the geometric parameters of the lens surfaces as design variables. They optimized the lens while accounting for the shear-wave effect in the lens, after which the near-field pressure behind the lens showed shorter oscillations that could not be neglected. Their model is not real time. As also investigated in [26], the lens surface is parameterized as (1):

where the four parameters c, k, a1, and a2 are used as design variables for correcting and optimizing the lens. Schechner and Averbuch [22] proposed a fast adaptive filtering approach that counters noise amplification in pixels corresponding to distant objects, where the medium transmittance is low. They used gradient and Hessian operators. For a function f with m output components, if m = 1 so that f is a scalar field, the Jacobian matrix reduces to a row vector of the partial derivatives of f, i.e., the gradient of f [27, 28].
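The gradient and Hessian operators mentioned above can be approximated on a discrete image with finite differences. This sketch uses NumPy's `np.gradient` (central differences in the interior, one-sided differences at the borders); it illustrates the operators only and is not the adaptive filter of [22]. The function name is ours.

```python
import numpy as np


def gradient_and_hessian(image):
    """Return the gradient magnitude (H, W) and the Hessian (H, W, 2, 2) of a 2D image."""
    img = np.asarray(image, dtype=float)
    f_y, f_x = np.gradient(img)       # first derivatives along rows (y) and columns (x)
    f_yy, f_yx = np.gradient(f_y)     # derivatives of f_y along y and along x
    f_xy, f_xx = np.gradient(f_x)     # derivatives of f_x along y and along x
    magnitude = np.hypot(f_x, f_y)
    hessian = np.stack([np.stack([f_xx, f_xy], axis=-1),
                        np.stack([f_yx, f_yy], axis=-1)], axis=-2)
    return magnitude, hessian


# A linear ramp f = 3x + 2y has constant gradient magnitude sqrt(13) and a zero Hessian.
y, x = np.mgrid[0:5, 0:6]
mag, hess = gradient_and_hessian(3 * x + 2 * y)
```

Large Hessian eigenvalues flag ridges and blobs, while the gradient magnitude flags edges; both respond strongly to noise, which is why such operators are usually paired with smoothing.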

Photometric stereo is time-consuming but widely used for 3D reconstruction [23]. In the setup of [23], a perspective camera images an object point X with normal N, illuminated by a point light source at S, as shown in Fig. 2. Imaging in turbid environments often relies heavily on artificial illumination [24]. Treibitz and Schechner [24] considered non-real-time enhancement of turbid scenes using multi-directional illumination fusion. They noted that illuminating the scene from multiple directions simultaneously increases backscatter and fills in shadows, both of which degrade local contrast. Roser et al. [25] implemented a non-real-time method that simultaneously performs underwater image quality assessment, visibility enhancement, and improved stereo estimation.

Source: Murez et al. [23]

The object is in a scattering medium, and thus, light may be scattered in the three ways shown.
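As background for the photometric stereo setting above, the classic Lambertian formulation (Woodham's method) recovers, at each pixel, the albedo-scaled normal g = ρN from k ≥ 3 images under known light directions by solving L g = I in the least-squares sense. This sketch ignores scattering, shadows, and perspective effects, which are exactly what [23] models, so it shows the baseline idea rather than the method of Murez et al.

```python
import numpy as np


def photometric_stereo(intensities, light_dirs):
    """Lambertian photometric stereo.

    intensities: array (k, H, W) of images taken under k distant light sources.
    light_dirs:  array (k, 3) of unit light-direction vectors.
    Returns the albedo (H, W) and unit surface normals (3, H, W).
    """
    k, h, w = intensities.shape
    I = intensities.reshape(k, -1)                        # (k, H*W)
    G, *_ = np.linalg.lstsq(light_dirs, I, rcond=None)    # (3, H*W), G = albedo * normal
    albedo = np.linalg.norm(G, axis=0)
    normals = np.divide(G, albedo, out=np.zeros_like(G), where=albedo > 0)
    return albedo.reshape(h, w), normals.reshape(3, h, w)


# Synthetic check: a tilted surface patch with albedo 0.5 under three lights.
L = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
L /= np.linalg.norm(L, axis=1, keepdims=True)
n_true = np.array([0.6, 0.0, 0.8])
images = (0.5 * L @ n_true)[:, None, None] * np.ones((3, 2, 2))
albedo, normals = photometric_stereo(images, L)
```

In a scattering medium, backscatter adds an intensity term that does not depend on the surface normal, so this clear-water model becomes biased; that gap is what motivates scattering-aware formulations.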

Fundamental and modern sonar imaging methods include real-time multi-beam (beamforming) sonar, side-scan sonar, synthetic aperture sonar, acoustic lensing, and acoustical holography [1, 10]. Side-scan sonar transmits its pulses to the side and processes the returning echoes.
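The range geometry behind side-scan sonar can be sketched in a few lines. The slant range to a reflector is half the two-way travel time multiplied by the speed of sound, and, assuming a flat seafloor, the horizontal (ground) range follows from the sonar's altitude. The nominal 1500 m/s sound speed is an assumption; the actual value varies with temperature, salinity, and depth.

```python
import math

SOUND_SPEED_SEAWATER = 1500.0  # m/s, nominal value (assumption)


def slant_range(two_way_time_s: float, c: float = SOUND_SPEED_SEAWATER) -> float:
    """Distance to the reflector: the pulse travels out and back, hence the factor 1/2."""
    return c * two_way_time_s / 2.0


def ground_range(slant_m: float, altitude_m: float) -> float:
    """Horizontal distance along a flat seafloor (flat-bottom assumption)."""
    if slant_m < altitude_m:
        raise ValueError("echo precedes the first seafloor return (water column)")
    return math.sqrt(slant_m**2 - altitude_m**2)


# An echo received 66.7 ms after the ping, from a sonar flying 30 m above the seabed.
r = slant_range(0.0667)
print(round(r, 2), round(ground_range(r, 30.0), 2))
```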

2.4 Underwater image quality improvement

Noise reduction is the process of removing noise from the original signal, and it can be time-consuming. In underwater images, besides artifacts such as noise, there are problems of insufficient ambient light, low contrast, non-uniform lighting, shadows, suspended particles, and other disruptive agents [29,30,31,32,33,34]. Ghani and Isa [29] presented a non-real-time approach to underwater image quality improvement. Their procedure improved contrast and reduced noise compared with the Integrated Color Model (ICM) and the Unsupervised Color Correction Method (UCM) previously proposed by Iqbal et al. [35]. In the approach of Iqbal et al. [36], the image in the RGB color model is first stretched over the entire dynamic range. The image is then converted to the HSI color space, where contrast stretching is applied to the S and I components.
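The stretching step described above can be sketched as a linear mapping of each channel's range onto [0, 1]. This is a plain min-max (optionally percentile-clipped) stretch chosen for illustration; the exact stretching rules of [35, 36] may differ. The same `stretch` function can be applied to the S and I channels after an HSI conversion.

```python
import numpy as np


def stretch(channel, low_pct=0.0, high_pct=100.0):
    """Linearly map the [low_pct, high_pct] percentile range of a channel onto [0, 1]."""
    c = np.asarray(channel, dtype=float)
    lo, hi = np.percentile(c, [low_pct, high_pct])
    if hi <= lo:                        # flat channel: nothing to stretch
        return np.zeros_like(c)
    return np.clip((c - lo) / (hi - lo), 0.0, 1.0)


def stretch_rgb(image, low_pct=0.0, high_pct=100.0):
    """Stretch the R, G, and B channels of an (H, W, 3) image independently."""
    return np.stack([stretch(image[..., ch], low_pct, high_pct) for ch in range(3)], axis=-1)


# A dim, low-contrast image whose values span only 40-90 in every channel.
rng = np.random.default_rng(0)
dim = rng.uniform(40, 90, size=(8, 8, 3))
out = stretch_rgb(dim)
```

Passing, for example, `low_pct=1` and `high_pct=99` makes the stretch less sensitive to a few extreme pixels, at the cost of clipping them.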

The RGB color space fits well with the fact that the human eye perceives colors through red, green, and blue components. Although converting RGB to HSI or HSV is a near-real-time operation, color models such as CMY, and even RGB and similar models, are not suitable for describing colors in terms of human interpretation. The conversion from RGB to HSI and back [37] depends on the number of bits used per channel. For simplicity, the dependence on the pixel coordinates x and y is omitted.
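The per-pixel conversion can be sketched with the standard geometric RGB-to-HSI formulas found in common image processing textbooks: I = (R + G + B)/3, S = 1 − min(R, G, B)/I, and a hue angle obtained from an arccosine, with the inverse computed sector by sector. Here the bit depth sets the normalization of the integer inputs, which is one reading of the dependence on the number of bits mentioned above; the function names are ours, and [37] may use a different variant.

```python
import math


def rgb_to_hsi(r, g, b, bits=8):
    """Integer RGB (given bit depth) -> (H in degrees, S in [0, 1], I in [0, 1])."""
    scale = float(2**bits - 1)
    r, g, b = r / scale, g / scale, b / scale
    i = (r + g + b) / 3.0
    if i == 0.0:                               # black: hue and saturation undefined
        return 0.0, 0.0, 0.0
    s = 1.0 - min(r, g, b) / i
    num = 0.5 * ((r - g) + (r - b))
    den = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if den == 0.0:                             # gray: hue undefined, report 0
        return 0.0, s, i
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    return (theta if b <= g else 360.0 - theta), s, i


def hsi_to_rgb(h, s, i, bits=8):
    """Inverse conversion, evaluated separately in the RG, GB, and BR sectors."""
    h = h % 360.0

    def sector(hh):
        hr = math.radians(hh)
        low = i * (1.0 - s)
        mid = i * (1.0 + s * math.cos(hr) / math.cos(math.radians(60.0) - hr))
        return low, mid, 3.0 * i - (low + mid)

    if h < 120.0:
        b, r, g = sector(h)
    elif h < 240.0:
        r, g, b = sector(h - 120.0)
    else:
        g, b, r = sector(h - 240.0)
    scale = 2**bits - 1
    return tuple(round(min(max(v, 0.0), 1.0) * scale) for v in (r, g, b))


print(rgb_to_hsi(200, 100, 50))
```

A round trip through both functions returns the original 8-bit pixel, which is a convenient sanity check when contrast stretching is applied in between.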

HSV and HSI spaces tend to homogenize images. They can prevent some optical artifacts, such as excessive brightness and blacked-out regions. For example, in images with large shadows cast by different objects, working in HSV or HSI space helps remove artifacts. In other studies, applying this transformation to underwater images of aquatic animals or objects has produced a major change in saturation. Hence, the degree of pure light scattering by small particles can be measured, which the observer perceives as recognizable. A sample of images after the color space transformation is shown in Fig. 3. In similar studies, contrast stretching also has a significant impact on improving the quality of underwater images as a near-real-time approach. Besides, the studies [35, 36, 38] use evolutionary algorithms such as Particle Swarm Optimization (PSO), together with contrast stretching, to improve image quality. A sample of the improvement in [38] is shown in Fig. 4. In the PSO algorithm, each particle i is described by two multidimensional vectors (whose dimension depends on the problem), Xi[t] and Vi[t], which represent its position and current velocity at time t. At each step of the population's movement, each particle (bird) updates its location using two values: its best personal experience and the best group experience [38].

Source: Abonaser et al. [38]

Improving the quality of the original image (left) by the PSO algorithm and contrast stretching (right).
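The particle update described above can be sketched as a basic global-best PSO. The inertia and acceleration constants (w = 0.7, c1 = c2 = 1.5), the swarm size, and the toy objective (the sum of squares, standing in for the image-quality objective of [38]) are our assumptions.

```python
import numpy as np


def pso_minimize(objective, dim, bounds, n_particles=30, iters=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` over a box with a basic global-best particle swarm."""
    rng = np.random.default_rng(seed)
    lo, hi = bounds
    x = rng.uniform(lo, hi, size=(n_particles, dim))     # positions X_i[t]
    v = np.zeros_like(x)                                 # velocities V_i[t]
    pbest = x.copy()                                     # best position seen by each particle
    pbest_val = np.array([objective(p) for p in x])
    gbest = pbest[np.argmin(pbest_val)].copy()           # best position seen by the swarm
    for _ in range(iters):
        r1 = rng.random((n_particles, dim))
        r2 = rng.random((n_particles, dim))
        # inertia + pull toward the personal best + pull toward the group best
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)
        x = np.clip(x + v, lo, hi)
        vals = np.array([objective(p) for p in x])
        improved = vals < pbest_val
        pbest[improved] = x[improved]
        pbest_val[improved] = vals[improved]
        gbest = pbest[np.argmin(pbest_val)].copy()
    return gbest, float(pbest_val.min())


best, value = pso_minimize(lambda p: float(np.sum(p**2)), dim=2, bounds=(-5.0, 5.0))
```

In an enhancement setting such as [38], the objective would instead score an image processed with candidate parameters (for example, stretching limits), with the sign flipped so that minimizing it maximizes quality. Every evaluation then costs a full image pass, which is why such methods are slow.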

Evolutionary algorithms are generally too slow for real-time applications such as underwater image enhancement. Based on research on removing artifacts and improving underwater image quality, approaches can be divided into two general groups: wavelength compensation (addressing the dispersion of substances and particles in water) and color reconstruction (addressing the absorption of light) [10].

Some methods rely on physical or hardware optimization, whereas most optimization procedures are non-physical, software-based methods for improving image quality.

Some software methods, such as mapping filters, run in real time, but their effect on the image may be unacceptable, because removing noise or other artifacts also deletes some image information [39, 40].

Panetta et al. [39] presented a no-reference Underwater Image Quality Measure (UIQM) that comprises three underwater image attribute measures: (1) the Underwater Image Colorfulness Measure (UICM), (2) the Underwater Image Sharpness Measure (UISM), and (3) the Underwater Image Contrast Measure (UIConM).
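As a partial sketch of UIQM, the code below computes the colorfulness term UICM from the opponent channels RG = R − G and YB = (R + G)/2 − B using α-trimmed means, and combines the three measures linearly. The trimming fractions (0.1) and the constants −0.0268, 0.1586, 0.0282, 0.2953, and 3.5753 are the values commonly reproduced from [39]; please verify them against the original paper. UISM and UIConM are not implemented here and are passed in as numbers.

```python
import math
import numpy as np


def alpha_trimmed_mean(values, alpha_low=0.1, alpha_high=0.1):
    """Mean after discarding the lowest alpha_low and highest alpha_high fractions."""
    x = np.sort(np.ravel(values).astype(float))
    k = x.size
    trimmed = x[math.ceil(alpha_low * k): k - math.floor(alpha_high * k)]
    return float(trimmed.mean()) if trimmed.size else float(x.mean())


def uicm(image):
    """Colorfulness measure for an (H, W, 3) RGB image (0-255 values assumed)."""
    img = np.asarray(image, dtype=float)
    r, g, b = img[..., 0], img[..., 1], img[..., 2]
    rg = r - g                      # red-green opponent channel
    yb = (r + g) / 2.0 - b          # yellow-blue opponent channel
    mu_rg, mu_yb = alpha_trimmed_mean(rg), alpha_trimmed_mean(yb)
    var_rg = float(np.mean((rg - mu_rg) ** 2))
    var_yb = float(np.mean((yb - mu_yb) ** 2))
    return -0.0268 * math.hypot(mu_rg, mu_yb) + 0.1586 * math.sqrt(var_rg + var_yb)


def uiqm(uicm_value, uism_value, uiconm_value, c=(0.0282, 0.2953, 3.5753)):
    """Linear combination of the three attribute measures."""
    return c[0] * uicm_value + c[1] * uism_value + c[2] * uiconm_value


# A neutral gray image has no chromatic spread, so its UICM is zero.
gray = np.full((4, 4, 3), 128.0)
print(uicm(gray))
```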

According to [40], object detection can be divided into three stages: (1) non-real-time image enhancement, (2) edge detection, and (3) object detection.
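The three stages listed above can be sketched end to end on a grayscale image. Each stage is a deliberately simple stand-in chosen by us (min-max stretching, a Sobel edge detector, and a bounding box around the edge pixels); the actual enhancement, edge, and detection algorithms of [40] are not reproduced, and the 0.5 edge threshold is arbitrary.

```python
import numpy as np


def enhance(gray):
    """Stage 1 (stand-in): min-max contrast stretch to [0, 1]."""
    g = np.asarray(gray, dtype=float)
    lo, hi = g.min(), g.max()
    return (g - lo) / (hi - lo) if hi > lo else np.zeros_like(g)


def sobel_magnitude(img):
    """Stage 2: Sobel gradient magnitude with edge-replicated borders."""
    p = np.pad(img, 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)


def detect_object(gray, edge_threshold=0.5):
    """Stage 3 (stand-in): bounding box (row0, col0, row1, col1) around all edge pixels."""
    edges = sobel_magnitude(enhance(gray)) > edge_threshold
    rows, cols = np.nonzero(edges)
    if rows.size == 0:
        return None
    return int(rows.min()), int(cols.min()), int(rows.max()), int(cols.max())


scene = np.full((10, 10), 40.0)
scene[3:7, 2:6] = 120.0          # a brighter object on a dim background
print(detect_object(scene))
```

The box extends one pixel beyond the object on each side because the Sobel kernel responds on both sides of an edge.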

Recent methods for improving underwater image quality include color reconstruction-based restoration [41,42,43,44,45,46,47], compensation of light wavelengths (physical and non-physical models) [38,39,40,41,42,43,44,45,46, 48], and polarization (hardware) [47, 49].

Bianco and Neumann [41] used a real-time method to enhance non-uniformly illuminated underwater images. Their method applies the Gray-World assumption in a transformed color space. The use of the red channel as part of color decomposition can be seen in the research by Galdran et al. [42].
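The Gray-World assumption states that the average color of a scene is achromatic, so each channel can be rescaled until the channel means agree. This sketch applies it directly in RGB, whereas [41] works in a transformed color space; the function name and the choice of the mean of the channel means as the target gray level are our assumptions.

```python
import numpy as np


def gray_world(image, eps=1e-8):
    """Scale each RGB channel so that all channel means equal their common average."""
    img = np.asarray(image, dtype=float)
    means = img.reshape(-1, 3).mean(axis=0)          # per-channel means
    gains = means.mean() / np.maximum(means, eps)    # one gain per channel
    return img * gains                               # may exceed the original range; clip if needed


# A synthetic bluish-green cast: red is strongly attenuated, as is typical underwater.
rng = np.random.default_rng(1)
cast = rng.random((8, 8, 3)) * np.array([0.3, 0.8, 1.0])
balanced = gray_world(cast)
```

A single global gain per channel cannot correct illumination that varies across the frame, which is one reason methods for non-uniformly illuminated scenes go beyond this basic form.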

Bianco et al. [43] noted that an advantage of some methods is that they do not require learning the physical parameters of the medium, whereas some underwater image adjustments can be performed non-automatically (e.g., histogram stretching [50]). In some studies, color changes are also used to reduce the intensity of blurring; an underwater image with excessive blurring is referred to as a fog image [43].

Similar to [42, 43], Li et al. [44] reconstructed the red channel in a non-real-time dehazing process. They first improved the blue and green channels with a dehazing algorithm based on an extension and modification of the Dark Channel Prior algorithm. The red channel was then corrected following the Gray-World assumption. In [45], an effective non-real-time color correction strategy based on a linear transformation was introduced to address color distortion, together with a contrast enhancement technique that reduces artifacts in low-contrast regions.
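For reference, the original Dark Channel Prior (He et al.) takes, for each pixel, the minimum over color channels and over a local patch, and estimates the transmission as t = 1 − ω · dark(I / A), where A is the background (atmospheric) light. The sketch below implements that standard formulation with NumPy (`sliding_window_view` requires NumPy 1.20 or later); it is not the modified variant of [44]. Because the minimum is taken over whatever channels are supplied, passing only the green and blue channels gives a blue-green dark channel in the spirit of that work. The ω = 0.95 and 15-pixel patch defaults are assumptions.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def dark_channel(image, patch=15):
    """Minimum over channels, then minimum over a patch x patch window (patch must be odd)."""
    if patch % 2 == 0:
        raise ValueError("patch size must be odd")
    min_over_channels = np.asarray(image, dtype=float).min(axis=2)
    pad = patch // 2
    padded = np.pad(min_over_channels, pad, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def estimate_transmission(image, background_light, omega=0.95, patch=15):
    """t = 1 - omega * dark(I / A); omega < 1 keeps a little haze for depth perception."""
    normalized = np.asarray(image, dtype=float) / np.asarray(background_light, dtype=float)
    return 1.0 - omega * dark_channel(normalized, patch)


rng = np.random.default_rng(0)
frame = rng.uniform(0.1, 0.9, size=(20, 20, 3))
t_bg = estimate_transmission(frame[..., 1:], background_light=[0.8, 0.9])  # green and blue only
```

The red channel is excluded in the last line because red light is absorbed so quickly in water that it would make the dark channel near zero everywhere, wrongly implying a perfectly clear medium.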

In [46], an effective near-real-time algorithm was introduced to improve the quality of underwater images degraded by scattering and absorption in the medium. As illustrated in Fig. 5, the approach is built on the fusion of multiple inputs. The method of [47] is based on light attenuation inversion after a color space contraction using quaternions. Applied to white, the attenuation yields a hue vector that characterizes the water color.

Source: Ancuti et al. [46]

Method overview.
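The idea of fusing several derived inputs can be illustrated with a single-scale, per-pixel weighted average, where each input's weight is its local Laplacian contrast, normalized so that the weights sum to one. This is a simplification chosen for brevity: [46] uses several weight maps and multi-scale (pyramid) blending, and naive single-scale blending like this can produce halos. How the inputs are derived (for example, a color-corrected and a contrast-enhanced version of the same frame) is left to the caller, and the function names are ours.

```python
import numpy as np


def laplacian_contrast(gray):
    """Absolute response of a 4-neighbour Laplacian, with edge-replicated borders."""
    p = np.pad(np.asarray(gray, dtype=float), 1, mode="edge")
    lap = p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * p[1:-1, 1:-1]
    return np.abs(lap)


def fuse(inputs, eps=1e-12):
    """Blend (H, W, 3) float images using normalized per-pixel contrast weights."""
    weights = [laplacian_contrast(img.mean(axis=2)) + eps for img in inputs]
    total = np.sum(weights, axis=0)
    return sum((w / total)[..., None] * img for w, img in zip(weights, inputs))


rng = np.random.default_rng(0)
textured = rng.random((6, 6, 3))
flat = np.full((6, 6, 3), 0.5)
fused = fuse([flat, textured])
```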

Some methods use conventional smoothing filters such as the median filter, which, for an m × n neighborhood, sorts all neighborhood pixels in ascending order, selects the middle element of the sorted values, and replaces the center pixel with it [37].
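The median filter described above can be sketched with NumPy by viewing every m × n neighborhood at once and taking its median. Border pixels are handled here by replicating the edge, which is one of several reasonable choices; the function name is ours, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_filter(gray, m=3, n=3):
    """Replace each pixel by the median of its m x n neighborhood (m and n odd)."""
    if m % 2 == 0 or n % 2 == 0:
        raise ValueError("window dimensions must be odd")
    padded = np.pad(np.asarray(gray, dtype=float), ((m // 2, m // 2), (n // 2, n // 2)), mode="edge")
    windows = sliding_window_view(padded, (m, n))    # shape (H, W, m, n)
    return np.median(windows, axis=(-2, -1))


# A flat patch with one "salt" pixel: the median removes the outlier entirely.
patch = np.full((5, 5), 10.0)
patch[2, 2] = 255.0
clean = median_filter(patch)
```

Unlike a mean filter, the median ignores an isolated outlier instead of spreading it into its neighbors, which is why it is preferred for impulse-like noise such as bright suspended particles.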

Some studies also suggest homomorphic filtering [51], which has been successfully combined with methods such as the discrete wavelet transform and histogram matching. Similar to [51], other research [52] applies real-time filtering for color correction, noise elimination, and suppression of strong light propagation, and is considered an efficient tool. Underwater imaging, including noise, can be modeled as shown in Fig. 6. According to Fig. 6, the image of the object, modeled as f(x,y), is degraded by the water and by the components of the imaging system, which are represented by the point spread function h(x,y) [53, 54]. The recorded image g(x,y) is therefore a distorted version of f(x,y), corrupted by noise n(x,y) from the detector environment. The relation between the original image, the point spread function, the recorded image, and the noise can be expressed as (2) [55]:

$$g(x,y) = h(x,y) \times f(x,y) + n(x,y) \tag{2}$$

Here, × denotes the convolution operator, and the goal is to recover the original image f from the degraded, noisy image g.
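Taking the Fourier transform of the degradation model, in which the observed image g is the object f convolved with the PSF h plus noise n, turns the convolution into a product (convolution theorem). This explains why restoration filters such as the Wiener filter are written in terms of frequency responses, and why naive inverse filtering fails where H(u,v) is small:

```latex
G(u,v) = H(u,v)\,F(u,v) + N(u,v),
\qquad
\hat{F}_{\mathrm{inv}}(u,v) = \frac{G(u,v)}{H(u,v)} = F(u,v) + \frac{N(u,v)}{H(u,v)}.
```

The second term grows without bound as H(u,v) approaches zero, which is the noise amplification that the Wiener filter is designed to limit.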

One of the most important real-time filters that can remove blurring and noise simultaneously from images such as underwater images is the Wiener filter [9]. It is defined so that, as the noise power decreases toward zero, the Wiener filter reduces to the inverse filter. The Wiener filter seeks an optimal trade-off between inverse filtering and denoising, and it is a linear estimator based on the orthogonality principle [10, 54, 56]. Its frequency response can be expressed as (3) [9, 57,58,59,60]:

where H(u,v) is the frequency response of the blur Point Spread Function (PSF), and Sgg(u,v) and Sηη(u,v) are the power spectral densities of the degraded image g(x,y) and the noise η(x,y), respectively.
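A minimal frequency-domain sketch of Wiener deconvolution follows. It uses the common textbook form W(u,v) = H*(u,v) / (|H(u,v)|² + K), where the noise-to-signal power ratio is approximated by a constant K (`nsr` below); this constant-ratio simplification and the Gaussian PSF are assumptions for illustration, and the exact expression (3) is not reproduced. Convolution is circular (computed via the FFT), which keeps the example short but differs from real camera borders.

```python
import numpy as np


def gaussian_psf(shape, sigma):
    """Normalized Gaussian PSF centered at index (0, 0), as FFT-based convolution expects."""
    h, w = shape
    y = np.fft.fftfreq(h) * h                  # signed integer offsets 0, 1, ..., -1
    x = np.fft.fftfreq(w) * w
    yy, xx = np.meshgrid(y, x, indexing="ij")
    psf = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return psf / psf.sum()


def degrade(f, psf, noise_std, seed=0):
    """g = h * f + n, with circular convolution and Gaussian noise."""
    rng = np.random.default_rng(seed)
    g = np.real(np.fft.ifft2(np.fft.fft2(f) * np.fft.fft2(psf)))
    return g + rng.normal(0.0, noise_std, f.shape)


def wiener_deconvolve(g, psf, nsr):
    """Apply W = conj(H) / (|H|^2 + nsr); nsr = 0 gives the plain inverse filter."""
    H = np.fft.fft2(psf)
    W = np.conj(H) / (np.abs(H) ** 2 + nsr)
    return np.real(np.fft.ifft2(W * np.fft.fft2(g)))


rng = np.random.default_rng(1)
f = rng.random((32, 32))
psf = gaussian_psf(f.shape, sigma=1.0)
g_noisy = degrade(f, psf, noise_std=0.01)
restored = wiener_deconvolve(g_noisy, psf, nsr=1e-3)
```

Setting `nsr=0` on the noisy image reproduces the noise amplification of inverse filtering; a small positive `nsr` trades a little residual blur for far less noise.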

Adaptive histogram correction is also used in other studies, such as Ghani et al. [61] and Althaf et al. [62], to improve the color and contrast of underwater images.

Other methods that have been shown to be highly effective in improving the quality of underwater images have also been suggested, such as the multi-scale Retinex transformation [63,64,65].
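Multi-scale Retinex, in its common form, averages single-scale Retinex outputs log I − log(G_σ ∗ I) over several Gaussian surround scales σ. The sketch below applies it to one channel, blurs with a Gaussian transfer function in the frequency domain (so borders wrap around), uses equal weights, and adopts the frequently used scales of 15, 80, and 250 pixels. These are all assumptions, and the color-restoration step found in MSRCR variants is omitted.

```python
import numpy as np


def gaussian_blur_fft(channel, sigma):
    """Gaussian blur with standard deviation sigma (pixels), applied in the frequency domain."""
    h, w = channel.shape
    u = np.fft.fftfreq(h)[:, None]                   # cycles per pixel along rows
    v = np.fft.fftfreq(w)[None, :]                   # cycles per pixel along columns
    transfer = np.exp(-2.0 * (np.pi * sigma) ** 2 * (u**2 + v**2))
    return np.real(np.fft.ifft2(np.fft.fft2(channel) * transfer))


def multi_scale_retinex(channel, sigmas=(15.0, 80.0, 250.0), offset=1.0):
    """Equal-weight average of log(I) - log(G_sigma * I) over the given scales."""
    img = np.asarray(channel, dtype=float) + offset  # offset avoids log(0)
    result = np.zeros_like(img)
    for sigma in sigmas:
        surround = np.maximum(gaussian_blur_fft(img, sigma), 1e-6)
        result += np.log(img) - np.log(surround)
    return result / len(sigmas)


rng = np.random.default_rng(0)
retinex = multi_scale_retinex(rng.uniform(0, 255, size=(16, 24)))
```

The output is a log-domain image; in practice it is rescaled, for example with contrast stretching, before display.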

Because the design and manufacture of real-time Synthetic Aperture Sonar (SAS) [66, 67] has a short history and is scattered across research and industrial centers around the world, research efforts have attempted to describe how the most important SAS parameters are calculated.

In the same year, Hu et al. [68] published a study aimed at enhancing the resolution of underwater images and at correcting motion transmission in underwater sensor network simulators [68, 69]. Wang et al. [70] and another study [71] also improved image quality in real time by measuring the degree of image impairment. Similar to [68, 70, 71], another study recovered underwater scenes in near real time based on image blurriness and light absorption [72], which enhanced the images in practice. Underwater image recovery under a non-uniform optical field based on polarimetric imaging was among the newer studies conducted by Hu et al. [73].

3 Targets in underwater images

In this section, we review methods that address targets in underwater images. Some applications need to track a particular object or target, so methods suited to the intended task must be proposed and applied to underwater images.

3.1 Detection of specific targets

Extracting features from underwater images and adapting them for visual recognition is an active research direction for detecting targets and objects in real-time applications [52]. Sometimes unsupervised methods, implemented as a separate network, not only enhance image quality but can also detect the objects in the images effectively in real time [74]. One effort in this field is an unsupervised generative network that produces high-quality underwater images and corrects their color [66, 75,76,77,78,79].

Detection of the Region of Interest (ROI) in images is achieved by extracting features and then calculating the contrast in underwater images [80]. Wang et al. [81] proposed a non-real-time model that detects objects in underwater images using logical randomized escalation, a strategy that is delayed in the implementation loop. Fig. 7 shows a scheme of the strategy of [81] applied to an underwater image to detect the number "4".

Source: Wang et al. [81]

An example of near-real-time semi-automatic target detection in underwater images by Wang et al.

3.2 Assessment criteria

Quality assessment can be considered a comprehensive method that improves underwater image structures and is more suitable for low-depth images [82].

The advantage of this approach is that it combines different techniques and can address noise, scattering, haze, low contrast, and improper color distribution [83,84,85]. However, these techniques are not applied simultaneously; because of the method's comprehensiveness, they may be applied hierarchically to underwater images, which is likely to destroy information in the process. Normally, real-time and less time-consuming methods are used.

Some real/non-real-time methods employ the standard deviation, mean, normal distribution, and analysis of geometric characteristics [86]. Real-time processing is sometimes mistaken for high-performance computing, but this is not an accurate classification [87,88,89]. Some studies calculate the quality, similarity, or difference between the processed and original images, which can be considered a more important assessment method [86, 90].

Besides detecting the desired targets and trying to improve image quality, it is necessary to apply techniques for estimating the quality of the images and of the detected targets, as in [29,30,31,32,33,34]. In these studies, entropy, mean square error (MSE), and peak signal-to-noise ratio (PSNR) were used as assessment criteria. Similarly, [91] and [29,30,31, 92] used MSE and PSNR to measure the quality improvement of underwater images. Time estimation, MSE, PSNR, and entropy are among the benchmarks used in [32]. Some methods also calculate precision and factors such as the partial decision rate and detection sensitivity [91]. The model proposed in [33] uses the Structural Similarity Index (SSIM) between two images, PSNR, and MSE to assess the removal of artifacts from underwater images. The research of Yang et al. [83] is perhaps one of the best studies on the quality assessment of underwater images: in addition to the conventional benchmarks just mentioned, they also used benchmarks to estimate the best features extracted from the image. In [39, 40], the Pearson correlation coefficient between images was employed in addition to the conventional benchmarks. Besides these, [52] also used SSIM, MSE, and PSNR, and PSNR is also used in [93]. MSE, PSNR, entropy, the Structural Contrast Index (SCI), underwater image quality assessment, and time components were among the criteria in [94]. The average color matching coefficient and underwater color image quality are among the benchmarks used in [95]. The mean gradient, MSE, PSNR, and entropy are also among the benchmarks discussed for underwater image quality assessment [53, 96]. Mean entropy and a quality improvement factor are among the other benchmarks in [56].
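Three of the criteria listed above are simple enough to sketch directly: the MSE between a processed and a reference image, PSNR = 10 log10(MAX² / MSE), and the Shannon entropy of the gray-level histogram. The 8-bit assumptions (MAX = 255, 256 histogram bins) and the function names are ours; SSIM and the other measures are left to established implementations.

```python
import numpy as np


def mse(a, b):
    """Mean squared error between two images of the same shape."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.mean((a - b) ** 2))


def psnr(a, b, max_value=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(a, b)
    return float("inf") if err == 0 else float(10.0 * np.log10(max_value**2 / err))


def entropy(gray, bins=256, value_range=(0, 256)):
    """Shannon entropy (bits) of the gray-level histogram."""
    hist, _ = np.histogram(gray, bins=bins, range=value_range)
    p = hist / hist.sum()
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


reference = np.zeros((4, 4))
print(mse(reference, np.ones((4, 4))), psnr(reference, reference))
```

MSE and PSNR need a reference image, which is rarely available underwater; entropy does not, but a higher entropy can also come from amplified noise, so these criteria are best read together.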

Many different methods exist to measure execution time and time components, but there is no single best assessment criterion. Moreover, each criterion is a compromise between multiple features, such as resolution, accuracy, and the computational complexity of the problem.

3.3 Advantages and disadvantages

There has been little investigation of the advantages and disadvantages of methods for improving real-time and non-real-time underwater image quality, although many approaches to this topic exist.

3.4 Comparison with experiment

Some data loss is to be expected with certain methods. For example, filtering methods lose a large amount of image information, which can also cause problems when transferring images online to the main processing center. Some methods can be suggested to address this transfer problem. For example, deep learning can reduce the loss of image information, but it affects real-time performance. We can therefore compromise and accept a small, nearly negligible loss of image information. Another suggestion is to use lightweight deep learning architectures that compensate for the loss of image information while maintaining real-time capability. Other methods, such as super-resolution or detail enhancement, can also be used to improve the resolution of underwater images.

Although hybrid and multi-step models produce better outputs when improving underwater image quality, they take more processing time than conventional and classic methods, so they are not appropriate for real-time processing. Image quality degradation is mainly caused by the selective absorption and scattering of light in water and by the use of artificial light in deep water. Degraded underwater images have low contrast, low brightness, color aberration, blurred details, and non-uniform characteristics that limit their use in practical scenarios. For this reason, hybrid methods and more recent methods based on deep learning can significantly improve the quality of the processed image. Several underwater image enhancement algorithms are summarized in Table 1, with their characteristics and some representative investigations.

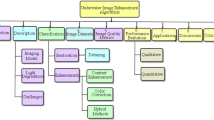

Fig. 8 shows a taxonomy of previous underwater image enhancement methods, in which the growth of each branch indicates better performance and higher efficiency. Using Fig. 8, we discuss hardware, software, and other related methods for enhancing the quality of underwater imaging and point out the advantages and disadvantages of each part.

3.4.1 Hardware

Different approaches, such as polarization, laser imaging, multi-imaging, and stereo imaging, affect the precision of the hardware. Some studies, such as [47, 49], rely on sonar-based demonstration, and one of the main shortcomings of sonar is its sensitivity to signal quality and noise. Studies [97, 98] are other hardware-based enhancement methods based on the non-uniform distribution effect and proper recovery. On the other hand, some variables, such as lighting changes, noise magnitude, and water opacity, are not considered [1, 10, 14, 54]. The authors of [99, 100] suggested that laser imaging can be used for underwater robots, but poor control of the laser can affect detection precision; for example, the way pulses are sent can cause many problems. Multi-imaging and stereo imaging can be more effective than other hardware methods [23, 24, 100]. Photometric measurement may not be possible for images with different dimensions and may not meet optimal hardware conditions. However, among hardware-based enhancement methods, illumination fusion [24] is more efficient, because it can provide a better and clearer image than other solutions.

3.4.2 Software

(a) De-hazing

Software-based enhancement is one of the main approaches to improving the quality of underwater images. Some methods that estimate the optical transmission for de-hazing and contrast improvement [101,102,103] do not always give ideal results, and these conditions become more complex when objects occupy a larger scale than other parts of the image. Some studies use the Laplacian pyramid [104], which depends on low- or high-pass filtering and is likely to destroy information. For de-hazing, color patches [105] are an interesting idea: image information is preserved and the approach can be used for underwater imaging, but it is not very effective at overcoming underwater noise.

(b) De-flickering and de-scattering

Compensating for the attenuation discrepancy along the propagation path, as in [106], is one of the most distinctive and efficient methods and is suitable for de-flickering and de-scattering. The strength of [106] is that de-scattering is performed in addition to de-flickering.

(c) Enhancement

Techniques such as Local Histogram Equalization [107, 108], Contrast Limited Adaptive Histogram Equalization (CLAHE) [13], Low level Noise [109], Wiener Filtering [110], and Slide Stretching [36] are among the simplest image quality improvement methods and do not destroy information [1, 10, 14, 54].
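Global histogram equalization, the building block of the local and contrast-limited variants named above, maps each gray level through the normalized cumulative histogram. The sketch below handles 8-bit grayscale images only. Local histogram equalization and CLAHE apply the same mapping per tile (CLAHE additionally clips each tile's histogram and interpolates between neighboring tile mappings), which is not shown; the function name is ours.

```python
import numpy as np


def equalize_histogram(gray):
    """Global histogram equalization of an 8-bit grayscale image."""
    g = np.asarray(gray)
    if g.dtype != np.uint8:
        raise TypeError("expected an 8-bit (uint8) grayscale image")
    hist = np.bincount(g.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[np.nonzero(cdf)[0][0]]           # count of the darkest occupied level
    if cdf_min == g.size:                          # constant image: nothing to spread
        return g.copy()
    lut = np.round((cdf - cdf_min) / (g.size - cdf_min) * 255.0)
    return np.clip(lut, 0, 255).astype(np.uint8)[g]


low_contrast = np.array([[50, 50], [100, 200]], dtype=np.uint8)
print(equalize_histogram(low_contrast))
```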

(d) Restoration

Ordinarily, image restoration models are based on repairing or changing the color channels. In [42], de-hazing is used in addition to image restoration, which can be considered a combined model for underwater image enhancement.

(e) De-noising

Denoising by adaptive smoothing has been suggested, as in other methods [111], but one of the main disadvantages of denoising models is that they can destroy essential information that is not noise.

(f) Color correction

Color correction is another approach to improving quality [17, 47, 112,113,114]; compared with methods that destroy information, it causes little destruction, although such methods are not very accurate.
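A classic and very cheap color-correction baseline is the gray-world assumption: the average scene color is assumed to be neutral gray, so each channel is scaled to bring its mean to the common mean. This is offered as an illustration only, not necessarily the technique used in [17, 47, 112,113,114]; the sketch assumes RGB tuples of floats in [0, 1], and the function name is hypothetical.

```python
def gray_world(img):
    """Gray-world white balance for rows of (r, g, b) floats in [0, 1]."""
    pixels = [px for row in img for px in row]
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    gray = sum(means) / 3
    # Per-channel gain that moves each channel mean to the common gray level.
    gains = [gray / m if m > 0 else 1.0 for m in means]
    return [
        [tuple(min(1.0, px[c] * gains[c]) for c in range(3)) for px in row]
        for row in img
    ]


print(gray_world([[(0.1, 0.4, 0.5), (0.3, 0.6, 0.7)]]))
```

In a typical bluish-green underwater frame the red gain is large, so the assumption breaks down when the scene itself is dominated by one color (for example, open water with no objects), which is one reason such methods are not very accurate.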

(g) Classification and clustering

Although supervised learning for classification is generally preferred to clustering, clustering is used in segmentation and even in image quality improvement [115, 116]. Deep learning (also known as hierarchical learning) is part of a broader family of machine learning methods based on learning data representations, as opposed to task-specific algorithms. It is modeled using a deep graph with several processing layers, including linear and nonlinear transformation layers [89, 117,118,119].
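Clustering-based segmentation of the kind mentioned above can be illustrated with k-means on pixel intensities. The sketch below is a minimal one-dimensional version; the deterministic initialization (centers spaced evenly over the intensity range) is an assumption chosen so that results are reproducible, and real systems usually cluster color or feature vectors rather than single intensities. The function name is illustrative.

```python
def kmeans_1d(values, k=2, iters=20):
    """Cluster scalar intensities; return (labels, centers)."""
    lo, hi = min(values), max(values)
    # Assumed initialization: centers spaced evenly across the intensity range.
    centers = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iters):
        # Assignment step: nearest center for every value.
        labels = [min(range(k), key=lambda j: abs(v - centers[j])) for v in values]
        # Update step: mean of each cluster (keep the old center if a cluster is empty).
        new_centers = []
        for j in range(k):
            members = [v for v, lab in zip(values, labels) if lab == j]
            new_centers.append(sum(members) / len(members) if members else centers[j])
        if new_centers == centers:
            break
        centers = new_centers
    return labels, centers


print(kmeans_1d([10, 12, 11, 200, 210, 205]))
```

Because no training labels are needed, a step like this can run on every frame, which is part of why clustering is attractive for real-time segmentation compared with trained classifiers.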

A key issue in underwater target detection is balancing competing goals such as accurate object classification, rapid detection, and low complexity. When images are not properly preprocessed, recognition is clearly error-prone. Although a very deep architecture could improve recognition, it is time-consuming, which makes real-time processing infeasible.

3.4.3 Computational complexity

Beyond the software approach itself, investigating the computational complexity of the various underwater image enhancement strategies is even more important. A collection of underwater images, consisting of real images and synthetic sets produced by the PBRT Laboratory, was used to estimate the timing criteria of the methods. Most of the images in this collection are freely available [103, 120]. Based on these timing measurements, we compared the categories of underwater image enhancement algorithms. The results are shown in Fig. 9, where the methods that can run in real time are compared in terms of computational complexity, time, and percentage delay. Relative time complexity is calculated, and the experiment is repeated five times for each method.

Figures 9 and 10 show the delay, computational complexity, and execution time estimated from the performance criteria of each algorithm. Specifically, after each method was simulated, MATLAB functions were used to estimate the response delay as well as the temporal and computational complexity. Methods such as color correction and image de-hazing tend to be less computationally complex. Classification models, in contrast, can update their structure and produce better outputs, but they require training. If the training step is excluded from the algorithm's main processing time, these models can respond quickly and operate in real time on data similar to that used by the other methods. Figure 10 shows the variance and time interval of the computational cost of each method across iterations. As shown in Figs. 9 and 10, the estimated mean and variance of the response time are minimal for the color correction and clustering techniques; low variance between responses indicates functional stability. The results also suggest that some methods, such as de-flickering and de-scattering, take less time than techniques such as clustering or denoising. Image restoration and classification methods also have the lowest time variance among the image quality improvement procedures.
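The measurement protocol described above (five repetitions per method, then the mean and variance of the response time) can be reproduced outside MATLAB with a small harness. The sketch below is an assumed Python analogue, not the authors' MATLAB code: it uses `time.perf_counter`, treats each method as a callable that takes one image, reports the sample variance, and uses a 30 frame/s budget (about 33.3 ms per frame) as an illustrative real-time threshold. The stand-in method `invert` is hypothetical.

```python
import statistics
import time


def time_method(method, image, repetitions=5):
    """Run method(image) several times; return (mean_s, sample_variance_s2)."""
    if repetitions < 2:
        raise ValueError("at least two repetitions are needed for a variance")
    durations = []
    for _ in range(repetitions):
        start = time.perf_counter()
        method(image)
        durations.append(time.perf_counter() - start)
    return statistics.mean(durations), statistics.variance(durations)


def meets_real_time(mean_s, fps=30.0):
    """True if the average run fits within one frame period (1 / fps seconds)."""
    return mean_s <= 1.0 / fps


def invert(image):
    """Hypothetical stand-in method: invert 8-bit gray levels."""
    return [[255 - v for v in row] for row in image]


image = [[(x * y) % 256 for x in range(64)] for y in range(64)]
mean_s, var_s2 = time_method(invert, image)
print(f"mean {mean_s * 1e3:.3f} ms, variance {var_s2:.3e} s^2, real time: {meets_real_time(mean_s)}")
```

Reporting the variance alongside the mean matters for real-time use: a method with a low mean but occasional slow runs can still miss frame deadlines, which is why low variance is read as functional stability.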

4 Conclusions

In this paper, a comprehensive review of real-time and non-real-time improvements in underwater image processing was presented. Taking into account different water conditions and depths, as well as how the remotely operated vehicle moves, we investigated various directions for achieving the best possible image quality. These real-time or near-real-time approaches include five main image processing steps: color space transfer, noise reduction, blur removal, contrast enhancement (for example, by histogram equalization, histogram stretching, or transforms such as the wavelet transform), and image restoration. Each approach has been employed in particular categories to improve the quality of underwater images, and by combining them or applying improved versions of these solutions, the quality of underwater images and videos can be enhanced further.

References

Lu, H., Li, Y., Serikawa, S.: Computer vision for ocean observing. In: Artificial Intelligence and Computer Vision, pp. 1–16. Springer (2017) (ebook)

Xu, R.: Particle characterization: light scattering methods, vol 13, 1st edn, pp. 56–110. Springer, Netherlands, Berlin (2001). ISBN 978-0-306-47124-7. https://doi.org/10.1007/0-306-47124-8 (ebook)

Khosravi, M.R., Samadi, S.: Data compression in ViSAR sensor networks using non-linear adaptive weighting. J. Wireless. Com. Network. 2019(1), 1–8 (2019). https://doi.org/10.1186/s13638-019-1577-z

Huo, X., Tong, X.G., Liu, K.Z., Ma, K.M.: A compound control method for the rejection of spatially periodic and uncertain disturbances of rotary machines and its implementation under uniform time sampling. Control Eng. Pract. 53, 68–78 (2016)

Lu, H., Li, Y., Xu, X., Li, J., Liu, Z., Li, X., Serikawa, S.: Underwater image enhancement method using weighted guided trigonometric filtering and artificial light correction. J. Vis. Commun. Image Represent. 38, 504–516 (2016)

Moghimi, M.K., Mohanna, F.: Underwater optical image coding for marine health monitoring based on DCT. Curr. Signal Transduct. Ther 14, 1–15 (2019)

Kim, J.H., Dowling, D.R.: Blind deconvolution of extended duration underwater signals. J. Acoust. Soc. Am. 135(4), 2200–2200 (2014)

Priyadharsini, R., Sharmila, T.S., Rajendran, V.: An efficient edge detection technique using filtering and morphological operations for underwater acoustic images. In: Proceedings of the Second International Conference on Information and Communication Technology for Competitive Strategies, p. 108. ACM (2016)

Jeelani, A., Veena, M.B.: Denoising the underwater images by using adaptive filters. Adv. Image Video Process. 5(2), 01 (2017)

Lu, H., Li, Y., Zhang, Y., Chen, M., Serikawa, S., Kim, H.: Underwater optical image processing: a comprehensive review. Mob. Netw. Appl. 22(6), 1204–1211 (2017)

Jaffe, J.S., Moore, K.D., McLean, J., Strand, M.P.: Underwater optical imaging: status and prospects. Oceanography 14(3), 64–75 (2001). https://doi.org/10.5670/oceanog.2001.24

Caimi, F.M., Kocak, D.M., Dalgleish, F., Watson, J.: Underwater imaging and optics: Recent advances. In: OCEANS 2008, pp. 1–9. IEEE (2008)

Hitam, M.S., Awalludin, E.A., Yussof, W.N.J.H.W., Bachok, Z.: Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In: Computer Applications Technology (ICCAT), 2013 International Conference on, pp. 1–5. IEEE (2013)

Kocak, D.M., Dalgleish, F.R., Caimi, F.M., Schechner, Y.Y.: A focus on recent developments and trends in underwater imaging. Mar. Technol. Soc. J. 42(1), 52–67 (2008)

Kocak, D.M., Caimi, F.M.: The current art of underwater imaging–with a glimpse of the past and vision of the future. Mar. Technol. Soc. J. 39(3), 5–26 (2005)

Bouchette, G., Church, P., Mcfee, J.E., Adler, A.: Imaging of compact objects buried in underwater sediments using electrical impedance tomography. IEEE Trans. Geosci. Remote Sens. 52(2), 1407–1417 (2014)

Torres-Méndez, L.A., Dudek, G.: Color correction of underwater images for aquatic robot inspection. In: International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, pp. 60–73. Springer, Berlin, Heidelberg (2005)

Georgiades, C., German, A., Hogue, A., Liu, H., Prahacs, C., Ripsman, A., Dudek, G.: AQUA: an aquatic walking robot. In: Intelligent Robots and Systems, (IROS 2004). Proceedings. 2004 IEEE/RSJ International Conference on, Vol. 4, pp. 3525–3531. IEEE (2004)

Jaffe, J.S.: Enhanced extended range underwater imaging via structured illumination. Opt. Express 18(12), 12328–12340 (2010)

McLeod, D., Jacobson, J., Hardy, M., Embry, C.: Autonomous inspection using an underwater 3D LiDAR. In: Oceans-San Diego, 2013, pp. 1–8. IEEE (2013)

Tran, Q.D., Jang, G.W., Kwon, H.S., Cho, W.H., Cho, S.H., Cho, Y.H., Seo, H.S.: Shape optimization of acoustic lenses for underwater imaging. J. Mech. Sci. Technol. 30(10), 4633–4644 (2016)

Khosravi, M.R., Basri, H., Rostami, H., Samadi, S.: Distributed random cooperation for VBF-based routing in high-speed dense underwater acoustic sensor networks. J. Supercomput. 74(11), 6184–6200 (2018)

Murez, Z., Treibitz, T., Ramamoorthi, R., Kriegman, D.J.: Photometric stereo in a scattering medium. IEEE Trans. Pattern Anal. Mach. Intell. 39(9), 1880–1891 (2017). https://doi.org/10.1109/TPAMI.2016.2613862

Treibitz, T., Schechner, Y.Y.: Turbid scene enhancement using multi-directional illumination fusion. IEEE Trans. Image Process. 21(11), 4662–4667 (2012)

Roser, M., Dunbabin, M., Geiger, A.: Simultaneous underwater visibility assessment, enhancement and improved stereo. In: Robotics and Automation (ICRA), 2014 IEEE International Conference on, pp. 3840–3847 (2014)

Mori, K., Ogasawara, H., Nakamura, T., Tsuchiya, T., Endoh, N.: Design and convergence performance analysis of aspherical acoustic lens applied to ambient noise imaging in actual ocean experiment. Jpn. J. Appl. Phys. 50(7S), 07HG09 (2011)

Kussmann, J., Luenser, A., Beer, M., Ochsenfeld, C.: A reduced-scaling density matrix-based method for the computation of the vibrational Hessian matrix at the self-consistent field level. J. Chem. Phys. 142(9), 094101 (2015)

Sundararajan, S.K., Gomathi, B.S., Priya, D.S.: Continuous set of image processing methodology for efficient image retrieval using BOW SHIFT and SURF features for emerging image processing applications. In 2017 International Conference on Technological Advancements in Power and Energy (TAP Energy), pp. 1–7. IEEE (2015)

Ghani, A.S.A., Isa, N.A.M.: Underwater image quality enhancement through Rayleigh-stretching and averaging image planes. Int. J. Naval Arch. Ocean Eng. 6(4), 840–866 (2014)

Ghani, A.S.A., Isa, N.A.M.: Underwater image quality enhancement through composition of dual-intensity images and Rayleigh-stretching. SpringerPlus 3(1), 757 (2014)

Ghani, A.S.A., Isa, N.A.M.: Underwater Image Contrast Enhancement through Multilevel Histogram Modification Based on Color Channels Percentages (2014). https://doi.org/10.13140/RG.2.1.2037.6405

Ghani, A.S.A., Isa, N.A.M.: Enhancement of low quality underwater image through integrated global and local contrast correction. Appl. Soft Comput. 37, 332–344 (2015)

Ghani, A.S.A., Isa, N.A.M.: Underwater image quality enhancement through integrated color model with Rayleigh distribution. Appl. Soft Comput. 27, 219–230 (2015)

Seemakurthy, K., Rajagopalan, A.N.: Deskewing of underwater images. IEEE Trans. Image Process. 24(3), 1046–1059 (2015)

Iqbal, K., Odetayo, M., James, A., Salam, R.A., Talib, A.Z.H.: Enhancing the low quality images using unsupervised colour correction method. In: Systems Man and Cybernetics (SMC), 2010 IEEE International Conference on, pp. 1703–1709. IEEE (2010)

Iqbal, K., Salam, R.A., Osman, A., Talib, A.Z.: Underwater image enhancement using an integrated colour model. IAENG Int. J. Comput. Sci. 34(2), 239–244 (2007)

Chernov, V., Alander, J., Bochko, V.: Integer-based accurate conversion between RGB and HSV color spaces. Comput. Electr. Eng. 46, 328–337 (2015)

AbuNaser, A., Doush, I.A., Mansour, N., Alshattnawi, S.: Underwater image enhancement using particle swarm optimization. J. Intell. Syst. 24(1), 99–115 (2014). https://doi.org/10.1515/jisys-2014-0012

Panetta, K., Gao, C., Agaian, S.: Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 41(3), 541–551 (2016)

Xu, T., Yang, K., Xia, M., Li, W., Guo, W.: Underwater linear object detection based on optical imaging. In: AOPC 2017: Optical Sensing and Imaging Technology and Applications, Vol. 10462, p. 1046221. International Society for Optics and Photonics (2017)

Bianco, G., Neumann, L.: A fast enhancing method for non-uniformly illuminated underwater images. In: OCEANS 2017 - Anchorage, Anchorage, AK, pp. 1–6. IEEE (2017)

Galdran, A., Pardo, D., Picón, A., Alvarez-Gila, A.: Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 26, 132–145 (2015). https://doi.org/10.1016/j.jvcir.2014.11.006

Bianco, G., Muzzupappa, M., Bruno, F., Garcia, R., Neumann, L.: A new color correction method for underwater imaging. ISPRS Int. Arch. Photogramm. Remote Sens. Sp. Inf. Sci. 40(5), 25–32 (2015). https://doi.org/10.5194/isprsarchives-XL-5-W5-25-2015

Li, C., Quo, J., Pang, Y., Chen, S., Wang, J.: Single underwater image restoration by blue-green channels dehazing and red channel correction. In: Acoustics, Speech and Signal Processing (ICASSP), 2016 IEEE International Conference on, pp. 1731–1735. IEEE (2016)

Fu, X., Fan, Z., Ling, M., Huang, Y., Ding, X.: Two-step approach for single underwater image enhancement. In: Intelligent Signal Processing and Communication Systems (ISPACS), 2017 International Symposium on, pp. 789–794. IEEE (2017)

Ancuti, C.O., Ancuti, C., De Vleeschouwer, C., Bekaert, P.: Color balance and fusion for underwater image enhancement. IEEE Trans. Image Process. 27(1), 379–393 (2018)

Petit, F., Capelle-Laize, A.S., Carre, P.: Underwater image enhancement by attenuation inversion with quaternions. In: Acoustics, Speech and Signal Processing, 2009. ICASSP 2009. IEEE International Conference on, pp. 1177–1180. IEEE (2009)

Gonzalez, R.C.: Digital image processing. (2016)

Hurtós, N., Ribas, D., Cufí, X., Petillot, Y., Salvi, J.: Fourier-based registration for robust forward-looking sonar mosaicing in low-visibility underwater environments. J. Field Rob. 32(1), 123–151 (2015)

Bernotas, M., Nelson, C.: Probability density function analysis for optimization of underwater optical communications systems. In: OCEANS'15 MTS/IEEE Washington, pp. 1–8. IEEE (2015)

Ghani, A.S.A., Isa, N.A.M.: Homomorphic filtering with image fusion for enhancement of details and homogeneous contrast of underwater image. (2015)

Lu, H., Li, Y., Hu, X., Yang, S., Serikawa, S.: Real-time underwater image contrast enhancement through guided filtering. In: International Conference on Image and Graphics, pp. 137–147. Springer, Cham (2015)

Serikawa, S., Lu, H.: Underwater image dehazing using joint trilateral filter. Comput. Electr. Eng. 40(1), 41–50 (2014)

Namdeo, A., Bhadoriya, S.S.: A review on image enhancement techniques with its advantages and disadvantages. Int. J. Sci. Adv. Res. Technol. 2(5), 171–182 (2016)

Cai, B., Xu, X., Jia, K., Qing, C., Tao, D.: Dehazenet: an end-to-end system for single image haze removal. IEEE Trans. Image Process. 25(11), 5187–5198 (2016)

Qiao, X., Bao, J., Zhang, H., Zeng, L., Li, D.: Underwater image quality enhancement of sea cucumbers based on improved histogram equalization and wavelet transform. Inf. Process. Agric. 4(3), 206–213 (2017)

Khosravi, M.R., Moghimi, M.K.: Underwater optical image processing. Mod. Approach Oceanogr. Petrochem. Sci. 1(1), 1–2 (2018)

Jaybhay, J., Shastri, R.: A study of speckle noise reduction filters. Signal Image Process. Int. J. (SIPIJ) 6(3), 71–80 (2015). https://doi.org/10.5121/sipij.2015.6306

Shechtman, Y., Weiss, L.E., Backer, A.S., Sahl, S.J., Moerner, W.E.: Precise three-dimensional scan-free multiple-particle tracking over large axial ranges with tetrapod point spread functions. Nano Lett. 15(6), 4194–4199 (2015)

Rezaee, K., Haddadnia, J., Tashk, A.: Optimized clinical segmentation of retinal blood vessels by using combination of adaptive filtering, fuzzy entropy and skeletonization. Appl. Soft Comput. 52, 937–951 (2017)

Ghani, A.S.A., Isa, N.A.M.: Automatic system for improving underwater image contrast and color through recursive adaptive histogram modification. Comput. Electron. Agric. 141, 181–195 (2017)

Althaf, S.K., Basha, J., Shaik, M.A.: A Study on histogram equalization techniques for underwater image enhancement. Int. J. Sci. Res. Comput. Sci. Eng. Inf. Technol. 2(5), 210–216 (2017)

Fiuzy, M.M., Rezaei, K.F., Haddadnia, J.M.: A novel approach for segmentation special region in an image. Majlesi J. Multimed. Process. 1(2) (2011)

Rezaee, A., Rezaee, K., Haddadnia, J., Gorji, H.T.: Supervised meta-heuristic extreme learning machine for multiple sclerosis detection based on multiple feature descriptors in MR images. SN Appl. Sci. 2, 1–19 (2020)

Badgujar, P.N., Singh, J.K.: Underwater image enhancement using generalized histogram equalization, discrete wavelet transform & KL-transform. Int. J. Innov. Res. Sci. Eng. Technol. (IJIRSET) 6(6), 11834–11840 (2017). https://doi.org/10.15680/IJIRSET.2017.0606169

Lu, H., Li, Y., Nakashima, S., Kim, H., Serikawa, S.: Underwater image super-resolution by descattering and fusion. IEEE Access 5, 670–679 (2017)

Galusha, A., Galusha, G., Keller, J.M., Zare, A.: A fast target detection algorithm for underwater synthetic aperture sonar imagery. In: Detection and Sensing of Mines, Explosive Objects, and Obscured Targets XXIII, Vol. 10628, p. 106280Z. International Society for Optics and Photonics (2018)

Hu, H., Zhao, L., Huang, B., Li, X., Wang, H., Liu, T.: Enhancing visibility of polarimetric underwater image by transmittance correction. IEEE Photon. J. 9(3), 1–10 (2017). https://doi.org/10.1109/JPHOT.2017.2698000

Khosravi, M.R.: The shortfalls of underwater sensor network simulators. Sea Technol. 60(5), 41 (2019)

Wang, N., Zheng, H., Zheng, B.: Underwater Image Restoration via Maximum Attenuation Identification. IEEE Access 5, 18941–18952 (2017)

Wang, Y., Liu, H., Chau, L.P.: Single underwater image restoration using adaptive attenuation-curve prior. IEEE Trans. Circ. Syst. I Regul. Pap. 65(3), 992–1002 (2018)

Peng, Y.T., Cosman, P.C.: Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 26(4), 1579–1594 (2017)

Hu, H., Zhao, L., Li, X., Wang, H., Liu, T.: Underwater image recovery under the non-uniform optical field based on polarimetric imaging. IEEE Photon. J. (2018). https://doi.org/10.1109/JPHOT.2018.2791517

Li, J., Skinner, K.A., Eustice, R.M., Johnson-Roberson, M.: WaterGAN: unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 3(1), 387–394 (2018). https://doi.org/10.1109/LRA.2017.2730363

Moghimi, M.K., Mohanna, F.: Real-time underwater image resolution enhancement using super-resolution with deep convolutional neural networks. J Real-Time Image Proc (2020). https://doi.org/10.1007/s11554-020-01024-4

Mercado, M.A., Ishii, K., Ahn, J.: Deep-sea image enhancement using multi-scale retinex with reverse color loss for autonomous underwater vehicles. In: OCEANS-Anchorage, 2017, pp. 1–6. IEEE (2017)

Khosravi, M.R., Basri, H., Rostami, H.: Efficient routing for dense UWSNs with high-speed mobile nodes using spherical divisions. J. Supercomput. 74(2), 696–716 (2018)

Ghani, A.S.A., Nasir, A.F.A., Tarmizi, W.F.W.: Integration of enhanced background filtering and wavelet fusion for high visibility and detection rate of deep sea underwater image of underwater vehicle. In: Information and Communication Technology (ICoIC7), 2017 5th International Conference on, pp. 1–6. IEEE (2017)

Moghimi, M.K., Mohanna, F.: A joint adaptive evolutionary model towards optical image contrast enhancement and geometrical reconstruction approach in underwater remote sensing. SN Appl. Sci. 1(10), 1242 (2019). https://doi.org/10.1007/s42452-019-1255-0

Chen, Z., Zhang, Z., Dai, F., Bu, Y., Wang, H.: Monocular vision-based underwater object detection. Sensors 17(8), 1784 (2017)

Wang, N., Zheng, B., Zheng, H., Yu, Z.: Feeble object detection of underwater images through LSR with delay loop. Opt. Express 25(19), 22490–22498 (2017)

Lu, H., Serikawa, S.: Underwater scene enhancement using weighted guided median filter. In: 2014 IEEE International Conference on Multimedia and Expo (ICME), pp. 1–6. IEEE (2014)

Yang, M., Sowmya, A.: An underwater color image quality evaluation metric. IEEE Trans. Image Process. 24(12), 6062–6071 (2015)

Wang, Z., Bovik, A.C., Sheikh, H.R., Simoncelli, E.P.: Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 13(4), 600–612 (2004)

Arredondo, M., Lebart, K.: A methodology for the systematic assessment of underwater video processing algorithms. In: Oceans 2005-Europe, Vol. 1, pp. 362–367. IEEE (2005)

Fandos, R., Zoubir, A.M.: Optimal feature set for automatic detection and classification of underwater objects in SAS images. IEEE J. Sel. Top. Signal Process. 5(3), 454–468 (2011)

Lee, E.A.: Cyber physical systems: Design challenges. In: 2008 11th IEEE International Symposium on Object and Component-Oriented Real-Time Distributed Computing (ISORC), pp. 363–369. IEEE (2008)

Li, C., Anwar, S., Porikli, F.: Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recogn. 98, 107038 (2020)

Yang, M., Hu, K., Du, Y., Wei, Z., Sheng, Z., Hu, J.: Underwater image enhancement based on conditional generative adversarial network. Signal Process. Image Commun. 81, 115723 (2020)

Jay, S., Guillaume, M., Blanc-Talon, J.: Underwater target detection with hyperspectral data: solutions for both known and unknown water quality. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 5(4), 1213–1221 (2012)

Chuang, M.C., Hwang, J.N., Williams, K.: A feature learning and object recognition framework for underwater fish images. IEEE Trans. Image Process. 25(4), 1862–1872 (2016)

Chikkerur, S., Sundaram, V., Reisslein, M., Karam, L.J.: Objective video quality assessment methods: a classification, review, and performance comparison. IEEE Trans. Broadcast. 57(2), 165 (2011)

Boudhane, M., Nsiri, B.: Underwater image processing method for fish localization and detection in submarine environment. J. Vis. Commun. Image Represent. 39, 226–238 (2016)

Li, C.Y., Guo, J.C., Cong, R.M., Pang, Y.W., Wang, B.: Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans. Image Process. 25(12), 5664–5677 (2016)

Liu, X., Zhong, G., Liu, C., Dong, J.: Underwater image colour constancy based on DSNMF. IET Image Proc. 11(1), 38–43 (2017). https://doi.org/10.1049/iet-ipr.2016.0543

Ghani, A.S.A., Aris, R.S.N.A.R., Zain, M.L.M.: Unsupervised contrast correction for underwater image quality enhancement through integrated-intensity stretched-Rayleigh histograms. J. Telecommun. Electron. Comput. Eng. (JTEC) 8(3), 1–7 (2016). http://journal.utem.edu.my/index.php/jtec/article/view/993

Yemelyanov, K.M., Lin, S.S., Pugh, E.N., Jr., Engheta, N.: Adaptive algorithms for two-channel polarization sensing under various polarization statistics with nonuniform distributions. Appl. Opt. 45(22), 5504–5520 (2006)

Huang, B., Liu, T., Hu, H., Han, J., Yu, M.: Underwater image recovery considering polarization effects of objects. Opt. Express 24(9), 9826–9838 (2016)

Tan, C.S., Sluzek, A., GL, G.S., Jiang, T.Y.: Range gated imaging system for underwater robotic vehicle. In: OCEANS 2006-Asia Pacific, pp. 1–6. IEEE (2007)

Tan, C., Seet, G., Sluzek, A., He, D.: A novel application of range-gated underwater laser imaging system (ULIS) in near-target turbid medium. Opt. Lasers Eng. 43(9), 995–1009 (2005)

Fattal, R.: Single image dehazing. ACM Trans. Graph. (TOG) 27(3), 1–9 (2008). https://doi.org/10.1145/1360612.1360671

Fattal, R.: Dehazing using color-lines. ACM Trans. Graph. (TOG) 34(1), 1–13 (2014)

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011)

Ancuti, C.O., Ancuti, C.: Single image dehazing by multi-scale fusion. IEEE Trans. Image Process. 22(8), 3271–3282 (2013)

Chiang, J.Y., Chen, Y.-C.: Underwater image enhancement by wavelength compensation and dehazing (WCID). IEEE Trans. Image Process. 21(4), 1756–1769 (2012)

Lu, H., Li, Y., Zhang, L., Serikawa, S.: Contrast enhancement for images in turbid water. JOSA A 32(5), 886–893 (2015). https://doi.org/10.1364/JOSAA.32.000886

Garcia, R., Nicosevici, T., Cufí, X.: On the way to solve lighting problems in underwater imaging. In: OCEANS'02 MTS/IEEE, Vol. 2, pp. 1018–1024. IEEE (2002)

Fu, X., Cao, X.: Underwater image enhancement with global-local networks and compressed-histogram equalization. Signal Process. Image Commun., 115892 (2020)

Bekaert, P., Haber, T., Ancuti, C.O., Ancuti, C.: Enhancing underwater images and videos by fusion. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 81–88. IEEE (2012)

Gibson, K.B.: Preliminary results in using a joint contrast enhancement and turbulence mitigation method for underwater optical imaging. In: OCEANS'15 MTS/IEEE Washington, pp. 1–5. IEEE (2015)

Arnold-Bos, A., Malkasse, J.P., Kervern, G.: A preprocessing framework for automatic underwater images denoising. In: European Conference on Propagation and Systems (2005)

Rizzi, A., Gatta, C., Marini, D.: A new algorithm for unsupervised global and local color correction. Pattern Recogn. Lett. 24(11), 1663–1677 (2003)

Lu, H., Li, Y., Serikawa, S.: Single underwater image descattering and color correction. In: Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference on, pp. 1623–1627. IEEE (2015)

Liang, Z., Wang, Y., Ding, X., Mi, Z., Fu, X.: Single underwater image enhancement by attenuation map guided color correction and detail preserved dehazing. Neurocomputing 86, 115892 (2020)

Li, Y., Lu, H., Li, J., Li, X., Li, Y., Serikawa, S.: Underwater image de-scattering and classification by deep neural network. Comput. Electr. Eng. 54, 68–77 (2016)

Schmid, M.S., Aubry, C., Grigor, J., Fortier, L.: The LOKI underwater imaging system and an automatic identification model for the detection of zooplankton taxa in the Arctic Ocean. Methods Oceanogr. 15–16, 129–160 (2016). https://doi.org/10.1016/j.mio.2016.03.003

Mahmood, A., Bennamoun, M., An, S., Sohel, F., Boussaid, F., Hovey, R., Fisher, R.B.: Deep learning for coral classification. In: Handbook of Neural Computation, pp. 383–401 (2016)

Qing, X., Nie, D., Qiao, G., Tang, J.: Classification for underwater small targets with different materials using bio-inspired Dolphin click. In: Ocean Acoustics (COA), 2016 IEEE/OES China, pp. 1–6. IEEE (2016)

Faillettaz, R., Picheral, M., Luo, J.Y., Guigand, C., Cowen, R.K., Irisson, J.O.: Imperfect automatic image classification successfully describes plankton distribution patterns. Methods Oceanogr. 15–16, 60–77 (2016). https://doi.org/10.1016/j.mio.2016.04.003

Mobley, C.D.: Light and Water: radiative Transfer in Natural Waters. Academic Press, Cambridge (1994)

Schettini, R., Corchs, S.: Underwater image processing: state of the art of restoration and image enhancement methods. EURASIP J. Adv. Signal Process. 1, 1–14 (2010). https://doi.org/10.1155/2010/746052

Hou, W.W.: A simple underwater imaging model. Opt. Lett. 34(17), 2688–2690 (2009)

Yang, M., Hu, J., Li, C., Rohde, G., Du, Y., Hu, K.: An in-depth survey of underwater image enhancement and restoration. IEEE Access 7, 123638–123657 (2019). https://doi.org/10.1109/ACCESS.2019.2932611

Anwar, S., Li, C.: Diving deeper into underwater image enhancement: A survey. arXiv:1907.07863 (2019)

Murez, Z., Treibitz, T., Ramamoorthi, R., Kriegman, D.: Photometric stereo in a scattering medium. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 3415–3423 (2015)

Åhlén, J., Sundgren, D., Bengtsson, E.: Application of underwater hyperspectral data for color correction purposes. Pattern Recogn. Image Anal. 17(1), 170–173 (2007)

Chambah, M., Semani, D., Renouf, A., Courtellemont, P., Rizzi, A.: Underwater color constancy: enhancement of automatic live fish recognition. In: Proc. SPIE 5293, Color Imaging IX: Processing, Hardcopy, and Applications, pp. 157–169 (2003). https://doi.org/10.1117/12.524540

Lu, H., Li, Y., Xu, X., He, L., Li, Y., Dansereau, D., Serikawa, S.: Underwater image descattering and quality assessment. In: Image Processing (ICIP), 2016 IEEE International Conference on, pp. 1998–2002. IEEE (2016)

Hou, W., Weidemann, A.D.: Objectively assessing underwater image quality for the purpose of automated restoration. In: Visual Information Processing XVI, Vol. 6575, p. 65750Q. International Society for Optics and Photonics (2007)

Hollinger, G.A., Mitra, U., Sukhatme, G.S.: Active classification: theory and application to underwater inspection. In: Robotics Research, pp. 95–110. Springer, Cham (2017)

Kumar, N., Mitra, U., Narayanan, S.S.: Robust object classification in underwater sidescan sonar images by using reliability-aware fusion of shadow features. IEEE J. Ocean. Eng. 40(3), 592–606 (2015)

Yu, X., Wei, Y., Zhu, M., Zhou, Z.: Automated classification of zooplankton for a towed imaging system. In: OCEANS 2016-Shanghai, pp. 1–4. IEEE (2016)


Cite this article

Moghimi, M.K., Mohanna, F. Real-time underwater image enhancement: a systematic review. J Real-Time Image Proc 18, 1509–1525 (2021). https://doi.org/10.1007/s11554-020-01052-0
