Abstract

In this paper, a two-step image enhancement method is presented. In the first step, color correction and underwater image quality enhancement are performed when artifacts such as darkening, haze and fog are present. In the second step, the resolution of the image enhanced in the previous step is increased using a convolutional neural network (CNN) with deep learning capability. This two-step technique, which combines image quality enhancement and super-resolution, was adopted because a robust strategy is needed to visually improve underwater images at different depths and under diverse artifact conditions. The real-time algorithm shows satisfactory effectiveness and robustness for various underwater images under different conditions, and several experiments were conducted on two image datasets. In both stages and for each dataset, the mean square error (MSE), peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) measures were computed. In addition, low computational complexity and suitable outputs were obtained for different artifacts representing different water depths, which makes a real-time system achievable. The super-resolution network gives the best response with a medium number of layers. For this reason, processing time is also one of the major factors reported in this research. Applying this model to underwater imaging systems can yield more accurate and detailed information.


1 Introduction

Underwater imaging plays an important role in ocean exploration, but underwater images often suffer from severe quality degradation due to artifacts and light absorption. Today, most underwater vehicles used for exploration are equipped with optical cameras to capture visual data of underwater objects, shipwrecks, coral reefs, pipelines, telecommunication lines and so on in seas and oceans [1, 2]. For example, for regular inspections of oil and gas pipelines, remotely operated vehicles (ROVs) equipped with such cameras are deployed, and human operators onshore analyze the images these vehicles transmit [3,4,5]. However, because of the physical properties of the aquatic environment, the color images captured with these cameras have poor visual quality, haze, uneven luminosity and a limited field of view, which also hinders their use in real time. Light is attenuated exponentially as it travels through water, which gives these images low contrast and a hazy appearance [6,7,8,9,10,11,12]. Absorption, which reduces the energy of light, and scattering, which changes the path of light rays, are the two main factors responsible for underwater light attenuation [11, 13]. Floating particles, known as “sea snow” [14], vary in type and concentration and increase both absorption and scattering. Another challenge is that artificial lighting, used to extend the range of vision, concentrates light at the center of the image rather than at its edges [8]. A further issue is the greenish or bluish color cast of underwater images.

Real-time image processing techniques are in high demand in automated systems that require high performance, such as remote sensing, manufacturing processes and multimedia applications. To meet this requirement, some studies have proposed image processing and machine learning systems for real-time underwater imaging [68, 69].

Underwater light attenuation restricts the visual range to about 2 m in clear water [15, 16] and to less than 2 m in murky water [17, 18]. As we go deeper, light dims and colors disappear in the order of their wavelength [19,20,21]. For example, red disappears at a depth of 3 m; orange then fades until it disappears completely at a depth of 5 m; yellow mostly disappears at a depth of 10 m; and green and purple eventually vanish at greater depths. In addition, the low resolution of underwater images is likely to complicate object detection.
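As a rough worked example of the exponential (Beer-Lambert) attenuation described above, the sketch below converts the depths quoted in this paragraph into implied attenuation coefficients. It assumes that a color "disappears" when only 1% of its light remains and that light follows a single exponential law \(I(d) = I_0 e^{-c d}\); both the 1% threshold and the helper names are illustrative assumptions, not values from this paper.

```python
import math

# Depths (m) at which, according to the text, each color disappears.
VANISH_DEPTH_M = {"red": 3.0, "orange": 5.0, "yellow": 10.0}


def implied_coefficient(depth_m, residual=0.01):
    """Attenuation coefficient c (1/m) for which exp(-c * depth_m) == residual.
    The 1% residual is an assumed definition of 'disappears'."""
    return -math.log(residual) / depth_m


def remaining_fraction(path_m, coeff):
    """Beer-Lambert law: fraction of light left after a path of path_m metres."""
    return math.exp(-coeff * path_m)


if __name__ == "__main__":
    for color, depth in VANISH_DEPTH_M.items():
        c = implied_coefficient(depth)
        print(f"{color}: c = {c:.2f} 1/m, fraction left after 1 m = {remaining_fraction(1.0, c):.2f}")
```

Under these assumptions, red gets c ≈ 1.54 per metre, so only about 22% of red light survives the first metre of water.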

Many methods have been developed to overcome the low resolution of underwater images. Nevertheless, inherent limitations such as artifacts, blurring and noise have highlighted the importance of developing better methods and strategies for underwater photography and, consequently, image processing. One approach to improving image quality that has recently received growing scholarly attention is super-resolution, which can yield promising results.

The rest of this paper is organized as follows. In Sect. 2, we review related work, including real-time techniques for underwater applications. Section 3 introduces the proposed method and its components. Section 4 presents the simulation results as quantitative outputs and qualitative features. Practical results are discussed in Sect. 4.5, and conclusions are given in Sect. 5.

2 Related work

Noise is a major cause of poor image quality. Noise reduction is the process of removing noise from the underlying signal. In underwater images, in addition to noise, other degrading factors include inadequate light, low contrast, non-uniform lighting, shadows, suspended particles and other disturbances [22, 23].

Approaches reported for underwater images include light wavelength compensation [24,25,26,27], physical and non-physical models [28, 29] and hardware-based polarization [30, 31].

Bianco and Neumann [29] used a fast, non-uniform method to improve underwater images, based on the gray-world assumption applied after a color space transformation. The color correction adapts to local changes in luminance and chrominance, which are computed with a summed-area table. Galdran et al. [25] used the red channel as a color decomposition strategy.
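A minimal sketch of the two ingredients named above, a gray-world correction and a summed-area table for fast local means, is given below. It works directly on RGB values in [0, 1] rather than in the transformed color space used by Bianco and Neumann, and the function names and window radius are hypothetical, so it illustrates the idea rather than reproducing their method.

```python
import numpy as np


def box_mean(channel, radius):
    """Mean over a (2*radius+1)^2 window at every pixel, via a summed-area table."""
    h, w = channel.shape
    sat = np.zeros((h + 1, w + 1))
    sat[1:, 1:] = channel.cumsum(axis=0).cumsum(axis=1)  # sat[i, j] = sum of channel[:i, :j]
    rows, cols = np.arange(h), np.arange(w)
    y0, y1 = np.clip(rows - radius, 0, h), np.clip(rows + radius + 1, 0, h)
    x0, x1 = np.clip(cols - radius, 0, w), np.clip(cols + radius + 1, 0, w)
    # Four look-ups per pixel regardless of window size (windows are cropped at borders).
    total = (sat[np.ix_(y1, x1)] - sat[np.ix_(y0, x1)]
             - sat[np.ix_(y1, x0)] + sat[np.ix_(y0, x0)])
    return total / np.outer(y1 - y0, x1 - x0)


def local_gray_world(img, radius=32, eps=1e-6):
    """Gray-world correction with local means: rescale each channel so that its
    local mean matches the local mean over all three channels (the local gray)."""
    means = np.stack([box_mean(img[..., c], radius) for c in range(3)], axis=-1)
    gray = means.mean(axis=-1, keepdims=True)
    return np.clip(img * gray / (means + eps), 0.0, 1.0)
```

Because each local mean costs four table look-ups, the cost per pixel does not grow with the window size, which is what makes this kind of local correction fast.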

In [26,27,28,29,30], an effective color correction strategy based on a piecewise linear transform was introduced to address color distortion. As an innovative contrast enhancement method, it was able to reduce the artifacts caused by low contrast. Since most of the computations are performed pixel by pixel, the method was suitable for real-time implementation.
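The sketch below shows one simple piecewise linear transform of the kind described above: a three-segment curve that clips each channel at two percentiles and stretches the range between them linearly. The percentile values and function names are assumptions for illustration; the cited works may use different breakpoints. Apart from computing two percentiles per channel, every operation is a per-pixel mapping, which is what makes such methods cheap enough for real-time use.

```python
import numpy as np


def piecewise_stretch(channel, low_pct=1.0, high_pct=99.0):
    """Three-segment piecewise linear map: values below the low percentile go to 0,
    values above the high percentile go to 1, and the range between is stretched linearly."""
    lo, hi = np.percentile(channel, [low_pct, high_pct])
    if hi <= lo:  # (near-)constant channel: nothing to stretch
        return channel.astype(float)
    return np.interp(channel, [lo, hi], [0.0, 1.0])


def correct_color(img, low_pct=1.0, high_pct=99.0):
    """Stretch each RGB channel independently, which also evens out a color cast."""
    return np.stack([piecewise_stretch(img[..., c], low_pct, high_pct)
                     for c in range(3)], axis=-1)
```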

In recent years, convolutional neural networks (CNNs) have received increasing attention [31]. Deep CNNs have recently become an integral part of machine learning, used not only for classification but also in other fields [32, 33]. There are, however, few studies on deep learning techniques for image retrieval and resolution enhancement. Conventional methods in the field include fully connected multilayer perceptron networks, which have been applied to natural images for tasks such as noise removal [34] and defogging [35]. One method for image restoration and resolution improvement that is less concerned with noise removal is that of Cue et al. [36], which used auto-encoder networks for resolution enhancement, including pipeline methods [37].

Super-resolution can provide deeper insights into image analysis. Different methods have been proposed for this purpose, each with its own advantages and disadvantages [38,39,40]. In general, there are two basic families of methods for correcting low resolution in images: reconstruction-based methods and learning-based methods. In the former, input images are analyzed using only the data contained in the image itself, together with rules such as edge extension, curvature preservation and pixel interpolation [41,42,43,44,45,46].
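As an example of a reconstruction-based method that relies only on the pixels of the input image, the sketch below upscales a grayscale image by an integer factor with bilinear interpolation. Bilinear interpolation is a simpler stand-in for the Bicubic baseline compared later; the function name and the corner-aligned sampling grid are assumptions of this sketch.

```python
import numpy as np


def bilinear_upscale(img, scale):
    """Upscale a 2-D image by an integer factor with bilinear interpolation.
    The output grid is aligned with the input corners (an assumption of this sketch)."""
    h, w = img.shape
    ys = np.linspace(0, h - 1, h * scale)
    xs = np.linspace(0, w - 1, w * scale)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    # Interpolate along x on the two neighboring rows, then along y between them.
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bottom = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy
```

Such interpolation cannot create detail that is absent from the low-resolution input, which is the main motivation for the learning-based methods discussed next.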

2.1 Real-time underwater image enhancement

Underwater absorption and scattering influence the overall performance of marine systems. Forward scattering (small-angle scattering) is the random deviation of light travelling from the object to the camera, which blurs images. Backscattering is light reflected toward the camera by waterborne particles before it reaches the object; it typically limits image contrast. Absorption and scattering are not caused by the water alone: other factors, such as insoluble natural substances and small visible particles floating in the water, also contribute [13].

Real-time design of both algorithms and hardware is necessary for enhancing noisy images. Several enhancement algorithms have been developed for real-time operation, including contrast-limited adaptive histogram equalization (CLAHE) [8], Retinex [58, 59], color correction [60,61,62,63] and classification models [64,65,66,67].
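For reference, the sketch below implements a generic single-scale Retinex, one of the algorithm families listed above: the log of each pixel minus the log of a Gaussian-blurred copy, which suppresses slowly varying illumination. It is not the specific variant of [58, 59]; the σ value, the edge padding and the helper names are assumptions.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian sampled out to 3 sigma."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(channel, sigma):
    """Separable Gaussian blur with edge padding: a row pass, then a column pass."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(channel, r, mode="edge")
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def single_scale_retinex(channel, sigma=15.0, eps=1e-6):
    """R = log(I) - log(G_sigma * I): removes the smooth illumination estimate."""
    c = channel.astype(float) + eps
    return np.log(c) - np.log(gaussian_blur(c, sigma))
```

Because the output depends on the ratio between a pixel and its blurred surroundings, multiplying the whole image by a constant brightness factor leaves the result essentially unchanged.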

In [68], a Fourier transform structure is used to detect targets in real time in highly complex images. In some cases, unsupervised methods implemented as a separate network not only enhance image quality but can also detect the objects in the images effectively and in real time [69]. One such approach is an unsupervised generative network that produces high-quality underwater images and corrects their color.

2.2 Main focus and contribution on real-time processing

One of the main causes of image quality loss is the absorption of light by water. To compensate for this absorption, underwater cameras illuminate the scene with artificial lights during image acquisition, but non-uniform artificial lighting produces shadows in the scene.

An alternative strategy is to improve the lighting and sensing systems that illuminate an object before its image is captured. For these reasons, the processing time and computational complexity of some methods increase significantly. Loss of underwater image quality can result from absorption and backscattering of light. In practice, image degradation is caused by suspended particles, while haze, fog and other distortions arise from inappropriate imaging conditions. Enhancement is an important preprocessing operation for further image processing, but challenges such as high dimensionality, high resolution and a high density of unwanted noise in underwater images increase processing time and computational complexity. Many traditional enhancement procedures have been shown to improve the quality of underwater images, but their computational complexity is high; therefore, only a limited number of methods can process and enhance noisy underwater images in real time or near real time.

Although there have been fewer investigations in this area of image processing than in other subjects, processing-based methods are efficient and widely used. Real-time processing is an important property that can improve the performance of underwater image enhancement.

The main aim of this study is to improve image quality and to measure the extent of the enhancement with several different criteria. To achieve this, the proposed method divides underwater images into three categories: greenish images, bluish images and images with a desirable color balance. Fog is then removed with a modified version of the new dark component method. Next, histogram equalization is applied to the green and blue components of greenish images, and histogram stretching to the green and blue components of bluish images, to balance the colors in these two components. Finally, a deep learning technique is used to improve resolution.

3 The proposed method

The proposed method consists of two phases. In the first phase, we correct the color and remove artifacts such as haze and fog to improve contrast-based quality; in the second phase, we use deep-learning super-resolution to improve the resolution of the underwater images. Figure 1 depicts the proposed steps for improving underwater images.

3.1 Color correction and artifact removal

The dark component can be expressed as (1):

\[ J^{dark}(x) = \min_{y\in\Omega(x)}\left(\min_{c\in\{r,g,b\}} J^{c}(y)\right) \]

where \(J^{c}\) is a color component of J and \(\Omega(x)\) is a local patch centered at x. According to the dark component prior, in haze-free images the intensity of the dark component is low and close to zero everywhere except in open sky regions. In a hazy or foggy image, the intensity of the dark component gives a rough, spatially varying approximation of the haze thickness, which is crucial for removing fog or haze. For underwater images, however, this dark component is ineffective in many cases. Simply put, the dark component can be expressed as (2):

The dark component of the observed image I can then be rewritten as (3):

As we go deeper, light dims and colors disappear in the order of their wavelength. Of the three color components, red is attenuated most strongly because of its longer wavelength, so in many underwater images the values of the dark component \(I^{dark}(x)\) are small and close to zero everywhere. Consequently, the dark component cannot provide information about scene depth, and a new dark component suited to underwater images is defined as (4):

\[ J^{new}(x) = \min_{y\in\Omega(x)}\left(\min_{c\in\{g,b\}} J^{c}(y)\right) \]

where \(J^{new}\) is called the underwater dark component. Like the original dark component, the intensity of the underwater dark component should be low and close to zero. Empirically, underwater backgrounds are blue in seas and oceans and green in lakes. Because of the color cast of the background, the green and blue components of underwater images tend to be high in areas far from the camera. Hence, the underwater dark component can qualitatively reflect the distance between a scene point and the camera. Haze and fog are then removed by estimating the background light, the scattering ratio and the transmission map [11].
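The dehazing steps above can be sketched as follows, assuming the standard haze formation model \(I(x) = J(x)t(x) + A(1 - t(x))\) used by dark-channel methods, with the underwater dark component taken over the green and blue channels only. The patch size, ω = 0.95, the lower bound t0 = 0.1 and the brightest-0.1% rule for the background light A are conventional choices from the dark-channel literature, assumed here rather than stated in this paper; the scattering-ratio estimate of [11] is omitted and all function names are hypothetical.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def min_filter(channel, patch=15):
    """Minimum over an odd-sized patch x patch window around each pixel (edge-padded)."""
    r = patch // 2
    padded = np.pad(channel, r, mode="edge")
    return sliding_window_view(padded, (patch, patch)).min(axis=(-2, -1))


def dark_channel(img, channels, patch=15):
    """Eq. (1) with channels=(0, 1, 2); the underwater variant uses channels=(1, 2)."""
    return min_filter(img[..., list(channels)].min(axis=2), patch)


def background_light(img, dark, fraction=0.001):
    """Mean color of the pixels whose dark-channel values are the brightest."""
    n = max(1, int(dark.size * fraction))
    idx = np.argsort(dark.ravel())[-n:]
    return img.reshape(-1, 3)[idx].mean(axis=0)


def dehaze_underwater(img, patch=15, omega=0.95, t0=0.1):
    """img: H x W x 3 float RGB in [0, 1]. Returns (restored image J, transmission t)."""
    gb = (1, 2)  # green and blue only
    A = background_light(img, dark_channel(img, gb, patch))
    t = 1.0 - omega * dark_channel(img / np.maximum(A, 1e-6), gb, patch)
    t = np.clip(t, t0, 1.0)
    J = (img - A) / t[..., None] + A  # invert I = J t + A (1 - t)
    return np.clip(J, 0.0, 1.0), t
```

The transmission map t returned alongside the restored image is large for nearby scene points and small for distant ones, which matches the qualitative depth interpretation given above.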

After haze and fog are removed, histogram equalization and histogram stretching are used, because greenish and bluish images require color enhancement and balancing of color intensities. In greenish and bluish images, to prevent attenuation of the red component, histogram equalization or stretching is applied only to the green and blue components rather than to all three, and the red component is multiplied by a coefficient. For greenish images, this coefficient can be expressed as (5):

Here, \(\alpha_g\) is the red component coefficient of greenish images, \(M_g\) and \(M_b\) are the means of the green and blue components of the image after histogram equalization, and \(M_r\) is the mean of the red component. To balance the mean values of the color components, the greenish and bluish components are balanced as (6) [47]:

Subsequently, the red component is multiplied by a coefficient, which for bluish images can be calculated as (7):

In this relation, \(\alpha_b\) is the red component coefficient of bluish images, \(M_g\) and \(M_b\) are the means of the green and blue components of the image after histogram stretching, and \(M_r\) is the mean of the red component.
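A sketch of the per-channel balancing step follows. Histogram equalization is applied to the green and blue channels of greenish images and linear histogram stretching to those of bluish images, while the red channel is only scaled. Because Eqs. (5) and (7) are not reproduced here, the red coefficient `alpha` is passed in as a parameter rather than computed; the function names and the percentile defaults of the stretch are hypothetical.

```python
import numpy as np


def equalize(channel):
    """Histogram equalization of a uint8 channel through its cumulative histogram."""
    hist = np.bincount(channel.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant channel: nothing to equalize
        return channel.copy()
    lut = np.round((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255)
    return np.clip(lut, 0, 255).astype(np.uint8)[channel]


def stretch(channel, low_pct=0.0, high_pct=100.0):
    """Linear histogram stretching of a uint8 channel between two percentiles."""
    lo, hi = np.percentile(channel, [low_pct, high_pct])
    if hi <= lo:
        return channel.copy()
    out = (channel.astype(np.float64) - lo) / (hi - lo) * 255
    return np.clip(np.round(out), 0, 255).astype(np.uint8)


def balance(img, kind, alpha):
    """img: H x W x 3 uint8 RGB; kind: 'greenish' or 'bluish'.
    alpha: red coefficient (Eq. (5) or (7) in the text), supplied by the caller."""
    op = equalize if kind == "greenish" else stretch
    out = img.copy()
    out[..., 1] = op(img[..., 1])
    out[..., 2] = op(img[..., 2])
    red = img[..., 0].astype(np.float64) * alpha
    out[..., 0] = np.clip(np.round(red), 0, 255).astype(np.uint8)
    return out
```

Leaving the red channel out of equalization avoids amplifying its noise, since red is often nearly absent in underwater images; only its overall level is adjusted through `alpha`.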

3.2 Image super-resolution

A sparse auto-encoder is first used to learn image features. In the second step, images from a reduced dataset can be categorized using convolutional and pooling layers. The proposed CNN model uses a stochastic (random) pooling method to facilitate analysis of the network: elements of the feature map are selected according to their probability values, so elements with high probabilities are more likely to be selected. This differs from max pooling, which selects only the largest element in each region, and from mean pooling, which takes the average of the elements. Figure 2 shows the overall configuration of the deep CNN for resolution improvement.
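Stochastic pooling, as described above, can be sketched as follows for a single non-negative feature map (for example, after a ReLU): in each pooling region, one element is sampled with probability proportional to its activation. The region size, the handling of all-zero regions and the function name are assumptions of this sketch.

```python
import numpy as np


def stochastic_pool(fmap, size=2, rng=None):
    """Stochastic pooling of a non-negative H x W feature map over size x size regions."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = fmap.shape
    h, w = h - h % size, w - w % size  # drop incomplete border regions
    regions = (fmap[:h, :w]
               .reshape(h // size, size, w // size, size)
               .transpose(0, 2, 1, 3)
               .reshape(h // size, w // size, size * size))
    out = np.zeros(regions.shape[:2])
    for i in range(regions.shape[0]):
        for j in range(regions.shape[1]):
            a = regions[i, j]
            total = a.sum()
            if total <= 0:
                continue  # an all-zero region pools to 0
            # Larger activations are proportionally more likely to be picked.
            out[i, j] = rng.choice(a, p=a / total)
    return out
```

With a fixed seed the result is reproducible; during training, the randomness acts as a regularizer, while max pooling would always return the same element.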

3.2.1 Image restoration

Conventional methods for removing noise and blur based on the image convolution model first construct a feature map and then apply deconvolution to it to enhance images degraded by artifacts. The main advantage of the image restoration approach used here is that the type of artifact affecting the image does not need to be determined. It also removes the need for a pre-processing phase before the convolution-deconvolution network is built. For this purpose, we use a pseudo-inverse kernel to build a deconvolution process that is consistent with kernel separability. The inverse convolution can be expressed as (8):

where \(\widehat{K}\) is the inverse kernel, and \(u_j\) and \(v_j\) are the jth columns of U and V, respectively. The kernel itself can also be expressed as \(USV^{T}\), its singular value decomposition (SVD), where \(s_j\) is the jth singular value. When the components with small \(s_j\) are neglected, the kernel reduces to a few separable filters, so \(\widehat{K}\) can be approximated to the desired accuracy. The main idea of this research is therefore to implement the deconvolution process with separable kernels in order to recover the feature map outputs. The network can generally be written as (9):

\[ h_{l} = \sigma\left(W_{l}\,h_{l-1} + b_{l-1}\right) \]

where \(W_l\) is the weight matrix mapping the (l-1)th layer to the lth layer, \(b_{l-1}\) is the bias vector and σ(·) is a nonlinear function, either a sigmoid or a hyperbolic tangent. Unlike similar approaches, we use two hidden layers, where the first hidden layer (\(h_1\)) is created by applying kernels with dimensions smaller than those of the original image.
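To illustrate the kernel separation used here, the sketch below decomposes a 2-D kernel with NumPy's SVD into rank-one terms \(s_j u_j v_j^{T}\), each of which can be applied as a column pass followed by a row pass. Keeping only the largest singular values gives the best low-rank approximation in the Frobenius norm (the Eckart-Young theorem), which is why discarding small \(s_j\) loses little. The kernel is arbitrary; computing the pseudo-inverse kernel of a real blur is outside the scope of this sketch, and the function names are hypothetical.

```python
import numpy as np


def separable_components(kernel, rank=None):
    """Split a 2-D kernel into rank-one terms (s_j, u_j, v_j) with kernel = sum s_j u_j v_j^T."""
    U, s, Vt = np.linalg.svd(kernel)
    rank = len(s) if rank is None else rank
    return [(s[j], U[:, j], Vt[j, :]) for j in range(rank)]


def reconstruct(components):
    """Rebuild the (possibly truncated) kernel from its rank-one terms."""
    return sum(s * np.outer(u, v) for s, u, v in components)


def separable_filter_full(image, components):
    """Full 2-D convolution with sum_j s_j u_j v_j^T done as 1-D passes:
    columns with u_j, then rows with v_j, so each term costs O(k) per pixel instead of O(k^2)."""
    total = None
    for s, u, v in components:
        cols = np.apply_along_axis(lambda c, u=u: np.convolve(c, u), 0, image)
        both = np.apply_along_axis(lambda r, v=v: np.convolve(r, v), 1, cols)
        total = s * both if total is None else total + s * both
    return total
```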

3.2.2 Loss function

Network training is conducted with an iterative gradient descent algorithm comprising two steps: a feed-forward pass and back-propagation of errors (BPFNN). The squared error function is expressed as (10):

\[ E^{N} = \frac{1}{2}\sum_{n=1}^{N}\sum_{k}\left(T_{k}^{n} - Y_{k}^{n}\right)^{2} \]

where \(T_k^n\) and \(Y_k^n\) are the kth dimension of the nth pattern of the corresponding label and of the higher-resolution image predicted by the CNN, respectively. Measures of transparency and normalized correlation are also included in the cost function to improve the weights.
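A minimal NumPy sketch of Eqs. (9) and (10) and of one back-propagation update follows. It assumes tanh as σ, a single training pattern per step and plain gradient descent; the extra transparency and normalized-correlation terms of the cost function are omitted, and the layer sizes in the usage example are arbitrary. In the code, `weights[l]` and `biases[l]` correspond to \(W_{l+1}\) and \(b_l\) in (9), because Python lists are zero-based.

```python
import numpy as np


def forward(x, weights, biases, act=np.tanh):
    """Eq. (9): h_l = act(W_l h_{l-1} + b_{l-1}); returns [x, h_1, ..., h_L]."""
    hs = [x]
    for W, b in zip(weights, biases):
        hs.append(act(W @ hs[-1] + b))
    return hs


def squared_error(T, Y):
    """Eq. (10): one half of the summed squared differences over patterns and dimensions."""
    return 0.5 * np.sum((np.asarray(T, dtype=float) - np.asarray(Y, dtype=float)) ** 2)


def backprop_step(x, t, weights, biases, lr=0.05):
    """One gradient-descent update for tanh layers on one pattern (x, t), in place.
    Returns the loss measured before the update."""
    hs = forward(x, weights, biases)
    delta = (hs[-1] - t) * (1.0 - hs[-1] ** 2)  # dE/dz at the output (tanh' = 1 - h^2)
    for l in reversed(range(len(weights))):
        grad_W = np.outer(delta, hs[l])
        if l > 0:  # propagate the error before this layer's weights change
            next_delta = (weights[l].T @ delta) * (1.0 - hs[l] ** 2)
        weights[l] -= lr * grad_W
        biases[l] -= lr * delta
        if l > 0:
            delta = next_delta
    return squared_error(t, hs[-1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sizes = [4, 6, 6, 3]  # input, two hidden layers, output (arbitrary sizes)
    weights = [rng.normal(0, 0.5, (m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
    biases = [np.zeros(m) for m in sizes[1:]]
    x, t = rng.normal(size=4), np.array([0.5, -0.3, 0.2])
    for _ in range(200):
        loss = backprop_step(x, t, weights, biases)
    print(f"loss after 200 steps: {loss:.6f}")
```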

3.3 Real-time design

The approximate computation time for N × N underwater images is about 2n−9 + 27. In this approximation, the processed image is divided into N × N / 64² (that is, N²/4096) blocks of size 64 × 64. For a 16-bit 512 × 512 image, the processing is estimated at 64 mega-cycles (Mc); for an 8-bit 512 × 512 image, this value decreases to its lower limit of 32 Mc. Based on this estimate, a processor dedicated to enhancing underwater images would have to run at a clock rate of at least 2 GHz. This computational load is too costly for underwater imaging with today's technology, so the image size must be reduced for real-time processing. Some researchers have instead proposed accelerating these operations in hardware, using FPGAs, DSPs and parallel architectures [56, 57, 70, 71].
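The block count and per-frame time behind this estimate can be checked with a few lines of Python. The sketch assumes 1 Mc = 10^6 cycles and a processor that does nothing but enhancement; the helper names are hypothetical.

```python
def block_count(n, block=64):
    """Number of block x block tiles in an n x n image, i.e. n*n / block**2."""
    return (n // block) ** 2


def frame_time_s(cycles, clock_hz):
    """Seconds needed to spend `cycles` clock cycles at `clock_hz`."""
    return cycles / clock_hz


if __name__ == "__main__":
    mega = 1e6  # assumption: 1 Mc = 10**6 cycles
    for bits, mc in [(16, 64), (8, 32)]:
        t = frame_time_s(mc * mega, 2e9)
        print(f"{bits}-bit 512x512: {block_count(512)} blocks, {t * 1e3:.0f} ms per frame")
```

Under these assumptions, 64 Mc at 2 GHz takes 32 ms per 16-bit frame (about 31 frames per second), and 32 Mc takes 16 ms per 8-bit frame.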

4 Experimental results

4.1 Datasets

Two sets of underwater images were used to evaluate the performance of the hybrid algorithm. The first dataset was obtained from the National Institute of Ocean. These images were taken at a depth of 1019 m during deep-sea mineral exploration with the ROSUB 6000, which is capable of capturing images of rare underwater corals, fish, various worm species and other objects [48, 49]. The second dataset contained a series of synthetic images generated with PBRT and derived from underwater images. This set is composed of numerous images, most of which are publicly available [50] and have been used by researchers in many studies [51].

All images are three-channel (red, green and blue) JPEG files. All images had the same dimensions and the same resolution.

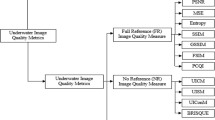

4.2 Evaluation metrics

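In the first evaluation step, the mean square error (MSE), peak signal-to-noise ratio (PSNR) and structural similarity (SSIM) measures are computed. A review of previous studies shows that MSE is one of the most popular and common criteria. The three measures are defined in (11)–(13):

\[ MSE = \frac{1}{xy}\sum_{i=1}^{x}\sum_{j=1}^{y}\left(p(i,j) - q(i,j)\right)^{2} \]

\[ PSNR = 10\log_{10}\left(\frac{255^{2}}{MSE}\right) \]

\[ SSIM = \frac{(2\mu_p\mu_q + C_1)(2\sigma_{pq} + C_2)}{(\mu_p^{2} + \mu_q^{2} + C_1)(\sigma_p^{2} + \sigma_q^{2} + C_2)} \]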

where x and y are the dimensions of the enhanced image (q) and the reference image (p). In (13), \(\mu_p\), \(\mu_q\), \(\sigma_p\) and \(\sigma_q\) are the mean brightness of the reference image, the mean brightness of the enhanced image, and the standard deviations of the reference and enhanced images, respectively; \(\sigma_{pq}\) is the covariance between the two images, and \(C_1\) and \(C_2\) are small positive constants that stabilize the division. This index compares the reference image with the enhanced image at corresponding pixel positions. Time is another important measure in image enhancement. To measure the time required to enhance sample images of different sizes, MATLAB's tic and toc functions were used, which give an estimate of the processing time.
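The three measures can be computed as in the sketch below, assuming 8-bit images (peak value 255). For brevity, SSIM is evaluated once over the whole image with the commonly used constants C1 = (0.01 L)² and C2 = (0.03 L)², where L is the peak value; standard implementations such as MATLAB's `ssim` instead average the index over local Gaussian-weighted windows, so values from this global version will differ from published tables. The function names are hypothetical.

```python
import numpy as np


def mse(p, q):
    """Eq. (11): mean of squared pixel differences."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    return float(np.mean((p - q) ** 2))


def psnr(p, q, peak=255.0):
    """Eq. (12): 10 log10(peak^2 / MSE); infinite for identical images."""
    e = mse(p, q)
    return float("inf") if e == 0 else float(10 * np.log10(peak ** 2 / e))


def ssim_global(p, q, peak=255.0):
    """Eq. (13) evaluated once over the whole image (no local windows)."""
    p, q = np.asarray(p, dtype=float), np.asarray(q, dtype=float)
    c1, c2 = (0.01 * peak) ** 2, (0.03 * peak) ** 2
    mu_p, mu_q = p.mean(), q.mean()
    var_p, var_q = p.var(), q.var()
    cov = ((p - mu_p) * (q - mu_q)).mean()
    return float(((2 * mu_p * mu_q + c1) * (2 * cov + c2))
                 / ((mu_p ** 2 + mu_q ** 2 + c1) * (var_p + var_q + c2)))
```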

4.3 Results

Tables 1 and 2 show the results of simulated underwater image quality enhancement, aimed at color correction, for the first and second datasets. Each category contains reference images to which darkening, haze and fog are applied step by step, with intensities increasing progressively from 5 to 40%. The MSE, PSNR, SSIM and time measures are reported in these tables. A total of 200 images from the first dataset and 400 images from the second dataset were randomly selected for the quantitative evaluation. Although the added artifacts degraded the measures, their values remained acceptable compared with other strategies, which indicates satisfactory performance of the algorithm in the first phase. Tables 3 and 4 report the results of resolution improvement by the convolutional learning model. Several existing methods were compared with the proposed method in terms of resolution enhancement, using the same measures. Image correction is assumed to have been carried out in the first phase before the proposed super-resolution algorithm is applied. In the comparison, different image scales are analyzed, and the proposed method is compared with Bicubic interpolation, SRCNN [39], FSRCNN [40], A+ [41] and RFL [42]. Overall, the proposed method achieves better results than these previous models.

Figures 3 and 4 show the outputs of the proposed method for improving the quality of underwater images, in two separate phases, for increasing percentages of darkening, haze and fog. Figures 5 and 6 show the effect of the proposed deep-learning super-resolution algorithm on two sample images at four scales.

First part of image quality enhancement for a sample image from the first dataset. The first column contains images with 10, 20, 30 and 40% artifacts applied, from left to right. The second column shows the first step of color correction and quality improvement applied to the images in the first column.

First part of image quality enhancement for a sample image from the second dataset. The first column contains images with 10, 20, 30 and 40% artifacts applied, from left to right. The second column shows the first step of color correction and quality enhancement applied to the images in the first column.

4.4 Computational complexity

Data analysis was performed in MATLAB 2019 on a system with an Intel(R) Core(TM) i7 processor running at 2.8 GHz, 4 GB of RAM and a 64-bit operating system. The computational complexity and timing analysis indicate that the integrated algorithm can be considered a real-time or near-real-time method, because it obtains an enhanced image with a low level of error in little time. The processing time of the algorithm remains stable across different noise levels on both underwater image datasets. For the first dataset, with 32 × 32 images and different noise levels, the variance and the mean time are 2.36 s and 0.1088, respectively; for 256 × 256 images, the corresponding values are 3.74 s and 0.407. For the second dataset, the same criteria for 32 × 32 and 256 × 256 images are 2.26–0.0046 (s) and 0.98–0.607 (s), respectively. Although the 256 × 256 images are eight times larger in each dimension, the processing time does not change much. Moreover, the variance across different levels of noise, such as fog and haze, indicates the low computational complexity of the method, even though the calculations involve several enhancement stages. Therefore, compared with similar solutions, the processing time is satisfactory. The lightweight structure used for the super-resolution phase also compares favorably with similar methods. Some deep learning-based methods require considerable time to minimize the loss function, whereas the proposed method takes less time than the SRCNN, FSRCNN, A+ and RFL methods. Bicubic interpolation is faster on the first dataset, but it has a higher relative error in improving resolution.
Note that the proposed method is nonetheless based on a deep structure. Figures 7 and 8 compare the time complexity for different artifact enhancement levels and against other resolution-enhancement methods, respectively.

Figures 8 and 9 also compare the complexity of different methods for low- and high-dimensional enhanced images, and Fig. 10 compares them with the proposed method.

4.5 Discussion

Figure 11 compares the first-phase enhancement with other similar methods on a natural underwater sample image.

The outputs show satisfactory performance compared with other similar methods. The original underwater images with artifacts are arranged in the first column, from left to right. The following columns show the results of He et al. [50] (second column), Drews et al. [52] (third), Tarel et al. [53] (fourth), Ancuti et al. [54] (fifth), Barbosa et al. [55] (sixth) and Moghimi et al. [19], and the last column shows the proposed method.

Figure 12 shows that the average loss for all four image scales converges to its minimum after 50 epochs. There are two main reasons for this early convergence: the proposed super-resolution network has a lightweight structure with few layers, and the convolutional mapping is removed from the processing pipeline.

The accuracy of super-resolution depends on the number of layers: more layers can slightly improve accuracy, but this must be traded off against processing time and the number of training iterations.

That is, increasing the number of layers up to a certain level improves convergence accuracy and, therefore, super-resolution at each scale, but it takes longer, which is undesirable. Figures 13, 14 and 15 show the MSE, PSNR and SSIM measures computed for the two datasets in low-artifact, medium-artifact and high-artifact modes, respectively. Similarly, Figs. 16, 17 and 18 show all three measures for super-resolution with a low, medium and high number of network layers; the medium number of layers produces the best outputs.

5 Conclusion

To address the low quality of underwater images, an efficient model with quality enhancement capability has been proposed. The approach has two steps. In the first step, artifacts such as darkening, haze and fog are removed and the color is corrected; combining color correction with artifact removal in this step yields an effective enhancement model. The second step enhances the resolution of the image at various scales using a deep learning neural network. The results of this study are applicable to systems that require multiple simultaneous underwater imaging functions, and they can contribute to improving imaging systems and extending their capabilities. Poor image quality can make analysis by human operators time-consuming and laborious, which is why an effective model is required.

A notable feature of the algorithm is its lightweight structure, which handles different levels of noise efficiently and also performs image super-resolution; as a result, its computational complexity is low. In future work, we intend to further optimize image quality in both steps and to separate the images into their constituent parts.

The method can be applied in real-world settings and is suitable for a variety of underwater images.

References

Tang, C., von Lukas, U.F., Vahl, M., Wang, S., Wang, Y., Tan, M.: Efficient underwater image and video enhancement based on Retinex. SIViP 13(5), 1011–1018 (2019)

Kanaev, A.V., Smith, L.N., Hou, W.W., Woods, S.: Restoration of turbulence degraded underwater images. Opt. Eng. 51(5), 057007 (2012)

Yu, J., Wang, Y., Zhou, S., Zhai, R., Huang, S.: Unmanned aerial vehicle (UAV) image haze removal using dark channel prior. J. Phys. Conf. Ser. 1324(1), 012036 (2019)

Golts, A., Freedman, D., Elad, M.: Unsupervised single image dehazing using dark channel prior loss. IEEE Trans. Image Process. (2019)

Khosravi, M.R., Samadi, S.: Data compression in ViSAR sensor networks using non-linear adaptive weighting. EURASIP J. Wirel. Commun. Netw. 2019(1), 1–8 (2019)

Cho, Y., Malav, R., Pandey, G., Kim, A.: DehazeGAN: underwater haze image restoration using unpaired image-to-image translation. IFAC-PapersOnLine 52(21), 82–85 (2019)

Schettini, R., Corchs, S.: Underwater image processing: state of the art of restoration and image enhancement methods. EURASIP J. Adv. Signal Process. 2010, 1–4 (2010)

Lu, H., Li, Y., Zhang, Y., Chen, M., Serikawa, S., Kim, H.: Underwater optical image processing: a comprehensive review. Mobile Netw. Appl. 22(6), 1204–1211 (2017)

Sethi, R., Sreedevi, I.: Adaptive enhancement of underwater images using multi-objective PSO. Multimed. Tools Appl. 78(22), 31823–31845 (2019)

Lu, H., Li, Y., Xu, X., Li, J., Liu, Z., Li, X., Yang, J., Serikawa, S.: Underwater image enhancement method using weighted guided trigonometric filtering and artificial light correction. J. Vis. Commun. Image Represent. 38, 504–516 (2016)

Chiang, J.Y., Chen, Y.C.: Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 21(4), 1756–1769 (2011)

Khosravi, M.R., Moghimi, M.K.: Underwater optical image processing. Modern Approaches Oceanogr. Petrochem. Sci. 1(1), 1–2 (2018). https://doi.org/10.32474/MAOPS.2018.01.000101

Anwer, A., Ali, S.S., Khan, A., Mériaudeau, F.: Real-time underwater 3D scene reconstruction using commercial depth sensor. In: 2016 IEEE International Conference on Underwater System Technology: Theory and Applications (USYS), pp. 67–70. IEEE (2016)

Çelebi, A.T., Ertürk, S.: Visual enhancement of underwater images using empirical mode decomposition. Expert Syst. Appl. 39(1), 800–805 (2012)

Abas, P.E., De Silva, L.C.: Review of underwater image restoration algorithms. IET Image Proc. 13(10), 1587–1596 (2019)

Han, M., Lyu, Z., Qiu, T., Xu, M.: A review on intelligence dehazing and color restoration for underwater images. IEEE Trans. Syst. Man Cybern. Syst. (2018)

Shortis, M., Abdo, E.H.: A review of underwater stereo-image measurement for marine biology and ecology applications. In: Oceanography and Marine Biology, pp. 269–304. CRC Press (2016)

Li, C., Guo, J., Guo, C.: Emerging from water: underwater image color correction based on weakly supervised color transfer. IEEE Signal Process. Lett. 25(3), 323–327 (2018)

Moghimi, M.K., Mohanna, F.: A joint adaptive evolutionary model towards optical image contrast enhancement and geometrical reconstruction approach in underwater remote sensing. SN Appl. Sci. 1(10), 1242 (2019)

Ji, T., Wang, G.: An approach to underwater image enhancement based on image structural decomposition. J. Ocean Univ. China 14(2), 255–260 (2015)

Khosravi, M.R.: The shortfalls of underwater sensor network simulators. Sea Technol. 60(5), 41 (2019)

Ghani, A.S., Isa, N.A.: Underwater image quality enhancement through Rayleigh-stretching and averaging image planes. Int. J. Naval Archit. Ocean Eng. 6(4), 840–866 (2014)

Seemakurthy, K., Rajagopalan, A.N.: Deskewing of underwater images. IEEE Trans. Image Process. 24(3), 1046–1059 (2015)

Panetta, K., Gao, C., Agaian, S.: Human-visual-system-inspired underwater image quality measures. IEEE J. Oceanic Eng. 41(3), 541–551 (2015)

Galdran, A., Pardo, D., Picón, A., Alvarez-Gila, A.: Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 26, 132–145 (2015)

Bianco, G., Muzzupappa, M., Bruno, F., Garcia, R., Neumann, L.: A new color correction method for underwater imaging. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 40(5), 25 (2015)

Fu, X., Fan, Z., Ling, M., Huang, Y., Ding, X.: Two-step approach for single underwater image enhancement. In: 2017 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), pp. 789–794. IEEE (2017)

Iqbal, K., Salam, R.A., Osman, A., Talib, A.Z.: Underwater image enhancement using an integrated colour model. IAENG Int. J. Comput. Sci. 34(2) (2007)

Liu, Y., Xu, H., Shang, D., Li, C., Quan, X.: An underwater image enhancement method for different illumination conditions based on color tone correction and fusion-based descattering. Sensors 19(24), 5567 (2019)

Fiuzy, M.M., Rezaei, K.F., Haddadnia, J.M.: A novel approach for segmentation special region in an image. Majlesi J. Multimed. Process. 1(2) (2011)

Arel, I., Rose, D.C., Karnowski, T.P.: Research frontier: deep machine learning–a new frontier in artificial intelligence research. IEEE Comput. Intell. Mag. 5(4), 13–18 (2010)

He, K., Zhang, X., Ren, S., Sun, J.: Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37(9), 1904–1916 (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: ImageNet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

Burger, H.C., Schuler, C.J., Harmeling, S.: Image denoising: can plain neural networks compete with BM3D? In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 2392–2399. IEEE (2012)

Schuler, C.J., Burger, H.C., Harmeling, S., Schölkopf, B.: A machine learning approach for non-blind image deconvolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1067–1074 (2013)

Cui, Z., Chang, H., Shan, S., Zhong, B., Chen, X.: Deep network cascade for image super-resolution. In: European Conference on Computer Vision, pp. 49–64. Springer, Cham (2014)

Arun, P.V., Buddhiraju, K.M.: A deep learning based spatial dependency modelling approach towards super-resolution. In: 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), pp. 6533–6536. IEEE (2016)

Aymaz, S., Köse, C.: A novel image decomposition-based hybrid technique with super-resolution method for multi-focus image fusion. Inf. Fusion 45, 113–127 (2019)

Yao, T., Luo, Y., Chen, Y., Yang, D., Zhao, L.: Single-image super-resolution: a survey. In: International Conference in Communications, Signal Processing, and Systems, pp. 119–125. Springer, Singapore (2018)

Khosravi, M.R., Basri, H., Rostami, H., Samadi, S.: Distributed random cooperation for VBF-based routing in high-speed dense underwater acoustic sensor networks. J. Supercomput. 74(11), 6184–6200 (2018)

Zhang, Y., Li, K., Li, K., Wang, L., Zhong, B., Fu, Y.: Image super-resolution using very deep residual channel attention networks. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 286–301 (2018)

Kim, J., Lee, J.K., Lee, K.M.: Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1637–1645 (2016)

Dong, C., Loy, C.C., He, K., Tang, X.: Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38(2), 295–307 (2015)

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: European Conference on Computer Vision, pp. 391–407. Springer, Cham (2016)

Timofte, R., De Smet, V., Van Gool, L.: A+: adjusted anchored neighborhood regression for fast super-resolution. In: Asian Conference on Computer Vision, pp. 111–126. Springer, Cham (2014)

Schulter, S., Leistner, C., Bischof, H.: Fast and accurate image upscaling with super-resolution forests. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3791–3799 (2015)

Prabhakar, C.J., Kumar, P.U.: An image based technique for enhancement of underwater images. arXiv preprint arXiv:1212.0291 (2012)

Ramadass, G.A., Ramesh, S., Selvakumar, J.M., Ramesh, R., Subramanian, A.N., Sathianarayanan, D., Harikrishnan, G., Muthukumaran, D., Jayakumar, V.K., Chandrasekaran, E., Murugesh, M.: Deep-ocean exploration using remotely operated vehicle at gas hydrate site in Krishna-Godavari basin, Bay of Bengal. Curr. Sci. 99(6), 809–815 (2010)

Srividhya, K., Ramya, M.M.: Accurate object recognition in the underwater images using learning algorithms and texture features. Multimed. Tools Appl. 76(24), 25679–25695 (2017)

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2010)

Mobley, C.D.: Light and Water: Radiative Transfer in Natural Waters. Academic Press, Cambridge (1994)

Drews, P., Nascimento, E., Moraes, F., Botelho, S., Campos, M.: Transmission estimation in underwater single images. In: Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 825–830 (2013)

Tarel, J.P., Hautière, N.: Fast visibility restoration from a single color or gray level image. In: 2009 IEEE 12th International Conference on Computer Vision, pp. 2201–2208. IEEE (2009)

Ancuti, C., Ancuti, C.O., Haber, T., Bekaert, P.: Enhancing underwater images and videos by fusion. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 81–88. IEEE (2012)

Barbosa, W.V., Amaral, H.G., Rocha, T.L., Nascimento, E.R.: Visual-quality-driven learning for underwater vision enhancement. In: 2018 25th IEEE International Conference on Image Processing (ICIP), pp. 3933–3937. IEEE (2018)

Hitam, M.S., Awalludin, E.A., Yussof, W.N., Bachok, Z.: Mixture contrast limited adaptive histogram equalization for underwater image enhancement. In: 2013 International Conference on Computer Applications Technology (ICCAT), pp. 1–5. IEEE (2013)

Khosravi, M.R., Basri, H., Rostami, H.: Efficient routing for dense UWSNs with high-speed mobile nodes using spherical divisions. J. Supercomput. 74(2), 696–716 (2018)

Zhang, S., Wang, T., Dong, J., Yu, H.: Underwater image enhancement via extended multi-scale Retinex. Neurocomputing 245, 1–9 (2017)

Mercado, M.A., Ishii, K., Ahn, J.: Deep-sea image enhancement using multi-scale Retinex with reverse color loss for autonomous underwater vehicles. In: OCEANS 2017 - Anchorage, pp. 1–6. IEEE (2017)

Torres-Méndez, L.A., Dudek, G.: Color correction of underwater images for aquatic robot inspection. In: International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, pp. 60–73. Springer, Berlin, Heidelberg (2005)

Petit, F., Capelle-Laizé, A.S., Carré, P.: Underwater image enhancement by attenuation inversion with quaternions. In: 2009 IEEE International Conference on Acoustics, Speech and Signal Processing, pp. 1177–1180. IEEE (2009)

Rizzi, A., Gatta, C., Marini, D.: A new algorithm for unsupervised global and local color correction. Pattern Recogn. Lett. 24(11), 1663–1677 (2003)

Lu, H., Li, Y., Serikawa, S.: Single underwater image descattering and color correction. In: 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 1623–1627. IEEE (2015)

Li, Y., Lu, H., Li, J., Li, X., Li, Y., Serikawa, S.: Underwater image de-scattering and classification by deep neural network. Comput. Electr. Eng. 54, 68–77 (2016)

Hollinger, G.A., Mitra, U., Sukhatme, G.S.: Active classification: theory and application to underwater inspection. In: Robotics Research, pp. 95–110. Springer, Cham (2017)

Mahmood, A., Bennamoun, M., An, S., Sohel, F., Boussaid, F., Hovey, R., Kendrick, G., Fisher, R.B.: Deep learning for coral classification. In: Handbook of Neural Computation, pp. 383–401. Academic Press, Cambridge (2017)

Kim, J.H., Dowling, D.R.: Blind deconvolution of extended duration underwater signals. J. Acoust. Soc. Am. 135(4), 2200 (2014)

Lu, H., Li, Y., Hu, X., Yang, S., Serikawa, S.: Real-time underwater image contrast enhancement through guided filtering. In: International Conference on Image and Graphics, pp. 137–147. Springer, Cham (2015)

Li, J., Skinner, K.A., Eustice, R.M., Johnson-Roberson, M.: WaterGAN: Unsupervised generative network to enable real-time color correction of monocular underwater images. IEEE Robot. Autom. Lett. 3(1), 387–394 (2017)

Reza, A.M.: Realization of the contrast limited adaptive histogram equalization (CLAHE) for real-time image enhancement. J. VLSI Signal Process. Syst. Signal Image Video Technol. 38(1), 35–44 (2004)

Yang, J., Jiang, B., Lv, Z., Jiang, N.: A real-time image dehazing method considering dark channel and statistics features. J. Real-Time Image Proc. 13(3), 479–490 (2017)

Ethics declarations

Conflict of interest

We have no conflict of interest to declare.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Moghimi, M.K., Mohanna, F. Real-time underwater image resolution enhancement using super-resolution with deep convolutional neural networks. J Real-Time Image Proc 18, 1653–1667 (2021). https://doi.org/10.1007/s11554-020-01024-4

DOI: https://doi.org/10.1007/s11554-020-01024-4