Abstract

Recently, cooperative and distributed processing has attracted a lot of attention, especially in wireless sensor networks, as a way to prolong the network's lifetime. Consequently, distributed adaptive filtering, which operates in a distributed and adaptive manner, has been established. In distributed adaptive networks, the length of the adaptive filter, in addition to its coefficients, is generally unknown and must be estimated. The distributed incremental fractional tap-length (FT) algorithm is one approach to determining the adaptive filter length in a distributed scheme. In this study, we analyze the steady-state behavior of the distributed incremental variable FT LMS algorithm. From this analysis, we derive a mathematical expression for the steady-state tap-length at each sensor. The results indicate that the algorithm overestimates the optimal tap-length. Numerical simulations are provided to confirm the theoretical analysis.

1 Introduction

Wireless sensor networks (WSNs) have been adopted in many research fields, such as environmental monitoring, factory instrumentation, power transmission and distribution systems, and military surveillance [1]. In many applications, the objective is to estimate an unknown parameter of interest from measurements collected at the sensor nodes, and a distributed solution is usually used for this purpose. Distributed estimation extracts information from sensors spread over an area. The spatial distribution of the sensors, in addition to the temporal dimension, provides spatial diversity that improves the robustness of the processing tasks [2]. In a distributed solution, each sensor node relies on its local measurements and communicates only with its immediate neighbors, so that the processing is distributed among all sensors. As a result, the processing and communication requirements are significantly reduced compared with a centralized solution.

Recently, distributed adaptive estimation approaches (or adaptive networks) have been proposed to solve the linear estimation problem in a distributed and adaptive manner. The distributed adaptive approach extends adaptive filters to the network domain and requires no prior knowledge of the data statistics. The performance of adaptive networks depends strongly on the cooperation mode, which is either incremental or diffusion [3]. In the incremental mode, a cyclic path through the network is needed, and each sensor communicates with its neighbors along that path. The incremental LMS algorithm [4], the incremental RLS algorithm [5], and the incremental affine projection algorithm (APA) [6] are distributed adaptive estimation algorithms that use incremental cooperation. When more communication and energy resources are available, the diffusion mode is used. In this mode, each node communicates with all of its neighbors as determined by the network topology, and no cyclic path is required. The diffusion LMS [7], diffusion RLS [8], and diffusion APA [9] algorithms are distributed adaptive estimation algorithms that use diffusion cooperation.

In most previous studies of distributed adaptive estimation algorithms [4,5,6,7,8,9], the tap-length of the adaptive filter is fixed at each node. This is not appropriate in general, because the optimal tap-length is unknown and may vary. The tap-length of a linear filter significantly influences its performance. A deficient tap-length typically increases the mean-squared error (MSE), whereas a tap-length that is too large leads to a high excess mean-squared error (EMSE) and a high computational cost. Hence, there is an optimal tap-length that balances the conflicting requirements of performance and complexity. Furthermore, in many applications the optimal tap-length may be time-variant, so a variable tap-length algorithm is required to determine it. In the single-filter domain, many algorithms have been introduced for this purpose [10,11,12,13,14,15,16,17,18,19,20,21]. The most efficient among them is the FT algorithm [21]. Like the LMS algorithm and its variants [22,23,24], it is simple and performs well. It is therefore a popular algorithm and, in the adaptive network domain, more suitable than the other variable tap-length algorithms. In [25], the FT algorithm was extended to incremental learning for distributed networks. Two recursive algorithms run concurrently, one estimating the tap-length and the other estimating the tap-weights. The authors of [25] provide only a steady-state analysis of the tap-weight vector, deriving theoretical expressions for the mean-squared deviation (MSD), MSE, and EMSE of each node in the network. For this purpose, they assume a fixed tap-length in the steady state, but they do not provide any theoretical expression for this steady-state tap-length.
In this paper, we derive a theoretical expression for the steady-state tap-length reached by the incremental tap-length adaptation at each node. The results indicate that the algorithm overestimates the optimal tap-length. Simulation results validate the derived theoretical expressions.

The remainder of the paper is organized as follows. Section 2 provides the background, including the estimation problem and its incremental solution. Section 3 analyzes the performance of the distributed incremental variable FT LMS algorithm and derives the mathematical expression for the steady-state tap-length at each sensor. Simulation results are compared with the theoretical analysis in Sect. 4, and concluding remarks are presented in Sect. 5.

Notation: For ease of reference, the main symbols used in this paper are listed in Table 1.

2 Incremental FT-LMS algorithm

We consider N sensor nodes spatially distributed over a region. The objective is to estimate both the coefficients and the length \(L_{opt}\) of an unknown vector \(\mathbf{w}^o_{L_{opt}}\) from measurements collected by the N sensors of the network. The adaptation rules for the coefficients and for the length of \(\mathbf{w}^o_{L_{opt}}\) are decoupled, so that neither depends on the other. As presented later, the tap-length L is computed by the tap-length estimation algorithm. We also suppose that each node k has access to time realizations \(\{d_k(i), \mathbf{u}_{k,i}\}\) of zero-mean spatial data \(\{d_k,\mathbf{u}_k\}\), where \(d_k\) is a scalar and \(\mathbf{u}_k\) is a \(1 \times L\) row regression vector.

By collecting the measurement data and regressors into global matrices, we make the following definitions:

First, we consider estimation of the unknown parameter coefficients. The aim is to estimate the \(L\times 1\) vector \(\mathbf{w}\) that solves \(\arg\min_{\mathbf{w}} J_L(\mathbf{w})\), where

Let \({\varvec{\psi } }^{(i)}_k\) be the local estimate of the unknown parameter at node k at time instant i. In [4], a distributed incremental LMS (DILMS) approach is reported that solves the above optimization problem as follows:

where \(\mu _k\) is a properly selected positive local step-size. Each sensor k employs its local data \(d_k(i)\), \(\mathbf{u}_{k,i}\) and the estimate \({\varvec{\psi }}^{(i)}_{k-1}\) received from sensor \(k-1\). Then, \({\varvec{\psi }}^{(i)}_N\) is used as the initial condition at sensor node \(k=1\) for the next time instant.
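The incremental recursion just described can be sketched in Python with NumPy. This is a minimal sketch that assumes (3) has the standard incremental LMS form \({\varvec{\psi }}^{(i)}_k={\varvec{\psi }}^{(i)}_{k-1}+\mu _k\mathbf{u}^T_{k,i}(d_k(i)-\mathbf{u}_{k,i}{\varvec{\psi }}^{(i)}_{k-1})\) for real-valued data, with \({\varvec{\psi }}^{(i)}_0=\mathbf{w}_{i-1}\) and \(\mathbf{w}_i={\varvec{\psi }}^{(i)}_N\); the function name `dilms_cycle` and the noise-free demo are illustrative only.

```python
import numpy as np


def dilms_cycle(w_prev, U, d, mu):
    """One time instant i of incremental LMS around a ring of N nodes.

    w_prev : (L,) estimate psi_N from the previous time instant
    U      : (N, L) regressors, row k holds u_{k,i}
    d      : (N,) measurements d_k(i)
    mu     : (N,) local step-sizes mu_k
    """
    psi = w_prev.copy()                  # psi_0 = w_{i-1}
    for k in range(len(d)):
        e = d[k] - U[k] @ psi            # local a priori error at node k
        psi = psi + mu[k] * U[k] * e     # local LMS correction, passed to node k+1
    return psi                           # w_i = psi_N, handed back to node 1


# Usage: noise-free identification of a length-4 vector by N = 5 nodes.
rng = np.random.default_rng(1)
N, L = 5, 4
w_o = rng.standard_normal(L)
mu = np.full(N, 0.05)
w_hat = np.zeros(L)
for i in range(2000):
    U = rng.standard_normal((N, L))
    d = U @ w_o                          # no observation noise in this demo
    w_hat = dilms_cycle(w_hat, U, d, mu)
```

Only the estimate travels around the ring; each node touches nothing but its own \(\{d_k(i), \mathbf{u}_{k,i}\}\).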

Considering the tap-length L, the segmented cost function is defined as [25]:

where \(1 \le M \le L\), and \(\mathbf{U}_M\) and \(\mathbf{w}_M\) contain, respectively, the first M columns of \(\mathbf{U}\) and the first M elements of \(\mathbf{w}\), such that:

where w(j) is the j-th element of \(\mathbf{w}\) and \(\mathbf{u}_k(1:M)\) consists of the first M elements of \(\mathbf{u}_k\). To find the optimal length \(L_{opt}\), [25] proposes the following criterion, based on the smallest MSE difference:

where \(\varepsilon \) is a small positive value predetermined by the system requirements. The integer \(\Delta \) prevents the tap-length from becoming suboptimal.
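To make the criterion concrete, the segmented cost can be evaluated in closed form under the linear data model and white regressors that Sect. 3 adopts (these assumptions are borrowed here only for illustration). With \(\mathbf{R}_u={\sigma }^2_u\mathbf{I}\), the best M-tap filter keeps the first M taps of \(\mathbf{w}^o_{L_{opt}}\), so \(J_M\) equals the noise variance plus \({\sigma }^2_u\) times the energy of the omitted taps. The sketch below, with hypothetical function names, computes \(J_M\) and the gap \(J_{L-\Delta }-J_L\) that enters (6).

```python
import numpy as np


def segmented_mse(w_o, M, sigma_u2, sigma_v2):
    """Minimum of J_M for d = u w_o + v with white regressors (R_u = sigma_u2 I).

    The optimal M-tap filter is w_o(1:M); the omitted taps act as extra noise.
    """
    tail = np.asarray(w_o, dtype=float)[M:]     # empty when M >= len(w_o)
    return sigma_v2 + sigma_u2 * float(tail @ tail)


def mse_gap(w_o, L, Delta, sigma_u2, sigma_v2):
    """J_{L-Delta} - J_L: the MSE reduction contributed by the last Delta taps."""
    return (segmented_mse(w_o, L - Delta, sigma_u2, sigma_v2)
            - segmented_mse(w_o, L, sigma_u2, sigma_v2))


# Usage with a short hypothetical unknown vector
w_o = np.array([0.5, -0.5, 0.25])
J1 = segmented_mse(w_o, 1, sigma_u2=1.0, sigma_v2=0.01)   # 0.01 + 0.25 + 0.0625
gap_inside = mse_gap(w_o, 3, 2, 1.0, 0.01)                # taps 2 and 3 still matter
gap_beyond = mse_gap(w_o, 5, 2, 1.0, 0.01)                # L - Delta >= len(w_o)
```

The gap is exactly zero once the first \(L-\Delta \) taps already cover every nonzero entry of the unknown vector.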

In [25], the optimization problem (6) is solved by extending the FT algorithm to incremental cooperation. Let \({\ell }_{k,f}(i)\) denote the local estimate of the FT at sensor k at time instant i. Along the topological cycle, sensor k receives the FT \({\ell }_{k-1,f}(i)\) computed by sensor \(k-1\) and updates its local estimate as:

where

and

in which \(\mathbf{u}_{k,i}(1:L_k(i)-\Delta )\) and \({\varvec{\psi }}^{(i)}_{k-1}(1:L_k(i)-\Delta )\) consist of the first \(L_k(i)-\Delta \) elements of \(\mathbf{u}_{k,i}\) and \({\varvec{\psi }}^{(i)}_{k-1}\), respectively. In (7), \({\alpha }_k\) denotes the local leakage factor and \({\gamma }_k\) the local step-size for adapting \({\ell }_{k,f}(i)\) at sensor k. \({\ell }_{k,f}(i)\) is not constrained to be an integer, and the local integer tap-length \(L_k(i)\) is obtained from the FT as follows:

where \(\lfloor .\rfloor \) denotes the floor operation, which rounds down to the nearest integer, and \({\delta }_k\) is a small integer. In addition, the tap-length is constrained during its evolution to be no less than a lower bound \(L_{min}\), where \(L_{min}>\Delta \) (usually \(L_{min}={\Delta }+{\delta }_k+1\)); that is, whenever the tap-length falls below \(L_{min}\), it is set to \(L_{min}\). This constraint is necessary because \(L_k(i)-\Delta \) is itself used as a tap-length in the incremental FT algorithm.
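The two local rules at node k, the leaky FT update (7) and the integer tap-length rule, can be sketched as scalar functions. This sketch assumes that (7) has the form of the single-filter FT algorithm [21], \({\ell }_{k,f}(i)={\ell }_{k-1,f}(i)-{\alpha }_k+{\gamma }_k[(e^{(L_k(i)-\Delta )}_k(i))^2-(e^{(L_k(i))}_k(i))^2]\), and that the integer tap-length follows \(\lfloor {\ell }_{k,f}(i)\rfloor \) only when \(|L_k(i)-{\ell }_{k,f}(i)|\ge {\delta }_k\); both forms, the function names, and the `e_short`/`e_full` arguments are assumptions for illustration.

```python
import math


def ft_update(ell_prev, e_short, e_full, alpha, gamma):
    """Leaky fractional tap-length update at node k (assumed FT form).

    ell_prev : FT received from node k-1
    e_short  : error of the first L_k(i) - Delta taps
    e_full   : error of all L_k(i) taps
    """
    # A larger short-filter error means the last Delta taps help, so the FT grows;
    # otherwise the leakage alpha slowly pulls the FT down.
    return ell_prev - alpha + gamma * (e_short ** 2 - e_full ** 2)


def integer_tap_length(ell_f, L_curr, delta, L_min):
    """Integer tap-length for the next time instant, never below L_min."""
    if abs(L_curr - ell_f) >= delta:     # move only on a change of at least delta
        L_next = math.floor(ell_f)
    else:
        L_next = L_curr
    return max(L_next, L_min)


# Usage with the Sect. 4.1 values alpha = 0.001, gamma = 6, delta = 1, L_min = 8
ell = ft_update(10.0, e_short=0.5, e_full=0.3, alpha=0.001, gamma=6.0)  # about 10.959
L_a = integer_tap_length(ell, L_curr=8, delta=1, L_min=8)    # jumps to 10
L_b = integer_tap_length(8.5, L_curr=8, delta=1, L_min=8)    # stays at 8
L_c = integer_tap_length(5.2, L_curr=9, delta=1, L_min=8)    # clipped to L_min = 8
```

The hysteresis band \({\delta }_k\) keeps the integer length from toggling on every small fluctuation of the fractional value.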

Given \(L_k(i)\), the tap coefficients are then updated by (3), with the lengths of the vectors \(\mathbf{u}_{k,i}\) and \({\varvec{\psi }}^{(i)}_{k-1}\) adjusted to \(L_k(i)\).
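Putting these pieces together, one time instant of incremental FT-LMS around the ring can be sketched as follows. Each node truncates or zero-pads the incoming estimate to its own length \(L_k(i)\), computes the full and shortened errors, updates the FT and its integer tap-length, and then applies the LMS update (3). The FT form, the \(\ge {\delta }_k\) hysteresis test, clipping the fractional value at \(L_{min}\), the upper cap `L_max`, the SNR definition, and all function names are assumptions of this sketch, not details confirmed by [25].

```python
import numpy as np


def fit_length(v, L):
    """Truncate or zero-pad v to length L."""
    out = np.zeros(L)
    n = min(L, len(v))
    out[:n] = v[:n]
    return out


def ft_lms_time_step(psi, ell_f, L, U, d, p):
    """One time instant i over nodes k = 1..N; L (integer array) is updated in place."""
    for k in range(len(d)):
        Lk, u = L[k], U[k]
        q = fit_length(psi, Lk)                        # psi_{k-1} adjusted to L_k(i)
        e_full = d[k] - u[:Lk] @ q
        e_short = d[k] - u[:Lk - p["Delta"]] @ q[:Lk - p["Delta"]]
        # leaky FT update (assumed form), kept at or above L_min
        ell_f = max(ell_f - p["alpha"] + p["gamma"] * (e_short**2 - e_full**2), p["L_min"])
        if abs(Lk - ell_f) >= p["delta"]:              # tap-length for time i+1
            L[k] = min(max(int(np.floor(ell_f)), p["L_min"]), p["L_max"])
        psi = q + p["mu"] * u[:Lk] * e_full            # LMS update with length L_k(i)
    return psi, ell_f


def simulate(w_o, N, n_iter, snr_db, seed=0, **p):
    rng = np.random.default_rng(seed)
    L_max = p["L_max"]
    w_pad = fit_length(w_o, L_max)
    sigma_v = np.sqrt(w_o @ w_o / 10 ** (snr_db / 10))  # assumes R_u = I
    psi, ell_f = np.zeros(p["L_min"]), float(p["L_min"])
    L = np.full(N, p["L_min"])
    history = np.empty((n_iter, N), dtype=int)
    for i in range(n_iter):
        U = rng.standard_normal((N, L_max))            # white, unit-variance regressors
        d = U @ w_pad + sigma_v * rng.standard_normal(N)
        psi, ell_f = ft_lms_time_step(psi, ell_f, L, U, d, p)
        history[i] = L
    return history, psi


# Usage: a small, conservative configuration (not the paper's Sect. 4 setup)
rng0 = np.random.default_rng(42)
w_o = 0.3 * rng0.standard_normal(6)
history, psi = simulate(w_o, N=4, n_iter=300, snr_db=20.0, mu=0.01, alpha=0.001,
                        gamma=2.0, Delta=2, delta=1, L_min=4, L_max=20)
```

For an experiment resembling Sect. 4.1, one would pass N=12, a length-20 `w_o` with entries of variance 0.01, `snr_db=20`, `mu=0.05`, `alpha=0.001`, `gamma=6`, `Delta=6`, `delta=1` and `L_min=8`, and plot `history[:, 0]`; `L_max` should then lie well above \(L_{opt}+\Delta \) while keeping the LMS step size stable for filters of that length. The sketch is not claimed to reproduce Figs. 1 and 2.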

3 Steady-state analysis of incremental variable FT LMS networks

In this section, we derive the mathematical expression for the steady-state tap-length of the incremental FT algorithm at each sensor node. First, we assume the linear model:

where \(v_k(i)\) is a temporally and spatially white noise term with zero mean and variance \({\sigma }^2_{v,k}\), independent of \(\mathbf{u}_{L_{opt},\ell ,j}\) and \(d_{\ell }(j)\) for all \(\ell \), j. In (11), \(\mathbf{u}_{L_{opt},k,i}\) is a vector of length \(L_{opt}\). Since the length of the vectors varies from iteration to iteration in this scenario, it is convenient to define an upper bound on the length and to pad the vectors with zeros up to this bound. This yields vectors of equal length, which simplifies the calculations. We therefore define the upper bound \(L_{ub}\) as:

With this definition, we denote the unknown parameter \(\mathbf{w}^\mathbf{o}_{L_{opt}}\) by \(\mathbf{w}_{L_{ub}}\), which is obtained by padding \(\mathbf{w}^\mathbf{o}_{L_{opt}}\) with \(L_{ub}-L_{opt}\) zeros. Likewise, we define the vector \({\varvec{\psi }}^{(i)}_{L_{ub},k-1}\), obtained by padding \({\varvec{\psi }}^{(i)}_{k-1}\) with \(L_{ub}-L_k(i)\) zeros. We now partition the unknown parameter \(\mathbf{w}_{L_{ub}}\) into three parts as:

where \(\dot{\mathbf{w}}\) is the part modeled by \({\dot{\varvec{\psi }}}^{(i)}_{k-1}\), and \(\ddot{\mathbf{w}}\) is the part modeled by \({\ddot{\varvec{\psi }}}^{(i)}_{k-1}\) such that:

where \({\varvec{\psi }}^{(i)}_{k-1}(1:L_k(i)-\Delta )\) consists of the first \(L_k(i)-\Delta \) elements of \({\varvec{\psi }}^{(i)}_{k-1}\), \({\varvec{\psi }}^{(i)}_{k-1}(L_k(i)-\Delta +1:L_k(i))\) consists of its last \(\Delta \) elements, and \(\mathop \mathbf{w}\limits ^{...}\) is the undermodeled part. We now define the vector \(\overline{\varvec{\psi }}^{(i)}_{L_{ub},k-1}\), the difference between the weight estimate at sensor \(k-1\) and the desired solution \(\mathbf{w}_{L_{ub}}\), as:

and it is partitioned into three parts as:

For convenience of analysis, and in the same way as for \(\mathbf{w}_{L_{ub}}\) and \(\overline{\varvec{\psi }}^{(i)}_{L_{ub},k-1}\), we partition the regression vector \(\mathbf{u}_{L_{ub},k,i}\) of length \(L_{ub}\) into three parts \(\dot{\mathbf{u}}_{k,i}\), \(\ddot{\mathbf{u}}_{k,i}\) and \({\mathop {\mathbf{u}}\limits ^{...}}_{k,i}\). With this notation, substituting (11) and (15) into (8) and (9), and padding all the vectors in (8), (9) and (11) with zeros to length \(L_{ub}\), we have:

and

The main term in the FT update (7) is written as:

Substituting (17) and (18) into (19) gives:

Expanding the products in (20), we get:

For ease of presentation, we define the following parameters:

From (7), (21) and (22) we have:

We now analyze the steady-state performance based on this update equation. For simplicity of analysis, the following assumptions are made.

1. The regressors \(\mathbf{u}_{L_{ub},k,i}\) are spatially and temporally independent.

2. The components of \(\mathbf{u}_{L_{ub},k,i}\) are drawn from a white Gaussian random process with zero mean and variance \({\sigma }^2_{u,k}\), so the covariance matrix of \(\mathbf{u}_{L_{ub},k,i}\) is \(\mathbf{R}_{u,k}={\sigma }^2_{u,k}\mathbf{I}\).

3. In the steady state, the tap-length at node k converges approximately to a constant value \(L_k(\infty )\). We also assume that \(L_k(\infty )>L_{opt}\) in the steady state; this overestimation will be justified by the results of the analysis.

4. The elements of the unknown parameter are drawn from a zero-mean random sequence with variance \({\sigma }^2_o\).

5. In the steady state, \(E\{\left[ \begin{array}{c} {\dot{\overline{\varvec{\psi }}}}^{(i)}_{k-1} \\ {\ddot{\overline{\varvec{\psi }}}}^{(i)}_{k-1} \end{array} \right] {\left[ \begin{array}{c} {\dot{\overline{\varvec{\psi }}}}^{(i)}_{k-1} \\ {\ddot{\overline{\varvec{\psi }}}}^{(i)}_{k-1} \end{array} \right] }^T\}={\sigma }^2_{\overline{\varvec{\psi }},k-1}\mathbf{I}\), where \({\sigma }^2_{\overline{\varvec{\psi }},k-1}\) is the variance of the elements of \({\dot{\overline{\varvec{\psi }}}}^{(i)}_{k-1}\) and \({\ddot{\overline{\varvec{\psi }}}}^{(i)}_{k-1}\).

As a consequence of these assumptions, \({\varvec{\psi }}^{(i)}_{k-1}\), and hence \({\overline{\varvec{\psi }}}^{(i)}_{L_{ub},k-1}\) (or its parts \({\dot{\overline{\varvec{\psi }}}}^{(i)}_{k-1}\) and \({\ddot{\overline{\varvec{\psi }}}}^{(i)}_{k-1}\)), is independent of \(\mathbf{u}_{L_{ub},k,i}\) and \(v_k(i)\).
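The zero-padding to \(L_{ub}\) and the three-way partition used throughout this section are plain index operations. The sketch below, with hypothetical helper names and 0-based slicing, splits a padded vector into its first \(L-\Delta \) entries, the next \(\Delta \) entries, and the remaining \(L_{ub}-L\) entries for a tap-length L. It also counts the nonzero entries of the middle part, which equals \(L_{opt}-L+\Delta \) when \(L-\Delta \le L_{opt}\le L\), the situation discussed later around (28).

```python
import numpy as np


def pad_to(v, L_ub):
    """Zero-pad v to the common upper-bound length L_ub."""
    out = np.zeros(L_ub)
    out[:len(v)] = v
    return out


def partition(v_ub, L, Delta):
    """Split a length-L_ub vector into (dot, ddot, tdot) parts for tap-length L."""
    return v_ub[:L - Delta], v_ub[L - Delta:L], v_ub[L:]


# Usage: L_opt = 5 unknown taps, L_ub = 10, Delta = 2
w_ub = pad_to(np.array([1.0, 2.0, 3.0, 4.0, 5.0]), 10)
dot, ddot, tdot = partition(w_ub, L=4, Delta=2)     # undermodeled: tdot holds tap 5
dot2, ddot2, tdot2 = partition(w_ub, L=6, Delta=2)  # L > L_opt: tdot2 is all zero
n_ddot2 = int(np.count_nonzero(ddot2))              # L_opt - L + Delta = 1
```

Once L exceeds \(L_{opt}\), the last part of the padded unknown vector is identically zero, which is what makes the terms involving the undermodeled part drop out in the steady state.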

Invoking assumptions 1–5 and taking expectations of both sides of (23) in the steady state, we have:

Denoting by \(L_k(\infty )\) and \(L_{k-1}(\infty )\) the steady-state tap-lengths of nodes k and \(k-1\), we have:

Next, we evaluate the moments in (25). From assumptions 1–5 and their consequences, we have:

By assumption 3, \(L_k(\infty )>L_{opt}\) in the steady state, so \(\mathop \mathbf{w}\limits ^{...}=0\) and the terms G and H vanish. Using this and substituting (26) into (25), we obtain:

If \((L_{k-1}(\infty )-{\Delta }){>}L_{opt}\), then \(\ddot{\mathbf{w}}=0\) in the steady state; if \((L_{k-1}(\infty )-{\Delta }){\le }L_{opt}\), then \(\ddot{\mathbf{w}}\) contains \(L_{opt}-L_{k-1}(\infty )+{\Delta }\) nonzero elements of \(\mathbf{w}^{o}_{L_{opt}}\), so we have:

In fact, \(L_k(\infty )\) and \(L_{k-1}(\infty )\) are not exactly equal, but they are almost equal. We can therefore temporarily assume \(L_k(\infty )=L_{k-1}(\infty )\) and rewrite (27) as:

Now, if we assume that \(L_{k-1}(\infty ){>}(L_{opt}+{\Delta })\), then, according to (28), the two sides of (29) contradict each other: under this assumption the left-hand side of (29) is positive, whereas the right-hand side is negative. Hence \(L_{k-1}(\infty ){\le }(L_{opt}+{\Delta })\), and combined with assumption 3 we conclude that \({L}_{opt}{\le }L_{k-1}(\infty ){\le }(L_{opt}+{\Delta })\). Accordingly, by defining \(\mathbf{P}_{L_{ub},k-1}={\overline{\varvec{\psi }}}^{({\infty })}_{L_{ub},k-1}\) and partitioning \(\mathbf{P}_{L_{ub},k-1}\), like \({\overline{\varvec{\psi }}}^{(i)}_{L_{ub},k-1}\) in (16), into the three parts \({\dot{\mathbf{P}}}_{k-1}\), \({\ddot{\mathbf{P}}}_{k-1}\) and \({\mathop \mathbf{P}\limits ^{...}}_{k-1}\), we can rewrite (27) as:

To evaluate the term \(E\{{\Vert {\ddot{\mathbf{P}}}_{k-1}\Vert }^2\}\), we need the steady-state MSD of the deficient-length DILMS algorithm. In [26], we introduced the deficient-length DILMS algorithm and derived an expression for its steady-state MSD. The main results are included in Appendix A to keep the paper self-contained. Here we restate the resulting MSD, (71) in Appendix A, as:

Compared with (71), the subscript \(L_{opt}\) in \(E\{{\Vert \mathbf{P}_{L_{opt}, k-1}\Vert }^2\}\) is replaced here by \(L_{ub}\), since we now assume that the length of the unknown parameter equals \(L_{ub}\). In (31), \(\Pi _{k,1}\) and \(s_k\) are defined as:

with

and

Comparing (33) and (34) with (66) and (69), we observe that M has been replaced by \(L_{k-1}(\infty )\) in (33) and (34), since in the steady state the length of the adaptive filter at sensor \(k-1\) equals \(L_{k-1}(\infty )\), whereas Appendix A assumes a length M for the adaptive filter of each sensor. Also, \({\Vert {\ddot{\mathbf{w}}}_{L_{opt}-M}\Vert }^2\) in (69) has been replaced by \({\Vert \mathop \mathbf{w}\limits ^{...}\Vert }^2\) in (34), since, according to the partitioning in (13), \({\ddot{\mathbf{w}}}_{L_{opt}-M}\) corresponds here to \(\mathop \mathbf{w}\limits ^{...}\), which is zero in the steady state. We now use (31)–(34) to compute \(E\{{\Vert {\ddot{\mathbf{P}}}_{k-1}\Vert }^2\}\). To do so, we first divide the steady-state MSD into three parts as:

Using assumption 5, and since \({\mathop \mathbf{P}\limits ^{...}}_{k-1}={\mathop {\overline{\varvec{\psi }}}\limits ^{...}}^{(\infty )}_{k-1}=\mathop \mathbf{w}\limits ^{...}=0\), we have:

Substituting (36) into (35), we get:

Using (31), (38) can be rewritten as:

or

where

with

Substituting (40) into (30) gives:

Equation (43) can be rearranged as follows:

In the resulting equation, \(\Pi _{k,1}\) depends on \({\beta }_k\) and, according to (33), on \(L_{k-1}(\infty )\). However, as explained previously, \(L_{opt}{\le }L_{k-1}(\infty ){\le }(L_{opt}+{\Delta })\), and in practice \(\Delta {\ll } L_{opt}\); thus, \(L_{k-1}(\infty )\) is very close to \(L_{opt}\), and (33) can be rewritten as:

By defining \(h_k\) and \(g_k\) as:

(44) can be written as:

Note that (47) is a coupled equation: it contains both \(L_k(\infty )\) and \(L_{k-1}(\infty )\), i.e., information from two different locations. To resolve this coupling, we exploit the ring topology [4]–[6]. Iterating (47), we have:

Observe that, according to (48), \(L_{k-1}(\infty )\) can be expressed in terms of \(L_{k-3}(\infty )\) as:

By iterating in this manner, we have:

We define N quantities for each sensor k as:

and \(m_k\) is defined as:

From (53), we obtain the desired steady-state tap-length as:

Equation (54) provides a mathematical expression for the steady-state tap-length. Because of its complicated form, however, it is difficult to extract useful information from it about the steady-state tap-length of each sensor node. To obtain a clearer view, we simplify (54). For this purpose, we assume that all sensors use the same step size for tap-length adaptation, i.e., \({\gamma }_k=\gamma ,\ \forall k\in {\mathcal {N}}\), and that \(\mathbf{R}_{u,k}={\sigma }^2_u\mathbf{I}\). With these assumptions, we have:

Furthermore, we assume \(\gamma {\sigma }^2_u{\sigma }^2_o\ll 1\), so that:

Also, \(m_k\) can be approximated as:

Substituting (56) and (57) into (54) gives:

Since the steady-state tap-length given by (58) is rarely an integer, the actual steady-state tap-length usually fluctuates around this value. The last two terms on the right-hand side of (58) are small and can be neglected, so the steady-state tap-length is close to \(L_{opt}+\Delta \), as the simulations will show. Hence, the incremental FT algorithm overestimates the optimal tap-length, which confirms assumption 3.
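The ring-iteration step used to decouple (47) can be illustrated on a generic first-order recursion. Assuming, for illustration only, that (47) has the affine form \(L_k(\infty )=h_kL_{k-1}(\infty )+g_k\) with node indices taken cyclically (\(L_0\equiv L_N\)), iterating once around the ring gives \(L_k(\infty )=m/(1-h_1\cdots h_N)\), where m accumulates the \(g\)'s weighted by partial products of the \(h\)'s. The code implements this closed form and checks it against a direct linear solve; the names `solve_ring`, `h`, `g` and the quantity `m` are hypothetical and need not coincide with the quantities in (51)–(53).

```python
import numpy as np


def solve_ring(h, g):
    """Solve x_k = h_k x_{k-1} + g_k, k = 0..N-1, with cyclic index (x_{-1} = x_{N-1}).

    Iterating N times around the ring gives x_k = H x_k + m_k, where H is the
    product of all h's, so x_k = m_k / (1 - H) provided H != 1.
    """
    h = np.asarray(h, dtype=float)
    g = np.asarray(g, dtype=float)
    N = len(h)
    H = np.prod(h)
    x = np.empty(N)
    for k in range(N):
        m, weight = 0.0, 1.0
        for j in range(N):                 # walk backwards: k, k-1, ..., k-N+1
            idx = (k - j) % N
            m += weight * g[idx]           # g_{k-j} weighted by h_k ... h_{k-j+1}
            weight *= h[idx]
        x[k] = m / (1.0 - H)
    return x


def solve_ring_direct(h, g):
    """Reference solution of the same cyclic system via a dense linear solve."""
    N = len(h)
    A = np.eye(N)
    for k in range(N):
        A[k, (k - 1) % N] -= h[k]          # row k: x_k - h_k x_{k-1} = g_k
    return np.linalg.solve(A, np.asarray(g, dtype=float))


# Usage with arbitrary illustrative coefficients for a 4-node ring
h = [0.9, 0.8, 0.95, 0.85]
g = [2.0, 3.0, 1.5, 2.5]
x_closed = solve_ring(h, g)
x_direct = solve_ring_direct(h, g)
```

The closed form needs only N products per node, which is why iterating around the ring is preferred to solving the coupled system jointly.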

4 Simulation results

In this section, simulations are presented to verify the theoretical results. Numerical simulations are performed under three conditions: low noise, high noise, and a large step size for tap-length adaptation.

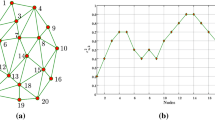

4.1 Low-noise scenario (SNR=20dB)

We consider a network with N=12 nodes estimating an unknown parameter of length \(L_{opt}\)=20. The elements of the unknown parameter are drawn from a white, zero-mean random sequence with variance \({\sigma }^2_o\)=0.01. The regressors are independent zero-mean Gaussian variables with covariance matrix \(\mathbf{R}_{u,k}=\mathbf{I}\). The observation noise is zero-mean white Gaussian, scaled to give SNR=20 dB. The step size of the DILMS algorithm is set to \({\mu _k}\)=0.05 for all nodes. The parameters of the incremental FT algorithm are \({\delta }_k\)=1, \({\alpha }_k\)=0.001, \({\gamma }_k\)=6 and \(\Delta \)=6, and the initial tap-length is set to the minimum value \(L_{min}\)=8. Figures 1 and 2 show, respectively, the evolution of the fractional and integer tap-lengths under the low-noise condition for node k=1. To obtain integer values for the theoretical tap-lengths, we applied the floor operator \(\lfloor .\rfloor \) to (54). As the figures show, this node converges to a steady-state tap-length that agrees well with the theoretical value. The simulations show that the same holds for all nodes.
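The data generation described above can be sketched as follows. This sketch assumes the SNR is defined at each node as \(E|\mathbf{u}_{k,i}\mathbf{w}^o_{L_{opt}}|^2/{\sigma }^2_v={\sigma }^2_u\Vert \mathbf{w}^o_{L_{opt}}\Vert ^2/{\sigma }^2_v\), and the function names are hypothetical.

```python
import numpy as np


def make_unknown(L_opt, sigma_o2, rng):
    """Unknown vector with white, zero-mean entries of variance sigma_o2."""
    return np.sqrt(sigma_o2) * rng.standard_normal(L_opt)


def noise_variance(w_o, sigma_u2, snr_db):
    """sigma_v^2 giving the requested SNR, assuming SNR = sigma_u2 ||w_o||^2 / sigma_v^2."""
    return sigma_u2 * float(w_o @ w_o) / 10.0 ** (snr_db / 10.0)


# Usage: Sect. 4.1 sizes (N = 12, L_opt = 20, sigma_o^2 = 0.01, R_u = I, SNR = 20 dB)
rng = np.random.default_rng(0)
N, L_opt = 12, 20
w_o = make_unknown(L_opt, 0.01, rng)
sigma_v2 = noise_variance(w_o, sigma_u2=1.0, snr_db=20.0)
U = rng.standard_normal((N, L_opt))                       # one regressor per node
d = U @ w_o + np.sqrt(sigma_v2) * rng.standard_normal(N)  # measurements d_k(i)
```

With \({\sigma }^2_o\)=0.01 and \(L_{opt}\)=20, the expected signal power \({\sigma }^2_u\Vert \mathbf{w}^o_{L_{opt}}\Vert ^2\) is about 0.2, so the 20 dB and 0 dB scenarios correspond to noise variances of roughly 0.002 and 0.2 under this definition.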

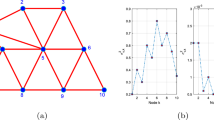

4.2 High-noise scenario (SNR=0dB)

In this case, a high-noise environment is considered. The parameters are the same as in the previous case, except that the observation noise is scaled to give SNR=0 dB, and the step sizes of the DILMS and tap-length adaptation algorithms are set to \({\mu }_k\)=0.001 and \({\gamma }_k\)=2, respectively. The evolution of the fractional and integer tap-lengths under this condition is shown in Figs. 3 and 4 for node k=1. The simulation results support the theoretical analysis.

4.3 Large step size for tap-length adaption

The simulation setup of this scenario is the same as that of the first scenario, except that a large step size \({\gamma }_k\)=30 is used for tap-length adaptation. Increasing \({\gamma }_k\) increases the convergence rate, but a large \({\gamma }_k\) also causes significant fluctuation of the evolution curve. The evolution of the fractional and integer tap-lengths under this condition is presented in Figs. 5 and 6 for node k=1. The figures show that the steady-state tap-length fluctuates around the theoretical values.

Table 2 shows the simulation results for different SNR values and compares them with the theoretical results. All parameters are as in the previous experiments except \(\mu _k\)=0.001 and \(\gamma _k\)=2. As the table shows, the theoretical results agree well with the simulation results for all SNR values tested.

Table 3 shows the results for different \(L_{opt}\) values and compares them with the theoretical results. In this simulation, \(\mu _k\)=0.001 and \(\gamma _k\)=10. First, the simulation results agree with the theoretical results. Second, in all cases the steady-state tap-length exceeds the optimal length by approximately \(\Delta \). This overestimation is important because it shows that the length is not estimated deficiently: as shown in [26], a deficient length increases the steady-state error. On the other hand, a length much larger than the optimal value increases the computational complexity, which is undesirable in a network of low-power sensors. Here, however, the overestimation is small, approximately equal to \(\Delta \), which is much smaller than the optimal length.

5 Conclusions

In this paper, we investigated the performance of the distributed incremental variable fractional tap-length (FT) LMS algorithm. Since the length of the vectors varies from iteration to iteration in this scenario, we defined an upper bound on the length and padded the vectors with zeros up to this bound, obtaining vectors of equal length that simplified the calculations. On this basis, we analyzed the incremental variable FT LMS algorithm and derived a mathematical expression for the steady-state tap-length at each sensor node. The most important result is that the algorithm overestimates the optimal tap-length. Computer simulations confirmed the mathematical analysis and the derived closed-form expression for the tap-length.

References

Liu, J., Zhao, Z., Ji, J., & Miaolong, H. (2020). Research and application of wireless sensor network technology in power transmission and distribution system. Intelligent and Converged Networks, 1(2), 199–220.

Zhou, Y., Yang, L., Yang, L., & Ni, M. (2019). Novel energy-efficient data gathering scheme exploiting spatial-temporal correlation for wireless sensor networks. Wireless Communications and Mobile Computing, 2019.

Azarnia, G., Tinati, M. A., Sharifi, A. A., & Shiri, H. (2020). Incremental and diffusion compressive sensing strategies over distributed networks. Digital Signal Processing, 101, 102732.

Saeed, M. O. B., & Zerguine, A. (2019). An incremental variable step-size LMS algorithm for adaptive networks. IEEE Transactions on Circuits and Systems II: Express Briefs, 67(10), 2264–2268.

Dimple, K., Kotary, D. K., & Nanda, S. J. (2017). An incremental RLS for distributed parameter estimation of IIR systems present in computing nodes of a wireless sensor network. Procedia Computer Science, 115, 699–706.

Li, L., Chambers, J. A., Lopes, C. G., & Sayed, A. H. (2009). Distributed estimation over an adaptive incremental network based on the affine projection algorithm. IEEE Transactions on Signal Processing, 58(1), 151–164.

Huang, W., Li, L., Li, Q., & Yao, X. (2018). Diffusion robust variable step-size LMS algorithm over distributed networks. IEEE Access, 6, 47511–47520.

Rastegarnia, A. (2019). Reduced-communication diffusion RLS for distributed estimation over multi-agent networks. IEEE Transactions on Circuits and Systems II: Express Briefs, 67(1), 177–181.

Li, L., & Chambers, J. A. (2009). Distributed adaptive estimation based on the APA algorithm over diffusion networks with changing topology. In 2009 IEEE/SP 15th Workshop on Statistical Signal Processing (pp. 757–760). IEEE.

Riera-Palou, F., Noras, J. M., & Cruickshank, D. G. M. (2001). Linear equalisers, with dynamic and automatic length selection. Electronics Letters, 37(25), 1553–1554.

Shaozhong, F., Jianhua, G., & Yong, W. (2008). Fast adaptive algorithms for updating variable length equalizer based on exponential. In 2008 4th International Conference on Wireless Communications, Networking and Mobile Computing.

Rusu, C., & Cowan, C. F. N. (2001). Novel stochastic gradient adaptive algorithm with variable length. In European Conference on Circuit Theory and Design (ECCTD’01).

Bilcu, R. C., Kuosmanen, P., & Egiazarian, K. (2002). A new variable length LMS algorithm: Theoretical analysis and implementations. In 9th International Conference on Electronics, Circuits and Systems (Vol. 3, pp. 1031–1034). IEEE.

Bilcu, R. C., Kuosmanen, P., & Egiazarian, K. (2006). On length adaptation for the least mean square adaptive filters. Signal Processing, 86(10), 3089–3094.

Yuantao, G., Tang, K., Cui, H., & Wen, D. (2003). Convergence analysis of a deficient-length LMS filter and optimal-length sequence to model exponential decay impulse response. IEEE Signal Processing Letters, 10(1), 4–7.

Zhang, Y., Chambers, J. A., Sanei, S., Kendrick, P., & Cox, T. J. (2007). A new variable tap-length LMS algorithm to model an exponential decay impulse response. IEEE Signal Processing Letters, 14(4), 263–266.

Shi, K., Ma, X., Tong, G., & Zhou. (2009). A variable step size and variable tap length LMS algorithm for impulse responses with exponential power profile. In 2009 IEEE International Conference on Acoustics, Speech and Signal Processing (pp. 3105–3108). IEEE.

Mayyas, K. (2005). Performance analysis of the deficient length LMS adaptive algorithm. IEEE Transactions on Signal Processing, 53(8), 2727–2734.

Yuantao, G., Tang, K., & Cui, H. (2004). LMS algorithm with gradient descent filter length. IEEE Signal Processing Letters, 11(3), 305–307.

Gong, Yu., & Cowan, C. F. N. (2004). Structure adaptation of linear MMSE adaptive filters. IEE Proceedings-Vision, Image and Signal Processing, 151(4), 271–277.

Gong, Yu., & Cowan, C. F. N. (2005). An LMS style variable tap-length algorithm for structure adaptation. IEEE Transactions on Signal Processing, 53(7), 2400–2407.

Ali, A., Moinuddin, M., & Al-Naffouri, T. Y. (2021). The NLMS is steady-state Schur-convex. IEEE Signal Processing Letters, 28, 389–393.

Lu, L., Chen, L., Zheng, Z., Yi, Yu., & Yang, X. (2020). Behavior of the LMS algorithm with hyperbolic secant cost. Journal of the Franklin Institute, 357(3), 1943–1960.

Luo, L., & Xie, A. (2020). Steady-state mean-square deviation analysis of improved l0-norm-constraint LMS algorithm for sparse system identification. Signal Processing, 175, 107658.

Li, L., Zhang, Y., & Chambers, J. A. (2008). Variable length adaptive filtering within incremental learning algorithms for distributed networks. In 2008 42nd Asilomar Conference on Signals, Systems and Computers (pp. 225–229). IEEE.

Azarnia, G., & Tinati, M. A. (2015). Steady-state analysis of the deficient length incremental LMS adaptive networks. Circuits, Systems, and Signal Processing, 34(9), 2893–2910.

Ethics declarations

Conflicts of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A.

In [26], we presented the concept of a short-length (deficient-length) DILMS algorithm and derived an expression for its steady-state MSD. This steady-state MSD is needed to evaluate the term \(E\{{\Vert {\ddot{\mathbf{P}}}_{k-1}\Vert }^2\}\) in (30), so the main results are included here. We assume a set of N sensors that aim to find an \(L_{opt}\times 1\) unknown vector \(\mathbf{w}^o_{L_{opt}}\) of unknown length \(L_{opt}\) from measurements collected at the N sensor nodes of the network. As mentioned previously, a variable tap-length algorithm is needed to find the length of the unknown parameter. Here, however, we assume that such an algorithm cannot be applied, for example because of limited energy storage (energy consumption is an essential issue in WSNs). Therefore, each sensor uses an assumed length M for the unknown parameter; that is, each sensor is equipped with an adaptive filter with M coefficients (\(M<L_{opt}\)). At each sensor node, only algorithm (3) is applied, with all vectors of length M.
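The deficient-length setting can also be simulated directly: each node runs the M-tap incremental LMS recursion while the data are generated by the full \(L_{opt}\)-tap model (11). Comparing the zero-padded estimate with \(\mathbf{w}^o_{L_{opt}}\) illustrates that the MSD cannot fall below \({\Vert {\ddot{\mathbf{w}}}_{L_{opt}-M}\Vert }^2\), the energy of the unmodeled taps. This is a minimal Monte Carlo sketch assuming white, unit-variance Gaussian regressors; `deficient_dilms_msd` and its defaults are hypothetical, and it does not evaluate the closed-form expression (71).

```python
import numpy as np


def deficient_dilms_msd(w_o, M, N=5, mu=0.02, n_iter=2000, sigma_v=0.05, seed=0):
    """Run M-tap incremental LMS on data from the full-length model and return
    (MSD of the zero-padded final estimate, energy of the unmodeled taps)."""
    rng = np.random.default_rng(seed)
    L_opt = len(w_o)
    psi = np.zeros(M)
    for _ in range(n_iter):
        for _k in range(N):                          # one pass around the ring
            u = rng.standard_normal(L_opt)           # full regressor, R_u = I
            d = u @ w_o + sigma_v * rng.standard_normal()
            e = d - u[:M] @ psi                      # node uses only the first M taps
            psi = psi + mu * u[:M] * e
    err = np.concatenate([psi, np.zeros(L_opt - M)]) - w_o
    tail = w_o[M:]
    return float(err @ err), float(tail @ tail)


# Usage: L_opt = 8 true taps; each node uses only M = 5, or all M = 8 for comparison
w_o = np.array([0.6, -0.4, 0.3, 0.25, -0.2, 0.15, 0.1, -0.05])
msd, tail_energy = deficient_dilms_msd(w_o, M=5)
msd_full, tail_full = deficient_dilms_msd(w_o, M=8)
```

The unmodeled taps act as extra, regressor-independent noise for the M-tap filter, so the deficient-length MSD sits above the tail energy rather than decaying toward the noise-limited level of the full-length filter.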

We now present a steady-state analysis for this deficient-length case. First, we partition the unknown parameter \(\mathbf{w}^o_{L_{opt}}\) as follows:

where \({\dot{\mathbf{w}}}_M\) is the part of \(\mathbf{w}^o_{L_{opt}}\) that is modeled by \({\varvec{\psi }}^{(i)}_k\) at each sensor, and \({\ddot{\mathbf{w}}}_{L_{opt}-M}\) is the part of \(\mathbf{w}^o_{L_{opt}}\) that is excluded from the estimation. This partitioning simplifies working with vectors of different lengths. Using this partitioning and the data model (11), the update equation (3) can be expressed as:

where the vector \({\overline{\varvec{\psi }}}^{(i)}_{L_{opt},k-1}\) of length \(L_{opt}\) is the difference between the weight estimate at sensor \(k-1\) and \(\mathbf{w}^o_{L_{opt}}\):

Padding the length-M vectors in (60) with \(L_{opt}-M\) zeros and subtracting the unknown parameter \(\mathbf{w}^o_{L_{opt}}\) from both sides gives:

where

To derive the steady-state MSD, we first write \({\Vert {\overline{\varvec{\psi }}}^{(i)}_{L_{opt},k}\Vert }^2\) as:

Invoking assumptions 1 and 2 and taking expectations of both sides of (64), after some tedious algebra we obtain:

where

and

We now write (65) in the compact form:

where

In the steady state, letting \(i \rightarrow \infty \) in (68) and defining \(\mathbf{P}_{L_{opt},k}={\overline{\varvec{\psi }}}^{(\infty )}_{L_{opt},k}\), we can rewrite (68) as:

This equation has the same structure as (47); therefore, solving it in the same manner as the recursive equation (47), we have:

where

and

Cite this article

Azarnia, G., Sharifi, A.A. Steady-state analysis of distributed incremental variable fractional tap-length LMS adaptive networks. Wireless Netw 27, 4603–4614 (2021). https://doi.org/10.1007/s11276-021-02754-4

DOI: https://doi.org/10.1007/s11276-021-02754-4