Abstract

This paper proposes a subspace identification method for closed-loop EIV (errors-in-variables) problems based on instrumental variables. First, a unified framework is derived, and the reason why some existing instrumental-variable subspace methods can be biased under closed-loop conditions is discussed. A remedy is then given that eliminates the bias by simply replacing the instrumental variable. Because it uses orthogonal projection, the resulting instrumental variable method is simple and easy to extend. In addition, simulation studies illustrate the effects of different instrumental variables.


1 Introduction

In this Big Data era, massive data are generated, processed, transmitted, and stored in various fields [4, 6, 19]. Industrial control systems are no exception. Advanced control approaches are generally based on mathematical models, which are usually data-driven models obtained from the input and output data of dynamic systems through system identification methods. Subspace identification methods (SIMs) [13, 17, 21, 25] are attractive since a state-space realization is estimated directly from input-output data, without the non-linear optimization generally required by the prediction error methods (PEMs) [15]. Moreover, SIMs have better numerical reliability and a modest computational complexity compared with PEMs, particularly when the number of outputs and states is large. If the available data records exceed hundreds of thousands or even millions of samples, cloud computing can be adopted to solve the system identification problem [2, 7, 18], since cloud computing has rapidly emerged as an accepted big data computing paradigm in many areas such as smart city [29], smart medical [1], smart transportation [30] and image processing [28].

However, most SIM algorithms consider only output errors and assume that the input variables are noise-free. This assumption is unsatisfactory because, in practice, all variables contain noise. The effect of disturbances can be eliminated by an appropriate selection of instrumental variables that are independent of the disturbances. Chou and Verhaegen [3] developed an instrumental variable subspace method that can be applied to errors-in-variables (EIV) and closed-loop identification problems, but it handles white-noise input differently from correlated input. Gustafsson [9] investigated an alternative IV approach to subspace identification and proposed an improvement of the EIV algorithm of [3].

In parallel, Wang and Qin [26] proposed the use of parity space and principal component analysis (SIMPCA) for errors-in-variables identification with colored input excitation, which can also be applied to closed-loop identification. Huang et al. [11] developed a new closed-loop subspace identification algorithm (SOPIM) by adopting the EIV model structure of SIMPCA, and proposed a revised instrumental variable method to avoid identifying the parity space of the feedback controller. Wang and Qin [27] introduced appropriate column weighting for SIMPCA, which seemed to improve the accuracy of the estimation, and pointed out that the parameter estimates from this method (SIMPCAw) are equivalent to those from SOPIM.

In this paper, by adopting the EIV model structure, closed-loop subspace identification methods are proposed based on instrumental variables using orthogonal projection. It is shown that the existing instrumental-variable subspace identification methods for EIV problems yield biased solutions in closed loop, at least when the external excitation is white noise. A remedy is therefore proposed to eliminate the bias. Compared with the subspace methods using PCA, the present methods using orthogonal projection are simple and easy to extend.

The rest of this paper is organized as follows. In Section 2, the problem formulation and assumptions are presented. Section 3 addresses the subspace algorithms based on instrumental variables under a unified framework (called the SIV framework for convenience), which includes the methods proposed in [3, 9]. In addition, an SIV method that is easier to implement is proposed in the same section. Under the SIV framework, Section 4 presents new closed-loop subspace methods which yield unbiased estimates for EIV problems. In Section 5, two numerical examples are presented. The final section concludes the paper.

2 Problem Formulation

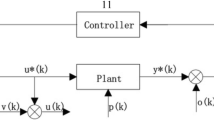

Suppose that the plant depicted in Fig. 1 can be described by the following discrete time linear time-invariant system:

where \(x(k) \in \mathbb {R}^{n}\), \(\tilde {u}(k) \in \mathbb {R}^{m}\) and \(\tilde {y}(k) \in \mathbb {R}^{l}\) are the state variables, noise-free inputs and noise-free outputs, respectively. \(w(k)\in \mathbb {R}^{n}\) is the process noise term. A, B, C and D are system matrices with appropriate dimensions. The available observations for identification are the measured input signals u(k) and output signals y(k):

where \(o(k)\in \mathbb {R}^{m}\) and \(v(k)\in \mathbb {R}^{l}\) are input and output noise.
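For reference, a standard EIV state-space form that matches the symbols and dimensions defined above is sketched here. It is a reconstruction under two assumptions: the process noise \(w(k)\) enters the state equation additively, and the measurement noises \(o(k)\) and \(v(k)\) are additive. It is consistent with the noise term \(N_f = V_f - H_f O_f + G_f W_f\) used in Section 3.

$$ \begin{aligned} x(k+1) &= A x(k) + B \tilde{u}(k) + w(k), \\ \tilde{y}(k) &= C x(k) + D \tilde{u}(k), \end{aligned} $$

$$ u(k) = \tilde{u}(k) + o(k), \qquad y(k) = \tilde{y}(k) + v(k). $$

Substituting \(\tilde{u}(k) = u(k) - o(k)\) and \(\tilde{y}(k) = y(k) - v(k)\) expresses the model in terms of measured signals:

$$ \begin{aligned} x(k+1) &= A x(k) + B u(k) - B o(k) + w(k), \\ y(k) &= C x(k) + D u(k) - D o(k) + v(k). \end{aligned} $$

Under this form the input noise reaches the output through the same path as the input but with opposite sign, which is why \(O_f\) appears with a negative sign in \(N_f\).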

We introduce the following assumptions:

A1. The system is asymptotically stable, i.e. the eigenvalues of A are strictly inside the unit circle.

A2. w(k), v(k), o(k) are zero-mean white noises, and

$$ E\left\{\begin{bmatrix}w(k)\\v(k)\\o(k)\end{bmatrix} \begin{bmatrix}w^{T}(j) & v^{T}(j) & o^{T}(j)\end{bmatrix}\right\}=\begin{bmatrix}R_{w} & R_{wv} & R_{wo}\\R_{vw} & R_{v} & R_{vo}\\R_{ow} & R_{ov} & R_{o} \end{bmatrix}\delta_{kj} \tag{3} $$

Here, E{⋅} denotes statistical expectation, \(R_{w}\) denotes the autocorrelation function of the random variable w, \(R_{wv}\) denotes the cross-correlation function of the random variables w and v, and the other \(R_{(\cdot)}\) are defined similarly. \(\delta_{kj}\) is the Kronecker delta function, i.e.,

$$ \delta_{kj}=\begin{cases} 0, & \text{if $k \neq j $}\\ 1, & \text{if $k = j $} \end{cases} \tag{4} $$

A3. \(\tilde {u}(k)\) is a quasi-stationary deterministic sequence and satisfies the persistence of excitation condition [15].

A4. The pair (A,C) is observable, and the pair \((A,[B \quad R_{w}^{1/2}])\) is reachable [5].

3 Notations and the SIV Framework

First, we define the stacked past and future output vectors and the block Hankel output matrices as follows:

where the subscripts p and f are user-defined integers (p ≥ f > n) that stand for the past horizon and the future horizon, respectively. The input and noise block Hankel matrices \(U_p, U_f, O_f, W_f, V_f\) are defined similarly to \(Y_p, Y_f\).
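A minimal NumPy sketch of how such block Hankel matrices can be formed from a data record is given below. The indexing convention is an assumption: the past window is taken as samples k−p, …, k−1, the future window as samples k, …, k+f−1, and consecutive columns correspond to consecutive k. The helper names `block_hankel` and `past_future` are hypothetical.

```python
import numpy as np

def block_hankel(signal, first, rows, cols):
    """Stack `rows` consecutive samples per column, starting at sample `first`.

    signal : array of shape (N, d), one d-dimensional sample per row.
    Column j holds [signal[first+j]; ...; signal[first+j+rows-1]].
    """
    signal = np.asarray(signal, dtype=float)
    if signal.ndim == 1:
        signal = signal[:, None]
    n_samples, dim = signal.shape
    if first < 0 or first + rows + cols - 1 > n_samples:
        raise ValueError("data record too short for requested horizons")
    H = np.empty((rows * dim, cols))
    for i in range(rows):
        # block row i holds samples first+i ... first+i+cols-1, transposed
        H[i * dim:(i + 1) * dim, :] = signal[first + i:first + i + cols, :].T
    return H

def past_future(signal, p, f):
    """Return (S_p, S_f), each with L = N - p - f + 1 columns (assumed convention)."""
    n_samples = np.asarray(signal).shape[0]
    L = n_samples - p - f + 1
    S_p = block_hankel(signal, 0, p, L)   # past windows
    S_f = block_hankel(signal, p, f, L)   # future windows, aligned column by column
    return S_p, S_f

# Toy record 0, 1, ..., 9 makes the layout easy to inspect.
y = np.arange(10.0)
Y_p, Y_f = past_future(y, p=3, f=2)
```

The same helper applies unchanged to the input record and, in simulation, to the noise records.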

Iterating Eqs. 1 and 2, we can derive the following subspace matrix equation

where

is the state sequence,

is the extended observability matrix with rank n,

and

are two block Toeplitz matrices.
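The structure of these matrices can be checked numerically. The sketch below assumes the usual forms: the extended observability matrix stacks \(C, CA, \ldots, CA^{f-1}\), and each Toeplitz matrix is lower block-triangular and built from Markov parameters. It also assumes additive process noise in the state equation and, to isolate the structure, noise-free measurements (o = v = 0). The system matrices are arbitrary illustrative values, not taken from the paper.

```python
import numpy as np

def extended_observability(A, C, f):
    """Gamma_f = [C; CA; ...; CA^(f-1)]."""
    blocks, M = [], C.copy()
    for _ in range(f):
        blocks.append(M)
        M = M @ A
    return np.vstack(blocks)

def block_toeplitz(A, B, C, D, f):
    """Lower block-triangular Toeplitz matrix: D on the diagonal,
    C A^(i-j-1) B in block (i, j) for i > j."""
    l, m = D.shape
    markov, M = [D], C.copy()
    for _ in range(f - 1):
        markov.append(M @ B)
        M = M @ A
    T = np.zeros((f * l, f * m))
    for i in range(f):
        for j in range(i + 1):
            T[i * l:(i + 1) * l, j * m:(j + 1) * m] = markov[i - j]
    return T

def stack(sig, f):
    """Column j holds [sig[j]; ...; sig[j+f-1]]."""
    L = sig.shape[0] - f + 1
    return np.vstack([sig[i:i + L].T for i in range(f)])

rng = np.random.default_rng(0)
A = np.array([[0.7, 0.2], [0.0, 0.5]])   # illustrative values only
B = np.array([[1.0], [0.5]])
C = np.array([[1.0, 0.0]])
D = np.array([[0.1]])
n, f, N = 2, 3, 50

u = rng.standard_normal((N, 1))
w = 0.1 * rng.standard_normal((N, n))
x = np.zeros((N + 1, n))
y = np.zeros((N, 1))
for k in range(N):
    y[k] = C @ x[k] + D @ u[k]
    x[k + 1] = A @ x[k] + B @ u[k] + w[k]

Y_f, U_f, W_f = stack(y, f), stack(u, f), stack(w, f)
X_k = x[:Y_f.shape[1]].T                       # state at the start of each window
Gamma_f = extended_observability(A, C, f)
H_f = block_toeplitz(A, B, C, D, f)            # input -> output
G_f = block_toeplitz(A, np.eye(n), C, np.zeros((1, n)), f)  # process noise -> output

# The data equation holds exactly, up to rounding.
residual = Y_f - (Gamma_f @ X_k + H_f @ U_f + G_f @ W_f)
print(np.abs(residual).max())
```

With measurement noise added, the extra terms are exactly those collected in the noise matrix of the subspace equation.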

Unlike traditional subspace identification methods [16, 20, 23, 24], SIV first eliminates the effect of the noises via instrumental variables (IV). Suppose that an IV vector \(\xi (k) \in \mathbb {R}^{n_{\xi }}\) is available; a natural choice in the EIV case is \(\xi (k)=[{u_{p}^{T}}(k) {y_{p}^{T}}(k)]^{T}\). Construct the IV matrix

such that

For notational convenience, all noise terms are collected in \(N_f = V_f - H_f O_f + G_f W_f\). Postmultiplying both sides of Eq. 8 by \({\varXi }^{T}\), we get

where \( \hat {R}_{y_{f} \xi }:= \frac {1}{L}Y_{f} {\varXi }^{T}\), \(\hat {R}_{x \xi }:= \frac {1}{L}X_{k} {\varXi }^{T}\), and \(\hat {R}_{u_{f} \xi },\hat {R}_{n_{f} \xi }\) are defined conformably with \(\hat {R}_{y_{f} \xi }\). From the condition Eq. 14,

Next, SIV removes the effect of the future input on the future output by orthogonal projection:

where \({\varPi }_{u_{f} \xi }^{\bot }\) denotes the orthogonal projection matrix onto the nullspace of \(\hat {R}_{u_{f} \xi }\). Assuming that \(\hat {R}_{u_{f} \xi }\) has full rank,

Then like other subspace identification methods [10, 12, 14, 22], SIV uses SVD to obtain the extended observability matrix

where \(W_2\) is a weighting matrix. The matrix \(U_1\) consists of the n principal left singular vectors of Ω, and \(S_1\) and \(S_2\) are diagonal matrices containing the singular values of Ω in non-increasing order. Because of the noise, \(S_2 \neq 0\), and a decision on the order of the system must be made. The extended observability matrix is obtained as \(\Gamma_f = U_1 T\), where T is a non-singular matrix; typical choices are \(T = I\) or \(T=S_{1}^{1/2}\).

For numerical implementation, the first step of SIV is to compute the following QR factorization [8]

From Eq. 20 it is clear that \(\hat {R}_{y_{f} \xi } {\varPi }_{u_{f} \xi }^{\bot }=R_{22}{Q_{2}^{T}}\), so \(\Gamma_f\) can be obtained by performing an SVD on \(R_{22}\). Once \(\Gamma_f\) has been estimated, the system matrices A, B, C, D can be computed as in [13, 21].
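The steps of this section can be sketched in NumPy as follows. This is an illustrative sketch, not the authors' implementation: the projector is formed explicitly with a pseudo-inverse rather than through the QR factorization, the weighting is \(W_2 = I\) with \(T = I\), the Hankel indexing convention is assumed, and only the poles are recovered, using the standard shift-invariance property of the extended observability matrix. The first-order test system and the names `hankel`, `siv_poles` and `simulate` are hypothetical.

```python
import numpy as np

def hankel(sig, first, rows, cols):
    """Column j stacks sig[first+j], ..., sig[first+j+rows-1]."""
    return np.vstack([sig[first + i:first + i + cols].T for i in range(rows)])

def siv_poles(u, y, p, f, n):
    """Pole estimates with instruments Xi = Z_p = [U_p; Y_p]."""
    N = u.shape[0]
    L = N - p - f + 1
    U_p, U_f = hankel(u, 0, p, L), hankel(u, p, f, L)
    Y_p, Y_f = hankel(y, 0, p, L), hankel(y, p, f, L)
    Xi = np.vstack([U_p, Y_p])
    R_y = Y_f @ Xi.T / L                      # sample correlation of y_f with xi
    R_u = U_f @ Xi.T / L                      # sample correlation of u_f with xi
    # Orthogonal projector onto the nullspace of R_u.
    Pi_perp = np.eye(Xi.shape[0]) - np.linalg.pinv(R_u) @ R_u
    Omega = R_y @ Pi_perp
    U, s, _ = np.linalg.svd(Omega, full_matrices=False)
    Gamma = U[:, :n]                          # n principal left singular vectors
    l = y.shape[1]
    # Shift invariance: Gamma[:-l] @ A = Gamma[l:], solved in least squares.
    A_hat, *_ = np.linalg.lstsq(Gamma[:-l], Gamma[l:], rcond=None)
    return np.linalg.eigvals(A_hat), s

def simulate(N, noise_std, seed=0):
    """Open-loop first-order EIV data: x(k+1) = 0.8 x(k) + u~(k), y~(k) = x(k)."""
    rng = np.random.default_rng(seed)
    u_true = rng.standard_normal((N, 1))
    y_true = np.zeros((N, 1))
    x = 0.0
    for k in range(N):
        y_true[k, 0] = x
        x = 0.8 * x + u_true[k, 0]
    u = u_true + noise_std * rng.standard_normal((N, 1))
    y = y_true + noise_std * rng.standard_normal((N, 1))
    return u, y

u, y = simulate(N=2000, noise_std=0.3)
poles, sing_vals = siv_poles(u, y, p=3, f=3, n=1)
print("estimated pole:", poles)
print("singular values:", sing_vals)
```

With `noise_std=0.0` the matrix Ω has rank n up to rounding and the pole is recovered essentially exactly. With noise, the trailing singular values are non-zero and the gap after the n-th one guides the order decision. The example is open loop; it does not address the closed-loop bias discussed in Section 4.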

Suppose \(Z_{p}=[{U_{p}^{T}} {Y_{p}^{T}}]^{T}\), W2 = I, and the instruments are chosen as Ξ = Zp, then SIV boils down to the method proposed in [3]. Gustafsson [9] found an improvement to this method by modifying the instruments as \({\varXi }=(\frac {1}{L}Z_{p} {Z_{p}^{T}})^{-1/2}Z_{p}\). For simplicity, these two methods are referred to as SIV and SIVw.

Alternatively, we choose the instruments as \({\varXi }={\varPi }_{Z_{p}}^{\bot }={Z_{p}^{T}}(Z_{p} {Z_{p}^{T}})^{-1}Z_{p}\), and call the corresponding method SIVp. In terms of the estimation accuracy, SIVp is equivalent to SIVw, because

However, SIVp, which uses the orthogonal projection as the instruments, is easier to implement. Up to now, it seems that these three methods can work under closed-loop conditions, because they avoid projecting out Uf directly. However, simulation results indicate that this is not always the case for closed-loop systems.
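The equivalence of SIVp and SIVw can be illustrated numerically. The check below uses random stand-in matrices for \(Z_p\), \(Y_f\) and \(U_f\) (assumptions for illustration only) and takes \(W_2 = I\). It relies on the observation that both instrument choices give \({\varXi }^{T}{\varXi }\) proportional to \({Z_{p}^{T}}(Z_{p} {Z_{p}^{T}})^{-1}Z_{p}\), so the two matrices passed to the SVD have Gram matrices that differ only by the scalar factor L and therefore share the same left singular vectors.

```python
import numpy as np

rng = np.random.default_rng(1)
L, n_z, n_f = 400, 4, 2
Z_p = rng.standard_normal((n_z, L))                      # stand-in for [U_p; Y_p]
Y_f = rng.standard_normal((n_f, n_z)) @ Z_p + 0.1 * rng.standard_normal((n_f, L))
U_f = rng.standard_normal((n_f, n_z)) @ Z_p + rng.standard_normal((n_f, L))

def omega(Xi):
    """R_{y_f xi} projected onto the nullspace of R_{u_f xi}."""
    R_y = Y_f @ Xi.T / L
    R_u = U_f @ Xi.T / L
    Pi_perp = np.eye(Xi.shape[0]) - np.linalg.pinv(R_u) @ R_u
    return R_y @ Pi_perp

def inv_sqrt(M):
    """Inverse symmetric square root of a positive definite matrix."""
    vals, vecs = np.linalg.eigh(M)
    return vecs @ np.diag(vals ** -0.5) @ vecs.T

Xi_w = inv_sqrt(Z_p @ Z_p.T / L) @ Z_p                   # SIVw instruments (n_z x L)
Xi_p = Z_p.T @ np.linalg.solve(Z_p @ Z_p.T, Z_p)         # SIVp instruments (L x L)

Om_w, Om_p = omega(Xi_w), omega(Xi_p)
s_w = np.linalg.svd(Om_w, compute_uv=False)
s_p = np.linalg.svd(Om_p, compute_uv=False)
print(s_w / s_p)   # every ratio equals sqrt(L) up to rounding
```

The price of SIVp in this explicit form is an L × L instrument matrix. In practice the projection would be applied through a QR factorization rather than formed explicitly.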

4 New Closed-Loop Subspace Methods Under SIV Framework

To locate the source of the problem, let us consider the controller described by the following state space model:

where r(k) is the setpoint excitation. Based on Eq. 22, we can derive the following subspace matrix equation

Post-multiplying both sides of Eq. 23 by ΞT,

If the following condition

is satisfied, then

Combining Eqs. 16 and 26 gives

Consequently, the row spaces of both \(R_{x \xi }\) and \(R_{x^{c} \xi }\) fall in the row space of \(\begin {bmatrix} R_{y_{f} \xi } \\ R_{u_{f} \xi } \end {bmatrix}\). This means that \(\begin {bmatrix} R_{y_{f} \xi } \\ R_{u_{f} \xi } \end {bmatrix}\), which is used to identify the process model, also contains the controller information. In order to exclude the controller information, the IV matrix Ξ must satisfy the following conditions:

Suppose

It is natural to choose the IV matrix as follows:

With this modification, all the computation procedures discussed in the last section are valid for closed-loop identification. We call the modified algorithms CSIV and CSIVp.

5 Numerical Examples

In this section, simulation studies are presented to demonstrate the performance of the new algorithms proposed in this paper.

5.1 Example 1: A First Order System

The simulation example is a first order SISO system under closed-loop operation,

The feedback has the following structure:

where r(k) is the excitation signal and is generated as

where \(r_0(k)\) is zero-mean white noise with unit variance. The innovation process e(k) is zero-mean white noise with variance \(R_e = 1.44\). Monte-Carlo simulations are conducted with 30 runs. Each simulation generates 2000 data points. In addition, the input and the output are contaminated with zero-mean white noises whose variances are tuned so that the signal-to-noise ratios (SNR)

are equal to 10.
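Tuning a noise variance for a target SNR can be sketched as follows. The SNR is assumed here to be the ratio of the sample variance of the noise-free signal to the noise variance; if a different definition is intended (for example a ratio of standard deviations or a value in dB), only the line computing `noise_var` changes. The function name `add_white_noise` and the sinusoidal test signal are hypothetical.

```python
import numpy as np

def add_white_noise(signal, snr, rng):
    """Add zero-mean white Gaussian noise with var(signal) / var(noise) = snr.

    Returns the noisy signal and the noise variance that was used.
    """
    signal = np.asarray(signal, dtype=float)
    noise_var = signal.var() / snr            # assumed SNR definition (variance ratio)
    noise = rng.normal(0.0, np.sqrt(noise_var), size=signal.shape)
    return signal + noise, noise_var

rng = np.random.default_rng(0)
clean = np.sin(0.05 * np.arange(2000))        # placeholder noise-free signal
noisy, noise_var = add_white_noise(clean, snr=10.0, rng=rng)
print("noise variance used:", noise_var)
```

In a Monte-Carlo study the same routine would be applied to the noise-free input and output of each run with an independently seeded generator.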

For this example, we will apply the following subspace identification methods: SIMPCA in [26], the three methods (SOPIM, CSIMPCA, CSOPIM) in [11], SIV in [3], and the proposed methods (SIVp, CSIV, CSIVp) in this paper. The horizons are chosen as f = p = 2.

Table 1 shows some Monte-Carlo simulation results. The table shows that methods using the same weighting matrix give similar results: SIV and SIMPCA, SIVp and SOPIM, CSIV and CSIMPCA, and CSIVp and CSOPIM. For the methods in which \(R_f\) is introduced into the weighting matrix (CSIV, CSIVp, CSIMPCA and CSOPIM), the estimates of \(b_1\) are better than those of the other methods. Figure 2 shows the corresponding Bode plots. The four methods (SIV, SIVp, CSIV and CSIVp) differ markedly in their low-frequency estimates.

If we enhance the effects of the measurement noises by reducing both SNRs to 5, the simulation results are as shown in Table 2. The methods in which \(R_f\) is introduced into the weighting matrix give good results, whereas the other methods yield somewhat biased estimates.

Table 3 shows the simulation results when the setpoint signal is white noise. The methods in which \(R_f\) is introduced into the weighting matrix give consistent results, while the other methods yield biased estimates.

5.2 Example 2: A Fifth Order System

The system to be considered is expressed in the innovation state space form:

and D = 0. The state space model of the controller is:

and Dc = 0.61. The block diagram of this example is shown in Fig. 3.

The simulation conditions are exactly the same as those used in [11]: the reference signal r is Gaussian white noise with variance 1; the innovation e is Gaussian white noise with variance 1/9. Monte-Carlo simulations are conducted with 30 runs. Each simulation generates 1200 data points. Moreover, we add zero mean white noises with variance 0.2 to the input and the output measurements.

Figure 4 shows the pole plots of the fifth order system obtained by SIV and SIVp. The results are clearly biased owing to the correlation between the input and the unmeasured disturbance under feedback control. When the IV matrix contains \(R_f\), the corresponding methods CSIV and CSIVp give consistent pole estimates, as shown in Fig. 5(a). Nevertheless, the Bode plots in Fig. 5(b) show that the low-frequency responses of CSIVp are much better than those of CSIV, which confirms that CSIVp is more efficient than CSIV without column weighting.

In this example, we also explore the effect of different IV matrices. Besides the IV matrices mentioned above, we consider the following IV matrices:

The resulting methods are called CSIVx or CSIVpx, where x denotes the number corresponding to the IV matrix. For example, CSIV1 uses the IV matrix \( \left [ \begin {smallmatrix} R_{f} \\ Y_{p} \end {smallmatrix} \right ] \) and CSIVp1 uses the projection form of the IV matrix. For all methods, the horizons are chosen as f = p = 20.
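As a sketch of how such variants can be assembled, the snippet below stacks candidate blocks into an IV matrix and forms its projection counterpart. Two assumptions are made: \(R_f\) is taken, by analogy with \(Y_f\) and \(U_f\), to be the block Hankel matrix of future setpoint samples, and the "projection form" is taken, by analogy with SIVp in Section 3, to be \({\varXi }^{T}({\varXi }{\varXi }^{T})^{-1}{\varXi }\). Random matrices stand in for the data, and `projection_form` is a hypothetical helper name.

```python
import numpy as np

def projection_form(Xi):
    """Xi^T (Xi Xi^T)^{-1} Xi: orthogonal projector onto the row space of Xi."""
    return Xi.T @ np.linalg.solve(Xi @ Xi.T, Xi)

rng = np.random.default_rng(0)
L = 200
R_f = rng.standard_normal((3, L))     # placeholder for the future setpoint block
Y_p = rng.standard_normal((3, L))     # placeholder for the past output block

Xi_1 = np.vstack([R_f, Y_p])          # stacked IV matrix, as used by CSIV1
Xi_p1 = projection_form(Xi_1)         # assumed projection form, as used by CSIVp1

print(Xi_1.shape, Xi_p1.shape)
```

Other variants follow by changing the blocks passed to `np.vstack`. The projector is L × L, so for long records it is preferable to apply it implicitly through a QR factorization of the stacked matrix.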

Figure 6 displays the pole plots of the fifth order system by CSIV1, CSIV2, CSIV3, CSIV4, CSIV5 and CSIV6. The simulation results of the fifth order system by CSIVp1, CSIVp2, CSIVp3, CSIVp4, CSIVp5 and CSIVp6 are shown in Figs. 7 and 8. As for the pole estimates, the methods using the projection IV matrices give slightly better results. For this example, the methods using the projection IV matrices which contain \(Y_p\) yield better results not only for pole estimates but also for the low frequency responses. However, this is not always the case for other systems.

6 Conclusions

In this paper, closed-loop subspace identification methods for EIV problems are developed using orthogonal projection as instrumental variables. They can provide consistent estimates regardless of whether the reference signal is white noise. In addition, the effect of different IV matrices is explored. Compared with the subspace methods based on PCA, the methods proposed here are simple to implement and easy to extend. Two simulation examples are given to illustrate the performance of the proposed methods in closed-loop EIV identification and the effect of instrumental variables.

References

1. Chen, M., Zhang, Y., Qiu, M., Guizani, N., Hao, Y. (2018). Spha: Smart personal health advisor based on deep analytics. IEEE Communications Magazine, 56(3), 164–169.

2. Chenaru, O., Stanciu, A., Popescu, D., Florea, G., Sima, V., Dobrescu, R. (2015). Modeling complex industrial systems using cloud services. In International Conference on Control Systems and Computer Science (pp. 565–571). https://doi.org/10.1109/CSCS.2015.109

3. Chou, C.T., & Verhaegen, M. (1997). Subspace algorithms for the identification of multivariable dynamic errors-in-variables models. Automatica, 33(10), 1857–1869.

4. Dai, W., Qiu, L., Wu, A., Qiu, M. (2016). Cloud infrastructure resource allocation for big data applications. IEEE Transactions on Big Data, 4(3), 313–324.

5. Dorf, R.C., & Bishop, R.H. (2011). Modern control systems, 12th edn. Upper Saddle River: Prentice Hall.

6. Gai, K., Qiu, M., Zhao, H. (2016). Security-aware efficient mass distributed storage approach for cloud systems in big data. In 2016 IEEE 2nd international conference on big data security on cloud (BigDataSecurity), IEEE international conference on high performance and smart computing (HPSC), and IEEE international conference on intelligent data and security (IDS) (pp. 140–145): IEEE.

7. Gai, K., Qiu, M., Zhao, H., Sun, X. (2017). Resource management in sustainable cyber-physical systems using heterogeneous cloud computing. IEEE Transactions on Sustainable Computing, 3(2), 60–72.

8. Golub, G.H., & Van Loan, C.F. (2013). Matrix computations, 4th edn. Baltimore: The Johns Hopkins University Press.

9. Gustafsson, T. (2001). Subspace identification using instrumental variable techniques. Automatica, 37(12), 2005–2010.

10. Gustafsson, T. (2002). Subspace-based system identification: weighting and pre-filtering of instruments. Automatica, 38(3), 433–443.

11. Huang, B., Ding, S.X., Qin, S.J. (2005). Closed-loop subspace identification: an orthogonal projection approach. Journal of Process Control, 15(1), 53–66.

12. Jansson, M., & Wahlberg, B. (1996). A linear regression approach to state-space subspace system identification. Signal Processing, 52(2), 103–129.

13. Katayama, T. (2005). Subspace methods for system identification. London: Springer.

14. Larimore, W.E. (1990). Canonical variate analysis in identification, filtering, and adaptive control. In 29th IEEE conference on decision and control (pp. 596–604): IEEE.

15. Ljung, L. (1999). System identification-theory for the user, 2nd edn. Upper Saddle River: Prentice-Hall.

16. Picci, G., & Katayama, T. (1996). Stochastic realization with exogenous inputs and ’subspace-methods’ identification. Signal Processing, 52(2), 145–160.

17. Qin, S.J. (2006). An overview of subspace identification. Computers & Chemical Engineering, 30(10–12), 1502–1513.

18. Qiu, M., Jia, Z., Xue, C., Shao, Z., Sha, E.H.M. (2007). Voltage assignment with guaranteed probability satisfying timing constraint for real-time multiproceesor dsp. The Journal of VLSI Signal Processing Systems for Signal, Image, and Video Technology, 46(1), 55–73.

19. Qiu, M.K., Zhang, K., Huang, M. (2004). An empirical study of web interface design on small display devices. In IEEE/WIC/ACM International conference on web intelligence (WI’04) (pp. 29–35): IEEE.

20. Van Overschee, P., & De Moor, B. (1994). N4sid: Subspace algorithms for the identification of combined deterministic-stochastic systems. Automatica, 30(1), 75–93.

21. Van Overschee, P., & De Moor, B. (1996). Subspace identification for linear systems: Theory—Implementation—Applications. London: Kluwer Academic Publishers.

22. Verhaegen, M. (1994). Identification of the deterministic part of mimo state space models given in innovations form from input-output data. Automatica, 30(1), 61–74.

23. Verhaegen, M., & Dewilde, P. (1992). Subspace model identification part 1: the output-error state space model identification class of algorithms. International Journal of Control, 56(5), 1187–1210.

24. Verhaegen, M., & Dewilde, P. (1992). Subspace model identification part 2: Analysis of the elementary output-error state space model identification class of algorithm. International Journal of Control, 56(5), 1211–1241.

25. Viberg, M. (1995). Subspace-based methods for the identification of linear time-invariant systems. Automatica, 31(12), 1835–1851.

26. Wang, J., & Qin, S.J. (2002). A new subspace identification approach based on principal component analysis. Journal of Process Control, 12(8), 841–855.

27. Wang, J., & Qin, S.J. (2006). Closed-loop subspace identification using the parity space. Automatica, 42(2), 315–320.

28. Xiong, Z., Wu, Y., Ye, C., Zhang, X., Xu, F. (2019). Color image chaos encryption algorithm combining crc and nine palace map. Multimedia Tools and Applications, 78(22), 31035–31055.

29. Zhang, Q., Huang, T., Zhu, Y., Qiu, M. (2013). A case study of sensor data collection and analysis in smart city: provenance in smart food supply chain. International Journal of Distributed Sensor Networks, 9(11), 382132.

30. Zhu, M., Liu, X.Y., Tang, F., Qiu, M., Shen, R., Shu, W., Wu, M.Y. (2016). Public vehicles for future urban transportation. IEEE Transactions on Intelligent Transportation Systems, 17(12), 3344–3353.

Acknowledgements

This work was partially supported by the Science and Technology Research Projects of Hubei Provincial Department of Education (No.Q20162706), the National Natural Science Foundation of China (No.61972136), and the Hubei Provincial Department of Education Outstanding Youth Scientific Innovation Team Support Foundation (No.T201410).


Cite this article

Li, Y., Xiong, Z., Ye, C. et al. Subspace Identification of Closed-Loop EIV System Based on Instrumental Variables Using Orthoprojection. J Sign Process Syst 93, 345–355 (2021). https://doi.org/10.1007/s11265-020-01618-y


DOI: https://doi.org/10.1007/s11265-020-01618-y