Abstract

Internet services, such as review sites, FAQ sites, online auction sites, online flea markets, and social networking services, are essential to our daily lives. Each Internet service aims to promote information exchange among people who share common interests, activities, or goods. Internet service providers aim to have users of their services actively communicate through their services. Without active interaction, the service falls into disuse. In this study, we consider that an Internet service has a network externality as its main feature, and we model user behavior in the Internet service with network externality (ISNE) as a dynamic game. In particular, we model the diffusion process of users of an ISNE as an infinite-horizon extensive-form game of complete information in which: (1) each user can choose whether or not to use the ISNE in her/his turn and (2) the network effect of the ISNE depends on the history of each player’s actions. We then apply Markov perfect equilibrium to analyze how to increase the number of active users. We derive the necessary and sufficient condition under which the state in which every player is an active user is the unique Markov perfect equilibrium outcome. Moreover, we propose an incentive mechanism that enables the number of active users to increase steadily.



1 Introduction

This study deals with Internet services such as review sites, FAQ sites, online auction sites, online flea markets, and social networking services, and investigates how a service can come to be used widely and actively over long periods. Internet services are currently essential to our daily lives. An Internet service is an online membership service or site that aims to promote information exchange among people who share common interests, activities, or goods. Internet service providers aim to have their subscribers actively communicate through their services, because, without active interaction, an Internet service becomes inactive.Footnote 1 In our analysis, we particularly note that one of the main features of Internet services is the presence of a network externality, originally defined as the effect by which the value of a good increases with the number of its consumers. For example, a telephone is a good with a network externality: as telephones become more popular, each user’s telephone becomes more valuable, because the number of people the user can call or receive calls from also increases. Similarly, as the number of active users of an Internet service increases, users can exchange more information with other users, and the convenience of the service improves. This study focuses on this mechanism and models user behavior on an Internet service with network externality (ISNE) as a dynamic game. It then examines how to increase the number of active users on the ISNE. It is well known that a good or service with a network externality spreads rapidly once its number of users exceeds a certain critical level (Rohlfs 1974, 2003).

In the literature following Rohlfs (1974), such as Katz and Shapiro (1985) and Farrell and Saloner (1985), an increase in the number of users of the same good or of compatible goods increases the value of the good. Nonetheless, some goods and services with limited capacity, such as road and information infrastructure, might have negative network externalities, because additional users cause congestion when the resource has a capacity constraint. In contrast, our study deals only with Internet services that employ well-maintained infrastructure; therefore, we assume that only a positive network externality exists. Existing studies that model the diffusion process of a single good as a dynamic game include Gale (1995), Ochs and Park (2010), and Shichijo and Nakayama (2009). For example, Gale (1995) analyzed a dynamic N-person game in which N players simultaneously decide whether to adopt a good. In this model, the utility each player gains depends only on the number of adopters of the good, a setting common to the literature mentioned above. Gale (1995) also focused on equilibria in which all players become adopters only in some later period, in particular from the second period onward. These are equilibria with delay, and it is shown that if the length of a unit period is sufficiently short, equilibria with delay can be eliminated. In addition, Gale (1995) showed the existence of an equilibrium in which no one adopts the good.

Our model extends Gale’s dynamic model (Gale 1995) with the following two properties: (1) in each period, only one player makes her/his decision, and (2) players’ behavior is reversible. The first modification arises from a characteristic of Internet services. For many goods, it takes time to observe other players’ decisions, so players effectively choose their actions at the same time; thus, most previous studies have employed a simultaneous model in which all players decide their actions simultaneously. By contrast, on an ISNE, users can observe others’ actions immediately after they are chosen, so if the length of a period is set sufficiently short, no two players choose their actions in the same period. Therefore, we assume that only one player makes her/his decision in each period. The second modification allows for reversible behavior of players. Most of the literature discussed assumes that the transition from the status “consumer has not adopted the good/service” to the status “consumer adopted the good/service” is irreversible.Footnote 2 This means that once a player adopts the good in period t under her/his strategy, her/his action from period \(t+1\) on must also be adoption. On ISNEs, however, consumer behavior need not be irreversible, because consumers can easily stop using the service. Thus, in our model, every consumer can choose either to use or not to use the service in each of her/his turns, even if she/he has used it before. Moreover, it is reasonable to assume that the value of an ISNE depends not on the number of members, that is, users who have used the service at least once, but rather on the number of active users who actively communicate through the ISNE. The utility gained by a player in each period therefore depends on the action history rather than simply on the number of active users. In this model, we study Markov perfect equilibrium (MPE).
We focus on equilibria in which every player uses the ISNE actively in her/his turn, and we call such an equilibrium an active equilibrium. We show that what is crucial for the existence of an active equilibrium is whether choosing “active” is beneficial given that all other users always use the service actively. It turns out that all MPE are active equilibria if choosing “active” in the first period is strictly beneficial, and that, by contrast, there is no active equilibrium if staying off the ISNE is strictly beneficial. Furthermore, we show the condition under which there is an equilibrium in which no one uses the ISNE. Following Shichijo and Nakayama (2009), we propose an incentive system that resolves the problem of delay, and show that it can be constructed so that all MPE are equilibria without delay.

The rest of this paper is organized as follows. The next section, Sect. 2, provides a literature review. In Sect. 3, we model the diffusion process of ISNE users as a dynamic game by focusing on these features of the ISNE. In Sect. 4, we study the MPE of the game and derive a necessary and sufficient condition under which all users rapidly become active users. In addition, we show the condition for an equilibrium in which no user uses the ISNE. In Sect. 5, we propose an incentive system to increase the number of active users rapidly, even if the original game suffers from inactiveness. We devote Sect. 6 to a model that includes a participation cost for the ISNE. We then show that an analogue of the main theorem continues to hold when we allow all players to know the current number of ISNE users.

2 Literature review

Rohlfs (1974) first investigated the diffusion process of communication services and devices with a network externality, such as telephones and facsimiles. In his historical paper, David (1985) pointed out that the QWERTY keyboard also has a network externality. In the 1980s, markets with two or more goods with a network externality began to be studied actively. For example, Katz and Shapiro (1985) and Farrell and Saloner (1985) analyzed the incentives to achieve compatibility among goods with a network externality. As mentioned above, this literature considers only positive network externalities.

Ochs and Park (2010) and Shichijo and Nakayama (2009) analyzed the diffusion process with a dynamic game model similar to that of Gale (1995). The model in Shichijo and Nakayama (2009) resembles the model in Gale (1995) in that both have multiple equilibria, including inefficient equilibria with delay. To solve this problem, Shichijo and Nakayama (2009) proposed a two-step subsidy scheme that takes participation fees into account. They then derived the condition under which the delay in equilibrium decreases and all players eventually adopt the good. Finally, the model in Ochs and Park (2010) includes incomplete information.Footnote 3 Ochs and Park (2010) defined a cut-off strategy and focused on symmetric equilibrium. They showed that, if the discount factor \(\delta \) is sufficiently large, there is a unique symmetric equilibrium.

The aforementioned literature studied the diffusion process by deriving the equilibria of a dynamic game model, as we do in this paper. However, we introduce reversible behavior of players, whereas the previous studies consider only irreversible behavior. Allowing reversible behavior broadens players’ alternatives in the sense that each player can make decisions many times, which is a distinctive feature of our study.

Network externalities have also been studied from perspectives other than game theory. For instance, Arthur (1987, 1989) and Arthur et al. (1987) investigated network externality using stochastic processes, especially the Polya–Eggenberger process. Dosi et al. (1994) and Dou and Ghose (2006) also modeled the diffusion process as a stochastic process.Footnote 4 However, in contrast to our game-theoretic approach, these studies neither model consumers’ decision-making nor derive equilibria.

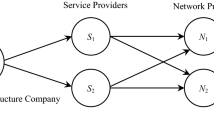

In addition, artificial market simulations have recently been used extensively. For instance, Iba et al. (2001) found a “locality,” in which the distribution of consumer locations influences the diffusion process. Delre et al. (2007), Uchida and Shirayama (2008), and Heinrich (2016) also used agent-based models with network structure to study the diffusion process.Footnote 5 Moreover, Homma et al. (2010) analyzed the diffusion model of a two-sided service system in which there is a network effect between a good and a complementary good, such as electric vehicles and electric vehicle chargers.Footnote 6 That study extended Rohlfs’ model to a two-sided service system and conducted a computer simulation of the market for an electronic money system. This literature explores the consequences of the diffusion process computationally, which is quite different from our approach of deriving equilibria theoretically.

3 Model

Let \(I = \{1, 2, \dots , N \}\) be the set of consumers, including potential users. Suppose that each consumer is randomly given opportunities to select her/his action from two alternatives: “active” and “no-action.” For example, “active” means posting a message or review, or sending a reaction, on an ISNE, whereas “no-action” means that none of these actions is undertaken. Ours is a binary model with only two alternatives,Footnote 7 because most previous studies mentioned in Sect. 1 employed binary models.

In our analysis, we consider discrete-time dynamics. As discussed, on an ISNE, users can observe others’ actions immediately after they are chosen. We therefore take the length of a period to be sufficiently short and assume that exactly one player is selected in each period. Moreover, we assume that each player is selected with the same probability; that is, in each period, a player is randomly selected with probability \(w = 1/N\). Only the selected player chooses an action in that period, and she/he need not choose the same action as in her/his previous turns.

Let \(h^t_x = (x^1, x^2, \dots , x^t)\) denote the history of players selected in periods \(\tau = 1, 2, \dots , t\), where the \(\tau \)th element \(x^\tau \) is the player selected in period \(\tau \). We refer to this as the player history. For each \(\tau \), let \(\alpha ^\tau \) be the action actually chosen in period \(\tau \), so that \(\alpha ^\tau \) is either a (active) or n (no-action), and let \(h^t_\alpha = (\alpha ^1, \alpha ^2, \dots , \alpha ^t)\) be the action history up to period t. We refer to the pair of the player history and the action history, \(h^t = (h^t_x, h^t_\alpha )\), as the history of period t. Let \(h^0 = (h^0_x, h^0_\alpha )\) be the null history. Let \(H^t\) denote the set of all histories of period t, and let \(H = \cup _{t=0}^\infty H^t\) be the set of all histories. Similarly, let \(H^t_\alpha \) denote the set of all action histories of period t, and let \(H_\alpha = \cup _{t=0}^\infty H^t_\alpha \) be the set of all action histories.
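The selection process and the two histories can be sketched as a short simulation. This is a minimal illustration under our own assumptions. Each strategy is written as a single function of the action history for every period, actions are encoded as the strings "a" and "n", and the names `simulate_histories` and `maximum_strategy` are hypothetical. Only the selected player's rule is consulted in each period, and that rule sees \(h^t_\alpha \) but not \(h^t_x\).

```python
import random
from typing import Callable, List, Sequence, Tuple

History = Tuple[str, ...]
# Hypothetical encoding: a (stationary) payoff-relevant behavioral strategy maps
# the action history so far to the probability of choosing "active" ("a").
Strategy = Callable[[History], float]


def simulate_histories(
    strategies: Sequence[Strategy], T: int, seed: int = 0
) -> Tuple[Tuple[int, ...], History]:
    """Return the player history h_x^T and the action history h_alpha^T.

    In every period exactly one player is drawn uniformly at random
    (probability w = 1/N), and only that player chooses an action.
    """
    rng = random.Random(seed)
    n_players = len(strategies)
    h_x: List[int] = []      # x^1, ..., x^T, players labelled 1..N
    h_alpha: List[str] = []  # alpha^1, ..., alpha^T, each "a" or "n"
    for _ in range(T):
        i = rng.randrange(n_players)               # uniform draw: w = 1/N
        p_active = strategies[i](tuple(h_alpha))   # depends on h_alpha only
        h_x.append(i + 1)
        h_alpha.append("a" if rng.random() < p_active else "n")
    return tuple(h_x), tuple(h_alpha)


def maximum_strategy(h: History) -> float:
    """Always choose "active", whatever the history."""
    return 1.0


h_x, h_alpha = simulate_histories([maximum_strategy] * 3, T=8, seed=1)
print(h_x, h_alpha)
```

With every player using the always-active rule, the action history consists only of "a". The player history still varies with the seed.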

We introduce the following notation. Let (a : k) be the action history in which action a is chosen k times in succession, regardless of which players select “active.” Let \((h^t_\alpha , a)\) be the action history in which action a is selected once immediately after history \(h^t_\alpha \); thus, \((h^t_\alpha , a)\) is an action history \(h^{t+1}_\alpha \) of length \(t+1\). Similarly, \((h^t_\alpha , n)\) is the action history in which action n is chosen once after \(h^t_\alpha \). Finally, we denote by \((h^t_\alpha , a: k)\) the action history in which action a is chosen k times in succession after history \(h^t_\alpha \).

Suppose that if a player selects “active,” the network effect of the action lasts several periods. For instance, on an ISNE, a message posted in the first period is read by others and brings about a positive externality, but the effect may decrease as the information becomes dated. In addition, suppose that the network effect does not depend on which player selects the active action, i.e., the model does not consider network structures. Consequently, in each period t, an instant payoff to player \(x^t\) depends only on the action history \(h^t_\alpha \).

Denote the instant payoff to player \(x^t\) as \(u(h^t_\alpha )\). Note that \(u(\cdot )\) depends not only on the action history before player \(x^t\) selects an action but also on the action chosen in period t. The instant payoff to player \(x^t\) is thus defined as a function of the action history \(h^t_\alpha \) for period t. When player \(x^t\) selects “no-action,” i.e., \(\alpha ^t = n\), her/his instant payoff is zero (i.e., \(u(h^{t-1}_\alpha , n) = 0\)), regardless of \(h^{t-1}_\alpha \).

We now introduce the following assumptions about the instant payoff function \(u(\cdot )\). To shorten the notation, we sometimes write the instant payoff of a concatenated history more compactly: \(u((h^t_\alpha , {\tilde{h}}^\tau _\alpha )) \) is denoted by \(u(h^t_\alpha , {\tilde{h}}^\tau _\alpha )\), and so on.

Assumption 1

Basic assumption of instant payoff function

(A1) For any finite period \(t < \infty \):

$$\begin{aligned} u(h^t_\alpha ) = u(n, h^t_\alpha ) \quad \text {for all } h^t_\alpha \in H^t_\alpha . \end{aligned}$$

(A2) For any finite periods \(t , \tau < \infty \):

$$\begin{aligned} u(h^t_\alpha , a, {\tilde{h}}^\tau _\alpha ) \ge u(h^t_\alpha , n, {\tilde{h}}^\tau _\alpha ) \quad \text {for all } h^t_\alpha \in H^t_\alpha \text { and } {\tilde{h}}^\tau _\alpha \in H^\tau _\alpha . \end{aligned}$$

(A3) For any finite periods \(t , \tau < \infty \):

$$\begin{aligned} u(n, h^t_\alpha , {\tilde{h}}^\tau _\alpha ) \ge u(h^t_\alpha , n, {\tilde{h}}^\tau _\alpha ) \quad \text {for all } h^t_\alpha \in H^t_\alpha \text { and } {\tilde{h}}^\tau _\alpha \in H^\tau _\alpha . \end{aligned}$$

(A4) \(u(a) < 0.\)

(A5) For some \(t < \infty \), there exists a finite action history \(h^t_\alpha \in H^t_\alpha \) such that \(u(h^t_\alpha )>0.\)

(A6) There exist a lower bound \(\underline{U} > -\infty \) and an upper bound \({\overline{U}} < + \infty \) on the values of the utility function. That is, \(\underline{U}< u(h^t_\alpha ) < \overline{U}\) for all \(h^t_\alpha \in H^t_\alpha \) and any finite period \(t < \infty \).

(A1) means that a leading n does not affect the instant payoff; that is, the action history (n) is equivalent to the null history \(h^0_\alpha \). (A2) states that replacing “active” with “no-action” in any period does not increase the instant payoff in that or any later period. (A3) states that a more recent n reduces the instant payoff at least as much as an older one. In other words, the lack of older information matters less on the ISNE; likewise, older information, such as a message or review, is less important than new information. (A4) states that selecting “active” yields a negative instant payoff when “active” has not been selected before. Conversely, (A5) states that there exists an action history whose instant payoff is strictly positive. Finally, (A6) states that the instant payoffs are uniformly bounded.

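The following provides a natural example of a utility function that satisfies the basic assumptions (A1)–(A6). For later use, we introduce the indicator function \({\mathbf {1}}_a(\alpha ^\tau )\), which equals 1 if the action chosen in period \(\tau \) is “active” (i.e., \(\alpha ^\tau = a\)) and 0 otherwise.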

Example 1

Let \(D \in {\mathbb {N}}\), and suppose that \(u(h^t_\alpha ) = \sum _{\tau = \max \{1, t - D \}}^t {\mathbf {1}}_a(\alpha ^\tau )\). In this example, a player’s utility is determined by the number of times “active” has been chosen during the most recent D periods. This utility function is intuitive, because information older than a certain period may be unreliable or, in practice, hidden. The above utility function satisfies assumptions (A1)–(A6).

The next example generalizes Example 1 in the sense that the value of older information decreases gradually.Footnote 8

Example 2

Let us consider a function \(q: {\mathbb {N}} \rightarrow {\mathbb {R}}\), such that \(q(0) \ge q(1) \ge q(2) \ge \dots \ge q(t-1)\) for any \(t < \infty \), and a function \(f: {\mathbb {R}} \rightarrow {\mathbb {R}}\) satisfying the following three properties:

- There exist a lower bound \(\underline{U} > -\infty \) and an upper bound \(\overline{U} < + \infty \) such that \(\underline{U}< f(x) < \overline{U}\) for all x,
- \(f(q(0)) < 0\),
- \(f( \sum _{\tau =0}^\infty q(\tau )) > 0\).

For \(h^t_\alpha = (\alpha ^1, \alpha ^2, \dots , \alpha ^t)\), let \(u(h^t_\alpha ) = f(\sum _{\tau =1}^t {\mathbf {1}}_a(\alpha ^\tau ) q(t-\tau ))\), where \({\mathbf {1}}_a(\alpha ^\tau )\) is 1 if the action chosen in period \(\tau \) is “active” (i.e., \(\alpha ^\tau = a\)), otherwise 0.

According to the above utility function, an “active” chosen in a more recent period contributes more to a user’s payoff than one chosen in an older period; that is, the network effect of “active” decreases with time. Such a utility function satisfies assumptions (A1)–(A6).
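To make the assumptions concrete, the sketch below instantiates Example 2 with functional forms of our own choosing, \(q(k) = 0.5^k\) and \(f(x) = \tanh (x - 1.5)\). It then brute-forces (A1)–(A6) over all action histories up to a small length. These forms are hypothetical. We take f to be increasing and q to be nonnegative, which the checks of (A2) and (A3) rely on. The formula value of u is evaluated even for histories ending in n. A finite search can refute the assumptions but cannot prove them.

```python
import itertools
import math
from typing import Dict, Iterator, Tuple

History = Tuple[str, ...]

# Hypothetical instance of Example 2 (our choice, not the paper's):
#   q(k) = 0.5**k          nonnegative and non-increasing
#   f(x) = tanh(x - 1.5)   increasing and bounded in (-1, 1)
# so f(q(0)) = tanh(-0.5) < 0 and f(sum_k q(k)) = f(2) = tanh(0.5) > 0.


def q(k: int) -> float:
    return 0.5 ** k


def f(x: float) -> float:
    return math.tanh(x - 1.5)


def u(h: History) -> float:
    """u(h^t) = f(sum_tau 1_a(alpha^tau) * q(t - tau)) for h = (alpha^1, ..., alpha^t)."""
    t = len(h)
    return f(sum(q(t - tau) for tau, act in enumerate(h, start=1) if act == "a"))


def histories(max_len: int) -> Iterator[History]:
    """All action histories of length 0..max_len."""
    for t in range(max_len + 1):
        yield from itertools.product("an", repeat=t)


def check_assumptions(max_len: int = 6, tol: float = 1e-12) -> Dict[str, bool]:
    """Brute-force check of (A1)-(A6) on every history up to max_len."""
    hs = list(histories(max_len))
    # Pairs (h, g) short enough that (h, a, g) still has length <= max_len.
    pairs = [(h, g) for h in hs for g in hs if len(h) + len(g) < max_len]
    return {
        "A1": all(abs(u(h) - u(("n",) + h)) <= tol for h in hs),
        "A2": all(u(h + ("a",) + g) >= u(h + ("n",) + g) - tol for h, g in pairs),
        "A3": all(u(("n",) + h + g) >= u(h + ("n",) + g) - tol for h, g in pairs),
        "A4": u(("a",)) < 0,
        "A5": any(u(h) > 0 for h in hs),
        "A6": all(-1.0 < u(h) < 1.0 for h in hs),
    }


print(check_assumptions())
```

Replacing tanh with a decreasing function, or letting q take negative values, typically makes the (A2) or (A3) check fail. This illustrates why monotonicity of f matters for the claim above.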

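Given an infinite history h, the payoff that player i gains is the discounted sum of the instant payoffs she/he receives in the periods in which she/he is selected:

$$\begin{aligned} \sum _{t=1}^\infty \delta ^{t-1} {\mathbf {1}}_{\{x^t = i\}}\, u(h^t_\alpha ), \end{aligned}\tag{1}$$

where \({\mathbf {1}}_{\{x^t = i\}}\) equals 1 if player i is selected in period t and 0 otherwise, and \(\delta \in (0,1)\) is the discount factor.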
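A truncated version of the discounted payoff in Eq. (1) can be computed directly from a finite history. This is only a sketch. The function name `discounted_payoff` and the constant instant payoff in the usage lines are hypothetical. The no-action convention \(u(h^{t-1}_\alpha , n) = 0\) is applied explicitly, so u is only evaluated on histories that end in "a".

```python
from typing import Callable, Sequence, Tuple

History = Tuple[str, ...]


def discounted_payoff(
    i: int,
    h_x: Sequence[int],
    h_alpha: Sequence[str],
    u: Callable[[History], float],
    delta: float,
) -> float:
    """Truncated Eq. (1): player i collects delta**(t-1) * u(h^t_alpha)
    in each period t with x^t = i; choosing "n" yields 0 by convention."""
    total = 0.0
    for t, (x_t, alpha_t) in enumerate(zip(h_x, h_alpha), start=1):
        if x_t == i and alpha_t == "a":
            total += delta ** (t - 1) * u(tuple(h_alpha[:t]))
    return total


# Usage with a hypothetical instant payoff equal to 1 whenever "active" is chosen.
h_x = (1, 2, 1)
h_alpha = ("a", "a", "n")
print(discounted_payoff(1, h_x, h_alpha, lambda h: 1.0, delta=0.5))  # 1.0
print(discounted_payoff(2, h_x, h_alpha, lambda h: 1.0, delta=0.5))  # 0.5
```

The player history enters only through the indicator of who is selected. This is why payoffs from period \(t+1\) on depend on \(h^t_x\) only through future selections.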

In this study, we focus on payoff-relevant strategies. Player i’s strategy \(\sigma _i\) consists of her/his behavioral strategies \(\sigma ^{t}_i\), one for each period; that is, \(\sigma _i = (\sigma ^{t}_i)_t\). In general, under a payoff-relevant strategy \(\sigma _i\), player i’s action at time t depends only on the variables that determine the players’ payoffs rather than on the whole history \(h^t\) (see Maskin and Tirole (2001) for details). From Eq. (1), we can see that, in our model, the payoffs from period \(t+1\) on do not depend on \(h^t_x\); only the action history \(h^t_\alpha \) influences them. Thus, in this analysis, we consider payoff-relevant strategies that do not depend on the player history \(h^t_x\) but only on the action history \(h^t_\alpha \).

The payoff-relevant behavioral strategy \(\sigma ^{t+1}_i\) of player i at time \(t+1\) is a function \(\sigma ^{t+1}_i: h^t_\alpha \mapsto p \in [0,1]\) that assigns to each action history \(h^t_\alpha \) the probability of choosing “active” at time \(t+1\). This strategy satisfies \(\sigma ^{t+1}_i(h^t_\alpha ) = \sigma ^{t+k+1}_i(n: k, h^t_\alpha )\), because \(u(h^t_\alpha ) = u(n: k, h^t_\alpha )\) by (L1) in Lemma 1 (see the appendix). For example, the payoff-relevant behavioral strategy assigns the same probability to the action history (a), in which “active” is chosen in the first period, and to (n : k, a), in which a is chosen once after n has been chosen k times in succession, i.e., \(\sigma ^2_i(a) = \sigma ^{k+2}_i(n: k, a)\).Footnote 9 In the rest of this paper, we denote the set of all payoff-relevant strategies of player i by \(\varDelta _i\) and let \(\varDelta = \varDelta _1 \times \varDelta _2 \times \dots \times \varDelta _N\).
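The invariance \(\sigma (h^t_\alpha ) = \sigma (n: k, h^t_\alpha )\) can be enforced mechanically by stripping the leading run of no-actions before a rule is applied. The sketch below assumes that a strategy is represented as one function on action histories of every length. `strip_leading_n`, `payoff_relevant`, and `length_rule` are hypothetical helpers for illustration.

```python
from typing import Callable, Tuple

History = Tuple[str, ...]
Strategy = Callable[[History], float]


def strip_leading_n(h: History) -> History:
    """Map (n: k, h) to h by removing the initial run of "n" actions."""
    k = 0
    while k < len(h) and h[k] == "n":
        k += 1
    return h[k:]


def payoff_relevant(rule: Strategy) -> Strategy:
    """Wrap a rule so that h and (n: k, h) always get the same probability."""
    def sigma(h: History) -> float:
        return rule(strip_leading_n(tuple(h)))
    return sigma


def length_rule(h: History) -> float:
    """Hypothetical rule that depends on the raw history length."""
    return min(1.0, 0.2 * len(h))


sigma = payoff_relevant(length_rule)
print(length_rule(("a",)) == length_rule(("n", "n", "a")))  # False
print(sigma(("a",)) == sigma(("n", "n", "a")))              # True
```

The raw rule distinguishes (a) from (n, n, a) even though, by (A1), the two histories yield the same instant payoffs. The wrapped rule does not.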

We introduce strategy \(\overline{\sigma }_i\), called the maximum strategy, such that player i always chooses “active”, i.e., \(\overline{\sigma }^{t+1}_i(h^t_\alpha ) = 1\) for every \(t < \infty \), and \(h^t_\alpha \in H^t_\alpha \).Footnote 10

Strategy profile \(\sigma \) is a profile of players’ strategies, that is, \(\sigma = (\sigma _i)_{i \in I}\). We denote by \(\sigma _{-i} \in \prod _{j \ne i} \varDelta _j\) the profile in which every player j other than player i uses \(\sigma _j\). For example, in strategy profile \((\sigma '_i, \sigma _{-i})\), player i employs \(\sigma '_i\), whereas every player \(j \ne i\) adheres to \(\sigma _j\). If \(\sigma ^t_i = \sigma ^t_j\) for all i, j, and t (\(0 \le t < \infty \)), we say that strategy profile \(\sigma = (\sigma _i)_{i \in I}\) is symmetric. We denote by \(\overline{\sigma } = (\overline{\sigma }_i)_{i \in I}\) the symmetric strategy profile in which all players use the maximum strategy, and call it the maximum strategy profile.

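Given strategy profile \(\sigma \), let us denote the expected payoff to player i after history \(h^t_\alpha \) by \(\varPi _i( \sigma \mid h^t_\alpha )\).Footnote 11 This expected payoff is calculated before it is known whether \(x^{t+1} = i\), and:

$$\begin{aligned} \varPi _i(\sigma \mid h^t_\alpha ) &= w \left[ \sigma ^{t+1}_i(h^t_\alpha ) \left( u(h^t_\alpha , a) + \delta \varPi _i(\sigma \mid h^t_\alpha , a) \right) + \left( 1 - \sigma ^{t+1}_i(h^t_\alpha ) \right) \delta \varPi _i(\sigma \mid h^t_\alpha , n) \right] \\ &\quad + w \sum _{j \ne i} \left[ \sigma ^{t+1}_j(h^t_\alpha )\, \delta \varPi _i(\sigma \mid h^t_\alpha , a) + \left( 1 - \sigma ^{t+1}_j(h^t_\alpha ) \right) \delta \varPi _i(\sigma \mid h^t_\alpha , n) \right] . \end{aligned}\tag{2}$$

The first line of the formula is the expected payoff when player i is selected in period \(t+1\), which occurs with probability \(w ( = 1/N)\), and the second line is the expected payoff when a player j other than i is selected.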

Given \(h^t\), we denote by \(\varPi ^a_i(\sigma \mid h^t_\alpha )\) the expected payoff to player i when i is selected in period \(t+1\), selects “active” in period \(t+1\), and plays according to \(\sigma _i\) from period \(t+2\) on. From formula (2), we can write this as follows:

$$\begin{aligned} \varPi ^a_i(\sigma \mid h^t_\alpha ) = u(h^t_\alpha , a) + \delta \varPi _i(\sigma \mid h^t_\alpha , a). \end{aligned}\tag{3}$$
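Given that \(\varPi ^a_i(\sigma \mid h^t_\alpha )\) is the expected payoff to the player selected at time \(t+1\), w does not appear in the first term. Similarly, since \(u(h^t_\alpha , n) = 0\), the expected payoff to player i when \(i = x^{t+1}\) selects “no-action” in period \(t+1\) and plays according to \(\sigma _i\) from period \(t+2\) on is as follows:

$$\begin{aligned} \varPi ^n_i(\sigma \mid h^t_\alpha ) = \delta \varPi _i(\sigma \mid h^t_\alpha , n). \end{aligned}\tag{4}$$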
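If \(\sigma \) is symmetric, we omit the subscript i from Eq. (2) and denote the expected payoff by \(\varPi ( \sigma \mid h^t_\alpha )\). Writing \(\sigma ^{t+1}\) for the common behavioral strategy and using \(Nw = 1\), Eq. (2) becomes:

$$\begin{aligned} \varPi (\sigma \mid h^t_\alpha ) = \sigma ^{t+1}(h^t_\alpha ) \left( w\, u(h^t_\alpha , a) + \delta \varPi (\sigma \mid h^t_\alpha , a) \right) + \left( 1 - \sigma ^{t+1}(h^t_\alpha ) \right) \delta \varPi (\sigma \mid h^t_\alpha , n). \end{aligned}\tag{5}$$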
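Similarly, for a symmetric strategy profile, we can rearrange Eqs. (3) and (4) to obtain (6) and (7), respectively. After history \(h^t\), the expected payoff to \(i = x^{t+1}\) if s/he selects “active” in period \(t+1\) and plays according to symmetric strategy \(\sigma \) from period \(t+2\) on is as follows:

$$\begin{aligned} \varPi ^a(\sigma \mid h^t_\alpha ) = u(h^t_\alpha , a) + \delta \varPi (\sigma \mid h^t_\alpha , a). \end{aligned}\tag{6}$$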
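If player \(i = x^{t+1}\) selects “no-action” in period \(t+1\), then the expected payoff can be written as follows:

$$\begin{aligned} \varPi ^n(\sigma \mid h^t_\alpha ) = \delta \varPi (\sigma \mid h^t_\alpha , n). \end{aligned}\tag{7}$$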
Definition 1

We say that a utility function has a positive externality if \(\varPi _i(\sigma _i, \overline{\sigma }_{-i} \mid h^t_\alpha ) \ge \varPi _i(\sigma \mid h^t_\alpha )\) for all \(\sigma \in \varDelta \) and \(h^t_\alpha \in H^t_\alpha \) for any \(t < \infty \).
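The symmetric recursions (5)–(7) can be evaluated numerically by truncating the continuation after a fixed number of periods. The sketch below is a brute-force evaluation under our own assumptions. Strategies and payoffs are plain Python functions of the action history. The continuation value is set to zero after `depth` periods, which introduces an error of at most \(\delta ^{\text {depth}} w \sup |u| / (1-\delta )\). All function names are hypothetical. The cost grows like \(2^{\text {depth}}\) for mixed strategies.

```python
from typing import Callable, Tuple

History = Tuple[str, ...]
Strategy = Callable[[History], float]   # probability of "a" after history h
Payoff = Callable[[History], float]     # u(h) for histories ending in "a"


def expected_payoff(sigma: Strategy, u: Payoff, n_players: int, delta: float,
                    h: History = (), depth: int = 14) -> float:
    """Truncated Eq. (5) for the symmetric profile in which everyone uses sigma."""
    if depth <= 0:
        return 0.0
    w = 1.0 / n_players
    p = sigma(h)
    value = 0.0
    if p > 0.0:  # the selected player chooses "a"
        value += p * (w * u(h + ("a",))
                      + delta * expected_payoff(sigma, u, n_players, delta,
                                                h + ("a",), depth - 1))
    if p < 1.0:  # the selected player chooses "n" (instant payoff 0)
        value += (1.0 - p) * delta * expected_payoff(sigma, u, n_players, delta,
                                                      h + ("n",), depth - 1)
    return value


def payoff_active(sigma: Strategy, u: Payoff, n_players: int, delta: float,
                  h: History = (), depth: int = 14) -> float:
    """Eq. (6): the selected player chooses "a" now; sigma is played afterwards."""
    return u(h + ("a",)) + delta * expected_payoff(sigma, u, n_players, delta,
                                                   h + ("a",), depth - 1)


def payoff_no_action(sigma: Strategy, u: Payoff, n_players: int, delta: float,
                     h: History = (), depth: int = 14) -> float:
    """Eq. (7): the selected player chooses "n" now; sigma is played afterwards."""
    return delta * expected_payoff(sigma, u, n_players, delta, h + ("n",), depth - 1)
```

Consider a sanity check with the always-active strategy, a constant instant payoff of 1, \(N = 2\), and \(\delta = 0.5\). The value after \(h^0_\alpha \) is \(1 - 0.5^{\text {depth}}\), which tends to 1.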

4 Condition for the existence of active equilibrium

In this section, we study the equilibria of the above model. We regard the state in which there is no inactive user of the ISNE as the state in which every user selects a (active) in her/his turn.

In the rest of this paper, we focus on the particular type of equilibria in which the equilibrium outcome is that every user selects “active” in her/his turn from the first period. We refer to this equilibrium as the active equilibrium. An outcome of every active equilibrium is the infinite history \((a, a, a, \dots )\). Note that \(\overline{\sigma }\) is also an active equilibrium.

We also consider equilibria in which “active” never appears in the equilibrium outcome; that is, the equilibrium outcome is the history \((n, n, n, \dots )\). We refer to such an equilibrium as a no-action equilibrium.

When all users play the game according to strategy profile \(\sigma \), the sum of the users’ expected payoffs from the first period is \(\sum _i \varPi _i(\sigma \mid h^0_\alpha )\). We thus have the following theorem on which outcome attains the highest consumer surplus.

Theorem 1

(i) If the expected payoff of the maximum strategy profile \(\overline{\sigma }\) is nonnegative, then the active equilibria attain the maximum consumer surplus among all strategy profiles. That is, if \(\varPi (\overline{\sigma } \mid h^0_\alpha ) \ge 0\), then \(\sum _i \varPi _i(\sigma \mid h^0_\alpha ) \le N \varPi (\overline{\sigma } \mid h^0_\alpha )\) for all \(\sigma \in \varDelta \).

(ii) If the expected payoff of the maximum strategy profile \(\overline{\sigma }\) is negative, then no-action equilibria yield the maximum consumer surplus among all strategy profiles. That is, if \(\varPi (\overline{\sigma } \mid h^0_\alpha ) < 0\), then \(\sum _i \varPi _i(\sigma \mid h^0_\alpha ) \le 0\) for all \(\sigma \in \varDelta \).

The proof is given in the appendix. This theorem says that, if the expected payoff of the maximum strategy profile is nonnegative, then the outcome in which “active” is chosen in every period is the desirable state for users. In contrast, if this expected payoff is negative, the desirable outcome for all users is the state in which no user ever chooses “active.” Theorem 1 is important because we can identify the socially optimal state just by looking at the expected payoff of the maximum strategy profile. This implies that the maximum strategy profile is useful for estimating whether the ISNE becomes popular or inactive, even if the active equilibrium is rarely observed in reality.Footnote 12
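Under \(\overline{\sigma }\), the action history is deterministic, so Eq. (5) with \(\sigma = 1\) unrolls to \(\varPi (\overline{\sigma } \mid h^0_\alpha ) = \sum _{k \ge 1} \delta ^{k-1} w\, u(a : k)\). This makes the test in Theorem 1 easy to evaluate. The sketch below uses a hypothetical instance of Example 2 with \(q(j) = 0.5^j\) and \(f(x) = \tanh (x - c)\) for a parameter \(1 < c < 2\). It truncates the series after T terms and reports which part of Theorem 1 applies.

```python
import math


def u_active_run(k: int, c: float) -> float:
    """u(a: k) for the hypothetical Example 2 instance q(j) = 0.5**j, f(x) = tanh(x - c)."""
    return math.tanh(sum(0.5 ** j for j in range(k)) - c)


def max_profile_value(n_players: int, delta: float, c: float, T: int = 500) -> float:
    """Pi(sigma_bar | h^0) = sum_{k>=1} delta**(k-1) * w * u(a: k), truncated at T terms."""
    w = 1.0 / n_players
    return sum(delta ** (k - 1) * w * u_active_run(k, c) for k in range(1, T + 1))


for delta in (0.1, 0.9):
    value = max_profile_value(n_players=2, delta=delta, c=1.5)
    part = "(i)" if value >= 0 else "(ii)"
    print(f"delta={delta}: Pi(sigma_bar | h^0) = {value:.4f}, Theorem 1 {part} applies")
```

With these made-up parameters, an impatient population (\(\delta = 0.1\)) falls under part (ii), because the initial loss \(u(a) < 0\) dominates. A patient one (\(\delta = 0.9\)) falls under part (i).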

An analogue of Theorem 1 for any history \(h^t_\alpha \) can be proved in a similar way. We therefore obtain the following corollary for symmetric strategy profiles.

Corollary 1

For any symmetric payoff-relevant strategy \(\sigma \) and any history \(h^t\), the following hold:

(i) If \(\varPi (\overline{\sigma } \mid h^t_\alpha ) \ge 0\), then \(\varPi (\sigma \mid h^t_\alpha ) \le \varPi (\overline{\sigma } \mid h^t_\alpha )\).

(ii) If \(\varPi (\overline{\sigma } \mid h^t_\alpha ) < 0\), then \(\varPi (\sigma \mid h^t_\alpha ) \le 0\).

We then analyze the conditions for the existence and uniqueness of the active equilibrium. In this study, we apply the Markov perfect equilibrium (MPE) concept (Maskin and Tirole 2001), where an MPE is a payoff-relevant strategy profile that satisfies subgame perfection.

Definition 2

Strategy profile \(\sigma ^*\) is said to be MPE if for each i and \(h^t \in H^t\) for any \(t < \infty \), \(\varPi _i(\sigma ^* \mid h^t) \ge \varPi _i(\sigma _i, \sigma ^*_{-i} \mid h^t)\) for all \(\sigma _i \in \varDelta _i\).

As \(\delta \in (0,1)\) and, from (A6), \(u(h^t_\alpha )\) is bounded, there must be an MPE (Fudenberg and Tirole 1991).

Intuitively, a no-action equilibrium appears to exist always, because each player is unlikely to choose “active” when no one else chooses it. Indeed, a no-action equilibrium always exists as a Nash equilibrium. However, the following main theorem states that, in some cases, a no-action equilibrium is not an MPE. Moreover, in each case, we can determine which equilibria are MPE just by comparing \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha )\) with \(\varPi ^n(\overline{\sigma } \mid h^0_\alpha )\). Recall that \(\varPi ^n(\overline{\sigma } \mid h^0_\alpha )\) is the expected payoff when the player selected in period 1 chooses “no-action” and every player plays according to the maximum strategy profile \(\overline{\sigma }\) from period 2 on.

Theorem 2

(i) All MPE are active equilibria if and only if \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\). That is, \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\) is a necessary and sufficient condition for the action history \((a, a, a,\ldots )\) to be the unique MPE outcome.

(ii) All symmetric MPE are active equilibria if \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\).

(iii) There is no MPE that is an active equilibrium if \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) < \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\).

The idea of the proof of Theorem 2 (i) is as follows. We first prove that every user chooses “active” once “active” has been chosen a certain number of times in a row, say \(m^*\) or more. We then show that every user also selects “active” after \(m^* -1\) consecutive “actives,” and proceed by induction on the number of consecutive “actives.”Footnote 13 See the appendix for the details of the proof. The first part of the theorem gives the necessary and sufficient condition for all MPE to be active equilibria. It states that we can determine whether the MPE are active equilibria by checking only the maximum strategy profile \(\overline{\sigma }\), which is just one of the strategy profiles that result in \((a, a, a, \dots )\), and only whether the player selected in the first period has an incentive to choose “no-action.” The second part of the theorem requires symmetry. When \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\), only active equilibria are MPE, without any assumption of symmetry. However, when \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\), there is an asymmetric MPE that is not an active equilibrium. In this asymmetric MPE, only one player selects “no-action” with positive probability (see the proof of Theorem 2 in the appendix). Finally, the third part says that even if \(\varPi (\overline{\sigma } \mid h^0_\alpha )>0\) holds, so that active equilibria are desirable for users, no active equilibrium is an MPE when \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) < \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\).
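The condition in Theorem 2 involves only the maximum strategy profile. Along the all-active path, \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = u(a) + \delta \varPi (\overline{\sigma } \mid a)\). By (A1), \(\varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = \delta \varPi (\overline{\sigma } \mid h^0_\alpha )\). The sketch below compares the two for the same hypothetical Example 2 instance, with \(q(j) = 0.5^j\) and \(f(x) = \tanh (x - c)\). The parameter values, the truncation length T, and the tolerance used to call a tie are all our own choices.

```python
import math
from typing import Tuple


def u_active_run(k: int, c: float) -> float:
    """u(a: k) for the hypothetical Example 2 instance q(j) = 0.5**j, f(x) = tanh(x - c)."""
    return math.tanh(sum(0.5 ** j for j in range(k)) - c)


def theorem2_case(n_players: int, delta: float, c: float,
                  T: int = 500, tol: float = 1e-12) -> Tuple[str, float, float, float]:
    """Compare Pi^a(sigma_bar | h^0) with Pi^n(sigma_bar | h^0), truncated at T terms."""
    w = 1.0 / n_players
    # Eq. (5) with sigma = 1, unrolled along the all-"a" path.
    pi_0 = sum(delta ** (k - 1) * w * u_active_run(k, c) for k in range(1, T + 1))
    pi_after_a = sum(delta ** (k - 1) * w * u_active_run(k + 1, c) for k in range(1, T + 1))
    active = u_active_run(1, c) + delta * pi_after_a   # Eq. (6) at h^0
    no_action = delta * pi_0                            # Eq. (7) at h^0; (n) ~ h^0 by (A1)
    if active > no_action + tol:
        case = "(i)"      # every MPE is an active equilibrium
    elif active < no_action - tol:
        case = "(iii)"    # no MPE is an active equilibrium
    else:
        case = "(ii)"     # equality, up to numerical tolerance
    return case, active, no_action, pi_0


for c in (1.1, 1.5):
    case, active, no_action, pi_0 = theorem2_case(n_players=2, delta=0.9, c=c)
    print(f"c={c}: Pi^a={active:.4f}, Pi^n={no_action:.4f}, "
          f"Pi(sigma_bar|h^0)={pi_0:.4f} -> Theorem 2 {case}")
```

With these made-up parameters, \(c = 1.1\) falls under part (i). \(c = 1.5\) gives a positive \(\varPi (\overline{\sigma } \mid h^0_\alpha )\) but falls under part (iii), which is the situation described at the end of the paragraph above.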

Example 3

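Let the number of agents be 2. Suppose that, for any action history \(h^t_\alpha \), \(u(h^t_\alpha ) > 0\) if \(\alpha ^t = \alpha ^{t-1} = a\), and \(u(h^t_\alpha ) = -x < 0\) otherwise. Let \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > 0 \). In this case, we have the following:

$$\begin{aligned} \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = -x + \delta \varPi (\overline{\sigma } \mid a), \end{aligned}\tag{8}$$

$$\begin{aligned} \varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = \delta \varPi (\overline{\sigma } \mid h^0_\alpha ) = \delta \left( -\frac{x}{2} + \delta \varPi (\overline{\sigma } \mid a) \right) . \end{aligned}$$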
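The difference \( \epsilon \) between the above expected payoffs is as follows:

$$\begin{aligned} \epsilon = \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) - \varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = -\left( 1 - \frac{\delta }{2} \right) x + (1-\delta ) \delta \varPi (\overline{\sigma } \mid a). \end{aligned}$$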
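From this, we obtain \(x = \frac{(1-\delta )}{1-\delta /2} \delta \varPi (\overline{\sigma } \mid a) - \epsilon /(1-\delta /2) \). Substituting this formula into (8) yields the following equation:

$$\begin{aligned} \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = \frac{\delta ^2 \varPi (\overline{\sigma } \mid a) + 2\epsilon }{2-\delta }. \end{aligned}$$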
Consider the free-rider strategy of choosing “active” only if doing so yields a positive instant payoff, and choosing “no-action” otherwise. Consider the case in which agent 1 adopts the free-rider strategy \( {\tilde{\sigma }}_1 \) and agent 2 adopts the maximum strategy \( \overline{\sigma }_2 \). That is, for any \( t \in {\mathbb {N}} \) and \( h^t_\alpha \in H^t_\alpha \):

$$\begin{aligned} {\tilde{\sigma }}_1^{t+1}(h^t_\alpha ) = {\left\{ \begin{array}{ll} 1 &{} \text {if } \alpha ^t = a,\\ 0 &{} \text {otherwise,} \end{array}\right. } \end{aligned}$$

and \( \overline{\sigma }_2^{t+1}(h^{t}_\alpha ) =1 \). In the following, we compute the expected payoff to agent 1 conditional on agent 1 being selected in period 1 and choosing “no-action”. To do so, consider the following cases.

Case 1 (agent 2 is selected in period 2): This case occurs with probability 1/2. In this case, agent 2 chooses “active” in period 2. From period 3 on, agent 1 also chooses “active” whenever s/he is selected and obtains the expected payoff \( \varPi (\overline{\sigma } \mid a) \).

Case 2 (agent 1 is selected in period 2 and agent 2 in period 3): This case occurs with probability 1/4. In this case, agent 1 chooses “no-action” in period 2 and agent 2 chooses “active” in period 3. From period 4 on, agent 1 chooses “active” and obtains the expected payoff \( \varPi (\overline{\sigma } \mid a) \).

Proceeding in the same way for all later cases, we obtain the following equation:
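Writing the general term out gives the following sketch, again assuming selection probability 1/2 per agent and period. For \(k \ge 1\), agent 1 is selected in periods 2, …, k (no such period when k = 1) and agent 2 first in period \(k+1\), which has probability \((1/2)^k\); agent 1 then earns \(\varPi (\overline{\sigma } \mid a)\) from period \(k+2\) on. Summing the geometric series:

$$\begin{aligned} \varPi ^n_1( {\tilde{\sigma }}_1, \overline{\sigma }_2 \mid h^0_\alpha ) = \sum _{k=1}^{\infty } \Big (\frac{1}{2}\Big )^k \delta ^{k+1} \varPi (\overline{\sigma } \mid a) = \frac{\delta ^2}{2-\delta } \varPi (\overline{\sigma } \mid a). \end{aligned}$$

Combined with \(\varPi ^a_1( \overline{\sigma }_1, \overline{\sigma }_2 \mid h^0_\alpha ) = -x + \delta \varPi (\overline{\sigma } \mid a)\) and the expression for x above, this gives \(\varPi ^a_1( \overline{\sigma }_1, \overline{\sigma }_2 \mid h^0_\alpha ) - \varPi ^n_1( {\tilde{\sigma }}_1, \overline{\sigma }_2 \mid h^0_\alpha ) = \epsilon /(1-\delta /2)\), so the sign of \(\epsilon \) decides the comparison.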

On the other hand, the expected payoff to agent 2 when agent 2 is selected in period 1 is \( \varPi ^a_2({\tilde{\sigma }}_1, \overline{\sigma }_2 \mid h^0_\alpha ) = \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > 0\). We distinguish three cases, \( \epsilon >0\), \(\epsilon = 0\), and \(\epsilon <0 \), and obtain the following equilibria in each case; we omit the details of the proof.

Case 1 (\( \epsilon > 0 \)): the free-rider strategy is not a best response to the maximum strategy, since \( \varPi ^n_1( {\tilde{\sigma }}_1, \overline{\sigma }_2 \mid h^0_\alpha ) < \varPi ^a_1( \overline{\sigma }_1, \overline{\sigma }_2 \mid h^0_\alpha ) \). As Theorem 2 (i) states, only active equilibria are Markov perfect.

Case 2 (\( \epsilon = 0 \)): both the free-rider strategy and the maximum strategy are best responses to the maximum strategy, since (12) is equal to (10). The profile consisting of the free-rider strategy and the maximum strategy is therefore an MPE, although it is not an active equilibrium, because agent 1 chooses “no-action” when selected in period 1. As Theorem 2 (ii) states, all symmetric MPE are active equilibria.

Case 3 (\( \epsilon < 0 \)): the maximum strategy is not a best response to the maximum strategy, so, as Theorem 2 (iii) states, no MPE is an active equilibrium. On the other hand, the free-rider strategy is a best response to the maximum strategy, and the profile consisting of the free-rider strategy and the maximum strategy is an MPE. Moreover, there exists a mixed-strategy equilibrium, as shown in Example 4.
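The three cases can be checked numerically. The Python sketch below is an illustration rather than part of the original analysis: it assumes that each of the two agents is selected with probability 1/2 per period, treats \(\varPi (\overline{\sigma } \mid a)\) (`pi_bar`) and x as free inputs, and uses hypothetical parameter values. It evaluates \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = -x + \delta \varPi (\overline{\sigma } \mid a)\), \(\varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = \delta [-x/2 + \delta \varPi (\overline{\sigma } \mid a)]\), and agent 1's free-rider payoff as a truncated sum over the cases above.

```python
def free_rider_payoff(delta: float, pi_bar: float, terms: int = 500) -> float:
    """Agent 1's expected payoff from "no-action" in period 1 (free rider vs. sigma_bar).

    Case k (k = 1, 2, ...): agent 2 is first selected in period k + 1, which has
    probability (1/2)**k given that agent 1 was selected in period 1; agent 1 then
    earns pi_bar = Pi(sigma_bar | a) from period k + 2 on, discounted by delta**(k + 1).
    The infinite series is truncated after `terms` cases.
    """
    return sum(0.5 ** k * delta ** (k + 1) * pi_bar for k in range(1, terms))


def example3(delta: float, pi_bar: float, x: float, tol: float = 1e-12) -> dict:
    """Evaluate the comparison behind Cases 1-3 (assumes selection probability 1/2)."""
    pi_a = -x + delta * pi_bar                # "active" in period 1: -x, then Pi(sigma_bar | a)
    pi_n = delta * (-x / 2 + delta * pi_bar)  # "no-action": the game restarts at h^0 one period later
    eps = pi_a - pi_n
    if eps > tol:
        case = "Case 1: free-riding is not a best response"
    elif eps >= -tol:
        case = "Case 2: free-riding and the maximum strategy are both best responses"
    else:
        case = "Case 3: the maximum strategy is not a best response"
    return {
        "eps": eps,
        # Gap between "active" and free-riding for agent 1; analytically eps / (1 - delta / 2).
        "active_minus_free_ride": pi_a - free_rider_payoff(delta, pi_bar),
        "case": case,
    }


for params in [(0.5, 10.0, 1.0), (0.5, 3.0, 1.0), (0.9, 2.0, 1.0)]:
    print(params, example3(*params))
```

With \(\delta = 0.9\), \(\varPi (\overline{\sigma } \mid a) = 2\), and x = 1, for instance, \(\epsilon \approx -0.37\), so free-riding pays (Case 3), while \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = 0.8 > 0\) as the example requires.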

Example 4

Let the number of agents be 2. Suppose that, for any action history \(h^t_\alpha \), \(u(h^t_\alpha ) > 0\) if \(\alpha ^t = \alpha ^{t-1} = a\), and \(u(h^t_\alpha ) = -x < 0\) otherwise. That is, once “active” has been chosen in the previous period, choosing “active” yields a positive instant payoff, and choosing “active” is optimal for every user from then on.

Let \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) < \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\) and \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha )>0\).

In this case, by Theorem 2 (iii), no MPE is an active equilibrium, but there exists an asymmetric MPE, as we saw in Example 3. Moreover, there is a symmetric mixed MPE \( \sigma \), constructed as follows.

Let q be the probability of choosing “active” when “active” has not been chosen before. Formally, for any \(i \in I\), \(\sigma _i^{t+1}(h^0_\alpha ) = \sigma _i^{t+1}(n: t) = q\) for all t. On the other hand, the equilibrium strategy chooses “active” with probability 1 when “active” was chosen in the previous period, i.e., \( \sigma ^{t+1}_i(h^t_\alpha ) = 1 \) if \(\alpha ^t = a\).

We denote the expected payoff of \( \sigma \) by V, i.e., \(V = \varPi (\sigma \mid h^0_\alpha )\). Because strategies are payoff relevant, \(\varPi (\sigma \mid n: t, n) = \varPi (\sigma \mid n: t) = V\). Thus, \(V= q \Big [ -x/2 + \delta \varPi ( \overline{\sigma } \mid a) \Big ] + (1-q)\delta V\), and we therefore obtain the following:
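Rearranging this recursion is a one-line step, valid because \(1-(1-q)\delta > 0\):

$$\begin{aligned} V = \frac{q\big [-x/2 + \delta \varPi (\overline{\sigma } \mid a)\big ]}{1-(1-q)\delta }. \end{aligned}$$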

Assume that \( \sigma \) is a strictly mixed strategy profile, i.e., \( 0< q < 1 \). Then, since a player who mixes must be indifferent between “active” and “no-action”, we have the following:

and

We can easily check that there is no incentive to deviate from \( \sigma \) after history \( h^0_\alpha \) or n : t. Moreover, there is clearly no incentive to deviate from \( \sigma \) after a history with \( \alpha ^t = a \). Hence, there is a symmetric MPE in which players choose “active” with probability q when no one has chosen “active” before, and with probability 1 once some user has chosen “active”.
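The indifference condition pins down q explicitly. Assuming again that each agent is selected with probability 1/2 per period, a mixing player's payoff from “active” is \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = -x + \delta \varPi (\overline{\sigma } \mid a)\), while “no-action” yields \(\delta V\). Equating the two and combining the result with the recursion for V gives \(q = 2(1-\delta )\varPi ^a(\overline{\sigma } \mid h^0_\alpha )/(\delta x)\), which lies strictly between 0 and 1 exactly when \(0 < \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) < \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\). The Python function below is a sketch of that computation; its name and the parameter values are hypothetical.

```python
def symmetric_mixed_mpe(delta: float, pi_bar: float, x: float) -> tuple[float, float]:
    """Return (q, V) for the symmetric mixed MPE of Example 4 (sketch).

    pi_bar stands for Pi(sigma_bar | a). Assumes two agents, each selected
    with probability 1/2 in every period.
    """
    pi_active = -x + delta * pi_bar        # Pi^a(sigma_bar | h^0): payoff of "active" now
    q = 2 * (1 - delta) * pi_active / (delta * x)
    if not 0 < q < 1:
        # 0 < q < 1 holds exactly when 0 < Pi^a(sigma_bar | h^0) < Pi^n(sigma_bar | h^0)
        raise ValueError("no strictly mixed symmetric MPE for these parameters")
    v = pi_active / delta                  # indifference: Pi^a(sigma_bar | h^0) = delta * V
    return q, v


q, v = symmetric_mixed_mpe(delta=0.9, pi_bar=2.0, x=1.0)
# V must also satisfy the recursion V = q[-x/2 + delta*pi_bar] + (1 - q) * delta * V
residual = v - (q * (-0.5 + 0.9 * 2.0) + (1 - q) * 0.9 * v)
print(f"q = {q:.4f}, V = {v:.4f}, residual = {residual:.1e}")
```

At the boundary \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\) (for instance \(\delta = 0.5\), \(\varPi (\overline{\sigma } \mid a) = 3\), x = 1), the formula returns q = 1 and the function raises, in line with the remark that follows.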

Incidentally, if \( \varPi ^a( \overline{\sigma } \mid h^0_\alpha ) = \varPi ^n( \overline{\sigma } \mid h^0_\alpha )\), the above equations give q = 1. Thus, there is no symmetric MPE in strictly mixed strategies, which is consistent with Theorem 2 (ii).

In the next theorem, we show that if the discount factor \(\delta \) increases, the active equilibrium becomes easier to achieve.

Theorem 3

Given \(u(\cdot )\) and w, if all MPE are active equilibria for some \(\delta \), then, for any \(\delta ' (>\delta )\), all MPE are active equilibria.

Proof

We have the following:

which is a monotone increasing function in \(\delta \). \(\square \)

Recall that if the expected payoff of the maximum strategy profile \(\overline{\sigma }\), \(\varPi (\overline{\sigma } \mid h^t_\alpha )\), is nonnegative, then active equilibria are socially desirable (see Theorem 1). The following theorem shows that if \(\varPi ^a(\overline{\sigma } \mid h^t_\alpha ) < 0\), then not even an equilibrium in which every user eventually selects “active” can be achieved. Thus, if \(\varPi (\overline{\sigma } \mid h^t_\alpha ) > 0\) but \(\varPi ^a(\overline{\sigma } \mid h^t_\alpha ) < 0\), the problem of inactivity is more serious, in the sense that the desirable equilibrium never occurs.

Theorem 4

If \(\varPi ^a(\overline{\sigma } \mid h^t_\alpha ) < 0\), in the subgame after history \(h^t=(h^t_x, h^t_\alpha )\), “active” does not appear on any equilibrium path of a symmetric MPE. Thus, all symmetric MPE are no-action equilibria.

Proof

Case 1 (\(\varPi (\overline{\sigma } \mid h^t_\alpha , a) \ge 0\)): Given \(\varPi (\overline{\sigma } \mid h^t_\alpha , a) \ge 0\), by Corollary 1(i), we obtain \(\varPi (\sigma \mid h^t_\alpha , a) \le \varPi (\overline{\sigma } \mid h^t_\alpha , a)\). Given \(\varPi ^a(\sigma \mid h^t_\alpha ) = u(h^t_\alpha ,a) + \delta \varPi (\sigma \mid h^t_\alpha , a)\) for any symmetric strategy profile \(\sigma \), we have \(\varPi ^a(\sigma \mid h^t_\alpha ) \le \varPi ^a(\overline{\sigma } \mid h^t_\alpha ) < 0\). If a player selects “no-action” in every one of her/his turns, then \(\varPi ^n(\sigma \mid h^t_\alpha ) = 0\). Thus, when “active” has not been chosen before, “no-action” is dominant, and “active” is never chosen.

Case 2 (\(\varPi (\overline{\sigma } \mid h^t_\alpha , a) < 0\)): From Corollary 1(ii), \(\varPi (\sigma \mid h^t_\alpha ,a)\le 0\) holds. Given \(\varPi (\overline{\sigma } \mid h^t_\alpha , a) < 0\), we have \(u(h^t_\alpha ,a) < 0\).

Thus, \(\varPi ^a(\sigma \mid h^t_\alpha ) = u(h^t_\alpha ,a) + \delta \varPi (\sigma \mid h^t_\alpha , a)< 0\). \(\square \)

Remark 1

There might also be an asymmetric equilibrium (strategy profile) in which player i selects “active” in some period and then does not choose it for a while, while another player \(j \ne i\) plays “active.”

While Theorem 2 (i) and (ii) gave conditions under which only active equilibria are (symmetric) MPE, Theorem 4 gives a condition under which only no-action equilibria are symmetric MPE. Example 4 shows an equilibrium that arises when neither condition applies.

5 Incentive system

In this section, we design an incentive system that enables the number of active users to increase steadily even if there is no active equilibrium,Footnote 14 i.e., \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) < \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\). We assume that ISNE providers wish to achieve a situation in which almost all users actively use the ISNE in the future. Accordingly, we assume that the providers are sufficiently patient, that is, their discount factor \(\delta '\) is sufficiently close to 1. We further assume that, to attract more users, the providers plan to give incentive rewards to users who actively communicate through the ISNE when the ISNE starts up.

In particular, given history \(h^t = (h^t_x, h^t_\alpha )\), we assign incentive reward \(\eta (h^t_\alpha ) \ge 0\) to the selected player \(x_t\) only when s/he selects “active” at time t.

That is, in period t, the player gains \(u(h^t_\alpha ) + \eta (h^{t}_\alpha )\) (or 0) if s/he selects “active” (or “no-action,” respectively). Let us denote an instant payoff including the incentive reward by \(u_W(h^\tau _\alpha )=u(h^\tau _\alpha ) + \eta (h^\tau _\alpha )\). Denoting the total payoff that i gains by period t by \(P_{Wi}\), we have the following:

From Lemma 3, if \(\varPi ^a(\overline{\sigma } \mid a:t'-1) > \varPi ^n(\overline{\sigma } \mid a:t'-1)\) for any \(t'\ge t\), then after action history \((a:t-1)\) every player selects “active” in her/his turn without any incentive reward. Accordingly, we denote by \(t^*\) the minimum t satisfying this condition.Footnote 15 More formally:
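Stated as a formula, the definition in the preceding sentence reads as follows; this is a restatement, not an additional assumption:

$$\begin{aligned} t^* = \min \Big \{ t \in {\mathbb {N}} : \varPi ^a(\overline{\sigma } \mid a:t'-1) > \varPi ^n(\overline{\sigma } \mid a:t'-1) \text { for all } t' \ge t \Big \}. \end{aligned}$$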

Using \(t^*\) as defined above, we set incentive \(\eta (h^t_\alpha )\) as follows:

(I1) \(\eta (a:t)=0\) if \(t \ge t^*\).

(I2) \(\eta (a:t) = \max \Big [ \sup _{\sigma _i \in \varDelta _i} \varPi ^n(\sigma _i, \overline{\sigma }_{-i} \mid a:t-1) - \varPi ^a(\overline{\sigma } \mid a:t-1) - w \sum _{\tau =t+1}^{t^*-1} \eta (a:\tau ) \delta ^{\tau -t} +\epsilon , 0\Big ] \) if \(t < t^*\), where \(\epsilon > 0\).

(I3) For any other action history \(h^t_\alpha \), \(\eta (h^t_\alpha )=0\); that is, for any action history including at least one “no-action,” the ISNE provider does not pay any incentives.
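Because \(\eta (a:t)\) in (I2) depends on the rewards \(\eta (a:\tau )\) for later \(\tau \), the rewards can be computed backward from \(t^*-1\), where the sum is empty. The Python sketch below illustrates this under stated assumptions: the payoff differences that the model would supply are passed in as hypothetical lists (`diffs`, `gaps`), and \(t^*\) is computed on the assumption that the difference stays positive beyond the end of the list. The function names are ours.

```python
def find_t_star(diffs: list[float]) -> int:
    """Smallest t (1-indexed) with diffs[t' - 1] > 0 for every t' >= t.

    diffs[t - 1] is a hypothetical input standing for
    Pi^a(sigma_bar | a:t-1) - Pi^n(sigma_bar | a:t-1); the difference is
    assumed to stay positive beyond the end of the list.
    """
    t = len(diffs) + 1
    while t > 1 and diffs[t - 2] > 0:
        t -= 1
    return t


def incentive_rewards(gaps: list[float], t_star: int, w: float,
                      delta: float, eps: float) -> dict[int, float]:
    """Rewards eta(a:t) for t = 1, ..., t_star - 1 following rule (I2).

    gaps[t - 1] stands for sup_{sigma_i} Pi^n(sigma_i, sigma_bar_{-i} | a:t-1)
    - Pi^a(sigma_bar | a:t-1). Rule (I1) makes eta(a:t) = 0 for t >= t_star,
    so the recursion runs backward from t_star - 1, where the sum is empty.
    """
    eta: dict[int, float] = {}
    for t in range(t_star - 1, 0, -1):
        # Discounted expected value of the rewards promised for later "actives"
        future = w * sum(eta[tau] * delta ** (tau - t) for tau in range(t + 1, t_star))
        eta[t] = max(gaps[t - 1] - future + eps, 0.0)
    return eta


print(find_t_star([-1.0, 0.5, -0.2, 0.3, 0.4]))
print(incentive_rewards([0.5, 0.2, 0.1], t_star=4, w=0.5, delta=0.9, eps=0.01))
```

In the first call, the difference first becomes positive at t = 2, but it turns negative again at t = 3, so \(t^* = 4\); this is the situation described in Footnote 15.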

Theorem 5

Given the incentive system defined above, the series of “actives” is the unique equilibrium outcome.

Proof

Given \(h^{t^*-1}=(h^{t^*-1}_x, (a:t^*-1))\), by selecting “active”, a user can earn expected payoff \(\varPi ^a( \overline{\sigma } \mid a:t^*-1) + \sup _{\sigma _i \in \varDelta _i} \varPi ^n(\sigma _i, \overline{\sigma }_{-i} \mid a:t^*-1) - \varPi ^a(\overline{\sigma } \mid a:t^*-1) + \epsilon > \sup _{\sigma _i \in \varDelta _i} \varPi ^n(\sigma _i, \overline{\sigma }_{-i} \mid a:t^*-1) \), because after \(t^*\) all players select “active” in their turn. Thus, the selected player \(x_t\) is better off selecting “active”, and the theorem follows by induction. \(\square \)

6 Participation costs

When a player starts to use the ISNE, s/he has to pay a participation cost. This cost is not monetary but a time cost: s/he has to learn how to use the ISNE and input personal data for registration. In this section, we take this participation cost \(C (>0)\) into account. In the model defined in the previous sections, we now assume that the instant payoff to a player who selects “active” for the first time is \(u(\cdot ) - C\). We refer to a player who has selected “active” and paid the participation cost as a member of the ISNE, and to a player who has not selected “active” before as a nonmember. Until the preceding section, we assumed that the strategy of a player depends only on the action history, as the player history has no effect on the payoff. In this section, the payoff to each player depends on whether the player is a member. Each player is, therefore, concerned not only with whether s/he is a member, but also with whether the other players are members when s/he makes a decision. In practice, nonmembers cannot know detailed information about ISNE members, for example, exactly who is a member or when s/he joined the ISNE. However, nonmembers can know the number of ISNE members from news or statistics provided on the Internet, and some ISNE also report the number of members on their own sites. For instance, lists of the number of Facebook users by country are available on the Internet, and Facebook reports the number of active users on its own site (under newsroom). The number of members is thus useful information for players deciding whether to join the ISNE and become a new member. We therefore make the following assumption.Footnote 16

Assumption 2

Each player knows the number of members and whether or not s/he is a member before s/he selects her/his action.

In this section, the strategy of a player depends on the action history, the number of members, and whether s/he is a member. Purely for notational convenience, we assume that the strategy depends on the whole history \(h^t\), which is the pair of the action history \(h^t_\alpha \) and the player history \(h^t_x\). However, in fact, the number of members only matters for nonmembers, and we do not need to assume that players know the entire player history. Our proof of the following results uses only the number of members. Formally, suppose that the behavioral strategy \(\sigma ^{t+1}_i\) of player i at time \(t+1\) is a function \(\sigma ^{t+1}_i: h^t \mapsto p \in [0,1]\) that assigns to each history \(h^t\) the probability of choosing “active” at time \(t+1\). In this section, we continue to consider the payoff-relevant strategy, and therefore, \(\sigma _i\) satisfies \(\sigma _i((j,h^t_x),(n,h^t_\alpha )) = \sigma _i(h^t_x,h^t_\alpha )\) for all \(h^t = (h^t_x, h^t_\alpha ) \in H\) and all \(j \in I\).

We denote the expected payoffs after action history \(h_\alpha ^t\), \(\varPi (\sigma \mid h_\alpha ^t), \varPi ^a(\sigma \mid h_\alpha ^t)\), and \(\varPi ^n(\sigma \mid h_\alpha ^t)\), by \(\varPi _C(\sigma \mid h^t), \varPi _C^a(\sigma \mid h^t)\), and \(\varPi _C^n(\sigma \mid h^t)\), respectively. For example, given strategy profile \(\sigma \), \(\varPi _C(\sigma \mid h^t)\) denotes the expected payoff to player i after the whole history \(h^t\).

Now, let us consider the symmetric strategy profile \(\overline{\sigma }\). Given history \(h^t\), \(\varPi _C^a(\overline{\sigma } \mid h^t)\) denotes the expected payoff to the selected player i if s/he selects “active” in period \(t+1\) and has already chosen “active” before. Otherwise (i.e., when s/he selects “active” for the first time in period \(t+1\)), the expected payoff is \(\varPi _C^a(\overline{\sigma } \mid h^t) - C\), because player i must pay the participation cost at time \(t+1\). On the other hand, if player i selects “no-action” in period \(t+1\), the expected payoff to the player is \(\varPi _C^n(\overline{\sigma } \mid h^t)\) if s/he has already chosen “active” before period \(t+1\), and \(\varPi _C^n(\overline{\sigma } \mid h^t) - \sum _{\tau =1}^\infty (1-w)^{\tau -1} \delta ^{\tau } w C = \varPi _C^n(\overline{\sigma } \mid h^t) - \delta w C/( 1- (1-w)\delta )\) otherwise (i.e., when s/he has not chosen “active” before period \(t+1\)). We have a theorem similar to Theorem 2.
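The closed form of the expected cost of waiting can be checked numerically. In the Python sketch below (the function name and parameter values are hypothetical), a nonmember who chooses “no-action” is assumed to join in the next period in which s/he is selected, which happens with probability w in each period, as in the series above.

```python
def deferred_cost(w: float, delta: float, c: float, terms: int = 5000) -> tuple[float, float]:
    """Expected discounted participation cost of postponing membership (sketch).

    A nonmember who picks "no-action" now is assumed to join the next time s/he
    is selected; selection happens with probability w in each period, so the
    first selection occurs tau periods later with probability (1 - w)**(tau - 1) * w.
    Returns the truncated series and the closed form delta*w*c / (1 - (1 - w)*delta).
    """
    series = sum((1 - w) ** (tau - 1) * w * delta ** tau * c for tau in range(1, terms))
    closed_form = delta * w * c / (1 - (1 - w) * delta)
    return series, closed_form


series, closed_form = deferred_cost(w=0.1, delta=0.95, c=2.0)
print(series, closed_form)
```

With w = 0.1, \(\delta = 0.95\), and C = 2, both values are about 1.31, and C minus this amount equals \((1-\delta )C/(1-(1-w)\delta ) \approx 0.69\), the term that appears in Theorem 6.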

Theorem 6

(i) Every MPE is an active equilibrium if and only if the following inequality holds:

$$\begin{aligned} \varPi _C^a(\overline{\sigma } \mid h^0) - \frac{(1-\delta )C}{1-(1-w)\delta } > \varPi _C^n(\overline{\sigma } \mid h^0). \end{aligned}$$

(ii) All symmetric MPE are active equilibria if the following equality holds:

$$\begin{aligned} \varPi _C^a(\overline{\sigma } \mid h^0) - \frac{(1-\delta )C}{1-(1-w)\delta } = \varPi _C^n(\overline{\sigma } \mid h^0). \end{aligned}$$

(iii) There is no MPE that is an active equilibrium if the following inequality holds:

$$\begin{aligned} \varPi _C^a(\overline{\sigma } \mid h^0) - \frac{(1-\delta )C}{1-(1-w)\delta } < \varPi _C^n(\overline{\sigma } \mid h^0). \end{aligned}$$

Proof

The proof follows the same logic as the proof of Theorem 2. \(\square \)
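The threshold term can be traced back to the two payoffs described before the theorem. As a sketch at \(h^0\), where no player is a member yet: a selected nonmember who joins now pays C, whereas one who waits pays \(\delta w C/(1-(1-w)\delta )\) in expectation. Hence

$$\begin{aligned} \varPi _C^a(\overline{\sigma } \mid h^0) - C > \varPi _C^n(\overline{\sigma } \mid h^0) - \frac{\delta w C}{1-(1-w)\delta } \iff \varPi _C^a(\overline{\sigma } \mid h^0) - \frac{(1-\delta )C}{1-(1-w)\delta } > \varPi _C^n(\overline{\sigma } \mid h^0), \end{aligned}$$

because \(C - \delta w C/(1-(1-w)\delta ) = C\,[1-(1-w)\delta - \delta w]/(1-(1-w)\delta ) = (1-\delta )C/(1-(1-w)\delta )\). This is the first-period comparison of part (i), the analogue of the comparison in Theorem 2.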

7 Concluding remarks

In this study, we modeled an ISNE as a dynamic game in which the players are ISNE users, including potential users, and studied the conditions under which every user actively communicates through the ISNE. In particular, we focused on active equilibria, in which each user always selects “active” in her/his turn, and derived the necessary and sufficient condition under which every MPE is an active equilibrium. In our main theorem, we also obtained the condition under which every symmetric MPE is an active equilibrium. The main theorem states that we can determine whether every MPE is an active equilibrium by checking only the expected payoff of the maximum strategy profile \(\overline{\sigma }\). Moreover, we showed in Theorem 4 that, if \(\varPi ^a(\overline{\sigma } \mid h^t_\alpha ) < 0\), “active” does not appear on the equilibrium path of any symmetric MPE. Section 5 proposed an incentive mechanism that achieves an active equilibrium even if the assumption of Theorem 4 holds. In Sect. 6, we took into account the participation cost and assumed that a player pays a constant cost C when s/he first selects “active.” We then showed that a result similar to the main theorem holds when all players know the current number of ISNE members.

In terms of future research, we intend to extend the binary model to consider the level of participation in a community in an ISNE. In addition, as we mentioned in the literature review, most dynamic game models, including our own, assume a complete network in which all (potential) users are connected to each other. There are theoretical studies on games with network structures, called network games (e.g., Ballester et al. 2006; Bramoullé and Kranton 2007; Galeotti et al. 2010; Belhaj et al. 2014; Bramoullé et al. 2014; Allouch 2015; Zhang and Du 2017). These studies derive the equilibria of network games and analyze the relationship between the features of equilibria and network structures. Moreover, Candogan et al. (2012), Bloch and Quérou (2013), Cohen and Harsha (2013), and Makhdoumi et al. (2017) investigated the relationship between network structures and the optimal price of a good, as well as between network structures and equilibrium. In the first three studies, the relationship between price and centrality (e.g., Bonacich centrality) is discussed using a one-shot network game. The last one deals with a multi-period (specifically, finite-period) model and studies the optimal price in each period for specific network structures. However, all the aforementioned studies investigate the diffusion process of goods with network externalities, in which, unlike in our model, each customer’s action is irreversible. It would be interesting to introduce network structures into a dynamic game model with reversible actions.

Notes

For instance, Rakuten auction, an online auction site, and Mobli, a media-sharing service, closed in 2016. So.cl, a social networking service, closed in March 2017.

Ochs and Park (2010) analyzed the situation in which players can reverse their decisions at no cost in an incomplete information model.

Aoyagi (2013) also examined incomplete information models of a monopoly good with a network externality. Although his model is not a dynamic game, Aoyagi (2013) proposed a price-posting scheme that assigns each adopter a monetary transfer according to the number of adopters, and derived the conditions under which the revenue-maximizing scheme maximizes the network size subject to the participation constraints.

In this literature, it is well known that the results depend on the network structure, whereas most studies of dynamic games assume that all potential users are connected to each other.

This type of network externality is often known as an indirect network externality (Katz and Shapiro 1985).

Although our model is a binary one, each player’s set of alternatives is much wider than in the traditional binary models, because we introduce reversible behavior, and players can make a decision several times.

In fact, suppose that \(q(t - \tau ) = 1\) if \(\tau \le D\) and \(q(t - \tau ) = 0\) otherwise, and that \(f(x) = x\). Then, Examples 1 and 2 are identical.

A payoff-relevant strategy is time homogeneous; that is, for any t and \(t'\), \(\sigma ^t_i(h^t_\alpha ) = \sigma ^{t'}_i(h^t_\alpha )\). Thus, naturally, \(\sigma ^2_i(a) = \sigma ^{k+2}_i(n: k, a)\).

We call \(\overline{\sigma }_i\) the maximum strategy because its probability of choosing “active” over the whole time is the maximum among all strategies. Similarly, we can call \(\underline{\sigma }_i\), such that \(\underline{\sigma }^{t+1}_i(h^t_\alpha ) = 0\) for every \(t < \infty \) and \(h^t_\alpha \in H^t_\alpha \), the minimum strategy.

\(\varPi _i( \sigma \mid h^t_\alpha )\) is the expected payoff to i in the subgame starting at \(h^t_\alpha \).

It may be possible that the equilibrium is observed in reality as if consumers have perfect rationality, even though they are not perfectly rational and use heuristic decision-making (cf. “as if” hypotheses of Friedman 1953).

The precise proof is complex, because our model considers infinite history.

In this section, we use the original definitions of \(\varPi (\sigma \mid h^t), \varPi ^a(\sigma \mid h^t)\) and \(\varPi ^n(\sigma \mid h^t)\), again.

Given that \(\varPi ^a(\overline{\sigma } \mid a:t-1) - \varPi ^n(\overline{\sigma } \mid a:t-1)\) is not necessarily monotonically increasing in t, \(t^*\) may differ from \(\min \{ t: \varPi ^a(\overline{\sigma } \mid a:t-1) > \varPi ^n(\overline{\sigma } \mid a:t-1) \}\).

Instead of this assumption, we can use the assumption that there exists an action history, such that \(u(h^t_\alpha )- C > 0\) to obtain the same theorem.

\(\sigma _i(\cdot ) < 1\) occurs at finite times.

To be precise, we generally suppose that \(\varDelta (t)\) may include nonpayoff-relevant strategies. That is, \(\sigma \in \varDelta (t)\) allows \(\sigma ^{\tau +1}(n: \tau ) \ne \sigma ^1(h^0_\alpha )\) for some \(\tau \ge t\), while it satisfies \(\sigma ^{\tau +1}(n: \tau ) = \sigma ^1(h^0_\alpha )\) for all \(\tau < t\).

References

Allouch, N. (2015). On the private provision of public goods on networks. Journal of Economic Theory, 157, 527–552.

Aoyagi, M. (2013). Coordinating adoption decisions under externalities and incomplete information. Games and Economic Behavior, 77(1), 77–89.

Arthur, W. B. (1987). Self-reinforcing mechanisms in economics. The Economy as an Evolving Complex System, 1987, 9–31.

Arthur, W. B. (1989). Competing technologies, increasing returns, and lock-in by historical events. The Economic Journal, 99(394), 116–131.

Arthur, W. B., Ermoliev, Y. M., & Kaniovski, Y. M. (1987). Path-dependent processes and the emergence of macro-structure. European Journal of Operational Research, 30(3), 294–303.

Ballester, C., Calvó-Armengol, A., & Zenou, Y. (2006). Who’s who in networks. Wanted: The key player. Econometrica, 74(5), 1403–1417.

Belhaj, M., Bramoullé, Y., & Deroïan, F. (2014). Network games under strategic complementarities. Games and Economic Behavior, 88, 310–319.

Bloch, F., & Quérou, N. (2013). Pricing in social networks. Games and Economic Behavior, 80, 243–261.

Bramoullé, Y., & Kranton, R. (2007). Public goods in networks. Journal of Economic Theory, 135(1), 478–494.

Bramoullé, Y., Kranton, R., & D’Amours, M. (2014). Strategic interaction and networks. American Economic Review, 104(3), 898–930.

Candogan, O., Bimpikis, K., & Ozdaglar, A. (2012). Optimal pricing in networks with externalities. Operations Research, 60(4), 883–905.

Cohen, M., & Harsha, P. (2013). Designing price incentives in a network with social interactions. https://doi.org/10.2139/ssrn.2376668

David, P. A. (1985). Clio and the economics of QWERTY. American Economic Review, 75(2), 332–337.

Delre, S. A., Jager, W., & Janssen, M. A. (2007). Diffusion dynamics in small-world networks with heterogeneous consumers. Computational and Mathematical Organization Theory, 13(2), 185–202.

Dosi, G., Ermoliev, Y., & Kaniovski, Y. (1994). Generalized urn schemes and technological dynamics. Journal of Mathematical Economics, 23(1), 1–19.

Dou, W., & Ghose, S. (2006). A dynamic nonlinear model of online retail competition using Cusp Catastrophe Theory. Journal of Business Research, 59(7), 838–848.

Farrell, J., & Saloner, G. (1985). Standardization, compatibility and innovation. The RAND Journal of Economics, 16(1), 70–83.

Friedman, M. (1953). Essays in positive economics. Chicago: University of Chicago Press.

Fudenberg, D., & Tirole, J. (1991). Game theory. Cambridge: MIT.

Gale, D. (1995). Dynamic coordination games. Economic Theory, 5(1), 1–18.

Galeotti, A., Goyal, S., Jackson, M. O., Vega-Redondo, F., & Yariv, L. (2010). Network games. The Review of Economic Studies, 77(1), 218–244.

Heinrich, T. (2016). A discontinuity model of technological change: Catastrophe theory and network structure. Computational Economics, 51(3), 407–425.

Homma, K., Yano, K., & Funabashi, M. (2010). A diffusion model for two-sided service systems (in Japanese). IEEJ Transactions EIS, 130(2), 324–331.

Iba, T., Takenaka, H., & Takefuji, Y. (2001). Reappearance of video cassette format competition using artificial market simulation (in Japanese). Transactions of Information Processing Society of Japan, 42(SIG 14 (TOM 5)), 73–89.

Katz, M. L., & Shapiro, C. (1985). Network externalities, competition, and compatibility. American Economic Review, 75(3), 424–440.

Makhdoumi, A., Malekian, A., & Ozdaglar, A. E. (2017). Strategic dynamic pricing with network effects. Rotman School of Management Working Paper No. 2980109. https://doi.org/10.2139/ssrn.2980109

Maskin, E., & Tirole, J. (2001). Markov perfect equilibrium: I. Observable actions. Journal of Economic Theory, 100(2), 191–219.

Ochs, J., & Park, I.-U. (2010). Overcoming the coordination problem: Dynamic formation of networks. Journal of Economic Theory, 145, 689–720.

Rohlfs, J. H. (1974). A theory of interdependent demand for a communications service. Bell Journal of Economic and Management Science, 5(1), 16–37.

Rohlfs, J. H. (2003). Bandwagon effect in high-technology industries. Cambridge: MIT.

Shichijo, T., & Nakayama, Y. (2009). A two-step subsidy scheme to overcome network externalities in a dynamic game. The B.E. Journal of Theoretical Economics, 9(1), 4.

Uchida, M., & Shirayama, S. (2008). Influence of a network structure on the network effect in the communication service market. Physica A: Statistical Mechanics and Its Applications, 387(21), 5303–5310.

Zhang, Y., & Du, X. (2017). Network effects on strategic interactions: A laboratory approach. Journal of Economic Behavior and Organization, 143, 133–146.


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work is supported by the Japan Society for the Promotion of Science (JSPS KAKENHI Grant Numbers 26380242 and 16H03596).

Appendix

This appendix contains lemmas and proofs of theorems.

1.1 Appendix A: Lemmas for Section 3

First, we derive the following properties from these basic assumptions (A1)–(A6).

Lemma 1

For the instant payoff function \(u(\cdot )\), properties (L1)–(L4) hold:

(L1) For any finite period \(t < \infty \) and \(h^t_\alpha \in H^t_\alpha \):

$$\begin{aligned} u(h^t_\alpha ) = u(n: k, h^t_\alpha ) \quad \text {for any } 0 \le k < +\infty . \end{aligned}$$

(L2) For any finite periods \(t , \tau < \infty \):

$$\begin{aligned} u(h^t_\alpha , {\tilde{h}}^\tau _\alpha ) \ge u({\tilde{h}}^\tau _\alpha ) \quad \text {for all } h^t_\alpha \in H^t_\alpha \text { and } {\tilde{h}}^\tau _\alpha \in H^\tau _\alpha . \end{aligned}$$

(L3) u(a : k) is nondecreasing in k.

(L4) There exists \(m^*\) such that \(u(a: m^* - 1) \le 0 < u(a: m^*)\).

Proof

We prove each item using the corresponding assumption.

(L1) From (A1), it immediately follows that \(u(h^t_\alpha ) = u(n, h^t_\alpha )=u(n: 2, h^t_\alpha )=\dots =u(n: k, h^t_\alpha )\).

(L2) By (A2), replacing the action in any period by “no-action” does not increase the instant payoff, so \(u(h^t_\alpha , {\tilde{h}}^\tau _\alpha ) \ge u(n: t, {\tilde{h}}^\tau _\alpha )\). Since \(u(n: t, {\tilde{h}}^\tau _\alpha )=u({\tilde{h}}^\tau _\alpha )\) by (L1), \(u(h^t_\alpha , {\tilde{h}}^\tau _\alpha ) \ge u({\tilde{h}}^\tau _\alpha )\).

(L3) From (L2), we have \(u(a: k+1) = u(a, a: k) \ge u(a: k)\) for all k.

(L4) By (A5), there exists \(h^t_\alpha \) such that \(u(h^t_\alpha )>0\). By (A2), replacing the action in every period by “active” does not decrease the instant payoff, so \(u(a: t) \ge u(h^t_\alpha )\). Thus, \(u(a: t) > 0\). Moreover, by assumption (A4) and (L3), there exists \(m^*\) such that \(u(a: m^* - 1) \le 0 < u(a: m^*)\). \(\square \)

Lemma 2

If the instant payoff function \(u(\cdot )\) satisfies assumptions (A1)–(A6), the following properties of expected payoff (L5)–(L7) hold:

(L5) Expected payoff function \(\varPi _i\) has a positive externality. Likewise, \(\varPi _i^a\) and \(\varPi _i^n\) have a positive externality.

(L6) For any finite \(h^t_\alpha \in H^t_\alpha \), \(\varPi ^a( \overline{\sigma } \mid h^t_\alpha ) = (1-w) u(h^t_\alpha , a) + \varPi ( \overline{\sigma } \mid h^t_\alpha ).\)

(L7) \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > \varPi ^n( \overline{\sigma } \mid h^0_\alpha )\) implies that \(\varPi ( \overline{\sigma } \mid h^0_\alpha )> \varPi ^n( \overline{\sigma } \mid h^0_\alpha ) > 0\).

Proof

(L5) follows straightforwardly from (A2) and the definition of \(\varPi _i\). Clearly, (L6) holds by the definitions of \(\varPi \) and \(\varPi ^a\).

Let us show (L7). From property (L6), we have:
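As a sketch, combining (L6) at \(h^0_\alpha \) with \(\varPi ^n( \overline{\sigma } \mid h^0_\alpha ) = \delta \varPi ( \overline{\sigma } \mid h^0_\alpha )\), which is used again in the next paragraph, gives:

$$\begin{aligned} \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) - \varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = (1-w)\, u(a) + (1-\delta )\, \varPi (\overline{\sigma } \mid h^0_\alpha ). \end{aligned}$$

Since \(u(a) < 0\) by (A4) and \(w < 1\), the right-hand side is negative whenever \(\varPi (\overline{\sigma } \mid h^0_\alpha ) \le 0\).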

If \(\varPi (\overline{\sigma } \mid h^0_\alpha ) \le 0\), by (A4), \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) - \varPi ^n( \overline{\sigma } \mid h^0_\alpha ) < 0\). Thus, if \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) - \varPi ^n( \overline{\sigma } \mid h^0_\alpha ) > 0\), we have \(\varPi ( \overline{\sigma } \mid h^0_\alpha ) > 0\). Moreover, as \(\varPi ^n( \overline{\sigma } \mid h^0_\alpha ) = \delta \varPi ( \overline{\sigma } \mid h^0_\alpha )\), \(\varPi ( \overline{\sigma } \mid h^0_\alpha )> \varPi ^n( \overline{\sigma } \mid h^0_\alpha ) > 0\). \(\square \)

1.2 Appendix B: Proofs and Lemmas for Section 4

Proof of Theorem 1

Throughout this proof, let \(h=(h_x, h_\alpha )\) be an infinite history that occurs when every player plays according to \(\sigma \). We prove the theorem in three steps; Steps 1 and 2 show (i).

Step 1: First, we show that the total utility of all players \(\sum _i P_i(h)\) satisfies \(w \sum _i P_i(h) \le \varPi (\overline{\sigma } \mid h^0_\alpha )\) for any \( h \in H \). In the following, we fix an infinite history \(h=(h_x, h_\alpha )\). For \( h_\alpha \), consider the set of periods in which “active” is selected, \( L=\{ \tau \mid \alpha ^{\tau } = a \} \). Let us denote the smallest element of L by \( \tau _1 \) and the \( \ell \)th smallest element of L by \( \tau _\ell \).

The finite action history up to period t taken from \(h_\alpha \) is denoted by \(h^t_\alpha =(\alpha ^1,\alpha ^2,\dots , \alpha ^t)\). If \( u(h^{\tau _\ell }_\alpha ) \le 0 \) for all \( \tau _{\ell } \in L \), then, obviously, we have \(w \sum _i P_i(h) \le \varPi (\overline{\sigma } \mid h^0_\alpha )\). Note also that \( u(h^{\tau _1}_\alpha ) < 0 \) by (A4). Thus, we assume that there exists \( \tau _{\ell ^*} \) such that \( u(h^{\tau _{\ell ^*}}_\alpha ) > 0\) and \( u(h^{\tau _{\ell }}_\alpha ) \le 0 \) for any \( \ell < \ell ^* \).

Using the notations, we have:
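In each period, only the selected player receives an instant payoff, and only when s/he selects “active”. Assuming, as in the comparison below, that the payoff of period \(\tau \) is discounted by \(\delta ^{\tau -1}\), the total payoff along h can be sketched as:

$$\begin{aligned} \sum _i P_i(h) = \sum _{\ell =1}^{\ell ^*-1} \delta ^{\tau _\ell - 1} u(h^{\tau _\ell }_\alpha ) + \sum _{\ell \ge \ell ^*} \delta ^{\tau _\ell - 1} u(h^{\tau _\ell }_\alpha ). \end{aligned}$$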

Now, we consider an action history \( h'_\alpha \) in which “no-action” is selected up to period \( \tau _{\ell ^*} -\ell ^* \) and “active” is selected after that. For example, if \( h_\alpha = (n,a,n,a,a,n,a,\dots ) \) and \( \tau _{\ell ^*} = 4 \), then \( h'_\alpha = (n,n,a,a,a,a,a, \dots ) \). The sum of the total payoffs under \( h'_\alpha \) is as follows:
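The construction of \(h'_\alpha \) is mechanical. The Python sketch below represents finite prefixes of action histories as strings of 'a' and 'n'; the function names are hypothetical. It builds \(h'_\alpha \) from \(h_\alpha \) and \(\tau _{\ell ^*}\), and checks the position bounds that the payoff comparison relies on: \(\tau _k \le \tau _{\ell ^*}-\ell ^*+k\) for \(k<\ell ^*\), and \(\tau _k \ge \tau _{\ell ^*}-\ell ^*+k\) for \(k \ge \ell ^*\).

```python
def active_periods(h: str) -> list[int]:
    """1-indexed periods in which "active" ('a') is selected."""
    return [t for t, action in enumerate(h, start=1) if action == "a"]


def shifted_history(h: str, tau_star: int) -> str:
    """Build h' from a finite prefix h of an action history (sketch).

    tau_star must be a period in which h selects "active"; ell* is its rank
    among the "active" periods. h' selects "no-action" up to period
    tau_star - ell* and "active" afterwards (same length as h).
    """
    taus = active_periods(h)
    ell_star = taus.index(tau_star) + 1     # raises ValueError if h[tau_star - 1] != 'a'
    shift = tau_star - ell_star
    return "n" * shift + "a" * (len(h) - shift)


def position_bounds_hold(h: str, tau_star: int) -> bool:
    """Check tau_k <= tau_star - ell* + k for k < ell*, and >= for k >= ell*."""
    taus = active_periods(h)
    ell_star = taus.index(tau_star) + 1
    shift = tau_star - ell_star
    return all((tau <= shift + k) if k < ell_star else (tau >= shift + k)
               for k, tau in enumerate(taus, start=1))


print(shifted_history("nanaana", 4), position_bounds_hold("nanaana", 4))
```

For the example in the text, \(h_\alpha = (n,a,n,a,a,n,a)\) and \(\tau _{\ell ^*} = 4\) give \(\ell ^* = 2\) and \(h'_\alpha = (n,n,a,a,a,a,a)\); in \(h'_\alpha \), the k-th “active” falls in period \(\tau _{\ell ^*} - \ell ^* + k\).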

We now show that \( \sum _i P_i(h_\alpha ) \le \sum _i P_i(h'_\alpha ) \). For \( k < \ell ^* \), we have \( u(h^{\tau _k}_\alpha ) \le 0 \), \( \tau _k \le \tau _{\ell ^*} - \ell ^* + k \), and \( u(h^{\tau _k}_\alpha ) \le u(a:k) \) by (A3). Thus, \( u(h^{\tau _k}_\alpha ) \delta ^{\tau _k - 1} \le \delta ^{\tau _{\ell ^*} - \ell ^*} u(a:k) \delta ^{k-1} \). For \( k \ge \ell ^* \), we have \( u(h^{\tau _k}_\alpha ) \le u(a:k)\), \( u(a:k) >0 \), and \( \tau _k \ge \tau _{\ell ^*} - \ell ^* + k \). Thus, again, \( u(h^{\tau _k}_\alpha ) \delta ^{\tau _k - 1} \le \delta ^{\tau _{\ell ^*} - \ell ^*} u(a:k) \delta ^{k-1} \). Summing these term-wise bounds over k gives \( \sum _i P_i(h_\alpha ) \le \sum _i P_i(h'_\alpha ) \).
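The rearrangement behind Step 1 can be checked mechanically. The Python sketch below is illustrative only: it encodes a finite prefix of \(h_\alpha \) as a string of `a`/`n`, and the helper names `active_periods`, `shifted_history`, and `index_bounds_hold` are hypothetical. It builds \(h'_\alpha \), reproduces the example above, and checks on random prefixes that the kth “active” period of \(h'_\alpha \) is \(\tau _{\ell ^*} - \ell ^* + k\), so that \(\tau _k \le \tau _{\ell ^*} - \ell ^* + k\) for \(k < \ell ^*\) and \(\tau _k \ge \tau _{\ell ^*} - \ell ^* + k\) for \(k \ge \ell ^*\). These are purely combinatorial facts about a strictly increasing integer sequence; the payoff comparison additionally uses \(0<\delta <1\), the signs of u, and (A3), which the sketch does not model.

```python
import random


def active_periods(h_alpha):
    """Return the set L as a sorted list of 1-based periods in which 'a' is chosen."""
    return [t for t, action in enumerate(h_alpha, start=1) if action == "a"]


def shifted_history(h_alpha, ell_star):
    """Finite prefix of h'_alpha: 'n' up to period tau_{ell*} - ell*, then 'a' (same length)."""
    tau_star = active_periods(h_alpha)[ell_star - 1]  # tau_{ell*}; ell* is 1-based
    cutoff = tau_star - ell_star                       # number of 'n' periods before tau_{ell*}
    return "n" * cutoff + "a" * (len(h_alpha) - cutoff)


def index_bounds_hold(h_alpha, ell_star):
    """Compare tau_k with the period of the k-th 'a' in h'_alpha, as in Step 1."""
    taus = active_periods(h_alpha)
    taus_prime = active_periods(shifted_history(h_alpha, ell_star))
    for k, tau_k in enumerate(taus, start=1):
        target = taus_prime[k - 1]  # equals tau_{ell*} - ell* + k
        if k < ell_star and tau_k > target:
            return False
        if k >= ell_star and tau_k < target:
            return False
    return True


# Example from the text: h_alpha = (n, a, n, a, a, n, a, ...) with tau_{ell*} = 4, so ell* = 2.
h = "nanaana"
print(active_periods(h))       # [2, 4, 5, 7]
print(shifted_history(h, 2))   # nnaaaaa

# Random finite prefixes: the bounds hold for every admissible ell*.
random.seed(0)
for _ in range(1000):
    h_rand = "".join(random.choice("an") for _ in range(30))
    for ell in range(1, len(active_periods(h_rand)) + 1):
        assert index_bounds_hold(h_rand, ell)
```

Because \(h'_\alpha \) keeps at least as many “active” periods as the prefix of \(h_\alpha \), the lookup `taus_prime[k - 1]` is always defined.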

Since \( \sum _i P_i(h'_\alpha ) = N \delta ^{\tau _{\ell ^*} - \ell ^*} \varPi (\overline{\sigma } \mid h^0_\alpha ) \le N \varPi (\overline{\sigma } \mid h^0_\alpha )\), we finally have \( w \sum _i P_i(h_\alpha ) \le w \sum _i P_i(h'_\alpha ) \le \varPi (\overline{\sigma } \mid h^0_\alpha )\).

Step 2: For a given strategy profile \(\sigma \), let \(\mu _\sigma \) denote the probability measure over infinite histories induced by \(\sigma \), so that \(\mu _\sigma (h)\) is the probability that history h occurs. Then:

Thus, since \(w \sum _i P_i(h) \le \varPi (\overline{\sigma } \mid h^0_\alpha )\) for all h, we obtain \(w \sum _i \varPi _i (\sigma \mid h^0_\alpha ) \le \varPi (\overline{\sigma } \mid h^0_\alpha )\). Steps 1 and 2 together prove part (i).

Step 3: Finally, we prove (ii). By the proof of (i), for a given \( h_\alpha \), (1) if there does not exist \( \ell ^* \) such that \( u(h^{\tau _{\ell ^*}}_\alpha ) > 0\), then \(\sum _i P_i(h) \le 0\); and (2) if there exists \( \ell ^* \) such that \( u(h^{\tau _{\ell ^*}}_\alpha ) > 0\), then \(\sum _i P_i(h) \le \varPi ( \overline{\sigma } \mid h^0_\alpha ) < 0\).

In both cases (1) and (2), \(\sum _i P_i(h) \le 0\) holds. Thus, we have \(\sum _i \varPi _i(\sigma \mid h_\alpha ) \le 0\) for each history h. Therefore, \(\sum _i \varPi _i(\sigma \mid h^0_\alpha ) \le 0\) for any strategy profile \(\sigma \), which implies that the no-action equilibria achieve the maximum consumer surplus. \(\square \)

The proof of Theorem 2 is more involved, because the model allows infinite histories. We therefore need the following two lemmas to establish the condition under which the active equilibrium is the unique MPE. We first consider the case in which “active” is selected \(k^*\) times consecutively, and then derive the condition under which every player always selects “active” in equilibrium after that history.

Lemma 3

Suppose that \(\varPi ^a(\overline{\sigma } \mid h^t_\alpha ) \ge \varPi ^n(\overline{\sigma } \mid h^t_\alpha )\) for any \(h^t_\alpha \). Given an integer \(k^*\), suppose that \(\varPi ^a(\overline{\sigma } \mid a:k') > \varPi ^n(\overline{\sigma } \mid a:k')\) for all \(k' \ge k^*\). Then, in every MPE, “active” is selected in every period after \(h^{k^*}=(h^{k^*}_x, a:k^*)\).

Proof

Case 1 (\(k \ge m^* - 1\)): given \(u(a: k, a) > 0\) for all k such that \(k \ge m^* - 1\), together with (L3), it follows that \(u(a:k+\tau ) > 0\) for \(\tau =1,2,\dots \). Hence, for any history \(h^{k} = (h^{k}_x, a: k)\), every player selects “active” in her/his turn in any equilibrium of the subgame after \(h^{k}\).

Case 2 (\(k^* \le k < m^*-1\)): suppose that, for any history \(h^{k+1} = (h^{k+1}_x, a: k+1)\), every player selects “active” from the (\(k+2\))th period onward in an equilibrium of the subgame after \(h^{k+1}\). We then show that every player selects “active” from the (\(k+1\))th period onward in an MPE of the subgame after any history \(h^{k} = (h^{k}_x, a: k)\).

Case 2A (“active” is chosen in the (\(k+1\))th period): in the subgame after \(h^{k} = (h^{k}_x, a: k)\), if the player selected in period \(k+1\) chooses “active,” then \(h^{k+1} = (h^{k+1}_x, a: k+1)\). By the induction hypothesis, in an equilibrium of the subgame after \(h^{k+1}\), players always select “active” from the (\(k+2\))th period onward. Thus, the expected payoff to a player who selects “active” in the (\(k+1\))th period is \(\varPi ^a(\overline{\sigma } \mid a:k)\).

Case 2N (“no-action” is chosen in the \((k+1)\)th period):

Let us show that \(\varPi ^a_i(\overline{\sigma } \mid a:k) > \varPi ^n_i(\sigma \mid a:k)\) for any \(\sigma \), where \(\varPi ^n_i(\sigma \mid a:k)\) is the expected payoff when player i is selected in period \(k+1\) and chooses “no-action,” i.e., \(h^{k+1}_\alpha = (a:k, n)\), in a subgame from \(h^{k} = (h^{k}_x, a: k)\).

Case 2N-1 (a player selects “no-action” with positive probability only finitely many times):Footnote 17 consider a strategy profile \(\sigma \) under which player i always selects “active” after some finite history/period.

Let \(\varDelta (k+Q)\) be the set of strategy profiles in which every player selects “active” after the (\(k+Q\))th period,Footnote 18 including period \(k+Q\). That is, \(\sigma \in \varDelta (k+Q)\) is a strategy profile such that, for every player \(i \in I\), \(\sigma ^{k+Q+\tau }_i(h^{k+Q-1+\tau }_\alpha ) = 1\) for any \(h^{k+Q-1+\tau }_\alpha \in H^{k+Q-1+\tau }\) and any \(\tau = 0, 1, 2, \dots \).

Then, we show that \(\varPi ^n_i(\overline{\sigma } \mid a:k) \ge \varPi ^n_i(\sigma \mid a:k)\) for all \(\sigma \in \varDelta (k+Q)\). First, by definition, \(\varPi ^n_i(\overline{\sigma } \mid h^{k+Q-2}_\alpha ) = \varPi ^n_i(\sigma \mid h^{k+Q-2}_\alpha )\) and \(\varPi ^a_i(\overline{\sigma } \mid h^{k+Q-2}_\alpha ) = \varPi ^a_i(\sigma \mid h^{k+Q-2}_\alpha )\) hold for any \(h^{k+Q-2}\). By the assumption of this lemma, \(\varPi ^a_i(\overline{\sigma } \mid h^{k+Q-2}_\alpha ) \ge \varPi ^n_i(\overline{\sigma } \mid h^{k+Q-2}_\alpha )\) for all \(h^{k+Q-2} \in H^{k+Q-2}\). Thus, when computing the maximum payoff to player i, we can assume that s/he selects “active” at time \(k+Q-1\). By the positive externality, we can also assume that the other players select “active” in the (\(k+Q-1\))th period. Hence, we obtain \(\varPi ^n_i(\overline{\sigma } \mid h^{k+Q-3}_\alpha ) \ge \varPi ^n_i(\sigma \mid h^{k+Q-3}_\alpha )\). Repeating this argument backward, we derive \(\varPi ^n_i(\overline{\sigma } \mid a:k) \ge \varPi ^n_i(\sigma \mid a:k)\) for all \(\sigma \in \varDelta (k+Q)\). The same argument applies to every finite \(Q=0,1,2, \dots \).

Case 2N-2 (a player selects “no-action” with positive probability infinitely many times): consider a strategy profile under which player i may choose “no-action” with positive probability infinitely often. Given \(\delta < 1\), there exists T that satisfies:

By Case 2N-1, we have the following:

On the other hand, because differences in the payoffs after the (\(T+1\))th period are at most \(\overline{U} - \underline{U}\), it follows that:

Thus, we obtain the following:

where the first inequality follows from (3) and (4), and the second follows from (2).

Therefore, after history \(h^k = (h^k_x, a:k)\), selecting “active” and earning \(\varPi ^a(\overline{\sigma } \mid a:k)\) is strictly better than choosing “no-action,” and hence the player selected in the (\(k+1\))th period chooses “active.” Given \(h^{k+1} = (h^{k+1}_x, a:k+1)\), the outcome in which every player selects “active” from the (\(k+2\))th period onward is the unique MPE outcome; hence, after \(h^k\), every player selects “active” from the (\(k+1\))th period onward.

From Cases 1 and 2, the proof is completed by induction on k. \(\square \)

We prove the following lemma to simplify the condition of the previous lemma.

Lemma 4

For any finite action history \(h^t_\alpha \), the following inequality holds: \(\varPi ^a(\overline{\sigma } \mid h^t_\alpha ) - \varPi ^n(\overline{\sigma } \mid h^t_\alpha ) \ge \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) - \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\).

Proof

By (A3), \(u(h^t_\alpha , a: k) \ge u(h^t_\alpha , n, a: k)\) for any \(k \ge 0\). Thus, we obtain the following:

Using the above, we have the following:

where the last inequality follows from (L2). \(\square \)

From the above two lemmas, we can prove the main theorem.

Proof of Theorem 2

We prove the theorem in four steps.

Step 1: We first show (iii). Suppose that an MPE \(\sigma \) is an active equilibrium. Since the active equilibrium generates the action history consisting only of “active,” \((a, a, a, \dots )\), we have \(\varPi ^a_i(\sigma \mid h^0_\alpha ) = \varPi ^a(\overline{\sigma } \mid h^0_\alpha )\).

Because \(\sigma \) is a payoff-relevant strategy, we have \(\sigma ^{t+1}(h^t_\alpha ) = \sigma ^{t+1}(n, h^t_\alpha )\). That is, even if “no-action” is chosen in the first period, “active” will be chosen in every period from the second onward, because \(\sigma \) assigns the same action to \(h^0_\alpha \) and (n). Therefore, \(\varPi ^n_i(\sigma \mid h^0_\alpha ) = \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\).

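If \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) < \varPi ^n( \overline{\sigma } \mid h^0_\alpha )\), then “no-action” is strictly better than “active” in the first period after \(h^0\), which contradicts the assumption that \(\sigma \) is an MPE in which “active” is chosen. Hence, \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) \ge \varPi ^n( \overline{\sigma } \mid h^0_\alpha )\).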

Step 2: We now show the “if” part of (i). By Lemma 4 and \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) > \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\), the assumptions of Lemma 3 hold with \(k^* = 0\). Thus, all MPE are active equilibria.

Step 3: Next, we show (ii) of Theorem 2 in three substeps, 3-1, 3-2, and 3-3.

(3-1) We first show that if \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) = \varPi ^n( \overline{\sigma } \mid h^0_\alpha )\), then all MPE are active equilibria in any subgame after \(h^1 = (h^1_x, a)\), that is, after “active” is chosen in the first period.

To this end, we prove that \(\varPi ^a( \overline{\sigma } \mid a:t) > \varPi ^n( \overline{\sigma } \mid a:t)\) for all \(t \ge 1\) if \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) = \varPi ^n( \overline{\sigma } \mid h^0_\alpha )\). The following inequality follows from (A3):

On the other hand, we have \(\varPi ^a( \overline{\sigma } \mid h^0_\alpha ) - \varPi ^n( \overline{\sigma } \mid h^0_\alpha )=(1-w \delta ) u(a) + (1-\delta ) w \sum _{k=1}^\infty u(a:k+1) \delta ^k = 0\). By (L3), \(u(a:k+1) \ge u(a:k)\) for all \(k \in {\mathbb {N}} \cup \{0 \}\), and by (A5) and (A6), there exists a \(\tau \) such that \(u(a:\tau +1) > u(a:\tau )\).

Using this strict inequality, we obtain:

Hence, by Lemma 3, every MPE is an active equilibrium in any subgame after \(h^1 = (h^1_x, a)\).

(3-2) Next, we show that there is no MPE in which more than one player selects “no-action” with positive probability in the first period. That is, we show that \(\sigma ^1_j(h^0_\alpha )=1\) for all \(j(\ne i)\) for every MPE \(\sigma \) with \(\sigma ^1_i(h^0_\alpha ) < 1\).

As shown in (3-1), if “active” is selected in the first period, then every player selects “active” from the second period onward. Thus, for any MPE \(\sigma \), \(\varPi ^a( \sigma \mid h^0_\alpha ) = \varPi ^a( \overline{\sigma } \mid h^0_\alpha )\).

Here, we assume that \(\sigma \) is an MPE:

As \( \varPi _j( \sigma \mid a) = \varPi _j(\overline{\sigma } \mid a ) = ( \varPi ^a_j( \overline{\sigma } \mid h^0_\alpha ) - u(a))/ \delta \), we have the following:

From the above equality, we obtain the following:

where \({\tilde{\sigma }}^t_j( h^{t-1}_\alpha )=1\) for all \(t>1\). Since \(\varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = w \delta \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) + (1-w )\delta [ \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) - u(a) ]\), the condition \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\) implies \((1-\delta ) \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) + u(a) ( 1 - w ) \delta = 0\). If \(\sigma ^1_i( h^0_\alpha ) < 1\) and \(\sigma ^1_j( h^0_\alpha ) > {\tilde{\sigma }}^1_j( h^0_\alpha )\), we have the following:

Therefore, \(\varPi _j( \sigma \mid h^0_\alpha )\) is strictly increasing in \(\sigma ^1_j( h^0_\alpha )\). Thus, player j can be made better off by selecting “active” in the first period, which shows that if \(\sigma ^1_i(h^0_\alpha ) < 1\), then \(\sigma ^1_j(h^0_\alpha ) = 1\) for all \(j (\ne i)\) whenever \(\sigma \) is an MPE.

(3-3) From (3-2), we can see that if a symmetric MPE exists, then it is an active equilibrium. Next, to show the existence of an active equilibrium, we prove that \(\overline{\sigma }\) is an equilibrium.

In a similar manner to the above, we can derive the following:

where \(\sigma ^t_i(h^{t-1}) = 1\) for any \(t>1\). The last equality follows from \((1-\delta ) \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) + u(a) ( 1 - w ) \delta = 0\). Therefore, the player cannot be made better off by changing \(\sigma ^1_i(h^0_\alpha )\), and thus \(\overline{\sigma }\) is an equilibrium.
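The indifference identity \((1-\delta ) \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) + u(a) ( 1 - w ) \delta = 0\) used in (3-2) and (3-3) follows from the expression \(\varPi ^n(\overline{\sigma } \mid h^0_\alpha ) = w \delta \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) + (1-w )\delta [ \varPi ^a(\overline{\sigma } \mid h^0_\alpha ) - u(a) ]\) given in (3-2). The sketch below checks only this algebra, with exact rational arithmetic; the parameter values are hypothetical, and `pi_n` and `indifference_value` are illustrative helper names, not part of the model.

```python
from fractions import Fraction as F


def pi_n(pi_a, u_a, w, delta):
    """Pi^n(sigma_bar | h^0) = w*delta*Pi^a + (1 - w)*delta*(Pi^a - u(a)), as stated in (3-2)."""
    return w * delta * pi_a + (1 - w) * delta * (pi_a - u_a)


def indifference_value(u_a, w, delta):
    """Value of Pi^a solving (1 - delta)*Pi^a + u(a)*(1 - w)*delta = 0."""
    return -u_a * (1 - w) * delta / (1 - delta)


# Hypothetical parameter triples (u(a), w, delta) with w and delta strictly between 0 and 1.
samples = [(F(-1), F(1, 3), F(9, 10)), (F(-5, 2), F(1, 10), F(1, 2)), (F(2), F(1, 2), F(3, 4))]
for u_a, w, delta in samples:
    pi_a = indifference_value(u_a, w, delta)
    # At this value, the formula for Pi^n returns Pi^a itself, i.e. Pi^a = Pi^n.
    assert pi_n(pi_a, u_a, w, delta) == pi_a
    # Any other value breaks the equality, since Pi^n - Pi^a changes by -(1 - delta) != 0.
    assert pi_n(pi_a + 1, u_a, w, delta) != pi_a + 1
print("indifference identity verified on", len(samples), "parameter triples")
```

Exact fractions are used so that the equality test is not affected by floating-point rounding.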

Step 4: Finally, we show the “only if” part of (i).

From equations (B.4) and (B.5), if \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) = \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\), then there exists an asymmetric equilibrium in which \(\sigma ^1_i(h^0_\alpha )<1\) and \(\sigma ^1_j(h^0_\alpha )=1\) for \(j\ne i\). Moreover, by Step 1, there is no active equilibrium if \(\varPi ^a(\overline{\sigma } \mid h^0_\alpha ) < \varPi ^n(\overline{\sigma } \mid h^0_\alpha )\). \(\square \)

1.3 Appendix C: Lemmas for Section 6

In the following, we provide a lemma and its proof for Section 6. For history \(h^t\), the difference between the expected payoff of selecting “active” at time \(t+1\) and that of selecting “no-action” at time \(t+1\) is \(\varPi ^a(\overline{\sigma } \mid h^t) - C - \left[ \varPi ^n(\overline{\sigma } \mid h^t) - \delta w C/( 1- (1-w)\delta )\right] = \varPi ^a(\overline{\sigma } \mid h^t)-(1-\delta ) C/( 1- (1-w)\delta ) - \varPi ^n(\overline{\sigma } \mid h^t) \). Using this, we have the following lemma.
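The simplification of the payoff difference above rests on \(C - \delta w C/( 1- (1-w)\delta ) = C[1 - (1-w)\delta - w\delta ]/( 1- (1-w)\delta ) = (1-\delta ) C/( 1- (1-w)\delta )\). A small exact-arithmetic check follows; the numerical values of \(\varPi ^a\), \(\varPi ^n\), C, w, and \(\delta \) are hypothetical placeholders rather than values from the model, and `gap_direct` and `gap_simplified` are illustrative names.

```python
from fractions import Fraction as F
from itertools import product


def gap_direct(pi_a, pi_n, C, w, delta):
    """[Pi^a - C] - [Pi^n - delta*w*C / (1 - (1 - w)*delta)], as first written in the text."""
    return (pi_a - C) - (pi_n - delta * w * C / (1 - (1 - w) * delta))


def gap_simplified(pi_a, pi_n, C, w, delta):
    """Pi^a - (1 - delta)*C / (1 - (1 - w)*delta) - Pi^n, the simplified form."""
    return pi_a - (1 - delta) * C / (1 - (1 - w) * delta) - pi_n


# Hypothetical grid; w and delta lie strictly between 0 and 1, so 1 - (1 - w)*delta > 0.
values = [F(-3), F(1, 2), F(7, 4)]
probs = [F(1, 10), F(1, 2), F(9, 10)]
for pi_a, pi_n, C, w, delta in product(values, values, values, probs, probs):
    assert gap_direct(pi_a, pi_n, C, w, delta) == gap_simplified(pi_a, pi_n, C, w, delta)
print("identity holds on all", len(values) ** 3 * len(probs) ** 2, "grid points")
```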

Lemma 5