Abstract

The literature on incentive-based regulation in the electricity sector indicates that the size of a country's electricity sector constrains the choice of frontier methods, as well as the model specification itself, used to measure the economic efficiency of regulated firms. This study proposes a stochastic frontier approach with maximum entropy estimation, which is designed to extract information from limited and noisy data with minimal assumptions about the data generation process. Stochastic frontier analysis with generalized maximum entropy and data envelopment analysis (the latter widely used by national regulators) are applied to cross-sectional data on thirteen European electricity distribution companies. The technical efficiency scores and rankings of the distribution companies generated by both approaches are sensitive to model specification. Nevertheless, the results from stochastic frontier analysis with generalized maximum entropy indicate that the technical efficiency scores have similar distributional properties and that these scores, as well as the rankings of the companies, are not very sensitive to the prior information. In general, the same electricity distribution companies are found in the most and least efficient groups, reflecting weak sensitivity to the prior information considered in the estimation procedure.

1 Introduction

Incentive-based regulation in the electricity sector has been introduced in many countries during the last three decades. Although there is a wide variety of incentive-based schemes for electricity utilities (e.g., Jamasb and Pollitt 2001), most regulatory practices rely on benchmarking (i.e., assessing a firm's efficiency against a reference performance [Footnote 1]) in order to promote the economic efficiency (e.g., productive efficiency, cost efficiency) of regulated firms [Footnote 2].

The size of a country's electricity sector, defined by the number of companies in the electricity value chain, constrains the choice of benchmarking methods as well as the specification of the frontier model (e.g., Haney and Pollitt 2009, 2011, 2013; Pollitt 2005; Agrell and Bogetoft 2014). Data problems (or lack of data) and the size of the electricity sector are among the reasons some national regulators give for not employing frontier approaches (Haney and Pollitt 2009).

Energy regulators employing frontier methods are, in general, found in countries with a large number of regulated companies (e.g., Finland, Britain, Germany). In contrast, a number of countries with very few regulated companies (e.g., Portugal, Slovenia and Panama) employ frontier methods using international data. Even in these cases, the sample may be too small for some frontier methods, because only a limited number of appropriate comparators can be identified. Electricity transmission and distribution utilities are heterogeneous: they vary in size and in other characteristics that are critical for regulation, namely ownership, governance, task provision, size of operational area, number of customers, and financial accounting system (e.g., Agrell and Bogetoft 2014; Cullmann and Nieswand 2016).

The international survey of regulators conducted by Haney and Pollitt (2009) indicates that, in the electricity sector, data envelopment analysis (DEA) is strongly preferred to corrected ordinary least squares (COLS) and to stochastic frontier analysis (SFA) estimated by maximum likelihood (ML). For a survey of applications of DEA to energy (and environmental) issues, see Zhou et al. (2008). A common perception that DEA requires relatively few observations may be one of the reasons for this preference. Furthermore, COLS and ML have drawbacks in extremely small samples (ML, for instance, is attractive mainly because of its large-sample properties). Yet DEA suffers from the curse of dimensionality: as the number of inputs and outputs grows relative to the number of observations, more firms tend to be classified as fully efficient, which casts doubt on its results.

Some national regulators have been facing the problem of ill-posed frontier models. Ill-posedness may arise for several reasons (e.g., Golan et al. 1996; Golan 2018). In the regulation of the electricity sector, an ill-posed model arises mainly from (i) limited information (small samples, incomplete data, and more unknown parameters than observations); (ii) collinearity and/or outliers; and (iii) missing data (e.g., unobserved heterogeneity). The question, then, is how to obtain the best possible results from an ill-posed model. The answer is not straightforward, and the choice of methodology is usually controversial. An attractive approach is based on maximum entropy (ME) estimators, which are designed to extract information from limited and noisy data using minimal assumptions about the data generation process.

The purpose of this study is to show that, with generalized maximum entropy estimation, all the available information can be included in the model without converting the ill-posed problem into a well-posed one, as traditional estimation techniques require. The study proposes a frontier approach based on SFA with the generalized maximum entropy (GME) estimator to measure the productive (technical) efficiency of a sample of thirteen European electricity distribution companies. The sample was used by the Portuguese regulator of the electricity sector (ERSE) to set the regulatory parameters for the distribution companies for the 2012–2014 period (ERSE 2011). Several model specifications are considered, with different returns to scale and different input and output variables. SFA with GME and DEA (the method most preferred by national regulators) are applied to the ERSE data set, and both the efficiency scores and the efficiency rankings are compared.

The remainder of the paper is organized as follows. Section 2 presents a brief literature review focusing on the performance of the most common frontier methods used in the electricity sector. Section 3 presents the radial input distance function, used to measure technical efficiency, and the GME estimator. Section 4 discusses the data sample on electricity distribution companies and the empirical results [Footnote 3] obtained from SFA with GME and DEA. Section 5 concludes.

2 A brief literature review

The most common benchmarking methods used in the electricity sector are econometric modeling, involving corrected ordinary least squares (COLS) and SFA; indexing (e.g., unit cost and total factor productivity indexes); and mathematical programming, using DEA (e.g., Lowry and Getachew 2009). More recently, Kuosmanen and Kortelainen (2012) proposed a two-stage method, stochastic non-smooth envelopment of data (StoNED), to estimate a frontier model. However, some issues underlying StoNED remain unresolved (cf. Andor and Hesse 2014; Kuosmanen and Kortelainen 2012; Kuosmanen and Johnson 2010; Kuosmanen 2006), namely: (1) the StoNED model in Kuosmanen and Kortelainen (2012) involves one output and multiple inputs; (2) while the statistical properties (consistency and rate of convergence) of the univariate convex nonparametric least squares (CNLS) estimator are well established, the same does not hold for the multivariate CNLS estimator; and (3) the composite error term assumptions imported from SFA are very restrictive and may be inappropriate [Footnote 4].

Several studies compare the performance of frontier methods in the context of electricity sector regulation and/or using Monte Carlo simulation. In particular, these studies report dissimilar efficiency estimates across methods and model specifications. Jamasb and Pollitt (2003) discuss the effect of the choice of frontier method (DEA, COLS and SFA models) using an international cross-sectional sample of 63 regional electricity distribution utilities in six European countries. They find that the choice of frontier method, model specification, and variables (inputs, outputs and environmental variables) can affect not only the efficiency scores but also the ranking of the companies. Additionally, frontier approaches are sensitive to shocks and errors in the data, particularly when cross-sectional data are used with deterministic frontier methods such as DEA and COLS (Jamasb and Pollitt 2001, 2003).

Farsi and Filippini (2004) investigate whether the problems presented by Jamasb and Pollitt (2003) are due to the limitations of cross-sectional data models. They evaluate the sensitivity of inefficiency estimates to different stochastic parametric frontier models using an unbalanced panel of 59 distribution utilities in Switzerland over 9 years. Individual inefficiency scores and ranks vary across models, so these problems are not limited to cross-sectional data and cannot be completely overcome with panel data models (Farsi and Filippini 2004). Dissimilar efficiency estimates across methods are also reported by Estache et al. (2004) for distribution utilities in South America and by Farsi et al. (2006) for a panel of distribution companies in Switzerland.

The variation of inefficiency estimates across methods and models is an important issue, since it calls into question the robustness and accuracy of the estimated X-factors. The X-factor is one of the regulatory tools in price-cap or revenue-cap regulation, on the basis of which utilities are rewarded or penalized [Footnote 5]. Thus, the inefficiency estimates can have important financial effects for regulated firms (e.g., Farsi and Filippini 2004).
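Under the RPI − X formula described in the Notes, the allowed price (or revenue) evolves multiplicatively from one year to the next. The short sketch below assumes the common form \( P_{t} = P_{t-1}(1 + RPI - X) \); the helper name and all numbers are hypothetical and serve only to show how an X-factor above the inflation rate lowers the allowed price path.

```python
def capped_price(prev_price: float, rpi: float, x_factor: float) -> float:
    """Maximum allowed price under an RPI - X cap (hypothetical helper)."""
    return prev_price * (1.0 + rpi - x_factor)


# Hypothetical regulatory period: 2.5% inflation and an X-factor of 3.5%.
price = 100.0
path = []
for year in (2012, 2013, 2014):
    price = capped_price(price, rpi=0.025, x_factor=0.035)
    path.append((year, round(price, 2)))
print(path)
```

Because the cap compounds, a one-percentage-point difference in X accumulates over the regulatory period, which is why the sensitivity of efficiency estimates matters financially.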

3 Distance function and GME estimation

Technical efficiency can be estimated using the radial input distance function, which provides an input-based measure of technical efficiency [Footnote 6]. An input-oriented measure, rather than an output-oriented one, is considered appropriate for electricity distribution utilities, since the demand for distribution services is a derived demand that is not controlled by the utilities (e.g., Giannakis et al. 2005).

Definition 1

The radial input distance function is a function \( D:\Re_{ + }^{M} \times \Re_{ + }^{N} \to \Re_{ + } \cup \left\{ { + \infty } \right\} \) defined as follows:

\( D(y,x) = \sup \left\{ \lambda > 0 : x/\lambda \in V(y) \right\} \)

where x is an N-input vector, y is an M-output vector and V(y) is the input (requirement) set for y.

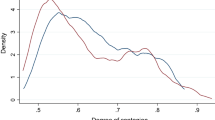

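By definition, \( D(y,x) \ge 1\,\, \Leftrightarrow \,\,x \in V(y) \). Figure 1 illustrates the radial input distance function for two inputs and one output. Consider the input requirement set \( V(y^{o}) \) for output \( y^{o} \) and the input vector \( x^{o} \). In this case, \( D(y^{o},x^{o}) > 1 \), so \( x^{o} \) is technically inefficient: the radially contracted vector \( x^{o}/D(y^{o},x^{o}) \) lies on the boundary of \( V(y^{o}) \), and input-oriented technical efficiency is \( 1/D(y^{o},x^{o}) < 1 \).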
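The definition can be evaluated numerically for any input set that can be tested for membership. The sketch below is an illustration, not part of the paper's method: it finds \( \sup\{\lambda : x/\lambda \in V(y)\} \) by geometric bisection, assuming V(y) is closed and radially free disposable. The test technology \( V(y) = \{x : \sqrt{x_1 x_2} \ge y\} \) is hypothetical and chosen because its distance function has the closed form \( \sqrt{x_1 x_2}/y \).

```python
import math


def input_distance(in_set, x, lo=1e-9, hi=1e9, rel_tol=1e-12):
    """D(y, x) = sup{lam > 0 : x / lam in V(y)} by geometric bisection.

    in_set(v) tests membership of v in V(y) for a fixed y. Assumes V(y) is
    closed and radially free disposable, so x / lam stays feasible for all
    lam <= D(y, x) and is infeasible above it.
    """
    if not in_set([xi / lo for xi in x]) or in_set([xi / hi for xi in x]):
        raise ValueError("bracket [lo, hi] does not contain D(y, x)")
    while hi / lo > 1.0 + rel_tol:
        mid = math.sqrt(lo * hi)
        if in_set([xi / mid for xi in x]):
            lo = mid  # x / mid is feasible, so D(y, x) >= mid
        else:
            hi = mid  # x / mid is infeasible, so D(y, x) < mid
    return lo


# Hypothetical technology with two inputs and one output y = 2:
# V(y) = {x : sqrt(x1 * x2) >= y}, for which D(y, x) = sqrt(x1 * x2) / y.
def in_v(v, y=2.0):
    return math.sqrt(v[0] * v[1]) >= y


d = input_distance(in_v, [4.0, 4.0])
print(round(d, 6), round(1.0 / d, 6))  # analytically D = 2 and TE = 1/D = 0.5
```

An input vector outside V(y) simply yields D < 1, consistent with \( D(y,x) \ge 1 \Leftrightarrow x \in V(y) \).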

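A flexible functional form is used to specify the radial input distance function [Footnote 7]. Flexible forms such as the translog are usually avoided when the sample is small, because of the large number of parameters to be estimated and the risk of collinearity among second-order terms when outputs are strongly correlated (e.g., Farsi et al. 2006). Nevertheless, with GME estimation, the radial input distance function for the case of M outputs and N inputs is specified here as a translog function [Footnote 8]:

\( \ln D_{i} = \alpha_{0} + \sum_{m=1}^{M} \alpha_{m} \ln y_{mi} + \frac{1}{2}\sum_{m=1}^{M}\sum_{l=1}^{M} \alpha_{ml} \ln y_{mi} \ln y_{li} + \sum_{n=1}^{N} \beta_{n} \ln x_{ni} + \frac{1}{2}\sum_{n=1}^{N}\sum_{k=1}^{N} \beta_{nk} \ln x_{ni} \ln x_{ki} + \sum_{m=1}^{M}\sum_{n=1}^{N} \gamma_{mn} \ln y_{mi} \ln x_{ni} \qquad (1) \)

where i denotes the ith firm in the sample. Given that the distance function is differentiable, the symmetry restrictions are \( \alpha_{ml} = \alpha_{lm} \), m, l = 1,…,M, and \( \beta_{nk} = \beta_{kn} \), n, k = 1,…,N. Homogeneity of degree +1 in inputs requires \( \sum\nolimits_{n} {\beta_{n} } = 1 \), \( \sum\nolimits_{n} {\beta_{nk} } = 0 \), k = 1,…,N, and \( \sum\nolimits_{k} {\gamma_{mk} } = 0 \), m = 1,…,M. The restrictions to test for separability between inputs and outputs are \( \gamma_{mn} = 0 \), m = 1,…,M; n = 1,…,N.

Choosing input x1 as the numeraire and imposing homogeneity of degree +1 in inputs, so that \( D(y,x/x_{1}) = D(y,x)/x_{1} \), the distance function in (1) can be rewritten as

\( \ln D_{i} - \ln x_{1i} = \alpha_{0} + \sum_{m=1}^{M} \alpha_{m} \ln y_{mi} + \frac{1}{2}\sum_{m=1}^{M}\sum_{l=1}^{M} \alpha_{ml} \ln y_{mi} \ln y_{li} + \sum_{n=2}^{N} \beta_{n} \ln x_{ni}^{*} + \frac{1}{2}\sum_{n=2}^{N}\sum_{k=2}^{N} \beta_{nk} \ln x_{ni}^{*} \ln x_{ki}^{*} + \sum_{m=1}^{M}\sum_{n=2}^{N} \gamma_{mn} \ln y_{mi} \ln x_{ni}^{*} \)

or, equivalently,

\( -\ln x_{1i} = \alpha_{0} + \sum_{m=1}^{M} \alpha_{m} \ln y_{mi} + \frac{1}{2}\sum_{m=1}^{M}\sum_{l=1}^{M} \alpha_{ml} \ln y_{mi} \ln y_{li} + \sum_{n=2}^{N} \beta_{n} \ln x_{ni}^{*} + \frac{1}{2}\sum_{n=2}^{N}\sum_{k=2}^{N} \beta_{nk} \ln x_{ni}^{*} \ln x_{ki}^{*} + \sum_{m=1}^{M}\sum_{n=2}^{N} \gamma_{mn} \ln y_{mi} \ln x_{ni}^{*} + \varepsilon_{i} \qquad (2) \)

where \( x_{ni}^{*} = x_{ni} /x_{1i} \) and \( \varepsilon_{i} = - \ln D_{i} \) is the error term.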
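The role of the homogeneity restrictions can be checked numerically. The sketch below codes the translog form as a function of log outputs and log inputs and uses hypothetical parameter values that satisfy symmetry and homogeneity of degree +1 in inputs; it confirms that scaling all inputs by a factor adds its logarithm to ln D, and that normalizing by x1 reproduces \( \ln D_{i} - \ln x_{1i} \), which is the step that turns (1) into the estimable equation (2).

```python
import numpy as np


def translog_ln_d(ln_y, ln_x, a0, a, A, b, B, G):
    """ln D for one firm under the translog form (1).

    ln_y: (M,) log outputs; ln_x: (N,) log inputs; A (M, M) and B (N, N)
    symmetric second-order terms; G (M, N) output-input cross terms.
    """
    return (a0 + a @ ln_y + 0.5 * ln_y @ A @ ln_y
            + b @ ln_x + 0.5 * ln_x @ B @ ln_x
            + ln_y @ G @ ln_x)


# Hypothetical parameters (M = N = 2) satisfying symmetry and homogeneity of
# degree +1 in inputs: sum(b) = 1, rows of B sum to 0, rows of G sum to 0.
params = dict(
    a0=0.2,
    a=np.array([-0.5, -0.3]),
    A=np.array([[0.04, -0.01], [-0.01, 0.03]]),
    b=np.array([0.6, 0.4]),
    B=np.array([[0.1, -0.1], [-0.1, 0.1]]),
    G=np.array([[0.05, -0.05], [-0.02, 0.02]]),
)
ln_y = np.log([120.0, 35.0])    # hypothetical outputs
ln_x = np.log([50.0, 900.0])    # hypothetical inputs; x1 is the numeraire

ln_d = translog_ln_d(ln_y, ln_x, **params)
# Homogeneity: scaling all inputs by 3 adds ln 3 to ln D.
scaled = translog_ln_d(ln_y, ln_x + np.log(3.0), **params)
# Normalization: ln D - ln x1 equals the translog evaluated at x* = x / x1,
# where ln x1* = 0 removes every term involving the numeraire input.
ln_d_star = translog_ln_d(ln_y, ln_x - ln_x[0], **params)
print(np.isclose(scaled - ln_d, np.log(3.0)), np.isclose(ln_d - ln_x[0], ln_d_star))
```

If any of the listed restrictions is violated (for example, `b` not summing to one), both checks fail, which is a quick way to validate a parameter vector before normalization.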

ME estimation, also known as information-theoretic estimation, avoids some of the criticisms of and difficulties with DEA and SFA and appears to be a promising approach in efficiency analysis (e.g., Campbell et al. 2008; Rezek et al. 2011; Tonini and Pede 2011; Macedo et al. 2014; Robaina-Alves et al. 2015). In SFA with ML estimation, distributional assumptions for the two error components are defined to reflect expectations about the behaviour of the errors (e.g., Kumbhakar and Lovell 2000, p. 74). These formal statistical distributions (truncated normal, exponential, gamma, among others) are not used with GME estimation, which represents an important advantage. Moreover, with the strategy of Macedo et al. (2014), which uses DEA to define an upper bound for the supports of the inefficiency error, the main criticism of DEA becomes an advantage in this context. In this work, only the GME estimator is considered; its features in SFA are briefly discussed next.

The stochastic frontier model in (2) can be written in matrix form as

\( -\ln x_{1} = V\theta + \nu - u \qquad (3) \)

where \( -\ln x_{1} \) is the \( (S \times 1) \) vector with elements \( -\ln x_{1i} \), V is the \( (S \times K) \) matrix of the variables on the right-hand side of (2), including the intercept, \( \theta \) is the \( (K \times 1) \) vector of the parameters in (2), and ε in (2) is a composed error term, \( \varepsilon \, = \,\nu \, - \,u \), with ν a random noise error term and u representing technical inefficiency.

The reparameterizations of the \( (K \times 1) \) vector \( \theta \), the \( (S \times 1) \) vector \( \nu \) and the \( (S \times 1) \) vector \( u \) follow the same procedures as in the standard GME linear regression model (Golan et al. 1996; Golan 2018). Each parameter is treated as a discrete random variable with a compact support and T possible outcomes; each error ν is a finite and discrete random variable with J possible outcomes; and each error u is a finite and discrete one-sided random variable with L possible outcomes, which implies that the lower bound of its support is zero for all error values (the full-efficiency case) [Footnote 9]. Thus, the reparameterizations are \( \theta = Zp \), with Z a \( (K \times KT) \) matrix of support points and p a \( (KT \times 1) \) vector of unknown probabilities; \( \nu = Aw \), with A an \( (S \times SJ) \) matrix of support points and w an \( (SJ \times 1) \) vector of unknown probabilities; and \( u = B\rho \), with B an \( (S \times SL) \) matrix of support points and \( \rho \) an \( (SL \times 1) \) vector of unknown probabilities.
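The structure of the reparameterization \( \theta = Zp \) can be made concrete with a few lines of numpy. This is a sketch under the assumption (used later in Sect. 4) that every parameter shares the same support vector; Z is then the Kronecker product of an identity matrix with that support, and the additivity constraints use \( I_{K} \otimes 1_{T}^{\prime} \). The dimensions and probabilities below are hypothetical.

```python
import numpy as np

K, T = 3, 5                                  # hypothetical: 3 parameters, 5 support points
z = np.linspace(-10.0, 10.0, T)              # common symmetric support for each theta_k
Z = np.kron(np.eye(K), z)                    # (K x KT) block-diagonal support matrix
sum_to_one = np.kron(np.eye(K), np.ones(T))  # (I_K kron 1_T'): sums each block of p

p_uniform = np.full(K * T, 1.0 / T)          # maximum-entropy (uniform) probabilities
print(Z.shape, Z @ p_uniform, sum_to_one @ p_uniform)  # theta at the support midpoints

# Any other valid p yields theta inside [-10, 10]; e.g., mass shifted upward:
p = np.tile([0.05, 0.10, 0.20, 0.30, 0.35], K)
theta = Z @ p
print(theta)
```

With no information in the data, the uniform distribution maximizes entropy and places each parameter at the centre of its support, which is why symmetric supports around zero act as a neutral starting point.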

The GME estimator of Golan et al. (1996), extended to the SFA context, is given by

\( \mathop{\max}\limits_{p,w,\rho} \; H(p,w,\rho) = - p^{\prime}\ln p - w^{\prime}\ln w - \rho^{\prime}\ln \rho \)

subject to the model constraints

\( -\ln x_{1} = VZp + Aw - B\rho \)

and the set of additivity constraints

\( 1_{K} = (I_{K} \otimes 1_{T}^{\prime})p, \quad 1_{S} = (I_{S} \otimes 1_{J}^{\prime})w, \quad 1_{S} = (I_{S} \otimes 1_{L}^{\prime})\rho \)

where ⊗ represents the Kronecker product, \( -\ln x_{1} \) is the \( (S \times 1) \) vector of the dependent variable in (2), \( 1_{K} \) is a \( (K \times 1) \) vector of ones and \( I_{K} \) is the \( (K \times K) \) identity matrix (analogously for the other dimensions). The support matrices Z and A are defined by the researcher on the basis of prior information. When no such information exists for the parameters of the model, supports symmetric around zero with wide bounds can be used without serious risk (Golan et al. 1996; Golan 2018). The supports in matrix A are usually set with the traditional 3-sigma rule, using some scale estimate of the errors. Finally, for the support matrix B, i.e., the supports for the inefficiency error component, the traditional distributional assumptions of ML estimation are not imposed, as previously mentioned, but the same beliefs about the distribution of technical inefficiency are expressed in the model through the error supports (e.g., Campbell et al. 2008; Macedo et al. 2014; Moutinho et al. 2018). As noted by Rezek et al. (2011), although this information defines expectations about the efficiency estimates, it does not predetermine any outcome, which is an important feature of GME estimation in this context. Additionally, an efficiency prediction from DEA is used in SFA with GME estimation to define an upper bound for the supports. The main criticism of DEA (it does not account for noise, so all deviations from the production frontier are attributed to technical inefficiency) thus becomes an advantage here, since it provides a possible worst-case scenario for the bound of the supports. The details are presented in Sect. 4.
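A compact way to compute this estimator is through its dual: the optimal probabilities take an exponential (softmax) form in the Lagrange multipliers of the model constraints, and the multipliers are found by driving the constraint residual to zero. The numpy sketch below is an illustration under stated assumptions, not the authors' implementation: one support vector is shared by all parameters (`z`), all noise terms (`a`) and all inefficiency terms (`b`, starting at 0); Newton steps use a backtracking safeguard on the residual norm; the data are synthetic with S = 13; and the inefficiency bound 1.5 is a hypothetical stand-in for −ln of the lowest DEA score.

```python
import numpy as np


def _softmax_rows(M):
    # Row-wise softmax, shifted by the row maximum for numerical stability.
    E = np.exp(M - M.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)


def gme_sfa(q, V, z, a, b, max_iter=200, tol=1e-10):
    """GME estimate of q = V theta + nu - u, solved through the dual multipliers.

    z, a, b: support points shared by every theta_k, nu_s and u_s (rows of Z, A, B);
    b must start at 0 so that each u_s is one-sided.
    """
    S = V.shape[0]

    def probabilities(lam):
        # Exponential-form solutions of the first-order conditions for p, w, rho.
        P = _softmax_rows(-np.outer(V.T @ lam, z))   # (K, T)
        W = _softmax_rows(-np.outer(lam, a))         # (S, J)
        R = _softmax_rows(np.outer(lam, b))          # (S, L)
        return P, W, R

    def residual(lam):
        # Model-constraint residual, which is also the gradient of the dual.
        P, W, R = probabilities(lam)
        return q - V @ (P @ z) - W @ a + R @ b

    lam = np.zeros(S)
    for _ in range(max_iter):
        g = residual(lam)
        if np.max(np.abs(g)) < tol:
            break
        P, W, R = probabilities(lam)
        theta, nu, u = P @ z, W @ a, R @ b
        # Jacobian of the residual: V diag(var theta) V' + diag(var nu + var u).
        jac = (V * (P @ z**2 - theta**2)) @ V.T + np.diag(W @ a**2 - nu**2 + R @ b**2 - u**2)
        step = np.linalg.solve(jac, -g)
        t = 1.0
        while np.sum(residual(lam + t * step) ** 2) > (1 - 1e-4 * t) * np.sum(g**2) and t > 1e-10:
            t *= 0.5                                 # backtracking on the residual norm
        lam = lam + t * step
    P, W, R = probabilities(lam)
    return P @ z, W @ a, R @ b


# Synthetic data (not the ERSE sample): S = 13 firms, intercept + 2 regressors.
rng = np.random.default_rng(0)
S = 13
V = np.column_stack([np.ones(S), rng.uniform(0.0, 2.0, size=(S, 2))])
q = V @ np.array([0.5, 0.8, -0.3]) + rng.normal(0.0, 0.1, S) - np.abs(rng.normal(0.0, 0.3, S))

z = np.linspace(-10.0, 10.0, 5)                  # wide parameter supports
a = np.linspace(-3 * q.std(), 3 * q.std(), 5)    # 3-sigma rule for the noise
b = np.linspace(0.0, 1.5, 5)                     # hypothetical stand-in for -ln(min DEA score)
theta, nu, u = gme_sfa(q, V, z, a, b)
te = np.exp(-u)                                  # technical efficiency of each firm
print(np.round(theta, 3), np.round(te, 3))
```

Because u is a probability-weighted average of points in [0, 1.5], every efficiency estimate automatically lies between exp(−1.5) and 1, which is how the DEA-based bound enters as a worst case without fixing any individual outcome.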

4 Data and empirical results

The data sample used in this study was employed by ERSE to set the regulatory parameters for the distribution companies for the 2012–2014 period (ERSE 2011). “Appendix A” reports the data [Footnote 10]. The data consist of a cross-sectional sample of thirteen European distribution companies that are suitable comparators with respect to operational expenses (OPEX) [Footnote 11] and the main cost drivers. OPEX are costs controlled by the companies and are expressed at 2009 constant prices. For ESB (Ireland) and SP Distribution (UK), whose data do not refer to 2009, OPEX is adjusted using the inflation rates of those countries. The OPEX of each company is expressed in US$ PPP. The number of customers and energy delivered (GWh) are cost drivers in all the models specified below. Network length (km) is a cost driver in two of the models, while in the other two it is specified as a fixed input.
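The text does not spell out the exact conversion formula. Assuming OPEX is first restated at 2009 prices with a domestic price index and then divided by a 2009 PPP conversion factor (local currency units per US$), the arithmetic is as sketched below; the helper name and all numbers are hypothetical.

```python
def opex_2009_usd_ppp(opex_nominal, price_index_year, price_index_2009, ppp_2009):
    """Hypothetical helper: restate nominal OPEX (local currency) at 2009 prices,
    then convert it with a 2009 PPP factor (local currency units per US$)."""
    opex_2009_local = opex_nominal * price_index_2009 / price_index_year
    return opex_2009_local / ppp_2009


# Illustrative numbers only: OPEX of 102 reported for 2010, a price index of
# 102 in 2010 versus 100 in 2009, and a PPP factor of 0.8 per US$.
opex = opex_2009_usd_ppp(102.0, price_index_year=102.0, price_index_2009=100.0, ppp_2009=0.8)
print(round(opex, 2))
```

The same function deflates data reported after 2009 and inflates data reported before it, since only the ratio of the two price indices matters.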

Table 1 reports the Pearson product-moment correlation coefficient between each pair of variables. The correlations are high, particularly between the main cost drivers, indicating a strong positive linear dependence between them and, therefore, a severe collinearity problem in the estimation of model (2).
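The collinearity diagnosis can be reproduced with two standard checks: the Pearson correlation matrix of the cost drivers and the condition number of the log design matrix. The sketch below uses synthetic drivers built to scale with the number of customers (not the ERSE data), so the printed values only illustrate what strong collinearity looks like.

```python
import numpy as np

# Synthetic cost drivers for 13 firms (not the ERSE data): energy delivered and
# network length are built to scale with the number of customers.
rng = np.random.default_rng(1)
customers = rng.uniform(0.3, 6.0, 13)
energy = 5.5 * customers * rng.normal(1.0, 0.05, 13)     # nearly proportional
length = 60.0 * customers * rng.normal(1.0, 0.15, 13)

drivers = np.column_stack([customers, energy, length])
corr = np.corrcoef(drivers, rowvar=False)                # Pearson correlation matrix
print(np.round(corr, 3))

# Condition number of the log design matrix with an intercept; large values
# flag the ill-conditioning that makes OLS/ML estimates unstable.
design = np.column_stack([np.ones(13), np.log(drivers)])
cond = np.linalg.cond(design)
print(round(cond, 1))
```

Pairwise correlations only detect collinearity between two variables, whereas the condition number also captures near-linear dependence among several regressors, including the intercept.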

Regarding model specification, four models are considered, with different returns to scale and different variable specifications. The four models are:

- Model 1: CRS; x1 = OPEX; y1 = energy delivered, y2 = number of customers, y3 = network length;
- Model 2: CRS; x1 = OPEX, x2 = network length; y1 = energy delivered, y2 = number of customers;
- Model 3: VRS; x1 = OPEX; y1 = energy delivered, y2 = number of customers, y3 = network length;
- Model 4: VRS; x1 = OPEX, x2 = network length; y1 = energy delivered, y2 = number of customers;

where CRS and VRS denote constant and variable returns to scale, respectively [Footnote 12].

Some empirical studies specify network length as an output variable to capture the difficulty of the network topology (Pollitt 2005). In other studies, network length, as part of the physical inventory of existing real capital, is treated as a proxy for capital stock or asset utilization (Jamasb and Pollitt 2003; Lins et al. 2007). In models 1 and 3, network length is an output; in models 2 and 4, it is a fixed input.

Given the extremely small sample (thirteen observations), COLS and SFA with ML are not recommended. DEA and SFA with GME are therefore employed in this study. The DEA models used are presented in “Appendix B”.

Table 2 reports the DEA efficiency scores and the rankings of the companies (in parentheses). The efficiency scores are highly sensitive to whether network length is specified as an output or as a fixed input: they either increase or remain constant when network length changes from an output to a fixed input. In fact, the mean and median efficiency scores are higher in model 2 (model 4) than in model 1 (model 3).

The rankings of the companies also change, in general, across models (Table 2). They are very sensitive to the specification of network length as an output or a fixed input, and they also change substantially with the returns-to-scale assumption. EPS, Východoslovenská and Sibelga are the least efficient companies in models 1 and 2; EPS, Východoslovenská and ESB are the least efficient in models 3 and 4. HEP-ODS is fully efficient in all models.

Adding the constraint \( \sum {z^{j} } \le 1 \) to the DEA model in “Appendix B” yields efficiency scores under non-increasing returns to scale. These scores are equal to those generated under CRS (models 1 and 2), which indicates that the electricity distribution companies that are not scale efficient operate under increasing returns to scale [Footnote 13].
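The CRS, VRS and NIRS scores, and the scale diagnosis above, can be sketched as one linear program per firm. “Appendix B” is not reproduced here, so this assumes the standard input-oriented envelopment form: variable inputs are contracted by θ, fixed inputs (such as network length in models 2 and 4) are not, and the intensity weights z satisfy Σz = 1 under VRS or Σz ≤ 1 under NIRS. It uses `scipy.optimize.linprog` with the HiGHS solver and a four-firm toy data set, not the ERSE sample.

```python
import numpy as np
from scipy.optimize import linprog


def dea_input(X, Y, F=None, rts="crs"):
    """Input-oriented radial DEA scores (envelopment form), one LP per firm.

    X: (n, k) variable inputs, contracted by theta; Y: (n, m) outputs;
    F: optional (n, f) fixed inputs, not contracted (e.g., network length);
    rts: "crs", "vrs" (sum of z = 1) or "nirs" (sum of z <= 1).
    """
    n = X.shape[0]
    F = np.zeros((n, 0)) if F is None else F
    scores = np.empty(n)
    for o in range(n):
        c = np.r_[1.0, np.zeros(n)]                          # variables [theta, z_1..z_n]
        A_ub = np.vstack([
            np.column_stack([-X[o], X.T]),                   # sum_j z_j x_j <= theta x_o
            np.column_stack([np.zeros(F.shape[1]), F.T]),    # sum_j z_j f_j <= f_o
            np.column_stack([np.zeros(Y.shape[1]), -Y.T]),   # sum_j z_j y_j >= y_o
        ])
        b_ub = np.r_[np.zeros(X.shape[1]), F[o], -Y[o]]
        A_eq = b_eq = None
        if rts == "vrs":
            A_eq, b_eq = np.r_[0.0, np.ones(n)][None, :], [1.0]
        elif rts == "nirs":
            A_ub = np.vstack([A_ub, np.r_[0.0, np.ones(n)]])
            b_ub = np.r_[b_ub, 1.0]
        res = linprog(c, A_ub=A_ub, b_ub=b_ub, A_eq=A_eq, b_eq=b_eq,
                      bounds=[(None, None)] + [(0, None)] * n, method="highs")
        scores[o] = res.x[0]
    return scores


# Toy data (one input, one output), not the ERSE sample.
X = np.array([[2.0], [4.0], [3.0], [1.0]])
Y = np.array([[2.0], [2.0], [3.0], [0.5]])
crs, vrs, nirs = (dea_input(X, Y, rts=r) for r in ("crs", "vrs", "nirs"))
print(crs, vrs, nirs)
# Firm 4: NIRS == CRS < VRS, i.e., it operates under increasing returns to scale.
```

The scale test in the text follows the same logic: a firm whose NIRS score equals its CRS score but is below its VRS score is too small for its scale, hence under increasing returns to scale.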

Our DEA results are, in general, consistent with the findings in some previous studies (e.g., Farsi and Filippini 2004; Jamasb and Pollitt 2003). “Our experience in Europe shows, however, that different versions of a DEA model will give quite different results and that there is no way to tell which set of results is most reliable.(…)” This finding from Shuttleworth (2003, p. 45) is also evident in this study.

Before discussing the estimation of SFA with the GME estimator and its results, it is important to note that these models are ill-posed, namely ill-conditioned (the collinearity problem revealed by Table 1) and under-determined (in some models, the number of parameters to estimate exceeds the number of available observations). Therefore, traditional estimation techniques for SFA should be avoided in this empirical application, and ME estimators are recommended.

As mentioned previously, the support matrices Z and A are defined by the researcher on the basis of prior information. In this work, the supports in Z are [− 10,10] for all model parameters (wide bounds; supports with larger amplitude had a negligible impact on the estimates; see also Preckel 2001), and two alternative supports are considered for matrix A, [− 4,4] and [− 2,2], using the standard deviation of the noisy observations and the 3-sigma rule as a guide. The supports in matrix B are set according to Macedo et al. (2014): the upper bound of each support is \( - \ln (DEA_{n} ) \), where \( DEA_{n} \) is the lowest efficiency estimate obtained by DEA [Footnote 14]. Each support matrix uses five support points \( (T = J = L = 5) \), a common choice in the literature.
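The support choices described above reduce to a few `linspace` calls. In the sketch below the parameter and noise supports follow the values stated in the text, while the vector of DEA scores is hypothetical; the last support point for u is −ln of the lowest DEA score, so the implied lower bound on technical efficiency, exp(−u), is exactly that score.

```python
import numpy as np

T = J = L = 5                                           # five support points, as in the text
dea_scores = np.array([1.00, 0.83, 0.64, 0.41, 0.97])   # hypothetical DEA scores

z = np.linspace(-10.0, 10.0, T)                       # parameter supports [-10, 10]
a_wide = np.linspace(-4.0, 4.0, J)                    # noise supports [-4, 4]
a_narrow = np.linspace(-2.0, 2.0, J)                  # noise supports [-2, 2]
u_max = -np.log(dea_scores.min())                     # DEA worst case: -ln(lowest score)
b = np.linspace(0.0, u_max, L)                        # one-sided inefficiency supports
print(z, a_wide, a_narrow, np.round(b, 4))
# The implied lower bound on technical efficiency, exp(-u_max), is the lowest DEA score.
```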

Tables 3 and 4 present the estimated coefficients of the input distance function together with their standard errors, obtained by bootstrapping residuals in 1000 replications. The median of each coefficient is computed over the 1000 bootstrap estimates.
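The residual bootstrap can be sketched generically: fit the model, resample the fitted residuals with replacement, rebuild the dependent variable, refit, and summarize the replications by their median and standard deviation. In the sketch below the estimator is passed in as a function, and ordinary least squares is used only as a lightweight stand-in (in the paper, the GME estimator plays this role); the data are synthetic and the exact resampling details of the paper are not known from the text.

```python
import numpy as np


def residual_bootstrap(V, q, fit, n_boot=1000, seed=0):
    """Bootstrap by resampling residuals: refit on q* = V theta_hat + e*.

    fit(V, q) returns a coefficient vector. Returns the median of the
    bootstrap estimates and their standard deviation (bootstrap standard error).
    """
    rng = np.random.default_rng(seed)
    theta_hat = fit(V, q)
    resid = q - V @ theta_hat                # composite residuals (nu - u) for an SFA fit
    draws = np.empty((n_boot, V.shape[1]))
    for r in range(n_boot):
        e_star = rng.choice(resid, size=resid.size, replace=True)
        draws[r] = fit(V, V @ theta_hat + e_star)
    return np.median(draws, axis=0), draws.std(axis=0, ddof=1)


def ols(V, q):
    # Stand-in estimator for the illustration; the paper uses GME instead.
    return np.linalg.lstsq(V, q, rcond=None)[0]


rng = np.random.default_rng(2)
V = np.column_stack([np.ones(13), rng.uniform(0.0, 2.0, 13)])
q = V @ np.array([0.5, 0.8]) + rng.normal(0.0, 0.1, 13)
median, se = residual_bootstrap(V, q, ols)
print(np.round(median, 3), np.round(se, 3))
```

Resampling residuals rather than whole observations keeps the regressor matrix fixed, which suits a small cross-section where dropping a firm could remove one of very few comparators.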

Most of the parameter estimates of models 1 and 3 are not statistically significant for either set of supports ([− 10,10] combined with [− 4,4] or with [− 2,2]). In contrast, most of the parameter estimates of model 2 are statistically significant for both sets of supports. The estimation results for model 4 indicate separability between the outputs (number of customers and energy delivered) and the fixed input (network length) [Footnote 15]. Note that the parameter estimate of network length in models 2 and 4 is statistically significant at the 1% level, in contrast with the statistically insignificant estimate in models 1 and 3, where this variable is specified as an output.

In summary, the SFA with GME results indicate that model 2 appears to be a stable specification of the technology. This suggests that specifying network length as a fixed input may be more appropriate than specifying it as an output, and that CRS may be more adequate than VRS. However, the choice of network length as an input or an output variable deserves further research.

Tables 5 and 6 present the efficiency scores and the rankings of the companies (in parentheses) generated by SFA with GME for each set of supports. Although the standard deviations differ, in every model the central tendency of the efficiency scores is not very sensitive to the set of supports. In model 1, for example, the mean (median) efficiency score is 0.4369 (0.4223) with supports [− 10,10] and [− 4,4], and 0.4338 (0.4140) with supports [− 10,10] and [− 2,2].

Like the DEA efficiency scores, the GME efficiency scores are sensitive to whether network length is specified as an output or a fixed input. Consider, for example, the supports [− 10,10] and [− 2,2] [Footnote 16]. The efficiency scores generally increase when network length changes from an output to a fixed input (compare models 1 and 2, and models 3 and 4).

Although the rankings of the companies change across models, a few companies are in the highest and lowest efficiency groups in all models: HEP-ODS is the most efficient company, and EPS and Východoslovenská are the least efficient for both sets of supports. Interestingly, as mentioned before, the DEA rankings also show HEP-ODS as fully efficient and EPS and Východoslovenská as the least efficient companies in all models.

Table 7 presents the Pearson correlation coefficients between the DEA efficiency scores and the SFA with GME efficiency scores generated with supports [− 10,10], [− 4,4] (denoted GME4) and [− 10,10], [− 2,2] (denoted GME2), as well as between GME2 and GME4. The table also reports the results of the Kruskal–Wallis and median tests. Both are nonparametric tests: the null hypothesis of the first is that the populations have identical distributions, and that of the second is that the populations have identical medians.

The correlation between DEA and each of the SFA with GME efficiency scores is positive and very strong in model 1. For the other models, the correlation is moderately positive. However, the correlation between GME2 and GME4 is very strong in each model (as expected).

At, for example, the 2% significance level, the following decisions can be made. The null hypothesis that the DEA and the two SFA with GME efficiency scores originate from the same distribution (i.e., the three populations have equal distributions) is rejected in models 1 and 4, whereas the null hypothesis that the GME2 and GME4 efficiency scores originate from the same distribution is not rejected. The median test gives similar results: the null hypothesis that the DEA and the two GME efficiency scores have the same median is rejected in models 1 and 4, but the hypothesis that the GME2 and GME4 efficiency scores have the same median is not rejected.
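Both tests, and the Pearson correlation of Table 7, are available in SciPy. The sketch below applies them at the 2% level to synthetic efficiency scores for 13 firms (not the paper's results), so the printed decisions are illustrative only.

```python
import numpy as np
from scipy.stats import kruskal, median_test, pearsonr

# Synthetic efficiency scores for 13 firms under one model (not the paper's results).
rng = np.random.default_rng(3)
dea = np.minimum(rng.uniform(0.4, 1.1, 13), 1.0)           # DEA scores capped at 1
gme2 = np.clip(0.5 * dea + rng.normal(0.0, 0.05, 13), 0.05, 0.99)
gme4 = np.clip(gme2 + rng.normal(0.0, 0.02, 13), 0.05, 0.99)

alpha = 0.02                                                # significance level used in the text
h_stat, p_kw = kruskal(dea, gme2, gme4)                     # H0: identical distributions
chi2, p_med, grand_median, table = median_test(dea, gme2, gme4)  # H0: identical medians
r, p_r = pearsonr(gme2, gme4)                               # linear association of the scores

print(f"Kruskal-Wallis p = {p_kw:.4f}, reject H0: {p_kw < alpha}")
print(f"Median test    p = {p_med:.4f}, reject H0: {p_med < alpha}")
print(f"Pearson r(GME2, GME4) = {r:.3f}")
```

Note that failing to reject either null hypothesis does not establish that the distributions or medians are equal; it only means the data are compatible with equality at the chosen level.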

Table 8 reports the Spearman rank correlation coefficients between the efficiency rankings obtained by DEA and SFA with GME. The results indicate a significant positive monotonic association between each pair of efficiency rankings in all models, particularly between the rankings generated with GME2 and GME4.
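The ranking comparison can be sketched with SciPy as well. Below, rank 1 denotes the most efficient firm, as in the rankings reported in parentheses in the tables; the two score vectors are hypothetical. Spearman's coefficient is the Pearson correlation of the ranks, so it is unaffected by whether ranks run from most to least efficient or the reverse, as long as both series use the same direction.

```python
import numpy as np
from scipy.stats import rankdata, spearmanr

scores_a = np.array([0.91, 0.45, 1.00, 0.62, 0.38])   # hypothetical scores, method A
scores_b = np.array([0.55, 0.30, 0.71, 0.41, 0.33])   # hypothetical scores, method B

# Rank 1 = most efficient, as in the efficiency rankings reported in the tables.
rank_a = rankdata(-scores_a, method="min")
rank_b = rankdata(-scores_b, method="min")
rho, p_value = spearmanr(scores_a, scores_b)
print(rank_a, rank_b, round(rho, 3))
```

Here the two methods disagree only on the order of the two least efficient firms, which gives rho = 1 − 6·2/(5·24) = 0.9.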

5 Conclusions

The main purpose of this study is to propose an alternative stochastic frontier approach that can be used by national regulators of electricity utilities. Some national regulators have been facing the problem of ill-posed frontier models. In the regulation of the electricity sector, an ill-posed model arises mainly from (i) limited information (small samples, incomplete data, and under-determined models); (ii) collinearity and/or outliers; and (iii) missing data. Information-theoretic methods, including generalized maximum entropy, are useful for estimating such ill-posed models.

The empirical study uses data on thirteen European electricity distribution companies employed by the Portuguese regulator of the electricity sector to set the regulatory parameters for the distribution companies for the 2012–2014 period. SFA with GME and DEA are employed, and both the technical efficiency estimates and the efficiency rankings are compared.

Regarding SFA with the GME estimator, it is important to note that the models are ill-posed; in some models, the number of parameters to be estimated even exceeds the number of observations. The results from SFA with GME indicate that model 2 appears to be a stable specification of the technology. This has two implications for the specification of the technology of electricity distribution utilities: specifying network length as a fixed input rather than an output may be more appropriate, and so may the hypothesis of constant returns to scale. Yet further studies are needed, in particular on whether network length should be specified as an output or an input.

The SFA with GME and DEA efficiency scores, as well as the rankings of the companies, are very sensitive to model specification, namely to returns to scale and to whether network length is specified as an output or a fixed input. In models 1 and 4, the Kruskal–Wallis and median tests reject the hypotheses that the DEA and the two SFA with GME efficiency scores originate from the same distribution and have the same median. However, these tests do not reject the hypotheses that the two SFA with GME efficiency scores originate from the same distribution and have the same median. Also, the correlation between the two SFA with GME efficiency scores is very strong, and there is a significant positive monotonic association between each pair of efficiency rankings in all models.

Furthermore, the empirical results indicate that (i) the SFA with GME efficiency scores and rankings are not very sensitive to prior information (the set of supports) and have similar distributional properties; (ii) SFA with GME under different prior information ranks the electricity distribution utilities in approximately the same order; and (iii) SFA with GME under different prior information places mostly the same companies in the highest and lowest efficiency groups. These results suggest that it may be useful for national regulators of electricity distribution companies, particularly in countries with very few regulated companies, to employ SFA with GME to set price controls within incentive-based regulation.

This empirical study does not consider quality of service in distribution networks, such as technical quality. Additionally, high penetration of renewable distributed generation (DG) poses new challenges that have not yet been well understood or incorporated homogeneously into distribution regulation across Europe. The connection of renewable DG to distribution networks affects costs in two ways: network costs and energy losses. Across the EU, not all national regulators consider renewable DG as a cost driver, at least not explicitly (Cossent et al. 2009).

The research issue addressed in this study is crucial for national regulators and the electricity sector. The SFA with GME approach allows national regulators, particularly those that regulate only a few firms, to set price controls using a frontier method. Moreover, GME estimation can provide a useful and robust empirical methodology for investigating the nexus between incentive-based regulation and the investment behavior of electric utilities, an issue recently debated in the literature for different countries (e.g., Cambini and Rondi 2010; Cullmann and Nieswand 2016; Huang and Söder 2017). Investment in the electricity sector in general, and in electricity distribution in particular, is increasingly important with the energy transition, which involves installing new capacity and replacing existing assets. Investment is also driven by new loads such as electric vehicles and by the widespread deployment of smart metering systems, which imply very large investments for distribution utilities. Given that distribution utilities are regulated, the design of incentive mechanisms is crucial for the energy sector (e.g., Cambini et al. 2014; Banovac et al. 2009; Cullmann and Nieswand 2016).

Notes

Regulation of the electricity distribution sector is changing from an efficiency-oriented instrument to one that also includes the provision of service quality (e.g., Cambini et al. 2014).

A very brief discussion of the radial input distance function and GME estimation, using a sample of eleven companies, was presented at the EEM 2016 conference (Silva et al. 2016). That was a first, preliminary study in which efficiency scores were generated, but with neither statistical analysis nor a full interpretation of the efficiency results. The sample used in this study is different and includes two additional companies that ERSE considers outliers.

Because of these unresolved issues underlying the StoNED model, namely the fact that it involves only one output, this method is not used in this study. The models employed in the empirical application involve more than one output.

Price (revenue) caps are established on the basis of the general formula RPI − X; that is, the maximum rate of price (revenue) increase is equal to the inflation rate of the retail price index (RPI) minus the expected efficiency savings (X).
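To make the RPI − X rule concrete, the sketch below compounds the allowed price year by year, assuming the cap is applied multiplicatively to the previous year's allowed price and that X is a constant annual rate. The function name `price_cap_path` and all figures are hypothetical illustrations, not ERSE parameters.

```python
def price_cap_path(p0, rpi, x):
    """Maximum allowed price (or revenue) each year under an RPI - X cap.

    p0  : allowed price in the base year (hypothetical)
    rpi : annual retail-price-index inflation rates, e.g. 0.03 for 3 %
    x   : expected annual efficiency savings (the X factor), e.g. 0.02 for 2 %
    """
    path = []
    price = p0
    for inflation in rpi:
        # The maximum rate of increase is RPI minus X.
        price *= 1 + inflation - x
        path.append(price)
    return path


# Hypothetical figures: base price 100, RPI of 3 % per year, X = 2 %.
caps = price_cap_path(100.0, [0.03, 0.03, 0.03], 0.02)
print([round(c, 4) for c in caps])  # [101.0, 102.01, 103.0301]
```

A larger X lowers the whole path, which is how the expected efficiency savings are passed on to consumers; when X exceeds RPI the allowed price falls in real and nominal terms.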

Flexible functional forms are either second-order numerical or second-order differential approximations to an arbitrary function and impose considerably fewer restrictions prior to estimation than traditional technologies such as the Cobb–Douglas, Leontief and CES forms. The translog form is a second-order numerical approximation of the natural logarithm of an arbitrary function (Chambers 1988).
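The sketch below shows, for a generic production function rather than the paper's own distance-function specification, why the translog is less restrictive: it adds second-order terms in logs, so output elasticities vary with the input levels, and it collapses to Cobb–Douglas when all second-order coefficients are zero. The function names and coefficient values are hypothetical, and the second-order coefficient matrix is assumed to be symmetric.

```python
import math


def translog_log_output(x, a0, a, b):
    """ln f(x) = a0 + sum_i a_i ln x_i + 0.5 * sum_i sum_j b_ij ln x_i ln x_j.

    x : positive inputs; a : first-order coefficients;
    b : symmetric matrix (list of lists) of second-order coefficients.
    """
    lx = [math.log(v) for v in x]
    first = sum(ai * li for ai, li in zip(a, lx))
    second = 0.5 * sum(b[i][j] * lx[i] * lx[j]
                       for i in range(len(lx)) for j in range(len(lx)))
    return a0 + first + second


def output_elasticity(x, a, b, i):
    """d ln f / d ln x_i = a_i + sum_j b_ij ln x_j (assumes b is symmetric)."""
    return a[i] + sum(b[i][j] * math.log(x[j]) for j in range(len(x)))


zeros = [[0.0, 0.0], [0.0, 0.0]]
# With b = 0 the translog is Cobb-Douglas: f = 4**0.5 * 9**0.5 = 6.
print(round(math.exp(translog_log_output([4.0, 9.0], 0.0, [0.5, 0.5], zeros)), 6))  # 6.0
# With a nonzero b_11 the elasticity depends on where it is evaluated.
print(round(output_elasticity([math.e, 1.0], [0.5, 0.5], [[0.1, 0.0], [0.0, 0.0]], 0), 6))  # 0.6
```

Because elasticities are functions of the data, studies using the translog typically report them at a chosen point, such as the sample means of inputs and outputs.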

Besides the small sample size, there is a strong correlation between outputs in this study, as discussed in Sect. 4. The GME estimator is a suitable information-theoretic method under these circumstances.

The supports are defined as closed and bounded intervals in which each parameter or error is restricted to lie.
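In GME estimation (Golan et al. 1996), each unknown parameter or error is written as a weighted average of points in its support, with probability weights to be estimated; uniform weights place the parameter at the centre of the support, and the estimator chooses the weights with maximum entropy that are consistent with the model constraints. The sketch below illustrates only this building block for a single parameter and a single hypothetical moment constraint; it is not the SFA with GME estimator used in the paper. Five equally spaced support points, the function names, and the target value are assumptions made for illustration.

```python
import math


def support_points(lo, hi, m=5):
    """m equally spaced points on the closed, bounded interval [lo, hi]."""
    step = (hi - lo) / (m - 1)
    return [lo + k * step for k in range(m)]


def entropy(p):
    """Shannon entropy -sum p ln p, with 0 ln 0 taken as 0."""
    return -sum(pk * math.log(pk) for pk in p if pk > 0)


def maxent_probs(z, target_mean, iters=200):
    """Maximum-entropy probabilities on support z subject to sum(p * z) = target_mean.

    The solution has the Gibbs form p_k proportional to exp(lam * z_k); the implied
    mean is increasing in lam, so lam is found by bisection.
    """
    if not min(z) < target_mean < max(z):
        raise ValueError("target mean must lie strictly inside the support")

    def probs(lam):
        c = max(lam * zk for zk in z)  # subtract the largest exponent to avoid overflow
        w = [math.exp(lam * zk - c) for zk in z]
        s = sum(w)
        return [wk / s for wk in w]

    def mean(lam):
        return sum(pk * zk for pk, zk in zip(probs(lam), z))

    lo, hi = -1.0, 1.0
    while mean(lo) > target_mean:  # widen the bracket until it contains the root
        lo *= 2
    while mean(hi) < target_mean:
        hi *= 2
    for _ in range(iters):  # fixed iteration count avoids float-precision stalls
        mid = 0.5 * (lo + hi)
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return probs(0.5 * (lo + hi))


z = support_points(-10.0, 10.0)        # [-10.0, -5.0, 0.0, 5.0, 10.0]
p = maxent_probs(z, 2.0)               # hypothetical constraint: parameter equals 2
print(round(sum(pk * zk for pk, zk in zip(p, z)), 6))  # 2.0
```

With no constraint pulling the weights away from uniform, the parameter sits at the support centre (0 here) with entropy ln 5; any constraint lowers the entropy, which is the sense in which the support acts as prior information.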

The Serbian EPS and Croatian HEP-ODS are considered outliers by ERSE (ERSE 2011).

In the regulatory period 2012–2014, ERSE sought to improve the methodology applied to the distribution activity, with the objective of decreasing OPEX without harming investment. As a result, the price-cap methodology is applied only to OPEX, while capital costs (CAPEX) are analyzed separately. With CAPEX excluded from the price cap, the company is required to propose and carry out a given amount of investment for the regulatory period, thereby avoiding the effects of excessive investment. Moreover, this implies remunerating the accepted investment at the company's cost of capital (ERSE 2011).

VRS is the most relaxed form of returns to scale in the sense that it allows not only constant returns to scale but also increasing and decreasing returns to scale (Fried et al. 2008, chapter 1).

For details on this procedure, see Färe et al. (1994), chapter 3.

Another strategy based on Campbell et al. (2008) was implemented to define the supports in matrix B. Although the efficiency estimates are different, the rankings in terms of efficiency are equal and the elasticities computed at the mean values of inputs and outputs are identical.

Separability between the outputs and the fixed input factor implies that the marginal rate of transformation between the two outputs does not depend on the network length.

For the sets of supports [-10, 10] and [-4, 4], the results are similar.

References

Agrell, J., & Bogetoft, P. (2014). International benchmarking of electricity transmission system operators. In W. Mielczarski & T. Siewierski (Eds.), The 11th international conference on the European energy market, EEM 2014. Los Alamitos, CA: IEEE.

Andor, M., & Hesse, F. (2014). The StoNED age: The departure into a new era of efficiency analysis? A Monte Carlo comparison of StoNED and the “oldies” (SFA and DEA). Journal of Productivity Analysis, 41, 85–109.

Banovac, E., Glavić, M., & Tešnjak, S. (2009). Establishing an efficient regulatory mechanism—Prerequisite for successful energy activities regulation. Energy, 34(2), 178–189.

Cambini, C., Croce, A., & Fumagalli, E. (2014). Output-based incentive regulation in electricity distribution: Evidence from Italy. Energy Economics, 45, 205–216.

Cambini, C., & Rondi, L. (2010). Incentive regulation and investment: Evidence from European energy utilities. Journal of Regulatory Economics, 38, 1–26.

Campbell, R., Rogers, K., & Rezek, J. (2008). Efficient frontier estimation: A maximum entropy approach. Journal of Productivity Analysis, 30(3), 213–221.

Chambers, R. G. (1988). Applied production analysis: A dual approach. New York: Cambridge University Press. ISBN: 0521314275.

Cossent, R., Gómez, T., & Frías, P. (2009). Towards a future with large penetration of distributed generation: Is the current regulation of electricity distribution ready? Regulatory recommendations under a European perspective. Energy Policy, 37, 1145–1155.

Cullmann, A., & Nieswand, M. (2016). Regulation and investment incentives in electricity distribution: An empirical assessment. Energy Economics, 57, 192–203.

ERSE. (2011). Parâmetros de regulação para o período 2011 a 2014 [Regulation parameters for the period 2011 to 2014]. Lisbon: ERSE.

Estache, A., Rossi, M. A., & Ruzzier, C. A. (2004). The case for international coordination of electricity regulation: Evidence from the measurement of efficiency in South America. Journal of Regulatory Economics, 25, 271–295.

Färe, R., & Primont, D. (1995). Multi-output production and duality: Theory and applications. Dordrecht: Kluwer Academic Publishers. ISBN: 9789401042840.

Farsi, M., & Filippini, M. (2004). Regulation and measuring cost-efficiency with panel data models: Application to electricity distribution utilities. Review of Industrial Organization, 25, 1–19.

Farsi, M., Filippini, M., & Greene, W. (2006). Application of panel data models in benchmarking analysis of the electricity distribution sector. Annals of Public and Cooperative Economics, 77, 271–290.

Fried, H. O., Lovell, C. A. K., & Schmidt, S. S. (2008). The measurement of productive efficiency and productivity growth. New York: Oxford University Press. ISBN: 9780195183528.

Giannakis, D., Jamasb, T., & Pollitt, M. (2005). Benchmarking and incentive regulation of quality of service: An application to the UK electricity distribution networks. Energy Policy, 33, 2256–2271.

Golan, A. (2018). Foundations of info-metrics: Modeling, inference, and imperfect information. New York: Oxford University Press.

Golan, A., Judge, G., & Miller, D. (1996). Maximum entropy econometrics: Robust estimation with limited data. Chichester: Wiley. ISBN: 9780471953111.

Haney, A. B., & Pollitt, M. G. (2009). Efficiency analysis of energy networks: An international survey of regulators. Energy Policy, 37, 5814–5830.

Haney, A. B., & Pollitt, M. G. (2011). Exploring the determinants of “best practice” benchmarking in electricity network regulation. Energy Policy, 39, 7739–7746.

Haney, A. B., & Pollitt, M. G. (2013). International benchmarking of electricity transmission by regulators: A contrast between theory and practice? Energy Policy, 62, 267–281.

Huang, Y., & Söder, L. (2017). Assessing the impact of incentive regulation on distribution network investment considering distributed generation integration. International Journal of Electric Power and Energy Systems, 89, 126–135.

Jamasb, T., & Pollitt, M. (2001). Benchmarking and regulation: International electricity experience. Utilities Policy, 9, 107–130.

Jamasb, T., & Pollitt, M. (2003). International benchmarking and regulation: An application to European electricity distribution utilities. Energy Policy, 31, 1609–1622.

Kumbhakar, S. C., & Lovell, C. A. K. (2000). Stochastic frontier analysis. Cambridge: Cambridge University Press. ISBN: 0521481848.

Kuosmanen, T. (2006). Stochastic nonparametric envelopment of data: Combining virtues of SFA and DEA in a unified framework. MTT discussion paper 3/2006.

Kuosmanen, T., & Johnson, A. L. (2010). Data envelopment analysis as nonparametric least squares regression. Operations Research, 58(1), 149–160.

Kuosmanen, T., & Kortelainen, M. (2012). Stochastic non-smooth envelopment of data: Semi-parametric frontier estimation subject to shape constraints. Journal of Productivity Analysis, 38, 11–28.

Lins, M. P. E., Sollero, M. K. V., Calôba, G. M., & Silva, A. C. M. (2007). Integrating the regulatory and utility firm perspectives, when measuring the efficiency of electricity distribution. European Journal of Operational Research, 181, 1413–1424.

Lowry, M. N., & Getachew, L. (2009). Statistical benchmarking in utility regulation: Role, standards and methods. Energy Policy, 37, 1323–1330.

Macedo, P., Silva, E., & Scotto, M. (2014). Technical efficiency with state-contingent production frontiers using maximum entropy estimators. Journal of Productivity Analysis, 41(1), 131–140.

Moutinho, V., Robaina, M., & Macedo, P. (2018). Economic-environmental efficiency of European agriculture—A generalized maximum entropy approach. Agricultural Economics—Czech, 64(10), 423–435.

Pollitt, M. (2005). The role of efficiency estimates in regulatory price reviews: Ofgem’s approach to benchmarking electricity networks. Utilities Policy, 13, 279–288.

Preckel, P. V. (2001). Least squares and entropy: A penalty function perspective. American Journal of Agricultural Economics, 83(2), 366–377.

Rezek, J. P., Campbell, R. C., & Rogers, K. E. (2011). Assessing total factor productivity growth in Sub-Saharan African agriculture. Journal of Agricultural Economics, 62(2), 357–374.

Robaina-Alves, M., Moutinho, V., & Macedo, P. (2015). A new frontier approach to model the eco-efficiency in European countries. Journal of Cleaner Production, 103, 562–573.

Shephard, R. W. (1953). Cost and production functions. Princeton: Princeton University Press.

Shuttleworth, G. (2003). Firm-specific productive efficiency: A response. The Electricity Journal, April, 42–50.

Silva, E., Macedo, P., & Soares, I. (2016). An alternative benchmarking approach for electricity utility regulation using maximum entropy. In J. T. Saraiva & J. N. Fidalgo (Eds.), The 13th international conference on the European energy market, EEM 2016. New York, NY: IEEE.

Tonini, A., & Pede, V. (2011). A generalized maximum entropy stochastic frontier measuring productivity accounting for spatial dependency. Entropy, 13(11), 1916–1927.

Zhou, P., Ang, B. W., & Poh, K. L. (2008). A survey of data envelopment analysis in energy and environmental issues. European Journal of Operational Research, 189, 1–18.

Funding

This work was supported in part by the Portuguese Foundation for Science and Technology (FCT—Fundação para a Ciência e a Tecnologia), through CIDMA—Center for Research and Development in Mathematics and Applications, within project UID/MAT/04106/2013.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We would like to express our gratitude to the Editor, Professor Menahem Spiegel, and two anonymous referees. They offered extremely valuable suggestions for improvements.

Appendices

Appendix A

See Table 9.

Appendix B: DEA models

DEA is a non-parametric, mathematical programming-based method to generate the efficient frontier in a given data set and measure the efficiency of each firm relative to the frontier. It fully envelops the data and makes no accommodation for noise (Fried et al. 2008, chapter 1).

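The DEA model, assuming CRS, that generates the technical efficiency of each firm i is given as:

\[
\begin{aligned}
{\text{TE}}({\text{y}}^{\text{i}} ,{\text{x}}^{\text{i}} ) = \min_{\lambda ,\,z}\; & \lambda \\
\text{s.t.}\; & \sum_{j=1}^{J} z^{j} y_{m}^{j} \ge y_{m}^{i}, \quad m = 1, \ldots ,M, \\
& \sum_{j=1}^{J} z^{j} x_{n}^{j} \le \lambda\, x_{n}^{i}, \quad n = 1, \ldots ,N, \\
& z^{j} \ge 0, \quad j = 1, \ldots ,J,
\end{aligned}
\]
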
where \( {\text{y}}^{\text{i}} \) and \( {\text{x}}^{\text{i}} \) are, respectively, the M-output vector and the N-input vector of firm i, z is a J × 1 intensity vector, where J is the total number of firms in the data set. λ is a scalar whose optimal value is the technical efficiency score of firm i, \( {\text{TE}}({\text{y}}^{\text{i}} ,{\text{x}}^{\text{i}} ) \), which, in turn, is equal to the inverse of the value of the radial distance function.

In the minimization problem, technical efficiency of firm i is assessed in terms of its ability to contract its input vector subject to the efficient frontier. If a radial contraction of the input vector is possible for firm i, its optimal λ < 1 (i.e., firm i is technically inefficient), while if the radial contraction is not possible, its optimal λ = 1 (i.e., firm i is technically efficient).

For model 1, M = 3 and N = 1. For model 2, M = 2 and N = 2, where network length is treated as a fixed input. Models 3 and 4 are identical to models 1 and 2, respectively, except that they assume VRS. This assumption is modeled by adding the convexity constraint \( \sum\nolimits_{j} {z^{j} } = 1 \) to the minimization problem presented above.
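The four DEA models above have several outputs or inputs and therefore require a linear-programming solver. In the simplest case of one input and one output, however, the input-oriented problem can be solved by hand, which makes the effect of the convexity constraint easy to see. The sketch below is limited to that special case: under CRS the score is each firm's productivity relative to the best observed productivity, and under VRS an optimal basic solution mixes at most two peer firms (the problem then has only the output and convexity constraints), so the peers can be enumerated. Function names and data are hypothetical.

```python
def te_crs(x, y):
    """Input-oriented CRS technical efficiency with one input x and one output y.

    The envelopment problem reduces to each firm's productivity y/x divided by
    the best observed productivity in the sample.
    """
    best = max(yj / xj for xj, yj in zip(x, y))
    return [(yi / xi) / best for xi, yi in zip(x, y)]


def te_vrs(x, y):
    """Input-oriented VRS technical efficiency with one input and one output.

    With the convexity constraint sum(z) = 1 the problem has two constraints, so an
    optimal basic solution uses at most two peer firms; enumerate them.
    """
    n = len(x)
    scores = []
    for xi, yi in zip(x, y):
        # Single peers producing at least y_i (firm i itself always qualifies).
        need = min(x[j] for j in range(n) if y[j] >= yi)
        # Pairs of peers whose convex combination produces exactly y_i.
        for j in range(n):
            for k in range(n):
                if y[j] < yi < y[k]:
                    t = (y[k] - yi) / (y[k] - y[j])  # weight on firm j
                    need = min(need, t * x[j] + (1 - t) * x[k])
        scores.append(need / xi)  # optimal lambda = minimal input / own input
    return scores


# Hypothetical data for four firms (one input, one output).
x = [2.0, 4.0, 3.0, 5.0]
y = [1.0, 3.0, 2.0, 3.0]
print([round(s, 4) for s in te_crs(x, y)])  # [0.6667, 1.0, 0.8889, 0.8]
print([round(s, 4) for s in te_vrs(x, y)])  # [1.0, 1.0, 1.0, 0.8]
```

Because the convexity constraint shrinks the reference technology, VRS scores are never below the corresponding CRS scores, and more firms are typically found fully efficient.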

About this article

Cite this article

Silva, E., Macedo, P. & Soares, I. Maximum entropy: a stochastic frontier approach for electricity distribution regulation. J Regul Econ 55, 237–257 (2019). https://doi.org/10.1007/s11149-019-09383-y

DOI: https://doi.org/10.1007/s11149-019-09383-y