Abstract

As it is well known from the time-series literature, GARCH processes with non-normal shocks provide better descriptions of stock returns than GARCH processes with normal shocks. However, in the derivatives literature, American option pricing algorithms under GARCH are typically designed to deal with normal shocks. We thus develop here an approach capable of pricing American options with non-normal shocks. The approach uses an equilibrium pricing model with shocks characterized by a Johnson \(S_{u}\) distribution and a simple algorithm inspired from the quadrature approaches recently proposed in the option pricing literature. Numerical experiments calibrated to stock index return data show that this method provides accurate option prices under GARCH for non-normal and normal cases.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The variance clustering phenomenon observed in stock returns is a well-known empirical regularity. ARCH and GARCH processes introduced in Engle (1982) and Bollerslev (1986), have now become standard models capable of capturing this critical characteristic. Another salient feature of stock returns is the non-normality of the innovations filtered with these models. For example, as shown in Bollerslev (1987), Hsieh (1989) and Hansen (1994), the estimation of various GARCH specifications on stock returns with distributions allowing for skewness and kurtosis different from those of a normal distribution provide more adequate descriptions of the data dynamics. Building on these empirical observations, the option pricing literature has recently examined models incorporating these two key characteristics.Footnote 1 In this literature, most of the papers examine European-style options. However, the majority of exchange-traded options are of American-style and require numerical algorithms that can handle the early exercise features associated with these securities.

In the GARCH option pricing literature, Stentoft (2008) examines American option pricing with non-normal shocks. Using least-squares Monte Carlo simulations, he computes American option values with shocks parameterized by a normal Inverse Gaussian distribution. Although the least-squares Monte Carlo approach represents a viable and flexible pricing tool, as outlined in Glasserman (2004), such an algorithm is not free of concerns. For example, the accuracy of the computed prices depends on the choice of the basis functions representing the independent variables in the regressions. Also, in finite samples, because these algorithms produce a suboptimal early exercise policy, they provide a lower bound of the correct option prices. A numerical method less prone to such caveats would be a welcomed addition to the financial analyst toolbox. With this objective in mind, we develop here a pricing algorithm for options under GARCH with non-normal shocks inspired by the recent literature on quadrature option pricing algorithms.

This literature, developed in the early 2000s, consists in a series of papers which sequentially solved the different problems associated with the application of quadrature techniques to option pricing. In a nutshell, these quadrature approaches compute option values recursively from the maturity of the instrument on a grid of stock prices. At each time step, the early exercise and continuation values are computed and compared to obtain the option value for a stock price on the grid. A critical step in this recursive procedure is the computation of the continuation value. In this step, the integral associated with the expected value is computed using quadrature techniques such as the trapezoid, Simpson or Gauss–Legendre. In Sullivan (2000), a first attempt was put forward with Gaussian quadratures and function approximations using Chebyshev polynomials. In Andricopoulos et al. (2003), the quadrature approach is put on more solid grounds with a more widely applicable algorithm which bypassed the need of Chebyshev polynomials. For underlying securities processes with transition densities available in closed form, their algorithm allows fast and accurate computations of option prices for path dependent cases on one underlying asset, together with American-style options. In Andricopoulos et al. (2007), the method developed in their earlier paper is extended to cover more complex and challenging problems in option valuations involving one or more underlying. More recently, Chen et al. (2014) lift the requirement of underlying securities with closed-form transition densities by using precise density approximations, which results in a genuinely universal method capable of pricing derivative securities in a wide variety of contexts. Other contributions to this literature include Chung et al. (2010) who apply a fast quadrature engine to the algorithm of Andricopoulos et al. (2003). Finally, Cosma et al. (2016), Simonato (2016), and Su et al. (2017) show how the quadrature approaches can be used with a time-homogenous and equally spaced price grid to provide substantial savings in computational efforts without losses in precision.

Here, we contribute to the literature on American options in the GARCH context by showing how a quadrature approach can be developed to handle GARCH processes with non-normal shocks. Such an extension is non-trivial as it requires a different pricing and distributional framework. For this purpose, we use the equilibrium pricing model proposed in Duan (1999) coupled with random shocks characterized by a Johnson \(S_{u}\) distribution (Johnson 1949). As discussed in Simonato and Stentoft (2015), Johnson \(S_{u}\) error terms fit the data well and offer a range of attainable skewness and kurtosis similar to that of commonly used alternatives such as the non-central t. Importantly, in the context of the equilibrium pricing model used here, they represent a natural choice for which it becomes possible to obtain the distribution of the random shocks under the risk-neutral measure. We also contribute to the quadrature literature by extending the simplified quadrature approach examined in Simonato (2016) to the more complex GARCH case. While the typical quadrature scheme uses a time-varying grid, the procedure examined here uses an evenly spaced, time-homogenous price grid which results in a simple and efficient algorithm.

Compared to the other numerical techniques that have been developed to compute American options under GARCH for the Normal case, the algorithm presented here shows important differences. A first difference is the use of the density function instead of the distribution function. For example, in Duan and Simonato (2001) and Ben-Ameur et al. (2009), the repeated use of the Gaussian distribution function is a crucial requirement. Here, as in other quadrature schemes, the use of the density function leads to a more flexible algorithm since these are typically easier to compute than distribution functions which must be approximated numerically, even in the simplest case. Second, the algorithm we propose is also different from the lattice techniques developed in Ritchken and Trevor (1999), Cakici and Topyan (2000) and Lyuu and Wu (2005). Here the actual dynamics of the process is used directly while these lattices only provide an approximate GARCH dynamics. Third, the algorithm is also different from the quadrature algorithm of Chung et al. (2010) which can only handle the Gaussian GARCH case, and that must use interpolations in the variance dimension. Their procedure is also more complex and computationally involved than the one we propose as it requires recomputing the densities at every time steps. Finally, we note that the algorithm we develop shows some similarities to the quadrature based approaches independently developed in Cosma et al. (2016) and Simonato (2016). Although they do not tackle the GARCH case, their algorithms also use a time-homogenous grid of prices in the quadrature context. The algorithm we present extends these papers by developing a simple and efficient implementation strategy designed explicitly for the GARCH case which brings numerous advantages regarding computing speed and memory requirements.

The remainder of the paper is as follows. The physical and risk-neutral processes are described in Sect. 2. Section 3 presents the quadrature algorithm developed here while Sect. 4 discuss some key implementation issues. Section 5 examines numerical results under the Gaussian and Johnson \(S_{u}\) cases. In that section, we show the relevance of our non-normal time series model with a maximum likelihood analysis. Using the parameter estimates from this analysis, we examine the performance of the pricing algorithm for the Gaussian and non-Gaussian case. We then compare the results of our algorithm with those from the Markov chain approach of Duan and Simonato (2001). Section 6 concludes.

2 The physical and risk neutral processes

We assume the following dynamics for the stock price under the physical probability measure:

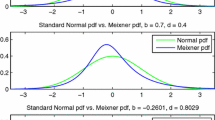

where \({\mathcal {F}}_{t}\) is the set containing all information up to and including time \(t, \ p_{t+1}=\ln P_{t+1}\) is the log of the stock price at \(t+1,\) and \(\sigma _{t+1}^{2}\) is the known conditional variance of the continuously compounded return. Here, \(\alpha\) is the expected return over the next period while \(\gamma _{t+1}\) is mean correction factor defined as \(e^{\gamma _{t+1}}=E_{t}\left( e^{\sigma _{t+1}\varepsilon _{t+1}}\right)\) which implies \(E_{t}\left[ P_{t+1}\right] =P_{t}e^{\alpha }\). The conditional variance follows a standard NGARCH(1,1) process with the usual parameter interpretation (see for example Duan and Simonato 2001). This GARCH specification is used here as an example. Other GARCH specifications are possible with only minor changes. The non-normal random shocks \(\left. \varepsilon _{t+1}\right| {\mathcal {F}}_{t}\) are assumed to be distributed according to a Johnson \(S_{u}\) distribution (introduced in Johnson 1949), with parameters a and b, denoted as \(J_{S_{u}}(a,b)\). Formally, \(\varepsilon _{t+1}\) is given by

where \(z_{t+1}\) is a standard normal random variable, and c and d are location and scale parameters that are functions of a and b. Computing these parameters can be done with

where \(M\left( \cdot \right)\) and \(V\left( \cdot \right)\) are simple functions described in “Appendix 1”. Such shocks have a mean of zero and a standard deviation of one. The skewness and kurtosis of these shocks are different from the normal case, and the values taken by a and b jointly determine the magnitude of these moments. A positive (negative) value of a induces a negative (positive) skewness while smaller (larger) values of b lead to larger (smaller) kurtosis values. However, these relationships are not independent since the skewness and kurtosis are simultaneous functions of a and b.

As shown in Simonato and Stentoft (2015), using the above physical process with the equilibrium pricing model of Duan (1999) and the assumption of a constant pricing parameter \(\lambda\), we obtain the following risk-neutral system which can be used to compute the prices of derivative securities:

and

where \(p_{t+1}^{*}\) is the log of the stock price under the risk-neutral measure \(Q, \ \sigma _{t}^{*}\) is the risk-neutral volatility, r is the periodic continuously compounded risk-free rate and \(z_{t}^{*}\) is a standard Gaussian random variable under measure Q. Here, \(\lambda\) is a constant pricing parameter which can be estimated using a maximum likelihood approach as in Stentoft (2008) and Simonato and Stentoft (2015). Although this parameter can be time-varying as in Duan (1999), Simonato and Stentoft (2015) show that option pricing with a time-varying or constant pricing parameter obtain very similar prices.Footnote 2 With this pricing parameter, the risk-neutral random shock \(\varepsilon _{t}^{*}\) becomes a four parameter Johnson \(S_{u}\) random variable, with parameters \(a^{*},\ b, \ c\) and d with moments \(\mu _{i}^{*}=E_{t} ^{Q}\left[ \varepsilon _{t+1}^{*i}\right]\) and \(a^{*}=a+\lambda .\) These expected values are available in closed form and are described in “Appendix 1”. We note that the above risk-neutral process uses an approximation which is required to compute some key expected values. The approximation uses a fourth order Taylor series which is, as shown in Simonato and Stentoft (2015), precise enough for all practical purposes.

3 American option prices: a quadrature algorithm

For the purpose of pricing American options in the above context, we use the following well-known recursive dynamic programming formulation:

with

where \(v_{t}\left( p_{t}^{*},\sigma _{t+1}^{2*}\right)\) is the option price at t, which is a function of the current log of stock price level, and the known variance level \(\sigma _{t+1}^{2*}.\) Inside the bracket of Eq. (9), the discounted expected value denotes the continuation value, while \(g\left( e^{p_{t}^{*}},K\right)\) is the payoff function of the option with strike price K when \(t=T\), or the early exercise value when \(t<T\), with \(\rho =1\) for a call option and \(\rho =-1\) for a put. Given the risk-neutral process specified in Sect. 2, the continuation value can be written more explicitly as:

where \(f\left( p_{t+1}^{*}\mid p_{t}^{*},\sigma _{t+1}^{2*}\right)\) is the risk-neutral conditional density of the log of stock price next period, given the current price and volatility level. In the context of Johnson \(S_{u}\) error terms, this density function is given by Eq. (20) in “Appendix 1”. The next subsection examines how a simple quadrature algorithm can be used to compute this dynamic programming problem on a grid of log of stock prices and variances.

3.1 The quadrature algorithm

Denote the time-homogenous grid of m equally spaced log of stock prices as \(\overline{{\mathbf {p}}}=\left[ {\overline{p}}_{1},{\overline{p}}_{2} ,\ldots ,{\overline{p}}_{m}\right] ^{\prime }\) with m an odd number and the currently observed log of the stock price as \({\overline{p}}_{\frac{m+1}{2} }=p_{0}\). The time-homogeneity will allow substantial computational savings since the transition densities required in the computations will be the same at all time steps, unlike the typical quadrature approaches. The prices on the grid must be equally spaced. As seen in a later section, substantial savings in memory will be achieved with such a spacing.

A time-homogenous grid of n values for the variance, denoted as \(\overline{\varvec{\sigma }}^{2}=\left[ {\overline{\sigma }}_{1} ^{2},{\overline{\sigma }}_{2}^{2},\ldots ,{\overline{\sigma }}_{n}^{2}\right] ^{\prime }\), is also required. As for the stock price grid, one of the elements of the variance grid must be set equal to the known variance level prevailing at \(t=0.\) Unlike the log of stock price, this grid need not be equally spaced. Details about how to build these grids are in the next section. Using the Cartesian product of sets \(\overline{{\mathbf {p}}}\) and \(\overline{\varvec{\sigma }}^{2}\), we obtain a two-dimensional grid with \(m\times n\) points, whose elements \(\left( i,j\right)\) represent the log of the stock price level in state i and the variance level in state j. A bar over a letter denotes a quantity associated with a point on this grid.

As noticed in Broadie and Detemple (1996), when performing numerical computations, one period before maturity, the continuation value of an American option can be replaced by a European option value. For the GARCH case, the option has a constant variance at this time step. A quasi-analytical formula can be derived and used to compute the European option price at time \(T-1\) on a point \(\left( i,j\right)\) on the grid. More specifically, we compute

for \(i=1\) to m and \(j=1\) to n, where \({\overline{v}}_{i,j,T-1}\) is the option price in state \(\left( i,j\right)\) and time \(T-1, \ A\left( \cdot \right)\) is a generic function denoting the quasi-analytical formula, \(\tau =1\) is the maturity of the option in number of periods, with r and \({\overline{\sigma }}_{j}\) being periodic values. “Appendix 2” provides the quasi-analytical formula to compute the option value for the model presented in the above section. This formula is quasi-analytical since it involves an integral over a standard normal which cannot be computed analytically. As indicated in the appendix, a Gauss–Legendre approach can be used to compute this integral in a fraction of a second with high precision.

For time-steps \(T-2\) to 0, the discounted continuation value given by Eq. (10) is computed numerically using a trapezoid rule (see for example Judd (1998)).Footnote 3 For the case of a GARCH process, when performing these computations, care must be taken to pick the prices in the appropriate variance state. “Appendix 3” shows that, given a stock price and variance in state (i, j), the continuation values can be computed recursively with the following formula:

for \(i=1\) to m and \(j=1\) to n. Here \(l_{i\rightarrow k}\) represents the index value of the variance on the \(k\)-th line of the grid which is the nearest to a quantity that we describe as the inherited variance level. We denote this quantity as \({\widehat{\sigma }}^{2}\) (notice the “hat”). We require this concept since, for GARCH processes, the variance next period is an inherited value, i.e., a function of the current shock to the stock price. This inherited variance is computed as

where

is the random shock that would induce a transition from state i to state k. The notation used to describe the index \(l_{i\rightarrow k}\), although a bit tedious, emphasizes that this value is a function of the prices transiting from state i to state k. This notation will prove useful in the next section where an efficient algorithm for the identification of these \(l_{i\rightarrow k}\) indexes will be proposed. This algorithm will allow substantial savings in memory and computing efforts. The Johnson density value is denoted by \(f\left( {\overline{p}}_{k}\mid {\overline{p}}_{i},\overline{\sigma }_{j}^{2}\right)\) and can be computed with Eq. (20) from “Appendix 1”, and \(w_{j}\) is the weight associated to the trapezoid integration scheme. Denoting by \(\Delta {\overline{p}}\) the difference between the equidistant points on the grid of log of stock prices, these weights are computed as \(w_{1}=w_{m}=\Delta {\overline{p}}/2\) and \(w_{j} =\Delta {\overline{p}}\) otherwise. With the above continuation values, we compute the option prices on the grid with

for \(i=1\) to m and \(j=1\) to n. At time \(t=0,\) the American option price is \({\overline{v}}_{\frac{m+1}{2},j_{0},0}\) where \(j_{0}\) is the index value of the variance grid which was set equal to the known variance at \(t=0.\)

3.2 Computing the grid

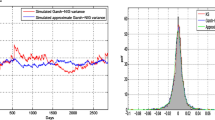

In order to compute the grid of stock prices and variances, we require an interval of representative values at the maturity of the option. For example, in the Gaussian GARCH case examined by Duan and Simonato (2001), these grids are obtained using the known standard deviations of the log of stock prices and variances at maturity. In the case of non-Gaussian shocks, there are no available formulas for such quantities. We therefore rely on a Monte Carlo simulation to obtain the required values. More specifically, for the log of stock price, the minimum and maximum values are computed as

where std\(\left( \left\{ {\widetilde{p}}_{j,T}\right\} _{j=1}^{M}\right)\) denotes the standard deviation of a sample of M simulated risk-neutral paths of log of stock prices at T, and q is a multiplication factor for the standard deviations. We compute the equally spaced grid in the log of stock price dimension with

for \(i=1\) to m and \(\delta _{p}=\left( {\overline{p}}_{m}-{\overline{p}} _{1}\right) /\left( m-1\right) .\) For the variance, we also rely on simulated values to determine the interval computed as

where \(\left\{ \ln \left( {\widetilde{\sigma }}_{j,T}^{2}\right) \right\} _{j=1}^{M}\) denotes a sample of M simulated risk-neutral paths of log of variances at T. We use the maximum and minimum values since the distribution is much less symmetric than the one of the log from the stock price. We then compute the grid with

for \(i=1\) to n and \(\delta _{\sigma }=\left( \ln \left( {\overline{\sigma }} _{n}^{2}\right) -\ln \left( {\overline{\sigma }}_{1}^{2}\right) \right) /\left( n-1\right) .\) These log values are then converted into levels, resulting in an unevenly spaced grid in the variance dimension. For options under GARCH, the initial variance (the variance next period) is one of the parameters required to compute the price. Because this variance is most probably not a part of this grid, we fix the element of the grid which is the closest to the initial variance, equal to this initial variance.

As it is the case with many numerical algorithms, the design of an efficient implementation procedure is a crucial step. The next section provides the details about how the continuation values can be efficiently computed by exploiting some regularities that are inherent to the above algorithm.

4 Implementation

The time-homogeneous and equally spaced grid of stock prices can bring significant reductions in computing efforts by exploiting regularities associated with the density values. Using the simplified representation explained below, “Appendix 4” presents a compact and efficient algorithm for computing the \(m\times 1\) vector of continuation values in a variance state \({\overline{\sigma }}_{j}^{2}\). The algorithm allows for the following advantages in computation and storage when compared to a naive implementation: (1) computation and storage of \(\left( 2m-1\right) \times n\) density values instead of \(\left( m\times m\right) \times n\) values; (2) computation and storage of \(\left( 2m-1\right) \times n\) index values of inherited variance level positions, instead of \(\left( m\times m\right) \times n\) index values; (3) avoids multiplications by negligible density values. The fundamental regularities leading to the above advantages are explained below in the rest of this section. Readers wishing to skip this section can jump directly to Sect. 5 which presents numerical results about the performance of the algorithm.

A first set of critical elements leading to significant simplification are the conditional density values. Given a state of variance \({\overline{\sigma }}_{j}^{2},\) the conditional density values required to compute the continuation value in Eq. (12) are the same for all time-steps, unlike the typical quadrature scheme for which it will be time-varying. Hence, avoiding the repeated computation of this set of quantities results in substantial computational savings. We can store these values in a \(m\times m\) matrix written

It is important to notice that, because the points on the log of stock price grid are equally spaced, we can obtain all the elements of matrix \({\mathbf {F}}\left( {\overline{\sigma }}_{j}^{2}\right)\) that are above the diagonal from the elements of the last line. Conversely, we can obtain all the elements below the diagonal from the elements of the last line. All the elements on the diagonal are equal to \(f\left( {\overline{p}}_{1}\mid {\overline{p}}_{1},{\overline{\sigma }}_{j}^{2}\right)\). These regularities can be summarized more formally as:

where \(F_{i,k}\left( {\overline{\sigma }}_{j}^{2}\right)\) is element \(\left( i,k\right)\) of matrix \({\mathbf {F}}\left( {\overline{\sigma }}_{j}^{2}\right) .\) Given such a structure, we reduce the storage and computational costs from \(\left( m\times m\right) \times n\) elements, to \(\left( m\times 2-1\right) \times n\) elements. Another important point to notice is about the magnitude of the elements. The elements on the first line are monotonically decreasing towards zero, while the elements on the last line are monotonically increasing towards \(f\left( {\overline{p}}_{m}\mid {\overline{p}}_{m},{\overline{\sigma }} _{j}^{2}\right) .\) Using a threshold value below which the density value is considered too small to be relevant, we can easily predict which elements need to be multiplied when performing the expected value computations.

A second set of elements leading to important simplifications is the set of indexes of nearest variance values. These are required to pick the appropriate variance state when computing the continuation values. Similarly to the conditional density values, these index can be stored in a matrix depicted as

where \(l_{i\rightarrow j}\) is the index value of the variance on line i of the grid which is the nearest to \({\widehat{\sigma }}^{2}\), the inherited variance level determined by the transition of the stock price from state i to j. We observe that all the elements of this matrix of indexes can also be obtained from the first and last line using the relations summarized as:

where \(L_{i,k}\left( {\overline{\sigma }}_{j}^{2}\right)\) is element i, k of matrix \({\mathbf {L}}\left( {\overline{\sigma }}_{j}^{2}\right) .\) Again, the storage and computational costs can be reduced from \(\left( m\times m\right) \times n\) elements, to \(\left( 2m-1\right) \times n\) elements. All in all, these above compact representations for the transition densities and nearest variance indexes represent a reduction in storage and computation of \(\left( m\times m\right) \times 2n\) elements, to \(\left( 2m-1\right) \times 2n\) elements.

The simple and compact algorithm presented in “Appendix 4” uses the above simplifications and monotonicity properties of density values to computes a \(m\times 1\) vector of non-discounted continuation values for a given variance state j. Discounting the continuation values given in output by this algorithm, a vector of option prices in variance state j at time t can then be computed with Eq. (15).

5 Numerical results

In this section, we first present some maximum likelihood analysis of the NGARCH model with the assumption of a constant pricing parameter under the Gaussian and Johnson distribution hypothesis. The numerical performance of the algorithm for computing the values of European and American style options is then examined using the estimated parameters.

5.1 Parameter estimates

As in Stentoft (2008) and Simonato and Stentoft (2015), under the assumption of a constant pricing parameter, the inputs required to compute the option prices can be obtained from the following system:

where

For the \(Normal\left( 0,1\right)\) case, which corresponds to the model in Duan (1995), we obtain the likelihood function from the Gaussian density. For the \(J_{s_{u}}\left( a,b\right)\) case, we use the Johnson \(S_{u}\) density given by Eq. (20). Table 1 presents the parameters obtained by maximizing the log-likelihood function for both cases with daily S&P 500 value-weighted returns (including dividends) from CRSP for the beginning of 1990 to the end of 2015. For both cases, the estimated parameters are all significant and very close to each other. Such a result is anticipated since the maximum likelihood estimator of the Gaussian density can be seen as a quasi-maximum likelihood estimator capable of estimating the parameters of a GARCH process with non-Gaussian shocks. Although the two models are non-nested, it is interesting to notice that the likelihood value obtained with the Johnson \(S_{u}\) density shows a much higher value with only two additional parameters, which are both statistically significant. Since a Johnson \(S_{u}\) random variable is built from an invertible function of a standard normal random variable, using the filtered \(\varepsilon _{t}\) from the MLE approach, it is possible to obtain the corresponding standard normal shocks. A normality test about these standard shocks can then be used to assess the relevance of the assumed distribution. The Jarque–Berra test shows that the hypothesis of the standard normal residual at the source of the Johnson shocks cannot be rejected. A visual inspection with a quantile-to-quantile plot also confirms this result.

5.2 Option prices

In this section, we examine the performance of the algorithm for pricing European and American put options. For the European case, we benchmark our analysis with precise Monte Carlo simulated prices. For the American case, there are no benchmarks for GARCH with non-normal shocks. Nevertheless, the convergence results obtained for European options should translate to the American case.

Although the goal of the paper is to examine American option pricing in the non-normal GARCH context, it is interesting to first examine the performance of the algorithm in the more straightforward Gaussian case. For this case, we use the quadrature algorithm of Sect. 3.1 implemented with the analytical formula at the penultimate time step given by a Black–Scholes price and the normal density. More specifically, we compute the analytical formula in Eq. (11) with

where \(BSput\left( \cdot \right)\) is the Black and Scholes (1973) formula for a put option computed with a daily variance and interest rate.

For the subsequent computations with Eq. (12), we use the normal density

where \(\mu _{i,j}={\overline{p}}_{i}+r-\frac{1}{2}{\overline{\sigma }}_{j}^{2}\). In these computations, it is important to notice that the weights that are applied to the value function in Eq. (12) are the product of a density and a trapezoid weight \(\Delta {\overline{p}}.\) We use a target value for this product in order to determine the threshold value used in the algorithm presented in “Appendix 4” and below which the density value is considered negligible. More specifically, we compute the algorithm threshold with

For all the results presented in this paper, we use a target threshold of \(1\times 10^{-7}\). The use of a smaller threshold produces results that are virtually identical. Finally, to determine the minimum and maximum values of the log of stock prices, we set \(q=20\) in Eq. (16), i.e., an interval with 20 standard deviations for the log of stock prices.

Table 2 looks at European and American option prices replicating the examples found in Duan and Simonato (2001) and Ben-Ameur et al. (2009). The parameters from these examples present a low unconditional level and a low persistence level of the variance process. The persistence level indicates how close is the variance process from being non-stationary (integrated). A persistence smaller (larger) than one indicates a stationary (non-stationary) variance process. A persistence level smaller but very close to one indicates a case with high persistence. In the GARCH context, this is an important feature since such variance processes can generate larger variance levels, which in turn can affect the precision of the numerical approach. For this example, we obtain an unconditional variance and a persistence level of 0.200 and 0.909 for the physical process, and 0.221 and 0.925 for the risk-neutral process.Footnote 4 For the European option case, the benchmarks are accurate Monte Carlo European put prices computed with 10 million sample paths and a Black–Scholes control variate. As shown by the numbers in the table, as we increase the values of m and n, the quadrature prices become remarkably close to the benchmarks, with differences at the fourth decimal place. Shorter maturities converge more quickly since the range of possible values is smaller. We observe a similar behavior for the American put prices with only minimal differences from the benchmark values.

The second example presented in Table 3 also examines a Gaussian case. It uses the maximum likelihood parameters obtained for the normal case in Table 1 with an initial variance equal to the unconditional level of the physical variance process. This case presents a higher unconditional level and a higher persistence of the variance process when compared to the one examined in the previous table. The annualized unconditional physical variance and the persistence level are computed as 0.237 and 0.991 for the risk-neutral process. Table 3 shows results which are qualitatively similar to those we find in the previous table, with a small loss in precision for larger maturities. We attribute these differences to the higher persistence level of the risk-neutral variance process.

The first panel of Table 4 presents the computing times (in seconds) for American options computed with the parameters from the second example and various grid sizes. These computing times for this example are typically larger than those of the low persistence level example. The reported times do not include the time to compute the Monte Carlo inputs (which is the same for all cases with similar maturities). The prices are computed with Matlab and compiled C coded functions automatically generated with the Matlab Coder. For values of m smaller or equal to 1001, the computing times are quite low and range from a few seconds to a couple of minutes for longer maturities. These times grow quickly for larger values of m and n and can become excessive for long maturities and large values of m and n such as 2, 001. Fortunately, these large computation times can be cut down by computing the prices with \(n<m\). This is possible since the magnitude of daily variances are much smaller (and easier to cover with a smaller grid) than the magnitude of log of prices. Values of n that are smaller than m by a factor of three or four provide answers converging to the benchmarks. Table 4 shows the computation times that are obtained with \(n\approx m/3,\) while Table 5 presents the option prices computed with this modification. Significant gains are made in computing efforts, with few losses in terms of precision, as the computing times are (roughly) divided by three.

The third and fourth examples are presented in Tables 6 and 7. These tables examine the Johnson \(S_{u}\) cases for the low and high variance persistence level. The computations are implemented with the algorithm described in Sect. 3. Table 6 uses the same parameters as those in Table 2 with the additional Johnson parameters set to \(a=0.5\) and \(b=2.5.\) Such parameter values generate shocks with skewness and kurtosis of \(-0.27\) and 3.93. The physical unconditional variance and persistence level for the physical variance process are thus identical to those of the example in Table 2.Footnote 5 As in the Gaussian case, we see that the convergence towards the Monte Carlo benchmark is very good with perhaps slightly larger pricing errors for deep in-the-money options. For the American case, there are no benchmark values, but as in the Gaussian case, the numbers stabilize nicely at different values of m and n, depending on the maturity. Table 7 examines the case where the parameters are obtained from the MLE analysis. For larger maturities, convergence is slower but the computed prices also reach the benchmark values with a good precision, again more quickly for smaller than longer maturities.

For the Johnson case, computing times are typically much larger than the Gaussian case. These larger computing times are caused by the larger probabilities of transiting to prices that are far away because of the negative skewness and larger kurtosis values. Hence, for a same target threshold value, there are more computations to be performed by the algorithm in the Johnson case. The first panel of Table 8 reports the computing times that are achieved for at-the-money American put options using the parameters obtained from the MLE analysis presented above. As in the second Gaussian example, this case presents a higher unconditional level and a higher-persistence of the variance process. Again, it is possible to reduce the computing times to much lower levels by reducing the number of grid points in the variance dimension, as indicated in the second of the table. Table 9 presents the quadrature prices for this case with \(n\approx m/3\). Again, the convergence to the Monte Carlo benchmarks is very good, with some discrepancies for the larger maturity and deep in-the-money options.

5.3 Markov-chain option prices

As stated in the introduction, pricing algorithms for American options in the Gaussian GARCH cases are available in the literature. In the GARCH model we consider, the Johnson \(S_{u}\) error terms are continuous and monotone transformations of standard normal error terms, making it possible to adapt methods designed for the Gaussian case to this non-Gaussian framework. In this section, we examine how the Markov-chain approach of Duan and Simonato (2001) can be adapted to price American options under the risk-neutral dynamics examined in the above sections.

The idea behind the construction of an approximating Markov chain to compute options under GARCH is as follows. The real line, the support of the log of the stock price distribution, is first partitioned into m distinct cells. We build a similar partition for the log of the variance with n cells. We then assign a numerical value to each cell. These values yield an \(m\times n\) grid of log of stock prices and variances. Given the values on point i, j of this grid, the probability of reaching a pair of price and volatility cells can then be computed analytically, using the distribution function of the random shocks associated to the stock price. Since in our case the error term is a Johnson \(S_{u}\) random variable, we can compute its distribution function with a standard normal distribution function. With these transition probabilities, it then becomes possible to compute the conditional expected values appearing in the Belman recursion.

As shown by this short description, the Markov chain and the simplified quadrature approach share many similarities. They both use a grid of stock prices and variances, and the option prices are computed recursively on each point of the grid. The main difference between the Markov chain approach and the simplified quadrature scheme is about the computation of the conditional expected value in the Bellman recursion. While the quadrature approach uses a numerical integration scheme such as the trapeze or Simpson rule, the Markov chain approach computes the conditional expected values using the transition probabilities of the approximating Markov chain. More specifically, the continuation values given by Eq. (12) for the quadrature case are computed as follows with the Markov chain approach:

where \(\Pr \left( {\overline{p}}_{k}\mid {\overline{p}}_{i},{\overline{\sigma }} _{j}^{2}\right)\) is the transition probability of going from state \(\left\{ {\overline{p}}_{i},{\overline{\sigma }}_{j}^{2}\right\}\) to the cells associated with state \(\left\{ {\overline{p}}_{k},{\overline{\sigma }}_{l_{i\rightarrow k} }^{2}\right\}\).Footnote 6 “Appendix 5” describes how these transition probabilities can be computed. Duan and Simonato (2001) store these probabilities into a square matrix of dimension \(mn\times mn\), and prices are computed backward with simple matrix multiplications. Sparse matrices techniques are then used to save on storage and computation time. To compare the Markov chain and simplified quadrature approaches, we implement the Markov chain with the procedure outlined in Sect. 4. As in Simonato (2011), where the Markov chain approach is examined for the geometric Brownian motion and jump cases, we use a simple algorithm similar to the one presented in “Appendix 4” to save on computing effort and memory.

Table 10 presents the prices computed with the Markov chain algorithm for the Johnson \(S_{u}\) case with a high variance persistence level. To enable valid comparisons, we implement the Markov chain with the same grid as the one used for the simplified quadrature approach. Overall, for the small and medium maturity cases, the prices computed with the Markov chain converge to the target prices, but more slowly, and with more oscillation than those obtained with the simplified quadrature scheme in Table 7. The algorithm also encounters difficulties for the long maturity case of 189 days. While the quadrature algorithm was able to achieve accurate results with m and n around 2500, the Markov chain algorithm fails to converge to the benchmarks. Finally, we notice that computing times with the Markov chain are similar to those obtained for the quadrature.

6 Conclusion

GARCH models with non-normal errors are now commonly used by analysts for modeling financial returns. The choice of algorithms to compute American option prices in this context is however limited. We have proposed a simple quadrature approach to compute American and European option values in this context. The algorithm is shown to converge well to benchmark values obtained from accurate Monte Carlo simulations. Computing times can be long, but effective strategies are available to temper down this disadvantage with small impacts on the precision. Finally, we also show that, within the pricing context presented here, it is possible to adapt the Markov chain approach of Duan and Simonato (2001) which was explicitly designed to deal with Gaussian GARCH cases. Numerical results show that the quadrature approach we propose here is more efficient than this Markov chain approach.

Notes

See, for example, the recent survey in Christoffersen et al. (2013).

Other quadrature rules could also be used with minimal changes. For the GARCH context examined here, it is found that the simple trapezoid rule works slightly better than the more sophisticated Simpson rule.

GARCH parameters are typically obtained with time series with 252 trading days. Hence, for the physical process, we compute the annual unconditional volatility as \(\sqrt{\beta _{0}/\left( 1-\beta _{1}-\beta _{2}\left( 1+\theta ^{2}\right) \right) \times 252}\). The persistence level is given by \(\beta _{1}+\beta _{2}\left( 1+\theta ^{2}\right) .\) The corresponding values for the risk-neutral case are obtained with the same formula but with \(\theta\) replaced by \(\theta +\lambda .\)

Unlike for the Gaussian case, there are no available formulas to compute the unconditional variance and persistence level for the risk-neutral process.

In this Appendix, without loss of generality, we drop the time subscript and the stars denoting risk neutral quantities.

References

Andricopoulos A, Widdicks M, Newton D, Duck P (2007) Extending quadrature methods to value multi-asset and complex path dependent options. J Financ Econ 83:471–499

Andricopoulos A, Widdicks M, Duck P, Newton D (2003) Universal option variation using quadrature. J Financ Econ 67:447–471

Ben-Ameur H, Breton M, Martinez JM (2009) Dynamic programming approach for valuing options in the GARCH Model. Manag Sci 55:252–266

Black F, Scholes M (1973) The pricing of options and corporate liabilities. J Polit Econ 81:637–659

Bollerslev T (1986) Generalized autoregressive conditional heteroskedasticity. J Econ 31:307–327

Bollerslev T (1987) A Conditionally heteroskedastic time-series model for speculative prices and rates of return. Rev Econ Stat 69:542–547

Broadie M, Detemple J (1996) American option valuation: new bounds, approximations, and a comparison of existing methods. Rev Financ Stud 9:1211–1250

Cakici N, Topyan K (2000) The GARCH option pricing model: a lattice approach. J Comput Finance 3:71–85

Chen D, Härkönen H, Newton D (2014) Advancing the universality of quadrature methods to any underlying process for option pricing. J Financ Econ 114:600–612

Christoffersen P, Elkamhi R, Feunou B, Jacobs K (2010) Option valuation with conditional heteroskedasticity and non-normality. Rev Financ Stud 23:2139–2183

Christoffersen P, Jacobs K, Ornthanalai C (2013) GARCH option valuation: theory and evidence. J Deriv 21:8–41

Chung SL, Ko K, Shackleton M, Yeh CY (2010) Efficient quadrature and node positioning for exotic option valuation. J Futures Mark 30:1026–1057

Cosma A, Galluccio S, Pederzoli P, Scaillet O (2016) Early exercise decision in american options with dividends, stochastic volatility and jumps, Swiss Finance Institute Research paper no. 16-73. https://ssrn.com/abstract=2883202

Duan JC (1995) The GARCH option pricing model. Math Finance 5:13–32

Duan JC (1999) Conditionally fat-tailed distributions and the volatility smile in options, working paper, Hong Kong University of Science and Technology

Duan JC, Simonato JG (2001) American option pricing under GARCH by a Markov chain approximation. J Econ Dyn Control 25:1689–1718

Engle R (1982) Autoregressive conditional heteroskedasticity with estimates of the variance of UK inflation. Econometrica 50:987–1008

Glasserman P (2004) Monte Carlo Methods in Financial Engineering. Springer, New York

Hansen B (1994) Autoregressive conditional density estimation. Int Econ Rev 35:705–730

Hsieh D (1989) Modeling heteroscedasticity in daily foreign-exchange rates. J Bus Econ Stat 7:307–317

Johnson N (1949) Systems of frequency curves generated by methods of translation. Biometrika 36:149–176

Judd K (1998) Numerical methods in economics. MIT Press, Cambridge

Lyuu Y, Wu C (2005) On accurate and provably efficient GARCH option pricing algorithms. Quant Finance 5:181–198

Ritchken P, Trevor R (1999) Pricing options under generalized GARCH and stochastic volatility processes. J Finance 54:377–402

Simonato JG (2011) Computing American option prices in the lognormal jump-diffusion framework with a Markov chain. Finance Res Lett 8:220–226

Simonato JG (2012) GARCH processes with skewed and leptokurtic innovations: revisiting the Johnson \(S_{u}\) case. Finance Res Lett 9:213–219

Simonato JG (2016) A simplified quadrature approach for computing Bermudan option prices. Int Rev Finance 16:647–658

Simonato JG, Stentoft L (2015) Which pricing approach for options under GARCH with non-normal innovations? CREATES research paper 2015-32

Sullivan M (2000) Valuing American put options using Gaussian quadrature. Rev Financ Stud 13:75–94

Stentoft L (2008) American option pricing using GARCH models and the Normal Inverse Gaussian distribution. J Financ Econom 6:540–582

Su H, Chen D, Newton D (2017) Option pricing via QUAD: from Black–Scholes–Merton to Heston with jumps. J Deriv 24:9–27

Acknowledgements

This research was supported by the Social Sciences and Humanities Research Council of Canada. I would like to thank Lynn Mailloux for helpful comments

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Moments of Johnson \(S_{u}\) random variables

This appendix describes in more details the Johnson \(S_{u}\) random variables that have been introduced in Johnson (1949).

A zero-mean, unit variance random variable following a two-parameter Johnson \(S_{u}\) distribution with parameters a and b is written

where \(\ x=\sinh \left( \frac{z-a}{b}\right) , \ \ z\) is a standard normal random variable and \(c=-M\left( a,b\right) /\sqrt{V(a,b)}\), \(d=1/\sqrt{V(a,b)}\) with

and \(w=e^{\frac{1}{b^{2}}}\) and \(\Omega =\frac{a}{b}.\) The parameters a and b control the skewness and kurtosis. The functions \(\cosh \left( u\right) =\left( e^{u}+e^{-u}\right) /2\) and \(\sinh \left( u\right) = \left( e^{u}-e^{-u}\right) /2\) are the hyperbolic cosine and sine functions. Here \(M\left( \cdot \right)\) and \(V\left( \cdot \right)\) are the mean and variance of \(x_{t},\) the unstandardized two-parameter Johnson \(S_{u}\) random variable. The values c and d, which are functions of a and b, are used to change the location and scale of x in such a way that it becomes a standardized Johnson \(S_{u}\) random variable.

The first four moments of the standardized Johnson \(S_{u}\) random shock can be computed with:

where the first four moments of x can be derived to be:

Because Johnson error terms are a monotone, continuous and invertible transformation of a standard normal, the conditional density of the stock price can be obtained from the standard normal density with a change of variable (see Simonato 2012). The conditional density of the risk-neutral price described by Eq. (5) can be written as:

where

Appendix 2: Quasi-analytical option price for the one period case

For a one-period to maturity case, pricing options in the GARCH context amounts to a constant variance case since the variance is a predictable process, i.e., the variance next period is a known quantity. In such a context, it is thus possible to obtain a quasi-analytical option pricing formula for the GARCH model outlined in Sect. 2. The solution is quasi-analytic since it involves an integral over a standard normal random variable that must be solved numerically This integral can be computed numerically in a fraction of a second using a precise Gauss–Legendre approach. This Appendix derives this quasi-analytical solution.

Denote the logarithm of the risk-neutral price for the next period of time asFootnote 7

where the expressions for \(\phi ,\ \varepsilon\) and \(\sigma\) are given in Sect. 2. The value of a put option with a one-period to maturity and a strike price K is

Using the change of variable \(\ln \left( P\right) =\phi +\varepsilon \sigma\), the above integral can be rewritten in terms of the Johnson \(S_{u}\) random shock as

where \(k=\frac{\ln K-\phi }{\sigma }.\) Using another change of variable given by \(\varepsilon =c+d\times \sinh \left( \frac{z-a}{b}\right) ,\) we rewrite the above expression as a function of standard normal random shock given by

where \(N\left( \cdot \right)\) is the distribution function of a standard normal random variable, and \(q=a+b\sinh ^{-1}\left( \frac{k-c}{d}\right) .\) The integral in this expression can be computed very efficiently using a Gauss–Legendre numerical integration technique (see Judd 1998 for a detailed description). For example, using parameter values from the empirical section, the Gauss–Legendre approach with 15 integration nodes obtains prices in 0.0003 s on a standard laptop computer. These prices are identical, up to the fourth digit, to prices obtained with a precise Monte Carlo simulation with 100 million sample paths.

Appendix 3: Continuation values in the GARCH context

For time-steps \(T-2\) to 0, the discounted continuation value given by Eq. (10) is computed numerically on a point (i, j) of the grid using a trapezoid quadrature rule. For such computations, it is important to notice that, for GARCH processes, given that the stock price is presently in state i, a transition next period to a price in state k will automatically determine the variance level that will be inherited from the price movement. Such an inherited variance level is caused by the nature of the GARCH variance dynamics which specifies that next period variance is a function of the current shock leading to the stock price. More specifically, given that the stock price and variance are currently in state (i, j), the shock that would be required to go next period to a price in state k can be computed from Eq. (5) with:

This shock implies that the inherited variance level associated with price \({\overline{p}}_{k}\) will be

Recall that for the kth price on the grid, there are n different variance levels. The inherited variance will almost never be equal to one of these points. We therefore choose the kth price with the variance level which is the closest to the inherited variance. Denoting by \(l_{i\rightarrow k}\) the index of the closest variance level, the computation for the continuation value at time t on a point \(\left( i,j\right)\) of the grid can thus be written as:

where \(w_{k}\) is the trapezoid rule weight described in Sect. 3.

Appendix 4: Algorithm for continuation values computation

This appendix describes the pseudo-code which can be used to compute a vector of (non-discounted) continuation values in variance state j without the need to compute and store all the elements of matrix \({\mathbf {F}}\left( {\overline{\sigma }}_{j}\right) .\) It also avoids multiplying densities that are too close to zero according to a pre-specified threshold value (\(1\times 10^{-10}\) for example). The following inputs, which can be computed using the equations described in the main text, are required by the pseudo-code:

-

Ftop: an \(m\times 1\) vector \(= \left[ f\left( \overline{p}_{1}\mid {\overline{p}}_{1},{\overline{\sigma }}_{j}^{2}\right) , \ldots ,f\left( {\overline{p}}_{m}\mid {\overline{p}}_{1} ,{\overline{\sigma }}_{j}^{2}\right) \right] ^{\prime }\)

-

Fbot: an \(m\times 1\) vector \(= \left[ f\left( \overline{p}_{1}\mid {\overline{p}}_{m},{\overline{\sigma }}_{j}^{2}\right) , \ldots ,f\left( {\overline{p}}_{m}\mid {\overline{p}}_{m} ,{\overline{\sigma }}_{j}^{2}\right) \right] ^{\prime }\)

-

Ltop: an \(m\times 1\) vector \(= \left[ l_{1\rightarrow 1}, \ldots ,l_{1\rightarrow m}\right] ^{\prime }\)

-

Lbot: an \(m\times 1\) vector \(= \left[ l_{m\rightarrow 1}, \ldots ,l_{m\rightarrow m}\right] ^{\prime }\)

-

nzfirst and nzlast: the number of non-zero elements in Ftop and Fbot

-

cbeg : an \(m\times 1\) vector with the ith element equal to \(\max \left( i-nzlast+1,1\right)\)

-

cend : an \(m\times 1\) vector with the ith element equal to \(\min \left( i+nzfirst-1,m\right)\)

-

f11: a scalar equal to \(f\left( {\overline{p}}_{1}\mid {\overline{p}}_{1},{\overline{\sigma }}_{j}^{2}\right)\)

-

w: an \(m\times 1\) vector of trapezoid rule weights

The pseudo-code below gives the output in the \(m\times 1\) vector of non-discounted continuation values \(\texttt {c{:}}\)

Appendix 5: Computing transition probabilities with the Markov-chain approach

The Markov chain algorithm is implemented as follows. First, an \(m\times n\) grid of log stock prices and variances is built using the procedure described in Sect. 3.2. Using these points, the \(m+1\) boundaries of the intervals partitioning the support of the log of prices are build with

and \(B_{1}=-1.0\times 10^{6}\) and \(B_{m+1}=1.0\times 10^{6}.\) With these, conditional on a point \(i, j\) on the grid, the probability of going from state \(\left\{ {\overline{p}}_{i},{\overline{\sigma }}_{j}^{2}\right\}\) to the cells around state \(\left\{ {\overline{p}}_{k},{\overline{\sigma }} _{l_{i\rightarrow k}}^{2}\right\}\) is computed as

where \(N\left( \cdot \right)\) is the distribution function of a standard normal random variable and

Rights and permissions

About this article

Cite this article

Simonato, JG. American option pricing under GARCH with non-normal innovations. Optim Eng 20, 853–880 (2019). https://doi.org/10.1007/s11081-019-09421-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11081-019-09421-w