Abstract

The paper is concerned with a generalization of Floater–Hormann (briefly FH) rational interpolation recently introduced by the authors. Compared with the original FH interpolants, the generalized ones depend on an additional integer parameter \(\gamma >1\), that, in the limit case \(\gamma =1\) returns the classical FH definition. Here we focus on the general case of an arbitrary distribution of nodes and, for any \(\gamma >1\), we estimate the sup norm of the error in terms of the maximum (h) and minimum (\(h^*\)) distance between two consecutive nodes. In the special case of equidistant (\(h=h^*\)) or quasi–equidistant (\(h\approx h^*\)) nodes, the new estimate improves previous results requiring some theoretical restrictions on \(\gamma \) which are not needed as shown by the numerical tests carried out to validate the theory.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we explore the interpolation problem of a function f(x) within a finite interval [a, b], given its values at the nodes

We consider arbitrary distributions of such nodes basing our estimates on the maximum and minimum distance between two consecutive nodes, namely

As a particular case, we consider the case of equidistant or quasi-equidistant configurations of nodes for which one has \(h=h^*\) (equidistant nodes) or \(h/h^*\le {\mathcal {C}}\) holds for all \(n\in {\mathbb N}\) with \({\mathcal {C}}>1\) an absolute constant (quasi–equidistant nodes).

In their work [9], Huybrechs and Trefethen compare various methods for approximating a function with equidistant nodes. One such method employs Floater-Hormann (FH) interpolating rational functions denoted by r(x) [6]. Such interpolants (briefly FH interpolants) generalize Berrut’s rational interpolation [1] by introducing a fixed integer parameter \(0\le d\le n\) to speed up the convergence getting, in theory, arbitrarily high approximation orders.

When \(d=0\), the FH interpolant reduces to the Berrut’s first interpolant from [1] (see also [7]). For any \(1 \le d \le n\), similarly to Berrut’s approximant, Floater and Hormann established that the approximant r(x) lacks poles on the real line, coincides with f on the set of nodes \(X_n=\{x_0,x_1,\ldots ,x_n\}\), and, for any \(x\not \in X_n\), provides a barycentric representation for efficient and stable computations [5].

Moreover, as \(h\rightarrow 0\) (and hence \(n\rightarrow \infty \)), the FH approximation error behaves, for any fixed \(d\in {\mathbb N}\), as [6, Thm. 2]:

For an overview of linear barycentric rational interpolation, interested readers can refer to the paper by Berrut and Klein [2].

While applicable to any node configuration, the Floater-Hormann method proves particularly effective for equidistant setups due to the logarithmic growth of the Lebesgue constants [4, 8]. In this case, (3) continues to hold but the convergence is not guaranteed for less regular functions, e.g. for functions that are only continuous on [a, b].

To overcome this problem, in [12], we extended the Floater-Hormann method by defining a new family of linear rational approximants denoted by \(\tilde{r}(x)\). These approximants depend on d and an additional parameter \(\gamma \in {\mathbb N}\). When \(\gamma = 1\), \(\tilde{r}(x)\) reduces to the original FH interpolant r(x).

Similarly to the original FH interpolants, we showed that, for all \(\gamma >1\), \(\tilde{r}(x)\) has no real poles, interpolates the data, preserves polynomials of degree \(\le d\), and has a barycentric-type representation. Moreover, in the case of equidistant or quasi-equidistant nodes, we proved the uniform boundedness of the Lebesgue constants and

Concerning the approximation rate, in [12] we established several error estimates depending on the smoothness class of f. In particular, for arbitrarily fixed \(d\in {\mathbb N}\) and equidistant or quasi-equidistant nodes, as \(n\rightarrow \infty \), we proved that

holds provided that \(\gamma >s+1\), and

holds provided that \(\gamma >2\).

In this paper, for the previous classes of functions, we aim to derive error estimates valid for arbitrary configurations of nodes (Theorems 3.1 and 3.4). Moreover, in the special case of equidistant or quasi-equidistant nodes, we aim to state the previous results but without any restriction on \(\gamma \) besides \(\gamma >1\) (Corollaries 3.3 and 3.5).

The outline of the paper is the following. In Section 2 we briefly recall the definition and the properties proved in [12] for generalized FH interpolants at arbitrary distribution of nodes. In Section 3 we state the new estimates. In Section 4 we show several numerical tests. Finally, in Section 5 we give conclusions.

2 Generalized Floater–Hormann interpolation

For any pair of integer parameters \(0\le d\le n\) and \(\gamma \ge 1\), the generalized FH approximation of f at the nodes (1) is given by [12]

where

and \(p_i(x)\) is the unique polynomial of degree at most d interpolating f at the \((d+1)\) nodes \(x_i<x_{i+1}<\ldots <x_{i+d}\).

In the case \(\gamma =1\), \(\tilde{r}(f,x)\) coincides with the original FH interpolants introduced in [6]. In the sequel, we will also use the notation \(\tilde{r}(f)\) to represent the function \(\tilde{r}(f,x)\) as defined in (4).

For completeness, we recall below the properties already proved for the generalized FH approximants [6, 12]. Unless otherwise specified, they are valid for any distribution of nodes and for any value of the parameters \(0\le d\le n\) and \(\gamma \ge 1\).

-

1.

Poles: The generalized FH rational function \(\tilde{r}(f)\) has no real poles in the interval [a, b].

-

2.

Interpolation: We have \(\tilde{r}(f,x_k)=f(x_k)\), \(k=0,\ldots ,n\).

-

3.

Preservation of polynomials: If f is a polynomial of degree at most d then we have \(\tilde{r}(f)=f\).

-

4.

Barycentric–type form: For all \(x\notin \{x_0,\ldots , x_n\}\) we have

$$\begin{aligned} \tilde{r}(f,x) = \frac{\sum _{k=0}^n \frac{w_k(x)}{(x-x_k)^\gamma } \ f(x_k)}{\sum _{k=0}^n \frac{ w_k(x)}{(x-x_k)^\gamma }}, \end{aligned}$$(6)where

$$\begin{aligned} w_k(x) = \sum _{i \in J_k} (-1)^{i\gamma } \prod _{s=i,s\ne k}^{i+d} \frac{1}{(x_k-x_s)(x-x_s)^{\gamma -1}},\qquad k=0,\ldots ,n, \end{aligned}$$(7)with \(J_k=\left\{ i\in \{0,\ldots , n-d\} \, \ k-d\le i\le k\right\} \).

-

5.

Lebesgue constants: They are defined as \(\Lambda _n=\sup _{f\ne 0}\frac{\Vert \tilde{r}(f)\Vert _\infty }{\Vert f\Vert _\infty }\), \(\forall n\in {\mathbb N}\). For all parameters \(\gamma > 1\) and \(1\le d\le n\), we have [12]

$$\begin{aligned} \Lambda _n\le {\mathcal {C}}\ d 2^d \left( \frac{h}{h^*}\right) ^{\gamma +d} \end{aligned}$$(8)where \({\mathcal {C}}>0\) is a constant independent of n, h, d and \(\gamma \). For \(\gamma =1\), \(0\le d\le n\), and equidistant or quasi–equidistant distribution of nodes, we have [3, 4, 8]

$$\begin{aligned} \Lambda _n\le {\mathcal {C}}\ 2^d \log n, \end{aligned}$$(9)where \({\mathcal {C}}>0\) is a constant independent of n, d.

3 Main results

In the space \(C^s[a,b]\) of all functions that are s–times continuously differentiable in [a, b], we state the following result.

Theorem 3.1

Let \(d,\gamma \in {\mathbb N}\) be arbitrarily fixed with \(\gamma >1\). For all \(f\in C^{s}[a,b]\) with \(1\le s\le d+1\), and any configuration of nodes \(a=x_{0}< x_{1}<\) ... \(< x_n=b\), with \(n\ge d\), the associated generalized FH interpolant \(\tilde{r}(f)\) satisfies

where \(\mathcal {C}>0\) is a constant independent of \(n,h,h^*\).

Proof

Let \(x\in [a,b]\) be arbitrarily fixed. We shall prove that \(|f(x)-\tilde{r}(f,x)|\) is not greater than the right–hand side of (10) with \({\mathcal {C}}>0\) independent of \(n,h,h^*\), and x too. Recalling the interpolation property, we may suppose \(x\notin \{x_0,\ldots , x_n\}\), otherwise it is trivial.

By the definition of \(\tilde{r}(f,x)\) (cf. (4)) we get

where, for brevity, we set

Recalling that the interpolation error for \(p_i\) can be expressed by the Newton formula

by (11) we obtain

Concerning the last factor, in the case \(f\in C^{s}[a,b]\) with \(s=d+1\), we recall that the divided differences of order \(d+1\) satisfy

where \(\xi _i\in [\min \{x,x_i\}, \max \{x,x_{i+d}\}]\). In the case \(s=d\) and \(f\in C^d[a,b]\), we note that

and using

we get

More generally, in the case \(f\in C^s[a,b]\) with \(s=d+1-k\), reasoning by induction on \(k=0,\ldots , d\), it can be proved that

where \(c>0\) is a constant depending on d but not on x, f and \(i\in \{0,\ldots , n-d\}\).

Summing up, setting

In the following we estimate the summation S(x). To this aim, we recall that [12, Eq. (24)]

where

\(\ell \in \{0,\ldots ,n-1\}\) depends on x, and is defined by the condition \(x_\ell<x<x_{\ell +1}\).

Note that \(d\ge 1\) implies that \(I_\ell \ne \emptyset \) holds for any \(\ell \). Moreover, note that \(i\in I_\ell \) ensures that \(\ell \in \{i,\ldots , i+d-1\}\). Hence, for any \(i\in I_\ell \) we can write

and taking into account that

we get

and therefore

Furthermore, by collecting (16) and (18), we also get

Given this, let us consider the following decomposition

of the summation S(x) with the convention that empty summations are null (i.e., \(\sum _{i=n_1}^{n_2}a_i=0\) if \(n_1>n_2\)), and that always \(i\in \{0,\ldots , n-d\}\).

For \(\ell \ge (d+1)\) the (nonempty) summation \(S_1(x)\), can be estimated by applying (19),

and using (13) we get

where \((\gamma -1)(d+1)\ge 2\) implies

Hence, we conclude that

where \({\mathcal {C}}_1= (d!)^\gamma \sum _{j=1}^\infty \frac{1}{j^2}\) is independent of x, n, h and \(h^*\).

Now, for \(\ell +2\le n-d\), let us show that (21) also holds for \(S_3(x)\) by using similar arguments. Indeed, by (19), (13), and (20), we have

Finally, let us estimate \(S_2(x)\). To this aim, by applying (16), (17) and (13), we note that

and similarly

Moreover, by (16) and (18), we get

Consequently, by the above estimates, we obtain

that is \(S_2(x)\) can be estimated as

where \({\mathcal {C}}_2=d! d +\frac{2(d+1)!}{d^\gamma }\) is independent of x, n, h and \(h^*\).

In conclusion, from (15), (21), (22), and (23), we deduce

and the statement follows by taking into account that \(\gamma +d+1\le \gamma (d+1)\) holds for all \(\gamma ,d\in {\mathbb N}\) with \(\gamma >1\). \(\square \)

Remark 3.2

We remark that the case \(d=0\) and \(\gamma >1\), and the case \(\gamma =1\), \(0\le d\le n\), are not covered in Thm. 3.1. In the latter case, estimates can be found in [2, 6, 10, 11]. Moreover, a case close to taking \(d=0\) and \(\gamma =2\) has been considered in [13].

Concerning the bounds on s hypothesized in Thm. 3.1, we remark that if we have \(s>d+1\) then the estimate proven for \(s=d+1\) continues to hold. Indeed, looking at the proof of Thm. 3.1, in the case \(s>d+1\) we can replace (15) with

where \(c >0\) is a constant independent of f, x and \(n,h,h^*\). Hence, by applying the estimate proved for S(x), we conclude

where \({\mathcal {C}}>0\) is a constant independent of \(n,h,h^*\).

Note that in the limit case \(\gamma =1\) the right-hand side of (24) agrees with (3).

In the special case of equidistant or quasi–equidistant distribution of nodes, by the previous theorem and remark we get the following result.

Corollary 3.3

Let \(d,\gamma \in {\mathbb N}\) be arbitrarily fixed with \(\gamma >1\). For all \(f\in C^{s}[a,b]\) with \(s\in {\mathbb N}\), and any set of \(n+1\) nodes \(a=x_0<x_1<...<x_n=b\) with \(n\ge d\) such that \(h\sim h^*\sim n^{-1}\), the associated generalized FH interpolant \(\tilde{r}(f)\) satisfies

where \({\mathcal {C}}>0\) is a constant independent of \(n,h,h^*\).

We remark that in [12, Thm. 5.3] the same estimate was proved under the additional hypothesis that \(\gamma >r+1\) holds.

Finally, we consider the case of less smooth functions f that are only Lipschitz continuous on [a, b], i.e., satisfying

with \(L>0\) independent of x, y.

By the following theorem, we prove that in the space Lip[a, b] of such functions, we get the same error estimate proved in \(C^1[a,b]\).

Theorem 3.4

Let \(d,\gamma \in {\mathbb N}\) be arbitrarily fixed with \(\gamma >1\). For all \(f\in \text {Lip[a,b]}\) and any configuration of nodes \(a=x_0<x_1<...<x_n=b\), with \(n\ge d\), the associated generalized FH interpolant \(\tilde{r}(f)\) satisfies

where \({\mathcal {C}}>0\) is a constant independent of n,h,h\(^{*}\).

Proof

The proof is similar to the previous one. The only change regards the estimate of the factor \(|f[x_i,\ldots , x_{i+d}, x]|\) in (12), that in the case of Lipschitz continuous functions can be estimated as

where \({\mathcal {C}}>0\) is independent of x, h and \(h^*\).

Such a bound can be easily proved reasoning by induction on \(d\in {\mathbb N}\). Indeed, for \(d=1\) it is true since by (26) we have

and consequently

Moreover, assuming that (28) holds for \(d\ge 1\), we get

i.e., (28) holds for \(d+1\) too. \(\square \)

Finally, by Thm. 3.4 we get the following result concerning the case of equidistant and quasi– equidistant nodes

Corollary 3.5

Let \(d,\gamma \in {\mathbb N}\) be arbitrarily fixed with \(\gamma >1\). For all \(f\in \text {Lip}[a,b]\) and any set of \(n+1\) nodes \(a=x_0<x_1<...<x_n=b\) with \(n \ge d\) such that \(h\sim h^*\sim n^{-1}\), the associated generalized FH interpolant \(\tilde{r}(f)\) satisfies

where \({\mathcal {C}}>0\) is a constant independent of \(n,h,h^*\).

We remark that in [12, Thm. 5.2] the same estimate was proved under the stronger hypothesis that \(\gamma >2\) holds.

4 Numerical experiments

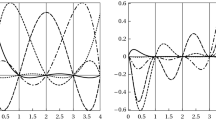

First in Fig. 1, we show the generalized FH interpolants for \(d=0,1,2,3\), with fixed \(\gamma = 2\). \(h/h^* = 5\) and \(h^* = 0.05\), approximating the Runge function \(f(x) = 1/(1+25x^2)\) in the interval \([-1,+1]\). The interpolation nodes are constructed using the procedure described below, resulting in 13 quasi-equidistant interpolation nodes.

Next, we investigate the behavior of the error

as a function of \(\frac{h}{h^*}\) and \(h^*\) for a fixed value each for the degree d and the parameter \(\gamma \). In all numerical experiments we performed it turns out that E behaves as:

This means that

To determine \(\log ({D})\), \(\alpha \) and \(\beta \), we can use linear least squares approximation when we have different measurements for the values of the function \(\log E\left( \frac{h}{h^*},h^*\right) \) for different values of \(\log \left( \frac{h}{h^*}\right) \) and \(\log (h^*)\). Consider the interval \([a,b] = [-1,+1]\). Given \(\log \left( \frac{h}{h^*}\right) \) and \(\log (h^*)\), the corresponding points \(x_i\) consist of two subsets. In the first subset there are all points \(-1, -1+h+h^*, -1+2(h+h^*), \ldots \) in the interval \([-1,+1]\). In the second subset there are all points of the first subset shifted by h to the right, i.e., \(-1+h, -1+h+(h+h^*), -1 +h+2(h+h^*),\ldots \) in the interval \([-1,+1]\). Note that the endpoint \(+1\) does not necessarily belong to the point set but it is at a maximum distance of h from the right-most point in the point set. The values taken for h and \(h^*\) are all combinations of a value of \(h^*\) chosen from

and a value of g among

with \(h = g h^*\). The norm \(\Vert f \Vert _\infty \) is approximated by looking at the largest value of |f| in \(10^5\) Chebyshev points in the interval \([-1,+1]\).

Let us take the function \(f(x) = x^2 |x| \in C^2[-1,+1]\), the degree \(d = 1\) and the parameter \(\gamma = 2\). A plot of the function \(E\left( \frac{h}{h^*},h^*\right) \) is given in Fig. 2. From this figure, it can be seen that the function E can be approximated very well by an affine function in \(\frac{h}{h^*}\) and \(h^*\). A similar plot was obtained for the other examples given in this section.

Solving the least squares problem gives us the values

In view of (10), the value \(\alpha \) should be compared to \(\gamma (d+1) = 4\) and the value \(\beta \) to \(s = 2\). Note that the value of D is small, the value of \(\alpha \) is much smaller than \(\gamma (d+1) = 4\) and the value of \(\beta \) is approximately equal to \(s=2\).

In Table 1, we give several other examples. Theorem 3.1 is illustrated by the examples with \(i=1,4,5\). Theorem 3.4 is illustrated by the example with \(i=7\). Note that for \(i = 2,3,10,11\), the value of \(\beta \) is larger than predicted by the theory of Theorem 3.1 or Theorem 3.4.

Based on these results, we give the following conjecture which we were not able to prove. Let \(C^{s,\alpha }[a,b]\) be the class of functions that are s-times continuously differentiable and whose s–th derivative is Hölder continuous with exponent \(0<\alpha \le 1\).

Conjecture 4.1

Let \(d,\gamma \in {\mathbb N}\) be arbitrarily fixed with \(\gamma >1\). For all \(f\in C^{s,\alpha }[a,b]\) with \(1\le s\le (d+1)\), and any set of \(n+1\) nodes \(a=x_0<x_1<...<x_n=b\) with \(n\ge d\) such that \(h\sim h^*\sim n^{-1}\), the associated generalized FH interpolant \(\tilde{r}(f)\) satisfies

where \({\mathcal {C}}>0\) is a constant independent of \(n,h,h^*\).

Note that in Table 1, for \(i = 6,8,9\), we also considered \(d=0\) which is not covered by the theory of Theorem 3.1 or Theorem 3.4.

When \(\gamma =1\), experimental results for \(f(x)=|x|\) are given in [6] and for \(f(x)=|x|^{0.5}\) in [1]. To illustrate the behavior of the appproximation when \(\gamma \) is varied, we consider the function \(f(x)=|x|\), \(d=1\), \(h^* =\) 1.0e-3 and \(h = 5 h^*\). For \(\gamma = 1,2,\ldots ,7\), the error function \(|f(x)-\tilde{r}(f,x)|\) is plotted in Fig. 3. When \(\gamma \) increases, the error is more and more concentrated around the x-value 0. The same behavior was also observed for equidistant points [12].

5 Conclusions

In [12], we proved several results concerning the convergence rate of generalized FH interpolants corresponding to equidistant and quasi-equidistant distributions of nodes. These results required a restriction on the value of the parameter \(\gamma \). In this paper, we proved similar convergence results without a need for a restriction on the value of \(\gamma \).

More generally, for arbitrary distributions of nodes, we stated error estimates in terms of h and \(h^*\) for continuously differentiable functions and for Lipschitz continuous functions.

Several numerical experiments confirmed the theoretical results. In the case of non–differentiable functions with isolated singularities, they show that, for fixed d and increasing values of \(\gamma \), even if the maximum absolute errors are almost comparable, we get an improvement in the pointwise approximation close to the singularities. Moreover, they indicate that even stronger results are possible in the case of continuously differentiable functions having a Hölder continuous highest derivative. The proof of this conjecture remains an open problem.

Availability of Supporting Data

Not Applicable

References

Berrut, J.-P.: Rational functions for guaranteed and experimentally well-conditioned global interpolation. Comput. Math. App. 15(1), 1–16 (1988)

Berrut, J.-P., Klein, G.: Recent advances in linear barycentric rational interpolation. J. Comput. Appl. Math. 259, 95–107 (2014)

Bos, L., De Marchi, S., Hormann, K.: On the Lebesgue constant of Berrut’s rational interpolant at equidistant nodes. J. Comput. Appl. Math. 236(4), 504–510 (2011)

Bos, L., De Marchi, S., Hormann, K., Klein, G.: On the Lebesgue constant of barycentric rational interpolation at equidistant nodes. Numer. Math. 121, 461–471 (2012)

Camargo, A.P.: On the numerical stability of Floater–Hormann’s rational interpolant. Numer. Algoritm. 72, 131–152 (2016)

Floater, M.S., Hormann, K.: Barycentric rational interpolation with no poles and high rates of approximation. Numer. Math. 107, 315–331 (2007)

Hormann, K.: Barycentric interpolation. In: Fasshauer, G.E., Schumaker, L.L. (eds.) Approximation Theory XIV: San Antonio 2013, pp. 197–218. Springer International Publishing, Cham (2014)

Hormann, K., Klein, G., De Marchi, S.: Barycentric rational interpolation at quasiequidistant nodes. Dolomites Res. Notes Approx. 5, 1–6 (2012)

Huybrechs, D., Trefethen, L.N.: AAA interpolation of equispaced data. BIT Numer. Math. 63(2), 21 (2023)

Mascarenhas, W.-F.: On the rate of convergence of Berrut’s interpolant at equally spaced nodes. arXiv:1708.04235v3 (2018)

Mastroianni, G., Szabados, J.: Barycentric interpolation at equidistant nodes. Jean J. Approx. 9, 25–36 (2017)

Themistoclakis, W., Van Barel, M.: A generalization of Floater–Hormann interpolants. J. Comput. Appl. Math. 441, 95–107 (2024)

Zhang, R.-J., Liu, X.: Rational interpolation operator with finite Lebesgue constant. Calcolo 59(1), 10 (2022)

Acknowledgements

This work was partially supported by GNCS-INDAM. The research of Woula Themistoclakis has been accomplished within RITA - Research ITalian network on Approximation - and UMI - T.A.A. working group.

Funding

Open access funding provided by IAC - NAPOLI within the CRUI-CARE Agreement. Marc Van Barel was supported by the Research Council KU Leuven, C1-project C14/17/073 and by the Fund for Scientific Research–Flanders (Belgium), EOS Project no 30468160 and project G0B0123N.

Author information

Authors and Affiliations

Contributions

All authors have contributed equally to this work.

Corresponding author

Ethics declarations

Ethical Approval

Not Applicable

Competing Interests

The authors have no competing interests

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Themistoclakis, W., Van Barel, M. A note on generalized Floater–Hormann interpolation at arbitrary distributions of nodes. Numer Algor (2024). https://doi.org/10.1007/s11075-024-01933-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11075-024-01933-6