Abstract

Classical rational interpolation usually enjoys better approximation properties than polynomial interpolation because it avoids wild oscillations and exhibits exponential convergence for approximating analytic functions. We develop a rational interpolation operator, which not only preserves the advantage of classical rational interpolation, but also has a finite Lebesgue constant. In particular, it is convergent for approximating any continuous function, and the convergence rate of the interpolants approximating a function is obtained using the modulus of continuity.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let f be a real-valued continuous function defined on some interval [a, b] of the real line, and let \(x_0,x_1,\ldots ,x_n\) be \(n+1\) distinct interpolation nodes in [a, b] with \(a=x_0<x_1<\cdots <x_n=b\). Then, the interpolation operator \(\Lambda _n\) is defined by

where \(\lambda _j(x_i)=\delta _{ij}(i=0,1,\ldots ,n; j=0,1,\ldots ,n)\), and \(\delta _{ij}\) is the Kronecker \(\delta\), defined by \(\delta _{ij}=0\) if \(i\ne j\) and \(\delta _{ij}=1\) if \(i=j\). Evidently, we obtain

When \(\lambda _j (x)=l_j (x)\) with

the interpolant operator is a well-known Lagrange interpolant operator. We define [10]

which are the Lebesgue function and Lebesgue constant of the interpolant operator \(L_n\) [10], respectively. In approximation theory, the Lebesgue constant of the interpolation operator plays a significant role in estimating the approximation rate or the divergence of the operator. The Lebesgue constant of the operator depends on the node distributions. The Lebesgue constant of the Lagrange interpolant operator is bounded as (Erdös, Brutman [8]).

However, the Lebesgue constant can achieve the optimal order of \(2/{\pi } \ln n\) for Chebyshev nodes. The Lebesgue constant of the Lagrange interpolant operator is infinite as the node number \(n\rightarrow \infty\). Another main problem of using high degree polynomial interpolation is Runge’s phenomenon, that is, the oscillation at the edges of an interval. Berrut [3] proposed a rational interpolant operator by replacing \(\lambda _j (x)\) with \(b_j (x)\) as follows:

for some real values \(\omega _j\) to avoid this phenomenon. When considering \(\omega _j=(-1)^j(j=0,1,\ldots ,n)\), Berrut used this interpolation operator to Runge’s function, and his numerical experiments suggested that it has good approximation effect. Immense literature [1,2,3,4,5,6,7, 12,13,14,15,16] deals with the practical construction of node sets such that the Lebesgue constant of this rational interpolation operator has moderate growth with the node number n. For example, the Chebyshev nodes and evenly spaced nodes have been extensively studied. For evenly spaced nodes, few studies show that the Lebesgue constants of Berrut’s interpolant are bounded as [5, 15]

where \(C_1\) and \(C_2\) are positive constants. This finding implies that the associated Lebesgue constant grows logarithmically in the node number n, and it is infinite with the node number \(n\rightarrow \infty\). On the basis of the Uniform Boundedness Principle, the existence of a continuous function f, whose interpolation operator \(\Lambda _n(f,x)\) diverges in \(n\rightarrow \infty\), can be confirmed. In 2007, Floater and Hormann [11] constructed a rational interpolation without poles and arbitrarily high approximation orders; it includes Berrut’s operator as a special case. However, the Lebesgue constants of their operator still indicate a logarithmic growth with the node number n. We will discuss this conclusion in another study. This finding shows that the interpolation operator approximation still diverges for some continuous functions.

This result motivates the construction of a new rational interpolation operator with finite Lebesgue constants for any node number n. This paper aims to report an improved rational interpolation as follows:

where \(\sigma _j(x_i)=\delta _{ij}(i=0,1,\ldots ,n; \quad j=0,1,\ldots ,n)\), and \(x\ne x_i\)

or written as

In a subsequent section of this article, we will show that the interpolation operator \(T_n (f,x)\) not only preserves the advantage of classical rational interpolation, but also has finite Lebesgue constants for the evenly spaced nodes or for well-arranged nodes [13]; especially, it converges when it approximates any continuous function.

2 The interpolation with the finite Lebesgue constant

For the interpolation operator \(T_n (f,x)\) in (4) the following theorem holds

Theorem 1

The Lebesgue constant \(\Vert T_n\Vert\) of the rational interpolant \(T_n (f,x)\) given by (4) at evenly spaced nodes is bounded by

Proof

For simplicity, we assume, without loss of generality, that the interpolation interval is [0,1]. Thus, the interpolation nodes are equally spaced with distance 1/n, i.e., \(x_j=\frac{j}{n}, j=0,1,\ldots ,n\). If \(x = x_k\) for any k, then the interpolation property of the basis functions suggests that \(T_n(x) = 1\). As a result, let \(x_k< x < x_{k+1}\) for some k, and the Lebesgue function according to (4) and (5) is written as

Multiplying the numerator and denominator in (7) by the product \((x-x_{k})^{2}(x_{k+1}-x)^2\) yields

We initially estimate the upper bound in(6). Then, we have to bound the numerator \(N_k(x)\) from above and the denominator \(D_k(x)\) from below. For the numerator, we obtain

Let

Considering \(\frac{1}{x_{i}-x_{j}}=\frac{n}{i-j}(i \ne j)\) and using some elementary inequalities

we derive

Considering the denominator \(D_{k}(x)\) in (8), if k and n are even, then removing the absolute value in (8) does not require changing the sign and obtaining

Pairing the positive and negative terms in the right most factor adequately provides

We obtain

because the leading term and all paired terms are positive. By applying this estimate to (11) and considering (9), we get

This bound also holds if n is odd and k is even because this condition only adds a single positive term \(1 /(x_{n}-x)\) to \(S_{k}(x)\) in (11).

If k and n are odd numbers, removing the absolute value in (8), then we need to change the sign and obtain

Similar to the front method, pairing the positive and negative terms in the rightmost factor adequately provides

We obtain

and further

because the leading term and all paired terms are negative. Thus (13) is still valid, as well as (15). This bound also holds if n is even and k is odd because all paired terms are negative and this condition only adds a single negative term \(1 /(x_{n}-x)\) to \(S_{k}(x)\) in (14). In summary, regardless of the parity of k and n, we obtain (12). Therefore,

By applying the following expressions

we further get by (16)

Let \(t(\frac{1}{n}-t)=u\)(\(u \in (0,\frac{1}{4n^2})\)), we obtain by (18)

Let \(n^2u=\alpha\)(\(\alpha \in (0,\frac{1}{4})\)), and further

We then estimate \(q_1(\alpha )\). The first derivative of \(q_1(\alpha )\) is easy to compute as follows:

Given that \(\alpha \in (0,\frac{1}{4})\), then \(q_1^{\prime }(\alpha )>0\). Thus, \(q_1(\alpha )\) increases in \((0,\frac{1}{4})\). This condition leads to

Combining (16), (18), and(19) with (20) yields

Subsequently, we estimate the lower bound in (6). Let \(x=\frac{1}{2n}\), and the numerator in (8) provides

and the denominator provides

Thus, combining (21) with (22) yields

This completes the proof of Theorem 1. \(\square\)

An important property of the interpolants in (4) is that they are free of poles. In fact, let \(x_k<x<x_{k+1}\), the interpolants in (4) can be changed into

Then, showing that the interpolant has no pole is equivalent to showing that the denominator in this expression or the denominator in (8) is not equal to zero in R. This conclusion is evident after reviewing the estimate of the denominator in the proof process of Theorem 1. Thus we obtain the following corollary:

Corollary

3 Approximation error

We should review the concept about the modulus of continuity to illustrate the approximation error of the operator to continuous function. Let f(x) be a closed interval [a, b], and let

The function \(\omega (\delta )\) is called the modulus of continuity of f(x). A well-known inequality about \(\omega (\delta )\) holds for a factor \(\lambda\):

We introduce that the error bound of the rational interpolation operator (4) is approximately a continuous function f(x).

Theorem 2

Let f(x) be a continuous function, \(T_n (f,x)\) is defined by (4), and \(x_k\) represents evenly spaced nodes, then

Proof

Assuming that x is closest to \(x_{k}\), then

Thus, we have

Applying (23) yields

We are required to bound the term \(V_{n,k}(x):=\sum \limits _{j=0}^{n}|k-j| |\sigma _{j}(x)|\) in (26) because we have bounded

in the proof process of Theorem 1. We obtain

after reviewing (4). Thus, we need to bound the numerator \(R_k(x)\) and the denominator \(D_k(x)\) . We obtain \(D_{k}(x)>(\frac{1}{n}-t)^2+t^2-\frac{5}{4}n^2t^2(\frac{1}{n}-t)^2\) by using (12). Thus, the numerator \(R_k(x)\) should be estimated and rewritten as

Similar to the previous calculation, we get

where we used \(t^2 < \left( \frac{1}{n}-t\right) ^2\) (Note: x is closest to \(x_{k}\))and further

A treatment similar to (18,19) yields

We compute the first derivative of \(q_2(\alpha )\) to estimate \(q_2(\alpha )\), as follows:

We get \(q_2^{\prime }(\alpha )>0\), considering \(\alpha \in (0,\frac{1}{4})\); thus, \(q_2(\alpha )\) increases in \((0,\frac{1}{4})\). This condition yields

We finally arrive at

which completes the proof of Theorem 2 by combining (25), (26), (27), (28), (29), and (30) with (31). \(\square\)

\(\lim \nolimits _{n\rightarrow \infty } \omega (f,\frac{\ln {n}}{n})=0\) for the continuous function f(x), the conclusion of Theorem 2 leads to

thereby implying that the interpolant \(T_n\) is convergent for any continuous function.

Note: From (32), we obtain

for a sufficiently large n.

4 Numerical results

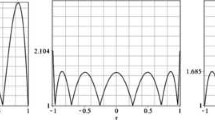

We provide few numerical experiments to further prove or analyze the behavior of the Lebesgue function and the Lebesgue constant of the proposed interpolant, as well as few approximation effect figures and approximation error figures that use the proposed interpolant and other interpolants. First, Fig. 1 shows the Lebesgue function for interpolation at \(n+1\) equidistant nodes for \(n = 20, 40, 80, 200\). The figures suggest that the maximum is always obtained near the center of the interpolation interval. The four subgraphs show that the Lebesgue constant of the interpolant is approximately 1.35 but not larger than 1.35 (Fig. 1). This finding further confirms the accuracy of Theorem 1. Second, we tested the behavior of oscillations by using Runge function \(f(x)=\frac{1}{1+x^2}\). The results in Fig. 2 show that the approximation effect improves as the number of equidistant nodes grows, and it resists the wild oscillations. Third, we constructed a function with discontinuous derivatives at multiple points to test the approximation effect. The function is as follows:

The results also show that the approximation effect improves as the number of equidistant nodes grows, and it resists the wild oscillations (Fig. 3). Finally, we compared the approximation errors of the classical Berrut’s interpolant with that in the present study by using the function \(g_{3}(x)\). The results show that their effects are similar in general when the node number n is not very large (Fig. 4). Theoretically, the interpolant has advantages when n is very large in terms of Theorem 2. However, an experiment is difficult to conduct due to the limitation of computing power. We programmatically calculate the constants of our operator for nodes ranging from 10 to 200 and display our upper and lower bounds in Fig. 5.

5 Conclusion

We have introduced a new linear rational interpolant and provided the upper and lower bounds of the Lebesgue constant in equidistant node case. These bounds are the finite constants, which are very smaller to that of the other rational interpolant. We have also proven the convergence of using the new rational interpolant to approximate any continuous functions. Furthermore, we have obtained the approximation error of using the interpolant to approximate any continuous functions in terms of its modulus of continuity. These results are supported by numerical examples. Finally, we have made a comparison with the other interpolant. The results show that the approximation error is almost the same as Berrut’s interpolant. For a larger n, the other interpolant and functions with discontinuous derivatives are compared.

References

Berrut, J.-P., Elefante, G.: A periodic map for linear barycentric rational trigonometric interpolation. Appl. Math. Comput. 371, 124924 (2020)

Berrut, J.-P., Klein, G.: Recent advances in linear barycentric rational interpolation. J. Comput. Appl. Math. 259, 95–107 (2004)

Berrut, J.-P.: Rational functions for guaranteed and experimentally well-conditioned global interpolation. Comput. Math. Appl. 15(1), 1–16 (1988)

Berrut, J.-P., Trefethen, L.N.: Barycentric Lagrange interpolation. SIAM Rev. 46, 501–517 (2004)

Bos, L., De Marchi, S., Hormann, K.: On the Lebesgue constant of Berrut’s rational interpolant at equidistant nodes. J. Comput. Appl. Math. 236(4), 504–510 (2011)

Bos, L., De Marchi, S., Hormann, K., Sidon, J.: Bounding the Lebesgue constant for Berrut’s rational interpolant at general nodes. J. Approx. Theory 169, 7–22 (2013)

Bos, L., De Marchi, S., Hormann, K., Klein, G.: On the Lebesgue constant of barycentric rational interpolation at equidistant nodes. Numer. Math. 121(3), 461–471 (2012)

Brutman, L.: Lebesgue functions for polynomial interpolation—a survey. Ann. Numer. Math. 4, 111–127 (1997)

Carnicer, J.M.: Weighted interpolation for equidistant nodes. Numer. Algorithms 55(2–3), 223–232 (2010)

Cheney, W., Light, W.: A Course in Approximation Theory. American Mathematical Society, Providence (2000)

Floater, M.S., Hormann, K.: Barycentric rational interpolation with no poles and high rates of approximation. Numer. Math. 107(2), 315–331 (2007)

Smith, S.J.: Lebesgue constants in polynomial interpolation. Ann. Math. Inform. 33, 109–123 (2006)

Trefethen, L.N., Weideman, J.A.C.: Two results on polynomial interpolation in equally spaced points. J. Approx. Theory 65(3), 247–260 (1991)

Wang, Q., Moin, P., Iaccarino, G.: A rational interpolation scheme with superpolynomial rate of convergence. SIAM J. Numer. Anal. 47(6), 4073–4097 (2010)

Zhang, R.: Optimal asymptotic Lebesgue constant of Berruts rational interpolation operator for equidistant nodes. Appl. Math. Comput. 294, 139–145

Zhang, R.: An improved upper bound on the Lebesgue constant of Berrut’s rational interpolation operator. J. Comput. Appl. Math. 255(1), 652–660 (2014)

Acknowledgements

This work is supported by the National Natural Science Foundation of China (Grant No. 61772025) and Natural Science Foundation of Zhejiang Province (No. LY20F020004).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Zhang, RJ., Liu, X. Rational interpolation operator with finite Lebesgue constant. Calcolo 59, 10 (2022). https://doi.org/10.1007/s10092-021-00454-1

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10092-021-00454-1