Abstract

A linear two-dimensional singularly perturbed convection-diffusion boundary-value problem is considered. The problem is discretized by the upwind finite-difference method. The analysis of this method on Shishkin-type meshes is well established, but the discretization mesh in this paper is the original Bakhvalov mesh, introduced in 1969 as the first layer-adapted mesh. We analyze the error of the numerical solution in the maximum norm and prove first-order pointwise accuracy, uniform in the perturbation parameter. This is the first complete analysis of this kind for two-dimensional convection-diffusion problems discretized on the Bakhvalov mesh. Our numerical experiments validate the theoretical analysis.


1 Introduction and the model problem

In this paper, we consider the two-dimensional singularly perturbed convection-diffusion problem

where ε is the perturbation parameter such that 0 < ε ≤ ε∗≪ 1, b1, b2, c and f are sufficiently smooth functions, and b1(x,y) > β1 > 0, b2(x,y) > β2 > 0, c(x,y) ≥ 0 for all \((x,y)\in \bar {{\varOmega }}\). We refer the reader to [9, 20] (see also [22, Theorem 7.17]) for a detailed exposition of the compatibility conditions guaranteeing that the problem (1) has a unique solution u in some suitable normed space. In general, the solution u of the problem (1) has exponential boundary layers along the edges x = 0 and y = 0. As opposed to this, when setting b1(x,y) ≡ 0, b2(x,y) ≡ 0, and c(x,y) > 0 on Ω in (1), one gets singularly perturbed reaction-diffusion problems whose solutions typically have characteristic boundary layers along all four edges of the unit square.

Singularly perturbed differential equations arise in various practical applications and mathematical models. For example, convection-diffusion problems are found in many formulations of fluid flow problems (such as the linearization of the Navier-Stokes equations and transport problems) and in semiconductor device simulation. More details on these two significant applications can be found in [30, pp. 1–4], whereas mathematical models involving systems of reaction-diffusion problems appear, for example, in simulation of chemical reactions, wave-current interaction, and biological applications [3, 6].

When solving singular perturbation problems such as (1) numerically, the goal is to design numerical methods that yield solutions that converge to the corresponding exact solutions uniformly in the perturbation parameter ε. This task is very challenging because the conventional numerical methods do not give satisfactory results when applied to singular perturbation problems [8]. Therefore, special methods are devised to achieve parameter-robust convergence. In the research literature, there are two main approaches: the use of exponentially fitted finite-difference operators on uniform meshes and the use of layer-adapted meshes with certain discretization methods. The former is less popular in spite of the fact that fitted schemes, like the Il’in-Allen-Southwell (I-A-S) scheme, work well when applied to the one-dimensional analogue of (1). However, their extensions to 2D problems are difficult to analyze, and even impossible in the case of reaction-diffusion equations [8, Example 2.2]. Indeed, a recent result due to Roos and Schopf [32] shows that one can achieve first-order convergence with the I-A-S scheme on uniform meshes for two-dimensional convection-dominated problems, but only under the restrictive assumptions b1(x,y) ≡ b1(x) and b2(x,y) ≡ b2(y). A similar result is obtained in [10] for exponentially fitted Galerkin and Petrov-Galerkin methods. Another rare successful use of uniform meshes, in a very specific setting, to obtain ε-uniform convergence for one-dimensional quasilinear convection-diffusion problems is the inversion method introduced by Vulanović and Nhan [37], but this special approach remains inapplicable to 2D problems.

As opposed to the fitted-operator approach, the use of layer-adapted meshes has attracted much attention from singular perturbation researchers since their first appearance more than five decades ago. As early as 1969, Bakhvalov [2] introduced his phenomenal idea of constructing the mesh for one- and two-dimensional reaction-diffusion equations in such a way that the mesh is dense inside the boundary layers and coarse outside the layers. Essentially, in the layers, the mesh points are generated by a function which corresponds to the inverse of the boundary layer function. Moreover, the Bakhvalov mesh-generating function is continuously differentiable on the whole interval of integration. The Bakhvalov-type meshes due to Vulanović [39] generalize the Bakhvalov mesh while retaining the elegant smoothness of the mesh-generating function. However, the smoothness of these Bakhvalov-type meshes generally results in implicitly defined transition points between the fine and coarse parts of the mesh. This makes the error analysis more complicated than in the case of the meshes with predefined transition points, such as Shishkin-type meshes in the sense of Roos and Linß [17, 29] (see also [27]). The Shishkin-type meshes modify the original piecewise uniform Shishkin mesh [34] while keeping its transition point. They are distinct from the Bakhvalov-type meshes because their mesh-generating functions are not smooth. For a more detailed exposition of the layer-adapted mesh design, we refer the reader to Roos’ most recent survey [33], as well as to the monographs [7, 22, 30, 36], where the analysis of numerical methods applied on these meshes can also be found.

Reaction-diffusion problems are more easily solved and analyzed [31, Section 1.1] than the convection-diffusion problem (1). For instance, when finite-difference methods are used for two-dimensional reaction-diffusion elliptic problems, the complete analysis for Shishkin meshes is given in [5, 13], whereas a simpler unified analysis for both Bakhvalov-type and Shishkin-type meshes can be found in [12]. On the other hand, when a convection-dominated problem is discretized by finite-difference methods on Bakhvalov-type meshes rather than Shishkin-type meshes, the analysis becomes much more difficult and delicate [33, Section 1.2]. Indeed, the analysis of Shishkin-type meshes for the problem (1) was accomplished in 1999 by Linß and Stynes [18, 19] (see also their 2003 survey [17] and the error-expansion analysis on the standard Shishkin mesh by Kopteva [16]). This happened even before the first central finite-difference error analysis of reaction-diffusion problems obtained in 2005 by Clavero et al. [5], and later in [4] for the use of a third-order HODIE finite-difference scheme. However, for Bakhvalov-type meshes no similar result, even for the standard upwind scheme, has been known for two decades (see [31, Question 6]). The very first attempt to solve this important open problem is given in a recent work by Nhan and Vulanović [28]. The main result in [28] is a complete analysis of the one-dimensional analogue of (1) discretized on the simplest Bakhvalov-type mesh from [39] for which the transition point can be written explicitly, but the authors also briefly outline an extended analysis for 2D problems. An early use of this simplest Bakhvalov-type mesh for two-dimensional problems can be found in [40], where a second-order hybrid finite-difference method is designed and analyzed. However, the problem considered there is much simpler than (1) (b2(x,y) ≡ 0 and b1(x,y) ≡ b1(x) are assumed) and ε-uniform convergence is not proved.

The purpose of the current paper is to provide for the first time a complete analysis of the original Bakhvalov mesh when it is employed to discretize the stationary convection-diffusion problem (1) by the upwind scheme. Therefore, our result fills a long-standing theoretical gap in the analysis of the Bakhvalov-type meshes for higher-dimensional convection-diffusion problems. The analysis presented in this paper is even more compelling because, in 1D, several special techniques have been used to provide parameter-uniform convergence proofs for Bakhvalov-type meshes, such as the use of the hybrid-stability inequality [1, 14, 15, 23, 29] and the preconditioning-based analysis proposed recently in [24,25,26, 38], but none of them has been extended to 2D problems (cf. [22, Remark 9.2] and [24]). On the other hand, the truncation error and barrier function approach, which has been widely used in the analysis of Shishkin-type meshes [11, 18, 19, 21, 35], has not been applied to any Bakhvalov-type mesh even for 1D problems until the recent appearance of [28]. It is the same truncation error and barrier function approach from [28] that we follow here.

The paper is organized as follows. In the next section, we review some well-known preliminary results regarding the behavior of the exact solution and we also introduce the upwind difference discretization on an arbitrary mesh. This is followed by Section 3, where we describe the Bakhvalov mesh for the convection-diffusion problem (1) and establish its important properties for the later analysis. A complete truncation error analysis is given in Section 4, and then an appropriate barrier function is constructed in Section 5 and used to prove the parameter-uniform convergence. Numerical results that support our theoretical findings are reported in Section 6. Finally, a short concluding remark is presented in the last section.

2 Preliminaries and the upwind discretization

In accordance with the established practice, we use C to denote a generic positive constant independent of ε and the discretization parameter N. Let \(C^{n}(\bar {{\varOmega }})\) and \(C^{n,1}(\bar {{\varOmega }})\) be the spaces of functions defined on \(\bar {{\varOmega }}\) whose derivatives up to the n-th order are continuous and, respectively, Lipschitz-continuous. Let ∥⋅∥n denote the \(L^{\infty }\)-type norm in \(C^{n}(\bar {{\varOmega }})\) and |⋅|n, |⋅|n,1 denote the \(L^{\infty }\)-type seminorms in \(C^{n}(\bar {{\varOmega }})\) and \(C^{n,1}(\bar {{\varOmega }})\).

Lemma 1 (Shishkin-type decomposition)

Suppose that f satisfies the compatibility conditions (see, for instance, [19, Theorem 2.1]). Then the boundary-value problem (1) has a classical solution \(u\in C^{3,1}\left (\bar {{\varOmega }}\right )\) and this solution can be decomposed as

where, for all \((x,y)\in \bar {{\varOmega }}\), we have

and

for 0 ≤ k + ℓ ≤ 3. Furthermore,

and

Let \({\varOmega }^{N}=\left \{(x_{i},y_{j}):\ i,j=0,1,\ldots , N\right \}\) be the discretization mesh, where the mesh point coordinates xi and yj satisfy

We denote \({\varGamma }^{N}= {\varGamma } \bigcap {\varOmega }^{N}\), and also set \(h_{x,i}=x_{i}-x_{i-1},\ \hbar _{x,i}=(h_{x,i+1}+h_{x,i})/2\) and \(h_{y,j}=y_{j}-y_{j-1},\ \hbar _{y,j}=(h_{y,j+1}+h_{y,j})/2\). Given a mesh function \(\{{w}_{ij}^{N}\}\) on ΩN, we discretize the problem (1) by the standard upwind scheme as follows,

with

We split \({\mathscr{L}}^{N}\) into \({{\mathscr{L}}}_{x}^{N}+ {{\mathscr{L}}}_{y}^{N}\), where

The matrix associated with the discrete operator \({\mathscr{L}}^{N}\) is an M-matrix. Therefore, the following discrete comparison principle holds.

Lemma 2

The operator \({\mathscr{L}}^{N}\) satisfies a discrete comparison principle, that is, if \(\left \{v_{ij}\right \}\) and \(\left \{w_{ij}\right \}\) are two mesh functions satisfying \(\left |v_{ij}\right |\le w_{ij}\) on ΓN, and \(\left |{\mathscr{L}}^{N}v_{ij}\right |\le {\mathscr{L}}^{N} w_{ij}\) on ΩN ∖ΓN, then \(\left |v_{ij}\right |\le w_{ij}\) on ΩN.
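The sign pattern behind the M-matrix property can be checked directly. The following is a minimal sketch for a one-dimensional analogue, assuming the model operator −εu″ − bu′ + cu with a forward (upwind) difference for the convection term and the standard three-point second difference on an arbitrary mesh. The function name `upwind_row`, the sample mesh, and the 1D reduction itself are illustrative assumptions, not the two-dimensional operator of this paper.

```python
def upwind_row(x, i, eps, b, c):
    """Coefficients (west, centre, east) of a 1D upwind operator at interior node i.

    Assumed model operator: -eps*u'' - b*u' + c*u with b > 0 and c >= 0,
    discretized on an arbitrary mesh x[0] < x[1] < ... < x[N].
    """
    h = x[i] - x[i - 1]            # backward step h_i
    hp = x[i + 1] - x[i]           # forward step h_{i+1}
    hbar = 0.5 * (h + hp)          # averaged step
    west = -eps / (hbar * h)                 # multiplies w_{i-1}
    east = -eps / (hbar * hp) - b / hp       # multiplies w_{i+1}; forward difference for -b*u'
    centre = -(west + east) + c              # multiplies w_i
    return west, centre, east


mesh = [0.0, 0.001, 0.003, 0.01, 0.2, 0.6, 1.0]   # arbitrary nonuniform mesh
rows = [upwind_row(mesh, i, eps=1e-3, b=2.0, c=1.0) for i in range(1, len(mesh) - 1)]

# M-matrix sign pattern: negative off-diagonals, positive diagonal, non-negative row sums.
signs_ok = all(w < 0 and e < 0 and d > 0 and w + d + e >= 0 for w, d, e in rows)
print(signs_ok)
```

In this sketch each row sum equals c up to rounding, so the matrix is diagonally dominant with non-positive off-diagonal entries whatever the mesh steps are, which is the structure a discrete comparison principle relies on.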

We also have the standard truncation error bounds for the discrete operator \({\mathscr{L}}^{N}\) as below.

Lemma 3 (cf. Lemma 3.2 in [19] and Lemma 8 in [18])

Let g(x,y) be a smooth function defined on Ω. Then the following estimates for the truncation error hold:

and

for 0 < i, j < N, with analogous estimates for \( \left |{{\mathscr{L}}}_{y}^{N} g_{ij}-\left ({\mathscr{L}}_{y}g\right )_{ij}\right |\).

3 The Bakhvalov mesh for convection-diffusion problems

The original Bakhvalov mesh is presented in [2] as a discretization mesh for the reaction-dominated problems with characteristic boundary layers. In this section, we adopt his idea and describe the version for the problem (1) with exponential layers. Furthermore, a careful analysis of mesh properties that are needed for the later error analysis is also given.

The coordinates of the mesh points, xi and yj, of the Bakhvalov mesh are generated by functions λ1(t) and λ2(t) with t ∈ [0,1] in the sense that xi = λ1(ti) and yi = λ2(ti), where ti = i/N for i = 0,1,…,N. Then we form the mesh ΩN using the Bakhvalov mesh points (xi,yj). For simplicity, from now on, we only consider identical mesh-generating functions λ1 and λ2, denoting them jointly by λ.

The mesh-generating function λ is defined as follows:

with ψ(t) = aεϕ(t) and \(\phi (t):=\ln \frac {q}{q-t}\) for t ∈ [0,q), where a and q are fixed positive mesh parameters such that q ∈ (0,1) and \(\psi ^{\prime }(0)<1\), that is, aε∗ < q. Speaking of the mesh points on either the x- or the y-axis, the value of ψ(α) represents the transition point from the mesh graded in the layer to the uniform mesh outside the layer. The transition point is defined implicitly via α, which is the solution of the nonlinear equation

(the part of λ on [α,1] is the tangent line from the point (1,1) to ψ, touching ψ at (α,ψ(α))).
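The construction can be sketched in a few lines of code. The example below assumes that the nonlinear equation for α is the tangency condition ψ(α) + ψ′(α)(1 − α) = 1, which is what the geometric description above amounts to, and solves it by bisection. The function name `bakhvalov_mesh`, the default parameter values, and the choice of bisection are illustrative assumptions.

```python
import math


def bakhvalov_mesh(N, eps, a=2.0, q=0.5):
    """Return (alpha, points) for the mesh x_i = lambda(i/N), i = 0, ..., N.

    psi(t) = a*eps*ln(q/(q - t)) is used on [0, alpha]; on [alpha, 1] the
    generating function is the tangent to psi at alpha, and alpha is fixed
    by requiring that this tangent passes through the point (1, 1).
    """
    if not (0.0 < q < 1.0 and 0.0 < a * eps < q):
        raise ValueError("need 0 < q < 1 and a*eps < q")

    def psi(t):
        return a * eps * math.log(q / (q - t))

    def dpsi(t):
        return a * eps / (q - t)

    def g(t):
        # Tangency residual: zero exactly when the tangent at t hits (1, 1).
        return psi(t) + dpsi(t) * (1.0 - t) - 1.0

    # g is increasing on [0, q); g(0) = a*eps/q - 1 < 0 and g > 0 at the
    # right end of the bracket, so bisection converges to the unique root.
    lo, hi = 0.0, q - (1.0 - q) * a * eps
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    alpha = 0.5 * (lo + hi)

    def lam(t):
        if t <= alpha:
            return psi(t)
        return psi(alpha) + dpsi(alpha) * (t - alpha)

    return alpha, [lam(i / N) for i in range(N + 1)]


alpha, x = bakhvalov_mesh(N=16, eps=1e-3)
steps = [x[i] - x[i - 1] for i in range(1, len(x))]
print(alpha, x[:4], steps[-1])
```

Because ψ is convex and the two pieces join with matching slope, the computed steps are non-decreasing: graded inside the layer and uniform beyond the transition point ψ(α).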

Remark 1

If we want the points xi and yi, i = 0,1,…,N, to be generated by two different respective functions λ1 and λ2, then each function has its own constants a and q, and consequently, different values of α.

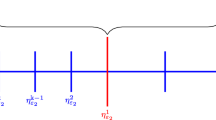

Since we do not know the point α in general, we consider auxiliary points α1 and α2 such that

The line through the points (q,0) and (1,1) has the slope 1/(1 − q) and it is steeper than the tangent line from (1,1) to ψ(t), whose slope is \(\psi ^{\prime }(\alpha )\). Therefore, α < α1 < q because \(\psi ^{\prime }(t)\) is monotonically increasing on [0,q). Similarly, we also have 0 < α2 < α. Figure 1 illustrates this construction graphically.

We now prove some properties of the Bakhvalov mesh. We state them for hi, which stands for both hx,i and hy,i, i = 1,2,…,N.

Lemma 4

and

Proof

It is easy to see that \(\phi ^{\prime \prime }(t)=\frac {1}{(q-t)^{2}}>0\) for t ∈ [0,q), and

Therefore, \(\lambda ^{\prime }(t)\) is non-decreasing and (12) follows from

To show (13), use \(\psi ^{\prime }(\alpha _{1})= \frac {1}{1-q}\) again to get

It follows that α1 = q − (1 − q)aε which yields the second inequality in (13). A similar work with \(\psi ^{\prime }(\alpha _{2})=1\) yields the remaining estimate in (13). □
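As a worked version of the computation in this proof, using only \(\psi ^{\prime }(t)=a\varepsilon /(q-t)\) and the defining relations \(\psi ^{\prime }(\alpha _{1})=1/(1-q)\) and \(\psi ^{\prime }(\alpha _{2})=1\), the two auxiliary points can be written explicitly:

$$ \frac{a\varepsilon}{q-\alpha_{1}}=\frac{1}{1-q}\ \Longrightarrow\ q-\alpha_{1}=(1-q)a\varepsilon, \qquad \frac{a\varepsilon}{q-\alpha_{2}}=1\ \Longrightarrow\ q-\alpha_{2}=a\varepsilon. $$

Since α2 < α < α1, this places q − α between (1 − q)aε and aε, so the distance from α to q is of order ε.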

The estimates (13) imply important lower and upper bounds for q − α, which are occasionally used later in the error analysis:

Let J be the index such that

The next lemma provides more estimates of the mesh width in the layer region.

Lemma 5

Let tJ ≤ q. Then we have

Proof

By (17), for i ≤ J − 1 we have

On the other hand,

which completes the proof of (18). □

Remark 2

Without the condition tJ ≤ q, the estimates in Lemma 5 are true for i ≤ J − 2.

We now consider step-size estimates for the case q < tJ. We also define

Lemma 6

Let q < tJ. Then the following estimates are satisfied:

- When \(\alpha \le t_{J-1/2}\), we have

$$ h_{J}\ge \left( 2N\right)^{-1}. \tag{19} $$

- When \(t_{J-1/2} < \alpha \), we have

$$ h_{J-1}\le 2a\varepsilon. \tag{20} $$

Proof

First, consider α ≤ tJ− 1/2. In this case, \(h_{J}=x_{J}-x_{J-1}=(x_{J}-x_{\alpha })+(x_{\alpha }-x_{J-1})\) with xα = ψ(α). It follows that

where we used (16) in the last inequality.

Second, for tJ− 1/2 < α, because tJ− 1 < tJ− 1/2 < α < q, we have

which gives (20). □

4 The truncation error analysis

The numerical solution \({w}_{ij}^{N}\) of the upwind finite-difference discretization is decomposed analogously to its continuous counterpart:

for which

and

Let

be the truncation (consistency) error of the upwind discretization of the problem (1) on the Bakhvalov mesh. We establish the upper bounds for the truncation error by using

where we set

and

We will bound each term of \({\tau }_{ij}^{x}\) and \(\tau _{ij}^{y}\) separately. For the regular part S of the solution, the standard argument (see also [19]) is applied by invoking Lemma 1 and the first estimate in Lemma 3, as well as the mesh property (12) to obtain

Let

and let \(\bar E_{ij}^{y}\) be defined in the same way with β2 instead of β1.

In the next two lemmas we provide the truncation error estimate for the layer parts E1 and E2, as well as for the corner part E12.

Lemma 7

Let aβ1 ≥ 2. Then, for any j = 1,2,…,N − 1, the bound on \(\left |{{\mathscr{L}}}_{x}^{N} \left (E_{1,ij}-E_{1,ij}^{N}\right ) \right |\) can be given as follows.

- For i ≥ J,

$$ \left|{\mathcal{L}}_{x}^{N} \left( E_{1,ij}-E_{1,ij}^{N}\right) \right|\le CN^{-1}. \tag{24} $$

- For i ≤ J − 2,

$$ \left|{\mathcal{L}}_{x}^{N} \left( E_{1,ij}-E_{1,ij}^{N}\right) \right|\le C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{x}N^{-1}\right). \tag{25} $$

- For i = J − 1,

$$ \left|{\mathcal{L}}_{x}^{N} \left( E_{1,ij}-E_{1,ij}^{N}\right) \right|\le \begin{cases} C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{x}N^{-1}\right),& \text{if } \ h_{x,i}\le \varepsilon,\\ C\left( N^{-1}+h_{x,i+1}^{-1}\bar E_{ij}^{x}N^{-1}\right),&\text{if } \ h_{x,i}>\varepsilon. \end{cases} \tag{26} $$

Also,

Proof

Throughout the proof, let 1 ≤ j ≤ N − 1. For the detail of this proof technique, we refer the reader to [28, Lemma 3.1].

We begin by asserting (24) for i ≥ J + 1. We apply (11) to E1, use (3), and note that in this case ti− 1 ≥ tJ ≥ α. Then we have

where we have used (16) and aβ1 ≥ 2 in the last inequality.

To prove (24) for i = J, we consider two cases: ε ≤ N− 1 and ε > N− 1. First, for i = J and ε ≤ N− 1, our approach is

and then we bound each term on the right-hand side separately. Using the estimate (6) at i = J we get

where we used (16). As for \(\left |{{\mathscr{L}}}_{x}^{N} \left (E_{1,ij}\right ) \right |\), we have

where \( P_{ij}^{x}= \varepsilon \left |D_{x}^{2} E_{1,ij}\right |, \ Q_{ij}^{x} = b_{1,ij}\left |D_{x}^{+}E_{1,ij}\right |, \ R_{ij}^{x}=c_{ij}\left |E_{1,ij}\right |, \) and

where we have used tJ− 1 < α ≤ tJ and (16) again. The same argument is employed to get \(Q_{J,j}^{x}\le CN^{-1}\). For \(R_{J,j}^{x}\), it is clear from (3) that

because of the arguments used in (29).

Second, for i = J and ε > N− 1, it follows that hx,J ≤ Cε because of (12). Therefore, we use (11) to continue as follows:

This completes the proof of (24).

We combine the estimates (25) and (26) and prove them together. We consider two subcases:

1. when ti− 1 ≤ q − 3/N, and

2. when q − 3/N < ti− 1 < α.

Subcase 1. Note that, when ti− 1 ≤ q − 3/N, we have ti+ 1 ≤ q − 1/N < q, so \(\lambda ^{\prime }(t_{i+1}) \le a\varepsilon \phi ^{\prime }(t_{i+1})\) due to (14). Hence,

$$ \begin{array}{ll} \left|{\mathcal{L}}_{x}^{N} \left( E_{1,ij}-E_{1,ij}^{N}\right) \right| &\le CN^{-1}\lambda^{\prime}(t_{i+1})\varepsilon^{-2}e^{-\beta_{1} x_{i-1}/\varepsilon}\\ &\le C\varepsilon^{-1}N^{-1}\left( \frac{1}{q-t_{i+1}}\right)\left( e^{-\phi(t_{i-1})}\right)^{a\beta_{1}/2}e^{-\beta_{1} x_{i-1}/(2\varepsilon)}\\ &\le C\varepsilon^{-1}N^{-1}\bar{E}^{x}_{ij}. \end{array} $$

Here, for i = 1,2,…,J − 2, we used hx,i ≤ aε due to Remark 2, and for i = J − 1, the assumption hx,J− 1 ≤ ε in (26).

Subcase 2. Note that in this case we have (q − ti− 1) < 3N− 1. Hence, we can proceed as follows:

$$ \begin{array}{ll} \left|{\mathcal{L}}_{x}^{N} \left( E_{1,ij}-E_{1,ij}^{N}\right) \right| &\le C\varepsilon^{-1} e^{-\beta_{1} x_{i-1}/(2\varepsilon)}e^{-\beta_{1} x_{i-1}/(2\varepsilon)}\\ &\le C\varepsilon^{-1} e^{-\beta_{1} x_{i}/(2\varepsilon)}e^{\beta_{1} h_{x,i}/(2\varepsilon)}\left( e^{-\phi(t_{i-1})}\right)^{a\beta_{1}/2}\\&\le C\varepsilon^{-1}\bar{E}^{x}_{ij}N^{-1}. \end{array} $$

This completes the proof of (25) and the first estimate in (26).

Lastly, we show the second estimate in (26), that is, when i = J − 1 and hx,i > ε, by considering the following cases:

First, when tJ ≤ q, due to (12), one gets ε ≤ CN− 1. We again use the estimate in the form of (28), invoking (16) and (6) to get

For \(P_{J-1,j}^{x}\) (and analogously for \(Q_{J-1,j}^{x}\)), we use (16) and (18) again to obtain

This implies that \( \left |{{\mathscr{L}}}_{x}^{N} \left (E_{1,ij}\right ) \right | \le Ch_{x,J}^{-1} \bar {E}_{J-1,j}^{x}N^{-1}\) and, together with (32), asserts the first case of (31).

For hx,i > ε, i = J − 1, \(\alpha \le t_{J-1/2}^{}\) and q < tJ, we use (19) to get

because tJ− 1 < q < tJ. We apply a similar argument to \(Q_{J-1,j}^{x}\), and also

This implies that \(\left |{{\mathscr{L}}}_{x}^{N} \left (E_{1,ij}-E_{1,ij}^{N}\right ) \right |\le CN^{-1}\), which is the second estimate in (31).

For hx,i > ε (again, ε ≤ CN− 1 by (12)), i = J − 1, α > tJ− 1/2, and q < tJ, because of (20), we can bound \(P_{J-1,j}^{x}\) and \(Q_{J-1,j}^{x}\) as in (33), and \(\left |\left ({\mathscr{L}}E_{1}\right )_{ij}\right |\) as in (32), which yields the last case of (31).

For \(\left |{{\mathscr{L}}}_{x}^{N} \left (E_{2,ij}-E_{2,ij}^{N}\right ) \right |\), because of (4) and (11), we can easily show that, with arbitrary hy,j,

where we used the property hx,i ≤ CN− 1,i = 1,2,…,N. This completes the proof. □

Lemma 8

Let aβ1 ≥ 2. The upper bound on \(\left |{{\mathscr{L}}}_{x}^{N} \left (E_{12,ij}-E{}_{12,ij}^{N}\right ) \right |\), for any j = 1,2,…,N − 1, satisfies the following.

- For i ≥ J,

$$ \left|{\mathcal{L}}_{x}^{N} \left( E_{12,ij}-E{}_{12,ij}^{N}\right) \right|\le CN^{-1}. $$

- For i ≤ J − 2,

$$ \left|{\mathcal{L}}_{x}^{N} \left( E_{12,ij}-E{}_{12,ij}^{N}\right) \right|\le C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{x}N^{-1}\right). $$

- For i = J − 1,

$$ \left|{\mathcal{L}}_{x}^{N} \left( E_{12,ij}-E{}_{12,ij}^{N}\right) \right|\le \begin{cases} C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{x}N^{-1}\right)& \text{if } \ h_{x,i}\le \varepsilon,\\ C\left( N^{-1}+h_{x,i+1}^{-1}\bar E_{ij}^{x}N^{-1}\right) &\text{if } \ h_{x,i}>\varepsilon. \end{cases} $$

Proof

We observe the following two key estimates that make the analysis in the proof of Lemma 7 work for the E12 component. Lemma 1 implies

and

Hence, arguments analogous to those in the proof of Lemma 7 can be applied to prove the assertions. □

Combining Lemmas 7 and 8 with (23) and invoking (22), we arrive at the main result of this section, the truncation error estimate for the upwind discretization of the problem (1) on the Bakhvalov mesh.

Theorem 1

Let aβ1 ≥ 2 and aβ2 ≥ 2. Then the truncation error of the upwind discretization of the problem (1) on the Bakhvalov mesh satisfies

where for \({\tau }_{ij}^{x}\) and any j = 1,2,…,N − 1, we have,

- for i ≥ J,

$$ {\tau}_{ij}^{x}\le CN^{-1}, \tag{34} $$

- for i ≤ J − 2,

$$ {\tau}_{ij}^{x}\le C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{x}N^{-1}\right), $$

- for i = J − 1,

$$ {\tau}_{ij}^{x}\le \begin{cases} C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{x}N^{-1}\right) & \text{if } \ h_{x,i}\le \varepsilon,\\ C\left( N^{-1}+h_{x,i+1}^{-1}\bar E_{ij}^{x}N^{-1}\right) &\text{if } \ h_{x,i}>\varepsilon, \end{cases} \tag{35} $$

whereas \(\tau _{ij}^{y}\), for any i = 1,2,…,N − 1, can be bounded as follows:

- for j ≥ J,

$$ \tau_{ij}^{y}\le CN^{-1}, $$

- for j ≤ J − 2,

$$ \tau_{ij}^{y}\le C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{y}N^{-1}\right), $$

- for j = J − 1,

$$ \tau_{ij}^{y}\le \begin{cases} C\left( N^{-1}+\varepsilon^{-1}\bar E_{ij}^{y}N^{-1}\right)& \text{if } \ h_{y,j}\le \varepsilon,\\ C\left( N^{-1}+h_{y,j+1}^{-1}\bar E_{ij}^{y}N^{-1}\right) &\text{if } \ h_{y,j}>\varepsilon. \end{cases} $$

5 The barrier function and uniform convergence result

We now proceed to form a barrier function. Set

with

where Ck, k = 1,2,3,4, are appropriately chosen positive constants independent of both ε and N.

Lemma 9

Let aβ1 ≥ 2 and aβ2 ≥ 2. Then, there exist sufficiently large constants Ck, k = 1,2,3,4, such that

where

and

Proof

It is easy to verify that \({{\mathscr{L}}}_{x}^{N}\gamma _{ij}\ge {\kappa }_{ij}^{x}\) and \({{\mathscr{L}}}_{y}^{N}\gamma _{ij} \ge {\kappa }_{ij}^{y}\), see, for instance, [11, 27, 35]. Therefore, using Theorem 1, we will show that

for various values of the index i.

Let 1 ≤ j ≤ N − 1 throughout the proof again. It is clear from (34) that

It remains to prove that \({\tau }_{ij}^{x}\le {\kappa }_{ij}^{x}\) for i ≤ J − 1. If i ≤ J − 3, then hx,i+ 1 ≤ aε because of Remark 2, in which the estimate (18) holds for i ≤ J − 2. Therefore,

Next, for i = J − 2,J − 1, we consider two cases: hx,i > ε and hx,i ≤ ε. First, when hx,i > ε, it is clear that hx,i+ 1 ≥ hx,i which immediately yields \({\tau }_{J-1,j}^{x} \le \kappa ^{x}_{J-1,j}\) because of (35).

For i = J − 2 and hx,J− 2 > ε (which implies CN− 1 > ε), we can also prove that

by invoking (28), (30), hx,J− 2 ≤ aε by Remark 2, while analogous arguments can be used to obtain the bounds of \(Q_{J-2,j}^{x}\), \(\left |\left ({\mathscr{L}}E_{1}\right )_{J-2,j}\right |,\) and \(\left |\left ({\mathscr{L}}E_{12}\right )_{J-2,j}\right |\).

Second, we consider hx,i ≤ ε and i = J − 2,J − 1. If hx,i+ 1 ≤ ε, we have the same situation as in the estimate (36). On the other hand, when hx,i+ 1 > ε for i = J − 2,J − 1, this implies that \(\max \limits \{\varepsilon , h_{x,i+1}\}=h_{x,i+1}\) and ε ≤ CN− 1, so, by modifying the approach in (33), we can also show that

Similar arguments, when applied to \(Q^{x}_{ij},\) \(\left |\left ({\mathscr{L}}_{x}E_{1}\right )_{ij}\right |,\) and \(\left |\left ({\mathscr{L}}_{x}E_{12}\right )_{ij}\right |\) give,

Using analogous reasoning for \(\tau _{ij}^{y}\), we are done. □

Theorem 2

Let aβ1 ≥ 2 and aβ2 ≥ 2. Then, for the upwind finite-difference method applied on the Bakhvalov mesh to the convection-diffusion problem (1), the error satisfies

Proof

It is straightforward from (21) that

Furthermore, Lemma 9 gives

Applying the discrete comparison principle, Lemma 2, we complete the proof. □

Remark 3

The above ε-uniform convergence result is more robust than what can be proved when the upwind scheme is used on the standard Shishkin mesh, in which case \(\ln N\)-factors occur in the error. Moreover, proofs of ε-uniform convergence on Shishkin-type meshes usually invoke the assumption ε ≤ N− 1 (see [18, Assumption 3] and [22, page 12], for instance), which our proof method does not require.

6 Numerical results

In this section, we illustrate the numerical performance of the Bakhvalov mesh as the discretization mesh of the standard upwind difference scheme for the two-dimensional convection-diffusion problems of type (1). We consider two test problems. The first one is taken from [22, page 261],

where f(x,y) is chosen so that

is the exact solution. It is clear that the solution has exponential boundary layers along the x = 0 and y = 0 edges. In this test problem, b1(x,y) ≥ 2 and b2(x,y) ≥ 3 for \((x,y)\in \bar {\varOmega }\). Therefore, the choice of the Bakhvalov mesh parameter a = 2 satisfies aβ1 ≥ 2 and aβ2 ≥ 2 as required by Theorem 2. We also choose q = 1/2.

The computed errors, denoted by EN, are shown in Table 1, together with the rate of convergence ρ which is approximated numerically as

Table 9.1 in [22] shows the errors for the same test problem with ε = 10− 8 when the standard Shishkin mesh and the Bakhvalov-Shishkin mesh are used. We point out that the errors in Table 1 are smaller.
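The way such errors and rates are obtained can be illustrated on a small one-dimensional analogue. The sketch below is not one of the test problems of this paper: it assumes the model problem −εu″ − 2u′ = 2 on (0,1) with u(0) = u(1) = 0, whose exact solution is known in closed form, uses the mesh parameters a = 2 and q = 1/2, and assumes the usual two-mesh estimate ρ = log2(E_N/E_2N) for the rate. All function names are illustrative.

```python
import math


def bakhvalov_points(N, eps, a=2.0, q=0.5):
    """Bakhvalov mesh points on [0, 1]; alpha is found by bisection."""
    psi = lambda t: a * eps * math.log(q / (q - t))
    dpsi = lambda t: a * eps / (q - t)
    lo, hi = 0.0, q - (1.0 - q) * a * eps
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if psi(mid) + dpsi(mid) * (1.0 - mid) < 1.0:
            lo = mid
        else:
            hi = mid
    al = 0.5 * (lo + hi)
    return [psi(i / N) if i / N <= al else psi(al) + dpsi(al) * (i / N - al)
            for i in range(N + 1)]


def solve_upwind(x, eps, b, f):
    """Upwind scheme for -eps*u'' - b*u' = f, u(0) = u(1) = 0 (constant b, f)."""
    n = len(x) - 1
    lower, diag, upper, rhs = [], [], [], []
    for i in range(1, n):
        h, hp = x[i] - x[i - 1], x[i + 1] - x[i]
        hbar = 0.5 * (h + hp)
        west = -eps / (hbar * h)
        east = -eps / (hbar * hp) - b / hp      # forward difference for -b*u'
        lower.append(west)
        upper.append(east)
        diag.append(-(west + east))
        rhs.append(f)
    # Thomas algorithm; unknown k corresponds to mesh node k + 1.
    for k in range(1, n - 1):
        m = lower[k] / diag[k - 1]
        diag[k] -= m * upper[k - 1]
        rhs[k] -= m * rhs[k - 1]
    u = [0.0] * (n + 1)
    u[n - 1] = rhs[n - 2] / diag[n - 2]
    for k in range(n - 3, -1, -1):
        u[k + 1] = (rhs[k] - upper[k] * u[k + 2]) / diag[k]
    return u


def max_error(N, eps):
    """Maximum nodal error against the closed-form solution of the model problem."""
    x = bakhvalov_points(N, eps)
    u = solve_upwind(x, eps, b=2.0, f=2.0)
    exact = lambda s: (1.0 - math.exp(-2.0 * s / eps)) / (1.0 - math.exp(-2.0 / eps)) - s
    return max(abs(ui - exact(xi)) for ui, xi in zip(u, x))


eps = 1e-4
E64, E128 = max_error(64, eps), max_error(128, eps)
rate = math.log2(E64 / E128)
print(f"E_64 = {E64:.3e}, E_128 = {E128:.3e}, rate = {rate:.2f}")
```

Repeating the computation for several values of ε and N gives a table of the same shape as the ones reported in this section.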

Our second test problem is

for which we do not know the exact solution. Therefore, we compute the errors by the double-mesh principle [7]. We use the same mesh parameters as above, a = 2 (here bk(x,y) ≥ 1 for k = 1,2 and \((x,y)\in \bar {\varOmega }\)) and q = 1/2. The upwind approximations and the rates of convergence are shown in Table 2. A computed solution when N = 26 and ε = 10− 3 is plotted in Fig. 2.

Fig. 2 The computed solution by double-mesh principle to the test problem (38)
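A double-mesh estimate is convenient on this mesh because the points are x_i = λ(i/N) with the same λ for every N, so each node of the mesh with N intervals is also a node of the mesh with 2N intervals and no interpolation is needed. The following is a minimal one-dimensional sketch of that idea. The callback `solve(N)`, assumed to return the nodal values on the mesh with N intervals, and the stand-in `toy_solve` are hypothetical and are not the solver used for Table 2.

```python
import math


def double_mesh_error(solve, N):
    """Max difference between the N-mesh solution and the 2N-mesh solution
    at the shared nodes (node i of the coarse mesh is node 2*i of the fine mesh)."""
    coarse = solve(N)
    fine = solve(2 * N)
    return max(abs(coarse[i] - fine[2 * i]) for i in range(N + 1))


def double_mesh_rate(solve, N):
    """Estimated order of convergence from two consecutive double-mesh errors."""
    return math.log2(double_mesh_error(solve, N) / double_mesh_error(solve, 2 * N))


def toy_solve(N):
    # Stand-in for a numerical solution: nodal values of t**2 plus an
    # artificial first-order perturbation t/N (hypothetical data).
    return [(i / N) ** 2 + (i / N) / N for i in range(N + 1)]


print(double_mesh_error(toy_solve, 32), double_mesh_rate(toy_solve, 32))
```

For the stand-in data the perturbation is exactly first order in 1/N, so the estimated rate is 1 up to rounding, which is the behaviour a first-order scheme should show in such a table.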

7 Conclusion

Motivated by a long-standing open question in [31], related to the finite-difference analysis of convection-dominated problems, we generalized the new approach of the truncation error and barrier function technique, recently introduced in [28], from one-dimensional problems to 2D problems. Moreover, one of the simplest modifications of the Bakhvalov mesh was used in [28], whereas in the present paper, we considered the original Bakhvalov discretization mesh. For the upwind finite-difference scheme, we proved first-order convergence uniform in the perturbation parameter. We also confirmed the theoretical results experimentally. Our novel technical approach opens the door to the analysis of other unanswered questions related to Bakhvalov-type meshes in conjunction with convection-diffusion problems.

References

Andreev, V., Kopteva, N.: On the convergence, uniform with respect to a small parameter, of monotone three-point finite difference approximations. Differ. Equ. 34 (7), 921–929 (1998)

Bakhvalov, N.S.: The optimization of methods of solving boundary value problems with a boundary layer. USSR Comp. Math. Math. Phys. 9, 139–166 (1969)

Britton, N.F.: Reaction-Diffusion Equations and Their Applications to Biology. Academic, London (1986)

Bujanda, B., Clavero, C., Gracia, J.L., et al.: A high order uniformly convergent alternating direction scheme for time dependent reaction-diffusion singularly perturbed problems. Numer. Math. 107, 1–25 (2007)

Clavero, C., Gracia, J.L., O’Riordan, E.: A parameter robust numerical method for a two dimensional reaction-diffusion problem. Math. Comput. 74(252), 1743–1758 (2005)

Estep, D.J., Larson, M.G., Williams, R.D.: Estimating the error of numerical solutions of systems of reaction-diffusion equations. Mem. Amer. Math. Soc. 146 (696), viii+ 109 (2000)

Farrell, P.A., Hegarty, A.F., Miller, J.J.H., O’Riordan, E., Shishkin, G.I.: Robust Computational Techniques for Boundary Layers. Chapman & Hall, Boca Raton (2000)

Franz, S., Roos, H.-G.: The capriciousness of numerical methods for singular perturbations. SIAM Rev. 53, 157–173 (2011)

Han, H., Kellogg, R.B.: Differentiability properties of solutions of the equation − ε2Δu + ru = f(x,y) in a square. SIAM J. Math. Anal. 21, 394–408 (1990)

Hegarty, A.F., O’Riordan, E., Stynes, M.: A comparison of uniformly convergent difference schemes for two-dimensional convection-diffusion problems. J. Comput. Phys. 105(1), 24–32 (1993)

Kellogg, R.B., Tsan, A.: Analysis of some difference approximations for a singular perturbation problem without turning points. Math. Comput. 32, 1025–1039 (1978)

Kellogg, R.B., Linß, T., Stynes, M.: A finite difference method on layer-adapted meshes for an elliptic reaction-diffusion system in two dimensions. Math. Comp. 77(264), 2085–2096 (2008)

Kellogg, R.B., Madden, N., Stynes, M.: A parameter-robust numerical method for a system of reaction-diffusion equations in two dimensions. Numer. Meth. Part. Differ. Equa. 24(1), 312–334 (2008)

Kopteva, N.: On the convergence, uniform with respect to the small parameter, of a scheme with central difference on refined grids. Comput. Math. Math. Phys. 39, 1594–1610 (1999)

Kopteva, N.: Uniform pointwise convergence of difference schemes for convection-diffusion problems on layer-adapted meshes. Computing 66, 179–197 (2001)

Kopteva, N.: Error expansion for an upwind scheme applied to a two-dimensional convection-diffusion problem. SIAM J. Num. Anal. 41, 1851–1869 (2003)

Linß, T.: Layer-adapted meshes for convection-diffusion problems. Comput. Methods Appl. Mech. Eng. 192(9-10), 1061–1105 (2003)

Linß, T.: An upwind difference scheme on a novel Shishkin-type mesh for a linear convection-diffusion problem. J. Comput. Appl. Math. 110(1), 93–104 (1999)

Linß, T., Stynes, M.: A hybrid difference scheme on a Shishkin mesh for linear convection-diffusion problems. Appl. Numer. Math. 31(3), 255–270 (1999)

Linß, T., Stynes, M.: Asymptotic analysis and Shishkin-type decomposition for an elliptic convection-diffusion problem. J. Math. Anal. Appl. 262(2), 604–632 (2001)

Linß, T.: Robust convergence of a compact fourth-order finite difference scheme for reaction-diffusion problems. Numer. Math. 111, 239–249 (2008)

Linß, T.: Layer-Adapted Meshes for Reaction-Convection-Diffusion Problems Lecture Notes in Mathematics, vol. 1985. Springer, Berlin (2010)

Linß, T., Roos, H.-G., Vulanović, R.: Uniform pointwise convergence on Shishkin-type meshes for quasilinear convection-diffusion problems. SIAM J. Numer. Anal. 38, 897–912 (2001)

Nhan, T.A., Stynes, M., Vulanović, R.: Optimal uniform-convergence results for convection-diffusion problems in one dimension using preconditioning. J. Comput. Appl. Math. 338, 227–238 (2018). https://doi.org/10.1016/j.cam.2018.02.012

Nhan, T.A., Vulanović, R.: Preconditioning and uniform convergence for convection-diffusion problems discretized on Shishkin-type meshes. In: Advances in Numerical Analysis. Article ID 2161279 (2016)

Nhan, T.A., Vulanović, R.: Uniform convergence on a Bakhvalov-type mesh using preconditioning approach: technical report. arXiv:1504.04283 (2015)

Nhan, T.A., Vulanović, R.: A note on a generalized Shishkin-type mesh. Novi Sad J. Math. 48(2), 141–150 (2018). https://doi.org/10.30755/NSJOM.07880

Nhan, T.A., Vulanović, R.: Analysis of the truncation error and barrier-function technique for a Bakhvalov-type mesh. ETNA 51, 315–330 (2019)

Roos, H.-G., Linß, T.: Sufficient conditions for uniform convergence on layer-adapted grids. Computing 63, 27–45 (1999)

Roos, H.-G., Stynes, M., Tobiska, L.: Numerical Methods for Singularly Perturbed Differential Equations Springer Series in Computational Mathematics, 2nd edn., vol. 24. Springer, Berlin (2008)

Roos, H.-G., Stynes, M.: Some open questions in the numerical analysis of singularly perturbed differential equations. CMAM 15, 531–550 (2015)

Roos, H.-G., Schopf, M.: An optimal a priori error estimate in the maximum norm for the Il’in scheme in 2D. BIT 55(4), 1169–1186 (2015)

Roos, H.-G.: Layer-adapted meshes: milestones in 50 years of history. Appl. Math. arXiv:1909.08273v1 (2019)

Shishkin, G.I.: Grid Approximation of Singularly Perturbed Elliptic and Parabolic Equations (In Russian). Second Doctoral thesis, Keldysh Institute Moscow (1990)

Stynes, M., Roos, H.-G.: The midpoint upwind scheme. Appl. Numer. Math. 23, 361–374 (1997)

Stynes, M., Stynes, D.: Convection-diffusion problems: an introduction to their analysis and numerical solution, vol. 196. 156 pp (2018)

Vulanović, R., Nhan, T.A.: A numerical method for stationary shock problems with monotonic solutions. Numer. Algor. 77(4), 1117–1139 (2017)

Vulanović, R., Nhan, T.A.: Uniform convergence via preconditioning. Int. J. Numer. Anal. Model. Ser. B 5, 347–356 (2014)

Vulanović, R.: On a numerical solution of a type of singularly perturbed boundary value problem by using a special discretization mesh. Univ. u Novom Sadu Zb. Rad. Prir. Mat. Fak. Ser. Mat. 13, 187–201 (1983)

Vulanović, R.: Non-equidistant finite difference methods for elliptic singular perturbation problems. In: Miller, J.J.H. (ed.) Computational Methods for Boundary and Interior Layers in Several Dimensions. Boole Press, Dublin (1991)


Cite this article

Nhan, T.A., Vulanović, R. The Bakhvalov mesh: a complete finite-difference analysis of two-dimensional singularly perturbed convection-diffusion problems. Numer Algor 87, 203–221 (2021). https://doi.org/10.1007/s11075-020-00964-z


DOI: https://doi.org/10.1007/s11075-020-00964-z