Abstract

In this paper, a class of singularly perturbed coupled linear systems of second-order ordinary differential equations of convection–diffusion type is considered on the interval [0, 1]. Due to the presence of different perturbation parameters multiplying the diffusion terms of the coupled system, each of the solution components exhibits multiple layers in the neighbourhood of the origin. This is established through estimates of the derivatives of the solution. A numerical method composed of an upwind finite difference scheme applied on a piecewise uniform Shishkin mesh that resolves all the layers is proposed to solve the problem. The method is proved to be almost first-order convergent in the maximum norm uniformly in all the perturbation parameters. Numerical examples are provided to support the theory.

Keywords

- Singular perturbation problems

- System of convection-diffusion equations

- Finite difference method

- Shishkin Mesh

- Parameter uniform method

1 Introduction

Singularly perturbed differential equations of convection–diffusion type appear in several branches of applied mathematics. Roos et al. [1] describe linear convection–diffusion equations and related non-linear flow problems. Modelling real-life problems such as fluid flow problems, control problems, heat transport problems and river networks results in singularly perturbed convection–diffusion equations. Some of those models were discussed in [2]. A form of the linearized Navier–Stokes equations called the Oseen system of equations, which models many physical problems, is a system of singularly perturbed convection–diffusion equations. Systems of singularly perturbed convection–diffusion equations also have applications in control problems [3].

For a broad introduction to singularly perturbed boundary value problems of convection–diffusion type and robust computational techniques to solve them, one can refer to [4,5,6]. In [7], a coupled system of two singularly perturbed convection–diffusion equations is analysed and a parameter-uniform numerical method is suggested to solve it. In this paper, the following weakly coupled system of n singularly perturbed convection–diffusion equations is considered.

where \(\mathbf {u}(x)=\big (u_1(x),u_2(x),\ldots ,u_n(x)\big )^T\), \(\mathbf {f}(x)=\big (f_1(x),f_2(x),\ldots ,f_n(x)\big )^T,\)

Here, \( \varepsilon _1, \varepsilon _2,...,\varepsilon _n\) are distinct small positive parameters and for convenience, it is assumed that \(\varepsilon _i < \varepsilon _j,\) for \(i<j\). The functions \(a_i, b_{ij}\) and \(f_i\), for all i and j, are taken to be sufficiently smooth on \(\overline{\varOmega }\). It is further assumed that, \(a_i(x)\ge \alpha > 0,\;b_{ij}(x) < 0,\; i\ne j\) and \(\displaystyle \sum _{ j=1}^{n}b_{ij}(x) \ge \beta >0\), for all \(i=1,2,\ldots ,n.\) The case \(a_i(x)<0\) can be treated in a similar way with a transformation of x to \(1-x.\)
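The sign and bound conditions above can be checked numerically for a given set of coefficients. The following is a minimal sketch, not part of the original paper: the function name `check_assumptions`, the sampling of [0, 1] at equally spaced points and the example coefficients are all illustrative assumptions, and sampling can only suggest (not prove) that the conditions hold.

```python
from typing import Callable, List, Tuple

Coefficient = Callable[[float], float]


def check_assumptions(a: List[Coefficient],
                      b: List[List[Coefficient]],
                      samples: int = 101) -> Tuple[float, float]:
    """Check a_i >= alpha > 0, b_ij < 0 (i != j) and row sums >= beta > 0
    at equally spaced sample points of [0, 1] (hypothetical helper).

    Returns the sampled lower bounds (alpha, beta)."""
    n = len(a)
    xs = [k / (samples - 1) for k in range(samples)]
    # Sampled lower bound for the convection coefficients.
    alpha = min(a[i](x) for i in range(n) for x in xs)
    if alpha <= 0:
        raise ValueError("some a_i is not positive")
    # Off-diagonal coupling coefficients must be strictly negative.
    for x in xs:
        for i in range(n):
            for j in range(n):
                if i != j and b[i][j](x) >= 0:
                    raise ValueError(f"b[{i}][{j}] is not negative at x={x}")
    # Sampled lower bound for the row sums of the coupling matrix.
    beta = min(sum(b[i][j](x) for j in range(n)) for i in range(n) for x in xs)
    if beta <= 0:
        raise ValueError("some row sum of b is not positive")
    return alpha, beta


# Illustrative coefficients for n = 2 (not taken from the paper).
a_example = [lambda x: 1.0 + x, lambda x: 2.0]
b_example = [[lambda x: 3.0, lambda x: -1.0],
             [lambda x: -1.0, lambda x: 4.0 + x]]
alpha, beta = check_assumptions(a_example, b_example)
```

For these illustrative coefficients the sampled bounds are attained at the left end point of the interval.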

In [9], Linss analysed a broader class of weakly coupled systems of singularly perturbed convection–diffusion equations and presented an estimate of the derivatives of \(u_i\) depending only on \(\varepsilon _i,\) for \( i=1,2,\ldots ,n\). He claimed first-order and almost first-order convergence on Bakhvalov and Shishkin meshes, respectively, for the classical finite difference scheme.

The reduced problem corresponding to (1)–(2) is

where \(\mathbf {u}_0(x)=(u_{01}(x),u_{02}(x),...,u_{0n}(x))^T.\)

If \(u_k(0)\ne u_{0k}(0)\) for some k such that \(1\le k\le n\), then a boundary layer of width \(O(\varepsilon _k)\) is expected near \(x=0\) in each of the solution components \(u_i,\; 1\le i\le k\).

Notations. For any real valued function y on D, the norm of y is defined as \(\Vert y\Vert _D= \displaystyle {\sup _{x \in D}}|y(x)| \). For any vector valued function \(\mathbf {z}(x)=(z_1(x),z_2(x),\ldots ,z_n(x))^T\), \(\Vert \mathbf {z}\Vert _D=\max \big \{\Vert z_1\Vert _D, \Vert z_2\Vert _D,\ldots ,\Vert z_n\Vert _D\big \}.\) For any mesh function Y on a mesh \(D^N=\big \{x_j\big \}^N_{j=0}\), \(\Vert Y\Vert _{D^N}= \displaystyle {\max _{0\le j\le N}}|Y(x_j)| \) and for any vector valued mesh function \(\mathbf {Z}=(Z_1,Z_2,\ldots ,Z_n)^T\), \(\Vert \mathbf {Z}\Vert _{D^N}=\max \big \{\Vert Z_1\Vert _{D^N}, \Vert Z_2\Vert _{D^N}, \ldots , \Vert Z_n\Vert _{D^N}\big \}.\)
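As a small illustration of the discrete norms just defined, the sketch below evaluates \(\Vert Y\Vert _{D^N}\) and \(\Vert \mathbf {Z}\Vert _{D^N}\) for mesh functions stored as plain Python lists. The function names are hypothetical and chosen only for this example.

```python
from typing import List, Sequence


def mesh_norm(Y: Sequence[float]) -> float:
    """Maximum norm of a scalar mesh function given by its nodal values."""
    return max(abs(y) for y in Y)


def vector_mesh_norm(Z: Sequence[Sequence[float]]) -> float:
    """Maximum norm of a vector mesh function: the largest component norm."""
    return max(mesh_norm(Zi) for Zi in Z)


# Two components sampled at three mesh points (illustrative values).
Z_example: List[List[float]] = [[0.5, -2.0, 0.0], [1.0, 1.5, -0.25]]
norm_example = vector_mesh_norm(Z_example)
```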

Throughout this paper, C denotes a generic positive constant which is independent of the singular perturbation and discretization parameters.

2 Analytical Results

In this section, a maximum principle, a stability result and estimates of the derivatives of the solution of the system of Eqs. (1)–(2) are presented.

Lemma 1

(Maximum Principle) Let \(\mathbf {\psi }=(\psi _1, \psi _2, ..., \psi _n)^T\) be in the domain of L with \(\mathbf {\psi }(0) \ge \mathbf {0}\; and\; \mathbf {\psi }(1) \ge \mathbf {0}.\) Then \(L\mathbf {\psi } \le \mathbf {0}\; on\; \varOmega \) implies that \(\mathbf {\psi } \ge \mathbf {0}\) on \(\overline{\varOmega }.\)

Lemma 2

(Stability Result) Let \(\mathbf {\psi }\) be in the domain of L, then for \( x \in \overline{\varOmega }\) and \(1\le i\le n\)

Theorem 1

Let \( {\mathbf {u}}\) be the solution of (1)–(2), then for x \(\in \overline{\varOmega }\) and \(1\le i\le n\), the following estimates hold.

Proof

The estimate (4) follows immediately from Lemma 2 and Eq. (1). Let \(x \in [0,1]\), then for each \(i,\;1\le i\le n\), there exists \(a\in [0,1- \varepsilon _{i}]\) such that \(x \in N_{a}=[a,a+\varepsilon _{i}].\) By the mean value theorem, there exists \(y_{i} \in (a, a+\varepsilon _{i})\) such that

and hence

Also,

Substituting for \(u_i^{\prime \prime }(s)\) from (1), \(|u_{i}^{\prime }(x)| \le C\varepsilon _{i}^{-1}\Big (\Vert \mathbf {u}\Vert + \varepsilon _{i}\Vert \mathbf {f}\Vert \Big ).\) Again from (1), \(|u_{i}^{\prime \prime }(x)|\le C\varepsilon _{i}^{-2}\Big (\Vert \mathbf {u}\Vert + \varepsilon _{i}\Vert \mathbf {f}\Vert \Big ).\) Differentiating (1) once and substituting the above bounds lead to

2.1 Shishkin Decomposition of the Solution

The solution \(\mathbf {u}\) of the problem (1)–(2) can be decomposed into smooth \(\mathbf {v}=(v_1,...,v_n)^T\) and singular \(\mathbf {w}=(w_1,...,w_n)^T\) components given by \(\mathbf {u}=\mathbf {v}+\mathbf {w}\), where

where \(\mathbf {\gamma }=(\gamma _1, \gamma _2, \ldots , \gamma _n)^T\) is to be chosen.

2.1.1 Estimates for the Bounds on the Smooth Components and Their Derivatives

Theorem 2

For a proper choice of \(\mathbf {\gamma }\), the solution of the problem (7) satisfies for \(1\le i\le n\) and \(0\le k\le 3\),

Proof

Considering the layer pattern of the solution, first, the decomposition is done with \(\varepsilon _n\), for all the components of \(\mathbf {v}\). The second level decomposition with \(\varepsilon _{n-1}\) is for the first \(n-1\) components of \(\mathbf {v}\). Then, the decomposition continues with \(\varepsilon _{n-2}\) for the first \(n-2\) components of \(\mathbf {v}\) and so on. It is carried out in the following way.

First, the smooth component \(\mathbf {v}\) is decomposed into

where \(\mathbf {y}_{n}=\left( y_{n1},y_{n2},\ldots ,y_{nn}\right) ^T\) is the solution of

\(\mathbf {z}_{n}=\left( z_{n1},z_{n2},\ldots ,z_{nn}\right) ^T\) is the solution of

and \(\mathbf {q}_{n}=\left( q_{n1},q_{n2},\ldots ,q_{nn}\right) ^T\) is the solution of

Using the fact that \(\varepsilon _n^{-1}E\) is a matrix of bounded entries, and from the results in [10] for (10) and (11), it is not hard to see that

Now, using Theorem 1 and (13), with the choice that \(q_{nn}(0)=0,\)

Then from (9), it is clear that \(v_n(0)=\gamma _n=y_{nn}(0)+\varepsilon _nz_{nn}(0)\). Also from (13) and (14),

Now, having found the estimates of \(v_n^{(k)},\) to bound \(v_i^{(k)}\) for \(1\le i\le n-1\), the following notations are introduced for \(1\le l\le n,\)

\(\tilde{\mathbf {q}}_l=\left( q_{l1},q_{l2},\ldots ,q_{l(l-1)}\right) ^T\), \(\mathbf {g}_{(l-1)}=\left( g_{(l-1)1},g_{(l-1)2},\ldots ,g_{(l-1)(l-1)}\right) ^T\), with \(g_{(l-1)j}=-\dfrac{\varepsilon _j}{\varepsilon _{l}}z_{lj}^{\prime \prime }+b_{jl}q_{ll}\).

Now, considering the first (\(n-1\)) equations of the system (12), it follows that

where \(\tilde{\mathbf {q}}_{n}(1)=\mathbf {0}\; \text {and}\;\tilde{\mathbf {q}}_{n}(0)\) remains to be chosen.

Furthermore, decomposing \(\tilde{\mathbf {q}}_{n}\) in a similar way to (9), we obtain

where \(\mathbf {y}_{n-1}=\left( y_{(n-1)1},y_{(n-1)2},\ldots ,y_{(n-1)(n-1)}\right) ^T\) is the solution of the problem

\(\mathbf {z}_{n-1}=\left( z_{(n-1)1},z_{(n-1)2},\ldots ,z_{(n-1)(n-1)}\right) ^T\) is the solution of the problem

and \(\mathbf {q}_{n-1}=\left( q_{(n-1)1},q_{(n-1)2},\ldots ,q_{(n-1)(n-1)}\right) ^T\) is the solution of the problem

Now choose \(\mathbf {q}_{n-1}(0)\) so that its \((n-1)\mathrm{th}\) component is zero (i.e. \(q_{(n-1)(n-1)}(0)=0\)).

Problem (18) is similar to the problem (11). Using the estimates (13)–(14), the solution of the problem (18) satisfies the following bound for \(0\le k\le 3\).

Using (21) and Lemma 2.2 in [10], the solution of the problem (19) satisfies

and from (19), for \(1\le k\le 3\),

Now, using Theorem 1 and (23), the following estimate holds:

By the choice of \(q_{(n-1)(n-1)}(0)\), from (9) and (17), it is clear that \(v_{n-1}(0)=\gamma _{n-1}=y_{n(n-1)}(0)+\varepsilon _nz_{n(n-1)}(0)+\varepsilon _n^2y_{(n-1)(n-1)}(0)+\varepsilon _n^2\varepsilon _{n-1}z_{(n-1)(n-1)}(0)\). Also, the estimates (21)–(24) imply that

Proceeding in a similar way, one can derive singularly perturbed systems of l equations, \(l = n-2,\; n-3,\ldots ,\; 2,\;1\),

with \(\tilde{\mathbf {q}}_{l+1}(1)=\mathbf {0}\;\text {and}\;\tilde{\mathbf {q}}_{l+1}(0),\) to be chosen.

Now, decomposing \(\tilde{\mathbf {q}}_{l+1}\) in a similar way to (9), we obtain

where \(\mathbf {y}_{l}=\left( y_{l1},y_{l2},\ldots ,y_{ll}\right) ^T\) and \(\mathbf {z}_l=\left( z_{l1},z_{l2},\ldots ,z_{ll}\right) ^T\) satisfy

respectively and \(\mathbf {q}_l=\left( q_{l1},q_{l2},\ldots ,q_{ll}\right) ^T\) is the solution of the problem

We choose \(\mathbf {q}_l(0)\) so that its \(l\mathrm{th}\) component is zero (i.e. \(q_{ll}(0)=0\)).

From (28) it is clear that, for \(0\le k\le 3\),

Using (31) in (29), \(\Vert \mathbf {z}_l\Vert \le C\left( 1+\varepsilon _{l+1}^{-1}\right) \prod _{i=l+2}^n\varepsilon _i^{-2}\) and for \(1\le k\le 3\),

Now, using Theorem 1 for \(\mathbf {q}_l\), we obtain

Since \(q_{ll}(0)=0\), it is clear that

Also, the estimates (31)–(33) imply that

Thus, by the choice made for \(\gamma _{n},\;\gamma _{n-1},\ldots ,\gamma _{2},\;\gamma _{1}\), the solution \(\mathbf {v}\) of the problem (7) satisfies the following bound for \(1 \le i\le n\) and \(0\le k\le 3\)

2.1.2 Estimates for the Bounds on the Singular Components and Their Derivatives

Let \(\mathscr {B}_i(x),\; 1\le i\le n,\) be the layer functions defined on [0, 1] as

Theorem 3

Let \( \mathbf {w}(x)\) be the solution of (8), then for x \(\in \overline{\varOmega }\) and \(1\le i\le n\) the following estimates hold.

Proof

Consider the barrier function \(\mathbf {\phi }=(\phi _1,\phi _2,\ldots ,\phi _n)^T\) defined by \(\phi _i(x)=C\mathscr {B}_n(x),\) \(1\le i\le n.\) Put \(\mathbf {\psi }^{\pm }(x)=\mathbf {\phi }(x)\pm \mathbf {w}(x)\), then for sufficiently large C, \(\mathbf {\psi }^{\pm }(0)\ge \mathbf {0},\) \(\mathbf {\psi }^{\pm }(1)\ge \mathbf {0}\) and \(L\mathbf {\psi }^{\pm }(x)\le \mathbf {0}\). Using Lemma 1, it follows that, \(\mathbf {\psi }^{\pm }(x)\ge \mathbf {0}\). Hence, estimate (37) holds. From (8), for \(1\le i\le n\)

where \(g_i(x) = \displaystyle \sum _{ j=1}^{n}b_{ij}(x)w_j(x)\). Let \(\mathscr {A}_i(x)=\displaystyle \int _0^x a_i(s)ds\), then solving (41) leads to

Using Theorem 1 for \(\mathbf {w}\), \(|w_i^\prime (0)|\le C\varepsilon _i^{-1}\). Further, from the inequalities \(\exp \big (-(\mathscr {A}_i(x)-\mathscr {A}_i(t))/\varepsilon _i\big ) \le \exp \big (-\alpha (x-t)/\varepsilon _i\big )\) for \(t\le x\) and \(|g_i(t)|\le C \mathscr {B}_n(t)\), it is clear that

Using integration by parts, it is not hard to see that

Differentiating (41) once leads to

Then,

Using \(|w_i^{\prime \prime }(0)|\le C\varepsilon _i^{-2}\) and \(|h_i(t)|\le C \displaystyle \sum _{k=1}^{n}\varepsilon _k^{-1} \mathscr {B}_k(t)\), it follows that

Using the bounds given in (42) and (44) in (43), (40) can be derived.

As the estimates of the derivatives are to be used in the different segments of the piecewise uniform Shishkin meshes, the estimates are improved using the layer interaction points as given below.

2.1.3 Improved Estimates for the Bounds on the Singular Components and Their Derivatives

For \(\;\mathscr {B}_i\), \(\mathscr {B}_j,\) each \(\;i,j, \;1 \le i < j \le n\;\) and each \(\;s=1,2\;\) the point \(\;x^{(s)}_{i,j}\;\) is defined by

Lemma 3

For all \(\,i,j\,\) such that \(\;1 \le i < j \le n\;\) and \(s=1,2 \;\) the points \(\;x_{i,j}^{(s)}\;\) exist, are uniquely defined and satisfy the following inequalities

In addition, the following ordering holds

Proof

The proof is similar to that of Lemma 2.3.1 in [8].
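The layer interaction points can be computed in closed form once the layer functions are fixed. The sketch below is only illustrative and rests on two assumptions that are not spelled out in the text as extracted here: that the layer functions are \(\mathscr {B}_i(x)=\exp (-\alpha x/\varepsilon _i)\), and that \(x^{(s)}_{i,j}\) is the point where \(\varepsilon _i^{-s}\mathscr {B}_i\) and \(\varepsilon _j^{-s}\mathscr {B}_j\) coincide. The function names and the value \(\alpha =1\) are hypothetical.

```python
import math


def scaled_layer(eps: float, alpha: float, s: int, x: float) -> float:
    """eps^(-s) * B(x), with the ASSUMED layer function B(x) = exp(-alpha*x/eps)."""
    return eps ** (-s) * math.exp(-alpha * x / eps)


def interaction_point(eps_i: float, eps_j: float, alpha: float, s: int) -> float:
    """Point where eps_i^(-s) B_i(x) = eps_j^(-s) B_j(x), for eps_i < eps_j.

    Taking logarithms of the assumed defining relation gives
        alpha * x * (1/eps_i - 1/eps_j) = s * ln(eps_j / eps_i).
    """
    if not 0 < eps_i < eps_j:
        raise ValueError("expected 0 < eps_i < eps_j")
    return s * math.log(eps_j / eps_i) / (alpha * (1.0 / eps_i - 1.0 / eps_j))


# Perturbation parameters used in the numerical examples; alpha = 1 is assumed.
eps = [5 ** -4, 3 ** -4, 2 ** -5]
points = {(i + 1, j + 1, s): interaction_point(eps[i], eps[j], 1.0, s)
          for s in (1, 2) for i in range(3) for j in range(i + 1, 3)}
```

Under these assumptions, to the left of \(x^{(s)}_{i,j}\) the scaled layer function with the smaller parameter dominates, and to the right the one with the larger parameter dominates, which is how the points are used in the proof of Theorem 4.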

Consider the following decomposition of \(w_i(x)\)

where the components \(\;w_{i,q}\;\) are defined as follows.

and, for each \(\;q,\;\;n-1 \ge q \ge i\),

and, for each \(\;q,\;\;i-1 \ge q \ge 2\),

with \(p_{i,q}=w_i-\displaystyle \sum _{k=q+1}^{n} w_{i,k}\)

and

Theorem 4

For each \(\,q\,\) and \(\;i,\;\;1 \le q \le n,\;\;1 \le i \le n\;\) and all \(\;x \in \overline{\varOmega },\;\) the components in the decomposition (48) satisfy the following estimates.

Proof

Differentiating (49) thrice,

Then for \(x\in [0,x^{(2)}_{n-1,n})\), using Theorem 3,

Since \(x_{k,n}^{(2)}\le x_{n-1,n}^{(2)}\) for \(k<n\), using (46) \(\varepsilon _k^{-2}\mathscr {B}_k(x^{(2)}_{n-1,n})\le \varepsilon _n^{-2}\mathscr {B}_n(x^{(2)}_{n-1,n})\) and hence

For \(x\in [x^{(2)}_{n-1,n},1]\),

As \(x\ge x^{(2)}_{n-1,n}\), using (46) \(\varepsilon _k^{-2}\mathscr {B}_k(x)\le \varepsilon _n^{-2}\mathscr {B}_n(x)\) and hence for \(x\in [x^{(2)}_{n-1,n},1]\)

From (49) and (50), it is not hard to see that for each \(q,\;n-1 \ge q \ge i\) and \(x\in [x^{(2)}_{q,q+1},1]\), \(w_{i,q}(x)=p_{i,q}(x)=w_i(x)-\displaystyle \sum _{k=q+1}^{n} w_{i,k}(x)=w_i(x)-w_i(x)=0.\) Differentiating (50) thrice, on \(x\in [0,x^{(2)}_{q-1,q})\)

For \(x\in [x^{(2)}_{q-1,q},x^{(2)}_{q,q+1})\), using Lemma 3,

From (50) and (51), it is not hard to see that for each \(q,\;i-1 \ge q \ge 2\) and \(x\in [x^{(1)}_{q,q+1},1]\), \(w_{i,q}(x)=0.\) Differentiating (51) thrice on \(x\in [0,x^{(1)}_{q-1,q})\)

For \(x\in [x^{(1)}_{q-1,q},x^{(1)}_{q,q+1})\), using Lemma 3,

From (51) and (52), it is not hard to see that \(w_{i,1}(x)=0\) for \(x\in [x^{(1)}_{1,2},1]\) and for \(x\in [0,x^{(1)}_{1,2})\), \(|w_{i,1}^{\prime \prime \prime }(x)|\le |w_i^{\prime \prime \prime }(x)| \le C\varepsilon _i^{-2}\varepsilon _1^{-1} \mathscr {B}_1(x).\) Since \(w_{i,q}^{\prime \prime }(1)=0\), for \(q<n\), it follows that for any \(x\in [0,1]\) and \(i>q\),

Hence,

Similar arguments lead to

and

3 Numerical Method

To solve the BVP (1)–(2), a numerical method comprising a classical finite difference (CFD) scheme and a piecewise uniform Shishkin mesh fitted on the domain [0, 1] is suggested.

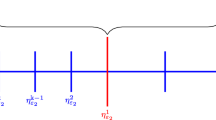

3.1 Shishkin Mesh

A piecewise uniform Shishkin mesh with N mesh-intervals is now constructed. The mesh \(\;\overline{\varOmega }^N\;\) is a piecewise uniform mesh on \(\;[0,1]\;\) obtained by dividing [0, 1] into \(n+1\) mesh-intervals as \([0,\tau _1]\cup [\tau _1,\tau _2]\cup \dots \cup [\tau _{n-1},\tau _n]\cup [\tau _n,1].\) Transition parameters \(\tau _r,\;1\le r\le n\), are defined as \(\tau _{n} = \min \displaystyle \left\{ \frac{1}{2},\;2\frac{\varepsilon _n}{\alpha }\ln N\right\} \) and, for \(\;r=n-1,\,\dots \,1\), \(\tau _{r}=\min \displaystyle \left\{ \frac{r\tau _{r+1}}{r+1},\;2\frac{\varepsilon _r}{\alpha }\ln N\right\} .\) On the sub-interval \(\;[\tau _n,1], \;\frac{N}{2}+1\;\) mesh-points are placed uniformly and on each of the subintervals \(\;[\tau _r,\tau _{r+1}),\;\;r=n-1,\,\dots \, 1,\) a uniform mesh of \(\;\frac{N}{2n}\;\) mesh-points is placed. A uniform mesh of \(\;\frac{N}{2n}\;\) mesh-points is placed on the sub-interval \([0,\tau _1).\)
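The construction above translates directly into a short routine. The following is a minimal sketch, assuming that N is divisible by 2n so that each fine subinterval receives \(N/(2n)\) mesh intervals and \([\tau _n,1]\) receives \(N/2\); the function names and the value \(\alpha =1\) in the usage lines are illustrative choices, not taken from the paper.

```python
import math
from typing import List, Tuple


def transition_parameters(eps: List[float], alpha: float, N: int) -> List[float]:
    """Return [tau_1, ..., tau_n] following the recursive definition in the text."""
    n = len(eps)
    tau = [0.0] * (n + 1)  # tau[r] holds tau_r; index 0 is unused
    tau[n] = min(0.5, 2.0 * eps[n - 1] / alpha * math.log(N))
    for r in range(n - 1, 0, -1):
        tau[r] = min(r * tau[r + 1] / (r + 1),
                     2.0 * eps[r - 1] / alpha * math.log(N))
    return tau[1:]


def shishkin_mesh(eps: List[float], alpha: float, N: int) -> Tuple[List[float], List[float]]:
    """Return the N + 1 mesh points and the transition parameters."""
    n = len(eps)
    if N % (2 * n) != 0:
        raise ValueError("this sketch assumes N is divisible by 2n")
    tau = transition_parameters(eps, alpha, N)
    breaks = [0.0] + tau + [1.0]
    # N/(2n) intervals on each of the n fine pieces, N/2 on [tau_n, 1].
    counts = [N // (2 * n)] * n + [N // 2]
    x = [0.0]
    for left, right, m in zip(breaks[:-1], breaks[1:], counts):
        h = (right - left) / m
        for k in range(1, m):
            x.append(left + k * h)
        x.append(right)  # use the exact end point to avoid rounding drift
    return x, tau


# Parameters of the numerical examples, with alpha = 1 assumed for illustration.
mesh, tau = shishkin_mesh([5 ** -4, 3 ** -4, 2 ** -5], 1.0, 384)
```

When every \(\varepsilon _r\) is large enough that the first argument of each minimum is selected, the same routine returns the uniform mesh with step \(N^{-1}\).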

The Shishkin mesh is coarse in the outer region and becomes finer and finer in the inner (layer) regions. From the above construction, it is clear that the transition points \(\;\tau _r,\;r=1,\dots , n,\;\)are the only points at which the mesh-size can change and that it does not necessarily change at each of these points.

If each of the transition parameters \(\;\tau _r,\;r=1,\dots , n,\;\) takes the left-hand value in its defining minimum, the Shishkin mesh \(\;\overline{\varOmega }^N\;\) becomes the classical uniform mesh with \(\;\tau _r = \frac{r}{2n},\;r=1,\dots , n,\;\) and hence the step size is \(\;N^{-1}\;\).

The following notations are introduced: \(\;h_j = x_j-x_{j-1}\) and if \(\;x_j=\tau _r,\;\) then \(\;h_r^- = x_j-x_{j-1},\;\;h_r^+ = x_{j+1}-x_j,\;\;J = \{\tau _r: h_r^+ \ne h_r^-\}.\) Let \(H_r=2n\,N^{-1}(\tau _r-\tau _{r-1}),\;2\le r\le n\) denote the step size in the mesh interval \((\tau _{r-1},\tau _r]\). Also, \(H_1 = 2\, n N^{-1}\tau _1\) and \(H_{n+1}=2\,N^{-1} (1-\tau _n)\). Thus, for \(\;1 \le r \le n-1,\;\) the change in the step size at the point \(\;x_j = \tau _r\;\) is

where \(d_r = \frac{r\tau _{r+1}}{r+1}-\tau _r\) with the convention \(\;d_n =0,\) when \(\tau _n=1/2 .\;\) The mesh \(\;\overline{\varOmega }^N\;\) becomes a classical uniform mesh when \(\;d_r = 0\;\) for all \(\;r=1,\;\dots ,\;n\;\) and \(\tau _r \le C\,\varepsilon _r \ln N, \; \;\; 1 \le r \le n.\) Also \(\tau _r=\frac{r}{s}\tau _{s}\;\;\mathrm {when}\;\; d_r=\dots =d_s =0, \; 1 \le r \le s \le n.\)
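The quantities \(H_r\) and \(d_r\) can be tabulated from the transition parameters alone. The sketch below follows the formulas stated above; the function names are hypothetical, and setting \(d_n=\frac{1}{2}-\tau _n\) is an assumption chosen to agree with the stated convention \(d_n=0\) when \(\tau _n=1/2\).

```python
from typing import List


def step_sizes(tau: List[float], N: int) -> List[float]:
    """Return [H_1, ..., H_{n+1}] for transition parameters tau = [tau_1, ..., tau_n]."""
    n = len(tau)
    H = [2 * n * tau[0] / N]                                        # H_1
    H += [2 * n * (tau[r] - tau[r - 1]) / N for r in range(1, n)]   # H_2, ..., H_n
    H.append(2 * (1 - tau[-1]) / N)                                 # H_{n+1}
    return H


def d_values(tau: List[float]) -> List[float]:
    """Return [d_1, ..., d_n]; d_n = 1/2 - tau_n is an ASSUMED extension."""
    n = len(tau)
    # tau[r] is tau_{r+1} and tau[r - 1] is tau_r in zero-based indexing.
    d = [r * tau[r] / (r + 1) - tau[r - 1] for r in range(1, n)]
    d.append(0.5 - tau[-1])
    return d


# Uniform case for n = 2 and N = 8: tau_r = r / (2n).
H_uniform = step_sizes([0.25, 0.5], 8)
d_uniform = d_values([0.25, 0.5])
```

In this uniform case all \(d_r\) vanish and every step size equals \(N^{-1}\), in line with the remark above.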

3.2 Discrete Problem

To solve the BVP (1)–(2) numerically the following upwind classical finite difference scheme is applied on the mesh \(\overline{\varOmega }^N\).

where \(\mathbf {U}(x_j)=(U_1(x_j),U_2(x_j),\ldots ,U_n(x_j))^T\) and for \(1\le j\le N-1,\)

with
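For readers who want to experiment, the following is a minimal sketch of an upwind solver on an arbitrary mesh. It assumes the scheme has the form \(\varepsilon _i\delta ^2U_i+a_iD^+U_i-\sum _j b_{ij}U_j=f_i\) with Dirichlet boundary values, which is inferred from the operators \(\delta ^2\) and \(D^+\) used elsewhere in the paper rather than quoted from it. The linear system is solved by a block Gauss–Seidel iteration over the components with a tridiagonal (Thomas) solve for each; this is a choice made for the sketch, since the paper does not say how the system is solved. All function and parameter names are hypothetical.

```python
from typing import Callable, List

Fn = Callable[[float], float]


def thomas(lower: List[float], diag: List[float],
           upper: List[float], rhs: List[float]) -> List[float]:
    """Solve a tridiagonal system; lower[0] and upper[-1] are ignored."""
    m = len(diag)
    c, d = [0.0] * m, [0.0] * m
    c[0], d[0] = upper[0] / diag[0], rhs[0] / diag[0]
    for k in range(1, m):
        denom = diag[k] - lower[k] * c[k - 1]
        c[k] = upper[k] / denom
        d[k] = (rhs[k] - lower[k] * d[k - 1]) / denom
    sol = [0.0] * m
    sol[-1] = d[-1]
    for k in range(m - 2, -1, -1):
        sol[k] = d[k] - c[k] * sol[k + 1]
    return sol


def solve_upwind(x: List[float], eps: List[float], a: List[Fn],
                 b: List[List[Fn]], f: List[Fn],
                 left: List[float], right: List[float],
                 sweeps: int = 200, tol: float = 1e-12) -> List[List[float]]:
    """Assumed scheme: eps_i*delta^2 U_i + a_i*D^+ U_i - sum_j b_ij U_j = f_i."""
    n, N = len(eps), len(x) - 1
    # Zero initial guess in the interior, boundary values at both ends.
    U = [[left[i]] + [0.0] * (N - 1) + [right[i]] for i in range(n)]
    for _ in range(sweeps):
        change = 0.0
        for i in range(n):
            lower, diag, upper, rhs = [], [], [], []
            for j in range(1, N):
                hm, hp = x[j] - x[j - 1], x[j + 1] - x[j]
                lo = 2 * eps[i] / (hm * (hm + hp))
                up = 2 * eps[i] / (hp * (hm + hp)) + a[i](x[j]) / hp
                dg = -(lo + up) - b[i][i](x[j])
                # Coupling terms use the latest values of the other components.
                r = f[i](x[j]) + sum(b[i][k](x[j]) * U[k][j]
                                     for k in range(n) if k != i)
                if j == 1:
                    r -= lo * U[i][0]
                if j == N - 1:
                    r -= up * U[i][N]
                lower.append(lo); diag.append(dg); upper.append(up); rhs.append(r)
            new = thomas(lower, diag, upper, rhs)
            change = max(change, max(abs(new[j - 1] - U[i][j]) for j in range(1, N)))
            U[i][1:N] = new
        if change < tol:
            break
    return U
```

The iteration relies on the assumption \(\sum _j b_{ij}\ge \beta >0\) with negative off-diagonal entries, which makes each component sweep a contraction; a direct block-tridiagonal solve would be an equally valid alternative.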

4 Numerical Results

In this section, a discrete maximum principle, a discrete stability result and the almost first-order convergence of the proposed numerical method are established.

Lemma 4

(Discrete Maximum Principle) Assume that the vector valued mesh function \(\mathbf {\psi }(x_j)=(\psi _1(x_j),\psi _2(x_j),\ldots ,\psi _n(x_j))^T\) satisfies \(\mathbf {\psi }(x_0) \ge \mathbf {0}\) and \(\mathbf {\psi }(x_N)\ge \mathbf {0}\). Then \(L^N\mathbf {\psi }(x_j) \le \mathbf {0}\) for \(1\le j\le N-1\) implies that \(\mathbf {\psi }(x_j) \ge \mathbf {0}\) for \(0 \le j \le N.\)

Lemma 5

(Discrete Stability Result) If \(\mathbf {\psi }(x_j)=(\psi _1(x_j),\psi _2(x_j),\ldots ,\psi _n(x_j))^T\) is any vector valued mesh function defined on \(\overline{\varOmega }^N,\) then for \(1\le i\le n\) and \(0\le j \le N\),

4.1 Error Estimate

Analogous to the continuous case, the discrete solution \(\mathbf {U}\) can be decomposed into \(\mathbf {V}\) and \(\mathbf {W}\) as defined below.

Lemma 6

Let \(\mathbf {v}\) be the solution of (7) and \(\mathbf {V}\) be the solution of (63), then

Proof

For \( 1\le j\le N-1\),

By standard local truncation error estimates based on Taylor expansions,

Since \((x_{j+1}-x_{j-1}) \le CN^{-1}\), by using (35),

As \(\mathbf {v}\) and \(\mathbf {V}\) agree at the boundary points, using Lemma 5,

To estimate the error in the singular component \((\mathbf {W}-\mathbf {w})\), the mesh functions \(B_i^N(x_j)\) for \(1\le i\le n\) on \(\overline{\varOmega }^N\) are defined by

with \(B_i^N(x_0)=1.\) It is to be observed that \(B_i^N\) are monotonically decreasing.

Lemma 7

The singular components \(W_i\), \(1\le i\le n\), satisfy the following bound on \(\overline{\varOmega }^N\):

Proof

Consider the following vector valued mesh functions on \(\overline{\varOmega }^N\),

where \(\mathbf {e}\) is the n-vector \(\mathbf {e}=(1,1,\ldots ,1)^T\).

Then for sufficiently large C, \(\mathbf {\psi }^{\pm }(x_0) \ge \mathbf {0}\), \( \mathbf {\psi }^{\pm }(x_N) \ge \mathbf {0}\) and \(L^N \mathbf {\psi }^{\pm }(x_j) \le \mathbf {0},\) for \(1\le j\le N-1\). Using Lemma 4, \(\mathbf {\psi }^{\pm }(x_j)\ge \mathbf {0}\) on \(\overline{\varOmega }^N,\) which implies that

Lemma 8

Assume that \(d_r=0,\;\text {for}\; r=1, 2, \ldots , n.\) Let \(\mathbf {w}\) be the solution of (8) and \(\mathbf {W}\) be the solution of (64). Then

Proof

By standard local truncation error estimates based on Taylor expansions,

where the norm is taken over the interval \({[x_{j-1},x_{j+1} ]}\).

Since \(d_r=0\), the mesh is uniform, \(h=N^{-1}\) and \(\varepsilon _k^{-1}\le C \ln N.\) Then,

Consider the barrier function \(\mathbf {\phi }=(\phi _1(x_j),\phi _2(x_j),\ldots ,\phi _n(x_j))^T\) given by

where \(\gamma \) is a constant such that \(0<\gamma < \alpha \),

It is not hard to see that, \(0\le Y_k(x_j) \le 1,\;\;\; D^+Y_k(x_j)\le -\frac{\gamma }{\varepsilon _k}\exp (-\gamma x_{j+1}/\varepsilon _k)\) and \( (\varepsilon _k\delta ^2+\gamma D^+)Y_k(x_j)=0. \) Hence,

Consider the discrete functions

Then for sufficiently large C, \(\mathbf {\psi }^{\pm }(x_0) > \mathbf {0}\), \(\mathbf {\psi }^{\pm }(x_N)\ge \mathbf {0}\) and \(L^N\mathbf {\psi }^{\pm }(x_j)\le \mathbf {0}\) on \(\varOmega ^N\).

Using Lemma 4, \(\mathbf {\psi }^{\pm }(x_j)\ge \mathbf {0}\) on \(\overline{\varOmega }^N\). Hence \(|(\mathbf {W}-\mathbf {w})_i(x_j)|\le CN^{-1}\ln N\) for \(1\le i\le n\), which implies that

Lemma 9

Let \(\mathbf {w}\) be the solution of (8) and \(\mathbf {W}\) be the solution of (64); then

Proof

This is proved for each mesh point \(\;x_j \in (0,1)\;\) by dividing (0, 1) into \(n+1\) subintervals (a) \((0,\tau _1),\) (b) \([\tau _1,\tau _2),\) (c) \([\tau _m,\tau _{m+1})\) for some \(\;m,\; 2 \le m \le n-1\;\) and (d) \(\;[\tau _n,1).\;\)

For each of these cases, an estimate for the local truncation error is derived and a barrier function is defined. Lastly, using these barrier functions, the required estimate is established.

Case (a): \( x_j \in (0,\tau _1)\).

Clearly \(\;x_{j+1} - x_{j-1} \;\le \; C \varepsilon _1 N^{-1}\ln N.\;\) Then, by standard local truncation error estimates based on Taylor expansions, the following estimates hold for \(x_j \in (0,\tau _1)\) and \(1\le i\le n.\)

Consider the following barrier functions for \(x_j \in (0,\tau _1)\) and \(1\le i\le n.\)

Case (b): \( x_j \in [\tau _1,\tau _2)\).

There are 2 possibilities: Case (b1): \(\mathbf{\;d_1 = 0\;}\) and Case (b2): \(\mathbf{\;d_1 > 0.\;}\)

Case (b1): \(\mathbf{\;d_1 = 0\;}\)

Since the mesh is uniform in \(\;(0,\tau _2),\;\) it follows that \(x_{j+1} - x_{j-1} \;\le \; C\,\varepsilon _1 N^{-1}\ln N,\) for \(x_j\in [\tau _1,\tau _2)\) . Then,

Now for \(x_j\in [\tau _1,\tau _2)\;\text {and}\;1\le i\le n\), define,

Case (b2): \({\mathbf{d}}_{\mathbf{1}} > \mathbf{0}.\;\)

For this case, \(\;x_{j+1} - x_{j-1} \;\le \; C\,\varepsilon _2 N^{-1}\ln N\;\), and hence for \(x_j \in [\tau _1,\tau _2)\)

By standard local truncation error estimates based on Taylor expansions,

Now using Theorem 4, it is not hard to derive that

and for \(2\le i\le n\),

Define

and for \(2\le i\le n\),

Case (c): \(x_j\in (\tau _m,\tau _{m+1}]\). There are 3 possibilities:

Case (c1): \(d_1=d_2=\dots =d_m=0,\)

Case (c2): \(d_r>0\) and \(\; d_{r+1}=\;\dots \;=d_m=0\) for some \(r,\; 1 \le r \le m-1\) and

Case (c3): \(d_m>0\).

Case (c1): \(d_1=d_2=\dots =d_m=0,\)

Since \(\;\tau _1=C\tau _{m+1}\;\) and the mesh is uniform in \(\;(0,\tau _{m+1}),\;\) it follows that, for \(x_j\in (\tau _m,\tau _{m+1}]\), \(\;x_{j+1} - x_{j-1} \;\le \; C\,\varepsilon _1 N^{-1}\ln N\;\) and hence

For \(1\le i\le n\),

Case (c2): \(d_r>0\) and \(\; d_{r+1}=\;\dots \;=d_m=0\) for some \(r,\; 1 \le r \le m-1\)

Since, \(\;\tau _{r+1} = C \tau _{m+1}\), the mesh is uniform in \(\;(\tau _{r},\tau _{m+1}),\;\) it follows that \(\;x_{j+1} - x_{j-1} \;\le \; C\,\varepsilon _{r+1}N^{-1}\ln N,\) for \( x_j\in (\tau _m,\tau _{m+1}].\;\)

By standard local truncation error estimates based on Taylor expansions,

Now using Theorem 4, it is not hard to derive that for \( i\le r\)

and for \( i > r\)

Now define, for \( i\le r\)

and for \( i > r\)

Case (c3): \(d_m>0\)

Replacing r by m in the arguments of Case (c2) and using \(x_{j+1} - x_{j-1}\le C\varepsilon _{m+1}N^{-1}\ln N,\) the following estimates hold for \(x_j\in (\tau _m,\tau _{m+1}].\)

For \( i\le m\),

and for \( i > m\)

For \( i\le m\), define,

and for \( i > m\)

Case (d): There are 3 possibilities.

Case (d1): \(d_1=\;\;\dots \;\;=d_n=0,\)

Case (d2): \(d_r>0\) and \(\; d_{r+1}=\;\dots \;=d_n=0\) for some \(r,\; 1 \le r \le n-1\) and

Case (d3): \(d_n>0\).

Case (d1): \(d_1=\;\;\dots \;\;=d_n=0,\)

The mesh is uniform in [0, 1] and the result is established in Lemma 8.

Case (d2): \(d_r>0\) and \(\; d_{r+1}=\;\dots \;=d_n=0\) for some \(r,\; 1 \le r \le n-1\)

In this case, from the definition of \(\tau _n\) it follows that \(\;x_{j+1} - x_{j-1} \;\le \; C\,\varepsilon _{r+1}N^{-1}\ln N\;\) and arguments similar to those of Case (c2) lead to the following estimates for \(x_j \in (\tau _n,1]\).

For \( i\le r\),

and for \( i > r\)

Define the barrier functions \(\phi _i\) for \( i\le r\) by

and for \( i > r\)

Case (d3): \(d_n>0\)

Now \( \tau _n = 2\dfrac{\varepsilon _n}{\alpha }\ln N\). Then on \((\tau _n,1]\),

Hence,

Now, using the estimates derived and the barrier functions \(\phi _i,\; 1\le i\le n,\) defined for all the four cases, the main proof is split into two cases.

Case 1: \(d_n>0\). Consider the following discrete functions for \(0\le j\le N/2\),

where \(\mathbf {\phi }(x_j)=(\phi _1(x_j),\phi _2(x_j),\ldots ,\phi _n(x_j))^T\).

For sufficiently large C, it is not hard to see that

Then by Lemma 4, \(\mathbf {\psi }^{\pm }(x_j)\ge \mathbf {0}\) for \(0 \le j \le N/2.\) Consequently,

Hence, (84) and (86) imply that, for \(d_n>0\)

Case 2: \(d_n=0\). Consider the following discrete functions for \(0\le j\le N\),

For sufficiently large C, it is not hard to see that

Then by Lemma 4, \(\mathbf {\psi }^{\pm }(x_j)\ge \mathbf {0}\) for \(0 \le j \le N.\) Hence, for \(d_n=0\),

Theorem 5

Let \(\mathbf {u}\) be the solution of the problem (1)–(2) and \(\mathbf {U}\) be the solution of the problem (61)–(62), then,

Proof

From the Eqs. (7), (8), (63) and (64), we have

5 Numerical Illustrations

Example 1

Consider the following boundary value problem for the system of convection–diffusion equations on (0, 1)

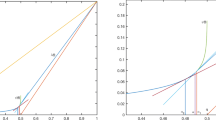

The above problem is solved using the suggested numerical method and a plot of the approximate solution for \(N=1536, \varepsilon _1=5^{-4}, \varepsilon _2=3^{-4}, \varepsilon _3=2^{-5}\) is shown in Fig. 1.

Fig. 1 Approximate solution of Example 1

Parameter uniform error constant and the order of convergence of the numerical method for \(\varepsilon _1=\eta /625,\; \varepsilon _2=\eta /81\; \text {and}\; \varepsilon _3=\eta /32\) are computed using a variant of the two mesh algorithm suggested in [6] and are shown in Table 1.
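The idea behind a two-mesh estimate of the order of convergence can be sketched as follows. This is a basic double-mesh computation, not the specific variant of [6] used by the authors: it assumes the fine-mesh solution is computed on the mesh obtained by bisecting every interval of the coarse mesh, so that coarse node j coincides with fine node 2j. The function names and the sample values are hypothetical.

```python
import math
from typing import Sequence


def two_mesh_difference(U_coarse: Sequence[Sequence[float]],
                        U_fine: Sequence[Sequence[float]]) -> float:
    """Maximum over components and coarse nodes of |U^N(x_j) - U^{2N}(x_{2j})|."""
    diff = 0.0
    for Uc, Uf in zip(U_coarse, U_fine):
        if len(Uf) != 2 * (len(Uc) - 1) + 1:
            raise ValueError("fine mesh must bisect the coarse mesh")
        diff = max(diff, max(abs(uc - Uf[2 * j]) for j, uc in enumerate(Uc)))
    return diff


def estimated_order(D_N: float, D_2N: float) -> float:
    """Computed order p = log2(D^N / D^{2N}) from two successive differences."""
    return math.log2(D_N / D_2N)


# Illustrative values only: one component on 2 and 4 mesh intervals.
D_example = two_mesh_difference([[0.0, 1.0, 2.0]],
                                [[0.0, 0.4, 1.25, 1.5, 2.0]])
p_example = estimated_order(0.5, 0.25)
```

To obtain parameter-uniform quantities, such differences are typically maximised over a range of values of the perturbation parameters (here, over \(\eta \)) before the order is computed.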

It is found that the parameter \(\varepsilon _i\) for any i, influences the components \(u_1, u_2, \ldots ,u_{i}\) and causes multiple layers for these components, in the neighbourhood of the origin and has no significant influence on \(u_{i+1},u_{i+2},\ldots ,u_n\). The following examples illustrate this.

Example 2

Consider the following boundary value problem for the system of convection–diffusion equations on (0, 1)

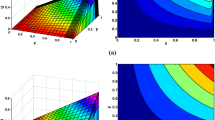

The above problem is solved using the suggested numerical method. As \(u_2(0)\ne u_{02}(0)\) and \(u_i(0)=u_{0i}(0),\;i=1,3\) for this problem, a layer of width \(O(\varepsilon _2)\) is expected to occur in the neighbourhood of the origin for \(u_1\) and \(u_2\) but not for \(u_3\). Further, \(u_1\) cannot have an \(\varepsilon _1\) layer or an \(\varepsilon _3\) layer. The plot of an approximate solution of this problem for \(N=384, \varepsilon _1=5^{-4}, \varepsilon _2=3^{-4}, \varepsilon _3=2^{-5}\) is shown in Fig. 2a–d.

Fig. 2 Approximation of solution components of Example 2

Example 3

Consider the following boundary value problem for the system of convection–diffusion equations on (0, 1)

The above problem is solved using the suggested numerical method. As \(u_3(0)\ne u_{03}(0)\) and \(u_i(0)=u_{0i}(0),\;i=1,2\) for this problem, a layer of width \(O(\varepsilon _3)\) is expected to occur in the neighbourhood of the origin for \(u_1,u_2\) and \(u_3\). Further, \(u_1\) will not have an \(\varepsilon _1\) layer or an \(\varepsilon _2\) layer. Similarly, \(u_2\) will not have an \(\varepsilon _2\) layer. The plot of an approximate solution of this problem for \(N=384, \varepsilon _1=5^{-4}, \varepsilon _2=3^{-4}, \varepsilon _3=2^{-5}\) is shown in Fig. 3a–d.

Fig. 3 Approximation of solution components of Example 3

6 Conclusions

The method presented in this paper is an extension of the work done for the scalar problem in [4]. The novel estimates of the derivatives of the solution help to establish the desired error bound for the classical finite difference scheme when applied on any of the \(2^n\) Shishkin meshes.

The examples are given to help the reader observe the effect of coupling under the assumed ordering of the perturbation parameters.

References

[1] Roos, H.-G., Stynes, M., Tobiska, L.: Robust Numerical Methods for Singularly Perturbed Differential Equations. Springer Series in Computational Mathematics, Berlin (1996)

[2] Morton, K.W.: Numerical solution of convection diffusion problems. Appl. Math. Math. Comput. (1995)

[3] Kokotovic, P.V.: Applications of singular perturbation techniques to control problems. SIAM Rev. 26(4), 501–550 (1984)

[4] Miller, J.J.H., O’Riordan, E., Shishkin, G.I.: Fitted Numerical Methods for Singular Perturbation Problems. World Scientific Publishing Co., Singapore (1996)

[5] Doolan, E.P., Miller, J.J.H., Schilders, W.H.A.: Uniform Numerical Methods for Problems with Initial and Boundary Layers. Boole Press, Dublin, Ireland (1980)

[6] Farrell, P.A., Hegarty, A., Miller, J.J.H., O’Riordan, E., Shishkin, G.I.: Robust Computational Techniques for Boundary Layers. Chapman and Hall/CRC Press, Boca Raton (2000)

[7] Saravana Sankar, K., Miller, J.J.H., Valarmathi, S.: A parameter uniform fitted mesh method for a weakly coupled system of two singularly perturbed convection-diffusion equations. Math. Commun. 24, 193–210 (2019)

[8] Paramasivam, M., Valarmathi, S., Miller, J.J.H.: Second order parameter-uniform convergence for a finite difference method for a singularly perturbed linear reaction-diffusion system. Math. Commun. 15(2), 587–612 (2010)

[9] Linss, T.: Analysis of an upwind finite difference scheme for a system of coupled singularly perturbed convection-diffusion equations. Computing 79(1), 23–32 (2007)

[10] Valarmathi, S., Miller, J.J.H.: A parameter-uniform finite difference method for singularly perturbed linear dynamical systems. Int. J. Numer. Anal. Model. 7(3), 535–548 (2010)

Acknowledgements

The first author wishes to acknowledge the financial support extended through Junior Research Fellowship by the University Grants Commission, India, to carry out this research work. Also the first and the third authors thank the Department of Science & Technology, Government of India for the support to the Department through the DST-FIST Scheme to set up the Computer Lab where the computations have been carried out.

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Kalaiselvan, S., Miller, J.J.H., Sigamani, V. (2021). Fitted Mesh Methods for a Class of Weakly Coupled System of Singularly Perturbed Convection–Diffusion Equations. In: Sigamani, V., Miller, J.J.H., Nagarajan, S., Saminathan, P. (eds) Differential Equations and Applications. ICABS 2019. Springer Proceedings in Mathematics & Statistics, vol 368. Springer, Singapore. https://doi.org/10.1007/978-981-16-7546-1_9

DOI: https://doi.org/10.1007/978-981-16-7546-1_9

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-7545-4

Online ISBN: 978-981-16-7546-1

eBook Packages: Mathematics and Statistics (R0)