Abstract

The paper investigates the event-triggered sub-optimal control problem for two-time-scale systems with unknown slow dynamics. Specifically, a composite event-triggered optimal controller design is proposed for the decoupled slow and fast subsystems with different triggering conditions. To handle the unknown dynamics, the actor and critic networks are utilized to approximate the optimal controller employing tuning laws applied to the weights. The results indicate that a system employing the proposed event-triggered mechanism can achieve asymptotic stability and afford a sub-optimal controller. Furthermore, Zeno behavior is excluded with rigorous proof, and several simulation examples and comparative studies demonstrate the method’s effectiveness.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In many practical systems, such as electric circuits [1], power systems [2], and spacecraft systems [3], slow and fast dynamics coexist and are coupled. These systems can be modeled as two-time-scale systems (TTSSs), where the optimal control objective of TTSSs is to minimize a predefined performance index concerning the control energy, state variables, or other indicators. Such problems have always been a hot topic in the control field for TTSSs. To address these issues, it is often required to deal with Hamilton–Jacobi–Bellman (HJB) equations, which are hard to solve analytically. As a result, methods focusing on finding an approximate solution have become popular to realize near-optimal control for practical needs [4, 5]. Another concern is that applying a traditional optimal control of single-time-scale systems directly to TTSSs may pose ill-conditioned numerical and high-dimensional issues. Therefore, some effective methods have been proposed [6,7,8,9,10,11,12,13,14]. For instance, when the fast dynamics decay rapidly, the controller can be designed solely based on the slow dynamics [6]. However, when the fast dynamics are not globally asymptotically stable, we can decompose the original problem into two reduced subproblems with separate time scales and then design a composite control strategy [7,8,9,10,11,12,13]. In [14], the authors proposed a feasible alternative to utilizing a fixed-point iteration method.

The optimal control methods for TTSSs mentioned above [7, 8, 10,11,12, 14] assume that the system’s dynamics are completely known. However, precisely parameterized system models are always hard to obtain in real applications. Based on the practical background of industrial thickening and flotation processes, model-free optimal operational [15, 16] and optimal tracking [17] control strategies have been suggested. Utilizing prior model identification, the unknown system dynamics are estimated through a multi-time-scale dynamic neural network, and then the near-optimal controller is designed [18, 19]. However, this method is still based on precise modeling. To directly obtain the optimal solution with unknown slow dynamics, an adaptive composite near-optimal control strategy is designed in the framework of adaptive dynamic programming [20]. Nevertheless, the controllers are continuously updated in real-time [15,16,17,18,19,20], posing implementation challenges due to the physical devices and communication limitations.

To overcome the difficulty of continuous updating, the event-triggered mechanism has been proposed so that the controller updates when necessary [21], enhancing the efficient usage of restricted resources. The event-triggered optimal control (ETOC) offers a tradeoff between performance and resources. Thus, a sub-optimal performance is obtained if selecting a less-resourced oriented controller, such as an event-triggered controller [22]. Furthermore, the upper bound of the performance index [23, 24], unmatched uncertainties [25] and double-channel communication [26] have also been studied within the scope of ETOC. The case of nonlinear stochastic systems is considered in [27]. However, related works on ETOC are mainly about single-time-scale systems. For example, considering the unknown dynamics, an event-triggered adaptive dynamic programming method has been proposed based on the policy and value iteration algorithms [28]. The adaptive neural network (NN) control or the adaptive fuzzy control is also a valid tool to deal with nonparametric uncertainties [29, 30]. Additionally, a model-free Q-learning-based algorithm has been designed where the Q-function is considered a parametrization for the state and the input [31]. Finally, some related works concern event-triggered mechanism (ETM) for TTSSs [6, 9, 32, 33], but optimality and unknown dynamics have not been simultaneously considered.

Based on the above discussions, ETOC for TTSSs with unknown dynamics is still an open issue to be investigated, as methods for single-time-scale systems [23,24,25, 31, 34] do not apply to TTSSs directly. The main challenge is that the errors of the slow and the fast states are always coupled, which is not the case in single-time scale systems.

This paper considers unknown slow dynamics and proposes a Q-learning ETOC algorithm for TTSSs. The Q-learning method is an adaptive dynamic programming method using the actor-critic structure. An action-depend function that estimates the expected performance under a given action and a given state through the collected input/output data selects the optimal action based on the previous observations. A significant advantage of such a strategy is that it does not require accurately modeling the systems. The main contribution of this paper is twofold: (1) Considering the event-triggered sub-optimal control for TTSSs, as existing event-triggered controllers for TTSSs mainly focus on stabilization [6, 9, 32, 33] and neglect performance indicators during the control process, or focus on using a continuous-time optimal controller without an event-triggering mechanism [18,19,20]. (2) Proposing an online approach without knowing the system’s slow dynamics, thus not requiring prior offline training [20] or identifying unknown dynamics [18, 19]. The optimal controller can be approximated directly with the state, input information, and partially known dynamics.

The remainder of the paper is organized as follows. Section 2 presents the background concerning the decomposition of slow and fast modes and the optimal control theory for TTSSs. Section 3 designs the event-triggered sub-optimal control with an unknown slow dynamic and analyzes the stability of the original system. Section 4 demonstrates the efficiency of the proposed strategy using numerical examples, and finally, Sect. 5 concludes this work.

Notation: \(I_{n}\) denotes the identity matrix of dimension n, \(\parallel \!\cdot \!\parallel \) represents the Euclidean norm for vectors or the spectral norm for matrices, \({{\mathcal {R}}}_{+}\) is the set of positive real numbers, and \({{\mathcal {N}}}_{+}\) denotes the set of positive integers. For a matrix \(A\in {{\mathcal {R}}}^{n \times m}\), \(vec(A) =[a^T_1, a^T_2, \dots , a^T_m ]^T\), where \(a_i \in R^n\) is the ith column of \(A,\ i=1,2,\dots m\). \(tr(A)=\sum _{i = 1}^{n}a_{ii}\) indicates the trace, \( \otimes \) indicates the Kronecker product, \({\bar{\lambda }}(R),\, {\underline{\lambda }}(R)\) denote the maximum and minimum eigenvalue of matrix R, respectively, and \(O(\cdot )\) is the order of magnitude defined in [10]: vector function \(f(t,\varepsilon )\in {{\mathcal {R}}}^n\) is said to be \(O(\varepsilon )\) over an interval \([t_1,t_2]\) if there exist positive constants k and \(\varepsilon ^*\) such that

2 Problem formation

Consider the following linear continuous-time TTSSs described by

where \(x(t) \in {{\mathcal {R}}}^{n_{1}}\) is the slow state vector, \(z(t)\in {{\mathcal {R}}}^{n_{2}}\) is the fast state vector, \(u(t)\in {{\mathcal {R}}}^{m}\) and \(y(t)\in {{\mathcal {R}}}^{p}\) are the input and output, respectively. \(\varepsilon \) represents a small positive singular perturbation parameter. \(A_{11}\in {{\mathcal {R}}}^{n_{1}\times n_{1}},\ A_{12}\in {{\mathcal {R}}}^{n_{1}\times n_{2}},\ A_{21}\in {{\mathcal {R}}}^{n_{2}\times n_{1}},\ A_{22}\in {{\mathcal {R}}}^{n_{2}\times n_{2}}, B_{1}\in {{\mathcal {R}}}^{n_{1}\times m},\ B_{2}\in {{\mathcal {R}}}^{n_{2}\times m}\).

The objective is adjusting u to minimize the performance index

Let \(A=\begin{bmatrix} A_{11} &{} A_{12}\\ \frac{1}{\varepsilon } A_{21} &{} \frac{1}{\varepsilon }A_{22} \end{bmatrix}, B=\left[ \begin{array}{c} {B_1} \\ \frac{1}{\varepsilon }B_2 \end{array}\right] , C=[C_1, C_2].\) \(X=\left[ \begin{array}{c} x \\ z \end{array}\right] \), and the initial state \(X(t_0)=X_0=\left[ \begin{array}{c} x^0 \\ z^0 \end{array}\right] \). From the optimal control theory, the exact optimal control for the original system (1) with a performance index (2) is

where matrix P is the solution of the following Riccati equation

and the corresponding performance index is \(J_{opt}=\frac{1}{2}X_0^TPX_0\).

However, the traditional method cannot solve (4) directly due to numerical illness. An effective way is to divide the original system into two separate time-scale subsystems and design the controllers separately. Additionally, to reduce resource waste and consider the triggering factor, the controller only updates at the triggering instants, i.e., \(\{t_x^k\}\) and \(\{t_z^k\}\), \(k \in {{\mathcal {N}}}_{+}\), for the slow and fast subsystems, respectively. The input is written as

where \(K_1\in {{\mathcal {R}}}^{m\times n_{1}}\), \(K_2\in {{\mathcal {R}}}^{m\times n_{2}}\), and the sampled states \( {\hat{x}},\ {\hat{z}}\) are defined as

The errors between the sampled states and the real states are defined as

For convenience, we denote \(E=(e_1^T \ e_2^T)^T,\ K=(K_1 \ K_2)\).

Assumption 1

\(A_{22}\) is nonsingular.

Under Assumption 1, the following Chang transformation [8] will be used to decouple the slow and the fast modes.

where \(T=\begin{bmatrix} I_{n}-\varepsilon ML &{} -\varepsilon M\\ L &{} I_{m} \end{bmatrix}\), \(\xi \) and \(\eta \) denote the decoupled slow and fast dynamics of the original system, respectively. \(L\in {{\mathcal {R}}}^{n_{2}\times n_{1}}\) and \(M\in {{\mathcal {R}}}^{n_{1}\times n_{2}}\) are the solutions of the following equations,

where \(\varLambda _{11}=A_{11}+B_{1}K_{1},\,\varLambda _{12}=A_{12}+B_{1}K_{2},\,\varLambda _{21}=A_{21}+B_{2}K_{1},\,\varLambda _{22}=A_{22}+B_{2}K_{2}\).

Following the Chang transformation, the dynamics of the original system (1) with the input (5) can be transformed into

Let

With transformation (6), the input \(u_d\) can be divided into two parts, \(u_{d}=u_{sd}+u_{fd}\), concerning \({\hat{\xi }}\) and \({\hat{\eta }}\), respectively

where \(K_0\) and \(K_2\) will be separately designed for the slow and fast subsystems, respectively. By substituting \(\xi \), \(\eta \) with x and z by (6) and compare the parameters in (5), we obtain \(K_1=K_0+K_2L\). From [7], \(L=A_{22}^{-1}(A_{21}+B_2K_0)+O(\varepsilon )\). Let \(A_0=A_{11}-A_{12}A_{22}^{-1}A_{21},\ B_0=B_1-A_{12}A_{22}^{-1}B_2,\, \varLambda _{0}=A_0+B_0K_0\). In [9] and [10], it is pointed out that for \(O(\varepsilon )\) approximations, \(\varLambda _{11}-\varLambda _{12}L\) can be approximated by \(\varLambda _{0}\) and L can be approximated by \(\varLambda _{22}^{-1}\varLambda _{21}\). Along with (6), the transformed variables \(\xi , \eta \) can be approximated by

Remark 1

Note that although the anti-diagonal elements of the system matrix are zero in (7), the errors \(e_1\) and \(e_2\) coexist in the dynamic of \(\xi \) and \(\eta \), respectively, posing difficulties in the design of ETM.

When the inputs are updated continuously, \(E=0\), and if Assumption 1 holds, the transformed system (7) can be approximated by two subsystems. Then, the original optimal control problem of system (1) with performance index (2) can be converted to two subproblems as follows [20].

(1) For the approximated slow subsystem

where \(C_0=C_1-C_2A_{22}^{-1}A_{21}, D_0=-C_2A_{22}^{-1}B_2\). The objective is adjusting \(u_s\) to minimize the performance index

The reward \(r_s(t)\) can also be written in a standard quadratic form

where \(R_0=R+D_0^{T}D_0, \ G_s=C_0^T(I-D_0R_0^{-1}D_0^T) C_0, \ u_{sc}=u_s+R_0^{-1}D_0^TC_0x_s\). Note that \(I-D_0R_0^{-1}D_0^T =(I+D_0R^{-1}N_0^T)^{-1}>0\).

We define the value function as

Let \(A_s=A_0-B_{0}R_0^{-1}D_0^TC_0\). From the optimal control theory [11], if the Riccati equation

has a positive semidefinite stabilizing solution \(P_s\), then the optimal control can be constructed by

Accordingly, the optimal control of the slow subsystem (11) is

Since the system (11) is linear, under the optimal control \(u^*_s\), the optimal value function can be denoted in a quadratic form, that is

For the approximated slow subsystem, we define Hamilton function as

Under the optimal control \(u^*_{s}(t)\), it has

From the form of \(u_s^*\) in (15), it can be deduced that

(2) For the approximated fast subsystem

The objective is adjusting \(u_f\) to minimize the performance index

where \(G_f=C_2^TC_2\).

If the Riccati equation

has a positive semidefinite stabilizing solution \(P_f\), then the optimal control of the fast subsystem (18) is \(u_{f}^*=-R^{-1}B_2^TP_fz_f\).

Since the system (18) is linear, under the optimal control \(u^*_f\), the optimal value function can be denoted in a quadratic form, that is

Similarly, the Hamilton function of the fast subsystem is defined as

Then under the optimal control \(u^*_{f}(\eta )\)

With the obtained \(P_s\) and \(P_f\) by solving the above two subproblems, the optimal composite input of the original system (1) with the performance index (2), operating on the actual states x and z is

Assumption 2

The triples \((A_0,B_0,C_0)\) and \((A_{22},\, B_2, C_2)\) are stabilizable-detectable.

Lemma 1

[7] For the dynamics of the transformed system (7) with \(E=0\) and approximated by reduced order subsystems (11) and (18), the approximated states satisfy \(\xi (t)=x_s(t)+O(\varepsilon )x+O(\varepsilon )z, \ \eta (t)=z_f(t)+O(\varepsilon )x\). If Assumptions 1 and 2 hold, then (14) and (19) have unique positive semi-definite solutions \(P_s\) and \(P_f\), respectively. The composite feedback control \(u_c^*\) in (21) is \(O(\varepsilon ) \) close to \(u_{opt}\) defined in (3). Further, \(J_c\), the value of the performance index obtained by (2) with the controller \(u_c^*\), satisfies

\(\square \)

A brief proof of Lemma 1 is presented in the appendix part.

From Lemma 1 and considering the triggering factor, the optimal control for the slow subsystem \(u_{sd}^*\) is according to (15)

and the optimal control for the fast subsystem \( u_{fd}^*\) is

Hence, the event-triggered sub-optimal composite control is

For consistency with the standard quadratic form, we define \(u_{sdc}\) for the slow subsystem, which is an adaption form of \(u_{sc}\) in (12) as follows

3 Main results

In this part, we consider the situation where the slow dynamics are unknown, i.e., \(A_{11},A_{12}, B_1\) in (1) are unknown. So the solution of the Riccati equation (14) cannot be obtained directly. To deal with this problem, a Q-learning method based on available data is proposed.

For convenience, denote \(B_0'=B_{1}-M(B_2+\varepsilon LB_1)\). Referring to the time-triggered Hamiltonian function (16), (7) and their approximations, the event-triggered Hamiltonian functions are rewritten as follows.

Combining (9) leads to

Similarly,

With the derivation results in (25), define a Q function

Let

Then (26) can be written as

Since the vector \(W_c\) is an unknown constant vector, an approximated critic weight vector \({\hat{W}}_c\in {{\mathcal {R}}}^{n_{1}^2+3mn_1}\) is utilized. Accordingly, we define the following function \({\hat{Q}}\) to estimate Q.

Similarly, the actor weight \({\hat{W}}_a \in {{\mathcal {R}}}^{n_{1}}\) is introduced to construct the controller

the tuning laws of \({\hat{W}}_{c}\) and \({\hat{W}}_{a}\) will be introduced later.

Remark 2

Note that the unknown parameters are all integrated in \(W_c\), so \(Q(\xi ,u_{sd},KE)\) is considered as two separate parts, i.e., unknown and available information. Additionally, the second part of \(W_c\), \(vec(P_sB_0+C_0^TD_0)\), contains all the unknown information needed in the optimal controller in (22).

The overall controller is

As for the fast subsystem, the optimal controller, i.e., \(K_2=-R^{-1}B_2^TP_f\), is chosen.

According to the performance index defined in (12) and the Hamilton function (17), under optimal control (24), it can be obtained that

where T denotes a small fixed time interval.

Define the error related to the critic network as

Define the error related to the actor as

where \({\hat{W}}_{cp}=W_c(n_1^2+1:n_1^2+mn_1)\). Let \(\sigma =\varPhi _s(t)-\varPhi _s(t-T)\), \(K_c=\frac{1}{2}\Vert e_c\Vert ^2\), \(K_a=\frac{1}{2}\Vert e_a\Vert ^2\).

The tuning law for the weight of the critic network is designed as

where the convergence speed can be adjusted through \(\alpha _{c}\in {{\mathcal {R}}}_+\).

Due to the event-triggered mechanism, the controller only updates at triggering instants. The tuning law for the weight of the actor is designed as

where \(\alpha _{a}\in {{\mathcal {R}}}_+\) presents the convergence speed.

Let \(V_1(\xi )=\frac{1}{2}\xi ^{T} P_s \xi , \ V_2(\eta )=\frac{1}{2}\varepsilon \eta ^T P_f \eta \),

Apply (17) to replace \(P_sA_0+A_0^TP_s\)

From (9), (12) and the equation \(\xi ^TP_sB_0=-{u_{sc}^*}^T R_0\), it can be rewritten as

The performance index of the fast subsystem satisfies

Similarly, for \(V_2\), apply (20) to take the place of \(P_fA_{22}+A_{22}^TP_f\).

Since \(e_f=Le_1+e_2\), then

When \(\varepsilon \) is small enough, there exist \(G_s-O(\varepsilon )>G_s'>0, G_f-O(\varepsilon )>G_f'>0\). Let

By applying following inequalities to (34)

where \(\alpha _1, \alpha _2, \alpha _3\) and \(\alpha _4\) are parameters to be designed later.

Choose \(c_1,\ c_2,\ c_3\) and \(c_4\) which satisfies

\(c_1,\ c_2,\ c_3,\ c_4\) are assumed positive and \(c_2,\ c_3\), and \(c_4\) can be positive by adjusting the value of \(\alpha _1, \alpha _2, \alpha _3\), and \(\alpha _4\). The positivity of \(c_1\) will be easy to obtain after proving that \(l_1\) tends to zero as \({\tilde{W}}_a\) tends to the origin.

\(\mathbf {Theorem\ 1}.\) Consider the two-time-scale system (1) and suppose that Assumptions 1, 2, and (35) hold. The weights of the networks tune as (29)-(31) and the controller is designed as (28). As long as the corresponding controller updates when either of the following conditions is satisfied,

then, there exists \(\varepsilon ^{*}>0\), such that for all \(\varepsilon \in (0,\varepsilon ^*]\) and any bounded initial conditions \(x^0,\, z^0\), the states of (1) converge asymptotically to the origin, and the controller is sub-optimal. In addition, the optimal cost is guaranteed by

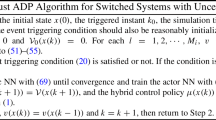

Figure 1 illustrates a flowchart of the implementation process, where (37) and (38) are the two triggering conditions for the slow and fast subsystems, respectively. Once they are triggered, the input controller will be updated.

Proof

First, we prove that the system is asymptotically stable. According to previous analysis,

The performance index of the fast subsystem satisfies

Note that the sum of terms concerning errors in \({\dot{V}}_1,\ {\dot{V}}_2\) satisfies

Then (34) can be rewritten as

If the system triggers when (37) or (38) is satisfied, then \( {\dot{V}}_1+{\dot{V}}_2<0\), and thus the states of the original system asymptotically converge to the origin.

Next, we prove that the parameters are convergent.

We define the weight estimation errors as

Then

We set \(V_3({\tilde{W}}_a)=\frac{1}{2}tr\{{\tilde{W}}_a^T{\tilde{W}}_a\}\) and define \( \bigtriangleup V_3=V_3({\tilde{W}}_a^{+})-V_3({\tilde{W}}_a)\). Then from the dynamic of \({\tilde{W}}_a\) in (40)

Since \(tr(AB)=tr(BA)\), it gets

By utilizing the following inequalities

where \(\beta _b\) can be arbitrary positive real number.

Then, it can be deduced that

Choosing proper \(\alpha _a, \beta _b\) to ensure \(1-\frac{\Vert 1-\alpha _a\Vert \beta _b{\bar{\lambda }}(R_0^{-1})^2}{2} -\frac{\alpha _a}{2}> 0\), then \(\bigtriangleup V_3< 0\) when \({\tilde{W}}_{a}\) is beyond a certain range than \({\tilde{W}}_{cp}\). Since \({\tilde{W}}_{cp}\), as part of \({\tilde{W}}_{c}\), converges to the origin, then \(\xi ^T(\frac{\Vert 1-\alpha _a\Vert }{2\beta _b}+\frac{\alpha _a}{2}{\bar{\lambda }}(R_0^{-1})^2){\tilde{W}}_{cp}{\tilde{W}}_{cp}^T)\xi \) tends to converge to the origin. So \(\hat{W_a}\) is near optimal. In addition, it implies that \(l_1\) becomes smaller and \(c_1>0\) in (36) is easier to satisfied.

Third, we prove that Zeno behavior is excluded.

For the slow mode, from (37), the triggering condition satisfies \(\frac{\Vert e_1(t)\Vert }{\Vert \xi (t)\Vert }\ge \frac{c_1}{c_2}\). For convenience, we denote \(s(t)=\frac{\Vert e_1(t)\Vert }{\Vert \xi (t)\Vert }\). From the dynamics of the subsystems (7), since the parameters are bounded, \({{\mathcal {F}}}_1\in {{\mathcal {R}}}_+\) satisfy \( \Vert {\dot{\xi }}(t)\Vert \le {{\mathcal {F}}}_1(\Vert \xi (t)\Vert +\Vert e_1(t)+\Vert e_2(t)\Vert )\) and \( \Vert {\dot{e}}_1(t)\Vert \le {{\mathcal {F}}}_1(\Vert \xi (t)\Vert +\Vert e_1(t)\Vert +\Vert e_2(t)\Vert )\). Then, it is further deduced that

As the states are convergent, it has \({{\mathcal {C}}}_1\in {{\mathcal {R}}}_+\) satisfying \({{\mathcal {C}}}_1\ge {{\mathcal {F}}}_1(1+\frac{\Vert {e}_1(t)\Vert }{\Vert \xi \Vert })\frac{\Vert {e}_2(t)\Vert }{\Vert \xi \Vert }\), then \(\frac{dt}{ds}\le \frac{1}{{{\mathcal {F}}}_1(1+s)^2+{{\mathcal {C}}}_1}\). Since s(t) changes from 0 to a number larger than \(\frac{c_1}{c_2}\) between the two triggering instants, then the triggering interval \(\tau _s=t_{x}^{k+1}-t_{x}^k>\int _0^{\frac{c_1}{c_2}}\frac{1}{{{\mathcal {F}}}_1(1+s)^2+{{\mathcal {C}}}_1}ds>0\).

Similarly, for the fast mode, \({{\mathcal {F}}}_2,{{\mathcal {C}}}_2\in {{\mathcal {R}}}_+\) satisfies \( \Vert {\dot{\eta }}(t)\Vert \le {{\mathcal {F}}}_2(\Vert \eta (t)\Vert +\Vert e_2(t)\Vert )\), \( \Vert {\dot{e}}_2(t)\Vert \le {{\mathcal {F}}}_2(\Vert \xi (t)\Vert +\Vert e_2(t)\Vert )\) and \({{\mathcal {C}}}_2\ge {{\mathcal {F}}}_2(1+\frac{\Vert {e}_2(t)\Vert }{\Vert \eta \Vert })\frac{\Vert {e}_1(t)\Vert }{\Vert \eta \Vert }\), the triggering interval \(\tau _f=t_{z}^{k+1}-t_{z}^k>\int _0^{\frac{c_3}{c_4}} \frac{1}{{{\mathcal {F}}}_2(1+s)^2+{{\mathcal {C}}}_2}ds>0\). Thus Zeno behavior is excluded.

Regarding the performance index employing the proposed event-triggered mechanism, [24] provides a similar proof. Under a precise optimal control \(u_{opt}\) in (3) and the sub-optimal event-triggered control \(u_{d}^*\), the performance indexes have the relation described in (39).

This completes the proof. \(\square \)

4 Simulation

This part conducts three simulation examples involving a four-dimensional system, a practical motor example, and a comparison study. The slow mode dynamics are assumed unknown, which means that \(A_{11}, A_{12}, B_1\) are unavailable.

(1)The effectiveness of the proposed method

Consider a four-dimensional system, the parameters are \(A_{11}={\left[ \begin{array}{cc} 0 &{} 0.4 \\ 0 &{} 0 \end{array} \right] }, A_{12}= {\left[ \begin{array}{cc} 0 &{} 0 \\ 0.345 &{} 0 \end{array} \right] }, A_{21}={\left[ \begin{array}{cc} 0 &{} -0.524 \\ 0 &{} 0 \end{array} \right] }, A_{22}={\left[ \begin{array}{cc} -0.465 &{} 0.262 \\ 0 &{} -1 \end{array} \right] },\ B_{1}\!=\! {\left[ \begin{array}{cc}0&0\end{array}\right] }^{T}\!, \ B_{2}= {\left[ \begin{array}{cc}0&1\end{array}\right] }^{T}\). We set \(\varepsilon =0.1\), \(C_1=C_2=\left[ 1\ 1\right] \), \(R=1\). The initial states are \(x(0)={\left[ \begin{array}{cc}6&5\end{array}\right] }^{T},\ z(0)={\left[ \begin{array}{cc}4&2\end{array}\right] }^{T}\) and \(\alpha _a=0.5, \alpha _c=2\). The trajectories of the states, triggering time, and inputs are depicted in Fig. 2. Furthermore, to highlight the approximate optimality of the proposed method, the optimal control is also presented in Fig. 2. By solving the Riccati equations of the slow and fast subsystems (14), and (19) respectively, we obtain \(u_c^*=[-2.0000 -2.5632]x+[-0.5953 -0.5205]z\) from (21). The solid line depicts the case under the proposed event-triggered control with unknown slow dynamics, while the dotted line illustrates that under optimal control. From Fig. 2, we observe that the states converge asymptotically to the origin. The minimum triggering intervals for the slow and fast dynamics are \(0.6730\ \mathrm {s}\) and \(0.0320\ \mathrm {s}\), respectively, and the average triggering intervals are \(1.0288\ \mathrm {s}\) and \(0.3182\ \mathrm {s}\).

(2) A DC-motor example

In this simulation case, a practical DC-motor example is considered, as described in [20], where the electromagnetic transient is regarded as a fast mode, while the torque response is rather slow. The dynamics of such systems can be modeled as

where \(J_m\) is the equivalent moment of inertia, \(\omega \) is the angular speed, b is the equivalent viscous friction coefficient, and \(k_m\) and \(k_b\) are the torque and back electromotive force, respectively. i, u and \(R_0\) represent the armature current, voltage, and resistance, respectively, \(L_i\) is a small inductance constant, and the system parameters are as follows: \(J_m = 0.093\ \mathrm{{kgm^2}}, k_m = 0.7274\ \mathrm{{Nm}}, k_b = 0.6\ \mathrm{{vs/rad}}, L_i = 0.006\ \mathrm {H}, R_0 = 0.6\ {\Omega }, b = 0.008\). Rewriting the latter formula in the form of (1), the parameters are \(\varepsilon =0.06\), \(A_{11}=-0.086, A_{12} =7.82, A_{21} =-0.6, A_{22}=-0.6, B_1=0, B_2=1\). Let \(C_1=2, C_2=1.\) We set \(\alpha _a=0.5, \alpha _c=20\). The calculated optimal control for the slow mode is \(u_{sc}^*(\xi )=-0.4741\xi \), and the initial values of the states are chosen as \(x_1(0)=6, x_2(0)=2\). At \(t=0.75\ \mathrm {s}, {\hat{W}}_a=-0.4769\). The error between the actor network weight with the proposed method and the one using the optimal control, tunes as Fig. 3 shows, indicating that \({\tilde{W}}_a\) is near the origin after \(0.75\ \mathrm {s}\), suggesting that the control gain is near-optimal. The triggering condition is also depicted in Fig. 3.

(3) Comparing ETM and the proposed method

This simulation aims to compare our work and [9]. The system parameters are the ones in the first case. In [9], the controller is also in the form of (5) where the control gains are \(K_1= [-0.6861 -\!1.1784]\), \(K_2=-R^{-1}B_2^{T}P_f=[-0.5953 -\!0.5205]\). Accordingly, \(K_0=[-0.2\ -0.1]\). The triggering condition is designed as \(\Vert e_{i}(t)\Vert \ge c_0+c_{1}e^{-{\alpha _{0}} t} \) where \(i=1,2\), \(e_{1}(t)\) and \(e_{2}(t)\) denote the error between the sampled and the real states of the slow and fast modes, respectively. Here \({\alpha _0}=0.1, c_0=0.05\) and \(c_{1}=0.2\) as [9].

Regarding the proposed method, to be consistent with the basic parameters in [9], the initial control gains, \(K_0, K_1\), and \(K_2\) are equal. Table 1 compares the triggering numbers, minimum triggering intervals and average triggering intervals between the proposed method and [9] for \(90\ \mathrm {s}\). The trajectories of the states and the triggering time are depicted in Fig. 4 along with the performance index. The latter figure highlights that in \(90\ \mathrm {s}\), although the triggering numbers of the proposed method are much smaller than ETM in [9], the suggested method has a lower cost. In [9], because of the triggering mechanisms, after some time, the threshold of the triggering condition has a minor change. The states influence the error’s rate of change, posing a critical impact on the triggering frequency (Fig. 3), while in the proposed method, the thresholds change together with the states. The triggering frequency in [9] is relatively high at the beginning and reduces when time goes to infinity, while for the proposed method, the change of the triggering frequency is rather gentle.

5 Conclusion

This paper presents an event-triggered sub-optimal control for TTSSs with unknown slow dynamics. Based on the singular perturbation theory, a composite event-triggered controller is designed. Additionally, we utilize a Q-function as a critic network in the form of the product of available information and unknown parameters. The controller is constructed using an actor network and is updated to be sub-optimal under the proposed event-triggered mechanism. Furthermore, we prove that the triggering time intervals are strictly positive, and thus, Zeno behavior is excluded. Moreover, the system’s global asymptotic stability is guaranteed. Future work will address TTSSs with actuator failure or fully unknown parameters for more general applications.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Podhaisky, H., Marszalek, W.: Bifurcations and synchronization of singularly perturbed oscillators: an application case study. Nonlinear Dyn. 69, 949–959 (2012)

Ganjefar, S., Mohammadi, A.: Variable speed wind turbines with maximum power extraction using singular perturbation theory. Energy 106, 510–519 (2016)

Bajodah, A.H.: Singularly perturbed feedback linearization with linear attitude deviation dynamics realization. Nonlinear Dyn. 53, 321–343 (2008)

Ha, M., Wang, D., Liu, D.: Event-triggered adaptive critic control design for discrete-time constrained nonlinear systems. IEEE Trans. Syst. Man Cybern. Syst. 50(9), 3158–3168 (2020)

Wang, N., Gao, Y., Zhao, H., Ahn, C.K.: Reinforcement learning-based optimal tracking control of an unknown unmanned surface vehicle. IEEE Trans. Neural Netw. Learn. Syst. 32(7), 3034–3045 (2021)

Abdelrahim, M., Postoyan, R., Daafouz, J.: Event-triggered control of nonlinear singularly perturbed systems based only on the slow dynamics. Automatica 52, 15–22 (2015)

Chow, J., Kokotovic, P.: A decomposition of near-optimum regulators for systems with slow and fast modes. IEEE Trans. Autom. Control 21(5), 701–705 (1976)

Chang, K.W.: Singular perturbations of a general boundary value problem. SIAM J. Math. Anal. 3(3), 520–526 (1972)

Bhandari, M., Fulwani, D.M., Gupta, R.: Event-triggered composite control of a two time scale system. IEEE Trans. Circuits Syst. II Express Briefs 65(4), 471–475 (2018)

Kokotović, P., Khalil, H.K., O’Reilly, J.: Singular perturbation methods in control: analysis and design. Society for Industrial and Applied Mathematics, USA (1999)

Anderson, B., Moore, J.B., Molinari, B.P.: Linear optimal control. IEEE Trans. Syst. Man Cybern. 93(4), 559 (1971)

Tognetti, E. S., Calliero, T. R., Morarescu, I. C., Daafouz, J.: Lmi-based output feedback control of singularly perturbed systems with guaranteed cost. In 2020 59th IEEE Conference on Decision and Control (CDC), (2020)

Babaghasabha, R., Khosravi, M.A., Taghirad, H.D.: Adaptive robust control of fully constrained cable robots: singular perturbation approach. Nonlinear Dyn. 85, 607–620 (2016)

Kodra, K., Gajic, Z.: Optimal control for a new class of singularly perturbed linear systems. Automatica 81, 203–208 (2017)

Li, J., Kiumarsi, B., Chai, T., Lewis, F.L., Fan, J.: Off-policy reinforcement learning: optimal operational control for two-time-scale industrial processes. IEEE Trans. Cybern. 47(12), 4547–4558 (2017)

Xue, W., Fan, J., Lopez, V.G., Li, J., Jiang, Y., Chai, T., Lewis, F.L.: New methods for optimal operational control of industrial processes using reinforcement learning on two time scales. IEEE Trans. Ind. Inf. 16(5), 3085–3099 (2020)

Xue, W., Fan, J., Lopez, V. G., Jiang, Y., Chai, T., Lewis, F. L.: Off-policy reinforcement learning for tracking in continuous-time systems on two time scales. IEEE Transactions on Neural Networks and Learning Systems, pp. 1–13, (2020)

Zhijun, F., Xie, W., Rakheja, S., Na, J.: Observer-based adaptive optimal control for unknown singularly perturbed nonlinear systems with input constraints. IEEE/CAA J. Autom. Sin. 4(1), 48–57 (2017)

Zhi-Jun, F., Xie, W.-F., Rakheja, S., Zheng, D.-D.: Adaptive optimal control of unknown nonlinear systems with different time scales. Neurocomputing 238, 179–190 (2017)

Yang, C., Zhong, S., Liu, X., Dai, W., Zhou, L.: Adaptive composite suboptimal control for linear singularly perturbed systems with unknown slow dynamics. Int. J. Robust Nonlinear Control 30(7), 2625–2643 (2020)

Lei, Y., Wang, Y.-W.: Irinel-Constantin Morarescu, and Romain Postoyan. Event-triggered fixed-time stabilization of two-time-scale linear systems. IEEE Trans. Autom. Control, p. 1, (2022)

Luo, B., Huang, T., Liu, D.: Periodic event-triggered suboptimal control with sampling period and performance analysis. IEEE Trans. Cybern. 51(3), 1253–1261 (2021)

Luo, B., Yang, Y., Liu, D., Huai-Ning, W.: Event-triggered optimal control with performance guarantees using adaptive dynamic programming. IEEE Trans. Neural Netw. Learn. Syst. 31(1), 76–88 (2020)

Vamvoudakis, K.G.: Event-triggered optimal adaptive control algorithm for continuous-time nonlinear systems. IEEE/CAA J. Autom. Sin. 1(3), 282–293 (2014)

Xue, S., Luo, B., Liu, D.: Event-triggered adaptive dynamic programming for unmatched uncertain nonlinear continuous-time systems. IEEE Trans. Neural Netw. Learn. Syst. 32(7), 2939–2951 (2021)

Deng, Y., Gong, M., Ni, T.: Double-channel event-triggered adaptive optimal control of active suspension systems. Nonlinear Dyn. 108, 3435–3448 (2022)

Zhang, G., Zhu, Q.: Event-triggered optimal control for nonlinear stochastic systems via adaptive dynamic programming. Nonlinear Dyn. 105, 387–401 (2021)

Zhao, F., Gao, W., Liu, T., Jiang, Z.-P.: Adaptive optimal output regulation of linear discrete-time systems based on event-triggered output-feedback. Automatica 137, 110103 (2022)

Zhang, Y., Zhang, J.-F., Liu, X.-K.: Implicit function based adaptive control of non-canonical form discrete-time nonlinear systems. Automatica 129, 109629 (2021)

Zhang, Y., Zhang, J.-F., Liu, X.-K.: Matrix decomposition-based adaptive control of non-canonical form mimo discrete-time nonlinear systems. IEEE Trans. Autom. Control, p. 1, (2021)

Vamvoudakis, K.G., Ferraz, H.: Model-free event-triggered control algorithm for continuous-time linear systems with optimal performance. Automatica 87, 412–420 (2018)

Yan, Y., Yang, C., Ma, X., Zhou, L.: Observer-based event-triggered control for singularly perturbed systems with saturating actuator. Int. J. Robust Nonlinear Control 29(12), 3954–3970 (2019)

Hua, T., Xiao, J.-W., Lei, Y., Yang, W.: Dynamic event-triggered control for singularly perturbed systems. Int. J. Robust Nonlinear Control 31(13), 6410–6421 (2021)

Guo, Z., Yao, D., Bai, W., Li, H., Renquan, L.: Event-triggered guaranteed cost fault-tolerant optimal tracking control for uncertain nonlinear system via adaptive dynamic programming. Int. J. Robust Nonlinear Control 31(7), 2572–2592 (2021)

Kucera, V.: A contribution to matrix quadratic equations. IEEE Trans. Autom. Control 17(3), 344–347 (1972)

Acknowledgements

This work is supported by the National Natural Science Foundation of China under Grants 62233006, 62173152 and 62103156 and the Natural Science Foundation of Hubei Province of China (2021CFB052).

Author information

Authors and Affiliations

Corresponding authors

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Proof of Lemma 1

For the dynamics of the transformed system (7) and approximated reduced order subsystems (11) and (18), the approximated states satisfy \(\xi (t)=x_s(t)+O(\varepsilon )x+O(\varepsilon )z, \ \eta (t)=z_f(t)+O(\varepsilon )x\). If Assumptions 1 and 2 hold, then (14) and (19) have unique positive semi-definite solutions \(P_s\) and \(P_f\), respectively. The composite feedback control \(u_c^*\) in (21) is \(O(\varepsilon ) \) close to \(u_{opt}\) defined in (3). Furthermore, \(J_c\), the value of the performance index J defined in (2) of system (1) with control input (21), satisfies

With the substitution \(K_0=R_0^{-1}(D_0^TC_0+B_0^TP_s),\ K_2=-R^{-1}B_2^TP_f \), the closed loop of the system (1) with the feedback controller (21) is

where \( A^*=\)

With the transformation (6), from [10], it has

where \(A_s=A_0-\varepsilon A_{12}A_{22}^{-1}L(A_{11}-A_{12}L), \ B_s=B_0-\varepsilon A_{12}A_{22}^{-1}LB_1,\ A_f=A_{22}+\varepsilon LA_{12}, B_f=B_2+\varepsilon LB_1.\) Compared to (11) and (18), if \( K_2\) are designed such that \(Re \lambda (A_{22}+B_2K_2)<0\), the states \(\xi \) and \(x_s\), \(\eta \) and \(z_f\) are starting from the same bounded initial conditions, respectively. Then there exists \(\varepsilon ^*>0\), \(\forall \varepsilon \in [0,\varepsilon ^*] \), \(\xi (t)=x_s(t)+O(\varepsilon )x+O(\varepsilon )z\) and \( \eta (t)=z_f(t)+O(\varepsilon )x\) hold for all finite \(t\ge t_0\).

Then we prove the condition of the existence of \(P_s,\ P_f\).

It has been proved in [35] that the stabilizing solution \(P_s, P_f\) of the Riccati equation (14), (19) exist if \((A_s, B_0, {\hat{C}}_0)\) and \((A_{22}, B_2, C_2)\) is stabilizable-detectable, respectively, where \({\hat{C}}_0^T{\hat{C}}_0=G_s=C_0^T(I-D_0R_0^{-1}D_0^T)C_0\). Since \((A_s, B_0)\) is stabilizable if and only if \((A_0, B_0)\) is stabilizable, \(I-D_0R_0^{-1}D_0^T=(I+D_0R^{-1}N_0^T)^{-1}>0\), then there exists a nonsingularly \(Q_0\) such that \((Q_0C_0)^T(Q_0C_0)=G_s\). So, \((A_s, Q_0C_0)\) is detectable if and only if \((A_0, C_0)\) is detectable.

Finally, we give a brief proof about the performance between the composite control and optimal control from [7].

With (19), the composite controller (21) can be rewritten as

where \(P_m=[P_s(B_1R^{-1}B_2^TP_f-A_{12})-(A_{21}^TP_f+C_1^TC_2)](A_{22}-B_2R^{-1}B_2^TP_f)^{-1}\).

The solution of the Riccati equation (4) concerning the exact optimal control \(P(\varepsilon )\) possesses a power series expansion at \(\varepsilon =0\), that is \(P=\begin{bmatrix} P_1 &{} \varepsilon P_2\\ \varepsilon P_2^T &{}\varepsilon P_3 \end{bmatrix}+\sum _{n = 1}^{\infty }\frac{\varepsilon ^i}{i!} \begin{bmatrix} P_1^{(i)} &{} \varepsilon P_2^{(i)}\\ \varepsilon {P_2^{(i)}}^T &{}\varepsilon P_3^{(i)} \end{bmatrix}\) and bring it to (4), it can be deduced that

Since \(J_{opt}=\frac{1}{2}{X_0}^TP{X_0}\), \(J_{c}=\frac{1}{2}{X_0}^TP_c{X_0}\) where \(P_c\) is the positive definite solution of the following function \(P_c(A-SM_c)+(A-SM_c)^TP_c=-M_c^TSM_c-C^TC\), let \(P_c-P=W\). Comparing it with (4), deduces that \(W(A-SM_c)+(A-SM_c)^TW=-(P-M_c^T)S(P-M_c)\). W can also be written in a power series form at \(\varepsilon =0\), \(W=\sum _{n = 0}^{\infty }\frac{\varepsilon ^i}{i!} \begin{bmatrix} W_1^{(i)} &{} \varepsilon W_2^{(i)}\\ \varepsilon {W_2^{(i)}}^T &{}\varepsilon W_3^{(i)} \end{bmatrix}\), then from (41) and (42), \((P-M_c^T)S(P-M_c)=O(\varepsilon ^2)\). Then \(W_j^{(0)}=0\) and \(W_j^{(1)}=0, j=1,2,3\). Then \(W=O(\varepsilon ^2)\), which implies that \(J_c=J_{opt}+O(\varepsilon ^2)\). \(\square \)

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hua, T., Xiao, JW., Liu, XK. et al. Event-triggered sub-optimal control for two-time-scale systems with unknown dynamics. Nonlinear Dyn 111, 2487–2500 (2023). https://doi.org/10.1007/s11071-022-07970-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-022-07970-x