Abstract

In this paper, two methods for parameter estimation of bilinear state-space systems with colored noise, which are expressed by ARMA model, are proposed. Using the hierarchical identification principle and gradient method, to reduce the computational cost, both the four-stage recursive least squares algorithm and the four-stage stochastic gradient algorithm are exploited by which parameter estimation error is reduced and the speed of convergence of parameters is increased. In addition, a bilinear state observer for state estimation is designed to make use of the estimated states in the four-stage recursive least squares and the four-stage stochastic gradient algorithms. Finally, a numerical example and a practical example are provided to indicate the superiority of the proposed methods. The results show that due to the data length increase, the estimation error of the parameters is reduced. Furthermore, the estimated parameters converge to the actual values in a short time.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

System identification has been the focus of many researchers in recent decades in various areas, for modeling linear [1, 2] and nonlinear systems [3,4,5]. The main goal of system identification is to utilize input/output data to obtain an appropriate model, in a way that the behavior of the identified model is as close as possible to the behavior of the actual system, as per a predefined criterion. The mathematical model thus created can be used for analysis or controller design [6,7,8,9].

Mathematical modeling is possible in several ways: analytical modeling (white-box), experimental modeling (black-box) and hybrid modeling (gray-box). In the white-box case, considering the nature of the components of the system and the physical laws governing them, mathematical models are formed between the input and the output of the system. In black-box approach, there is no information about the internal components of the system, and only by using the input /output data, a mathematical relationship is obtained between the input and the output. In the gray-box approach, the physical components of the system are distinctive; however, their values are unknown, and by using the input/output data, the unknown parameters can be determined. Several system identification methods have been proposed for linear and nonlinear systems [10, 11]. Since most industrial processes are complex and nonlinear, nonlinear systems identification has attracted a lot of attention in the past two decades. However, nonlinear system identification is much more difficult than linear systems identification [12]. In addition, due to the complexity of the systems, it is difficult to obtain physical models since the exact system model is not always available. Therefore, data-driven methods that are dependent on historical information of the system are recently developed to identify the system behavior. These methods do not require complex mathematical tools and are very useful in practice [13, 14].

Bilinear systems are a class of nonlinear systems that are widely studied and used due to their simplicity, and they can be considered as a suitable model for many physical systems [15,16,17]. In recent years, bilinear systems have drawn a significant amount of attention due to their intrinsic simplicity and wide applications [18]. The importance of such systems is due to their wide range of applications, not only in engineering but also in biology, economics and chemistry [19]. Such a system can explain many physical phenomena and it has been used in many fields, such as air conditioning control [20], immune system, heart regulator, control of carbon dioxide in the lungs and blood pressure [21,22,23]. There are a couple of methods that have been proposed for bilinear system identification, such as least-squares methods that are based on minimizing the error, i.e., in other words, the summation of square errors [24, 25], gradient methods [26, 27], maximum likelihood methods [28], iterative methods [29, 30], recursive methods [31,32,33] and error prediction methods [34]. In addition, interaction matrices approach is utilized for identification and observer design of bilinear systems [35, 36]. In [35], optimal bilinear observers are designed for bilinear state-space models, and a new method for identification of bilinear system is introduced in [36]. As can be seen in this paper, by using the interaction matrix formulation, the bilinear system state is expressed in terms of input/output measurement. In the iterative methods, to improve the estimation of the parameters, the algorithm uses all the data in every iteration and the results of the previous iteration. In these methods, the algorithm is applied once the input–output dataset is collected. In the recursive algorithms, the algorithm uses only the current data to improve the previous estimation. In [37], recursive extended least squares and maximum likelihood methods have been used to identify the bilinear system parameters. Gibson et al. [28] have provided maximum likelihood parameter estimation algorithm for bilinear system identification. Also, Kalman filtering algorithm for parameter estimation of bilinear systems has been proposed in [38]. In [39], the state-space model of bilinear systems has been converted to a transfer function by removing state variables and the recursive least squares algorithm and multi-innovation theory are used to increase the parameter estimation accuracy. In a multi-innovation identification algorithm, increasing the innovation length can increase parameter estimation accuracy and reduce the algorithm’s sensitivity to noise.

Li et al. [25] have presented a least-squares iterative algorithm to identify bilinear system parameters using the maximum likelihood. The maximum likelihood iterative least-squares algorithm can provide a more accurate estimate of bilinear systems than the iterative least-squares algorithm. In [40], an iterative algorithm based on the hierarchical principle is proposed to alleviate the complexity of computational load. The proposed algorithm can improve the accuracy of parameter estimation and reduce the computational load. In [41], to achieve a better accuracy, an algorithm using the Kalman filter and multi-innovation theory has been proposed. Both algorithms work well, and the Kalman filter-based multi-innovation recursive extended least squares algorithm has a higher parameter estimation accuracy than the Kalman filter-based recursive extended least squares algorithm. Using the principle of hierarchical identification [40] and data filtering method, a gradient iterative algorithm and a filtering-based gradient iterative algorithm have been presented in [26]. The proposed algorithms can provide very accurate estimates of bilinear systems. Two-step gradient-based iterative algorithm has less computational cost than gradient-based iterative algorithm and its convergence speed is faster than other two methods in this paper. The algorithms presented in [26] provide a better parameter estimation accuracy than the methods presented in [27]. In [42], a state filtering-based hierarchical identification algorithm has been proposed. In [43], multi-innovation-based stochastic gradient algorithm is presented by using the decomposition method. Decomposition-based multi-innovation stochastic gradient algorithm has higher accuracy than the decomposition-based stochastic gradient algorithm. Also, pursuant to hierarchical principle and data filtering, a least squares iterative algorithm is proposed for identification of bilinear systems [44]. Ding et al. [45] have provided stochastic gradient algorithm and gradient iterative algorithm to estimate the parameters of bilinear systems using an auxiliary model. Auxiliary model-based gradient iterative algorithm uses all the input–output data measured in each iteration which is more suitable than the auxiliary stochastic gradient algorithm. The auxiliary model-based gradient iterative algorithm is effective for bilinear systems in a white noise environment. With the help of subspace identification method, a data-driven design approach has been presented in [46]. In [47], the stochastic gradient algorithm using hierarchical identification principle and multi-innovation idea is developed. In addition, in [48], considering the data filtering method, an extended stochastic gradient algorithm has been proposed.

Although the recursive least-squares method is the most common estimation method among the various previously published methods and has a high convergence rate, it suffers from some drawbacks such as high computational burden. Therefore, in order to overcome this problem, other identification methods such as the principle of hierarchical identification are proposed that divide the main system into multiple subsystems with smaller dimensions to estimate unknown parameters. For example, in [25, 48], the computational load for identifying the system is high, and the hierarchical identification principle has been utilized to obtain an effective method for parameter estimation with higher computational efficiency. It has been shown that the suggested algorithms have less parameter estimation error compared to other presented algorithms.

Motivated by the above-stated concerns, a four-stage hierarchical identification algorithm is used in this paper to identify a bilinear system based on state-space equations. In this regard, a four-stage recursive least squares algorithm and a four-stage stochastic gradient algorithm are proposed for the identification of bilinear systems. To improve the computational efficiency using the hierarchical identification principle, the identification model is decomposed into four subsystems and the information vector is separately decomposed into four subvectors with smaller dimensions. In addition, an ARMA colored noise model is used in the presented model. Since only input/output data of the system is available in these algorithms, a state observer is used to estimate the system states, and then, the estimated states are used in the identification algorithm. Finally, the proposed algorithm presented for bilinear system identification is simulated and the convergence of the identified parameters is reported. The main contributions of this paper are listed as follows:

-

A four-stage recursive least squares algorithm and a four-stage stochastic gradient are proposed using the hierarchical identification principle to reduce the computational efficiency. The principle of hierarchical identification divides the main system into several subsystems with small dimensions. Also, the information vector is broken down into several information subdivisions.

-

A bilinear state observer is presented based on the Kalman filter algorithm for bilinear state-space estimation.

-

To show the high efficiency of the four-stage recursive least squares algorithm, a comparison of the computational efficiency between two recursive least squares and four-stage recursive least squares algorithm is provided.

The rest of this paper is organized as follows: In Sect. 2, the preliminary definitions, problem statement and the bilinear state-space system is presented. In Sect. 3, a four-stage recursive least squares algorithm is described. Section 4 shows the computational efficiency of the 4S-RLS algorithm. Section 5 presents a four-stage stochastic gradient algorithm. A numerical example and a practical example are presented in Sect. 6 to show the effectiveness of the proposed algorithm. Finally, the paper is ended by Sect. 7 with some concluding points.

2 Problem statement

In this section, first, a number of notations are explained. Superscript T represents the matrix transpose. \(\widehat{\rho }({t})\) is the estimation of the parameter \(\rho \) in time. I (\({I}_{n})\) represents identity matrix \(n\times n\), and \(q\) is the unit shift operator as

Figure 1 shows the state-space representation of bilinear systems. According to this figure, the bilinear system state-space model is defined as follows:

where \({\varvec{z}}(t)=[{{z}_{1}(t),{z}_{2}(t),\cdots ,{z}_{n}(t)]}^{{T}}\in {\mathbb{R}}^{n}\) is the state vector, \(\overline{u }\left(t\right)\) is the system input, \(\overline{y }\left(t\right)\) is the system output, \(\omega \left(t\right)=\frac{D\left(q\right)}{C\left(q\right)}v(t)\) is a colored noise and \(v\left(t\right)\in {\mathbb{R}}\) is a zero mean white noise. \(A\in {\mathbb{R}}^{n\times n}\), \(B\in {\mathbb{R}}^{n\times n}\), \(f\in {\mathbb{R}}^{n}\) and \(h\in {\mathbb{R}}^{1\times n}\) are the system matrices and vectors with an appropriate dimension as follows:

Using the shift operator, the polynomials \(D\left(q\right)\) and \(C\left(q\right)\) are defined as

According to the method presented in [48] and also using Eqs. (1) and (2), it can be concluded that

The parameter vector \(\rho \) is defined as:

where

and

In addition, the information vector \(\varphi \left(t\right)\) is defined as follows:

From (2), the colored noise equation can be written as

where the information vector \({\varphi }_{n}\left(t\right)\) is defined as follows:

Substituting (3) in (2) and according to the definition of information vectors, the bilinear system identification model in (1) and (2) can be expressed as:

2.1 Input–output model of bilinear system

The input–output relationship of a bilinear state-space system is obtained for the identification purpose by eliminating the state variables. By removing the state vector in Eqs. (1) and (2), the input–output relationship of the bilinear system is expressed as follows:

The following steps have been carried out to obtain (6). Therefore, using (1), one can write

Then, by using (7), the following equations are obtained directly:

Multiplying both sides of last equation of (8) by operator \(q\), we have

Replacing (9) in the last equation of (7) yields

Now, according to matrix representation of (8) and (10), one can write

In order to simplify (11), we define two vectors as follows:

Then, (11) can be written as

where

Then, Eq. (14) can be rewritten as

By substituting \(t\) with \(t-n\), we have

Replacing \({z}_{1}\left(t\right)\) in relation (2), the input–output relation of the bilinear state-space system in (1) and (2) is obtained as follows:

3 Four-stage recursive least squares algorithm

In this section, a four-stage recursive least squares algorithm is proposed to alleviate computational load, increase the convergence rate of the parameters to actual values and reduce the error simultaneously. According to the hierarchical principle, the main system is broken down into four subsystems; then, an algorithm is presented to estimate the unknown parameters of the bilinear system. Consider the following performance index:

Using the least-squares principle and minimizing the performance index, the recursive least squares algorithm can be written as

where \(R\left(t\right)\) is the covariance matrix and \({K}\left(t\right)=R\left(t\right)\varphi \left(t\right)\) is the gain vector. The first problem of identification is that only the input and output data are available. Since \(\varphi \left(t\right)\) contains unknown state variables, and \({\varphi }_{n}\left(t\right)\) consists of the noise variable \((\omega \left(t-i\right) ,i=1,2,..m)\), it is not possible to estimate the parameter \(\widehat{\rho }\left(t\right)\) with Eqs. (16)–(18). Therefore, a bilinear state observer must be designed for state estimation.

3.1 Bilinear state observer algorithm

As we know, Kalman filter algorithm is commonly used to estimate the states of linear systems. Here, in order to apply the Kalman filter for bilinear systems, Eqs. (1) and (2) should be written in the following form:

which may be considered as a Linear state-space model. Therefore, the Kalman filter can be used to design a bilinear state observecr [48].

where \({\widehat{R}}_{v}\left(t\right)=\frac{1}{t}\sum_{j=1}^{t}{[\overline{y }\left(j\right)-h\widehat{{\varvec{z}}}\left(j\right)]}^{2}\), \({K}_{z}\left(t\right)\) is the optimal vector of state observer and \({R}_{z}\left(t+1\right)\) is the covariance matrix state estimation error.

If the vectors and matrices \(A\), \(B\), \(f\) and n are unknown, the bilinear state observer in (19)–(21) cannot be used. Therefore, \(\widehat{{\varvec{z}}}\left(t\right)\) should be estimated by considering the estimated parameters.

Therefore, the parameter estimation vectors are defined as follows:

By substituting \(A\), \(B\) and \(f\) in (19)–(21) with the estimated matrices and vectors \(\widehat{A}(t)\), \(\widehat{B}(t)\) and \(\widehat{f}(t)\), we have

Thus, based on the bilinear state observer the estimates \(\widehat{{\varvec{z}}}(t)\) of the unknown states z(t) can be calculated.

Replacing an unknown state \({\varvec{z}}\left(t-i\right)\) with estimated \(\widehat{{\varvec{z}}}\left(t-i\right)\) and an unknown noise term \(\omega \left(t-i\right)\) with estimated \(\widehat{\omega }\left(t-i\right)\), the information estimation vectors are defined as:

It should be noted that the estimations of \(\omega \left(t\right)\) and \(v\left(t\right)\) are defined as:

The identification models 4 and 5 can be written in four subsystems as follows:

According to the least squares principle, by defining the criterion function, the recursive relationships are as follows:

The information vectors \({\varphi }_{z}\), \({\varphi }_{z\overline{u} }\) and \({\varphi }_{n}\) contain unknown states \(z\left(t\right)\), and Eqs. (29), (32), (35) and (38) are used to estimate unknown parameters. Therefore, the algorithm (29)–(40) cannot directly estimate the unknown parameters. Consequently, by substituting their estimations, we have the following relations:

Equations (41)–(61) consist of four recursive least-squares algorithms for the bilinear system (1) and (2). In summary, the steps of the above algorithm are given in Algorithm 1.

3.2 Convergence analysis

Assumption 1

Assume \(\{v\left(t\right)\}\) is random white noise with zero mean and abounded variance \({\sigma }^{2}\)

Lemma 1

For the four-stage recursive least squares algorithm in (41)–(61), for any c > 1, the following inequalities hold:

The proof of Lemma 1can be found in [49].

Theorem 1

For the system in (1)–(2) and the four-stage recursive least squares algorithm in (41)–(61), let

Assume that Conditions (62) and (63) hold, for any c > 1, we have

Proof

See the detailed proof in [50]. □

Theorem 2

For the identification model in Eq. (5) and the four-stage recursive least squares algorithm in Eqs. (41)–(61), assume that there are positive constants \({\alpha }_{1}\), \({\alpha }_{2}\), \({\alpha }_{3}\), \({\alpha }_{4}\), \({\beta }_{1}\), \({\beta }_{2}\), \({\beta }_{3}\), \({\beta }_{4}\) and large \(t\) such that the following persistent excitation conditions hold:

Then, four-stage recursive least squares parameter estimation errors converge to zero as t goes to infinity.

The proof is expressed in Appendix section.

4 The computational efficiency

Utilizing flops is an useful way to determine computsational efficiency [51]. Here, a flop is each operation of addition, multiplication, subtraction or division. In general, a division is presumed as a multiplication and a subtraction is presumed as an addition. Therefore, the algorithm can be represented by additions and multiplications. The number of multiplications and additions of the proposed algorithms are listed in Tables 1 and 2. In order to show the computational efficiency in the 4S-RLS algorithm, an RLS algorithm is presented for the sake of comparison. Tables 3 and 4 show that the computational load of the proposed algorithm is less than the RLS algorithm.

The flop difference between the RLS algorithm and the 4S-RLS algorithm is as follows: \(N_{2} - N_{1} = 6\left( {2n_{2} + n_{3} + n_{4} } \right)^{2} + 6\left( {2n_{2} + n_{3} + n_{4} } \right) - \left[ {6\left( {2n_{2}^{2} + n_{3}^{2} + n_{4}^{2} } \right) + 8\left( {n_{2}^{2} + 2n_{2} } \right) + 4\left( {2n_{2} + n_{3} + n_{4} } \right) } \right] = 4n_{2}^{2} + 24n_{2} n_{3} + 24n_{2} n_{4} + 12n_{3} n_{4} - 12n_{2} + 2n_{3} + 2n_{4} > 0\). Therefore, \({N}_{1}<{N}_{2}\) which means that the 4S-RLS algorithm is more efficient than the RLS algorithm.

5 Four-stage stochastic gradient algorithm

In this part, a four-stage stochastic gradient algorithm is considered to estimate the unknown parameters and reduce the computational burden.

The second-order criterion function is considered as follows:

By computing the gradient of \(J\), we have

According to the gradient search principle and minimizing the objective function, the stochastic gradient algorithm is obtained as follows:

Assume that \(\frac{1}{\mu (t)}\) is the step size.

where \(0\le \propto <1\) is a forgetting factor that can improve the accuracy of parameter estimation. Hence, the four-stage stochastic gradient algorithm is obtained as follows:

In summary, the steps of the proposed algorithm are presented in Algorithm 2.

6 Simulation results

In order to show the efficiency of the proposed method, two numerical examples are provided. The first example is a bilinear state-space system that should be identified, and the second one is a practical example where the system is represented by bilinear state-space model.

6.1 Numerical example

Consider a bilinear state-space system as follows:

The parameter vector for identification is

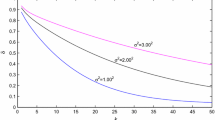

For simulation studies, consider a persistent excitation input signal \(u(t)\), where \(v(t)\) is a white noise with zero mean and variance \({\sigma }^{2}={0.10}^{2}\), \(\beta =0.88\), \(\propto =0.998\) and the data length is L = 3000. The parameter estimation error is calculated by \(\delta = \left\| {\rho \left( t \right) - \rho } \right\|/\left\| \rho \right\|\). The results of the parameters estimations and the error with the four-stage recursive least squares algorithm are presented in Fig. 2 and Table 5. In addition, the results of the four-stage stochastic gradient algorithm are shown in Fig. 3 and Table 6.

From the simulation results presented in the figures and the tables, the following conclusions can be derived: Convergence analysis shows that the proposed algorithms are effective and the estimated parameters by the proposed algorithms can converge to their real values. Figures 2 and 3 show that the estimation error decreases with a suitable speed. Also, the results presented in Tables 5 and 6 show the convergence of the parameters to the real values. The 4S-RLS algorithm can provide an effective parameter estimation compared to the 4S-SG algorithm. Due to the data length and the noise variance, the 4S-RLS algorithm has a smaller estimation error than the 4S-SG algorithm and also has a higher parameter estimation accuracy. The system states and their estimates are shown in Figs. 4 and 5, respectively. The estimated states correspond well to the actual system states, indicating that the bilinear state observer is effective.

6.2 Practical example

The pH neutralization would be a case study to demonstrate the effectiveness of the proposed methods. For this process, the input/output data are gathered using the GMN test signal [32]. In this process, which is a highly nonlinear process, acid flow (HNO3), base flow (NaOH) and buffer (NaHco3) are considered as input to the system, and the pH level is considered as the system output. The inputs can be regarded as (u1), (u2), (u3). In this process, it can be assumed that acid flow rate and tank capacity are constant. The structure of the pH neutralization process is shown in Fig. 6.

Structure of pH neutralization process [17]

In this example, it is assumed that only input/output data is available and the proposed methods are used to identify the system with bilinear state-space models. To identify the system, 4S-RLS and 4S-SG algorithms are used to estimate the vector parameter \(\widehat{\rho }\left(t\right)\) with data length t = N = 1280 and variance \({\sigma }^{2}={0.10}^{2}\). To confirm the results, the estimated output and the actual output data for the test dataset are shown in Figs. 7 and 8. The estimation error is calculated as \(e :=\frac{\Vert \widehat{y}\left(t\right)-y\Vert }{\Vert y\Vert }\times 100\). According to the simulation results, the obtained error value for the 4S-RLS algorithm is 3.9734% and for the 4S-SG algorithm is 5.5564%.

7 Conclusion

In this paper, the parameter identification of bilinear state-space systems with colored noise which is expressed by the ARMA model was investigated. The proposed methods are based on the hierarchy principle. Since the states of the system need to be used for identification and only the system input/output data are available, a bilinear state observer was designed to estimate the system states. By using the hierarchical identification principle and the gradient search, a four-stage recursive least-squares algorithm and a four-stage stochastic gradient algorithm were presented to reduce the computational burden.The simulation results have demonstrated that 4S-SG algorithm is efficient for identifying the bilinear systems, and the 4S-RLS algorithm outperforms the 4S-SG algorithm, with less estimation error. In addition, with increasing data length for different noise variances, the accuracy of the proposed method increases.

Data availability

Data sharing is not applicable to this article as no new data were created or analyzed in this study.

References

Ding, F., Wang, F., Xu, L., Wu, M.: Decomposition based least squares iterative identification algorithm for multivariate pseudo-linear ARMA systems using the data filtering. J. Frankl. Inst. 354(3), 1321–1339 (2017)

Ding, F., Xu, L., Zhu, Q.: Performance analysis of the generalised projection identification for time-varying systems. IET Control Theory Appl. 10(18), 2506–2514 (2016)

Wang, D.: Hierarchical parameter estimation for a class of MIMO Hammerstein systems based on the reframed models. Appl. Math. Lett. 57, 13–19 (2016)

Kazemi, M., Arefi, M.M., Alipouri, Y.: Wiener model based GMVC design considering sensor noise and delay. ISA Trans. 88, 73–81 (2019)

Wang, D., Zhang, W.: Improved least squares identification algorithm for multivariable Hammerstein systems. J. Frankl. Inst. 352(11), 5292–5307 (2015)

Zhang, C., Li, J.: Adaptive iterative learning control for nonlinear pure-feedback systems with initial state error based on fuzzy approximation. J. Frankl. Inst. 351(3), 1483–1500 (2014)

Zhang, H., Luo, Y., Liu, D.: Neural-network-based near-optimal control for a class of discrete-time affine nonlinear systems with control constraints. IEEE Trans. Neural Netw. 20(9), 1490–1503 (2009)

Xu, L.: A proportional differential control method for a time-delay system using the Taylor expansion approximation. Appl. Math. Comput. 236, 391–399 (2014)

Xu, L.: The parameter estimation algorithms based on the dynamical response measurement data. Adv. Mech. Eng. 9(11), 1687814017730003 (2017)

Beauduin, T., Fujimoto, H.: Identification of system dynamics with time delay: a two-stage frequency domain approach. IFAC-PapersOnLine 50(1), 10870–10875 (2017)

Bianchi, F., Prandini, M., Piroddi, L.: A randomized two-stage iterative method for switched nonlinear systems identification. Nonlinear Anal. Hybrid Syst. 35, 100818 (2020)

Liu, Q., Ding, F.: Auxiliary model-based recursive generalized least squares algorithm for multivariate output-error autoregressive systems using the data filtering. Circuits Syst. Signal Process. 38(2), 590–610 (2019)

Yin, S., Gao, H., Kaynak, O.: Data-driven control and process monitoring for industrial applications—part I. IEEE Trans. Ind. Electron. 61(11), 6356–6359 (2014)

Yin, S., Gao, H., Qiu, J., Kaynak, O.: Fault detection for nonlinear process with deterministic disturbances: a just-in-time learning based data driven method. IEEE Trans. Cybern. 47(11), 3649–3657 (2016)

Zhang, X., Ding, F., Xu, L., Yang, E.: State filtering-based least squares parameter estimation for bilinear systems using the hierarchical identification principle. IET Control Theory Appl. 12(12), 1704–1713 (2018)

Bruni, C., Dipillo, G., Koch, G.: Bilinear systems: an appealing class of" nearly linear" systems in theory and applications. IEEE Trans. Autom. Control 19(4), 334–348 (1974)

Hafezi, Z., Arefi, M.M.: Recursive generalized extended least squares and RML algorithms for identification of bilinear systems with ARMA noise. ISA Trans. 88, 50–61 (2019)

Hwang, C., Chen, M.-Y.: Parameter identification of bilinear systems using the Galerkin method. Int. J. Syst. Sci. 16(5), 641–648 (1985)

Balatif, O., Abdelbaki, I., Rachik, M., Rachik, Z.: Optimal control for multi-input bilinear systems with an application in cancer chemotherapy. Int. J. Sci. Innov. Math. Res. (IJSIMR) 3(2), 22–31 (2015)

Arguello-Serrano, B., Velez-Reyes, M.: Nonlinear control of a heating, ventilating, and air conditioning system with thermal load estimation. IEEE Trans. Control Syst. Technol. 7(1), 56–63 (1999)

Tsai, S.-H., Hsiao, M.-Y., Tsai, K.-L.: LMI-based fuzzy control for a class of time-delay discrete fuzzy bilinear system. In IEEE International Conference on Fuzzy Systems, pp. 796–801 (2009)

Figalli, G., Cava, M.L., Tomasi, L.: An optimal feedback control for a bilinear model of induction motor drives. Int. J. Control 39(5), 1007–1016 (1984)

Mohler, R.R.: Nonlinear Systems (vol. 2) Applications to Bilinear Control. Prentice-Hall, Inc., Englewood Cliffs (1991)

Dai, H., Sinha, N.: Robust recursive least-squares method with modified weights for bilinear system identification. IEE Proce. Control Theory Appl. 136(3), 122–126 (1989)

Li, M., Liu, X., Ding, F.: The maximum likelihood least squares based iterative estimation algorithm for bilinear systems with autoregressive moving average noise. J. Frankl. Inst. 354(12), 4861–4881 (2017)

Li, M., Liu, X., Ding, F.: The gradient-based iterative estimation algorithms for bilinear systems with autoregressive noise. Circuits Syst. Signal Process. 36(11), 4541–4568 (2017)

Li, M., Liu, X., Ding, F.: Least-squares-based iterative and gradient-based iterative estimation algorithms for bilinear systems. Nonlinear Dyn. 89(1), 197–211 (2017)

Gibson, S., Wills, A., Ninness, B.: Maximum-likelihood parameter estimation of bilinear systems. IEEE Trans. Autom. Control 50(10), 1581–1596 (2005)

Xu, L., Ding, F.: Iterative parameter estimation for signal models based on measured data. Circuits Syst. Signal Process. 37(7), 3046–3069 (2018)

Ding, F., Liu, P.X., Liu, G.: Gradient based and least-squares based iterative identification methods for OE and OEMA systems. Digital Signal Process. 20(3), 664–677 (2010)

Xu, H., Ding, F., Yang, E.: Modeling a nonlinear process using the exponential autoregressive time series model. Nonlinear Dyn. 95(3), 2079–2092 (2019)

Kazemi, M., Arefi, M.M.: A fast iterative recursive least squares algorithm for Wiener model identification of highly nonlinear systems. ISA Trans. 67, 382–388 (2017)

Xu, L., Ding, F., Gu, Y., Alsaedi, A., Hayat, T.: A multi-innovation state and parameter estimation algorithm for a state space system with d-step state-delay. Signal Process. 140, 97–103 (2017)

Fnaiech, F., Ljung, L.: Recursive identification of bilinear systems. Int. J. Control 45(2), 453–470 (1987)

Phan, M.Q., Vicario, F., Longman, R.W., Betti, R.: Optimal bilinear observers for bilinear state-space models by interaction matrices. Int. J. Control 88(8), 1504–1522 (2015)

Phan, M.Q., Čelik, H.: A superspace method for discrete-time bilinear model identification by interaction matrices. J. Astronaut. Sci. 59(1), 421–440 (2012)

Gabr, M.: A recursive (on-line) identification of bilinear systems. Int. J. Control 44(4), 911–917 (1986)

Hizir, N.B., Phan, M.Q., Betti, R., Longman, R.W.: Identification of discrete-time bilinear systems through equivalent linear models. Nonlinear Dyn. 69(4), 2065–2078 (2012)

Meng, D.: Recursive least squares and multi-innovation gradient estimation algorithms for bilinear stochastic systems. Circuits Syst. Signal Process. 36(3), 1052–1065 (2017)

Liu, S., Ding, F., Xu, L., Hayat, T.: Hierarchical principle-based iterative parameter estimation algorithm for dual-frequency signals. Circuits Syst. Signal Process. 38(7), 3251–3268 (2019)

Cui, T., Ding, F., Jin, X.-B., Alsaedi, A., Hayat, T.: Joint multi-innovation recursive extended least squares parameter and state estimation for a class of state-space systems. Int. J. Control Autom. Syst. 1–13 (2019)

Tsai, S.-H., Li, T.-H.S.: Robust fuzzy control of a class of fuzzy bilinear systems with time-delay. Chaos, Solitons Fractals 39(5), 2028–2040 (2009)

Lu, X., Ding, F., Alsaedi, A., Hayat, T.: Decomposition-based gradient estimation algorithms for multivariable equation-error systems. Int. J. Control Autom. Syst. 17(8), 2037–2045 (2019)

Li, M., Liu, X.: The least squares based iterative algorithms for parameter estimation of a bilinear system with autoregressive noise using the data filtering technique. Signal Process. 147, 23–34 (2018)

Ding, F., Xu, L., Meng, D., Jin, X.-B., Alsaedi, A., Hayat, T.: Gradient estimation algorithms for the parameter identification of bilinear systems using the auxiliary model. J. Comput. Appl. Math. 112575 (2019)

Luo, H., Li, K., Huo, M., Yin, S., Kaynak, O.: A data-driven process monitoring approach with disturbance decoupling. In: Data Driven Control and Learning Systems Conference, pp. 569–574 (2018)

Zhang, X., Ding, F., Xu, L.: Recursive parameter estimation methods and convergence analysis for a special class of nonlinear systems. Int. J. Robust Nonlinear Control 30(4), 1373–1393 (2020)

Zhang, X., Xu, L., Ding, F., Hayat, T.: Combined state and parameter estimation for a bilinear state space system with moving average noise. J. Frankl. Inst. 355(6), 3079–3103 (2018)

Chen, H., Ding, F.: Hierarchical least squares identification for Hammerstein nonlinear controlled autoregressive systems. Circuits Syst. Signal Process. 34(1), 61–75 (2015)

Zhang, X., Ding, F.: Recursive parameter estimation and its convergence for bilinear systems. IET Control Theory Appl. 14(5), 677–688 (2019)

Ding, F., Chen, H., Xu, L., Dai, J., Li, Q., Hayat, T.: A hierarchical least squares identification algorithm for Hammerstein nonlinear systems using the key term separation. J. Franklin Inst. 355(8), 3737–3752 (2018)

Funding

The authors have not disclosed any funding.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Proof of Theorem 2

From the covariance matrix relations, we have

Considering the left side of inequalitie in (71) and the covariance relation in (A1), we have

Define

where \({r}_{0}\) is positive constant. As the elements of \({{R}_{1}}^{-1}(0)\) are nonnegative, by adding \({R}_{1}\left(0\right)\) to the right side of inequality in (A5), one can write

Therefore, we have

and

Similarly

Now, consider the right side inequalities in (71) and Theorem 1.

We add \({{R}_{1}}^{-1}(0)\) to the both sides of inequality (A11):

Similarly

According to Theorem 1, we have

Now, the proof is complete. □

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Shahriari, F., Arefi, M.M., Luo, H. et al. Multistage parameter estimation algorithms for identification of bilinear systems. Nonlinear Dyn 110, 2635–2655 (2022). https://doi.org/10.1007/s11071-022-07749-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11071-022-07749-0