Abstract

This paper investigates the event-triggered dissipative filtering problem for a class of networked semi-Markov jump systems. As a first attempt, an event-triggered communication scheme is introduced to save limited network bandwidth while preserving a prescribed level of system performance. Using stochastic analysis, the information on the sojourn time between mode jumps of the underlying systems is fully taken into account. Based on a time-delay approach, the filtering performance of the considered systems is analyzed, and a co-design approach for the event-triggered mechanism and the dissipative filter is then developed such that the filtering error system is strictly dissipative. Finally, a numerical comparison example and a realistic mass-spring system model are provided to show the reduced conservatism and the applicability of the proposed filtering scheme.


1 Introduction

Networked control systems (NCSs) are feedback control systems whose control loops are closed through a real-time network [8, 17, 33]. Roughly speaking, a typical NCS consists of four fundamental components: (a) plants; (b) sensors/actuators; (c) controllers/filters; and (d) a shared communication network, through which the information among the other components is transmitted. Compared with traditional control systems, NCSs offer many advantages, such as reduced system wiring, low installation and maintenance costs, increased system flexibility and high reliability. It is therefore not surprising that NCSs have attracted considerable attention and have been successfully applied in areas ranging from manufacturing, industrial process control and automation to robotics. For more details, we refer readers to [1, 2, 37].

In NCSs, communication bandwidth becomes increasingly limited as the complexity of the network grows [12, 15]. As a consequence, phenomena including, but not limited to, congestion, quantization errors, network-induced delays and packet dropouts inevitably arise in NCSs; they degrade system performance and may even cause instability [10, 11, 20, 22, 23, 38]. How to overcome these difficulties is therefore a hot topic in the study of NCSs. Given the limited communication capacity, recent decades have witnessed rapid growth in the investigation of data sampling schemes for NCSs. Two typical schemes are usually applied: the time-triggered sampling scheme and the event-triggered one. The former reduces the complexity and difficulty of analysis and design and has therefore been used extensively for state estimation and control of NCSs. However, a time-triggered scheme with a fixed sampling interval transmits redundant data when the measurement signals fluctuate little [19]. The latter, i.e., the event-triggered scheme (ETS), was proposed to screen out such unnecessary information: data are transmitted only upon the occurrence of an “event,” as determined by a triggering condition. Its advantage in reducing the use of scarce communication resources has been demonstrated in many works, e.g., [19, 37]. Not surprisingly, the ETS-based design of NCSs has received wide attention, and an extensive literature has emerged in recent years.
For instance, distributed event-triggered \(H_{\infty }\) filtering for sensor networks was studied in [4], where each sensor node decides whether or not to transmit its current sample; a distributed event-triggered fuzzy filter was designed for a class of nonlinear networked control systems in [29]; and an event-triggered \(H_{\infty }\) controller was designed for NCSs in [36] using a delay system method.

On the other hand, Markov jump models are well established for modeling network-induced phenomena such as random time delays and packet dropouts [5, 9, 14, 24–26, 30–32]. Within the Markov jump systems (MJSs) framework, discrete-time \(H_{2}\) output tracking control for wireless NCSs was considered in [35], where Markov chains were used to model the time delays; an \(H_{\infty }\) fault detection filter was designed in [16], where NCSs were modeled as MJSs via the multirate sampling method and the augmented state matrix method. As stated previously, the event-triggered mechanism is highly effective in reducing the use of communication resources; it is therefore natural to consider it for NCSs with Markov jump parameters [28]. However, in [5, 9, 14, 16, 24–26, 28, 30, 32], the sojourn time between two successive jumps was assumed to follow an exponential distribution. Owing to the memoryless property of this distribution, the transition rates of MJSs are required to be constant and independent of the past, which may be unreasonable in many practical situations. To relax this limitation, semi-Markov processes have been introduced, and a large number of results on semi-Markov jump systems (sMJSs) have been published. To name a few, the design of an \(H_{\infty }\) controller for a class of sMJSs was presented in [6], where a sufficient condition for the existence of the controller was proposed; robust stochastic stability analysis and robust control design for sMJSs were considered in [7, 39].
It is worth noting, however, that most existing results on networked MJSs are based on the time-triggered sampling scheme; no attempts have been made to address the dissipative filtering problem for networked sMJSs, and few efforts have been devoted to co-designing the event-triggered mechanism and the dissipative filter for such systems. This motivates the present work.
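The memoryless restriction can be made concrete by comparing transition (hazard) rates. The Python sketch below evaluates the hazard \(f(\triangle)/(1-F(\triangle))\) of a sojourn time, where f and F are its density and distribution function: for an exponential sojourn time the rate is constant, whereas for a Weibull sojourn time it depends on the elapsed sojourn time \(\triangle\), which is the situation that sojourn-time-dependent rates \(\pi_{ij}(\triangle)\) of a semi-Markov model are meant to capture. The Weibull distribution, its parameters and the function names are illustrative assumptions, not taken from the cited works.

```python
import math

def exponential_hazard(delta: float, rate: float) -> float:
    """Hazard f/(1-F) of an exponential sojourn time: constant in delta (memoryless)."""
    pdf = rate * math.exp(-rate * delta)
    survival = math.exp(-rate * delta)
    return pdf / survival

def weibull_hazard(delta: float, shape: float, scale: float) -> float:
    """Hazard f/(1-F) of a Weibull sojourn time: varies with delta unless shape == 1."""
    z = (delta / scale) ** shape
    pdf = (shape / scale) * (delta / scale) ** (shape - 1) * math.exp(-z)
    survival = math.exp(-z)
    return pdf / survival

if __name__ == "__main__":
    # Illustrative parameters only: exponential rate 0.5, Weibull shape 2 and scale 2.
    for delta in (0.5, 1.0, 2.0, 4.0):
        print(f"delta={delta:.1f}  exponential={exponential_hazard(delta, 0.5):.3f}  "
              f"weibull={weibull_hazard(delta, 2.0, 2.0):.3f}")
```

With these parameters the exponential rate stays at 0.5 for every \(\triangle\), while the Weibull rate equals \(\triangle/2\) and grows with the sojourn time; a Weibull shape parameter of 1 recovers the exponential case.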

This paper addresses the event-triggered dissipative filtering problem for a class of networked sMJSs. An event-triggered mechanism is introduced as the sampling scheme in order to save limited network resources. A Markov switched Lyapunov functional is used to derive conditions for the existence of the desired filter, and a networked mass-spring system model is provided to show the applicability of the proposed approach. The main contributions of this paper are twofold: (1) As a first attempt, a new class of filters, called event-triggered dissipative filters, is developed to account for the limited communication links between the plant and the filter in networked sMJSs; (2) An improved delay system approach based on novel integral inequalities is employed to deal with the event-triggered filtering problem, which yields conditions that are less conservative than existing ones, as shown in Example 1 in Sect. 4.

The rest of this paper is organized as follows. Section 2 formulates the problem under consideration. Section 3 presents the dissipative filtering performance analysis and the filter design. Two examples illustrating the effectiveness of the proposed method are provided in Sect. 4, and conclusions are drawn in Sect. 5.

Notation. Throughout this paper, \( \mathbb {R} ^{n}\) denotes the n-dimensional Euclidean space; for a symmetric matrix P, the notation \(P\ge 0\) (respectively, \(P>0\)) means that P is positive semi-definite (respectively, positive definite); I and 0 denote the identity matrix and the zero matrix of appropriate dimensions, respectively. \(\mathcal {E}\left\{ \cdot \right\} \) denotes the expectation operator; \(M^\mathrm{T}\) denotes the transpose of the matrix M, and \(\mathrm{sym}\{M\}\) stands for \(M+M^\mathrm{T}\). \( \mathcal {L}_{2}\left[ 0,\infty \right) \) is the space of square-integrable vector functions over \(\left[ 0,\infty \right) \). In symmetric block matrices or complex matrix expressions, an asterisk \((*)\) represents a term induced by symmetry. Matrices, if not explicitly stated, are assumed to have compatible dimensions.

2 Problem formulation

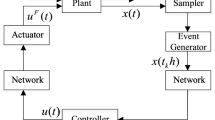

The networked system with an event-triggered communication scheme, depicted in Fig. 1, consists of a linear continuous-time system, a sensor, a sampler, an event detector, a zero-order hold (ZOH), a filter and a communication network. The plant output \(y\left( t\right) \) is transmitted over the communication network to a networked dissipative filter, which is to be designed to estimate \(z\left( t\right) \).

Consider the following plant, described by a semi-Markov jump system:

where \(x\left( t\right) \in \mathbb {R}^{n}\) is the system state, \(y\left( t\right) \in \mathbb {R}^{m}\) is the measurement output, \(z\left( t\right) \in \mathbb {R}^{q}\) is the signal to be estimated, and \(\omega \left( t\right) \in \mathbb {R}^{p}\) is an arbitrary noise signal belonging to \(\mathcal {L}_{2}\left[ 0,\infty \right) \). \(A\left( \beta \left( t\right) \right) \), \(B\left( \beta \left( t\right) \right) \), \( C\left( \beta \left( t\right) \right) \), \(D\left( \beta \left( t\right) \right) \) and \(L\left( \beta \left( t\right) \right) \) are known real constant matrices of appropriate dimensions for each \(\beta \left( t\right) \in \mathcal {S}=\left\{ 1,2,\ldots ,r\right\} \). Let \(( \Omega ,\mathcal {F},\mathcal {P})\) be a probability space, where \( \Omega \) is the sample space, \(\mathcal {F}\) is the \(\sigma \)-algebra of subsets of the sample space and \(\mathcal {P}\) is the probability measure on \(\mathcal {F} \). The process \(\left\{ \beta \left( t\right) ,t\geqslant 0\right\} \) is a continuous-time, discrete-state semi-Markov process taking values in the finite set \(\mathcal {S}\), with transition rate matrix \(\Pi \overset{\triangle }{=}\left\{ \pi _{ij}\left( \triangle \right) \right\} \) and transition probabilities given by [13]

where \(\triangle >0\) is the sojourn time, \(\lim _{\triangle \rightarrow 0}\left( o\left( \triangle \right) /\triangle \right) =0\), and \(\pi _{ij}\left( \triangle \right) \ge 0\), for \(j\ne i\), is the transition rate from mode i at time t to mode j at time \(t+\triangle \), and

In this paper, we design a Markov switched filter with the following state-space realization

where \(x_{f}\left( t\right) \) is the filter state vector, \(z_{f}\left( t\right) \) is the filter output vector, and \(\bar{y}(t)\) is the filter input signal provided by the ZOH. \(A_{f}\left( \beta \left( t\right) \right) \), \(B_{f}\left( \beta \left( t\right) \right) \), \(C_{f}\left( \beta \left( t\right) \right) \) and \(D_{f}\left( \beta \left( t\right) \right) \) are the filter parameters to be determined. For brevity, we denote \( A_{i}=A\left( \beta \left( t\right) \right) \) and \(A_{fi}=A_{f}\left( \beta \left( t\right) \right) \) for each \(\beta \left( t\right) =i\in \mathcal {S}\); the other symbols are denoted similarly.

Remark 1

In the time-triggered scheme, all sampled data packets are sent to the ZOH of the filter. In fact, packets that carry little new information need not be transmitted, so a key question is how to design a threshold condition that determines whether the current sampled packet should be transmitted. Transmitting only the informative sampled packets clearly saves limited network bandwidth, and an event-triggered scheme (ETS) offers an effective way to screen out unnecessary transmissions.

In this paper, an ETS is proposed in which the event detector uses the following threshold condition [36] to determine whether the newly sampled data packet \(\left( t_{k}+n,y\left( t_{k}h+nh\right) \right) \) should be stored and simultaneously sent to the filter:

where \(h\) is a constant sampling period, \(t_{k}h\) \(\left( k\in \mathbb {N} \right) \) is the triggering instant (or release instant), \(n=1,2,\cdots ,\rho _{k}\) with \(\rho _{k}=t_{k+1}-t_{k}-1\), \(\lambda _{i}\in \left[ 0,1\right) \) are given scalars that set the detection threshold for each \( i\in \mathcal {S}\), and \(\Lambda _{i}>0\) are the event-triggering matrices to be determined in the co-design.
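The decision made by the event detector can be sketched as follows. Because the threshold condition itself is not reproduced above, the sketch assumes the quadratic form of the type used in [36]: the newly sampled output is released only when \([y(t_kh+nh)-y(t_kh)]^\mathrm{T}\Lambda_i[y(t_kh+nh)-y(t_kh)]>\lambda_i\,y^\mathrm{T}(t_kh+nh)\Lambda_i y(t_kh+nh)\), with the mode-dependent \(\lambda_i\) and \(\Lambda_i\) defined above. The function name, the test signal and the mode pattern in the demo are hypothetical.

```python
import numpy as np

def event_triggered_release(samples, modes, lam, Lam):
    """Indices k of the samples y(kh) released by a quadratic, mode-dependent rule.

    Assumed rule (form of the type used in [36]; an assumption here): with y_last the
    most recently released output and i the active mode, y_new is released when
        (y_new - y_last)^T Lam[i] (y_new - y_last) > lam[i] * y_new^T Lam[i] y_new.
    samples: (N, m) array of y(kh); modes: length-N sequence of mode labels;
    lam: dict mode -> lambda_i in [0, 1); Lam: dict mode -> (m, m) matrix Lambda_i > 0.
    """
    released = [0]                 # the first sample is always transmitted
    y_last = samples[0]
    for k in range(1, len(samples)):
        y_new, i = samples[k], modes[k]
        err = y_new - y_last       # deviation from the value currently held at the ZOH
        if err @ Lam[i] @ err > lam[i] * (y_new @ Lam[i] @ y_new):
            released.append(k)
            y_last = y_new         # the ZOH now holds the newly released sample
    return released

if __name__ == "__main__":
    h = 0.12                                       # sampling period used in Example 2
    t = np.arange(0.0, 50.0, h)
    y = (np.exp(-0.05 * t) * np.sin(t))[:, None]   # hypothetical scalar output signal
    modes = (t // 10).astype(int) % 2              # hypothetical two-mode switching pattern
    rel = event_triggered_release(y, modes, {0: 0.1, 1: 0.3}, {0: np.eye(1), 1: np.eye(1)})
    print(f"released {len(rel)} of {len(t)} samples")
```

Larger \(\lambda_i\) enlarge the no-transmission region, so fewer samples are released; with \(\lambda_i=0\), every sample that differs from the held value is released.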

Taking into account the transmission delay and the holding property of the ZOH, we obtain

Furthermore, under the ZOH, the interval \([ t_{k}h+\tau _{t_{k}}, t_{k+1}h+\tau _{t_{k+1}}) \) can be written as

where

with \(n=1,2,\cdots ,\rho _{k}-1\), \(\mathcal {I}_{0}=\left[ t_{k}h+\tau _{t_{k}},t_{k}h+h+\hat{\tau }\right) \) and \(\mathcal {I}_{\rho _{k}}=\left[ t_{k}h+\rho _{k}h+\hat{\tau },t_{k+1}h+\tau _{t_{k+1}}\right) \). Here \(\tau _{t_{k}}\in (0,\hat{\tau }]\) is the network-induced delay, \(\hat{\tau }\) is its upper bound, and \(\rho _{k}\) is a positive integer.

Define the network delay \(\tau \left( t\right) \) and the error \(e_{k}\left( t\right) \) between the latest transmitted data and the current sampled data as

then, we have

and \(\bar{y}(t)=y\left( t_{k}h\right) =e_{k}\left( t\right) +y\left( t-\tau \left( t\right) \right) .\)
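Since the defining equations for \(\tau(t)\) and \(e_k(t)\) are not reproduced above, the sketch below assumes the standard choice of the delay system method [36]: for \(t\in\mathcal{I}_n\), \(\tau(t)=t-t_kh-nh\) and \(e_k(t)=y(t_kh)-y(t_kh+nh)\), which is consistent with \(\bar{y}(t)=e_k(t)+y(t-\tau(t))\). It locates the subinterval \(\mathcal{I}_n\) containing t, and the accompanying check confirms numerically that \(\tau(t)\) stays within \([\tau_{t_k},h+\hat{\tau}]\). The helper name and the numerical values are hypothetical.

```python
import math

def delay_and_error(t, tk, tk_next, h, tau_hat, y):
    """Index n, delay tau(t) and error e_k(t) for t in [tk*h + tau_tk, tk_next*h + tau_tk_next).

    Hypothetical helper following the partition I_0, ..., I_{rho_k} above:
    tk, tk_next are the sample indices of two successive releases, tau_hat is the
    delay bound, and y is a callable returning the plant output at a given time.
    """
    rho = tk_next - tk - 1           # rho_k = t_{k+1} - t_k - 1
    base = tk * h                    # release instant t_k h
    if rho <= 0 or t < base + h + tau_hat:
        n = 0                        # t lies in I_0
    else:
        n = min(rho, math.floor((t - base - tau_hat) / h))   # t lies in I_n
    tau_t = t - base - n * h         # assumed: tau(t) = t - t_k h - n h
    e_k = y(base) - y(base + n * h)  # assumed: e_k(t) = y(t_k h) - y(t_k h + n h)
    return n, tau_t, e_k

if __name__ == "__main__":
    h, tau_hat = 0.1, 0.03           # hypothetical sampling period and delay bound
    for t in (1.05, 1.2, 1.38):      # t_k = 10, t_{k+1} = 14, so rho_k = 3
        n, tau_t, e_k = delay_and_error(t, 10, 14, h, tau_hat, math.sin)
        print(f"t={t:.2f}  n={n}  tau(t)={tau_t:.3f}  e_k={e_k:+.4f}")
```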

Augmenting the system \(\left( \Sigma \right) \) with the filter (3), we obtain the following filtering error system

where

and the error \(e_{k}\left( t\right) \) satisfies the following threshold condition

which is obtained from the triggering condition (4).

Definition 1

Given a scalar \(\alpha >0\) and real matrices \(W_{1}^\mathrm{T}=W_{1}=-\bar{W} _{1}^\mathrm{T}\bar{W}_{1}\leqslant 0\), \(W_{2}\) and \(W_{3}=W_{3}^\mathrm{T}>0\), the system \(\left( 5\right) \) is said to be stochastically stable and strictly \( \left( W_{1},W_{2},W_{3}\right) -\alpha -\)dissipative if the following conditions are satisfied:

1. the system \(\left( 5\right) \) with \(\omega (t)=0\) is stochastically stable;

2. under zero initial condition, the following condition is satisfied:

$$\begin{aligned}&\mathcal {E}\left\{ \int _{0}^{\gamma }\left[ e^\mathrm{T}\left( t\right) W_{1}e\left( t\right) +\mathrm{sym}\left( e^\mathrm{T}\left( t\right) W_{2}\omega \left( t\right) \right) \right. \right. \\&\quad \left. \left. +\,\omega ^\mathrm{T}\left( t\right) W_{3}\omega \left( t\right) \right] \mathrm{d}t\right\} \geqslant \alpha \int _{0}^{\gamma }\omega ^\mathrm{T}\left( t\right) \omega \left( t\right) \mathrm{d}t,\end{aligned}\tag{7}$$

for any \(\gamma \geqslant 0\) and any nonzero \(\omega \left( t\right) \in \mathcal {L}_{2}\left[ 0,\infty \right) \).
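Condition (7) can be checked numerically along a simulated trajectory. The sketch below, a hypothetical helper rather than part of the proposed method, evaluates the running difference between the two sides of (7) by the trapezoidal rule, using \(\mathrm{sym}(e^\mathrm{T}W_2\omega)=2e^\mathrm{T}W_2\omega\) for the scalar term; a nonnegative value at every horizon \(\gamma\) on the grid is consistent with (7). It uses a single sample path, so it only approximates the expectation; averaging over many realizations of \(\beta(t)\) would be needed for a closer check.

```python
import numpy as np

def dissipativity_margin(e, w, dt, W1, W2, W3, alpha):
    """Running value of LHS - RHS of (7) along one sampled trajectory.

    e: (N, q) samples of e(t), w: (N, p) samples of the disturbance, dt: sampling step.
    Entry k approximates, by the trapezoidal rule, the integral over [0, k*dt] of
    e^T W1 e + 2 e^T W2 w + w^T (W3 - alpha I) w.
    """
    supply = (np.einsum("ni,ij,nj->n", e, W1, e)
              + 2.0 * np.einsum("ni,ij,nj->n", e, W2, w)
              + np.einsum("ni,ij,nj->n", w, W3 - alpha * np.eye(W3.shape[0]), w))
    increments = 0.5 * dt * (supply[1:] + supply[:-1])
    return np.concatenate(([0.0], np.cumsum(increments)))   # value 0 at gamma = 0

if __name__ == "__main__":
    t = np.linspace(0.0, 10.0, 1001)
    w = np.sin(t)[:, None]                                   # hypothetical disturbance
    W1, W2, W3 = -np.eye(1), np.zeros((1, 1)), 0.26 * np.eye(1)
    margin = dissipativity_margin(0.3 * w, w, t[1] - t[0], W1, W2, W3, 0.1)
    print("min margin over the grid:", margin.min())
```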

Remark 2

By choosing \(W_{1}\), \(W_{2}\) and \(W_{3}\) appropriately, condition (7) reduces to the \(H_{\infty }\) performance index or the passivity performance index as follows:

1. when \(W_{1}=-I\), \(W_{2}=0\) and \(W_{3}>\alpha I\), condition (7) reduces to the \(H_{\infty }\) performance index;

2. when \(W_{1}=0\), \(W_{2}=I\) and \(W_{3}>\alpha I\), condition (7) reduces to the passivity performance index.

Lemma 1

[18] Let \(f_{1},f_{2},\ldots ,f_{N}:\mathbb {R}^{m}\longrightarrow \mathbb {R}\) have positive values in an open subset \( \mathsf {A}\) of \(\mathbb {R}^{m}\). Then, the reciprocally convex combination of \(f_{i}\) over \(\mathsf {A}\) satisfies

subject to

Lemma 2

[21] For scalars \(0<h_{1}<h_{2}\) and matrices \( Z_{1}\in \mathbb {R}^{n\times n}\) and \(X=\left[ \begin{array}{cc} X_{11} &{}\quad X_{12} \\ X_{21} &{}\quad X_{22} \end{array} \right] \in \mathbb {R}^{2n\times 2n}\) satisfying

then, for any vector function \(x:\left[ t-h_{2},t\right] \longrightarrow \mathbb {R}^{n}\) such that the integrals below are well defined, it holds that

where

3 Main results

Theorem 1

For given scalars \(\alpha \), \(0\le \lambda _{i}<1\), \( h_{2}>h_{1}>0\), matrices \(W_{1}^\mathrm{T}=W_{1}=-\bar{W}_{1}^\mathrm{T}\bar{W} _{1}\leqslant 0\), \(W_{2}\) and \(W_{3}=W_{3}^\mathrm{T}>0\), if there exist real matrices \(\Lambda _{i}>0\), \(P_{i}>0\), \(Q_{1i}>0\), \(Q_{2i}>0\), \(T>0\), \( Z_{1}>0 \), \(Z_{2}>0\) and Y of appropriate dimensions such that the following matrix inequalities hold for each \(i\in \mathcal {S}\)

where

with

Then the closed-loop system \(\left( \tilde{\Sigma }\right) \) is stochastically stable and strictly \(\left( W_{1},W_{2},W_{3}\right) -\alpha - \)dissipative.

Proof

For the filtering error system \(\left( \tilde{\Sigma }\right) \), consider the following Lyapunov–Krasovskii functional for the stability analysis:

where

with \(P_{i}>0\), \(Q_{1i}>0\), \(Q_{2i}>0\), \(T>0\), \(Z_{1}>0\) and \(Z_{2}>0\).

Taking the time derivative along the trajectory of system \(\left( \tilde{ \Sigma }\right) \) yields

where

Using Jensen’s inequality, it can be seen that

In light of Lemma 2, it follows that

On the other hand, from Lemma 1, the following inequality holds

In view of (6), we define

and it is easy to obtain that

Recalling the notion of dissipativity in Definition 1, we denote

Then, relying on conditions (23) and (25), and substituting (20)–(24) into (18), (19) and (23), it holds that

where

Then, by condition (11) and the Schur complement, it follows from (26) that

and, by condition (23), one has

Under the zero initial condition, it is readily concluded that for any \( \gamma \geqslant 0\)

Thus, it follows that

Hence, condition (7) is guaranteed for any nonzero \( \omega \left( t\right) \in \mathcal {L}_{2}\left[ 0,\infty \right) \). Furthermore, when \(\omega (t)=0\), it follows from (27) that there exists a scalar \(a>0\) such that

Then, following similar lines to the proof of Theorem 1 in [34] and applying Dynkin’s formula and the Gronwall–Bellman lemma, we have

Hence, the system \(\left( \tilde{\Sigma }\right) \) with \( \omega (t)=0\) is stochastically stable. Thus, in view of Definition 1, the system \(\left( \tilde{\Sigma }\right) \) is strictly \(\left( W_{1},W_{2},W_{3}\right) -\alpha -\)dissipative, which completes the proof of Theorem 1.
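Two of the bounds invoked in this proof can be sanity-checked numerically. The sketch below evaluates (i) a Riemann-sum version of Jensen’s integral inequality, \(h\int _{t-h}^{t}\dot{x}^\mathrm{T}(s)Z\dot{x}(s)\mathrm{d}s\geqslant \left( \int _{t-h}^{t}\dot{x}(s)\mathrm{d}s\right) ^\mathrm{T}Z\left( \int _{t-h}^{t}\dot{x}(s)\mathrm{d}s\right) \) for \(Z>0\), and (ii) a matrix instance of the reciprocally convex bound of Lemma 1, \(\mathrm{diag}\{R/a,R/(1-a)\}\geqslant \left[ \begin{array}{cc} R & S \\ S^\mathrm{T} & R \end{array}\right] \) for \(0<a<1\) whenever the right-hand matrix is positive semi-definite. The test signals, matrices and function names are hypothetical; the check illustrates the inequalities but does not replace the proof.

```python
import numpy as np

def jensen_gap(xdot, Z, dt):
    """h * sum_k dt * xdot_k^T Z xdot_k - v^T Z v, with v = sum_k dt * xdot_k and h = N * dt.

    Discrete analogue of Jensen's integral inequality; nonnegative whenever Z > 0.
    """
    h = dt * len(xdot)
    quad = dt * np.einsum("ni,ij,nj->", xdot, Z, xdot)
    v = dt * xdot.sum(axis=0)
    return h * quad - v @ Z @ v

def reciprocal_convex_gap(R, S, a):
    """Smallest eigenvalue of diag(R/a, R/(1-a)) - [[R, S], [S^T, R]] for 0 < a < 1."""
    n = R.shape[0]
    zero = np.zeros((n, n))
    lhs = np.block([[R / a, zero], [zero, R / (1.0 - a)]])
    rhs = np.block([[R, S], [S.T, R]])
    return np.linalg.eigvalsh(lhs - rhs).min()

if __name__ == "__main__":
    s = np.linspace(0.0, 1.0, 200, endpoint=False)
    xdot = np.column_stack([np.sin(3 * s), np.cos(5 * s)])  # hypothetical derivative samples
    Z = np.array([[2.0, 0.3], [0.3, 1.0]])
    print("Jensen gap:", jensen_gap(xdot, Z, 1.0 / 200))

    R = np.array([[2.0, 0.5], [0.5, 1.0]])
    L = np.linalg.cholesky(R)
    th = 0.7
    U = np.array([[np.cos(th), -np.sin(th)], [np.sin(th), np.cos(th)]])  # spectral norm 1
    S = L @ U @ L.T                  # makes [[R, S], [S^T, R]] positive semi-definite
    gaps = [reciprocal_convex_gap(R, S, a) for a in np.linspace(0.05, 0.95, 19)]
    print("smallest reciprocal-convex gap:", min(gaps))
```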

Remark 3

Note that conditions (11), (14) and (15) are not easy to solve, mainly because they depend on the time-varying terms \(\sum _{j\in \mathcal {S}}\pi _{ij}\left( \triangle \right) \) and therefore cannot be checked directly as a finite set of linear matrix inequalities (LMIs). In practice, the transition rates \(\pi _{ij}\left( \triangle \right) \) of a semi-Markov process may be only partially available [7]. Based on this consideration, and as in [7], \(\pi _{ij}\left( \triangle \right) \) is assumed to lie in the interval \(\left[ \pi _{ij}^{d},\pi _{ij}^{u}\right] \). As a result, the following assumption on \(\pi _{ij}\left( \triangle \right) \) can be naturally presented:

and

Having obtained the performance analysis result, we are now ready to solve the event-triggered filtering problem. Note from (11) that the filter parameters are coupled with the matrices \(P_{i}\). A co-design scheme is therefore presented next, in which the dissipative filter parameters and the event-triggering matrices are determined simultaneously based on Theorem 1.

Theorem 2

For given scalars \(\alpha \), \(0\le \lambda _{i}<1\), \( h_{2}>h_{1}>0\), matrices \(W_{1}^\mathrm{T}=W_{1}=-\bar{W}_{1}^\mathrm{T}\bar{W} _{1}\leqslant 0\), \(W_{2}\) and \(W_{3}=W_{3}^\mathrm{T}>0\), if there exist real matrices \(\Lambda _{i}>0\), \(G_{i}>0\), \(V_{i}>0\), \(Q_{1i}>0\), \(Q_{2i}>0\), \(T>0\), \(Z_{1}>0\) and \(Z_{2}>0\) of appropriate dimensions such that (12)–(13) and the following conditions hold for each \(i\in \mathcal {S}\)

where

with

Then the resulting filtering error system \(\left( \tilde{\Sigma }\right) \) is strictly \(\left( W_{1},W_{2},W_{3}\right) -\alpha -\)dissipative. In this case, the filtering gains \(A_{fi}\), \(B_{fi}\), \(C_{fi}\), and \(D_{fi}\) can be given by

Proof

First, since \(\pi _{ij}\left( \triangle \right) \) can be written as the convex combination \(\pi _{ij}\left( \triangle \right) =\sum _{k=1}^{\mathcal {M}}\chi _{k}\pi _{ij,k}\) with \(\sum _{k=1}^{\mathcal {M}}\chi _{k}=1\) and \(\chi _{k}\ge 0\), one obtains

which implies that \(\Xi _{1,i}<0\) is satisfied if condition (32) holds. Similarly, \(\Xi _{2,i}<0\) is guaranteed if condition (33) holds. Next, we prove that conditions (30)–(31) ensure that condition (11) is satisfied. To this end, suppose that there exist matrices \(P_{i}\) of the form

and set \(G_{i}\overset{\Delta }{=}P_{1i}\), \(V_{i}\overset{\Delta }{=} P_{2i}P_{3i}^{-1}P_{2i}^\mathrm{T}\), \(P_{3i}S\overset{\Delta }{=}P_{2i}^\mathrm{T}.\) It is readily concluded that

Clearly, \(P_{i}>0\). Furthermore, define \(J\overset{\Delta }{=}\mathrm{diag}\{I,S^\mathrm{T}, \underset{8}{\underbrace{I,\ldots ,I}}\}\). Pre- and post-multiplying both sides of (11) by J and its transpose, respectively, and using (28), we find that condition (11) holds if condition (30) holds. By Theorem 1, it can then be concluded that the resulting filtering error system \(\left( \tilde{\Sigma }\right) \) is strictly \(\left( W_{1},W_{2},W_{3}\right) \)-\(\alpha \)-dissipative. This completes the proof.
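The polytopic description used in this proof can be illustrated with the transition-rate intervals of Example 2 in Sect. 4. The sketch assumes that the two vertices of the polytope are the rate matrices with every off-diagonal rate at its lower bound and at its upper bound, respectively, with diagonal entries fixed by the usual zero row-sum convention \(\pi _{ii}=-\sum _{j\ne i}\pi _{ij}\); this vertex choice is an assumption, not stated in the paper. Any convex combination \(\sum _{k}\chi _{k}\Pi _{k}\) is again a valid rate matrix whose entries stay within the intervals, and because the conditions are affine in \(\pi _{ij}\left( \triangle \right) \), checking them at the vertices suffices, as in the argument above. The names `BOUNDS`, `vertex_generator` and `convex_combination` are hypothetical.

```python
import numpy as np

# Off-diagonal rate intervals pi_ij(Delta) in [lower, upper] from Example 2
# (modes 1..4 are indexed 0..3 here).
BOUNDS = {
    (0, 1): (0.0, 0.1), (0, 2): (0.1, 0.2), (0, 3): (0.1, 0.2),
    (1, 0): (0.15, 0.2), (1, 2): (0.05, 0.1), (1, 3): (0.2, 0.3),
    (2, 0): (0.1, 0.3), (2, 1): (0.1, 0.3), (2, 3): (0.0, 0.1),
    (3, 0): (0.05, 0.1), (3, 1): (0.05, 0.1), (3, 2): (0.1, 0.2),
}

def vertex_generator(bounds, which, r=4):
    """Rate matrix with every off-diagonal rate at its lower (which=0) or upper (which=1) bound."""
    Pi = np.zeros((r, r))
    for (i, j), interval in bounds.items():
        Pi[i, j] = interval[which]
    np.fill_diagonal(Pi, -Pi.sum(axis=1))   # assumed zero row-sum convention
    return Pi

def convex_combination(vertices, chi):
    """Pi(Delta) = sum_k chi_k Pi_k with chi_k >= 0 and sum_k chi_k = 1."""
    chi = np.asarray(chi, dtype=float)
    if np.any(chi < 0) or not np.isclose(chi.sum(), 1.0):
        raise ValueError("chi must be a vector of convex weights")
    return sum(c * V for c, V in zip(chi, vertices))

if __name__ == "__main__":
    vertices = [vertex_generator(BOUNDS, 0), vertex_generator(BOUNDS, 1)]
    Pi = convex_combination(vertices, [0.3, 0.7])   # one admissible Pi(Delta)
    print(np.round(Pi, 3))
```

With a common weight \(\chi _{k}\) for all entries, the segment between these two vertices contains only rate matrices whose entries move together between their bounds; letting all twelve rates vary independently within their intervals would in general call for more vertices.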

4 Numerical examples

In this section, two examples are given to illustrate the effectiveness and the improvement of the proposed design technique. In the first example, we consider a modified networked semi-Markov jump system whose parameters are borrowed from [27]; for the same problem, the proposed method yields less conservative results than those in [27]. The second example illustrates the applicability of the proposed theoretical results to the state estimation problem for the networked mass-spring system shown in Fig. 2.

A mass-spring system in Example 2

Example 1

In this example, we consider the Markov jump system \(\left( \tilde{\Sigma }\right) \) with the following parameters taken from [27]

To compare our results with those in [27], we set \(D_{1}=D_{2}=0\), \(D_{f1}=D_{f2}=0\), \(h_{1}=0.01\) s and the dissipativity parameters \(\bar{W}_{1}=-1\), \(W_{2}=0\), \(W_{3}=0.26\), \(\alpha =0.1\). The designed filter then reduces to an \(H_{\infty }\) filter with the same \( H_{\infty }\) performance level as in [27]. In addition, the semi-Markov chain \(\beta \left( t\right) \) is reduced to a Markov chain with the transition rate matrix \( \Pi =\left[ \begin{array}{cc} -0.5 &{}\quad 0.5 \\ 0.3 &{}\quad -0.3 \end{array} \right] \). As in Remark 4 of [27], we set \(S=I\); the same setting is used in the next example. Table 1 lists the maximum allowable \( h_{2}\) for different thresholds \(\lambda _{i}\).

Table 1 reveals two facts. First, the maximum allowable \( h_{2}\) decreases as \(\lambda _{i}\) increases. Second, our method tolerates a larger delay bound \(h_{2}\) than [27], which shows that the proposed method is less conservative than that of [27].

Example 2

As mentioned earlier, this example considers the networked mass-spring system shown in Fig. 2, following [3]. Here \(x_{1}\) and \(x_{2}\) are the positions of the masses \(M_{1}\) and \(M_{2}\), \(K_{c},K_{1},K_{2},K_{3},K_{4}\) are the stiffnesses of the springs, c denotes the viscous friction coefficient between the masses and the horizontal surface, and \(\omega (t)\) is the plant noise. Denoting \(x^\mathrm{T}(t)=[x_{1}^\mathrm{T}(t),x_{2}^\mathrm{T}(t),\overset{\cdot }{x} _{1}^\mathrm{T}(t),\overset{\cdot }{x}_{2}^\mathrm{T}(t)]\), the continuous-time semi-Markov jump system is described by the system \(\left( \Sigma \right) \) with the following parameters:

where \(M_{1}=1\) kg, \(M_{2}=0.5\) kg, \(K_{c}=1\) N/m, \(K_{1}=1\) N/m, \( K_{2}=1.04 \) N/m, \(K_{3}=1.09\) N/m, \(K_{4}=1.13\) N/m and \(c=0.5\) kg/s. In this example, the event-triggering thresholds are \(\lambda _{1}=0.1\), \(\lambda _{2}=0.3\), \(\lambda _{3}=0.2\), \(\lambda _{4}=0.3\), and the transition rates of the semi-Markov chain \(\beta \left( t\right) \) satisfy \(\pi _{12}(\triangle )\in [0,0.1]\), \(\pi _{13}(\triangle )\in [0.1,0.2]\), \(\pi _{14}(\triangle )\in [0.1,0.2]\), \(\pi _{21}(\triangle )\in [0.15,0.2]\), \(\pi _{23}(\triangle )\in [0.05,0.1]\), \(\pi _{24}(\triangle )\in [0.2,0.3]\), \(\pi _{31}(\triangle )\in [0.1,0.3]\), \(\pi _{32}(\triangle )\in [0.1,0.3]\), \(\pi _{34}(\triangle )\in [0,0.1]\), \(\pi _{41}(\triangle )\in [0.05,0.1]\), \(\pi _{42}(\triangle )\in [0.05,0.1]\) and \(\pi _{43}(\triangle )\in [0.1,0.2]\); in view of Remark 3, these are represented by a two-vertex polytope. The remaining parameters are chosen as \(\bar{W}_{1}=-1\), \(W_{2}=5\), \(W_{3}=15\), \(\alpha =0.1\), \(h_{1}=0.01\) s and \(h_{2}=1\) s. By solving the conditions in Theorem 2, we obtain the event-triggering parameters \(\Lambda _{1}=2.3932\), \(\Lambda _{2}=1.2039\), \(\Lambda _{3}=1.7218\), \(\Lambda _{4}=1.1336\), and the filter gains are given as

To investigate the performance of the designed dissipative filter, we take the initial conditions \(x_{0}=\left[ \begin{array}{cccc} 1.5&-0.5&0.8&-1 \end{array} \right] ^\mathrm{T}\) and \(x_{f0}=\left[ \begin{array}{cccc} 0&0&0&0 \end{array} \right] ^\mathrm{T}\), and the external disturbance is

Semi-Markov jump mode in Example 2

Release instants and intervals with an event-triggered scheme in Example 2

For a possible sequence of mode jumps of \(\beta \left( t\right) \) shown in Fig. 3, the event-triggering release instants and intervals are shown in Fig. 4, and the state responses of the closed-loop system are depicted in Fig. 5. Figure 6 shows the filtering error, which demonstrates the effectiveness of our method. In addition, over the time interval [0, 50] s with sampling period \(h=0.12\,\mathrm{s}\), only 137 sampled data packets (SDPs) are sent to the ZOH through the communication network. The transmission rate, defined as the number of successfully transmitted SDPs divided by the total number of SDPs, is therefore 32.9%, i.e., the ETS saves 67.1% of the total communication resources.
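The transmission statistics quoted above follow from simple arithmetic. The sketch assumes that the total number of sampled packets is the number of sampling instants \(\lfloor 50/0.12\rfloor =416\); counting the sample at \(t=0\) as well (417 instants) still gives 32.9% after rounding. The function name is hypothetical.

```python
def transmission_statistics(horizon: float, h: float, transmitted: int):
    """Total sampled packets, transmission rate and saving of an event-triggered scheme."""
    total = int(horizon / h)        # assumed count of sampling instants in [0, horizon]
    rate = transmitted / total      # transmitted SDPs / total SDPs
    return total, rate, 1.0 - rate

total, rate, saving = transmission_statistics(50.0, 0.12, 137)
print(total, f"{100 * rate:.1f}%", f"{100 * saving:.1f}%")   # prints: 416 32.9% 67.1%
```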

State responses with an event-triggered scheme in Example 2

Filter error with an event-triggered scheme in Example 2

5 Conclusions

In this paper, the problem of co-designing an event-triggered communication scheme and a dissipative filter for networked control systems with semi-Markov jump parameters has been investigated. A new event-triggered mechanism has been introduced to reduce the utilization of network bandwidth. Based on the Lyapunov–Krasovskii methodology and stochastic analysis, sufficient conditions for the stochastic stability and strict dissipativity of the resulting filtering error system have been established. Explicit expressions for the desired filter have then been obtained by solving a convex optimization problem. Finally, the effectiveness and superiority of the proposed method have been demonstrated by a mass-spring system and a numerical example. It is worth noting that all data packets are assumed to be received in real time, which is difficult to achieve in practice. Therefore, how to relax this assumption on the network communication when an event-triggered scheme is adopted is a significant open question. Moreover, the method presented in this paper is expected to extend to more complex systems, such as singular and nonlinear semi-Markov jump systems.

References

Ahn, C.K.: Switched exponential state estimation of neural networks based on passivity theory. Nonlinear Dyn. 67(1), 573–586 (2012)

Gaid, M.M.B., Cela, A., Hamam, Y.: Optimal integrated control and scheduling of networked control systems with communication constraints: application to a car suspension system. IEEE Trans. Control Syst. Technol. 14(4), 776–787 (2006)

Gao, H., Chen, T.: \(H_{\infty }\) estimation for uncertain systems with limited communication capacity. IEEE Trans. Autom. Control 52(11), 2070–2084 (2007)

Ge, X., Han, Q.-L.: Distributed event-triggered \(H_{\infty }\) filtering over sensor networks with communication delays. Inf. Sci. 291, 128–142 (2015)

He, S., Xu, H.: Non-fragile finite-time filter design for time-delayed Markovian jumping systems via T–S fuzzy model approach. Nonlinear Dyn. 80(3), 1159–1171 (2015)

Huang, J., Shi, Y.: \(H_{\infty }\) state-feedback control for semi-Markov jump linear systems with time-varying delays. ASME J. Dyn. Syst. Meas. Control 135(4), 041012 (2013)

Huang, J., Shi, Y.: Stochastic stability and robust stabilization of semi-Markov jump linear systems. Int. J. Robust Nonlinear Control 23(18), 2028–2043 (2013)

Kwon, O., Park, J.H., Lee, S.: Secure communication based on chaotic synchronization via interval time-varying delay feedback control. Nonlinear Dyn. 63(1–2), 239–252 (2011)

Lakshmanan, S., Park, J.H., Ji, D., Jung, H., Nagamani, G.: State estimation of neural networks with time-varying delays and Markovian jumping parameter based on passivity theory. Nonlinear Dyn. 70(2), 1421–1434 (2012)

Lee, T.H., Park, J.H., Jung, H., Lee, S., Kwon, O.: Synchronization of a delayed complex dynamical network with free coupling matrix. Nonlinear Dyn. 69(3), 1081–1090 (2012)

Lee, T.H., Park, J.H., Kwon, O.M., Lee, S.M.: Stochastic sampled-data control for state estimation of time-varying delayed neural networks. Neural Netw. 46, 99–108 (2013)

Lee, T.H., Park, J.H., Lee, S.M., Kwon, O.M.: Robust synchronisation of chaotic systems with randomly occurring uncertainties via stochastic sampled-data control. Int. J. Control 86(1), 107–119 (2013)

Lee, T.H., Qian, M., Xu, S., Park, J.H.: Pinning control for cluster synchronisation of complex dynamical networks with semi-Markovian jump topology. Int. J. Control 88(6), 1223–1235 (2015)

Li, Z., Park, J.H., Wu, Z.: Synchronization of complex networks with nonhomogeneous Markov jump topology. Nonlinear Dyn. 74, 65–75 (2013)

Liu, K., Suplin, V., Fridman, E.: Stability of linear systems with general sawtooth delay. IMA J. Math. Control Inf. 27, 419–436 (2011)

Mao, Z., Jiang, B., Shi, P.: \(H_{\infty }\) fault detection filter design for networked control systems modelled by discrete Markovian jump systems. IET Control Theory Appl. 1(5), 1336–1343 (2007)

Nilsson, J.: Real-time control systems with delays, vol. 1049. Department of Automatic Control, Lund Institute of Technology, Lund (1998)

Park, P., Ko, J.W., Jeong, C.: Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47(1), 235–238 (2011)

Peng, C., Yang, T.C.: Event-triggered communication and \(H_{\infty }\) control co-design for networked control systems. Automatica 49(5), 1326–1332 (2013)

Sakthivel, R., Selvi, S., Mathiyalagan, K.: Fault-tolerant sampled-data control of flexible spacecraft with probabilistic time delays. Nonlinear Dyn. 79(3), 1835–1846 (2015)

Seuret, A., Gouaisbaut, F.: Jensen’s and Wirtinger’s inequalities for time-delay systems. IFAC Proc. Vol. 46(3), 343–348 (2013)

Shen, H., Huang, X., Zhou, J., Wang, Z.: Global exponential estimates for uncertain Markovian jump neural networks with reaction–diffusion terms. Nonlinear Dyn. 69, 473–486 (2012)

Shen, H., Xu, S., Lu, J., Zhou, J.: Passivity based control for uncertain stochastic jumping systems with mode-dependent round-trip time delays. J. Frankl. Inst. 349, 1665–1680 (2012)

Shen, M., Park, J.H., Ye, D.: A separated approach to control of Markov jump nonlinear systems with general transition probabilities. IEEE Trans. Cybern. 46(9), 2010–2018 (2016)

Shen, M., Yan, S., Zhang, G., Park, J.H.: Finite-time \(H_{\infty }\) static output control of Markov jump systems with an auxiliary approach. Appl. Math. Comput. 273, 553–561 (2016)

Shi, Y., Yu, B.: Robust mixed \( H_2/H_{\infty }\) control of networked control systems with random time delays in both forward and backward communication links. Automatica 47, 754–760 (2011)

Wang, H., Shi, P., Agarwal, R.K.: Network-based event-triggered filtering for Markovian jump systems. Int. J. Control 89(6), 1096–1110 (2016)

Wang, H., Shi, P., Lim, C.-C., Xue, Q.: Event-triggered control for networked Markovian jump systems. Int. J. Robust Nonlinear Control 25(17), 3422–3438 (2015)

Wang, H., Shi, P., Zhang, J.: Event-triggered fuzzy filtering for a class of nonlinear networked control systems. Signal Proc. 113, 159–168 (2015)

Wu, L., Zheng, W., Gao, H.: Dissipativity-based sliding mode control of switched stochastic systems. IEEE Trans. Autom. Control 58(3), 785–793 (2013)

Wu, Z., Shi, P., Su, H., Chu, J.: Asynchronous \(l_{2}\)-\(l_{\infty }\) filtering for discrete-time stochastic Markov jump systems with randomly occurred sensor nonlinearities. Automatica 50(1), 180–186 (2014)

Xiong, J., Lam, J.: Fixed-order robust \( H_{\infty }\) filter design for Markovian jump systems with uncertain switching probabilities. IEEE Trans. Signal Proc. 54(4), 1421–1430 (2006)

Xu, H., Jagannathan, S.: Stochastic optimal controller design for uncertain nonlinear networked control system via neuro dynamic programming. IEEE Trans. Neural Netw. Learn. Syst. 24(3), 471–484 (2013)

Xu, S., Lam, J., Mao, X.: Delay-dependent \( H_{\infty }\) control and filtering for uncertain Markovian jump systems with time-varying delays. IEEE Trans. Circuits Syst. I: Regul. Pap. 54(9), 2070–2077 (2007)

Yu, B., Shi, Y., Lin, Y.: Discrete-time \(H_{2}\) output tracking control of wireless networked control systems with Markov communication models. Wireless Commun. Mobile Comput. 11(8), 1107–1116 (2011)

Yue, D., Tian, E., Han, Q.-L.: A delay system method for designing event-triggered controllers of networked control systems. IEEE Trans. Autom. Control 58(2), 475–481 (2013)

Zhang, X.-M., Han, Q.-L.: Event-triggered dynamic output feedback control for networked control systems. IET Control Theory Appl. 8(4), 226–234 (2014)

Zhang, B., Lam, J., Xu, S.: Stability analysis of distributed delay neural networks based on relaxed Lyapunov–Krasovskii functionals. IEEE Trans. Neural Netw. Learn. Syst. 26(7), 1480–1492 (2015)

Zhang, L.X., Leng, Y., Colaneri, P.: Stability and stabilization of discrete-time semi-Markov jump linear systems via semi-Markov kernel approach. IEEE Trans. Autom. Control 61(2), 503–508 (2016)


Additional information

This work was supported by the National Natural Science Foundation of China under Grants 61304066, 61473171, 61503002, the Natural Science Foundation of Anhui Province under Grant 1308085QF119, the Research Project of State Key Laboratory of Mechanical System and Vibration under Grant MSV201509.


About this article

Cite this article

Wang, J., Chen, M. & Shen, H. Event-triggered dissipative filtering for networked semi-Markov jump systems and its applications in a mass-spring system model. Nonlinear Dyn 87, 2741–2753 (2017). https://doi.org/10.1007/s11071-016-3224-0


DOI: https://doi.org/10.1007/s11071-016-3224-0