Abstract

This paper addresses distributed adaptive neural control for a class of uncertain non-affine nonlinear multi-agent systems with unknown control directions under switching directed topologies. Using the mean-value theorem, the non-affine dynamics of the follower agents are transformed into a structure for which control design becomes feasible. Radial basis function neural networks are then used to approximate the unknown nonlinear functions. A Nussbaum gain function technique removes both the singularity problem and the need for prior knowledge of the signs of the control gains. On the basis of dynamic surface control and the minimal learning parameter approach, a simplified design of the distributed controller for uncertain nonlinear multi-agent systems is developed. As a result, the explosion of complexity and the curse of dimensionality are counteracted simultaneously. Theoretical analysis proves that the closed-loop network system is cooperatively semi-globally uniformly ultimately bounded and that the distributed tracking errors converge to an adjustable neighborhood of the origin. Finally, simulation examples and a comparative example verify and clarify the efficiency of the proposed control approach.

1 Introduction

The design of distributed controllers for multi-agent systems (MASs) has become one of the most important topics in control theory because of the many applications of MASs in fields such as sensor networks, formation control, unmanned aerial vehicles (UAVs), and flocking models. During the past few years, two viewpoints on the distributed control of MASs have been considered. Some researchers have employed a distributed tracking approach (leader–follower consensus, or synchronization to a leader) to reach a prescribed common value; see, for instance, [1–4]. Others have applied distributed regulation control (leaderless consensus or synchronization) to reach an unprescribed common value; see, for instance, [5–8]. Compared with the leaderless case, however, the leader–follower configuration is an energy-saving mechanism that is found in many biological systems, and it can also enhance the communication and orientation of the flock [9]. With the development of distributed control approaches, many advanced control methods have been proposed for multi-agent systems, such as robust control, adaptive control, and backstepping. Moreover, combining some of these methods has improved the control performance for certain kinds of multi-agent systems. Hence, many significant results have been obtained for multi-agent systems with known dynamics, or with unknown dynamics subject to linearly parameterized uncertainties [10–16].

On the other hand, fuzzy logic systems (FLSs) [17] and neural networks (NNs) [18] have proved to be powerful tools for approximating any smooth uncertain function over a compact set. Recently, many remarkable results have been achieved for nonlinear multi-agent systems with lower-triangular structures and arbitrary uncertainties by combining adaptive backstepping with FLSs or NNs. In [19] and [20], leaderless and leader–follower adaptive neural backstepping control approaches were proposed for nonlinear uncertain second-order multi-agent systems with arbitrary uncertainties. In [21] and [22], two backstepping consensus control approaches were proposed for high-order MASs. In [23], a distributed backstepping controller was developed for nonlinear stochastic multi-agent systems. However, the control methods in [10–12] and [19–23] suffer from the explosion of complexity, especially when the order of the follower agents increases (high-order MASs) or when the number of follower agents increases (large-population MASs). To avoid this problem, dynamic surface control (DSC) was first proposed in [24]. More recently, two distributed adaptive neural DSC schemes were proposed for nonlinear strict-feedback multi-agent systems in [25] and [26].

In [19–22, 25] and [26], NNs are employed to approximate unknown nonlinear functions. In these approaches, however, the number of adaptive laws depends on the number of NN nodes. As a result, when the number of NN nodes is increased to improve approximation accuracy, the number of adaptive parameters also increases significantly, and the online learning time becomes unacceptably large. This problem is called the curse of dimensionality. Moreover, to achieve a close approximation of the nonlinear functions, it is important to choose the center vectors and the widths properly. This means that, before the distributed adaptive NN controller is designed, the basis function vector must be defined by choosing appropriate centers and widths for the Gaussian functions. If the nonlinear function is unknown, these parameters have to be selected by trial and error, which is difficult. These problems were removed in [27] for individual strict-feedback systems, without considering communication over a network, by taking the norm of the ideal NN weight vector as the estimated parameter instead of the individual elements of the weight vector. Motivated by [27], this paper develops a novel distributed adaptive NN control approach based on the minimal learning parameter (MLP) technique for uncertain nonlinear multi-agent systems. Via Young’s inequality, only one adaptive parameter needs to be tuned, regardless of the order of the follower agent and the number of NN nodes. Moreover, the proposed approach does not depend on any prior knowledge of the NNs.

One of the main features of the distributed control methods mentioned above is that they require the control inputs of the MASs to appear in affine form. In many real-world systems, however, the control inputs appear in non-affine form, so these approaches cannot be directly applied to non-affine nonlinear multi-agent systems. To the best of the authors’ knowledge, there are very few results in the literature on controlling non-affine multi-agent systems, and this remains an open and challenging topic.

On the other hand, prior knowledge of the signs of the control gains may be unavailable in practice. This is the so-called unknown control direction problem. It makes the control design much more difficult, since one cannot decide the direction along which the control operates [28]. Unfortunately, the distributed control solutions mentioned above are inadequate for multi-agent systems with unknown control directions. In [28], the Nussbaum gain function technique was shown to be a useful method for dealing with unknown control directions, and in-depth studies of unknown control directions followed in [29–32]. However, these controllers were designed to force the output of a single uncertain nonlinear system to follow a desired signal, without exploiting communication graph theory. Recently, distributed controller design for multi-agent systems with unknown control directions has become an attractive topic. As a first result, [33] proposed adaptive consensus of nonlinear multi-agent systems with unknown identical control directions. Cooperative control of linear multi-agent systems with unknown nonidentical high-frequency-gain signs was developed in [34]. However, the results in [33] and [34] are presented for multi-agent systems with double- and single-integrator models, respectively. More recently, Ding [35] studied adaptive consensus of high-order nonlinear multi-agent systems with unknown nonidentical control directions. However, the consensus control methods in [33–35] require that the follower agents’ dynamics satisfy the matching condition and that the system functions be either known or linearly parameterized, that is, the unknown parameters appear linearly with respect to known nonlinear functions.

To the best of the authors’ knowledge, distributed adaptive neural control has not been reported for non-affine uncertain nonlinear multi-agent systems with unknown control directions. To this end, this paper proposes a distributed controller for this class of nonlinear multi-agent systems. First, via the mean-value theorem, the original non-affine follower agents are converted into a structure for which control design becomes feasible. Radial basis function NNs (RBFNNs) are used to identify the unknown nonlinear functions, and the Nussbaum gain function technique is employed to deal with the unknown control directions. Additionally, the problems of “explosion of complexity” and “curse of dimensionality” are removed via the DSC and minimal learning parameter (MLP) approaches, respectively. Boundedness of all the signals in the closed-loop network is guaranteed by Lyapunov theory, and convergence of the distributed tracking errors to an adjustable neighborhood of the origin is also proved.

Compared with the cited references, the main contributions of the proposed method are as follows: (1) It is the first attempt to design a distributed adaptive neural controller for a class of nonlinear multi-agent systems in triangular structure with both nonlinearly parameterized uncertainties and unknown control directions. In comparison, [25] and [26] presented distributed adaptive neural approaches for the triangular form with known control directions, whereas the control approaches in [33–35] apply only to multi-agent systems that satisfy the matching condition and have linearly parameterized uncertainties; (2) via Young’s inequality, the number of adaptive parameters in the proposed distributed controller is dramatically reduced, so that only one adaptive parameter is tuned for each follower agent. The proposed controller is also independent of prior knowledge of the NNs. As a result, the computational burden is significantly alleviated and a simpler adaptive NN controller is obtained than in [25] and [26]; (3) the proposed adaptive control method removes the “explosion of complexity” of the conventional backstepping design and is therefore much simpler than the existing distributed adaptive backstepping controllers in [19–23]; and (4) unlike [29–32, 37], and [38], this paper considers multiple uncertain nonlinear systems, which are regarded as follower agents and a leader agent under a switching graph topology.

The remainder of this paper is organized as follows. In Sect. 2, problem statement, assumptions, and preliminaries are given. Section 3 is devoted to controller design and its stability analysis. In Sects. 4 and 5, simulation results and conclusions are reported, respectively.

2 Preliminaries and problem formulation

2.1 Preliminaries

2.1.1 Graph theory

To solve the coordination problem and model the information exchange between agents, a brief introduction to graph theory is presented here, following [40].

Let \(G=\left\{ {v,E} \right\} \) be a directed weighted graph of order N, where \(v=\left\{ {v_1 ,\ldots ,v_N } \right\} \) denotes the set of agents, \(E\subseteq v\times v\) denotes the set of edges, and \(e_{ij} =(v_i ,v_j )\in E\) if and only if there exists an information exchange from agent i to agent j. The adjacency matrix \(A=[a_{ij} ]\in R^{N\times N}\) represents the topology of the directed graph, with \(a_{ij} >0\) if \((v_i ,v_j )\in E\) and \(a_{ij} =0\) otherwise. The value \(a_{ij} \) associated with the edge \(e_{ij} \) denotes the communication quality from the ith agent to the jth agent. Throughout this paper, it is assumed that \(a_{ii} =0\) and that the graph topology associated with the communication among agents may change over time; in other words, the adjacency matrix is time-varying. Furthermore, \(G=\left\{ {G_1 ,\ldots ,G_l } \right\} \) denotes the set of all possible communication topologies between agents. The Laplacian matrix is defined as

$$\begin{aligned} L=D-A, \end{aligned}$$

where \(L\in R^{N\times N}\) and \(D=diag(d_1 ,\ldots ,d_N )\) is the weighted degree matrix, with \(d_i =\sum \nolimits _{j=1}^N {a_{ij} }\) the weighted degree of node i. A directed graph has a directed spanning tree if there exists an agent, called the root, such that there is a directed path from this agent to every other agent. Finally, define the leader adjacency matrix as \(B_0 =diag(b_i )\in R^{N\times N} \), where \(b_i >0\) if and only if the ith agent has access to the leader information; otherwise \(b_i =0.\) Also, H denotes \(L+B_{0}\).
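The graph quantities above can be assembled mechanically. The following is a minimal sketch, assuming the standard definition \(L=D-A\) with row-sum degrees; the three-follower chain topology and the helper names `laplacian` and `leader_following_matrix` are hypothetical and are not taken from the paper.

```python
def laplacian(adj):
    """Return L = D - A for a weighted adjacency matrix given as nested lists."""
    n = len(adj)
    lap = [[-adj[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        lap[i][i] += sum(adj[i])  # d_i = sum_j a_ij (row sum)
    return lap


def leader_following_matrix(adj, b):
    """Return H = L + B0, where B0 = diag(b_1, ..., b_N)."""
    h = laplacian(adj)
    for i, b_i in enumerate(b):
        h[i][i] += b_i
    return h


# Hypothetical chain: leader -> follower 1 -> follower 2 -> follower 3.
# Here a[i][j] > 0 is read as "follower i uses the output of follower j".
A = [[0, 0, 0],
     [1, 0, 0],
     [0, 1, 0]]
b = [1, 0, 0]  # only follower 1 has access to the leader

L = laplacian(A)
H = leader_following_matrix(A, b)
print(L)  # every row of L sums to zero
print(H)
```

For this chain, H is lower triangular with a positive diagonal and is therefore nonsingular, which is what a spanning tree rooted at the leader is expected to provide.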

2.1.2 NN approximation property

Owing to universal approximation property, learning, and fault tolerance, NNs have been widely used for the identification and control of uncertain nonlinear systems. In this paper, RBFNNs will be applied to approximate smooth uncertain nonlinear functions. RBFNNs can approximate any unknown continuous function \(f(Z):\mathfrak {R}^q \rightarrow \mathfrak {R}\) as

where \(Z\in \varOmega _Z \subset \mathfrak {R}^q \) is the input vector and q denotes the neural network input dimension. \(\Theta =[\theta _1 ,\ldots ,\theta _l ]^T \in \mathfrak {R}^l \) is the weight vector and \(l>1\) denotes the number of neural network nodes. \(\varPhi (Z)=[\phi _1 (Z),\ldots ,\phi _l (Z)]^T \in \mathfrak {R}^l \) is the basis function vector, where the activation function \(\phi _i (Z)\) is defined as follows:

where \(\mu _i \in \varOmega _Z \) and \(\eta _i >0\) are the center and the width of the Gaussian function, respectively [18]. For any unknown nonlinear function f(Z) defined over a compact set \(\varOmega _Z \subset \mathfrak {R}^q \), there exist a neural network \(\Theta ^{{*}^T }\varPhi (Z)\) and a term \(\delta (Z)\) such that

$$\begin{aligned} f(Z)=\Theta ^{{*}^T }\varPhi (Z)+\delta (Z), \end{aligned}\tag{3}$$

where \(\Theta ^{*}\) is the ideal constant weight vector defined by

and \(\delta (Z)\) denotes the approximation error and it satisfies \(\left| {\delta (Z)} \right| \le \varepsilon \).
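To make the approximator concrete, the sketch below evaluates \(\varPhi (Z)\) and \(\Theta ^T\varPhi (Z)\). It assumes the commonly used Gaussian form \(\phi _i (Z)=\exp (-\Vert Z-\mu _i \Vert ^2/\eta _i^2 )\); the centers, widths, and weights are hypothetical placeholders rather than values from the paper.

```python
import math


def gaussian_basis(z, centers, widths):
    """Phi(Z): one Gaussian per node, exp(-||Z - mu_i||^2 / eta_i^2) (assumed form)."""
    phi = []
    for mu, eta in zip(centers, widths):
        dist_sq = sum((zk - mk) ** 2 for zk, mk in zip(z, mu))
        phi.append(math.exp(-dist_sq / eta ** 2))
    return phi


def rbfnn_output(theta, z, centers, widths):
    """Theta^T Phi(Z) for a weight vector theta with one entry per node."""
    return sum(t * p for t, p in zip(theta, gaussian_basis(z, centers, widths)))


# Hypothetical network with q = 2 inputs and l = 3 nodes.
centers = [(-1.0, -1.0), (0.0, 0.0), (1.0, 1.0)]
widths = [2.0, 2.0, 2.0]
theta = [0.5, -1.0, 0.25]

phi = gaussian_basis((0.0, 0.0), centers, widths)
print(phi)
print(rbfnn_output(theta, (0.0, 0.0), centers, widths))
```

Because every \(\phi _i \) lies in (0, 1], the product \(\varPhi ^T\varPhi \) never exceeds the number of nodes l, so an upper bound on it is available without any tuning of centers or widths.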

2.2 Problem formulation

Consider a network of uncertain non-affine nonlinear strict-feedback systems consisting of N follower agents and one leader agent. The dynamics of the ith follower agent are described by

where \(\overline{{x}}_{i,n_i } =[x_{i,1} ,x_{i,2} ,\ldots ,x_{i,n_i } ]^{T}\in \mathfrak {R}^{n_i }\) (with \(x_i =\overline{{x}}_{i,n_i }\)) is the state vector of the ith agent, and \(u_i \in \mathfrak {R}\) and \(y_i \in \mathfrak {R}\) are the control input and the output of the ith agent, respectively. Here \(\overline{{x}}_{i,k} =[x_{i,1} ,x_{i,2} ,\ldots ,x_{i,k} ]^{T}\in \mathfrak {R}^{k}\) for \(i=1,\ldots ,N\), \(k=1,\ldots ,n_i -1\), and \(f_{i,k} (\overline{{x}}_{i,k} ):\mathfrak {R}^{k}\rightarrow \mathfrak {R}\) and \(f_{i,n_i } (u_i ,\overline{{x}}_{i,n_i } ):\mathfrak {R}^{n_i +1} \rightarrow \mathfrak {R}\) are unknown nonlinear affine and non-affine functions, respectively.

The leader agent, labeled as \(i=0\), is described by the following dynamics

where \(x_L \in \mathfrak {R}\) is a time-varying state of the leader agent and \(f(x_L ,t)\) is a bounded unknown function.

Control objective: The control objective is to design a distributed adaptive NN controller for a group of uncertain nonlinear systems consisting of follower agents (4) and leader agent (5) such that all the signals in the closed-loop network remain cooperatively semi-globally uniformly ultimately bounded (CSGUUB) and the distributed tracking errors converge to an adjustable neighborhood of the origin.

To achieve the mentioned control objectives, we require some definitions and assumptions as follows:

Assumption 1

The state \(x_{i,1}\) of the ith follower agent is known and available only to the jth follower agents satisfying \(i\in N_j\), \(i=1,\ldots , N\), \(j=1,\ldots , N\), and \(i\ne j\).

Remark 1

In contrast to the related literature [10–13, 19–23, 25] and [26], the proposed design procedure requires only the followers’ own states and the output information exchanged between followers and their neighbors, owing to the MLP approach. Hence, when the group has a large number of agents, the proposed approach is more practical than existing results for the design of distributed controllers for MASs in block-triangular structures.

Assumption 2

The graph G consists of N follower agents and a leader, which contains a spanning tree rooted at the leader at all times.

Assumption 3

The leader output signal \(x_L \in R\) is bounded, known, and available for the ith follower agent if \(b_i \ne 0\). Also, its derivative \(\dot{x}_L \in R\) is bounded.

Definition 1

The trajectory \(\{x_i (t),t\ge 0\}\) of the nonlinear follower agent (4) with initial condition \(x_i (t_0 )\in \varOmega _{i0} \) (where \(\varOmega _{i0} \) is some compact set including the origin) under the communication graph is said to be cooperatively semi-globally uniformly ultimately bounded if there exist a constant \(\varepsilon _i >0\) and a time constant \(T_i (x_i (t_0 ),\varepsilon _i )>0\) such that \(\left\| {x_i (t)} \right\| \le \varepsilon _i\) for all \(t\ge t_0 +T_i\).

Definition 2

[30] An even function \(N(\zeta ):\mathfrak {R}\rightarrow \mathfrak {R}\) is called a Nussbaum gain function, if the following properties hold

There are many functions satisfying the above conditions such as \(\zeta ^{2}\cos (\zeta )\) and \(\zeta ^{2}\cos ((\pi /2)\zeta )\).
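As a quick sanity check on the first example, the sketch below evaluates the running mean \(\frac{1}{s}\int _0^s N(\zeta )\mathrm{d}\zeta \) for \(N(\zeta )=\zeta ^{2}\cos (\zeta )\). It assumes the standard Nussbaum properties from the adaptive-control literature, namely that the lim sup of this mean is \(+\infty \) and its lim inf is \(-\infty \). The closed-form antiderivative \(s^2\sin s+2s\cos s-2\sin s\) is obtained by integrating by parts; the function names are illustrative.

```python
import math


def nussbaum(zeta):
    """Even Nussbaum-type gain N(zeta) = zeta^2 * cos(zeta)."""
    return zeta ** 2 * math.cos(zeta)


def running_mean(s):
    """(1/s) * integral_0^s N(zeta) d zeta, using the closed-form antiderivative."""
    antiderivative = s ** 2 * math.sin(s) + 2 * s * math.cos(s) - 2 * math.sin(s)
    return antiderivative / s


# Sample the mean where sin(s) = +1 and where sin(s) = -1.
for k in (1, 10, 100):
    s_up = math.pi / 2 + 2 * math.pi * k
    s_down = 3 * math.pi / 2 + 2 * math.pi * k
    print(k, running_mean(s_up), running_mean(s_down))
```

Along the first sequence the mean grows roughly like s, and along the second like −s, so the mean swings between arbitrarily large positive and negative values. This alternation is what lets the controller probe both possible control directions.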

Lemma 1

[30] Let V(t) and \(\zeta (t)\) be smooth functions which are defined on \([0,t_f )\), if there exist positive constants \(C_1 \) and \(C_2 \) satisfying the following inequality:

where \(g_{\mu _i } (t)\) is a time-varying parameter that takes values in the unknown closed interval \(I=[l^{-} ,l^{+} ]\) with \(0\notin I\). Then, \(e^{-C_1 t}\int _0^t \sum \nolimits _{i=1}^N \kappa _i \left( g_{\mu _i } (\tau )N(\zeta _i (\tau ))+1\right) \dot{\zeta }_i (\tau )e^{C_1 \tau }\mathrm{d}\tau \), \(\zeta _i (t)\), and V(t) must be bounded on \([0,t_f )\).

Lemma 2

[36] For \(\forall (x,y)\in \mathfrak {R}^2 \), the following inequality holds:

where \(\varepsilon >0,\;p>1,\;q>1\) and \((p-1)(q-1)=1\).
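The following numerical spot check illustrates Lemma 2. It assumes the widely used form of Young’s inequality, \(xy\le \frac{\varepsilon ^p}{p}\left| x \right| ^p+\frac{1}{q\varepsilon ^q}\left| y \right| ^q\); the sample grid and the helper name `young_rhs` are arbitrary choices for illustration.

```python
from itertools import product


def young_rhs(x, y, eps, p):
    """Right-hand side (eps^p / p)|x|^p + |y|^q / (q eps^q), with (p-1)(q-1) = 1."""
    q = p / (p - 1.0)  # equivalent to 1/p + 1/q = 1
    return (eps ** p / p) * abs(x) ** p + abs(y) ** q / (q * eps ** q)


samples = [-3.0, -0.5, 0.0, 0.7, 2.0]
holds = all(
    x * y <= young_rhs(x, y, eps, p) + 1e-12  # tolerance for the equality case
    for x, y in product(samples, repeat=2)
    for eps in (0.1, 1.0, 5.0)
    for p in (1.5, 2.0, 4.0)
)
print(holds)
```

With p = q = 2 the bound reads \(xy\le \frac{\varepsilon ^2}{2}x^2+\frac{1}{2\varepsilon ^2}y^2\), which is the case typically used to split cross terms in Lyapunov derivatives.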

3 Main results

In this section, a DSC design procedure is employed to construct the distributed adaptive neural controller. The main idea is that the RBFNNs are employed to identify the uncertain nonlinear functions and the conventional adaptive methodology is used to estimate the upper bound of the norms of NNs weight vectors. Finally, a distributed adaptive neural DSC is designed via appropriate control Lyapunov functions. Furthermore, the Nussbaum function technique is utilized to remove requirement of the prior knowledge of the control gain sign.

For simplicity, we first introduce the unknown constant \(W_i^*\), which is specified as follows:

where \(N_{i,k} \ge \phi _{i,k}^T (\cdot )\phi _{i,k}(\cdot ),\) and \(W_i^*\) denotes the norm of the ideal weight vector of the neural network.

3.1 Distributed controller development

Similar to the traditional backstepping design method, the design procedure contains \(n_i \) steps. The \(n_i \)-step distributed adaptive neural DSC design is based on the following change of coordinates for \(i=1,\ldots ,N\), \(k=2,\ldots ,n_i \):

where \(z_{i,1} \) is the distributed error surface, \(z_{i,k} \) is the error surface and \(\pi _{i,k} \) is the state variable which is obtained through a first-order filter on an intermediate function \(\alpha _{i,k-1}\). Also, \(v_{i,k}\) is the filter error.
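The role of the first-order filter can be seen in a small simulation. This is a sketch under the assumption that the filter has the usual DSC form \(\ell \dot{\pi }+\pi =\alpha \), \(\pi (0)=\alpha (0)\), with filter error \(\pi -\alpha \); the sinusoidal test signal, the time constants, and the forward-Euler step size are hypothetical choices.

```python
import math


def simulate_filter(alpha, ell, t_end, dt=1e-3):
    """Forward-Euler integration of ell * d(pi)/dt + pi = alpha(t), pi(0) = alpha(0)."""
    pi = alpha(0.0)
    t = 0.0
    history = []
    for _ in range(int(round(t_end / dt))):
        pi += dt * (alpha(t) - pi) / ell
        t += dt
        history.append((t, pi, pi - alpha(t)))  # (time, filter state, filter error)
    return history


def max_filter_error(alpha, ell, t_end=10.0, dt=1e-3):
    """Largest absolute filter error over the simulated horizon."""
    return max(abs(err) for _, _, err in simulate_filter(alpha, ell, t_end, dt))


# A smooth stand-in for an intermediate control function alpha_{i,k-1}(t).
print(max_filter_error(math.sin, ell=0.05))
print(max_filter_error(math.sin, ell=0.01))
```

A smaller time constant gives a smaller filter error, and the filter supplies \(\dot{\pi }=(\alpha -\pi )/\ell \) algebraically, so the intermediate control function never has to be differentiated analytically. That is how DSC sidesteps the explosion of complexity.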

Note that the directed communication graph has an infinite sequence of uniformly bounded, nonoverlapping time intervals \([t_l ,t_{l+1} ),\;\;l=0,1,\ldots ,n\) with \(t_0 =0,t_{l+1} -t_l >0\) across which the communication graph is time invariant. The time sequence \(t_1 ,t_2 ,\ldots \) is called the switching sequence: the communication graph changes at these instants but is fixed during each interval \(t\in [t_l ,t_{l+1} )\); in other words, \(a_{ij} (t)=a_{ij}\) and \(b_i (t)=b_i \) for \(t\in [t_l ,t_{l+1} )\). In the following design, we study the case \(t\in [t_l ,t_{l+1} )\).
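The piecewise-constant topology can be sketched as a lookup on the switching sequence. The code assumes the usual neighborhood error \(z_{i,1} =\sum _{j} a_{ij} (y_i -y_j )+b_i (y_i -x_L )\) for the distributed error surface; the two topologies are hypothetical placeholders, and only the single switching instant at 30 s is borrowed from the numerical example.

```python
def distributed_errors(adj, b, y, x_leader):
    """z_{i,1} = sum_j a_ij (y_i - y_j) + b_i (y_i - x_L) for each follower i (assumed form)."""
    n = len(y)
    return [
        sum(adj[i][j] * (y[i] - y[j]) for j in range(n)) + b[i] * (y[i] - x_leader)
        for i in range(n)
    ]


# Hypothetical topologies; both contain a spanning tree rooted at the leader.
TOPOLOGIES = [
    {"adj": [[0, 0, 0], [1, 0, 0], [0, 1, 0]], "b": [1, 0, 0]},  # chain
    {"adj": [[0, 0, 0], [1, 0, 0], [1, 0, 0]], "b": [1, 0, 0]},  # star below follower 1
]
SWITCH_TIMES = [30.0]  # switching sequence t_1, t_2, ...


def active_topology(t):
    """Topology that is fixed on the interval [t_l, t_{l+1}) containing t."""
    index = sum(1 for t_switch in SWITCH_TIMES if t >= t_switch)
    return TOPOLOGIES[index]


y = [0.1, -0.3, 0.0]
for t in (10.0, 30.0):
    top = active_topology(t)
    print(t, distributed_errors(top["adj"], top["b"], y, x_leader=0.0))
```

Only the third follower’s error changes across the switch, because only its neighbor set differs between the two placeholder graphs.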

Step 1: By differentiating (9) along (4), one gets

Design the intermediate control function as follows:

where \(c_{i,1} >0\) and \(r_{i,1} >0\) are design parameters and \(\hat{{W}}_i \) is the estimation of \(W_i^*\).

Choose the Lyapunov function candidate as

By (10), (11), and (12), one has

in which

is an unknown nonlinear function. According to (3), for any given constant \(\delta _{i,1} (\overline{{Z}}_{i,1} )>0\), there exists the RBFNN \(\theta _{i,1}^{{*}T} \varphi _{i,1} (\overline{{Z}}_{i,1})\) such that

where \(\overline{{Z}}_{i,1} =[\overline{{x}}_{i,1} ,\overline{{x}}_{j,2} ,z_{i,1} ,\dot{x}_L ]^T\), \(j\in N_i\), and \(\delta _{i,1} (\overline{{Z}}_{i,1} )\) is the minimum approximation error, which satisfies \(\left| {\delta _{i,1} (\overline{{Z}}_{i,1} )} \right| \le \varepsilon _{i,1}\).

To reduce the number of tuned parameters for RBFNN, Young’s inequality is used such that only one learning parameter needs to be tuned for each follower agent as

Substituting (13) and (18) into (15) results in

where \(\tilde{W}_i=W_i^*-\hat{{W}}_i\) is the parameter error.

Introduce a new state variable \(\pi _{i,2}\) and let \(\alpha _{i,1} \) pass through a first-order filter with time constant \(\ell _{i,2} >0\) as

By defining the filter error \(\nu _{i,2} =\pi _{i,2} -\alpha _{i,1}\) and using (20), one gets

where \(j\in N_i \) and \(B_{i,2} (\cdot )\) is a continuous function with the following expression

Step k \((2\le k\le n_i -1):\) From (10), time derivative of kth error surface is defined as follows:

Design the intermediate control function as follows:

where \(c_{i,k} >0\) and \(r_{i,k} >0\) are design parameters.

Consider the Lyapunov function candidate as

Applying (10), (11), (21), and (22), one has

in which

is an unknown nonlinear function. Now, we utilize the RBFNN \(\theta _{i,k}^{{*}T} \varphi _{i,k} (\overline{{Z}}_{i,k} )\) to approximate unknown continuous function \(\overline{{f}}_{i,k} (\overline{{Z}}_{i,k} )\) as

where \(\overline{{Z}}_{i,k} =[\overline{{x}}_{i,k} ,z_{i,k} ]^T\) and \(\delta _{i,k} (\overline{{Z}}_{i,k} )\) is the minimum approximation error, which satisfies \(\left| {\delta _{i,k} (\overline{{Z}}_{i,k} )} \right| \le \varepsilon _{i,k}\).

According to (18), one obtains

Substituting (23) and (28) into (25) yields

Similar to the previous step, the first-order filter is defined as follows:

By defining the filter error \(\nu _{i,k+1} =\pi _{i,k+1} -\alpha _{i,k} \) and using (30), one gets

where \(B_{i,k+1} (\cdot )\) is a continuous function with the following expression

Step \(n_i\): From (10), the time derivative of the \(n_{i}\)th error surface is as follows:

Now, to simplify the distributed controller design, the mean-value theorem [36] is used to convert unknown non-affine functions into a structure, which is similar to affine form as follows:

where \(g_{\mu _i } :=g_i (\overline{{x}}_{i,n_i } ,u_{\mu _i } )=\left. \frac{\partial f_{i,n_i } (\overline{{x}}_{i,n_i } ,u_i )}{\partial u_i }\right| _{u_i =u_{\mu _i }}\), \(i=1,\ldots ,N\), and \(u_{\mu _i } :=\mu _i u_i +(1-\mu _i )u_i^0 \) is some unknown point between \(u_i\) and \(u_i^0 \), with \(0<\mu _i <1\).

Assumption 4

The sign of \(g_{\mu _i } \in \mathfrak {R}\) is unknown, and there exist unknown positive constants \(\underline{g}_i \) and \(\overline{{g}}_i \) such that \(\underline{g}_i \le \left| {g_{\mu _i } } \right| \le \overline{{g}}_i <\infty \).
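As an illustration of the mean-value step and of Assumption 4, consider only the input-dependent part \(-3u+\sin (u)\) of \(f_{1,2}\) from the numerical example in Sect. 4.1. With \(u^0 =0\), the mean-value theorem gives \(f(u)=f(0)+g_\mu u\) with \(g_\mu =-3+\cos (u_\mu )\), so \(2\le \left| {g_\mu } \right| \le 4\) and the gain is negative. The sketch below checks this numerically; the sampling grid and function names are arbitrary illustrative choices.

```python
import math


def f_input_part(u):
    """Input-dependent part of f_{1,2} in the numerical example: -3u + sin(u)."""
    return -3.0 * u + math.sin(u)


def equivalent_gain(u, u0=0.0):
    """g_mu such that f(u) = f(u0) + g_mu * (u - u0); requires u != u0."""
    return (f_input_part(u) - f_input_part(u0)) / (u - u0)


inputs = [k / 10.0 for k in range(-200, 201) if k != 0]
gains = [equivalent_gain(u) for u in inputs]
print(min(gains), max(gains))
```

Every sampled gain lies in [−4, −2]: the non-affine term behaves like an affine one with a bounded, sign-definite, but unknown gain, which is the setting that Assumption 4 describes.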

By substituting (33) into (32) and choosing \(u_i^0 =0\), we have

Take the Lyapunov function candidate as

Using similar procedure as step k, it follows

in which

Similarly, for any \(\delta _{i,n_i } (\overline{{Z}}_{i,n_i } )>0\), the RBFNN \(\theta _{i,n_i }^{{*}T} \varphi _{i,n_i } (\overline{{Z}}_{i,n_i })\) is used to approximate the unknown function \(\overline{{f}}_{i,n_i }\). According to (3), \(\overline{{f}}_{i,n_i } \) is defined as

where \(\overline{{Z}}_{i,n_i } =[\overline{{x}}_{i,n_i } ,z_{i,n_i }]^T\) and \(\delta _{i,n_i } (\overline{{Z}}_{i,n_i } )\) is the minimum approximation error, which satisfies \(\left| {\delta _{i,n_i } (\overline{{Z}}_{i,n_i } )} \right| \le \varepsilon _{i,n_i }\).

By exploiting the method utilized in (18), one obtains

Then, design the actual control function and Nussbaum function signal as follows:

where \(\kappa _i >0,\;c_{i,n_i } >0\) and \(r_{i,n_i } >0\) are design parameters.

Using (39), (40) and (41), (36) becomes

3.2 Stability analysis

In this subsection, the semi-global boundedness of all signals in the closed-loop network system is proved via Lyapunov’s direct method. Furthermore, it is shown that all error signals in the closed-loop network converge to an adjustable neighborhood of the origin by choosing appropriate design parameters.

Theorem 1

Consider the closed-loop network system consisting of non-affine nonlinear follower agents (4) and a dynamic leader agent (5) under switching directed topologies and Assumptions 1–4. Via control laws (13), (23) and (40), adaptive law as

and first-order filters (20) and (30), for any bounded initial conditions the following expressions are guaranteed

(1) all signals in the closed-loop network remain CSGUUB;

(2) distributed tracking errors converge to an adjustable compact set as follows:

$$\begin{aligned} \varOmega _{z_{i,1} } =\left\{ {\sum \limits _{i=1}^N {\left| {z_{i,1} } \right| ^{2}} \le 2c_0 } \right\} , \end{aligned}\tag{44}$$

(3) the other error signals in the closed-loop network converge to an adjustable compact set as follows:

$$\begin{aligned} \varOmega _{\mathrm{errors}}=\left\{ \sum \limits _{i=1}^N \sum \limits _{k=2}^{n_i } \left| z_{i,k} \right| ^{2} \le 2c_0 ,\; \sum \limits _{i=1}^N \sum \limits _{k=2}^{n_i } \left| v_{i,k} \right| ^{2} \le 2c_0 ,\; \sum \limits _{i=1}^N \left| \tilde{W}_i \right| ^{2}\le \sum \limits _{i=1}^N 2\gamma _i c_0 \right\} , \end{aligned}\tag{45}$$

where \(c_0 >0\) is given in the proof.

Proof

To prove the stability of closed-loop network system consisting of all the follower agents and a leader agent, the whole Lyapunov function candidate is given as

where \(\gamma _i >0\) is a design parameter.

By differentiating (46) and using (19), (29), and (42), one obtains

Applying (43) and choosing

(47) becomes

where \(c_i >0\) is a design parameter.

Based on the Young’s inequality, we have

Let \(A_{i,j} =\{V_{i,k} \le 2\upsilon _{i,k}\}\), where \(i=1,\ldots ,N\), \(j=1,\ldots ,k\), and \(\upsilon _{i,j} \) is a positive constant. Since \(A_{i,j} \subset R^{\dim (A_{i,j} )} \) and \(\varOmega _L \subset R\) are compact sets, \(A_{i,j} \times \varOmega _L\) is also a compact set in \(R^{\dim (A_{i,j} )+1}\). Hence, \(B_{i,k} (\cdot )\) attains a maximum on \(A_{i,j} \times \varOmega _L \), so that \(\left| {B_{i,k} (\cdot )} \right| \le M_{i,k}\). Therefore, one gets

where \(\varpi _{i,k} \) is a design parameter. Now, by choosing

and according to (50), (49) becomes

where \({c}'_i >0\) is a design parameter.

Now, by defining constants as

(54) becomes

This implies that V(t) is bounded during each interval \([t_l ,t_{l+1} )\). As time increases, it follows from (57) and Lemma 1 that all the error signals in the closed-loop network system are bounded. Furthermore, by invoking (46) and using the Rayleigh–Ritz inequality [36], the ultimate bounds of the error signals in the closed-loop network are obtained as follows:

Note the fact that

Applying Assumption 2, we have

Then, using Assumption 3, we have

where \(e_i =y_i -x_L \) is the consensus error, \(\overline{{e}}=[e_1 ,\ldots ,e_N ]^T , \quad \overline{{z}}_{N,1} =[z_{1,1} ,\ldots ,z_{N,1} ]^T ,\quad \overline{{1}}=[1,\ldots ,1]^T \in \mathfrak {R}^N , \quad \overline{{y}}=[y_1 ,\ldots ,y_N ]^T \) and \(\underline{\sigma }(H)\) denotes minimum singular value of H.

The signals \(z_{i,k}\), \(v_{i,k}\), and \(\tilde{W}_i\), for \(i=1,\ldots ,N\), \(k=1,\ldots ,n_{i}\), in the closed-loop system are bounded. Further, \(\hat{{W}}_i\) is bounded because \(W_i^*\) is a constant. According to (64), \(y_{i}\), \(i=1,\ldots ,N\), is bounded. Since \(z_{i,k}\), \(v_{i,k}\), and \(\hat{{W}}_i \) are all bounded for \(i=1,\ldots ,N\), \(k=1,\ldots ,n_{i}\), it follows that \(\alpha _{i,k}\) is bounded for \(i=1,\ldots ,N\), \(k=1,\ldots ,n_{i}-1\). Also, from (20) and (30), \(\pi _{i,k}\) is bounded for \(i=1,\ldots ,N\), \(k=2,\ldots ,n_{i}\). Using \(x_{i,k}=z_{i,k} +\pi _{i,k}\) and the boundedness of \(z_{i,k}\) and \(\pi _{i,k}\), the boundedness of \(x_{i,k}\) follows for \(i=1,\ldots ,N\), \(k=2,\ldots ,n_{i}\). Finally, according to (40) and (41), the Nussbaum function signal and the control input are also bounded. Hence, all the signals in the closed-loop system are bounded.

This completes the proof. \(\square \)

Remark 2

In the proposed control design, asymptotic stability cannot be achieved because of the use of RBFNNs and the DSC strategy. However, as proved above, all the error signals in the closed-loop network converge to ultimate bounds. Two ways to reduce the sizes of the ultimate bounds are suggested: (1) increasing \(C_{1}\) and (2) decreasing \(C_{2}\). Increasing \(C_{1}\) not only reduces the sizes of the ultimate bounds but also increases the convergence rate of the closed-loop error signals to them. To attain these objectives, the design parameters should be adjusted as follows:

(1) Increasing the control gains reduces the sizes of the ultimate bounds. However, it makes the control inputs very large during the transient, which is not acceptable in practical applications.

(2) Decreasing the sigma-modification factors reduces the sizes of the ultimate bounds. However, it may lead to parameter drift in the adaptive laws.
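The trade-off in Remark 2 can be illustrated with a deliberately simplified bound. The sketch assumes the inequality \(\dot{V}\le -C_1 V+C_2 \) without the Nussbaum integral term (which Lemma 1 shows to be bounded), so that \(V(t)\le C_2 /C_1 +(V(0)-C_2 /C_1 )e^{-C_1 t}\). The numerical values and helper names are hypothetical.

```python
import math


def lyapunov_bound(t, v0, c1, c2):
    """Comparison-lemma bound for dV/dt <= -c1 * V + c2 (simplified, no Nussbaum term)."""
    return c2 / c1 + (v0 - c2 / c1) * math.exp(-c1 * t)


def settling_time(v0, c1, c2, margin=0.01):
    """Time for the bound to come within `margin` of c2 / c1 (needs v0 > c2/c1 + margin)."""
    return math.log((v0 - c2 / c1) / margin) / c1


for c1 in (2.0, 4.0):
    print(c1, lyapunov_bound(50.0, 5.0, c1, 1.0), settling_time(5.0, c1, 1.0))
```

Doubling \(C_1 \) halves the ultimate bound \(C_2 /C_1 \) and also shortens the settling time, whereas lowering \(C_2 \) shrinks only the ultimate bound. This matches the qualitative statements above.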

Remark 3

In the adaptive control design methods for MASs proposed in [19–21, 25] and [26], neural networks (or fuzzy systems) are applied to approximate the uncertain nonlinearities. To achieve smaller approximation errors for the uncertain functions, it is therefore important to choose the center and width vectors well. For uncertain functions, however, these parameters have to be selected by trial and error, and it is difficult to select them properly for the basis function vector (or membership functions). Moreover, in those approaches the number of tuned parameters depends directly on the order of each agent and on the number of NN nodes (or fuzzy rules). In the proposed approach, by contrast, a priori knowledge of the centers of the receptive fields and the widths of the Gaussian functions is not necessary, and only one adaptive parameter needs to be updated for each agent, regardless of the agent’s order and the number of NN nodes, which makes the obtained results more useful in practice.

Remark 4

If the first equation in the multi-agent system (4) becomes \(\dot{x}_{i,k} =f_{i,k} (\overline{{x}}_{i,k+1} )\), where \(f_{i,k} (\overline{{x}}_{i,k+1} )\ne 0\) is an unknown nonlinear function and the sign of its derivative with respect to \(x_{i,k+1} \) is unknown, the above distributed adaptive control scheme is still applicable with the following slight modifications: the intermediate control functions (13) and (23) are modified as follows for \(i=1,\ldots ,N\), \(k=1,\ldots ,n_i -1\)

where \(\dot{\pi }_{i,1} =0\).

4 Simulation study

In this section, two simulation examples and one simulation comparison are provided to illustrate the effectiveness and merits of the proposed distributed adaptive neural control approach.

4.1 Numerical example

Consider uncertain non-affine nonlinear follower agents with unknown control directions as follows:

where \(i=1,2,3\). Unknown nonlinear functions in the dynamics’ follower agents are \(f_{1,1} (x_{1,1} )=-0.9x_{1,1} +x_{1,1}^2 , \quad f_{1,2} (\overline{{x}}_{1,2} ,u_1 )= -3u_1 +\sin (u_1 )+x_{12} \sin (x_{1,1} ), f_{2,1} (x_{2,1} )= -x_{2,1} ,f_{2,2} (\overline{{x}}_{2,2} ,u_2 )= -7u_2 -\frac{u_2^3 }{3}+x_{1,2}^2 +0.5x_{2,1} \cos (x_{2,1}^2 ),f_{3,1} (x_{3,1})=x_{3,1} \sin (x_{3,1}),f_{3,2} (\overline{{x}}_{3,2} ,u_3)=-9u_3 -0.4x_{3,2} \sin (x_{3,1}).\)

To achieve the mentioned control objectives, the design parameters for the intermediate control law (13), the actual control law (40), the first-order filter (20), and the adaptive law (43) are chosen as \(c_{1,1} =10\), \(c_{1,2} =6\), \(c_{2,1} =20\), \(c_{2,2} =3\), \(c_{3,1} =30\), \(c_{3,2} =2\), \(\ell _{1,2} =\ell _{2,2} =\ell _{3,2} =100\), \(\kappa _1 =100\), \(\kappa _2 =2\), \(\kappa _3 =100\), \(\gamma _1 =\gamma _2 =\gamma _3 =50\), and \(\sigma _1 =\sigma _2 =\sigma _3 =0.001\).

The leader agent output is assumed to be \(x_L =\sin (t)\). The initial states of the three follower agents and the distributed controller are chosen as \(x_1 (0)=[0.1,0]^{T}\), \(x_2 (0) =[-0.3,0]^{T}\), \(x_3 (0)=[0,1]^{T}\), \(\zeta _1 (0)=0.9\), \(\zeta _2 (0)=0.1\), and \(\zeta _3 (0)=0.35\); all others are set to zero.

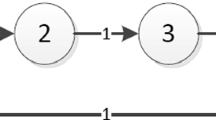

Suppose that the possible communication graphs among the agents are \(G=\left\{ {G_1 ,G_2 } \right\} \), as shown in Fig. 1, where nodes \(F_1 ,F_2 \) and \(F_3 \) represent the follower agents and node L represents the leader agent. The graphs switch at \(t=30\) s according to \(G_1 \rightarrow G_2\).
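The switching rule can be sketched in a few lines of Python. The edge sets below are hypothetical placeholders, since the actual edges of \(G_1\) and \(G_2\) appear only in Fig. 1. The helper names, the convention that \(G_2\) is active from exactly \(t=30\) s onward, and the matrix \(H=L+B\) (Laplacian plus leader adjacency) are likewise assumptions made for illustration.

```python
SWITCH_TIME = 30.0  # seconds, as stated in the text

# HYPOTHETICAL edge sets (not taken from Fig. 1).
# A[i][j] = 1 means follower i receives information from follower j;
# b[i] = 1 means follower i receives information from the leader.
GRAPHS = {
    1: {"A": [[0, 0, 0], [1, 0, 0], [0, 1, 0]], "b": [1, 0, 0]},
    2: {"A": [[0, 0, 0], [1, 0, 0], [1, 0, 0]], "b": [1, 0, 0]},
}


def active_graph(t):
    """Return the index (1 or 2) of the graph that is active at time t."""
    return 1 if t < SWITCH_TIME else 2


def laplacian_plus_leader(graph):
    """Return H = L + B for a graph given by adjacency A and leader links b."""
    A, b = graph["A"], graph["b"]
    n = len(A)
    H = [[-A[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        # Diagonal entry: in-degree from followers plus the leader link.
        H[i][i] = sum(A[i]) + b[i]
    return H


if __name__ == "__main__":
    for t in (0.0, 29.9, 30.0, 45.0):
        k = active_graph(t)
        print(t, k, laplacian_plus_leader(GRAPHS[k]))
```

In a closed-loop simulation, `active_graph(t)` would select which neighbor set each follower uses when forming its distributed tracking error at time `t`.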

Applying the proposed adaptive neural control approach to the nonlinear multi-agent system (67) with unknown control directions yields the simulation results plotted in Figs. 2–7. Figure 2 shows the trajectories of the followers’ outputs and the leader’s output. An enlarged portion of the tracking trajectories is given in Fig. 3. Figure 4 indicates the boundedness and convergence of the consensus errors in both the transient and steady states. Figure 5 demonstrates that the adaptive parameters are bounded. Figure 6 shows the Nussbaum function signals, and Fig. 7 exhibits the other states of the follower agents. From the results in Figs. 2–7, one concludes that the proposed distributed adaptive neural control guarantees that all signals in the closed-loop network system remain bounded and that it achieves good tracking performance in spite of the unstructured uncertainties, the non-affine follower agents, and the lack of prior knowledge of the control gain signs.
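To give a feel for the Nussbaum function signals mentioned above, the following sketch evaluates one commonly used Nussbaum-type function, \(N(\zeta )=\zeta ^2\cos (\zeta )\). This particular choice is an assumption made for illustration, because the function used by the controller is not restated in this section. The function name is also hypothetical.

```python
import math


def nussbaum(zeta):
    """A common Nussbaum-type function, N(zeta) = zeta^2 * cos(zeta).

    ASSUMPTION: this specific form is chosen for illustration and may
    differ from the function used in the controller design.
    """
    return zeta ** 2 * math.cos(zeta)


if __name__ == "__main__":
    # Initial values of zeta_i from the numerical example.
    for zeta0 in (0.9, 0.1, 0.35):
        print(zeta0, nussbaum(zeta0))
    # The sign alternates with growing magnitude as zeta increases,
    # which lets the adaptive gain probe both control directions.
    for zeta in (math.pi, 2 * math.pi, 3 * math.pi):
        print(zeta, nussbaum(zeta))
```

The alternating sign and growing amplitude are what allow a Nussbaum gain to cope with an unknown control direction without estimating the sign explicitly.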

4.2 An application example

Consider a nonlinear multi-agent system consisting of four followers and one leader. Each follower is a ship steering model with unknown control direction, and its dynamics are given by [39]:

where \(i=1,2,3,4\). For simplicity, the steering models of all ships are assumed to be identical. The unknown functions in the ship steering model are \(f_{i,1} (x_{i,1} )=0\) and \(f_{i,2} (x_{i,2} ) =-\frac{1}{21}x_{i,2} -\frac{0.3}{21}x_{i,2}^3 \), and the unknown control gains are \(b_i =\frac{0.23}{21}\).
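The ship model can be exercised in open loop with a short sketch. It assumes the second-order structure \(\dot{x}_{i,1} =x_{i,2} +f_{i,1}\), \(\dot{x}_{i,2} =f_{i,2} (x_{i,2} )+b_i u_i\), which is inferred from the functions listed above rather than quoted from the model equation. The function names, the forward-Euler integrator, and the step size are illustrative choices.

```python
B = 0.23 / 21.0  # control gain b_i from the text


def f2(x2):
    """Unknown drift term f_{i,2}(x_{i,2}) from the text."""
    return -x2 / 21.0 - (0.3 / 21.0) * x2 ** 3


def ship_step(x, u, dt):
    """One forward-Euler step of the ASSUMED structure
    x1' = x2 (since f_{i,1} = 0), x2' = f2(x2) + B * u."""
    x1, x2 = x
    dx1 = x2
    dx2 = f2(x2) + B * u
    return (x1 + dt * dx1, x2 + dt * dx2)


def simulate(x0, u_of_t, t_end, dt=0.01):
    """Integrate from state x0 over [0, t_end] with input u_of_t(t)."""
    x = x0
    steps = int(round(t_end / dt))
    for k in range(steps):
        x = ship_step(x, u_of_t(k * dt), dt)
    return x


if __name__ == "__main__":
    # Ship 1 initial state from the text, zero input (open loop).
    print(simulate((1.0, -2.0), lambda t: 0.0, 50.0))
```

With zero input, the second state decays toward zero because the drift term is dissipative, while the first state drifts accordingly; the distributed controller replaces the zero input in the actual study.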

In the simulation, the design parameters are chosen as \(c_{1,1} =40\), \(c_{1,2} =10\), \(c_{2,1} =100\), \(c_{2,2} =40\), \(c_{3,1} =100\), \(c_{3,2} =30\), \(c_{4,1} =100\), \(c_{4,2} =10\), \(\ell _{1,2} =\ell _{2,2} =\ell _{3,2} =\ell _{4,2} =100\), \(\kappa _1 =\kappa _2 =\kappa _3 =\kappa _4 =1000\), \(r_{1,1} =r_{1,2} =r_{2,1} =r_{2,2} =r_{3,1} =r_{3,2} =r_{4,1} =r_{4,2} =5\), \(\gamma _1 =\gamma _2 =\gamma _3 =\gamma _4 =1\), and \(\sigma _1 =\sigma _2 =\sigma _3 =\sigma _4 =0.1\).

The leader output is assumed to be \(x_L =\sin (t)+0.5\sin (0.5t)\). The initial states of the four ships and the distributed controller are chosen as \(x_1 (0)=[1,-2]^{T}\), \(x_2 (0)=[2,0]^{T}\), \(x_3 (0)=[2,1]^{T}\), \(x_4 (0)=[0,3]^{T}\), \(\zeta _1 (0)= 0.9\), \(\zeta _2 (0)=0.1\), \(\zeta _3 (0)=0.9\), and \(\zeta _4 (0)=0.4\); all others are set to zero.

Consider the communication topology shown in Fig. 8, where the leader information is available only to ship 1. The simulation results are depicted in Figs. 9–13. The trajectory tracking is plotted in Fig. 9, which shows that the proposed control approach solves the trajectory tracking problem. Figures 10 and 11 show that both the distributed and the consensus tracking errors are bounded, which illustrates the theoretical results. Furthermore, the adaptive parameters shown in Fig. 12 are also bounded. Figure 13 displays the other states of the ships during synchronized tracking.

Remark 5

It should be emphasized that the number of adaptive parameters is dramatically reduced in the proposed controller and is equal to the number of follower agents. However, by defining \(W_{i,k}^*=\left\| {\theta _{i,k}^*} \right\| ^{2}\), \(i=1,\ldots ,N\), \(k=1,\ldots ,n_i\), and choosing the Lyapunov function as \(V=\sum \nolimits _{i=1}^N \sum \nolimits _{k=1}^{n_i } \left\{ \frac{1}{2}z_{i,k}^2 +\frac{\gamma _{i,k} }{2r_{i,k} }\tilde{W}_{i,k}^2 \right\} \), the control inputs and adaptive laws can be obtained as follows for \(i=1,\ldots ,N\):

where \(\dot{\pi }_{i,1} \) denotes 0.

4.3 A quantitative comparison

To compare quantitatively the control performance of the proposed controller in Theorem 1, the controller in Remark 5, and the modified controller in [25], the Integral of Absolute Consensus Error (IACE), the Integral of Absolute Distributed Error (IADE), and the Maximum Absolute Value of Consensus Error (MAVCE) are employed as performance indexes. These control approaches are applied to the uncertain nonlinear multi-agent system given in the numerical example. All design parameters and initial conditions are chosen as in the numerical example. The simulation results are depicted in Figs. 14–16, and the results of the quantitative comparison are shown in Table 1.
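The three indexes can be computed from sampled error trajectories along the following lines. This is a minimal sketch that assumes each integral index sums the absolute errors over all followers before integrating, and that the maximum is taken over all followers and all samples; the exact definitions used for Table 1 are not spelled out in this section. The function names and the trapezoidal rule are illustrative choices.

```python
def integral_abs(t, errors):
    """Trapezoidal integral of sum_i |e_i(t)| over the sampled times t.

    errors[k] holds the per-agent errors at time t[k].
    ASSUMPTION: errors are summed over agents before integrating.
    """
    total = 0.0
    for k in range(1, len(t)):
        left = sum(abs(e) for e in errors[k - 1])
        right = sum(abs(e) for e in errors[k])
        total += 0.5 * (left + right) * (t[k] - t[k - 1])
    return total


def max_abs(errors):
    """Largest absolute error over all agents and all samples."""
    return max(abs(e) for row in errors for e in row)


if __name__ == "__main__":
    # Tiny synthetic record with two agents, for illustration only.
    t = [0.0, 1.0, 2.0]
    consensus_errors = [[1.0, -1.0], [0.0, 0.0], [1.0, -1.0]]
    print(integral_abs(t, consensus_errors))  # IACE-style index
    print(max_abs(consensus_errors))          # MAVCE-style index
```

Passing the consensus errors to `integral_abs` gives an IACE-style value, while passing the distributed errors gives an IADE-style value.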

From Figs. 14–16 and Table 1, it can be concluded that the proposed control method achieves better tracking performance than both the controller in Remark 5 and the modified controller in [25]. In addition, the proposed control method does not depend on any prior knowledge of the NNs, and the number of adaptive parameters is dramatically reduced.

Remark 6

From (64), we have

Furthermore, from (58)–(61) and Assumption 3, it follows that

Then, from (10), (11) and (13), we have

and

for \(k=3,\ldots ,n_i\).

Therefore, the compact approximation region \(\varOmega _{iZ} \) of the ith RBFNN inputs is described as follows:

where \(j\in N_i\).

Remark 7

In [37] and [38], two adaptive neural control schemes were presented for SISO non-affine nonlinear systems with unknown control direction. In [29], an adaptive control approach was proposed for MIMO non-affine nonlinear systems. However, the results in [29, 37] and [38] apply only to individual non-affine nonlinear systems. On the other hand, [33] studied adaptive consensus control for multi-agent systems with unknown identical control directions, and [34] proposed a cooperative control for multi-agent systems with unknown nonidentical high-frequency-gain signs. Nevertheless, the multi-agent systems considered in [33] and [34] are described by double-integrator and single-integrator models, respectively. Recently, an adaptive consensus scheme was developed in [35] for high-order multi-agent systems with unknown nonidentical control directions. However, the control approaches of [33–35] require that the follower agents’ dynamics satisfy matching conditions, that the uncertain nonlinear functions be linearly parameterized, and that the control inputs appear in affine form. Therefore, the control approaches in [29, 33–35, 37] and [38] cannot be applied to the multi-agent systems (4).

5 Conclusions

This paper has proposed a distributed adaptive neural control for a class of uncertain non-affine nonlinear multi-agent systems with unknown control directions. Via the mean-value theorem, the non-affine follower agents have been transformed into structures for which control design becomes feasible. Then, RBFNNs have been applied to approximate the uncertain nonlinear functions. The Nussbaum gain function technique has been employed to handle the difficulty arising from unknown control directions. In addition, the explosion of complexity and the curse of dimensionality have been removed in the designed controller by the DSC and MLP approaches, respectively. The proposed distributed controller guarantees that all signals in the closed-loop network system remain bounded.

It is worthwhile to mention that this paper aims to take a first step in studying distributed control design for strict-feedback multi-agent systems with unknown control direction. Owing to certain technical obstacles, a number of open problems remain for future research. Some of them are listed below:

1. it is worthwhile to extend the distributed scheme to other types of multi-agent systems, such as discrete-time and stochastic systems;
2. containment control design under unknown control directions deserves consideration;
3. heuristic Nussbaum-gain-type functions are of interest for dealing with the unknown control direction problem;
4. other interesting problems include investigating the effects of certain communication network factors, such as stochastic noise, quantization, time delay, and nonlinearity;
5. distributed output-feedback control design and distributed control with partial tracking-error constraints for multi-agent systems are also important issues to be addressed.

References

1. Hong, Y., Chen, G., Bushnell, L.: Distributed observers design for leader-following control of multi-agent networks. Automatica 44(3), 846–850 (2008)

2. Li, W., Chen, Z., Liu, Z.: Leader-following formation control for second-order multi-agent systems with time-varying delay and nonlinear dynamics. Nonlinear Dyn. 72(4), 803–812 (2013)

3. Liu, Y., Jia, Y.: Adaptive leader-following consensus control of multi-agent systems using model reference adaptive control approach. IET Control Theory Appl. 6(13), 2002–2008 (2013)

4. Ren, W., Moore, K.L., Chen, Y.: High-order and model reference consensus algorithms in cooperative control of multivehicle systems. J. Dyn. Syst. Meas. Control 129(5), 678–688 (2007)

5. Chen, L., Hou, Z.H., Tan, M.: A mean square consensus protocol for multi-agent systems with communication noise and fixed topology. IEEE Trans. Autom. Control 59(1), 261–267 (2014)

6. Ren, W.: Distributed leaderless algorithms for networked Euler–Lagrange systems. Int. J. Control 82(11), 2137–2149 (2009)

7. Ren, W., Beard, R.W., Atkins, E.M.: Information consensus in multivehicle cooperative control. IEEE Control Syst. Mag. 27(2), 71–82 (2007)

8. Nuno, E., Ortega, R., Hill, D.: Synchronization of networks of non-identical Euler–Lagrange systems with uncertain parameters and communication delays. IEEE Trans. Autom. Control 56(4), 934–941 (2011)

9. Andersson, M., Wallander, J.: Kin selection and reciprocity in flight formation. Behav. Ecol. 15(1), 158–162 (2004)

10. Cheng, L., Hou, Z.G., Tan, M.: Decentralized adaptive consensus control of multi-manipulator system with uncertain dynamics. In: Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Singapore. IEEE, pp. 2712–2717 (2008)

11. Cheng, L., Hou, Z.G., Tan, M.: Multi-agent based adaptive consensus control for multiple manipulators with kinematic uncertainties. In: Proceedings of the IEEE International Symposium on Intelligent Control, San Antonio, TX. IEEE, pp. 189–194 (2008)

12. Cheng, L., Hou, Z.G., Tan, M.: Decentralized adaptive leader follower control of multi manipulator system with uncertain dynamics. In: Proceedings of the 34th Annual Conference of IEEE Industrial Electronics, Orlando, FL. IEEE, pp. 1608–1613 (2008)

13. Lu, J., Kurths, J., Cao, J., Mahdavi, N., Huang, C.: Synchronization control for nonlinear stochastic dynamical networks: pinning impulsive strategy. IEEE Trans. Neural Netw. Learn. Syst. 23(2), 285–292 (2012)

14. Yu, H., Tu, Z., Xia, X., Jian, J., Shen, Y.: Decentralized adaptive consensus control of multi-agent in networks with switching topologies. In: Proceedings of the 11th IEEE International Conference on Control and Automation, Taichung. IEEE, pp. 694–699 (2014)

15. Chen, W., Li, X.: Observer-based consensus of second-order multi-agent system with fixed and stochastically switching topology via sampled data. Int. J. Robust Nonlinear Control 24(3), 567–584 (2014)

16. Zhang, H., Lewis, F.L.: Adaptive cooperative tracking control of higher-order nonlinear systems with unknown dynamics. Automatica 48(7), 1432–1439 (2012)

17. Wang, L.X.: Adaptive Fuzzy Systems and Control. Prentice Hall, Englewood Cliffs (1994)

18. Polycarpou, M.M.: Stable adaptive neural control scheme for nonlinear systems. IEEE Trans. Autom. Control 41(3), 447–451 (1996)

19. Hou, Z.G., Cheng, L., Tan, M.: Decentralized robust adaptive control for the multi-agent system consensus problem using neural networks. IEEE Trans. Syst. Man Cybern. Part B Cybern. 39(3), 636–647 (2009)

20. Cheng, L., Hou, Z.G., Tan, M., Lin, Y., Zhang, W.: Neural-network-based adaptive leader-following control for multi-agent systems with uncertainties. IEEE Trans. Neural Netw. 21(8), 1351–1358 (2010)

21. Huang, J., Dou, L., Fang, H., Chen, J., Yang, Q.: Distributed backstepping-based adaptive fuzzy control of multiple high-order nonlinear dynamics. Nonlinear Dyn. 81(1–2), 63–75 (2015)

22. Peng, J., Ye, X.: Distributed adaptive controller of the output-synchronization of networked systems in semi strict feedback form. J. Frankl. Inst. 351(1), 412–428 (2014)

23. Li, W., Zhang, J.F.: Distributed practical output tracking of high-order stochastic multi-agent systems with inherent nonlinear drift and diffusion terms. Automatica 50(12), 3231–3238 (2014)

24. Swaroop, D., Hedrick, J.K., Yip, P.P., Gerdes, J.C.: Dynamic surface control for a class of nonlinear systems. IEEE Trans. Autom. Control 45(10), 1893–1899 (2000)

25. Yoo, S.J.: Distributed consensus tracking for multiple uncertain nonlinear strict feedback systems under a directed graph. IEEE Trans. Neural Netw. Learn. Syst. 24(4), 666–672 (2013)

26. Yoo, S.J.: Distributed adaptive containment control of uncertain nonlinear multi-agent systems in strict-feedback form. Automatica 49(7), 2145–2153 (2013)

27. Zhou, Q., Shi, P., Xu, S., Li, H.: Observer based adaptive neural network control for nonlinear stochastic systems with time delay. IEEE Trans. Neural Netw. Learn. Syst. 24(1), 71–80 (2013)

28. Nussbaum, R.D.: Some remarks on a conjecture in parameter adaptive control. Syst. Control Lett. 3(5), 243–246 (1983)

29. Arefi, M.M., Jahed-Motlagh, M.R.: Observer-based adaptive neural control of uncertain MIMO nonlinear systems with unknown control direction. Int. J. Adapt. Control Signal Process. 27(9), 741–754 (2013)

30. Shi, W.: Observer-based fuzzy adaptive control for multi input multi output nonlinear systems with a nonsymmetric control gain matrix and unknown control direction. Fuzzy Sets Syst. 263, 1–26 (2015)

31. Li, T., Li, Z., Wang, D., Philip Chen, C.L.: Output-feedback adaptive neural control for stochastic nonlinear time-varying delay systems with unknown control direction. IEEE Trans. Neural Netw. Learn. Syst. 26(6), 1188–1201 (2015)

32. Xia, X., Zhang, T.: Adaptive output feedback dynamic surface control of nonlinear systems with unmodeled dynamics and unknown high-frequency gain sign. Neurocomputing 143, 312–321 (2014)

33. Chen, W., Li, X., Ren, W., Wen, C.: Adaptive consensus of multi-agent systems with unknown identical control directions based on a novel Nussbaum-type function. IEEE Trans. Autom. Control 59(7), 1887–1892 (2014)

34. Peng, J., Ye, X.: Cooperative control of multiple heterogeneous agents with unknown high-frequency-gain signs. Syst. Control Lett. 68, 51–56 (2014)

35. Ding, Z.: Adaptive consensus output regulation of a class of nonlinear systems with unknown high-frequency gain. Automatica 51(1), 348–355 (2015)

36. Slotine, J.J.E., Li, W.P.: Applied Nonlinear Control. Prentice Hall, Englewood Cliffs (1991)

37. Arefi, M.M., Ramezani, Z., Jahed-Motlagh, M.R.: Observer-based adaptive robust control of nonlinear non-affine systems with unknown gain sign. Nonlinear Dyn. 78(3), 2185–2194 (2014)

38. Yu, Z., Jin, Z., Du, H.: Adaptive neural control for a class of non-affine stochastic non-linear systems with time-varying delay: a Razumikhin–Nussbaum method. IET Control Theory Appl. 61(1), 14–23 (2012)

39. Du, J., Guo, C.S., Zhou, Y.: Adaptive autopilot design of time-varying uncertain ships with completely unknown control coefficient. IEEE J. Ocean. Eng. 32(2), 346–352 (2007)

40. Diestel, R.: Graph Theory. Springer, Berlin (2000)

Shahvali, M., Shojaei, K. Distributed adaptive neural control of nonlinear multi-agent systems with unknown control directions. Nonlinear Dyn 83, 2213–2228 (2016). https://doi.org/10.1007/s11071-015-2476-4

DOI: https://doi.org/10.1007/s11071-015-2476-4