Abstract

Recently, operational matrices were adapted for solving several kinds of fractional differential equations (FDEs). The use of numerical techniques in conjunction with operational matrices of some orthogonal polynomials, for the solution of FDEs on finite and infinite intervals, produced highly accurate solutions for such equations. This article discusses spectral techniques based on operational matrices of fractional derivatives and integrals for solving several kinds of linear and nonlinear FDEs. More precisely, we present the operational matrices of fractional derivatives and integrals, for several polynomials on bounded domains, such as the Legendre, Chebyshev, Jacobi and Bernstein polynomials, and we use them with different spectral techniques for solving the aforementioned equations on bounded domains. The operational matrices of fractional derivatives and integrals are also presented for orthogonal Laguerre and modified generalized Laguerre polynomials, and their use with numerical techniques for solving FDEs on a semi-infinite interval is discussed. Several examples are presented to illustrate the numerical and theoretical properties of various spectral techniques for solving FDEs on finite and semi-infinite intervals.


1 Introduction

Many phenomena in engineering, physics and other sciences can be modeled successfully by mathematical tools inspired by fractional calculus, that is, the theory of derivatives and integrals of non-integer order [1–19]. Such models often describe physical phenomena more accurately. Consequently, FDEs have emerged in recent years as an interdisciplinary area of research. The non-local nature of fractional derivatives makes them well suited to accurately simulating diverse natural phenomena with long memory [20–35].

In recent years, there has been considerable interest in employing spectral methods for the numerical solution of many types of integral and differential equations, owing to their flexibility of implementation over finite and infinite intervals [36–47]. A major advantage of spectral methods is their speed of convergence: for smooth solutions they converge at exponential rates and reach a high level of accuracy. Spectral methods can be classified into three types, namely the collocation [48–52], tau [53–56] and Galerkin [57–59] methods. Spectral methods are among the most accurate discretization techniques for a wide range of differential equations. They employ linear combinations of orthogonal polynomials as basis functions and therefore often lead to accurate approximate solutions [60, 61]. Spectral methods based on polynomial systems defined on bounded intervals, such as the Jacobi polynomials and their special cases, or the (non-orthogonal) Bernstein polynomials, are applicable only to FDEs on bounded domains [62, 63]. On the other hand, several problems in finance, plasma physics, porous media, dynamical processes and engineering are posed on unbounded domains. For such problems, spectral methods based on orthogonal systems on the half line, such as the modified generalized Laguerre polynomials and their special cases, are available [64–67].

From the numerical point of view, several techniques have been adapted for approximating the solution of FDEs in bounded domains. Saadatmandi and Dehghan [68] presented a Legendre tau scheme, combined with the Caputo fractional operational matrix of Legendre polynomials, for the numerical solution of multi-term FDEs. Doha et al. [69] derived the Jacobi operational matrix of the Caputo fractional derivative and applied it, in conjunction with the spectral tau scheme using Jacobi polynomials as basis functions, to solve linear multi-term FDEs. The Chebyshev [53] and Legendre [68] operational matrices can be obtained as special cases of the Jacobi operational matrix [69]. Recently, Kazem et al. [70] defined new orthogonal functions based on Legendre polynomials to obtain an efficient spectral technique for multi-term FDEs. The authors of [71] extended this definition and presented the operational matrices of fractional derivatives and integrals for such functions to construct a new tau technique for solving two-dimensional FDEs. Moreover, Ahmadian et al. [72] adopted the operational matrix of fractional derivatives for Legendre polynomials, combined with the tau method, to solve a class of fuzzy FDEs. However, with a few notable exceptions, little work has been done on the use of spectral methods for solving these important classes of FDEs in unbounded domains.

The operational matrices of fractional derivatives and fractional integrals of generalized Laguerre polynomials were investigated for solving multi-term FDEs on a semi-infinite interval in [66], where generalized Laguerre spectral tau and collocation techniques were also studied for solving linear and nonlinear FDEs on the half line. These spectral techniques were further developed and generalized by means of the modified generalized Laguerre polynomials in [67]. In addition, the authors of [73, 74] presented a Caputo fractional extension of the classical Laguerre polynomials and proposed new C-Laguerre functions.

Other techniques for solving FDEs, fractional integro-differential equations and fractional optimal control problems include the variational iteration method [75, 76], the Adomian decomposition method [77], the operational matrix of B-spline functions [78], the operational matrix of Jacobi polynomials [69, 79], Jacobi collocation [80], the operational matrix of Chebyshev polynomials [81], Legendre collocation [82, 83], pseudo-spectral methods [60], the operational matrix of Laguerre polynomials [84] and others [85–89].

The objective of this article is to present a broad survey of recently proposed spectral methods for solving FDEs on bounded and unbounded domains. The operational matrices of fractional derivatives and integrals for some orthogonal polynomials on bounded and unbounded domains are presented and employed in combination with spectral tau and collocation schemes for solving several kinds of linear and nonlinear FDEs. More precisely, we present the construction of the shifted Legendre operational matrix (SLOM), shifted Chebyshev operational matrix (SCOM), shifted Jacobi operational matrix (SJOM), Laguerre operational matrix (LOM), modified generalized Laguerre operational matrix (MGLOM) and Bernstein operational matrix (BOM) of fractional derivatives and integrals, which are employed with the tau method to provide efficient numerical schemes for solving linear FDEs. We also combine the BOM of fractional derivatives with the collocation method for solving linear FDEs. Finally, we present the shifted Jacobi collocation (SJC) and the modified generalized Laguerre collocation (MGLC) methods for solving fractional initial and boundary value problems of order \(\nu >0\) with nonlinear terms, in which the nonlinear FDE is collocated at the \(N\) zeros of the orthogonal functions. Several illustrative examples confirm the high accuracy and effectiveness of combining operational matrices with spectral techniques for solving FDEs on bounded and unbounded domains.

The remainder of this paper is organized as follows. Section 2 introduces some relevant definitions from fractional calculus. Section 3 is devoted to orthogonal polynomials and polynomial approximations. Section 4 presents the SLOM, SCOM, SJOM, LOM, MGLOM and BOM of Caputo fractional derivatives, and Sect. 5 presents the SCOM, LOM, SJOM and MGLOM of Riemann–Liouville fractional integrals. Section 6 employs spectral methods based on shifted Jacobi, modified generalized Laguerre and Bernstein polynomials, in combination with the SJOM, MGLOM and BOM, for solving FDEs with linear and nonlinear terms. Finally, Sect. 7 presents several examples to illustrate the main ideas of this survey.

2 Preliminaries and notations

In this section, we recall some fundamental definitions and properties of fractional calculus theory which are used in the sequel.

Definition 1

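The Riemann–Liouville fractional integral \(J^{\nu }f(x)\) of order \(\nu >0\) is defined by
\[ J^{\nu }f(x)=\frac{1}{\varGamma (\nu )}\int _{0}^{x}(x-t)^{\nu -1}f(t)\,\mathrm{d}t,\qquad x>0,\qquad J^{0}f(x)=f(x). \]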

Definition 2

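The Caputo fractional derivative of order \(\nu >0\) is defined by
\[ D^{\nu }f(x)=\frac{1}{\varGamma (m-\nu )}\int _{0}^{x}(x-t)^{m-\nu -1}f^{(m)}(t)\,\mathrm{d}t,\qquad x>0, \]
where \(m-1<\nu <m\), \(m\in N^{+}\) and \(\varGamma (.)\) denotes the Gamma function; for \(\nu =m\), \(D^{\nu }f(x)=f^{(m)}(x)\).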

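For \(\beta >-1\), the fractional integral operator satisfies
\[ J^{\nu }x^{\beta }=\frac{\varGamma (\beta +1)}{\varGamma (\beta +1+\nu )}x^{\beta +\nu }, \]
while the Caputo fractional derivative satisfies \(D^{\nu }C=0\) for any constant \(C\) and
\[ D^{\nu }x^{\beta }={\left\{ \begin{array}{ll} 0, &{} \beta \in N_0\ \text {and}\ \beta <\lceil \nu \rceil , \\ \dfrac{\varGamma (\beta +1)}{\varGamma (\beta +1-\nu )}x^{\beta -\nu }, &{} \beta \in N_0\ \text {and}\ \beta \ge \lceil \nu \rceil \ \text {or}\ \beta \notin N\ \text {and}\ \beta >\lfloor \nu \rfloor , \end{array}\right. } \]
where \(\lfloor \nu \rfloor \) and \(\lceil \nu \rceil \) are the floor and ceiling functions, respectively, while \(N=\{1,2,\ldots \}\) and \(N_0=\{0,1,2,\ldots \}\).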
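As a quick sanity check of the power rules, the following Python sketch (our own illustration; all function names are hypothetical) evaluates \(J^{\nu }x^{\beta }=\frac{\varGamma (\beta +1)}{\varGamma (\beta +1+\nu )}x^{\beta +\nu }\) in closed form and compares it with a direct quadrature of the Riemann–Liouville integral. The quadrature uses the substitution \(v=(x-t)^{\nu }\), which turns \(J^{\nu }f(x)\) into \(\frac{1}{\varGamma (\nu +1)}\int _0^{x^{\nu }}f(x-v^{1/\nu })\,\mathrm{d}v\) and removes the weak singularity of the kernel. This is our choice, not a method from the text.

```python
import math


def rl_integral_power(beta, nu, x):
    """Closed form J^nu x^beta = Gamma(beta+1)/Gamma(beta+1+nu) * x^(beta+nu), beta > -1."""
    return math.gamma(beta + 1) / math.gamma(beta + 1 + nu) * x ** (beta + nu)


def caputo_power(k, nu, x):
    """Caputo derivative D^nu x^k for an integer k >= 0:
    zero if k < ceil(nu), otherwise Gamma(k+1)/Gamma(k+1-nu) * x^(k-nu)."""
    if k < math.ceil(nu):
        return 0.0
    return math.gamma(k + 1) / math.gamma(k + 1 - nu) * x ** (k - nu)


def rl_integral_numeric(f, nu, x, n=2000):
    """J^nu f(x) by composite Simpson's rule after substituting v = (x - t)^nu,
    so that (x - t)^(nu - 1) dt = dv / nu and the integrand has no kernel singularity."""
    b = x ** nu
    h = b / n  # n must be even for Simpson's rule

    def g(v):
        # max(...) guards against a tiny negative argument caused by rounding at v = b
        return f(max(0.0, x - v ** (1.0 / nu)))

    s = g(0.0) + g(b)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(i * h)
    return s * h / 3 / math.gamma(nu + 1)
```

For example, `rl_integral_numeric(lambda t: t ** 2, 0.5, 0.7)` agrees with `rl_integral_power(2, 0.5, 0.7)` to many digits, and `caputo_power(1, 1.5, x)` is zero because \(1<\lceil 1.5\rceil \).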

The Caputo fractional differentiation is a linear operation,
\[ D^{\nu }\left( \lambda f(x)+\mu g(x)\right) =\lambda D^{\nu }f(x)+\mu D^{\nu }g(x), \]

where \(\lambda ,\ \mu \in R\).

Lemma 1

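If \(m-1<\nu \le m,\ m\in N,\) then
\[ D^{\nu }J^{\nu }f(x)=f(x),\qquad J^{\nu }D^{\nu }f(x)=f(x)-\sum _{k=0}^{m-1}f^{(k)}(0^{+})\frac{x^{k}}{k!},\qquad x>0. \]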

3 Orthogonal polynomials and polynomial approximations

Orthogonal polynomials play a central role in spectral methods, so it is necessary to highlight their relevant properties. This section reviews the properties of the polynomial families used in the sequel (including the non-orthogonal Bernstein polynomials) and the fundamental results on polynomial approximation [90, 91].

3.1 Legendre polynomials

The Legendre polynomials \(L_{i}(z)\) are defined on the interval \([-1,1]\). In order to use these polynomials on the interval \(x\in [0,1]\), we define the so-called shifted Legendre polynomials by introducing the change of variable \(z=2x-1\).

Let the shifted Legendre polynomials \(L_i{(2x-1)}\) be denoted by \(P_{i}{(x)}\). Then, \(P_{i}{(x)}\) can be obtained with the aid of the following recurrence formula:
\[ P_{i+1}(x)=\frac{(2i+1)(2x-1)}{i+1}P_{i}(x)-\frac{i}{i+1}P_{i-1}(x),\qquad i=1,2,\ldots , \]

where \(P_{0}{(x)}=1\) and \(P_{1}{(x)}=2x-1\).

The analytic form of the shifted Legendre polynomials \(P_{i}{(x)}\) of degree \(i\) is given by
\[ P_{i}(x)=\sum _{k=0}^{i}(-1)^{i+k}\frac{(i+k)!}{(i-k)!\,(k!)^{2}}x^{k}, \]

where \(P_{i}{(0)}={(-1)}^i\) and \(P_{i}{(1)}=1\).

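The orthogonality condition is
\[ \int _{0}^{1}P_{j}(x)P_{k}(x)w(x)\,\mathrm{d}x={\left\{ \begin{array}{ll} \dfrac{1}{2k+1}, &{} j=k, \\ 0, &{} j\ne k, \end{array}\right. } \]

where \(w(x)=1\).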
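A minimal Python sketch (our own illustration; the function names are hypothetical) that evaluates \(P_i(x)\) by the three-term recurrence \((i+1)P_{i+1}=(2i+1)(2x-1)P_i-iP_{i-1}\), compares it with the explicit power form, and checks the orthogonality condition exactly through the monomial moments \(\int _0^1x^{n}\,\mathrm{d}x=1/(n+1)\):

```python
import math


def shifted_legendre(i, x):
    """P_i(x) on [0, 1] from (k+1) P_{k+1} = (2k+1)(2x-1) P_k - k P_{k-1}."""
    if i == 0:
        return 1.0
    p_prev, p = 1.0, 2.0 * x - 1.0
    for k in range(1, i):
        p_prev, p = p, ((2 * k + 1) * (2 * x - 1) * p - k * p_prev) / (k + 1)
    return p


def shifted_legendre_coeffs(i):
    """Monomial coefficients [a_0, ..., a_i] of P_i from the analytic form
    a_k = (-1)^(i+k) (i+k)! / ((i-k)! (k!)^2)."""
    f = math.factorial
    return [(-1) ** (i + k) * f(i + k) / (f(i - k) * f(k) ** 2) for k in range(i + 1)]


def gram(j, k):
    """Exact integral of P_j P_k over [0, 1] (weight w = 1) from the moments 1/(p+q+1)."""
    cj, ck = shifted_legendre_coeffs(j), shifted_legendre_coeffs(k)
    return sum(a * b / (p + q + 1) for p, a in enumerate(cj) for q, b in enumerate(ck))
```

For instance, `gram(3, 3)` returns \(1/7\) up to rounding and `gram(2, 5)` is numerically zero.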

The special values

will be used later.

3.2 Chebyshev polynomials

The Chebyshev polynomials \(T_i(t)\) are defined on the interval \([-1,1]\) and can be determined with the aid of the following recurrence formula:
\[ T_{i+1}(t)=2tT_{i}(t)-T_{i-1}(t),\qquad i=1,2,\ldots , \]

where \(T_0{(t)}=1\) and \(T_1{(t)}=t\). In order to use these polynomials on the interval \(x\in [0,L]\), we define the so-called shifted Chebyshev polynomials by introducing the change of variable \(t=\dfrac{2x}{L}-1\).

Let the shifted Chebyshev polynomials \(T_i{\left( \dfrac{2x}{L}-1\right) }\) be denoted by \(T_{L,i}{(x)}\). Then, \(T_{L,i}{(x)}\) can be obtained as follows:
\[ T_{L,i+1}(x)=2\left( \frac{2x}{L}-1\right) T_{L,i}(x)-T_{L,i-1}(x),\qquad i=1,2,\ldots , \]

where \(T_{L,0}{(x)}=1\) and \(T_{L,1}{(x)}=\dfrac{2x}{L}-1\). The analytic form of the shifted Chebyshev polynomials \(T_{L,i}{(x)}\) of degree \(i\ge 1\) is given by
\[ T_{L,i}(x)=i\sum _{k=0}^{i}(-1)^{i-k}\frac{(i+k-1)!\,2^{2k}}{(i-k)!\,(2k)!\,L^{k}}x^{k}, \]

where \(T_{L,i}{(0)}={(-1)}^i\) and \(T_{L,i}{(L)}=1\).

The orthogonality condition is
\[ \int _{0}^{L}T_{L,j}(x)T_{L,k}(x)w_{L}(x)\,\mathrm{d}x=h_{k}\delta _{jk}, \]

where \(\delta _{jk}\) is the Kronecker delta, \(w_L(x)=\dfrac{1}{\sqrt{L x-x^2}}\), \(h_k=\dfrac{b_k}{2}\pi \), \(b_0=2\) and \(b_k=1\) for \(k\ge 1\).
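The shifted Chebyshev formulas can be checked in the same way. This Python sketch (our own illustration with hypothetical names) builds \(T_{L,i}\) from the recurrence \(T_{L,i+1}=2(2x/L-1)T_{L,i}-T_{L,i-1}\) and from the explicit power form, and verifies the weighted orthogonality using the moments \(\int _0^L x^{n}(Lx-x^2)^{-1/2}\,\mathrm{d}x=L^{n}B(n+\tfrac12,\tfrac12)\).

```python
import math


def shifted_chebyshev(i, x, L):
    """T_{L,i}(x) on [0, L] from T_{L,i+1} = 2(2x/L - 1) T_{L,i} - T_{L,i-1}."""
    t = 2.0 * x / L - 1.0
    if i == 0:
        return 1.0
    p_prev, p = 1.0, t
    for _ in range(1, i):
        p_prev, p = p, 2.0 * t * p - p_prev
    return p


def shifted_chebyshev_coeffs(i, L):
    """Monomial coefficients of T_{L,i} from the analytic form
    i * sum_k (-1)^(i-k) (i+k-1)! 2^(2k) / ((i-k)! (2k)! L^k) x^k  (valid for i >= 1)."""
    if i == 0:
        return [1.0]
    f = math.factorial
    return [i * (-1) ** (i - k) * f(i + k - 1) * 4 ** k / (f(i - k) * f(2 * k) * L ** k)
            for k in range(i + 1)]


def weighted_moment(n, L):
    """Integral of x^n / sqrt(L x - x^2) over [0, L] = L^n * B(n + 1/2, 1/2)."""
    return L ** n * math.gamma(n + 0.5) * math.gamma(0.5) / math.gamma(n + 1)


def gram(j, k, L):
    """Weighted inner product of T_{L,j} and T_{L,k}; expected pi (j = k = 0), pi/2 (j = k >= 1), else 0."""
    cj, ck = shifted_chebyshev_coeffs(j, L), shifted_chebyshev_coeffs(k, L)
    return sum(a * b * weighted_moment(p + q, L) for p, a in enumerate(cj) for q, b in enumerate(ck))
```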

The special values

will be used later.

3.3 Jacobi polynomials

The Jacobi polynomials \(P^{(\alpha ,\beta )}_i(t)\) are defined on the interval \([-1,1]\) and can be generated with the aid of the following recurrence formula:

where \(\alpha ,\ \beta >-1\) and

In order to use these polynomials in the interval \(x\in [0,L]\), we define the so-called shifted Jacobi polynomials by introducing the change of variable \(t=\dfrac{2x}{L}-1\).

Let the shifted Jacobi polynomials \(P^{(\alpha ,\beta )}_i{\left( \dfrac{2x}{L}-1\right) }\) be denoted by \(P^{(\alpha ,\beta )}_{L,i}{(x)}\). Then, \(P^{(\alpha ,\beta )}_{L,i}{(x)}\) can be generated from:

where

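The analytic form of the shifted Jacobi polynomials \(P^{(\alpha ,\beta )}_{L,i}{(x)}\) of degree \(i\ge 1\) is given by
\[ P^{(\alpha ,\beta )}_{L,i}(x)=\sum _{k=0}^{i}(-1)^{i-k}\frac{\varGamma (i+\beta +1)\,\varGamma (i+k+\alpha +\beta +1)}{\varGamma (k+\beta +1)\,\varGamma (i+\alpha +\beta +1)\,(i-k)!\,k!\,L^{k}}x^{k}, \]

with \(P^{(\alpha ,\beta )}_{L,0}(x)=1\).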

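The orthogonality condition of shifted Jacobi polynomials is
\[ \int _{0}^{L}P^{(\alpha ,\beta )}_{L,j}(x)P^{(\alpha ,\beta )}_{L,k}(x)w^{(\alpha ,\beta )}_L(x)\,\mathrm{d}x=h_{k}\delta _{jk}, \]

where \(w^{(\alpha ,\beta )}_L(x)=x^\beta (L-x)^\alpha \) and
\[ h_{k}=\frac{L^{\alpha +\beta +1}\varGamma (k+\alpha +1)\varGamma (k+\beta +1)}{(2k+\alpha +\beta +1)\,k!\,\varGamma (k+\alpha +\beta +1)}. \]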
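The following Python sketch (our own illustration; names are hypothetical) encodes the power form of \(P^{(\alpha ,\beta )}_{L,i}\) and the normalization constant \(h_k\), which is the standard Jacobi norm rescaled to \([0,L]\), and checks orthogonality with the Beta-function moments \(\int _0^L x^{n+\beta }(L-x)^{\alpha }\,\mathrm{d}x=L^{n+\alpha +\beta +1}B(n+\beta +1,\alpha +1)\). The case \(i=0\) is handled separately to avoid \(\varGamma (0)\) when \(\alpha +\beta =-1\) (the Chebyshev case).

```python
import math


def shifted_jacobi_coeffs(i, a, b, L):
    """Monomial coefficients of P^{(a,b)}_{L,i} on [0, L] from the analytic form
    c_k = (-1)^(i-k) G(i+b+1) G(i+k+a+b+1) / (G(k+b+1) G(i+a+b+1) (i-k)! k! L^k)."""
    if i == 0:
        return [1.0]  # P_0 = 1; avoids Gamma(0) when a + b = -1
    g, f = math.gamma, math.factorial
    return [(-1) ** (i - k) * g(i + b + 1) * g(i + k + a + b + 1)
            / (g(k + b + 1) * g(i + a + b + 1) * f(i - k) * f(k) * L ** k)
            for k in range(i + 1)]


def h(k, a, b, L):
    """Squared norm h_k of P^{(a,b)}_{L,k} with weight x^b (L - x)^a."""
    g = math.gamma
    if k == 0:
        return L ** (a + b + 1) * g(a + 1) * g(b + 1) / g(a + b + 2)
    return (L ** (a + b + 1) * g(k + a + 1) * g(k + b + 1)
            / ((2 * k + a + b + 1) * math.factorial(k) * g(k + a + b + 1)))


def moment(n, a, b, L):
    """Integral of x^n x^b (L - x)^a over [0, L] = L^(n+a+b+1) B(n+b+1, a+1)."""
    g = math.gamma
    return L ** (n + a + b + 1) * g(n + b + 1) * g(a + 1) / g(n + a + b + 2)


def gram(j, k, a, b, L):
    """Weighted inner product of P_{L,j} and P_{L,k}, computed exactly from the moments."""
    cj, ck = shifted_jacobi_coeffs(j, a, b, L), shifted_jacobi_coeffs(k, a, b, L)
    return sum(p * q * moment(r + s, a, b, L) for r, p in enumerate(cj) for s, q in enumerate(ck))
```

With \(\alpha =\beta =0\) and \(L=1\) the coefficients reduce to those of the shifted Legendre polynomials, e.g. \(P_2(x)=6x^2-6x+1\).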

3.4 Laguerre polynomials

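Let \(\varLambda = (0, \infty )\), let \(w(x)=\hbox {e}^{-x}\) be the weight function, and let \(L_\ell (x)\) be the Laguerre polynomial of degree \(\ell \), defined by
\[ L_{\ell }(x)=\frac{1}{\ell !}\hbox {e}^{x}\frac{\mathrm{d}^{\ell }}{\mathrm{d}x^{\ell }}\left( x^{\ell }\hbox {e}^{-x}\right) ,\qquad \ell =0,1,2,\ldots \]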

They satisfy the equations

and

The set of Laguerre polynomials is an \(L^2_w(\varLambda )\)-orthogonal system:
\[ \int _{0}^{\infty }L_{j}(x)L_{k}(x)w(x)\,\mathrm{d}x=\delta _{jk}, \]

where \(\delta _{jk}\) is the Kronecker delta function.

The special value

where \(q\) is a positive integer, will be used later.

3.5 Modified generalized Laguerre polynomials

Let \(\varLambda =(0,\infty )\) and \(w^{(\alpha ,\beta )}(x)=x^{\alpha }\hbox {e}^{-\beta x}\) be a weight function on \(\varLambda \) in the usual sense. Define

equipped with the following inner product and norm

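For \(\alpha >-1\) and \(\beta >0\), the modified generalized Laguerre polynomial \(L^{(\alpha ,\beta )}_{i}{(x)}\) of degree \(i\) is defined by
\[ L^{(\alpha ,\beta )}_{i}(x)=\frac{1}{i!}x^{-\alpha }\hbox {e}^{\beta x}\frac{\mathrm{d}^{i}}{\mathrm{d}x^{i}}\left( x^{i+\alpha }\hbox {e}^{-\beta x}\right) ,\qquad i=0,1,2,\ldots \]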

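For \(\alpha >-1\) and \(\beta >0\), we have
\[ (i+1)L^{(\alpha ,\beta )}_{i+1}(x)=\left[ (2i+\alpha +1)-\beta x\right] L^{(\alpha ,\beta )}_{i}(x)-(i+\alpha )L^{(\alpha ,\beta )}_{i-1}(x),\qquad i=1,2,\ldots , \]

where \(L^{(\alpha ,\beta )}_0{(x)}=1\) and \(L^{(\alpha ,\beta )}_1{(x)}=-\beta x+\frac{\varGamma (\alpha +2)}{\varGamma (\alpha +1)}\).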

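The set of modified generalized Laguerre polynomials is an \(L^2_{w^{(\alpha ,\beta )}}(\varLambda )\)-orthogonal system, namely
\[ \int _{0}^{\infty }L^{(\alpha ,\beta )}_{j}(x)L^{(\alpha ,\beta )}_{k}(x)w^{(\alpha ,\beta )}(x)\,\mathrm{d}x=h_{k}\delta _{jk}, \]

where \(\delta _{jk}\) is the Kronecker delta function and \(h_{k}=\frac{\varGamma (k+\alpha +1)}{\beta ^{\alpha +1}k!}\).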

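The modified generalized Laguerre polynomial of degree \(i\) on the interval \(\varLambda \) is given by
\[ L^{(\alpha ,\beta )}_{i}(x)=\sum _{k=0}^{i}(-1)^{k}\frac{\varGamma (i+\alpha +1)}{\varGamma (k+\alpha +1)\,(i-k)!\,k!}\beta ^{k}x^{k}, \]

where \(L^{(\alpha ,\beta )}_{i}{(0)}= \frac{\varGamma (i+\alpha +1)}{\varGamma (\alpha +1)\varGamma (i+1)}\).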

The special value

will be used later.

Corollary 1

In particular, the classical Laguerre polynomials \(L_i(x)\) are obtained directly by taking \(\alpha =0\) and \(\beta =1\) in the modified generalized Laguerre polynomials.
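A short Python sketch (our own illustration; names are hypothetical) of the modified generalized Laguerre family: the three-term recurrence \((i+1)L_{i+1}=[(2i+\alpha +1)-\beta x]L_i-(i+\alpha )L_{i-1}\), the explicit power form, and an exact orthogonality check through the moments \(\int _0^\infty x^{n+\alpha }\hbox {e}^{-\beta x}\,\mathrm{d}x=\varGamma (n+\alpha +1)/\beta ^{n+\alpha +1}\). Setting \(\alpha =0\), \(\beta =1\) recovers the classical Laguerre polynomials of Corollary 1.

```python
import math


def mgl(i, x, a, b):
    """L^{(a,b)}_i(x) from (k+1) L_{k+1} = (2k + a + 1 - b x) L_k - (k + a) L_{k-1}."""
    if i == 0:
        return 1.0
    p_prev, p = 1.0, a + 1 - b * x
    for k in range(1, i):
        p_prev, p = p, ((2 * k + a + 1 - b * x) * p - (k + a) * p_prev) / (k + 1)
    return p


def mgl_coeffs(i, a, b):
    """Monomial coefficients (-1)^k G(i+a+1) b^k / (G(k+a+1) (i-k)! k!) of L^{(a,b)}_i."""
    g, f = math.gamma, math.factorial
    return [(-1) ** k * g(i + a + 1) * b ** k / (g(k + a + 1) * f(i - k) * f(k))
            for k in range(i + 1)]


def moment(n, a, b):
    """Integral of x^n * x^a e^(-b x) over (0, inf) = G(n+a+1) / b^(n+a+1)."""
    return math.gamma(n + a + 1) / b ** (n + a + 1)


def gram(j, k, a, b):
    """Weighted inner product (L_j, L_k), computed exactly from the moments."""
    cj, ck = mgl_coeffs(j, a, b), mgl_coeffs(k, a, b)
    return sum(p * q * moment(r + s, a, b) for r, p in enumerate(cj) for s, q in enumerate(ck))
```

For example, `mgl(2, x, 0.0, 1.0)` returns the classical \(L_2(x)=1-2x+x^2/2\).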

3.6 Bernstein polynomials

The Bernstein polynomials of degree \(n\) are defined on the interval \([0,1]\) by (see [62])
\[ B_{i,n}(x)=\binom{n}{i}x^{i}(1-x)^{n-i},\qquad i=0,1,\ldots ,n. \]

These Bernstein polynomials form a complete basis for the polynomials of degree at most \(n\) on the interval \([0,1]\). They can also be generated recursively by
\[ B_{i,n}(x)=(1-x)B_{i,n-1}(x)+xB_{i-1,n-1}(x), \]

where \(B_{-1,n-1}(x)=0\) and \(B_{n,n-1}(x)=0\).

Since the power basis \(\{1,x,x^2,\dots ,x^n\}\) is a basis for the space of polynomials of degree less than or equal to \(n\), any Bernstein polynomial of degree \(n\) can be written in terms of the power basis. Using the binomial expansion of \((1-x)^{n-i}\), one can show that
\[ B_{i,n}(x)=\sum _{k=0}^{n-i}(-1)^{k}\binom{n}{i}\binom{n-i}{k}x^{i+k}. \]

Because they are not orthogonal, the Bernstein polynomials are at a disadvantage when used in least squares approximation. As mentioned in [62], one approach to direct least squares approximation by polynomials in Bernstein form relies on the construction of the basis \(\{D_{0,n}(x),D_{1,n}(x),D_{2,n}(x),\dots ,D_{n,n}(x)\}\) that is “dual” to the Bernstein basis of degree \(n\) on \(x\in [0,1]\). This dual basis is characterized by the property
\[ \int _{0}^{1}B_{i,n}(x)D_{j,n}(x)\,\mathrm{d}x=\delta _{ij}, \]

for \(i,j=0,1,2,\dots ,n.\)
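The dual basis can also be obtained numerically. In the Python sketch below (our own construction, not the explicit representation of [62]; it assumes NumPy is available), writing \(D_{j,n}=\sum _k M_{jk}B_{k,n}\) turns the duality condition into \(MQ=I\), where \(Q_{ij}=\int _0^1B_{i,n}B_{j,n}\,\mathrm{d}x=\binom{n}{i}\binom{n}{j}\big/\big((2n+1)\binom{2n}{i+j}\big)\) is the Gram matrix of the Bernstein basis.

```python
import math

import numpy as np


def bernstein(i, n, x):
    """B_{i,n}(x) = C(n, i) x^i (1 - x)^(n - i) on [0, 1]."""
    return math.comb(n, i) * x ** i * (1 - x) ** (n - i)


def bernstein_gram(n):
    """Q[i, j] = integral of B_{i,n} B_{j,n} over [0, 1] = C(n,i) C(n,j) / ((2n+1) C(2n, i+j))."""
    return np.array([[math.comb(n, i) * math.comb(n, j) / ((2 * n + 1) * math.comb(2 * n, i + j))
                      for j in range(n + 1)] for i in range(n + 1)])


def dual_coeffs(n):
    """Row j holds the Bernstein coefficients of the dual function D_{j,n}.
    With D_{j,n} = sum_k M[j, k] B_{k,n}, integral(B_i D_j) = delta_ij reads M Q = I,
    so M is the inverse of the (symmetric) Gram matrix Q."""
    return np.linalg.inv(bernstein_gram(n))
```

A dual function is then evaluated as `sum(dual_coeffs(n)[j, k] * bernstein(k, n, x) for k in range(n + 1))`. Because \(Q\) becomes ill-conditioned as \(n\) grows, this route is only advisable for moderate degrees.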

Theorem 1

The \(q\)th derivative of the Bernstein polynomials is given by

(For the proof, see [62]).

4 Operational matrices of Caputo fractional derivatives

In this section, we introduce the operational matrices of Caputo fractional derivatives for some orthogonal polynomials on finite and infinite intervals.

4.1 SLOM of fractional derivatives

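A function \(u(x)\), square integrable in \([0,1]\), may be expressed in terms of shifted Legendre polynomials as
\[ u(x)=\sum _{j=0}^{\infty }c_{j}P_{j}(x), \]

where the coefficients \(c_j\) are given by
\[ c_{j}=(2j+1)\int _{0}^{1}u(x)P_{j}(x)\,\mathrm{d}x,\qquad j=0,1,2,\ldots \]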

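In practice, only the first \(N+1\) shifted Legendre polynomials are considered. Hence, \(u(x)\) can be expressed in the form
\[ u(x)\simeq \sum _{j=0}^{N}c_{j}P_{j}(x)=C^{\mathrm{T}}\phi (x), \]

where the shifted Legendre coefficient vector \(C\) and the shifted Legendre vector \( \phi (x)\) are given by
\[ C^{\mathrm{T}}=[c_{0},c_{1},\ldots ,c_{N}],\qquad \phi (x)=[P_{0}(x),P_{1}(x),\ldots ,P_{N}(x)]^{\mathrm{T}}. \]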

Lemma 2

Let \(P_{i}(x)\) be a shifted Legendre polynomial. Then
\[ D^{\nu }P_{i}(x)=0,\qquad i=0,1,\ldots ,\lceil \nu \rceil -1,\quad \nu >0. \]

The following theorem, proved in [68], gives the operational matrix of fractional derivatives for the shifted Legendre vector.

Theorem 2

Let \(\phi (x)\) be the shifted Legendre vector defined in Eq. (4.3) and suppose \(\nu > 0\). Then
\[ D^{\nu }\phi (x)\simeq \mathbf D ^{(\nu )}\phi (x), \]

where \( \mathbf D ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of derivatives of order \(\nu \) in the Caputo sense and is defined as follows:

where

Note that in \(\mathbf D ^{(\nu )}\), the first \(\lceil \nu \rceil \) rows are all zero.

(For the proof, see [68]).
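The construction behind Theorem 2 can be sketched in Python as follows. This is our own illustration, not the code of [68]: instead of the triple sum for \(\theta _{ijk}\), it applies the Caputo power rule to each monomial of \(P_i\) and projects \(x^{k-\nu }\) onto \(P_j\) using the closed form \(\int _0^1x^{s}P_j(x)\,\mathrm{d}x=\frac{s(s-1)\cdots (s-j+1)}{(s+1)(s+2)\cdots (s+j+1)}\) (obtained by integrating the Rodrigues formula by parts), which avoids catastrophic cancellation. Up to rounding, it should reproduce the matrix \(\mathbf D ^{(\nu )}\) of Theorem 2.

```python
import math


def shifted_legendre_coeffs(i):
    """Monomial coefficients a_k = (-1)^(i+k) (i+k)! / ((i-k)! (k!)^2) of P_i."""
    f = math.factorial
    return [(-1) ** (i + k) * f(i + k) / (f(i - k) * f(k) ** 2) for k in range(i + 1)]


def legendre_moment(s, j):
    """Integral of x^s P_j(x) over [0, 1] for s > -1, in the cancellation-free form
    s(s-1)...(s-j+1) / ((s+1)(s+2)...(s+j+1))."""
    num = 1.0
    for r in range(j):
        num *= s - r  # exactly 0 when s is an integer with 0 <= s < j
    den = 1.0
    for r in range(1, j + 2):
        den *= s + r
    return num / den


def slom(N, nu):
    """(N+1) x (N+1) matrix D with D^nu phi(x) ~ D phi(x), phi = [P_0, ..., P_N]^T.
    Row i: Caputo power rule on P_i, then projection of each x^(k-nu) onto P_j
    with coefficient (2j+1) * integral(x^(k-nu) P_j)."""
    D = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        a = shifted_legendre_coeffs(i)
        for k in range(math.ceil(nu), i + 1):  # D^nu x^k = 0 for k < ceil(nu)
            g = a[k] * math.gamma(k + 1) / math.gamma(k + 1 - nu)
            for j in range(N + 1):
                D[i][j] += g * (2 * j + 1) * legendre_moment(k - nu, j)
    return D
```

For integer \(\nu \) the matrix is exact, since the derivative of a polynomial of degree at most \(N\) stays in the span of \(\phi \); for fractional \(\nu \) the only error is the truncation of the series for \(x^{k-\nu }\). The first \(\lceil \nu \rceil \) rows come out as exact zeros.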

4.2 SCOM for fractional derivatives

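A function \(u(x)\), square integrable in \([0,L]\), may be expressed in terms of shifted Chebyshev polynomials as
\[ u(x)=\sum _{j=0}^{\infty }c_{j}T_{L,j}(x), \]

where the coefficients \(c_j\) are given by
\[ c_{j}=\frac{1}{h_{j}}\int _{0}^{L}u(x)T_{L,j}(x)w_{L}(x)\,\mathrm{d}x,\qquad j=0,1,2,\ldots \]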

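In practice, only the first \(N+1\) shifted Chebyshev polynomials are considered. Hence, we can write
\[ u(x)\simeq \sum _{j=0}^{N}c_{j}T_{L,j}(x)=C^{\mathrm{T}}\varphi (x), \]

where the shifted Chebyshev coefficient vector \(C\) and the shifted Chebyshev vector \( \varphi (x)\) are given by
\[ C^{\mathrm{T}}=[c_{0},c_{1},\ldots ,c_{N}],\qquad \varphi (x)=[T_{L,0}(x),T_{L,1}(x),\ldots ,T_{L,N}(x)]^{\mathrm{T}}. \]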

Lemma 3

Let \(T_{L,i}(x)\) be a shifted Chebyshev polynomial. Then
\[ D^{\nu }T_{L,i}(x)=0,\qquad i=0,1,\ldots ,\lceil \nu \rceil -1,\quad \nu >0. \]

The following theorem, proved in [53], gives the operational matrix of fractional derivatives for the shifted Chebyshev vector.

Theorem 3

Let \(\varphi (x)\) be the shifted Chebyshev vector defined in Eq. (4.3) and suppose \(\nu > 0\). Then
\[ D^{\nu }\varphi (x)\simeq \mathbf D ^{(\nu )}\varphi (x), \]

where \( \mathbf D ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of derivatives of order \(\nu \) in the Caputo sense and is defined as follows:

where

Note that in \(\mathbf D ^{(\nu )}\), the first \(\lceil \nu \rceil \) rows are all zero.

(For the proof, see [53]).

4.3 SJOM for fractional derivatives

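Let \(u(x)\) be a polynomial of degree \(N\). Then it may be expressed in terms of shifted Jacobi polynomials as
\[ u(x)=\sum _{j=0}^{N}c_{j}P^{(\alpha ,\beta )}_{L,j}(x), \]

where the coefficients \(c_j\) are given by
\[ c_{j}=\frac{1}{h_{j}}\int _{0}^{L}w^{(\alpha ,\beta )}_{L}(x)\,u(x)P^{(\alpha ,\beta )}_{L,j}(x)\,\mathrm{d}x,\qquad j=0,1,\ldots ,N. \]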

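If the shifted Jacobi coefficient vector \(C\) and the shifted Jacobi vector \( \varPhi (x)\) are written as
\[ C^{\mathrm{T}}=[c_{0},c_{1},\ldots ,c_{N}],\qquad \varPhi (x)=[P^{(\alpha ,\beta )}_{L,0}(x),P^{(\alpha ,\beta )}_{L,1}(x),\ldots ,P^{(\alpha ,\beta )}_{L,N}(x)]^{\mathrm{T}}, \]

respectively, then the first-order derivative of the vector \( \varPhi (x)\) can be expressed by
\[ \frac{\mathrm{d}\varPhi (x)}{\mathrm{d}x}=\mathbf D ^{(1)}\varPhi (x), \]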

where \(\mathbf D ^{(1)}\) is the \( (N+1)\times (N+1)\) operational matrix of derivative given by

and

(For the proof, see [92, 93]; for the general definition of the generalized hypergeometric series and the special case \(_3F_2\), see [94].)

For example, for even \(N\) we have

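The main objective of this subsection is to generalize the SJOM of derivatives to fractional calculus. By using (4.16), it is clear that
\[ \frac{\mathrm{d}^{n}\varPhi (x)}{\mathrm{d}x^{n}}=\left( \mathbf D ^{(1)}\right) ^{n}\varPhi (x), \]

where \(n \in N\) and the superscript in \(\mathbf D ^{(1)}\) denotes matrix powers. Thus
\[ \mathbf D ^{(n)}=\left( \mathbf D ^{(1)}\right) ^{n},\qquad n=1,2,\ldots \]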

Corollary 2

In the case \(\alpha =\beta =0\) (with \(L=1\)), the SJOM of integer-order derivatives coincides with the Legendre operational matrix of derivatives obtained by Saadatmandi and Dehghan (see [68], Eq. (11)).

Corollary 3

In the case \(\alpha =\beta =-\frac{1}{2}\), the SJOM of integer-order derivatives agrees with the Chebyshev operational matrix of derivatives obtained by Doha et al. (see [53], Eq. (3.2)).

Lemma 4

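Let \(P^{(\alpha ,\beta )}_{L,i}(x)\) be a shifted Jacobi polynomial. Then
\[ D^{\nu }P^{(\alpha ,\beta )}_{L,i}(x)=0,\qquad i=0,1,\ldots ,\lceil \nu \rceil -1,\quad \nu >0. \]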

Theorem 4

Let \(\varPhi (x)\) be the shifted Jacobi vector defined in Eq. (4.9) and let \(\nu > 0\). Then
\[ D^{\nu }\varPhi (x)\simeq \mathbf D ^{(\nu )}\varPhi (x), \]

where \( \mathbf D ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of derivatives of order \(\nu \) in the Caputo sense and is defined by:

where

and \(\delta _{ijk}\) is given by

Note that in \(\mathbf D ^{(\nu )}\), the first \(\lceil \nu \rceil \) rows are all zeros.

Proof

The analytic form of the shifted Jacobi polynomials \(P^{(\alpha ,\beta )}_{L,i}{(x)}\) of degree \(i\) is given by (3.10). Using Eqs. (2.4) and (2.5) in Eq. (3.10) we have

Now, approximating \(x^{k-\nu }\) by \(N+1\) terms of the shifted Jacobi series leads to

where \(b_{kj}\) is given by (4.14) with \(u(x)=x^{k-\nu }\). This gives

Employing Eqs. (4.23)–(4.25) yields

where \(\varDelta _{\nu }(i,j)\) is given in Eq. (4.22).

Accordingly, rewriting Eq. (4.26) in vector form gives

Also, according to Lemma 4, one can write

A combination of Eqs. (4.27) and (4.28) leads to the desired result. \(\square \)
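The proof of Theorem 4 translates into a short Python sketch (our own illustration; helper names are hypothetical). Each row applies the Caputo power rule to the monomials of \(P^{(\alpha ,\beta )}_{L,i}\) and projects \(x^{k-\nu }\) onto \(P^{(\alpha ,\beta )}_{L,j}\). The projection integral is evaluated with the closed form \(\int _0^L x^{s}w^{(\alpha ,\beta )}_L P^{(\alpha ,\beta )}_{L,j}\,\mathrm{d}x=\frac{s(s-1)\cdots (s-j+1)\,L^{s+\alpha +\beta +1}\varGamma (s+\beta +1)\varGamma (\alpha +j+1)}{j!\,\varGamma (s+\alpha +\beta +j+2)}\), which we derived by integrating the Rodrigues formula by parts \(j\) times (treat it as an assumption to verify). For \(\alpha =\beta =0\), \(L=1\) it reduces to the Legendre moment.

```python
import math


def shifted_jacobi_coeffs(i, a, b, L):
    """Monomial coefficients [c_0, ..., c_i] of P^{(a,b)}_{L,i} (analytic form, Sect. 3.3)."""
    if i == 0:
        return [1.0]  # avoids Gamma(0) when a + b = -1
    g, f = math.gamma, math.factorial
    return [(-1) ** (i - k) * g(i + b + 1) * g(i + k + a + b + 1)
            / (g(k + b + 1) * g(i + a + b + 1) * f(i - k) * f(k) * L ** k)
            for k in range(i + 1)]


def h(j, a, b, L):
    """Squared weighted norm of P^{(a,b)}_{L,j}, weight x^b (L - x)^a."""
    g = math.gamma
    if j == 0:
        return L ** (a + b + 1) * g(a + 1) * g(b + 1) / g(a + b + 2)
    return (L ** (a + b + 1) * g(j + a + 1) * g(j + b + 1)
            / ((2 * j + a + b + 1) * math.factorial(j) * g(j + a + b + 1)))


def jacobi_moment(s, j, a, b, L):
    """Integral over [0, L] of x^s * x^b (L - x)^a * P^{(a,b)}_{L,j}(x), for s + b > -1."""
    falling = 1.0
    for r in range(j):
        falling *= s - r  # s(s-1)...(s-j+1); exactly 0 for integer 0 <= s < j
    g = math.gamma
    return (falling * L ** (s + a + b + 1) * g(s + b + 1) * g(a + j + 1)
            / (math.factorial(j) * g(s + a + b + j + 2)))


def sjom(N, nu, a, b, L):
    """(N+1) x (N+1) matrix D with D^nu Phi(x) ~ D Phi(x) (Caputo sense)."""
    D = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        c = shifted_jacobi_coeffs(i, a, b, L)
        for k in range(math.ceil(nu), i + 1):  # D^nu x^k = 0 for k < ceil(nu)
            gk = c[k] * math.gamma(k + 1) / math.gamma(k + 1 - nu)
            for j in range(N + 1):
                # coefficient of P_{L,j} in the projection of x^(k - nu)
                D[i][j] += gk * jacobi_moment(k - nu, j, a, b, L) / h(j, a, b, L)
    return D
```

Because the weight enters only through `jacobi_moment` and `h`, the same routine covers the Legendre (\(\alpha =\beta =0\)) and Chebyshev (\(\alpha =\beta =-\frac{1}{2}\)) cases of Corollaries 4 and 5.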

Corollary 4

If \(\alpha =\beta =0\) and \(L=1\), then \(\delta _{ijk}\) is given as follows:

With the aid of properties of shifted Jacobi polynomials, and after some analytical manipulations, we have

Then, one can show that

where \(\theta _{ijk}\) is given as in Theorem 2.

Thus the SJOM of fractional derivatives with \(\alpha =\beta =0\) coincides with the Legendre operational matrix of fractional derivatives obtained by Saadatmandi and Dehghan (see [68], Eq. (14)).

Corollary 5

If \(\alpha =\beta =-\frac{1}{2}\), then \(\delta _{ijk}\) is given as follows:

With the aid of properties of shifted Jacobi polynomials and (3.5), and after some manipulations, we have

Then one can show that

where \(S_{\nu }(i,j)\) is given as in Theorem 3.

Thus the SJOM of fractional derivatives with \(\alpha =\beta =-\frac{1}{2}\) agrees with the Chebyshev operational matrix of fractional derivatives obtained by Doha et al. (see [53]).

4.4 LOM of fractional derivatives

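Let \(u(x)\in L^2_{w}(\varLambda )\). Then \(u(x)\) may be expressed in terms of Laguerre polynomials as
\[ u(x)=\sum _{j=0}^{\infty }c_{j}L_{j}(x),\qquad c_{j}=\int _{0}^{\infty }u(x)L_{j}(x)w(x)\,\mathrm{d}x. \]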

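In practice, only the first \(N+1\) Laguerre polynomials are considered. Then we have
\[ u(x)\simeq \sum _{j=0}^{N}c_{j}L_{j}(x)=C^{\mathrm{T}}\emptyset (x), \]

where the Laguerre coefficient vector \(C\) and the Laguerre vector \(\emptyset (x)\) are given by
\[ C^{\mathrm{T}}=[c_{0},c_{1},\ldots ,c_{N}],\qquad \emptyset (x)=[L_{0}(x),L_{1}(x),\ldots ,L_{N}(x)]^{\mathrm{T}}. \]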

Lemma 5

Let \(L_{i}(x)\) be the Laguerre polynomial of degree \(i\). Then
\[ D^{\nu }L_{i}(x)=0,\qquad i=0,1,\ldots ,\lceil \nu \rceil -1,\quad \nu >0. \]

The following theorem, proved in [84], gives the operational matrix of fractional derivatives for the Laguerre vector.

Theorem 5

Let \(\emptyset (x)\) be the Laguerre vector defined in Eq. (4.3) and suppose \(\nu > 0\). Then
\[ D^{\nu }\emptyset (x)\simeq \mathbf D ^{(\nu )}\emptyset (x), \]

where \( \mathbf D ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of derivatives of order \(\nu \) in the Caputo sense and is defined as follows:

where

Note that in \(\mathbf D ^{(\nu )}\), the first \(\lceil \nu \rceil \) rows are all zero.

(For the proof, see [84]).

4.5 Modified generalized Laguerre operational matrix of fractional derivatives

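If \(u(x)\in L^2_{w^{(\alpha ,\beta )}}(\varLambda )\), then \(u(x)\) may be expressed in terms of modified generalized Laguerre polynomials as
\[ u(x)=\sum _{j=0}^{\infty }c_{j}L^{(\alpha ,\beta )}_{j}(x),\qquad c_{j}=\frac{1}{h_{j}}\int _{0}^{\infty }u(x)L^{(\alpha ,\beta )}_{j}(x)w^{(\alpha ,\beta )}(x)\,\mathrm{d}x. \]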

In practice, only the first \(N+1\) modified generalized Laguerre polynomials are considered. Then we have
\[ u(x)\simeq \sum _{j=0}^{N}c_{j}L^{(\alpha ,\beta )}_{j}(x)=C^{\mathrm{T}}\psi (x), \]

where the modified generalized Laguerre coefficient vector \(C\) and the modified generalized Laguerre vector \(\psi (x)\) are given by

Lemma 6

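Let \(L^{(\alpha ,\beta )}_{i}(x)\) be a modified generalized Laguerre polynomial. Then
\[ D^{\nu }L^{(\alpha ,\beta )}_{i}(x)=0,\qquad i=0,1,\ldots ,\lceil \nu \rceil -1,\quad \nu >0. \]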

Theorem 6

Let \(\psi (x)\) be the modified generalized Laguerre vector defined in Eq. (4.37) and suppose \(\nu > 0\). Then
\[ D^{\nu }\psi (x)\simeq \mathbf D ^{(\nu )}\psi (x), \]

where \( \mathbf D ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of fractional derivative of order \(\nu \) in the Caputo sense and is defined as follows:

where

Note that in \(\mathbf D ^{(\nu )}\), the first \(\lceil \nu \rceil \) rows are all zero.

Proof

The analytic form of the modified generalized Laguerre polynomials \(L_{i}^{(\alpha ,\beta )}{(x)}\) of degree \(i\) is given by (3.16). Using Eqs. (2.4), (2.5) and (3.16) we have

Now, approximating \(x^{k-\nu }\) by \(N+1\) terms of the modified generalized Laguerre series, we have

where \(b_{j}\) is given by (4.35) with \(u(x)=x^{k-\nu }\), and

Employing Eqs. (4.41)–(4.43) yields

where

Accordingly, Eq. (4.45) can be written in a vector form as follows:

Also, according to Lemma 6, we can write

A combination of Eqs. (4.46) and (4.47) leads to the desired result. \(\square \)
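The proof of Theorem 6 can be sketched in Python in the same spirit (our own illustration; helper names are hypothetical). The projection integral uses the closed form \(\int _0^\infty x^{s}w^{(\alpha ,\beta )}L^{(\alpha ,\beta )}_j\,\mathrm{d}x=(-1)^j\frac{s(s-1)\cdots (s-j+1)\,\varGamma (s+\alpha +1)}{j!\,\beta ^{s+\alpha +1}}\), which we obtained by integrating the Rodrigues formula of Sect. 3.5 by parts (an assumption worth double-checking), together with \(h_j=\varGamma (j+\alpha +1)/(\beta ^{\alpha +1}j!)\). With \(\alpha =0\), \(\beta =1\) the sketch also gives the LOM of Theorem 5.

```python
import math


def mgl_coeffs(i, a, b):
    """Monomial coefficients of L^{(a,b)}_i (analytic form of Sect. 3.5)."""
    g, f = math.gamma, math.factorial
    return [(-1) ** k * g(i + a + 1) * b ** k / (g(k + a + 1) * f(i - k) * f(k))
            for k in range(i + 1)]


def mgl_moment(s, j, a, b):
    """Integral of x^s * x^a e^(-b x) * L^{(a,b)}_j(x) over (0, inf), for s + a > -1."""
    falling = 1.0
    for r in range(j):
        falling *= s - r  # exactly 0 for integer 0 <= s < j (orthogonality)
    return (-1) ** j * falling * math.gamma(s + a + 1) / (math.factorial(j) * b ** (s + a + 1))


def mglom(N, nu, a, b):
    """(N+1) x (N+1) matrix D with D^nu psi(x) ~ D psi(x), psi = [L_0, ..., L_N]^T."""
    D = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        c = mgl_coeffs(i, a, b)
        for k in range(math.ceil(nu), i + 1):  # D^nu x^k = 0 for k < ceil(nu)
            gk = c[k] * math.gamma(k + 1) / math.gamma(k + 1 - nu)
            for j in range(N + 1):
                hj = math.gamma(j + a + 1) / (b ** (a + 1) * math.factorial(j))
                D[i][j] += gk * mgl_moment(k - nu, j, a, b) / hj
    return D
```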

4.6 Bernstein operational matrix of fractional derivatives

A function \(f(x)\), square integrable in \([0,1]\), may be expressed in terms of the Bernstein basis [95]. In practice, only the \(n+1\) Bernstein polynomials of degree \(n\) are considered. Hence, if we write
\[ f(x)\simeq \sum _{i=0}^{n}c_{i}B_{i,n}(x)=C^{\mathrm{T}}B(x), \]

where the Bernstein coefficient vector \(C\) and the Bernstein vector \(B(x)\) are given by

then

The authors of [62] derived explicit representations

for the dual basis functions, defined by the coefficients

Theorem 7

Let \(B(x)\) be the Bernstein vector defined in Eq. (4.49) and suppose that \(\nu > 0\). Then
\[ D^{\nu }B(x)\simeq \mathbf D ^{(\nu )}B(x), \]

where \( \mathbf D ^{(\nu )}\) is the \((n+1) \times (n+1)\) operational matrix of fractional derivative of order \(\nu \) in the Caputo sense and is defined as follows:

Here \(\omega _{i,\ell ,j}\) is given by

where \(\lambda _{\ell ,k}\) is given in Eq. (4.52) and

Proof

Using Eqs. (3.20) and (2.5) we have

Now, approximating \(x^{j-\nu }\) by Bernstein polynomials, we obtain

where

Employing Eqs. (4.56) and (4.57) we get

where \(\omega _{i,\ell ,j}\) is given in Eq. (4.55). Rewriting Eq. (4.58) in vector form gives

This leads to the desired result. \(\square \)
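A compact Python sketch of the Bernstein construction (assuming NumPy; our own illustration, not the code of [62]; the name `bom_derivative` is hypothetical): each power \(x^m\) of \(B_{i,n}\) goes through the Caputo power rule, and \(x^{m-\nu }\) is replaced by its least-squares Bernstein approximation. Instead of the explicit dual-basis coefficients \(\lambda _{\ell ,k}\), the sketch solves with the Gram matrix, which yields the same least-squares coefficients in exact arithmetic; the required moments are \(\int _0^1x^{s}B_{\ell ,n}\,\mathrm{d}x=\binom{n}{\ell }B(s+\ell +1,n-\ell +1)\).

```python
import math

import numpy as np


def bom_derivative(n, nu):
    """(n+1) x (n+1) matrix D with D^nu B(x) ~ D B(x), B = [B_{0,n}, ..., B_{n,n}]^T."""
    # Gram matrix Q[i, j] = integral of B_{i,n} B_{j,n} over [0, 1]
    Q = np.array([[math.comb(n, i) * math.comb(n, j) / ((2 * n + 1) * math.comb(2 * n, i + j))
                   for j in range(n + 1)] for i in range(n + 1)])
    Qinv = np.linalg.inv(Q)

    def ls_coeffs(s):
        # least-squares Bernstein coefficients of x^s: Q^{-1} [integral of x^s B_{l,n}]_l
        rhs = np.array([math.comb(n, l) * math.gamma(s + l + 1) * math.gamma(n - l + 1)
                        / math.gamma(s + n + 2) for l in range(n + 1)])
        return Qinv @ rhs

    D = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        # power form: B_{i,n} = sum_k (-1)^k C(n,i) C(n-i,k) x^(i+k)
        for k in range(n - i + 1):
            m = i + k
            if m < math.ceil(nu):
                continue  # Caputo derivative of x^m vanishes
            coef = (-1) ** k * math.comb(n, i) * math.comb(n - i, k)
            D[i] += coef * math.gamma(m + 1) / math.gamma(m + 1 - nu) * ls_coeffs(m - nu)
    return D
```

Usage: since \(x^2=\sum _i\frac{i(i-1)}{n(n-1)}B_{i,n}(x)\), the product of that coefficient vector with `bom_derivative(n, 0.5)` and \(B(x)\) approximates \(D^{1/2}x^2=\frac{2}{\varGamma (2.5)}x^{3/2}\). The Gram matrix grows ill-conditioned with \(n\), so this form suits moderate degrees only.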

5 Operational matrices of Riemann–Liouville fractional integrals

In this section, we present the operational matrices of Riemann–Liouville fractional integrals for some orthogonal polynomials on finite and infinite intervals.

5.1 SCOM of fractional integration

The main objective of this subsection is to derive an operational matrix of fractional integration for shifted Chebyshev vector \(\varphi (x)\).

Theorem 8

Let \(\varphi (x)\) be the shifted Chebyshev vector defined in Eq. (4.3) and suppose that \(\nu > 0\). Then
\[ J^{\nu }\varphi (x)\simeq \mathbf P ^{(\nu )}\varphi (x), \]

where \( \mathbf P ^{(\nu )}\) is the \((N+1) \times (N+1)\) SCOM of order \(\nu \) in the Riemann–Liouville sense and is defined as follows:

and

(For the proof, see [81]).

5.2 LOM of fractional integration

The main objective of this subsection is to find the operational matrix of fractional integration, in the Riemann–Liouville sense, for the Laguerre vector.

Theorem 9

Let \(\emptyset (x)\) be the Laguerre vector and \(\nu > 0\). Then
\[ J^{\nu }\emptyset (x)\simeq \mathbf P ^{(\nu )}\emptyset (x), \]

where \( \mathbf P ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of fractional integration of order \(\nu \) in the Riemann–Liouville sense and is defined as follows:

where

5.3 SJOM of fractional integration

The main objective of this subsection is to present an operational matrix of fractional integration for shifted Jacobi vector \(\varPhi (x)\).

Theorem 10

Let \(\varPhi (x)\) be the shifted Jacobi vector and \(\nu > 0\). Then
\[ J^{\nu }\varPhi (x)\simeq \mathbf P ^{(\nu )}\varPhi (x), \]

where \( \mathbf P ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of fractional integration of order \(\nu \) in the Riemann–Liouville sense and is defined as follows:

and

Proof

For the proof see [79]. \(\square \)

Remark 1

It is worth mentioning that the operational matrices of fractional integration, in the Riemann–Liouville sense, for the shifted Legendre and shifted Chebyshev polynomials can be obtained as special cases of the operational matrix of fractional integration for the shifted Jacobi polynomials.
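In line with this remark, one generic routine covers the whole shifted Jacobi family. The Python sketch below (our own illustration with hypothetical names, not the code of [79]) applies \(J^{\nu }x^{k}=\frac{k!}{\varGamma (k+1+\nu )}x^{k+\nu }\) to each monomial of \(P^{(\alpha ,\beta )}_{L,i}\) and projects \(x^{k+\nu }\) onto \(P^{(\alpha ,\beta )}_{L,j}\) using the weighted moment \(\int _0^L x^{s}w^{(\alpha ,\beta )}_LP^{(\alpha ,\beta )}_{L,j}\,\mathrm{d}x=\frac{s(s-1)\cdots (s-j+1)\,L^{s+\alpha +\beta +1}\varGamma (s+\beta +1)\varGamma (\alpha +j+1)}{j!\,\varGamma (s+\alpha +\beta +j+2)}\), a closed form we derived from the Rodrigues formula (an assumption to verify).

```python
import math


def shifted_jacobi_coeffs(i, a, b, L):
    """Monomial coefficients of P^{(a,b)}_{L,i} on [0, L] (analytic form)."""
    if i == 0:
        return [1.0]  # avoids Gamma(0) when a + b = -1
    g, f = math.gamma, math.factorial
    return [(-1) ** (i - k) * g(i + b + 1) * g(i + k + a + b + 1)
            / (g(k + b + 1) * g(i + a + b + 1) * f(i - k) * f(k) * L ** k)
            for k in range(i + 1)]


def h(j, a, b, L):
    """Squared weighted norm of P^{(a,b)}_{L,j}, weight x^b (L - x)^a."""
    g = math.gamma
    if j == 0:
        return L ** (a + b + 1) * g(a + 1) * g(b + 1) / g(a + b + 2)
    return (L ** (a + b + 1) * g(j + a + 1) * g(j + b + 1)
            / ((2 * j + a + b + 1) * math.factorial(j) * g(j + a + b + 1)))


def jacobi_moment(s, j, a, b, L):
    """Integral over [0, L] of x^s * x^b (L - x)^a * P^{(a,b)}_{L,j}(x), for s + b > -1."""
    falling = 1.0
    for r in range(j):
        falling *= s - r
    g = math.gamma
    return (falling * L ** (s + a + b + 1) * g(s + b + 1) * g(a + j + 1)
            / (math.factorial(j) * g(s + a + b + j + 2)))


def sjom_integral(N, nu, a, b, L):
    """(N+1) x (N+1) matrix P with J^nu Phi(x) ~ P Phi(x) (Riemann-Liouville sense)."""
    P = [[0.0] * (N + 1) for _ in range(N + 1)]
    for i in range(N + 1):
        c = shifted_jacobi_coeffs(i, a, b, L)
        for k in range(i + 1):
            gk = c[k] * math.gamma(k + 1) / math.gamma(k + 1 + nu)  # J^nu x^k
            for j in range(N + 1):
                P[i][j] += gk * jacobi_moment(k + nu, j, a, b, L) / h(j, a, b, L)
    return P
```

For integer \(\nu \), the rows \(i\le N-\nu \) are exact because \(J^{\nu }P_{L,i}\) still has degree at most \(N\); the last rows and all fractional cases carry a truncation error.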

5.4 MGLOM of fractional integration

The main objective of this subsection is to derive an operational matrix of fractional integration for the modified generalized Laguerre vector.

Theorem 11

Let \(\psi (x)\) be the modified generalized Laguerre vector and \(\nu > 0\). Then
\[ J^{\nu }\psi (x)\simeq \mathbf P ^{(\nu )}\psi (x), \]

where \( \mathbf P ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of fractional integration of order \(\nu \) in the Riemann–Liouville sense and is defined as follows:

where

Proof

Using the analytic form (3.16) of the modified generalized Laguerre polynomials \(L^{(\alpha ,\beta )}_{i}{(x)}\) of degree \(i\) together with (2.3), we obtain

Now, approximating \(x^{k+\nu }\) by \(N+1\) terms of the modified generalized Laguerre series, we have

where \(c_{j}\) is given by (4.35) with \(u(x)=x^{k+\nu }\), that is

By virtue of (5.10) and (5.11), we get

where

Accordingly, Eq. (5.13) can be written in a vector form as follows:

Equation (5.15) leads to the desired result. \(\square \)

6 Spectral methods for FDEs

In this section, we introduce different ways to solve linear FDEs approximately by the tau method, based on the operational matrices of fractional differentiation and integration presented above, so that the schemes can be implemented efficiently. We also present the collocation method, based on these operational matrices, for solving nonlinear FDEs on bounded and unbounded domains.

6.1 Applications of the operational matrix of fractional derivatives

In this subsection, in order to show the fundamental importance of the operational matrices of fractional derivatives, we apply spectral tau method based on these operational matrices to solve the multi-term FDEs.

6.1.1 Shifted Jacobi tau operational matrix formulation method

Consider the linear FDE

with initial conditions

where \(\gamma _j\ (j=1,\ldots ,k+1) \) are real constant coefficients and \(m-1<\nu \le m,\ 0<\mu _1 <\mu _2 <\cdots <\mu _k <\nu \). Moreover, \(D^{\nu } u(x)\equiv u^{(\nu )}(x)\) denotes the Caputo fractional derivative of order \(\nu \) for \(u(x)\), the values of \(d_i\ (i=0, \ldots ,m-1)\) describe the initial state of \(u(x)\), and \(g(x)\) is a given source function.

The existence, uniqueness and continuous dependence of the solution of the problem are discussed in [96]. In order to solve the initial value problem (6.1)–(6.2), we approximate \(u(x)\) and \(g(x)\) by means of the shifted Jacobi polynomials as

where the vector \(G=[g_0,\ldots , g_N]^\mathrm{T}\) is known and \(C=[c_0,\ldots ,c_N]^\mathrm{T}\) is an unknown vector.

Using Theorem 4 (relation (4.20)) and Eq. (6.3) yields

Employing Eqs. (6.3)–(6.6) the residual \(R_N(x)\) for Eq. (6.1) can be written as

As in a typical tau method (see [79]), we generate \(N-m+1 \) linear equations by applying

Substituting Eqs. (4.17) and (6.3) into Eq. (6.2) yields

Equations (6.8) and (6.9) generate \( N-m+1 \) and \( m \) linear equations, respectively. These equations can be solved for the unknown coefficients of the vector \(C\). Consequently, \(u_{N}(x)\) given in Eq. (6.3) can be obtained, which is the approximate solution of Eq. (6.1) with the initial conditions (6.2).
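To make these steps concrete, here is a Python sketch (assuming NumPy; our own illustration, not the authors' code) for the hypothetical test problem \(D^{\nu }u+u=g\) on \([0,L]\) with \(u(0)=u_0\) and \(0<\nu \le 1\), so that \(m=1\). With \(u_N=C^{\mathrm T}\varPhi \), the coefficient of \(P^{(\alpha ,\beta )}_{L,j}\) in the residual is \((\mathbf D ^{(\nu )\mathrm T}C+C-G)_j\); the tau conditions keep \(j=0,\ldots ,N-1\), and the last row is replaced by the initial condition. The caller supplies the weighted moments of \(g\); all helper names are hypothetical, and the projection moments use a closed form we derived from the Rodrigues formula.

```python
import math

import numpy as np


def shifted_jacobi_coeffs(i, a, b, L):
    """Monomial coefficients of P^{(a,b)}_{L,i} on [0, L] (analytic form)."""
    if i == 0:
        return [1.0]
    g, f = math.gamma, math.factorial
    return [(-1) ** (i - k) * g(i + b + 1) * g(i + k + a + b + 1)
            / (g(k + b + 1) * g(i + a + b + 1) * f(i - k) * f(k) * L ** k)
            for k in range(i + 1)]


def h(j, a, b, L):
    """Squared weighted norm of P^{(a,b)}_{L,j}, weight x^b (L - x)^a."""
    g = math.gamma
    if j == 0:
        return L ** (a + b + 1) * g(a + 1) * g(b + 1) / g(a + b + 2)
    return (L ** (a + b + 1) * g(j + a + 1) * g(j + b + 1)
            / ((2 * j + a + b + 1) * math.factorial(j) * g(j + a + b + 1)))


def jacobi_moment(s, j, a, b, L):
    """Weighted integral of x^s P^{(a,b)}_{L,j} over [0, L], for s + b > -1."""
    falling = 1.0
    for r in range(j):
        falling *= s - r
    g = math.gamma
    return (falling * L ** (s + a + b + 1) * g(s + b + 1) * g(a + j + 1)
            / (math.factorial(j) * g(s + a + b + j + 2)))


def sjom(N, nu, a, b, L):
    """Caputo operational matrix: D^nu Phi(x) ~ D Phi(x)."""
    D = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        c = shifted_jacobi_coeffs(i, a, b, L)
        for k in range(math.ceil(nu), i + 1):
            gk = c[k] * math.gamma(k + 1) / math.gamma(k + 1 - nu)
            for j in range(N + 1):
                D[i, j] += gk * jacobi_moment(k - nu, j, a, b, L) / h(j, a, b, L)
    return D


def jacobi_tau(nu, N, a, b, L, g_moment, u0):
    """Tau coefficients C for D^nu u + u = g on [0, L], u(0) = u0, 0 < nu <= 1.
    g_moment(j) returns the weighted integral of g * P^{(a,b)}_{L,j}."""
    assert 0 < nu <= 1, "this sketch handles a single initial condition (m = 1)"
    A = sjom(N, nu, a, b, L).T + np.eye(N + 1)  # row j: coefficient of P_j in the residual
    rhs = np.array([g_moment(j) / h(j, a, b, L) for j in range(N + 1)])
    # tau conditions for j = 0, ..., N - 1; the last row enforces u_N(0) = u0
    A[N, :] = [shifted_jacobi_coeffs(i, a, b, L)[0] for i in range(N + 1)]
    rhs[N] = u0
    return np.linalg.solve(A, rhs)
```

For \(\nu =0.5\) and \(g(x)=\frac{2}{\varGamma (2.5)}x^{1.5}+x^{2}\), the exact solution \(u(x)=x^{2}\) lies in the trial space, so the returned coefficients should reproduce it up to rounding. Additional fractional terms would contribute analogous \(\mathbf D ^{(\mu _j)\mathrm T}\) blocks to \(A\), and \(m>1\) initial conditions would replace the last \(m\) rows.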

Remark 2

To solve Eq. (6.1) subject to the following boundary conditions (when \(m\) is even):

We apply the same technique described above, but the \( m \) set of linear equations resulting from (6.9) is changed to

Equations (6.8) and (6.11) generate a system of \( N+1 \) linear equations, which can be solved to determine the unknown coefficients of the vector \(C\).

6.1.2 Modified generalized Laguerre tau operational matrix formulation method

Consider the linear FDE

with initial conditions

where \(\gamma _j \ \ (j=1,\ldots ,k+1)\) are real constant coefficients and \(m-1<\nu \le m,\ 0<\zeta _1 <\zeta _2 <\cdots <\zeta _k <\nu \). Moreover, \(D^{\nu } u(x)\equiv u^{(\nu )}(x)\) denotes the Caputo fractional derivative of order \(\nu \) for \(u(x)\), the values of \(d_{i} \ \ (i=0, \ldots ,m-1)\) describe the initial state of \(u(x)\), and \(g(x)\) is a given source function.

Let \(w^{(\alpha ,\beta )}(x)=x^{\alpha }\hbox {e}^{-\beta x}\). Then, we denote by \(L_{w^{(\alpha ,\beta )}}^{2}(\varLambda )(\varLambda :=(0,\infty ))\) the weighted \(L^2\) space with inner product:

and the associated norm \(\Vert u\Vert _{w^{(\alpha ,\beta )}}=(u,u)_{w^{(\alpha ,\beta )}}^{\frac{1}{2}}\). It is known that \(\{L_{i}^{(\alpha ,\beta )}(x): i \ge 0 \}\) forms a complete orthogonal system in \(L_{w^{(\alpha ,\beta )}}^2(\varLambda )\).

To solve the fractional initial value problem, (6.12)-(6.13), we approximate \(u(x)\) and \(g(x)\) by modified generalized Laguerre polynomials as

where vector \(G=[g_0,\ldots , g_N]^\mathrm{T}\) is known and \(C=[c_0,\ldots ,c_N]^\mathrm{T}\) is an unknown vector.

Using Theorem 6 (relation (4.39)) and Eq. (6.14), we have

After employing Eqs. (6.14)–(6.17), the residual \(R_N(x)\) for Eq. (6.12) can be written as

As in a typical tau method (see [67, 69]), we generate \(N-m+1\) linear equations by applying

Also, by substituting Eq. (6.14) into Eq. (6.13), we get

Equations (6.19) and (6.20) generate \( N-m+1 \) and \(m\) linear equations, respectively. These linear equations can be solved for the unknown coefficients of the vector \(C\). Consequently, \(u(x)\) given in Eq. (6.14) can be calculated, which gives the solution of the initial value problem in Eqs. (6.12) and (6.13).
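The half-line version follows the same pattern. This Python sketch (assuming NumPy; our own illustration with hypothetical names) solves the hypothetical test problem \(D^{\nu }u+u=g\) on \((0,\infty )\), \(u(0)=u_0\), \(0<\nu \le 1\), with the modified generalized Laguerre basis. The projection moments use the closed form \(\int _0^\infty x^{s}w^{(\alpha ,\beta )}L^{(\alpha ,\beta )}_j\,\mathrm{d}x=(-1)^j\frac{s(s-1)\cdots (s-j+1)\varGamma (s+\alpha +1)}{j!\,\beta ^{s+\alpha +1}}\), which we derived from the Rodrigues formula (an assumption to verify). As before, the residual coefficients \(j=0,\ldots ,N-1\) are set to zero and the last row carries the initial condition \(\sum _ic_iL^{(\alpha ,\beta )}_i(0)=u_0\).

```python
import math

import numpy as np


def mgl_coeffs(i, a, b):
    """Monomial coefficients of L^{(a,b)}_i (analytic form)."""
    g, f = math.gamma, math.factorial
    return [(-1) ** k * g(i + a + 1) * b ** k / (g(k + a + 1) * f(i - k) * f(k))
            for k in range(i + 1)]


def mgl_h(j, a, b):
    """h_j = G(j+a+1) / (b^(a+1) j!)."""
    return math.gamma(j + a + 1) / (b ** (a + 1) * math.factorial(j))


def mgl_moment(s, j, a, b):
    """Integral of x^s * x^a e^(-b x) * L^{(a,b)}_j(x) over (0, inf), for s + a > -1."""
    falling = 1.0
    for r in range(j):
        falling *= s - r
    return (-1) ** j * falling * math.gamma(s + a + 1) / (math.factorial(j) * b ** (s + a + 1))


def mglom(N, nu, a, b):
    """Caputo operational matrix: D^nu psi(x) ~ D psi(x)."""
    D = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        c = mgl_coeffs(i, a, b)
        for k in range(math.ceil(nu), i + 1):
            gk = c[k] * math.gamma(k + 1) / math.gamma(k + 1 - nu)
            for j in range(N + 1):
                D[i, j] += gk * mgl_moment(k - nu, j, a, b) / mgl_h(j, a, b)
    return D


def mgl_tau(nu, N, a, b, g_moment, u0):
    """Tau coefficients C for D^nu u + u = g on (0, inf), u(0) = u0, 0 < nu <= 1.
    g_moment(j) returns the weighted integral of g * L^{(a,b)}_j."""
    assert 0 < nu <= 1, "this sketch handles a single initial condition (m = 1)"
    A = mglom(N, nu, a, b).T + np.eye(N + 1)
    rhs = np.array([g_moment(j) / mgl_h(j, a, b) for j in range(N + 1)])
    A[N, :] = [mgl_coeffs(i, a, b)[0] for i in range(N + 1)]  # L_i(0)
    rhs[N] = u0
    return np.linalg.solve(A, rhs)
```

With \(\alpha =0\), \(\beta =1\) (classical Laguerre), \(\nu =0.5\) and \(g(x)=\frac{2}{\varGamma (2.5)}x^{1.5}+x^{2}\), the trial space again contains the exact solution \(u(x)=x^{2}\), which gives a simple consistency check of the implementation.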

6.2 Applications of the operational matrix of fractional integration

In this approach, the multi-order FDE is integrated \(\nu \) times in the Riemann–Liouville sense, where \(\nu \) is the highest fractional order, and the fractional integrals appearing in the integrated form are expressed through the formula relating their expansion coefficients to the orthogonal polynomials themselves. In this section, we present the tau method, based on the SJOM and MGLOM of fractional integration, for solving FDEs on bounded and unbounded intervals, respectively.

6.2.1 Tau method based on SJOM of fractional integration

To illustrate the SJOM of fractional integration, we apply it to the following multi-order FDE:

with initial conditions

where \(\gamma _i\ (i=1,2,\ldots ,k+1)\) are real constant coefficients and \(m-1<\nu \le m,\ 0<\beta _1 <\beta _2 <\cdots <\beta _k <\nu \).

Applying the Riemann–Liouville integral of order \(\nu \) to (6.21) and using (2.6), we obtain the integrated form of (6.21):

where \(m_i-1<\beta _i\le m_i,\ m_i\in \mathbb {N}\). This leads to

where

In order to use the tau method with SJOM for solving the fully integrated problem (6.24) with initial conditions (6.22), we approximate \(u(x)\) and \(g(x)\) by means of the shifted Jacobi polynomials:

where the vector \(G=[g_0, g_1, \ldots , g_N]^\mathrm{T}\) is given and \(C=[c_0, c_1, \ldots ,c_N]^\mathrm{T}\) is the unknown coefficient vector.

Using Theorem 10 (relation (5.5)), the Riemann–Liouville integrals of orders \(\nu \) and \( \nu -\beta _j \) of the approximate solution (6.25) can be written as

and

respectively, where \( \mathbf P ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of fractional integration of order \(\nu \).

After employing Eqs. (6.25)–(6.28), the residual \(R_N(x)\) of Eq. (6.24) can be written as

As in a typical tau method (see [67, 69]), we generate \( N-m+1 \) linear algebraic equations by applying

Also, substituting Eqs. (3.2) and (6.25) into Eq. (6.22) yields

Equations (6.30) and (6.31) generate \( N-m+1 \) and \( m \) linear equations, respectively. These \(N+1\) equations can be solved for the unknown coefficient vector \(C\). Consequently, \(u_N(x)\) given in Eq. (6.25) can be computed, which is an approximate solution of Eq. (6.21) with the initial conditions (6.22).
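For the special case \(\alpha =\beta =0\), \(L=1\) (shifted Legendre polynomials \(P^*_i(x)=P_i(2x-1)\)), the integrated-form tau method can be sketched for \(D^{\nu }u+u=g\) with \(0<\nu \le 1\) and \(u(0)=d_0\), whose integrated form is \(u+I^{\nu }u=d_0+I^{\nu }g\). Here \(\mathbf P ^{(\nu )}\) is computed numerically, by projecting \(I^{\nu }x^k=\frac{\Gamma (k+1)}{\Gamma (k+1+\nu )}x^{k+\nu }\) onto the basis with exact integrals, rather than from the closed form of Theorem 10; row \(i\) holds the coefficients of \(I^{\nu }P^*_i\), so that \(I^{\nu }\phi (x)\approx \mathbf P ^{(\nu )}\phi (x)\). The test problem (exact solution \(x^3\)) and all names are our own.

```python
import math
import numpy as np

def sleg_coeffs(i):
    """Power coefficients of the shifted Legendre polynomial P*_i(x) = P_i(2x - 1) on [0, 1]."""
    return np.array([(-1) ** (i + k) * math.factorial(i + k)
                     / (math.factorial(i - k) * math.factorial(k) ** 2) for k in range(i + 1)])

def project(terms, N):
    """Shifted Legendre coefficients (degree <= N) of f(x) = sum of c * x**s over (c, s) in terms."""
    # integral_0^1 x^s P*_j(x) dx = sum_k b_k / (s + k + 1); the squared norm of P*_j is 1/(2j+1)
    return np.array([(2 * j + 1) * sum(c * b / (s + k + 1) for c, s in terms
                                       for k, b in enumerate(sleg_coeffs(j)))
                     for j in range(N + 1)])

def rl_integral(terms, nu):
    """Riemann-Liouville integral of order nu, termwise: I^nu x^s = Gamma(s+1)/Gamma(s+1+nu) x^(s+nu)."""
    return [(c * math.gamma(s + 1) / math.gamma(s + 1 + nu), s + nu) for c, s in terms]

def integration_matrix(N, nu):
    """Row i: projected coefficients of I^nu P*_i, so that I^nu phi(x) ~ P @ phi(x)."""
    return np.array([project(rl_integral(list(zip(sleg_coeffs(i), range(i + 1))), nu), N)
                     for i in range(N + 1)])

def tau_integrated(N, nu, g_terms, d0):
    """Tau sketch for u + I^nu u = d0 + I^nu g, the integrated form of D^nu u + u = g, 0 < nu <= 1."""
    P = integration_matrix(N, nu)
    A = np.eye(N + 1) + P.T               # coefficients of u_N + I^nu u_N in terms of C
    rhs = project(rl_integral(g_terms, nu), N)
    rhs[0] += d0                          # the constant d0 equals d0 * P*_0
    # keep the first N - m + 1 = N residual coefficients; the last row enforces u_N(0) = d0
    A[N, :] = [(-1) ** i for i in range(N + 1)]   # P*_i(0) = (-1)^i
    rhs[N] = d0
    return np.linalg.solve(A, rhs)

nu = 0.5
g_terms = [(6 / math.gamma(4 - nu), 3 - nu), (1.0, 3)]   # manufactured: exact u = x^3
print(tau_integrated(3, nu, g_terms, d0=0.0))
```

The computed coefficients should match \(x^3=\tfrac14P^*_0+\tfrac{9}{20}P^*_1+\tfrac14P^*_2+\tfrac{1}{20}P^*_3\). As a check on the matrix, for \(\nu =1\) its first two rows reduce to \([\tfrac12,\tfrac12,0,0]\) and \([-\tfrac16,0,\tfrac16,0]\), since \(\int _0^x1\,\hbox {d}t=(P^*_0+P^*_1)/2\) and \(\int _0^xP^*_1\,\hbox {d}t=(P^*_2-P^*_0)/6\).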

6.2.2 Tau method based on MGLOM of fractional integration

The modified generalized Laguerre tau method based on the operational matrix is proposed to solve FDEs numerically. The basic idea of this technique is as follows: (i) the FDE is converted to a fully integrated form via fractional integration in the Riemann–Liouville sense; (ii) the functions in the integrated equation are then approximated by linear combinations of modified generalized Laguerre polynomials; (iii) finally, the integrated equation is converted into an algebraic system by means of the operational matrix of fractional integration of the modified generalized Laguerre polynomials.

Consider the following multi-order FDE:

with initial conditions

Applying the Riemann–Liouville integral of order \(\nu \) to (6.32) and using (2.6), we get the integrated form of (6.32):

where \(m_i-1<\beta _i\le m_i,\ m_i\in \mathbb {N}\). This implies that

where

We express the approximate solution \(u(x)\) and the source term \(g(x)\) in terms of the modified generalized Laguerre polynomials:

where the vector \(G=[g_0,\ldots , g_N]^\mathrm{T}\) is given and \(C=[c_0,\ldots ,c_N]^\mathrm{T}\) is the unknown coefficient vector.

Using Theorem 11 (relation (5.8)), the Riemann–Liouville integrals of orders \(\nu \) and \(\nu -\beta _j \) of the approximate solution (6.36) can be written as

and

respectively, where \( \mathbf P ^{(\nu )}\) is the \((N+1) \times (N+1)\) operational matrix of fractional integration of order \(\nu \). Employing Eqs. (6.36)–(6.39), the residual \(R_N(x)\) of Eq. (6.35) can be written as

As in a typical tau method, we generate \( N-m+1 \) linear algebraic equations by applying

Also, substituting Eqs. (4.35) and (6.36) into Eq. (6.33), we get

Equations (6.41) and (6.42) generate \( N-m+1 \) and \( m \) linear equations, respectively. These \(N+1\) equations can be solved for the unknown coefficient vector \(C\). Consequently, \(u_N(x)\) given in Eq. (6.36) can be computed, which gives an approximate solution of Eq. (6.32) with the initial conditions (6.33).
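The operational matrix \(\mathbf P ^{(\nu )}\) used in this subsection can also be obtained numerically, which is handy for checking an implementation of the closed form (5.8). The sketch below (names and the explicit power form of \(L_i^{(\alpha ,\beta )}\), equivalent to \(L_i^{(\alpha )}(\beta x)\), are assumptions) sets \(\mathbf P _{ij}=(I^{\nu }L_i,L_j)_{w}/h_j\), using \(I^{\nu }x^k=\frac{\Gamma (k+1)}{\Gamma (k+1+\nu )}x^{k+\nu }\) and exact weighted moments, so that \(I^{\nu }\phi (x)\approx \mathbf P ^{(\nu )}\phi (x)\).

```python
import math
import numpy as np

def mgl_coeffs(i, alpha, beta):
    """Power coefficients of L_i^{(alpha,beta)}(x), assuming L_i^{(alpha,beta)}(x) = L_i^{(alpha)}(beta*x)."""
    return [(-1) ** k * math.gamma(i + alpha + 1) * beta ** k
            / (math.gamma(k + alpha + 1) * math.factorial(i - k) * math.factorial(k))
            for k in range(i + 1)]

def mgl_integration_matrix(N, nu, alpha, beta):
    """P[i, j] = (I^nu L_i, L_j)_w / h_j, so that I^nu phi(x) ~ P @ phi(x)."""
    P = np.zeros((N + 1, N + 1))
    for i in range(N + 1):
        # Riemann-Liouville integral, termwise: I^nu x^k = Gamma(k+1)/Gamma(k+1+nu) x^(k+nu)
        terms = [(a * math.gamma(k + 1) / math.gamma(k + 1 + nu), k + nu)
                 for k, a in enumerate(mgl_coeffs(i, alpha, beta))]
        for j in range(N + 1):
            # exact moments: integral of x^p x^alpha e^(-beta x) = Gamma(p+alpha+1) / beta^(p+alpha+1)
            inner = sum(c * b * math.gamma(s + l + alpha + 1) / beta ** (s + l + alpha + 1)
                        for c, s in terms for l, b in enumerate(mgl_coeffs(j, alpha, beta)))
            h_j = math.gamma(j + alpha + 1) / (beta ** (alpha + 1) * math.factorial(j))
            P[i, j] = inner / h_j
    return P

print(np.round(mgl_integration_matrix(3, 0.5, 0.0, 1.0), 6))
```

A convenient sanity check is \(\nu =1\), \(\alpha =0\), \(\beta =1\): the identity \(\int _0^xL_i(t)\,\hbox {d}t=L_i(x)-L_{i+1}(x)\) means that row \(i\) should equal \(e_i-e_{i+1}\), with the component of \(L_{N+1}\) dropped in the last row.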

6.3 Collocation method for nonlinear FDEs in a finite interval

Here, we apply the collocation method, based on the SJOM and BOM of fractional derivatives, to nonlinear FDEs in a finite interval subject to initial and boundary conditions.

6.3.1 Collocation method based on SJOM

To describe the implementation of the Jacobi collocation method based on the SJOM of fractional derivatives, we consider the nonlinear FDE

with initial conditions (6.2), where \(F\) can be nonlinear in general.

To apply the SJOM to this problem, we first approximate \(u(x), D^\nu u(x)\) and \(D^{\mu _j}u(x)\ (j=1,\ldots ,k)\) as in Eqs. (6.3), (6.5) and (6.6), respectively. Substituting these approximations into Eq. (6.43), we get

Also, substituting Eqs. (6.3) and (4.17) into Eq. (6.2), we obtain

To find the approximate solution \(u_{N}(x)\), we first collocate Eq. (6.44) at \( N-m+1 \) points, chosen as the \(N-m+1\) roots of the shifted Jacobi polynomial of degree \(N-m+1\). These equations, together with Eq. (6.45), form a system of \( N+1 \) nonlinear equations, which can be solved by Newton's iterative method. This yields the approximate solution \(u_{N}(x)\).
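The collocation-plus-Newton step can be sketched for the shifted Legendre special case \(\alpha =\beta =0\), \(L=1\), on a model problem of our own choosing: \(D^{\nu }u+u^2=g\) on \([0,1]\) with \(0<\nu \le 1\) (so \(m=1\)), \(u(0)=0\), and exact solution \(u(x)=x^2\). The Caputo derivatives of the basis at the nodes are evaluated exactly from the power form rather than through the SJOM of Eqs. (6.5)–(6.6), and Newton's method uses the analytic Jacobian. The nonlinearity, the test problem and all names are assumptions for illustration.

```python
import math
import numpy as np

def sleg_coeffs(i):
    """Power coefficients of the shifted Legendre polynomial P*_i(x) = P_i(2x - 1) on [0, 1]."""
    return np.array([(-1) ** (i + k) * math.factorial(i + k)
                     / (math.factorial(i - k) * math.factorial(k) ** 2) for k in range(i + 1)])

def basis_matrices(x, N, nu):
    """B[k, i] = P*_i(x_k) and D[k, i] = (Caputo D^nu P*_i)(x_k), for 0 < nu <= 1."""
    B = np.zeros((len(x), N + 1))
    D = np.zeros((len(x), N + 1))
    for i in range(N + 1):
        for k, a in enumerate(sleg_coeffs(i)):
            B[:, i] += a * x ** k
            if k >= 1:  # the Caputo derivative of a constant vanishes
                D[:, i] += a * math.gamma(k + 1) / math.gamma(k + 1 - nu) * x ** (k - nu)
    return B, D

def collocation_newton(N, nu, g, u0, tol=1e-13, max_iter=50):
    """Collocation sketch for D^nu u + u^2 = g on [0, 1], u(0) = u0 (m = 1), solved by Newton."""
    t, _ = np.polynomial.legendre.leggauss(N)   # N - m + 1 = N roots of P_N on [-1, 1]
    x = (t + 1) / 2                             # roots of the shifted polynomial P*_N
    B, D = basis_matrices(x, N, nu)
    b0 = np.array([(-1.0) ** i for i in range(N + 1)])   # P*_i(0) = (-1)^i
    c = np.zeros(N + 1)
    for _ in range(max_iter):
        u = B @ c
        F = np.append(D @ c + u ** 2 - g(x), b0 @ c - u0)   # N + 1 nonlinear equations
        J = np.vstack([D + 2 * u[:, None] * B, b0])         # their Jacobian with respect to c
        step = np.linalg.solve(J, -F)
        c += step
        if np.max(np.abs(step)) < tol:
            break
    return c

def g(x, nu=0.5):
    # manufactured right-hand side for the exact solution u(x) = x^2
    return 2 * x ** (2 - nu) / math.gamma(3 - nu) + x ** 4

print(collocation_newton(3, 0.5, g, u0=0.0))
```

Because \(x^2=\tfrac13P^*_0+\tfrac12P^*_1+\tfrac16P^*_2\) lies in the trial space, Newton's iteration started from zero should converge to these coefficients (with a zero coefficient for \(P^*_3\)).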

Remark 3

To treat the nonlinear FDE (6.43) with boundary conditions (6.10), we apply the same technique, with Eq. (6.45) replaced by (6.11). Collocating at the \( N-m+1 \) nodes with the aid of the SJOM of fractional derivatives yields a system of \( N+1 \) nonlinear algebraic equations, which may be solved by Newton's iterative method.

6.3.2 Collocation method based on BOM

To illustrate the BOM of fractional derivatives, we apply it to the multi-order fractional differential equation

with boundary or supplementary conditions

where \(0\le p<\max \{\beta _{i},\ i=1,\ldots ,k\}\le p+1\), \(\xi _{i}\in [0,1]\) for \(i=0,\ldots ,p\), the \(H_i\) are linear combinations of \(u(\xi _i),u^{\prime }(\xi _i),\ldots ,u^{(p)}(\xi _i)\), and \(u(x)\in L^{2}[0,1]\). Note that, in general, \(F\) can be nonlinear.

We approximate \(u(x)\) by Bernstein polynomials as

where \(C=[c_0,\ldots ,c_N]^{T}\) is the unknown coefficient vector. Using Eqs. (4.53) and (6.48), we have

Substituting these expressions into Eq. (6.46), we get

Similarly, substituting Eq. (6.48) in Eq. (6.47) yields

To find the solution \(u(x)\), we first collocate Eq. (6.50) at \( N-p \) points. As collocation points, we use

These equations, together with Eq. (6.51), generate \( N+1 \) algebraic equations, which can be solved for \(c_i,\ i=0,\ldots ,N\). Consequently, the approximate solution \(u(x)\) given in Eq. (6.48) can be computed.
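The collocation equations require the fractional derivatives \(D^{\beta _i}u_N\) at the collocation points. The sketch below evaluates the Caputo derivative of each Bernstein basis polynomial \(B_{i,N}(x)=\binom{N}{i}x^i(1-x)^{N-i}\) exactly, by expanding it in powers of \(x\) and using \(D^{\nu }x^k=\frac{\Gamma (k+1)}{\Gamma (k+1-\nu )}x^{k-\nu }\) for \(k\ge \lceil \nu \rceil \) (and zero otherwise). This is a direct evaluation, not the BOM of Eq. (4.53), which represents the derivative approximately within the Bernstein basis; it can serve as a reference when testing a BOM implementation. The function names are our own.

```python
import math
import numpy as np

def bernstein(i, N, x):
    """Bernstein basis polynomial B_{i,N}(x) = C(N,i) x^i (1-x)^(N-i) on [0, 1]."""
    x = np.asarray(x, dtype=float)
    return math.comb(N, i) * x ** i * (1 - x) ** (N - i)

def bernstein_caputo(i, N, nu, x):
    """Caputo derivative of order nu of B_{i,N}, via the power form
    B_{i,N}(x) = sum_{k=i}^{N} C(N,i) C(N-i,k-i) (-1)^(k-i) x^k."""
    m = math.ceil(nu)
    x = np.asarray(x, dtype=float)
    result = np.zeros_like(x)
    for k in range(max(i, m), N + 1):   # powers k < ceil(nu) are annihilated
        a = math.comb(N, i) * math.comb(N - i, k - i) * (-1) ** (k - i)
        result += a * math.gamma(k + 1) / math.gamma(k + 1 - nu) * x ** (k - nu)
    return result

def caputo_of_expansion(c, nu, x):
    """D^nu u_N(x) for u_N = sum_i c_i B_{i,N}, with N = len(c) - 1."""
    N = len(c) - 1
    return sum(ci * bernstein_caputo(i, N, nu, x) for i, ci in enumerate(c))

x = np.linspace(0.0, 1.0, 6)
N = 5
c = [i / N for i in range(N + 1)]   # u_N(x) = x written in the Bernstein basis
print(np.allclose(sum(ci * bernstein(i, N, x) for i, ci in enumerate(c)), x))
print(caputo_of_expansion(c, 0.5, x) - x ** 0.5 / math.gamma(1.5))   # differences near zero
```

Since \(\sum _iB_{i,N}\equiv 1\) and \(\sum _i\frac{i}{N}B_{i,N}(x)=x\), the derivative of the first expansion must vanish and that of the second must equal \(x^{1-\nu }/\Gamma (2-\nu )\), which gives simple checks on the routine.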

6.4 Collocation method for nonlinear FDEs in a semi-infinite interval

In this section, we apply the MGLOM of fractional derivatives to nonlinear multi-order FDEs. For nonlinear multi-order fractional initial value problems on the interval \(\varLambda \), we propose a spectral modified generalized Laguerre collocation method based on the MGLOM to find the approximate solution \(u_N (x)\).

Consider the nonlinear FDE

with initial conditions (6.13), where \(F\) can be nonlinear in general.

To use modified generalized Laguerre polynomials for this problem, we first approximate \(u(x)\), \(D^\nu u(x)\) and \(D^{\beta _j}u(x)\), for \(j=1,\ldots ,k\), as in Eqs. (6.14), (6.16) and (6.17), respectively. Therefore, Eq. (6.53) can be written as

The numerical treatment of the initial conditions as given in Eq. (6.13) yields

To find the approximate solution \(u_N(x)\), we first collocate Eq. (6.54) at \( N-m+1 \) points. As collocation points, we use the \( N-m+1 \) roots of the modified generalized Laguerre polynomial \(L^{(\alpha ,\beta )}_{N-m+1}(x)\). These equations, together with Eq. (6.55), generate \( N+1 \) nonlinear equations, which can be solved by Newton's iterative method. This yields the approximate solution \(u_N(x)\).
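The collocation nodes can be computed without root-finding on a power form. Assuming \(L_n^{(\alpha ,\beta )}(x)=L_n^{(\alpha )}(\beta x)\), the roots are those of the classical generalized Laguerre polynomial divided by \(\beta \), and the latter are the eigenvalues of the symmetric tridiagonal Jacobi matrix of the monic three-term recurrence (the Golub–Welsch approach). A minimal sketch with NumPy; the function name is our own:

```python
import numpy as np

def mgl_roots(n, alpha, beta):
    """Roots of L_n^{(alpha,beta)}(x), assuming L_n^{(alpha,beta)}(x) = L_n^{(alpha)}(beta*x).

    The roots of L_n^{(alpha)} are the eigenvalues of the Jacobi matrix of the monic recurrence
    p_{k+1}(t) = (t - (2k + alpha + 1)) p_k(t) - k (k + alpha) p_{k-1}(t);
    dividing by beta maps them to the modified polynomials.
    """
    k = np.arange(n)
    diag = 2 * k + alpha + 1
    off = np.sqrt(k[1:] * (k[1:] + alpha))
    J = np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)
    return np.linalg.eigvalsh(J) / beta   # eigenvalues in ascending order

print(mgl_roots(2, 0.0, 1.0))   # roots of L_2(x) = 1 - 2x + x^2/2
```

For the scheme above one would call `mgl_roots(N - m + 1, alpha, beta)`. For example, `mgl_roots(2, 0.0, 1.0)` should return \(2\mp \sqrt{2}\), the roots of \(L_2(x)=1-2x+x^2/2\).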

Corollary 6

In particular, the generalized Laguerre polynomials, denoted by \(L^{(\alpha )}_i(x)\), are obtained directly as the special case \(\beta =1\) of the modified generalized Laguerre polynomials (see [66]).

7 Fractional generalized Laguerre functions for systems of FDEs

The fractional-order generalized Laguerre functions (FGLFs) are defined from the modified generalized Laguerre polynomials by taking \(\beta =1\) and introducing the change of variable \( t=x^\lambda \), \(\lambda >0\). The FGLF \(L^{(\alpha )}_i{(x^\lambda )}\) is denoted by \( L^{(\alpha ,\lambda )}_{i}{(x)}\).
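Numerically, an FGLF is simply a generalized Laguerre polynomial evaluated at \(x^\lambda \). A short sketch using the standard three-term recurrence \((k+1)L_{k+1}^{(\alpha )}(t)=(2k+\alpha +1-t)L_k^{(\alpha )}(t)-(k+\alpha )L_{k-1}^{(\alpha )}(t)\); the function name is our own:

```python
import numpy as np

def fglf(i, alpha, lam, x):
    """Fractional-order generalized Laguerre function L_i^{(alpha,lam)}(x) = L_i^{(alpha)}(x**lam)."""
    t = np.asarray(x, dtype=float) ** lam
    prev, curr = np.ones_like(t), 1 + alpha - t      # L_0 and L_1 evaluated at t
    if i == 0:
        return prev
    for k in range(1, i):
        # (k+1) L_{k+1}(t) = (2k + alpha + 1 - t) L_k(t) - (k + alpha) L_{k-1}(t)
        prev, curr = curr, ((2 * k + alpha + 1 - t) * curr - (k + alpha) * prev) / (k + 1)
    return curr

x = np.linspace(0.0, 4.0, 5)
print(fglf(2, 0.0, 0.5, x))   # equals 1 - 2*sqrt(x) + x/2
```

For instance, with \(\alpha =0\) and \(\lambda =\tfrac12\), \(L_2^{(0,1/2)}(x)=1-2\sqrt{x}+x/2\); the recurrence avoids forming the power series, which is better conditioned for larger \(i\).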

We use the fractional-order generalized Laguerre collocation (FGLC) method (see [97]) to numerically solve the general system of nonlinear FDEs

with initial conditions

where \(0<\nu _i\le 1 \).

Let

The fractional derivatives \(D^{\nu _i}u_i(x)\) can be expressed in terms of the expansion coefficients \(a_{ij}\) using (4.39) with \(\beta =1\). Implementing the fractional-order generalized Laguerre collocation method for (7.1)–(7.2) amounts to finding \(u_{iN}(x) \in S_N(\varLambda )\) such that

is satisfied exactly at the collocation points \(x^{(\alpha , \lambda )}_{i, N,k},\ k=0,1, \ldots ,N-1\), \(i=1, \ldots ,n,\) which immediately yields

with (7.2) written in the form

Thus, the system (7.1) with its initial conditions is reduced to a system of \(n(N+1)\) nonlinear algebraic equations (7.5)–(7.6), which may be solved by any standard iterative technique.

8 Applications and numerical results

This section presents numerical results obtained with the algorithms described in the previous sections. Comparisons with results obtained by other methods show that the spectral methods are effective and convenient.

Example 1

Consider the inhomogeneous Bagley–Torvik equation (see [68])

where \(g(x)=1+x\).

The exact solution of this problem is \(u(x)=1+x\).

Applying the technique described in Sect. 6.1.1 with \(N=2\), we may write the approximate solution and the right-hand side in the forms

Here, the operational matrices corresponding to Eq. (8.1) can be written as follows

where \(g_j\ \text {and}\ \varDelta _{\nu }(i,j)\) are computed from Eqs. (4.14) and (4.21), respectively.

First, applying the tau method to (8.1) (see Eq. (6.8)) gives

Second, applying Eq. (6.9) to the initial conditions yields

Finally, solving the linear algebraic equations (8.2)–(8.4), we obtain the approximate solution

which is the exact solution of the problem.

Table 1 lists the three coefficients \(c_0,\ c_1\) and \(c_2\) for various choices of \(\alpha \) and \(\beta \). For each choice of the Jacobi parameters \(\alpha \) and \(\beta \), the exact solution is recovered.

Remark 4

In the case \(\alpha =\beta =0\) and \(L=1\), this result agrees completely with that obtained by Saadatmandi and Dehghan (see [68], Example 1).

Example 2

Consider the FDE (see [68])

where the second initial condition applies only for \(\nu >1\).

The exact solution is (see [24])

where

is the generalized Mittag-Leffler function.
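To evaluate exact solutions of this type, recall that the two-parameter (generalized) Mittag-Leffler function is \(E_{a,b}(z)=\sum _{k=0}^{\infty }z^k/\Gamma (ak+b)\) for \(a>0\), \(b>0\), with \(E_a=E_{a,1}\). We write \(a,b\) to avoid a clash with the Jacobi parameters \(\alpha ,\beta \); the symbols in the displayed formula above may differ. A minimal truncated-series evaluator, adequate for moderate \(|z|\) (for large negative \(z\) the alternating series loses accuracy to cancellation and dedicated algorithms are preferable); the function name is our own:

```python
import math

def mittag_leffler(z, a, b=1.0, tol=1e-16, max_terms=2000):
    """Two-parameter Mittag-Leffler function E_{a,b}(z) = sum_k z^k / Gamma(a*k + b).

    Sketch only: plain power series, intended for moderate |z|.
    Assumes a > 0 and b > 0 so that every Gamma argument is positive.
    """
    if z == 0:
        return 1.0 / math.gamma(b)
    log_abs_z = math.log(abs(z))
    sign = 1.0 if z > 0 else -1.0
    total = 0.0
    for k in range(max_terms):
        # |z|^k / Gamma(a k + b) evaluated in log space to avoid overflow of Gamma
        term = sign ** k * math.exp(k * log_abs_z - math.lgamma(a * k + b))
        total += term
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total

print(mittag_leffler(1.0, 1.0), math.e)   # E_{1,1}(z) = exp(z)
```

Classical special cases give simple checks: \(E_{1,1}(z)=\hbox {e}^z\), \(E_{2,1}(-z^2)=\cos z\), \(E_{1,2}(z)=(\hbox {e}^z-1)/z\) and \(E_{1/2,1}(z)=\hbox {e}^{z^2}\mathrm{erfc}(-z)\).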

The solution of this problem is obtained by applying the technique described in Sect. 6.1.1. The maximum absolute errors for \(\nu =0.85\) and various choices of \(N,\alpha \) and \(\beta \) are listed in Table 2, which shows that a good approximation to the exact solution is achieved with only a few terms of the shifted Jacobi polynomials. The maximum absolute errors for \(N=10\) and different values of \(\nu ,\ \alpha \) and \(\beta \) are shown in Table 3.

Remark 5

In the case \(\alpha =\beta =0\) and \(L=1\), this result agrees completely with that obtained by Saadatmandi and Dehghan (see [68]).

Example 3

Consider the equation

whose exact solution is given by \(u(x)=x^3.\)

By applying the technique described in Sect. 6.1.2 with \(N=3\) and \(x\in \varLambda \), we approximate the solution as

Here, we have

where \(g_{j}\) and \(\varOmega _{\nu }(i,j)\) are computed from Eqs. (4.35) and (4.40), respectively.

Therefore, using Eq. (6.19), we obtain

Now, by applying Eq. (6.20) we have

Finally, solving Eqs. (8.9)–(8.11) gives the four unknown coefficients for various choices of \(\alpha \) and \(\beta \), which are listed in Table 4. Then, we get

Thus, we can write
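Because \(u(x)=x^3\) is a cubic polynomial, it lies in the span of \(L_0^{(\alpha ,\beta )},\ldots ,L_3^{(\alpha ,\beta )}\), which is consistent with the tau solution for \(N=3\) reproducing it exactly. Its exact expansion coefficients follow from the classical inversion formula \(x^n=n!\sum _{k=0}^{n}(-1)^k\binom{n+\alpha }{n-k}L_k^{(\alpha )}(x)\) together with a rescaling by \(\beta ^{-n}\), assuming \(L_k^{(\alpha ,\beta )}(x)=L_k^{(\alpha )}(\beta x)\); they offer an independent check on coefficients such as those in Table 4. A short sketch (function names are our own):

```python
import math

def gbinom(a, b):
    """Generalized binomial coefficient C(a, b) = Gamma(a+1) / (Gamma(b+1) Gamma(a-b+1))."""
    return math.gamma(a + 1) / (math.gamma(b + 1) * math.gamma(a - b + 1))

def monomial_in_mgl(n, alpha, beta):
    """Coefficients c_k such that x^n = sum_{k=0}^{n} c_k L_k^{(alpha,beta)}(x)."""
    return [math.factorial(n) * (-1) ** k * gbinom(n + alpha, n - k) / beta ** n
            for k in range(n + 1)]

print(monomial_in_mgl(3, 0.0, 1.0))   # Laguerre case alpha = 0, beta = 1
```

In the Laguerre case \(\alpha =0\), \(\beta =1\) this gives \(x^3=6L_0-18L_1+18L_2-6L_3\).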

Example 4

Consider the following linear boundary value problem

The exact solution of this problem is \(u(x)= \sqrt{\pi (x+1)}\).

Now, we apply the collocation technique based on the BOM, described in Sect. 6.3.2, to Eq. (8.12). The \(L_{\infty }\) and \(L_2\) errors for different values of \(n\) are presented in Table 5, together with a comparison with the method based on linear B-spline functions (see [78]). The method of [78] requires the solution of rather large systems of algebraic equations to obtain accuracy of comparable order: the BOM method leads to \( N+1 \) algebraic equations, whereas the method of [78] requires \( 2^N+1 \) algebraic equations, which increases the computational time.

Example 5

Consider the following initial value problem

whose exact solution is given by \(u(x)=x^3.\)

Applying the technique described in Sect. 6.2.1, using the SJOM of fractional integration with \(N=3\), we may write the approximate solution and the right-hand side in the form

From Eq. (5.6) one can write

where \(\varUpsilon _{\frac{3}{2}}(i,j,\alpha ,\beta )\) is given in Eq. (5.7) and

Making use of (6.28) and (6.30) yields

Applying Eq. (6.31) for the initial conditions gives

Finally, solving Eqs. (8.14)–(8.16) gives the approximate solution. Special cases follow directly: the ultraspherical basis is obtained for \(\alpha =\beta \) (each replaced by \(\alpha -\frac{1}{2}\)), the Chebyshev bases of the first, second, third and fourth kinds for \(\alpha =\beta =\mp \frac{1}{2}\) and \(\alpha =-\beta =\pm \frac{1}{2}\), respectively, and the shifted Legendre basis for \(\alpha =\beta =0\). We present some of these special cases.

Case 1. If \(\alpha =\beta =0\), then

and the approximate solution is given by

which is the exact solution.

Case 2. If we choose \(\alpha =-\frac{1}{2}, \beta =\frac{1}{2}\), then

and

which is the exact solution.

Case 3. In the case of \(\alpha =\frac{1}{2}, \beta =-\frac{1}{2}\), we have

and

which is the exact solution.

Example 6

Consider the equation

whose exact solution is given by \(u(x)=x^3.\)

Now, we apply the technique described in Sect. 6.2.1, using the SJOM of fractional integration with \(N=3\). The approximate solutions obtained by the proposed method for some special cases of \(\alpha \) and \(\beta \) are given in the following cases.

Case 1. If \(\alpha =\beta =0\), then

and

which is the exact solution.

Case 2. If \(\alpha =-\frac{1}{2}, \beta =\frac{1}{2}\), then

and

which is the exact solution.

Case 3. If \(\alpha =\frac{1}{2}, \beta =-\frac{1}{2}\), then

and

which is the exact solution.

Case 4. If \(\alpha =\beta =-\frac{1}{2}\), then

and

which is the exact solution.

Example 7

Consider the following fractional initial value problem

whose exact solution is given by \(u(x)=\hbox {e}^{\gamma x}.\)

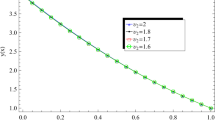

The solution of this problem is obtained by applying the technique described in Sect. 6.2.2 using MGLOM of fractional integration. The maximum absolute errors for \(\gamma = 0.01\) and various choices of \(N\), \(\alpha \) and \(\beta \) are listed in Table 6, which shows that a good approximation to the exact solution is achieved with only a few terms of the modified generalized Laguerre polynomials. The maximum absolute errors for \(N = 10\) and different values of \(\gamma \), \(\alpha \) and \(\beta \) are displayed in Table 7.

Example 8

Consider the following initial value problem of multi-term nonlinear FDE

The exact solution of this problem is \(u(x) = x^3\).

Table 8 lists the maximum absolute errors obtained with the collocation technique based on SJOM (Sect. 6.3.1) for \(\zeta =2.5,\ \eta =1.5,\ \theta =0.9\) and various choices of \(\alpha ,\ \beta \) and \(N\). Table 9 lists the maximum absolute errors for four different choices of \( N,\ \zeta ,\ \eta ,\ \theta \) with \( \alpha =\beta =1.5\). Table 9 shows that as \(\zeta ,\ \eta \) and \(\theta \) approach integer values, the solution of the FDE approaches that of the corresponding integer-order differential equation, and the approximate solutions become more accurate.

Example 9

Consider the following nonlinear initial value problem

where

and the exact solution is given by \(u(x)=\cos (\gamma x).\)

The solution of this problem is obtained by applying the technique described in Sect. 6.2.2 to Eq. (8.14) with \(\alpha =0\) and \(\beta =1\), that is, using the LOM. The maximum absolute errors for \(\gamma = \frac{1}{30}\) and \(\gamma = \frac{1}{100}\) with various choices of \(N\) and \(\nu \) are shown in Tables 10 and 11, respectively.

Example 10

Finally, we consider the following nonlinear boundary value problem

The exact solution of this problem is \(u(x)=x^2+1\).

We apply the method presented in Sect. 6.3.2 in which we use the collocation method based on BOM of fractional derivative. In Table 12, we compare the \(L_\infty (0,1)\) and \(L_2(0,1)\) errors of the BOM algorithm with the method proposed in [78].

Example 11

Consider the FDE

The exact solution is given by \( u(x)=x^2 \).

We convert Eq. (8.21) into a system of FDEs by setting \(u_1(x)=u(x)\) and obtain

with initial conditions

The maximum absolute errors for \(u(x)=u_1(x)\) obtained by the FGLC method with \(N=4\) and various choices of \(\alpha \) are shown in Table 13, which shows that the approximate solutions are in complete agreement with the exact solution.

9 Conclusion

In this article, we have presented a broad discussion of spectral techniques based on operational matrices of fractional derivatives and integrals for several polynomial families, such as the Legendre, Chebyshev, Jacobi, Bernstein, Laguerre, generalized Laguerre and modified generalized Laguerre polynomials, and of their use with numerical techniques for solving fractional differential equations on finite and semi-infinite intervals.

Efficient numerical schemes for FDEs, based on spectral methods in combination with operational matrices, were investigated. Comparisons of the approximate solutions obtained by spectral methods with the exact solutions, and with approximate solutions obtained by other methods, were presented to confirm the validity and applicability of spectral techniques based on operational matrices. The proposed methods can be extended to time-dependent FDEs.

References

Machado, J.A.T.: The effect of fractional order in variable structure control. Comput. Math. Appl. 64, 3340–3350 (2012)

Gutierrez, R.E., Rosario, J.M., Machado, J.A.T.: Fractional order calculus: basic concepts and engineering applications. Math. Prob. Eng. (2010). Article ID 375858, 19

Pinto, C.M.A., Tenreiro Machado, J.A.: Complex order van der Pol oscillator. Nonlinear Dyn. 65, 247–254 (2011)

Bota, C., Caruntu, B.: Approximate analytical solutions of the fractional-order brusselator system using the polynomial least squares method. Adv. Math. Phys. (2015). Article ID 450235

Liu, Y., Fang, Z., Li, H., He, S.: A mixed finite element method for a time-fractional fourth-order partial differential equation. Appl. Math. Comput. 243, 703–717 (2014)

Merdan, M.: On the solutions of time-fractional generalized Hirota–Satsuma coupled-KDV equation with modified Riemann–Liouville derivative by an analytical technique. Proc. Rom. Acad. A 16, 3–10 (2015)

Inc, M., Kilic, B.: Classification of traveling wave solutions for time-fractional fifth-order KdV-like equation. Waves Random Complex Media 24, 393–403 (2014)

Katsikadelis, J.T.: Numerical solution of distributed order fractional differential equations. J. Comput. Phys. 259, 11–22 (2014)

Daftardar-Gejji, V., Sukale, Y., Bhalekar, S.: A new predictor–corrector method for fractional differential equations. Appl. Math. Comput. 244, 158–182 (2014)

Biswas, A., Bhrawy, A.H., Abdelkawy, M.A., Alshaery, A.A., Hilal, E.M.: Symbolic computation of some nonlinear fractional differential equations. Rom. J. Phys. 59(5–6), 433–442 (2014)

Parvizi, M., Eslahchi, M.R., Dehghan, M.: Numerical solution of fractional advection–diffusion equation with a nonlinear source term. Numer. Algorithms 68, 601–629 (2015)

Nagy, A.M., Sweilam, N.H.: An efficient method for solving fractional Hodgkin–Huxley model. Phys. Lett. A 378(30), 1980–1984 (2014)

Sadati, S.J., Ghaderi, R., Ranjbar, N.: Some fractional comparison results and stability theorem for fractional time delay systems. Rom. Rep. Phys. 65, 94–102 (2013)

Leo, R.A., Sicuro, G., Tempesta, P.: A theorem on the existence of symmetries of fractional PDEs. C. R. Acad. Sci. Paris Ser. I 352, 219–222 (2014)

Gaur, M., Singh, K.: On group invariant solutions of fractional order Burgers–Poisson equation. Appl. Math. Comput. 244, 870–877 (2014)

Wang, G.W., Xu, T.Z.: The improved fractional sub-equation method and its applications to nonlinear fractional partial differential equations. Rom. Rep. Phys. 66(3), 595–602 (2014)

Liu, Z., Lu, P.: Stability analysis for HIV infection of CD4+ T-cells by a fractional differential time-delay model with cure rate. Adv. Differ. Equ. 2014, 298 (2014)

Huang, Q., Zhdanov, R.: Symmetries and exact solutions of the time fractional Harry–Dym equation with Riemann–Liouville derivative. Physica A 409, 110–118 (2014)

Takači, D., Takači, A., Takači, A.: On the operational solutions of fuzzy fractional differential equations. Frac. Calc. Appl. Anal. 17, 1100–1113 (2014)

Ghomashi, A., Salahshour, S., Hakimzadeh, A.: Approximating solutions of fully fuzzy linear systems: a financial case study. J. Intell. Fuzzy Syst. 26, 367–378 (2014)

Al-Khaled, K.: Numerical solution of time-fractional partial differential equations using sumudu decomposition method. Rom. J. Phys. 60, 99–110 (2015)

Bhrawy, A.H., Zaky, M.A.: A method based on the Jacobi tau approximation for solving multi-term time-space fractional partial differential equations. J. Comput. Phys. 281, 876–895 (2015)

Gao, F., Lee, X., Fei, F., Tong, H., Deng, Y., Zhao, H.: Identification time-delayed fractional order chaos with functional extrema model via differential evolution. Expert Syst. Appl. 41, 1601–1608 (2014)

Diethelm, K., Ford, N.J., Freed, A.D.: A predictor–corrector approach for the numerical solution of fractional differential equation. Nonlinear Dyn. 29, 3–22 (2002)

Guner, O., Cevikel, A.C.: A procedure to construct exact solutions of nonlinear fractional differential equations. Sci. World J. (2014). Article ID 489495, 10 pp

Xie, W., Xiao, J., Luo, Z.: Existence of extremal solutions for nonlinear fractional differential equation with nonlinear boundary conditions. Appl. Math. Lett. 41, 46–51 (2015)

Benchohra, M., Lazreg, J.E.: Existence and uniqueness results for nonlinear implicit fractional differential equations with boundary conditions. J. Math. Comput. Sci. 4, 60–72 (2014)

Dehghan, M., Abbaszadeh, M., Mohebbi, A.: Error estimate for the numerical solution of fractional reaction–subdiffusion process based on a meshless method. J. Comput. Appl. Math. 280, 14–36 (2015)

Ye, H., Liu, F., Anh, V., Turner, I.: Maximum principle and numerical method for the multi-term time–space Riesz–Caputo fractional differential equations. Appl. Math. Comput. 277, 531–540 (2014)

Butera, S., Paola, M.D.: Mellin transform approach for the solution of coupled systems of fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 20, 32–38 (2015)

Zhang, X.: Positive solutions for a class of singular fractional differential equation with infinite-point boundary value conditions. Appl. Math. Lett. 39, 22–27 (2015)

Pedas, A., Tamme, E.: Numerical solution of nonlinear fractional differential equations by spline collocation methods. J. Comput. Appl. Math. 255, 216–230 (2014)

Deng, J., Deng, Z.: Existence of solutions of initial value problems for nonlinear fractional differential equations. Appl. Math. Lett. (2014). doi:10.1016/j.aml.2014.02.001

Dehghan, M., Abbaszadeh, M., Mohebbi, A.: An implicit RBF meshless approach for solving the time fractional nonlinear sine-Gordon and Klein–Gordon equations. Eng. Anal. Bound. Elem. 50, 412–434 (2015)

Gao, F., Lee, X., Tong, H., Fei, F., Zhao, H.: Identification of unknown parameters and orders via cuckoo search oriented statistically by differential evolution for noncommensurate fractional-order chaotic systems. Abstr. Appl. Anal. (2013). Article ID 382834

Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods: Fundamentals in Single Domains. Springer, New York (2006)

Abdelkawy, M.A., Ahmed, E.A., Sanchez, P.: A method based on Legendre pseudo-spectral approximations for solving inverse problems of parabolic types equations. Math. Sci. Lett. 4, 81–90 (2015)

Bhrawy, A.H., Doha, E.H., Baleanu, D., Ezz-Eldien, S.S., Abdelkawy, M.A.: An accurate numerical technique for solving fractional optimal control problems. Proc. Rom. Acad. A 16, 47–54 (2015)

Xu, Q., Hesthaven, J.S.: Stable multi-domain spectral penalty methods for fractional partial differential equations. J. Comput. Phys. 257, 241–258 (2014)

Chen, F., Xu, Q., Hesthaven, J.S.: A multi-domain spectral method for time-fractional differential equations. J. Comput. Phys. (2015). doi:10.1016/j.jcp.2014.10.016

Doha, E.H., Bhrawy, A.H., Hafez, R.M., Abdelkawy, M.A.: A Chebyshev-Gauss-Radau scheme for nonlinear hyperbolic system of first order. Appl. Math. Inf. Sci. 8(2), 535–544 (2014)

Zayernouri, M., Em Karniadakis, G.: Exponentially accurate spectral and spectral element methods for fractional ODEs. J. Comput. Phys. 257, 460–480 (2014)

Parand, K., Nikarya, M.: Application of Bessel functions for solving differential and integro-differential equations of the fractional order. Appl. Math. Model. 38, 4137–4147 (2014)

Doha, E.H., Abd-Elhameed, W.M., Bassuony, M.A.: On using third and fourth kinds Chebyshev operational matrices for solving Lane–Emden type equations. Rom. J. Phys. 60, 3–4 (2015)

Keshavarz, E., Ordokhani, Y., Razzaghi, M.: Bernoulli wavelet operational matrix of fractional order integration and its applications in solving the fractional order differential equations. Appl. Math. Model. 38, 6038–6051 (2014)

Yang, L., Shen, C., Xie, D.: Multiple positive solutions for nonlinear boundary value problem of fractional order differential equation with the Riemann–Liouville derivative. Adv. Diff. Equ 2014, 284 (2014)

Doha, E.H., Bhrawy, A.H., Baleanu, D., Abdelkawy, M.A.: Numerical treatment of coupled nonlinear hyperbolic Klein–Gordon equations. Rom. J. Phys. 59, 247–264 (2014)

Ilati, M., Dehghan, M.: The use of radial basis functions (RBFs) collocation and RBF-QR methods for solving the coupled nonlinear sine-Gordon equations. Eng. Anal. Bound. Elem. 52, 99–109 (2015)

Bhrawy, A.H., Doha, E.H., Ezz-Eldien, S.S., Abdelkawy, M.A.: A numerical technique based on the shifted Legendre polynomials for solving the time-fractional coupled KdV equations. Calcolo (2015). doi:10.1007/s10092-014-0132-x

Zhang, H., Yang, X., Han, X.: Discrete-time orthogonal spline collocation method with application to two-dimensional fractional cable equation. Comput. Math. Appl. 68, 1710–1722 (2014)

Yang, Y., Chen, Y., Huang, Y.: Convergence analysis of the Jacobi spectral-collocation method for fractional integro-differential equations. Acta Math. Sci. 34, 673–690 (2014)

Ma, X., Huang, C.: Spectral collocation method for linear fractional integro-differential equations. Appl. Math. Model. 38, 1434–1448 (2014)

Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: A Chebyshev spectral method based on operational matrix for initial and boundary value problems of fractional order. Comput. Math. Appl. 62, 2364–2373 (2011)

Saadatmandi, A., Dehghan, M.: A method based on the tau approach for the identification of a time-dependent coefficient in the heat equation subject to an extra measurement. J. Vib. Control 18, 1125–1132 (2012)

Bhrawy, A.H., Zaky, M.A., Machado, J.A.T.: Efficient Legendre spectral tau algorithm for solving two-sided space-time Caputo fractional advection-dispersion equation. J. Vib. Control (2015). doi:10.1177/1077546314566835

Lau, S.R., Price, H.: Sparse spectral-tau method for the three-dimensional helically reduced wave equation on two-center domains. J. Comput. Phys. 231, 7695–7714 (2012)

Zayernouri, M., Ainsworth, M., Em Karniadakis, G.: A unified Petrov-Galerkin spectral method for fractional PDEs. Comput. Methods Appl. Mech. Eng. (2014). doi:10.1016/j.cma.2014.10.051

Dehghan, M., Salehi, R.: A meshless local Petrov–Galerkin method for the time-dependent Maxwell equations. J. Comput. Appl. Math. 268, 93–110 (2014)

Yang, Y.: Jacobi spectral Galerkin methods for fractional integro-differential equations. Calcolo (2014). doi:10.1007/s10092-014-0128-6

Bhrawy, A.H.: An efficient Jacobi pseudospectral approximation for nonlinear complex generalized Zakharov system. Appl. Math. Comput. 247, 30–46 (2014)

Doha, E.H., Bhrawy, A.H., Abdelkawy, M.A., Hafez, R.M.: A Jacobi collocation approximation for nonlinear coupled viscous Burgers’ equation. Cent. Eur. J. Phys. 12, 111–122 (2014)

Doha, E.H., Bhrawy, A.H., Saker, M.A.: On the derivatives of Bernstein polynomials: an application for the solution of high even-order differential equations. Bound. Value Probl. (2011). doi:10.1155/2011/829543

Bhrawy, A.H., Zaky, M.A.: Numerical simulation for two-dimensional variable-order fractional nonlinear cable equation. Nonlinear Dyn. 80, 101–116 (2015)

Ozarslan, M.A.: On a singular integral equation including a set of multivariate polynomials suggested by Laguerre polynomials. Appl. Math. Comput. 229, 350–358 (2014)

Driver, K., Muldoon, M.E.: Common and interlacing zeros of families of Laguerre polynomials. J. Approx. Theory (2014). doi:10.1016/j.jat.2013.11.013

Baleanu, D., Bhrawy, A.H., Taha, T.M.: Two efficient generalized Laguerre spectral algorithms for fractional initial value problems. Abstr. Appl. Anal. (2013). doi:10.1155/2013/546502

Bhrawy, A.H., Alghamdi, M.M., Taha, T.M.: A new modified generalized Laguerre operational matrix of fractional integration for solving fractional differential equations on the half line. Adv. Differ. Equ. 2012, 179 (2012)

Saadatmandi, A., Dehghan, M.: A new operational matrix for solving fractional-order differential equations. Comput. Math. Appl. 59, 1326–1336 (2010)

Doha, E.H., Bhrawy, A.H., Ezz-Eldien, S.S.: A new Jacobi operational matrix: an application for solving fractional differential equations. Appl. Math. Model. 36, 4931–4943 (2012)

Kazem, S., Abbasbandy, S., Kumar, S.: Fractional-order Legendre functions for solving fractional-order differential equations. Appl. Math. Model. 37, 5498–5510 (2013)

Yin, F., Song, J., Wu, Y., Zhang, L.: Numerical solution of the fractional partial differential equations by the two-dimensional fractional-order Legendre functions. Abstr. Appl. Anal. (2013). Article ID 562140, 13 pages

Ahmadian, A., Suleiman, M., Salahshour, S.: An operational matrix based on Legendre polynomials for solving fuzzy fractional-order differential equations. Abstr. Appl. Anal. (2013). Article ID 505903 29

Ishteva, M., Boyadjiev, L.: On the C-Laguerre functions. C.R. Acad. Bulg. Sci. 58(9), 1019–1024 (2005)

Ishteva, M., Boyadjiev, L., Scherer, R.: On the Caputo operator of fractional calculus and C-Laguerre functions. Math. Sci. Res. 9(6), 161–170 (2005)

Yin, F., Song, J., Leng, H., Lu, F.: Couple of the variational iteration method and fractional-order Legendre functions method for fractional differential equations. Sci. World J. (2014). Article ID 928765, 9 pp

Dehghan, M., Yousefi, S.A., Lotfi, A.: The use of He's variational iteration method for solving the telegraph and fractional telegraph equations. Int. J. Numer. Methods Biomed. Eng. 27, 219–231 (2011)

Ford, N.J., Connolly, J.A.: Systems-based decomposition schemes for the approximate solution of multi-term fractional differential equations. J. Comput. Appl. Math. 229, 382–391 (2009)

Lakestani, M., Dehghan, M., Irandoust-pakchin, S.: The construction of operational matrix of fractional derivatives using B-spline functions. Commun. Nonlinear Sci. Numer. Simul. 17, 1149–1162 (2012)

Bhrawy, A.H., Tharwat, M.M., Alghamdi, M.A.: A new operational matrix of fractional integration for shifted Jacobi polynomials. Bull. Malays. Math. Sci. Soc. (2) 37(4), 983–995 (2014)

Bhrawy, A.H., Abdelkawy, M.A.: A fully spectral collocation approximation for multi-dimensional fractional Schrödinger equations. J. Comput. Phys. 294, 462–483 (2015)

Bhrawy, A.H., Alofi, A.S.: The operational matrix of fractional integration for shifted Chebyshev polynomials. Appl. Math. Lett. 26, 25–31 (2013)

Bhrawy, A.H., Zaky, M.A., Baleanu, D.: New numerical approximations for space-time fractional Burgers’ equations via a Legendre spectral-collocation method. Rom. Rep. Phys. 67(2) (2015)

Mokhtary, P.: Reconstruction of exponentially rate of convergence to Legendre collocation solution of a class of fractional integro-differential equations. J. Comput. Appl. Math. 279, 145–158 (2015)

Abdelkawy, M.A., Taha, T.M.: An operational matrix of fractional derivatives of Laguerre polynomials. Walailak J. Sci. Technol. 11, 1041–1055 (2014)

Heydari, M.H., Hooshmandasl, M.R., Mohammadi, F.: Legendre wavelets method for solving fractional partial differential equations with Dirichlet boundary conditions. Appl. Math. Comput. 234, 267–276 (2014)

Prakash, P., Harikrishnan, S., Benchohra, M.: Oscillation of certain nonlinear fractional partial differential equation with damping term. Appl. Math. Lett. 43, 72–79 (2015)

El-Wakil, S.A., Abulwafa, E.M.: Formulation and solution of space–time fractional Boussinesq equation. Nonlinear Dyn. 80, 167–175 (2015)

Stokes, P.W., Philippa, B., Read, W., White, R.D.: Efficient numerical solution of the time fractional diffusion equation by mapping from its Brownian counterpart. J. Comput. Phys. 282, 334–344 (2015)

Chen, Y., Ke, X., Wei, Y.: Numerical algorithm to solve system of nonlinear fractional differential equations based on wavelets method and the error analysis. Appl. Math. Comput. 251, 475–488 (2015)

Szegö, G.: Orthogonal Polynomials. In: American Mathematical Society Colloquium Publications, vol. 23, 4th edn. American Mathematical Society, Providence, RI (1975)

Funaro, D.: Polynomial Approximations of Differential Equations. Springer, Berlin (1992)

Doha, E.H.: On the coefficients of differentiated expansions and derivatives of Jacobi polynomials. J. Phys. A Math. Gen. 35, 3467–3478 (2002)

Doha, E.H.: On the construction of recurrence relations for the expansion and connection coefficients in series of Jacobi polynomials. J. Phys. A Math. Gen. 37, 657–675 (2004)

Luke, Y.: The Special Functions and Their Approximations, vol. 2. Academic Press, New York (1969)

Saadatmandi, A.: Bernstein operational matrix of fractional derivatives and its applications. Appl. Math. Model. 38, 1365–1372 (2014)

Diethelm, K., Ford, N.J.: Multi-order fractional differential equations and their numerical solutions. Appl. Math. Comput. 154, 621–640 (2004)

Bhrawy, A.H., Alhamed, Y.A., Baleanu, D., Al-Zahrani, A.A.: New spectral techniques for systems of fractional differential equations using fractional-order generalized Laguerre orthogonal functions. Fract. Calc. Appl. Anal. 17, 1137–1157 (2014)

Acknowledgments

The authors are very grateful to the reviewers for carefully reading this review article and for their comments and suggestions, which have improved the article.

Cite this article

Bhrawy, A.H., Taha, T.M. & Machado, J.A.T. A review of operational matrices and spectral techniques for fractional calculus. Nonlinear Dyn 81, 1023–1052 (2015). https://doi.org/10.1007/s11071-015-2087-0

DOI: https://doi.org/10.1007/s11071-015-2087-0