Abstract

In this paper, a class of Bidirectional Associative Memory (BAM) neural networks with time-varying weights and continuously distributed delays is discussed. Sufficient conditions are obtained for the existence and uniqueness of the weighted pseudo-almost periodic solution of the considered model, and numerical examples are given to show the effectiveness of the obtained results.


1 Introduction

Memory is a crucial notion that goes hand in hand with Artificial Neural Networks (ANN). Natural neural networks are usually connected in complex structures, such as recurrent synaptic connections. Unfortunately, Feed Forward Networks (FFNs) lack the dynamic memory required for certain nonlinear tasks. Recurrent ANNs, on the other hand, are characterized by feedback connections between their layers in addition to feed forward links (Ref. [27]).

Recurrent Neural Networks (RNN) were thus originally developed as a way of extending neural networks to sequential data. The recurrent connections allow RNNs to make use of previous context, which makes them more robust and better suited to modeling dynamical systems. The rich dynamics created by these connections allow signals to propagate across the different layers, which is what enables RNNs to capture nonlinear system behavior.

In practice, RNNs have excelled in tasks that require working memory, for example time series prediction, such as predicting future stock market prices from financial data or the vertical position of a levitated magnet [14], or forecasting the short-term power production of hydropower plants [21]. RNNs have also been used for path planning of autonomous robots [9] and for estimating a biped's next position at each time step in order to achieve human-like natural walking [2].

RNNs are therefore an important class of computational models inspired by the structure of the brain, and several types can be distinguished, such as Hopfield networks, Elman networks, Long Short-Term Memory (LSTM), Echo State Networks (ESN) and Bidirectional Associative Memory (BAM).

The BAM neural network models have been extensively analysed and studied as a key class of RNN (see Fig. 1). They were first introduced by Kosko [20]. The BAM neural network is composed of neurons arranged in two layers, the \(X-\)layer and \(Y-\)layer.

The neurons in one layer are fully interconnected to the neurons in the other layer. Through iterations of forward and backward information flow between the two layers, the network performs a two-way associative search for stored bipolar vector pairs and generalizes the single-layer autoassociative Hebbian correlation to a two-layer pattern-matched heteroassociative circuit. This class of networks therefore has good application prospects in pattern recognition, signal and image processing, robotics, etc. (see [5] and [26]).
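The two-way associative search described above can be illustrated with Kosko's classical discrete BAM. The sketch below is a minimal illustration, not the continuous-time model studied in this paper: the weight matrix is the Hebbian correlation sum \(W=\sum_{k}x^{(k)}(y^{(k)})^{T}\) of stored bipolar pairs, and recall alternates a forward pass \(y\leftarrow\operatorname{sgn}(W^{T}x)\) and a backward pass \(x\leftarrow\operatorname{sgn}(Wy)\) until the pair stops changing. The stored patterns, the function names and the tie rule (keep the previous state when the net input is zero) are illustrative assumptions.

```python
def sign(value, previous):
    """Bipolar threshold; keep the previous state when the net input is zero."""
    if value > 0:
        return 1
    if value < 0:
        return -1
    return previous


def hebbian_weights(pairs):
    """W[i][j] = sum_k x_k[i] * y_k[j]: Hebbian correlation of the stored pairs."""
    n, m = len(pairs[0][0]), len(pairs[0][1])
    return [[sum(x[i] * y[j] for x, y in pairs) for j in range(m)] for i in range(n)]


def recall(W, x, y, max_iter=20):
    """Alternate forward (X -> Y) and backward (Y -> X) passes until stable."""
    n, m = len(W), len(W[0])
    for _ in range(max_iter):
        new_y = [sign(sum(W[i][j] * x[i] for i in range(n)), y[j]) for j in range(m)]
        new_x = [sign(sum(W[i][j] * new_y[j] for j in range(m)), x[i]) for i in range(n)]
        if new_x == x and new_y == y:
            break
        x, y = new_x, new_y
    return x, y


# Two hypothetical stored pairs (the X patterns are orthogonal, as are the Y patterns).
x1, y1 = [1, -1, 1, -1, 1, -1], [1, 1, -1, -1]
x2, y2 = [1, 1, -1, -1, 1, 1], [1, -1, 1, -1]
W = hebbian_weights([(x1, y1), (x2, y2)])

probe = [-1, -1, 1, -1, 1, -1]          # x1 with its first component flipped
x_rec, y_rec = recall(W, probe, [1, 1, 1, 1])
print(x_rec == x1, y_rec == y1)         # True True
```

The corrupted probe is mapped to the stored association \((x^{(1)},y^{(1)})\) after one forward and one backward pass, which is the heteroassociative behaviour the paragraph describes.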

Bidirectional recurrent neural networks (BRNN) offer an elegant solution for classification and time series prediction (see [17] and [28]). The basic idea of BRNNs is to present each training sequence forwards and backwards to two separate recurrent hidden layers, both of which are connected to the same output layer.

This structure provides the output layer with complete past and future context for every point in the input sequence. BRNNs have given improved results in various domains, notably protein secondary structure prediction (see [17] and [34]) and speech processing [28]. Besides, the algorithm proposed in [30] for the BAM architecture clearly outperforms multilayered feed-forward neural network (MLFNN) architectures.
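The forward/backward scheme described above can be sketched with scalar hidden units. This is a minimal, untrained illustration under stated assumptions: tanh hidden units, and arbitrary weights (`w_in_f`, `w_rec_f`, `w_in_b`, `w_rec_b`, `v_f`, `v_b` are hypothetical names and values). Its only purpose is to show that, through the backward layer, the output at the first time step already depends on the last input.

```python
import math


def brnn_outputs(xs, w_in_f=0.8, w_rec_f=0.5, w_in_b=0.8, w_rec_b=0.5, v_f=1.0, v_b=1.0):
    """Scalar bidirectional RNN: a forward and a backward tanh layer feed one output."""
    T = len(xs)
    h_fwd = [0.0] * T
    h_bwd = [0.0] * T
    prev = 0.0
    for t in range(T):                    # forward layer: summarizes the past
        prev = math.tanh(w_in_f * xs[t] + w_rec_f * prev)
        h_fwd[t] = prev
    nxt = 0.0
    for t in reversed(range(T)):          # backward layer: summarizes the future
        nxt = math.tanh(w_in_b * xs[t] + w_rec_b * nxt)
        h_bwd[t] = nxt
    # The shared output layer combines both hidden states at every time step.
    return [v_f * hf + v_b * hb for hf, hb in zip(h_fwd, h_bwd)]


a = brnn_outputs([0.1, 0.2, 0.3])
b = brnn_outputs([0.1, 0.2, -0.9])        # only the last input differs
print(a[0] != b[0])                       # True: output at t = 0 sees future context
```

Setting `v_b=0.0` switches off the backward layer, and the first outputs of the two sequences then coincide, as for a purely causal RNN.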

The stability of BAM neural networks with delays has been extensively studied; interested readers may refer to the works of Chen, Li, Liao and Shaohong (see [11, 22, 23] and [29]).

It is well known that time delays occur in the information processing of neurons for various reasons. In neural networks, time delays make the dynamic behavior more complex and may destabilize equilibria and give rise to periodic oscillations, bifurcation and chaos. Although delays arise frequently in practical applications, they are difficult to measure precisely.

Liao et al. [23] studied the dynamical characteristics of hybrid BAM neural networks with constant transmission delays. They employed a Lyapunov function and Halanay-type inequalities to derive delay-independent sufficient conditions, and they also investigated the exponential stability associated with temporally uniform external inputs. Li et al. [22] dealt with a class of memristor-based BAM neural networks with time-varying delays.

To the best of our knowledge, few authors have considered oscillatory solutions of BAM networks with both discrete and continuously distributed delays. Hence, the main purpose of this paper is to study the existence and uniqueness of the Weighted Pseudo-Almost Periodic (WPAP) solution of BAM networks with delays. Using the Banach fixed point theorem and analysis techniques, we obtain several novel sufficient conditions ensuring the existence and uniqueness of the WPAP solution.

Our results improve and extend works that investigate the periodic and almost periodic solutions of BAM networks (see [11, 25, 29] and [35]). In addition, the method used here differs from that of Arik [4], and the main results are new and complement previously known results.

The paper is organized into seven sections. In Sect. 2, we introduce some necessary definitions and basic properties of continuous functions and of weighted pseudo-almost periodicity. In Sect. 3, the new BAM model with time-varying weights and continuously distributed delays used in the rest of the paper is presented. The existence and uniqueness of the WPAP solution of Eq. (1) in a suitable convex subset of \(PAP(\mathbb {R},\mathbb {R}^{k},\varrho )\) are proved in Sect. 4. Numerical examples are given in Sect. 5 to illustrate the effectiveness of our results. In Sect. 6, a comparison with previous works is discussed. Finally, we draw conclusions in Sect. 7.

2 Preliminaries: The Functions Spaces

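For \(k\in \mathbb {N}\), let \(BC(\mathbb {R},\mathbb {R}^{k})\) denote the space of bounded continuous functions from \(\mathbb {R}\) to \(\mathbb {R}^{k}\), equipped with the supremum norm \(\left\| \cdot \right\| _{\infty }\).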

Definition 1

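Let \(k\in \mathbb {N}\). A continuous function \(f:\mathbb {R}\longrightarrow \mathbb {R}^{k}\) is called almost periodic if, for every \(\varepsilon >0\), there exists \(l_{\varepsilon }>0\) such that every interval of length \(l_{\varepsilon }\) contains a number \(\tau \) with

$$\sup _{t\in \mathbb {R}}\left\| f(t+\tau )-f(t)\right\| <\varepsilon .$$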

In this work, \(AP(\mathbb {R},\mathbb {R}^{k})\) denotes the set of all almost periodic (AP) \(\mathbb {R}^{k}\)-valued functions. It is well known that \(AP(\mathbb {R},\mathbb {R}^{k})\) is a Banach space with the supremum norm. We refer the reader to Refs. [12] and [15] for the basic theory of almost periodic functions and their applications.
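To make the notion of an \(\varepsilon \)-almost period concrete, the sketch below uses the function \(f(t)=\sin t+\sin (\sqrt{2}\,t)\), chosen only for illustration: it is almost periodic (every trigonometric polynomial is) but not periodic, because the frequencies \(1\) and \(\sqrt{2}\) are incommensurable. From \(\sin (a+h)-\sin a=2\cos (a+h/2)\sin (h/2)\) one gets \(\sup _{t}|f(t+\tau )-f(t)|\le 2|\sin (\tau /2)|+2|\sin (\sqrt{2}\tau /2)|\), so a shift \(\tau \) is an \(\varepsilon \)-almost period as soon as this bound is below \(\varepsilon \). The helper names are hypothetical, and the code only tests individual shifts; it does not verify that such shifts are relatively dense.

```python
import math

SQRT2 = math.sqrt(2.0)


def f(t):
    """Almost periodic but not periodic: frequencies 1 and sqrt(2)."""
    return math.sin(t) + math.sin(SQRT2 * t)


def shift_bound(tau):
    """Upper bound on sup_t |f(t + tau) - f(t)|, from the sine difference formula."""
    return 2.0 * abs(math.sin(tau / 2.0)) + 2.0 * abs(math.sin(SQRT2 * tau / 2.0))


def grid_sup(tau, t_max=200.0, step=0.01):
    """Numerical estimate of sup_t |f(t + tau) - f(t)| on a grid of [-t_max, t_max]."""
    n = int(round(2.0 * t_max / step))
    return max(abs(f(-t_max + k * step + tau) - f(-t_max + k * step)) for k in range(n + 1))


def is_eps_almost_period(tau, eps):
    """Sufficient test: the analytic bound is below eps."""
    return shift_bound(tau) < eps


# 70 * sqrt(2) = 98.9949..., close to the integer 99, so tau = 2*pi*70
# nearly matches a multiple of both periods 2*pi and 2*pi/sqrt(2).
tau = 2.0 * math.pi * 70
print(shift_bound(tau))                                                        # roughly 0.03
print(is_eps_almost_period(tau, 0.05), is_eps_almost_period(math.pi, 0.05))   # True False
```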

In order to construct new spaces and new generalizations of the concept of almost periodicity, the idea consists of enlarging the so-called ergodic component with the help of a weighted measure \(d\mu \left( x\right) =\varrho \left( x\right) dx\), where \(\varrho :\mathbb {R}\longrightarrow \left] 0,+\infty \right[ \) is a locally integrable function over \(\mathbb {R}\), commonly called a weight. Formally, let \(\mathbb {U}\) denote the collection of all functions (weights) \(\varrho :\mathbb {R}\longrightarrow \left] 0,+\infty \right[ \) which are locally integrable over \(\mathbb {R}\) and such that \(\varrho \left( x\right) >0\) for almost every \(x\in \mathbb {R}\). For \(\varrho \in \mathbb {U}\) and \(r>0\), we set

$$m(r,\varrho )=\int _{-r}^{r}\varrho (x)\,dx.$$

Notice that if \(\varrho \left( x\right) =1\), then \(m(r,\varrho )=2r\).

Throughout this paper, the sets of weights \(\mathbb {U}_{\infty }\) and \(\mathbb {U}_{B}\) stand respectively for

$$\mathbb {U}_{\infty }=\left\{ \varrho \in \mathbb {U}:\ \lim _{r\rightarrow +\infty }m(r,\varrho )=+\infty \right\} $$

and

$$\mathbb {U}_{B}=\left\{ \varrho \in \mathbb {U}_{\infty }:\ \varrho \text { is bounded and }\inf _{x\in \mathbb {R}}\varrho (x)>0\right\} .$$

Obviously, \(\mathbb {U}_{B}\subset \mathbb {U}_{\infty }\subset \mathbb {U}\), with strict inclusions. To introduce weighted pseudo-almost periodic functions, we need to define the "weighted ergodic" space \(PAP_{0}\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) \). WPAP functions will then appear as perturbations of almost periodic functions by elements of \(PAP_{0}\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) \). More precisely, for a fixed \(\varrho \) in \(\mathbb {U}_{\infty }\), we define

$$PAP_{0}\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) =\left\{ \phi \in BC\left( \mathbb {R},\mathbb {R}^{k}\right) :\ \lim _{r\rightarrow +\infty }\frac{1}{m(r,\varrho )}\int _{-r}^{r}\left\| \phi (t)\right\| \varrho (t)\,dt=0\right\} .$$

Notice that

So,

Clearly, we have the following hierarchy

Definition 2

Let \(\varrho \in \mathbb {U}_{\infty }.\) A function \(f\in BC \left( \mathbb {R},\mathbb {R}^{k}\right) \) is called WPAP if it can be expressed as \(f=g+\phi \), where \(g\in AP\left( \mathbb {R},\mathbb {R}^{k}\right) \) and \(\phi \in \) \(PAP_{0}\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) \). The collection of such functions will be denoted by \(PAP\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) .\) The functions g and \(\phi \) appearing above are respectively called the almost periodic and the weighted ergodic perturbation components of f.
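As an illustration of Definition 2 (the functions are our own choice, not taken from the paper), take \(f=g+\phi \) with the almost periodic component \(g(t)=\sin t+\sin (\sqrt{2}\,t)\) and \(\phi (t)=e^{-|t|}\). Membership of \(\phi \) in the weighted ergodic space amounts to the weighted mean \(\frac{1}{m(r,\varrho )}\int _{-r}^{r}|\phi (t)|\varrho (t)\,dt\), with \(m(r,\varrho )=\int _{-r}^{r}\varrho (x)\,dx\), tending to \(0\) as \(r\rightarrow +\infty \). The sketch evaluates this mean by the trapezoidal rule for \(\varrho =1\) and for \(\varrho (t)=e^{t}\) (the weight of Example 1). By direct integration, the exact values are \((1-e^{-r})/r\) and \(\left( r+\tfrac{1}{2}(1-e^{-2r})\right) /(2\sinh r)\), both of which tend to \(0\); finitely many numerical values suggest, but of course do not prove, the limit.

```python
import math


def trapezoid(func, a, b, n=100_000):
    """Composite trapezoidal rule for the integral of func over [a, b]."""
    h = (b - a) / n
    total = 0.5 * (func(a) + func(b))
    for k in range(1, n):
        total += func(a + k * h)
    return total * h


def weighted_mean(phi, rho, r):
    """(1 / m(r, rho)) * integral_{-r}^{r} |phi(t)| rho(t) dt, m(r, rho) = integral of rho."""
    m_r = trapezoid(rho, -r, r)
    return trapezoid(lambda t: abs(phi(t)) * rho(t), -r, r) / m_r


def phi(t):
    """Candidate weighted ergodic component of f = g + phi."""
    return math.exp(-abs(t))


weights = {"rho(t) = 1": lambda t: 1.0, "rho(t) = e^t": math.exp}
for name, rho in weights.items():
    means = [weighted_mean(phi, rho, r) for r in (10.0, 50.0, 100.0)]
    print(name, ["%.3e" % m for m in means])   # the means shrink toward 0 as r grows
```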

Notice that the space \(PAP\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) \) is a closed subspace of \(\left( BC\left( \mathbb {R},\mathbb {R}^{k}\right) ,\left\| \cdot \right\| _{\infty }\right) \) and consequently \(PAP\left( \mathbb {R},\mathbb {R}^{k},\varrho \right) \) is a Banach space (For more details see [12] and [18]).

3 The Model

Let us consider the following new model for (BAM) neural networks

where \(i=1,\ldots ,n\) and \(j=1,\ldots ,m\), with \(n\) and \(m\) the numbers of neurons in the X-layer and the Y-layer, respectively; \(x_{i}\left( \cdot \right) \) and \(y_{j}\left( \cdot \right) \) are the activations of the i-th and j-th neurons, respectively; \(c_{ij}\left( \cdot \right) ,d_{ij}\left( \cdot \right) \) and \(\alpha _{ji}\left( \cdot \right) \) are time-varying connection weights. \(f_{j}(y_{j}\left( t-\tau _{ji}\right) )\) and \(f_{j}(y_{j}(s))\) are the outputs of the activation function of the j-th neuron with the discrete delay \(\tau _{ji}\) and without delay, respectively.

\(g_{i}(x_{i}\left( t-\sigma _{ij}\right) )\) and \(g_{i}(x_{i}(s))\) are the outputs of the activation function of the i-th neuron with the discrete delay \(\sigma _{ij}\) and without delay, respectively.

\(w_{ji}\left( t\right) \) are connection weights at time t, and \(I_{i}\left( t\right) \) and \(J_{j}\left( t\right) \) denote the external inputs at time t. \(a_{i}\left( t\right) \) \(\left( i\in \left\{ 1,\cdots ,n\right\} \right) \) and \(b_{j}\left( t\right) \) \(\left( j\in \left\{ 1,\cdots ,m\right\} \right) \) represent the rates at which the i-th and j-th neurons, respectively, reset their potentials to the resting state in isolation, when disconnected from the network and the external inputs at time t.

\(K_{ij}\left( \cdot \right) \) and \(N_{ji}\left( \cdot \right) \) denote the delay kernels, which describe the refractoriness of the i-th and j-th neurons after they have fired or responded.

Let \(\tau =\max _{1\le i\le n,\,1\le j\le m}\left\{ \tau _{ji},\sigma _{ij}\right\} \), and let the initial conditions associated with Eq. (1) be of the form

where \(\varphi _{i}\left( \cdot \right) \) and \(\psi _{j}\left( \cdot \right) \) are assumed to be weighted pseudo-almost periodic functions on \(\mathbb {R}\). For an arbitrary vector

define the norm \(\left\| (x,y)\right\| =\left\| x\right\| +\left\| y\right\| \), where

and

Let us consider the following assumptions:

- \((\mathbf {H}_{1})\) For all i, j: \(b_{j}>0\).

- \((\mathbf {H}_{2})\) For all i, j, the functions \(I_{i}\left( \cdot \right) ,J_{j}\left( \cdot \right) ,c_{ij}\left( \cdot \right) ,d_{ij}\left( \cdot \right) ,w_{ji}\left( \cdot \right) ,\alpha _{ji}\left( \cdot \right) \) are weighted pseudo-almost periodic.

- \((\mathbf {H}_{3})\) For all i, j, there exist constants \(L_{j}^{f}>0\) and \(L_{i}^{g}>0\) such that, for all \(x,y\in \mathbb {R}\),
  $$\left| f_{j}(x)-f_{j}(y)\right| \le L_{j}^{f}\left| x-y\right| ,\qquad \left| g_{i}(x)-g_{i}(y)\right| \le L_{i}^{g}\left| x-y\right| .$$
  Furthermore, we suppose that \(f_{j}(0)=g_{i}\left( 0\right) =0\) for all i, j.

- \((\mathbf {H}_{4})\) For all i, j, the delay kernels \(K_{ij}:[0,+\infty [\longrightarrow [0,+\infty [\) and \(N_{ji}:[0,+\infty [\longrightarrow [0,+\infty [\) are continuous and integrable, and there exist nonnegative constants \(k_{ij},n_{ji}\) such that
  $$\int _{0}^{+\infty }K_{ij}\left( s\right) ds\le k_{ij}\quad \text {and}\quad \int _{0}^{+\infty }N_{ji}\left( s\right) ds\le n_{ji}.$$

- \((\mathbf {H}_{5})\) Let \(\varrho \in \mathbb {U}_{\infty }\) and assume that, for each \(\tau \in \mathbb {R}\), the functions
  $$s\longmapsto \frac{\varrho \left( s+\tau \right) }{\varrho \left( s\right) }\quad \text {and}\quad s\longmapsto \frac{m\left( s+\tau ,\varrho \right) }{m(s,\varrho )}$$
  are bounded.
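As a quick sanity check of \((H_{3})\) and \((H_{4})\) for common choices, the sketch below estimates Lipschitz constants of \(\tanh \) and of the piecewise-linear activation \((|x+1|-|x-1|)/2\) from difference quotients, checks that both vanish at \(0\), and integrates the exponential kernel \(e^{-s}\). These activations and this kernel are assumptions made for illustration; they are not claimed to be those of the examples in Sect. 5. Sampled difference quotients only give a lower estimate of the Lipschitz constant; for \(\tanh \) the exact global constant is \(1\), since \(0<\tanh '\le 1\).

```python
import math


def pwl(x):
    """Piecewise-linear activation (|x + 1| - |x - 1|) / 2; pwl(0) = 0."""
    return (abs(x + 1.0) - abs(x - 1.0)) / 2.0


def lipschitz_estimate(func, lo=-5.0, hi=5.0, n=2001):
    """Largest difference quotient between consecutive grid points (a lower bound on L)."""
    xs = [lo + k * (hi - lo) / (n - 1) for k in range(n)]
    return max(abs(func(b) - func(a)) / (b - a) for a, b in zip(xs, xs[1:]))


def kernel_integral(kernel, upper=50.0, n=100_000):
    """Trapezoidal approximation of integral_0^upper kernel(s) ds (tail beyond upper neglected)."""
    h = upper / n
    return h * (0.5 * kernel(0.0) + sum(kernel(k * h) for k in range(1, n)) + 0.5 * kernel(upper))


for name, act in (("tanh", math.tanh), ("pwl", pwl)):
    print(name, act(0.0), round(lipschitz_estimate(act), 4))   # value at 0 and estimated L
print(round(kernel_integral(lambda s: math.exp(-s)), 4))        # close to 1, so k_ij = 1 works
```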

4 Existence and Uniqueness of the WPAP Solution

Here, we study the existence and uniqueness of the weighted pseudo-almost periodic solution of Eq. (1). Following the same lines as the proofs in [1] and [13], one obtains the following results.

Lemma 1

Under \(\left( H_{5}\right) \) the space \(PAP\left( \mathbb {R},\mathbb {R} ^{k},\varrho \right) \) is translation invariant.

Lemma 2

If \(\varphi ,\psi \) \(\in \) \(PAP(\mathbb {R},\mathbb {R},\varrho )\), then \( \varphi \times \psi \in PAP(\mathbb {R},\mathbb {R},\varrho )\).

Theorem 1

Suppose that assumptions \((H_{3})\)–\((H_{5})\) hold and

\(\forall \) i, j; \(x_{i}(\cdot ), y_{j}(\cdot )\in \) \(PAP(\mathbb {R},\mathbb {R},\varrho )\)

and

Theorem 2

Suppose that assumptions \((H_{1})\)–\((H_{5})\) hold and

Then, the BAM neural network of Eq. \(\left( 1\right) \) has a unique weighted pseudo-almost periodic solution in the convex set.

where

and

Proof

It is clear that,

and

Therefore,

and

Now, let us consider the operator \(\varGamma :\mathbb {R}^{n+m}\longrightarrow \mathbb {R}^{n+m}\) defined by

where

and

First, we shall prove that the operator \(\varGamma \) is a self-mapping from \( \mathcal {B}\) to \(\mathcal {B}\). In fact, for any \((x,y)\in \mathcal {B}\), we have

In view of \((H_{3})\), for any \(X=(x,y),U=(u,v) \in \mathbb {R}^{n+m}\), we have

which proves that \(\varGamma \) is a contraction mapping. Then, by virtue of the Banach fixed point theorem, \(\varGamma \) has a unique fixed point, which corresponds to the solution of Eq. (1) in \(\mathcal {B}\subset PAP(\mathbb {R},\mathbb {R}^{n+m},\varrho )\).
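The role of the Banach fixed point theorem can be illustrated in a finite-dimensional setting. The sketch below is not the operator \(\varGamma \) of the proof, which acts on functions: it iterates a hypothetical affine map \(X\mapsto AX+b\) on \(\mathbb {R}^{2}\) whose matrix has \(\infty \)-norm \(r=0.8<1\), so the map is a contraction. The successive approximations \(X_{k+1}=\varGamma (X_{k})\) converge to the unique fixed point (here \(X^{*}=(1.25,-1.25)\), obtained by solving \((I-A)X=b\)) from any starting point, and the a priori estimate \(\Vert X_{k}-X^{*}\Vert \le \frac{r^{k}}{1-r}\Vert X_{1}-X_{0}\Vert \) holds.

```python
def gamma(X, A, b):
    """Hypothetical affine map X -> A X + b on R^2 (illustration only)."""
    return [A[0][0] * X[0] + A[0][1] * X[1] + b[0],
            A[1][0] * X[0] + A[1][1] * X[1] + b[1]]


def inf_norm(v):
    """Maximum norm on R^2."""
    return max(abs(c) for c in v)


def picard(A, b, X0, steps):
    """Successive approximations X_{k+1} = gamma(X_k), as in Banach's theorem."""
    iterates = [list(X0)]
    for _ in range(steps):
        iterates.append(gamma(iterates[-1], A, b))
    return iterates


A = [[0.5, 0.3], [0.2, 0.4]]                      # row sums 0.8 and 0.6
b = [1.0, -1.0]
r = max(sum(abs(a) for a in row) for row in A)    # ||A||_inf = 0.8 < 1: contraction
its = picard(A, b, [0.0, 0.0], 60)
X_star = its[-1]
print(X_star)                                     # approximately [1.25, -1.25]
```

Starting the iteration from any other point, e.g. `[10.0, -7.0]`, leads to the same limit, which is the uniqueness part of the theorem.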

5 Numerical Examples

Example 1

Let \(\varrho \left( t\right) =e^{t}.\) Consider the model of Eq. (1) with n = m = 2 where

and \(\forall \) \(x\in \mathbb {R},\)

Then, \(L_{j}^{f}=L_{i}^{g}=1\) and

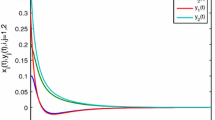

Therefore, all conditions of Theorem 2 are satisfied, and the model of Example 1 has a unique WPAP solution (see Fig. 2).

Example 2

Let us consider the model of Eq. (1) with n = m = 3 where \(\left( a_{1},a_{2},a_{3}\right) ^{T}=\left( 3,5,4\right) ,\ \ \left( b_{1},b_{2},b_{3}\right) ^{T}=\left( 7,3,5\right) .\)

and \(\forall \) \(x\in \mathbb {R},\)

Clearly, \(L_{j}^{f}=L_{i}^{g}=1\) and

$$r=\max \left( \max \left( \frac{1.2+1.2}{3},\frac{0.5+0.7}{5},\frac{0.6+0.6}{4}\right) ,\max \left( \frac{0.6+0.9}{7},\frac{0.7+0.8}{3},\frac{0.9+0.9}{5}\right) \right) =\max (0.8,0.5)=0.8<1.$$

Thus, it follows from Theorem 2 that the model of Example 2 has a unique WPAP solution (see Fig. 3).
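The arithmetic behind \(r\) in Example 2 can be reproduced exactly with rational numbers, which avoids floating-point rounding (for instance, \(2.4/3\) evaluates to \(0.7999999999999999\) in binary floating point). The numerators below are copied verbatim from the expression for \(r\); the denominators are \(a_{1},a_{2},a_{3}=3,5,4\) and \(b_{1},b_{2},b_{3}=7,3,5\) from Example 2.

```python
from fractions import Fraction as F

# X-layer terms (denominators a_i) and Y-layer terms (denominators b_j),
# numerators copied from the computation of r in Example 2.
x_layer = [(F("1.2") + F("1.2")) / 3, (F("0.5") + F("0.7")) / 5, (F("0.6") + F("0.6")) / 4]
y_layer = [(F("0.6") + F("0.9")) / 7, (F("0.7") + F("0.8")) / 3, (F("0.9") + F("0.9")) / 5]

r = max(max(x_layer), max(y_layer))
print(max(x_layer), max(y_layer), r, r < 1)   # 4/5 1/2 4/5 True
```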

6 Comparison and Discussion

In previous papers ([1] and [8]), the authors studied single-layer recurrent neural networks with delays. In particular, the attractivity and exponential stability of the PAP solution of such RNNs were established and discussed. However, from the viewpoint of applications, the study of the dynamics of BAM neural networks, such as stability and almost periodicity, cannot be replaced by the study of the dynamics of single-layer recurrent neural networks.

First, it should be mentioned that the method used in the proofs of this paper is original, since this class of functions (weighted pseudo-almost periodic functions) is seldom considered for neural networks.

One can remark that our results only require the activation functions \(f_{j}\) and \(g_{i}\) to satisfy the Lipschitz condition \((H_{3})\); the functions \(f_{j}\) and \(g_{i}\) need not be bounded or monotone nondecreasing. Therefore, our results are novel and are of some significance both in theory and in applications of almost-periodic oscillatory neural networks.

When \(c_{ij}=w_{ji}=0\) for all i, j, system (1) reduces to the model of Ref. [24], and when \(d_{ij}=\alpha _{ji}=0\) for all i, j, system (1) reduces to the model investigated by Chen et al. (see Refs. [10] and [11]).

On the other hand, if \(\tau _{ji}=\sigma _{ij}=0\) for all i, j, system (1) reduces to the model studied by Song and Cao [31]. Besides, our condition \(\left( H_{4}\right) \) on the kernels is weaker than condition \(\left( H_{2}\right) \) of Ref. [31]. Further, our results can be seen as a generalization and improvement of [24], since the model there assumes \(d_{ij}\left( \cdot \right) =\alpha _{ji}\left( \cdot \right) =0\), and the previous papers [24] and [25] considered the periodic and almost periodic cases in a periodic environment. In [10], the system labeled (1.1) in that paper is considered, and two sufficient conditions guaranteeing the existence and the attractivity of the solution are derived. Compared with the results of Ref. [10], our conditions in Theorem 1 are less restrictive; in fact, Theorem 2 does not require the condition imposed in Ref. [10]. In addition, the method used here is different from that in [10]. Finally, it would be interesting to adapt the techniques of this paper to the BAM neural network models of Xu et al. [32] and [33].

7 Conclusion

In this paper, a generalized model of BAM neural networks with mixed delays has been studied. Some new sufficient conditions for the existence and uniqueness of the weighted pseudo-almost periodic solution of a class of two-layer BAM neural networks are given. Note that we only require the activation functions to be globally Lipschitz continuous, which is less conservative and less restrictive than the monotonicity assumption in previous results. The method is concise, and examples are given to illustrate our theory. The obtained results are new and complement the previously known works ([1, 3] and [7]). Finally, the study of the stability (asymptotic, exponential, ...) of the solution given by Theorem 2 would be an interesting subject, and we are starting to look closely at this issue via new methods.

References

Ammar B, Chérif F, Alimi AM (2012) Existence and uniqueness of pseudo-almost periodic solutions of recurrent neural networks with time-varying coefficients and mixed delays. IEEE Trans Neural Netw 23(1):109–118

Ammar B, Chouikhi N, Alimi AM, Chérif F, Rezzoug N, Gorce P (2013) Learning to walk using a recurrent neural network with time delay. Artificial neural networks and machine learning (ICANN), pp. 511–518

Arbi A, Aouiti C, Chérif F, Touati A, Alimi AM (2015) Stability analysis of delayed Hopfield neural networks with impulses via inequality techniques. Neurocomputing 158:281–294

Arik S (2005) Global asymptotic stability analysis of bidirectional associative memory neural networks with time delays. IEEE Trans Neural Netw 16(3):580–586

Kosko B (1992) Neural networks and fuzzy systems: a dynamical systems approach to machine intelligence/book and disk, vol 1. Prentice Hall, Upper Saddle River

Brahmi H, Ammar B, Cherif F, Alimi AM (2014) On the dynamics of the high-order type of neural networks with time varying coefficients and mixed delay. In: IEEE international joint conference on neural networks, pp. 2063–2070

Brahmi H, Ammar B, Chérif F, Alimi AM (2016) Stability and exponential synchronization of high-order hopfield neural networks with mixed delays. Cybern Syst 1–21

Brahmi H, Ammar B, Cherif F, Alimi AM (2016) Pseudo almost periodic solutions of impulsive recurrent neural networks with mixed delays. In: International joint conference on neural networks (IJCNN), Vancouver, BC, pp. 464–470

Brahmi H, Ammar B, Alimi AM (2013) Intelligent path planning algorithm for autonomous robot based on recurrent neural networks. In: International conference on advanced logistics and transport (ICALT), pp. 199–204

Chen A, Du D (2008) Global exponential stability of delayed BAM network on time scale. Neurocomputing 71(16–18):3582–3588

Chen A, Chen F (2009) Periodic solution to BAM neural network with delays on time scales. Neurocomputing 73(1–3):274–282

Chérif F (2011) A various types of almost periodic functions on banach spaces: part 1. Int Math Forum 6(19):921–952

Chérif F (2012) Existence and global exponential stability of pseudo almost periodic solution for SICNNs with mixed delays. J Appl Math Comput Numbers 39(1–2):235–251

Chouikhi N, Ammar B, Rokbani N, Alimi AM (2017) PSO-based analysis of Echo State Network parameters for time series forecasting. Appl Soft Comput 55:211–225

Corduneanu C (1968) Almost periodic functions. Wiley, New York

Desheng J, Zhang C (2012) Translation invariance of weighted pseudo almost periodic functions and related problems. J Math Anal Appl 391(2):350–362

Fukada T, Schuster M, Sagisaka Y (1999) Phoneme boundary estimation using bidirectional recurrent neural networks and its applications. Syst Comput Japan 30(4):20–30

Blot J, Cieutat P, Ezzinbi K (2013) New approach for weighted pseudo-almost periodic functions under the light of measure theory, basic results and applications. Appl Anal 92(3):493–526

Hamid AJ, Brahim RW (2009) Almost-periodic solution for BAM neural network. Surv Math Appl 4:53–63

Kosko B (1987) Adaptive bidirectional associative memories. Appl Opt 26(23):4947–4960

Li G, Li B, Yu X, Cheng C (2015) Echo state Network with Bayesian regularization for forecasting short-term power production of small hydropower plants. J Energies 8(10):12228–12241

Li H, Jiang H, Hu C (2016) Existence and global exponential stability of periodic solution of memristor-based BAM neural networks with time-varying delays. Neural Netw 75:97–109

Liao X, Yang S, Wong K (2003) Convergence analysis of hybrid bi-directional associative memory neural networks with discrete delays. Int J Dyn Syst 18(3):231–243

Liu Y, Tang W (2006) Existence and global exponential stability of periodic solutions of BAM neural networks with periodic coefficients and delays. Neurocomputing 69(16–18):2152–2160

Liu B (2007) Existence and exponential stability of almost periodic solutions for cellular neural networks without global Lipschitz conditions. J Korean Math Soc 44(4):873–887

Quanxin Z, Rakkiyappan R, Chandrasekar A (2014) Stochastic stability of Markovian jump BAM neural networks with leakage delays and impulse control. Neurocomputing 136:136–151

Saad E, Prokhorov D, Wunsch D (2002) Comparative study of stock trend prediction using time delay, recurrent and probabilistic neural networks. In: IEEE Transactions on neural networks, p 1456

Schuster M, Paliwal KK (1997) Bidirectional recurrent neural networks. IEEE Trans Signal Process 45:2673

Shaohong C, Zhang Q (2016) Existence and stability of periodic solutions for impulsive fuzzy BAM Cohen–Grossberg neural networks on time scales. Adv Differ Equ 1–21

Singh M, Kumar S (2013) Using multi-layered feed-forward neural network (MLFNN) architecture as bidirectional associative memory (BAM) for function approximation. IOSR J Comput Eng 13(4):34–38

Song Q, Cao J (2007) Global exponential stability of bidirectional associative memory neural networks with distributed delays. J Comput Appl Math 202:266–279

Xu Y (2017) Exponential stability of pseudo almost periodic solutions for neutral type cellular neural networks with D operator. Neural Process Lett 46(1):329

Xu C, Li P (2016) Exponential stability for fuzzy BAM cellular neural networks with distributed leakage delays and impulses. Adv Differ Equ 276

Xu CJ, Li PL (2016) Existence and exponentially stability of anti-periodic solutions for neutral BAM neural networks with time-varying delays in the leakage terms. J Nonlinear Sci Appl 9(3):1285–1305

Zhang QH, Yang LH (2012) Dynamical analysis of fuzzy BAM neural networks with variable delays. Fuzzy Inf Eng 4(1):93–104

Acknowledgements

The authors would like to acknowledge the financial support of this work by grants from General Direction of Scientific Research (DGRST), Tunisia, under the ARUB program.


Cite this article

Ammar, B., Brahmi, H. & Chérif, F. On the Weighted Pseudo-Almost Periodic Solution for BAM Networks with Delays. Neural Process Lett 48, 849–862 (2018). https://doi.org/10.1007/s11063-017-9725-0
