Abstract

In this paper, we consider a two-dimensional random amplitude chirp signal model in which the additive error is independent and identically distributed. The model extends the one-dimensional random amplitude chirp model proposed by Besson et al. (IEEE Trans Signal Process 47(12):3208–3219, 1999) to two dimensions. The random amplitudes can be thought of as a multiplicative error and are assumed to be independent and identically distributed with non-zero mean and finite fourth moment. We estimate the parameters by maximizing a two-dimensional chirp periodogram-like function and discuss the theoretical properties of the resulting estimators. The proposed estimators are consistent and asymptotically multivariate normal. Under normality of the additive error, the proposed estimator attains the Cramer–Rao lower bound. We also propose a general multicomponent two-dimensional model of similar form. The finite-sample performance of the proposed estimators is evaluated through numerical experiments and reported graphically.

1 Introduction

A two-dimensional (2-D) complex-valued chirp signal model with random amplitude is expressed as

$$\begin{aligned} y(m,n) = \psi (m,n) e^{i(\alpha _1^0 m + \alpha _2^0 m^2 + \beta _1^0 n + \beta _2^0 n^2)} + X(m,n). \qquad (1) \end{aligned}$$

Here \(i = \sqrt{-1}\); \(y(m,n)=y_R(m,n) + i y_I(m,n)\) is the complex-valued signal; \(\alpha _1^0\) and \(\alpha _2^0\) are the frequency and the chirp rate, respectively, in the first dimension, and \(\beta _1^0\) and \(\beta _2^0\) are the corresponding frequency and chirp rate in the second dimension. Further, \(0< \alpha _1^0, \alpha _2^0, \beta _1^0, \beta _2^0 < \pi \), and the amplitude \(\{\psi (m,n)\}\) is a 2-D sequence of real-valued random variables, which can be viewed as a multiplicative error because \(\psi (m,n)\) is random and enters the model as a multiplier of the non-random signal component. The additive error \(\{X(m,n)\}\) is a 2-D sequence of complex-valued random variables with zero mean and finite fourth moment. The problem is to estimate the unknown parameters \(\alpha _1^0\), \(\alpha _2^0\), \(\beta _1^0\) and \(\beta _2^0\) based on \(MN\) observations \(\{y(m,n); m=1, \ldots , M; n=1,\ldots , N\}\) under certain assumptions on the sequence of random amplitudes \(\{\psi (m,n)\}\) and the sequence of additive errors \(\{X(m,n)\}\). The specific assumptions on \(\{\psi (m,n)\}\) and \(\{X(m,n)\}\) are given in Appendix A.
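
To make the data-generating mechanism concrete, the following minimal sketch draws one \(M \times N\) sample from the single-component model described above. The function name `simulate_chirp`, the Gaussian choice for both the amplitudes and the additive errors, and the default values are illustrative assumptions; the model itself only requires the moment conditions of Appendix A.

```python
import cmath
import math
import random


def simulate_chirp(M, N, xi0, mu_psi=5.0, var_psi=0.5, sigma2=0.1, seed=0):
    """Draw an M x N sample y[m-1][n-1] from the single-component model.

    xi0 = (alpha1, alpha2, beta1, beta2): frequency and chirp rate in the
    first dimension, then frequency and chirp rate in the second dimension.
    Gaussian amplitudes and Gaussian noise are assumptions of this sketch.
    """
    a1, a2, b1, b2 = xi0
    rng = random.Random(seed)
    sd_psi = math.sqrt(var_psi)
    # Real and imaginary parts of X(m, n) each have variance sigma^2 / 2.
    sd_x = math.sqrt(sigma2 / 2.0)
    y = []
    for m in range(1, M + 1):
        row = []
        for n in range(1, N + 1):
            phase = a1 * m + a2 * m * m + b1 * n + b2 * n * n
            psi = rng.gauss(mu_psi, sd_psi)  # real-valued random amplitude
            noise = complex(rng.gauss(0.0, sd_x), rng.gauss(0.0, sd_x))
            row.append(psi * cmath.exp(1j * phase) + noise)
        y.append(row)
    return y
```

Setting `var_psi=0` and `sigma2=0` recovers a noiseless constant-amplitude 2-D chirp, which is convenient for checking an implementation.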

The 2-D chirp model as well as the 2-D sinusoidal model have many applications in image analysis and telecommunications. The latter is one of the basic models in the statistical signal processing literature. Other applications are found in biomedical spectral analysis and texture analysis; see Kliger and Francos (2008), Kliger and Francos (2013), Cao et al. (2006) etc. Similar models have been used in the analysis of synthetic aperture radar data by Djurović et al. (2010). Grover et al. (2020) illustrated that the 2-D chirp model can be effectively used in black and white texture analysis. Apart from these applications, such signals are commonly observed in surveillance systems, in sonar and radar, mobile telecommunications, fingerprint images etc.; see for example Zhang et al. (2008), Simeunović et al. (2019), Djurović and Simeunović (2018), Porwal et al. (2019) and the references cited therein.

Model (1) is a natural extension of the one-dimensional (1-D) random amplitude chirp model to two dimensions. Nandi and Kundu (2020b) considered the 1-D model, proved the consistency of the estimators proposed by Besson et al. (1999) and obtained their asymptotic distribution. With a constant amplitude instead of a random amplitude, the 1-D model is the usual 1-D chirp model, which in turn generalizes the complex-valued exponential or sinusoidal model. The complex-valued exponential model is the most basic model in this class. The 2-D random amplitude chirp model generalizes all of these models.

The 2-D random amplitude chirp model reduces to the 2-D chirp model when \(\psi (m,n)\) is identically equal to a non-zero constant, and can therefore be viewed as a generalization of it. Several authors have studied the 2-D chirp model; see, for example, Zhang et al. (2008), Cao et al. (2006), Lahiri et al. (2013), Lahiri and Kundu (2017) and Grover et al. (2018a, 2020). Model (1) can also be seen as a generalization of the 2-D random amplitude sinusoidal model, which is obtained when \(\alpha _2^0 = \beta _2^0=0\). The 2-D sinusoidal model has many applications in grey symmetric textures. When \(\psi (m,n)\) is constant and the frequency rates \(\alpha _2^0\) and \(\beta _2^0\) are simultaneously equal to zero, model (1) is nothing but the 2-D sinusoidal model. Therefore, model (1) is quite a general model.

The aim of this paper is to estimate the unknown parameters, namely the frequencies \(\alpha _1^0\) and \(\beta _1^0\) and the frequency rates \(\alpha _2^0\) and \(\beta _2^0\), and to study the theoretical properties of the estimators. The estimation method considered in this paper is based on the maximization of the 2-D periodogram function of \(y^2(m,n)\), interlaced with zeros. We show that the proposed method is equivalent to the nonlinear least squares estimation method. It extends the method proposed by Besson et al. (1999) for the 1-D random amplitude chirp model to two dimensions. However, this 2-D estimator has not been studied before, and its properties have not been explored. We show that the proposed estimators of the unknown parameters are consistent and asymptotically normally distributed. Consistency means that, as the number of observations increases, the resulting sequence of estimators converges to the true value of the parameter. Asymptotic normality means that the suitably normalized estimator has a Gaussian limiting distribution as the sample size grows. This asymptotic distribution also provides the different rates of convergence of the estimators of the frequency and the chirp rate parameters. Based on the asymptotic distribution, the approximate variance of the estimator can be obtained, at least for large sample sizes. It is also observed that the proposed estimator attains the Cramer–Rao lower bound when the additive error is normally distributed. We perform extensive numerical experiments to examine the behavior of the estimates for different sample sizes and different variances of the additive as well as the multiplicative error. The performances are quite satisfactory and give an idea of how useful the large sample results are in practice for finite samples.

The rest of the paper is organized as follows. In Sect. 2, we discuss the method of estimation of the unknown parameters of model (1) and its equivalence to the nonlinear least squares method. In Sect. 3, we consider the multicomponent model and discuss how the estimation method for the one component model can be applied to this case. In Sect. 4, numerical experiments are presented, and we conclude the paper in Sect. 5. All the required assumptions, theoretical results and proofs are provided in the Appendices. In Appendix A, the theoretical results for the proposed estimator in the one component model are presented, including the assumptions. Similarly, for the multicomponent model, the results are stated in Appendix B. The consistency of the proposed estimator for the one component model is proved in Appendix C, and its asymptotic distribution is derived in Appendix D. The outline of the proof of the consistency of the estimator for the multicomponent model is presented in Appendix E.

2 Estimation of parameters of the 2-D random amplitude chirp signal

In this section, we first discuss the problem of estimation of unknown parameters, namely, the frequency and the chirp rate parameters present in model (1). We consider a method of estimation which is equivalent to the nonlinear least squares estimation method.

In order to describe the estimation method, write \({\varvec{\alpha }} = (\alpha _1, \alpha _2)^\top \), \({\varvec{\beta }} = (\beta _1, \beta _2)^\top \); \({\varvec{\alpha }}^0\) and \({\varvec{\beta }}^0\) are the true values of \({\varvec{\alpha }}\) and \({\varvec{\beta }}\), respectively. Also denote \({\varvec{\xi }} = (\alpha _1, \alpha _2, \beta _1, \beta _2)^\top \) as the unknown parameter vector and \({\varvec{\xi }}^0\) be the true value of \({\varvec{\xi }}\). Then consider the estimator \(\widehat{\varvec{\xi }}\) of \({\varvec{\xi }}^0\) which maximizes the following four dimensional function.

Observe that \(Q({\varvec{\xi }})\) is the 2-D chirp periodogram function of \(y^2(m,n)\) with exponent replaced by twice the usual 2-D chirp periodogram exponent. The usual nonlinear least squares method is behind the motivation of taking this particular form of the criterion function of our proposed estimation method. In the following, we start with the form of the usual residual sum of squares corresponding to the additive error and derive the equivalence of the nonlinear least squares and the estimation method addressed in this paper.
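
As a minimal sketch of how such a criterion can be evaluated, the function below computes the squared modulus of the averaged sequence \(y^2(m,n) e^{-2i(\alpha _1 m + \alpha _2 m^2 + \beta _1 n + \beta _2 n^2)}\). The name `chirp_periodogram` and the \(1/(MN)\) normalization are assumptions of this sketch; a different positive normalizing constant would not change the maximizer.

```python
import cmath


def chirp_periodogram(y, xi):
    """Evaluate a periodogram-like criterion of the squared data at xi.

    y is an M x N nested list with y[m-1][n-1] = y(m, n);
    xi = (alpha1, alpha2, beta1, beta2).
    The 1/(M*N) scaling is an assumption of this sketch.
    """
    a1, a2, b1, b2 = xi
    M, N = len(y), len(y[0])
    total = 0j
    for m in range(1, M + 1):
        for n in range(1, N + 1):
            phase = a1 * m + a2 * m * m + b1 * n + b2 * n * n
            # The exponent is twice the usual chirp periodogram exponent
            # because the data enter through their squares.
            total += y[m - 1][n - 1] ** 2 * cmath.exp(-2j * phase)
    return abs(total / (M * N)) ** 2
```

For a noiseless chirp with unit amplitude, every term of the sum equals one at the true parameter value, so the criterion equals one there and is smaller wherever the phases fail to align.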

The following derivation motivates us to maximize \(Q({\varvec{\xi }})\) to obtain the nonlinear least squares estimators of the unknown parameters present in model (1). Write model (1) as

where \(\phi ^0(m,n) = (\alpha _1^0 m + \alpha _2^0 m^2 + \beta _1^0 n + \beta _2^0 n^2)\). Considering \(\psi (m,n)\) as unknown parameters for \(m=1,\ldots ,M\) and \(n=1,\ldots ,N\), the usual nonlinear least squares estimators of \(\psi (m,n)\) and \(\alpha _1^0\), \(\alpha _2^0\), \(\beta _1^0\) and \(\beta _2^0\) are obtained by minimizing

with respect to \(\psi (1,1), \ldots , \psi (M,N)\) and \({\varvec{\xi }}=(\alpha _1, \alpha _2, \beta _1, \beta _2)^\top \). Denote

for \(m=1,\ldots ,M\) and \(n=1,\ldots ,N\). Then, the criterion function \(R({\varvec{\psi }},{\varvec{\xi }}) \) can be written as

In order to minimize \(R({\varvec{\psi }},{\varvec{\xi }})\), differentiating it with respect to \({\varvec{\psi }}_m\) for fixed \({\varvec{\xi }}\), we have

where \(\mathbf{y}_m^*\) and \(\mathbf{A}_m^*\) are the complex conjugates of \(\mathbf{y}_m\) and \(\mathbf{A}_m\), respectively. Therefore, for a given \({\varvec{\xi }}\), the vectors \({\varvec{\psi }}_1, \ldots , {\varvec{\psi }}_M\) which minimize \(R({\varvec{\psi }},{\varvec{\xi }})\) are given by

Replacing \({\varvec{\psi }}_m\) by \(\widehat{\varvec{\psi }}_m({\varvec{\xi }})\) in \(R({\varvec{\psi }},{\varvec{\xi }})\)

where \(\mathbf{y}_m^H\) is the conjugate transpose of \(\mathbf{y}_m\). Now minimizing \(R(\widehat{\varvec{\psi }}({\varvec{\xi }}),{\varvec{\xi }})\) with respect to \({\varvec{\xi }}\) is equivalent to maximizing

Therefore, taking the corresponding imaginary part into consideration as well, we base our estimation method on maximization of \(Q({\varvec{\xi }})\) with respect to \({\varvec{\xi }}\).
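
The argument can be sketched in scalar form, treating each \(\psi (m,n)\) as a real-valued unknown and writing \(\phi (m,n) = \alpha _1 m + \alpha _2 m^2 + \beta _1 n + \beta _2 n^2\). This is a reconstruction from the definitions given in this section and is not necessarily identical to the matrix-form derivation.

$$\begin{aligned} R({\varvec{\psi }},{\varvec{\xi }})&= \sum _{m=1}^{M}\sum _{n=1}^{N} \left| y(m,n) - \psi (m,n) e^{i\phi (m,n)} \right| ^2, \\ \widehat{\psi }(m,n)&= \text {Re}\left\{ y(m,n) e^{-i\phi (m,n)} \right\} , \\ R(\widehat{\varvec{\psi }}({\varvec{\xi }}),{\varvec{\xi }})&= \sum _{m=1}^{M}\sum _{n=1}^{N} |y(m,n)|^2 - \sum _{m=1}^{M}\sum _{n=1}^{N} \left( \text {Re}\left\{ y(m,n) e^{-i\phi (m,n)} \right\} \right) ^2 \\&= \frac{1}{2} \sum _{m=1}^{M}\sum _{n=1}^{N} |y(m,n)|^2 - \frac{1}{2} \text {Re}\left\{ \sum _{m=1}^{M}\sum _{n=1}^{N} y^2(m,n) e^{-2i\phi (m,n)} \right\} . \end{aligned}$$

The last step uses \((\text {Re}\, w)^2 = \frac{1}{2}\left( |w|^2 + \text {Re}\, w^2\right) \) with \(w = y(m,n) e^{-i\phi (m,n)}\). Only the second term depends on \({\varvec{\xi }}\), so minimizing the residual sum of squares amounts to maximizing the real part of the double sum of \(y^2(m,n) e^{-2i\phi (m,n)}\).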

The nonlinear least squares estimation method has been addressed through the periodogram like function \(Q({\varvec{\xi }})\). The unknown parameters \(\alpha _1\), \(\alpha _2\), \(\beta _1\) and \(\beta _2\) are estimated by maximizing \(Q({\varvec{\xi }})\). Denote \(\widehat{\varvec{\xi }}^{\top }=(\widehat{\varvec{\alpha }}^{\top }, \widehat{\varvec{\beta }}^{\top })=(\widehat{\alpha }_1, \widehat{\alpha }_2, \widehat{\beta }_1, \widehat{\beta }_2)\) as the maximizer of \(Q({\varvec{\xi }})\), then

Using notation \(y^2(m,n)=z(m,n)\), and \(2\alpha _1 = a_1\), \(2\alpha _2=a_2\), \(2\beta _1 = b_1\) and \(2\beta _2 = b_2\), we note that \(Q({\varvec{\xi }})\) is the usual 2-D chirp periodogram function for 2-D chirp model. The real and imaginary parts of the squared responses z(m, n), say \(z_R(m,n)\) and \(z_I(m,n)\) are explicitly given in Appendix C. These will be required to establish the consistency and the asymptotic distribution of the proposed estimator \(\widehat{\varvec{\xi }}\). It is observed that the maximization of \(Q({\varvec{\xi }})\) can be carried out by any four-dimensional optimization method over \(\varvec{\Omega }\).

In model (1), if we fix \(n=s\), then \(\{y(m,s); m=1,\ldots ,M\}\) represents the sth column of the \(M \times N\) data matrix ((y(m, n))). Therefore, the sth column is a sample of the following 1-D complex-valued random amplitude chirp model

where the amplitude \(\delta (m,s, \beta _1^0, \beta _2^0) = \psi (m,s) e^{i(\beta _1^0 s + \beta _2^0 s^2)}\) is complex-valued. If we sum the columns over s,

Similarly, each row of the data matrix ((y(m, n))) and their sum say \(z_2(n)\) represent 1-D complex-valued random amplitude model of the same form with unknown parameters \(\beta _1^0\) and \(\beta _2^0\). Efficient estimation of \(\alpha _1^0\) and \(\alpha _2^0\) as well as \(\beta _1^0\) and \(\beta _2^0\) may be developed based on the above observation.
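
The two marginal sequences mentioned above are simple to form from the data matrix. The sketch below assumes the data are stored as a nested list with `y[m-1][n-1]` holding \(y(m,n)\); the function name `column_and_row_sums` is hypothetical.

```python
def column_and_row_sums(y):
    """Return (z1, z2) for an M x N data matrix y.

    z1[m-1] = sum over n of y(m, n): a 1-D sequence in m that carries the
                first-dimension parameters (alpha1, alpha2).
    z2[n-1] = sum over m of y(m, n): a 1-D sequence in n that carries the
                second-dimension parameters (beta1, beta2).
    """
    M, N = len(y), len(y[0])
    z1 = [sum(y[m][n] for n in range(N)) for m in range(M)]
    z2 = [sum(y[m][n] for m in range(M)) for n in range(N)]
    return z1, z2
```

Each of the two sequences could then be passed to a 1-D random amplitude chirp estimator, which reduces one four-dimensional search to two two-dimensional searches.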

Model (1) is a highly nonlinear model in its parameters. Therefore, all the theoretical results of the proposed estimator \(\widehat{\varvec{\xi }}\) will be valid for large samples. The theoretical properties namely the consistency and asymptotic normality of \(\widehat{\varvec{\xi }}\) as well as the necessary assumptions required to establish these properties are stated in Appendices A, C and D.

3 Multicomponent random amplitude chirp model

In this section, we extend the idea of 2-D random amplitude chirp model to multiple components when p pairs of a frequency and a chirp rate corresponding to both the dimensions are present. The model can be formulated as

For \(k=1,\ldots ,p\), the frequencies \(\alpha _{1k}^0\) and \(\beta _{1k}^0\) and the frequency rates \(\alpha _{2k}^0\) and \(\beta _{2k}^0\) are unknown and need to be estimated from a sample of size \(MN\). The additive error \(\{X(m,n)\}\) is a 2-D sequence of complex-valued random variables, as in model (1). The sequence of random variables \(\{\psi _k(m,n)\}\) corresponds to the kth component random amplitude, \(k=1,\ldots ,p\); it is assumed that \(\{\psi _1(m,n)\}, \ldots , \{\psi _p(m,n)\}\) are sequences of independent and identically distributed (i.i.d.) random variables. We assume that the number of components, p, is known in advance.

The method of estimation of the unknown parameters for the multicomponent model (4) is based on the same chirp periodogram-like function \(Q({\varvec{\xi }})\) defined in Sect. 2. The unknown parameters are estimated by maximizing \(Q({\varvec{\xi }})\) locally. Denote \({\varvec{\xi }}_k = (\alpha _{1k}, \alpha _{2k}, \beta _{1k}, \beta _{2k})^{\top }\) and suppose \({\varvec{\xi }}_k^0\) is the true value of \({\varvec{\xi }}_k\). The maximization is carried out in a neighborhood of \({\varvec{\xi }}_k^0\) to estimate the kth component parameters. Let \(N_k\) be a neighborhood of \({\varvec{\xi }}_k^0\) such that for \(j \ne k\), \({\varvec{\xi }}_j^0 \notin N_k\). That is, \(N_k\) has to be chosen in such a way that no other \({\varvec{\xi }}_j^0\) belongs to it, which requires \({\varvec{\xi }}_k^0\) and \({\varvec{\xi }}_j^0\), \(j\ne k\), to be well separated. For small samples, the choice of \(N_k\) also depends on the variance of the additive error sequence \(\{X(m,n)\}\). Formally, estimate \({\varvec{\xi }}_k\) as

where y(m, n) is given in (4). The whole process of estimation can be carried out by solving p separate optimization problems and each one involves a four dimensional maximization over a bounded region.

Similar to the one component model, the theoretical properties of the estimator \(\widehat{\varvec{\xi }}_k\) of \({\varvec{\xi }}_k^0\) defined above are provided in Appendix B along with the assumptions required to develop the properties of the estimator.

4 Numerical experiments

In this section, we perform simulation experiments to evaluate the accuracy of the proposed estimators. These simulations are carried out for various choices of M, N and \(\sigma ^2\). For every \(M = N = 25, 50, 75, 100\) and \(\sigma ^2 = 0.01,0.1,0.5, 1\), 1000 replications are generated. In the first set of experiments, we consider a simple synthetic signal generated using the following model structure:

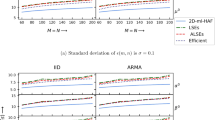

Here, the multiplicative error \(\psi (m,n)\) is assumed to be an i.i.d. sequence of Gaussian random variables with mean 5 and variance 0.5. Similarly, the additive error random variables \(\{X(m,n)\}\) are assumed to be i.i.d. N(0, \(\sigma ^2\)). The objective is to estimate the nonlinear parameters of the model by maximizing the function defined in (2). We use the Nelder–Mead algorithm to optimize the function \(Q(\varvec{\xi })\), with the true values of the parameters as the initial values. For each generated data set, we compute the proposed estimators and report their averages, mean squared errors (MSEs) and the corresponding theoretically derived asymptotic variances (avar). Figure 1 shows the results of these simulations.
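
A single replication of such an experiment might look like the sketch below. It is not the optimizer used in the paper: in place of Nelder–Mead it uses a plain coordinate-wise hill climb started at the true values, chosen only to keep the example dependency-free. The helper names `make_data`, `q_value` and `refine_from_start`, the \(1/(MN)\) scaling of the criterion and the parameter values in the usage note are all hypothetical.

```python
import cmath
import math
import random


def make_data(M, N, xi0, mu_psi=5.0, var_psi=0.5, sigma2=0.1, seed=0):
    """Simulate y(m, n) with Gaussian amplitudes and Gaussian additive errors."""
    a1, a2, b1, b2 = xi0
    rng = random.Random(seed)
    sd_psi, sd_x = math.sqrt(var_psi), math.sqrt(sigma2 / 2.0)
    return [[rng.gauss(mu_psi, sd_psi)
             * cmath.exp(1j * (a1 * m + a2 * m * m + b1 * n + b2 * n * n))
             + complex(rng.gauss(0.0, sd_x), rng.gauss(0.0, sd_x))
             for n in range(1, N + 1)] for m in range(1, M + 1)]


def q_value(y, xi):
    """Periodogram-like criterion of the squared data (assumed 1/(MN) scaling)."""
    a1, a2, b1, b2 = xi
    M, N = len(y), len(y[0])
    total = sum(y[m - 1][n - 1] ** 2
                * cmath.exp(-2j * (a1 * m + a2 * m * m + b1 * n + b2 * n * n))
                for m in range(1, M + 1) for n in range(1, N + 1))
    return abs(total / (M * N)) ** 2


def refine_from_start(y, start, step=0.01, sweeps=12):
    """Coordinate-wise hill climb on q_value (a stand-in for Nelder-Mead).

    Each sweep tries +step and -step on every coordinate, accepts at most
    one improving move per coordinate, then halves the step.
    """
    xi = list(start)
    best = q_value(y, xi)
    for _ in range(sweeps):
        for j in range(4):
            for delta in (step, -step):
                trial = list(xi)
                trial[j] += delta
                value = q_value(y, trial)
                if value > best:
                    xi, best = trial, value
                    break
        step /= 2.0
    return xi, best
```

For example, `refine_from_start(make_data(8, 8, (1.5, 0.1, 2.5, 0.2)), (1.5, 0.1, 2.5, 0.2))` returns a refined parameter vector and its criterion value. Because the step is halved after each sweep, no coordinate can move more than 0.02 from its starting value, so this sketch is only meaningful when the start is already close to the maximizer, as it is when the true values are used.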

Fig. 1 MSEs and the asymptotic variances of the estimates when the data are from model (5)

In the second set of simulations, we consider a more challenging set of samples from a multiple component 2-D model with the following expression:

The amplitude random variables \(\psi _1(m,n) \sim \mathcal {N}(6,0.5)\) and \(\psi _2(m,n) \sim \mathcal {N}(5,0.5)\). The additive errors \(X(m,n) \sim \mathcal {N}(0, \sigma ^2)\). The average estimates, the MSEs and the asymptotic variances of the proposed estimators for the first and second component parameters are shown in Figs. 2 and 3 respectively.

Fig. 2 MSEs and the asymptotic variances of the estimates of the first component when the data are from model (6)

Fig. 3 MSEs and the asymptotic variances of the estimates of the second component when the data are from model (6)

Some noteworthy observations from the simulation results are stated below:

- The biases of the estimates are small and are close to 0, which implies that the difference between the average estimates and the true values of the parameters is negligible.

- For fixed values of M and N, the accuracy of the proposed estimators (measured in terms of MSEs) progressively decreases as the error variance increases.

- The MSEs of the estimates decrease as the dimension of the data matrix increases, thereby verifying consistency of the proposed estimators.

- The MSEs are observed to be smaller than the theoretical asymptotic variances for most of the cases.

Clearly, the results of these experiments reveal that the performance of the estimators is satisfactory. Therefore we can conclude that the proposed method yields accurate estimates in practice.

5 Concluding remarks

In this paper, we study the 2-D random amplitude chirp model and propose a method to estimate the unknown parameters, namely the frequencies and chirp rates. The proposed method maximizes a 2-D periodogram-like function of the squared observations, and the resulting estimators are consistent and asymptotically normally distributed. A 2-D multicomponent random amplitude model has also been studied. Its unknown parameters are estimated by maximizing the same periodogram function locally, in a neighborhood of the true value of each component's parameter vector, one component at a time. Numerical experiments have been carried out to assess the small sample performance, and the results are reported graphically. In this paper, we have assumed that the additive errors are i.i.d. It will be interesting to see how the proposed estimators perform if the additive errors come from a stationary linear process. We have not discussed the estimation of the parameters of the multiplicative and additive errors; this needs to be addressed in order to use the theoretical results in practice. The number of components in the multicomponent model is assumed to be known, which will not be the case in practice, so it needs to be estimated. Further work is needed in that direction.

References

Besson, O., Ghogho, M., & Swami, A. (1999). Parameter estimation for random amplitude chirp signals. IEEE Transactions on Signal Processing, 47(12), 3208–3219.

Besson, O., & Stoica, P. (1995). Sinusoidal signals with random amplitude: Least-squares estimation and their statistical analysis. IEEE Transactions on Signal Processing, 43, 2733–2744.

Cao, F., Wang, S., & Wang, F. (2006). Cross-spectral method based on 2-D cross polynomial transform for 2-D chirp signal parameter estimation. In ICSP2006 Proceedings. https://doi.org/10.1109/ICOSP.2006.344475

Djurović, I., & Simeunović, M. (2018). Parameter estimation of 2D polynomial phase signals using NU sampling and 2D CPF. IET Signal Processing, 12(9), 1140–1145.

Djurović, I., Wang, P., & Ioana, C. (2010). Parameter estimation of 2-D cubic phase signal using cubic phase function with genetic algorithm. Signal Processing, 90(9), 2698–2707.

Farquharson, M., O’Shea, P., & Ledwich, G. (2005). A computationally efficient technique for estimating the parameters of polynomial-phase signals from noisy observations. IEEE Transactions on Signal Processing, 53, 3337–3342.

Gini, F., Montanari, M., & Verrazzani, L. (2000). Estimation of chirp signals in compound Gaussian clutter: A cyclostationary approach. IEEE Transactions on Acoustics, Speech and Signal Processing, 48, 1029–1039.

Grover, R., Kundu, D., & Mitra, A. (2018a). Asymptotic of approximate least squares estimators of parameters of two-dimensional chirp signal. Journal of Multivariate Analysis, 168, 211–220.

Grover, R., Kundu, D., & Mitra, A. (2018b). On approximate least squares estimators of parameters on one dimensional chirp signal. Statistics, 52, 1060–1085.

Grover, R., Kundu, D., & Mitra, A. (2020). An efficient methodology to estimate the parameters of a two-dimensional chirp signal model. Multidimensional Systems and Signal Processing. (to appear).

Kliger, M., & Francos, J. M. (2008). Strong consistency of a family of model order selection rules for estimating 2-D sinusoids in noise. Statistics and Probability Letters, 78, 3075–3081.

Kliger, M., & Francos, J. M. (2013). A strongly consistent model order selection rule for estimating the parameters of 2-D sinusoids in colored noise. IEEE Transactions on Information Theory, 59(7), 4408–4422.

Lahiri, A. (2013). Estimators of parameters of chirp signals and their properties. Ph.D. thesis, Indian Institute of Technology, Kanpur.

Lahiri, A., & Kundu, D. (2017). On parameter estimation of two-dimensional polynomial phase signal model. Statistica Sinica, 27(4), 1779–1792.

Lahiri, A., Kundu, D., & Mitra, A. (2013). Efficient algorithm for estimating the parameters of two dimensional chirp signal. Sankhya B, 75(1), 65–89.

Nandi, S., & Kundu, D. (2004). Asymptotic properties of the least squares estimators of the parameters of the chirp signals. Annals of the Institute of Statistical Mathematics, 56, 529–544.

Nandi, S., & Kundu, D. (2020a). Statistical signal processing: Frequency estimation. Springer.

Nandi, S., & Kundu, D. (2020b). Estimation of parameters in random amplitude chirp signal. Signal Processing, 168, 107328.

Hosseinbor, P. A., & Zhdanov, R. (2017). 2D sinusoidal parameter estimation with offset term. arXiv:1702.01858v1 [stat.AP].

Porwal, A., Mitra, S., & Mitra, A. (2019). Order estimation of 2-dimensional complex superimposed exponential signal model using exponentially embedded family (EEF) rule: Large sample consistency properties. Multidimensional Systems and Signal Processing, 30(3), 1293–1308.

Simeunović, M., Djurović, I., & Pelinkovic, A. (2019). Parametric estimation of 2D cubic phase signals using high-order Wigner distribution with genetic algorithm. Multidimensional Systems and Signal Processing, 30(1), 451–464. https://doi.org/10.1007/s11045-018-0564-6

Stoica, P., & Moses, R. (2005). Spectral analysis of signals. Prentice Hall.

Zhang, K., Wang, S., & Cao, F. (2008). Product cubic phase function algorithm for estimating the instantaneous frequency rate of multicomponent two-dimensional chirp signals. 2008 Congress on Image and Signal Processing, 5, 498–502.

Wu, C. F. J. (1981). Asymptotic theory of the non-linear least-squares estimation. The Annals of Statistics, 9, 501–513.

Acknowledgements

Part of the work of the third author has been funded by the grant no. MTR/2018/000179 of the Science and Engineering Research Board, Government of India. The authors would like to thank two anonymous reviewers for their constructive suggestions, which have helped to improve the manuscript significantly.

Appendices

Appendix A

In this Appendix, we present the theoretical properties of the proposed estimator of the unknown parameters present in the single component model (1). The following assumptions are required on the random amplitude sequence \(\{\psi (m,n)\}\) and the additive error sequence \(\{X(m,n)\}\) to develop the theoretical properties.

Assumption 1

The random amplitude sequence \(\{\psi (m,n)\}\) is a 2-D sequence of i.i.d. real-valued random variables with mean \(\mu _\psi \), variance \(\sigma _\psi ^2\), \(\mu _\psi \ne 0\) and \(\sigma _\psi ^2 > 0\). The fourth moment of \(\{\psi (m,n)\}\) exists.

Assumption 2

The sequence of additive error \(\{X(m,n)\}\) is a 2-D sequence of complex-valued i.i.d. random variables with mean zero and variance \(\sigma ^2\). If \(X(m,n) = X_R(m,n) + i X_I(m,n)\), then both \(\{X_R(m,n)\}\) and \(\{X_I(m,n)\}\) are i.i.d. \((0, \frac{\sigma ^2}{2})\), have finite fourth moment \(\gamma \) and they are independently distributed.

Assumption 3

The sequence of random amplitudes \(\{\psi (m,n)\}\) is assumed to be independent of the sequence of additive errors \(\{X(m,n)\}\).

Define a set \(\varvec{\Omega } = [0, \pi ] \times [0, \pi ] \times [0, \pi ] \times [0, \pi ]\). The following assumption apart from Assumptions 1–3, is required on the true values of the parameters.

Assumption 4

\((\alpha _1^0, \alpha _2^0, \beta _1^0, \beta _2^0)\) is an interior point of \(\varvec{\Omega }\).

The existence of the fourth moment, stated in Assumption 1, of the random amplitudes \(\{\psi (m,n)\}\) is required to obtain the theoretical properties of the proposed estimators. The independence of \(\{\psi (m,n)\}\) and \(\{X(m,n)\}\) is an important assumption to prove the consistency and the asymptotic distribution of the proposed estimators of the frequency and frequency rate. In the following, we first discuss the method of estimation of the unknown parameters present in model (1).

We state the consistency result and the asymptotic distribution of the proposed estimators under Assumptions 1–4 in this Appendix and prove in Appendices C and D, respectively. In order to prove the consistency it is required to have non-zero mean and finite fourth order moment of the random amplitude \(\psi (m,n)\). The existence of non-zero mean is an essential assumption for random amplitude which can be thought of as a multiplicative error. We need the existence of the fourth moment to develop the asymptotic distribution. The following theorems state the results on the consistency properties and the asymptotic distribution of the proposed estimators. Theorem A.1 is proved in Appendix C and Theorem A.2 is in Appendix D. Here \({\mathop {\longrightarrow }\limits ^{d}}\) denotes convergence in distribution.

Theorem A.1

Under Assumptions 1–4, \(\widehat{\alpha }_1\), \(\widehat{\alpha }_2\), \(\widehat{\beta }_1\) and \(\widehat{\beta }_2\) defined in (3), are consistent estimators of \(\alpha _1^0\), \(\alpha _2^0\), \(\beta _1^0\) and \(\beta _2^0\), respectively. \(\square \)

Theorem A.2

Under Assumptions 1–4, as \(\min \{M,N\}\rightarrow \infty \),

where \(\displaystyle \mathbf{D} = \text {diag}\Biggl \lbrace \frac{1}{M^{\frac{3}{2}} N^{\frac{1}{2}}}, \frac{1}{M^{\frac{5}{2}} N^{\frac{1}{2}}}, \frac{1}{M^{\frac{1}{2}} N^{\frac{3}{2}}}, \frac{1}{M^{\frac{1}{2}} N^{\frac{5}{2}}} \Biggr \rbrace \),

and \(C_\psi = 8(\sigma _\psi ^2 + \mu _\psi ^2) \sigma ^2 + \frac{1}{2} \gamma + \frac{1}{8} \sigma ^4 \). \(\square \)

It is important to note that under the assumption of normality of the additive error X(m, n), the asymptotic variance of the proposed estimator \(\widehat{\varvec{\xi }}\) is the Cramer–Rao lower bound.

The following facts can be deduced from the large sample distribution of \(\widehat{\varvec{\xi }}\).

(1) The large-sample variances of \(\widehat{\alpha }_1\) and \(\widehat{\alpha }_2\) depend on the random amplitude \(\psi (m,n)\), through its mean \(\mu _\psi \) and the variance \(\sigma _\psi ^2\) as well as on the additive error through the variance \(\sigma ^2\) and the fourth moment \(\gamma \). We note that

$$\begin{aligned}&\widehat{\alpha }_1 - \alpha _1^0=O_p(M^{-\frac{3}{2}} N^{-\frac{1}{2}} ), \;\; \widehat{\beta }_1 - \beta _1^0=O_p(M^{-\frac{1}{2}} N^{-\frac{3}{2}} ), \;\; \\&\widehat{\alpha }_2 - \alpha _2^0=O_p(M^{-\frac{5}{2}} N^{-\frac{1}{2}} ), \;\; \widehat{\beta }_2 - \beta _2^0= O_p(M^{-\frac{1}{2}} N^{-\frac{5}{2}} ), \end{aligned}$$

according to Theorem A.2, where \(O_p(.)\) denotes bounded in probability. Therefore, the chirp rate parameters in both dimensions, \(\alpha _2\) and \(\beta _2\), can be estimated more accurately than the frequency parameters \(\alpha _1\) and \(\beta _1\) for a given sample size.

(2) The marginal asymptotic distributions of the estimators of parameters in each dimension are the same when \(M=N\). Then the asymptotic variances of the estimators \(\widehat{\alpha }_1\), \(\widehat{\alpha }_2\), \(\widehat{\beta }_1\) and \(\widehat{\beta }_2\) are given by

$$\begin{aligned} \text{ Var }(\widehat{\alpha }_1) = \text{ Var }(\widehat{\beta }_1) = \frac{93 C_\psi }{M^4 (\sigma _\psi ^2 + \mu _\psi ^2)^2}, \;\;\;\;\;\; \text{ Var }(\widehat{\alpha }_2) = \text{ Var }(\widehat{\beta }_2) = \frac{135 C_\psi }{M^6 (\sigma _\psi ^2 + \mu _\psi ^2)^2}. \end{aligned}$$

(3) The 2-D random amplitude sinusoidal signal model of the form

$$\begin{aligned} y(m,n) = \psi (m,n) e^{i(\alpha _1^0 m +\beta _1^0 n)} + X(m,n) \end{aligned}$$

is a special case of model (1) when \(\alpha _2^0 = \beta _2^0=0\). The unknown frequencies \(\alpha _1^0\) and \(\beta _1^0\) can be estimated by maximizing a periodogram function of \(y^2(m,n)\) similar to \(Q({\varvec{\xi }})\), defined in (2). The theoretical results related to the estimator follow in the same way as for model (1).
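
The variance expressions for the case \(M=N\) are straightforward to evaluate numerically. The sketch below codes \(C_\psi \) as given in Theorem A.2 and the two variance formulas stated above; the function name `asymptotic_variances` and the numerical inputs in the usage note are illustrative assumptions.

```python
def asymptotic_variances(M, mu_psi, var_psi, sigma2, gamma):
    """Approximate variances of the estimators when M = N.

    mu_psi, var_psi: mean and variance of the random amplitude.
    sigma2: variance of the complex additive error.
    gamma: fourth moment of the real (or imaginary) part of the error.
    Returns (C_psi, Var of frequency estimators, Var of chirp rate estimators).
    """
    second_moment = var_psi + mu_psi ** 2
    c_psi = 8.0 * second_moment * sigma2 + 0.5 * gamma + 0.125 * sigma2 ** 2
    var_freq = 93.0 * c_psi / (M ** 4 * second_moment ** 2)
    var_rate = 135.0 * c_psi / (M ** 6 * second_moment ** 2)
    return c_psi, var_freq, var_rate
```

For Gaussian additive errors, the real part is \(N(0, \sigma ^2/2)\), whose fourth moment is \(3(\sigma ^2/2)^2\), so a call such as `asymptotic_variances(50, 5.0, 0.5, 0.1, 3 * (0.1 / 2) ** 2)` supplies a consistent value of `gamma`. The ratio of the two variances is \(93 M^2/135\), which shows how much faster the chirp rate estimators converge.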

Appendix B

In addition to Assumption 2, the following assumptions are required to establish the theoretical properties of the proposed estimators in case of multicomponent model.

Assumption 5

The sequence of multiplicative error corresponding to the k-th component \(\{\psi _k(m,n)\}\) is a sequence of i.i.d. real-valued random variables with mean \(\mu _{k\psi } \ne 0\), variance \(\sigma _{k\psi }^2 > 0\), and finite fourth moment for \(k=1,\ldots , p\). Additionally, \(\{\psi _j(m,n)\}\) and \(\{\psi _k(m,n)\}\) for \(j\ne k\) are independent.

Assumption 6

The sequence of additive error \(\{X(m,n)\}\) is assumed to be independent of \(\{\psi _1(m,n)\}, \ldots , \{\psi _p(m,n)\}\).

Assumption 7

\((\alpha _{1k}^0,\alpha _{2k}^0, \beta _{1k}^0, \beta _{2k}^0)\) is an interior point of \(\varvec{\Omega }\) for \(k=1,\ldots ,p\) and \((\alpha _{1k}^0,\alpha _{2k}^0, \beta _{1k}^0, \beta _{2k}^0) \ne (\alpha _{1j}^0,\alpha _{2j}^0, \beta _{1j}^0, \beta _{2j}^0)\) for \(k\ne j\), \(j, k = 1,\ldots ,p\).

Similar to the one component model, the estimator \(\widehat{\varvec{\xi }}_k\) of \({\varvec{\xi }}_k^0\) defined in Sect. 3 is consistent, as stated below in Theorem B.1. The asymptotic distribution of \(\widehat{\varvec{\xi }}_k\) is stated in Theorem B.2. The outline of the proof of Theorem B.1 is discussed in Appendix E. The proof of Theorem B.2 involves calculations similar to those in the proof of Theorem A.2 and is not provided here.

Theorem B.1

Under Assumptions 2 and 5–7, \(\widehat{\varvec{\xi }}_k\) is a consistent estimator of \({\varvec{\xi }}_k^0\), for \(k=1,\ldots ,p\). \(\square \)

Theorem B.2

Under Assumptions 2 and 5–7, as \(\min \{M,N\}\longrightarrow \infty \)

where \(C_{k\psi } = 8(\sigma _{k\psi }^2 + \mu _{k\psi }^2) \sigma ^2 + \frac{1}{2} \gamma + \frac{1}{8} \sigma ^4\); \(\mathbf{D}\) is as defined in Theorem A.2 and

for \(k=1,\ldots ,p\). Additionally, \((\widehat{\varvec{\xi }}_k - {\varvec{\xi }}_k^0) \mathbf{D}^{-1}\) and \((\widehat{\varvec{\xi }}_j - {\varvec{\xi }}_j^0) \mathbf{D}^{-1}\) for \(k\ne j\) are asymptotically independently distributed. \(\square \)

The estimators of the unknown parameters coming from the same chirp component are asymptotically dependent, whereas estimators corresponding to different components are asymptotically independent. Because \((\widehat{\varvec{\xi }}_k - {\varvec{\xi }}_k^0) \mathbf{D}^{-1}\) and \((\widehat{\varvec{\xi }}_j - {\varvec{\xi }}_j^0) \mathbf{D}^{-1}\) are asymptotically independent for \(k\ne j\), we expect that the parameters can be estimated using a sequential estimation method.

Appendix C

In this Appendix, we first state all the lemmas required to prove Theorems A.1 and A.2. The consistency of the proposed estimator \(\widehat{\varvec{\xi }}\) is then proved using these lemmas. Lemmas 1 and 2 will be used to get a compact form of the asymptotic distribution as well as to prove the consistency of the proposed estimators of the unknown parameters. Lemma 6 provides a sufficient condition for the proposed estimator to be consistent. Lemma 3 will be used to verify the condition given in Lemma 6. Lemma 4 is needed to prove the consistency of the proposed estimator for the multicomponent model (stated in Theorem B.1). Lemma 5 states the convergence of different series involving the squares of the random variable y(m, n) under Assumptions 1–3.

Write \(z(m,n) = y^2(m,n) = z_R(m,n) + i z_I(m,n)\) and recall that \({\varvec{\xi }} = (\alpha _1, \alpha _2, \beta _1, \beta _2)^T\) and \({\varvec{\xi }}^0 = (\alpha _1^0, \alpha _2^0, \beta _1^0, \beta _2^0)^T\). Denote \(a({\varvec{\xi }}^0;m,n)=\alpha _1^0 m + \alpha _2^0 m^2 + \beta _1^0 n + \beta _2^0 n^2\), then

We now provide the lemmas which are required to prove the consistency of \(\widehat{\varvec{\xi }}\) stated in Theorem A.1 in Appendix A.

Lemma 1

If \((\omega ,\delta ) \in (0,\pi )\times (0,\pi )\), then except for a countable number of points

Lemma 2

If \((\alpha _1, \alpha _2, \beta _1, \beta _2) \in (0,\pi )\times (0,\pi ) \times (0,\pi )\times (0,\pi )\), then except for a countable number of points and for \(l,k=0,1,\ldots \), the following results hold:

Lemma 3

(Lahiri 2013) Let \(\{X(m,n)\}\) be a 2-D sequence of i.i.d. real-valued random variables with mean zero and finite variance \(\sigma ^2 >0\), then for \(k,l=0,1,\ldots \)

Lemma 4

(Grover et al. 2018a) If \((\omega _1, \omega _2, \omega _3, \omega _4) \in (0, \pi ) \times (0, \pi ) \times (0, \pi ) \times (0, \pi )\) and \((\theta _1, \theta _2, \theta _3, \theta _4) \in (0, \pi ) \times (0, \pi ) \times (0, \pi ) \times (0, \pi )\) and \((\omega _1, \omega _2, \omega _3, \omega _4) \ne (\theta _1, \theta _2, \theta _3, \theta _4)\), then except for a countable number of points, for \(k,l=0,1,\ldots \), the following results hold.

Lemma 5

Under Assumptions 1–3, the following results are true for model (1).

for \(k,l=0,1,\ldots ,4\).

Proof of Lemma 5

Note that \(E[X_R(m,n) X_I(m,n)]=0\) and \(\text {Var}[X_R(m,n)X_I(m,n)] = \frac{\sigma ^4}{4}\) and so the 2-D sequence \(\{X_R(m,n)X_I(m,n)\} {\mathop {\sim }\limits ^{i.i.d.}}(0, \frac{\sigma ^4}{4})\). Similarly, under Assumptions 1–3, it can be shown that

Consider

Then, \(\{(X_R^2(m,n) - X_I^2(m,n))\}\) is a sequence of i.i.d. random variables with mean zero and finite variance. Therefore, the second term converges to zero as \(\min \{M,N\}\longrightarrow \infty \) using Lemma 3. Similarly, the third and fourth terms also converge to zero as \(\min \{M,N\}\longrightarrow \infty \) using (15). The first term in the above expression can be written as

using Lemma 2. We have used the fact that the fourth moment of \(\psi (m,n)\) exists. The other three results, given in (12), (13) and (14) can be proved in a similar way. \(\square \)
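The moment claims at the start of the proof of Lemma 5 can be checked directly. The short derivation below is a sketch under the assumption (our reading of Assumption 2, which is stated in Appendix A and not reproduced here) that \(X_R(m,n)\) and \(X_I(m,n)\) are independent, each with mean zero and variance \(\frac{\sigma ^2}{2}\):

$$\begin{aligned} E[X_R(m,n) X_I(m,n)]&= E[X_R(m,n)]\, E[X_I(m,n)] = 0, \\ \text {Var}[X_R(m,n) X_I(m,n)]&= E[X_R^2(m,n)]\, E[X_I^2(m,n)] - 0 = \frac{\sigma ^2}{2} \cdot \frac{\sigma ^2}{2} = \frac{\sigma ^4}{4}. \end{aligned}$$

Under the same assumption, \(E[X_R^2(m,n) - X_I^2(m,n)] = \frac{\sigma ^2}{2} - \frac{\sigma ^2}{2} = 0\), which is the zero-mean property used for the second term in the proof.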

Lemma 6

Let \(\widehat{\varvec{\xi }}=(\widehat{\alpha }_1, \widehat{\alpha }_2, \widehat{\beta }_1, \widehat{\beta }_2)^T\) be an estimate of \({\varvec{\xi }}^0 = (\alpha _1^0, \alpha _2^0, \beta _1^0, \beta _2^0)^T\) that maximizes \(Q(\varvec{\xi })\), defined in (2) and for any \(\epsilon >0\), let \(S_\epsilon ^{{\xi }^0} = \bigl \lbrace {\varvec{\xi }}: |{\varvec{\xi }} - {\varvec{\xi }}^0| > 4 \epsilon \bigr \rbrace \) for some fixed \({\varvec{\xi }}^0 \in (0,\pi )\times (0,\pi )\times (0,\pi )\times (0,\pi )\). If for any \(\epsilon >0\), as \(\min \{M,N\}\longrightarrow \infty \)

then as \(\min \{M,N\} \longrightarrow \infty \), \(\widehat{\varvec{\xi }} \longrightarrow {\varvec{\xi }}^0\) a.s.

Proof of Lemma 6

This lemma can be proved by contradiction, along the lines of Wu (1981). \(\square \)

Proof of Theorem A.1

Expanding \(Q(\varvec{\xi })\) around \({\varvec{\xi }}^0\) and using \(y^2(m,n)=z(m,n)=z_R(m,n)+i z_I(m,n)\), it can be written as

Using Lemma 5 with \(k=l=0\), we have

Therefore,

Now

using Lemmas 1 and 3. Similarly, we can show that \({\overline{\lim }}_{\min \{M,N\}\longrightarrow \infty } \sup _{S_\epsilon ^{\xi ^0}} T_2 {\mathop {\longrightarrow }\limits ^{a.s.}} 0\). Therefore,

Hence, using Lemma 6, \(\widehat{\alpha }_1\), \(\widehat{\alpha }_2\), \(\widehat{\beta }_1\) and \(\widehat{\beta }_2\) which maximize \(Q(\varvec{\xi })\) are consistent estimators of \(\alpha _1^0\), \(\alpha _2^0\), \(\beta _1^0\) and \(\beta _2^0\), respectively. \(\square \)
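The consistency argument relies on \(Q(\varvec{\xi })\) being large at \({\varvec{\xi }}^0\) and small away from it. The sketch below illustrates this numerically. It is not the authors' code: it assumes that \(Q(\varvec{\xi })\) in (2) is the squared modulus of the normalized sum of \(y^2(m,n) e^{-2i a({\varvec{\xi }};m,n)}\), with \(a({\varvec{\xi }};m,n)=\alpha _1 m + \alpha _2 m^2 + \beta _1 n + \beta _2 n^2\), and it uses Gaussian \(\psi (m,n)\) and Gaussian additive error only for the simulation. All function names and numerical values are hypothetical.

```python
import numpy as np

def chirp_phase(xi, M, N):
    """a(xi; m, n) = alpha1 m + alpha2 m^2 + beta1 n + beta2 n^2 on an M x N grid."""
    a1, a2, b1, b2 = xi
    m = np.arange(1, M + 1)[:, None]
    n = np.arange(1, N + 1)[None, :]
    return a1 * m + a2 * m ** 2 + b1 * n + b2 * n ** 2

def simulate_chirp(xi0, M, N, mu_psi=2.0, sigma_psi=0.5, sigma=0.5, seed=0):
    """Simulate y(m,n) = psi(m,n) exp(i a(xi0; m, n)) + X(m,n).

    Assumption for illustration only: Gaussian psi and Gaussian X.
    """
    rng = np.random.default_rng(seed)
    psi = rng.normal(mu_psi, sigma_psi, size=(M, N))
    X = (rng.normal(size=(M, N)) + 1j * rng.normal(size=(M, N))) * sigma / np.sqrt(2)
    return psi * np.exp(1j * chirp_phase(xi0, M, N)) + X

def Q(xi, y):
    """Assumed form of the chirp periodogram-type function of y^2."""
    M, N = y.shape
    return np.abs(np.mean(y ** 2 * np.exp(-2j * chirp_phase(xi, M, N)))) ** 2

xi0 = (1.0, 0.1, 2.0, 0.2)
y = simulate_chirp(xi0, 50, 50)
q_true = Q(xi0, y)                       # evaluated at the true parameters
q_off = Q((1.0, 0.11, 2.0, 0.2), y)      # chirp rate alpha2 perturbed by 0.01
print(q_true, q_off)
```

In this sketch a small perturbation of a chirp rate already makes the criterion drop sharply, which is consistent with the faster convergence rate of the chirp rate estimators relative to the frequency estimators.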

Appendix D

In this Appendix, we prove Theorem A.2, which states the asymptotic distribution of the proposed estimators of the unknown parameters of the single component model (1). The proof first uses the multivariate Taylor series expansion of the first order derivative vector of \(Q({\varvec{\xi }})\) up to the first order term. The first order derivatives of \(Q({\varvec{\xi }})\) with respect to \(\alpha _k\) and \(\beta _k\), \(k=1,2\), are given below:

where

Now using Lemma 5 with \(k=l=0\), it immediately follows that

Therefore, for large M and N,

due to part (b) of (19), ignoring the second terms in (17) and (18), which involve \(f_2({\varvec{\xi }})\). The second order derivatives of \(Q({\varvec{\xi }})\) with respect to \(\alpha _k\) and \(\beta _k\) for \(k = 1, 2\), with proper normalizations, can be calculated.

Now, we can show the following using Lemma 5, for \(k,l=0, \ldots ,4\),

Write \(\displaystyle Q'({\varvec{\xi }}) = \left( \frac{\partial Q({\varvec{\xi }})}{\partial \alpha _1}, \frac{\partial Q({\varvec{\xi }})}{\partial \alpha _2} , \frac{\partial Q({\varvec{\xi }})}{\partial \beta _1}, \frac{\partial Q({\varvec{\xi }})}{\partial \beta _2} \right) ^T\) as the \(4\times 1\) vector of first order derivatives of \(Q({\varvec{\xi }})\) and let \(Q''({\varvec{\xi }})\) be the \(4\times 4\) matrix of second order derivatives of \(Q({\varvec{\xi }})\). Consider the diagonal matrix \(\mathbf{D}\) given in Theorem A.2. The diagonal entries of \(\mathbf{D}\) correspond to the rate of convergence of each parameter estimator. Expand \(Q'(\widehat{\varvec{\xi }})\) using the multivariate Taylor series expansion around \({\varvec{\xi }}^0\),

where \(\bar{\varvec{\xi }}\) is a point on the line segment joining \(\widehat{\varvec{\xi }}\) and \({\varvec{\xi }}^0\). Since \(Q'(\widehat{\varvec{\xi }}) =0\), as \(\widehat{\varvec{\xi }}\) maximizes \(Q({\varvec{\xi }})\), the above equation can be written as

provided \([\mathbf{D}Q''(\bar{\varvec{\xi }}) \mathbf{D}]\) is an invertible matrix a.s. Because \(\widehat{\varvec{\xi }} \longrightarrow {\varvec{\xi }}^0\) a.s. and \(Q''({\varvec{\xi }})\) is a continuous function of \({\varvec{\xi }}\), we have

using the continuous mapping theorem. The matrix \({\varvec{\Sigma }}\) can be obtained using the limits given in (20) as

Write \(\mathbf{G}_1 = \left[ \begin{array}{ll} 1 &{}\quad 1 \\ 1 &{}\quad \frac{16}{15} \\ \end{array} \right] \), then \({\varvec{\Sigma }} = \left[ \begin{array}{lll} \mathbf{G}_1 &{}\quad \mathbf{0} \\ \mathbf{0} &{}\quad \mathbf{G}_1 \\ \end{array} \right] \) where \(\mathbf{0}\) is a \(2\times 2\) zero matrix. Using (19), the elements of \(\mathbf{D} Q'({\varvec{\xi }}^0)\) are

for large M and N. Therefore, to find the asymptotic distribution of \(\mathbf{D} Q'({\varvec{\xi }}^0)\), we need to study the large sample distribution of \(\frac{1}{M^{\frac{3}{2}} N^{\frac{1}{2}}} g_1(1;{\varvec{\xi }}^0)\) in the first term, \(\frac{1}{M^{\frac{5}{2}} N^{\frac{1}{2}}} g_1(2;{\varvec{\xi }}^0)\) in the second term and so on. Replacing \(z_R(m,n)\) and \(z_I(m,n)\) in \(g_1(k;{\varvec{\xi }}^0)\), \(k=1,2\), we have

The sequences \(\{X_R(m,n) X_I(m,n)\}\), \(\{\psi (m,n) X_R(m,n)\}\), \( \{\psi (m,n) X_I(m,n)\}\) and \(\{(X_R^2(m,n) - X_I^2(m,n))\}\) are all sequences of i.i.d. random variables with zero mean and finite variance (see the proof of Lemma 5). Therefore, \(E[\frac{1}{M^{\frac{3}{2}} N^{\frac{1}{2}}} g_1(1;{\varvec{\xi }}^0)] =0\) and \(E[\frac{1}{M^{\frac{5}{2}} N^{\frac{1}{2}}} g_1(2;{\varvec{\xi }}^0)] =0\) for large M and N. We observe that all the terms above satisfy the Lindeberg–Feller condition. So, \(\frac{1}{M^{\frac{3}{2}} N^{\frac{1}{2}}} g_1(1;{\varvec{\xi }}^0)\) and \(\frac{1}{M^{\frac{5}{2}} N^{\frac{1}{2}}} g_1(2;{\varvec{\xi }}^0)\) converge to normal distributions with zero mean and finite variances.

Similarly, \(\frac{1}{M^{\frac{1}{2}} N^{\frac{3}{2}}} h_1(1;{\varvec{\xi }}^0)\) and \(\frac{1}{M^{\frac{1}{2}} N^{\frac{5}{2}}} h_1(2;{\varvec{\xi }}^0)\) also converge to normal distributions. In order to find the large sample variances and covariances of the elements of \(\mathbf{D} Q'({\varvec{\xi }}^0)\), we first find the variance of \(\frac{1}{M^{\frac{3}{2}} N^{\frac{1}{2}}} g_1(1;{\varvec{\xi }}^0)\) for large M and N.

Similarly, we can show as \(\min \{M, N\} \longrightarrow \infty \),

Now, note that \(\mathbf{D}Q'({\varvec{\xi }}^0)\) can be written as

Then, as \(\min \{M,N\} \longrightarrow \infty \), \(\frac{2}{MN} f_1({\varvec{\xi }}^0) {\mathop {\longrightarrow }\limits ^{a.s.}} 2 (\sigma _\psi ^2 + \mu _\psi ^2)\) using (19) and

where

Therefore, Slutsky’s theorem can be applied in (21) and as \(\min \{M,N\}\longrightarrow \infty \), we have

and hence

This proves the theorem. \(\square \)
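The proof requires \([\mathbf{D}Q''(\bar{\varvec{\xi }}) \mathbf{D}]\) to be invertible, and its limit is expressed through the block-diagonal matrix \({\varvec{\Sigma }}\) built from \(\mathbf{G}_1\). As a small numerical check, and only as a sketch, the following code inverts \({\varvec{\Sigma }}\) as stated in the proof and confirms that the inverse is again block diagonal. The variable names are hypothetical, and no scaling constants from the theorem are included.

```python
import numpy as np

# G_1 as given in the proof of Theorem A.2.
G1 = np.array([[1.0, 1.0],
               [1.0, 16.0 / 15.0]])
zero = np.zeros((2, 2))

# Sigma is block diagonal with two copies of G_1.
Sigma = np.block([[G1, zero],
                  [zero, G1]])

# det(G_1) = 16/15 - 1 = 1/15 is non-zero, so Sigma is invertible.
Sigma_inv = np.linalg.inv(Sigma)
G1_inv = Sigma_inv[:2, :2]
print(G1_inv)
```

By hand, \(\mathbf{G}_1^{-1} = 15 \left[ \begin{array}{rr} \frac{16}{15} & -1 \\ -1 & 1 \\ \end{array} \right] \), so the frequency and chirp rate directions within one dimension are coupled, while the two dimensions stay decoupled through the zero off-diagonal blocks.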

Appendix E

In this Appendix, we provide an outline of the proof of Theorem B.1. Write

where y(m, n) is from the multicomponent model (4). Also write \(x(m,n) = y^2(m,n)\) and \(x(m,n) = x_R(m,n) +i x_I(m,n)\), then \(x_R(m,n)\) and \(x_I(m,n)\) are explicitly given by

using the notation \(a({\varvec{\xi }}_k^0;m,n)=\alpha _{1k}^0 m + \alpha _{2k}^0 m^2 + \beta _{1k}^0 n + \beta _{2k}^0 n^2\). To prove Theorem B.1, a lemma analogous to Lemma 5 is required for the multicomponent model given in (4).

Lemma 7

Under Assumptions 2, 5 and 6, the following results are true for model (4).

for \(k,l=0,1,\ldots ,4\).

Proof of Lemma 7

We can show that

Consider

As \(\{X_R^2(m,n) - X_I^2(m,n)\}\), \(\{\psi _j(m,n) X_R(m,n)\}\) and \(\{\psi _j(m,n) X_I(m,n)\}\) are sequences of i.i.d. random variables with mean zero and finite variance, the second, third and fourth terms vanish for large M and N using Lemma 3. Using the independence of \(\{\psi _j(m,n)\}\) and \(\{\psi _k(m,n)\}\) and part (a) of Lemma 4, the last term goes to zero as \(M,N \rightarrow \infty \). As in the proof of Lemma 5, using Lemma 2, the first term can be shown to satisfy

The other three results can be proved similarly. \(\square \)

In order to prove Theorem B.1, a lemma involving \(J({\varvec{\xi }})\), similar to Lemma 6, is also required. Then, it can be shown that for \(k=1,\ldots ,p\)

and

where \(S_{k\epsilon } =\bigl \lbrace {\varvec{\xi }}_k: |{\varvec{\xi }}_k - {\varvec{\xi }}_k^0| > \epsilon \bigr \rbrace \) for some fixed \({\varvec{\xi }}_k^0 \in (0,\pi )\times (0,\pi )\times (0,\pi ) \times (0,\pi )\). Therefore,

and the estimator \(\widehat{\varvec{\xi }}_k\) of \({\varvec{\xi }}_k^0\) that locally maximizes \(J({\varvec{\xi }})\) is a consistent estimator. \(\square \)

Cite this article

Nandi, S., Grover, R. & Kundu, D. Estimation of parameters of two-dimensional random amplitude chirp signal in additive noise. Multidim Syst Sign Process 33, 1045–1068 (2022). https://doi.org/10.1007/s11045-022-00831-1
