Abstract

Underwater images are generally characterized by color cast and low contrast due to selective absorption and light scattering in the water medium. Such degraded images are of limited use for further analysis. To overcome underwater image degradation, various enhancement techniques have been developed. In particular, fusion-based methods have achieved remarkable success in this field. However, there are still defects in the fusion of input images and weight maps, which cause their results to look unnatural. In this paper, we propose a novel and effective natural-based fusion method for underwater image enhancement that combines several image processing algorithms. First, we design an adaptive underwater image white balance method, motivated by our statistical prior, to mitigate the color deviation of underwater scenes. We then derive two inputs that represent local detail-improved and global contrast-enhanced versions of the color-corrected image. Instead of explicitly estimating the weight map, as most existing algorithms do, we propose a naturalness-preserving weight map estimation (NP-WME) method, which models weight map estimation as an optimization problem. Particle swarm optimization (PSO) is used to solve it. Benefiting from proper weighting, the proposed method achieves a trade-off between detail enhancement and contrast improvement, resulting in a natural appearance of the fused image. Through this synthesis, we merge the advantages of different algorithms to obtain the output image. Experimental results show that the proposed method outperforms several related methods in both quantitative and qualitative evaluations.

1 Introduction

The quality of visual information in underwater images plays an important role in many ocean engineering applications and in scientific research, such as aquatic robot inspection [53] and underwater saliency detection [29]. However, underwater images are usually plagued by color cast and low contrast owing to selective absorption and light scattering. In underwater scenes, selective absorption leads to serious color cast and loss of image information [2], while light scattering blurs image features and reduces contrast [9]. Therefore, underwater image enhancement techniques are desired in both scientific research and computer applications.

Several attempts have been made to improve the visual quality of such degraded underwater images. On the whole, early methods mainly use multiple images [42, 47] or additional information [30] to restore the underwater image. Nevertheless, these algorithms are generally limited in practical applications. In recent years, researchers have focused on haze removal from a single image, mostly using the image formation model (IFM). These IFM-based dehazing methods depend on various hypotheses or priors. However, for underwater scenes, these priors may not always hold, due to the unbalanced attenuation caused by selective absorption in water. Subsequently, with the popularity of the Graphics Processing Unit (GPU), deep learning has become the most advanced solution in the image dehazing field. However, these methods produce unsatisfactory results because of the lack of datasets that contain both real-world underwater images and their corresponding ground truth for different water types. Although various methods have been proposed to synthesize underwater images, there is still a gap between synthetic and real-world underwater images [36].

To address these problems, several fusion-based methods [3, 4, 7, 39] have been proposed. In [3], Ancuti et al. proposed a multi-scale fusion method that combines the results of color correction and contrast enhancement using four weight maps: Laplacian contrast, local contrast, saliency, and exposedness. More recently, [4] modified this work by omitting the exposedness weight map, to avoid amplifying some artifacts and to improve the color appearance of the resulting images. Although these methods are valuable, they still suffer from artifacts and an unnatural appearance, because they limit themselves to these few weight maps without fully considering the information balance among the derived inputs. In addition, these methods estimate weights per pixel, which is susceptible to noise and prone to producing visual artifacts in the fused image.

To solve the aforementioned problems, we propose a novel enhancement method that reasonably combines several algorithms to obtain the final enhanced result. The specific contributions are as follows:

- We propose an effective natural-based fusion method to enhance underwater images, which simultaneously solves the dehazing problem. Extensive experiments show that the proposed method yields better results than other related methods.

- To remove the color casts of underwater images, we propose an adaptive underwater image white balance (AUWB) method inspired by our statistical prior that the green channel is relatively well preserved. By incorporating the significant attenuation difference between the green channel and the degraded channels into a gain factor, the proposed AUWB method can effectively compensate a variety of color-distorted underwater images.

- To improve the naturalness of the fused image, we design a novel naturalness-preserving weight map estimation (NP-WME) algorithm, which is formulated as an optimization problem and solved with particle swarm optimization (PSO). Compared with previous fusion-based algorithms, our enhanced image achieves a good trade-off in the transfer of contrast and details without significant amplification of visual artifacts.

The rest of this paper is organized as follows: Section 2 introduces the work related to underwater dehazing. The proposed method is described in Section 3. Section 4 evaluates and compares experimental results. Section 5 concludes the paper.

2 Related work

The existing image dehazing methods can be roughly divided into the following categories: traditional methods [25, 62] and deep-learning based methods [32, 59]. The traditional methods are further classified into underwater image restoration methods and underwater image enhancement methods. Here is a brief overview of all the above methods.

2.1 Underwater image restoration method

Underwater image restoration methods [54, 55, 60] use optical model parameters derived from prior knowledge to restore the image by reversing the degradation process. Most of these techniques are based on the image formation model (IFM). To estimate the required parameters, the dark channel prior (DCP) [24] is commonly used. Since the DCP has proven effective, many researchers have proposed underwater restoration methods based on it. For example, Drews Jr et al. [16] derived the underwater DCP (UDCP) from the traditional DCP by excluding the red channel when producing the prior. Peng et al. [44] proposed a generalized dark channel prior (GDCP), which estimates ambient light using depth-dependent color and incorporates adaptive color correction into the IFM. Apart from the DCP-related priors, other underwater image restoration works have also proposed useful priors. Galdran et al. [20] proposed a red channel prior method to recover the lost contrast in underwater images. This prior reverses the red channel to compensate for the strong attenuation of red light in the water medium. Li et al. [34] presented an effective underwater image dehazing method built on a minimum information loss principle, followed by a histogram distribution prior that increases the contrast and brightness of underwater images. Peng and Cosman [45] proposed a depth estimation method for underwater scenes based on image blurriness and light absorption, which can be used in the IFM to restore underwater images. Hou et al. [26] proposed a variational model based on non-local differential operators, which successfully integrates the underwater image formation model into the variational framework.

2.2 Underwater image enhancement method

On the other hand, underwater image enhancement methods [23, 37] improve image quality by changing the intensity values of the RGB color channels according to certain rules. For example, in [11], Chambah et al. proposed an automatic color equalization (ACE) model to correct strong and nonuniform color casts in underwater images. In [27], Iqbal et al. used a histogram stretching method to restore the color balance and improve the contrast of underwater images. This approach is easy to apply and can successfully improve underwater image quality. Fu et al. [19] proposed a two-step enhancement method, which carries out a color correction process followed by a contrast enhancement process. Mi et al. [40] proposed a multi-purpose oriented method, which decomposes the input into an illumination layer and a reflectance layer. Brightness compensation is performed on the illumination layer, while color correction and contrast enhancement are carried out on the reflectance layer through a multi-scale processing strategy. Zhang et al. [61] introduced a method called LAB-MSR, which extends the Retinex algorithm to the CIEL∗a∗b∗ color space.

2.3 Deep-learning based method

More recently, deep-learning based dehazing networks have been designed for underwater images. Fabbri et al. [17] proposed UGAN, which uses a gradient penalty as a soft constraint on the output image. In [35], Li et al. proposed a weakly supervised color transformation technique inspired by cycle-consistent adversarial networks (CCAN) to correct color. UWCNN [32] uses a CNN architecture trained on underwater images synthesized from underwater scene priors. Islam et al. [28] presented a supervised enhancement model called FUnIE-GAN for estimating the clear image. Guo et al. [21] proposed DenseGAN, built on a multi-scale dense block that combines dense connections, residual learning, and a multi-scale network for enhancing underwater images.

3 The proposed method

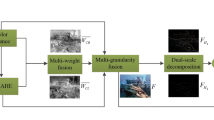

In this section, we describe the proposed method for generating a haze-free underwater image with a natural appearance and good visibility. An overview of the proposed method is shown in Fig. 1. The steps involved are as follows:

3.1 Adaptive underwater image white balance

In underwater image enhancement, it is necessary to correct the unwanted color appearance caused by the selective absorption of colors with depth. In water, the unbalanced attenuation caused by selective absorption varies with geographical, seasonal, and climate-related events [8]. For example, in clear open waters, visible light with the longest wavelength is absorbed at the highest rate, so the scene appears deep blue or blue-green to the eye. In near-shore waters, sea water contains more suspended particles and organic matter, which strongly absorb the shortest wavelengths, resulting in a yellowish or greenish appearance.

To address color correction, we first examine existing white balance methods; the visual results are shown in Fig. 2. Most traditional methods perform poorly because they do not consider selective absorption. They fail to remove the color shift, and their results generally look bluish. Gray World is the best of these methods at removing the blue tone, but it suffers from severe red artifacts. Recently, some methods [4, 5, 12] have been proposed to reduce the effect of selective absorption. In [4], a constant proportional compensation model is developed to make up the difference between the degraded channel and the other channels, which improves the global visual performance. However, it identifies the degraded channels by manually observing the tones of the image, which reduces its practical applicability. Therefore, how to effectively compensate for light attenuation is still a challenge.

Fig. 2 Comparative results of different white balance methods. From left to right: original images, which are examples of the four color tones, and the results generated by Grey Edge [58], Shades of Grey [18], Max RGB [31], Gray World [10], and the AUWB method. Rows one to three are selected from UCCS [38], which contains three 100-image subsets of “Blue”, “Green-blue”, and “Green” tones according to the degree of color cast. The fourth row is selected from UIEBD [36]; this subset contains 10 typical yellow images

To solve the problems mentioned above, we propose an adaptive underwater image white balance (AUWB) approach. We first observe that images captured under water usually show four color tone types, namely blue, blue-green, greenish, and yellow. To analyze the selective absorption of each color tone, we gather an underwater image dataset from UCCS [38] and UIEBD [36]. The corresponding example images are shown in the first two columns of Fig. 2. Table 1 reports the mean (μ) and standard deviation (σ) of the three channels for each color tone type. Bold values mark the maximum among the R, G, and B channels. From Table 1, the following observations can be made:

1) Generally, the red component is attenuated faster than the blue and green ones, but this holds only for images with bluish and blue-green tones in clear water.

2) When the images appear greenish or yellow, the mean value of the blue channel is much smaller than that of the green channel. This indicates that the blue channel may be significantly attenuated by organic matter as light travels through sea water.

3) The green channel is relatively well preserved under water, compared to the red and blue ones.

Following the above statistical prior, we design a gain factor Z to carry information about the difference in average pixel value between the green channel and the degraded channels. This information is critical for controlling the appropriate amount of pixel values that should be added to the degraded channels. The gain factor Z is defined as:

where \(\mu \left (I^{c} \right )\) is the average of color component \(I^{c}\).

And then the underwater image white balance function is defined as:

where \(W\left (\cdot \right )\) is the white balance function.

To adaptively correct images with various color casts, we add a threshold judgment with threshold \(\tau =\lambda \sum \mu \left (I^{c} \right )\). If \(\mu \left (I^{g} \right )-\mu \left (I^{c} \right )\geq \tau \), the AUWB compensates for the attenuation of the degraded channels. Otherwise, the image has little color cast and white balance may cause unnecessary artifacts. In practice, our tests reveal that λ = 0.1 is appropriate for various illumination conditions and acquisition settings.
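The threshold judgment can be illustrated with a minimal sketch. It is not the authors' implementation: the image is a plain list of (r, g, b) pixels in [0, 255], all function names are hypothetical, and the compensation step (adding the mean gap to the degraded channel) is only an assumed stand-in for the gain factor Z and the white balance function \(W\left (\cdot \right )\).

```python
LAMBDA = 0.1  # value of lambda reported in the text


def channel_means(pixels):
    """Return (mu_r, mu_g, mu_b) for a list of (r, g, b) pixels."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def degraded_channels(pixels, lam=LAMBDA):
    """Channels c (0 = red, 2 = blue) with mu(I^g) - mu(I^c) >= tau."""
    mu = channel_means(pixels)
    tau = lam * sum(mu)  # tau = lambda * sum over channels of mu(I^c)
    return [c for c in (0, 2) if mu[1] - mu[c] >= tau]


def auwb_sketch(pixels, lam=LAMBDA):
    """Compensate only the channels flagged as degraded.

    ASSUMPTION: the compensation adds the mean gap to the degraded channel.
    This is a placeholder, not the paper's gain factor or W(.) function.
    """
    mu = channel_means(pixels)
    out = [list(p) for p in pixels]
    for c in degraded_channels(pixels, lam):
        gap = mu[1] - mu[c]
        for p in out:
            p[c] = min(255.0, p[c] + gap)
    return [tuple(p) for p in out]


bluish = [(20, 120, 140), (30, 130, 150), (25, 110, 160)]
balanced = [(100, 110, 105), (120, 125, 118), (90, 100, 95)]
print(degraded_channels(bluish))    # [0]: only the red channel is flagged
print(degraded_channels(balanced))  # []: the image is left untouched
```

With λ = 0.1, the bluish sample has τ = 29.5 and a green-red gap of 95, so only the red channel is compensated, while the balanced sample passes through unchanged.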

Overall, we present an effective and automatic AUWB method, which is able to selectively respond to color casts. For a given underwater image I(x), the color corrected image \(I_{WB}\left (x \right )\) is obtained as:

To further analyze the robustness of the AUWB, Fig. 3 compares several white balance techniques in terms of the color correction accuracy of images taken at different depths. Our assessment uses CIEDE2000 [49], which measures the color difference between the reference ground truth Macbeth Color Checker and the corresponding color patch, manually located in each image. As can be seen, the AUWB approach as a pre-processing step performs better than the other methods in most cases. In addition, compared to Ancuti [4], the proposed method is adaptive and can effectively handle multiple underwater environments.

Fig. 3 Comparisons of several well-known white balance approaches (Grey Edge [58], Shades of Grey [18], Max RGB [31], Gray World [10], and Ancuti’s white balance method [4]). The original images (selected from [46]) are captured at depths of 3.26 m, 6.06 m, 8.98 m, 12.25 m, and 15.11 m. The color checker is used as a reference for calculating CIEDE2000 [49]. The range of CIEDE2000 is [0,100]; a smaller value indicates a smaller color difference. In other words, the smaller the metric, the better

3.2 Natural-based fusion

In this section, our goal is to develop an effective natural-based fusion method that blends the advantages of different mature image processing techniques. Specifically, the proposed technique could be divided into the following four steps:

3.2.1 Inputs

A natural and intuitive way to enhance degraded images is simply to adjust illumination, since it is linked to the naturalness of the image [57]. Therefore, the color-corrected image is converted into the CIEL∗a∗b∗ color space, and the L channel is extracted as the illumination information. Our two inputs are derived from the estimated illumination, which allows us to avoid distortion. The first input \({I}_{C}\) is designed to address the global contrast in order to clarify the dark regions of the image. Many techniques can be used to enhance global contrast, such as the sigmoid function and gamma correction. In this step, we obtain the first input by applying Contrast-Limited Adaptive Histogram Equalization (CLAHE), which is useful for expanding the global contrast between adjacent structures. Because enhancing the global contrast comes at the cost of blurring visual information, the second input \({I}_{L}\) is derived to improve local detail by using a Laplacian filter.
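As an illustration of the second input, the sketch below sharpens an illumination channel with a Laplacian filter. It is a minimal example under stated assumptions rather than the configuration used in this work: the 4-neighbour kernel, the replicated borders, the unit `strength`, and the function name are all hypothetical choices, and the CLAHE step for the first input is not shown.

```python
def laplacian_sharpen(L, strength=1.0, lo=0.0, hi=100.0):
    """Return L - strength * laplacian(L), clipped to [lo, hi].

    L is a 2D list of illumination values (CIELAB L* lies in [0, 100]).
    ASSUMPTIONS: 4-neighbour Laplacian kernel, replicated borders and unit
    strength; the text does not specify these details.
    """
    h, w = len(L), len(L[0])

    def at(y, x):
        # Replicate the border by clamping the coordinates.
        return L[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    out = []
    for y in range(h):
        row = []
        for x in range(w):
            lap = (at(y - 1, x) + at(y + 1, x) + at(y, x - 1) + at(y, x + 1)
                   - 4.0 * L[y][x])
            row.append(min(hi, max(lo, L[y][x] - strength * lap)))
        out.append(row)
    return out


flat = [[50.0] * 3 for _ in range(3)]
spot = [[50.0, 50.0, 50.0], [50.0, 60.0, 50.0], [50.0, 50.0, 50.0]]
print(laplacian_sharpen(flat)[1][1])  # flat regions are unchanged
print(laplacian_sharpen(spot)[1][1])  # the bright spot is accentuated
```

Subtracting the Laplacian leaves uniform regions untouched and pushes a pixel away from the average of its neighbours, which is what makes local detail more visible.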

To avoid the artifacts caused by pixel-wise weight map estimation, we use SLIC (Simple Linear Iterative Clustering) [1] to divide the color-corrected image into N non-overlapping image patches (Mask). To ensure that the segmentation results of the two input images are consistent, we directly apply the mask to the input images to obtain the final input images \({I}_{C} = {I}_{C}^{n}\) and \({I}_{L}= {I}_{L}^{n}\), where \(n=\left (1,2,{\cdots } ,N \right )\).

3.2.2 Weights

Estimating the weight map is an important step of image fusion. Existing fusion-based methods often introduce unnatural appearance, due to the inaccurate weight map estimation. To fill this gap, the NP-WME method is proposed in this work. It is designed as an optimization problem, defined as:

where c indicates each channel, W is the initial weight map, \(GF\left (\cdot \right )\) is the refinement function, \(f\left (\cdot \right )\) denotes the fusion function, and \({\mathscr{L}} \left (\cdot \right )\) is the objective function. Note that \(f\left (\cdot \right )\) is a function of three parameters, of which W is the only unknown component.

To estimate the value of W with high accuracy, PSO is selected to solve this optimization problem, since PSO has been proven effective in the image enhancement field [41, 48]. Furthermore, PSO is easy to hybridize with other algorithms and can be used in various application environments with little modification [56].

Particle swarm optimization (PSO) is a random search algorithm based on swarm cooperation [22], which is developed by simulating the foraging behavior of birds. Each bird is regarded as a “particle”, and the position of each particle represents a solution to the problem. The algorithm randomly initializes a group of particles, then iterates to find the optimal solution, using fitness to determine the quality of each solution. In each iteration, every particle tracks its personal best (Pbest) and the global best (Gbest) to dynamically update its velocity and position. The algorithm includes the following steps:

- Parameter initialization. Initialize the starting parameters, such as the maximum number of iterations \(t_{max}\), the total population of particles m, the inertia weight ω, the learning factors \(c_{1}\) and \(c_{2}\), and the random numbers \(r_{1}\) and \(r_{2}\).

- Updating the particles. The velocity of each particle is updated as the sum of three terms:

$$ v_{i}^{t+1}=\omega {v_{i}^{t}}+c_{1}r_{1}\times \left (P_{best}-{x_{i}^{t}} \right )+c_{2}r_{2}\times \left (G_{best}-{x_{i}^{t}} \right ) \tag{5} $$

where \(i=\left (1,2,{\cdots } ,m \right )\), v is the velocity, ω is the inertia factor, \(c_{1}\) and \(c_{2}\) are non-negative constants called learning factors, \(r_{1}\) and \(r_{2}\) are two random numbers, and t is the current iteration number. In addition, the personal best (\(P_{best}\)) is the best position found so far by the single particle, and the global best (\(G_{best}\)) is the best position found so far by all particles. Both \(P_{best}\) and \(G_{best}\) are updated at every iteration.

Then, the particle’s new velocity \(v_{i}^{t+1}\) is used to update the position x of the particle:

$$ x_{i}^{t+1}={x_{i}^{t}}+v_{i}^{t+1} \tag{6} $$

In the velocity update formula (5), the first term is the product of the inertia weight and the current velocity of the particle; it represents the particle’s trust in its current motion, that is, inertial movement based on the original velocity. The second term represents self-cognition, the particle’s judgment based on its own history. The third term represents social cognition, the cooperation and information sharing among the particles in the group.

- Objective function. The fitness of the particle’s current position is measured by the objective function. Once the inputs of the natural-based fusion process are obtained, the fused image can be evaluated by two enhancement properties: contrast and information loss. The objective function is formulated to maximize the contrast and minimize the information loss of the fused image:

$$ \mathcal{L}=\mathcal{L}_{con}-\mathcal{L}_{inf} \tag{7} $$

where \({\mathscr{L}}_{con}\) and \({\mathscr{L}}_{inf}\) are defined as:

$$ \mathcal{L}_{con}=\sum\limits_{c} \sum\limits_{i\in {\varOmega}_{n}} \frac{G_{c}\left (i \right )-\mu \left (G_{c} \right )}{\left | {\varOmega}_{n} \right |} \tag{8} $$

$$ \mathcal{L}_{inf}=\sum\limits_{c}\sum\limits_{i\in {\varOmega}_{n}} \left (max\left (0,J_{c}\left (i \right ) -255\right )^{2}+min\left (0,J_{c}\left (i \right ) \right )^{2} \right ) \tag{9} $$

where c indicates each channel, \(\left | {\varOmega }_{n} \right |\) is the cardinality of the nth patch \({\varOmega }_{n}\), \(G_{c}\) is a gray-scale layer of the fused image \(J_{c}\) with intensity range 0 to 255, and \(\mu \left (G_{c} \right )\) is the average intensity of \(G_{c}\). The information loss is measured by summing the squared amounts by which enhanced pixel values fall outside the range [0, 255].

In Eq. (7), the first term ensures that the fused image J has rich contrast and texture information, and the second term measures the cost of lost information. A good fused result corresponds to a large value of \({\mathscr{L}}\). In other words, our weight maps are obtained when \({\mathscr{L}}\) reaches its largest value.
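The PSO steps and the objective of Eqs. (5)-(9) can be sketched for a single patch as follows. This is a simplified illustration, not the NP-WME algorithm itself: it searches one scalar weight w per patch, works on one gray-scale channel, and omits the guided filter refinement. All function names are hypothetical. The contrast term is also an assumption: it uses squared deviations from the patch mean, because signed deviations from that mean would cancel out.

```python
import random


def fuse(w, patch_c, patch_l):
    """Blend the two inputs of one patch with weights w and 1 - w."""
    return [w * c + (1.0 - w) * l for c, l in zip(patch_c, patch_l)]


def objective(w, patch_c, patch_l):
    """L = L_con - L_inf (Eqs. 7-9) for one single-channel patch.

    ASSUMPTION: contrast is the mean squared deviation from the patch mean.
    """
    j = fuse(w, patch_c, patch_l)
    mean = sum(j) / len(j)
    l_con = sum((v - mean) ** 2 for v in j) / len(j)
    l_inf = sum(max(0.0, v - 255.0) ** 2 + min(0.0, v) ** 2 for v in j)
    return l_con - l_inf


def pso_weight(patch_c, patch_l, m=30, t_max=30, omega=0.8,
               c1=2.0, c2=2.0, seed=0):
    """Search w in [0, 1] that maximizes the objective with a basic PSO."""
    rng = random.Random(seed)

    def fit(w):
        return objective(w, patch_c, patch_l)

    x = [rng.random() for _ in range(m)]  # positions (candidate weights)
    v = [0.0] * m                         # velocities
    pbest = list(x)                       # personal best positions
    gbest = max(pbest, key=fit)           # global best position
    for _ in range(t_max):
        for i in range(m):
            r1, r2 = rng.random(), rng.random()
            # Eq. (5): inertia + cognitive term + social term
            v[i] = (omega * v[i]
                    + c1 * r1 * (pbest[i] - x[i])
                    + c2 * r2 * (gbest - x[i]))
            # Eq. (6), with the position clamped to the valid weight range
            x[i] = min(1.0, max(0.0, x[i] + v[i]))
            if fit(x[i]) > fit(pbest[i]):
                pbest[i] = x[i]
        gbest = max(pbest, key=fit)
    return gbest


# Toy patch: the detail input is left unclipped so that L_inf can be non-zero.
patch_c = [60.0, 100.0, 140.0, 180.0]
patch_l = [-60.0, 90.0, 150.0, 330.0]
w_best = pso_weight(patch_c, patch_l)
print(round(w_best, 3), round(objective(w_best, patch_c, patch_l), 1))
```

In this toy patch the detail input has more contrast but also out-of-range values, so the best weight lies strictly between 0 and 1: the search trades contrast against information loss, as the objective intends.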

Fig. 4 shows the weights obtained by the fusion-based method [3], the fusion-based method [4], and the proposed method. It can be observed that the visual effect of the proposed method is pleasing, while the contrast is well enhanced. The results of [3] and [4] lose the global lightness order and exhibit some lightness distortion. This indicates that the proposed natural-based fusion method has better flexibility and can transfer contrast and details well into the fused image. For clarity, the entire procedure of calculating the weights \((W^{\prime })\) is outlined in Algorithm 1.

3.2.3 Refinement

To ensure spatial consistency, we refine the weight maps of the input images with a guided filter, which preserves edges while smoothing the image. The gray-scale version of the input image is selected as the guidance image. The refined weight maps can be written as:

where \(GF\left (\cdot ,\cdot ,\cdot ,\cdot \right )\) represents the guided filter operation, W is the weight map of each component of the nth image, \(W^{\prime }\) is the refined weight map, r is the size of the filter window, and ε denotes the regularization parameter, which determines the degree of blur of the filter. Based on our experiments, we set r = 3 and ε = 0.15.

3.2.4 Fusion framework

The final enhanced image J is obtained by fusing the defined inputs with the weight measures at every pixel location:

$$ J\left (x \right )=W_{C}^{\prime }\left (x \right )I_{C}\left (x \right )+W_{L}^{\prime }\left (x \right )I_{L}\left (x \right ) $$

where \(I_{C}\) and \(I_{L}\) are the input images, and \(W_{C}^{\prime }\) and \(W_{L}^{\prime }\) are the refined weight maps. The weights are normalized so that \(W_{C}^{\prime }+W_{L}^{\prime }=1\). For clarity, the detailed calculation steps of the proposed method are outlined in Algorithm 2.
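The fusion step can be sketched as a per-pixel weighted sum, assuming the usual form in which each input is multiplied by its normalized weight. The function names are hypothetical, images and weight maps are plain 2D lists, and the weights are assumed to be non-negative.

```python
def normalize_weights(w_c, w_l):
    """Rescale two 2D weight maps so that they sum to 1 at every pixel."""
    norm_c, norm_l = [], []
    for row_c, row_l in zip(w_c, w_l):
        out_c, out_l = [], []
        for a, b in zip(row_c, row_l):
            total = a + b
            # Fall back to equal weights where both weights are zero.
            out_c.append(a / total if total > 0 else 0.5)
            out_l.append(b / total if total > 0 else 0.5)
        norm_c.append(out_c)
        norm_l.append(out_l)
    return norm_c, norm_l


def fuse_images(i_c, i_l, w_c, w_l):
    """Per-pixel weighted sum J = W'_C * I_C + W'_L * I_L on 2D lists."""
    n_c, n_l = normalize_weights(w_c, w_l)
    return [[wc * c + wl * l for c, l, wc, wl in zip(rc, rl, rwc, rwl)]
            for rc, rl, rwc, rwl in zip(i_c, i_l, n_c, n_l)]


i_c = [[100.0, 200.0]]
i_l = [[50.0, 0.0]]
print(fuse_images(i_c, i_l, [[3.0, 1.0]], [[1.0, 1.0]]))  # [[87.5, 100.0]]
```

Because the normalized weights sum to 1, every fused pixel is a convex combination of the two inputs and stays within their range.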

4 Experimental results

In this section, we test various underwater images to examine the effectiveness of the proposed approach. Test images are selected from the underwater image enhancement benchmark dataset (UIEBD) [36] and the real-world underwater image enhancement (RUIE) dataset [38]. UIEBD contains 890 degraded underwater images and 60 challenging images. RUIE is composed of three subsets, which contain a total of 3930 challenging images of marine life captured in a real marine aquaculture environment.

To verify the performance of the AUWB method, we conduct comparative experiments with several traditional color constancy techniques, namely, Grey Edge [58], Shades of Grey [18], Max RGB [31] and Gray World [10]. Furthermore, the performance of the natural-based fusion method is compared with that of several underwater image enhancement/restoration methods, namely, UDCP [15], Histogram prior [33], Haze Line [6], UIBLA [45], GDCP [44], fusion-based (Ancuti2012) [3], fusion-based (Ancuti2018) [4], UGAN [17], UWCNN [32], FUnIE-GAN [28].

4.1 Parameters

4.1.1 Parameter settings

In this work, several parameters are initialized manually: the number of image patches N, the number of particles m, the maximum number of iterations \(t_{max}\), the inertia factor ω, the positive constants c1 and c2, and the random numbers r1 and r2.

In the analysis by Bratton and Kennedy [14], a population size of 20 to 100 particles was reported to produce better results on the test problems. This finding was also confirmed in [41]. In this work, m is therefore set to 30.

The inertia weight ω is introduced to balance the global exploration and local exploitation of PSO. A larger value of ω promotes global exploration (searching new areas), while a smaller value encourages local exploitation [51]. Linearly decreasing the inertia weight from 0.9 to 0.4 has been found to produce good results [50]. In this work, ω is fixed at 0.8.

c1 and c2 are learning factors. In time-varying schemes, the cognitive acceleration (c1) starts with a higher value and decreases linearly, while the social acceleration (c2) starts with a lower value and increases linearly: c1 decreases from 2.5 to 0, and c2 increases from 0 to 2.5. In this work, both c1 and c2 are fixed at 2. r1 and r2 are random numbers in [0,1].
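For reference, the linear schedules mentioned above can be written as a small helper. This sketch only illustrates the cited ranges; it is not used in this work, where ω, c1 and c2 are held constant, and the function names are hypothetical.

```python
def linear_schedule(start, end, t, t_max):
    """Linearly interpolate from start (t = 0) to end (t = t_max)."""
    return start + (end - start) * t / t_max


def pso_coefficients(t, t_max):
    """Time-varying inertia weight and learning factors from the cited ranges."""
    omega = linear_schedule(0.9, 0.4, t, t_max)  # exploration -> exploitation
    c1 = linear_schedule(2.5, 0.0, t, t_max)     # cognitive term fades out
    c2 = linear_schedule(0.0, 2.5, t, t_max)     # social term fades in
    return omega, c1, c2


for t in (0, 15, 30):
    print(t, pso_coefficients(t, 30))
```

Early iterations favour each particle's own history and wide exploration, while late iterations favour the swarm's best position and local refinement.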

N is the number of image patches. When an image is divided into 400 patches, the fused image shows a pleasant result. So in this work, N is set to be 400.

4.1.2 Parameter analysis

The maximum number of iterations is highly problem-dependent. To determine an appropriate value, we tested the 400 patches of one original image. As can be seen from Fig. 5, the algorithm converges within 30 iterations. In this work, \(t_{max}\) is therefore set to 30.

4.2 Underwater dehazing evaluation

4.2.1 Qualitative comparison

Figure 6 presents the results obtained by several recent dehazing methods on seven representative underwater images from UIEBD. The experiments show that the proposed method successfully eliminates the effects of attenuation and effectively improves the visibility of raw underwater images. Although the qualitative comparison shows that most of the compared methods can complete the task of underwater image enhancement well, the proposed technique restores most scene details and maintains a natural appearance, owing to the accurate estimation of the weight maps and the strong performance of the white balance.

As shown in Fig. 6, the fusion-based method [3] introduces a significant color shift due to inaccurate color correction. The Histogram Prior method [33] produces unsatisfying results with a reddish color shift and artifacts. The Haze Line method [6] often fails to remove the color cast. All the IFM-based methods (e.g., UDCP [15], Histogram Prior [33], and UIBLA [45]) fail to estimate the transmission and veiling light accurately, which results in visually unpleasing restoration results.

4.2.2 Quantitative comparison

For quantitative evaluation, we use two full-reference criteria, peak signal-to-noise ratio (PSNR) and the patch-based contrast quality index (PCQI) [52], and two no-reference criteria, Blur [13] and the underwater image quality measure (UIQM) [43]. For full-reference evaluation, we regard the reference images provided by UIEBD as ground truth, and calculate the PSNR and PCQI between the restored result and the corresponding reference.

A higher PSNR value indicates that the result is closer to the reference in terms of image content. The Blur metric [13] evaluates image quality in terms of blur perception; it ranges from 0 to 1, where 0 is the best and 1 the worst.
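As a reminder of how PSNR is computed, the following sketch uses the standard definition for 8-bit images, \(10\log _{10}(255^{2}/MSE)\). The flat-list image representation and the function name are illustrative assumptions.

```python
import math


def psnr(result, reference, peak=255.0):
    """PSNR in dB between two equally sized images given as flat lists."""
    mse = sum((a - b) ** 2 for a, b in zip(result, reference)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


reference = [10.0, 20.0, 30.0, 40.0]
print(psnr(reference, reference))                # inf
print(psnr([11.0, 21.0, 31.0, 41.0], reference)) # about 48.13 dB (MSE = 1)
```

A uniform error of one gray level gives an MSE of 1 and therefore 20 log10(255), roughly 48.13 dB; larger errors lower the score.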

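PCQI [52] is used to predict the human perception of contrast variations. The higher the PCQI score, the better the image contrast. PCQI can be described as:

$$ PCQI=\frac{1}{M}\sum\limits_{j=1}^{M} q_{i}\left (x_{j},y_{j} \right )q_{c}\left (x_{j},y_{j} \right )q_{s}\left (x_{j},y_{j} \right ) $$

where M is the total number of patches in the image, \(x_{j}\) and \(y_{j}\) are the jth patches of the two images being compared, and \(q_{i}\), \(q_{c}\) and \(q_{s}\) are three comparison functions.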

The UIQM metric [43] is designed specifically to quantify the colorfulness (UICM), sharpness (UISM), and contrast (UIConM) that characterize underwater images. A greater UIQM value represents higher image quality. UIQM can be described as:

$$ UIQM=c_{1}\times UICM+c_{2}\times UISM+c_{3}\times UIConM $$

where c1, c2 and c3 are scale factors; as in the original paper, we set c1 = 0.0282, c2 = 0.0953, and c3 = 3.5753.

Tables 2, 3, 4 and 5 report the evaluation values of the enhanced results shown in Fig. 6 in terms of PSNR, Blur, PCQI and UIQM, respectively. The red value indicates the best result and the blue value the second best. As these four tables show, the proposed method performs better in most cases.

4.3 Naturalness-preserving analysis

For fusion-based underwater image enhancement, natural-looking fused results are important and highly desired in many practical applications (such as object recognition and object tracking). However, the fused results obtained by existing algorithms often contain artifacts. In this section, we compare the fused results of the proposed technique with previous state-of-the-art fusion-based methods on several challenging underwater images, as shown in Fig. 7.

From Fig. 7, it can be seen that the proposed technique is more robust in extreme underwater cases (such as non-uniform artificial illumination and turbid sea water). Fusion-based methods [3, 4] tend to introduce artifacts, over-enhancement, and color cast (for example, a reddish color). Global-based fusion results are obtained by setting the number of patches N to 1. The results show that the global-based fusion method also produces over-enhanced results. In contrast, as displayed in the enlarged regions, our algorithm thoroughly removes haze and naturally restores contrast.

5 Conclusion

In this paper, we propose an efficient natural-based fusion method for underwater image dehazing. First, the adaptive underwater image white balance (AUWB) method is employed to reduce the color deviation, based on a statistical prior that the green channel is relatively well preserved under water. Second, to solve the problem of low contrast, a novel natural-based fusion method is proposed, which combines the global contrast-enhanced and local detail-improved versions of the image. In this procedure, we model weight map estimation as an optimization problem and solve it with particle swarm optimization (PSO), which promotes a more accurate transfer of contrast improvement and detail enhancement to the fused image. The qualitative and quantitative results demonstrate that the proposed method achieves better natural visual quality with more valuable information and higher contrast. However, the proposed technique still suffers from low computational efficiency, and we will explore solutions to this drawback in future work.

References

Achanta R, Shaji A, Smith K, Lucchi A, Fua P et al (2012) SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans Pattern Anal Mach Intell 34(11):2274–2282

Akkaynak D, Treibitz T (2018) A revised underwater image formation model. In: IEEE Conf Comput Vis Pattern Recognit (CVPR), pp 6723–6732

Ancuti C, Ancuti CO, Haber T, Bekaert P (2012) Enhancing underwater images and videos by fusion. IEEE Conf Comput Vis Pattern Recognit, 81–88

Ancuti CO, Ancuti C, Vleeschouwer CD, Bekaert P (2018) Color balance and fusion for underwater image enhancement. IEEE Trans Image Process 27(1):379–393

Ancuti CO, Ancuti C, Vleeschouwer CD, Sbert M (2019) Color channel compensation (3c): a fundamental pre-processing step for image enhancement. IEEE Trans Image Process 29:2653–2665

Avidan S, Berman D, Treibitz T (2017) Color restoration of underwater images. In: British Machine Vision Conference (BMVC)

Bai L, Zhang W, Pan X, Zhao C (2020) Underwater image enhancement based on global and local equalization of histogram and dual-image multi-scale fusion. IEEE Access 8:128973–128990

Berman D, Levy D, Avidan S, Treibitz T (2018)

Boom BJ, et al. (2014) A research tool for long-term and continuous analysis of fish assemblage in coral-reefs using underwater camera footage. Ecological Informat 23(9):83–97

Buchsbaum G (1980) A spatial processor model for object colour perception. J Frankl Inst 310(1):1–26

Chambah M, Semani D, Renouf A, et al. (2004) Underwater color constancy: Enhancement of automatic live fish recognition. Proceedings of SPIE - The International Society for Optical Engineering

Chiang J-Y, Chen Y-C (2012) Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans Image Process 21(4):1756–1769

Crete F, Dolmiere T, Ladret P, Nicolas M (2007) The blur effect: Perception and estimation with a new no-reference perceptual blur metric. In: Human Vision and Electronic Imaging XII, p 649201

Daniel B, Kennedy J (2007) Defining a standard for particle swarm optimization. In: 2007 IEEE Swarm Intelligence Symposium

Drews PLJ, Nascimento ER, Botelho SSC, Campos MFM (2016) Underwater depth estimation and image restoration based on single images. IEEE Comput Graph Appl 36(2):24–35

Drews PJr, do Nascimento E, Moraes F, Botelho S, Campos M (2013) Transmission estimation in underwater single images. IEEE International Conference on Computer Vision Workshops, 825–830

Fabbri C, Islam MJ, Sattar J (2018) Enhancing underwater imagery using generative adversarial networks. In: IEEE Int Conf Robot Autom (ICRA), pp 7159–7165

Finlayson GD, Trezzi E (2004) Shades of gray and colour constancy. In: Color and Imaging Conference

Fu X, Fan Z, Ling M, Huang Y, Ding X (2017) Two-step approach for single underwater image enhancement. In: 2017 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS)

Galdran A, Pardo D, Picón A, Alvarez-Gila A (2014) Automatic red-channel underwater image restoration. J Vis Commun Image Represent, 26

Guo Y, Li H, Zhuang P (2020) Underwater image enhancement using a multiscale dense generative adversarial network. IEEE J Ocean Eng 45:862–870

Guo F, Liu L, Tang J (2017) Pso-based single image defogging. In: CCF Chinese Conference on Computer Vision

Hassan N, Ullah S, Bhatti N, Mahmood H, Zia M (2021) The retinex based improved underwater image enhancement. Multimed Tools Appl 80:1839–1857

He K, Sun J, Tang X (2011) Single image haze removal using dark channel prior. IEEE Trans Pattern Anal Mach Intell 33(12):2341–2353

Hou G, Li J, Wang G, Yang H, Huang B, Pan Z (2020) A novel dark channel prior guided variational framework for underwater image restoration. J Vis Commun Image Represent, 66

Hou G, Pan Z, Wang G, Yang H, Duan J (2019) An efficient nonlocal variational method with application to underwater image restoration. Neurocomputing 369:106–121

Iqbal K, Salam RA, Azam O, Talib AZ (2015) Underwater image enhancement using an integrated colour model. IAENG Int J Comput Sci 34(2):239–244

Islam MJ, Xia Y, Sattar J (2020) Fast underwater image enhancement for improved visual perception. IEEE Robot Autom Lett 5(2):3227–3234

Jian M, Qi Q, Dong J, Yin Y, Lam K-M (2018) Integrating qdwd with pattern distinctness and local contrast for underwater saliency detection. J Vis Commun Image Represent 53(3):31–41

Kopf J, et al. (2008) Deep photo: model-based photograph enhancement and viewing. ACM Trans Graph

Land EH (1978) The retinex theory of color vision. Sci Am 237(6):108–128

Li C, Anwar S, Porikli F (2020) Underwater scene prior inspired deep underwater image and video enhancement. Pattern Recog 98:107038–107049

Li C, Guo J, Cong R, Pang Y, Wang B (2016) Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans Image Process 25(12):5664–5677

Li C-Y, Guo J-C, Cong R-M, Pang Y-W, Wang B (2016) Underwater image enhancement by dehazing with minimum information loss and histogram distribution prior. IEEE Trans Image Process 25(12):5664–5677

Li C, Guo J, Guo C (2018) Emerging from water: Underwater image color correction based on weakly supervised color transfer. IEEE Signal Process Lett 25(3):323–327

Li C, Guo C, Ren W, Cong R, Hou J, Kwong S, Tao D (2020) An underwater image enhancement benchmark dataset and beyond. IEEE Trans Image Process

Liang Z, Wang Y, Ding X, Mi Z, Fu X (2020) Single underwater image enhancement by attenuation map guided color correction and detail preserved dehazing. Neurocomputing

Liu R, Fan X, Zhu M, Hou M, Luo Z (2020) Real-world underwater enhancement: Challenges, benchmarks, and solutions under natural light. IEEE Trans Circuits Syst Video Technol

Marques TP, Albu AB (2020) L2UWE: a framework for the efficient enhancement of low-light underwater images using local contrast and multi-scale fusion. CVPR Workshops, 2286–2295

Mi Z, Li Y, Wang Y, Fu X (2020) Multi-purpose oriented real-world underwater image enhancement. IEEE Access, 112957–112968

Mohd Azmi KZ, Abdul Ghani AS, Md Yusof Z, Ibrahim Z (2019) Natural-based underwater image color enhancement through fusion of swarm-intelligence algorithm. Appl Soft Comput, 85

Narasimhan SG, Nayar SK (2003) Contrast restoration of weather degraded images. IEEE Trans Pattern Anal Mach Intell 25(6):713–724

Panetta K, Gao C, Agaian S (2016) Human-visual-system-inspired underwater image quality measures. IEEE J Ocean Eng 41(3):541–551

Peng Y-T, Cao K, Cosman PC (2018) Generalization of the dark channel prior for single image restoration. IEEE Trans Image Process, 2856–2868

Peng Y-T, Cosman PC (2017) Underwater image restoration based on image blurriness and light absorption. IEEE Trans Image Process 26(4):1579–1594

Roznere M, Li AQ (2019) Real-time model-based image color correction for underwater robots. IEEE IROS, 7191–7196

Schechner YY, Averbuch Y (2007) Regularized image recovery in scattering media. IEEE Trans Pattern Anal Mach Intell 29(9):1655–1660

Sethi R, Sreedevi I (2019) Adaptive enhancement of underwater images using multi-objective pso. Multimed Tools Appl 78(22):31823–31845

Sharma G, Wu W, Dalal EN (2005) The ciede2000 color-difference formula: Implementation notes, supplementary test data, and mathematical observations. Color Research & Application 30(1):21–30

Shi Y (1998) A modified particle swarm optimizer. In: Proc of IEEE Icec conference

Shi Y, Eberhart RC (1999) Empirical study of particle swarm optimization. In: Proceedings of the 1999 congress on evolutionary computation CEC 99

Shiqi W, Kede M, Hojatollah Y, Zhou W, Weisi L (2015) A patch-structure representation method for quality assessment of contrast changed images. IEEE Signal Processing Letters 22(12):2387–2390

Torres-Méndez LA, Dudek G (2005) Color correction of underwater images for aquatic robot inspection. In: International Workshop on Energy Minimization Methods in Computer Vision & Pattern Recognition, pp 60–73

Wagner B, Nascimento ER, Barbosa WV, Campos MFM (2018) Single-shot underwater image restoration: a visual quality-aware method based on light propagation model. J Vis Commun Image Represent, 55

Wang Y, Liu H, Chau L (2018) Single underwater image restoration using adaptive attenuation-curve prior. IEEE Trans Circuits Syst I Regul Pap 65(3):992–1002

Wang D, Tan D, Liu L (2018) Particle swarm optimization algorithm: an overview. Appl Soft Comput 22(2):387–408

Wang S, Zheng J, Hu H-M (2013) Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans Image Process 22(9):3538–3548

Weijer Jvd, Gevers T, Gijsenij A (2007) Edge-based color constancy. IEEE Trans Image Process 16(9):2207–2214

Yang M, Hu K, Du Y, Wei Z, Hu J (2019) Underwater image enhancement based on conditional generative adversarial network. Signal Process Image Commun, 81

Yu H, Li X, Lou Q, Lei C, Liu Z (2020) Underwater image enhancement based on dcp and depth transmission map. Multimed Tools Appl 79:20373–20390

Zhang S, Wang T, Dong J, Yu H (2017) Underwater image enhancement via extended multi-scale retinex. Neurocomputing 245:1–9

Zhou J, Liu Z, Zhang W, Zhang D, Zhang W (2020) Underwater image restoration based on secondary guided transmission map. Multimed Tools Appl, 7771–7788

Acknowledgements

The authors sincerely thank the editors and anonymous reviewers for the very helpful and kind comments to assist in improving the presentation of our paper. This work was supported in part by the National Natural Science Foundation of China under Grant 62176037, Grant 62002043, and Grant 61802043, by the Liaoning Revitalization Talents Program under Grant XLYC1908007, by the Foundation of Liaoning Key Research and Development Program under Grant 201801728, by the Dalian Science and Technology Innovation Fund under Grant 2018J12GX037, Grant 2019J11CY001, and Grant 2021JJ12GX028.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Yan, X., Wang, G., Jiang, G. et al. A natural-based fusion strategy for underwater image enhancement. Multimed Tools Appl 81, 30051–30068 (2022). https://doi.org/10.1007/s11042-022-12267-7

DOI: https://doi.org/10.1007/s11042-022-12267-7